Systems Programming: Designing and Developing Distributed Applications, FIRST EDITION (2016)

Chapter 1. Introduction

Abstract

This chapter introduces the book, its structure, the way the technical material is organised and the motivation behind this. It provides a historical perspective and explains the significance of distributed systems in modern computing. It also explains the integrative, cross-discipline nature of the presentation used in the book and the underlying ‘systems thinking’ approach. It describes and justifies the way that material has been presented from four carefully selected viewpoints (ways of looking at systems structure, organisation and behaviour). These viewpoints have been chosen to overcome the artificial boundaries that are introduced when material is divided for the purposes of teaching into traditional categorisations of operating systems, networking, distributed systems, and programming; whereas many of the key concepts pertinent to the design and development of distributed applications overlap several of these areas or reside in the margins between these areas. This chapter also provides an essential concise introductory discussion of distributed systems to set the scene for the four core chapters which follow. Distributed systems are then examined in depth in Chapter 6. There is also an introduction to the three case studies and the extensive supplemental technical resources.

Keywords

Transparency

QoS metrics

Functional requirements

Nonfunctional requirements

Software architectures

Case studies

This book is a self-contained introduction to designing and developing distributed applications. It brings together the essential supporting theory from several key subject areas and places the material in the context of real-world applications and scenarios, with numerous clearly explained examples. There is a strong practical emphasis throughout the book, involving programming exercises and experiments for the reader to engage in, as well as three detailed, fully functional case studies, one of which runs through the four core chapters, places theoretical aspects in an application context, and cross-links the various themes of the chapters. The book is an ideal companion text for undergraduate degree courses.

This chapter introduces the book, its structure, the way the technical material is organized, and the motivation behind this. It provides a historical perspective and explains the significance of distributed systems in modern computing. It also explains the integrative, cross-discipline nature of the presentation and the underlying “systems thinking” approach. It describes and justifies the way that material has been presented from four carefully selected viewpoints (ways of looking at systems structure, organization, and behavior). These viewpoints have been chosen to overcome the artificial boundaries that are introduced when material is divided, for the purposes of teaching, into the traditional categories of operating systems, networking, distributed systems, and programming, whereas many of the key concepts pertinent to distributed systems overlap several of these areas or reside in the margins between them.

The overall goal of the book is to furnish the reader with a rounded understanding of the architecture and communication aspects of systems, together with the theoretical underpinning needed to understand the design choices available and to appreciate the consequences of the various design decisions and tradeoffs. Upon reaching the end of this book, the reader will be equipped to design and build their first distributed application.

The technical content is brought alive through an interactive style which incorporates practical activities, examples, sample code, analogies, exercises, and case studies. Many practical experiments and simulation activities are provided by a special edition of the author’s established Workbenches teaching and learning resources suite. Application examples are provided throughout the book to put the conceptual aspects into perspective. These are backed up by extensive source code and a mix of theory and practice exercises to enhance both skills and supporting knowledge.

1.1 Rationale

1.1.1 The Traditional Approach to Teaching Computer Science

Computer science is an extremely broad field of knowledge, encompassing diverse topics which include systems architectures, systems analysis, data structures, programming languages, software engineering techniques, operating systems, and networking and communication, among many others.

Traditionally, the subject material within computer science has been divided into these topic areas (disciplines) for the pragmatic purposes of teaching at universities. Hence, a student will study Operating Systems as one particular subject, Networking as another, and Programming as another. This model works well in general, and has been widely implemented as a means of structuring learning in this field.

However, there are many aspects of computer systems which cut across the boundaries of several of these disciplines, and cannot be fully appreciated from the viewpoint of any single one of these disciplines; so to gain a deep understanding of the way systems work, it is necessary to study systems holistically across several disciplines simultaneously. One very important example where a cross-discipline approach is needed is the development of distributed applications.

To develop a distributed application (i.e. one in which multiple software components communicate and cooperate to solve an application problem), the developer needs programming skills and knowledge of a variety of related and supporting activities, including requirements analysis, design, and testing techniques. However, successful design of this class of application also requires a level of expertise in each of several disciplines: networking (especially protocols, ports, addressing, and binding), operating systems theory (including process scheduling, process memory space and buffer management, and operating system handling of incoming messages), and distributed systems concepts (such as architecture, transparency, name services, election algorithms, and mechanisms to support replication and data consistency). Critically, an in-depth appreciation of the ways in which these areas of knowledge interact and overlap is needed; for example, the way in which the operating system's scheduling of processes interacts with the blocking or non-blocking behavior of network sockets, and the implications of this for application performance, responsiveness, and the efficiency of system resource usage. In addition, the initial requirements analysis can only be performed with due diligence if the developer is able to form a big-picture view of the system, rather than focusing simply on achieving connectivity between the parts and hoping to add detail incrementally later. That piecemeal approach, which tends to occur when the developer's knowledge is compartmentalized within the various disciplines, can lead to unreliable, inefficient, or scale-limited systems. This is a situation where the whole is significantly greater than the sum of the parts: having segmented pools of knowledge in operating systems, networking, distributed systems theory, and programming is not sufficient to design and build high-quality distributed systems and applications.
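To make the blocking versus non-blocking distinction concrete, here is a minimal sketch in Python using the standard `socket` module over the loopback interface. A blocking receive suspends the calling process until a message arrives (the scheduler moves it to the blocked state); a non-blocking receive returns immediately, and if nothing is queued Python raises `BlockingIOError`, leaving the process free to do other work. The helper name `try_receive`, the use of UDP, and the polling interval are illustrative assumptions, not taken from the text.

```python
import socket
import time

def try_receive(sock):
    """Attempt a non-blocking receive; return the data, or None if nothing is queued."""
    try:
        data, _addr = sock.recvfrom(1024)
        return data
    except BlockingIOError:
        # Nothing waiting. A blocking socket would have suspended the process
        # here; a non-blocking socket hands control straight back.
        return None

receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))        # port 0: let the OS choose a free port
receiver.setblocking(False)
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

first = try_receive(receiver)          # no message has been sent yet

sender.sendto(b"hello", receiver.getsockname())
second = None
for _ in range(100):                   # poll for up to about one second
    second = try_receive(receiver)
    if second is not None:
        break
    time.sleep(0.01)                   # the process could do useful work here instead

sender.close()
receiver.close()
```

Busy polling like this costs processor time while waiting, so in practice it is usually combined with a readiness mechanism or a timer rather than run in a tight loop.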

There are in reality no rigid boundaries between the traditional disciplines of computer science; rather, they are overlapping subsets of the wider field. The development of almost any application or system requires understanding from several of these disciplines, integrated to some extent. A systems approach addresses the problem of topics that lie within the overlapping boundaries of several subject areas and are therefore potentially covered only briefly, or covered in distinctly different ways, in particular classes. The outcome can be that students do not manage to connect the various references to the same thing, or to see how an aspect covered in one course relates to or impacts on an aspect covered in another. Mainstream teaching will continue along the traditional lines, fundamentally because it is an established way to provide the essential foundational building blocks of knowledge and skill. However, there is also plenty of scope for integrative courses that take a systems approach, building on the knowledge and skills provided by more traditional courses and giving students an understanding of how the various concepts interrelate and must be integrated to develop whole systems.

1.1.2 The Systems Approach Taken in This Book

The main purpose of this book is to provide a self-contained introduction to designing and developing distributed applications, covering the necessary aspects of operating systems, networking, distributed systems, and programming, as well as the interactions between these aspects that must be understood in order to develop such applications.

Rather than being anchored in one of the traditional disciplines within computer science, much of the content necessarily occupies the space where several of these disciplines overlap, such as operating systems and networking (e.g. inter-process communication, the impact of scheduling behavior on processes using blocking versus nonblocking sockets, buffer management for communicating processes, and distributed deadlock); networking and programming (e.g. understanding the correct usage of the socket API and socket-level options, maintaining connection state, and achieving graceful disconnection); operating systems and programming in a network context (e.g. understanding the relationships between the various addressing constructs (process id, ports, sockets, and IP addresses), sockets API exception handling, and achieving asynchronous operation within a process using threads or combining timers and nonblocking sockets); and distributed systems and networking (e.g. understanding how higher-level distributed applications are built on the services provided by networks and network protocols).
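One way to combine a timer with socket input, as mentioned above, is to wait for a socket to become readable with a bounded timeout, so that the process is never blocked indefinitely. The Python sketch below uses the standard `select.select` call; the helper name `wait_readable`, the UDP loopback setup, and the timeout values are illustrative assumptions.

```python
import select
import socket

def wait_readable(sock, timeout_s):
    """Return True if sock has data to read within timeout_s seconds, else False."""
    readable, _writable, _errored = select.select([sock], [], [], timeout_s)
    return sock in readable

receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

# Nothing has been sent, so the wait ends because the timer expires.
timed_out = not wait_readable(receiver, 0.1)

sender.sendto(b"ping", receiver.getsockname())
# A message is now queued (or arrives within the timeout), so select reports readiness
# and the subsequent recv will not block.
ready = wait_readable(receiver, 1.0)
message = receiver.recv(1024) if ready else None

sender.close()
receiver.close()
```

The timeout gives the process a regular opportunity to regain control (for example, to retransmit or update a display) even when no message arrives.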

The core technical content of the book is presented from four viewpoints (the process view, the communication view, the resource view, and the architecture view), which have been carefully chosen to best reflect the different types and levels of organization and activity in systems and thus maximize the accessibility of the book. I find that some students will understand a particular concept clearly when it is explained from a certain angle, while others will understand it only superficially but will gain a much better grasp of the same concept when a second example is given, approaching and reinforcing it from a different angle. This is the approach that has been applied here. Communication between components in modern computing systems is a complex topic, influenced and impacted by many aspects of systems design and behavior. The development of distributed applications is further complicated by the architectures of the underlying systems and by the architectures of the applications themselves, including the functional split across components and the connectivity between them. By tackling the core material from several angles, the book gives readers the maximum opportunity to understand it in depth and to make associations between the various parts of systems and the roles they play in facilitating communication within distributed applications. Ultimately, readers will be able to design and develop distributed applications and to understand the consequences of their design decisions.

Chapter 2: Process View

The first of the four core chapters presents the process view. This examines the ways in which processes are managed and how this influences communication at the low level, dealing with aspects such as process scheduling and blocking, message buffering and delivery, the use of ports and sockets, and the way in which binding a process to a port operates, thus enabling the operating system to manage communication at the computer level on behalf of the processes it hosts. This chapter also deals with the concepts of multiprocessing environments, threads, and operating system resources such as timers.
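The effect of binding can be observed directly. In the minimal Python sketch below, binding to port 0 asks the operating system to choose a free port, and an attempt to bind a second socket to the same address and port is refused because that port is already associated with the first socket. The choice of UDP and the loopback address are illustrative assumptions; on common platforms the refusal appears as an `OSError` (typically "address already in use").

```python
import socket

first = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
first.bind(("127.0.0.1", 0))           # port 0: the OS picks a free port
port = first.getsockname()[1]          # the port the OS actually assigned

second = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
try:
    second.bind(("127.0.0.1", port))   # same address and port as the first socket
    second_bind_refused = False
except OSError:
    # The OS keeps a single binding per (address, port) unless port-sharing
    # options such as SO_REUSEADDR or SO_REUSEPORT are explicitly requested.
    second_bind_refused = True
finally:
    second.close()
    first.close()
```

This one-to-one association is what allows the operating system to decide which process should receive each incoming message addressed to a given port.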

Chapter 3: Communication View

This chapter examines the ways networks and communication protocols operate and how the functionalities and behavior of these impact on the design and behavior of applications. This viewpoint is concerned with topics which include communication mechanisms and the different modes of communication, e.g., unicast, multicast, and broadcast, and the way this choice can impact on the behavior, performance, and scalability of applications. The functionality and features of the TCP and UDP transport-layer protocols are described, and compared in terms of performance, latency, and overheads. Low-level details of communication are examined from a developer viewpoint, including the role and operation of the socket API primitives. The remote procedure call and remote method invocation higher-level communication mechanisms are also examined.
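As a small illustration of the connection-oriented nature of TCP, the Python sketch below goes through the steps a server and client perform on the loopback interface: the server binds, listens, and accepts; the client connects; only then can data flow over the resulting stream. Because TCP presents a byte stream rather than discrete messages, the receiver loops until it has the number of bytes it expects. Running both ends in a single process is a simplification for demonstration (the backlog set by `listen` lets the client's `connect` complete before `accept` is called); the helper `recv_exact` and the payload are illustrative assumptions.

```python
import socket

def recv_exact(sock, n):
    """Read exactly n bytes from a TCP stream (a single recv may return fewer)."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed the connection early")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)                        # passive open: accept connection requests

client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
client.connect(server.getsockname())    # active open: connection is established
conn, _addr = server.accept()           # a dedicated socket for this connection

payload = b"request"
client.sendall(payload)
received = recv_exact(conn, len(payload))

conn.close()
client.close()
server.close()
```

UDP, by contrast, needs no connection establishment: a datagram can be sent to a bound port immediately, at the cost of the delivery and ordering guarantees that TCP provides.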

Chapter 4: Resource View

This chapter examines the nature of the resources of computer systems and how they are used in facilitating communication within distributed systems. Physical resources of interest are processing capacity, network communication bandwidth, and memory. For the first two, the discussion focuses on the need to be efficient with, and not waste, these limited resources, which directly impact on the performance and scalability of applications and of the system itself. Memory is discussed in the context of buffers for the assembly and storage of messages prior to sending (at the sender side), and for holding messages after receipt (at the receiver side) while the contents are processed. Also important is the distinction between process-space memory and system-space memory and how this is used by the operating system to manage processes, especially with respect to the receipt of messages by the operating system on behalf of local processes and the subsequent delivery of the messages to the processes when they issue a receive primitive. The need for and operation of virtual memory is examined in detail.
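The receive-side buffering described above can be seen in a short Python sketch: several datagrams are sent before the receiving socket issues any receive call, and the operating system holds them in that socket's system-space receive buffer until the process asks for them. The message contents, the count of three, and the use of UDP over loopback are illustrative assumptions; over loopback such a small example is in practice delivered complete and in order, which UDP does not guarantee in general.

```python
import socket

receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(1.0)   # avoid hanging forever if a datagram were lost

# Size (in bytes) of the receive buffer the OS maintains for this socket.
rcvbuf_bytes = receiver.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)

sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
messages = [b"msg-1", b"msg-2", b"msg-3"]
for m in messages:
    sender.sendto(m, receiver.getsockname())   # the receiver has not called recv yet

# Only now does the receiving process issue its receive primitive; each call
# copies one queued datagram from the OS buffer into the process's own memory.
delivered = [receiver.recv(1024) for _ in messages]

sender.close()
receiver.close()
```

If messages arrive faster than the process receives them and the buffer fills, further UDP datagrams are typically discarded, which is one reason buffer sizing matters.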

Chapter 5: Architecture View

This chapter examines the structures of distributed systems and applications. The main focus is on the various models for dividing the logic of an application into several functional components and the ways in which these components interconnect and interact. The chapter also considers the ways in which the components of systems are mapped onto the underlying resources of the system and the additional functional requirements that arise from such mapping, for example, the need for flexible run-time configuration. The various architectural models are discussed in terms of their impact on key nonfunctional quality aspects such as scalability, robustness, efficiency, and transparency.

Chapter 6: Distributed Systems

Distributed systems form a backdrop for the four core viewpoint chapters, each of which deals with a specific set of theoretical aspects, concepts, and mechanisms. These are followed by a chapter which focuses on the distributed systems themselves, their key features and functional requirements, and the specific challenges associated with their design and development. This chapter thereby puts the content of the core chapters into the wider systems perspective and discusses issues that arise from the distribution itself, as well as techniques to address these issues.

The provision of transparency is key to achieving quality in distributed applications. For this reason, transparency is a theme that runs through all the chapters, in relation to the various topics covered, and is also a main focal aspect of the discussion of the main case study. To further reinforce the importance of transparency, it is covered in its own right, in depth, in this chapter. Ten important forms of transparency are defined and explored in terms of their significance and the way in which they impact on systems and applications. Techniques to facilitate the provision of these forms of transparency are discussed. This chapter also describes common services, middleware, and technologies that support interoperability in heterogeneous environments.

Chapter 7: Case Studies—Putting it All Together

This chapter brings together the content of the previous chapters in the form of a pair of fully detailed distributed application case studies which are used as vehicles to illustrate many of the various issues, challenges, techniques, and mechanisms discussed earlier.

The goal is to provide an integrative aspect, in which applications are examined through their entire life cycle. This chapter has a problem-solving approach and is based around provided working applications, their source code, and detailed documentation. The presentation of these applications makes and reinforces links between theory and practice, making references to earlier chapters as necessary.

1.2 The Significance of Networking and Distributed Systems in Modern Computing—A Brief Historical Perspective

My career began in the early 1980s, as a microprocessor technician at a local university. Computer systems were very different then from what is available now. Computers were isolated systems without network connections. Microprocessors were new on the scene, and the “IBM PC” was just about to arrive. There was no such thing as a computer virus. Mainframe computers were the dominant type of computing system for business. They really did look like those systems you see in old films, with large removable disk drives that resembled top-loader washing machines, and large units the size of wardrobes with tape reels spinning back and forth on the front. These systems required a team of operators to change tapes and disks to meet users’ requirements, and this use model required that much of the processing be performed in batch mode, in which users submitted a job request and the actual processing was performed some time, possibly hours, later.

Let me briefly take you back to that time. As part of my job, I built several complete microprocessor-based computer systems from scratch. The technology was manageable by a single person who could understand and develop all aspects of the system: the hardware, the operating software, and the applications that ran on it. I was the designer of both hardware and software and used advanced (for their day) microprocessors such as the Zilog Z80 and various latest technologies from Motorola and Intel. I built the motherboard as well as the power supply, wrote the operating system (well, the monitor system, as it was then), and wrote the applications that ran on the systems. I was responsible for testing and bug fixing at all levels of hardware and software. I was both the operator and the user at the same time. The complexity of the entire system was a mere fraction of the complexity of modern systems.

The advent of the microprocessor was a very significant milestone in the evolution of computing. It brought about many major changes and has led to the current situation of ubiquitous computing on a global scale. As a direct result, my modern-day students are faced with multicomponent multilayered interconnected systems, incredibly complex (in both their design and behavior) to the extent that no single individual can be expected to fully understand all aspects of them.

The “PC” (personal computer) concept was established in 1981 with the arrival of IBM’s Personal Computer. Up until this point, there had been a wide variety of desktop computer offerings from many manufacturers, but each had a different architecture and operating system. This represented risk for businesses as well as for software developers, as there was a lack of consistency and it was difficult to make a commitment to a particular technology. The original IBM PC was relatively expensive, but it had an open and easy-to-copy hardware architecture. This enabled a variety of competitors to build “IBM-compatible” machines (sometimes called “PC clones”) which for the most part were considerably cheaper alternatives with acceptable quality. The fact that they had the same architecture, including the central processor (the Intel 8086 family of devices), meant that they all could support the same operating system (the most popular of the three that were available for the PC being Microsoft’s Disk Operating System) and thus could run the same applications. This consistent approach was the key to the success of the PC, and quite soon these systems were established in very many businesses and many people started buying them for home use too. This was, over the next few years, a true revolution in computing.

Perhaps the most dramatic development since the PC arrived has been the advent of networking. Once the personal computer concept had caught on, and with it the incredibly important notion of standardization at the platform level, there was an increasing need to pass data from one system to another. To achieve something resembling wide-area networking, we actually used to put floppy magnetic disks in the post in those days, while our version of local area networking was to save a file onto a floppy disk in one computer, remove the disk, walk to the desk of a colleague, and place the disk into their computer so that they could access the required file.

Local Area Networking (LAN) technologies started to become commercially available in the mid-1980s and were becoming commonplace by the end of the 1980s. Initially, the cost of wiring up buildings and the per-computer cost of connecting were quite high, so it was common for organizations to have labs of computers in which only a small number were connected to the network. It was also common to have isolated networks scattered about which provided local access to server systems (e.g. file servers) and shared resources such as printers, but were not connected to the wider systems.

Prior to the widespread implementation of LANs, the basic concepts of wide-area networking were already being established. Serial connections could be used to connect computers on a one-to-one basis, much the same as connecting a computer to a printer. A pair of MoDem (Modulator-Demodulator) devices could be placed one at each end of a phone line and thus extend the serial connection between the computers to anywhere that had a phone connection, although this was cumbersome and had to use low data transmission rates to achieve an acceptable level of reliability.

The Internet, a wide-area network, had already existed in earlier forms since the 1970s, but was not used commercially and was not yet available to the public at large. University mainframe computer systems were connected by various networks, including the Joint Academic NETwork (JANET) in the United Kingdom.

Once the per-computer connection cost had fallen to double-digit sums, there was a rush to connect just about every computer to a LAN. I remember negotiating a deal across several departments of the university I worked for so that we could place a combined order for about 300 network adapter cards for all our PCs and thus make a large bulk saving on cost. Can you imagine the transformational effect of moving from a collection of hundreds of isolated PCs to a large networked infrastructure in a short timeframe? When you consider this scaled up across many universities and businesses simultaneously, you start to realize the impact networking had. Not only were the computers within organizations being connected to LANs in very large numbers, but the organizations were also connecting their internal LANs to the Internet (which became much more accessible as the demand to connect brought about service provider companies and the cost of connecting fell dramatically).

Suddenly, you could transmit data between remote locations, or log in to remote computers and access resources, from the computer on your desk. Initially, wide-area networking was dominated by e-mail and remote connections using Telnet or rlogin, while local area networking was dominated by resource sharing and file transfers. Once the infrastructure was commonplace and the number of users reached a critical mass, there was a sustained increase in the variety of applications that used network communications, and as bandwidths increased and reliability improved, this gave rise to the now common e-commerce and media-streaming applications not previously feasible.

Distributed systems can be considered an evolutionary step beyond applications that simply use network connections directly. Early networked applications suffered from a lack of transparency. For example, users would have to know the address of the other computer and the protocol being used, and would have no way to ensure that the application was running at the remote end. Such applications required users to have significant knowledge about the system configuration and did not scale well. Distributed systems employ a number of techniques and services to hide the details of the underlying network; for example, they allow the various components of the system to locate each other automatically, and may even start up remote services automatically if these are not already running when a request is made. The user need not know details such as the architecture of the service or the number or location of its components. The fundamental goal of distributed system design is to hide internal detail, architecture, location of components, etc., from users, making the system of parts appear as a single coherent whole. This increases usability and reliability.
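Automatic location of components is commonly supported by a name service. The Python sketch below is a deliberately minimal, in-process stand-in: a hypothetical `NameService` class maps logical service names to network addresses, so a client asks for a service by name rather than hard-coding a host and port. Real name services (DNS, for example) are themselves distributed and replicated; the class, its methods, the service name, and the addresses (taken from the 192.0.2.0/24 documentation range) are invented for illustration.

```python
class NameService:
    """Hypothetical registry mapping logical service names to (host, port) addresses."""

    def __init__(self):
        self._registry = {}

    def register(self, name, host, port):
        # A server announces where it can currently be reached.
        self._registry[name] = (host, port)

    def resolve(self, name):
        # A client looks up a service by name; it never needs the address in advance.
        try:
            return self._registry[name]
        except KeyError:
            raise LookupError(f"no service registered under {name!r}") from None

ns = NameService()
ns.register("print-service", "192.0.2.10", 5000)
address = ns.resolve("print-service")

# If the service moves, only its registration changes; clients are unaffected.
ns.register("print-service", "192.0.2.20", 5000)
moved_address = ns.resolve("print-service")
```

Because clients depend only on the name, the service can be relocated without any change to the clients, which is a simple form of location transparency.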

In recent years, distributed systems have come to dominate almost all business computing, including e-commerce, online banking, online stock brokerage, and a wide range of other Internet-based services, as well as a large fraction of nonbusiness computing, including gaming and e-government. Almost all applications are either distributed or at least use the services of networks in some form; an example of this latter category is a word processor which runs on the local computer but saves a file on a network drive (one that is held on a file server rather than the local machine), and which uses the Internet to access a remotely located help file. A question I ask my students at the beginning of each course is to consider the applications they use most commonly and to think about the underlying communication requirements of these. How many applications have you used this week that operate entirely on a single platform without any communication with another system?

When I first taught distributed systems concepts, I did so using the future tense to describe how they would become increasingly dominant due to their various characteristics and benefits. Now they are one of the most common types of software system in use. I can appreciate the number of lower-level activities that take place behind the scenes to carry out seemingly simple (from the user perspective) operations. I am aware of the underlying complexity involved and realize just how impressive the achievement of transparency has become, such that most of the time users are totally unaware of this underlying complexity and of the distribution itself.

This section has set the scene for this book. Distributed systems are here to stay and will be ever more dominant in all walks of our increasingly online and information-dependent lives. It is important to understand the complexities of these systems and to appreciate the need for careful design and development by an army of well-informed and highly skilled engineers. This book contributes to this effort by providing a solid introduction to the key concepts and challenges involved.

1.3 Introduction to Distributed Systems

This section provides an essential concise background to distributed systems. This serves as a brief primer and sets the scene for the four core chapters. Distributed systems concepts are discussed throughout the book, forming a backdrop to the core chapters and are examined in depth in Chapter 6. There are also three distributed application case studies, one of which runs throughout the core chapters and the remaining two are presented in depth in Chapter 7.

A distributed computing system is one where the resources used by applications are spread across numerous computers which are connected by a network. Various services are provided to facilitate the operation of distributed applications. This is in contrast to the simpler centralized model of computing in which resources are located on a single computer and the processing work can be carried out without any communication with, or dependency on, other computers.

A distributed application is one in which the program logic is spread across two or more software components which may execute on different computers within a distributed system. The components have to communicate and coordinate their actions in order to carry out the computing task of the application.

1.3.1 Benefits and Challenges of Distributed Systems

The distributed computer system approach has several important benefits which arise from the ability to use several computers simultaneously in cooperative ways. These benefits include:

• The ability to scale up the system (because additional resources can be added incrementally).

• The ability to make the system reliable (because multiple copies of resources and services can be spread around the system, a fault that prevents access to one replica of a resource or service can be masked by using another instance).

• The ability to achieve high performance (because the computation workload can be spread across multiple processing entities).

• The ability to geographically distribute processing and resources based on application-specific requirements and user locality.
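The reliability benefit in the second point relies on a client, or a layer acting on its behalf, falling back to another replica when one cannot be reached. The Python sketch below simulates this with plain function calls rather than real network requests; the replica functions, the use of `ConnectionError` to represent an unreachable instance, and the try-in-order policy are all assumptions chosen for illustration (real systems may instead select replicas by load or locality).

```python
def replica_a(request):
    # Simulates a failed instance that cannot be reached.
    raise ConnectionError("replica A unreachable")

def replica_b(request):
    return f"B handled {request}"

def replica_c(request):
    return f"C handled {request}"

def call_with_failover(replicas, request):
    """Try each replica in turn, masking individual failures from the caller."""
    failures = []
    for replica in replicas:
        try:
            return replica(request)
        except ConnectionError as err:
            failures.append(err)    # record the fault and move on to the next copy
    raise RuntimeError(f"all {len(failures)} replicas failed")

result = call_with_failover([replica_a, replica_b, replica_c], "read x")
```

The caller receives a normal result even though the first replica failed; only if every replica fails does the fault become visible.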

However, the fact that applications execute across several computers simultaneously also gives rise to a number of significant challenges for distributed computing. Some of the main challenges which must be understood and overcome when building distributed systems include:

• Distributed systems exhibit several forms of complexity, in terms of their structure, the communication and control relationships between components, and the behavior that results. This complexity increases with the scale of the system and makes it difficult to test systems and predict their behavior.

• Services and resources can be replicated; this requires special management to ensure that the load is spread across the resources and to ensure that updates to resources are propagated to all instances.

• Dynamic configuration changes can occur, both in the system resources and in the workload placed on the system. This can lead to abrupt changes in availability and performance.

• Resources can be dispersed and moved throughout the system but processes need to be able to find them on demand. Access to resources must therefore be facilitated by naming schemes and support services which allow for resources to be found automatically.

• Multiple processes access shared resources. Such access must be regulated through the use of special mechanisms to ensure that the resources remain consistent. Updates of a particular resource may need to be serialized to ensure that each update is carried out to completion without interference from other accesses.
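Serialization of updates, the last point above, can be illustrated on a single machine using threads as stand-ins for concurrent processes. In the Python sketch below, each update to a shared balance is a read-modify-write sequence; holding a `threading.Lock` for the whole sequence ensures that each update completes without interference from others. In a distributed system the same role would be played by a distributed locking or transaction mechanism rather than an in-memory lock; the `Account` class, thread count, and amounts are hypothetical.

```python
import threading

class Account:
    """Hypothetical shared resource whose updates must be serialized."""

    def __init__(self, balance=0):
        self.balance = balance
        self._lock = threading.Lock()

    def deposit(self, amount):
        # The lock makes the read-modify-write sequence atomic with respect to
        # other deposits, so no concurrent update can be lost.
        with self._lock:
            current = self.balance
            self.balance = current + amount

account = Account()

def worker():
    for _ in range(10_000):
        account.deposit(1)

threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

final_balance = account.balance   # 4 threads x 10,000 deposits of 1 each
```

Without the lock, two threads could read the same `current` value and one deposit would overwrite the other, leaving the resource inconsistent.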

1.3.2 The Nature of Distribution

Distributed systems can vary in character depending on which aspects of systems are distributed and the way in which the distribution is achieved. Aspects of systems which can be distributed include:

• Files: Distributed file systems were one of the earliest forms of distribution and are now commonplace. Files can be spread across the system to share the service load over multiple file server processes. Files can also be replicated, with copies located at multiple file server instances, to provide greater accessibility and to provide robustness if a single copy of a file is destroyed.

• Data: Distributed databases allow geographical dispersion of data so that it is local to the users who need it; this achieves lower access latency. The distribution also spreads the load across multiple servers and, as with file services, database contents can be replicated for robustness and increased availability.

• Operating System: The operating system can be distributed across multiple computers. The goal is to provide an abstract single computer system image in which a process appears to execute without direct binding to any particular host computer. Of course, the process instructions are physically executed on one particular host, but the details of this are masked by the process-to-operating system interface. Middleware essentially achieves the same thing one level higher, i.e., middleware is a virtual service layer that sits on top of traditional node-centric operating systems to provide a single system abstraction to processes.

• Security: Security and authentication mechanisms can be distributed across computers to ensure consistency and prevent weak entry points. A key benefit is that consistent management of security across the whole system facilitates better oversight and control.

• Workload: Processing tasks can be spread across the processing resources dynamically to share (even out) the load. The main goal is to improve overall performance by taking load from heavily utilized resources and placing it on underutilized resources. Great care must be taken to ensure that such schemes remain stable under all workload conditions (i.e. that load oscillation does not occur) and that the overheads of using such a scheme (the monitoring, management, and task transfer actions that occur) do not outweigh the benefits.

• Support services (services which support the operation of distributed systems) such as name services, event notification schemes, and systems management services can themselves be distributed to improve their own effectiveness.

• User applications can be functionally divided into multiple components and these components are distributed within a system for a wide variety of reasons. Typically, there will be a user-local component (which provides the user interface as well as some local processing and user-specific data storage and management), and one or more user-remote parts which are located adjacent to shared data and other resources. This aspect of distribution is the primary focus of this book.
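The stability concern raised above for workload distribution can be made concrete with a small sketch. It assumes a hypothetical threshold policy with hysteresis: a node sheds a task only while its load is above a high threshold, and only to the least-loaded node whose load is below a separate, lower threshold. The gap between the two thresholds is one common way to stop a task from being passed straight back (load oscillation). The class name and threshold values are illustrative assumptions, not taken from the book's resources.

```java
import java.util.Arrays;

// Hypothetical sender-initiated load balancer; thresholds are illustrative assumptions.
class LoadBalancer {
    private final int high; // a node sheds work only while its load exceeds this
    private final int low;  // ...and only onto a node whose load is below this

    LoadBalancer(int high, int low) {
        if (low >= high) throw new IllegalArgumentException("low must be < high");
        this.high = high;
        this.low = low;
    }

    // Performs one balancing round over per-node task counts and returns the
    // number of task transfers made (each transfer represents an overhead).
    int rebalance(int[] load) {
        int transfers = 0;
        for (int src = 0; src < load.length; src++) {
            while (load[src] > high) {
                int dst = leastLoaded(load);
                // Hysteresis: never move work onto a node that is not clearly
                // underutilized, so the task is not bounced straight back.
                if (load[dst] >= low) break;
                load[src]--;
                load[dst]++;
                transfers++;
            }
        }
        return transfers;
    }

    private static int leastLoaded(int[] load) {
        int best = 0;
        for (int i = 1; i < load.length; i++) {
            if (load[i] < load[best]) best = i;
        }
        return best;
    }

    public static void main(String[] args) {
        int[] load = {12, 1, 2, 6};
        int moved = new LoadBalancer(8, 4).rebalance(load);
        System.out.println(moved + " " + Arrays.toString(load)); // 4 [8, 4, 3, 6]
    }
}
```

Running `main` prints `4 [8, 4, 3, 6]`: four single-task transfers bring the overloaded first node down to the high threshold, after which balancing stops rather than continuing to shuffle work.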

1.3.3 Software Architectures for Distributed Applications

The architecture of an application is the structure by which its various components are interconnected. It is a very important factor that affects the application's overall quality and performance. The main aspects that affect the structure of software systems include:

• The number of types of components.

• The number of instances of each component type.

• The cardinality and intensity of interconnections between the component instances, with other components of the same type and/or of other types.

• Whether connectivity is statically decided or determined dynamically based on the configuration of the run-time system and/or the application context.

The simplest distributed systems comprise two components. When these are of the same type (i.e. each component has the same functionality and purpose), the architecture is described as peer-to-peer. Peer-to-peer applications often rely on ad hoc connectivity as components are dynamically discovered and also depart the system independently; this is especially the case in mobile computing applications in which peer-to-peer computing is particularly popular.

Client server applications comprise two distinct component types, where server components provide some sort of service to the client components, usually on an on-demand basis driven by the client. The smallest client server applications comprise a single component of each type. There can be multiple instances of either component type and most business applications that have this architecture have a relatively high ratio of clients to servers. This works on the basis of statistical multiplexing because client lifetimes tend to be short and collectively the requests they make to the server are dispersed over time.
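To make the client server relationship concrete, the following Java sketch runs a server component and short-lived client components in one process, communicating over TCP on the loopback interface. It is an illustrative assumption rather than the book's sample code: the hypothetical service simply returns each request line in upper case, the server handles a fixed number of clients one at a time, and the class names are invented for this example.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Server component: waits for client requests and serves each one on demand.
class UpperCaseServer implements Runnable {
    private final ServerSocket listener;
    private final int maxClients;

    UpperCaseServer(int maxClients) {
        this.maxClients = maxClients;
        this.listener = openListener();
    }

    // Port 0 asks the operating system for any free port on the loopback interface.
    private static ServerSocket openListener() {
        try {
            return new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    int port() { return listener.getLocalPort(); }

    @Override
    public void run() {
        try (ServerSocket s = listener) {
            for (int i = 0; i < maxClients; i++) {
                // One client at a time: read one request line, send one reply line.
                try (Socket client = s.accept();
                     BufferedReader in = new BufferedReader(new InputStreamReader(
                             client.getInputStream(), StandardCharsets.UTF_8));
                     PrintWriter out = new PrintWriter(new OutputStreamWriter(
                             client.getOutputStream(), StandardCharsets.UTF_8), true)) {
                    String request = in.readLine();
                    out.println(request == null ? "" : request.toUpperCase(Locale.ROOT));
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

// Client component: connects, makes a single request, and disconnects.
class UpperCaseClient {
    static String request(int port, String message) {
        try (Socket s = new Socket(InetAddress.getLoopbackAddress(), port);
             BufferedReader in = new BufferedReader(new InputStreamReader(
                     s.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(new OutputStreamWriter(
                     s.getOutputStream(), StandardCharsets.UTF_8), true)) {
            out.println(message);
            return in.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        UpperCaseServer server = new UpperCaseServer(2);
        Thread serverThread = new Thread(server);
        serverThread.setDaemon(true);
        serverThread.start();
        System.out.println(request(server.port(), "hello"));       // HELLO
        System.out.println(request(server.port(), "tic-tac-toe")); // TIC-TAC-TOE
    }
}
```

A production server would normally handle each connection on its own thread (or use nonblocking IO) so that one slow client cannot delay the others; the sequential loop is used here only to keep the sketch short.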

Applications that comprise three or more types of components are termed three-tier or multi-tier applications. The basic approach is to divide functionality on a finer-grained basis than with two-tier applications such as client server. The various areas of functionality (such as the user interface aspect, the security aspect, the database management aspect, and the core business logic aspect) can each be separated into one or more distinct components. This leads to flexible systems in which different types of components can be replicated independently of the other types, or relocated to balance availability and workload in the system.

Hybrid architectures can be created which combine concepts from the basic models. For example, in client server applications, the client components do not interact directly with each other and in many applications they do not interact at all. Where there is communication between clients, it occurs indirectly via the server. A hybrid could be created where clients communicate directly with other clients, i.e. adding a peer-to-peer aspect, but in such cases, great care must be taken to manage the trade-off between the added value to the application and the increased complexity and interaction intensity. In general, keeping the interaction intensity as low as possible and using hierarchical architectures rather than flat schemes leads to good outcomes in terms of scalability, maintainability, and extensibility of applications.
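A short calculation shows why the interaction intensity of such a hybrid grows much faster than that of a pure client server design. The sketch below counts potential communication links, a simplifying assumption that treats every pair of components that may communicate as one link: n clients need n links to a single server, whereas letting every client also talk directly to every other client adds n(n - 1)/2 more.

```java
// Rough illustration of interaction intensity: counts potential communication
// links among n clients and one server. Link counts are a simplifying proxy,
// assumed here for illustration only.
final class InteractionIntensity {
    // Pure client server: each client communicates only with the server.
    static long clientServerLinks(long clients) {
        return clients;
    }

    // Hybrid: every client additionally communicates directly with every other client.
    static long hybridLinks(long clients) {
        return clients + clients * (clients - 1) / 2;
    }

    public static void main(String[] args) {
        for (long n : new long[] {10, 100, 1000}) {
            System.out.printf("n=%d client-server=%d hybrid=%d%n",
                    n, clientServerLinks(n), hybridLinks(n));
        }
    }
}
```

For 1000 clients, the pure client server design has 1000 potential links, while the fully connected hybrid has 500500, which is why any direct client-to-client communication should be justified by the value it adds.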

1.3.4 Metrics for Measuring the Quality of Distributed Systems and Applications

Requirements of distributed applications fall into two categories: those that are related to the functional behavior of the application and those that are related to the overall quality of the application in terms of aspects such as responsiveness and robustness. The latter are called nonfunctional requirements because they cannot be implemented as functions. For example, you cannot write a software function called “responsiveness” which can control this characteristic; instead, characteristics such as responsiveness are achieved through the design of the entire system. There is a cyclic relationship in which almost all of the functional parts of an application typically contribute to the achievement of the nonfunctional aspects, which in turn influence the quality with which the functional requirements are met. It is thus essential that the nonfunctional requirements are given high priority at all stages of the design and development of distributed applications. In some respects, it is even more important to emphasize the nonfunctional requirements, because the functional requirements are more likely to be self-defining and thus less likely to be overlooked. For example, a functional requirement such as “back up all transaction logs at the end of each day” can be clearly stated, and specific mechanisms can be identified and implemented. The functionality can be easily tested and checked for conformance. However, it is not so straightforward to specify, and to ensure the achievement of, nonfunctional requirements such as scalability and robustness.

The functional requirements are application-specific and can relate to behavior or outcomes such as sequencing of activities, computational outputs, control actions, security restrictions, and so forth. These must be defined precisely in the context of a particular application, along with clear test specifications so that the developers can confirm the correct interpretation of the requirement and that the end-result operates correctly.

Nonfunctional requirements are generally less clearly defined and are, to some extent, open to interpretation. Typical nonfunctional requirements include scalability, availability, robustness, responsiveness, and transparency. While all of these requirements contribute to the measure of quality of the distributed application, it is transparency which is considered to be the most important in general. Transparency can be described at the highest level as “hiding the details of the multiplicity and distribution of components such that users are presented with the illusion of a single system.” Transparency provision at the system level also reduces the burden on application developers, so that they can focus their efforts on the business logic of the application and do not have to deal with the vast array of technical issues that arise because of distribution. For example, the availability of a name service removes the need for developers to build name-to-address resolution mechanisms into applications, thus reducing the complexity of applications as well as reducing development time and cost.

Transparency is a main cross-cutting theme in this book and various aspects of it are discussed in depth in several places. It is such a broad concept that it is subdivided into several forms, each of which plays a different role in achieving high quality systems.

1.3.5 Introduction to Transparency

Transparency can be categorized into a variety of forms, which are briefly introduced below. Transparency is revisited throughout the book: contextually, in relation to the various topics covered in the four core chapters, and in significant depth as a subject in its own right in Chapter 6.

1.3.5.1 Access Transparency

Access transparency requires that objects are accessed with the same operations regardless of whether they are local or remote. That is, the interface to access a particular object should be consistent for that object, no matter where it is actually stored in the system.
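The following Java sketch illustrates access transparency under simplifying assumptions. Application code is written against a single interface, which is implemented both by a local object and by a client-side proxy for a remote object. To keep the example self-contained, the "remote" server is an ordinary object that receives text request messages; in a real system the proxy would send those messages over a network connection. All class names and the tab-separated message format are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// One interface for local and remote objects: callers use the same operations.
interface KeyValueStore {
    String get(String key);
    void put(String key, String value);
}

// Local implementation: the data lives in this process.
class LocalStore implements KeyValueStore {
    private final Map<String, String> data = new HashMap<>();
    public String get(String key) { return data.get(key); }
    public void put(String key, String value) { data.put(key, value); }
}

// Hypothetical server-side object. In a real system it would run on another
// host and receive these text messages over the network.
class StoreServer {
    private final Map<String, String> data = new HashMap<>();

    String handle(String message) {
        String[] parts = message.split("\t", 3); // assumes keys contain no tab characters
        switch (parts[0]) {
            case "GET": return data.get(parts[1]);
            case "PUT": data.put(parts[1], parts[2]); return "OK";
            default: throw new IllegalArgumentException("unknown operation: " + parts[0]);
        }
    }
}

// Client-side proxy: same interface as LocalStore, but each call is
// marshalled into a request message and forwarded to the server.
class RemoteStoreProxy implements KeyValueStore {
    private final StoreServer server; // stands in for a network connection

    RemoteStoreProxy(StoreServer server) { this.server = server; }
    public String get(String key) { return server.handle("GET\t" + key); }
    public void put(String key, String value) { server.handle("PUT\t" + key + "\t" + value); }
}

class AccessTransparencyDemo {
    // Written once against the interface; it cannot tell local from remote.
    static String recordMove(KeyValueStore store) {
        store.put("lastMove", "X at 4");
        return store.get("lastMove");
    }

    public static void main(String[] args) {
        System.out.println(recordMove(new LocalStore()));                         // X at 4
        System.out.println(recordMove(new RemoteStoreProxy(new StoreServer()))); // X at 4
    }
}
```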

1.3.5.2 Location Transparency

Location transparency is the ability to access objects without knowledge of their location. This is usually achieved by making access requests based on an object’s name or ID which is known by the application making the request. A service within the system then resolves this name or ID reference into a current location.
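A minimal in-memory sketch of this name-resolution step follows. It is an assumption for illustration, not a real name service API: locations are plain "host:port" strings (using documentation addresses from the 192.0.2.0/24 range), and the hypothetical NameService class runs in the same process as its clients, whereas a real name service would itself be a remote service.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical minimal name service: binds logical names to current locations.
final class NameService {
    private final Map<String, String> bindings = new ConcurrentHashMap<>();

    // Called by a service when it starts, or when it moves to a new location.
    void register(String name, String location) {
        bindings.put(name, location);
    }

    // Called by clients, which only ever need to know the name.
    Optional<String> resolve(String name) {
        return Optional.ofNullable(bindings.get(name));
    }

    public static void main(String[] args) {
        NameService ns = new NameService();
        ns.register("TicTacToeServer", "192.0.2.10:8000");
        System.out.println(ns.resolve("TicTacToeServer").orElse("unknown")); // 192.0.2.10:8000

        // The server is relocated; clients keep using the same name.
        ns.register("TicTacToeServer", "192.0.2.11:8000");
        System.out.println(ns.resolve("TicTacToeServer").orElse("unknown")); // 192.0.2.11:8000
    }
}
```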

1.3.5.3 Replication Transparency

Replication transparency is the ability to create multiple copies of objects without any effect of the replication seen by applications that use the objects. It should not be possible for an application to determine the number of replicas, or to be able to see the identities of specific replica instances. All copies of a replicated data resource, such as files, should be maintained such that they have the same contents and thus any operation applied to one replica must yield the same results as if applied to any other replica.
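The Java sketch below shows the idea in its simplest form, assuming a single process and synchronous "write to all replicas" propagation; real systems must also cope with replica failure, network delay, and concurrent writers. Callers see one store with ordinary get and put operations, and no operation reveals how many replicas exist or which one answered. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch of replication transparency: callers see a single store.
final class ReplicatedStore {
    private final List<Map<String, String>> replicas = new ArrayList<>();
    private int next = 0; // round-robin cursor used to spread reads

    // The replica count is chosen by whoever deploys the store, not by its users.
    ReplicatedStore(int replicaCount) {
        if (replicaCount < 1) throw new IllegalArgumentException("need at least one replica");
        for (int i = 0; i < replicaCount; i++) {
            replicas.add(new HashMap<>());
        }
    }

    // Each update is applied to every replica so that all copies stay identical.
    synchronized void put(String key, String value) {
        for (Map<String, String> replica : replicas) {
            replica.put(key, value);
        }
    }

    // Reads rotate across replicas to share the load; any replica gives the same answer.
    synchronized String get(String key) {
        Map<String, String> replica = replicas.get(next);
        next = (next + 1) % replicas.size();
        return replica.get(key);
    }

    public static void main(String[] args) {
        ReplicatedStore store = new ReplicatedStore(3);
        store.put("score", "2-1");
        for (int i = 0; i < 4; i++) {
            System.out.println(store.get("score")); // "2-1" whichever replica answers
        }
    }
}
```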

1.3.5.4 Concurrency Transparency

Concurrency transparency requires that concurrent processes can share objects without interference. This means that the system should provide each user with the illusion that they have exclusive access to the objects. Mechanisms must be in place to ensure that the consistency of shared data resources is maintained despite being accessed and updated by multiple processes.
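Serializing updates is a common basis for this illusion. The Java sketch below uses threads within one process as a stand-in for concurrent processes, and a `ReentrantLock` from `java.util.concurrent.locks` to make each update a critical section; in a distributed system, a distributed locking or transaction mechanism would play the same role. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

// A shared resource whose updates are serialized with a lock, so each
// read-modify-write completes without interference from other threads.
final class SharedScore {
    private final ReentrantLock lock = new ReentrantLock();
    private int value = 0;

    void add(int delta) {
        lock.lock();
        try {
            // Not atomic on its own; the lock makes it atomic with respect to other add()/get() calls.
            value += delta;
        } finally {
            lock.unlock(); // always release, even if the update throws
        }
    }

    int get() {
        lock.lock();
        try {
            return value;
        } finally {
            lock.unlock();
        }
    }

    // Starts several threads that all update the same object, waits for them, returns the total.
    static int runConcurrentUpdates(int threads, int updatesPerThread) {
        SharedScore score = new SharedScore();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread worker = new Thread(() -> {
                for (int i = 0; i < updatesPerThread; i++) {
                    score.add(1);
                }
            });
            workers.add(worker);
            worker.start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return score.get();
    }

    public static void main(String[] args) {
        System.out.println(runConcurrentUpdates(4, 100_000)); // 400000
    }
}
```

Without the lock, `value += delta` is a separate read, add, and write, so two threads can read the same old value and one increment is lost; with the lock, the result is always the number of threads multiplied by `updatesPerThread`.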

1.3.5.5 Migration Transparency

Migration transparency requires that data objects can be moved without affecting the operation of applications that use those objects, and that processes can be moved without affecting their operations or results.

1.3.5.6 Failure Transparency

Failure transparency requires that faults are concealed such that applications can continue to function without any impact on behavior or correctness arising from the fault.
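One common way to conceal a fault is to retry a failed request on another replica. The Java sketch below assumes, hypothetically, that each replica can be modelled as a function that either returns a result or throws an exception, and that requests are idempotent, so repeating one on a second replica is harmless. It illustrates the failover idea only; it is not a complete fault-tolerance mechanism (for example, it does not detect replicas that are slow rather than crashed).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical failover wrapper: masks a replica failure by sending the
// same request to the next replica. Assumes requests are idempotent.
final class FailoverInvoker<Req, Resp> {
    private final List<Function<Req, Resp>> replicas;

    FailoverInvoker(List<Function<Req, Resp>> replicas) {
        if (replicas.isEmpty()) throw new IllegalArgumentException("no replicas");
        this.replicas = new ArrayList<>(replicas);
    }

    Resp invoke(Req request) {
        RuntimeException lastFault = null;
        for (Function<Req, Resp> replica : replicas) {
            try {
                return replica.apply(request);
            } catch (RuntimeException fault) {
                lastFault = fault; // hide this fault and try the next replica
            }
        }
        // Transparency has limits: if every replica fails, the caller must be told.
        throw new IllegalStateException("all replicas failed", lastFault);
    }

    public static void main(String[] args) {
        Function<String, String> crashed = req -> { throw new RuntimeException("replica 1 is down"); };
        Function<String, String> healthy = req -> "pong from replica 2: " + req;
        FailoverInvoker<String, String> invoker =
                new FailoverInvoker<>(Arrays.asList(crashed, healthy));
        System.out.println(invoker.invoke("ping")); // pong from replica 2: ping
    }
}
```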

1.3.5.7 Scaling Transparency

Scaling transparency requires that it should be possible to scale up an application, service, or system without changing the underlying system structure or algorithms. Achieving this is largely dependent on efficient design, in terms of the use of resources, and especially in terms of the intensity of communication.

1.3.5.8 Performance Transparency

Performance transparency requires that the performance of systems should degrade gracefully as the load on the system increases. Consistency of performance is important for predictability and is a significant factor in determining the quality of the user experience.

1.3.5.9 Distribution Transparency

Distribution transparency requires that the presence of the network and the physical separation of components are hidden such that distributed application components operate as though they are all local to each other (i.e. running on the same computer) and, therefore, do not need to be concerned with details of network connections and addresses.

1.3.5.10 Implementation Transparency

Implementation transparency means hiding the details of the ways in which components are implemented. For example, this can include enabling applications to comprise components developed in different languages in which case, it is necessary to ensure that the semantics of communication, such as in method calls, is preserved when these components interoperate.
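Part of preserving communication semantics between components written in different languages is agreeing on an exact wire representation for data. The Java sketch below shows one hypothetical message layout with explicitly stated field sizes, byte order, and character encoding; the layout is an assumption for illustration, not the format used by the book's case studies.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical language-neutral message layout:
//   [int32 message type][int32 body length][body as UTF-8 bytes]
// with integers in big-endian ("network") byte order. A C++ or C# component
// that follows the same documented layout can decode the message, whatever
// its own native byte order or string representation.
final class WireFormat {
    static byte[] encode(int type, String text) {
        byte[] body = text.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(8 + body.length)
                .order(ByteOrder.BIG_ENDIAN) // stated explicitly, although it is ByteBuffer's default
                .putInt(type)
                .putInt(body.length)
                .put(body)
                .array();
    }

    static int decodeType(byte[] message) {
        return ByteBuffer.wrap(message).order(ByteOrder.BIG_ENDIAN).getInt(0);
    }

    static String decodeText(byte[] message) {
        ByteBuffer buf = ByteBuffer.wrap(message).order(ByteOrder.BIG_ENDIAN);
        buf.position(4);           // skip the type field
        int length = buf.getInt(); // body length in bytes
        byte[] body = new byte[length];
        buf.get(body);
        return new String(body, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] msg = encode(7, "move");
        System.out.println(msg.length + " bytes, type " + decodeType(msg) + ", text " + decodeText(msg));
        // 12 bytes, type 7, text move
    }
}
```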

1.3.5.11 Achieving Transparency

The relative importance of the various forms of transparency is system-dependent and also application-dependent within systems. However, access and location transparencies can be considered as generic requirements in any distributed system. Sometimes, the provision of these two forms of transparency is a prerequisite step toward the provision of other forms (such as migration transparency and failure transparency). Some of the transparency forms will not be necessary in all systems; for example, if all parts of applications are developed with the same language and run on the same platform type, then implementation transparency becomes irrelevant.

The various forms of transparency are achieved in different ways, but in general they result from a combination of the design of the applications themselves and the provision of appropriate support services at the system level. A very important example of such a service is a name service, which is the basis for the provision of location transparency in many systems.

1.4 Introduction to the Case Studies

Distributed applications are multifaceted, multicomponent software systems which can exhibit rich and complex behaviors. It is therefore very important to anchor theoretical and mechanistic aspects to relevant examples of real applications. The writing of this book has been inspired by an undergraduate course entitled Systems Programming that has been developed and delivered over a period of 13 years. This course has a theory-meets-practice emphasis in which the lecture sessions introduce and discuss the various theory, concepts, and mechanisms, and then the students go into the laboratory to carry out programming tasks applying and investigating the concepts. A favorite coursework task for this course has been the design and development of a distributed game; specifically, it must be a game with a client server architecture that can be played across a network. The students are free to choose the game that they will implement, and it is made clear to them that the assessment is fundamentally concerned with the communication and software-architecture aspects, and not, for example, with graphical interface design (although many have been impressive in this regard too). By choosing their own game, the students take ownership and engage strongly in the task. The game theme injects a lot of fun into the laboratory sessions, and I am a keen advocate of making learning fun, but it should also be appreciated that the functional and nonfunctional requirements of a distributed game are similar to those of many distributed business applications, and thus the learning outcomes are highly transferable.

1.4.1 The Main (Distributed Game) Case Study

In keeping with the coursework theme described above, the main case study application used in the book is a distributed version of the game Tic-Tac-Toe (also known as Noughts-and-Crosses). The business logic and control logic for this game have been functionally divided across the client and server components. Multiple players, each using an instance of the client component, can connect to the game server and select other players to challenge in a game. The actual game logic is not particularly complex, and thus it does not distract from the architectural and communication aspects that are the main focus of the case study.

The case study runs through the core chapters of the book (the four viewpoints), placing the theory and concepts into an application context and also providing continuity and important cross-linking between those chapters. This approach encourages reflection across the different viewpoints and aids understanding of some of the design challenges, and of the potential conflicts between the various design requirements, that can arise when developing distributed applications.

The Tic-Tac-Toe game has been built into the Distributed Systems Workbench (see later in this chapter) so that you can explore the behavior of the application as you follow the case study explanation throughout the book. The entire source code is provided as part of the accompanying resources.

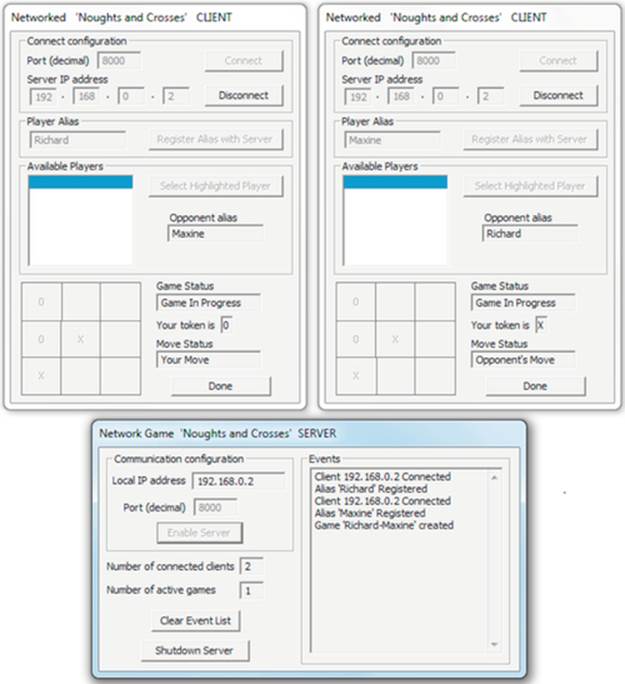

Figure 1.1 shows the distributed game in use; there are two client processes connected to a server process and a game is in play between the two clients, mediated by the server. For demonstration purposes, the game has been designed so that all components can run on a single computer, as was the case in the scenario illustrated. However, the normal use mode of the game is to run the different components on different computers.

FIGURE 1.1 The Tic-Tac-Toe game in play.

1.4.2 The Additional Case Studies

Due to the very wide scope of distributed systems, additional case studies are necessary to ensure that a variety of architectures, functionalities, and communication techniques are evaluated. Two additional case studies are therefore provided in the final chapter. These are covered in depth, taking the reader through the development life cycle with activities ranging from requirements analysis through to testing.

The first of these case studies is a time-service client which accesses the standard NIST time service using the Network Time Protocol. The second case study is an Event Notification Service (ENS), complete with a sample distributed application comprising event publisher and consumer components which communicate indirectly via the ENS. The application components have been developed in a variety of languages to facilitate exploration of interoperability and issues relating to heterogeneous systems.
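The core calculation performed by an NTP client is short enough to show here. The client records when its request leaves (t1) and when the reply arrives (t4); the server's reply carries the times at which it received the request (t2) and sent the reply (t3). The standard NTP formulas (RFC 5905) then estimate the clock offset and the round-trip delay, assuming that the outbound and return network delays are equal. The Java sketch below uses millisecond values invented for illustration rather than real NTP 64-bit timestamps, and the class name is hypothetical.

```java
// NTP clock offset and round-trip delay estimates (RFC 5905 formulas), using
// plain millisecond values instead of NTP's 64-bit timestamp format.
//   t1 = client send time    (client clock)
//   t2 = server receive time (server clock)
//   t3 = server send time    (server clock)
//   t4 = client receive time (client clock)
final class NtpCalc {
    // Estimated amount by which the server clock is ahead of the client clock.
    // Exact only if the outbound and return delays are equal.
    static double offsetMillis(long t1, long t2, long t3, long t4) {
        return ((t2 - t1) + (t3 - t4)) / 2.0;
    }

    // Time spent on the network, excluding the server's processing time (t3 - t2).
    static long delayMillis(long t1, long t2, long t3, long t4) {
        return (t4 - t1) - (t3 - t2);
    }

    public static void main(String[] args) {
        // Invented example: server clock 100 ms ahead, 20 ms each way, 5 ms server processing.
        long t1 = 1000, t2 = 1120, t3 = 1125, t4 = 1045;
        System.out.println("offset = " + offsetMillis(t1, t2, t3, t4) + " ms"); // offset = 100.0 ms
        System.out.println("delay  = " + delayMillis(t1, t2, t3, t4) + " ms");  // delay  = 40 ms
    }
}
```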

1.5 Introduction to Supplementary Material and Exercises

A significant amount of supplementary material accompanies this book. The supplementary material falls into several categories:

• Three complete case studies with full source code and detailed documentation.

• Extensive sample code and executable code to support practical activities in chapters and to support the programming challenges (including starting points for development and example solutions).

• Special editions of the established Workbenches suite of teaching and learning tools (see later in this chapter).

• Detailed documentation accompanying the Workbenches, describing further experiments and exploitation of the supported concepts and mechanisms.

• Teaching support materials including student coursework task specifications and introductory laboratory exercises to familiarize students with the basics of communication using the TCP and UDP protocols; these are based on provided sample code which students can extend.
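For readers who have not yet used sockets, the following minimal Java sketch shows the basic shape of UDP communication: one socket sends a single datagram and another receives it, both on the loopback interface of the same computer. It is an illustrative assumption, not the provided laboratory sample code; the 2-second receive timeout and the 1024-byte buffer are arbitrary choices.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;

// Minimal UDP exchange on the loopback interface.
final class UdpDemo {
    static String sendAndReceive(String message) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (DatagramSocket receiver = new DatagramSocket(0, loopback); // port 0: any free port
             DatagramSocket sender = new DatagramSocket()) {             // OS picks the sender's local port
            receiver.setSoTimeout(2000); // receive() blocks; give up after 2 s instead of waiting forever
            byte[] payload = message.getBytes(StandardCharsets.UTF_8);
            sender.send(new DatagramPacket(payload, payload.length, loopback, receiver.getLocalPort()));

            byte[] buffer = new byte[1024]; // a longer datagram would be truncated to this size
            DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
            receiver.receive(packet);
            return new String(packet.getData(), packet.getOffset(), packet.getLength(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(sendAndReceive("hello over UDP"));
    }
}
```

Because UDP is connectionless, there is no accept or connect step, and a lost datagram is simply never received; the timeout prevents the blocking `receive()` call from waiting indefinitely in that case.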

Due to the complex and dynamic nature of distributed systems, it can be challenging to convey details of their behavior, and the way in which design choices impact this behavior, to students. Inconsistencies can arise in the way students perceive different descriptions of behavior presented to them, for example:

• The theoretical behavior of a system (for example, as you might read in a book, instruction manual, or lecture presentation).

• An individual’s expectation of behavior, based on extrapolation of experience in other systems, or previous experience with the same system but with different configuration.

• The observed behavior of a system when an empirical experiment is carried out, especially since this can be sensitive to the system configuration and the exact method of carrying out the experiment, so the results obtained typically require some skill to interpret.

To understand in depth, the student needs to be able to see the way in which an application’s behavior is affected by the combination of its configuration and its run-time environment. This highlights why this book is structured as it is, with practical activities interspersed throughout. There is a risk when studying complex systems of gaining a superficial understanding based on a subset of the theory, without appreciating the underlying reasons why systems are as they are and behave as they do. The inclusion of experiments to provide a practical grounding for the theory concepts, and to make links between observed phenomena and their underlying causes, is therefore very important (see next section).

1.5.1 In-Text Activities

In-text activities are embedded into the main text of the book and form an important part of the theory-meets-practice approach. These practical activities are tightly integrated with the relevant sections of the discussion, and are intended to be carried out as a parallel activity to reading the book. The activities are identified using activity boxes which set them out in a consistent format, comprising:

• An explanation of the activity, the intended learning outcomes, and its relevance to the particular section of the book.

• Identification of which supplementary material is required.

• Description of the method for carrying out the activity.

• Evaluation, including discussion of the outcomes, results observed, and their implications.

• Reflection, questions and/or challenges to encourage further independent investigation and to reinforce understanding.

Activity I1 provides an example of the format of the in-text activities and explains how to access the book’s supporting resources from the companion Web site.

Activity I1

Accessing the Supplementary Material

The four core chapters (the process view, the communication view, the resource view, the architecture view) and the distributed systems chapter each contain embedded practical activities which should, where possible, be completed as the book is read. These activities have been designed to anchor key concepts and to allow the reader to explore more broadly, as many of the activities involve configurable experiments or simulations which support what-if exploration.

The activities are set out in a consistent format with identified learning outcomes, a clear method, expected results, and reflection. This first activity introduces the style of the subsequent activities which are embedded into the text of the other chapters. It has the same layout as those activities.

Prerequisites: Any specific prerequisites for the activity will be identified here; this activity has none.

Learning Outcomes

1. Familiarization with the style and purpose of the in-text activities.

2. Familiarization with the support resources.

Method: This activity is carried out in two steps:

1. Locate and visit the book’s online resources Web site.

i. The book’s online Web site is located at: http://booksite.elsevier.com/9780128007297.

ii. The resources are accessible via the “Resources” tab at the bottom of the page.

2. Copy resources locally to your computer.

Please note that all materials, programs, source code, and documentation are provided as is, in good faith but without guarantee or warranty. They are provided with the best intention of being a valuable resource to aid investigation and understanding of the textbook that they accompany.

The recommended way to use the supporting resources is to create a separate folder named “SystemsProgramming” on your computer to hold the resources that relate to each chapter, and to copy the resources across in their entirety.

Notes:

• Obtaining all of the resources at the outset will make the reading of the book flow smoothly without the interruption of regularly having to revisit the Web site.

• The executable applications, including the Workbenches, are designed to be downloaded onto your computer before running.

Expected Outcome

At the end of this activity, you should be aware of the nature and extent of the supporting resources available. You should know how to copy the resources to your own computer to facilitate their use.

Reflection

For each of the subsequent activities, there is a short reflection section which provides encouragement and steering for additional experimentation and evaluation.

1.6 The Workbenches Suite of Interactive Teaching and Learning Tools

The book is accompanied by a suite of sophisticated interactive teaching and learning applications (called Workbenches) that the author has developed over a period of 13 years to promote student-centered and flexible approaches to teaching and learning, and to enable students to work remotely, interactively and at their own pace. The Networking Workbench, Operating Systems Workbench, and Distributed Systems Workbench provide combinations of configurable simulations, emulations, and implementations to facilitate experimentation with many of the underlying concepts of systems. The Workbenches have been tried and tested with many cohorts of students and are currently used by several lecturers as complementary support for a number of courses. Specific technical content in the book is linked to particular exercises and experiments which can be carried out using the Workbench software tools. For example, there are specific experiments in the Networking Workbench that deal with addressing, buffering, blocking versus nonblocking socket IO modes, and the operation of the TCP and UDP protocols.

The motivation for developing the Workbenches came from my experience of teaching aspects of computer systems which are fundamentally dynamic in their behavior. It is very difficult to convey the dynamic aspects of systems such as communication (e.g. connectivity, message sequence), scheduling, or message buffering with traditional teaching techniques, especially in a classroom environment. It is also problematic to move too soon to a program-developmental approach, as inexperienced programmers get bogged down with the problems of programming itself (syntax errors, programming concepts, etc.) and often the networking or distributed systems learning outcomes are not reached or fully developed due to slow progression.

The Workbenches were developed to fill these gaps. Lecturers can demonstrate concepts with live experiments and simulations in lectures, for example, and students can explore the behaviors of protocols and mechanisms in live experiments without actually having to write the underlying code. A series of progressive activities has been developed to accompany the workbench engines, and these have been very well received by students. Specific advantages of the Workbenches include:

• The replacement of static representations of systems and systems behavior with dynamic, interactive, user-configured simulations (showing how components interact and also the temporal relationships within those interactions).

• Readers can learn at their own pace, with configurable and repeatable experiments.

• The Workbenches can be used in lectures and tutorials to demonstrate concepts, as well as in the laboratory and for unsupervised study, and thus reinforce the nature of the book as an ideal course guide.

• The Workbenches are accompanied by progressive laboratory exercises, designed to encourage analysis and critical thinking. As such, the tools bring the content of the book to life, and allow the reader to see first-hand the behaviors and effects of the various operating systems mechanisms, distributed systems mechanisms, and networking protocols.

A special Systems Programming edition of the Workbenches has been released specifically to accompany this book. This edition is also referred to as release 3.1.

1.6.1 Operating Systems Workbench 3.1 “Systems Programming Edition”

Configurable simulations of process scheduling and scheduling algorithms; threads, thread priorities, thread locking, and mutual exclusion; deadlock; and memory management and virtual memory.

1.6.2 The Networking Workbench 3.1 “Systems Programming Edition”

Practical experimentation with the UDP and TCP protocols; ports, addressing, binding, blocking and nonblocking socket IO modes; message buffering; unicast, multicast, and broadcast modes of communication; communication deadlock (distributed deadlock); and DNS name resolution.

1.6.3 Distributed Systems Workbench 3.1 “Systems Programming Edition”

Practical experimentation with an election algorithm; a directory service; a client server application (the distributed game that forms the main case study); one-way communication; and the Network Time Protocol (the network time service client case study in the final chapter).

1.7 Sample Code and Related Exercises

1.7.1 Source Code, in C++, C#, and Java

There are numerous complete applications and also some skeletal code, which readers can use as a starting point to begin developing their own applications and which also serves as the basis for some of the end of chapter exercises. There are examples written in various languages, and the event notification service case study purposefully demonstrates interoperability between components developed using three different languages.

1.7.2 Application Development Exercises

Chapters 2–7 include application development exercises related to the specific chapter’s content. Working examples are provided and, in some cases, these lead on from the in-text activities, so readers will already be familiar with the functionality of the application. The general approach encouraged is to start by examining the provided sample code and to relate the application’s behavior to the program logic. Understanding can be enhanced by compiling and single-stepping through the sample code instructions. Once a clear understanding has been achieved, the second step is to extend the functionality of the application by adding new features as set out in the specific task. Sample solutions are provided where appropriate.