Systems Programming: Designing and Developing Distributed Applications, FIRST EDITION (2016)

Chapter 2. The Process View

Abstract

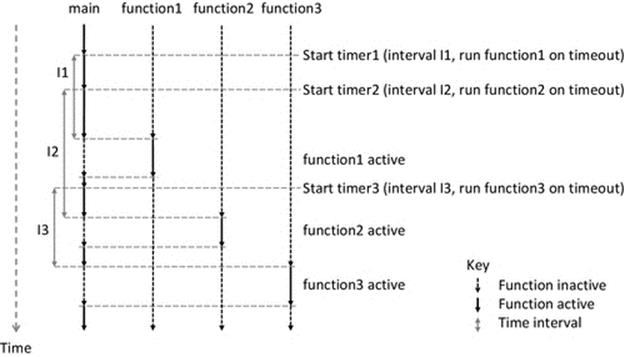

This chapter examines systems and communication within systems from the process viewpoint. The process is the central theme around which the concepts of modular systems and multiple communicating systems are presented and explained. Process scheduling and management are examined for both real-time and non-real-time applications. Communication is treated as input to and output from processes. The way that interprocess communication is facilitated, and the way that communication behavior may interact with process scheduling behavior, is discussed. Multithreading is introduced and discussed in terms of ensuring responsiveness of processes. The use of timers within processes to provide control and generate periodic tasks is investigated.

Keywords

Process

Scheduling

Threads

Thread scheduling

Sockets

Input stream

Output stream

Pipe

Process states

Workload characteristics

Deadline

Interprocess communication

Program

Real time scheduling

Priority

Preemption

Operating system

2.1 Rationale and Overview

The process is the ultimate endpoint of communication in distributed systems. Within a particular system or application, multiple processes execute as separate entities, scheduled by the operating system. However, for these processes to act cooperatively and coherently to solve application problems, they need to communicate. Understanding the nature of processes and the way they interact with the operating system is a key prerequisite to designing systems of processes that communicate to build higher-level structures and thus solve application-level problems of distributed systems.

The abbreviation IO will be used when discussing input and output in generic contexts.

2.2 Processes

This section examines the nature of processes and the way in which they are managed by operating systems. In particular, the focus is on the way in which input and output (IO) from devices is mapped onto the IO steams of a process and the way in which interprocess communication (IPC) is achieved with pipelines.

2.2.1 Basic Concepts

It is first necessary to introduce the basic concepts of processes and their interaction with systems. This sets the scene for the subsequent deeper investigation.

We start with the definition of a program.

A program is a list of instructions together with structure and sequence information to control the order in which the instructions are carried out.

Now let us consider the definition of a process.

A process is the running instance of a program.

This means that when we run (or execute) a program, a process is created (see below).

The most important distinction between a program and a process is that a program does not do anything; rather, it is a description of how to do something. When a process is created, it will carry out the instructions of the related program.

A set of instructions that are supplied with home-assembly furniture are a useful analogy to a computer program. They provide a sequenced (numbered) set of individual steps describing how to assemble the furniture. The furniture will not be assembled just because of the existence of the instructions. It is only when someone actually carries out the instructions, in the correct order, that the furniture gets built. The act of following the instructions step by step is analogous to the computer process.

Another important relationship between a program and a process is that the same program can be run (executed) many times, each time giving rise to a unique process. We can extend the home-assembly furniture analogy to illustrate; each set of furniture is delivered with a copy of the instructions (i.e., the same “program”), but these are instantiated (i.e., the instructions are carried out) at many different times and in different places, possibly overlapping in time such that two people may be building their furniture at the same time—unbeknownst to each other.

2.2.2 Creating a Process

The first step is to write a program that expresses the logic required to solve a particular problem. The program will be written in a programming language of your choice, for example, C++ or C#, enabling you to use a syntax that is suitably expressive to represent high-level ideas such as reading input from a keyboard device, manipulating data values, and displaying output on a display screen.

A compiler is then used to convert your human-friendly high-level instructions into low-level instructions that the microprocessor understands (thus the low-level instruction sequence that the compiler generates is called machine code). Things can go wrong at this point in two main ways. Syntax errors are those errors that break the rules of the language being used. Examples of syntax errors include misspelled variable names or keywords, incorrect parameter types, or incorrect numbers of parameters being passed to methods and many other similar mistakes (if you have done even a little programming, you can probably list at least three more types of syntax error that you have made yourself). These errors are automatically detected by the compiler and error messages are provided to enable you to locate and fix the problems. Thus, there should be no ongoing issues arising from syntax once you get the code to compile.

Semantic errors are errors in the presentation of your logic. In other words, the meaning as expressed by the logic of the program code is not the intended meaning of the programmer. These errors are potentially far more serious because generally, there will be no way for the compiler to detect them. For example, consider that you have three integer variables A, B, and C and you intend to perform the calculation C = A – B but you accidentally type C = B – A instead! The compiler will not find any errors in your code as it is syntactically correct, and the compiler certainly has no knowledge of the way your program is supposed to work and does not care what actual value or meaning is ascribed to variables A, B, and C. Semantic errors must be prevented by careful design and discipline during implementation and are detected by vigilance on the part of the developer, and this must be supported by a rigorous testing regime.

Let us assume we now have a logically correct program that has been compiled and stored in a file in the form of machine code that the microprocessor hardware can understand. This type of file is commonly referred to as an executable file, because you can “execute” (run) it by providing its name to the operating system. Some systems identify such files with a particular filename extension, for example, the Microsoft Windows system uses the extension “.exe”.

It is important to consider the mechanism for executing a program. A program is a list of instructions stored in a file. When we run a program, the operating system reads the list of instructions and creates a process that comprises an in-memory version of the instructions, the variables used in the program, and some other metadata concerning, for example, which instruction is to be executed next. Collectively, this is called the process image and the metadata and variables (i.e., the changeable parts of the image) are called the process state, as the values of these describe, and are dependent on, the state of the process (i.e., the progress of the process through the set of instructions, characterized by the specific sequence of input values in this run-instance of the program). The state is what differentiates several processes of the same program. The operating system will identify the process with a unique Process IDentifier (PID). This is necessary because there can be many processes running at the same time in a modern computer system and the operating system has to keep track of the process in terms of, among other things, who owns it, how much processing resource it is using, and which IO devices it is using.

The semantics (logic) represented by the sequence of actions arising from the running of the process is the same as that expressed in the program. For example, if the program adds two numbers first and then doubles the result, the process will perform these actions in the same order and will provide an answer predictable by someone who knows both the program logic and the input values used.

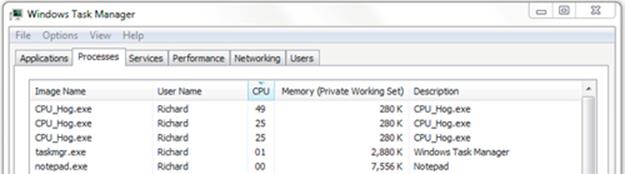

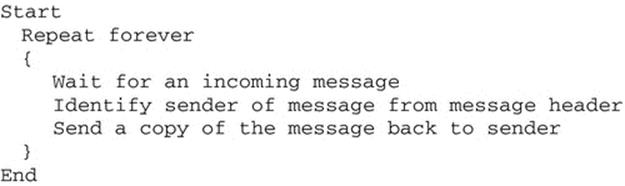

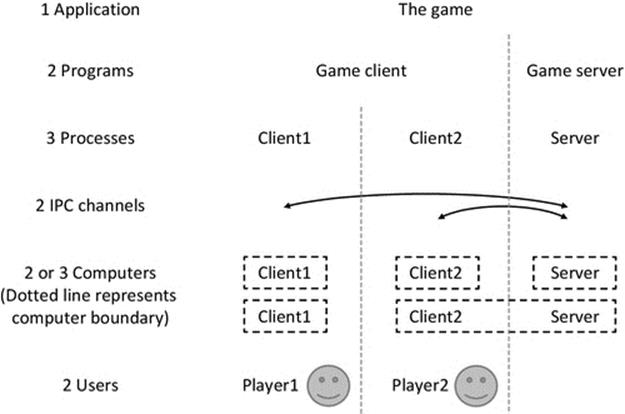

Consider the simplest program execution scenario as illustrated in Figure 2.1.

FIGURE 2.1 Pseudocode for a simple process.

Figure 2.1 shows the pseudocode for a very simple process. Pseudocode is a way of representing the main logical actions of the program, in a near-natural language way, yet still unambiguously, that is independent of any particular programming language. Pseudocode does not contain the full level of detail that would be provided if the actual program were provided in a particular language such as C++, C#, or Java, but rather is used as an aid to explain the overall function and behavior of programs.

The program illustrated in Figure 2.1 is a very restricted case. The processing itself can be anything you like, but with no input and no output, the program cannot be useful, because the user cannot influence its behavior and the program cannot signal its results to the user.

A more useful scenario is illustrated in Figure 2.2.

FIGURE 2.2 Pseudocode of a simple process with input and output.

This is still conceptually very simple, but now, there is a way to steer the processing to do something useful (the input) and a way to find out the result (the output).

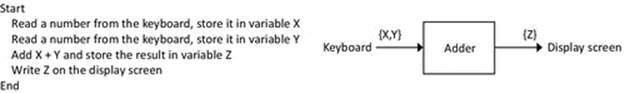

Perhaps the easiest way to build a mental picture of what is happening is to assume that the input device is a keyboard and the output device is the display screen. We could write a program Adder with the logic shown in Figure 2.3.

FIGURE 2.3 Two aspects of the process, the pseudocode and a block representation, which shows the process with its inputs and outputs.

If I type the number 3 and then the number 6 (the two input values), the output on the display screen will be 9.

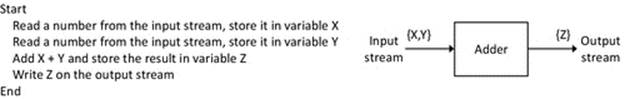

More accurately, a process gets its input from an input stream and sends its output to an output stream. The process when running does not directly control or access devices such as the keyboard and display screen; it is the operating system's job to pass values to and from such devices. Therefore, we could express the logic of program Adder as shown in Figure 2.4.

FIGURE 2.4 The Adder process with its input and output streams.

When the program runs, the operating system can (by default) map the keyboard device to the input stream and the display screen to the output stream.

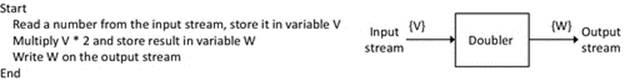

Let's define a second program Doubler (see Figure 2.5).

FIGURE 2.5 The Doubler process; pseudocode and representation with input and output streams.

We can run the program Doubler, creating a process with the input stream connected to the keyboard and the output stream connected to the display screen. If I type the number 5 (the input), the output on the screen will be 10.

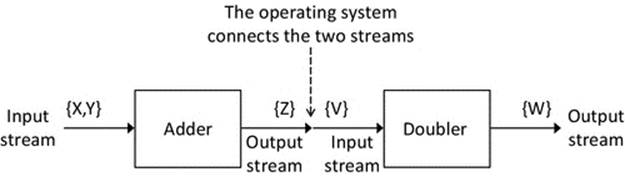

The concept of IO streams gives rise to the possibility that the IO can be connected to many different sources or devices and does not even have to involve the user directly. Instead of the variable W being displayed on the screen, it could be used as the input to another calculation, in another process. Thus, the output of one process becomes the input to another. This is called a pipeline (as it is a connector between two processes, through which data can flow) and is one form of IPC. We use the pipe symbol | to represent this connection between processes. The pipeline is implemented by connecting the output stream of one process to the input stream of another. Notice that it is the operating system that actually performs this connection, and not the processes themselves.

The Adder and Doubler processes could be connected such that the output of Adder becomes the input to Doubler, by connecting the appropriate steams. In this scenario, the input stream for process Adder is mapped to the keyboard, and the output stream for process Doubler is mapped to the display screen. However, the output stream for Adder is now connected to the input stream for Doubler, via a pipeline.

Using the pipe notation, we can write Adder | Doubler.

This is illustrated in Figure 2.6.

FIGURE 2.6 The Adder and Doubler processes connected by a pipe.

If I type the number 3 and then the number 6 (the two input values), the output on the display screen will be 18. The output of process Adder is still 9, but this is not exposed to the outside world (we can call this a partial result as the overall computation is not yet complete). Process Doubler takes the value 9 from process Adder and doubles it, giving the value 18, which is then displayed on the screen.

We have reached the first practical activity of this chapter. The activities are each set out in the form of an experiment, with identified learning outcomes and a method to follow.

I have specifically taken the approach of including carefully scoped practical tasks that the reader can carry out as they read the text because the learning outcomes are better reinforced by actually having the hands-on experience. The practical tasks are designed to reinforce the theoretical concepts introduced in the main text. Many of the practical tasks also support and encourage your own exploration by changing parameters or configuration to ask what-if questions.

The presentation of the activities also reports expected outcomes and provides guidance for reflection and in some cases suggestions for further exploration. This is so that readers who are unable to run the experiments at the time of reading can still follow the text.

The first Activity P1 provides an opportunity to investigate IPC using pipes.

The example in Activity P1 is simple, yet nevertheless extremely valuable. It demonstrates several important concepts that are dealt with in later sections:

Activity P1

Exploring Simple Programs, Input and Output Streams, and Pipelines

Prerequisites

The instructions below assume that you have previously performed Activity I1 in Chapter 1. This activity places the necessary supplemental resources on your default disk drive (usually C:) in the directory SystemsProgramming. Alternatively, you can manually copy the resources and locate them in a convenient place on your computer and amend the instructions below according to the installation path name used.

Learning Outcomes

This activity illustrates several important concepts that have been discussed up to this point:

1. How a process is created as the running instance of a program by running the program at the command line

2. How the operating system maps input and output devices to the process

3. How two processes can be chained together using a pipeline

Method

This activity is carried out in three steps:

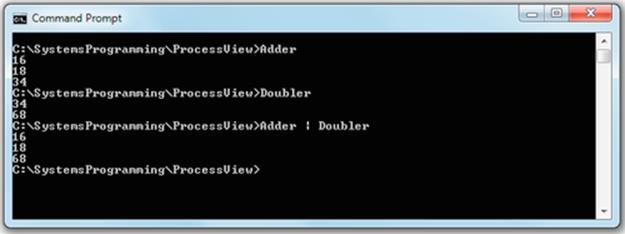

1. Run the Adder.exe program in a command window. Navigate to the ProcessView subfolder of the SystemsProgramming directory and then execute the Adder program by typing Adder.exe followed by the Enter key. The program will now run as a process. It waits until two numbers have been typed (each one followed by the Enter key) and then adds the numbers and displays the result, before exiting.

2. Run the Doubler.exe program in the same manner as for the Adder.exe program in step 1. The process waits for a single number to be typed (followed by the Enter key) and then doubles the number and displays the result, before exiting.

3. Run the Adder and Doubler programs in a pipeline. The goal is to take two numbers input by the user and to display double the sum of the two numbers. We can write this formulaically as:

![]()

where X and Y are the numbers entered by the user and Z is the result that is displayed. For example, if X = 16 and Y = 18, the value of Z will be 68.

The command-line syntax to achieve this is Adder | Doubler.

The first process will wait for two numbers to be typed by the user. The output of the Adder process will be automatically mapped onto the input of the Doubler process (because the | was used), and the Doubler process will double the value it receives, which is then output to the display screen.

The image below shows the results when the three steps above are followed:

Expected Outcome

For each of the first two steps, you will see that the program was executed as a process that follows the logic of the program (see the source code in file Adder.cpp and also Doubler.cpp). Notice that by default, the operating system automatically mapped the keyboard as the input device and the display screen as the output device although this was not specified in the program. You will also see how the operating system remaps the input and output streams of the processes when the pipeline is used. The individual programs are used as building blocks to construct more complex logic.

Reflection

1. Study the source code of the two programs and read the built-in comments.

2. Think about the different roles of the programmer who wrote the two programs, the operating system, and the user who types the command line and provides the input and how each influence the end result. It is important to realize that the overall behavior seen in this activity is a result of a combination of the program logic, the operating system control of execution, and the user's input data.

1. Processes can communicate; for example, the output from one process can become the input to another process.

2. In order for processes to communicate, there needs to be a mechanism to facilitate this. In the simple scenario here, the operating system facilitates the IPC by using the pipeline mechanism as a connector between the output stream of one process and the input stream of the other.

3. Higher-level functionality can be built up from simpler logic building blocks. In the example, the externally visible logic is to add two numbers and double the result; the user sees the final result on the display screen and does not need to know how it was achieved. This is the concept of modularity and is necessary to achieve sophisticated behavior in systems while keeping the complexity of individual logical elements to manageable levels.

2.3 Process Scheduling

This section examines the role the operating system plays in managing the resources of the system and scheduling processes.

A process is “run” by having its instructions executed in the central processing unit (CPU). Traditionally, general-purpose computers have had a single CPU, which had a single core (the core is the part that actually executes a process). It is becoming common in modern systems to have multiple CPUs and/or for each CPU to have multiple cores. Since each core can run a single process, a multiple core system can run multiple processes at the same time. For the purpose of limiting the complexity of the discussion in this book, we shall assume the simpler, single-core architecture, which means that only a single process can actually execute instructions at any instant (i.e., only one process is running at a time).

The most fundamental role of an operating system is to manage the resources of the system. The CPU is the primary resource, as no work can be done without it. Thus, an important aspect of resource management is controlling usage of the CPU (better known as process scheduling). Modern operating systems have a special component, the scheduler, which is specifically responsible for managing the processes in the system and selecting which one should run at any given moment. This is the most obvious and direct form of interaction between processes and the operating system, although indirect interaction also arises through the way in which the operating system controls the other resources that a particular process may be using.

Early computers were very expensive and thus were shared by large communities of users. A large mainframe computer may have been shared across a whole university, for example. A common way to accommodate the needs of many users was to operate in “batch mode.” This required that users submit their programs to be run into a “batch queue” and the system operators would oversee the running of these programs one by one. In terms of resource management, it is very simple; each process is given the entire resources of the computer, until either the task completes or a run-time limit may be imposed. The downside of this approach is that a program may be held in the queue for several hours. Many programs were run in overnight batches, which meant that the results of the run were not available until the next day.

The Apollo space missions to the moon in the 1960s and 1970s provide an interesting example of the early use of computers in critical control applications. The processing power of the onboard Apollo Guidance Computer1 (AGC) was a mere fraction of that of modern systems, often likened simplistically to that of a modern desktop calculator, although through sophisticated design, the limited processing power of this computer was used very effectively. The single-core AGC had a real-time scheduler, which could support eight processes simultaneously; each had to yield if another higher-priority task was waiting. The system also supported timer-driven periodic tasks within processes. This was a relatively simple system by today's standards, although the real-time aspects would still be challenging from a design and test viewpoint. In particular, this was a closed system in which all task types were known in advance and simulations of the various workload combinations could be run predeployment to ensure correctness in terms of timing and resource usage. Some modern systems are closed in this way, in particular embedded systems such as the controller in your washing machine or the engine management system in your car (see below). However, the general-purpose computers that include desktop computers, servers in racks, smartphones, and tablets are open and can run programs that the user chooses, in any combination; thus, the scheduler must be able to ensure efficient use of resources and also to protect the quality of service (QoS) requirements of tasks in respect to, for example, responsiveness.

Some current embedded systems provide useful examples of a fixed-purpose computer. Such a system only performs a design-time specified function and therefore does not need to switch between multiple applications. Consider, for example, the computer system that is embedded into a typical washing machine. All of the functionality has been predecided at design time. The user can provide inputs via the controls on the user interface, such as setting the wash temperature or the spin speed, and thus configure the behavior but cannot change the functionality. There is a single program preloaded

Activity P2

Examine List of Processes on the Computer (for Windows Operating Systems)

Prerequisites

Requires a Microsoft Windows operating system.

Learning Outcomes

1. To gain an understanding of the typical number of and variety of processes present on a typical general-purpose computer

2. To gain an appreciation of the complexity of activity in multiprocessing environment

3. To gain an appreciation of the need for and importance of scheduling

Method

This activity is performed in two parts, using two different tools to inspect the set of processes running on the computer.

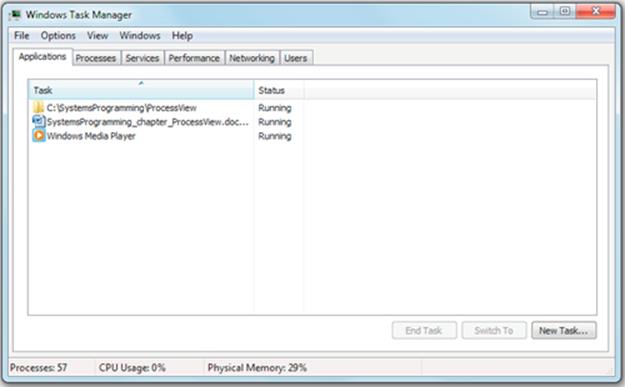

Part 1

Start the Task Manager by pressing the Control, Alt, and Delete keys simultaneously and select “Start Task Manager” from the options presented. Then, select the Applications tab and view the results, followed by the Processes tab, and also view the results. Make sure the “Show processes for all users” checkbox is checked, so that you see everything that is present. Note that this procedure may vary across different versions of the Microsoft operating systems.

Expected Outcome for Part 1

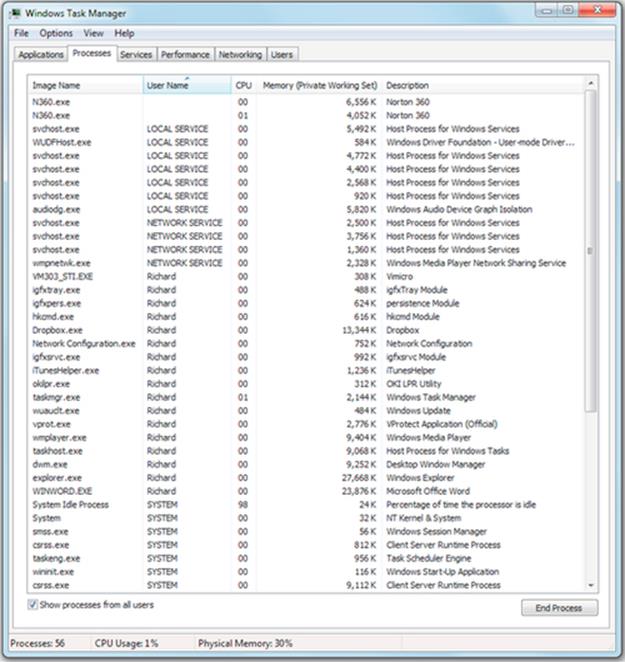

You will see a new window that contains a list of the applications running on the computer, or a list of the processes present, depending on which tab is selected. Examples from my own computer (which has the Windows 7 Professional operating system) are shown. The first screenshot shows the list of applications, and the second screenshot shows the list of processes.

Part 2

Explore the processes present in the system using the TASKLIST command run from a command window. This command gives a different presentation of information than that provided by the Task Manager. Type TASKLIST /? to see the list of parameters that can be used. Explore the results achieved using some of these configuration parameters to see what can you find out about the processes in the system and their current state.

Reflection

You will probably have a good idea which applications are running already (after all, you are the user), so the list of applications will be of no surprise to you. In the examples from my computer, you can see that I was editing a chapter from this book while listening to a CD via the media player application.

However, you will probably see a long list of processes when selecting the Processes tab. Look closely at these processes; is this what you expected? Do you have any idea what all these are doing or why they are needed?

Note that many of the processes running are “system” processes that are either part of the operating system or otherwise related to resource management; device drivers are a good example of this.

Note especially the “System Idle Process,” which in the example above “runs” for 98% of the time. This pseudoprocess runs when no real processes are ready to run, and the share of CPU time it gets is often used as an inverse indication of the overall load on the computer.

into these systems, and this program runs as the sole process. Many embedded systems platforms have very limited resources (especially in terms of having a small memory and low processing speed) and thus cannot afford the additional overheads of having an operating system.

Now, bearing in mind how many processes are active on your computer, consider how many processes can run at any given time. For older systems, this is likely to be just 1. For newer systems, which are “dual core,” for example, the answer is 2, or 4 if it is “quad core.” Even if we had perhaps 32 cores, we would typically still have more processes than cores.

Fundamentally, the need for scheduling is that there tend to be more processes active on a computer than there are processor units, which can deal with them. If this is the case, then something has to arbitrate; this is one of the most important things the operating system does.

2.3.1 Scheduling Concepts

Several different scheduling techniques (algorithms) have been devised. These have different characteristics, the most important differentiator being the basis on which tasks are selected to run.

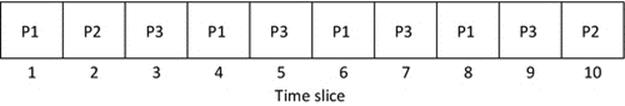

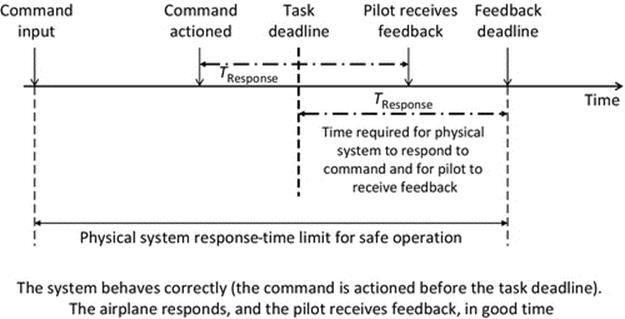

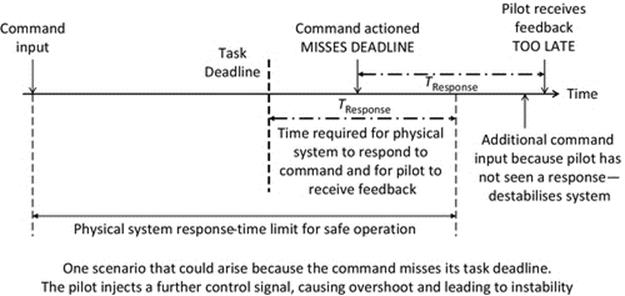

Given that generally there are more processes than processors, the processes can be said to be competing for the processor. To put it another way, the processes each have work to do and it is important that they eventually complete their work, but at any given moment, some of these may be more important, or urgent than others. The scheduler must ensure that wherever possible, the processor is always being used by a process, so that resource is not wasted. The performance of the scheduler is therefore most commonly discussed in terms of the resulting efficiency of the system, and this is measured in terms of the percentage of time that the processing unit is kept busy. “Keeping the processor busy” is commonly expressed as the primary goal of the scheduler. Figures 2.7 and 2.8illustrate this concept of efficiency.

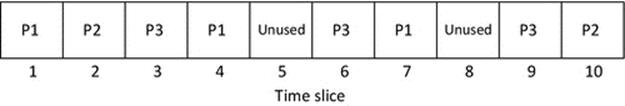

FIGURE 2.7 Schedule A uses 8 out of 10 timeslots (a system with three processes).

FIGURE 2.8 Schedule B uses 10 out of 10 timeslots (a system with three processes).

As shown in Figure 2.7, schedule A uses 8 of the 10 processor timeslots and is thus 80% efficient. The two timeslots that were not used are lost, that resource cannot be reclaimed. Another way to think of this situation is that this system is performing 80% of the work that it could possibly do, so is doing work at 80% of its maximum possible rate.

Figure 2.8 shows the ideal scenario in which every timeslot is used and is thus 100% efficient. This system is performing as much work as it could possibly do, so is operating its maximum possible rate.

2.3.1.1 Time Slices and Quanta

A preemptive scheduler will allow a particular process to run for a short amount of time called a quantum (or time slice). After this amount of time, the process is placed back in the ready queue and another process is placed into the run state (i.e., the scheduler ensures that the processes take turns to run).

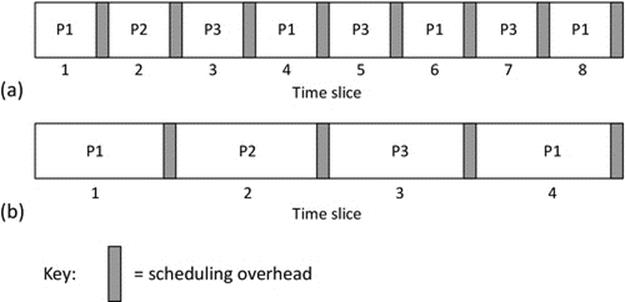

The size of a quantum has to be selected carefully. Each time the operating system makes a scheduling decision, it is itself using the processor. This is because the operating system comprises one or more processes and it has to use system processing time to do its own computations in order to decide which process to run and actually move them from state to state; this is called context switching and the time taken is the scheduling overhead. If the quanta are too short, the operating system has to perform scheduling activities more frequently, and thus, the overheads are higher as a proportion of total system processing resource.

On the other hand, if the quanta are too long, the other processes in the ready queue must wait longer between turns, and there is a risk that the users of the system will notice a lack of responsiveness in the applications to which these processes belong.

Figure 2.9 illustrates the effects of quanta size on the total scheduling overheads. Part (a) of the figure shows the situation that arises with very short quanta. The interleaving of processes is very fine, which is good for responsiveness. This means that the amount of time a process must wait before its next quanta of run time is short and thus the process appears to be running continuously to an observer such as a human user who operates on a much slower timeline. To understand this aspect, consider, as analogy, how a movie works. Many frames per second (typically 24 or more) are flashed before the human eye, but the human visual system (the eye and its brain interface) cannot differentiate between the separate frames at this speed and thus interpret the series of frames as a continuous moving image. If the frame rate is reduced to less than about 12 frames per second, a human can perceive them as a series of separate flashing images. In processing systems, shorter quanta give rise to more frequent scheduling activities, which absorbs some of the processing resource. The proportion of this overhead as a fraction of total processing time available increases as the quanta get smaller, and thus, the system can do less useful work in a given time period, so the optimal context switching rate is not simply the fastest rate that can be achieved.

FIGURE 2.9 Quantum size and scheduling overheads.

In part (b) of Figure 2.9, the quanta have been increased to approximately double the size of those shown in part a. This increases the coarseness of the process interleaving and could impact on the responsiveness of tasks, especially those that have deadlines or real-time constraints associated with their work. To get an initial understanding of the way this can materialize, imagine a user interface that has a jerky response, where it seems to freeze momentarily between dealing with your input. This jerky response could be a symptom that the process that controls the user interface is not getting a sufficient share of processing time or that the processing time is not being shared on a sufficiently fine-grained basis. Despite the impacts on task responsiveness that can arise with having larger quanta, they are more efficient because the operating system's scheduling activities occur less frequently, thus absorbing a smaller proportion of overall processing resource; for the scenario shown, the scheduling overhead for system (b) is half that of system (a).

Choosing the size of the time quantum is an optimization problem between on the one hand the overall processing efficiency and on the other hand the responsiveness of tasks. To some extent, the ideal value depends on the nature of the applications running in the system. Real-time systems (i.e., ones that have applications with a time-based functionality or dependency, such as streaming video where each frame must be processed with strict timing requirements) generally need to use shorter quanta to achieve finer interleaving and thus ensure the internal deadlines of applications are not missed. Specific scheduling issues arising from deadlines or real-time constraints of processes will be discussed in more detail later.

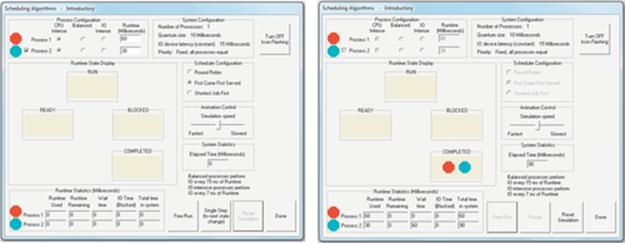

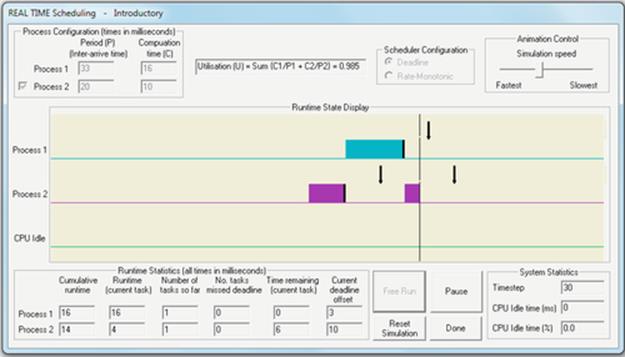

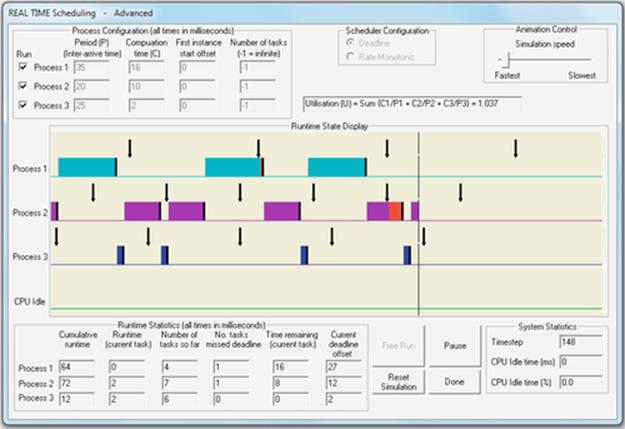

Activity P3

Examining Scheduling Behavior with Real Processes—Introductory

Prerequisites

The instructions below assume that you have obtained the necessary supplemental resources as explained in Activity P1.

Learning Outcomes

This activity explores the way in which the scheduler facilitates running several processes at the same time:

1. To understand that many processes can be active in a system at the same time

2. To understand how the scheduler creates an illusion that multiple processes are actually running at the same time, by interleaving them on a small timescale

3. To gain experience of command-line arguments

4. To gain experience of using batch files to run applications

Method

This activity is carried out in three parts, using a simple program that writes characters to the screen on a periodic basis, to illustrate some aspects of how the scheduler manages processes.

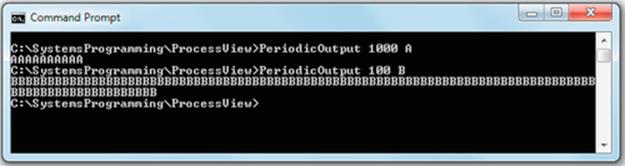

Part 1

1. Run the PeriodicOutput.exe program in a command window. Navigate to the “ProcessView” subfolder and then execute the PeriodicOutput program by typing PeriodicOutput.exe followed by the Enter key. The program will now run as a process. It will print an error message because it needs some additional information to be typed after the program name. Extra information provided in this way is termed “command-line arguments” and is a very useful and important way of controlling the execution of a program.

2. Look at the error message produced. It is telling us that we must provide two additional pieces of information, which are the length of the time interval (in ms) between printing characters on the display screen and the character that will be printed at the end-of-each time interval.

3. Run the PeriodicOutput.exe program again, this time providing the required command-line parameters.

For example, if you type PeriodicOutput 1000 A, the program will print a series of A's, one each second (1000 ms).

As another example, if you type PeriodicOutput 100 B, the program will print a series of B's, one every 1/10th of a second.

Experiment with some other values. Notice that the program will always run for 10 s (10,000 ms) in total, so the number of characters printed will always be 10,000 divided by the first parameter value. Examine the source code for this program (which is provided as part of the supplemental resources) and relate the program's behavior to the instructions.

Expected Outcome for Part 1

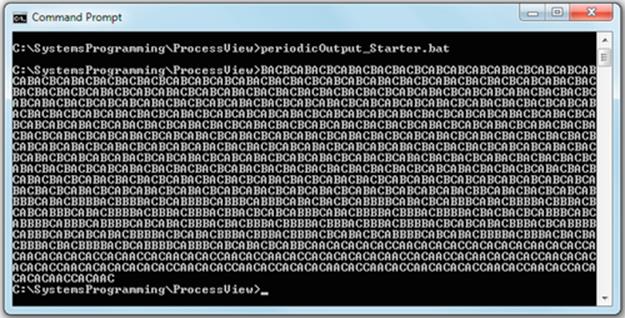

The screenshot below shows the output for a couple of different parameter settings. So far, we have only run one process at a time.

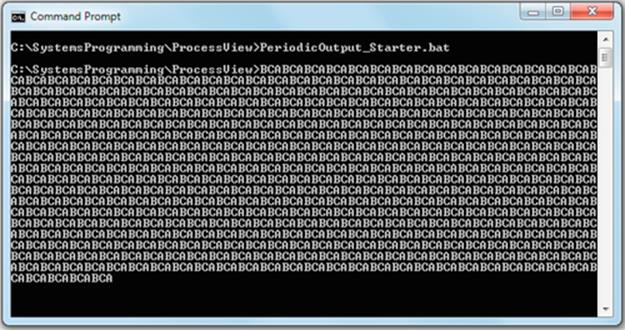

Part 2

1. We will now run several copies of the PeriodicOutput.exe program at the same time. To do this, we shall use a batch file. A batch file is a script containing a series of commands to be executed by the system; for the purpose of this activity, you can think of it as a metaprogram that allows us to specify how programs will be executed. The batch file we shall use is called “PeriodicOutput_Starter.bat”. You can examine the contents of this file by typing “cat PeriodicOutput_Starter.bat” at the command prompt. You will see that this file contains three lines of text, each of which is a command that causes the operating system to start a copy of the PeriodicOutput.exe program, with slightly different parameters.

2. Run the batch file by typing its name, followed by the Enter key. Observe what happens.

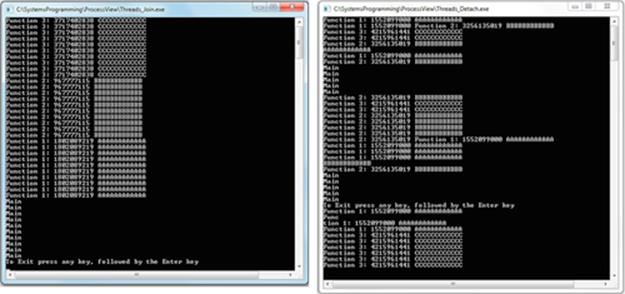

Expected Outcome for Part 2

You should see the three processes running simultaneously, evidenced by the fact that their outputs are interleaved such that you see an “ABCABC” pattern (perfect interleaving is not guaranteed and thus the pattern may vary). The screenshot below shows the typical output you should get.

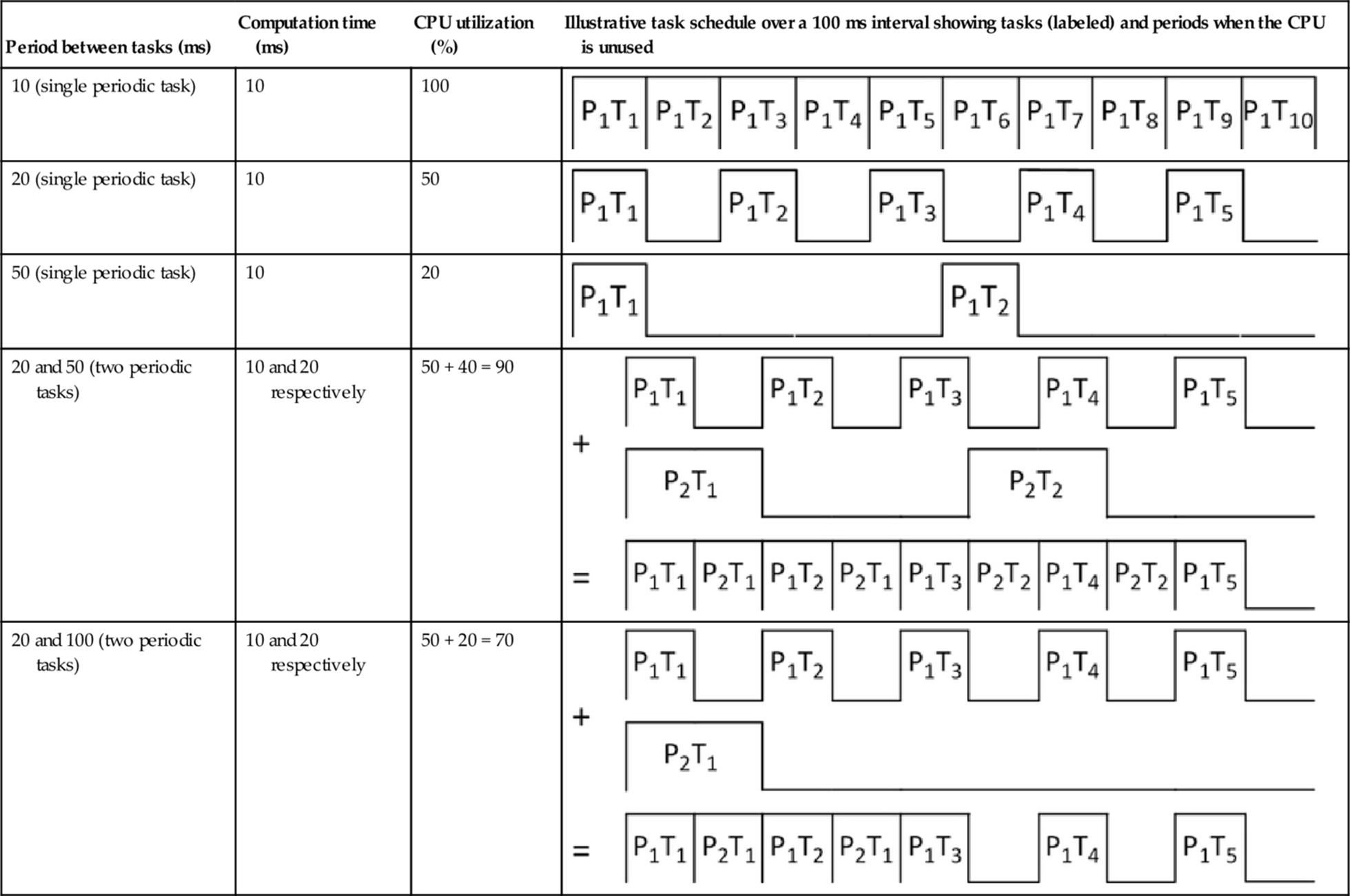

The batch file sets the intervals between printing characters to 20 ms for each of the three processes. This is on a similar scale to the size of a typical scheduling quantum and yields quite a regular interleaving (which arises because the three processes spend a relatively long time “sleeping” between their brief single-character-at-a-time output activity, giving the scheduler plenty of time to manage other activities in the system).

Part 3

Experiment with different settings by editing the batch file (use a simple text editor such as Notepad, and do not use a word processor as this may add special characters, which confuse the command interpreter part of the operating system). Try changing the time interval, or adding a few extra processes. In particular, try a shorter character printing interval for the three processes, such as 5 ms. The faster the rate of activity in the three processes, the less likely it becomes that the scheduler will achieve the regular interleaving we saw in part 2 above. Because the computer has other processes running (as we saw in Activity P2), we may not see a perfect interleaved pattern, and if we run the batch file several times, we should expect slightly different results each time; it is important that you realize why this is.

Reflection

You have seen that the simple interleaving achieved by running three processes from a batch file can lead to a regular pattern when the system is not stressed (i.e., when the processes spend most of their time blocked because they perform IO, between short episodes of running and becoming blocked once again). Essentially, there is sufficient spare CPU capacity in this configuration that each process gets the processing time it needs, without spending long in the ready state.

However, depending on the further experimentation you carried out in part 3, you will probably have found that the regularity of the interleaving in fact depends on the number of, and behavior of, other processes in the system. In this experiment, we have not stated any specific requirement of synchrony between the three processes (i.e., the operating system has no knowledge that a regular interleaving pattern is required), so irregularity in the pattern would be acceptable.

In addition to gaining an understanding of the behavior of scheduling, you should have also gained a level of competence with regard to configuring and running processes, the use of command-line arguments and batch files, and an understanding of the effects of the scheduler.

An interesting observation is that while over the past couple of decades central processor operating speeds (the number of CPU cycles per second) have increased dramatically, the typical range of scheduling quantum sizes has remained generally unchanged. One main reason for this is that the complexity of systems and the applications that run on them have also increased at high rates, requiring more processing to achieve their rich functionality. The underlying trade-off between responsiveness (smaller quantum size) and low overheads (larger quantum size) remains, regardless of how fast the CPU operates. Quantum sizes in modern systems are typically in the range of about 10 ms to about 200 ms, although smaller quanta (down to about 1 ms) are used on very fast platforms. A range of quantum sizes can be used as a means of implementing process priority; this works on the simple basis that higher-priority processes should receive a greater share of the processing resource available. Of course, if processes are IO-intensive, they get blocked when they perform IO regardless of priority.

Another main factor why quantum sizes have not reduced in line with technological advances is that human perception of responsiveness has not changed. It is important from a usability viewpoint that interactive processes respond to user actions (such as typing a key or moving the mouse) in a timely way, ideally to achieve the illusion that the user's application is running continuously, uninterrupted, on the computer. Consider a display-update activity; the behind-the-scenes processing requirement has increased due, for example, to higher-resolution displays and more complex windowing user-interface libraries. This means that a display update requires more CPU cycles in modern systems than in simpler, earlier systems.

For interactive processes, a quantum must be sufficiently large that the processing associated with the user's request can be completed. Otherwise, once the quantum ends, the user's process will have to wait, while other processes take turns, introducing delay to the user's process. In addition, as explained earlier, a smaller quantum size incurs a higher level of system overhead due to scheduling activity. So although CPU cycle speed has increased over time, the typical scheduling quantum size has not.

2.3.1.2 Process States

This section relates primarily to preemptive scheduling schemes, as these are used in the typical systems that are used to build distributed systems.

If we assume that there is only a single processor unit, then only one process can use the processor at any moment. This process is said to be running, or in the run state. It is the scheduler's job to select which process is running at a particular time and, by implication, what to do with the other processes in the system.

Some processes that are not currently running will be able to run; this means that all the resources they need are available to them, and if they were placed in the run state, they would be able to perform useful work. When a process is able to run, but not actually running, it is placed in the ready queue. It is thus said to be ready, or in the ready state.

Some processes are not able to run immediately because they are dependent on some resource that is not available to them. For example, consider a simple calculator application that requires user input before it can compute a result. Suppose that the user has entered the sequence of keys 5 x; clearly, this is an incomplete instruction and the calculator application must wait for further input before it can compute 5 times whatever second number the user enters. In this scenario, the resource in question is the keyboard input device, and the scheduler will be aware that the process is unable to proceed because it is waiting for input. If the scheduler were to let this process run, it would be unable to perform useful work immediately; for this reason, the process is moved to the blockedstate. Once the reason for waiting has cleared (e.g., the IO device has responded), the process will be moved into the ready queue. In the example described above, this would be when the user has typed a number on the keyboard.

Taking the Adder program used in Activity P1 as an example; consider the point where the process waits for each user input. Even if the user types immediately, say, perhaps one number each second, it takes 2 s for the two numbers to be entered; and this makes minimal allowance for user thinking time, which tends to be relatively massive compared to the computational time needed to process the data entered.

The CPU works very fast relative to human typing speed; even the slowest microcontroller used in embedded systems executes 1 million or more instructions per second.2 The top-end mobile phone microprocessor technology (at the time of writing) contains four processing cores, which each operate at 2.3 GHz, while a top-end desktop PC microprocessor operates at 3.6-4.0 GHz, also with four processing cores. These figures provide an indication of the amount of computational power that would be lost if the scheduler allowed the CPU to remain idle for a couple of seconds while a process such as Adder is waiting for input. As discussed earlier, scheduling quanta are typically in the range of 10-200 ms, which given the operating speed of modern CPUs represents a lot of computational power.

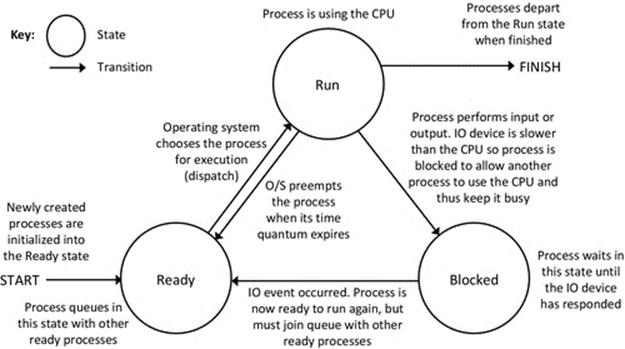

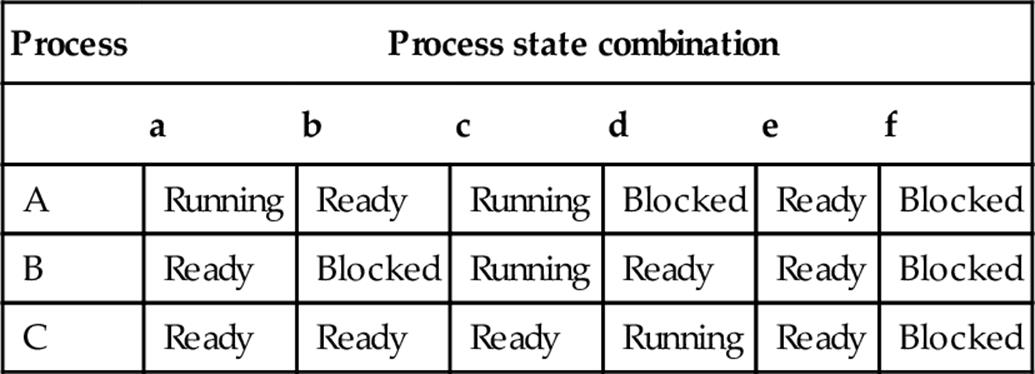

The three process states discussed above form the basic set required to describe the overall behavior of a scheduling system generically, although some other states also occur and will be introduced later. The three states (running, ready, and blocked) can be described in a state-transition diagram, so-called because the diagram shows the allowable states of the system and the transitions that can occur to move from one state to another. A newly created process will be placed initially in the ready state.

Figure 2.10 shows the three-state transition diagram. You should read this diagram from the perspective of a particular process. That is, the diagram applies individually to each process in the system; so, for example, if there are three processes {A, B, C}, we can describe each process as being in a particular state at any given moment. The same transition rules apply to all processes, but the states can be different at any given time.

FIGURE 2.10 The three-state state transition diagram.

The transitions shown are the only ones allowable. The transition from ready to running occurs when the process is selected to run, by the operating system; this is known as dispatching. The various scheduling algorithms select the next process to run based on different criteria, but the general essence is that the process selected either has reached the top of the ready queue because it has been waiting longest or has been elevated to the top because it has a higher priority than others in the queue.

The transition from running to ready occurs when the process has used up its time quantum. This is called preemption and is done to ensure fairness and to prevent starvation; this term is used to describe the situation where one process hogs the CPU, while another process is kept waiting, possibly indefinitely.

The transition from running to blocked occurs when the process has requested an IO operation and must wait for the slower (slow with respect to the CPU) IO subsystem to respond. The IO subsystem could be a storage device such as a hard magnetic disk drive or an optical drive (CD or DVD), or it could be an output device such as a printer or video display. The IO operation could also be a network operation, perhaps waiting for a message to be sent or received. Where the IO device is a user-input device such as a mouse or keyboard, the response time is measured in seconds or perhaps tenths of a second, while the CPU in a modern computer is capable of executing perhaps several thousand million instructions in 1 s (this is an incredible amount). Thus, in the time between a user typing keys, the CPU can perform many millions of instructions, thus highlighting the extent of wastage if the process were allowed to hog the CPU while idle.

The transition from blocked to ready occurs when the IO operation has completed. For example, if the reason for blocking was waiting for a user keystroke, then once the keystroke has been received, it will be decoded and the relevant key-code data provided on the input stream of the process.3 At this point, the process is able to continue processing, so it is moved to the ready state. Similarly, if the reason for blocking was waiting for a message to arrive over a network connection, then the process will be unblocked once a message has been received4 and placed into a memory buffer accessible to the process (i.e., the operating system moves the message into the process' memory space).

Note that when a process is unblocked, it does not reenter the running state directly. It must go via the ready state.

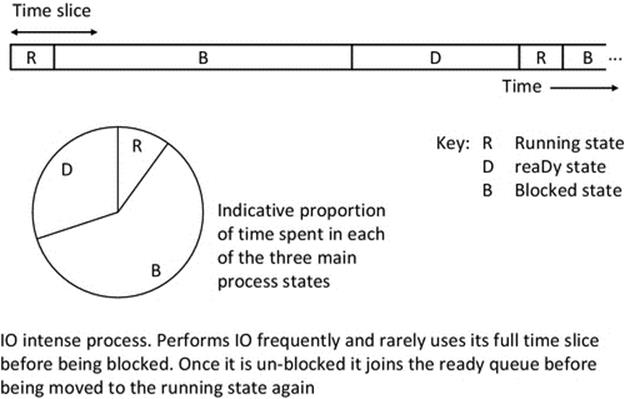

As explained above, blocking and unblocking are linked to actual events in the process' behavior and in the response of IO subsystems, respectively. To illustrate this, consider what takes place when a process writes to a file on a hard disk. A hard disk is a “block device”—this means that data are read from, and written to, the disk in fixed-size blocks. This block-wise access is because a large fraction of the access time is taken up by the read/write head being positioned over the correct track where the data are to be written and waiting for the disk to rotate to the correct sector (portion of a track) where the data are to be written. It would thus be extremely inefficient and require very complex control systems if a single byte were written at a time. Instead a block of data, of perhaps many kilobytes, is read or written sequentially once the head is aligned (the alignment activity is termed “seeking” or “head seek”). Disk rotation speeds and head movement speeds are very slow relative to the processing speeds of modern computers. So, if we imagine a process that is updating a file that is stored on the disk, we can see that there would be a lot of delays associated with the disk access. In addition to the first seek, if the file is fragmented across the disk, as is common, then each new block that is to be written requires a new seek. Even after a seek is complete, there is a further delay (in the context of CPU-speed operations) as the actual speed at which data are written onto the disk or read from it is also relatively very slow. Each time the process were ready to update a next block of data, it would be blocked by the scheduler. Once the disk operation has completed, the scheduler would move the process back into the ready queue. When it enters the running state, it will process the next section of the file, and then, as soon as it is ready to write to the disk again, it is blocked once more. We would describe this as an IO-intensive task and we would expect it to be regularly blocked before using up its full-time slices. This type of task would spend a large fraction of its time in the blocked state, as illustrated in Figure 2.11.

FIGURE 2.11 Run-time behavior illustration for an IO-intensive process.

Figure 2.11 illustrates the process state sequence for a typical IO-intensive process. The actual ratios shown are indicative, because they depend on the specific characteristics of the process and the host system (including the mix of other tasks present, which affects the waiting time).

The above example concerning access to a secondary storage system such as a disk drive raises a key performance issue, which must be considered during design of low-level application behavior, which affects not only its performance but also its robustness. Data held in memory while the program is running are volatile; this means that if the process crashes or the power is lost, the data are also lost. Secondary storage such as a magnetic hard disk is nonvolatile, or “persistent,” and thus will preserve the data after the process ends and even after the computer is turned off. Clearly, there is a conflict between ultimate performance and ultimate robustness, because the time-cost overheads of the most robust approach of writing data to disk every time there is a change are generally not tolerable from a performance point of view. This is a good example of a design trade-off to reach an appropriate compromise depending on the specific requirements of the application.

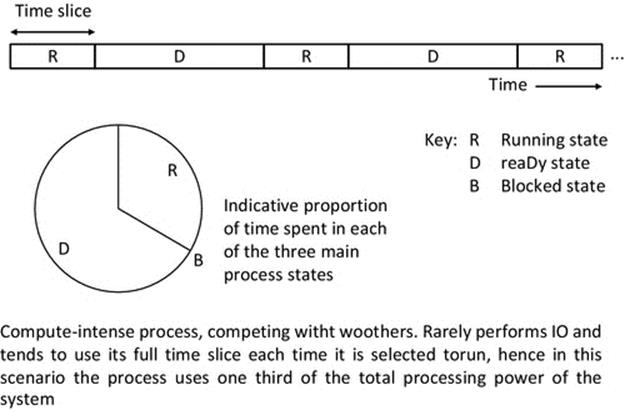

Compute-intensive processes tend to use their full-time slice and are thus preempted by the operating system to allow another process to do some work. The responsiveness of compute-intensive processes is thus primarily related to the total share of the system resources each gets. For example, if a compute-intensive process were the only process present, it would use the full resource of the CPU. However, if three CPU-intensive tasks were competing in a system, the process state behavior of each would resemble that shown in Figure 2.12.

FIGURE 2.12 Run-time behavior illustration for a compute-intensive process competing with two similar processes.

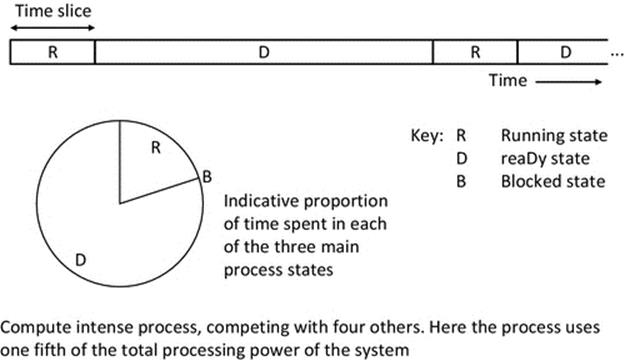

One further example is provided to ensure the behavior is clear. If a compute-intensive process competes with four similar processes, each will account for one-fifth of the total computing resource available, as illustrated in Figure 2.13.

FIGURE 2.13 Run-time behavior illustration for a compute-intensive process competing with four similar processes.

2.3.1.3 Process Behavior

IO-Intensive Processes

Some processes perform IO frequently and thus are often blocked before using up their quantum; these are said to be IO-intensive processes. An IO-intensive program not only could be an interactive program where the IO occurs between a user and the process (via, e.g., the keyboard and display screen)

Activity P4

Examining Scheduling Behavior with Real Processes—Competition for the CPU

Prerequisites

The instructions below assume that you have obtained the necessary supplemental resources as explained in Activity P1.

Learning Outcomes

This activity explores the way in which the scheduler shares the CPU resource when several competing processes are CPU-intensive:

1. To understand that the CPU resource is finite

2. To understand that the performance of one process can be affected by the other processes in the system (which from its point of view constitute a background workload with which it must compete for resources).

Method

This activity is carried out in four parts and uses a CPU-intensive program, which, when running as a process, performs continual computation without doing any IO and thus never blocks. As such, the process will always fully use its time slice.

Part 1 (Calibration)

1. Start the Task Manager as in Activity P2 and select the “Processes” tab. Sort the display in order of CPU-use intensity, highest at the top. If there are no compute-intensive tasks present, the typical highest CPU usage figure might be about 1%, while many processes that have low activity will show as using 0% of the CPU resource. Leave the Task Manager window open, to the side of the screen.

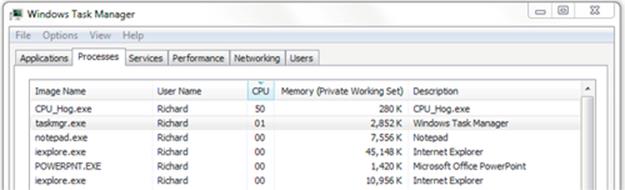

2. Run the CPU_Hog.exe program in a command window. Navigate to the “ProcessView” subfolder of the SystemsProgramming folder and then execute the CPU_Hog program by typing CPU_Hog.exe followed by the Enter key. The program will now run as a process. Examine the process statistics in the Task Manager window while the CPU_Hog process runs.

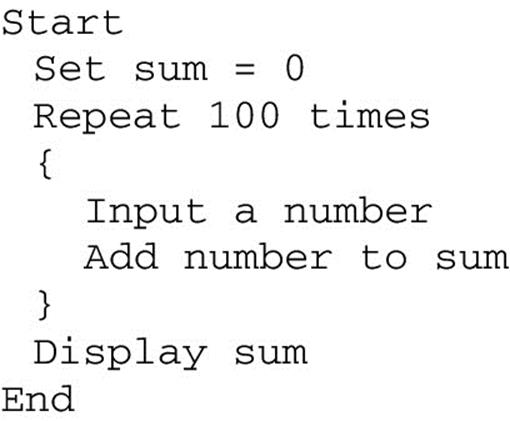

The screenshot below shows that the CPU_Hog takes as much CPU resource as the scheduler gives it, which in this case is 50%.

3. Run the CPU_Hog process again, and this time, record how long it takes to run, using a stopwatch. The program performs a loop a large fixed number of times, each time carrying out some computation. The time taken will be approximately the same each time the process is run, on a particular computer, as long as the background workload does not change significantly. It is necessary that you time it on your own computer to get a baseline for the subsequent experiments in this activity and that you do this without any other CPU-intensive processes running. Timing accuracy to the nearest second is adequate. As a reference, it took approximately 39 s on my (not particularly fast) computer.

Part 2 (Prediction)

1. Taking into account the share of CPU resource used by a single instance of the CPU_Hog, what do you think will happen if we run two instances of this program at the same time? How much share of the CPU resource do you think each copy of the program will get?

2. Based on the amount of time that it took for a single instance of the CPU_Hog to run, how long will each copy take to run if two copies are running at the same time?

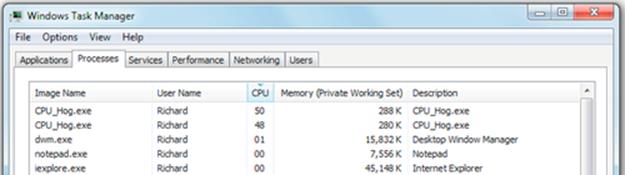

3. Now, use the batch file named “CPU_Hog_2Starter.bat” to start two copies of CPU_Hog at the same time. Use the Task Manager window to observe the share of CPU resource that each process gets, and don't forget to also measure the run time of the two processes (when they have completed, you will see them disappear from the list of processes).

The screenshot below shows that each CPU_Hog process was given approximately half of the total CPU resource. Is this what you expected?

Expected Outcome for Part 2

In my experiment, the processes ran for approximately 40 s, which is what I had expected, on the basis that each process had very slightly less than 50% (on average) of the CPU resource, which was essentially the same as the single process had had in part 1. The fact that the processes took very slightly longer to run than the single process had taken is important. This slight difference arises because the other processes in the system, while relatively inactive, do still use some processing resource and, importantly, the scheduling activity itself incurs overheads each time it switches between the active processes. These overheads were absorbed when the CPU was idle for nearly 50% of the time but show up when the resource is fully utilized.

Part 3 (Stressing the System)

It would not satisfy my inquisitive nature to leave it there. There is an obvious question that we should ask and try to answer: If a single instance of CPU_Hog takes 50% of the CPU resource when it runs and two copies of CPU_Hog take 50% of the CPU resource each, what happens when three or even more copies are run at the same time?

1. Make your predictions—for each of the three processes, what will be the share of CPU resource? And what will be the run time?

2. Use the batch file named “CPU_Hog_3Starter.bat” to start three copies of CPU_Hog at the same time. As before, use the Task Manager window to observe the share of CPU resource that each process gets, and also remember to measure the run time of the three processes (this may get trickier, as they might not all finish at the same time, but an average timing value will suffice to get an indication of what is happening).

The screenshot below shows the typical results I get.

Expected Outcome for Part 3

Interestingly, the first process still gets 50% of the CPU resource and the other two share the remaining 50%. Before the experiment, I had expected that they would get 33% of the resource each. There are almost certainly other schedulers out there for which that would have been the case; but with distributed systems, you won't always know or control which computer your process will run on, or which scheduler will be present, or the exact run-time configuration including workload, so this experiment teaches us to be cautious when predicting run-time performance even in a single system, let alone in heterogeneous distributed systems.

Coming back to our experiment, this result means that the first process ends sooner than the other two. Once the first process ends, the remaining two are then given 50% of the CPU resource each, so their processing rate has been speeded up. I recorded the following times: first process, 40 s; second and third processes, approximately 60 s. This makes sense; at the point where the first process ends, the other two have had half as much processing resource each and are therefore about halfway through their task. Once they have 50% of the CPU resource each, it takes them a further 20 s to complete the remaining half of their work—which is consistent.

Part 4 (Exploring Further)

I have provided a further batch file “CPU_Hog_4Starter.bat” to start four copies of CPU_Hog at the same time. You can use this with the same method as in part three to explore further.

The screenshot below shows the behavior on my computer.

In this case, each process used approximately 25% of the CPU resource and each took approximately 80 s to run—which is consistent with the earlier results.

Reflection

This experiment illustrates some very important aspects of scheduling and of the way in which the behavior of the scheduler and the behavior of the processes interact. This includes the complexities of predicting run times and the extent to which the behavior of schedulers may be sensitive to the mix of processes in the system.

Firstly, we see that the CPU resource is finite and must be shared among the processes in the system. We also see that as some processes complete, the share of resource can be reallocated to the remaining processes. It is also important to realize that a process will take a predictable amount of CPU run time to execute. However, because the process gets typically less than 100% of the CPU resource, its actual execution time (its time in the system, including when it is in the ready queue) is greater than its CPU run time, and the overall execution time is dependent on the load on the system—which in general is continually fluctuating. These experiments have scratched the surface of the complexity of scheduling but provide valuable insight into the issues concerned and equip us with the skills to explore further. You can devise further empirical experiments based on the tools I have provided (e.g., you can edit the batch files to fire off different combinations of programs), or you can use the Operating Systems Workbench scheduling simulations that support flexible experimentation over a wide range of conditions and process combinations and also allow you to decide which scheduler to use. The introductory scheduling simulation provides configurations with three different schedulers and workload mixes of up to five processes of three different types. The advanced scheduling simulation supports an additional scheduling algorithm and facilitates configuration of the size of the CPU scheduling quantum and also the device latency for IO-intensive processes. The workbench will be introduced later in the chapter.

but also may perform its IO to other devices including disk drives and the network—for example, it may be a server process that receives requests from a network connection and sends responses back over the network.

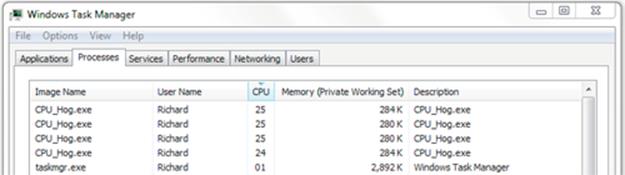

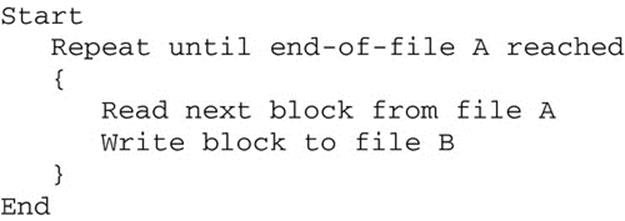

Examples of IO-intensive programs are shown in the pseudocode in Figure 2.14 (user-interactive IO), Figure 2.15 (disk-based IO), and Figure 2.16 (network-based IO).

FIGURE 2.14 The Summer IO-intensive (interactive) program (computes the sum of 100 numbers).

FIGURE 2.15 The FileCopy IO-intensive (disk access) program (copy from one file to another).

FIGURE 2.16 NetworkPingService IO-intensive (network communication) program (echo back a received message to the sender).

Figure 2.14 shows the pseudocode of an interactive IO-intensive process that spends a very large proportion of its time waiting for input. The time to perform the add operation would be much less than a millionth of a second, so if the user enters numbers at a rate of 1 per second, the program could spend greater than 99.9999% of its time waiting for input.

Figure 2.15 shows pseudocode for a disk IO-intensive program that spends most of its time waiting for a response from the hard disk; each loop iteration performs two disk IO operations.

Figure 2.16 shows pseudocode for a network IO-intensive program that spends most of its time waiting for a message to arrive from the network.

Figures 2.14–2.16 provide three examples of processes that are IO-driven and the process spends the vast majority of its time waiting (in blocked state). Such processes will be blocked each time they make an IO request, which means that they would not fully use their allocated quanta. The operating system is responsible for selecting another process from the ready queue to run as soon as possible to keep the CPU busy and thus keep the system efficient. If no other process were available to run, then the CPU time would be unused and the system efficiency falls.

Compute-Intensive (or CPU-Intensive) Processes

Compute-intensive processes are ones that perform IO rarely, perhaps to read in some initial data from a file, for example, and then spend long periods processing the data before producing the result at the end, which requires minimal output. Most scientific computing using techniques such as computational fluid dynamics (CFD) and genetic programming in applications such as weather forecasting and computer-based simulations fall into this category. Such applications can run for extended periods, perhaps many hours without necessarily performing any IO activities. Thus, they almost always use up their entire allocated time slices and rarely block.

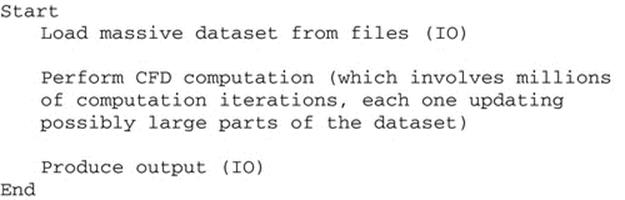

Figure 2.17 shows pseudocode for a compute-intensive program where IO is performed only at the beginning and end. Once the initial IO has been performed, this process would be expected to use its quanta fully and be preempted by the operating system, thus predominantly moving between the running and ready states.

FIGURE 2.17 WeatherForecaster compute-intensive program (perform extensive CFD computation on a massive dataset).

Balanced Processes

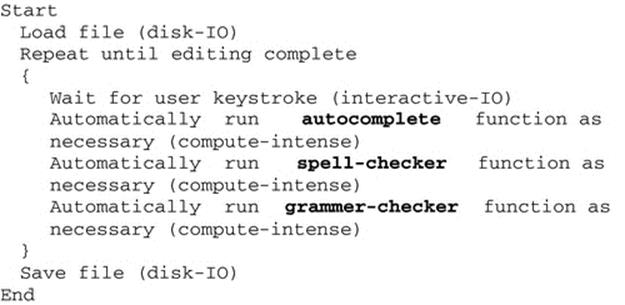

The term “balanced” could be used to describe a process that not only uses the CPU moderately intensively but also performs IO at a moderate level. This terminology is used in the Operating Systems Workbench (see the accompanying resources). An example of this category of program could be a word processor that spends much of its time waiting for user input but that also incorporates computationally intense activities such as spell-checking and grammar checking. Such functionality may be activated automatically or on demand by the user and require short bursts of significant amounts of processing resource.

Figure 2.18 shows pseudocode for a “balanced” program the behavior of which is sometimes IO-intensive and sometimes compute-intensive. Such a process would have periods where it spends much of its time in the blocked state, but there would also be periods where it moves predominantly between the run and ready states.

FIGURE 2.18 Word processor “balanced” program (alternates between IO-intensive behavior and bursts of high-intensity computation).

2.3.1.4 Scheduler Behavior, Components, and Mechanisms

The act of moving a process from the ready state to the running state is called “dispatching,” and this is performed by a subcomponent of the scheduler called the dispatcher. The dispatcher must ensure the appropriate preparation is carried out so that the process can operate correctly. This involves restoring the process' state (e.g., the stack and also the program counter and other operating system structures) so that the process' run-time environment (as seen by the process itself) is exactly the same as it was when the process was last running, immediately before it was preempted or blocked. This changing from the running context of one process to the running context of another is called a context switch. This is the largest component of the scheduling overhead and must be done efficiently because the CPU is not performing useful work until the process is running again. As the program counter is restored to its previous value in the process' instruction sequence, the program will continue running from the exact place it was interrupted previously. Note that this is conceptually similar to the mechanism of returning from a function (subroutine) call. The dispatcher is part of the operating system, and so it runs in what is called “privileged mode”; this means it has full access to the structures maintained by the operating system and the resources of the system. At the end-of-doing its work, the dispatcher must switch the system back into “user mode,” which means that the process that is being dispatched will only have access to the subset of resources that it needs for its operation and the rest of the system's resources (including those owned by other user-level processes) are protected from, and effectively hidden from, the process.

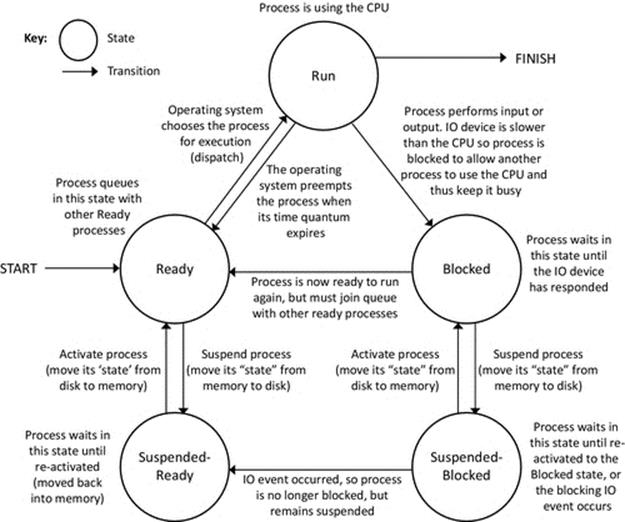

2.3.1.5 Additional Process States: Suspended-Blocked and Suspended-Ready

The three-state model of process behavior discussed above is a simplification of actual behavior in most systems; however, it is very useful as a basis on which to explain the main concepts of scheduling. There are a few other process states that are necessary to enable more sophisticated behavior of the scheduler. The run, ready, and blocked set of states are sufficient to manage scheduling from the angle of choosing which process should run (i.e., to manage the CPU resource), but do not provide flexibility with respect to other resources such as memory. In the three-state model, each process, once created, is held in a memory image that includes the program instructions and all of the storage required, such as the variables used to hold input and computed data. There is also additional “state” information created by the operating system in order to manage the execution of the process; this includes, for example, details of which resources and peripherals are being used and details of communication with other processes, either via pipelines as we have seen earlier or via network protocols as will be examined in Chapter 3.

Physical memory is finite and is often a bottleneck resource because it is required for every activity carried out by the computer. Each process uses memory (the actual process image has to be held in physical memory while the process is running; this image contains the actual program instructions, the data it is using held in variables, memory buffers used in network communication, and special structures such as the stack and program counter which are used to control the progress of the process). The operating system also uses considerable amounts of memory to perform its management activities (this includes special structures that keep track of each process). Inevitably, there are times when the amount of memory required to run all of the processes present exceeds the amount of physical memory available.

Secondary storage devices, such as hard disk drives, have much larger capacity than the physical memory in most systems. This is partly because of the cost of physical memory and partly because of limits in the number of physical memory locations that can be addressed by microprocessors. Due to the relatively high availability of secondary storage, operating systems are equipped with mechanisms to move (swap-out) process images from physical memory to secondary storage to make room for further processes and mechanisms to move (swap-in) process images back from the secondary storage to the physical memory. This increases the effective size of the memory beyond the amount of physical memory available. This technique is termed virtual memory and is discussed in more detail in Chapter 4.

To incorporate the concept of virtual memory into scheduling behavior, two additional process states are necessary. The suspended-blocked state is used to take a blocked-state process out of the current active set and thus to free the physical memory that the process was using. The mechanism of this is to store the entire process' memory image onto the hard disk in the form of a special file and to set the state of the process to “suspended-blocked,” which signifies that it is not immediately available to enter the ready or run states. The benefit of this approach is that physical memory resource is freed up, but there are costs involved. The act of moving the process' memory image to disk takes up time (specifically, the CPU is required to execute some code within the operating system to achieve this, and this is an IO activity itself—which slows things down) adding to the time aspect of scheduling overheads. The process must have its memory image restored (back into physical memory) before it can run again, thus adding latency to the response time of the process. In addition to regular scheduling latency, a suspended process must endure the latency of the swap to disk and also subsequently back to physical memory.

A swapped-out process can be swapped-in at any time that the operating system chooses. If the process is initially in the suspended-blocked state, it will be moved to the blocked state, as it must still wait for the IO operation that caused it to originally enter the blocked state to complete.

However, while swapped out, the IO operation may complete, and thus, the operating system will move the process to the suspended-ready state; this transition is labeled event-occurred. The suspended-ready state signifies that the process is still swapped out but that it can be moved into the ready state when it is eventually swapped-in.

The suspended-ready state can also be used to free up physical memory when there are no blocked processes to swap-out. In such case, the operating system can choose a ready-state process for swapping out to the suspended-ready state.

Figure 2.19 illustrates the five-state process model including the suspended-ready and suspended-blocked states and the additional state transitions of suspend, activate, and event-occurred.

FIGURE 2.19 The extended process state transition model including suspended process states.

2.3.1.6 Goals of Scheduling Algorithms

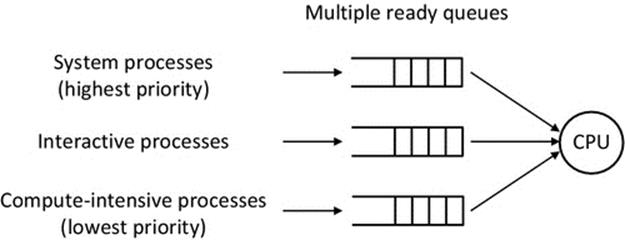

As discussed earlier, efficiency in terms of keeping the CPU busy and thus ensuring the system performs useful work is a main goal of general-purpose scheduling. Additional goals of scheduling include the following:

• To ensure fairness to all processes

When comparing scheduling algorithms, the concept of “fairness” is usually close behind efficiency in terms of performance metrics. This is in the context of giving the various processes a “fair share” of the processing resource. However, as you shall see later when you carry out experiments with the simulations in the Operating Systems Workbench, the concept of fairness is subjective and difficult to pin down to any single universal meaning. If all processes have the same priority and importance, then it is easy to consider fairness in terms of giving the processes equal processing opportunities. However, things get much more complex when there are different priorities or when some or all processes have real-time constraints. A special case to be avoided is “starvation” in which a process never reaches the front of the ready queue because the scheduling algorithm always favors the other processes.

• To minimize scheduling overheads

Performing a context switch (the act of changing which process is running at any moment) requires that the scheduler component of the operating system temporarily runs in order to select which process will run next and to actually change the state of each of the processes. This activity takes up some processing time and thus it is undesirable to perform context switches too frequently. However, a system can be unresponsive if processes are left to run for long periods without preemption; this can contradict other goals of scheduling, such as fairness.

• To minimize process wait time

Waiting time is problematic in terms of responsiveness, and thus, a general goal of scheduling is to keep it to a minimum for all processes. Waiting time most noticeably impacts interactive processes and processes with real-time constraints, but it is also important to consider waiting time as a ratio of total run time. A process with a very short run-time requirement (in terms of actual CPU usage) will have its performance affected relatively much more than a process with a long run-time requirement, by any given amount of delay. The shortest job first, and shortest remaining job next scheduling algorithms specifically favor shorter run-time processes. This has two important effects: firstly, the shortest processes do the least waiting, and thus, the mean waiting time ratios (wait time/run time) of processes are lowered; and secondly, the mean absolute waiting times of processes are lowered because a long process waits only a short time for the short process to complete, rather than the short process waiting a long time for the long process to complete.

• To maximize throughput

Throughput is a measure of the total work done by the system. The total processing capacity of the system is limited by the capacity of the CPU, hence the importance placed on keeping the CPU busy. If the CPU is used efficiently, that is, it is kept continuously busy by the scheduler, then the long-term throughput will be maximized. Short-term throughput might be influenced by which processes are chosen to run, such that several short processes could be completed in the same time that one longer one runs for. However, as a measure of performance, throughput over the longer-term is more meaningful.

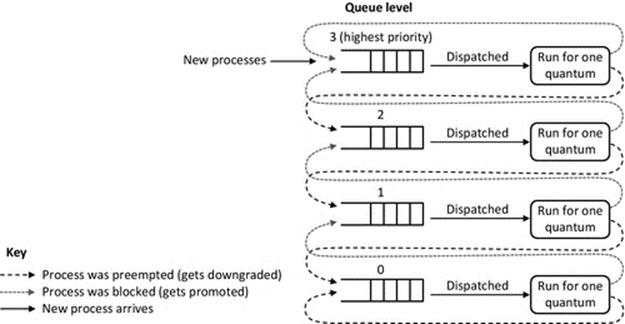

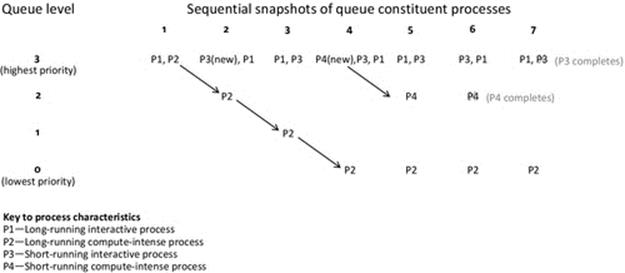

2.3.1.7 Scheduling Algorithms

First Come, First Served (FCFS)

FCFS is the simplest scheduling algorithm. There is a single rule; schedule the first process to arrive, and let it run to completion. This is a non-preemptive scheduling algorithm, which means that only a single process can run at a time, regardless of whether it uses the resources of the system effectively, and also regardless of whether there is a queue of other processes waiting, and the relative importance of those processes. Due to these limitations, the algorithm is not widely used but is included here as a baseline on which to compare other simple algorithms (see Activity P5).

Shortest Job First (SJF)

SJF is a modification of FCFS, in which the shortest job in the queue is executed next. This algorithm has the effect of reducing average waiting times, since the shortest jobs run first and the longer jobs spend less time waiting to start than if it were the other way around. This is a significant improvement, but this algorithm is also non-preemptive and thus suffers the same general weaknesses with respect to resource use efficiency as FCFS.

Round Robin (RR)

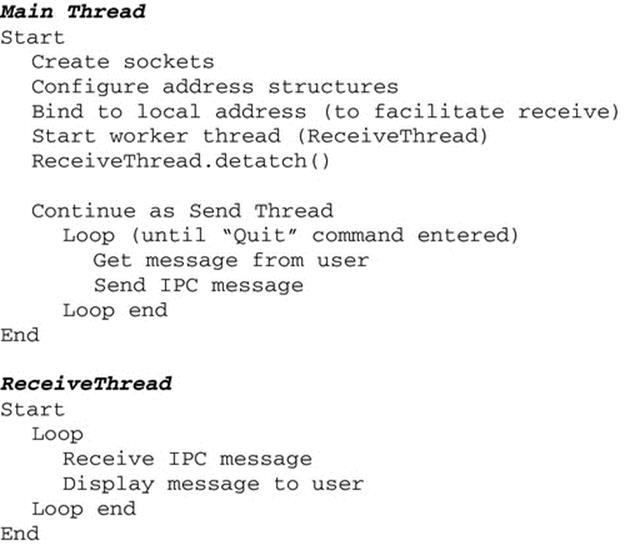

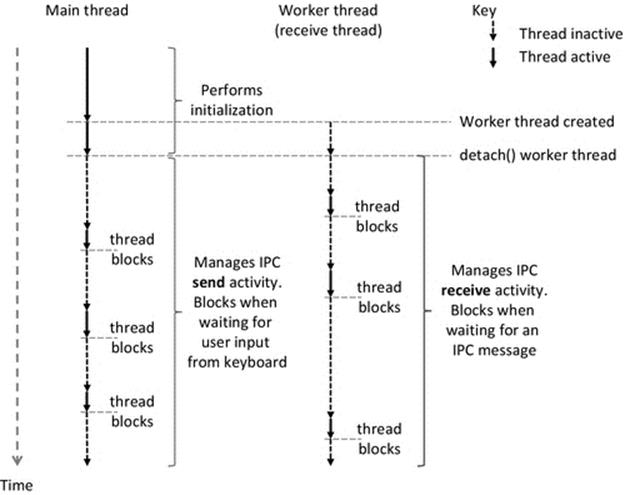

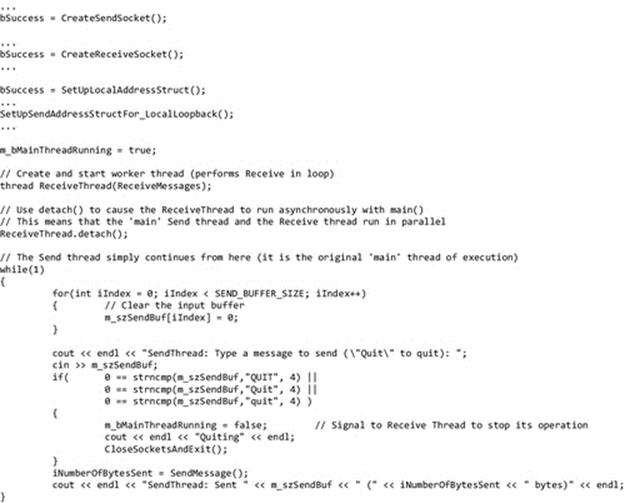

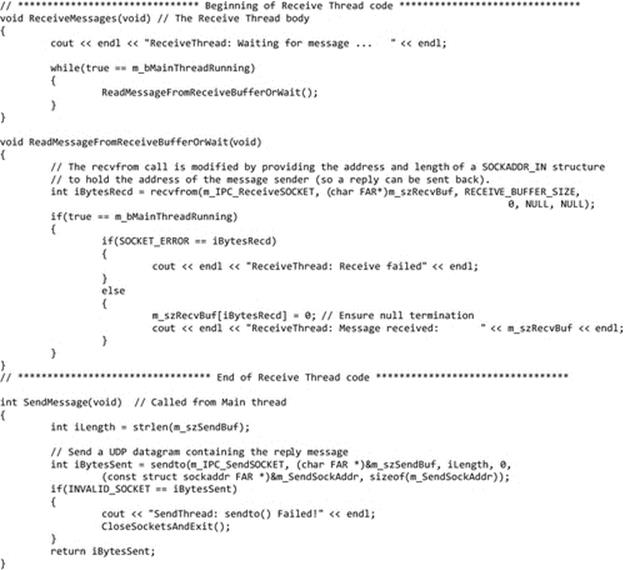

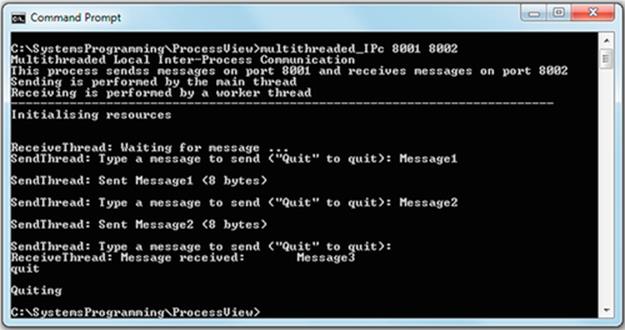

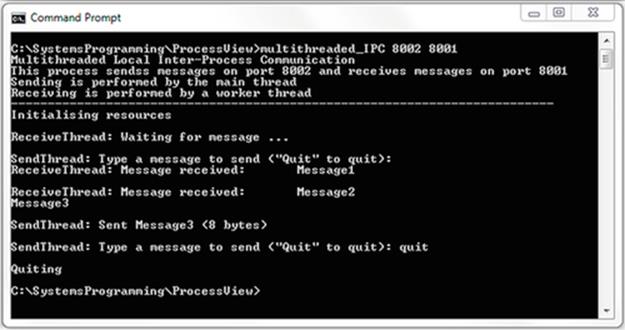

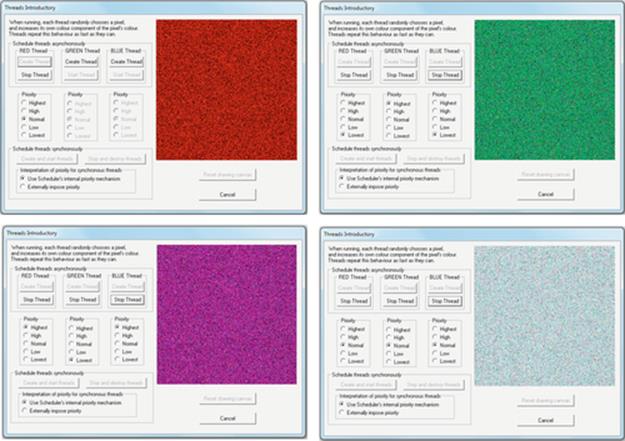

The simplest preemptive scheduling algorithm is round-robin, in which the processes are given turns at running, one after the other in a repeating sequence, and each one is preempted when it has used up its time slice. So, for example, if we have three processes {A, B, C}, then the scheduler may run them in the sequence A, B, C, A, B, C, A, and so on, until they are all finished. Figure 2.20 shows a possible process state sequence for this set of processes, assuming a single processing core system. Notice that the example shown is maximally efficient because the processor is continuously busy; there is always a process running.