Systems Programming: Designing and Developing Distributed Applications, FIRST EDITION (2016)

Chapter 4. The Resource View

Abstract

This chapter examines the resource aspects of distributed systems. It is concerned with ways in which resource availability and resource usage impact on communication and on the performance of the communicating processes. The main physical resources of interest are processing power, network communication bandwidth, and memory. The discussion focuses on the need to use these resources efficiently as they directly impact on performance and scalability of applications and the system itself. The characteristics and behavior of network protocols and transmission mechanisms are examined with particular emphasis on the way messages are passed across networks and the ways in which congestion can build up and the effects of congestion on distributed applications.

Memory is discussed in terms of its use as a resource for general processing and more specifically in the context of its use to provide buffers for the assembly and storage of messages prior to sending (at the sender side) and for holding messages after receipt (at the receiver side) while the contents are processed. The memory hierarchy is discussed in terms of the trade-offs between access, availability, and IO latency and the way in which program design can impact on efficiency and performance in this regard. Memory management is examined, in particular in terms of the use of virtual memory to extend the effective size of the physical memory available.

A number of virtual resource types, including sockets and ports, are discussed in terms of their relationship to processes and the management of communication.

Ways in which the design of distributed applications impacts on resource usage and efficiency are discussed, and the case study is examined from the resource perspective.

Keywords

Resource management

Memory buffer

Memory hierarchy

Virtual memory

Page replacement algorithms

Dynamic memory allocation

Shared resources

Lost updates

Transactions

Locks

Deadlock

Resource replication

Network bandwidth

Data compression

Serialization

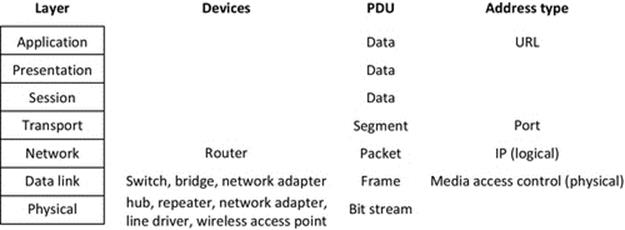

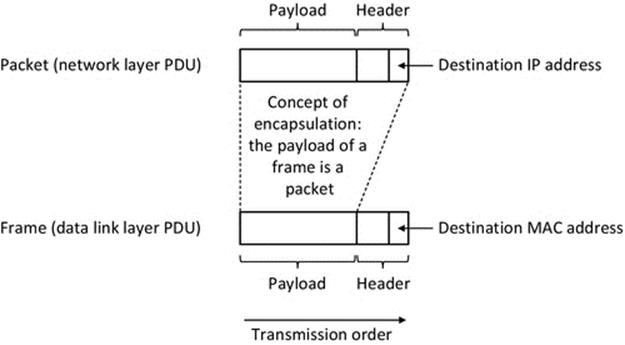

Protocol data units

Encapsulation

Routing

Data consistency

Replication

4.1 Rationale and Overview

A computer system has many different types of resource to support its operation; these include the central processing unit (CPU) (which has a finite processing rate), memory, and network bandwidth. Different activities use different combinations and amounts of resources. All resources are finite, and for any activity, there will often be one particular resource that is more limited than the others in terms of availability and thus acts as a bottleneck in terms of performance. Resource management therefore is a key part of ensuring system efficiency, and it is thus necessary for the developers to understand the distributed applications they build in terms of the resources they use. It is also very important from a programming viewpoint to understand the way resources such as message buffers are created and accessed. This chapter presents a resource-oriented view of communication between processes, with a focus on how the resources are used by applications and the way the resources are managed, either by the operating system or implicitly through the way applications use them.

4.2 The CPU as a Resource

The CPU is a very important resource in any computer system, because no work can be done without it. A process can only make progress when it is in the running state, that is, when it is given access to the CPU. The other main resources (memory and network) get used as a result of process executing instructions.

Even when there are several processing cores available in a system, they are still a precious resource that must be used very efficiently. As we investigated in Chapter 2, there can be very many processes active in a modern multiprocessing system, and thus, the allocation of processing resource to processes is a key aspect that determines the overall efficiency of the system.

The Chapter 2 has focused mainly on the CPU in terms of resources. In particular, we looked at the way in which the processing power of the CPU has to be shared among the processes in the system by the scheduler. We also investigated the different types of process behavior and how competition for the CPU can affect the performance of processes. This chapter therefore focuses mainly on the memory and network resources.

However, the use of the various resources is intertwined; their use is not orthogonal. A process within a distributed system that sends a message to another process actually uses many resource types simultaneously. Almost all actions in a distributed system involve the main resource types (CPU, memory, and network) and virtual resources such as sockets and ports to facilitate communication.

For example, when dealing with memory as a resource, it is important to realize that the CPU can only directly access the contents of its own registers and the contents of random-access memory (RAM). Other possibly more abundant memory types, such as hard disk drives and USB memory sticks, are accessed with significantly higher latency as the data must be moved into RAM before being accessed. This illustrates the importance of understanding how the resources are used and the interaction between resources that occurs, which in turn is necessary in order to be able to make good design decisions for distributed applications and to be able to understand the consequences of those design decisions.

Poor design leading to inefficient resource usage can impact the entire system, beyond a single application or process. Negative impacts could arise, for example, in the form of network congestion, wasted CPU cycles, or thrashing in virtual memory (VM) systems.

4.3 Memory as a Resource for Communication

Consider the very simple communication scenario between a pair of processes in which a single message is to be sent from one process to the other. Several types of resource are needed to achieve this, so let us first look at the use of memory.

In order to be able to send a message, the sending process must have access to the message; that is, it must have been defined and stored in memory accessible to the process. The normal way to arrange this is to reserve a block of memory specially for holding a message prior to sending; we call this the send buffer or transmission buffer. A message can then be placed into this buffer for subsequent transmission across the network to the other processes.

A buffer is a contiguous block of memory, accessible by the process that will read and write data to/from it. The process may be part of a user application or may be part of the operating system. By contiguous, we mean that the memory must be a single unbroken block. For example, there must not be a variable stored in the same block of memory. In fact, we say that the block of memory is “reserved” for use as the buffer (of course, this requires sensible and informed behavior on the part of the programmer).

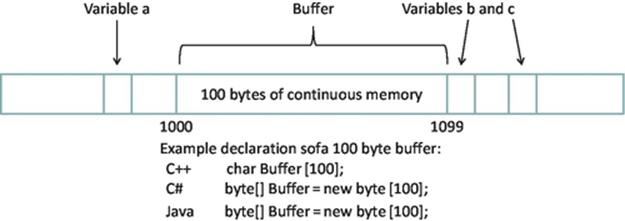

There are three significant attributes of a buffer (start address, length, and end address), as illustrated in Figure 4.1.

FIGURE 4.1 Illustration of a buffer; the one illustrated is 100 bytes long starting at address 1000 and ending at address 1099. Suitable declaration statements for some popular languages are also shown.

A buffer can be described precisely by providing any two of its three attributes, and the most common way to describe a buffer is by using start address and length. As each address in memory has a unique address and the memory used by the buffer must be in a contiguous block as discussed above, this description precisely and uniquely describes a particular block of memory. For the example shown in Figure 4.1, this is 1000 and 100. This information can be passed to the communication part of the application process; in the case of the sender, this indicates where the message that must be sent is stored, or in the case of the receiver, this indicates where to place the arriving message. Figure 4.1 also illustrates the requirement that the buffer's memory must be reserved such that no other variables overlap the allocated space. It is very important to ensure that accesses to the buffer remain within bounds. In the example shown, if a message of more than 100 bytes were written into the buffer, the 101st character would actually overwrite variable b.

The first resource-related issue we come across here is that the size of the buffer must be large enough to hold the message. The second issue is how to inform the code that performs the sending where the buffer is located and the actual size of the message to send (because it would be very inefficient to send the entire buffer contents if the message itself were considerably smaller than the buffer size, which would waste network bandwidth).

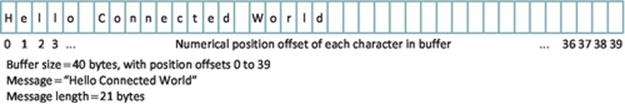

Figure 4.2 illustrates the situation where a message of 21 bytes is stored into a buffer of 40 bytes in size. From this figure, we can see several important things. Firstly, each byte in the buffer has an index that is its numerical offset from the start of the buffer, so the first byte has an index of 0, the second byte an index of 1, and so on, and perhaps, the most important thing to remember when writing code that uses this buffer is that the last byte has an offset of 39 (not 40). We can also see that each character of the message, including spaces, occupies one byte in the buffer (we assume simple ASCII encoding in which each character code will always fit into a single byte of memory). We can also see that the message is stored starting from the beginning of the buffer. Finally, we can see that the message in this case is considerably shorter than the buffer, so it is more efficient to send across the network only the exact number of bytes in the message, rather than the whole buffer contents.

FIGURE 4.2 Buffer and message size.

A single process may have several buffers; for example, it is usual to have separate buffers for sending and receiving to permit simultaneous send and receive operations without conflict.

For many years, memory size was a limiting factor for performance in most systems due to the cost and the physical size of memory devices. Over the last couple of decades, memory technology has advanced significantly such that modern multiprocessing systems have very large memories, large enough to accommodate many processes simultaneously. The operating system maintains a memory map that keeps track of the regions of memory that have been allocated to each process and must isolate the various processes present in the system from each other. In particular, each process must only have access to its allocated memory space and must not be able to access memory that is owned by another process.

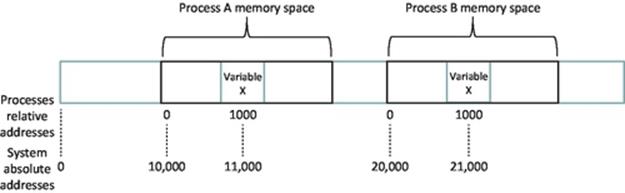

Each process is allocated its own private memory area at a specific location in the system memory map. Processes are unaware of the true system map and thus are unaware of the presence of other processes and the memory they use. To keep the programming model simple, each process works with a private address range, which starts from address 0 as it sees it (i.e., the beginning of its own address space), although this will not be located at the true address 0 at the system level. The true starting address of a process' memory area is used as an offset so that the operating system can map the process' address spaces onto the real locations in the system memory. Using the private address space, two different processes can both store a variable at address 1000 (as they see it). This address is actually address 1000 relative to the offset of where the process' memory begins; thus, its true address is 1000 plus the process' memory offset in the system memory address space; see Figure 4.3.

FIGURE 4.3 Process memory offsets and relative addressing.

Figure 4.3 illustrates the way in which different processes are allocated private memory areas with offsets in the true address range of the system and the way in which relative addressing is used by processes within their allocated memory space. This permits two process instances of the same program to run on the same computer, each storing a variable X at relative address 1000. The operating system stores the offsets for the two memory spaces (in this example, 10,000 and 20,000), thus using the true memory address offsets for each of the processes; the true locations of the two variables are known to the operating system (in this example, 11,000 and 21,000). This is a very important mechanism because it means that the relative addresses used within a program are independent of where the process is loaded into the true physical memory address range; which is something that cannot be known when the program is compiled.

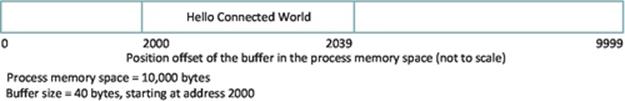

Next, let us consider what information is needed to represent the size and location of a buffer within the address space of a particular process and thus the size and location of the message within it.

Figure 4.4 shows how the buffer is located within the process' memory space. Note here that the memory address offsets of the 10,000 bytes are numbered 0 through 9999 and that address 10,000 is not actually part of this process' memory space.

FIGURE 4.4 A buffer allocated within a process' address space.

The message starts at the beginning of the buffer (i.e., it has an offset of 0 within the buffer space) and has a length of 21 bytes. Therefore, by combining our knowledge of the message position in the buffer and our knowledge of the buffer position in the process' memory space, we can uniquely identify the location of the message within the process' memory space. In this case, the message starts at address 2000 and has a length of 21 bytes. These two values will have to be passed as parameters to the send procedure in our code, so that it can transmit the correct message.

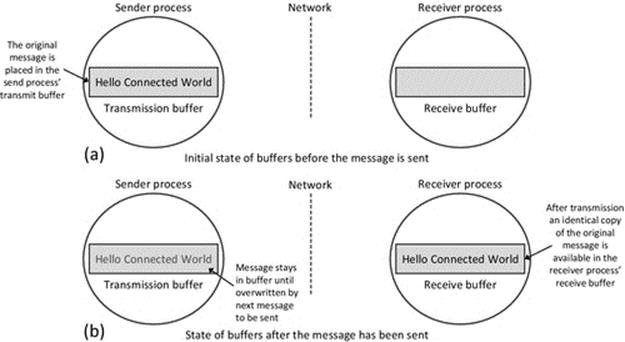

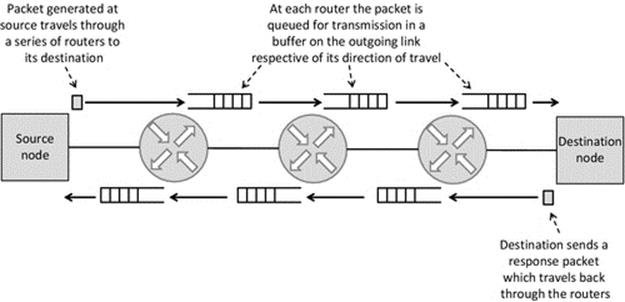

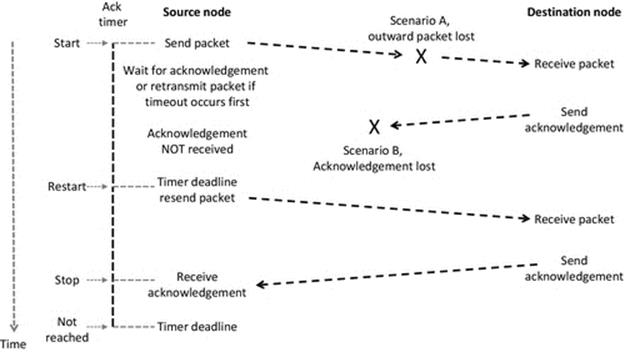

We now consider the role of memory buffers in the complete activity of sending a message from one process (we shall call the sender) to another process (we shall call the receiver). The sender process must have the message stored in a buffer, as explained above, before it can send the message. Similarly, the receiver process must reserve a memory buffer in which to place the message when it arrives. Figure 4.5 provides a simplified view of this concept.

FIGURE 4.5 Simplified view of sender and receiver use of buffers.

Figure 4.5 shows the role of memory buffers in communication, in a simplified way. Part (a) of the figure shows the situation before the message is sent. The message is stored in a buffer in the memory space of the sending process, and the buffer in the receiving process is empty. Part (b) of the figure shows the situation after the message has been sent. The essential point this figure conveys is that the sending of a message between processes has the effect of transferring the message from a block of memory in the sender process to a block of memory in the receiver process. After the transfer is complete, the receiver can read the message from its memory buffer, and it will be an exact replica of the message the sender had previously placed in its own send buffer.

As stated above, this is a simplified view of the actual mechanism for the purpose of establishing the basic concept of passing a message between processes. The message is not automatically deleted from the send buffer through the action of sending; this is logical because it is possible that the sender may wish to send the same message to several recipients. A new message can be written over the previous message when necessary, without first removing the earlier message. The most significant difference between Figure 4.5and the actual mechanism used is that the operating system is usually responsible for receiving the message from the network and holding it in its own buffer until the recipient process is ready for it, at which point the message is transferred to the recipient process' receive buffer.

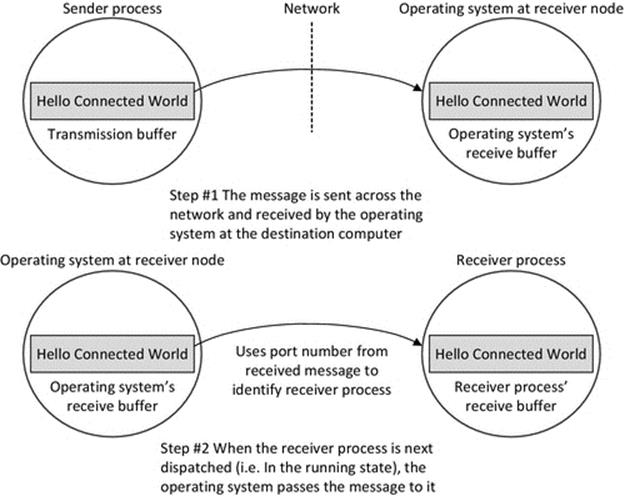

The receive mechanism is implemented as a system call that means that the code for actually performing the receive action is part of the system software (specifically the TCP/IP protocol stack). This is important for two main reasons: Firstly, the system call mechanism can operate when the process is not running, which is vital because it is not known in advance exactly when a message will arrive. The receiver process is only actually running when it is scheduled by the operating system, and thus, it may not be in the running state at the moment when the message arrives (in which case, it would not be able to execute instructions to store the message in its own buffer). This is certain to be the case when the socket is configured in “blocking” mode that means that as soon as the process issues the receive instruction, it will be moved from the running state to the blocked state and stays there until the message has been received from the network. Secondly, the process cannot directly interact with the network interface because it is a shared resource needed by all processes that perform communication. The operating system must manage sending and receiving at the level of the computer itself (this corresponds to the network layer). The operating system uses port numbers, contained in the message's transport layer protocol header to determine which process the message belongs to. This aspect is discussed in depth in Chapter 3, but the essence of what occurs in the context of the resource view is shown in Figure 4.6.

FIGURE 4.6 A message is initially received by the operating system at the destination computer and then passed to the appropriate process.

The most important aspect of Figure 4.6 is that it shows how the operating system at the receiving node decouples the actual sending and receiving processes. If the receiving process were guaranteed to be always running (in the running state), then this decoupling may be unnecessary, but as we have seen in Chapter 2, the receiving process may actually only be in the running state for a small fraction of the total time. The sending process cannot possibly synchronize its actions such that the message arrives at exactly the moment the recipient process is running, because, among other things, the scheduling at the receiving node is a dynamic activity (and thus, the actual state sequences are not knowable in advance) and also the network itself is a dynamic environment (and thus, the end-to-end delay is continuously varying). If the operating system did not provide this decoupling network, communication would be unreliable and inefficient as the two communicating processes would have to be tightly synchronized in order that a message could be passed between them.

4.3.1 Memory Hierarchy

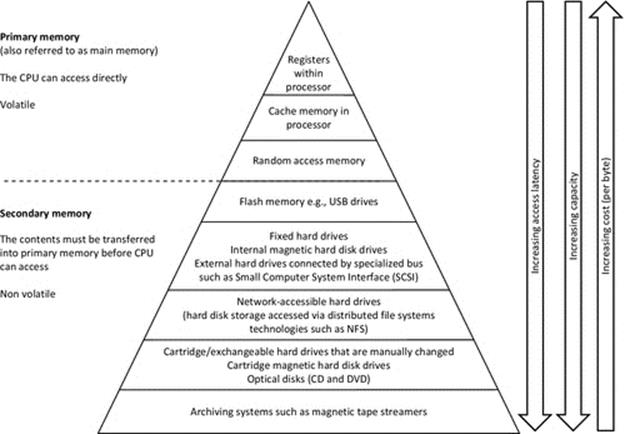

There are many types of memory available in a distributed system, the various types having different characteristics and thus being used in different ways. The memory types can be divided into two main categories: primary memory and secondary storage.

Primary memory is the memory that the CPU can access directly; that is, data values can be read from and written to primary memory using a unique address for each memory location. Primary memory is volatile (it will lose its contents if power is turned off) and comprises the CPU's registers and cache memory and RAM.

Secondary storage is persistent (nonvolatile) memory in the form of magnetic hard disks, optical disks such as CDs and DVDs, and flash memory (which includes USB memory devices and also solid-state hard disks and memory cards as used, e.g., in digital cameras). Secondary storage devices tend to have very large capacities relative to primary memory, and many secondary storage devices use replaceable media, so the drive itself can be used to access endless amounts of storage, but this requires manual replacement of the media. The contents of secondary storage cannot be directly addressed by the CPU, and thus, the data must be read from the secondary storage device into primary storage prior to its use by a process. As the secondary storage is nonvolatile (and primary memory is volatile), it is the ultimate destination of all persistent data generated in a system.

Let us consider the memory-use aspect of creating and running a process. The program is initially held in secondary storage as a file that contains the list of instructions. Historically, this will have been held on a magnetic hard disk or an optical disk such as a CD or DVD. In addition, more recently, flash memory technologies have become popular, such that large storage sizes of up to several gigabytes can be achieved on a physically very small memory card or USB memory stick. When the program is executed, the program instructions are read from the file on secondary storage and loaded into primary memory RAM. As the program is running, the various instructions are read from the RAM in sequence depending on the program logic flow.

The CPU has general purpose registers in which it stores data values on a temporary basis while performing computations. Registers are the fastest access type of memory, being integrated directly with the processor itself and operating at the same speed. However, there are a very limited number of registers; this varies across different processor technologies but is usually in the range of about eight to about sixty-four registers, each one holding a single value. Some processor architectures have just a handful of registers, so registers alone are not sufficient to execute programs; other forms of memory and storage are needed.

The data values used in the program are temporarily held in CPU registers for purposes of efficiency during instruction execution but are written back to RAM storage at the end of computations; in high-level languages, this happens automatically when a variable is updated, because variables are created in RAM (and not registers). This is an important point; using high-level languages, the programmer cannot address registers, only RAM locations (which are actually chosen by the compiler and not the programmer). Assembly language can directly access registers, but this is a more complex and error-prone way of programming and in modern systems is only used in special situations (such as for achieving maximum efficiency on low-resourced embedded systems or for achieving maximum speed in some timing critical real-time applications).

The memory hierarchy shown in Figure 4.7 is a popular way of representing the different types of memory organized in terms of their access speed (registers being the fastest) and access latency (increasing down the layers) and the capacity (which tends to also increase down the layers) and cost, which if normalized to a per byte value increases as you move up the layers. The figure is a generalized mapping and needs to be interpreted in an informed way and not taken literally in all cases. For example, not all flash USB memory drives have larger capacity than the amount of RAM in every system, although the trend is heading that way. Network-accessible storage has the additional latency of the network communication, on top of the actual device access latency. Network-accessible drives are not necessarily individually any larger than the local one, but an important point to note, especially with the distributed systems theme of this book, is that once you consider network access, you can potentially access a vast number of different hard drives spread across a large number of remote computers. Cartridge disk drives and removable media systems such as CD and DVD drives are shown as being slower to access than network drives. This is certainly the case if you take into account the time required for manual replacement of media. The capacity of replaceable media systems is effectively infinite, although each instance of the media (each CD or DVD) has well-defined limits.

FIGURE 4.7 The memory hierarchy.

RAM is so named because its data locations can be accessed individually, in any order (i.e., we can access memory locations in whatever sequence is necessary as the process runs), and the access order does not affect access time, which is the same for all locations. However, the name can be misleading; there is usually a pattern to the accesses that tends to exhibit spatial or temporal locality. The locations accessed are done so purposefully in a particular sequence and not “randomly.” Spatial locality arises for a number of reasons. Most programs contain loops or even loops within loops, which cycle through relatively small regions of the instruction list and thus repeatedly access the same memory locations. In addition, data are often held in arrays, which are held in a set of contiguous memory locations. Iteration through an array will result in a series of accesses to different, but adjacent, memory locations, which will be in the same memory page (except when a boundary is reached). An event handler will always reference the same portion of memory (where its instructions are located) each time an instance of the event occurs; this is an example of spatial locality, and if the event occurs frequently or with a regular timing pattern, then this is also an example of temporal locality.

The characteristics of secondary storage need to be understood in order to design efficient applications. For example, a hard disk is a block device; therefore, it is important to consider the latency of disk IO in terms of overall process efficiency. It may be more efficient, for example, to read in a whole data file into memory in one go (or at least a batch of records) and access the records as necessary from the cache, rather than reading each one from disk when needed. This is very application-dependent and is an important design consideration.

4.4 Memory Management

In Chapter 2, we looked closely at how the operating system manages processes in the system; in particular, the focus was on scheduling. In this chapter, we examine memory management, which is another very important role of the operating system. There are two main aspects of memory management: dynamic memory allocation to processes (which is covered in depth in a later section) and VM (which is discussed in this section).

As we have seen above, processes use memory to store data, and this includes the contents of messages received from the network or to be sent across the network. Upon creation of a process, the operating system allocates sufficient memory to hold all the statically declared variables; the operating system can determine these requirements as it loads and reads the program. In addition, processes often request allocation of additional memory dynamically; that is, they ask the operating system for more memory as their execution progresses, depending on the actual requirements. Thus, it is not generally possible to know the memory requirements of processes precisely at the time of process creation.

As discussed in the section above, there are several different types of storage in a computer system, and the most common form of primary memory is RAM, which is addressable from the processor directly. A process can access data stored in RAM with low latency, much faster than accessing secondary storage. The optimal situation is therefore to hold all data used by active processes in RAM. However, in all systems, the amount of RAM is physically limited, and very often, the total amount of memory demanded by all the processes in the system exceeds the amount of RAM available.

Deciding how much memory to allocate to each process, actually performing the allocation, and keeping track of which process is using which blocks of memory and which blocks are free to allocate are all part of the memory management role of the operating system. In the earliest systems, once the physical RAM was all allocated, then no more processes could be accommodated. VM was developed to overcome this serious limitation. The simplest way to describe VM is a means of making more memory available to processes than what actually exists in the form of RAM, by using space on the hard disk as temporary storage. The concept of VM was touched upon in Chapter 2 when the suspended process states were discussed. In that chapter, the focus was on the management of processes and the use of the CPU, so we did not get embroiled in the details of what happens when a process' memory image was actually moved from RAM to disk (this is termed being “swapped out”).

Activity R1 explores memory availability and the way this changes dynamically as new memory-hungry processes are created. The activity has been designed to illustrate the need for a VM system in which secondary storage (the hard disk) is used to increase the effective size of primary memory (specifically the RAM).

“Thrashing” is the term used to describe the situation in which the various processes access different memory pages at a high rate, such that the paging system spends almost all of its time swapping pages in and out, instead of actually running processes, and thus, almost no useful work can be performed.

Activity R1

Examine memory availability and use. Observe behavior of system when memory demand suddenly and significantly increases beyond physical availability

Learning Outcomes

1. Understand memory requirements of processes.

2. Understand physical memory as a finite resource.

3. Understand how the operating system uses VM to increase the effective memory availability beyond the amount of physical memory in the system.

This activity is performed in two parts. The first of these involves observing memory usage under normal conditions. The second part is designed to stress-test the VM mechanics of the operating system by suddenly and significantly increasing the amount of memory demanded by processes, beyond the amount of physical RAM available.

Part 1: Investigate memory availability and usage under normal conditions.

Method

(Assume a Windows operating system; the required commands and actions may vary across different versions of the operating system. The experiments were carried out on a computer with Windows 7 Professional installed.)

1. Examine memory availability. Open the Control Panel and select “System and Security,” and from there, select “System.” My computer has 4 GB RAM installed, 3.24 GB usable (available for processes).

2. Use the Task Manager utility to examine processes' memory usage. Start the Task Manager by pressing the Control, Alt, and Delete keys simultaneously and select “Start Task Manager” from the options presented. Select the “Applications” tab to see what applications are running in the system.

3. Select the “Processes” tab, which provides more details than the Applications tab; in particular, it provides memory usage per process. Look at the range of memory allocations for the processes present. These can range from about 50 kB up to hundreds of megabytes. Observe the memory usage of processes associated with applications you are familiar with, such as Windows (File) Explorer and Internet Explorer and perhaps a word processor; are these memory usage values in the ranges that you would have expected? Can you imagine what all that memory is being used for?

4. With the Task Manager “Processes” tab still open for diagnostic purposes, start the Notepad application but do not enter any characters into the form. How much memory is needed for the application itself? We could call this the memory overhead, as this much memory is needed for this application without including any user data (in my system, this was 876 kB). I typed a single character and the memory usage went up to 884 kB; does that tell us anything useful? I don't have access to the source code for this application, but it seems as if a single memory page of 8 kB was dynamically allocated to hold the user data. If I am right, then typing a few more characters will not further increase the memory usage; try it. After some experimentation, I found that Notepad allocates a further 8 kB of memory for approximately each 2000 characters typed into the form. This is approximately what I would expect and is quite efficient; using 4 bytes of memory to hold all the information, it needs about each character of data. You should experiment further with this simple application as it is an accessible way to observe dynamic memory allocation in operation in a real application.

Part 2: Investigate system behavior when memory demand increases beyond the size of physical RAM available in the system.

Caution! This part of the activity can potentially crash your computer or cause data loss. I recommend that you follow this part of the activity as a “pseudo activity,” since I have performed the activity and reported the results so you can observe safely without actually risking your system. The source code for the Memory_Hog program is provided so that you can understand its behavior. If you choose to compile and run the code, make sure you first save all work, close all files, and shut down any applications, which may corrupt data.

Method

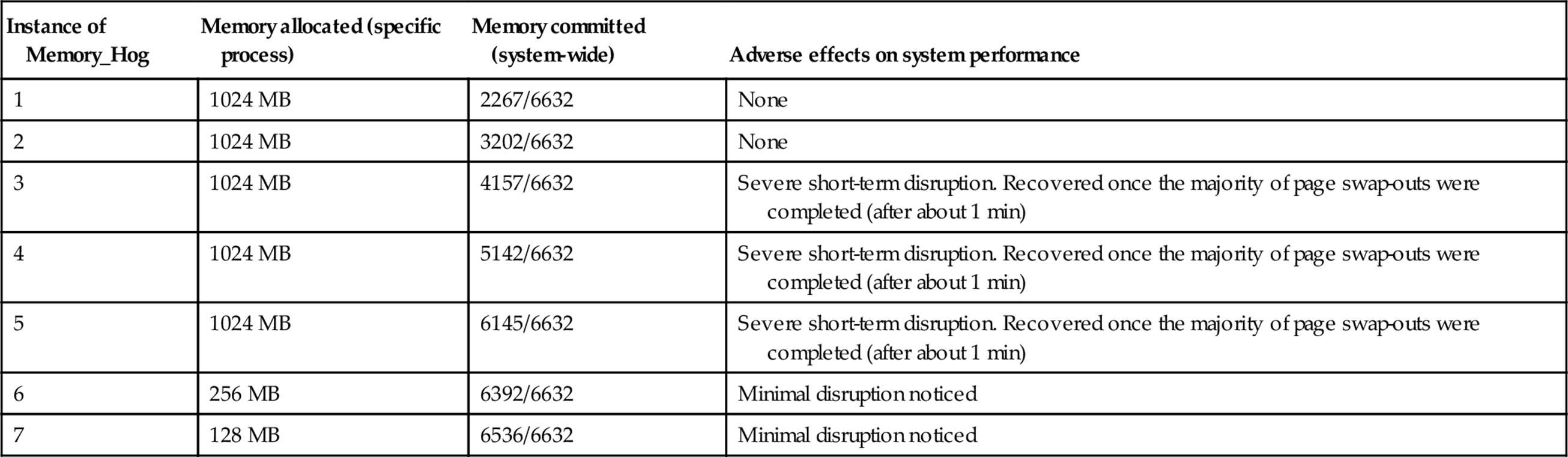

(Part 2A uses the system default page file size, which is 3317 MB; this is approximately the same size as the amount of physical RAM installed; the VM size is the sum of the usable RAM plus the page file size and was 6632 MB for this experiment.)

5. Keep the Task Manager “Processes” tab open for diagnostic purposes, while completing the following steps. You can also use the “Performance” tab to inspect the total memory in use and available in the system.

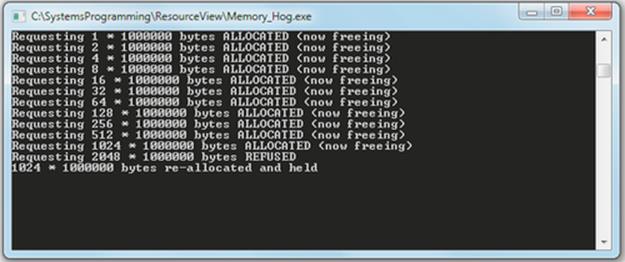

6. Execute a single copy of Memory_Hog within a command window to determine the maximum amount of memory that the operating system will allocate to a single process. The Memory_Hog requests increasing memory amounts, starting with 1 megabyte (MB). Each time it is successful, it frees the memory, doubles the request size, and requests again. This is repeated until the request is refused by the operating system, so the maximum it gets allocated might not be the exact maximum that can be allocated, but it is in the approximate ballpark, and certainly, the system will not allocate twice this much. The screenshot below shows the output of the Memory_Hog on my computer. The maximum size that was successfully allocated was 1024 MB.

7. Execute multiple copies of the Memory_Hog each in a separate command window. Do this progressively, starting one copy at a time and observing the amount of memory allocated to each copy, both in terms of the output reported by the Memory_Hog program and also by looking at the Task Manager “Processes” tab. I used the Task Manager “Performance” tab to inspect the amount of memory committed, as a proportion of the total VM available. The results are presented in the table below.

The first and second instances of the Memory_Hog process were allocated the process maximum amount of memory (1024 MB, as discovered in step 6 above). This was allocated without exceeding the amount of physical RAM available, so there was no requirement for the VM system to perform paging.

The third, fourth, and fifth instances of the Memory_Hog process were also allocated the process maximum amount of memory (1024 MB). However, the memory requirement exceeded the physical memory availability, so the VM system had to swap currently used pages out to disk to make room for the new memory allocation. This caused significant disk activity for a limited duration, which translated into system disruption; processes became unresponsive and the media player stopped and started the sound in a jerky fashion (I was playing a music CD at the time). Eventually, the system performance recovered once the majority of the disk activity was completed. No data were lost and no applications crashed, although I had taken the precaution of closing down almost all applications before the experiment.

The sixth instance of the Memory_Hog process was allocated 256 MB of memory. The VM system had to perform further swap-outs, but this was a much smaller proportion of total memory and had a much lower, almost unnoticeable effect on system performance.

The seventh instance of the Memory_Hog process was allocated 128 MB of memory. As with the sixth instance, the VM system performed further page swap-outs, but this had an almost unnoticeable effect on system performance.

When evaluating the system disruption effect of the Memory-Hog processes, it is important to consider the memory-use intensity of the large amounts of memory it requests. If you inspect the source code for the Memory_Hog program, you will see that once it has allocated the memory, it does not access it again; it sits in an infinite loop after the allocation activity, doing nothing. This makes it easy for a paging mechanism based on Least Frequently Used or Least Recently Used memory pages to be able to identify these pages for swapping out. This indeed is the effect witnessed in the experiments and explains why the system disruption is short-lived. If the processes accessed all of their allocated memory aggressively, then the system disruption would be continual, as the paging would be ongoing, for each process. If multiple processes are making such continuous access, then the VM system may begin to “thrash.” This means that the VM system is continually moving pages between memory and disk and that processes perform very little useful work because they spend most of the time in the blocked state waiting for a specific memory page to become available for access.

Method

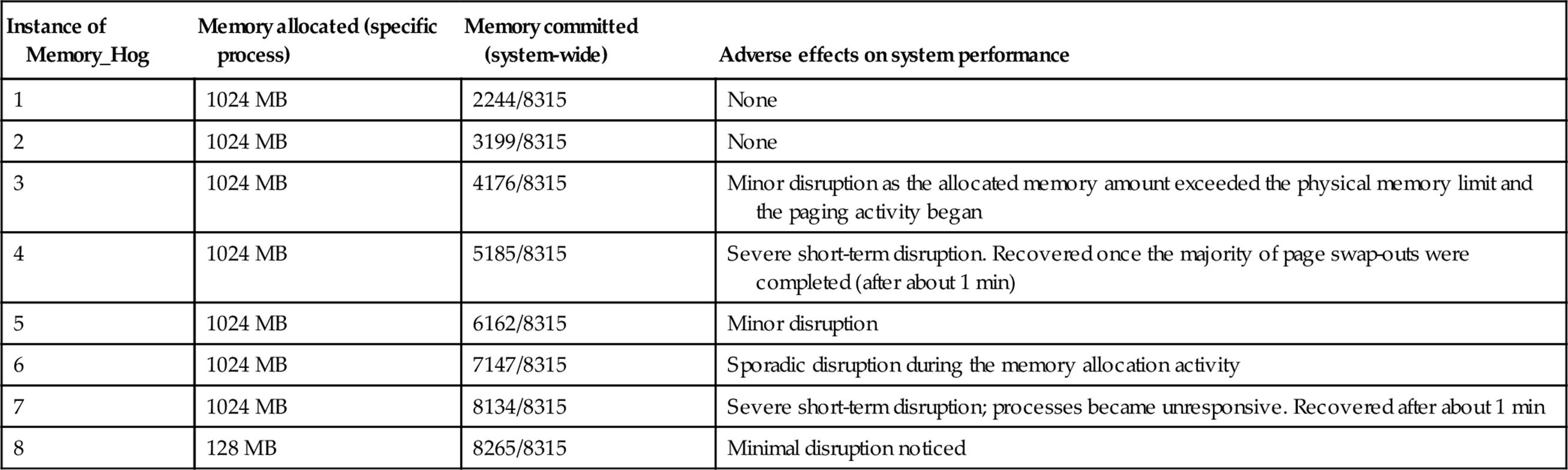

(Part 2B uses an increased page-file size of 5000 MB, which exceeds the physical memory size significantly. The VM size in this case was 8315 MB.)

8. Execute multiple copies of the Memory_Hog each in a separate command window, progressively as in step 7. The results are presented in the table below.

A similar pattern of disruption was observed as with the experiment in part 2A. When the total memory requirement can be satisfied with physical memory, there is no system performance degradation. Once the VM system starts performing page swaps, the performance of all processes is potentially affected. This is due to the latency of the associated disk accesses and the fact that a queue of disk access requests can build up. Once the majority of page swaps necessary to free the required amount of memory for allocation have been completed, the responsiveness of the other processes in the system returns to normal.

Reflection

This activity has looked at several aspects of memory availability, allocation, and usage. The VM system has been investigated in terms of its basic operation and the way in which it extends the amount of memory available beyond the size of physical memory in the system.

When interpreting the results of parts 2A and 2B, it is important to realize that the exact behavior of the VM system will vary each time the experiment is carried out. This is because the sequence of disk accesses will be different each time. The actual set of pages that are allocated to each process will differ as will the exact location of each page at any particular instant (i.e., whether each specific page is held on disk or in physical RAM). Therefore, the results will not be perfectly repeatable, but can be expected to exhibit the same general behavior each time. This is an example of nondeterministic behavior that can arise in systems: It is possible to describe the behavior of a system in general terms, but it is not possible to exactly predict the precise system state or low-level sequence of events.

4.4.1 Virtual Memory

This section provides an overview of the mechanism and operation of VM.

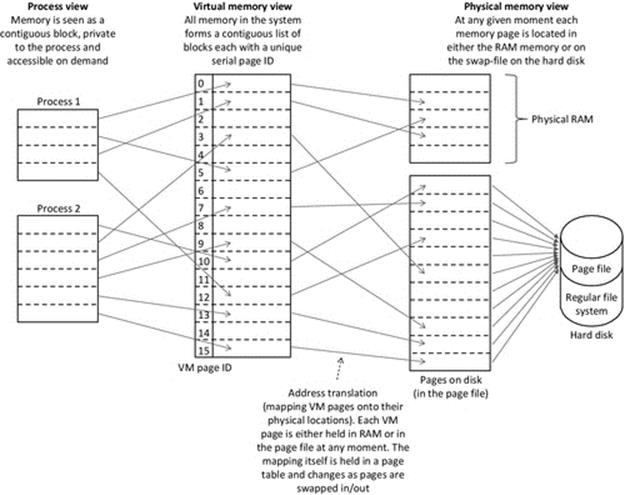

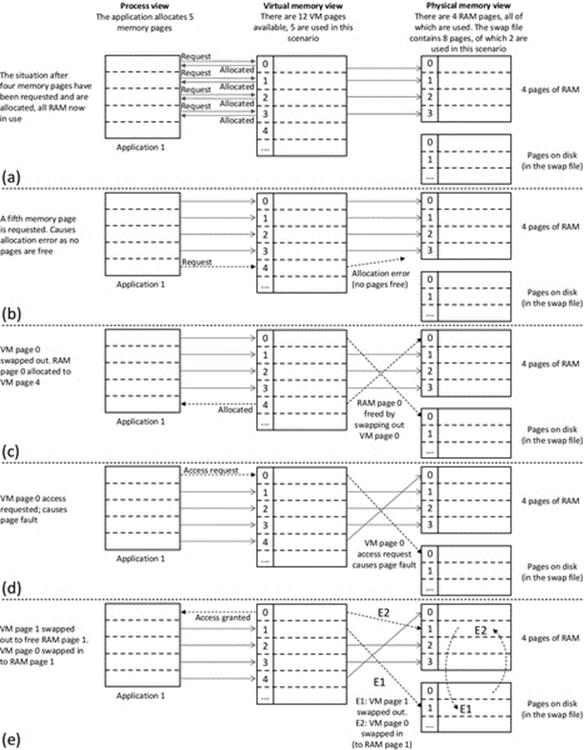

Figure 4.8 illustrates the mapping of process' memory space into the VM system and then into actual locations on storage media. From the process' viewpoint, its memory is a contiguous block, which is accessible on demand. This requires that the VM mechanism is entirely transparent; the process accesses whichever of its pages it needs to, in the required sequence. It will incur delays when a swapped out page is requested, leading to a page fault which the VM mechanism handles, but the process will be moved to the blocked state while this takes place and is unaware of the VM system activity. The process does not need to do anything differently to access the swapped out page, so effectively, the VM mechanism is providing access transparency. The VM system however is aware of the true location of each memory page (which it keeps track of in a special page table), the set of pages that are in use by each process and the reference counts or other statistics for each page (depending on the page replacement algorithm in use). The VM system is responsible for keeping the most needed memory pages in physical memory and swapping in any other accessed pages on demand. The physical memory view reflects the true location of memory pages, which is either the RAM or a special file called the page file on the secondary storage (usually a magnetic hard disk).

FIGURE 4.8 VM overview.

4.4.1.1 VM Operation

Memory is divided into a number of pages, which can be located either in physical memory or on the hard disk in the page file. Each memory page has a unique VM page number. Memory pages retain their VM page number but can be placed into any numbered physical memory page or disk page slot as necessary.

A process will use one or more memory pages. The memory pages contain the actual program itself (the instructions) and the data used by the program.

The CPU is directly connected to the physical memory via its address and data buses. This means that it can directly access memory contents that are contained in pages that are held in physical memory. All memory pages have a VM page ID, which is permanent and thus can be used to track a page as it is moved between physical memory and the page file on disk.

Memory pages that are not currently held in physical memory cannot be immediately accessed. These have to be moved into the physical memory first and then accessed. Running processes can thus access their in-memory pages with no additional latency but will incur a delay if they need to access a page currently held on disk.

Some key terms are defined:

Swap-out: A VM page is transferred from physical memory to disk.

Swap-in: A VM page is transferred from disk to physical memory.

Page fault: Processes can only access VM memory pages that are held in physical memory. A page fault is the name given to the error that occurs when an attempt is made to access a memory page that is not currently in physical memory. To resolve a page fault, the relevant page must be swapped in. If there are no physical memory pages available to permit the swap-in, then another page must be swapped out first to free space in physical memory.

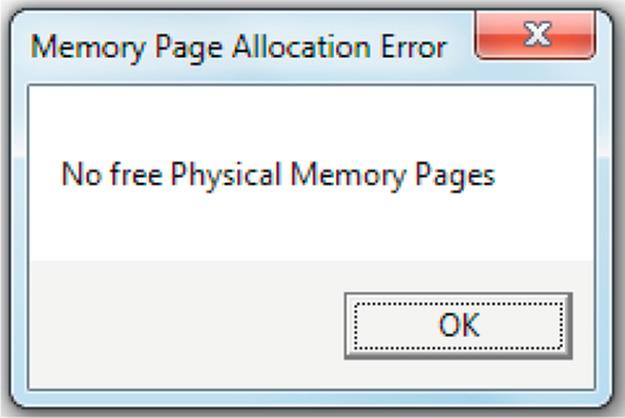

Allocation error: If the VM system cannot allocate sufficient memory to satisfy a memory allocation request from a process, an allocation error will occur.

Thrashing: When processes allocate a lot of memory, such that a significant amount of the swap file is used and also makes frequent access to the majority of their allocated pages, the VM system will be kept busy swapping out pages to make room for required pages and then swapping in those required pages. This situation is worse when there is low spatial locality in the memory accesses. In extreme cases, the VM system is continuously moving pages between memory and disk, and processes are almost always blocked, waiting for their memory page to become available. The system becomes very inefficient, as it spends almost all of its effort moving pages around and the processes present perform very little useful work.

As is reflected in the results of Activity R1, the overall performance of the VM system depends in part on the size of the swap file allocated on the disk. The swap file size is usually chosen to be in the range of 1-2 times the primary memory size, with 1.5 times being a common choice. It is difficult to find an optimum value for all systems, as the amount of paging activity that occurs is dependent on the actual memory-use behavior of the processes present.

4.4.1.2 Page Replacement Algorithms

When a page in memory needs to be swapped out to free up some physical memory, a page replacement algorithm is used to select which currently in-memory page should be moved to disk.

There are a variety of different page replacement algorithms that can be used. The general goal of these is the same: to remove a page from memory that is not expected to be used soon and store it on the disk (i.e., swap out this page) so that a needed page can be retrieved from disk and placed into the physical memory (i.e., the needed page is swapped in).

4.4.1.3 General Mechanism

As processes execute, they access the various memory pages as necessary, which depends on the actual locations where the used variables are held. Access to a memory location is performed either to read the value or to update the value. If even a single location within a page is accessed, it is said to have been referenced, and this is tracked by setting a special “referenced bit” for the specific page. Similarly, modification of one or more locations in a page is tracked by setting a special “modified bit” for the specific page. The referenced and/or modified bits are used by the various page replacement algorithms to select which page to swap out.

4.4.1.4 Specific Algorithms

The Least Recently Used (LRU) algorithm is designed to keep pages in physical memory that have been recently used. This works on the basis that most processes exhibit spatial locality in their memory referencing behavior (i.e., the same subset of locations tend to be accessed many times over during specific functions, loops, etc.), and thus, it is efficient to keep the pages that have been recently referenced in physical memory. Referenced bits are periodically cleared so that old reference events are forgotten. When a page needs to be swapped out, it is chosen from those that have not been recently referenced.

The Least Frequently Used (LFU) algorithm keeps track of the number of times a VM page in physical memory is referenced. This can be achieved by having a counter (per page) to keep track of the number of references to that page. The algorithm selects the page with the lowest reference count to swap out. A significant issue with LFU is that it does not take into account how the accesses are spread over time. Therefore, a page that was accessed many times, for example, in a loop, a while ago may show up as being more important than a page that is in current use but is not used so repetitively. Because of this issue, the LFU algorithm is not often used in its pure form, but the basic concept of LFU is sometimes used in combination with other techniques.

The First-In, First-Out (FIFO) algorithm maintains a list of the VM pages in physical memory, ordered in terms of when they were placed into the memory. Using a round-robin cycle through the circular list, the algorithm selects the VM page that has been in physical memory the longest, when a swap-out is needed. FIFO is simple and has low overheads but generally performs poorly as its basis for selection of pages does not relate to their usage since they were swapped in.

The clock variant of FIFO works fundamentally in the same round-robin manner as FIFO, but on the first selection of a page for potential swapping out, if the referenced bit is set, the page is given a “second chance” (because at least one access to the page has occurred). The referenced bit is cleared and the round robin continues from the next page in the list until one is found to swap out.

The Random algorithm selects a VM page randomly from those that are resident in physical memory for swapping out. This algorithm is simpler to implement than LRU and LFU because it does not need to track page references. It tends to perform better than FIFO, but less well than LRU, although this is dependent on the actual patterns of memory accesses that occur.

The performance of a page replacement algorithm is dependent on the general memory-access behavior of the mix of processes in the system. There is no single algorithm that works best in all circumstances, in terms of it being able to correctly predict which pages will be needed again soon and thus keep these in physical memory. The various algorithms discussed above have relative strengths and weaknesses, which are highlighted with the aid of some specific scenarios below:

• LRU and LFU are well suited to applications that allocate a large amount of memory and iterate through it or otherwise access it in a predictable way in which there is a pattern to references, for example, a scientific data processing application or a simulation that uses techniques such as computational fluid dynamics (as used, e.g., in weather prediction). In such scenarios, the working set of pages at any moment tends to be a limited subset of the process' entire memory space, so pages that have not been used recently are not particularly likely to be needed in the short term. Depending on the relative size of the working set of pages and the total allocated memory, the clock variant of FIFO may work well.

• An application that has a very large allocated memory and uses the contents very sparsely and with low repetition will have a much less regular patterns in terms of the pages used. In this case, it is very difficult to predict which pages will be needed next and which ones are thus likely to be good candidates to swap out. In such a case, the Random algorithm may perform relatively better than it would with programs that display greater temporal or spatial locality, since no algorithm is able to predict very well, but the Random algorithm at least has very low overheads.

Memory management behavior can be investigated using the Operating Systems Workbench. The VM simulation demonstrates the need for VM and facilitates experimentation with swapping pages between RAM and the hard disk as necessary to meet the applications' memory needs. Activity R2 examines the basics of the VM mechanism and its operation and does not focus on any particular algorithm although the simulation can support evaluation of specific page replacement algorithms, which can be investigated subsequently (see Section 4.11).

Activity R2

Using the Operating Systems Workbench to explore the basics of memory management and VM

Prerequisite: Download the Operating Systems Workbench and the supporting documentation from the book's supplementary materials website. Read the document “Virtual Memory—Activities and Experiments.”

Learning Outcomes

1. To gain an understanding of the need for memory management

2. To gain an understanding of the operation of VM

3. To gain an understanding of the meaning of “page fault,” “swap-out,” and “swap-in”

4. To explore the basics of page replacement algorithms

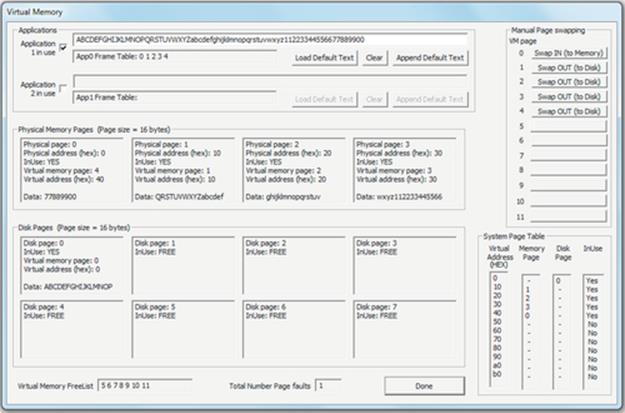

This activity uses the “virtual memory” simulation provided by the Operating Systems Workbench on the Memory Management tab. The simulation provides an environment in which there are four pages of physical memory and a further eight pages of storage on the disk. Each page holds 16 characters. Either one or two simple text-editing type applications (which are built in to the simulation) can be used to access the memory, for the purpose of investigating the memory management behavior.

Part 1: Simple illustration of the need for, and basic concept of, VM.

Method

1. Enable the first application.

2. Carefully type the letters of the alphabet (from A to Z) in uppercase initially and then repeat in lower case and watch how the characters are stored in the memory as you type. This initial block of text comprises 52 characters. The memory is organized into 16-character pages, so this text spans 4 pages. At this point, all of the text you have typed is held in VM pages currently in physical memory—this is an important observation.

3. Now, type the characters “11223344556677889900”; this adds another 20 characters, totaling 72, but the four physical memory pages can only hold only 64, so what happens? We get a page allocation error.

4. You have exhausted the physical memory when you get to the first “7” character. The solution is to select one of the four VM pages in use, to be swapped out (i.e., stored in one of the disk pages), thus freeing up a physical memory page so that you can finish typing your data. Select VM page 0 and press the adjacent “Swap OUT (to Disk)” button. Notice that VM page 0 is moved onto the disk and that the “7” character is now placed into the new page (VM page 4), at the physical page 0 address. Now, you can continue typing the sequence.

The screenshot below shows what you should see after step 4 has been completed.

Expected Outcome for Part 1

You can see how your application is using five pages of memory, but the system only has four physical pages. This is the fundamental basis of VM; it allows you to use more memory than you actually have. It does this by temporarily storing the extra pages of memory on the disk, as you can see in the simulation.

Part 2: An example of a page fault; we shall now try to access the memory page that is held on the disk. This part of the activity continues from where part 1 above left off.

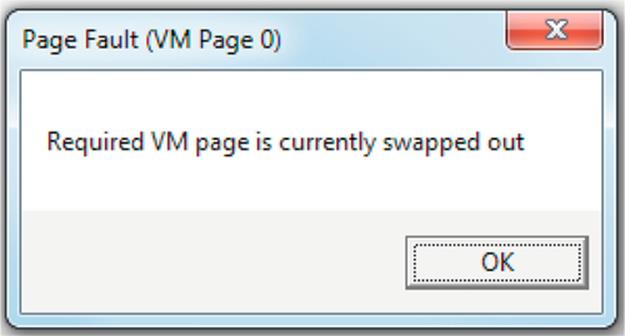

5. Try to change the first character you typed, the “A,” into an “@” by editing the application data in the same text box where you originally typed it. You will get a “page fault” because this character is in the page that we swapped out to disk; that is, at present, the process cannot access this page.

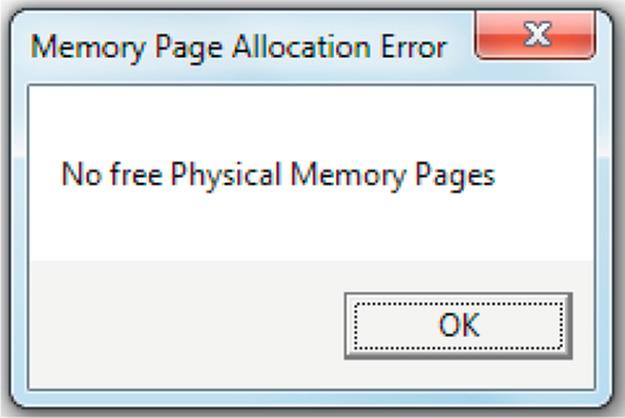

6. We need to swap in VM page 0 so we can access it—press the button labeled “Swap IN (to Memory)” for VM page 0 at the top of the right-hand pane. What happens? We get a page allocation error, because there are no free pages of physical memory that can be used for the swap-in.

7. We need to select a page to swap out so as to create space to swap in VM page 0. Ideally, we will choose a page that we won't need for a long time, but this can be difficult to predict. In this case, we shall choose VM page 1. Press the button labeled “Swap OUT (to Disk)” for VM page 1 at the top of the right-hand pane. Notice that VM page 1 is indeed swapped out and appears in the disk pages section of the display.

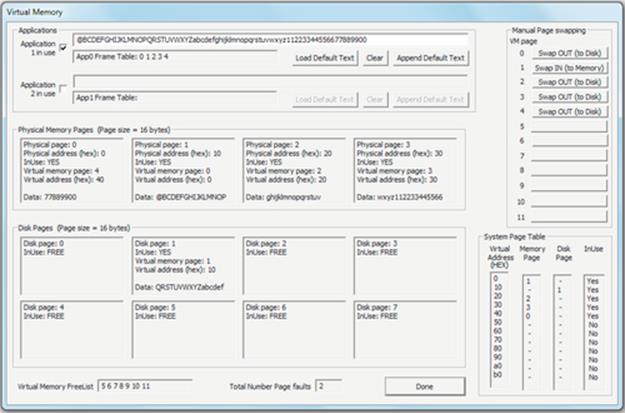

8. Now, we try to swap in VM page 0 again. Press the button labeled “Swap IN (to Memory)” for VM page 0 at the top of the right-hand pane again. This time, it works and the page appears back in physical memory—but not in its original place—the contents are still for page 0, but this time, the page is mapped as physical page 1. This is a very important point to note: The system needs to keep a mapping of the ordering of the pages and this is done by the fact that the page is still numbered as VM page 0. The frame table for application 1 confirms that it is using page 0 (as well as pages 1, 2, 3, and 4). Note also that as soon as the page reappears in physical memory, the application is able to access it and the “A” is finally changed to the “@” you typed.

The screenshot below shows what you should see after step 8 has been completed.

Expected Outcome for Part 2

You should be able to see how the VM pages have been moved between physical memory and disk as necessary to make more storage available than the actual amount of physical memory in the system and to enable the application to access any of the pages when necessary. Note that steps 6-8 were separated for the purpose of exploration of the VM system behavior, but in actual systems, these are carried out automatically by the VM component of the operating system.

Reflection

It is important to realize that in this activity, we have played the role of the operating system by choosing which page to swap out on two occasions: first, to create additional space in the physical memory and, second, to reload a specific swapped out page that we needed to access. In real systems, the operating system must automatically decide which page to swap out. This is achieved using page replacement algorithms that are based on keeping track of page accesses and selecting a page that is not expected to be needed in the short term, on a basis such as it being either the Least Recently Used or Least Frequently Used page.

Further Exploration

VM is one of the more complex functions of operating systems and can be quite difficult to appreciate from a purely theoretical approach. Make sure you understand what has happened in this activity, the VM mechanism by which it was achieved, and the specific challenges that such a mechanism must deal with. Carry out further experiments with the simulation model as necessary to ensure that the concept is clear to you.

Figure 4.9 shows the VM manager behavior as the process makes various memory page requests during Activity R2. Part (a) depicts the first four memory page allocation requests, which are all granted. Part (b) shows that the fifth memory page request causes an allocation error (all RAM is in use). Part (c) shows how VM page 0 is swapped out to free RAM page 0, which is then allocated to the requesting process (as VM page 4); this coincides with the end of part 1 of Activity R2. Part (d) shows that when VM page 0 is subsequently requested by the process, it causes a page fault (the page is not currently in RAM). Part (e) shows the steps required to swap in VM page 0 so that the process can access it. E1: VM page 1 was swapped out (from RAM page 1) to disk page 1 (because initially, VM page 0 occupied disk page 0 so disk page 1 was the next available). This frees RAM page 1. E2: VM page 0 was then swapped in to RAM page 1, so it is accessible by the process. This reflects the state of the system at the end of part 2 of Activity R2.

FIGURE 4.9 Illustration of VM manager behavior during Activity R2.

4.5 Resource Management

4.5.1 Static Versus Dynamic Allocation of Private Memory Resources

Memory can be allocated to a process in two ways. Static memory allocation is performed when a process is created, based directly on the declarations of variables in the code. For example, if an array of 20 characters is declared in the code, then a block of 20 bytes will be reserved in the process' memory image and all references to that array will be directed to that 20-byte block. Static memory allocation is inherently safe because by definition, the amount of memory needed does not change as the process is running.

However, there are situations where the developer (i.e., at design time) cannot know precisely how much memory will be needed when a program runs. This typically arises because of the run-time context of the program, which can lead to different behaviors on each execution instance. For example, consider a game application in which the server stores a list of active players' details. Data stored about each player may comprise name, high score, ratio of games lost to games won, etc.; perhaps a total of 200 bytes per player, held in the form of a structure. The designer of this game might wish to allow the server to handle any number of players, without having a fixed limit, so how is the memory allocation performed?

There are two approaches that can be used. One is to imagine the largest possible number of players that could exist, to add a few extra for luck, and then to statically allocate an array to hold this number of player details structures. This approach is unattractive for two reasons: Firstly, a large amount of memory is allocated always, even if only a fraction of it is actually used, and secondly, ultimately, there is still a limit to the number of players that can be supported.

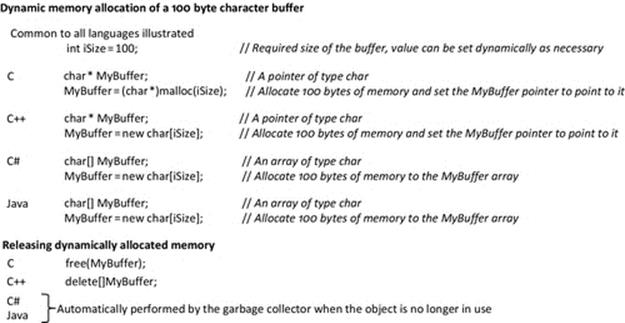

The other approach that is generally more appropriate in this situation is to use dynamic memory allocation. This approach enables an application to request additional memory while the program is running. Most languages have a dynamic memory allocation mechanism, often invoked with an allocation method such as malloc() in C or by using a special operator such as “new” to request that enough memory for a particular object is allocated. Using this approach, it is possible to request exactly the right amount of memory as and when needed. When a specific object that has been allocated memory is no longer needed, the memory can be released back to the available pool. C uses the free() function, while C++ has a “delete” operator for this purpose. In some languages such as C# and Java, the freeing of dynamically allocated memory is performed automatically when the associated object is no longer needed; this is generically termed “garbage collection” (Figure 4.10)

FIGURE 4.10 Dynamic memory allocation of a character array (compare with the static allocation code shown in Figure 4.1).

This approach works very well in applications in which it is easy to identify all of the places in the code and the logical flows through that code, where the objects are created and where the objects need to be destroyed.

However, in programs with complex logic or in which the behavior is dependent on contextual factors leading to many different possible paths through the code, it can be difficult to determine where in the code to allocate and release memory for objects. This can lead to several types of problem, which include the following:

• An object is destroyed prematurely, and subsequently, an access to the object is attempted causing a memory-access violation.

• Objects are dynamically created in a loop that does not terminate properly, thus causing spiraling memory allocation that will ultimately reach the limit that the operating system will allow, leading to an out-of-memory error.

• Objects are created over time, but due to varying paths through the code, some do not get deleted; this leads to an increasing memory usage over time. This is called a “memory leak” as the system gradually “loses” available memory.

These types of problem can be particularly difficult to locate in the code, as they can arise from the run-time sequence of calls to parts of the code which are individually correct; it is the actual sequence of calls to the object creation and object deletion code that is erroneous, and this can be difficult to find with design-time test approaches. These types of problem can ultimately lead to the program crashing after it has been running for some period of time, and the actual behavior at the point of crashing can be different each time, therefore making detection of the cause even harder.

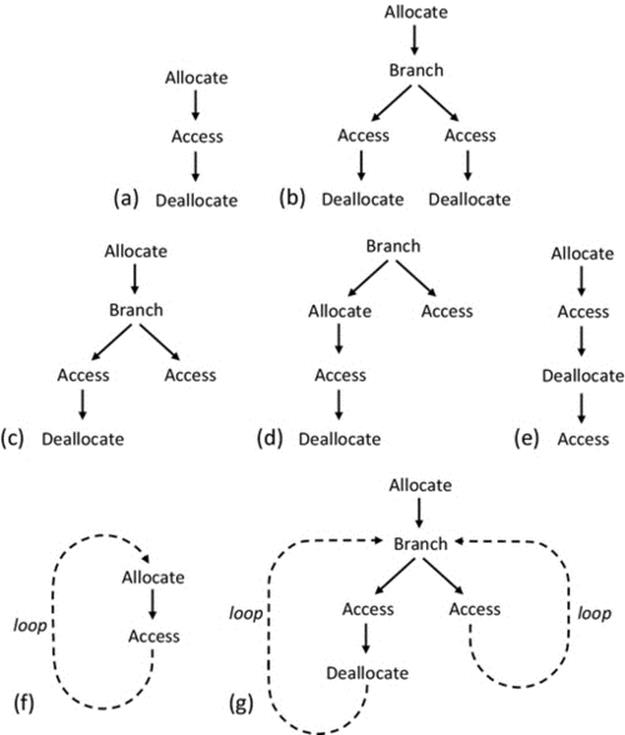

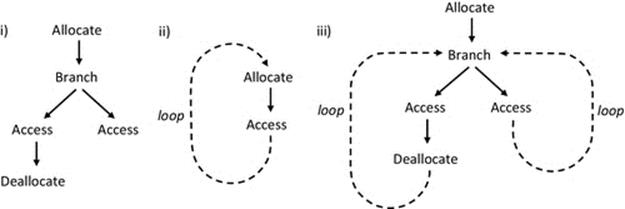

For these reasons, the use of dynamic memory allocation should be treated with utmost respect and care by developers and is perhaps the most important aspect of program design from a memory-resource viewpoint. Figure 4.11 illustrates some common dynamic memory allocation patterns.

FIGURE 4.11 Dynamic memory allocation and deallocation. Examples of correct sequences and some simplified illustrations of common mistakes.

Common dynamic memory allocation and deallocation sequences are illustrated in Figure 4.11. The scenarios are kept simple for the purpose of clarity and are intended to show general common patterns that arise rather than specific detailed instances. It is clear that if the common mistakes shown can occur in simple cases, there is considerable scope for these problems in larger, complex code with many contextual branches and loops and many function call levels and thus very large numbers of possible logical paths. Therefore, these problems can be very difficult to locate, and it is better to prevent them through high vigilance and rigor in the design and testing stages of development. For the specific patterns shown: (a) is the simplest correct case; (b) illustrates a more complex yet correct scenario; (c) causes a memory leak, but the program logic itself will work; (d) can cause an access violation as it is possible to attempt to access unallocated memory, depending on which branch is followed; (e) causes an access violation because there is an attempt to access memory after it has been de-allocated; (f) causes a behavior to arise that is highly dependent on the loop characteristics—it causes a memory leak, and if the loop runs a large number of times, the program may crash due to memory allocation failure, having exhausted the memory availability limits; (g) is representative of a wide variety of situations with complex logical paths, where some paths follow the allocation and access rules of dynamic memory allocation correctly and some do not. In this specific scenario, one branch through the code de-allocates memory that could be subsequently accessed in another branch. In large software systems, this sort of problem can be very complex to track down, as it can be difficult to replicate a particular fault that may only arise if a certain sequence of branches, function calls, loop iterations, etc. occurs.

4.5.2 Shared Resources

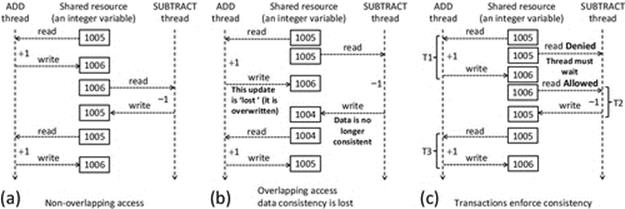

Shared resources must be managed in such a way that the system remains consistent despite several processes reading and updating the resource value. This means that while one process (or thread) is accessing the resource, there must be some restriction on access by other processes (or threads). Note that if all accesses are reads, then there is no problem with consistency because the resource value cannot change. However, even if only some accesses involve writes (i.e., changing the value), then care must be taken to control accesses to maintain consistency.

One way in which a system can become inconsistent is when a process uses an out-of-date value of a variable as the basis of a computation and subsequently writes back a new (but now erroneous) value. The lost update problem describes a way in which this can occur.

Figure 4.12 illustrates the problem of lost updates. The three parts to the figure all represent a scenario in which two threads update a single shared variable. To keep the example simple, one thread just increments the variable's value, and the other thread just decrements the variable's value. Thus, if equal numbers of events occur in each thread, the resulting value should be the same as its starting value. Part (a) shows an ideal situation (that cannot be guaranteed) where two or more processes access a shared resource in an unregulated way without an overlap ever occurring in their access pattern; in such a scenario, the system remains consistent. Part (b) shows how it is possible for the accesses to overlap. In such a case, it is possible that one process overwrites the updates performed by the other process, hence the term “lost update.” In this example, the ADD thread reads the value and adds one to it, but while this is happening, the SUBTRACT thread reads the original value and subtracts one from it. Whichever thread writes its update last overwrites the other thread's update, and the data become inconsistent. In this specific case, one thread adds 1 and one thread subtracts 1, so the value after these two events should be back to where it started. However, you can see that the resulting value is actually one less than it should be. The third event, on the ADD thread, does not actually overlap any other accesses, but the data are already inconsistent before this event starts.

FIGURE 4.12 The problem of lost updates.

To put this “lost update” concept into the context of a distributed application, consider a banking system in which the in-branch computer systems and automatic teller machines (ATM) are all part of a large complex distributed system. The ADD thread could represent someone making a deposit in a branch to a joint account, while at the same time, the SUBTRACT thread represents the other account holder making a withdrawal from an ATM. In an unregulated-access scheme, the two events could overlap as in part (b) of Figure 4.12 depending on the exact timing of the events and the various delays in the system; one of the events could overwrite the effect of the other. This means that either the bank or the account holders lose money!

4.5.3 Transactions

The lost update problem illustrates the need to use special mechanisms to ensure that system resources remain consistent. Transactions are a popular way of achieving this by protecting a group of related accesses to a resource by a particular process from interference arising from accesses by other processes.

The transaction is a mechanism that provides structure to resource access. In overview, it works as follows: When a process requests access to a resource, a check is performed to make sure that the resource is not already engaged in an on-going transaction. If so, the new request must wait. Otherwise, a new transaction is started (preventing access to the resource by other processes). The requesting process then makes one or more accesses that can be read or written in any order, until it is finished with the resource, and the transaction is then terminated and the resource is released.

To ensure that transactions are implemented robustly and provide the appropriate level of protection, there are four criteria that must be met:

Atomicity. The term atomic is used here to imply that transactions must be indivisible. A transaction must be carried out in its entirety, or if any single part of it cannot be completed or fails, then none of it must be completed. If a transaction is in progress and then a situation arises that prevents it from completing, all changes that have been made must be rolled back so that the system is left in the same consistent state as it was originally, before the transaction began.

Consistency. Before a transaction begins, the system is in a certain stable state. The transaction moves the system from one stable state to another. For example, if an amount of money is transferred from one bank account to another, then both the deduction of the sum from one account and the addition of the sum to the other account must be carried out, or if either fails, then neither must be carried out. In this way, money cannot be “created” by the transaction (such as if the addition took place but the deduction did not) or “lost” (such as if the deduction took place but not the addition). In all cases, the total amount of money in the system remains constant.

Isolation. Internally, a transaction may have several stages of computation and may write temporary results (also called “partial” results). For example, consider a “calculate net interest” function in a banking application. The first step might be to add interest at the gross rate (say 5%), so the new balance increases by 5% (this is a partial result, as the transaction is not yet complete). The second step might be to take off the tax due on the interest (say at 20%). The net gain on the account in this particular case should be 4%. Only the final value should be accessible. The partial result, if visible, would lead to errors and inconsistencies in the system. Isolation is the requirement that the partial results are not visible outside of the transaction, so that transactions cannot interfere with one another.

Durability. Once a transaction has completed, the results should be made permanent. This means that the result must be written to nonvolatile secondary storage, such as a file on a hard disk.

These four criteria are collectively referred to as the ACID properties of transactions.

Transactions are revisited in greater depth in Chapter 6 (Section 6.2.4); here, the emphasis is on the need to protect the resource itself rather than the detailed operation of transactions.

Part (c) of Figure 4.12 illustrates how the use of transactions can prevent lost updates and thus ensure consistency. The important difference between part (b) and part (c) of the figure is that (in part (c)) when the ADD thread's activity is wrapped in a transaction T1, the SUBTRACT thread's transaction T2 is forced to wait until T1 has completed. Thus, T2 cannot begin until the shared resource is stable and consistent.

4.5.4 Locks

Fundamentally, a lock is the simple idea of marking a resource as being in-use and thus not available to other processes. Locks are used within transactions but can also be used as a mechanism in their own right. A transaction mechanism places lock activities into a structured scheme that ensures that the resource is first locked, then one or more accesses occur, and then the resource is released.

Locks can apply to read operations, write operations, or read and write operations. Locks must be used carefully as they have the effect of serializing accesses to resources; that is, they inhibit concurrency. A performance problem arises if processes that hold locks on resources do not release them promptly after they finish using the resource. If all accesses are read-only, then the lock is an encumbrance that does not actually have any benefit.

Some applications lock resources at a finer granularity than others. For example, some databases lock an entire table during access by a process, while others lock only the rows of the table being accessed, which enhances concurrency transparency because other processes can access the remainder of the table while the original access activity is ongoing.

Activity R3 explores the need for and behavior of locks and the timing within transactions of when locks are applied and released.

Activity R3

Using the Operating Systems Workbench to explore the need for locks and the timing within transactions of when locks are applied and released

Prerequisite

Download the Operating Systems Workbench and the supporting documentation from the book's supplementary materials website.

Read the document “Threads (Threads and Locks) Activities and Experiments.”

Learning Outcomes

1. To gain an understanding of the concept of a transaction

2. To understand the meaning of “lost update”

3. To explore the need for and effect of locking

4. To explore how an appropriate locking regime prevents lost updates

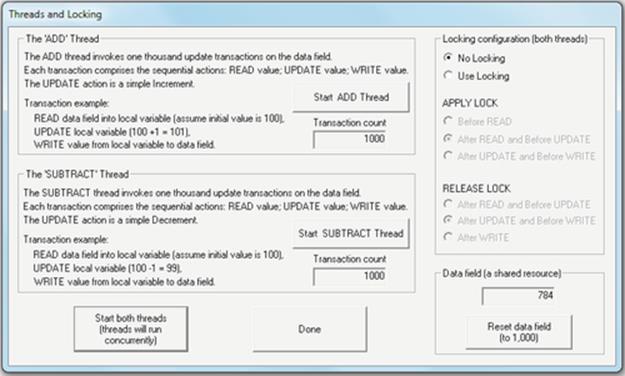

This activity uses the “Threads and Locks” simulation provided by the Operating Systems Workbench. Two threads execute transactions that access a shared memory location.

Part 1: Run threads without locking to see the effect of lost updates.

Method

1. Press the “Reset data field” button and check that the value of the data field is initialized to 1000. Run the ADD thread by clicking the “Start ADD Thread” button. This will carry out 1000 transactions each time adding the value 1. Check if the end result is correct.

2. Now, run the SUBTRACT thread by clicking the “Start SUBTRACT Thread” button. This will carry out 1000 transactions each time subtracting the value 1. Check if the end result is correct (it should be back to the original value prior to step 1).

3. Press the “Reset data field” button again; check that the value of the data field is initialized to 1000. Without setting any locking (leave the selection as “No Locking”), run both threads concurrently by clicking the “Start both threads” button. This will carry out 1000 transactions of each thread. The threads run asynchronously (this concept has been discussed in Chapter 2). Each thread runs as fast as it is allowed, carrying out its particular action (so either adding or subtracting the value 1 from the data value). Check the end result—is it correct?

The screenshot below provides an example of the behavior that occurs when the two threads are run concurrently without locking.

Expected Outcome

Overlapped (unprotected) access to the memory resource has led to lost updates, so that although each thread has carried out the same number of operations (which in this simulation are designed to cancel out), the end result is incorrect (it should be 1000).

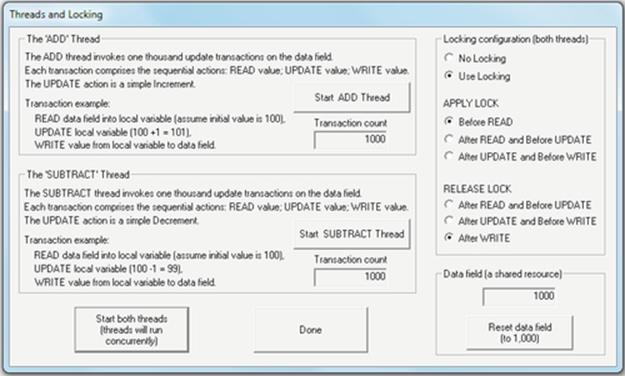

Part 2: Using locks to prevent lost updates

Method

Experiment with different lock and release combinations to determine at which stage in the transaction the lock should be applied and at which stage it should be released to achieve correct isolation between transactions and thus prevent lost updates.

1. On the right-hand pane, select “Use Locking.”

2. The transactions in the two threads each have three stages, during which they access the shared data field (Read, Update, and Write). The APPLY LOCK and RELEASE LOCK options refer to the point in the transaction at which the lock is applied and released, respectively. You should experiment with the APPLY and RELEASE options to determine which combination(s) prevents lost updates.

The second screenshot shows a combination of lock timing that results in complete isolation of the two threads' access to the memory resource, thus preventing lost updates.

Expected Outcome

Through experimentation with the various lock timing combinations, you should determine that the isolation requirements of transactions are only guaranteed when all accesses to the shared resource are protected by the lock. Even just allowing one thread to read the data variable before the lock is applied is problematic if the second thread then changes the value before releasing the lock so that the first thread can write its change (which is based on the previously read value). See the main text for full discussion of lost updates.

Reflection

This activity has illustrated the need for protection when multiple processes (or threads) access a shared resource. To ensure data consistency, the four ACID properties of transactions must be enforced (see main text for details).

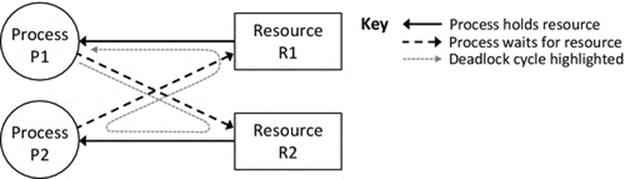

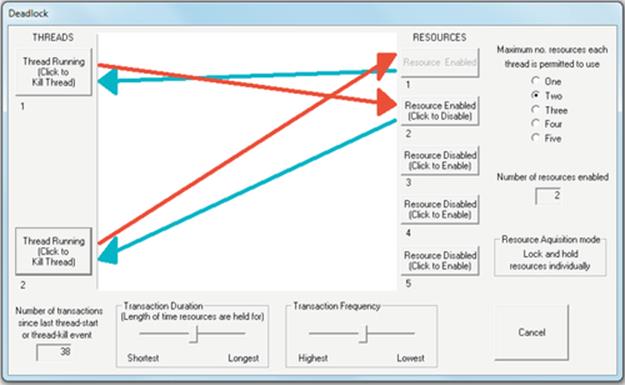

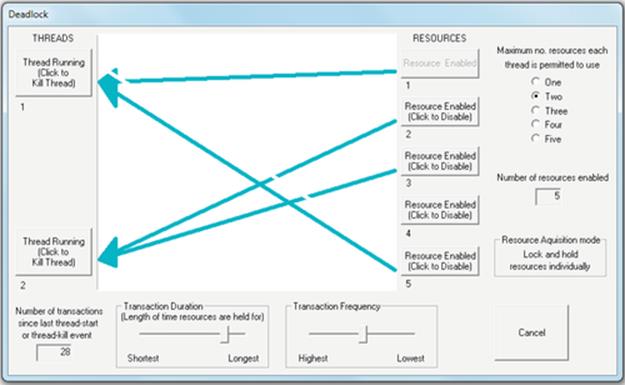

4.5.5 Deadlock

Depending on the way in which resources are allocated and on which resources are already held by other processes, a specific problem called deadlock can arise in which a set of two or more processes are each waiting to use resources held by other processes in the set, and thus, none can proceed. Consider the situation where two processes P1 and P2 each require to use resources R1 and R2 in order to perform a particular computation. Suppose that P1 already holds R1 and requests R2 and that P2 already holds R2 and requests R1. If P1 continues to hold R1 while it waits for R2 and P2 continues to hold R2 while waiting for R1, then a deadlock occurs—that is, neither process can make progress because each requires a resource that the other is holding, and each will continue to hold the resource until it has made progress (i.e., used the resource in its awaited computation). The situation is permanent until one of the processes is removed from the system. Figure 4.13 illustrates this scenario.

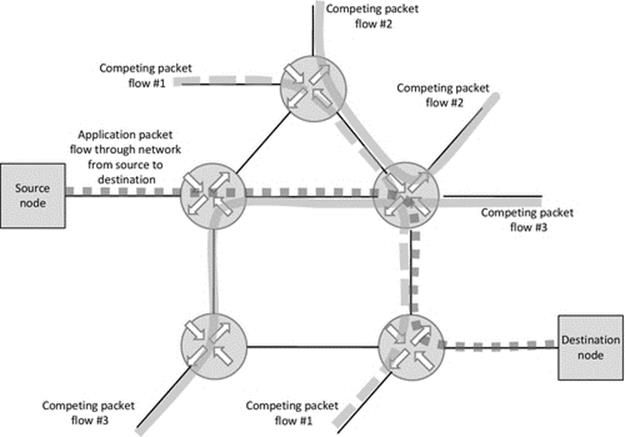

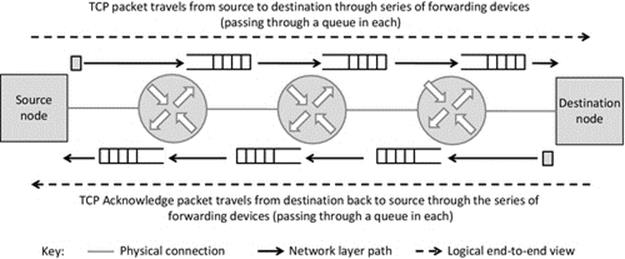

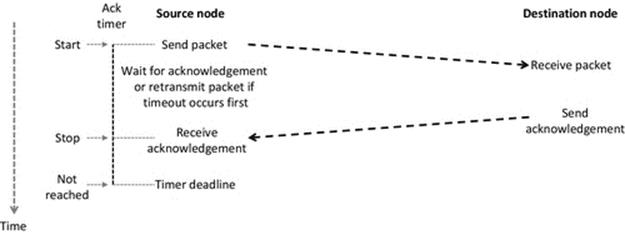

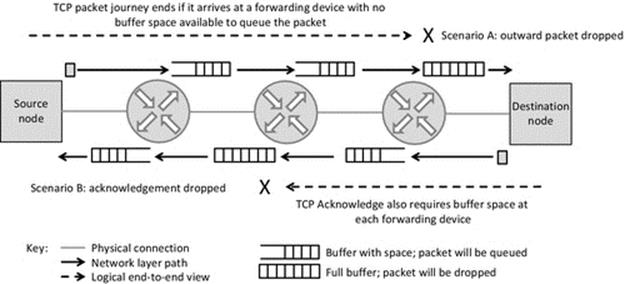

FIGURE 4.13 A deadlock cycle.