Cyber Security Basics: Protect your organization by applying the fundamentals (2016)

Section Zero: Introduction

“You can't build a great building on a weak foundation. You must have a solid foundation if you're going to have a strong superstructure.”

Gordon B. Hinckley

Introduction

One of the worst man-made disasters in history was the collapse of a coliseum in Fidenae, Italy in the year 27 A.D. This catastrophe resulted in the death of over 20,000 people. To prevent tragedies like this from ever occurring again, the Roman senate passed laws that mandated that all large stadiums be built on a “sound foundation.” This event taught the world a valuable lesson that has influenced building construction ever since.[1]

A building with a solid foundation rarely falls down on its own, and it can even prove resilient against significant attacks. The same goes for a security program that is built and reinforced using sound design principles and best practices. This book is intended as a guide for identifying and implementing solutions that support a solid information security program, whether that program does not yet exist or is being improved (and there will always be room for improvement). Unlike the foundation of a physical structure, however, security solutions and programs cannot be set in stone.

This book is not meant to be a comprehensive text on every aspect of information security. Instead, the goal is to cover the fundamentals. Organizations can establish a competent and effective information security program by focusing on best practices, which translate into specific security controls and the processes that support them. This book also aims to reduce the technical hurdles that sometimes prevent solutions from being implemented, whether those hurdles stem from a lack of understanding or from feeling overwhelmed by the myriad choices available. Too much complexity is a deterrent to security (a concept covered in more detail later in this section). The objective is to clear away some of the technical fog that often surrounds information security efforts and keeps them from succeeding.

For this book, an organization or entity can be defined as one of the following:

· A for-profit company

· A not-for-profit organization or foundation

· A federal or local government unit

· A library, hospital, or school

Just as the size and composition of an organization can vary, so can the makeup of the team responsible for maintaining the security of its information assets. The organization may be fortunate enough to have a dedicated InfoSec or cyber security team with an appropriate level of staffing. Or it may have just a single individual who performs these duties while juggling several other responsibilities. Regardless of the size of the team, the experience level of its members, or the size or scope of the organization, this book will hopefully provide the fundamentals for building a new, or reinforcing an existing, information security foundation.

0.1 Building a Foundation

This book is intended to provide an InfoSec primer for those with a beginner to intermediate skill level in the field. Hopefully this book will also provide value to those who are experienced with the challenges of protecting an entity’s data, network, endpoints and employees from cyber-attacks. The goal is to demystify some of what may be considered to be the more complicated technical aspects of InfoSec, and to provide the fundamentals of a good information security program that can be executed by individuals of all skill and experience levels.

Ostensibly, everyone at an organization is playing on the same team (the insider threat aside). There is therefore no reason why security controls cannot be implemented in a way that supports IT operations and is minimally disruptive to them, while still protecting against attacks that originate from both inside and outside the organization.

0.2 Demystifying IT Security

By demystifying IT security, the chasm that separates security and non-security groups can be bridged so that everyone can work together to raise the security posture of the organization. Focusing on clear, easy-to-understand best practices can lift the fog that obscures many security concepts.

The contents of this book are broken up into the following three main sections of information security:

· Protect: Take steps to implement controls to prevent attacks and protect the organization.

· Detect: Implement sensors and monitoring solutions that alert appropriate personnel when certain security events or incidents take place.

· Respond: React to security events and incidents quickly and appropriately.

Technical details should not get in the way of practicing good security. Security does not need to be overly complicated. If best practices are followed, a majority of security weaknesses can be mitigated, and the majority of attacks we read about in the news almost every day could be prevented.

0.3 Using a Risk-Based Approach

A risk-based approach may be the best way to provide some clarity. Focus first on the risks to the organization. Once you come up with a list of risks, this list can be prioritized according to severity, which helps identify what to focus on first. Some questions to ask during the risk gathering process include:

· What are the biggest threats?

· What does the organization value most?

· What kind of attack would be the most damaging to the organization?

By taking the time to answer these questions, you can focus the finite resources available on the highest-priority items first. But remember: if everything is a priority, then nothing is a priority.

There is a concept called the defender’s dilemma. The gist of this term of art is that the defender has to protect all points of a system, yet an attacker only has to find one exploitable weakness to be successful. Due to resource constraints, it is nearly impossible to fortify every aspect of an organization, so risk management can help determine the most important points that need to be defended. A more practical approach, therefore, is to estimate where controls will provide the most benefit and apply them there.

Another concept to be aware of is residual risk. Applying security controls reduces risk, and the risk that is left over after those controls are applied is the residual risk. You should continually work on reducing residual risk.
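To make the prioritization concrete, here is a minimal sketch that scores each risk as likelihood × impact and then estimates residual risk by assuming existing controls remove a fixed fraction of that score. The 1 to 5 scales, the risk names, and the simple multiplicative model are illustrative assumptions, not a formal risk methodology.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (minor) to 5 (severe)
    control_effectiveness: float = 0.0  # assumed fraction of the risk removed by existing controls

    @property
    def inherent(self) -> int:
        """Risk before any controls are applied."""
        return self.likelihood * self.impact

    @property
    def residual(self) -> float:
        """Risk left over after controls are applied."""
        return self.inherent * (1.0 - self.control_effectiveness)


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest residual risk."""
    return sorted(risks, key=lambda r: r.residual, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Ransomware on file servers", likelihood=4, impact=5, control_effectiveness=0.5),
        Risk("Lost unencrypted laptop", likelihood=3, impact=4),
        Risk("Website defacement", likelihood=2, impact=2),
    ]
    for risk in prioritize(register):
        print(f"{risk.name}: inherent={risk.inherent}, residual={risk.residual:.1f}")
```

With these made-up numbers, the lost laptop (residual 12) ends up ahead of ransomware (inherent 20, residual 10) because controls already cover part of the ransomware risk, which points the next unit of effort where it would do the most good.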

0.4 General Security Concepts

Each security decision should address at least one of the concepts in this section. If it doesn’t, challenge its value. Implementing a new control means making a change to the production environment, and any change runs the risk of breaking something that was working before, or even of increasing the attack surface. Changes should not be taken lightly. Any change that is made should maintain, if not improve, the security posture of an organization. Therefore, if you do decide to implement a security control, you should be able to map the net benefit of that change back to a core security concept.

Speaking of change for change’s sake, Security Theater is one of my favorite expressions. It means implementing a security control only for the sake of saying that a security control has been implemented. In other words, it is busy work that provides no actual improvement in security. Since changes of this type do not make the organization any safer, Security Theater is definitely something to avoid.

Changes also run the risk of adding complexity to an organization. As a rule, the more complex something is, the greater the chance that something will go wrong with it. In a security context, complexity increases the chance of vulnerabilities and bugs that can be exploited. In addition to their value, proposed changes should therefore be evaluated on the basis of the complexity they add. Changes that increase complexity should be considered carefully, as they can adversely impact the overall security of the organization.

The following can be considered the top 10 principles security professionals should follow, in no particular order.

0.4.1 Least Privilege

Objects can be protected by limiting access to them. Permissions, otherwise known as privileges or rights, can be assigned to objects. An object can be a protected resource like a document, a database, or a system. Restricting how a subject (a user, system, or process) can interact with an object is an example of hardening. Hardening is applying security best practices to an object to make it more resilient to attacks.

Access permissions can be enforced and managed by an access control solution. Under the principle of least privilege, permissions are explicitly defined based on the rights that a subject needs, and the permissions assigned to objects do not exceed what is needed. Least privilege is also known as “need to know.”
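A minimal sketch of how an access check can enforce least privilege: every permission is granted explicitly, and anything not listed is denied by default. The subjects, the object, and the in-memory grants table are hypothetical; a real deployment would rely on the access control features of the operating system, directory service, or application.

```python
# Hypothetical explicit grants: (subject, object) -> set of allowed actions.
# Anything not listed here is denied by default.
GRANTS: dict[tuple[str, str], frozenset[str]] = {
    ("alice", "payroll.xlsx"): frozenset({"read"}),
    ("bob", "payroll.xlsx"): frozenset({"read", "write"}),
}


def is_allowed(subject: str, obj: str, action: str) -> bool:
    """Allow an action only if it was explicitly granted (deny by default)."""
    return action in GRANTS.get((subject, obj), frozenset())


if __name__ == "__main__":
    print(is_allowed("alice", "payroll.xlsx", "read"))   # True
    print(is_allowed("alice", "payroll.xlsx", "write"))  # False: never granted
    print(is_allowed("carol", "payroll.xlsx", "read"))   # False: no grant at all
```

The deny-by-default lookup is the key design choice: forgetting to add a grant fails closed rather than open.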

To effectively manage access for all subjects and objects, a ticket system can be used so that permission changes get the appropriate approvals and are documented. Ideally, access is tied to Human Resources so that permissions are based on the role of the employee, and so that those same permissions are revoked if that employee separates from the organization.

A permissions snowball is a phenomenon where the amount of access someone has directly reflects the amount of time he or she has been with the organization. Privileges are continually granted as that person changes roles, but they are never revoked, usually because of poor access management processes. Effective access management can help ensure that users only have the permissions needed to perform the job, and no more. When someone changes roles, access rights for the previous role should be revoked, and the rights needed for the new role should be granted.
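One way to avoid a permissions snowball is to derive each user's access entirely from his or her current role, so that a role change replaces the old rights instead of adding to them. The sketch below uses hypothetical role names and permission strings, and also flags any permissions a user holds beyond what the current role calls for.

```python
# Hypothetical role definitions, ideally kept in sync with Human Resources data.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "accounts_payable": {"erp:view_invoices", "erp:pay_invoices"},
    "sales": {"crm:view_accounts", "crm:edit_accounts"},
}


def permissions_for(role: str) -> set[str]:
    """Access is computed from the current role only; unknown roles get nothing."""
    return set(ROLE_PERMISSIONS.get(role, set()))


def excess_permissions(current_grants: set[str], role: str) -> set[str]:
    """Permissions the user holds that the current role does not require."""
    return current_grants - permissions_for(role)


if __name__ == "__main__":
    # A user moved from accounts payable to sales, but the old rights were never revoked.
    held = permissions_for("accounts_payable") | permissions_for("sales")
    print(sorted(excess_permissions(held, "sales")))
    # ['erp:pay_invoices', 'erp:view_invoices']

    # On a role change, replace (do not extend) the user's permissions.
    held = permissions_for("sales")
    print(sorted(excess_permissions(held, "sales")))  # []
```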

0.4.2 Separation of Duties

To protect against the insider threat, permissions should be designed such that there is no single individual with access to everything. Excessive privileges can give individuals of questionable morals the opportunity to commit end-to-end fraud. The protection against this is to create logical barriers between systems and functionality in the form of a secure permissions design.

Separation of duties is similar to the principle of least privilege, except the separation of duties is more focused on distributing permissions among more than one individual. For example, let’s look at the IT systems of an insurance company. To reduce the chance of fraud being committed by an employee, separation of duties should be used to prevent the same person from being able to both create a new insurance policy, and then file a claim against that policy. If one person were able to do both of these actions, insurance fraud could be the result.
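The insurance example can be expressed as a simple rule check. In this sketch, the record fields, the exception class, and the `file_claim` function are hypothetical; a claim is rejected if the person filing it is the same person who created the policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    policy_id: str
    created_by: str


class SeparationOfDutiesError(Exception):
    """Raised when one person would perform two conflicting actions."""


def file_claim(policy: Policy, filed_by: str, amount: float) -> dict:
    # Conflicting duties: the policy creator must not also file a claim against it.
    if filed_by == policy.created_by:
        raise SeparationOfDutiesError(
            f"{filed_by} created policy {policy.policy_id} and cannot file a claim on it"
        )
    return {"policy_id": policy.policy_id, "filed_by": filed_by, "amount": amount}


if __name__ == "__main__":
    policy = Policy("P-1001", created_by="dave")
    print(file_claim(policy, filed_by="erin", amount=2500.0))  # accepted
    try:
        file_claim(policy, filed_by="dave", amount=2500.0)
    except SeparationOfDutiesError as err:
        print("Blocked:", err)
```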

When designing a new system, or hardening an existing one, analysis should be done to determine what permissions subjects should have on objects. This analysis should include playing out different negative scenarios where insider fraud can be committed. These scenarios can then be used to identify permissions, access controls, and the roles individuals will serve. Once the controls that have been identified are implemented, the chance of insider fraud can be greatly reduced.

0.4.3 Confidentiality

Even in a world of social networks and general acceptance that “privacy is dead,” some data still needs to remain confidential. This need could be driven by regulatory or legal requirements, or by the desire to prevent costly data breaches. Ensuring the confidentiality of sensitive data means limiting access to these data assets to authorized individuals only.

The following steps are part of a process that can be used to identify data that should be treated as confidential:

· Identify all of the data that the organization uses and retains. The result of this exercise should be a data inventory or data dictionary.

· Understand what regulations and legal requirements the organization needs to adhere to. These requirements should include how confidential data should be defined. There may also be an organizational policy regarding data and data retention. If so, these policies should also be consulted to determine how to correctly define confidential data.

· Review the organization’s data inventory (e.g. customer name, mailing address, etc.) and assign a confidentiality label to each data item. The labeling should follow the confidentiality labels recommended by relevant regulations and policies.

Take the time to identify all the data that will be transmitted and stored. All data used by production networks and systems should be covered, but to be thorough, include development and test environments as well. A data classification exercise is worth the effort: once the confidentiality requirements of data are identified, the appropriate controls, processes, and architectural design can be implemented. Encrypting data in transit and at rest is one of the best ways to ensure that this security principle is followed. Encryption by itself is not a complete solution, however; it must also be implemented properly.
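The outcome of the classification steps can be captured in something as simple as a data dictionary that maps each data item to a confidentiality label. The label names, the field names, the rule that unreviewed items default to the most restrictive label, and the rule that CONFIDENTIAL and above require encryption are all assumptions for illustration; the real labels and rules should come from the applicable regulations and policies.

```python
from enum import IntEnum


class Label(IntEnum):
    # Hypothetical label scheme, ordered from least to most restrictive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Data inventory: data item -> confidentiality label.
DATA_DICTIONARY: dict[str, Label] = {
    "product_catalog": Label.PUBLIC,
    "customer_name": Label.CONFIDENTIAL,
    "mailing_address": Label.CONFIDENTIAL,
    "payment_card_number": Label.RESTRICTED,
}


def label_for(item: str) -> Label:
    """Unclassified items are treated as RESTRICTED until someone reviews them."""
    return DATA_DICTIONARY.get(item, Label.RESTRICTED)


def needs_encryption(item: str) -> bool:
    """Assumed policy: CONFIDENTIAL and above must be encrypted in transit and at rest."""
    return label_for(item) >= Label.CONFIDENTIAL


if __name__ == "__main__":
    for item in ["product_catalog", "mailing_address", "new_unreviewed_field"]:
        print(item, label_for(item).name, needs_encryption(item))
```

Defaulting unknown items to the most restrictive label means a gap in the inventory errs on the side of protection.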

0.4.4 Integrity

Maintaining the integrity of data, systems and software means ensuring that those objects have not been subjected to unauthorized changes. These can be changes that are done either intentionally or accidentally.

System files are an excellent example of objects whose integrity is critical to protect. Changes to these files could be the work of an adversary, for example malware that has infected a system. Integrity checks can be performed by an agent installed on the device, by the operating system (OS), or by the low-level BIOS that underlies and supports it all. Because integrity controls may be available in different layers of a device, become familiar with the available options so you can make the right decision about how best to prevent unauthorized changes to critical files.
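Agent-based file integrity monitoring commonly works by recording a cryptographic hash of each critical file in a known-good state and comparing later hashes against that baseline. A minimal sketch using SHA-256 from Python's standard library follows; the function names are hypothetical, and a real tool would also need to protect the baseline itself from tampering.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths: list[Path]) -> dict[str, str]:
    """Record known-good hashes for the files to be monitored."""
    return {str(p): sha256_of(p) for p in paths}


def check_integrity(baseline: dict[str, str]) -> list[str]:
    """Return the files that were modified or deleted since the baseline was taken."""
    changed = []
    for name, expected in baseline.items():
        path = Path(name)
        if not path.exists() or sha256_of(path) != expected:
            changed.append(name)
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        critical = Path(tmp) / "hosts"
        critical.write_text("127.0.0.1 localhost\n")
        baseline = build_baseline([critical])
        print(check_integrity(baseline))  # [] because nothing has changed
        critical.write_text("127.0.0.1 localhost\n10.0.0.66 bank.example.com\n")
        print(check_integrity(baseline))  # the modified file is reported
```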

Documents and databases can also have integrity requirements. Some files, such as log files, should never be changed once they are created. Integrity checks on log files will let the appropriate teams know if modifications have been made or attempted. Detecting a user or process making changes to a log file could lead to the discovery of a malicious actor trying to cover his or her tracks.
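One common way to make log tampering detectable is a hash chain: each entry stores a hash that covers both its own message and the previous entry's hash, so editing or removing an earlier entry breaks verification from that point on. This is a minimal sketch with hypothetical names. On its own it does not stop an attacker who can rewrite the entire chain, and it does not notice the most recent entries being truncated, which is why logs should also be copied to a separate, protected repository.

```python
import hashlib
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, message: str) -> str:
    # prev_hash is always 64 hex characters, so the concatenation is unambiguous.
    return hashlib.sha256((prev_hash + message).encode("utf-8")).hexdigest()


def append(log: list[dict], message: str) -> None:
    """Add an entry whose hash chains to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"message": message, "hash": _entry_hash(prev_hash, message)})


def verify(log: list[dict]) -> Optional[int]:
    """Return the index of the first entry that fails verification, or None if intact."""
    prev_hash = GENESIS
    for i, entry in enumerate(log):
        if entry["hash"] != _entry_hash(prev_hash, entry["message"]):
            return i
        prev_hash = entry["hash"]
    return None


if __name__ == "__main__":
    log: list[dict] = []
    for msg in ["alice logged in", "alice read payroll.xlsx", "alice logged out"]:
        append(log, msg)
    print(verify(log))  # None: chain intact
    log[1]["message"] = "alice read lunch_menu.docx"  # someone edits history
    print(verify(log))  # 1: tampering detected at the edited entry
```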

0.4.5 Keep it Simple

Complexity is bad for security. They say the devil is in the details, and indeed it is in the obscure, less-understood dark corners of code and networks that opportunity presents itself to attackers. The more complicated something is (such as application code, network design, or firewall rules), the greater the chance there is something wrong with it. These flaws could be security vulnerabilities.

The Keep it Simple, Sir (KISS) approach should be used whenever possible when implementing IT and security-related solutions. This principle is also known as economy of mechanism.[2] Less complexity results in software and systems that are easier to use and support. It also makes it easier to find vulnerabilities and fix them. And when problems do arise, a less-complex system will be easier to troubleshoot to find the root cause.

Information systems and security are complicated enough. When deciding which solutions to use, and how they should be implemented, keep the KISS principle in mind. If a proposed change seems to introduce more complexity but not much value, it should be reconsidered.

0.4.6 Logging

Logging is the recording of activities performed by individuals and IT assets. The following table lists the properties that should, at a minimum, be recorded for each logged event.

| Property | Definition |
|---|---|
| Who | Source of the action: user, system, or process |
| What | Description of the action taken |
| When | When the event took place: a timestamp that is synchronized across systems |
| Where | Object involved or acted on to perform action |

Table 0.1: Each log event should tell the story “who did what, when and where.”
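As an illustration, each event can be emitted as a structured record that carries the four properties from the table, with the timestamp in UTC so that events from different systems line up. The `log_event` function, the field names, and the JSON format are assumptions rather than a required standard; keeping the clocks themselves synchronized would be the job of a time service such as NTP.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def log_event(who: str, what: str, where: str, when: Optional[datetime] = None) -> str:
    """Build one log record answering who did what, when, and where."""
    if when is None:
        when = datetime.now(timezone.utc)  # UTC avoids time zone confusion across systems
    record = {
        "who": who,      # user, system, or process that acted
        "what": what,    # description of the action taken
        "when": when.astimezone(timezone.utc).isoformat(),
        "where": where,  # object involved or acted on
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(log_event("alice", "deleted file", "fileserver01:/finance/q3.xlsx"))
```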

Logging gives an organization the ability to monitor the actions of employees, systems, and software. It can also provide valuable insight for researching activities, such as security events that may turn out to be security incidents. News reports regularly describe successful attacks in which the attacker maintained a prolonged presence on a network, meaning that malware and other compromises ran undetected. Ensuring that systems perform effective logging, and that those events are captured in a centralized, actively monitored log repository, is key to detecting malicious activity like this.

The quality and integrity of log data need to be beyond question. Security events and incidents should be handled with the assumption that any log files involved will later be used as evidence in investigations and legal proceedings. If there is any doubt that the log data is free from tampering, that data may be inadmissible as evidence. As a result, preservation of log data should follow a formal chain of custody process. Best practices for effective logging and log data preservation are covered later in section 3.2.2 Data Preservation.

0.4.7 Defense in Depth

A castle provides a good example of a defense in depth security strategy. A castle does not rely on a single defensive measure to provide total protection for the kingdom within. Its defenses include:

· Building the structure in a mountainous or hilly region that provides a high vantage point so that adversaries can be seen from afar

· A moat with a single drawbridge that can be retracted at the time of attack

· An outer wall

· An inner wall

· A main gate that provides a chokepoint

Fast forward to today. When it comes to IT systems, a single solution or control should never be relied on to provide total protection for an organization.

Figure 0.1: Most of today’s networks are protected by following a defense in depth approach.

The use of layered security can help ensure that an organization is still protected even if a single control fails. As in Figure 0.1, if the attacker gets past the firewall, the attack will hopefully be stopped by a subsequent security control. This is analogous to a castle where, if the enemy breaches the outer wall, there is still the inner wall and several other defenses to contend with.
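The layering idea can be modeled as a series of independent checks that a request must pass in turn, so that one control being misconfigured or bypassed does not by itself let the request through. The three layers below (a port filter, an address blocklist, and a login check) and their rules are hypothetical stand-ins for a firewall, an intrusion prevention system, and application authentication; the addresses come from the ranges reserved for documentation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Request:
    source_ip: str
    port: int
    authenticated: bool


# Each layer returns True if the request may continue to the next layer.
def firewall(req: Request) -> bool:
    return req.port in {443}  # hypothetical rule: only HTTPS is exposed


def ip_blocklist(req: Request) -> bool:
    return req.source_ip not in {"203.0.113.7"}  # hypothetical known-bad address


def app_login(req: Request) -> bool:
    return req.authenticated


Layer = Callable[[Request], bool]


def allowed(req: Request, layers: list[Layer]) -> bool:
    """A request gets through only if every layer lets it pass."""
    return all(layer(req) for layer in layers)


if __name__ == "__main__":
    layers = [firewall, ip_blocklist, app_login]
    attacker = Request("198.51.100.20", 443, authenticated=False)
    print(allowed(attacker, layers))  # False: stopped by the login check

    # Even if the firewall were misconfigured to allow everything,
    # the remaining layers still stop the unauthenticated request.
    layers_with_broken_firewall = [lambda r: True, ip_blocklist, app_login]
    print(allowed(attacker, layers_with_broken_firewall))  # False
```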

An adversary will follow the path of least resistance to reach a goal. If security controls block every attempt, the attacker will seek an alternate route, or a different target altogether. A well-defended fortress can not only defend itself against attacking hordes, but also deter enemies from expending the resources required to acquire the treasures protected within its high walls.

0.4.8 Fail Securely

All software has bugs. Just as no person is perfect, no software is perfect. Regardless of how pristine the software appears, it is just a matter of time before a security vulnerability is discovered. It could be code written by in-house or contracted developers, software that runs in vendor-provided solutions such as a security appliance, or components that developers download from open source repositories over the Internet.

Sometimes the issues are discovered accidentally. Other times it is the vendor or development community that finds (and hopefully fixes) them. But there may also be times when an adversary finds the vulnerabilities and perhaps keeps this information secret, or sells it to the highest bidder.

The methods by which software vulnerabilities are found can also vary. Sometimes it is by accident through non-malicious usage or a review of the software. Other times it can be the result of someone intentionally attempting to break the software in order to see what happens. When intentionally causing an application or server to fail, the attacker can gain information that can be used in a subsequent attack. This is a form of reconnaissance. Software failures can also present an opportunity for the adversary, such as privilege escalation or authentication bypass.

Software that is not designed to fail safe or fail secure has an increased chance of providing opportunities to an attacker. When software crashes, it should handle the failure in a safe and secure way. A secure software design will help ensure this. Some examples of the actions that software should take when encountering an exception, error or failure include:

· Close all connections to databases and protected resources

· Clear out all sensitive information from memory and caches

· Terminate sessions and invalidate tokens

Examples of information that can be provided by software that does not follow the fail secure principle include:

· Database connection information

· Authentication credentials

· Directory information and drive mappings

· Technical details about the software and systems it is running on

Software should be developed and implemented under the assumption that it will crash at some point. This crash should not provide an opportunity for attackers. The “fail safe” principle, where all possible exceptions and errors are handled in a consistent and secure way, should be part of the design to prevent sensitive information disclosure and other kinds of attacks.
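A minimal sketch of fail-secure error handling for a hypothetical request handler: if anything goes wrong, the handler logs the details internally, clears the session, returns only a generic message (never the exception text, table names, or stack trace), and always closes its database connection. The in-memory SQLite database and the session dictionary are stand-ins for real protected resources and session tokens.

```python
import logging
import sqlite3

logger = logging.getLogger("app")

GENERIC_ERROR = "An error occurred. Please try again later."


def handle_request(session: dict, query: str) -> str:
    conn = sqlite3.connect(":memory:")  # stand-in for a protected database
    try:
        rows = conn.execute(query).fetchall()
        return f"{len(rows)} row(s) returned"
    except Exception:
        # Technical details go to the internal log, not to the caller.
        logger.exception("Request failed")
        session.clear()  # terminate the session and drop any tokens it holds
        return GENERIC_ERROR
    finally:
        conn.close()  # always release the connection, on success or failure


if __name__ == "__main__":
    session = {"user": "alice", "token": "abc123"}
    print(handle_request(session, "SELECT 1"))               # 1 row(s) returned
    print(handle_request(session, "SELECT * FROM secrets"))  # generic message only
    print(session)                                           # {}
```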

0.4.9 Complete Mediation

Complete mediation supports the “trust but verify” principle. Many applications use protected resources like databases, file shares, authentication servers, etc. When software interacts with these types of resources, the design of the software should ensure that this interaction takes place in a circumspect way. For example, software should not open a connection to a database and leave that connection open for the duration of the user session. This is like opening the door for a guest, then leaving it open for the rest of the day. While it was opened only for a specific purpose, the persisted open connection presents a potential opportunity for the adversary to gain access to sensitive data and files.

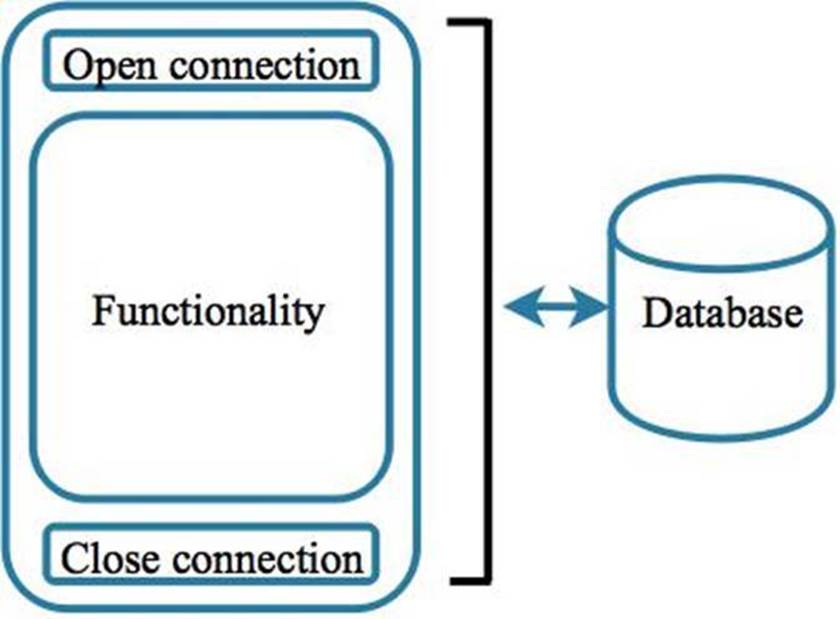

Figure 0.2: When a large block of functionality uses a single open connection, this presents a window of opportunity for an adversary.

By following the principle of complete mediation, access to protected resources is validated before it is provided. This validation occurs every time access is requested. Once the use of the resource is complete and no longer needed (such as a data fetch from a database) the connection is immediately closed.

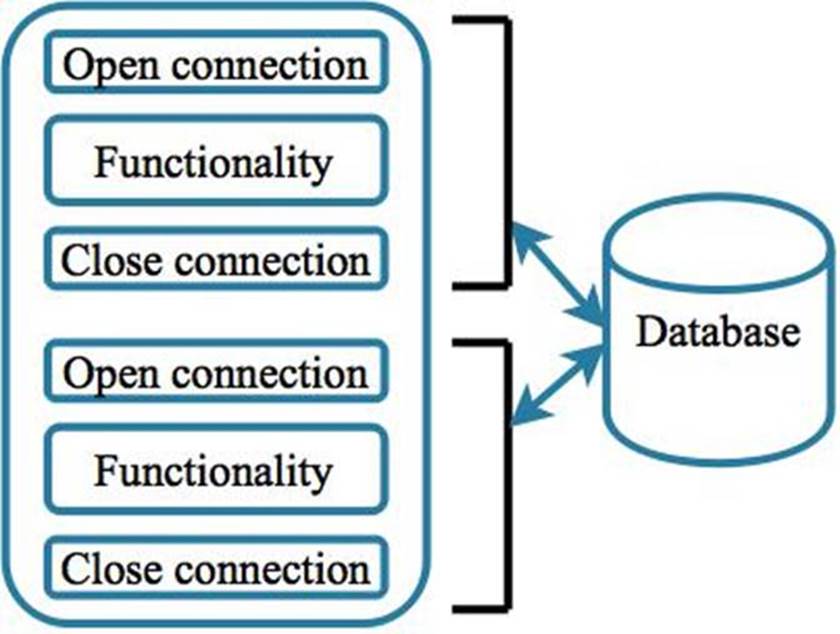

Figure 0.3: Explicitly requesting access before using it, then immediately terminating that access when it is no longer needed, severely reduces windows of opportunity for an attacker to leverage.

Code sections (a.k.a. the functionality) that use a database connection are surrounded by their own separate “open connection” and “close connection” blocks. An access validation check is performed in the open connection blocks, and the access termination is performed in the close connection blocks. When the principle of complete mediation is followed, an adversary has a greatly reduced chance of finding an open connection to a protected resource.
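The pattern in Figure 0.3 maps naturally onto a Python context manager: the access check runs every time a block of code asks for the resource, and the connection is closed as soon as the block ends, even if it raises an error. The permission table, the user names, and the logical database name pointing at an in-memory SQLite database are hypothetical placeholders.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator

# Hypothetical configuration: logical database name -> connection target.
DATABASES: dict[str, str] = {"orders": ":memory:"}

# Hypothetical permission table: user -> databases that user may query.
PERMISSIONS: dict[str, set[str]] = {"alice": {"orders"}}


@contextmanager
def mediated_connection(user: str, database: str) -> Iterator[sqlite3.Connection]:
    """Check access on every request, then close the connection when the work is done."""
    # Access is validated each time a block asks for the resource, not once per session.
    if database not in PERMISSIONS.get(user, set()):
        raise PermissionError(f"{user} is not authorized to use {database}")
    conn = sqlite3.connect(DATABASES[database])
    try:
        yield conn
    finally:
        conn.close()  # runs even if the block raises, so no connection is left open


if __name__ == "__main__":
    # Each block of functionality gets its own checked, short-lived connection.
    with mediated_connection("alice", "orders") as conn:
        print(conn.execute("SELECT 1").fetchone())  # (1,)

    try:
        with mediated_connection("mallory", "orders") as conn:
            print("never reached")
    except PermissionError as err:
        print("Denied:", err)
```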

0.4.10 Obscurity is Not Security

The best approach to security is to operate under the assumption that adversaries know all the secrets. Hoping that attackers don’t know certain things, such as how a network is designed, what security defenses are in place, or the internal details of how an application works, only provides a false sense of security. It should be assumed that adversaries will obtain the technical specifications, source code, and details about how things work. Even so, everything can remain secure thanks to the strength of the design and implementation of the software and systems an organization uses.

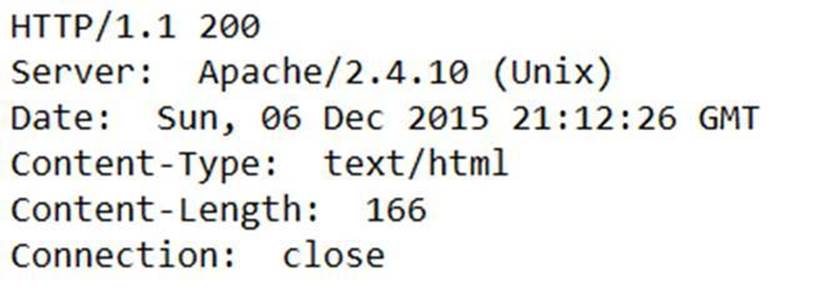

The design itself is what provides the security, not the secrecy of the design. Publicly accepted encryption standards are a good example of open design. The details of how these encryption algorithms work are publicly available, yet several publicly disclosed algorithms provide best-in-class security when implemented correctly. Even though obscurity should not be considered a complete solution on its own, a security strategy should include making the attacker’s job as difficult as possible, so that the cost of obtaining the objective exceeds its value. Information can be hidden strategically, such as by making web server information non-obvious to scans.

Figure 0.4: Some publicly-available information can be useful to attackers, such as web server banners, which could provide clues as to what vulnerabilities the server may have.

Some ways to hide or disguise Internet-facing web servers include:

· Hiding HTTP header information

· Changing banner information

· Disabling unnecessary services like ICMP (ping)
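As a small illustration of the first two items, the sketch below flags response headers that reveal software names or version numbers. The header names checked (`Server`, `X-Powered-By`, `X-AspNet-Version`) are common examples rather than an exhaustive list, and the sample response is made up; in practice the headers would be collected from the organization's own web servers.

```python
import re

# Headers that commonly reveal server software (not an exhaustive list).
REVEALING_HEADERS = {"server", "x-powered-by", "x-aspnet-version"}
VERSION_PATTERN = re.compile(r"\d+(\.\d+)+")  # e.g. "2.4.18" or "5.6.40"


def banner_findings(headers: dict[str, str]) -> list[str]:
    """Return a finding for each header that discloses software or version details."""
    findings = []
    for name, value in headers.items():
        if name.lower() not in REVEALING_HEADERS:
            continue
        if VERSION_PATTERN.search(value):
            findings.append(f"{name} discloses a version: {value!r}")
        else:
            findings.append(f"{name} discloses software: {value!r}")
    return findings


if __name__ == "__main__":
    sample = {
        "Server": "Apache/2.4.18 (Ubuntu)",
        "X-Powered-By": "PHP/5.6.40",
        "Content-Type": "text/html",
    }
    for finding in banner_findings(sample):
        print(finding)
```

Version strings are flagged separately because they map most directly to known vulnerabilities, as Figure 0.4 suggests.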

Information can not only be hidden from plain view but also deliberately changed. Disguising or changing information to throw off the adversary is also known as counterintelligence or disinformation. Air-gapping (removing Internet access) may also help keep information from being easily accessible. Security should be evaluated regularly, for example through penetration tests or red team assessments. These can reveal what information about the organization can be easily obtained, and identify hardening opportunities that should be taken.

Operating under the assumption that certain information will never be discovered is a setup for failure. The focus should be on making and implementing the best security choices, not on relying on secrecy.

0.5 Security Maturity Levels

Many of the topics in the following sections have a level indicator. This indicator represents the level of maturity and expertise that a security team should have in order to effectively deploy the respective security control. It does not necessarily represent the value the control can bring to an organization. However, this indicator will hopefully assist the reader in identifying and prioritizing the most appropriate security solutions and controls.

For an organization that is just starting to implement an information security program, Level 1 items may be the place to start. For organizations that already have a more mature and established InfoSec program, Level 2 or 3 objectives may be more appropriate. These levels are included in sections one and two of this book.

These maturity levels are not meant to indicate their importance or effectiveness. They are meant to offer suggestions for a phased and iterative approach to build and constantly improve an information security program.

| Level | Description |
|---|---|
| 1 | A security best practice, core to the foundation of any IT security program. Intended for a newly created security team, or an individual recently tasked with improving the IT security of an organization. This could mean starting with a blank slate, or with some basic security controls that are already (at least partially) implemented. |
| 2 | Controls to consider once the organizational security program has a solid foundation of controls and processes, and is effective at providing security. Intended for an established security team or operations center that has been in place and functioning for some time, with repeatable and effective processes and controls. |
| 3 | Solutions that improve visibility and fill the gaps between the controls and processes used by an effective information security program. Intended for a security team or Security Operations Center (SOC) whose repeatable and effective security processes have proven themselves by detecting and stopping attacks. The team has perhaps even experienced “trials by fire” by responding to incidents. It has enough staff, resources, and support from leadership to push the security program to “best of class” status by implementing controls in every security category, and to work towards a state where every aspect of the network and endpoints is actively monitored and effectively protected. |

Table 0.2: There are three Security Maturity Level indicators used throughout this book.

These recommendations are based on my experience. The intent is to help the reader focus on implementing best practices first, which provides a solid foundation on which to build.

0.6 Summary

Each decision made about controls and processes should map to one or more of the security principles discussed in this section. If it doesn’t, reconsider whether the effort will actually improve the security of the organization, as it may just result in Security Theater. Keep in mind, too, that added complexity can adversely impact the security of an organization’s network, systems, and software. Finally, as you read on, consider the maturity levels assigned to the security controls that are discussed. These security maturity levels are intended to serve as a guide to help determine which controls are appropriate for your own organization’s information security program.

Is this book comprehensive? No. Is there a lot about cyber security that won’t be included? Absolutely. My goal here is to help those who are just starting, or those who are looking for inspiration about where to go next. It will hopefully provide something that promotes further reading and investigation into the different aspects of cyber security, of which there are plenty.

Overall I think Bret Arsenault, Chief Information Security Officer of Microsoft, summed it up well when he said the following: “I firmly believe that security is a journey and not a destination. It’s also an issue that must be addressed holistically by the industry and not by a single vendor. It’s only by working closely with our partners, the security ecosystem and governments around the world, that we can ensure consumers and businesses are able to trust the technology they use and don’t view security as a barrier to technology adoption.”[3]

0.7 Terms and Definitions

The following are the terms discussed in this section.

| Term | Definition |
|---|---|
| Asset | Something of value to an organization, which therefore requires protection. |
| BIOS | Basic Input Output System. The low-level software that allows a computing device to boot up by managing its hardware components such as memory, display, and audio. If the BIOS is compromised, the operating systems and applications running on the device can also be compromised. |
| Chain of Custody | A process that is followed to demonstrate that data has not been tampered with since its creation. This is critical for preserving data that may later be used in legal investigations and proceedings. |
| Complete Mediation | Verifying permission to a protected resource every time, before providing access to it. |
| Confidentiality | Ensuring that sensitive information is accessible only by authorized individuals. |
| Counterintelligence | Disguising or deliberately changing information in order to throw off the attacker. |
| Defender’s Dilemma | A defender has to protect all points all the time, while an adversary often just has to find one vulnerability to be successful. |
| Defense in Depth | Not relying on a single control to provide all security. |
| Economy of Mechanism | Keeping the design and implementation as simple as possible, since complexity increases the chance of vulnerabilities. One way to do this is to reuse existing components rather than create components from scratch. Economy relates to cost in terms of lines of code or unique components used. Also see the KISS principle. |
| Fail Safe | Also known as “fail secure.” When an application or system encounters an exception, the design of the application or system should ensure that the failure happens in a secure way. Proper exception handling can include closing connections to protected resources, not divulging sensitive or excessive information, and not providing elevated access. |
| Fail Secure | See Fail Safe. |
| Hardening | Improving the security of an object by taking actions such as restricting permissions or removing unnecessary services. Doing this reduces the attack surface. |
| HTTP | Hypertext Transfer Protocol. Part of the backbone of the Internet, it is a protocol used to transfer information such as the HTML and JavaScript code of web sites and applications. |
| ICMP | Internet Control Message Protocol. A network protocol used for diagnostics and error reporting. The ping command relies on it, which can make systems discoverable. |
| Integrity | A security tenet focused on the assurance that data or documents have not experienced unauthorized changes, tampering, or destruction. |
| KISS | Keep it Simple, Sir. An approach to design and programming that is used to minimize complexity. There is a direct correlation between complexity and the number of bugs and vulnerabilities found in software. |
| Least Privilege | Limiting the permissions a subject has on an object, based on what actions the subject is required to perform. This is also known as “need to know.” |
| Open Design | Relying on a solid design, not the secrecy of the design, to provide security. |
| Permissions Snowball | A phenomenon seen at many organizations where the employees who have been there the longest also have the most access. This happens because privileges have not been updated and removed to reflect changes in the positions those employees have held. |
| Residual Risk | The risk that remains after applying security controls. The residual risk needs to be at an acceptable level. |
| SOC | Security Operations Center. An area in an organization dedicated to security monitoring, analysis, and event and incident response. It is staffed by security professionals and may have a command center “look and feel,” with dashboards displayed on the walls and a location in a physically restricted area. |
| Security Theater | Implementing security controls for the sake of being able to show that security controls have been implemented, even though their actual value is not proven or known. This runs the risk of adding unnecessary complexity. |
| Separation of Duties | Not granting a single individual the ability to perform a series of actions that could provide the opportunity to commit insider fraud or attacks. |
| Social Engineering | Manipulating someone by exploiting trust: playing on emotions and using enticement or fear to get the victim to perform an action such as clicking a link, opening an email attachment, or providing personal information to an attacker. |