Hacking Happiness: Why Your Personal Data Counts and How Tracking It Can Change the World (2015)

[PART]

1

Be Accountable

4

MOBILE SENSORS

The idea that the smartphone is a mobile sensor platform is absolutely central to my thinking about the future. And it should be central to everyone’s thinking, in my opinion, because the way that we learn to use the sensors in our phones and other devices is going to be one of the areas where breakthroughs will happen.1

TIM O’REILLY

WHAT MYSTERIES are revealed by your behavior that you can’t see? What would you learn about yourself if you could see the data reflecting your words and actions? If all your senses left a trail, if your actions painted a picture in data, how might that feel?

It would be magic.

Seeing your life visualized in data can be extremely empowering. Instead of fretting about the unfinished items on your to-do list, seeing your data lets you revel in the experience of your “I am” list. There’s a weight to personal data, a permanence you can point to. The lines, curves, or numbers on a page, revealed in the form of data—that’s you. Want to see if you’re having an effect on the world? Visualize your output. It’s a powerfully rewarding experience.

Sensors are the tools that interpret your data. Sometimes they’re simple, like a pedometer, which counts your steps. Sometimes they’re complex, like an MRI machine, which measures brain patterns. But they both provide insights that prompt action.

The first sensors we’re made aware of in life are our bodies. They’re highly articulated instruments, as they can feel, interpret, and respond to stimuli almost simultaneously. As children, we’re exposed to sensors in a doctor’s office, having our blood pressure taken and wondering why the scratchy black fabric of the monitor gets so tight we can feel our hearts beating in our arms. We get older and go through security and experience metal detectors. The concept of technology measuring the invisible is something we accept at a fairly young age.

But where we get skittish is when sensors begin to track us. We don’t mind if air quality is measured for negative emissions, or if thermal patterns are tracked to portend weather patterns. But when things get personal, we flinch at first. It’s a bit like scraping your knee and seeing blood for the first time; it feels like something that’s supposed to stay inside of you has come out. Data has an unexpected intimacy upon revelation. It also cries out for comparison and action. You measure your resting heart rate knowing you’ll gauge the resulting number against an elevated reading during exercise.

And what about Hacking H(app)iness? Can we use technology to identify and predict emotion? Would we want to if we could?

Affective computing is a multidisciplinary field deriving from the eponymous paper2 written by Rosalind W. Picard, director of Affective Computing Research at the MIT Media Lab. Picard’s work has defined the idea of measuring physical response to quantify emotion. While it’s easy to focus on the creepy factor of sensors or machines trying to measure our emotions, it’s helpful to see some applications of how this type of technology can improve, and is already improving, people’s lives.

In the New York Times article “But How Do You Really Feel? Someday the Computer May Know,” Karen Weintraub describes a prototype technology focused on autism created by Picard and a colleague that helped people with Asperger’s syndrome better deal with conversation in social settings. The technology featured a pair of glasses outfitted with a tiny traffic light that flashed yellow and red, alerting the wearer to visual cues they couldn’t recognize due to Asperger’s (things like yawning that indicate the person you’re speaking to is not interested in what you have to say).3

It’s easy to imagine this type of technology being created for Google Glass. The famous American psychologist Paul Ekman classified six emotions that are universally expressed by humans around the globe: anger, surprise, disgust, happiness, fear, and sadness. Measuring these cues via facial recognition technology could become commonplace within a decade. Cross-referencing GPS data with measurement of these emotions could be highly illuminating—what physical location has the biggest digital footprint of fear? Should more police be made available in that area?

Picard’s boredom-based technology would also certainly be useful in the workplace. Forget sensitivity workshops; get people trained in using this type of platform, where when a colleague looks away while you’re speaking you get a big text message on the inside of your glasses that reads, “Move on, sport.” Acting on these cues would also increase your reputation, with time stamps noting when you helped someone increase their productivity by getting back to work versus waxing rhapsodic about the latest episode of Downton Abbey.

“The Aztec Project: Providing Assistive Technology for People with Dementia and Their Carers in Croydon” is a report from 2006 documenting sensor-based health solutions for dementia and Alzheimer’s patients in South London. The report starts off with the harrowing statistic that “there are currently some twenty-four million [Alzheimer’s disease] sufferers, a number that will double every twenty years until 2040.”4 The scale of the population including patient families greatly increases this number, and the financial burden for all parties involved places significant stress on health costs.

My grandmother had Alzheimer’s, so I identify with the scenarios described in the report. Wandering is a standard behavior, with patients not recognizing they’ve left or entered a room or even their home. Accidents in the kitchen are common, as is forgetting to eat for days on end. The report identified that previous solutions, including things like locking doors to keep patients from wandering or having them wear bulky lanyards outfitted with alarms, were ineffective. When lucid, patients felt trapped or tagged and resented feeling so scrutinized in their own homes.

Even in 2006, advances in technology provided solutions that brought great comfort to patients, their families, and caregivers. For instance, instead of having a patient wear an arm or leg band outfitted with a sensor detecting when they went beyond the radius of their home or property (a practice associated with criminals and upsetting to patients), an early form of geo-fencing technology was utilized that sent a warning text to caregivers when patients crossed over a virtual perimeter on their property. Sensors placed on doors also acted as simple alarms when patients left their houses unattended.
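To make the geo-fencing idea concrete, here is a minimal sketch of the perimeter check in Python. It is an illustration only, not the system described in the Aztec report: the coordinates, the fifty-meter radius, and the function names are all assumptions made for the example. It flags the moment a wearer moves from inside a circular fence to outside it, which is the point at which a warning text would be sent.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def crossed_perimeter(home, previous, current, radius_m):
    """True only when the wearer moves from inside the fence to outside it."""
    was_inside = distance_m(*home, *previous) <= radius_m
    is_inside = distance_m(*home, *current) <= radius_m
    return was_inside and not is_inside


# Hypothetical coordinates and radius, chosen only for illustration.
home = (51.3762, -0.0982)
in_garden = (51.3763, -0.0982)      # roughly 11 meters from home
down_the_road = (51.3780, -0.0982)  # roughly 200 meters from home

if crossed_perimeter(home, in_garden, down_the_road, radius_m=50):
    print("Send warning text to caregiver")
```

Checking for the crossing, rather than simply for being outside the fence, means a caregiver would receive one alert when the patient leaves instead of a stream of alerts for as long as they are away.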

A more recent implementation of sensors to help treat Alzheimer’s patients is taking place in Greece via a technology in development called Symbiosis.5 Pioneered by a team from the Aristotle University of Thessaloniki’s Department of Electrical and Computer Engineering, Symbiosis has a number of components to help patients and their families and caregivers. SymbioEyes incorporates the automatic taking of photographs via a mobile app also outfitted with GPS tracking and emergency detection capabilities. The device is worn by patients as a way to monitor location, and the pictures are also viewed at the end of the day as a way to inspire memory retention and curb the onset of dementia. SymbioSpace utilizes augmented reality to create digital content that reminds patients of simple behaviors. For instance, the image of a plate triggers a text reminding patients how to eat with a spoon. Along with the pragmatic benefits of these reminders, they are designed to make a patient feel they are “surrounded by a helpful environment that provides feedback and seems to interact with him/her, responding to his/her needs for continuous reminding and memory refreshing.”

Kat Houghton is cofounder and research director for Ilumivu, a “robust, patient-centered software platform designed to capture rich, multimodal behavioral data streams through user engagement” (according to their website). I asked Kat her definition of affective computing and why sensors are so central to her work:

Affective computing is the attempt to use systems and devices (including sensors) to identify, quantify, monitor, and possibly simulate states of human affect. It is another way in which we humans are attempting to understand our own emotional experiences. Sensors, both wearable and embedded in the environment, combined with ubiquitous wireless computing devices (e.g., smartphones), offer us a large data set on human behavior that has never before been possible to access.6

A great deal of Kat’s work is focused on autism, where wearable sensors are being utilized for preemptive or just-in-time intervention delivery. “Using data from sensors to generate algorithms that allow us to accurately predict a person’s behavior could radically change our ability to facilitate behavior change much more quickly and effectively,” she says. Sensors can also play a role in identifying and preemptively intervening in states of what is known as “dis-regulation”:

When a person with autism (actually all of us to some degree) is in a state of physiological dis-regulation, they are more likely to engage in challenging behaviors (tantrums, self-injury, aggression, property destruction, etc.), which cause a lot of stress to themselves and their caregivers and significantly restrict the kinds of learning opportunities available to that individual. We are using wearable sensors that monitor autonomic (involuntary) arousal levels in combination with momentary assessments from caregivers and data from sensors in the immediate environment to see if we can identify triggers of dis-regulation. If we can do this, then we are in a position to be able to experiment with providing preemptive interventions to help people with autism maintain a regulated state by providing input before they become dis-regulated. Right now the only option to caregivers is to try to offer support after the fact.7

As Kat notes, being able to know ahead of time what is going on for a person with autism could be a significant game changer for many of the more challenged people on the autism spectrum, along with their loved ones and caregivers. Sensors are providing a unique portrait of behavior invisible before these new technologies existed.

To find out more about the idea of interventions involving sensors and the tracking of emotions, I interviewed my friend Mary Czerwinski, research manager of the Visualization and Interaction (VIBE) Research Group at Microsoft.

Can you please describe your most recent work?

For the last three years we have been exploring the feasibility of emotion detection for both reflection and for real-time intervention. In addition, we have been exploring whether or not we can devise policies around the appropriateness and cadence of real-time interventions, depending on personality type and context. Interventions we are exploring include those inspired by cognitive behavioral therapy, positive psychology, and such practices, but also from observations of what people naturally do on the Web for enjoyment anyway.

How would you define “emotion tracking”?

Emotion tracking involves detecting a user’s mood through technologies like wearable sensors, computer cameras, or audio analysis. We can determine a user’s mood state, after collecting some ground truth through self-reports, by analyzing their electrodermal activity (EDA), heart-rate variability (HRV), [and] activity levels, or from analyzing facial and speech gestures. Machine-learning algorithms are used to categorize the signals into probable mood states.

How has emotion tracking evolved in the past few years, in general and in your work?

Because of the recent advent of inexpensive (relatively speaking) wearable sensors, we have gotten much better at detecting mood accurately and in real time. Also, the affective computing community has come up with very sophisticated algorithms for detecting key features, like smiling or stress in the voice, through audio and video signal analysis.

Do you think emotion tracking will have its own “singularity”? Meaning, will emotion tracking become so articulated, advanced, and nuanced that technology could get to know us better than we know ourselves?

That’s a very interesting question and one I’ve been thinking about. What is certainly clear is that most of us don’t think about our own emotional states that often, and perhaps aren’t as clued into our own stress or anxiety levels as we sometimes should be. The mere mention of a machine being able to sense one’s mood pretty accurately makes some people very uncomfortable. That is why we focus so much on the hard human-computer research questions around what the technology should be used for that is useful and appropriate, given the context one is in.8

•

Along with trying to demonstrate how technology is helping map and quantify our emotions, my bigger goal is to give you permission for reflection. As Mary points out, many of us don’t think about our emotional states, which means it’s harder to change or improve them. Note there’s a huge difference between observing emotions and experiencing them, by the way. Observation implies objectivity, whereas in the moment it’s pretty hard to note, “Gosh, I’m in a blind rage right now.” So while it may take us some time to get used to tracking our emotions and understanding how sensors reveal what we’re feeling, a bigger adjustment needs to happen in our lives outside of technology for the greatest impact to take place.9

Sensor-tivity

“We’re really in the connection business.” Iggy Fanlo is cofounder and CEO of Lively, a platform that provides seniors living at home with a way to seamlessly monitor their health through sensors that track health-related behavior. “Globalization has torn families apart who now live hundreds of miles from one another,” he noted in an interview for Hacking H(app)iness. “Our goal is to connect generations affected by this trend.”10

After investing in years of study, Fanlo came to realize that older people care more about why they’re getting out of bed than how. So he focused on finding the emotional connections that would empower seniors while bringing a sense of peace to the “sandwich parents” (people with kids who are also dealing with elderly parents) concerned about their parents’ health. Sadly, a primary reason for friction in these child-to-parent caregiver situations is that kids tend to badger parents to make sure they’re taking their medications or following other normal daily routines. The nagging drives the parents to resent their kids and potentially avoid calling, even if they have a health-related incident that needs attention.

The company’s tech is surprisingly simple to use, although its Internet of Things sensor interior is state-of-the-art. The system contains a hub, a white orb-shaped device the size of a small toaster. It plugs into the wall and is cellular, as many seniors don’t have Wi-Fi or don’t know how to reboot a router if it goes down. A series of sensors, each about two inches in diameter, has adhesive backing so they can be stuck in strategic locations around the house:

· Pillboxes—sensors have accelerometers that know when the pills are picked up, serving as proxy behavior of assuming parents have taken their meds.

· Refrigerator door—a sensor knows when the door is opened and closed, serving as proxy behavior for parents eating regular meals.

· Silverware drawer—a sensor knows when the drawer opens and closes, serving as a secondary proxy measuring number of meals eaten.

· Back of the phone receiver—a sensor knows if the phone hasn’t been lifted, and after a few days, a message is sent to the child of the elderly person living at home reminding them to give their parent a call.

· Key fob—this features geo-fencing technology and indicates if a parent has left the house in the past few days.
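The logic behind these proxies can be sketched in a few lines of Python. This is not Lively’s actual software; the sensor names, the event format, and the three-day threshold (standing in for “a few days”) are assumptions made purely for illustration. The sketch records which sensor fired on which day and decides whether it is time to remind a family member to call.

```python
from datetime import date

# Hypothetical mapping from each sensor to the behavior it stands in for.
PROXIES = {
    "pillbox": "medication taken",
    "refrigerator": "meal eaten",
    "silverware_drawer": "meal eaten",
    "phone": "phone used",
    "key_fob": "left the house",
}


def days_since_last(events, sensor, today):
    """Days since the sensor last fired, or None if it has never fired."""
    dates = [day for name, day in events if name == sensor]
    if not dates:
        return None
    return (today - max(dates)).days


def needs_call_reminder(events, today, quiet_days=3):
    """True when the phone has been untouched for quiet_days or longer."""
    gap = days_since_last(events, "phone", today)
    return gap is None or gap >= quiet_days


# Each event is (sensor name, day it fired); the dates are made up.
events = [
    ("phone", date(2013, 6, 6)),
    ("pillbox", date(2013, 6, 10)),
    ("refrigerator", date(2013, 6, 10)),
]
today = date(2013, 6, 10)

if needs_call_reminder(events, today):
    print("Reminder: give your parent a call")
```

Notice how little data is involved: a handful of sensor names and dates is enough to replace a daily round of “Did you take your pills?”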

A final component to Lively is that friends and family members contribute to a physical book that is mailed to seniors living at home twice a week. It’s a literal facebook that adds to the emotional connection between generations.

The most powerful component of the platform, however, is the improvement of relationships between parents and their adult children due to a lack of constant nagging. “This was an unintended consequence we learned during testing,” said Fanlo. “The seniors we asked were now happy to talk to their children. In the past, relationships had gotten toxic. The nagging was poisoning relationships. Since the children of seniors knew parent health was monitored, this took away the toxicity between the generations.”11

Along with Big Data, the trend or notion of Little Data has been growing in prominence. Outside of the technical aspects of sensors and tracking technology being inexpensive enough for the general public to take advantage of, Little Data also refers to the types of interactions involving platforms like Lively. Data is centered around one primary node (the seniors) and their actions (four or five activity streams). It’s intimate, contained, and highly effective at achieving a set goal to the benefit of multiple stakeholders.

Here’s another aspect of sensors to note in these examples: They’re invisible. Tracking doesn’t always have to be nefarious in nature. Lively calls their health monitoring “activity sharing.” For the next few years, we’ll be aware of sensors in the form of wearable devices because they’re new, much like we first felt about mobile phones when they were introduced. But after we become used to them, they’ll fade from prominence and do their passive collection while we go on with our lives.

Disaster Data

Patrick Meier is an internationally recognized thought leader on the application of new technologies for crisis early warning, humanitarian response, and resilience. He regularly updates his iRevolution blog, focusing on issues ranging from Big Data and cloud computing to crisis mapping and humanitarian-focused technology.

Recently, he blogged about the creation of an app that could be utilized during crisis situations to immediately connect people in need to those who could provide assistance. During crises, it can take many hours or even days for outside assistance to come to the aid of a devastated community. Meier is working to create solutions that can empower communities to provide help to one another in the critical time frame occurring directly after a negative event.

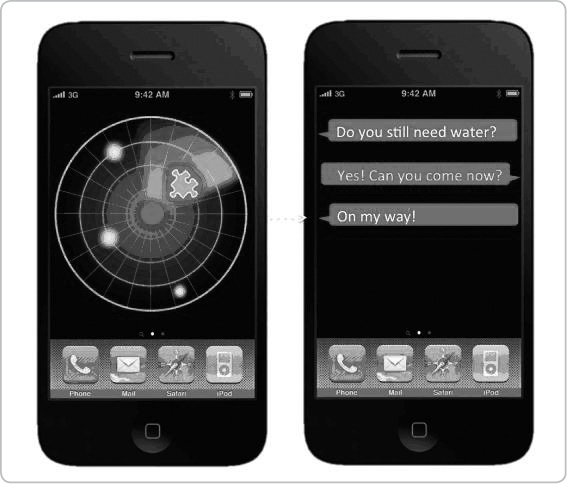

In his post “MatchApp: Next Generation Disaster Response App?”12 Meier lays out the vision for an app using a combination of sensors that could help people both ask for and provide assistance during a crisis. (Note the image here is a mock-up; Meier also recently wrote13 about an existing app called Jointly that has created a similar framework.) The MatchApp concept is quite simple: Like a shifting jigsaw puzzle, people’s needs shift dramatically in real time in the wake of a crisis. But location, identified via GPS, plays a key role in helping match need with resources in the most streamlined way possible. The figure above shows how a specific need is being met via a combination of GPS (on the left) and a confirming text message (on the right).

Meier describes how privacy is maintained in this framework while also providing a vehicle for increasing digital trust:

Once a match is made, the two individuals in question receive an automated alert notifying them about the match. By default, both users’ identities and exact locations are kept confidential while they initiate contact via the app’s instant messaging (IM) feature. Each user can decide to reveal their identity/location at any time. The IM feature thus enables users to confirm that the match is indeed correct and/or still current. It is then up to the user requesting help to share her or his location if they feel comfortable doing so. Once the match has been responded to, the user who received help is invited to rate the individual who offered help.14
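The matching and privacy defaults Meier describes can be sketched roughly as follows. This is a hypothetical illustration, not MatchApp’s design: the class, field, and function names are invented, and the distance calculation is a simple approximation. The sketch pairs a request with the nearest offer in the same category and keeps identity and location hidden until a user opts to reveal them.

```python
import math
from dataclasses import dataclass


@dataclass
class User:
    user_id: str
    category: str         # e.g. "water" or "shelter"
    lat: float
    lon: float
    reveal: bool = False  # identity and location stay hidden by default


def approx_km(a, b):
    """Equirectangular approximation; adequate over short distances."""
    x = math.radians(b.lon - a.lon) * math.cos(math.radians((a.lat + b.lat) / 2))
    y = math.radians(b.lat - a.lat)
    return 6371 * math.hypot(x, y)


def match(request, offers):
    """Return the nearest offer in the same category, or None if there is none."""
    candidates = [o for o in offers if o.category == request.category]
    return min(candidates, key=lambda o: approx_km(request, o), default=None)


def public_view(user):
    """What the other party sees until the user chooses to reveal more."""
    if user.reveal:
        return {"id": user.user_id, "location": (user.lat, user.lon)}
    return {"id": "anonymous", "location": None}


# Made-up users and coordinates for illustration.
request = User("r1", "water", 40.00, -74.00)
offers = [
    User("o1", "water", 40.50, -74.00),
    User("o2", "water", 40.01, -74.00),
    User("o3", "shelter", 40.00, -74.00),
]

best = match(request, offers)
print(best.user_id, public_view(best))
```

The key design choice is that `reveal` defaults to `False`: sharing identity or location is something each user turns on, never something they have to remember to turn off.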

The app and scenario provide a compelling example of how implementing sensors can help improve our health and even save lives. By proactively implementing protected data plans as Meier has in the MatchApp, we also work around privacy concerns, as people’s preferences are taken into consideration and they’re provided the choice to reveal their information as they see fit.

Margaret Morris—Left to Our Own Devices

Margaret Morris is a clinical psychologist and senior researcher at Intel. She examines how people relate to technology, and creates mobile and social applications to invite self-awareness and change. In her TED Talk “The New Sharing of Emotions” (April 2013), she discusses her work of creating a “mood phone” with her research team at Intel. Designed to be a “psychoanalyst in your pocket,” her tool lets people self-track moods and other behavior in experiments when they are “left to their own devices.” Morris’s logic is that, as our mobile phones are always at our side, we can leverage them for insights to improve our well-being. The bond that patients form with traditional therapy can extend to our phones for connection to social networks or other resources.

Here’s my favorite quote from her TED Talk: “We’re at this moment where we can enable all kinds of sharing by bringing together very intimate technologies like sensors with massive ones like the cloud. As we do this, we’ll witness new kinds of breakthrough moments, and bring our thinking and all our approaches about emotional well-being into the twenty-first century.”15

I interviewed Morris to ask about the issues she brought up in her talk, to see how people relate to their smartphones, where the technological aspects are less important than the feeling of being helped by having an omnipresent, trusted tool at their side. A number of these issues are also elucidated in an excellent paper Morris wrote with a number of other researchers from Intel, Oregon Health & Science University, and Columbia University called Mobile Therapy: Case Study Evaluations of a Cell Phone Application for Emotional Self-Awareness.16

Can you describe your work with the “mood phone”? How did that work come about, and how has it evolved?

I created the mood phone to show how tools for emotional well-being (e.g., those from psychotherapy and mindfulness practices) could move into daily life, be available to everyone who has a phone, and be contextually relevant. It started as a complex system involving wireless sensing of ECG, calendar integration, and just-in-time prompting based on cognitive therapy, yoga, and mindfulness. It emerged because I was asked (within my research group) to develop a new approach to technologies for cardiovascular disease. It was important to me to take a preventive approach, focusing on psychosocial risks, and make something that would be very desirable and improve quality of life immediately while lowering long-term risk. I was interested in emotional well-being and relationship enhancement as motivational hooks for self-care. They are more palpable than long-term cardiovascular risk, and of course, the quality of our relationships affects everything.

Where do you see the balance between human psychoanalysts and the ones “in your pocket”? How can people determine that balance?

Most people do not have access to terribly good mental health care of any sort, much less psychoanalysis. They are “left to their own devices” and are remarkably resourceful, learning from friends, strangers, and using everything at their disposal, including their devices, apps, social media, and their own data.17

•

I think this last point is really important when considering why technology can be utilized to improve our well-being, whether it’s mentally, physically, or emotionally focused. While it’s understandable people would be concerned about replacing a human therapist with technology, it also doesn’t make sense to ignore a tool we all have with us all the time that could help us examine and improve our well-being. Our mobile phones also provide us direct, real-time contact with our loved ones or people responsible for our care. Whether they provide aid in emergency situations or simply a reminder that people in our lives are looking out for us, it’s also relevant to ask why we feel comfortable allowing these tools to track our behavior for marketing purposes, but get leery of using them to measure our emotions directly. Advertisers have no compunction about analyzing every decision, interaction, word, and action you take to get insights about the perfect timing to introduce their products or services. Why not utilize these same methodologies to understand your emotions in a personal context?

A final word on this idea that Morris mentions in her TED Talk. When her first experiment was done with the mood phone, she thought a lot of participants might have concerns about their privacy—how their data was being used, and so on. But what she heard most often was people asking if they could get the technology for their spouses. The insights the tools generated about their emotional lives led many of them to believe that their relationships would be greatly improved if they could take those insights and share them with the ones they love most. Elements of their personalities revealed by the technology created opportunities for discussions infused with an objectivity that wasn’t available before the mood phone was put to use.

So my final question here would be, would you rather be “left to your own device” or continue to do your best on your own?

The H(app)athon Project

There’s a growing movement to standardize the metrics around well-being that can lead to happiness. The combination of Big Data, your social graph, and artificial intelligence means everyone will soon be able to measure individual progress toward well-being, set against the backdrop of all humanity’s pursuit to do the same. In the near future, our virtual identity will be easily visible by emerging technology like Google’s Project Glass and our actions will be just as trackable as our influence. We have two choices in this virtual arena: Work to increase the well-being of others and the world, or create a hierarchy of influence based largely on popularity.17

—JOHN C. HAVENS

I wrote this article four months before I founded the H(app)athon Project. The piece was the inspiration for this book and the project I’m focusing on full-time. I believe mobile technology, utilizing sensors, will transform the world for good if personal data is managed effectively and people utilize these tools however works best for them. I’m writing this not to pitch you on the Project (although we are a nonprofit and all of our tools are free anyway, so of course I’d love for you to check it out), but because I want to be accountable to you as a writer. I love researching and writing, I love interviewing experts and providing a unique perspective. But I also believe taking action based on your passions is of paramount importance to best encourage others. That way, expertise is tempered and shaped by experience.

I was recently interviewed by the good folks at Sustainable Brands about our Project, as I am speaking at their upcoming conference. Here is a description of the H(app)athon Project as I related it to author Bart King:

Our vision is that mobile sensors and other technologies should be utilized to identify what brings people meaning in their lives. We’ve created a survey that’s complemented by tools that track action and behavior in a private data environment. By analyzing a person’s answers and data, we create their personal happiness indicator (PHI) score, a representation of their core strengths versus a numbered metric.

A person can then be matched to organizations that reflect their PHI score in a form of data-driven micro-volunteerism. There’s a great deal of science documenting that action and altruism increase happiness. So we’re simply identifying where people already find meaning and help them find ways to get happier while helping others. At scale, we feel this is the way we save the world.

At the moment, we’re just beginning our work. Our survey can be taken online and on iPhones, but we’re seeking funding to build out the sensor portion of our data collection. We’ve partnered with the City of Somerville, MA, to pilot our proof-of-concept model over the next ten months. Somerville is the only American city to implement Happiness Indicator metrics with a sitting government. Our hope is that by adding sensor data into the mix we can gain critical insights to help with transparent city planning that improves citizens’ well-being.18

I want to make it clear how important Hacking H(app)iness is to my life in the form of this book and the H(app)athon Project. The technology of sensors provides a way to reveal aspects of ourselves we may not see. In the same way that you achieve catharsis watching a play where actors exhibit emotion you may not always be able to reveal, sensors give you permission to act on the data driving your life.

Does your heart rate increase when you think about playing guitar in a band? Maybe you should act on that. Does your stress level increase at your job no matter what task you’re doing? Could be time to switch divisions or look for new work. Affective sensors and their complementary technologies will begin to work their magic on your life in the near future if you let them. In the case of the H(app)athon Project, global Happiness Indicator metrics also provide the framework of a positive vision for the future not dependent solely on influence or wealth.

Need help defining your own vision? Check in with your data and see what revelations you have to offer yourself.