Information Security A Practical Guide: Bridging the gap between IT and management (2015)

CHAPTER 9. PENETRATION TESTING

Chapter Overview

Poor penetration testing frustrates me, and I have come across a few organisations that fail to get the basics right. Good penetration testing offers a high degree of assurance that the systems you have implemented are secure, but you only get this assurance if your testing is thorough. This chapter starts by explaining the difference between white box and black box testing. Both have their pros and cons, and it’s important to understand them so that you can select the right sort of testing. I also explain the other sorts of tests you can do, which range from traditional penetration testing to build reviews and vulnerability assessments. Penetration testing doesn’t have to be limited to the attempted attack (hack) we are accustomed to; we can tailor our testing to suit our needs and budget. Finally, I discuss what to do once the test results are received. The most important thing when reading a penetration test report is to add your own knowledge of the system, because the Critical to Low risk labels usually given to findings are not, on their own, an effective way of managing the fixes.

Objectives

In this chapter you will learn the following:

• The different types of test

• Different ways to test security

• How to scope a penetration test

• What to do with the results.

Types of Penetration Test

There are many types of penetration test, each with a varying degree of complexity, expense and time requirements. They include the following:

• White box testing

• Black box testing

• Vulnerability test

• Build review

• Code review

• Physical assessment.

White box testing

White box testing is arguably the most efficient and effective form of testing. It involves providing the tester with design and process documents before the test starts. The idea is that the tester understands how the system works, so they don’t need to waste time analysing it before putting together attacks. In the real world it is unlikely that an attacker would fully understand our system. However, if we consider a scenario in which, say, a cleaner has been collecting documents to pass to an attacker, we can better appreciate the importance of this test. Given the tester’s knowledge of the system, a white box test simulates probably the most dangerous kind of attack, and it is much better that we conduct the most thorough assessment ourselves than wait for an attacker to exploit the system. The other point of a white box test is to bring the tester inside the network: connecting them from outside, behind a firewall, only tells you how secure the firewall is, not the system itself. If a system proves secure under a white box test, we have a high degree of assurance that a black box test would be unsuccessful.

Advantages:

• Faster, so costs less

• Thorough test.

Disadvantages:

• Not likely in a real scenario.

Black box testing

Black box testing is the opposite of white box testing: the tester has no prior knowledge of the system. It emulates the most lifelike scenario of a hacker attacking the system, and testers are often given only an IP address and a timeframe within which to attack it. Although black box tests are the most lifelike, they often don’t teach us much about the system’s vulnerabilities. The test team is usually an external service brought in for a limited time, whereas in reality a hacker has much more time to watch and analyse the system and formulate an attack. I recommend following a black box test with a white box test, stipulating that anything learned during the black box test can be applied during the white box assessment.

Advantages:

• Lifelike scenario.

Disadvantages:

• Doesn’t usually teach us much

• Takes a long time if we want to be thorough (costly).

Vulnerability assessments

Vulnerability assessments are a favourite of mine, as they are often cheap and can offer a quick security assessment of a system. They are particularly useful in an agile working environment where we want to deliver systems quickly. Vulnerability assessments can be carried out using software such as Nessus or Metasploit. These tools can be configured to log in to the target system to conduct their scans, and they then produce reports with recommended actions. These scans are a good assurance control before deploying software, and they can be scheduled to run regularly so that you can check a system’s configuration hasn’t changed in a way that makes it less secure. Vulnerability scans are also useful for verifying that patches have been applied across the estate. The scanners regularly receive updates to check for the latest vulnerabilities, so including them in a patch-management process can be extremely useful, especially for ensuring a system hasn’t been missed.
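To make the patch-verification idea concrete, here is a minimal Python sketch. It assumes you have already exported scanner results as a mapping of host to installed package versions; the host names, package names and version numbers are hypothetical, and the simple parser assumes purely numeric dotted versions (real version strings are often messier). It reports hosts that were expected but never scanned, as well as hosts still running a version older than the patched one.

```python
from typing import Dict, List, Tuple

# Hypothetical export of scanner results: host -> {package: installed version}.
ScanResults = Dict[str, Dict[str, str]]


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a purely numeric dotted version such as '2.4.7' into (2, 4, 7)."""
    return tuple(int(part) for part in text.split("."))


def patch_report(
    expected_hosts: List[str],
    scan_results: ScanResults,
    minimum: Dict[str, str],
) -> Tuple[List[str], List[Tuple[str, str, str, str]]]:
    """Return (hosts never scanned, outdated packages on scanned hosts)."""
    # Hosts in the asset list that produced no scan results were missed.
    missed = sorted(set(expected_hosts) - set(scan_results))
    outdated = []
    for host, packages in sorted(scan_results.items()):
        for package, required in sorted(minimum.items()):
            installed = packages.get(package)
            # Only compare packages that are actually installed on the host.
            if installed is not None and parse_version(installed) < parse_version(required):
                outdated.append((host, package, installed, required))
    return missed, outdated


expected_hosts = ["web-01", "web-02", "web-03"]
scan_results = {
    "web-01": {"webserver": "2.4.7", "tls-lib": "1.1.3"},
    "web-02": {"webserver": "2.4.9", "tls-lib": "1.1.3"},
}
minimum = {"webserver": "2.4.9", "tls-lib": "1.1.3"}

missed, outdated = patch_report(expected_hosts, scan_results, minimum)
print("Not scanned:", missed)
for host, package, installed, required in outdated:
    print(f"{host}: {package} {installed} is older than {required}")
```

Comparing versions as integer tuples avoids the classic string-comparison trap where "2.4.10" would sort before "2.4.9".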

Vulnerability assessments are automated, so their results and recommendations need human analysis to confirm them. Also, a system may have an inherent weakness that is part of its normal running; for example, a web server will always carry a degree of weakness because its purpose is to accept connections. However, we can use our vulnerability assessment reports as part of our white box testing: pick out the vulnerabilities that concern us, ask the testers to try to exploit those weaknesses, and have them tell us how easy it was. We can also use these reports to see whether our black box testers were able to find and exploit the vulnerabilities. If vulnerabilities are difficult to exploit then we may choose to live with them.
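One way to compare a scanner report with a black box report is a simple set comparison of finding identifiers. This is a sketch that assumes both reports use the same identifiers; the VULN-00x IDs are hypothetical placeholders. Findings the scanner raised that the black box testers did not exploit are natural candidates to hand to the white box testers.

```python
from typing import List, Set, Tuple


def compare_reports(
    scanner_ids: Set[str], black_box_ids: Set[str]
) -> Tuple[List[str], List[str], List[str]]:
    """Split findings into (found by both, scanner only, black box only)."""
    both = sorted(scanner_ids & black_box_ids)
    scanner_only = sorted(scanner_ids - black_box_ids)  # candidates for white box testing
    black_box_only = sorted(black_box_ids - scanner_ids)  # issues the scanner did not report
    return both, scanner_only, black_box_only


scanner_ids = {"VULN-001", "VULN-002", "VULN-003"}
black_box_ids = {"VULN-002", "VULN-004"}
both, scanner_only, black_box_only = compare_reports(scanner_ids, black_box_ids)
print("Found by both:", both)
print("Scanner only (ask the white box testers):", scanner_only)
print("Black box only:", black_box_only)
```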

Advantages:

• Cheap

• Fast

• Automated

• Can be scheduled.

Disadvantages:

• Requires human analysis

• Only detects issues in common, recognised software.

Build reviews

A build review is carried out on the underlying infrastructure. Operating systems are insecure out of the box, so the administrator needs to customise the system so that it can provide the service needed and also harden it against attack. Contrary to popular belief, even Linux systems are insecure out of the box. A vulnerability assessment is a good place to start, but the real value of a build review comes from human analysis of the system. An automated tool only looks at individual settings, whereas a good build reviewer considers those settings in aggregate and understands whether a particular combination introduces a vulnerability. The build reviewer also understands the context of certain settings rather than blindly flagging them as vulnerabilities. A good build review is often time consuming and expensive. However, this expense can be offset when reviewing baseline systems, because the same build can be deployed multiple times. So if an organisation wants to spin up several separate Linux web servers, we can test the original build and then deploy it multiple times. Of course, some work should still be done to ensure no settings have changed once deployed, and regular reviews of the build should be scheduled to take account of new vulnerabilities and system upgrades.
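The check that no settings have changed once deployed can be partly automated by comparing each deployed host against the reviewed baseline. This minimal sketch assumes a simple "Key value" configuration format, similar to OpenSSH’s sshd_config; the settings and values shown are illustrative, not a recommended baseline. It only reports differences: deciding whether a difference matters is still the build reviewer’s job.

```python
from typing import Dict, List


def parse_settings(text: str) -> Dict[str, str]:
    """Parse 'Key value' lines, skipping blank lines and '#' comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)  # split on the first run of whitespace
        settings[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return settings


def config_drift(baseline: Dict[str, str], deployed: Dict[str, str]) -> List[str]:
    """Describe every setting that differs between the baseline and a deployed host."""
    changes = []
    for key in sorted(baseline.keys() | deployed.keys()):
        expected, actual = baseline.get(key), deployed.get(key)
        if expected == actual:
            continue
        if actual is None:
            changes.append(f"{key}: missing (baseline {expected!r})")
        elif expected is None:
            changes.append(f"{key}: added {actual!r} (not in baseline)")
        else:
            changes.append(f"{key}: {expected!r} -> {actual!r}")
    return changes


# Illustrative values only, not a recommended hardening baseline.
baseline = parse_settings("""
# reviewed build
PermitRootLogin no
PasswordAuthentication no
X11Forwarding no
""")
deployed = parse_settings("""
PermitRootLogin yes
PasswordAuthentication no
AllowTcpForwarding yes
""")

for change in config_drift(baseline, deployed):
    print(change)
```

A missing setting is reported rather than ignored because the software’s default then applies, and that default may not be what the reviewer signed off.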

Advantages:

• Thorough

• Human analysis.

Disadvantages:

• Time consuming

• Costly

• People can miss things.

Code reviews

Code reviews focus on the software your team has developed, which can be written securely or insecurely. For example, building a database query by concatenating user input into the SQL string can leave a SQL injection vulnerability, whereas parameterised queries prevent this from occurring. A code review can ensure the software that has been written has not introduced such vulnerabilities and has followed best practice. Code reviews can be carried out in many ways: you can have developers conduct peer reviews, use an independent third party, or use automated tools. Review platforms such as Gerrit and Review Board help manage the process and can be integrated with automated checks.
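The difference between the two kinds of database call is easiest to see side by side. This sketch uses Python’s built-in sqlite3 module with a throwaway in-memory table (the table and data are made up for illustration); the insecure function pastes user input into the SQL text, while the safe one passes it as a bound parameter.

```python
import sqlite3
from typing import List, Tuple


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two made-up users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_insecure(conn: sqlite3.Connection, name: str) -> List[Tuple[str, str]]:
    # Vulnerable: the input becomes part of the SQL text itself.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> List[Tuple[str, str]]:
    # Parameterised: the driver binds `name` as a value, never as SQL.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "' OR '1'='1"
print(len(find_user_insecure(conn, payload)))  # 2: every row is returned
print(len(find_user_safe(conn, payload)))      # 0: no user has that name
```

With the payload, the insecure query becomes `WHERE name = '' OR '1'='1'`, which is true for every row; this is exactly the pattern a reviewer should be looking for.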

Peer reviews are the quickest and cheapest way of conducting a code review, as the resources and knowledge are often already in place. I recommend that code is reviewed by developers who didn’t write it or, if your organisation is large enough, by a separate development team. The key benefit of your own people conducting the review is that they already have some idea of the system and the code they are reviewing. This is also a double-edged sword, however, because teams that share the same internal knowledge are likely to make the same mistakes and fail to spot them.

Third-party reviews are very useful because they give an independent point of view. The reviewers have no bias and have experience of reviewing code from many different organisations, which can be particularly beneficial for bringing new knowledge and best practice into the organisation. The drawbacks of third-party reviews are the time and expense of bringing the reviewer up to speed with the code they are reviewing, and the risk that they overlook something if they fail to understand the system.

Like vulnerability assessments, automated code reviews can be an extremely useful way of conducting a baseline assessment and ensuring a minimum level of quality and security. Another benefit is that these tools often allow you to customise them and add your own review rules. This can be useful if you want to check that code follows a consistent approach as well as being secure. Also, if we identify an insecure piece of code during a manual assessment, we can create a script to scan the rest of the codebase to ensure the problem doesn’t exist elsewhere. The real advantage of creating your own scripts is re-use: once the time has been spent creating a script, it can be run over and over to check for that vulnerability.
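As an example of such a home-grown script, the sketch below searches Java source files for execute calls whose SQL string is built with "+" concatenation. The regular expression is a hypothetical rule and a deliberately rough heuristic: it will miss queries assembled on earlier lines and may flag harmless code, so its output still needs human review.

```python
import re
from pathlib import Path
from typing import Iterator, Tuple

# Hypothetical rule: an execute call whose SQL string literal is immediately
# followed by '+', e.g. stmt.executeQuery("... WHERE id = " + id)
CONCATENATED_SQL = re.compile(r'\.execute(?:Query|Update)?\s*\(\s*"[^"]*"\s*\+')


def scan_source(text: str) -> Iterator[Tuple[int, str]]:
    """Yield (line number, line) for each line matching the rule."""
    for number, line in enumerate(text.splitlines(), start=1):
        if CONCATENATED_SQL.search(line):
            yield number, line.strip()


def scan_tree(root: str, suffix: str = ".java") -> Iterator[Tuple[str, int, str]]:
    """Apply the rule to every file under root with the given suffix."""
    for path in sorted(Path(root).rglob(f"*{suffix}")):
        text = path.read_text(encoding="utf-8", errors="replace")
        for number, line in scan_source(text):
            yield str(path), number, line


sample = "\n".join([
    'ResultSet a = stmt.executeQuery("SELECT * FROM users WHERE id = " + userId);',
    'PreparedStatement p = conn.prepareStatement("SELECT * FROM users WHERE id = ?");',
])
for number, line in scan_source(sample):
    print(number, line)
```

To run it over a real codebase you would call `scan_tree` on the source directory, for example `for path, number, line in scan_tree("src"): print(path, number, line)`, where "src" stands in for wherever your code actually lives.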

Physical assessment

Physical assessments assess the security of an office or data centre. The aim is to ensure the systems cannot be physically accessed or stolen. An organisation will typically have one or more data centres where multiple systems are located. I don’t recommend carrying out a physical assessment every time a new system is deployed there, as this becomes onerous. Instead, carry out a detailed annual assessment, or an additional one when adding a new sensitive system, because the level of risk posed to the data centre may change when that system moves there.

Physical assessments come in two flavours: an inspection of the data centre, or an attempted break-in where the assessor tries to gain unauthorised access. An inspection usually involves the assessor being escorted around the facility noting their findings. A break-in test is far more sensitive and requires a lot more management on your part. You first need to discuss in detail with the assessor how they intend to attack the facility; for example, you need to agree whether they can cut a fence or break a window to gain access. Remember that these things will need fixing after the test. You also need to give the tester a ‘get out of jail free card’ in case they are arrested! You could also warn the security guards that a break-in will be attempted within the next month, so that the police aren’t called if the assessor is caught.

Scoping the test

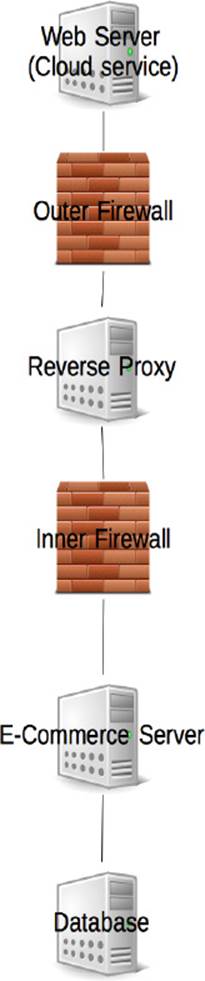

We now have awareness of all the different types of testing we can do, but we need to bring that together into a penetration test. Remember that you have to secure the entire system, whereas the attacker only needs to exploit one weakness. There is no point in securing the front door and spending a lot of effort testing it only to forget about the back door. A good penetration test includes a mixture of tests and includes at least the live system. Depending on how your test systems are deployed and used, there may not be a need to include them. For example, if those test systems only have test data on them and aren’t connected to the Internet, you need to assess the risks posed by the test systems and make a decision on whether they are in scope or not. Based on the previous section on documenting the system I have put together the following basic diagram showing the physical systems:

The diagram is deliberately simple; it only needs to give the testers a high-level understanding of what needs to be tested. Alongside the diagram, the testers need a written description of what is to be tested.

Web server: IP 123.123.123.123

The web server provides the frontend for the service and is deployed to an Amazon Web Services (AWS) instance. The server runs Apache on Linux, and the service has been developed using Java.

The server is subject to the following tests:

• Build review

• External penetration test.

External firewall: IP 124.124.124.124

The external firewall is a SonicWall and protects the DMZ from attack. It is configured to allow HTTPS connections through on port 443.

The firewall is subject to the following tests:

• Build review (firewall rules)

• External penetration test.

Reverse proxy: IP 125.125.125.125

The reverse proxy is an NGINX proxy and forwards incoming connections to the e-commerce server only.

The server is subject to the following tests:

• Build review

• External penetration test.

e-commerce server: IP 126.126.126.126

The e-commerce server provides the business logic and is responsible for carrying out transactions. The system has been deployed on an Ubuntu Server OS using Apache. The service itself has been created using Java.

The server is subject to the following tests:

• Code review

• Build review

• External penetration test.

Database server: IP 127.127.127.127

The database server is an Oracle Database running on Windows Server 2008. The database stores product and user data.

The server is subject to the following tests:

• External penetration test

• Vulnerability assessment.
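If you keep the scope as structured data rather than free text, you can check it for gaps before sending it to the testing firm. This sketch encodes the components and tests listed above; the `Component` class and the checks it runs (a valid and unique IP address, at least one test, and a recognised test type) are illustrative assumptions rather than any standard scoping format.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Tuple

# Test types taken from this chapter; the set itself is an illustrative choice.
KNOWN_TESTS = {
    "white box test", "black box test", "external penetration test",
    "vulnerability assessment", "build review", "code review",
    "physical assessment",
}


@dataclass
class Component:
    name: str
    ip: str
    tests: Tuple[str, ...]


def scope_problems(components: List[Component]) -> List[str]:
    """List obvious gaps or mistakes in a scope before it goes to the testers."""
    problems = []
    seen_ips = set()
    for c in components:
        try:
            ipaddress.ip_address(c.ip)
        except ValueError:
            problems.append(f"{c.name}: invalid IP address {c.ip!r}")
        if c.ip in seen_ips:
            problems.append(f"{c.name}: duplicate IP address {c.ip}")
        seen_ips.add(c.ip)
        if not c.tests:
            problems.append(f"{c.name}: no tests in scope")
        for test in c.tests:
            if test not in KNOWN_TESTS:
                problems.append(f"{c.name}: unknown test type {test!r}")
    return problems


def render_scope(components: List[Component]) -> str:
    """One line per component, suitable for pasting into a scope document."""
    return "\n".join(f"{c.name} ({c.ip}): {', '.join(c.tests)}" for c in components)


scope = [
    Component("Web server", "123.123.123.123", ("build review", "external penetration test")),
    Component("External firewall", "124.124.124.124", ("build review", "external penetration test")),
    Component("Reverse proxy", "125.125.125.125", ("build review", "external penetration test")),
    Component("e-commerce server", "126.126.126.126",
              ("code review", "build review", "external penetration test")),
    Component("Database server", "127.127.127.127",
              ("external penetration test", "vulnerability assessment")),
]
print(scope_problems(scope) or "No problems found")
print(render_scope(scope))
```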

It is probable that the test team will have questions based on the scope. They will want to clarify whether the systems are in use for live services, and they may have questions about the technology deployed to them and will want to confirm server numbers. Many penetration testing firms will help you create a scope for a test but they will of course charge for this service. The more information you can provide, the quicker they can put together a proposal for testing your system.

Trusting the Testers

Trusting testers is important, as these people potentially have direct access to your data. You need to trust both the assessor’s integrity and their technical ability, so that they are thorough and do not accidentally compromise the data. Ask whether they have undergone a government security check, or consider a criminal records check as an alternative. Judging someone’s technical ability is much harder; ensure the testers have experience of testing live systems like yours, and require more experience the more sensitive the system being tested.

Non-disclosure agreements are very common in the private sector. Testers will often have access to sensitive information, especially when carrying out white box testing, and the last thing you want is to share company secrets with someone who will inform a rival.

You may set up an agreement with a particular firm to provide testing on a regular basis. This has advantages from a trust and ability point of view, but there are also drawbacks: if the same testers test your systems and miss a vulnerability, they are likely to miss it again. Rotating who carries out the testing, for example by forming agreements with two or more testing firms, gives a greater chance of finding vulnerabilities.

Implementing Fixes

Of course now that you have a list of vulnerabilities you are going to want to fix them. There are two key ways of managing this depending on how important the fixes are and how quickly you want to implement them. You can either set up a live support team to fix vulnerabilities as they are found, or you can wait for the full report and then implement fixes on a risk-based approach.

Setting up a live team to work alongside the penetration testers can be chaotic but also very rewarding. A key benefit is that the testers can retest systems to confirm fixes have been implemented effectively. If a lot of remediation work is needed after a penetration test you may need a second test, and this method avoids that (assuming things aren’t really bad). However, if the systems are live with real users, bear in mind that many patches require a system restart, so fixing during working hours could disrupt the business and cause you a lot of problems unless the right managers are engaged. If you are fortunate enough to have a change-management team with the relevant business contacts and experience of managing this sort of agile patching, then great; otherwise I recommend not using this method.

The more traditional method is to wait for the full penetration test report, then understand the findings and put them into context. A vulnerability rated critical in the report may be less critical in practice: for example, if a web-based vulnerability is found on a server in the core of the network, it is less likely to be exploited by an attacker. Conversely, a vulnerability labelled medium on a system connected to the Internet could present a higher risk, as that system is more likely to be attacked.
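The idea of adjusting a report’s rating by context can be sketched as a simple weighting of severity by exposure. The numeric weights below are hypothetical and exist only to show the mechanism; in practice they come from your own knowledge of the system, which is exactly what the report lacks.

```python
from dataclasses import dataclass
from typing import List

SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}
# Hypothetical weights for how reachable the affected host is.
EXPOSURE = {"internet": 2.0, "dmz": 1.5, "core": 0.5}


@dataclass
class Finding:
    title: str
    host: str
    severity: str  # rating taken from the test report
    exposure: str  # your own knowledge of where the host sits


def contextual_score(finding: Finding) -> float:
    """Scale the report's severity by how exposed the host is."""
    return SEVERITY[finding.severity] * EXPOSURE[finding.exposure]


def prioritise(findings: List[Finding]) -> List[Finding]:
    """Order findings by contextual score, highest first."""
    return sorted(findings, key=contextual_score, reverse=True)


findings = [
    Finding("Critical finding (hypothetical)", "core-app-01", "critical", "core"),
    Finding("Medium finding (hypothetical)", "web-01", "medium", "internet"),
]
for f in prioritise(findings):
    print(f"{contextual_score(f):.1f}  {f.severity:<8} {f.host}")
```

With these weights the medium finding on the Internet-facing host outranks the critical one in the core of the network, mirroring the example above; different weights would of course give a different order.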