Abusing the Internet of Things (2015)

Chapter 6. Connected Car Security Analysis: From Gas to Fully Electric

In 2014, a Sierra Leonean doctor in Nebraska and a Liberian visitor in Dallas died of Ebola. This caused a media frenzy in the United States and made many citizens concerned about contracting the disease, even though one is more likely to die from one's pajamas catching fire than from Ebola. Pajamas aside, we tend to both underestimate and overestimate the things that may kill us. Cancer and heart disease are known to be the leading causes of death, yet our attention often focuses on improbable scenarios such as dying in an airplane crash. The numbers say otherwise: there is a 1 in 11 million chance of being killed in a plane crash, whereas the chance of being killed in a car crash is 1 in 5,000. Yet most of us get into a car on a daily basis without giving it a second thought.

Car accidents can be a result of distracted driving, speeding, drunk driving, bad weather, running red lights, car defects, unsafe lane changes, improper turns, tailgating, road rage, bad roads, tire blowouts, fog, and animal crossings. Most people reading this book either have been in a minor or major car accident or know someone who has.

Despite the risks posed by cars and driving, society gains tremendous benefits from people having personal transport vehicles. Individuals in cities that lack a good public transport infrastructure depend on having a car to get to work and back home and to run errands. From the 12-day traffic jam in China in 2010 to the 48-hour, 2.5-million-car traffic jam in Houston caused by people attempting to flee the city ahead of an oncoming hurricane, we have seen clear evidence that many cities around the world would come to a halt should the personal vehicle infrastructure be disrupted.

The negative effects of pollution on our climate are unquestionable, which has led to public promotion and awareness of the importance of hybrid cars such as the Toyota Prius and fully electric cars such as the Tesla Model S. Owning a car may be a luxury to some, a matter of livelihood to others, and a matter of concern for the climate to the collective human race.

In the past few years, cars have started to become increasingly connected to serve their drivers and passengers. Safety and entertainment related features that rely on wireless communications are not only becoming popular but expected by new car buyers. Cars are also increasingly catering to reducing emissions to comply with regulations and appease customers who are genuinely concerned for the environment (and those who want to save gas money).

In this chapter, we will take a look at what it means for a car to be a thing that is accessible and controllable remotely. Unlike with many other devices, the interconnectedness of the car can serve important safety functions, yet security vulnerabilities can lead to the loss of lives. We will first examine a short-range wireless system, and then review the extensive research performed by leading experts in academia. Lastly, we will analyze and discuss features that can be found in the Tesla Model S sedan, including possible ways the security of the car can be improved.

Tire Pressure Monitoring System (TPMS)

The Ford Explorer was first put on sale in March 1990. It is alleged that Ford engineers recommended changes to the design of the car because it rolled over in tests before mass production. These cars were equipped with tires manufactured by Firestone. The Firestone-equipped Explorers were ultimately involved in accidents that caused 174 deaths and more than 700 injuries; in response, Firestone recalled its tires. This resulted in a blame game where Ford was accused of releasing a product with known safety issues, while Ford accused Firestone of manufacturing defective tires (the defect involved “tread separation,” which caused the tires to disintegrate, resulting in decreased stability of the vehicle).

This controversy resulted in a federal law enacted in 2000 called the Transportation Recall Enhancement, Accountability, and Documentation (TREAD) Act. The act mandated the use of a suitable Tire Pressure Monitoring System (TPMS). TPMS is a system designed to monitor the air pressure inside the tires and report any issues, such as low tire pressure, to the driver.

The Ford/Firestone situation caused more than a hundred deaths. From that, we can easily extrapolate the high number of deaths that are caused on a daily basis by faulty tire pressure. Clearly, a well-designed TPMS is extremely important. The system should be able to report low tire pressure to the driver, and it should not be vulnerable to other actors who could, for example, cause it to show a low tire pressure warning when in fact the tire pressures are in the correct range. Otherwise, highway robbers within the vicinity of a car could make the driver stop in a remote area by activating the low-pressure warning. Researchers from the University of South Carolina performed an in-depth analysis of TPMS and found security design flaws that can be exploited. In this section, we will take a look at their research to understand TPMS and what issues were uncovered. Since TPMS relies on very basic wireless communication mechanisms, it is the appropriate first topic to cover as we learn about the security of connected cars.

The TPMS measures the pressure inside all of the tires on a vehicle and alerts the driver to any loss of pressure. Two different types of TPMS exist: direct and indirect measurement systems. The direct measurement system uses battery-powered pressure sensors inside each tire to monitor the pressure. Since it is difficult to run wires to rotating tires, Radio Frequency (RF) transmitters are used instead. The sensors send data over RF to a receiving tire-pressure control unit, which collects information from all the tire sensors. When a sensor reports that a tire is running low on air pressure, the control unit sends information over the Controller Area Network (CAN) to trigger a warning message on the car’s dashboard. Indirect measurement systems, on the other hand, infer pressure differences by leveraging the Anti-lock Braking System (ABS) sensors. ABS can help detect when a tire is rotating faster than the other tires, which is the case when a tire loses pressure. However, this method is less accurate and cannot account for cases when all the tires lose pressure. As of 2008, all cars in the US are required to employ direct TPMS.
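The indirect approach described above can be illustrated with a small Python sketch. It is not taken from any real ABS implementation: the function name, the wheel labels, and the 2% tolerance are assumptions chosen for the example. It flags any wheel that spins noticeably faster than the median wheel, and it also shows why the method fails when every tire loses pressure at once.

```python
from statistics import median

def underinflated_wheels(wheel_speeds_rpm, tolerance=0.02):
    """Return wheels spinning more than `tolerance` faster than the median wheel.

    wheel_speeds_rpm maps a wheel label (hypothetical: "FL", "FR", ...) to its
    rotation speed. The 2% tolerance is an illustrative assumption.
    """
    reference = median(wheel_speeds_rpm.values())
    return [name for name, rpm in wheel_speeds_rpm.items()
            if rpm > reference * (1 + tolerance)]

# One soft tire rotates faster than the rest and is flagged.
one_soft = underinflated_wheels({"FL": 800, "FR": 800, "RL": 830, "RR": 800})

# If all four tires lose pressure equally, nothing stands out.
all_soft = underinflated_wheels({"FL": 830, "FR": 830, "RL": 830, "RR": 830})
```

Because the comparison is relative, a uniform pressure loss produces no outlier, which is the limitation noted in the text.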

Cars are full of Electronic Control Units (ECUs), which use the CAN specification to communicate. ECUs are mini-computers that control various aspects of the car. All ECUs in the car are connected to two wires running along the body of the car (CAN-High and CAN-Low). ECUs transmit information by raising and dropping voltages on the wires. Since all ECUs are connected to the same wires, data transmitted by an ECU is available to all other ECUs on the network. The collection of ECUs communicating using the CAN standard is known as the “CANBus”.

The TPMS architecture consists of a set of components. TPMS sensors fitted to the tires periodically broadcast pressure and temperature measurements. The sensors activate when the speed of the car is higher than 40 km/h or when they receive an RF activation signal, which is used during installation to get the sensors to transmit their IDs. An RF receiving unit that is part of the TPMS remembers the sensor IDs so that it can filter out communication from the sensors of nearby cars. There is also a TPMS ECU installed in the car, as well as four antennas that are connected to the RF receiving unit. The low-pressure warning light is also part of the TPMS. As the sensors routinely broadcast the pressure and temperature measurements, the receiving unit collects the packets and first makes sure they don’t belong to neighboring cars (based on the ID). If any of the sensors transmits a reading that indicates low tire pressure, the system then displays a warning light.
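The receiving unit's behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not any manufacturer's firmware: the packet fields, the example sensor IDs, and the 25 psi threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold for illustration; real units and limits vary by vehicle.
LOW_PRESSURE_PSI = 25.0

@dataclass
class SensorPacket:
    sensor_id: int        # ID broadcast by the tire sensor
    pressure_psi: float
    temperature_c: float

def low_pressure_warning(packets, known_ids, threshold=LOW_PRESSURE_PSI):
    """Return True if any packet from a registered sensor reports low pressure."""
    for pkt in packets:
        if pkt.sensor_id not in known_ids:
            continue  # treated as a neighboring car's sensor and ignored
        if pkt.pressure_psi < threshold:
            return True
    return False

known_ids = {0x1A2B3C4D}  # IDs learned during sensor installation (made-up value)
own_low = low_pressure_warning([SensorPacket(0x1A2B3C4D, 20.0, 30.0)], known_ids)
neighbor_low = low_pressure_warning([SensorPacket(0x0BADCAFE, 10.0, 30.0)], known_ids)
```

In this sketch the sensor ID is the only thing that separates a trusted packet from an ignored one, which is worth keeping in mind for the attacks discussed later in this section.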

Reversing TPMS Communication

The researchers from the University of South Carolina attempted to analyze the proprietary protocol used between the sensors and the receiving unit. As we will see in this section, their approach is unique because they manipulated the temperature around the sensors to reverse engineer the protocol. This type of mindset is critical, as it illustrates creativity on the part of security researchers. The same approach can also be employed by malicious entities to reverse engineer communication, so it is important that the design of communication protocols and the supporting architecture is secure.

Based on a collection of marketing materials, the researchers learnt that TPMS communication occurs in the 315 MHz and 433 MHz bands (part of the Ultra High Frequency, or UHF, range, which spans 300 MHz to 3 GHz) and uses Amplitude Shift Keying (ASK) or Frequency Shift Keying (FSK) modulation. Modulation is the process of encoding information onto a signal so that it can be sent over a given medium, such as air or a wire. Take, for instance, our ability to transmit our voices by radio: the process of converting a voice into a radio signal so that it can be sent wirelessly is called modulation. A carrier wave (often just called a “carrier”) is a waveform that is modulated to transmit communications wirelessly. In the case of ASK, the amplitude of the carrier is set to a fixed value when a binary 1 is communicated, and the carrier is turned off to transmit a binary 0. In the case of FSK, the frequency of the carrier is shifted between two fixed values, one representing a 1 and the other a 0. There are various tutorials available online that discuss the topic of modulation in more detail.
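To make the difference between the two schemes concrete, here is a minimal Python sketch that generates sample values for an ASK and an FSK signal. The function names, the number of samples per bit, and the carrier frequencies (expressed in cycles per bit period rather than in MHz) are assumptions chosen to keep the example small; they do not reflect real TPMS parameters.

```python
import math

def ask_modulate(bits, cycles_per_bit=4, samples_per_bit=16, amplitude=1.0):
    """On-off ASK: carrier at a fixed amplitude for 1, no carrier for 0."""
    samples = []
    for bit in bits:
        for n in range(samples_per_bit):
            t = n / samples_per_bit  # time within the bit, in bit periods
            carrier = math.sin(2 * math.pi * cycles_per_bit * t)
            samples.append(amplitude * carrier if bit else 0.0)
    return samples

def fsk_modulate(bits, cycles_for_0=2, cycles_for_1=4, samples_per_bit=16):
    """Binary FSK: one carrier frequency for 0, a different one for 1."""
    samples = []
    for bit in bits:
        cycles = cycles_for_1 if bit else cycles_for_0
        for n in range(samples_per_bit):
            t = n / samples_per_bit
            samples.append(math.sin(2 * math.pi * cycles * t))
    return samples

ask_wave = ask_modulate([1, 0])  # second half is silent
fsk_wave = fsk_modulate([1, 0])  # both halves carry signal, at different frequencies
```

Plotting the two lists side by side shows the ASK signal dropping to zero for a 0 bit, while the FSK signal keeps a constant amplitude and only changes how quickly it oscillates.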

The researchers did not disclose the exact manufacturers of the two different types of the sensors they focused on, instead referring to them as “Pressure Sensor A” (TPS-A) and “Pressure Sensor B” (TPS-B). They used the ATEQ VT55 TPMS trigger tool (Figure 6-1) to trigger the sensors so that they transmit data.

Figure 6-1. The ATEQ VT55 TPMS trigger tool

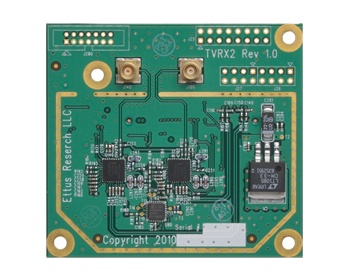

The TVRX daughterboard (Figure 6-2) attached to a USRP (Universal Software Radio Peripheral) allowed the research team to capture TPMS communications. The advantage of software-defined radios is that, wherever possible, they strive to implement features in software rather than hardware, making it less expensive for tinkerers to analyze radio communications.

Figure 6-2. The TVRX daughterboard

Data from the USRP was analyzed using GNU Radio, an open source toolkit that can be used to process the captured signals.

As the research team analyzed the data transmitted by the sensors, they guessed that a technique known as Manchester Encoding was being applied. They were able to confirm this after applying the algorithm to decode Manchester encoded data, which resulted in a stream of information containing a known Sensor ID. This is an important mindset in the art of reversing a given architecture, i.e. looking for a known tuple of data (Sensor ID, in this case) and using it to see if the proper decoding algorithm has been applied. Although Manchester encoding is not a form of encryption, this technique of looking for a known tuple to see if the analysis is on the right track is similar to the idea of a known-plaintext attack in the field of cryptography, where an attacker has a copy of both the encrypted text and the plain text, and is able to use this information to infer weaknesses or secrecy embedded in the algorithm.
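The "decode, then look for a known value" check can be sketched as follows. This is an illustration rather than the researchers' tooling. Manchester encoding has two common conventions; the sketch assumes the IEEE 802.3 one (a low-to-high chip pair is a 1), and the 32-bit ID width and the example ID are likewise assumptions.

```python
def manchester_encode(bits):
    """Encode bits as chip pairs: 1 -> (0, 1), 0 -> (1, 0) (assumed convention)."""
    chips = []
    for bit in bits:
        chips.extend((0, 1) if bit else (1, 0))
    return chips

def manchester_decode(chips):
    """Decode chip pairs back into bits; reject pairs that are not valid."""
    if len(chips) % 2:
        raise ValueError("chip stream must have an even length")
    bits = []
    for i in range(0, len(chips), 2):
        pair = (chips[i], chips[i + 1])
        if pair == (0, 1):
            bits.append(1)
        elif pair == (1, 0):
            bits.append(0)
        else:
            raise ValueError(f"invalid Manchester pair at chip {i}: {pair}")
    return bits

def find_known_id(bits, sensor_id, id_bits=32):
    """Return the bit offset of sensor_id in the decoded stream, or -1."""
    stream = "".join(str(b) for b in bits)
    return stream.find(format(sensor_id, f"0{id_bits}b"))

# Build a fake capture: 3 preamble bits, a made-up sensor ID, 2 trailing bits.
SENSOR_ID = 0x1A2B3C4D
payload = [1, 0, 1] + [int(c) for c in format(SENSOR_ID, "032b")] + [0, 1]
capture = manchester_encode(payload)
offset = find_known_id(manchester_decode(capture), SENSOR_ID)
```

If the wrong decoding were applied, the known ID would almost certainly not appear in the output, which is what makes the known value a useful sanity check.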

Next, the research team manipulated the sensors by heating the tires with heat guns and cooling them in refrigerators, and then looked at which bits within the communication changed. They also adjusted the air pressure in the tires. This is another unique and critical step to remember when dealing with IoT devices. In the world of pure software, the idea of influencing the environment around a physical object is not applicable, but it is definitely within the scope of a methodology for testing IoT devices that contain sensors which collect information about the physical world. Using this technique, the researchers were further able to decode the stream of communication from the sensors to pinpoint which bits referred to temperature data.
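The bit-comparison step lends itself to a short sketch. The packets below are invented 8-bit strings, not real TPMS captures, and the function name is hypothetical. The idea is simply to hold everything constant except one physical quantity and list the bit positions that differ between captures.

```python
def changed_bit_positions(captures):
    """Return the positions whose value differs across equal-length bit strings."""
    if not captures:
        return []
    length = len(captures[0])
    if any(len(c) != length for c in captures):
        raise ValueError("all captures must have the same length")
    return [i for i in range(length) if len({c[i] for c in captures}) > 1]

# Made-up decoded packets captured at three temperatures, same pressure.
cold, ambient, hot = "10100011", "10101011", "10110011"
temperature_bits = changed_bit_positions([cold, ambient, hot])
```

Repeating the comparison while varying only the pressure would, in the same way, point to the pressure field.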

Eavesdropping and Privacy Implications

The data transmitted by the tire sensors is not encrypted, allowing someone in the vicinity of a car equipped with TPMS to capture the information. The researchers found they were able to eavesdrop on sensor data from up to 40 meters away in cases where the target car was stationary.

From the viewpoint of privacy, one risk is that a tracking system deployed alongside roads can be used to track particular cars around the city to capture drivers’ whereabouts based on their Sensor IDs. The feasibility of this is low since sensors only transmit data every 60 seconds. However, the researchers proposed that a tracking system could potentially leverage the fact that sensors respond to an activational signal (at 125 kHz). This means that one could implant a device that would issue the activational signal to trigger the transmission by the sensor. Based on the average speed limit around the area, wireless capture devices could be placed at appropriate distances to capture the data transmitted by the sensors. In this way, one could cheaply deploy a system for tracking cars at various spots within a given city.

The gravity of this example stems from the fact that millions of cars have TPMS and so are transmitting sensor data that can be captured by individuals or devices in the vicinity. Not only that, most people who own TPMS enabled cars have no idea that their cars are transmitting this information. Furthermore, there is no easy way for an average car owner to turn the system off, even though most individuals will want to leave the system on since they’re more concerned about dangerously low tire pressure than about being tracked.

What makes this research interesting is that it encourages us to pause and reflect on how we are going to design interconnected devices in the future. The lesson here is that over-the-air communication of potentially trackable data can compromise the privacy of consumers, especially in cases where the platform is implemented in millions of devices whose shelf life is measured in decades. Furthermore, device manufacturers must do a better job of informing their customers what information is being transmitted and what it could mean to their privacy.

Spoofing Alerts

Another scenario the University of South Carolina researchers contemplated is one where an attacker could spoof wireless network data to trigger alerts in the victim’s car. The researchers found that they could craft spoofed network packets transmitted from the front left tire of the attacker’s car that would trigger an alert in the car on its right. The assumption here is that the attacker would have to know the Sensor ID of one of the tires of the victim’s car. However, this can easily be obtained by issuing an activational signal. It was found that the spoofed packets were picked up by the victim’s car as far as 38 meters away and could trigger the car’s low pressure warning light.

During the analysis, the researchers attempted to transmit as many as 40 spoofed packets per second and found that this aroused no suspicion in the receiving unit or the TPMS ECU, even though the expected frequency of a sensor packet is once every 60 seconds. The researchers also uncovered that the warning light would go on and off at “random” intervals when forged packets with different pressure values were transmitted at the rate of 40 packets per second.
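A receiver could, in principle, notice such a flood with a very simple plausibility check. The sketch below is not something the researchers describe as implemented; it is a hypothetical illustration, and the class name and the 30-second minimum interval are assumptions (a real design would also have to allow for packets sent in response to activation signals).

```python
class RateMonitor:
    """Flag sensors that report far more often than expected."""

    def __init__(self, min_interval_s=30.0):
        # Assumed lower bound; the text gives 60 seconds as the normal period.
        self.min_interval_s = min_interval_s
        self._last_seen = {}

    def is_suspicious(self, sensor_id, timestamp_s):
        """Return True if this packet arrived too soon after the previous one."""
        last = self._last_seen.get(sensor_id)
        self._last_seen[sensor_id] = timestamp_s
        return last is not None and (timestamp_s - last) < self.min_interval_s

monitor = RateMonitor()
first = monitor.is_suspicious(0x1A2B3C4D, 0.0)      # first packet: nothing to compare
flood = monitor.is_suspicious(0x1A2B3C4D, 0.025)    # 40 packets/s means 25 ms spacing
normal = monitor.is_suspicious(0x1A2B3C4D, 60.025)  # back to the expected cadence
```

A check like this would not stop spoofing by itself, but it would make a 40-packets-per-second burst stand out instead of being silently accepted.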

Another peculiar thing uncovered during testing was the fact that, when a spoofed packet was transmitted, the victim’s car’s TPMS ECU did not immediately turn on the warning signal but instead sent out two activational signals that caused the victim’s car’s sensors to respond. However, even though these responses from the legitimate sensors contained normal readings, the ECU still flashed the warning signal based on the original spoofed packet transmitted prior to the two activation signals. Not only did this ultimately make the attack successful, but this situation also opens up the victim’s car to a battery drain attack: A neighboring car can drain the victim car’s sensor batteries by sending a spoofed packet that causes the victim’s car to transmit two activation packets, in turn causing each of the car’s sensors to send response packets.

After two weeks of experiments, the researchers inadvertently caused the test car’s TPMS ECU to crash, completely disabling the TPMS service. They were not able to revive the unit and ultimately had to buy a brand new ECU at the car dealership. This illustrates that the manufacturer of the ECU did not invest time into implementing resiliency against unexpected events and malicious spoofed packets.

This case is yet another example of how security needs to be designed into the product at the earliest stages. In software, we’ve learnt that we need to employ security principles during use case design, architecture design, development, testing, and post-production. In the case of the test units, it’s clear that the manufacturers did not take security into account in most, if not all, phases of product development.

As we continue to head towards a world full of inter-connected vehicles, we ought to demand more effort into the implementation of security and privacy related controls. Without this requirement, we are going to continue to put our privacy and physical safety at risk.

Exploiting Wireless Connectivity

As we’ve seen so far, ECUs communicating on the CANBus make up the connected car. We’ve looked at the design of the TPMS ECU, but there are many other ECUs that are common and critical to the secure functioning of the car. Researchers Charlie Miller and Chris Valasek have explained the functionality of many ECUs in their papers titled A Survey of Remote Automotive Attack Surfaces and Adventures in Automotive Networks and Control Units. Although some of the news coverage of their work dismissed the impact of their findings because their demonstrations assumed physical access to the car, their analysis of various ECUs and the CANBus ecosystem of cars is quite useful. Furthermore, researchers at the University of California, San Diego and the University of Washington have already demonstrated that it’s possible to remotely gain access to a car by exploiting short-range and long-range wireless networks. This research, coupled with Miller and Valasek’s analysis, leads us to ponder scenarios that may allow malicious entities to remotely compromise and control targeted cars by exploiting wireless networks used by the car, and then leveraging their understanding of how each ECU works. In this section we will combine the ideas presented by both research teams to further our understanding of attack surfaces targeting Bluetooth and cellular networks in cars.

Injecting CAN Data

Miller and Valasek have done a fantastic job of explaining the structure of CAN data. It is crucial we understand how the CAN packets are structured so we have a solid concept of how these packets are constructed and computed by various ECUs.

Here is a sample packet from a Ford Escape:

IDH: 03, IDL: B1, Len: 08, Data: 80 00 00 00 00 00 00 00

In this packet, the CAN ID transmitted is 03B1 (a concatenation of the ID-High and ID-Low values). Each ECU that receives the CAN packet decides whether to process the packet or ignore it, depending upon how it is programmed to recognize the CAN ID of the packet. The next byte, Len, represents the size of the data portion of the packet, which in this case is 8.
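As a rough illustration of the structure just described, the following Python sketch parses a line in the textual format shown above into its CAN ID, length, and data bytes. The `CanFrame` type and `parse_can_line` function are hypothetical helpers written for this example and are not part of the researchers' tools; the sketch also assumes the Len field is written in hexadecimal like the other fields.

```python
import re
from typing import NamedTuple

class CanFrame(NamedTuple):
    can_id: int   # IDH and IDL concatenated
    length: int   # value of the Len field
    data: bytes   # data bytes, in order

_LINE = re.compile(
    r"IDH:\s*([0-9A-Fa-f]{2}),\s*IDL:\s*([0-9A-Fa-f]{2}),\s*"
    r"Len:\s*([0-9A-Fa-f]{2}),\s*Data:\s*((?:[0-9A-Fa-f]{2}\s*)*)"
)

def parse_can_line(line):
    """Parse 'IDH: .., IDL: .., Len: .., Data: ..' into a CanFrame."""
    match = _LINE.search(line)
    if match is None:
        raise ValueError(f"not a CAN log line: {line!r}")
    idh, idl, length = (int(g, 16) for g in match.group(1, 2, 3))
    data = bytes.fromhex(match.group(4))
    if len(data) != length:
        raise ValueError("Len field does not match the number of data bytes")
    return CanFrame((idh << 8) | idl, length, data)

frame = parse_can_line("IDH: 03, IDL: B1, Len: 08, Data: 80 00 00 00 00 00 00 00")
```

Here `frame.can_id` is the 03B1 value discussed above, and `frame.data` holds the eight data bytes.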

Here is an example of a CAN packet transmitted by a Toyota:

IDH: 00, IDL: B6, Len: 04, Data: 33 A8 00 95

In the case of Toyota, it was found that the last byte represented a checksum value computed by the following algorithm:

Checksum = (IDH + IDL + Len + Sum(Data[0] through Data[Len-2])) & 0xFF

For simplicity, here are the nonzero values of our packet in decimal (IDH and the third data byte are both 0x00, so they contribute nothing to the sum):

0xB6 = 182

0x04 = 4

0x33 = 51

0xA8 = 168

Adding it all up, we have 182 + 4 + 51 + 168 = 405, which in binary is represented as:

0000 0001 1001 0101

The value of 0xFF in decimal is 255 and here is the binary value:

0000 0000 1111 1111

Here is the resulting binary if we were to perform an AND operation between the two:

0000 0000 1001 0101

The value of the result in decimal is 149, which computes to a hexadecimal representation of 0x95. This is exactly the value of the last byte in our example packet, so we’ve confirmed that our understanding of Toyota’s checksum works.
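The same calculation can be expressed as a small Python function. This is a sketch based only on the formula given above; the function names are made up for the example, and it assumes (as the sample packet suggests) that the Len field counts the checksum byte along with the other data bytes.

```python
def toyota_checksum(idh, idl, data_without_checksum):
    """Compute the trailing checksum byte for a packet, per the formula above."""
    length = len(data_without_checksum) + 1  # Len includes the checksum byte
    return (idh + idl + length + sum(data_without_checksum)) & 0xFF

def has_valid_checksum(idh, idl, data):
    """Check that the last data byte matches the checksum of the rest."""
    return toyota_checksum(idh, idl, data[:-1]) == data[-1]

# The sample packet: IDH: 00, IDL: B6, Len: 04, Data: 33 A8 00 95
computed = toyota_checksum(0x00, 0xB6, bytes([0x33, 0xA8, 0x00]))
valid = has_valid_checksum(0x00, 0xB6, bytes([0x33, 0xA8, 0x00, 0x95]))
```

Anyone who wants to inject a modified packet has to recompute this byte, otherwise a receiving ECU that checks it would presumably discard the packet.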

Miller and Valasek used the ECOM cable to capture the CANBus traffic and analyze it on their laptop. This cable doesn’t directly connect to the OBD2 interface found in most cars, so the researchers purchased an OBD2 adapter to rectify this. The advantage of this setup is the availability of the ECOM Developer’s API, which can be used to program and automate the capture and injection of CAN data. The researchers wrote their own suite of tools using this API to assist in the security evaluation of CAN packets. The project is called ecomcat_api and it is free to download.

Here is an example of the Python code usually required to initialize tools written using the project:

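from ctypes import *
import time

# Load the ecomcat_api library (a DLL built from the researchers' project)
mydll = CDLL('Debug\\ecomcat_api')

# ctypes definition of the CAN message structure passed to the library
class SFFMessage(Structure):
    _fields_ = [("IDH", c_ubyte),
                ("IDL", c_ubyte),
                ("data", c_ubyte * 8),
                ("options", c_ubyte),
                ("DataLength", c_ubyte),
                ("TimeStamp", c_uint),
                ("baud", c_ubyte)]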

Next, we initialize the connection to the ECOM cable:

handle = mydll.open_device(1,0)

According to the researchers, 1 represents a high speed CAN network and 0 represents that the first connected cable is being used.

Next, it is possible to inject a CAN packet onto the CANBus:

y = pointer(SFFMessage())
mydll.DbgLineToSFF("IDH: 02, IDL: 30, Len: 08, Data: A1 00 00 00 00 00 5D 30", y)
mydll.PrintSFF(y, 0)
mydll.write_message_cont(handle, y, 1000)
mydll.close_device(handle)

This will transmit the packet continuously for 1000ms.

That’s how easy it is to send a CAN packet on a CANBus network. For more details on how to use this tool to test and inject various types of CAN packets, please read the whitepaper about it.

Now that we understand how easy it is to inject CAN packets, let’s take a look at possible ways to remotely gain access to the CAN. As we have seen in this section, once we have access to the CAN, it’s easy to inject data. This gives us good perspective of the high probability of potential abuse once an attacker has compromised an ECU that is on the CANBus.

Bluetooth Vulnerabilities

Miller and Valasek’s paper on the survey of automotive attack surfaces states: “Right now the authors of this paper consider Bluetooth to be one of the biggest and most viable attack surfaces on the modern automobile, due to the complexity of the protocol and underlying data. Additionally, Bluetooth has become ubiquitous within the automotive spectrum, giving attackers a very reliable entry point to test”.

In the 2010 Ford Escape analyzed by Miller and Valasek, the Bluetooth functionality was provided by the Accessory Protocol Interface Module (APIM), also known as the “Ford SYNC Computer”. The researchers found that one has to explicitly press a button in the car to put it into “pairing mode” in order for it to connect with and trust a particular smartphone. The car displays a 6-digit PIN that must be entered on the smartphone for the pairing to take place. However, researchers at the University of California, San Diego and the University of Washington have asserted that they found ways to exploit Bluetooth through “indirect” and “direct” wireless attacks.

The University of California, San Diego researchers discovered various buffer overflow vulnerabilities after reverse-engineering the Bluetooth firmware from the car they used for their experiment (their paper does not mention the model or the manufacturer). Buffer overflow attacks can be used to overwrite the victim computer’s memory with injected code, thus allowing the attacker full control of the computer. The researchers did not disclose the exact code they were able to exploit, but they indicated they were able to abuse an unsafe use of the strcpy function, which is a very common avenue leading to buffer overflow attacks.

Prior to exploiting the buffer overflow condition, an attacker first needs a smartphone that is paired with the car over Bluetooth. The researchers explained that this could be achieved in two ways: indirect and direct. In indirect mode, the attacker gains access to a phone owned by the driver of the car that has already been paired with the Bluetooth system. This scenario would work when the attacker has temporary physical access to the smartphone, but physical access is not necessary. A more plausible scenario is that the attacker lures the driver of the car into downloading a malicious app. There have been many cases where malicious apps have slipped past the scrutiny of well-known app store platforms such as Google Play, so we have evidence that attackers have been able to make their apps available for download on these platforms. The researchers claim that once a driver whose smartphone has been paired to the Bluetooth system is lured into downloading and launching the malicious app, the buffer overflow condition can be exploited to take over the ECU responsible for handling the Bluetooth functionality.

In the case of direct mode, the researchers portray a scenario where an attacker who is within the vicinity of the car sniffs its Bluetooth traffic. To pair a new device with the car, the user normally has to explicitly enable “pairing mode”. When the driver does this, the car displays a 6-digit PIN that the user has to enter on the smartphone. However, the researchers found that the car they were analyzing would pair with new devices even when pairing mode was not requested. In that case the car would not display the PIN, so the researchers suggested a brute-force scenario in which an attacker tries all possible combinations (000000 through 999999). The researchers noted that they were able to guess the PIN by brute force in an average of 10 hours. Once the attacker’s device is paired, the attacker can launch the malicious app on his or her own smartphone to exploit the known buffer overflow condition and take over the ECU. The researchers acknowledged that 10 hours is a long time for the attack to be feasible, because the car would have to be running the whole time. They presented a scenario where a potential attacker in a parking garage could parallelize this attack vector and simultaneously target multiple cars in a crowded garage to increase his or her odds.

Vulnerabilities in Telematics

Many cars contain cellular radio equipment that is used to connect the car to a cellular network. One popular example of this is General Motors’ OnStar, which provides many features to drivers and passengers, including contacting call centers during an emergency. As part of this service, the system can also track the car’s location and relay it to the call centers during an accident so that assistance can be automatically dispatched. The system also provides features such as stolen vehicle tracking, and even allows the call centers to remotely slow down a stolen vehicle. The computer responsible for handling this cellular communication is known as the Telematics ECU. Figure 6-3 illustrates the Telematics ECU for the 2010 Toyota Prius as found in the whitepaper written by Miller and Valasek.

Figure 6-3. The Telematics ECU for the 2010 Toyota Prius

Miller and Valasek state the following opinion on Telematics ECUs: “This is the holy grail of automotive attacks since the range is quite broad (i.e. as long as the car can have cellular communications). Even though a telematics unit may not reside directly on the CAN bus, it does have the ability to remotely transfer data/voice, via the microphone, to another location. Researchers previously remotely exploited a telematics unit of an automobile without user interaction”.

A successful attack against the telematics system would indeed be the most impactful, since it would allow attackers to remotely break into cars from anywhere in the world, given that many telematics systems have an actual cellular phone number that can receive incoming connections. The researchers from the University of California, San Diego and the University of Washington claim to have successfully exploited a telematics system powered by Airbiquity’s aqLink software. This software allows for the transmission of critical data through channels normally reserved for voice communication. This is useful because wireless networks intended for voice communication, such as GSM and CDMA, have greater coverage areas than networks such as 3G.

TIP

On a similar note, researchers Mathew Solnik and Don Bailey found a way to exploit the Short Message Service (SMS) to remotely unlock a Subaru Outback and even start the car. Their presentation, titled “War Texting,” is available for download.

The researchers were able to find the actual phone number assigned to the car and called it to listen to the initiation tone. Since aqLink uses the audio channel to transmit digital data, this was the first step employed by the researchers to reverse engineer the protocol. The whitepaper does not discuss the actual exploit code utilized, but the researchers claim to have found various buffer overflow conditions in the implementation of aqLink. They devised an exploit that took 14 seconds to transmit; however, they found that the car’s telematics unit would terminate a call 12 seconds after receiving it.

To get around this limitation, they found another flaw in the authentication algorithm of aqLink, which is responsible for authenticating incoming calls to make sure they are from a legitimate source. The researchers found that the car would initiate an “authentication challenge” upon receiving the call. In the simplest terms, this means that the car expects the caller, if legitimate, to know a shared cryptographic secret that is used to respond with the correct answer to the challenge. In most cases a random token, i.e. a “nonce”, is used to make sure that the same challenge is never issued twice. It was found that the car would use the same nonce sequence each time it was turned off and on again. This created a situation where the researchers could capture a legitimate response to the challenge and resend it to a car that has just been turned on (also known as a “replay attack”). Furthermore, it was found that the car would accept an incorrect response once every 256 times. Therefore, the researchers were able to authenticate with the car by repeatedly calling, bypassing authentication after an average of 128 calls.
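The replay weakness can be demonstrated with a toy model. Nothing below reflects aqLink's actual algorithm, which the text does not describe: the HMAC-SHA256 response function, the shared secret, the `WeakCar` class, and the constant seed are all assumptions made purely to show why a repeating nonce sequence defeats challenge-response authentication.

```python
import hashlib
import hmac
import random

SECRET = b"hypothetical-shared-secret"  # made-up value for the demonstration

def respond(secret, nonce):
    """Answer a challenge; HMAC-SHA256 is an assumed stand-in algorithm."""
    return hmac.new(secret, nonce, hashlib.sha256).digest()

class WeakCar:
    """Toy telematics unit that restarts its nonce sequence at every power-on."""

    def power_on(self):
        self._rng = random.Random(0)  # flaw: constant seed, same nonces each time

    def challenge(self):
        self._nonce = self._rng.getrandbits(64).to_bytes(8, "big")
        return self._nonce

    def verify(self, response):
        return hmac.compare_digest(response, respond(SECRET, self._nonce))

car = WeakCar()

# A legitimate caller authenticates; an eavesdropper records the response.
car.power_on()
first_nonce = car.challenge()
recorded_response = respond(SECRET, first_nonce)

# After a power cycle the car issues the same challenge, so the recording works
# even though the attacker never learns the secret.
car.power_on()
second_nonce = car.challenge()
replay_accepted = car.verify(recorded_response)
```

The fix in a model like this is to draw nonces from a source that does not reset, so that a recorded response never matches a later challenge.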

Once authenticated, the researchers were able to change the timeout from 12 seconds to 60 seconds and then re-call the car to deliver the buffer overflow exploit discovered earlier. In this way, the researchers demonstrated they could remotely call the cars and take over the telematics ECU. Since the ECU is on the CANBus, they were further able to influence additional aspects of the car, such as flashing the TPMS ECU with custom code to trigger rogue notification packets.

Significant Attack Surface

The ability to surreptitiously take control of a car’s telematics ECU presents an attack surface whose implications are profound. Once the attacker is able to compromise an ECU, he or she can then compromise other ECUs and inject fake packets that can cause the car to slow down, speed up, come to a halt, or unlock its doors. Of course, the scenario would differ because of the difference in architecture between different cars. Some manufacturers may hard-wire functionality that is outside of the realm of the CANBus while others may rely upon the notion that every packet on the CANBus may be trusted.

A crucial point to note here is the scenario where an attacker may abuse remotely exploitable conditions en masse, i.e. attempt to exploit as many cars as possible. Such a brute-force attack may yield greater fruit for the attacker because every successful attempt would result in unlocking the car and transmittal of the car’s current GPS coordinates. Imagine a situation where an attacker has been able to gain control of hundreds or even thousands of cars in this way. Demented individuals, malicious activists, or even terrorists with malicious intent could remotely compromise the safety of drivers in moving vehicles to get attention or to obtain media coverage at the potential cost of injuries to innocent drivers.

The case for alarm regarding physical safety is clear and real. Consider also the risk to privacy. Attackers can easily track compromised cars and possibly listen in on private conversations of executives, business competitors, or politicians to obtain and abuse corporate and personal data. It is easy to imagine how this can be automated and even targeted towards certain individuals or corporations depending upon their location.

We have learnt to detect anomalies in our computing environment to figure out if suspicious activity warrants our attention. This can be done simply by looking for network port scanning activity or correlating various log sources such as email, anti-virus, host intrusion detection systems, and others to obtain greater intelligence. No such approach is seen on popular vehicles that allow short and long range communication such as Bluetooth and cellular. For example, Miller and Valasek state the following in their paper:

“Besides just replaying CAN packets, it is also possible to overload the CAN network, causing a denial of service on the CAN bus. Without too much difficulty, you can make it to where no CAN messages can be delivered. In this state, different ECUs act differently. In the Ford, the PSCM ECU completely shuts down. This causes it to no longer provide assistance when steering. The wheel becomes difficult to move and will not move more than around 45% no matter how hard you try. This means a vehicle attacked in this way can no longer make sharp turns but can only make gradual turns”.

A denial of service attack is one of the easiest issues to detect given the noise it generates, including excessive amounts of network traffic. The car should be able to notice a flood of CAN packets and realize that suspicious activity is taking place. Cars should employ a fallback scenario when this occurs to guarantee the safety of the driver and passengers.
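As a rough illustration of how simple such detection could be, the Python sketch below counts frame arrivals in a sliding time window and raises a flag when the count exceeds a threshold. The class name, the threshold, and the window length are hypothetical; a real ECU would need limits derived from the expected traffic on its own bus.

```python
from collections import deque


class CanFloodDetector:
    """Flags a flood when more than `max_frames` arrive within `window_s` seconds."""

    def __init__(self, max_frames: int, window_s: float):
        self.max_frames = max_frames
        self.window_s = window_s
        self._timestamps = deque()

    def observe(self, timestamp: float) -> bool:
        """Record one frame arrival; return True if the rate limit is exceeded."""
        self._timestamps.append(timestamp)
        # Discard arrivals that have fallen out of the sliding window.
        while self._timestamps and timestamp - self._timestamps[0] > self.window_s:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_frames


def any_flood(detector: CanFloodDetector, timestamps) -> bool:
    """Feed a sequence of arrival times and report whether any tripped the limit."""
    # A list is built first so that every timestamp is observed.
    return any([detector.observe(t) for t in timestamps])
```

When `observe` returns True, the car could switch to the kind of fallback scenario described above rather than continuing to act on bus traffic as usual.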

Furthermore, it is clear that much of the ECU software looked at by researchers contains basic software flaws such as buffer overflow vulnerabilities, reliance on obscurity, and bad implementation of cryptography (reoccurring nonce). This makes it clear that the car manufacturers discussed in this chapter have not invested in analyzing the code to find and remediate the most fundamental security issues that are well known in the software development community. In addition to analyzing the code, car manufacturers should design their telematics systems to connect outbound to a trusted destination rather than accept incoming connections.

In the past two decades, we have learnt the hard way that it is a bad idea for laptops and desktops to trust each other just because they are on the same local network. The probability of one of the devices on a local network eventually being compromised is high, so it is unacceptable to approve an architecture where devices on the same network don’t employ endpoint protection to fend for themselves. Most cars today employ this architecture because ECUs on the CANBus explicitly trust the integrity and authenticity of packets. In the past, the risk posed from this design may have been acceptable because it required physical access to the car. However, as we’ve seen in this section, research has proved that this approach can be exploited remotely, which can compromise the physical security and privacy of individuals. The motivation of an attacker for exploiting these conditions can range from a simple prank to a targeted attack against an individual, or even terrorism targeting a large group of car owners and passengers.

One important point to take away from this section is the fact that the vulnerabilities being discovered in cars today are rooted in the ignorance of fundamental principles of memory management, practical cryptography, and basic security controls. As we look into the future, cars will continue to increase their reliance on wireless communication. We ought to learn from the mistakes we are committing today so that we can create vehicles that can keep drivers and passengers safe without exposing vulnerabilities that can be abused by attackers.

Tesla Model S

The words “Tesla Motors,” “SpaceX,” and “Elon Musk” have become synonymous with relentless innovation. The eventual goal of SpaceX is to lower the cost of space travel so that the human race can migrate to other planets. The goal of Tesla Motors is to increase our knowledge of how to generate energy most efficiently and cleanly, and the company has demonstrated this by releasing one of the safest and fastest four door electric sedans, the Model S. The eventual goal of Tesla is to bring to market an affordable electric car. Elon Musk, the South African born engineer and executive behind SpaceX and Tesla, is leading the charge towards the success of both companies.

Figure 6-4. The Tesla Model S P85+

In the words of Elon Musk: “Well, I didn’t really think Tesla would be successful. I thought we would most likely fail. But I thought that we at least could address the false perception that people have that an electric car had to be ugly and slow and boring like a golf cart”. The Model S is far from a slow golf cart. The P85+ model (Figure 6-4) has 416 horsepower that can take it from 0 to 60 miles per hour in 4.2 seconds. The P85D model has 691 horsepower and can reach 60 miles per hour in 3.2 seconds. Given that the car is fully electric, this amount of power is phenomenal and unprecedented.

Every Tesla owner is entitled to free use of the hundreds of Tesla Supercharger stations (Figure 6-5) that are strategically placed across North America, parts of Asia-Pacific, and Europe. These stations can charge a car in less than 30 minutes so that the car can then drive 170 miles. These stations allow Tesla owners to easily make cross-country trips, and there is no cost for charging. No other electric car company has made such a phenomenal investment in infrastructure, and this has positioned Tesla as one of the leading electric car companies in the world.

Figure 6-5. Tesla Supercharger Stations

The center display depicted in Figure 6-6 is a popular feature of the car. The display not only lets you control media, access navigation, and turn on the rear view camera, but it also lets you adjust the suspension, open the panoramic roof, lock and unlock doors, and adjust the height and braking of the vehicle. This is all done via the touch screen.

Figure 6-6. The center display in the Tesla Model S

The Model S is commonly referred to as a “computer on wheels” because it is always connected to the Internet via a 3G connection. New features are delivered as software updates. For example, the “hill assist” feature that prevents the car from rolling backwards on a hill was automatically delivered as a software update through the 3G connection. This is revolutionary since the installation of such features on other vehicles requires taking the car to a dealer or mechanic.

Tesla is recognized as one of the leading innovators in the field of electric cars and it is quite likely that Tesla’s architecture will inspire other manufacturers. The always-on 3G and the ability to update the car’s software make the Model S a true IoT device that drivers and passengers will depend upon for their safety and privacy. In this section, we will take a look at some of the features of the Model S and analyze their design from a security perspective. This will help us understand how security is being designed into cars that are going to lead us to the future and what improvements we need to make along the way.

Locate and Steal a Tesla the Old Fashioned Way

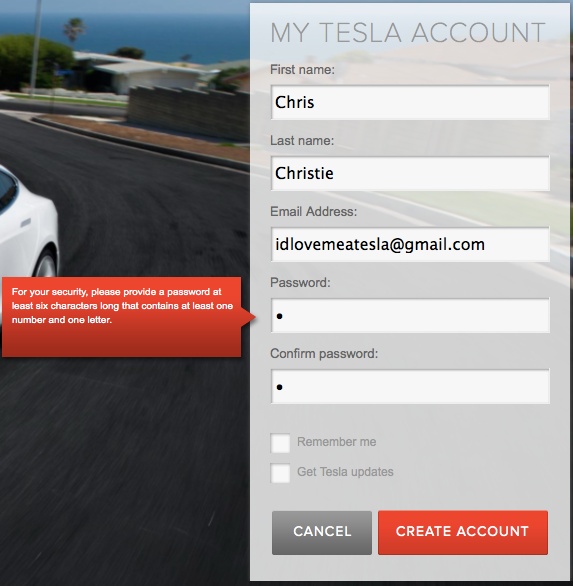

It is common knowledge that weak passwords are a bad idea and most popular online services require that users pick a password with reasonable complexity. Otherwise, users tend to select passwords that are easily guessable and attackers exploit this situation to guess all possible combinations of passwords (also known as a “brute force” attack) to gain access to the victim’s account. As shown in Figure 6-7, Tesla’s older website enforced a password complexity of 6 characters containing 1 letter and 1 number. This allowed for weak passwords such as “password1”, “Tesla123”, and so on. According to a survey, “123456” is one of the most common passwords while “abc123” is the 14th most common password (and this would pass Tesla’s complexity requirement). Furthermore, Tesla’s website (and iPhone app as shown in Figure 6-8) did not originally enforce any password lockout policy, which allowed a potential attacker to guess a target’s password an unlimited number of times. Once the attacker is able to guess the password, he or she can find the physical location of the car by using the iPhone App. The attacker can also unlock the car, start it, and drive the car using the iPhone App!

Figure 6-7. Password complexity requirement of 6 characters inclusive of 1 letter and 1 number

Tesla updated its password complexity requirements in April 2014 to require a minimum of 8 characters containing 1 letter and 1 number. According to the password survey, the 25th most common password of “trustno1” would qualify for this requirement. Tesla also implemented a password lockout policy that locks a given account when 6 incorrect login attempts are made. When an account is locked out, the user can request a password reset link to be emailed to his or her email account on file.
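The effect of both complexity rules is easy to check mechanically. The Python sketch below encodes the rule as described in the text (a minimum length plus at least one letter and one number) and a counter for the lockout after 6 incorrect attempts. The function and class names are hypothetical, and this is a reading of the stated policy, not Tesla’s actual implementation.

```python
import re


def meets_policy(password: str, min_length: int) -> bool:
    """True if the password has the minimum length, one letter, and one digit."""
    return (
        len(password) >= min_length
        and re.search(r"[A-Za-z]", password) is not None
        and re.search(r"\d", password) is not None
    )


class LockoutCounter:
    """Locks an account once `limit` consecutive incorrect logins are recorded."""

    def __init__(self, limit: int = 6):
        self.limit = limit
        self.failures = 0

    def record_failure(self) -> bool:
        """Count one failed attempt; return True if the account is now locked."""
        self.failures += 1
        return self.failures >= self.limit

    def record_success(self) -> None:
        """A successful login resets the failure count."""
        self.failures = 0
```

Under the older rule, `meets_policy("abc123", 6)` holds, and under the newer rule `meets_policy("trustno1", 8)` holds, which is the point of the survey comparison: a policy of this shape still admits passwords that appear on lists of the most common choices.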

Figure 6-8. Tesla iPhone App

Tesla’s increased password complexity requirement and lockout policy may deter some attackers, but it is not enough to stop determined attackers who can still employ traditional tactics such as phishing to obtain the password. All they would have to do is set up a website that looks like the legitimate Tesla website and lure car owners to submit their credentials. Phishing is relatively easy to carry out and thousands of individuals fall prey to phishing attacks on a daily basis. In 2011, a phishing attack compromised the cryptographic keys of the RSA SecurID product, ultimately leading to the compromise of data from Lockheed Martin, one of the largest military contractors. In 2013, a phishing attack led to the compromise of 110 million customer records and credit cards at Target.

Communication between the Tesla iPhone App and the Tesla cloud infrastructure has been documented by the Tesla Model S API project. The iPhone App connects to the server at portal.vn.teslamotors.com to authenticate and authorize the user based on credentials. Once logged in, the iPhone App can connect to this server to issue commands (such as to unlock the car) and receive information about the car (such as the car’s location). Malicious users can also use this service to automate their work. Assume a situation where an attacker has been able to capture a few hundred credentials of Tesla owners. The attacker can write a simple script that can use the API to quickly find the location of all the cars and unlock them:

1. Log in to the stolen account by submitting a request to /login and populating the user_session[email] field with the victim’s email and the user_session[password] field with the password.

2. Submit a request to /vehicles to obtain a list of all Tesla cars associated with the victim’s account.

3.

4.

It is evident that single-factor authentication of just a username and password, even with password complexity requirements and account lockout policies, is not sufficient to protect the security of a vehicle, since simple and traditional phishing attacks can allow a malicious user to locate, unlock, and even start the car. Also consider the case where an attacker has temporary access to the victim’s email. The attacker can simply request a password reset from the Tesla website and get hold of the user’s Tesla account. Take a moment to consider the impact of this situation: attackers that have compromised the email of a Tesla owner can locate and steal the car.
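One common way to add a second factor is a time-based one-time password (TOTP, RFC 6238), which is built on the HMAC-based one-time password of RFC 4226. The text does not say which second factor Tesla should adopt, so the Python sketch below is only an illustration of the idea: the `login_allowed` helper and its parameters are hypothetical, while `hotp` and `totp` follow the two RFCs using SHA-1.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as specified in RFC 4226."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step count."""
    return hotp(secret, unix_time // step, digits)


def login_allowed(password_ok: bool, submitted_code: str,
                  secret: bytes, unix_time: int) -> bool:
    """Hypothetical check: the password AND the current code must both match."""
    return password_ok and hmac.compare_digest(submitted_code, totp(secret, unix_time))
```

With a check of this kind in front of critical commands, a phished or reused password is no longer enough by itself, because the attacker would also need the per-device secret that generates the codes.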

Users have a tendency to re-use their credentials across online services. This creates a situation where an attacker that has compromised a major website can try the same credentials on the Tesla website and iPhone app. We also see major password leaks on a daily basis, which are easy to find by way of projects like LeakedIn that collect and report on credentials that have been publicly exposed. An attacker can easily take usernames and passwords from such leaks and attempt to log in through the Tesla iPhone app (or automate the steps described above) to locate and unlock cars.

This sets a new perspective on how traditional attack vectors can be abused not only to gain access to the victim’s online information such as email and instant messages, but also to locate and steal a luxury car. Yet again, the point here is that an IoT device capable of going 0-60 miles per hour in 3.2 seconds should not be vulnerable to traditional attacks that are a result of single factor authentication. We also know that bot-nets relating to malware are always incorporating new methods to locate and pillage user information. If companies like Tesla continue to implement controls such as traditional username and password based authentication, it is quite likely that malware authors will look for and capture these credentials. Since particular strains of malware can compromise millions of laptops and desktops, this will create a situation where a significant number of connected vehicles may be compromised and remotely accessible by bot-net herders who may be located anywhere in the world.

Social Engineering Tesla Employees and the Quest for Location Privacy

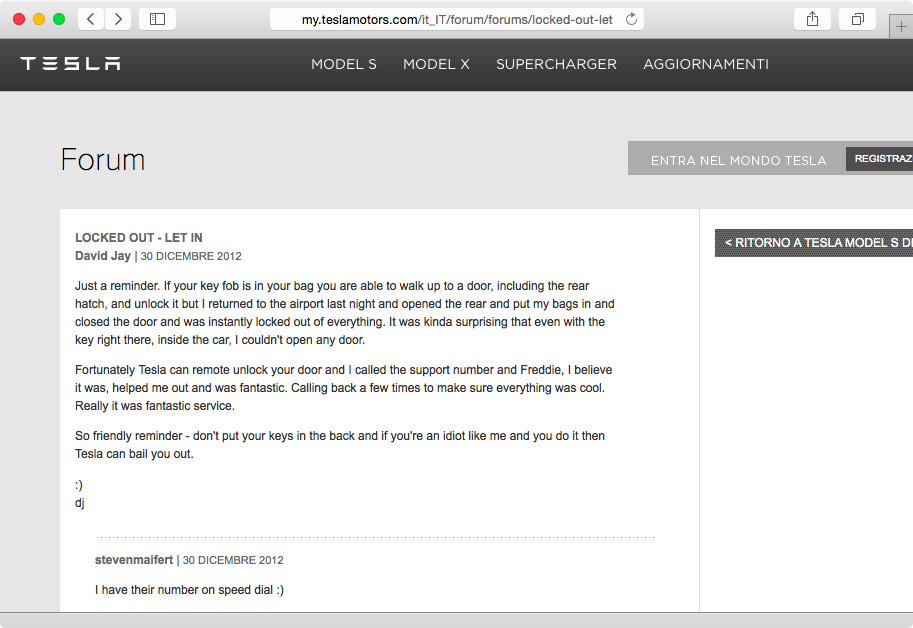

It’s tough luck for most people who forget their car keys or lock themselves out. Tesla owners can unlock their cars using the iPhone App in such cases. As shown in Figure 6-9, they can also call customer service and request that their cars be unlocked when they are unable to use their iPhones or other options are not available.

Figure 6-9. Tesla customer service can unlock cars remotely

The ability of Tesla employees to unlock cars remotely is certainly helpful to customers, but it is not clear how customer service is able to authenticate legitimate car owners. Tesla has not published actual guidelines on exactly what information is required for verification. This could create a situation where individuals may attempt to social engineer Tesla customer support to gain access to the car.

It is also unclear what background checks Tesla employees are subject to prior to being given the power to unlock any Tesla car. Uber, the app-based cab company, recently faced scrutiny for violation of its customers’ privacy by employees who had access to all customers’ data (a capability internally known as “God View”), including where they were picked up and where they were dropped off. In fact, Uber bragged on its blog about being able to identify individuals who travel to locations late at night to engage in “frisky” behavior; the post has since been taken down, but an archived version is available. Since the Model S is always connected via 3G, Tesla can easily collect information on where every car is at any given time. Yet, Tesla has not communicated what they have done to make sure only authorized employees have access to the data and how stored location data is secured against external entities who may seek to gain unauthorized access to Tesla’s technology infrastructure.

Handing Out Keys to Strangers

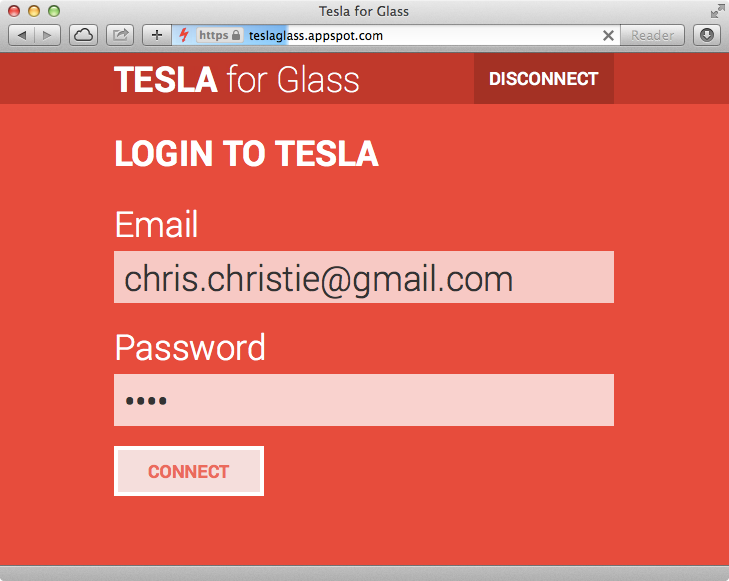

The Tesla iOS App uses a web based API to communicate and send commands to the car. Tesla has not intended for this API to be directly invoked by 3rd parties. However, 3rd party apps have already started to leverage the Tesla API to build applications. For example, the Tesla for Glass application lets users monitor and control their Teslas using Google Glass. In order to set up this application, Google Glass owners have to authorize and add the app. Once this step is complete, the user is redirected to a login page as shown in Figure 6-10. On this page, the user enters the credentials they use to log in to their Tesla account and the iOS app.

Figure 6-10. Tesla for Glass login page

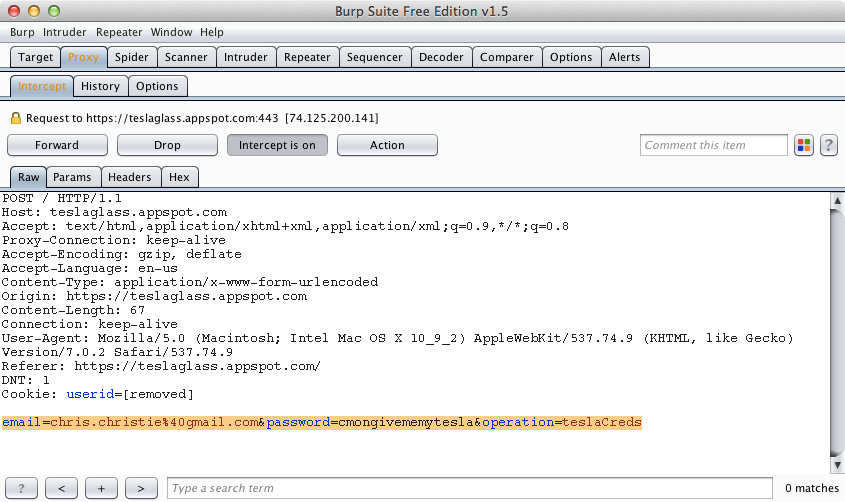

When the user enters his or her login information and clicks on “CONNECT”, the username and password are sent to a third party server (teslaglass.appspot.com) as shown in Figure 6-11! This is basically the electronic equivalent of handing one’s car key to a complete stranger!

Figure 6-11. Tesla website credentials are collected by third party app

TIP

The screenshot in Figure 6-11 depicts the Burp Suite tool. This is a free tool that can be used as a proxy server to capture and modify HTTP content. In this case, we have used it to capture the HTTP request to teslaglass.appspot.com to figure out the actual content being transmitted.

In other words, the “Tesla for Glass” application is not written or sanctioned officially by Tesla, yet it receives the actual credentials of users that choose to use it. This presents the risk of malicious third party applications where third party application owners may abuse this situation to collect credentials of Tesla accounts. As we’ve seen before, these credentials can allow anyone to locate the cars associated with the account, unlock them, and even drive the car.

Another risk imposed by this situation is the possibility of the third party infrastructure being compromised. This issue has been raised in the community by George Reese. Elon Musk has confirmed that Tesla has plans to eventually release an SDK for 3rd party developers. It is likely that the Tesla sponsored solution will include an SDK, access to a remote API, a local sandbox, OAuth-like authorization functionality, and a vetting process that draws inspiration from the Apple App Store.

Perhaps Tesla cannot be explicitly and fully blamed for its customers handing over their credentials to third parties. However, it is the nature of traditional password based systems to give rise to situations where this becomes an issue. Rather than place the blame on car owners (who are in most cases broadcasting their credentials to third party applications unintentionally), the only way this issue can be remedied is by Tesla offering an ecosystem where the secure development and vetting of applications is defined and encouraged.
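The value of OAuth-like authorization is that a third party application holds a limited, revocable token instead of the owner’s password. The Python sketch below is a toy model of that idea only; the `TokenIssuer` class and the scope names are hypothetical and do not describe any Tesla API or the OAuth protocol itself.

```python
import secrets


class TokenIssuer:
    """Toy authorization server that hands out scoped, revocable tokens."""

    def __init__(self):
        self._tokens = {}  # token -> (owner, allowed scopes)

    def issue(self, owner: str, scopes) -> str:
        """Create a random token limited to the scopes the owner approved."""
        token = secrets.token_urlsafe(32)
        self._tokens[token] = (owner, frozenset(scopes))
        return token

    def revoke(self, token: str) -> None:
        """The owner can withdraw a third party's access at any time."""
        self._tokens.pop(token, None)

    def is_allowed(self, token: str, action: str) -> bool:
        """True only if the token exists and was granted this action."""
        entry = self._tokens.get(token)
        return entry is not None and action in entry[1]
```

In this model, an application granted only a hypothetical `read_charge_state` scope could display battery information but could not unlock the doors, and a compromise of the third party’s servers would expose tokens that the owner can revoke rather than the account password.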

Or Just Borrow Someone’s Phone

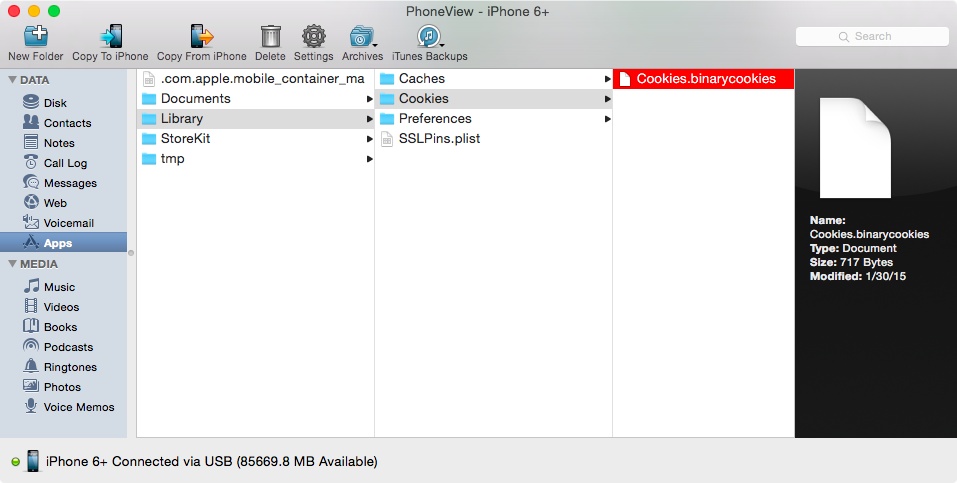

The Tesla iOS app stores a session token obtained from successful authentication with the API in the Library/Cookies/ directory within the App in the file called Cookies.binarycookies. As shown in Figure 6-12, anyone with physical access to a Tesla owner’s iPhone can grab this file using a tool such as PhoneView.

Figure 6-12. The Cookies.binarycookies file on the iPhone contains the authentication token.

Anyone with temporary access to a Tesla owner’s phone can steal the content of this file to make direct requests to control the API functionality. The value of this session token has been documented to be valid for 3 months at a time.

The probability of this issue is low because it requires physical access to the phone. Note, however, that unlike temporary access to a physical key (the role of which is played by the phone), the potential malicious entity will have prolonged access to the functionality even after returning the phone.

Yet again, the risk posed to owners is due to the reliance on traditional username and password credentials, which are likely to rely upon validated session tokens such as these so that users don’t have to enter their password every time they launch the iPhone app.

One simple and elegant way to improve this situation is for Tesla and other car manufacturers to leverage built-in authentication and authorization functionality in operating systems such as Apple’s iOS. The Touch ID fingerprint sensor in the most recent iPhone models securely saves partial fingerprint data that can be easily and quickly verified. Apple has opened up the use of the Touch ID API to third party developers. Tesla can and should use this framework to further protect the security of their owners by requiring the use of Touch ID for critical use-cases such as unlocking and starting the car.

Additional Information and Potential Low Hanging Fruit

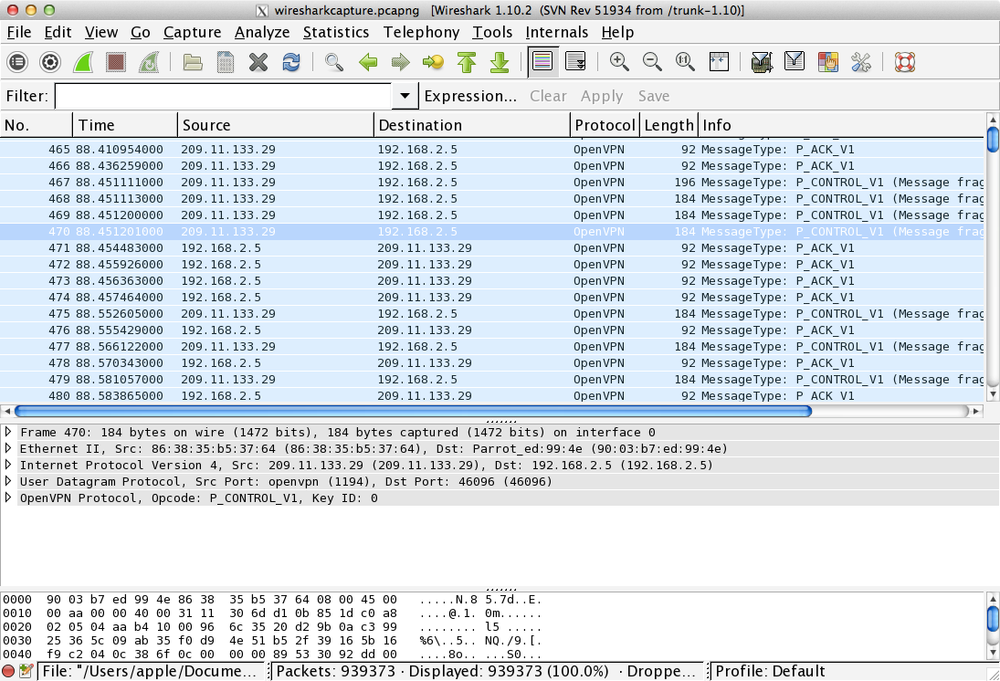

We know that the Model S maintains a 3G connection to the Tesla infrastructure. The car can also hop on to a local WiFi network, which makes it easier to intercept the network traffic that is travelling outbound from the car to Tesla. As shown in Figure 6-13, the car uses the OpenVPN protocol to encrypt network traffic between the car and the Tesla servers.

Figure 6-13. Network capture of outbound connection from Tesla Model S on Wi-Fi

Earlier in the chapter, we looked at how researchers in San Diego and at the University of Washington were able to exploit a condition where the car answered incoming phone calls instead of connecting outbound to a trusted destination. The use of OpenVPN by Tesla to initiate an outbound connection to a known service is more secure, yet this area is open to further research and may lead to security and privacy issues depending upon a detailed analysis of the configuration. The outgoing connection using OpenVPN can be configured using pre-shared keys, a username and password, or certificates. It will be interesting to see where in the internal file system this information is located. Once this information is obtained, a potential intruder could test the internal network infrastructure of the OpenVPN end-point and also the integrity of how software updates are performed.

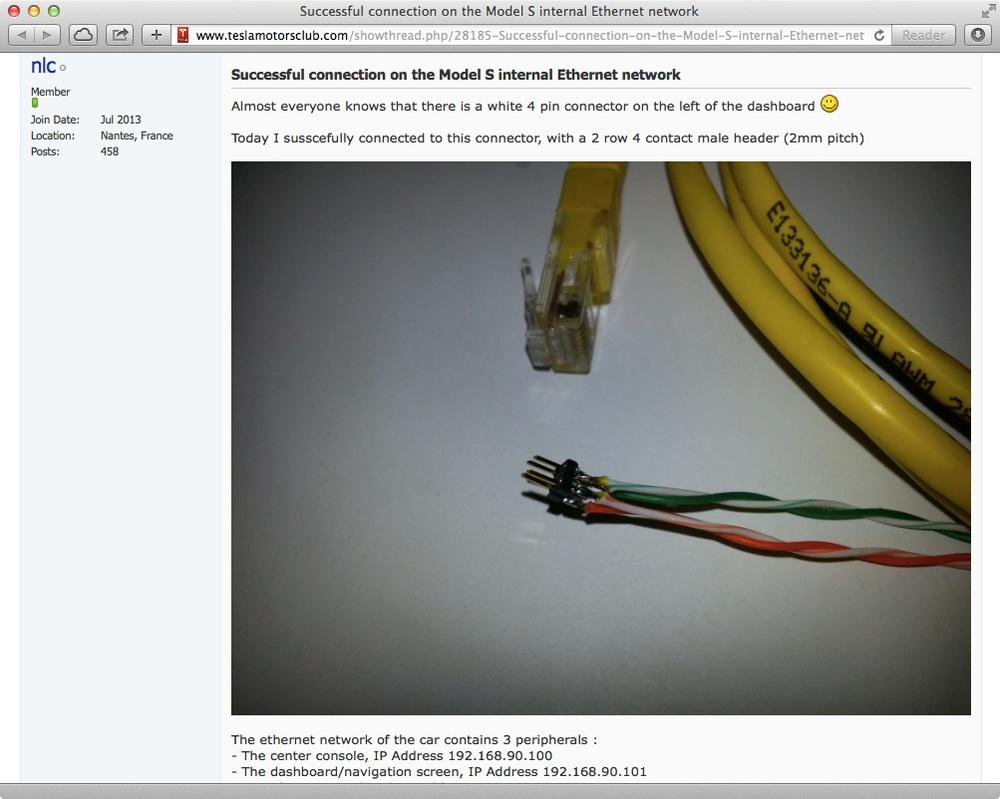

In addition to 3G and WiFi, the Model S also has a 4-pin connector on the left of the dashboard. An M12 to RJ45 adapter can be used to connect a laptop to this port. Users on the teslamotorsclub.com forum have reported various information about the internal network after having plugged into it, as shown in Figure 6-14.

Figure 6-14. Forum discussion about Tesla Model S’ internal network

Upon scanning the internal network after connecting through the RJ45 adapter, the following IP addresses and services have been found to exist in the Model S:

§ Potential center console with IP address of 192.168.90.100 and the following services open:

22/tcp open ssh

53/tcp open domain

80/tcp open http

111/tcp open rpcbind

2049/tcp open nfs

6000/tcp open X11

MAC Address: FA:9E:70:EA:xx:xx (Unknown)

§ Dashboard screen with IP address of 192.168.90.101 and the following services open:

22/tcp open ssh

111/tcp open rpcbind

6000/tcp open X11

MAC Address: 36:C4:1F:2A:xx:xx (Unknown)

§ Another device with IP address of 192.168.90.102 with the following services open:

23/tcp open telnet

1050/tcp open java-or-OTGfileshare

MAC Address: 00:00:A7:01:xx:xx (Network Computing Devices)

§ The SSH service on 192.168.90.100 has the banner of SSH-2.0-OpenSSH_5.5p1 Debian-4ubuntu4

§ The DNS on 192.168.90.100 is of version dnsmasq-2.58

§ The HTTP server on 192.168.90.100 appears to expose /nowplaying.png which is the album art displayed on the dashboard.

§ The NFS service on 192.168.90.100 exposes /opt/navigon directory which contains the following structure:

dr-xr-xr-x 5 1111 1111 4096 Mar 21 2013 .

drwxrwxrwt 20 root root 20480 Mar 18 17:01 ..

dr-xr-xr-x 4 1111 1111 4096 Mar 21 2013 EU (Contains /maps and /data)

dr-xr-xr-x 2 1111 1111 4096 Mar 21 2013 lost+found

-r--r--r-- 1 1111 1111 7244 Mar 21 2013 MD5SUM-ALL

dr-xr-xr-x 2 1111 1111 4096 Mar 21 2013 sound

-r--r--r-- 1 1111 1111 150 Mar 21 2013 VERSION

/VERSION:

UI/rebase/5.0-to-master-238-g734c31d7,EU

NTQ312_EU,14.9.1_RC1_sound.tgz

build/upgrade/mknav-EU-ext3.sh

It is fascinating that the internal IP network in the Model S contains hosts that appear to be running the Linux operating system (Ubuntu).

There have not been any public reports of these services being abused. However, this information is worth mentioning since it gives us perspective on the IP based architecture in the Model S. It is likely that additional researchers as well as malicious parties will be drawn to this IP based internal network for potential attack vectors and vulnerabilities that may lie undiscovered.
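Listings in the format shown above are simple to triage with a script. The Python sketch below parses lines of the form `2049/tcp open nfs` and flags services that commonly deserve a closer look. The choice of which services to flag and the notes attached to them are assumptions made for illustration; they are not findings about the Model S.

```python
import re

# Hypothetical triage list: services that often warrant closer review.
RISKY_SERVICES = {
    "telnet": "cleartext remote login",
    "nfs": "network file shares may be exported without authentication",
    "X11": "display server reachable over the network",
}

# Matches lines such as "2049/tcp open nfs".
PORT_LINE = re.compile(r"^(\d+)/tcp\s+open\s+(\S+)$")


def flag_services(scan_output: str):
    """Return (port, service) pairs from the listing that are in RISKY_SERVICES."""
    findings = []
    for line in scan_output.splitlines():
        match = PORT_LINE.match(line.strip())
        if match and match.group(2) in RISKY_SERVICES:
            findings.append((int(match.group(1)), match.group(2)))
    return findings
```

Fed the center console listing from this section, the function would single out the NFS and X11 entries, which matches the observation that the NFS service exposes a readable directory.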

Auto Pilot and the Autonomous Car

In October of 2014, Tesla announced that all new Model S cars will contain hardware to enable the “Auto Pilot” functionality as a software update that will be issued to the cars in the coming months (other cars have similar features; however, we will stick to Tesla since the Model S is our focus). Here is the description from Tesla:

“The launch of Dual Motor Model S coincides with the introduction of a standard hardware package that will enable autopilot functionality. Every single Model S now rolling out of the factory includes a forward radar, 12 long range ultrasonic sensors positioned to sense 16 feet around the car in every direction at all speeds, a forward looking camera, and a high precision, digitally controlled electric assist braking system.

Building on this hardware with future software releases, we will deliver a range of active safety features, using digital control of motors, brakes, and steering to avoid collisions from the front, sides, or from leaving the road.

Model S will be able to steer to stay within a lane, change lanes with the simple tap of a turn signal, and manage speed by reading road signs and using active, traffic aware cruise control.”

Tesla is careful to note that this feature is not completely autonomous, to quote:

“Our goal with the introduction of this new hardware and software is not to enable driverless cars, which are still years away from becoming a reality. Our system is called Autopilot because it’s similar to systems that pilots use to increase comfort and safety when conditions are clear. Tesla’s Autopilot is a way to relieve drivers of the most boring and potentially dangerous aspects of road travel – but the driver is still responsible for, and ultimately in control of, the car.”

And to sum it up:

“The Autopilot hardware opens up some exciting long term possibilities. Imagine having your car check your calendar in the morning (a feature introduced in Software v6.0), calculate travel time to your first appointment based on real time traffic data, automatically open the garage door with Homelink, carefully back out of a tight garage, and pull up to your door ready for your commute. Of course, it could also warm or cool your car to your preferences and select your favorite morning news stream.”

These new features are quite wonderful and are likely to decrease accidents in cases where drivers may be distracted. Companies such as Google are working on completely autonomous cars that don’t even have a steering wheel. It is evident that the “Auto Pilot” ability will take us towards a future where completely self driving vehicles will be around us. As we look into the future, the following new risks are likely to be introduced into the car security ecosystem, and their results are bound to be fascinating to analyze:

§ Legal precedent and liability: Tesla has explicitly mentioned that the driver is “responsible for, and ultimately in control of (the car)”. As we move into completely autonomous driving, it will be interesting to see which parties are found to be liable for damages and accidents in the future. Could the car company be held liable if an accident is caused by a hardware or software error? The legal terms and conditions, as well as the difference of legal opinion between the states in the USA and other countries, combined with actual mishaps, are likely to shape our understanding of liability and ultimate responsibility.

§ The impact of software bugs: As consumers, we have all come across a software glitch at some point in our lives that may have delayed our online shopping, prevented access to email, or perhaps the ability to print a boarding pass. Now imagine a software glitch in a feature such as the “Auto Pilot” which has the ability to conduct an actual lane change. Such a glitch could have physical consequences to the passengers of the car and nearby cars that can result in physical harm.

§ Vehicle to vehicle communications: As consumer cars become truly autonomous, they will need to implement a peer to peer communication protocol allowing nearby cars to negotiate turns, manage the flow of traffic, and alert each other to road conditions. There are two buzz-words in the industry today that attempt to capture this need, namely V2V (Vehicle to Vehicle) and V2I (Vehicle to Infrastructure). The combination of V2V and V2I is commonly referred to as V2X. The US Department of Transportation (DOT) and the National Highway Traffic Safety Administration (NHTSA) have set up a website to announce upcoming and proposed laws that automotive manufacturers will be expected to adhere to. As more and more vehicles communicate with each other and with the underlying infrastructure provided by the government (to manage traffic and collect tolls, for example), the attack surface available to malicious entities will increase. In response to government mandates, car manufacturers are going to design solutions that may initially contain security vulnerabilities. The NHTSA has issued a proposal to obtain feedback from the industry on how to securely implement a V2V communication system. This attack surface is going to be attractive to the entire spectrum of actors: hardware and software tinkerers, pranksters, nation states, and groups that engage in terrorism.

The Tesla Model S is a great car and a fantastic product of innovation. Owners of Tesla as well as other cars are increasingly relying on information security to protect the physical safety of their loved ones and their belongings. Given the serious nature of this topic, we know we can’t attempt to secure our vehicles the way we have attempted to secure our workstations at home in the past by relying on static passwords and trusted networks. The implications to physical security and privacy in this context have raised stakes to the next level.

Tesla has demonstrated fantastic leaps in innovation that are bound to inspire other car manufacturers. It is hoped that this chapter will encourage car owners to think deeply about doing their part, and companies like Tesla to have an open dialogue with their owners on what they are doing to take security seriously.

Conclusion

Our reliance on cars for our livelihood is unquestionable. Besides being in control of our own vehicle, we also rely on the faculties of other drivers on the road and the safety features of the cars they are driving. In this chapter, we’ve seen firm evidence of the security mechanisms (or lack thereof) designed into cars that use and depend on wireless communication to support privacy and security features that are important to passengers.

In the case of the TPMS analysis, it is evident that fundamental security design principles were not baked in to the design of the architecture. It is quite startling to be able to send rogue tire pressure alerts to nearby cars and to be able to abuse the design to potentially track particular vehicles thereby invading the privacy of citizens who are likely not even aware that their cars are using insecure mechanisms to transmit tire pressure data.

The ability to remotely take over a telematics ECU is quite phenomenal. We’ve seen that the CANBus architecture explicitly trusts every other ECU, so a simple, successful cellular attack can be lethal, giving the spectrum of malicious actors control of the car. It is unnerving to find that most of the vulnerabilities were a result of basic software mistakes such as buffer overflows, the reliance on obscurity, and improper implementation of cryptography.

The Tesla Model S is indeed a computer on wheels, fully electric and always connected to the Internet. At 691 horsepower, the power of this beast probably makes it one of the fastest consumer grade Internet of Things devices available for purchase. Despite being a pleasure to drive and lauded as one of the most innovative cars ever produced, owners should be concerned that this luxury vehicle provides remote unlock and start functionality which is protected by a single-factor password system that the security community has long known to be susceptible to social engineering, phishing, and malware attacks. This can lead to a situation where cars such as the Tesla may be exploited by a myriad of malicious actors such as pranksters or state sponsored activists for various motives. In addition, it is unclear to owners what mechanisms and processes are being employed by Tesla to prevent social engineering of their own employees and also to protect the location privacy of car owners.

Cars in the market today are vulnerable to software flaws that can be mitigated by the inclusion of security design and analysis early on and throughout the product development process. We ought to pay careful attention and strive to remediate issues that can put our physical safety and privacy at risk. It is a safe guess that our exposure to the attack surface posed by connected cars, which are taking us towards the world of autonomous driving, is bound to multiply should we not take considerable action now.