Understanding Context: Environment, Language, and Information Architecture (2014)

Part II. Physical Information

Chapter 6. The Elements of the Environment

The earth is not a building but a body.

—WALLACE STEVENS

Invariants

WE’VE LOOKED AT HOW our basic functions of perception and cognition work in an environment and how affordances form that environment. Given that context is largely about how one thing relates to another thing, let’s now look at how we perceive elements and their relationships.

Luckily, our friend James J. Gibson, the ecological psychology theorist, created an elaborate yet straightforward system describing the structures that make environments. We won’t be exploring all its details, but there are some major portions we can borrow for making a sort of building-block kit for purposes of design. These elements start with the most basic: what Gibson calls invariants.

Invariants are stable properties of the environment; they persist unchanged in the midst of change.[112] These are not permanent properties in the scientific sense of permanence.[113] A hill might erode, a fallen tree might rot, and the sun will eventually burn out, but they still involve invariants because they have properties that have been “strikingly constant throughout the whole evolution of animal life.”[114] Invariance, then, is about the way the animal perceives the environment, not an objective measurement of permanent structure.

The only reason we can do anything is that some parts of our environment are stable and persistent enough to afford our action. The laptop keyboard I’m typing on right now is solid, with little square keys that spring up and down in response to my fingers. The keys wouldn’t be able to do this if the body of the laptop and the bed of the keyboard were made of, say, helium. Only because it’s a solid surface with a particular kind of shape can this activity take place.

Likewise, when I get up from my writing spot and go to make a cup of coffee, I can get to the kitchen because the surface of my floor is made of wood, which is solid and supportive of my weight. That is, it’s enough like the ground my species evolved on that I can walk on it, as well.

There are also walls around me, and they have openings that are windows and doors. The doorway between my current writing spot and the kitchen is large enough for me to walk through; I know this because I’ve walked through many other doors and have a good feel for which ones will afford passage and which ones won’t. I’ve also grown up in a culture where conventional doorways afford walking through for someone of average size such as myself. I take them for granted as persistent structures in the built world, the way a squirrel takes for granted that trees will afford climbing.

Perception itself originates with our perceiving of invariants.[115] “The persisting surfaces of the environment are what provide the framework of reality.”[116] We can intellectually know that an earthquake or a hurricane isn’t actually changing “reality,” but at an ecological level, these rare events disrupt our most deeply embodied knowledge of how reality should work.

Invariants exist along a wide scale of the environment, from the most basic components to large structures. The invariant properties of stone are such that a human can pick up a fist-sized rock and throw it at a bird’s nest or use it to bash open a walnut. The same properties of stone make it so that a mountain serves as a landmark for generations of people, not to mention a source of fresh water and a habitat for millions of creatures that evolved there. But, invariance is ultimately about individual perceiving agents and how structure persists in their environment.

Context is impossible to comprehend without invariants. We understand something only in relation to something else, and the “something else” has to be invariant enough to be “a thing” to begin with. (Or, as Richard Saul Wurman so often says, “You only understand something relative to something you already understand.”)[117]

I know I am outside my house when I can see the outside of the structure and look up and notice that I am on uncovered ground, and the sky is above me. If these structures were not invariant, I’d have no idea where I was (not to mention they would no longer be structures).

Invariance is a simple idea that we don’t think about much because it’s so intrinsically a part of our world. It’s why we can say something “is” or that we are “here.” Our cognition evolved in a world in which invariance just comes along, automatically, with the places and objects we experience.

Examples of Invariants

There are dozens of invariants explored in Gibson’s work, but here are just a few:

Earth and sky

The earth “below” and the air “above.” The ground is level and solid, affording support for walking, sitting, and lying down. The air is the opposite of the ground, affording locomotion, allowing light and sound to penetrate, and giving room for living things to grow and breathe. In Gibsonian terms, this earth/sky pairing forms the most basic invariant human-scale structure, a shell within which all other structures are arranged, comprehended, and acted upon. Humans didn’t evolve in outer space, so like it or not, we have ingrained in us these structures of earth and sky, and of what is up or down.

Gravity

We tend to think of gravity as a mathematically expressed property of physics, but our bodies don’t comprehend gravitation as a scientific concept. Long before Newton, our bodily sense of up and down was written into our DNA through natural selection. Our embodied experience of gravity isn’t concerned with purely elastic elementary bodies interacting in space; the planet’s actual environment is made mostly of surfaces that don’t behave much at all like the mathematical ideals of Newtonian physics.[118]

The occluding edge of one’s nose

Human vision is framed most of all by the edge of the human nose; “of all the occluding edges of the world, the edge of the nose sweeps across the surfaces behind it at the greatest rate whenever the observer moves or turns his head,” providing an “absolute base line, the absolute zero of distance-from-here.”[119] In English, we often use the embodied expression “right in front of my nose” to mean that something is as present and obvious as possible (even though we may not notice it). The edge of the nose is, to our vision, the purest instantiation of what “here” means. This reminds us of how our bodies are a crucial element of our environment. The perception-action loop depends on the “landscape” of the body as much as on any other invariant feature of the experienced world.

Other, more complex invariants Gibson describes run the gamut from “the unchanging relations among four angles in a rectangle” to “the penumbra of a shadow” and “margins between patches of luminance.”[120] These are as important as the aforementioned more basic invariants, because they set the foundation for how we know a table is within our reach or whether we can fit through a doorway. A more detailed inventory of Gibson’s invariants is beyond our scope here, but they are central to the key challenges of much industrial and interaction design.

Invariants significantly affect how learnable an environment is. They are the basis of what we tend to call “consistency.” In the natural world, we come to depend on the patterns we learn about what different substances and objects do and don’t do; we quickly pick up on the rules of cause and effect. It’s crucial to reemphasize, though, that invariants are not necessarily permanent, “frozen” structures; they are invariant because the perceiver experiences them that way, in contrast to the changing parts of the environment: variants.[121]

Variants

In contrast to invariants, variants are the environmental properties that tend to change in our perception. The shadows cast by a tree will change as the direction of sunlight changes through the day. The shape of water changes as wind interacts with it, or as it is poured into a differently shaped container. Although these changes might be predictable and follow some consistent patterns, we perceive them as changing in relation to the relatively invariant surfaces and objects around them. Elements all around us have variant properties that we don’t count on for affording action. Imagine a tribe of humans that lived for generations on an island where most of the ground was actually quicksand that was impossible to perceive as such without stepping on it. One could be sure they would walk differently than the rest of us, testing each step as they went.

Compound Invariants

Invariants can be simple properties of simple structures, such as the hardness of a substance. But, there are also combinations—or compound invariants—that we experience as a singular “unit” of invariant properties.[122] A bicycle is a combination of invariants providing many affordances: pedals support feet, but they also move to pull a chain, and the chain turns a wheel; the seat supports my weight; the handlebars support my body leaning forward, but also afford turning the front wheel for steering. Yet, as soon as I’ve grown used to riding a bicycle, I don’t approach the vehicle as a pedal-handlebar-seat-wheel-turning-etc. object, but as an object that affords “riding a bicycle.” The invariant affordances combine into a unit that I learn to perceive as a single invariant entity.

In the science literature, this idea of compound invariants is still being debated and fleshed out, but I think it’s safe for us to think of it as a basis for much of what we make with technology. Most of what we design is more complex than a stone or stick; it combines invariants and variants in multiple ways. As we’ll see, with language and software, invariants can be compounded to the point that they’re not directly connected to physical properties at all; rather, they’re part of a self-referential system distantly derived from our physical-information surroundings.

Invariants Are Not Only About Affordance

Here’s an important point to make regarding invariants and affordances: although affordance involves perception of invariant information, not all invariant information is about affordances. We are using the term affordance for physical information. Yet, we encounter all sorts of other invariant information that is meaningful to us without being directly perceived. A stop sign means “stop,” but its affordances have only to do with how the object is visible or how it is sturdy enough to lean against while waiting to walk across the street. The meaning of “stop” is one of the most stable invariants in English (and red, octagonal stop signs have a conventional, invariantly informative presence in many non-English-speaking places, as well). But the most important meaning of the stop sign is not found in its physical affordances. Because digital environments depend on such continuities of meaning, this distinction between language-based invariants and physical invariants is central to the challenges of context in a digital era.

Digital Invariants

In digital interfaces and objects, we still have need of invariants because perception depends on them. However, this sort of information doesn’t naturally lend itself to the stability we find in physical surfaces and objects. The properties of user interfaces need to be consistent for us to learn them well. We hunger for stable landmarks in the often-ambiguous maze of digital interfaces.

The Windows operating system’s innovation of a Start button was, in part, a way to offer an invariant object that would always be available, no matter how lost users might become in the complexities of Windows. Likewise, websites have retained the convention of a Home page, even in the age of Google, when many sites are mainly entered through other pages. It provides an invariant structure one can cling to in a blizzard of pages and links.

These examples exist partly because the rest of these environments tend to be so variant and fluid. Digital technology gives us the capability to break the rules, in a sense, creating variants where we expect invariants. There are huge benefits to this physically unbound freedom; we can handle scale and make connections that are impossible in the physical environment. Folders can contain more folders to near-infinite capacity; a desktop can multiply into many desktops. But there are equally substantial dangers and pitfalls.

For example, across platforms, actions can have significantly different consequences: in one mobile app, double-tapping a photo zooms in on it, whereas in another, the same gesture marks the photo as “liked,” even if you find the photo horrible or offensive. Even within a single platform, the rules can radically change. In Facebook, it seems every few months the architecture of privacy morphs again, disrupting the invariants users learned about who could see what information and when. This is not unlike waking up one day to find the roof removed from one’s house. If these invariants were created by physical affordances, they couldn’t change so radically. We know software isn’t physical. But because perception depends upon invariant structure, we see it and use it whenever it seems to be offered to us, even if a software code release can upset those invariants in a moment’s time.

These differences and disruptions are not the sorts of behaviors we evolved to perceive with any accuracy. They are instead the black ice, or quicksand, of digital objects and places.

The Principle of Nesting

All these invariant components of the environment are perceived in relation to one another, and the principle by which we perceive these relationships is the ecological principle of nesting. Animals experience their environment as “nested,” with subordinate and superordinate structures.

Nested invariants establish the persistent context within which motion and change happen, both fast and slow. As Gibson says somewhat poetically, “For terrestrial animals like us, the earth and the sky are a basic structure on which all lesser structures depend. We cannot change it. We all fit into the substructures of the environment in our various ways, for we were all, in fact, formed by them. We were created by the world we live in.”[123] The way the world is nested forms a framework for how we understand it.

Within the earth and sky, at subordinate levels, there is more nesting: “canyons are nested within mountains; trees are nested within canyons; leaves are nested within trees. There are forms within forms both up and down the scale of size.”[124] Nesting provides a framework for the agent to pick up information about the environment at different levels of scale, focusing from wide to narrow, huge to tiny.

Even without telescopes and microscopes, humans have a great deal of environmental information to pick up; the ability to learn an environment (and therefore, learning in general) might be largely due to the ability to break it down into loosely defined tiers and components, none of which exists discretely in a vacuum.[125]

Nesting versus Hierarchy

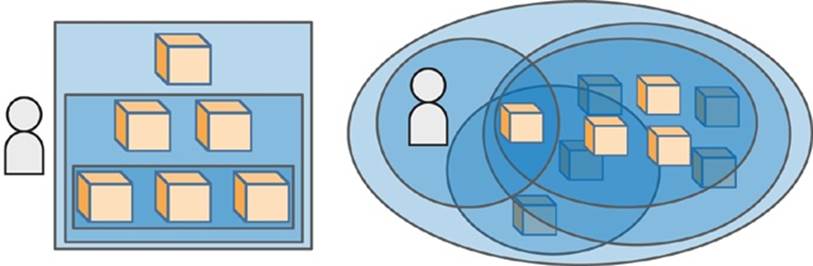

Nesting is not the same as hierarchy (see Figure 6-1), because it is “not categorical but full of transitions and overlaps. Hence, for the terrestrial environment, there is no special proper unit in terms of which it can be analyzed once and for all.”[126] Again, this is a property of how terrestrial creatures such as humans perceive the environment, not an artificially defined property like “meter” or “kilogram.” It isn’t about logically absolute schemas such as the periodic table of elements, or the mutually exclusive branching we see in strict hierarchies.

Figure 6-1. Hierarchies (left) are categorically defined, within an objective parent-child tree structure, separate from the perceiver. Ecological “nesting” (right) has elements contained within others, but in a perceiver-dependent fashion that can shift and overlap depending on the present perceptual needs of the individual.

A conventional hierarchy is about categorical definition that’s true regardless of context, whereas our ecological experience of the environment shifts depending on circumstances. A geological definition of “cave” doesn’t change with the weather. For an animal, however, a cave that provides excellent protection in dry weather might be useless during the rainy season, if its opening is angled to let water in: suddenly “inside” feels like “outside.”

Likewise, “here” and “there,” which on the face of them seem like clear opposites, can shift, because these designations depend on the nested context. You might be sitting at home, in a comfortable reading chair, from which you can see a bookcase on the far wall. You’re trying to remember if you left a particular book at work, and you wonder whether the book is there or here at home (which could mean anywhere in the house). You look up at the bookcase, see it, and realize with relief that, yes, not only is it here at home but here in the room with you, not there in some other room. Still, when you decide you want to read the book, it’s no longer here but there on the bookshelf, because now here and there are defined not by mere location but by the factor of human reach. From a geometrical point of view, nothing changed, but from an ecological point of view, many shifts in perceived structure happened in a matter of a few moments.

Digital Environments and Nesting

Interfaces can graphically represent these nested relationships with boxes and panels, outlines and background shading, with one set of information contained by another, and so on. Information architectures (which depend in part on interfaces representing this containment) also present nested structures through categories and semantic relationships.

Yet, interfaces need ways to provide invariants while still allowing users to move through structures from various angles based on need; a user might shift between different nested perspectives in the course of only a few minutes.

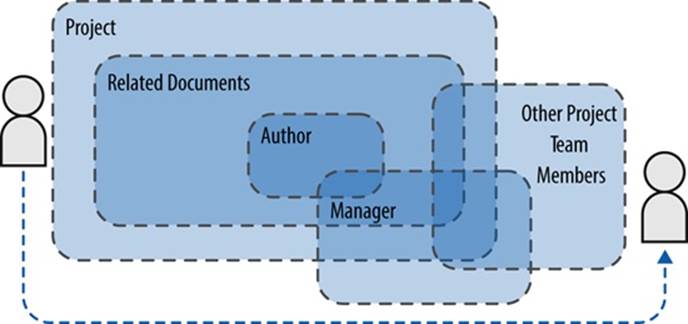

For example, Figure 6-2 presents a scenario in which an office worker might need to gather information about a prior project, including its documentation, personnel, and manager involvement. The worker might begin with his focus mainly on finding a related document, from which he discovers the name of the document’s author. He then uses the author to find who the team manager was, in order to find others who might have past knowledge of the project.

Figure 6-2. Information is nested from different perspectives for the same user, at different points in an activity

In this case, the user’s information pickup organizes itself around documents and projects first, then finding people by name, and then finding people by organizational relationship. All these structures overlap in various ways: a document can be just a document or part of a project, and the project might be related to a larger ongoing enterprise program but also related to various teams in several departments, which themselves can shift in importance depending on a worker’s cognitive activity from one task to the next.

In particular, for the work of information architecture, we have to remember that users might comprehend an information environment in a nested way, but that doesn’t mean they naturally comprehend artificial, categorical hierarchies. Learning a new hierarchy of categories depends on explicit, conscious effort. But an information environment that works well at an ecological, embodied level might seem incoherent through the lens of logical hierarchy. When online retailers add holiday-specific categories to their global navigation menus, it upsets the logic of hierarchy, but it makes perfect sense to the many shoppers who are seasonally interested in holiday gifts.

Facebook users experienced disorientation, especially in the service’s early years, as it expanded from being nested within a single university to multiple universities, then to high schools, and finally opened up to everyone. Likewise, its internal structures (and its invisible reach into other contexts, such as with Beacon) are continually “innovated” to the point at which users struggle to grasp where they and their actions are nested in the shifting fun-house of illusory invariants.

Surface, Substance, Medium

When we perceive information in the environment, we’re largely perceiving information about its surfaces, which are formed by “the interface between any two of these three states of matter—solid, liquid, and gas.” Where air meets water is the surface of a pond. Where a river meets earth, the surface is a riverbed. Where air meets earth, the surface is the ground, which, for terrestrial animals, is “both literally and figuratively” the ground of perception and behavior.[127] The structure of the environment is, in essence, its “joinery” between surfaces and substances. These are the “seams” of our world. If it were “seamless,” there would be no structure, and no perception.

These surfaces are made of substances which are substantial enough that, when environmental energy (sound, light, and so on) interacts with them, the resulting information can be perceived. We didn’t evolve to readily perceive invisible things or things that are too tiny or huge to apprehend, at least as far as physical information is concerned.

Medium refers to the substance through which a given animal moves. For a fish, it’s water. For terrestrial creatures, it’s air.[128] Another quality of a medium is that physical information can be detected through it. Solids do not allow the full pickup of information because they impede ambient energy.[129] Sure, we can sometimes hear noise through a solid wall, but only because there is air on both sides of the wall that reverberates the sound. Fill our ears and the room around us with wall plaster, and we’ll hear a great deal less.

Digital Examples

In software, we see users trying to figure out what parts of a system they can move through (medium) versus parts that do not afford action (impeding substances/surfaces). An understandable interface or information architecture provides invariant indications of where a user can go and how. Perception evolved among actual substances and surfaces, made of atoms, so even in the insubstantial realm of language and bits, it still reaches for substantial information, hoping something will catch hold.

Objects

An object is a “substance partially or wholly surrounded by the medium.” Objects have surfaces but with a topology that makes them perceivable as distinct from other surfaces.

Some objects are attached and others detached. An attached object is a “protuberance” that has enough of its own independent surface that it can be perceived to “constitute a unit.”[130] It’s topologically closed up to the point at which it is attached to a surface. An attached object can be counted, named, thought of as a thing, but still understood to be a persistent part of the place to which it’s attached. An attached object can’t be displaced unless it’s detached by some kind of force (which, once applied, then results in a detached object). Attached objects are in an invariant location, so they make up part of the invariance of the surrounding information.

Detached objects are topologically closed, independent entities. Learning to perceive a detached object is different from perceiving an attached one because it has different properties and affords different actions. A tree branch might be attached to a tree, but when we break it off, it is now detached, affording actions unavailable in its attached state. The many bumpers of a pinball machine and other structures are attached objects; the pinball is detached.

Recall how we established earlier that when we pick up an object and use it as a tool with which we extend our body’s abilities, we perceive it as an extension of ourselves.[131] A tree branch can go from being an attached object, to a detached object, to part of “me” with great ease.

Phenomenology and Objects

In some ways, embodied-cognition science has been catching up to ideas that have been explored in other disciplines for at least a century. For example, phenomenologist Martin Heidegger famously described three modes of experiencing the world:

Readiness-to-hand

When an experienced carpenter is using a hammer, the carpenter doesn’t think consciously about the hammer. Instead, the hammer becomes a natural extension of the carpenter’s action, and the carpenter “sees through” the tool, focusing instead on the material being affected by hammering. In our levels-of-consciousness spectrum, this is tacit, unconscious action, in which we no more explicitly consider the tool than we consider our finger when we scratch an itch.

Unreadiness-to-hand

When the action of hammering is disrupted by something—a slippery handle, a bent nail, a hard knot in the wood—the hammer can become “unready-to-hand.” Suddenly the carpenter must stop and think explicitly about the tool and the materials. The hammer can become less of an extension of the self at this point—no longer “seen through” but considered as an object with a frustrated or interrupted potential as a natural extension of the body.

Presence-to-hand

When looking at a hammer as “present-to-hand,” we remove it from its context as a tool or a potential extension of the self; instead, we consider it neutrally as an object with properties of size, density, color, and shape.[132] This is explicit consideration outside of the cycling between the “unreadiness” and “readiness” in the context of the tool’s affording functions. As designers, we often need to switch into this level of awareness of what we are designing: its form and specification and other aspects that the user doesn’t (or normally shouldn’t) have to worry about.

Manipulating and Sorting

A detached object can also be manipulated, considered, and organized in ways that attached objects can’t. It can be compared with another object, grouped with similar ones, and sorted into classification schemes, allowing counting and assignment of a number.[133] As we’ll see, this is what we do with the abstractions of language, with which we use words or pictures to categorize and arrange the world with signifiers rather than the physical elements themselves.

Objects with Agency

Among the world’s detached objects are animals, including humans.[134] We evolved to comprehend that these objects are special, and have a sort of agency of their own, an animus evident in their physical actions, signals, and (for humans) symbolic language. In modern life, many of us don’t encounter a lot of wild animals, but we’re familiar with pets as independent agents in our midst. Other humans interface better with us than animals (usually...), because we share more of an umwelt (environmental frame) with them, including the full capabilities of human language. Regardless of human or animal, we spend a lot of energy figuring out what these agents are up to, what they must be thinking, or what they need.

Of course, this means we ourselves are detached objects, and in moments of objective consideration, we sometimes perceive ourselves as such. Typically, though, we don’t see ourselves with the objectivity with which other agents perceive us. So, a human agent might be puzzled or troubled when an environment treats him like another object rather than as a “self.” When we find ourselves in bureaucratic labyrinths, shuttled along as if on a conveyor belt, it feels “dehumanizing”; we feel the same when digital systems communicate with abrupt sterility, or coldly drop us from what felt like a meaningful exchange. Technological systems tend to lack contextual empathy.

Digital Objects

Digital objects might be simulated things on screens or physical objects with digitally enabled properties.

Simulated objects are, for example, icons in a computer’s interface. From early in graphical-user-interface (GUI) design, the convention has been that these should be able to be moved about on a surface (such as the desktop) or placed in other container objects (folders). However, these are flat simulations on a flat screen. If they were physical objects, we would be able to determine through action and various angles of inspection whether they were attached or detached, or if they were merely painted on a surface. Digital displays don’t give us that luxury, so we have to poke and prod them to see what they do, often being surprised when what seemed an interactive object is really a decorative ornament. Like Wile E. Coyote in the Road Runner cartoons, we slam into a wall where the tunnel is only painted on.

Software also uses text as objects of a sort. Labeling our icons means we’re attaching word objects to the picture objects. But even in something like a text-based email application, or a command-line file-transfer-protocol (FTP) interface, for which there’s little or no graphical simulation, there are still commands such as “put” and “get” and “save” that indicate we are treating chunks of text as singular objects to be moved about, modified, put away, or retrieved from storage.

Sometimes, we perceive an entire interface as an object, with buttons and things that we are manipulating the way we would manipulate a physical object such as a microwave or typewriter. These objects are made up of other, smaller objects, nested together into compound objects (with compound invariants). Other times, we perceive an interface as a place (which we will discuss shortly). And often, because of the weird nature of digital information, we perceive a digital thing as both object and place at the same time, which can be just fine or very confusing, depending on how appropriately it’s expressing itself as one and the other, or the perspective the user brings to it.

As David Weinberger explains in Small Pieces Loosely Joined: A Unified Theory Of The Web (Basic Books), this has been the case with the Web for a long time: “With normal documents, we read them, file them, throw them out, or send them to someone else. We do not go to them. We don’t visit them. Web documents are different. They’re places on the Web....They’re there. With this phrase, space—or something like it—has entered the picture.”[135] Our everyday language shows how this works: we find ourselves using prepositions interchangeably in digital environments, such as “you can learn more on Wikipedia” or “I’m in Facebook right now” or “find my book at Amazon.com!” Physical structures tend to constrain the prepositional phrasing that makes sense for them. I don’t say I am “on” my house if I am inside it, but I might say I am “in” or “at” my house. Digitally driven structures are more fluid, allowing us to use words with more flexibility, based on the context of the interaction.

Other times, it’s clear that what we are using is an object, but it conflates not with place but with our bodies. When we use a desktop computer with a mouse, the cursor becomes an extension of the arm as much as our finger becomes an extension of the hand on a touch screen. Our bodies have an appetite for environmental extension, always working to find an equilibrium that allows us to be more tacit than explicit, more “ready-to-hand” than otherwise.

Digital objects are also physical objects with digital information driving some of their affordances and agency. We are increasingly surrounded by these, in our burgeoning Internet of Things. When we perceive these objects and interact with them, we’re tapping into ancient cognitive functions to comprehend what they’re up to in relation to ourselves.

But we don’t always know whether an object has agency, and such objects are often harder to comprehend than any natural creature. One wouldn’t expect one’s computer to whisper secrets to one’s friends about buying a private item from Amazon or cranking up a game on Kongregate during business hours. The information readily perceived by the user was not clearly indicative of those behaviors. Yet, when Beacon posted news items on behalf of Facebook users, it was doing just that: acting as a digital agent, making decisions not directly controlled by the human agent, without clearly communicating its intentions and rules of cause and effect.

How well we perceive context in digitally affected environments often depends on how clearly the environment shows whether an object is detached or attached, what sort of object it is, whether it has agency of its own, and what rules it follows.

SMARTPHONES “AT-HAND”

Recent research into how we use our smartphones shows that we tend to use them—in Heidegger’s terms—as “ready-to-hand” objects, extensions of ourselves.

Figure 6-3. An honest bit of graffiti in the San Francisco Mission District (photo by Jeff Elder)[136]

In one study, over 50 percent of smartphone owners kept their devices close to them when going to bed at night.[137] In another study, for well over 80 percent of days tracked, the smartphone was the first and last computing device used by participants—bookending their days more pervasively than laptop and desktop computers. Generally, users “always” kept their phones with them, defaulting to desktop and laptop devices only “when absolutely necessary.” The study concluded that “the phone is emerging as a primary computing device for some users, rather than as a peripheral to the PC.”

Of course, for many populations in the world—those who missed out on the personal-computer revolution—phones are their first and only computing devices. And according to a World Bank report, “About three-quarters of the world now have easier access to a mobile phone than a bank account, electricity, or clean water.”[138]

Mobile phone devices have many characteristics that, taken together, make them a sort of “perfect storm” object for extending our cognitive abilities—and the dimensions of our personal context. Here are some of those characteristics:

§ For over a century, the telephone has been one of our most intimate modes of communication, allowing us to whisper to each other—lips to ears—in private conversations.

§ Mobile phones are small, portable, and more easily treated as “ready-to-hand” extensions of ourselves than other, larger devices.

§ They’re always connected, often through multiple networks.

§ Phones are more likely than other devices to be geolocation-capable, providing situational awareness through extended cognition.

§ Because we carry phones on our person, they can communicate with us through touch—vibrating our skin, asking for attention.

Considering all these factors, it makes sense that adoption of mobile phones is outstripping all other devices by leaps and bounds.[139]

Layout

Layout means “the persisting arrangement of surfaces relative to one another and to the ground.”[140] It’s the invariant relationship of elements such as objects and other features in a particular setting.[141] Each arrangement provides a particular set of affordances that differs from that of some other layout; one layout involving trees might afford one sort of behavior for a squirrel and different behaviors for a bird.[142]

Perceiving layout depends on both perception and action. We tend to use the word “layout” for static two-dimensional artifacts such as newspapers or posters, which have pictures and text. However, our perception of layout evolved in three dimensions, which we come to understand by moving through an area and seeing things from different angles, touching them, smelling, and hearing. The layouts we see in two-dimensional surfaces such as paper and screens are representational, artificial layouts. They borrow some properties of the three-dimensional environment but are not as physically information-rich.

Layout affects the efficacy of action and an environment’s understandability. The layout of a kitchen in a restaurant can make or break its success—you don’t want wait staff bumping into the sous-chef, or the baker’s oven opening over the head of the saucier. Likewise, an airport ideally has a layout that clearly informs us about the rules and expectations of the space.

Related to layout is clutter: objects that obscure a clear view of the ground and the sky for a given perceiver.[143] Yet when one of those objects becomes the focus of the perceiver’s attention, it’s no longer perceived as clutter but as an affording object in its own right. A stone on the ground is part of the clutter that obscures a full view of the ground, but the stone can also be an object that affords picking up and using as a tool or placing as part of a wall. A room might be full of furniture that is clutter to a person trying to get from one end of the room to the other as quickly as possible; but when the same person is tired from all that jumping about, a chair affords respite.

Sometimes, a cluttering object acts as a barrier, which hinders further movement. A barrier’s affordance is that it blocks our movement. Remember, all affordances are just affordances, not anti-affordances—whether an affordance is positive or negative for the perceiver is a matter of context.

Whether something counts as clutter is a nested property of the perceiver’s current experience. Again, this isn’t a permanent categorical hierarchy. Contextually, anything can change its role in the layout depending on current behavioral needs.

Digital Layout

Digital interfaces and linked environments have these layout concerns, too. Some are about efficiency of action, such as those described by Fitts’ law, which predicts how long it takes to point at a target from the target’s size and its distance. Some are about comprehending the relationships of the elements on the screen and between different “places” in the software environment. Users must understand the difference between an underlying surface and an attached or detached object, and what these things allow the user to do: Can I move a document into a folder? Can I edit or erase a string of text? Is a drop-shadow under an object an indication of its movability, its press-ability, its priority in the visual hierarchy, or is it just decoration? These treatments are all clues to the relationship of a particular entity to other entities in an overall layout.
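For readers who want the quantitative form behind Fitts’ law, here is a minimal sketch of one common statement of it, the Shannon formulation widely used in HCI research: movement time MT = a + b · log2(D/W + 1), where D is the distance to the target and W is its width. The constants a and b must be fitted empirically for a given device and user population; the values below are hypothetical placeholders, not measurements, and the function names are illustrative only.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts' index of difficulty in bits (Shannon formulation): log2(D/W + 1)."""
    if width <= 0 or distance < 0:
        raise ValueError("width must be positive and distance non-negative")
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Predicted time to acquire a target: MT = a + b * ID.

    a (seconds) and b (seconds per bit) must be fitted from real pointing
    data; any values passed here are assumptions for illustration.
    """
    return a + b * index_of_difficulty(distance, width)


if __name__ == "__main__":
    # Hypothetical constants, chosen only to make the comparison concrete.
    A, B = 0.1, 0.15
    # Same distance (256 px), two target widths: the wider target has a
    # lower index of difficulty and therefore a shorter predicted time.
    for width in (16, 64):
        id_bits = index_of_difficulty(256, width)
        mt = movement_time(256, width, A, B)
        print(f"width={width:>3}px  ID={id_bits:.2f} bits  MT={mt:.3f} s")
```

Widening a target or shortening the distance to it lowers the index of difficulty, which is why frequently used controls tend to be made larger and placed near where the pointer already is. The formula speaks only to efficiency of action, though; it says nothing about whether the user understands what the target is or what it affords.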

Clutter is a factor for digital interfaces, as well. We often hear users say “there’s so much clutter” in an interface. Yet, everything in an interface was put there by someone for some reason, whether warranted or not. One user’s trash is another user’s treasure. One shopper’s clutter is a marketer’s sale promotion insert.

Events

An event is a change in the invariant structures of the environment, such as a change in substance, object, or layout.[144] Gibson offers a thorough classification of terrestrial events, which we won’t cover fully, but it includes three major categories:

§ Changes in the layout of surfaces (and by extension, objects, and so on)

§ Changes in color and texture of surfaces

§ Changes in the existence of surfaces[145]

Examples could include a ball being thrown from the pitcher’s mound to home plate; a rabbit moving from “in range” for catching to “out of range” down a hole; or the changes observed in substances as they transition, such as ice melting into water or soft clay hardening. Changes in existence can be due to burning, to decay, or to birth, as when an animal that did not exist before is born and enters the environment as a detached object.

Like other structural elements of the environment, events can be nested and interdependent.[146] A place can change from “cool shade” to “hot and bright” if a tree (an attached object) falls (another event, changing the tree to a detached object). Most natural events contain so many small events that it’s impossible to identify them all. We perceive these complex events in a compound way, not having to keep cognitive track of every observable change. We don’t have to perceive every single raindrop to know that it’s raining.

Events are related to a psychological phenomenon called change blindness. This occurs when something in the environment changes without our noticing it. We can easily overlook events if they’re not perceived as part of what is relevant to our current action. In a well-known video demonstrating this effect, the viewer is asked to keep track of how many times a group passes a basketball between its members. Most viewers don’t notice the weird, costumed “dancing bear” among them. Facebook’s Beacon presented only a small indicator of its activity that was easily overlooked in the busy events happening on a kinetic site such as Kongregate. These change-blindness issues are even more of a danger in screens and displays, because the information available for pickup is so limited compared to a three-dimensional, object-laden environment.

Affordances and Laws for Events

Events can have affordances of their own. A campfire can afford keeping us warm; rain affords watering our crops. These events are, of course, nested in particular contexts; the campfire can also burn our bodies, and rain can flood our fields.

Perception evolved in a world in which events tend to follow natural laws that can be learned. Moving water varies constantly as a substance, but its behavior is invariant in that water always behaves like water. The sun moves across the sky through the day, but it does so every day, following an invariant pattern.

Events and Time

We actually perceive time as events rather than as abstractions such as seconds or minutes.[147] We feel time passing because things are changing around us. Until only a century or so ago, most people still thought of “noon” as when the sun was directly overhead. Even though clocks were already fairly common, they were thought of as approximations within a given locality, not synchronized with any global standard. It was the rise of railroads that brought absolute, standardized time to the general public; railways couldn’t have one town’s noon be different from another’s; otherwise, trains would never be on time anywhere, or (at worst) they might collide at junctions.[148]

We now live in an artificially mechanized sort of “time.” Our culture regulates the events around us to the point at which our surroundings tick to the same rhythm as the clock, no longer tied to the relatively fluid event of the sun rising and setting. Even when it’s a mechanical structure, though, it’s the environment that creates information marking time, not the abstract measurement of seconds or hours.

This is why time can feel so relative to us, no matter how many seconds are measured.[149] A bad and boring 90-minute movie feels interminable; an exciting, quality 90-minute movie feels like it flies by. In digital design, we might want to judge the efficacy of a website, for example, by how long it takes a user to get from one page to another, either in terms of seconds or mouse clicks—but we find the user perceives the passage of that time differently depending on context. User perception is important to consider when looking at the results of analytics and other performance measurements. Ten clicks might be fine, if the user is getting value out of each one (and feels like she’s getting where she needs to go); three clicks can feel like forever if the user is floundering in confusion.

Digital Events

There are many sorts of events we perceive in digital interfaces. There are rich-interaction transitions (windows zooming in and out; swiped objects disappearing in a puff of animated smoke) that make clear where simulated objects have gone or where they came from. There are also system processes, including backing up a file or establishing a wireless connection. These can be initiated by us or initiated by the system’s own digital agency.

Users often struggle to comprehend the events that occur in software environments, where cause-and-effect does not have to follow natural laws. This can be especially true when the system is doing something on its own, outside of our immediate perception. The more complex our digital infrastructure becomes, the greater role these invisible, automated events play in our environment.

Place

Place is the last element we will cover in this summary. It’s especially important to context because so much of context has to do with how we perceive our environment as organized into places. Here are the key factors that describe place:[150]

§ A place is a location or region in the environment that has a particular combination of features that are learned by an animal. An animal experiences its habitat as being made up of places.

§ Unlike an object, a place in nature has no definite boundaries; places might overlap in a nested fashion depending on the current action of the perceiver and other factors. Sometimes, however, there are artificial boundaries like fences, walls, or city blocks. These are layout structures that nudge us into perceiving them as places because of how they constrain and channel action. It’s still up to the perceiver to distinguish a city block or the inside of a building as a place, but the environment exerts great control over that perception.

§ Places are nested—smaller ones are nested within larger ones, and they can overlap or be seen differently from various points of view, depending on the particular actions and perceptual motivations of the perceiver.

§ Movement in the environment is movement among places, which can be reached via one or more paths (which themselves go through other places). Even a path through the woods can be experienced as a place—it’s a layout in the environment that affords motion between other places. Yet, the path is meaningful because of the places it connects.

§ We don’t perceive “space.” Instead, we perceive places. Space is a mathematical concept, not an embodied one. Space is undifferentiated, but simply by perceiving and moving within an area, the perceiver is already coming to grips with the area as one or more places.

§ Place-learning is “learning the affordances of places, and learning to distinguish among them.” It is an especially important kind of learning for all animals, including humans. As we saw earlier, place plays a role in how our extended cognition learns and recalls information, as when people recalled information more accurately when they stayed in the same room.

§ Place-learning and orienting oneself among places is what we call wayfinding—knowing where one is in relation to the entire environment, or at least the places that matter to the agent.

§ Places persist from the point of view of the perceiver—they cannot be displaced the way objects can. Our perception counts on places being where they are, from one day to the next.

The persistence of places and the way we learn them is especially important to point out. A place can be altered, such as by fire or a destructive storm, but it is still in the same location in relation to other places. Place-learning depends on this persistent situated quality.

Digital Places

This issue of persistence is especially important when we look at software environments. Our perceptual system relies on the persistent properties of place, and can be confounded by environments that don’t meet those expectations. Yet, software can create the useful illusion of persistent places and then obliterate or move them or change them fundamentally in ways that cannot occur through the natural laws that govern physical information.

A website can feel like a place, but it can also feel as though it has places within it. Likewise, a portal that gathers articles from many sites can be its own place, even if all the content is from elsewhere. An application can be a place nested in an operating system. A document is an object until it’s opened and, in a sense, “dwelled in,” at which point it feels like a place. Digital technology can also change the way we experience physical places, such as when we use a “geo fence” to teach a smartphone to present home-related information when it’s near our home address.

Places, and “placemaking,” will figure centrally in our exploration as we continue through semantic information, digital information, and the vast systems of language we inhabit. For humans, it turns out that place is as much about how we communicate as what we physically perceive.

[112] Gibson, J. J. The Senses Considered as Perceptual Systems. Boston: Houghton Mifflin, 1966:201.

[113] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:13.

[114] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:18-19.

[115] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:254.

[116] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:100.

[117] Epstein, Nadine. “In Search of the God of Understanding.” Moment (momentmag.com) September-October 2013.

[118] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:15.

[119] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:117.

[120] Goldstein, E. Bruce. “The Ecology of J. J. Gibson’s Perception.” Leonardo Cambridge, MA: The MIT Press, 1981;14(3):191-195. Stable URL: http://www.jstor.org/stable/1574269.

[121] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:87.

[122] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:141.

[123] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:130.

[124] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:9.

[125] Wagman, Jeffrey B., and David B. Miller. “Animal-Environment Systems: Energy and Matter Flows at the Ecological Scale.” Wiley Periodicals, 2003 (http://bit.ly/1vX9ZrJ).

[126] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:9.

[127] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:16.

[128] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:307.

[129] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:17.

[130] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:241.

[131] Bodies and Environments; earlier this chapter.

[132] Dotov, D. G., L. Nie, and A. Chemero. “A Demonstration of the Transition from Ready-to-Hand to Unready-to-Hand.” PLoS ONE 2010;5(3):e9433. doi:10.1371/journal.pone.0009433.

[133] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:241.

[134] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:307.

[135] Weinberger, David. Small Pieces Loosely Joined: A Unified Theory Of The Web. New York: Basic Books, 2002:39.

[136] https://twitter.com/JeffElder/status/431673279128936449

[137] Smith, Aaron. “The Best (and Worst) of Mobile Connectivity.” Pew Internet & American Life Project, November 30, 2012 (http://bit.ly/1oneutq).

[138] Mlot, Stephanie. “Infographic: Mobile Use in Developing Nations Skyrockets.” PC Magazine, July 18, 2012 (http://www.pcmag.com/article2/0,2817,2407335,00.asp).

[139] Karlson, Amy K., Brian R. Meyers, Andy Jacobs, Paul Johns, and Shaun K. Kane. “Working Overtime: Patterns of Smartphone and PC Usage in the Day of an Information Worker” (http://research.microsoft.com/pubs/80165/pervasive09_patterns_final.pdf).

[140] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:307.

[141] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:148.

[142] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:128.

[143] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:307.

[144] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:242.

[145] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:94.

[146] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:110.

[147] ———. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:100.

[148] Kuniavsky, Mike. Smart Things: Ubiquitous Computing User Experience Design. Burlington, MA: Morgan Kaufmann, 2010:3.

[149] Popova, Maria. “Why Time Slows Down When We’re Afraid, Speeds Up as We Age, and Gets Warped on Vacation.” brainpickings.org, July 15, 2013.

[150] The points following all come from Gibson, 1979, pp. 240-241.