Designing Connected Products: UX for the Consumer Internet of Things (2015)

Chapter 15. Designing Complex, Interconnected Products and Services

BY CLAIRE ROWLAND[243]

Most of the consumer IoT products on the market right now offer a limited set of functions through a small set of devices. As we saw in Chapter 10, many don’t interoperate well or at all with third-party devices. This keeps things (relatively) simple in conceptual terms. But IoT is called the Internet of Things because our ambitions stretch further than this, to create interconnected networks of many devices, web services, and users. Your product may grow to interoperate with other third-party devices or services, or become a platform supporting many different devices and services. As it develops, the pattern of logical interconnections between devices, data, and users will become very complicated, very quickly.

Technical interoperability is a huge challenge in its own right. But creating a design that allows users to understand, anticipate, and control how interconnected services and devices work together is an equally big challenge. The makers of IoT technology are only just beginning to address this.

This chapter looks ahead to a future of complex systems composed of many interconnected devices and applications. It discusses the challenges in making complex systems understandable and valuable, keeping users in control without overwhelming them with configuration options.

This chapter introduces:

§ How systems become complex as they begin to scale (see It’s Complicated...)

§ The UX challenges of scaling the system (see Scaling the UX)

§ The challenges of putting users in control of complex systems (see Control)

§ The challenges of handing over control to the machines (see Giving Machines Control)

§ Approaches to scaling UX in the platform (see Approaches to Managing Complexity)

§ Using data models to coordinate devices and systems around user needs (see Data Models)

This chapter addresses the following issues:

§ How adding just a few more devices to a system can create a complex web of interrelationships for the user to navigate (see It’s Complicated...)

§ Why adding more devices to a system means the UX focus has to shift to activities, instead of controlling devices (see Looking Beyond Devices)

§ Why putting users in control of IoT is asking them to think like programmers, and why that’s a challenge (see Putting Users in Control)

§ Why handing over control to machines can make complex tasks easier... as long as we don’t lose control (see Giving Machines Control)

§ Using data models to describe devices, system components, the context of use and the user (see Data Models)

It’s Complicated...

In Chapter 9, we saw that even fairly simple connected devices and services can be conceptually much more complex than nonconnected equivalents. Users need some grasp of how functionality is distributed around the system, and how it may fail if parts go offline.

But so far, we’ve talked about examples that are relatively straightforward. Most of the consumer IoT systems out there involve a small set of known devices. Many are designed for only one user, or are blind to the fact that they may have multiple users. As we saw in Chapter 10, many of these are controlled via a software platform (and often web/mobile apps) provided by the manufacturer. The UX design of these apps is often geared toward controlling the individual devices—for example, Philips Hue or WeMo (see Figure 15-1).

Figure 15-1. Device controls in the WeMo app (image: Belkin)

When you only have a few devices, this is perfectly acceptable. You open one mobile app to control your thermostat, another to control a couple of lights, and perhaps one more for a connected socket or two.

But once you start adding more and more devices, things become complicated. As discussed in Chapter 10, you could soon end up with lots of apps, each controlling one or two devices. Just finding the right app for the thing you want to do becomes a challenge. One app giving you individual controls for lots of devices isn’t necessarily much better if you have to dig deep to find the right device or control.

But making device controls easy to access is only a part of the problem. As you add more and more devices, the number of potential interconnections between them increases rapidly.[244] To make those connections meaningful, we’ll want to add more and more ways for them to coordinate with each other. That creates more potential interrelationships between devices, and more device and cross-device functionality to understand, configure, and control. The most complex consumer example of this is currently the connected home.
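As a rough illustration of that growth, assume for the sake of argument that any device could in principle relate to any other. The number of possible pairings is then n(n - 1)/2. The function name below is hypothetical, and the count is an upper bound rather than a description of any real system:

```python
from math import comb


def potential_links(device_count: int) -> int:
    """Number of distinct device pairs among device_count devices: n * (n - 1) / 2."""
    return comb(device_count, 2)


# Print how the number of possible pairings grows with the number of devices.
for n in (3, 6, 15, 100):
    print(n, "devices ->", potential_links(n), "possible pairings")
```

Going from 6 devices to 15 multiplies the possible pairings sevenfold (15 to 105), even though only nine devices were added.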

The whole point of IoT is that the sum of the parts is much more powerful than the individual components. Considering automation and interactions between device types is vital when designing easy to use and flexible products.

—GAWAIN EDWARDS, IOT CONSULTANT[245]

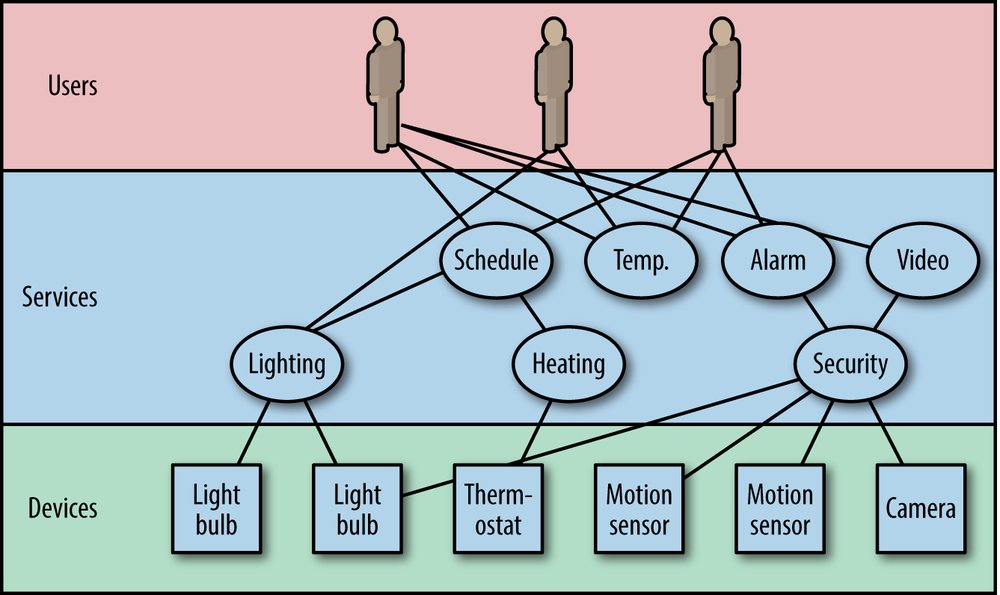

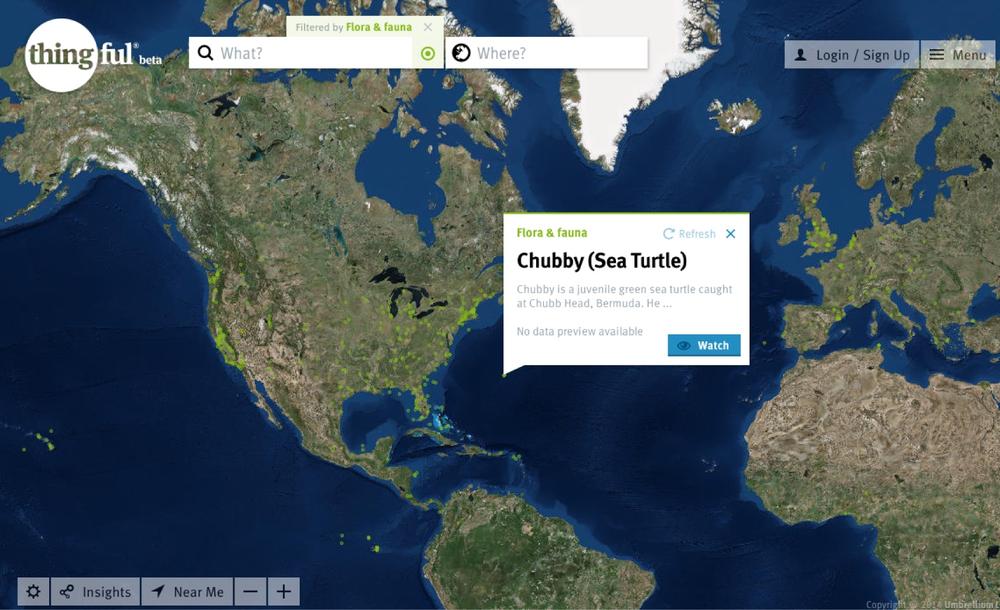

The example in Figure 15-2 represents a fairly simple connected home setup.

Figure 15-2. Interrelationships between devices, services, and users in a simple connected home setup

There are six devices: two connected lights, a thermostat, two motion sensors, and a security camera.

There are three user-facing applications (or services): security, heating, and lighting.

Some devices support more than one service, so for example, motion sensors are part of the security system but also provide temperature data to the heating system. And one of the lights can be activated by the security alarm to provide illumination for the camera.

Three users are currently set up on the system. The system allows different levels of permissions to be granted to different users on different services. The “admin user” can access all the functionality, but the other two (who might be regular visitors or other household members) have limited access. They can adjust the heating temperature but not set the schedule. Only one can activate the security alarm, and neither can view the video feed from the camera.
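A permission scheme like the one just described could be sketched as a lookup from user to service to allowed actions. This is only a minimal sketch: the user names, action names, and table layout are all assumptions made for illustration, and lighting is left out because the example does not spell out who may control it.

```python
# Hypothetical permission table: user -> service -> set of allowed actions.
PERMISSIONS = {
    "admin": {
        "heating": {"adjust_temperature", "set_schedule"},
        "security": {"activate_alarm", "view_camera"},
    },
    "user_b": {
        "heating": {"adjust_temperature"},
        "security": {"activate_alarm"},
    },
    "user_c": {
        "heating": {"adjust_temperature"},
    },
}


def is_allowed(user: str, service: str, action: str) -> bool:
    """True only if the action is explicitly granted; unknown users or services get nothing."""
    return action in PERMISSIONS.get(user, {}).get(service, set())


print(is_allowed("user_b", "security", "activate_alarm"))
print(is_allowed("user_c", "security", "activate_alarm"))
```

Even this small table has to be understood and maintained by someone in the household, which is part of the complexity the diagram illustrates.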

Although this is a fairly simple system, there is already a complex pattern of interdependencies and relationships emerging between the devices, services, and users. The more devices and services are added, the more complex this will become.

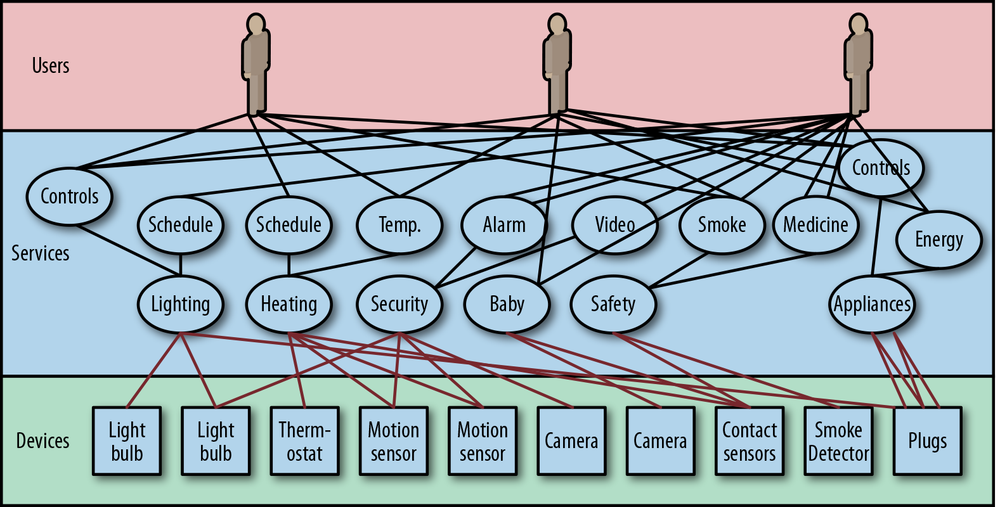

Here’s the same system with just nine extra devices added, plus additional lighting controls (in Figure 15-3):

§ An extra camera in a baby’s room (accessed via a baby monitoring service).

§ Three contact sensors that also measure temperature: one on a medicine cupboard (part of a safety service), one on the baby’s window (also feeding temperature data to the baby monitoring service), and one on another door. All of these also supply temperature data to the heating service.

§ Four smart plugs: all accessed via an appliances app with energy monitoring and controls. One that is on a lamp is also available to the lighting service.

§ A smoke alarm, accessed via the safety service along with the medicine cupboard alarm.

As in the previous diagram, not all users can access all functionality.

This is already a complicated web of interrelationships, but in device and service terms it’s still pretty basic. Most of us have more than 2–3 lights, and even a medium-sized house will need more than 4–5 alarm sensors. Effective energy monitoring would require a whole house reading (e.g., from a smart meter).

Figure 15-3. Interrelationships between devices, services, and users in a slightly more complicated (but still fairly basic!) connected home setup

And there are additional ways of controlling and organizing devices and services that aren’t shown here. Many of these devices may be assigned to rooms in the house. Functions from various services may be controlled by automated rules, which are user-configured groups of behaviors. Some of these may be dependent on tracking the presence of the various occupants and visitors. A typical rule might be “if it’s after sunset and no one is home, turn on the living room and kitchen lights,” to make the house look occupied.
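The example rule can be written down as a condition over a snapshot of household state. The sketch below is hypothetical: the `HomeState` fields and the function name are invented for illustration, and "after sunset" is simplified to mean later on the same calendar day, so it would not handle the hours after midnight.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class HomeState:
    now: time          # current local time of day
    sunset: time       # today's sunset, assumed to come from some external source
    people_home: set   # names of occupants currently detected at home


def occupied_look_rule(state: HomeState) -> list:
    """Return the lights to switch on, or an empty list if the rule does not fire."""
    after_sunset = state.now >= state.sunset  # simplification: ignores times past midnight
    nobody_home = not state.people_home
    if after_sunset and nobody_home:
        return ["living room", "kitchen"]
    return []


evening_empty = HomeState(now=time(21, 0), sunset=time(19, 30), people_home=set())
print(occupied_look_rule(evening_empty))
```

Note how much the rule depends on things the user cannot see when writing it: whether presence detection is accurate, and where the sunset time comes from.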

In a system like this, enabling the user to understand the system and keep track of everything that’s going on is a major design challenge. And we’re not even taking account here of the challenges of interoperability (as explored in Chapter 10), or interusability between the various control interfaces (as explored in Chapter 9).

Understanding the interrelationships and interdependencies becomes yet more complicated when the system, or even just some of its devices, is autonomous or intelligent in some way. Instead of responding only to direct user commands, the system infers what users need and acts independently. Done well, this is powerful: users want devices to behave in common sense ways, which requires intelligence. But users can also fear loss of control. The smart home/environment gone wrong is a well-known trope in contemporary fiction, from HAL in the film 2001: A Space Odyssey to the sinister Happylife Home in the Ray Bradbury story “The Veldt”:[246]

When domestic technology goes awry, it is often more invasive than office technology; not only do we expect our homes to provide a haven of calm and security, but breakdowns in domestic technology can actually prevent us from meeting our basic needs.

—JENNIFER RODE, ELEANOR TOYE, AND ALAN BLACKWELL[247]

Making sense of this complexity is an enormous challenge for UX. As yet there are no easy answers, but unless there is progress in this area, a consumer Internet of Things will remain unrealizable.

Scaling the UX

In general, the more devices are added to a system, the more complex it becomes to accommodate them in a UX design that works well for users.

Scaling the UX is a particular issue for systems that support lots of actuators and user-facing controls, because each new device added is potentially increasing the amount of user-facing functionality required.

It’s less of an issue for networks of sensors, whose UX is likely to be data driven. Adding new data points may require you to change the way you present insights to the user, or enable new insights. But adding a new set of controls creates more interdependencies for the user to understand.

Keeping Track of Multiple Devices

Handling multiple devices raises practical issues. Just identifying devices can be a challenge if you have several of the same type, such as multiple motion sensors. Is the device in front of you “motion sensor 1,” or “motion sensor 2”? The system needs to differentiate them, and the user needs a way to differentiate them in the interface, such as giving them names. And there also needs to be some way of matching the physical device in front of you with the correct representation named in the interface. As we saw in Chapter 12, some systems provide an “identify” button on the UI that makes an LED flash on the device.

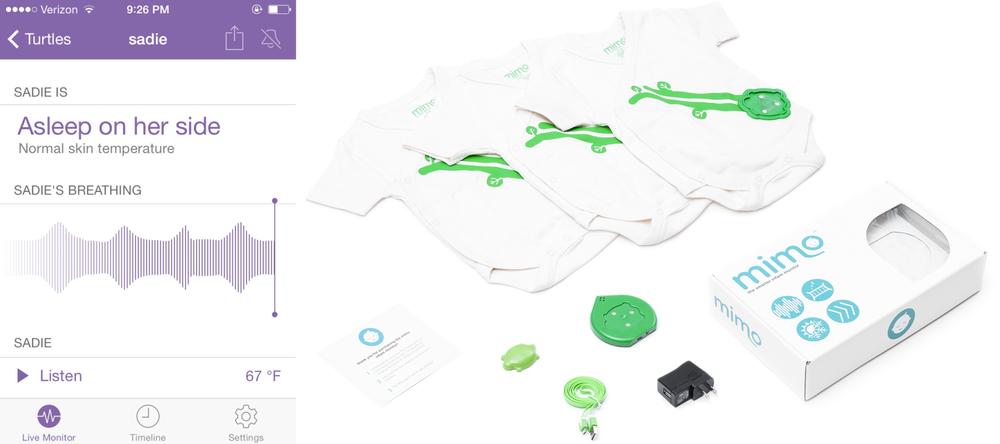

Keeping track of which device is broadcasting what information can be tricky. For instance, say you have twins and two baby monitoring sensor kits. Do you want each transmitter always to inform you about the well-being of the same baby (this might save valuable seconds in an emergency)? The Mimo baby monitoring kimonos from Rest Devices (see Figure 15-4) monitor baby vital signs, like sleep position and breathing. A standard kit for one child comprises three kimonos with onboard sensors, one Bluetooth transmitter (called a “turtle”) that fits onto the kimonos, and one gateway (called a “lilypad”). Parents of twins can use one lilypad but need a turtle for each baby. If you want to keep the data from each baby separate, you must always use the same turtle for each child.

Figure 15-4. The Mimo baby kit (image: Rest Devices)[248]

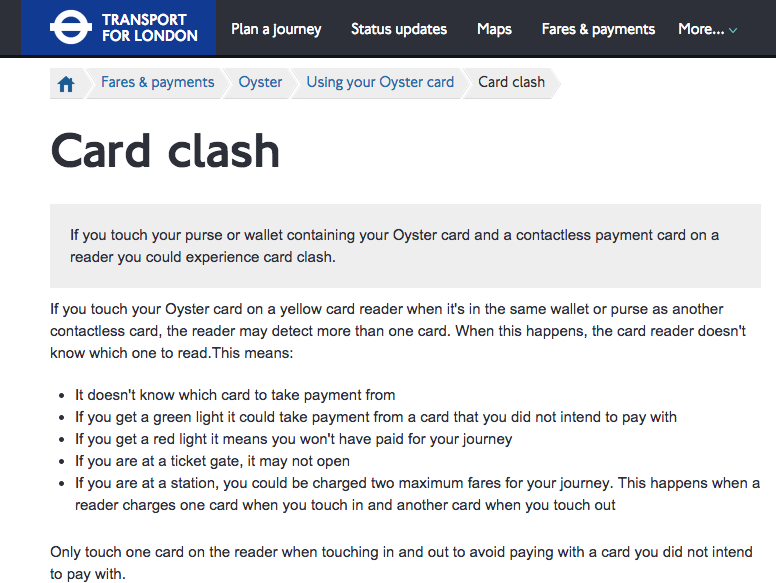

With multiple devices performing similar functions, there is the possibility of choosing the wrong one. The introduction of contactless credit cards as an alternative to the Oyster card has created the risk of “card clash” where two Oyster-compatible payment cards are kept together in a wallet (see Figure 15-5). The user could inadvertently swipe in on one at the start of the journey, and swipe out on the other at the end, incurring two penalty charges for “incomplete” journeys instead of the normal fare. It would make sense to link both cards to the user’s account and combine the data from the two, but this isn’t possible on the current system.

Figure 15-5. The Transport for London website warns of the risk of card clash (image: Tfl.gov.uk)

Identifying devices isn’t the only challenge, either. If you have lots of devices, just configuring them to work together is a huge overhead.

Keeping track of what they are doing and predicting how they may behave can be challenging, too. In a complex system, that might mean knowing which one is connected to what, what each is doing (right now), which software or which user is controlling it, what data it creates, and who can see it.

Adding automated behaviors into the mix makes things yet more complicated. Some devices may be triggered not only by explicit user interactions, but by preconfigured rules established sometime in the past, or perhaps system intelligence. So there are more interdependencies to map, and it becomes harder to understand what the system is up to and why. The conceptual model is far more complex.

Looking Beyond Devices

As already discussed, device-centric UXes are fine for small numbers of devices. It will be quite easy to find the controls you need by looking for the specific device. You don’t need to create semantic groupings (e.g., by room or function) to find the one you need.

But if you have 100 devices, a device-centric UX becomes more burdensome. Trawling through 99 other devices to find the one you want, like a living room light, could take a long time. You don’t even have to get as far as 100 devices to get fed up with controlling them one at a time: you may only have 3 lights in your living room, but it’s quite reasonable to want to turn them all on at once. In that case, you might want to group devices, perhaps by their location in the house, or by function. But you might still want to turn just the one reading lamp on or off, so you’ll still need the individual device control. And you might want to put a device in two overlapping groups: perhaps one for “living room” and one for “security lights.”
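One way to picture overlapping groups alongside individual control is a many-to-many mapping between group names and devices. The class and function names in this sketch are hypothetical, and a real product would also need persistence, naming rules, and so on:

```python
from collections import defaultdict


class DeviceGroups:
    """Maps group names to device ids; a device may sit in several groups."""

    def __init__(self):
        self._members = defaultdict(set)

    def add(self, group, device):
        self._members[group].add(device)

    def devices_in(self, group):
        return set(self._members.get(group, ()))

    def groups_of(self, device):
        return {g for g, members in self._members.items() if device in members}


def switch_group(groups, power, group, on):
    """Set every device in the group on or off; `power` maps device id -> bool."""
    for device in groups.devices_in(group):
        power[device] = on


groups = DeviceGroups()
for lamp in ("ceiling light", "floor lamp", "reading lamp"):
    groups.add("living room", lamp)
groups.add("security lights", "floor lamp")  # same lamp, second group

power = {}
switch_group(groups, power, "living room", True)  # all three on at once
power["reading lamp"] = False                      # individual control still works
```

The design question is less about the data structure than about how many of these groupings the user is asked to create and remember.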

The more complex the system, the more you have to design it around what it does for the user and not the devices. For example, users don’t want to have to monitor lots of sensors just to know they’ve left a window open or someone has broken in. As your system scales, it will not (always) make sense for the UX to focus on individual devices anymore.

As with many of the examples in this book, we’ll look at the connected home as an example, as it’s the environment in which the consumer is likely to have the most devices working, possibly together. Homes are challenging to design for, as they are complex social contexts, which we view as our refuge from the world. They are the last place we want to be frustrated in our daily routines by bad design or unreliable technology, and within the home (as opposed to, say, the workplace) we are mostly able to choose the technology we use. So the bar for perceived value and good UX is set high.

Some home devices, like thermostats or the garage door opener, might be “first order” devices that map closely to a user need. These may be under the user’s direct control and represented prominently in the UI. Others, like contact sensors, may not be that meaningful as standalone devices. They are more valuable as part of a service, like the security alarm. Some devices, like lights, could exist in both categories: you want individual control of a light, but also to use it as part of a group.

The more devices your system has, the more impractical it becomes to rely on device-level controls and the more the UX needs to shift to higher-level service or application behaviors. Services might include irrigation, heating/cooling, medicine cabinet alarm, smoke alarm, energy monitoring, window shades, and Granny’s emergency alarm. A service might have direct device controls (e.g., the heating/cooling service includes thermostat controls, but also home-wide temperature monitoring), or like the intruder alarm, it might derive its function and value from a network of devices. It is application software that determines whether the security alarm is “set,” “unset,” or triggered, not any of the individual devices.
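The point that alarm state belongs to the application rather than to any one sensor can be sketched as a small state holder. The class and method names here are assumptions for illustration only:

```python
class IntruderAlarm:
    """Alarm state lives in application software, not in any individual sensor."""

    def __init__(self, sensor_ids):
        self.sensor_ids = set(sensor_ids)
        self.state = "unset"

    def set(self):
        self.state = "set"

    def unset(self):
        self.state = "unset"

    def on_sensor_event(self, sensor_id):
        """A motion or contact event only matters while the alarm is set."""
        if self.state == "set" and sensor_id in self.sensor_ids:
            self.state = "triggered"
        return self.state


alarm = IntruderAlarm({"hall motion", "front door contact"})
print(alarm.on_sensor_event("hall motion"))  # alarm not set, so nothing happens
alarm.set()
print(alarm.on_sensor_event("hall motion"))
```

The same motion event has a different meaning depending on service state, which is why a sensor-by-sensor view tells the user so little.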

Users need you to be considerate in managing their attention, and a good UX will organize functionality in ways that map well to their higher-level needs and goals. As we saw in Chapter 5, these might be very varied, inconsistent, and driven by the complex contexts in which the devices are used. In the next section, we’ll look at some possible ways of organizing functionality to suit daily life in the home.

Addressing User Needs

Let’s consider some of the needs a user might have around the home and what that might mean for organizing devices and functionality in the system. There are multiple, overlapping ways of making sense of this (see Table 15-1).

Some can be viewed in terms of devices: tightly mapped to a single device, like turning off the TV, or a group of devices, like the master switch that turns off all the lights.

Some might target a particular location. Many connected home systems allow users to group devices by room. For example, the living room group might give you one easy place to control your immediate environment without leaving the sofa. But not all user needs fall easily into room groups. Heating and cooling may have room-based data or controls, but usually cover the entire house. The concept of location might extend to turning off the alarm downstairs, turning off the outside lights, or lowering the blinds on the west side of the house. Classifying every single device by all the potential ways you could describe its location would easily become overwhelming.

Location is a useful way to describe devices like fridges and televisions that don’t move around often. Smaller devices, like lamps, or smart plugs, may get moved. There may also be several in a room, making location information less useful in identifying them. Some devices, like baby monitors, are designed to be carried around and the user may forget where they are.

Sometimes user goals might be function based, such as setting the hot water to come on at 5 p.m., or monitoring safety alarms. Or they could be activity based, such as getting ready in the morning (needing hot water, coffee, and transport updates), or watching a movie (turning down the lights and turning on the TV and surround sound system). Connected home systems often offer the option to group functionality as “scenes” to support different activities. For example, there might be a “dinner party” lighting scene, a “movie scene” that turns off most lamps and turns on the home entertainment system, or a “goodnight scene” that turns nonessential devices off when everyone goes to bed.

Needs could also be person based, such as notifying you if Grandpa hasn’t got out of bed, or letting you know when Jake gets home from school. Or they might be authorization based, perhaps locking the medicine or liquor cabinet when there are no adults at home.

Sometimes needs are time or state based (e.g., activating security lights at dusk, and/or when the house is unoccupied). Some may be optimization based (e.g., keep the house temperature comfortable, use energy efficiently). This is a tricky need to fulfill, as defining “comfortable” or “efficient” requires information about the current state and the wider context of use.

Table 15-1. A taxonomy of user needs (needs can fall into multiple categories)

| Type of Need | Example |
| --- | --- |
| Device/group of devices | § Turn on the TV § Turn off all the lights |
| Location | § Set the alarm downstairs § Turn off outside lights § Lower the blinds on the west side of the house |
| Function | § Turn on the hot water § Dry the laundry |
| Activity | § Help me get ready and out of the door on time in the morning § Set the living room up to watch a movie |
| Person | § Tell me if Grandpa hasn’t got out of bed § Tell me when Jake gets home from school § Track my weight and blood pressure |
| Authorization | § Lock the medicine cabinet when the adults are not at home § Let the courier into the porch |
| Time | § Warm the house for 7 a.m. § Don’t run the sprinklers between 11 a.m. and 7 p.m. |
| State | § Turn off nonessential devices when I’m on vacation § Turn on the security lights at dusk § Disable notifications when I’m driving |
| Optimization | § Keep the house temperature comfortable § Use energy efficiently |

Context

Creating a UX that bridges this collection of user needs with a bunch of devices requires some understanding of the context in which the devices and the system are being used. The range of contextual information you could capture is huge, but physical, activity, and social contexts are all good categories to consider (see Chapter 5).

The physical context of a device may affect how it works. For example, your window alarm sensors may well be able to measure temperature. That might seem like useful data to help control your heating system, too. But they are likely to read a lower temperature than the center of the room or an internal wall, which better reflect the temperature as perceived by a human in the room. Using them to control the heating or AC in that room may result in it feeling too warm, as the heating overcompensates (or AC undercompensates). Understanding the physical environment of the device will allow you to interpret and use its data more accurately.

The extent to which the system supports or conflicts with the activities that happen in the household can determine how effective or valuable it is. It’s easy, for example, to wish to use energy “efficiently” and “reduce waste” (as mentioned earlier) but quite hard to translate that into actual rules as to which appliances the system should turn on/off and when. It’s not just about using less energy, which would simply mean turning everything off. Writing useful rules requires an understanding of how the household weighs cost and efficiency savings over comfort and convenience, and which appliances or energy needs are most important (e.g., cooling the baby’s room, having a clean, dry shirt by 8 a.m.).

As discussed in Chapter 5, homes are complex social contexts. You may need to consider people who live in the house, people who can physically enter the property, and people who have access to the service UI. You may have to capture contextual information such as presence (who’s in or out), who is authorized or not authorized to access particular functionality, proximity (who is nearby or far away), and who is available or unavailable. Proximity and availability might be used to identify the most suitable respondent if the security alarm goes off: who is nearby, and who is likely to receive the message or be able to get home?

You don’t necessarily want to model all the relationships between different stakeholders in your system, but you should consider how your design supports or conflicts with the social context of the home as you create it. For example, a really rigid permission system might seem to offer lots of control but will force users to do lots of categorizing of who is allowed to do what. This may suit law-and-order types with time on their hands, but won’t suit those who like to be more flexible sometimes (perhaps allowing little Jimmy to stay up late to watch the World Cup). And it could cause friction.

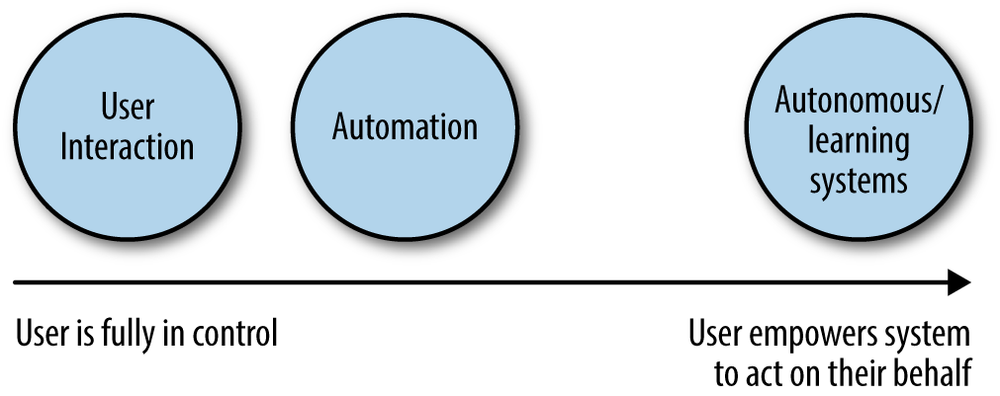

Control

As systems become more complex, two contrasting approaches to controlling them have to be weighed up (see Figure 15-6).

Figure 15-6. Approaches to controlling IoT systems

On the one hand, we can try to create intuitable interfaces for users to control the systems themselves. This is where many consumer IoT products are right now: remote controls are often the core of the value proposition. The next natural step, also user controlled, is automation: the user configures functionality to run automatically in the future (e.g., by setting up heating to come on at 4 p.m.).

On the other, we can hand over control to intelligent or autonomous systems, empowered to act independently on our behalf (e.g., the heating system infers that it should come on at 4 p.m. because you are likely to be home).

Both approaches have advantages and disadvantages.

People are good at intuiting context, especially social context and “common sense” (unlike machines). Remote controls make sense, until there are too many of them to manage. But complex systems require us to understand interrelationships. And as we saw in Chapter 9, people may not be very good at understanding networks. What’s more, people are notoriously bad at predicting their own future needs, so automation can be problematic (this is discussed in depth later). People also get tired, bored, distracted, or overwhelmed by other demands on their time, and sometimes behave irrationally and against their own self-interest (e.g., using thermostats in inefficient ways).

Computers are good at storing and analyzing huge amounts of information, logical reasoning, and maintaining constant vigilance without getting tired or distracted. But computers are terrible at understanding context (unlike people). Teaching a computer the kind of “common sense” that humans take for granted is a very challenging artificial intelligence problem. The computer has to be taught what information is relevant to the situation and how to interpret it.

Of course, this isn’t an either/or choice. It often makes sense to combine some user-facing controls with system intelligence. In the next section, we’ll look at some of the challenges of each approach individually.

Putting Users in Control

In this section, we’ll consider the design challenges of putting users in control of complex systems, and why using IoT is a lot like programming.

Why is using IoT like programming?

Back in 2002, Ann Light suggested that “we stop thinking about products’ end-users and start thinking about the system’s end-designers. Because that is what we are all becoming as we choose and use network components.”[249]

For the past 30+ years, the prevailing trend in UX has been toward direct manipulation: “a human–computer interaction style which involves continuous representation of objects of interest and rapid, reversible, and incremental actions and feedback.”[250] This is the approach behind tools such as WYSIWYG (What You See Is What You Get) editing in word processors and web production tools like Dreamweaver. It’s also evident in the new generation of content-driven UIs found on modern smartphone and tablet platforms such as Windows Phone 8 and iOS 7 (see Figure 15-7), and natural user interfaces (NUIs) like the Kinect (see Figure 15-8).

Direct manipulation interfaces tend to be easier to use as objects and possible actions are laid out in front of the user. This lessens the effort required to build an abstract mental representation of the scope and capabilities of the system (as you would have to with, for example, a command-line interface). The state of the system is visible: users can immediately see the effect of their actions on the objects in front of them, and choose to reverse them if needed. Users understand what’s going on, what actions are available, and need not fear breaking things.

Figure 15-7. Windows Phone 8 on the HTC One M8 (image: Microsoft)

Figure 15-8. Playing Kinect Sports 2 on the Xbox (image: Doug Kline via Wikicommons/CC license)

A key condition of direct manipulation is that the user’s actions and the results happen at the same time, in the same situation.

But consider these interactions:

§ Turning your heating off remotely via smartphone.

§ A security alarm that is activated by one or more motion or contact sensors being triggered, which sounds an alarm, sends a text message or app alert to the homeowner, and records two minutes of video on the hall camera.

§ A heating system that sets the internal target temperature to 21°C when the homeowner’s smartphone location is less than one mile from home and heading toward home.

§ When Mom or Dad are not at home and the medicine or liquor cabinet is opened, send an alert to Mom and Dad’s phones.

§ An energy customer agrees to let their supplier company temporarily suspend power to certain appliances, such as the dishwasher, during periods of high demand on the grid. In return, they receive a cheaper tariff.

§ A user configures a smart home system with an “away” mode, so that when they leave the house, the heating is turned down, the security alarm is set, nonessential electrical appliances are turned off, and the lights are off (unless it is after dusk and before midnight, in which case a pattern of random security lights is activated).

All of these scenarios break direct manipulation, because they involve the separation of user actions and system responses. IoT services are inherently distributed across multiple devices, and often involve remotely controlling one device from another in a different location, or configuring devices to perform actions at different times or in different conditional situations. This requires the user to expend more effort building and maintaining an abstract mental representation of the system and the consequences an action may produce. It means that using, and especially configuring, an IoT product or service is often more like programming than our general experiences of using UIs. Our actions have become displaced from the results of our actions:

Programming permits us to initiate appliances’ actions at future times, or to create macros to make repeated tasks easier.

—JENNIFER RODE, ELEANOR TOYE, ALAN BLACKWELL[251]

People are often bad at articulating their own current needs. They are even worse at predicting their future needs, and often get them wrong. When your goal is to configure a system to perform actions in the future, this is a barrier. Even more challenging to users is the need to anticipate all the changing conditions to which the system might have to respond.

In the next section, we look at a number of ways in which displacement between actions and results can cause unintended effects.

How IoT products displace actions from results

Displacement in space

When the action and execution happen at different locations, the user may not be aware of what’s going on at the other end and inadvertently cause problems. The action may be inappropriate or unsafe at that time, such as setting the security alarm when there’s still someone at home, or more dangerously, turning on an electrical appliance when there’s a gas leak in the home. Users may not see the consequences of their actions, which is particularly important if the action was done unintentionally (e.g., opening the garage door by accident). In addition, users may not realize that the action they thought they took didn’t work as planned: for example, a user might think they turned off the smart plug on the hair straightener (a common appliance that people often worry they’ve left on), but didn’t, or the command got “lost” by the system. Confirmation as to whether actions have been performed or not is, of course, critical.

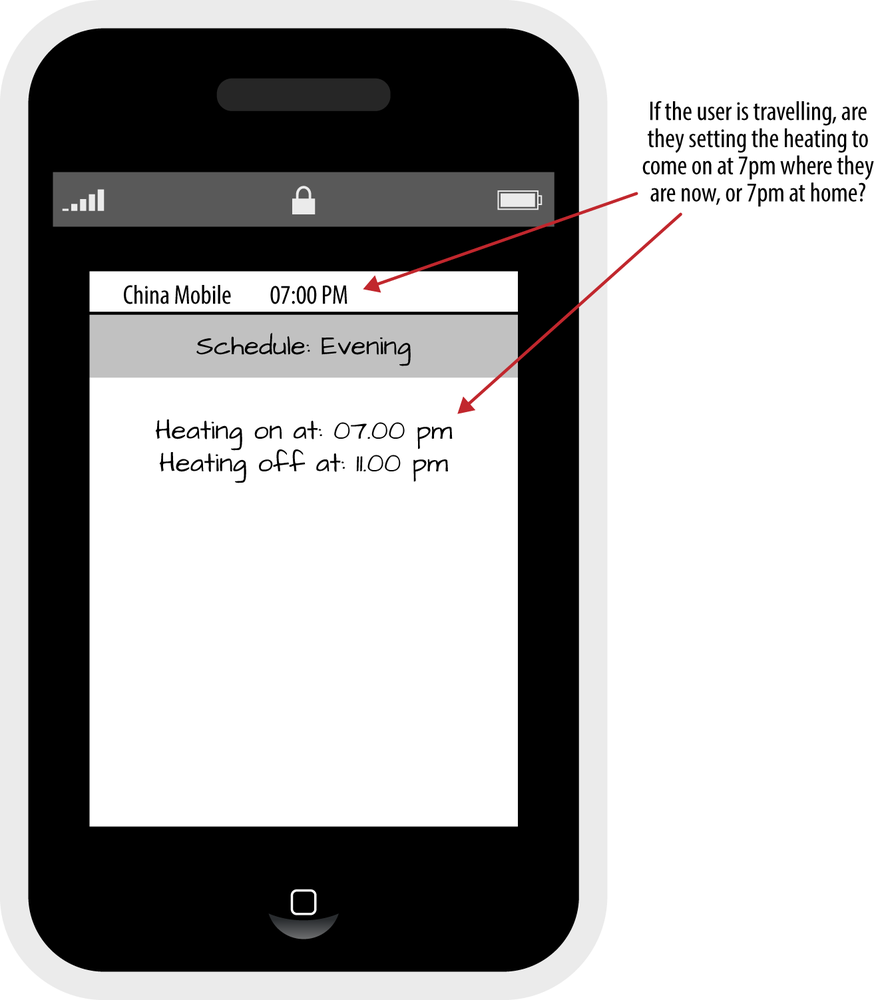

Sometimes, the difference in location between commands and actions causes unintended confusion or worse. A user of a remote control heating system once asked us whether, when he was traveling and used his phone to set his home heating to turn off at 7 p.m., the heating would be turned off at 7 p.m. local time to his house, or 7 p.m. in the time zone where his phone was currently located (see mockup in Figure 15-9).

Figure 15-9. Mockup of a mobile app showing local time in info bar and schedule setting in app

Both of these scenarios require users to have a fairly sophisticated understanding of the system to predict and explain likely behavior in different locations.
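One way to remove the ambiguity in the heating example is to store every schedule entry in the home's time zone and show the phone's local equivalent next to it. The following is a minimal sketch of that idea, not a description of any real heating product. The offsets, function names, and message wording are all assumptions made for illustration.

```python
from datetime import datetime, time, timedelta, timezone

# Assumed example: the house is on UTC+1 and the phone is currently on UTC-4.
# Fixed offsets keep the sketch self-contained; a real system would use
# named time zones so that daylight saving changes are handled.
HOME_TZ = timezone(timedelta(hours=1), "home")
PHONE_TZ = timezone(timedelta(hours=-4), "phone")


def schedule_in_home_time(day: datetime, off_at: time) -> datetime:
    """Interpret a schedule entry as wall-clock time at the house."""
    return datetime.combine(day.date(), off_at, tzinfo=HOME_TZ)


def describe_for_phone(event: datetime) -> str:
    """Show home time and the phone's local time side by side."""
    home = event.astimezone(HOME_TZ)
    phone = event.astimezone(PHONE_TZ)
    return (f"Heating off at {home:%H:%M} home time "
            f"({phone:%H:%M} where you are now)")


event = schedule_in_home_time(datetime(2015, 6, 1), time(19, 0))
print(describe_for_phone(event))
```

Showing both times in the confirmation message means the user does not have to guess which clock the system is using.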

Displacement in time

Here, users are effectively configuring a system to execute an action at a future time. An example might be a well-intentioned house rule that turns off “unnecessary” appliances to save electricity overnight. This requires users to anticipate their own future needs, which of course humans are not very good at. That “unnecessary” appliance that gets turned off might be the tumble dryer running overnight to ensure a shirt is ready for an important meeting. This is also an example of the consequences of users forgetting that they have set up an action, and then being negatively impacted by the execution of that action.

Displacement in function/application

Another factor that can make it more complex for users to understand the cause-effect relationship of system actions is multifunctionality, or the repurposing of one device or piece of data for a different or additional use than that originally intended. This requires users to understand additional consequences: for example, an “intelligent” security system could in theory draw on information from external services, like your calendar, to detect possible intrusions and avoid false alarms. Imagine you enter an evening social event into your calendar, which is subsequently cancelled at the last minute. You don’t bother to update your calendar, and head home. When you try to unlock your front door, the alarm sounds: the system thinks you’re out and so identifies you as an intruder. And when two rules apply to the same device, which one dominates? Should it be the rule that turns the lights down when you’re watching a movie, or the rule that turns the lights on to welcome someone home?

If a device set up for one usage is subsequently used for a second, it may function less well as a result of being inappropriately configured for the second usage. Some devices, notably smart plugs/outlets, can be moved and repurposed. A smart plug may be placed on a lamp in the living room, controlled by a rule to turn the lamp on for 10 minutes when motion is detected. If the plug is moved to a computer in the study without the system being reconfigured, the rule will now turn the computer on and off, potentially damaging it. Or if the plug is moved to a set of hair straighteners, it could even start a fire. A smart system would detect when the power consumption pattern of the smart plug had changed and ask the user to confirm whether it was now being used on another device, but there’s no reliable way to prevent errors.
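The power-consumption check described above could be sketched roughly as follows. This is a deliberately crude illustration: the function name, the 50 percent tolerance, and the sample wattages are all assumptions, and a real product would need a far more robust comparison.

```python
from statistics import mean


def looks_repurposed(baseline_watts, recent_watts, tolerance=0.5):
    """Return True if the recent average draw differs from the baseline
    average by more than `tolerance` (expressed as a fraction)."""
    base = mean(baseline_watts)
    recent = mean(recent_watts)
    if base == 0:
        return recent > 0
    return abs(recent - base) / base > tolerance


# Assumed sample readings, in watts.
lamp_history = [9.8, 10.1, 10.0, 9.9]        # roughly a 10 W lamp
after_move = [148.0, 152.5, 150.2, 149.3]    # roughly a 150 W computer

if looks_repurposed(lamp_history, after_move):
    print("This plug seems to be powering a different device. "
          "Pause its rules and confirm?")
```

The safest response to a detected change is probably to pause the plug's rules and ask, instead of guessing what the new device is.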

Of course, users must, where possible, explicitly grant permission before new uses of data or devices can take place, even if it’s just making a light available to the security alarm. But it’s almost impossible to prevent users from doing ridiculous, unwise, or even dangerous things with a system. There are many potentially unintended consequences of repurposing data and devices for secondary uses, and in a highly interconnected world, it may be hard for users to unpick these interrelationships and understand why certain things are not working as expected (not to mention what data is held about them and where it may end up).

Programming and notations

As well as the need to form an abstract conceptual model of the system and its capabilities, a user engaged in a programming activity must generally also learn a form of notation in order to create instructions for the system. This is yet another level of abstraction and cognitive overhead. Examples of notation systems that can be used with IoT services include If This Then That and smart home rules. But most of these systems are better suited to regular rules than the flexible permissions that govern many of our home lives—for example, if Jenny gets a good grade on her math test, then she can stay up late playing Xbox this weekend.

What is programming?

At what point does usage or configuration become programming? People argue at length about what is or is not programming: for example, whether writing HTML markup or Excel macros should be included in this category. For our purposes, this doesn’t matter. Our definition of programming represents a particular mindset and set of complex cognitive tasks that are, from an end user perspective, more mentally demanding than those typically required to use a product or UI.

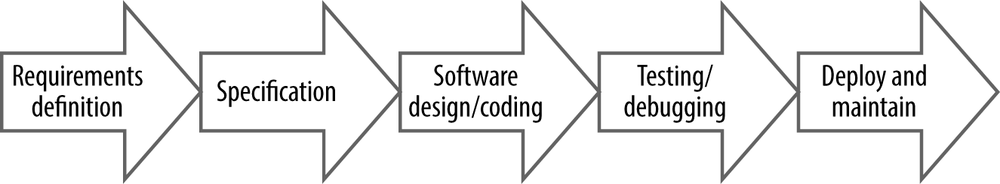

First, users must understand and frame their own problem. Perhaps they want to keep the garden lawn nice and green, whatever the weather. In software engineering terms, this is requirements definition. Then, they must figure out which tools they need to solve it (perhaps a connected garden sprinkler and maybe some moisture sensors, see Figure 15-10). This is specification.

Next, they must break down the problem into logical components. They have to understand the “normal” operation of the system but also need to anticipate future conditions and needs that might provide exceptions, such as hosepipe bans, the grandkids visiting and playing outside, and barbecues (to avoid the impromptu soaking of house guests). They then need to figure out how to get the tools they have to produce the desired actions: a problem of software design involving some form of abstract representation, or coding.

Figure 15-10. The Skydrop smart irrigation controller (image: Skydrop)

Finally, they have to check it actually works (testing), which may involve simulating conditions of use or simply letting it run for a while and seeing whether it behaves as expected. If it soaks the barbecue guests, they’ll have to figure out why and fix it—aka debugging. And if any part of that system is autonomous or learns over time, some bugs may only reveal themselves after lengthy use.

As illustrated in Figure 15-11, all of these—specification, design, testing, and debugging (and of course maintenance)—are software engineering problems. These can be interesting challenges, but they involve an unusual amount of effort for a consumer product. As discussed in Chapter 5, whether consumer users invest this effort will depend not just on their ability to do so, but also whether they perceive the value of the end result to outweigh the effort or risk of doing so.

Figure 15-11. The software engineering process

Programming closed systems is complex enough, but interconnectivity adds yet more challenges. Instructions and data may end up being used with devices or services other than the ones for which they were originally intended or authorized, which makes understanding the potential consequences of your actions far more complicated. You might have a home automation rule that turns off heavy power consuming devices when you go to bed, but if you subsequently buy an electric car that needs charging overnight, you don’t want that to lose power, too. Or devices shared between systems may be subject to conflicting instructions: the energy system is trying to turn off nonessential appliances at night when you’re on holiday, but the lighting system is trying to turn lights on to make the house appear occupied.

The risk for users is of losing control through sheer complexity. Interconnected autonomous systems, which make decisions and act independently, will be even more unpredictable (see “Autonomous/intelligent systems”). A related issue is retaining control of data and privacy, addressed in Chapter 11.

For every action or system behavior configured, users must consider the following:

§ How it will function under currently known conditions (e.g., which appliances should be run after 11 p.m. to make the most of cheap electricity).

§ What may happen in the future if some of those conditions change—for example, if the timing of cheap electricity changes. Or, as might happen at Little Kelham in the connected home case study, the user switches on an appliance when solar-generated energy is available and cheap but if everyone else does the same, then the price may rise while they are using it.

§ What may happen if the data or behaviors are shared with other apps, devices, tasks, people, or services. Are your well-meaning energy saving rules now preventing your guests from turning on the lights or kettle in the middle of a sleepless night?

Professional programmers do not expect to write bug-free code the first, second, or even third time around. Consumer users are extremely likely to misconfigure automation rules, causing unexpected things to happen. System designers must do everything they can to mitigate any damage.

How can we make controlling complex systems easier?

Programming will frequently be too hard, or at least too effortful, for users. What can we do about this? There are three broad solutions here: make programming easier, find an alternative to programming, or get someone else to do it.

Make programming easier

Many attempts have been made to make programming-like activities easier for end users. Most attempt to make programming feel more concrete, generally through simplified graphical approaches.

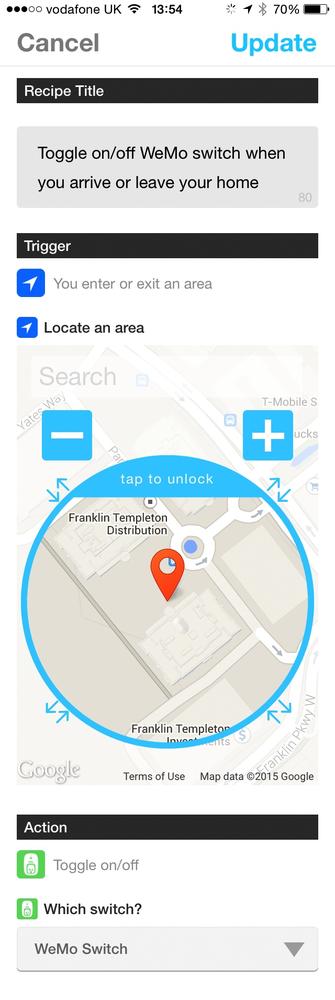

If This Then That is probably the best known consumer example, which can be used with a number of Internet of Things devices and systems (e.g., Philips Hue, Belkin WeMo products, and SmartThings devices). IFTTT (see Figure 15-12) offers simple trigger-action commands: so when a trigger event occurs (such as a child’s smart key fob arriving home), an action can be executed (such as texting the parent that the child is home from school).

Figure 15-12. IFTTT can be used to control a WeMo Switch

Another example of a consumer-oriented programming experience is WigWag: a connected home kit based around sensors and tags designed explicitly to support similar trigger-action programming (see Figure 15-13).

Figure 15-13. WigWag connected home system (image: WigWag)

Triggers can be directly measured with sensors (e.g., the front door is open), inferred from sensors (the user’s smartphone is not at home, therefore the user isn’t), or require some combination of sensor data and interpretation (electricity usage is currently 4kW and this is unusually high for this time of day). Some actions might require more than one trigger or action (e.g., if the liquor cupboard is opened and neither Mom nor Dad is at home, then text both of them). Or an action might be conditional on an ongoing state instead of a one-off trigger (e.g., while electricity is less than [x] cents per kWh, run the tumble dryer).
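A trigger combined with extra conditions can be represented quite compactly. The sketch below models the liquor cupboard example. The `Rule` structure, the event and action names, and the `fire` function are hypothetical and do not correspond to IFTTT, WigWag, or any other real service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, bool]


@dataclass
class Rule:
    name: str
    trigger: str                                  # event that fires the rule
    conditions: List[Callable[[State], bool]]     # must all hold
    action: str


def fire(rules: List[Rule], event: str, state: State) -> List[str]:
    """Return the actions of rules whose trigger matches the event
    and whose conditions are all true in the current state."""
    return [r.action for r in rules
            if r.trigger == event and all(c(state) for c in r.conditions)]


rules = [
    Rule("liquor alert", "liquor_cupboard_opened",
         [lambda s: not s["mom_home"], lambda s: not s["dad_home"]],
         "text_both_parents"),
]

state = {"mom_home": False, "dad_home": False}
print(fire(rules, "liquor_cupboard_opened", state))
```

Separating the one-off trigger from the ongoing conditions makes it easier to show users which part of a rule failed to match.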

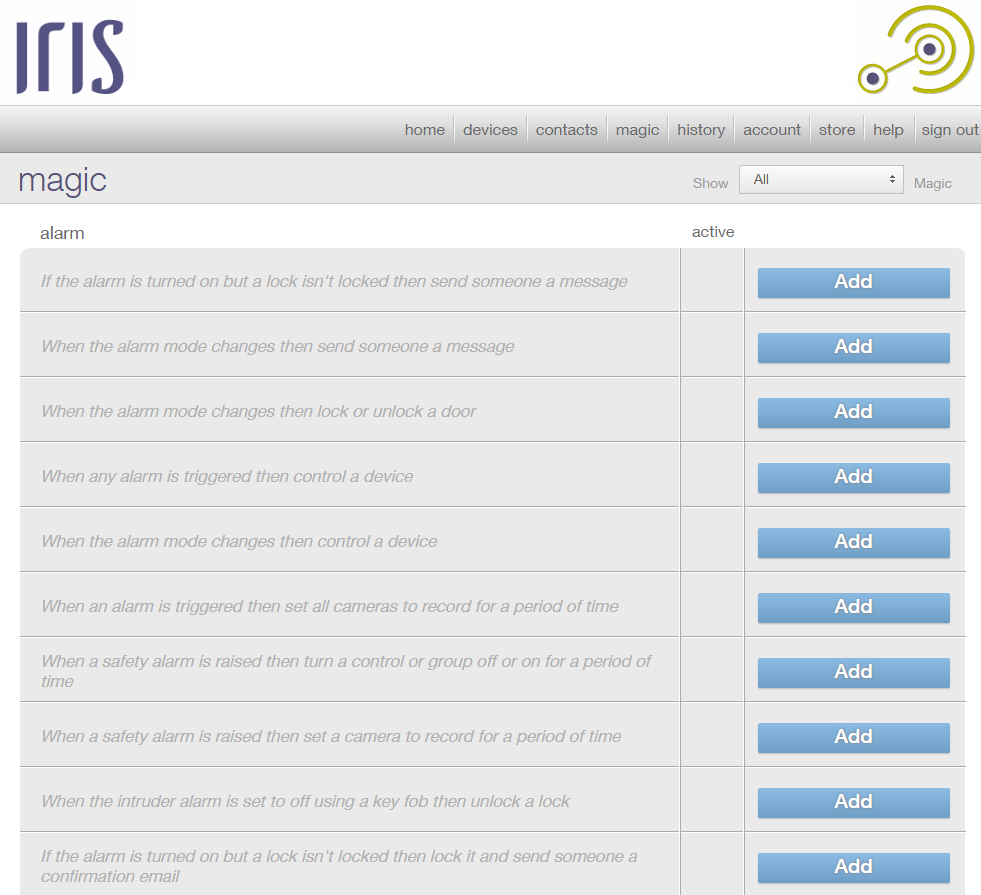

Smart home systems like Comcast XFINITY Home and Lowe’s Iris have long offered similar interfaces for creating home automation “rules” and “modes,” although not necessarily allowing devices to link in with third-party services.

Rules are typically trigger-action commands—for example, “when I turn the bathroom light off, then turn off the speakers in the bathroom” (see Figure 15-14).

Figure 15-14. Rules in Lowe’s Iris

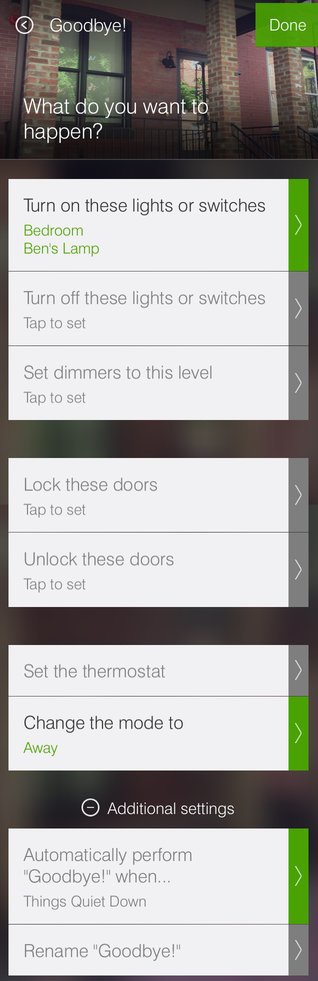

Modes are generally just grouped actions or macros required in a particular context. For example, activating holiday/vacation mode will turn down the heating, set the security alarm, and turn on a lighting pattern meant to simulate occupancy (see Figure 15-15). It’s important to differentiate between modes, which are ongoing states, and macros, which are shortcuts that execute multiple commands.[252] Vacation mode may be an ongoing state, but “at home” mode (used when you get home) is logically more of a macro. Some system designers mix the two. But attempting to make macros modal can get very messy.

Figure 15-15. Applying rules to modes in Comcast XFINITY Home (image: Comcast)

Imagine you turn on “movie” mode, which dims lights and turns on the home entertainment system. Halfway through, your housemate wants to grab a drink and go to the bathroom, so they turn some of these lights back on. Is movie mode still “on” despite some of the conditions of movie mode no longer being true? Are you in a modified form of movie mode with some functions overridden? How is this shown on the UI? You can easily end up in a mess of states and overrides.

It’s far easier to treat these things as macros. Macros turn a bunch of devices to some predetermined settings. If you want to change the state of those devices, you can. If you want to rerun the macro, you can. Modes set the system to an ongoing state. You then have to deal with the logical problems of changes being made to that state.
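The difference can be made concrete with a small sketch. Here a macro is a function that applies settings once and keeps no state, while a mode is an object that stays active and so has to account for overrides. The class, function, and device names are illustrative assumptions only.

```python
def run_macro(devices: dict, settings: dict) -> None:
    """A macro is fire-and-forget: apply the settings once, keep no state."""
    devices.update(settings)


class Mode:
    """A mode is an ongoing state, so it must decide what an override means."""

    def __init__(self, name: str, settings: dict):
        self.name = name
        self.settings = settings
        self.active = False

    def activate(self, devices: dict) -> None:
        devices.update(self.settings)
        self.active = True

    def overridden(self, devices: dict) -> list:
        """List devices whose current value no longer matches the mode."""
        return [k for k, v in self.settings.items() if devices.get(k) != v]


movie = {"lights": 10, "tv": "on"}

# As a macro: the housemate's change leaves nothing to reconcile.
devices = {"lights": 100, "tv": "off"}
run_macro(devices, movie)
devices["lights"] = 60

# As a mode: the system is still "in" movie mode, but one setting has drifted.
mode = Mode("movie", movie)
mode.activate(devices)
devices["lights"] = 60
print(mode.active, mode.overridden(devices))
```

The mode version leaves the designer with an open question the macro version never raises: what should the UI show when `active` is true but `overridden` is not empty?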

SmartThings treats modes and macros separately. The “Hello, Home” feature allows users to create macros of combined commands, such as “Good morning” (waking up) and “Goodbye” (leaving home). Macros can be used to activate modes (the defaults are “Home,” “Away,” and “Night”). So when the “Goodbye” macro runs, the home is set to “Away” mode (see Figure 15-16). Depending on the current active mode, a device may behave differently: for example, a motion sensor might turn on a light in “Night” mode but activate an alarm in “Away” mode. This is more logical than making everything a mode. But as the number of devices in a system mounts up, and users configure their own macros and modes, it can become very complicated to keep track of all the interrelationships between devices, macros, and modes.
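The relationship between a macro, the mode it sets, and mode-dependent device behavior might be sketched like this. This is not the SmartThings API: the mapping, function names, and action strings are hypothetical, and the "do nothing" default for "Home" mode is an assumption.

```python
# Hypothetical mapping from the active mode to what a motion event should do.
MOTION_RESPONSE = {
    "Night": "turn_on_hall_light",
    "Away": "sound_alarm",
}


def on_motion(active_mode: str) -> str:
    """Pick the motion sensor's action for the current mode."""
    return MOTION_RESPONSE.get(active_mode, "do_nothing")


def run_goodbye_macro(home: dict) -> None:
    """A macro that applies one-off commands and then switches the mode."""
    home["lights"] = "off"
    home["mode"] = "Away"


home = {"lights": "on", "mode": "Home"}
print(on_motion(home["mode"]))
run_goodbye_macro(home)
print(on_motion(home["mode"]))
```

Even in this tiny example, the sensor's behavior depends on a mode that was changed indirectly by a macro, which hints at how quickly the interrelationships become hard to track.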

Figure 15-16. The Goodbye macro in SmartThings, which triggers “Away” mode (image: SmartThings)

Our personal opinion, based on small-scale consumer testing, is that grouping functions as macros makes a lot of sense. But the effort of configuring modes, and the potential confusion they can cause, is currently far greater than the perceived benefit for most people, with the possible exception of holiday/vacation mode. Romantic mode (dim lights, raise thermostat temperature, turn on soft music) is common but perhaps the most jarring. Unless you and the person you wish to impress are both die-hard smart home enthusiasts, the effort involved in setting up your romantic mode may make you seem as if you have a bit too much time on your hands. Or worse, you are a bit of a lothario who requires automation because of the high frequency of your romantic encounters. Neither gives the impression of spontaneity or specialness that many people consider to be elements of romantic experience.

Just to make matters more complicated in the connected home, lighting systems often have another way of grouping controls: scenes (see Figure 15-17). These are akin to macros and modes. A home system of interoperating subsystems and devices could easily have all three of modes, macros, and scenes, possibly implemented slightly differently on each subsystem. This could easily become overwhelmingly complex.

At some point, it’s likely that these models will begin to standardize. But it’s far from clear what the eventual standard will be.

Figure 15-17. Control4’s MyHome Android tablet app, showing lighting scenes for a kitchen (image: Control4)

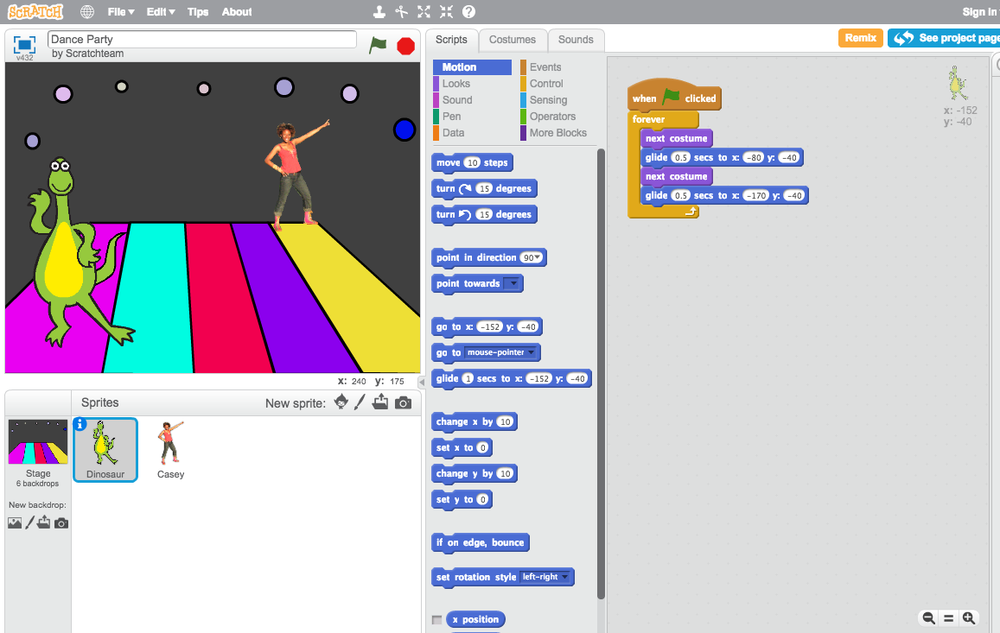

Academic researchers have explored various approaches over the years to make programming easier and more concrete for end users. The Scratchable Devices project from Rutgers University used a UI based on MIT’s children’s visual programming tool Scratch (see Figure 15-18) for configuring many home devices.[253] Other projects have used visual metaphors such as magnetic poetry,[254] jigsaw puzzles,[255] or even physical blocks.[256]

Figure 15-18. A game in Scratch[257] (image: MIT)

Sometimes the focus of these is device-centric; occasionally as in the magnetic poetry example, attempts are made to allow users to structure commands around people, tasks, or goals.

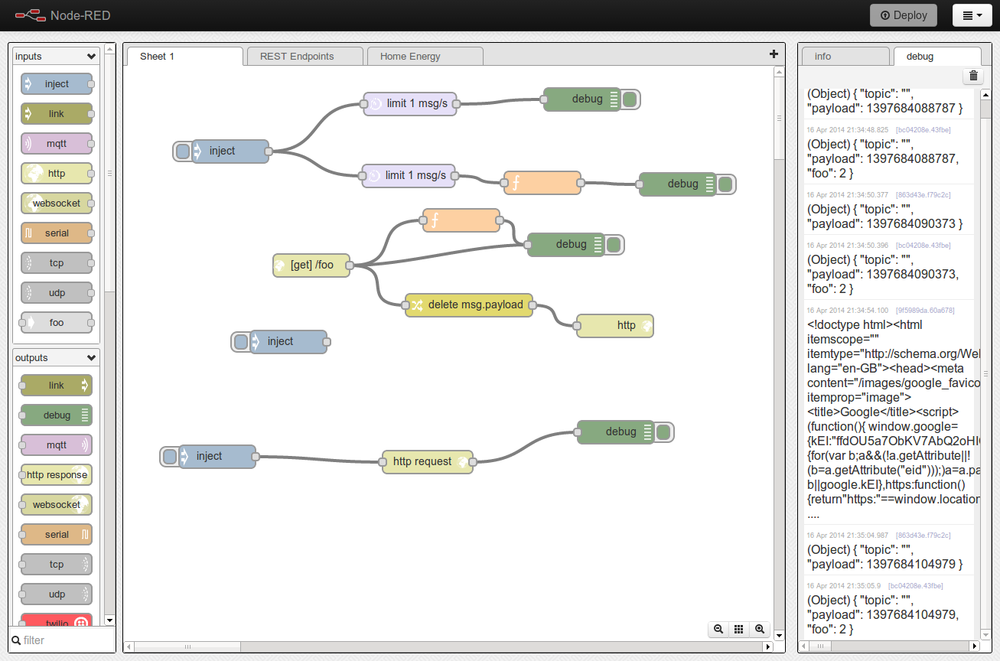

NodeRED[258] from IBM is worth a look as an example of visual programming, although it is designed to widen the audience for programming rather than to create a mass-market end user tool. This is a free, open source visual coding tool specifically designed for the Internet of Things, based on Node.js (a server-side JavaScript platform). It enables users to stitch together web services and hardware by replacing common low-level coding tasks (such as getting a service talking to a serial port) with a visual drag-and-drop interface. Components are connected together to create a flow, and much of the code needed to link them together is generated automatically (see Figure 15-19).

Figure 15-19. NodeRED (image: IBM)

An alternative approach to visual or written syntax is programming by example: demonstrating to the system what you want it to do. Microsoft employed this approach with HomeOS.[259] Users can record macros to activate sequences of actions, such as turning on lights when a door is opened. This allows users to set up simple macros without the need to learn an abstract visual or verbal representation of the system.

It sounds simple, but it’s not without issues. What if you put the house in learn mode, and are trying to demonstrate a sequence of actions you want the house to remember when someone else in the house does something unexpected and the system learns that too? Walking into the living room now doesn’t just turn on the lights, but also opens the cat flap and puts SpongeBob SquarePants on TV.

And programming by example does not get around the need to predict future needs accurately. Nor does it allow users to set up multiple triggers or specify conditions under which a macro should or should not be triggered.

It’s also not clear what happens when the user wants to edit a macro, or just find out which macros exist: do they still then need to engage with an abstract representation?

This highlights a trade-off in end user programming: making the tool easier to use generally limits its expressiveness, that is, its flexibility to support a wide range of commands and logic. Full-featured programming languages may be highly expressive and able to do a wide range of things, but have a steep learning curve for novices. For each tool, you need to define an appropriate balance, depending on what the user may need the system to do, and their prior knowledge and ability to configure it.

As discussed previously, the complexity in programming-like activities is not just in understanding how to instruct the system to do what you want. It’s also in diagnosing and fixing bugs, and ensuring there are no unintended consequences of your commands.

The Whyline programming tool (a research project from Carnegie Mellon University) allows users to ask “why did” and “why didn’t” questions about their program’s output.[260] For the purposes of research, the project focused on users controlling a Pac-Man character around a virtual world. But an approach like this could be hugely valuable in, for example, a home automation system. Users would be able to get answers to questions such as:

§ “Why didn’t the dishwasher run this morning?” (perhaps the smart electricity grid turned off the supply to that nonessential device at a time of peak demand)

§ “Why is the hall light flashing on and off?” (perhaps it is receiving conflicting instructions from security and energy saving apps)
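Answering questions like these depends on the system recording a reason for each decision, including decisions not to act. The sketch below shows one possible shape for such a log. It is not how Whyline works, and the `Decision` record and function names are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Decision:
    device: str
    action: str
    executed: bool
    reason: str


log: List[Decision] = []


def record(device: str, action: str, executed: bool, reason: str) -> None:
    """Store every decision, including decisions NOT to act."""
    log.append(Decision(device, action, executed, reason))


def why_didnt(device: str, action: str) -> Optional[str]:
    """Return the most recent reason the action was blocked, if any."""
    for d in reversed(log):
        if d.device == device and d.action == action and not d.executed:
            return d.reason
    return None


record("dishwasher", "run", False,
       "smart grid paused nonessential devices at peak demand")
print(why_didnt("dishwasher", "run"))
```

The design point is that the reason has to be captured at the moment of the decision; it is usually too late to reconstruct it afterward.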

For predicting future actions or testing, simulation is a possible approach—for example, the ability to test your new smart home rule in a sandbox environment and identify any clashes with other rules safely before unleashing them on the main system.
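A very simple form of this check compares a candidate rule against existing rules before it goes live. The sketch below flags pairs of rules that share a trigger and a device but want different outcomes. The tuple layout and rule names are hypothetical, and real conflicts are often subtler than an exact match on trigger and device.

```python
from itertools import combinations

# Each rule is (name, trigger, device, desired_state). All values are
# made-up examples based on the energy and security scenario in the text.
existing = [("save energy", "night", "hall_light", "off")]
candidate = ("look occupied", "night", "hall_light", "on")


def find_clashes(rules):
    """Return pairs of rule names that share a trigger and device
    but ask for different states."""
    clashes = []
    for a, b in combinations(rules, 2):
        if a[1] == b[1] and a[2] == b[2] and a[3] != b[3]:
            clashes.append((a[0], b[0]))
    return clashes


print(find_clashes(existing + [candidate]))
```

Running the check in a sandbox lets the system warn the user about the clash before the hall light starts flashing.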

Is programming the right model?

We’ve proposed that configuring a system to do something in another place, or at another time, is a form of programming, and that the Internet of Things is largely concerned with these things. That means that focusing on designing programming-like experiences is a key challenge for IoT. As programming is mentally complex, ways need to be found to make it easier for a wider audience.

But the success (or otherwise) of a programming-like experience for a particular problem isn’t just about whether users are able to understand their tools (which we tend to assume is a function of some combination of innate ability, learned skill, and the design of the tool). It’s also about attention.

In a world that is mostly powered by computers, it’s important that we empower as many people as possible with the logical and practical skills needed to understand and control those computers. Not everyone need be a professional programmer, but all of us benefit from a better grasp of computational thinking. Introductory tools like Scratch can be very helpful here. But however easy the tools or good our training, programming-like activities that are supposed to make life easier through automation can paradoxically make intensive demands on our attention to set up and manage them.

As discussed in Chapter 4, this effort needs to be justified in terms of the value to consumers, who tend to favor focused products over tools. But we can’t preconfigure products to know exactly how to work in complex multidevice systems. Some configuration is inevitable; it will be conceptually like programming, and it will need our attention.

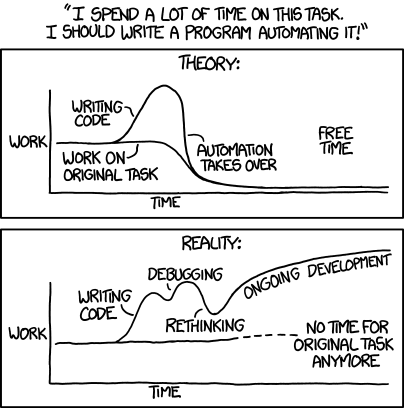

For many of us, attention is in short supply. Most consumers probably have at most a couple of burning issues in their lives in which they are sufficiently interested or invested to expend time in advanced configuration or system administration. Alan Blackwell frames the user’s dilemma as the “investment of attention”: should he spend time figuring out how to program a system, considering how hard it will be, how much time it will take, and what the benefit or risk might be? Or is it more efficient or less risky just to do it manually (see Figure 15-20)?
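The trade-off can be caricatured as simple arithmetic: does the time saved over the life of the automation exceed the time spent setting it up, plus an allowance for things going wrong? The function below is a crude sketch of that comparison. It is not Blackwell's model, and every parameter name and number is an assumption.

```python
def worth_automating(setup_minutes: float,
                     minutes_saved_per_use: float,
                     uses_per_year: float,
                     years: float = 1.0,
                     risk_minutes: float = 0.0) -> bool:
    """Compare total time saved with setup time plus an allowance
    for time lost to misconfiguration and debugging."""
    saved = minutes_saved_per_use * uses_per_year * years
    return saved > setup_minutes + risk_minutes


# Two hours of setup to save half a minute every day for a year.
print(worth_automating(120, 0.5, 365))
# The same rule, if fixing mistakes is expected to cost another 90 minutes.
print(worth_automating(120, 0.5, 365, risk_minutes=90))
```

In practice users rarely know these numbers in advance, which is part of why the decision is hard.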

By embedding programming-like activities in daily tasks, we risk disenfranchising a percentage of the population who lack the skills or time to cope with them.

Figure 15-20. Automation, by XKCD[261] (CC license)

When users’ own skills or time are too limiting, there is another approach: pay someone else to do it. Consider household plumbing: some people do their own, some people enlist the help of a friend or relative with good DIY skills. Anyone with a job beyond their own (or their friends’) abilities hires a pro.

Translating this to IoT, users might configure their own behaviors or buy apps for their homes or other consumer needs, or find shared “recipes” on services like If This Then That. But these would still require a considerable degree of customization to fit each user’s personal circumstances. For the most daunting problems, they might pay someone else to understand their needs and set it up the way it should work, as is the case with the Time It Right Orthodox Jewish home automation example briefly described in Chapter 4. We may see the emergence of new categories of home configuration and support technicians.

Giving Machines Control

In this section, we’ll look at the opportunities and challenges of putting the system in control.

Make the system smarter

Some researchers have argued that making programming more usable is not feasible, and instead, we should make smarter systems that learn and adapt to the user’s needs. Popular fiction loves to characterize AIs as anthropomorphized servants, like The Jetsons’ robot maid Rosie[262] or J.A.R.V.I.S. in Iron Man.[263] But most examples of intelligent or adaptive systems right now are more prosaic, specialized devices like thermostats and vacuum cleaners (see Figure 15-21).

Figure 15-21. The iRobot Roomba 870 vacuum cleaner (image: iRobot)

Making devices smarter offers far greater potential than remote controls and programming. Nicholas Negroponte, the founder of MIT Media Lab, recently argued that the kind of remote control–based IoT products coming out right now are neither innovative nor intelligent:

I look today at some of the work being done about the Internet of Things, and I think it’s kind of tragically pathetic, because what has happened is people take the oven panel and put it on your cell phone, or the door key onto your cell phone, just taking it and bringing it to you, and in fact that’s actually what you don’t want. You want to put a chicken in the oven, and the oven says, “Aha, it’s a chicken,” and it cooks the chicken. “Oh, it’s cooking the chicken for Nicholas, and he likes it this way and that way.” So the intelligence, instead of being in the device, we have started today to move it back onto the cell phone or closer to the user, not a particularly enlightened view of the Internet of Things.[264]

What intelligent systems can do

A simpler autonomous system may have a goal, be able to observe specific data inputs, recognize patterns in the data, and execute a limited range of functions in response.

For example, a thermostat might have the goal of maintaining a comfortable temperature in a home when it is occupied. It would monitor sensor inputs, such as motion, temperature, and lighting, or the location of mobile phones, and activate behaviors in response, such as turning the heating or AC down when there is no one at home. It might also, over time, learn the patterns of occupancy of the home and adjust its own schedule accordingly (as the Nest thermostat does).
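Learning an occupancy pattern can be illustrated with a toy example that counts how often the home was occupied at each hour. This is only a sketch of the general idea and not how the Nest thermostat works; the function name, the 60 percent threshold, and the sample week are assumptions.

```python
from collections import defaultdict


def learn_occupied_hours(observations, threshold=0.6):
    """observations: iterable of (hour, occupied) pairs.
    Return the hours at which the home was occupied at least
    `threshold` of the time."""
    seen = defaultdict(int)
    occupied = defaultdict(int)
    for hour, present in observations:
        seen[hour] += 1
        occupied[hour] += int(present)
    return sorted(h for h in seen if occupied[h] / seen[h] >= threshold)


# One assumed week of observations at 7:00, 13:00, and 19:00.
week = ([(7, True)] * 5 + [(7, False)] * 2
        + [(13, False)] * 6 + [(13, True)]
        + [(19, True)] * 7)
print(learn_occupied_hours(week))
```

A schedule derived this way would heat the home at 7:00 and 19:00 but not at 13:00, and would get it wrong on the unusual day someone stays home for lunch.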

A robot vacuum cleaner, like the Roomba, has the goal of cleaning the entire floor of a room. By traveling around the room bumping into things, it learns the layout of the room and its obstacles over time. This allows it to calculate the optimum path for cleaning the room next time around, and know when it has finished.

A more advanced artificially intelligent system might have some broader knowledge about the domain in which it operates, and the ability to learn about the user’s needs and intentions. This is the stuff of the kind of smart home dreams that have been around since Mark Weiser, and before. For example, if a “smart” home system noticed that a user had an early meeting on the other side of town and that rainy weather was likely to result in more traffic congestion, it might decide to adjust her alarm clock to wake her up earlier and turn the hot water on for an earlier shower, factoring in the amount of time it has observed she typically takes to get ready.

There’s definitely scope for autonomous systems to help us out with some of the tasks that humans are simply not very good at or find boring (such as controlling heating, and vacuuming). Research projects such as Intel’s Proact[265] and MIT’s house_n[266] have also explored the use of intelligent systems to monitor the day-to-day activities of people with dementia, through tagging household items with sensors, learning their “normal” patterns of use, and alerting users or caregivers to anomalies and risks, such as ovens left on.

The fundamental challenge is that it is very hard for computers to understand humans, and behave in “common sense” ways. For an autonomous system to understand how to act in any situation, it needs to know how to infer and use context appropriately. Humans are able to understand context holistically. We take in a huge amount of information about a given situation (much of it subconsciously) and intuit what is important and how best to act. Getting a computer to do the same thing requires us to tell it, in very concrete and logical terms, what to look for, how to interpret it, and how to act.

This is extremely hard to get precisely right, and even a very smart system will sometimes get it wrong. When it does, it will be intensely annoying at best and disastrous at worst. Cold showers, unpalatable meals, or unnecessarily early awakenings while the system learns your preferences are one thing. Being locked out of your own house because your home thinks you are an intruder or being unable to make your car take you to hospital because it thinks it’s a work day would be utterly unacceptable. But getting it right can take a level of intelligence that is prohibitively time consuming and expensive to develop, just to do something that is common sense to a human.

Scott Jenson uses the example of sensor-based lighting in a bedroom to illustrate how complex inferring the right context of a simple task can be. It sounds simple to turn on the lights when you walk into a room, and most of the time that might be useful, but the exceptions to the rule are very hard to get right:

Problem: I walk into the room and my wife is sleeping, turning on the lights wakes her up.

Solution: More sensors: detect someone on the bed.

Problem: I walk into the room and my dog is sleeping on the bed, my room lights don’t turn on

Solution: Better sensors: detect human vs pets

Problem: I walk into the room, my wife is watching TV on the bed. She wants me to hand her a book but, as the room is dark, I can’t see it.

Solution: Read my mind.[267]

Humans are extremely good at this type of context inference, and will not tolerate “silly” errors from machines, especially not in their own homes where we expect things to be adapted to our needs. Intelligent systems may have to be so smart to work effectively that the effort of building and teaching them will only be justified if they provide significant value. But in a consumer setting, some of the more advanced ideas are overkill for the amount of value we get from them. Most of us don’t need or want hard AI just to turn on a coffee machine. And maybe the advanced AI would resent doing something this menial:

Here I am, brain the size of a planet, and they ask me to take you to the bridge. Call that job satisfaction? ‘Cause I don’t.

—MARVIN THE PARANOID ANDROID, THE HITCHHIKER’S GUIDE TO THE GALAXY[268]

And the learning period the system requires to have any hope of getting it right can require additional user effort and vigilance to teach the system “normal” behavior. For example, Nest thermostat users must control the system manually for the first week or so. If a heating system is using electrical activity above baseload as a proxy for presence or activity, then home occupants will have to avoid running the dishwasher or tumble dryer when they are out or in bed during the learning period, or it will think they are in, up and about.

However, we can expect the accuracy of machine learning to improve in the future. Huge investments are being made into research in autonomous technologies, such as self-driving cars, as companies like Google see this as a valuable market opportunity.

A possible middle ground that could help alleviate some of the pain of configuring complex systems is a mix of user control and autonomy. An intelligent heating system might monitor occupancy and set its own schedule, but also give users control to turn the heat up or down, or demand more hot water, and over time the system would adjust better to their needs. It might also take into account the fallible human psychology in place around heating: for example, the tendency to crank the thermostat up high when feeling cold in the false belief the house will warm up faster. The system might acknowledge the user’s action and give the impression that the heating had been turned up a lot, but actually only turn it up a tiny bit. Or if the house was already in the process of warming up to a schedule setting, it might quietly ignore their command. Autonomous systems can be useful in bridging the gap between what users actually need and their ability to configure the tools at hand to achieve that, without taking away control.
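The idea of accepting a user's request while limiting how far the system actually moves can be sketched as a capped adjustment. The function below is illustrative only: the name, the one-degree step, and the 23-degree ceiling are assumptions, and whether to tell the user about the cap is a design decision the code does not settle.

```python
def apply_user_setpoint(current_target: float,
                        requested: float,
                        max_step: float = 1.0,
                        comfort_max: float = 23.0) -> float:
    """Move the target toward the user's request, but by at most
    `max_step` degrees and never above `comfort_max`."""
    capped = min(requested, comfort_max)
    step = max(-max_step, min(max_step, capped - current_target))
    return current_target + step


# The user cranks the dial from 20 to 30 degrees.
print(apply_user_setpoint(20.0, 30.0))
# A small downward adjustment is applied in full.
print(apply_user_setpoint(20.0, 19.5))
```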

A system might also observe the user taking an action repeatedly (e.g., always turning off certain appliances at night), and ask whether they would like to automate this in the future. Or a system might notice a pattern of repeated overrides of a smart home rule (e.g., a light that has been turned on as part of a macro is always manually turned off immediately afterward) and offer help modifying the rule.
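Spotting a pattern of repeated overrides can be as simple as counting them. The sketch below assumes the system already logs a (rule, device) pair each time a user manually reverses something a rule just did. The function name, the threshold of three, and the message wording are hypothetical.

```python
from collections import Counter


def suggest_rule_fixes(override_events, min_count=3):
    """override_events: iterable of (rule_name, device) pairs, one per
    manual override made shortly after the rule ran.
    Return a prompt for each pair overridden at least `min_count` times."""
    counts = Counter(override_events)
    return [f"'{rule}' keeps being overridden on {device}. Edit the rule?"
            for (rule, device), n in counts.items() if n >= min_count]


events = [("Good night", "porch_light")] * 4 + [("Good night", "tv")]
for prompt in suggest_rule_fixes(events):
    print(prompt)
```

Requiring several overrides before prompting keeps the system from nagging about one-off exceptions.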

UX risks of autonomous systems

Autonomous systems can save users time and effort, and do certain types of job better than humans. But of course the user always needs a safe, easy override, in case the system fails or does something strange. And designers need to be aware of some potential user experience pitfalls.[269]

Reduced control

Giving too much control to the system can take control away from the user and prevent them from getting the system to do what they want it to do. The system may not be pulling a HAL and trying to take over, but it will be frustrating and disempowering at best. A smart home might have windows that are opened and closed automatically to manage the internal climate, but if these controls stop working properly they risk opening and closing windows at random. Residents should have an override switch to control the windows themselves, and if all else fails it should be possible to shut the system off without losing manual control of the windows.

If the autonomous actions are only occasional, the system could ask permission before acting, for example: “I see you never come home before 5 p.m. but the heating comes on at 4. Would you like me to adjust your schedule?” Or it could notify the user after making a change and give them the option to cancel the action. But if the system is making lots of decisions and frequently asking for permission, this will become intrusive and irritating.
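The trade-off between asking permission and interrupting too often could be expressed as a policy keyed on how frequently the system acts. This is only a sketch: the thresholds are arbitrary, and the third tier (`log_only`) is an assumption added here for illustration, on the premise that the user can review a history of actions later.

```python
def confirmation_mode(autonomous_actions_per_week, ask_limit=2, notify_limit=10):
    """Choose how intrusive the system should be about its own decisions.

    The limits are hypothetical defaults, not researched values.
    """
    if autonomous_actions_per_week <= ask_limit:
        return "ask_first"        # occasional: ask permission before acting
    if autonomous_actions_per_week <= notify_limit:
        return "act_then_notify"  # regular: act, then offer an undo
    return "log_only"             # frequent: act quietly, keep a reviewable log
```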

Reduced predictability and comprehensibility

For users to be in control, they need a clear understanding of how the system works, what it does, and why it behaves in particular ways in response to their actions or certain situations. It may not be that easy to explain why a complex system controlled by adaptive rules is behaving as it is, especially if it has a limited UI through which to communicate. It may not even be that easy to understand the boundaries of what it can or cannot do.

As the writer Howard Rheingold puts it:

“What child will be able to know that a doorknob that recognizes their face doesn’t also know many other things? We will live in a world where many things won’t work, and nobody will know how to fix them.” [270]

And autonomous systems interacting with other autonomous systems will be especially hard to keep track of.

Balancing user control with system autonomy

As smart (truly smart, not just connected) devices become more commonplace in the world around us, we will need to figure out how to design systems that allow people to do what they are good at, and machines to do what they are good at.

Users should never lose control: there must always be an override. If the override fails, the system should be usable manually. While the system is learning, it should ask the user’s permission to act autonomously if that would not overwhelm them with messages (e.g., “I see you tend to wake up at 7 a.m. Would you like me to set an automatic alarm for 7 a.m. every weekday?”). If that creates too much overhead for the user, it should at least be possible for the user to understand everything the system does, why it does it, and to correct any incorrect assumptions in the user model (“Mom does not live here, she just visits occasionally”).
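One way to make the user model inspectable and correctable is to store every belief alongside its evidence, and to mark beliefs the user has set so that later learning cannot overwrite them. The class and field names below are hypothetical. This is a sketch of the idea, not a description of any existing product.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str          # what the system believes, in plain language
    evidence: str           # why it believes it, shown to the user on request
    set_by_user: bool = False


class UserModel:
    """Hypothetical store of the system's beliefs about a household."""

    def __init__(self):
        self.assumptions = {}

    def infer(self, key, statement, evidence):
        """Record a learned belief unless the user has already corrected it."""
        current = self.assumptions.get(key)
        if current is not None and current.set_by_user:
            return current  # the user's correction always wins
        self.assumptions[key] = Assumption(statement, evidence)
        return self.assumptions[key]

    def correct(self, key, statement):
        """Let the user overwrite a belief; later inference cannot undo it."""
        self.assumptions[key] = Assumption(
            statement, "stated by the user", set_by_user=True
        )

    def explain(self, key):
        """Return the belief and its evidence as one readable string."""
        a = self.assumptions[key]
        return f"{a.statement} ({a.evidence})"
```

For example, after the system infers that Mom lives in the house, a call to `correct("mom", "Mom visits occasionally")` replaces that belief, and subsequent `infer` calls for the same key leave the correction in place.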

Systems could offer to automate routines that are clearly established, like shutting all windows when the last person leaves.

Intelligence can also be used not to change system functionality but to offer adaptive help to the user as they try to complete unfamiliar tasks, or to adapt the interface or information presented to better fit their needs at that time or abilities.

We should also not forget the emotional dimension to automation. The fear of the machines taking over is almost as big a barrier as the actuality. Users often overestimate how much a system actually knows about them and how much control it really has. Even if a system isn’t really that smart or capable, it must be made to feel nonthreatening. Part of that is about being transparent about the information being captured, and what is being done with it, as described earlier. Another part is about the emotional design of the system: the form and appearance of the devices, and the conversation it has with the user.

In robotics and 3D animation, the term “uncanny valley” is used to describe the unsettling effect of faces that are almost, but not quite, human. A face that is clearly not human feels far less alien and threatening. The designer Matt Jones draws the parallel with autonomous systems, suggesting that systems that try, and fail, to be smart like a human will feel creepy. Instead, he proposes designing things that we know to be less smart and capable than ourselves, that help us retain our sense of control and not feel threatened. He calls this principle “be as smart as a puppy”:

Making smart things that don’t try to be too smart and fail, and indeed, by design, make endearing failures in their attempts to learn and improve. Like puppies.

—MATT JONES[271]

Approaches to Managing Complexity

In this section, we’ll consider some emerging methods by which complex systems of many interconnected devices can be made more manageable to users.

How UX in the Platform Scales Up

In Chapter 12, we talked about designing platforms: software systems that support the development of multiple applications. In that chapter, our focus was on the initial design challenges of a growing platform: common design components to make the UX and UI design feel coherent and consistent.

As a platform scales to support more and more devices and more applications (or services), you’ll find it’s no longer practical to hardcode in all the interrelationships between those devices, applications, and users. You need some way to automate the work of coordinating all these elements. And you need flexibility in the system to respond to devices, functions, and combinations of devices and functions that you haven’t anticipated.

This will take the form of data models and logic that specify how devices and software services should work together. In architectural terms, this will sit in the platform “middleware.” Your system engineers will have many issues to address in the platform logic, not all of which will have a direct impact on UX. But for a complex system, your ability to create a compelling and useful design is based on building blocks that the platform creates for you. The right platform logic will enable you to create a UX architecture that fits user needs well. If the platform logic doesn’t help you put the pieces together in the right way, it will be difficult or impossible to mitigate this with UI design.

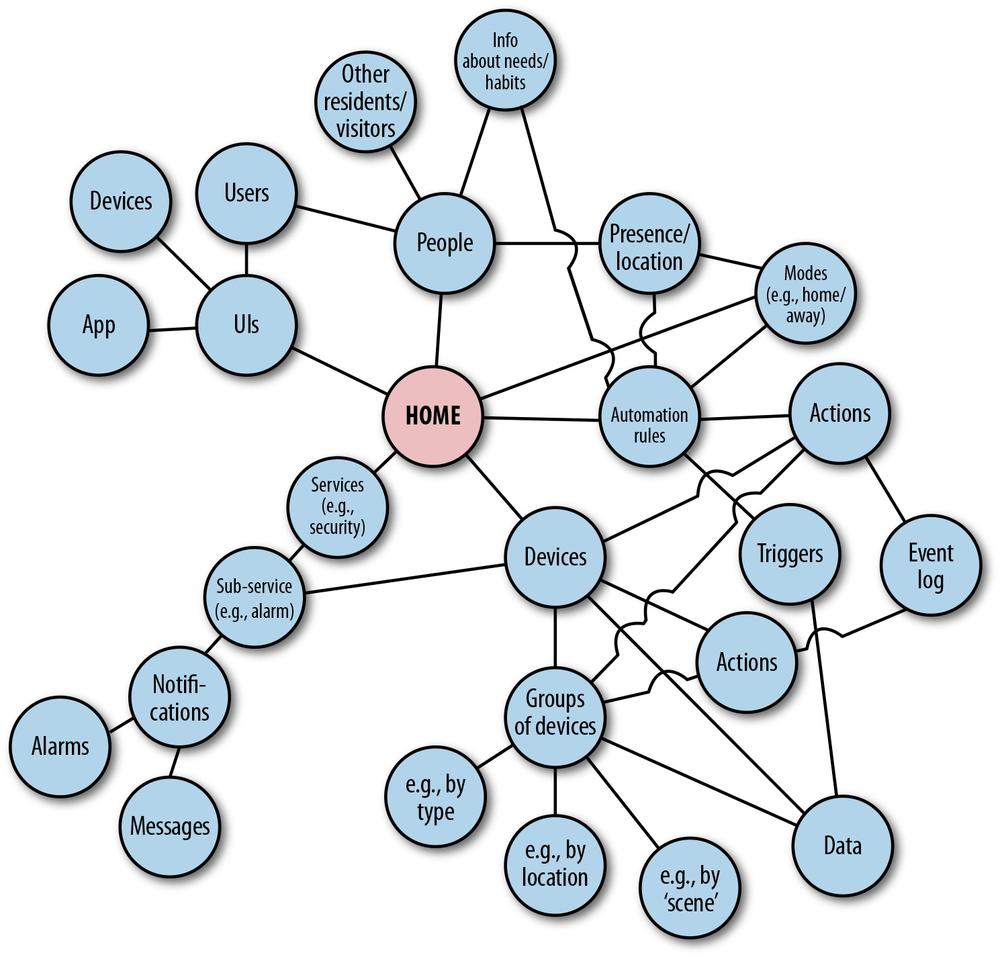

Earlier we talked about some system constructs for a connected home. There might be services (the smoke alarm, lighting, energy usage), devices (motion sensors, cabinet sensors, window shade controls), controls (on/off, up/down, timers, set/unset), various forms of notification (alarm, message, status), users or contacts (with presence status or location information and varying system permissions). There might even be multiple properties or gateways. We also presented the variety of ways user needs might be thought of: around devices, people, locations in the home, times or states (away, night), optimization (comfort, efficiency), controlling access to dangerous or private things.

Some of the specific use cases that you might need to support could include:

§ The user needs a way to keep track of everything that happens on the system and why it happens (e.g., what rules are running, and what assumptions is the system making when it acts autonomously?). For example, “At 8 p.m., the random security lights app was activated, because it was dark outside and no one was at home.”

§ When the user adds a new device, the system suggests sensible ways of incorporating it into the system, and sensible ways it can work with other devices. It already knows that lights can be useful for security, and that there is no point offering to turn on the washing machine when the burglar alarm is activated.

§ The user can address devices and functions in ways that make sense, for example, turning off downstairs lights or entertainment devices or locating Jessica’s toys or monitoring the baby’s room or Granny’s apartment.

§ The user needs a way to see and edit who is allowed to do what on the system. The builder needs to be able to come and go for a week, but shouldn’t be able to pass on their access to anyone else.

§ The user needs a way to look up a device, and find out which applications, services, or rules are controlling it, and which other devices it is coordinating with. Why is that light flashing on and off at 8 p.m. every night?

§ The user wants to add a rule to turn off nonessential devices at night to save energy. Will this break anything, conflict with any other rules, or otherwise cause unexpected consequences?
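The last two use cases become tractable once rules are stored as data rather than buried in application code. The sketch below assumes a deliberately simple rule shape (one trigger, one device, one target state). The `Rule` fields and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    trigger: str   # e.g. "night", "away", "7am"
    device: str
    state: str     # e.g. "on" or "off"


def rules_controlling(device, rules):
    """Answer: which rules touch this device?"""
    return [r.name for r in rules if r.device == device]


def conflicts(new_rule, rules):
    """Existing rules that drive the same device to a different state
    on the same trigger as the proposed rule."""
    return [
        r.name
        for r in rules
        if r.device == new_rule.device
        and r.trigger == new_rule.trigger
        and r.state != new_rule.state
    ]
```

With a "security lights" rule that turns the hall light on at night already in place, proposing an "energy saver" rule that turns the same light off at night would be flagged before it is saved. Real conflict detection would also need to handle overlapping time windows, rule priorities, and indirect effects, none of which this sketch attempts.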

There is no one way of organizing system functionality in a traditional hierarchical UX that supports all of these needs. You can force people to think in terms of a simplified schema (e.g., organizing the house by rooms), but it will feel pretty clunky and unnatural once you get beyond a certain number of devices. You’d prefer to design something that’s flexible enough to support the way they think, but just giving them every possible piece of functionality would risk overloading them with options. So, you need some kind of organizational logic to underpin the UX and bridge the gap between how the system is made up and what users need. This means some mix of system intelligence and data models to describe the devices, data, and system functionality, and how those things can be combined in different ways to meet different needs. Think of this as UX design for the platform layer.

The goal of your platform UX is to create a system that not only feels consistent and coherent, but also behaves in commonsense ways. But computers don’t have our knowledge and experience of the world, or the ability to reason with it, unless we program that in. If users have to supply all of that themselves, they might well decide that the system is too much hassle to use. So we, as system designers, have to help them.

The UX design work that goes into a platform covers two key areas:

§ Common design elements that help create consistency and coherence in the UX across different functions and applications (as we saw in Chapter 12).

§ Data models and logic describing how all the components in the system relate, to help it behave in smarter ways. These can be used to organize controls and interpret data more intelligently. If you want to be really flexible and provide lots of different views, you’ll need some metadata to model the relationships between the devices, data, context, and people. Who is “Jimmy”? What is in the living room? Which devices and states relate to watching a film? An autonomous system might also have broader knowledge about user needs and the context in which it is operating.
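Questions like “What is in the living room?” can be answered from metadata attached to each device, without building a separate screen hierarchy for every way of slicing the home. The sketch below is illustrative: the device ids, the metadata keys (`type`, `room`, `floor`, `owner`, `used_for`), and the `select` helper are all assumptions.

```python
# Hypothetical metadata, part system-generated and part entered by the user.
DEVICES = {
    "lamp-1": {"type": "light", "room": "living room", "floor": "downstairs",
               "used_for": {"watching a film"}},
    "tv-1": {"type": "entertainment", "room": "living room",
             "floor": "downstairs", "used_for": {"watching a film"}},
    "shade-1": {"type": "window shade", "room": "living room",
                "floor": "downstairs", "used_for": {"watching a film"}},
    "lamp-2": {"type": "light", "room": "bedroom", "floor": "upstairs",
               "owner": "Jimmy", "used_for": set()},
}


def select(devices, **criteria):
    """Return ids of devices whose metadata matches every criterion.

    Multi-valued metadata (sets, lists, tuples) matches on membership;
    everything else matches on equality.
    """
    def matches(meta):
        for key, wanted in criteria.items():
            value = meta.get(key)
            if isinstance(value, (set, list, tuple)):
                if wanted not in value:
                    return False
            elif value != wanted:
                return False
        return True

    return sorted(dev_id for dev_id, meta in devices.items() if matches(meta))
```

The same data then supports several views: `select(DEVICES, type="light", floor="downstairs")` for “downstairs lights”, `select(DEVICES, owner="Jimmy")` for one person's things, and `select(DEVICES, used_for="watching a film")` for an activity.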

If you’re designing services that run on top of an existing platform, you’ll need to understand what it does and what standard ways of handling things exist. If you’re designing things that run on a new platform, you may find yourself having to think about general platform components and logic as well as the specifics of your new application. This can be hard: you have to think about both the big picture and the details. You have to create generalizable components and logic that work for many use cases and situations. But you also have to design for specific use cases and situations, or the design won’t serve any of them well.

Data Models

Data models describe connected things to applications, driving discovery and linking.

—IOT RESEARCHER MICHAEL KOSTER[272]

In this chapter, we use the term “data model” as a catch-all for a range of concepts that might be used in system software. These might describe system components, including devices, users, and services (in the sense of applications that may run on your platform). They may describe the data collected by your system, and they may define the relationships between different system components. Or they might provide wider domain knowledge about user needs or the context in which the system operates.

Data models allow us to configure the system to behave in smarter ways. In a small system, you could code in every single possible interrelationship between the different people and devices and services. But as we saw earlier (Figure 15-3), that would soon become an unmanageable headache. Instead, you create an abstract representation in software that automates some of the work of orchestrating those components.

Data models can be used to improve interoperability, organize controls, and interpret data in smarter ways, better suited to bigger systems. An autonomous system might use domain knowledge about user needs, activities, and context to determine how to act.

Data models can make or break a user experience. They give the system a “mental model” of how things work together. If there is no abstraction in your system, you’ll find that as it scales and more devices are added, you’ll feel increasingly constrained in the functionality you can offer. Abstractions allow you to make the system more general purpose. Instead of viewing a camera as just part of a security alarm, you can treat it as a way to capture media, freeing it up for alternative uses such as monitoring a baby, or a pet’s feeding habits. And the right data models will support you in designing a system that is a good fit for the user’s mental model of how things should work together, even as it scales.
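The camera example can be made concrete by describing devices in terms of what they can do, and applications in terms of what they need, then matching the two. The capability names and both tables below are invented for illustration and do not follow any published standard.

```python
# Hypothetical capability descriptions: what each device can do.
DEVICE_CAPABILITIES = {
    "hall camera": {"capture_video", "detect_motion"},
    "nursery camera": {"capture_video", "capture_audio"},
    "door sensor": {"detect_open"},
}

# What each application needs, stated without naming any device type.
APP_REQUIREMENTS = {
    "security alarm": {"detect_motion"},
    "baby monitor": {"capture_video", "capture_audio"},
    "pet feeding log": {"capture_video"},
}


def usable_devices(app):
    """Return the devices whose capabilities cover everything the app needs."""
    needed = APP_REQUIREMENTS[app]
    return sorted(
        name for name, caps in DEVICE_CAPABILITIES.items() if needed <= caps
    )
```

Because the pet feeding log asks only for video capture, both cameras qualify, even though one was installed as part of the alarm. Hardcoding “camera belongs to security” would have ruled that out.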

If IoT is to scale, some kind of data model is essential. The question is not whether data models are needed, but which ones. Commercial platforms (such as Thingworx[273]) may have their own proprietary data models. Some people are working on shared standards to drive interoperability, but this is still a nascent technical field.

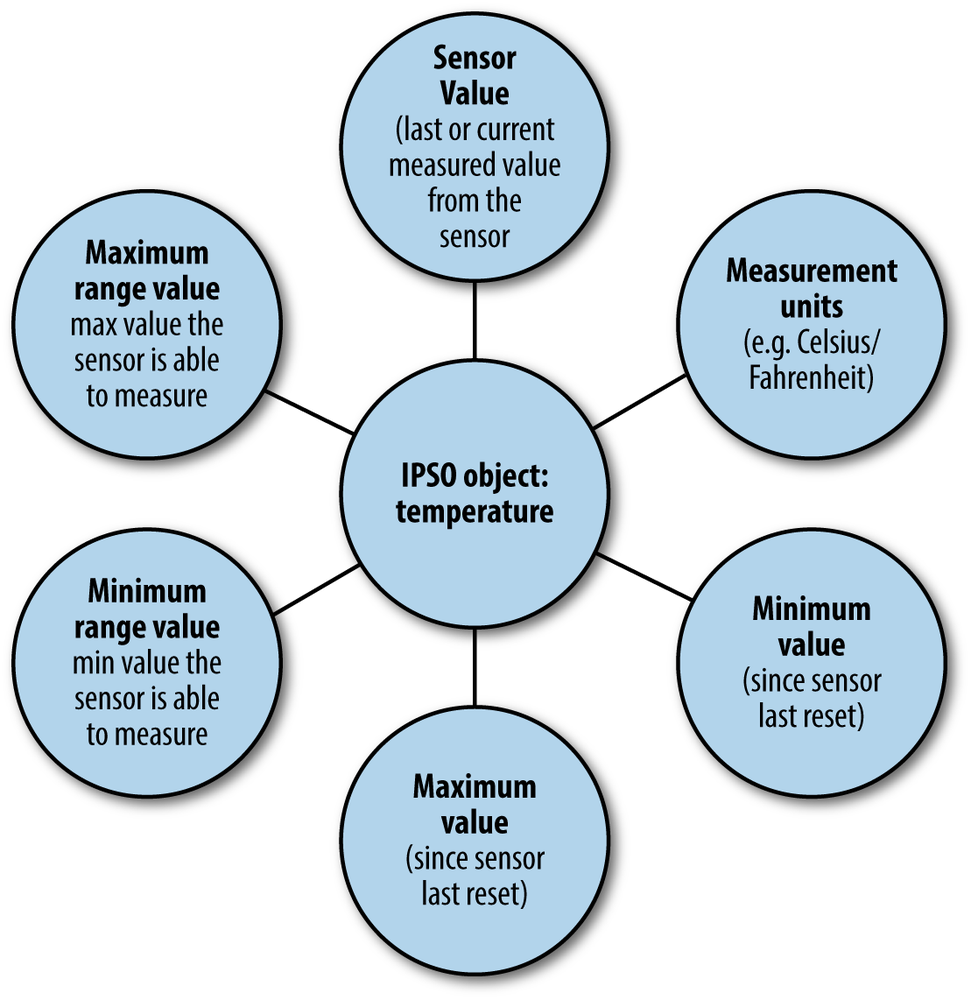

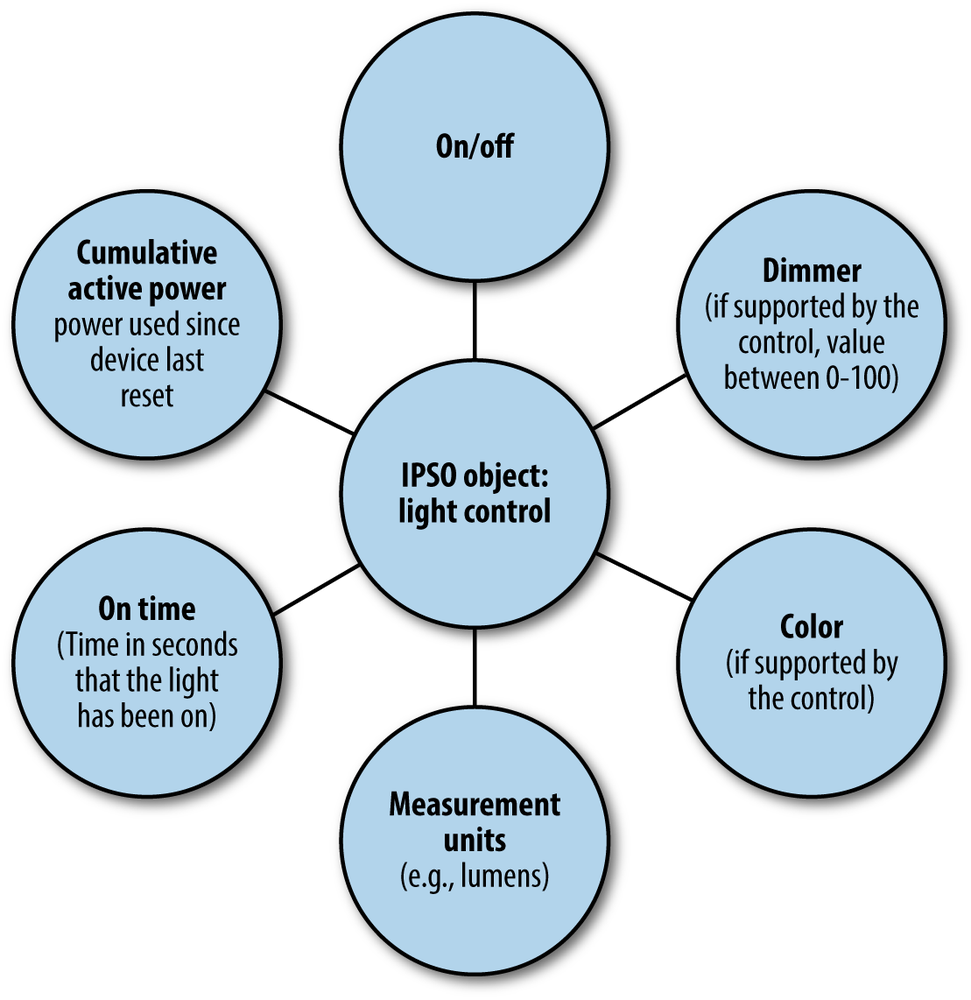

Next, we’ll look at some ways that data models might be used to support the design of IoT systems. These may include:

§ System-generated metadata about devices. This might include information about the device’s type and capabilities, and the data it gathers. Having standard ways of sharing this information enables devices to describe themselves and their data, so applications (and users) can find useful and relevant devices and know how to work with them.

§ Metadata about the device context, which may be generated or edited by the user. This might include its name, location, who owns it, or a description of the purpose for which it is used. Applications can use metadata like this to present devices and functions in smarter ways to the user, or better interpret the data they are measuring.

§ A domain model that describes your system’s logical components, and how they should work together to support particular user activities or in particular contexts.