eCommerce in the Cloud (2014)

Part III. To the Cloud!

Chapter 11. Hybrid Cloud

Though ecommerce applications have traditionally been viewed as monolithic with the frontends (e.g., HTML, CSS, JavaScript) being inseparably combined with backends (e.g., code containing business logic connected to a database), it doesn’t have to be this way. In today’s omnichannel world, the approach of having the two inseparably combined no longer makes sense technically or strategically.

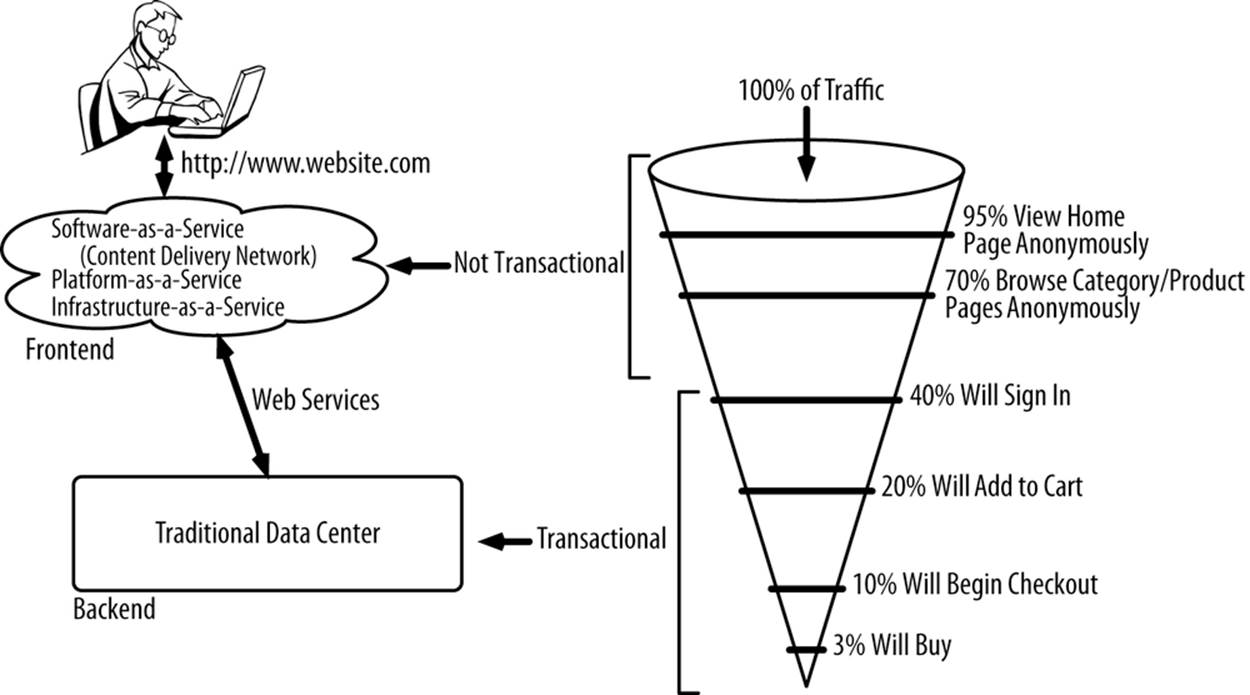

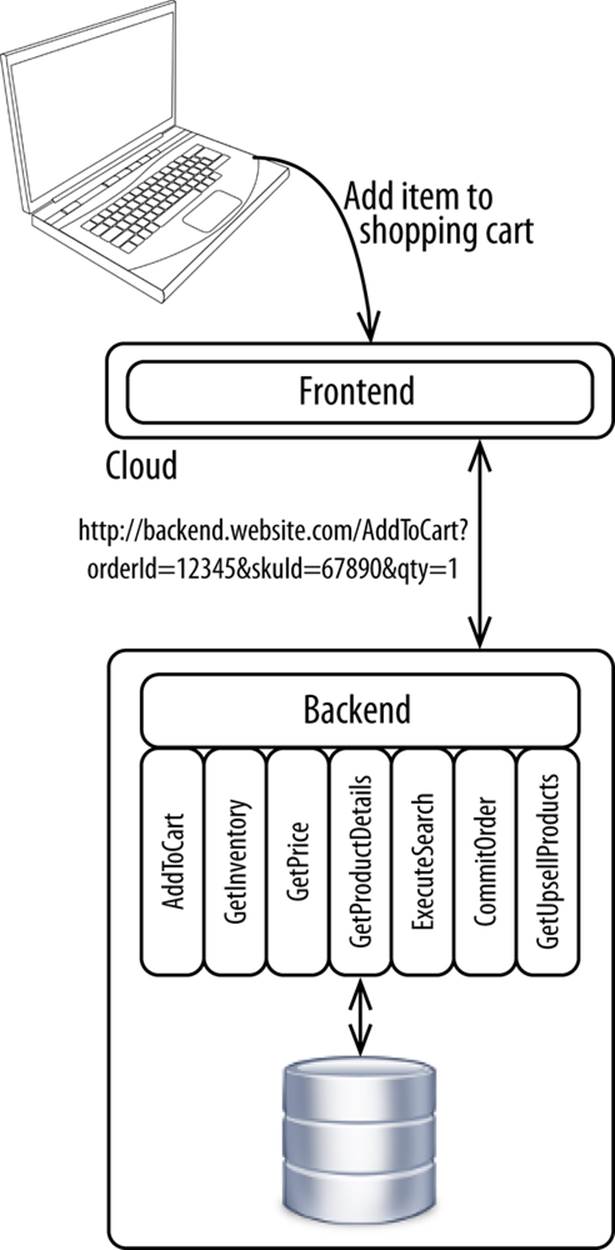

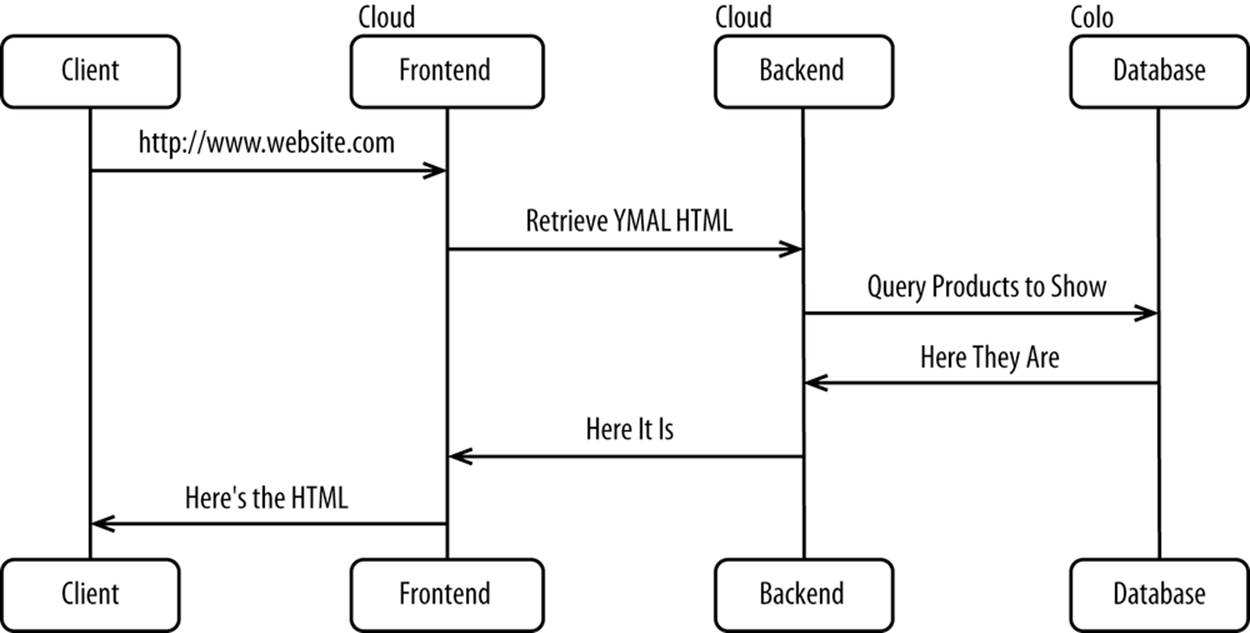

If you split your frontend from your backend, most page views can be served from your frontend without the backend ever being touched. Only when you transact—add to cart, check out, update profile, and so forth—do you actually have to touch your backend. In this model, your backend substantially shrinks and remains under your firm control while your frontend can elastically soak up most of the page views in a public cloud. DNS ultimately resolves to your frontend, with your frontend then calling your backend when necessary, as shown in Figure 11-1.

Figure 11-1. Nontransactional frontend served from a cloud, transactional backend served from a traditional data center

This is exactly how most other channels work today, with the exception of the Web. Thick client applications like those found on kiosks or smartphones already interact with your backend in this way. It’s time for the Web to catch up to this model.

In addition to strategic reasons, there are practical reasons the two should be split. The hosting needs for a frontend are different from what a backend needs. Your backend needs the following:

§ One or more highly available and fully backed-up databases

§ Terabytes of highly available and fully backed-up storage

§ High-quality, reliable hardware

§ Multiple firewalls

§ Integration with other backend systems

§ Highest possible availability

§ Highest possible security for data at rest

While clouds can offer this, many will find it easier and safer to keep this in-house. Your frontend needs the following:

§ Rapid elasticity

§ A lot of bandwidth

§ Highest possible security for data in motion

§ To be inexpensive

These attributes make cloud a natural fit for frontends. Before we explore the various flavors of how to split frontends from backends, let’s explore how this architecture is a natural by-product of an architecture for omnichannel.

Hybrid Cloud as a By-product of Architecture for Omnichannel

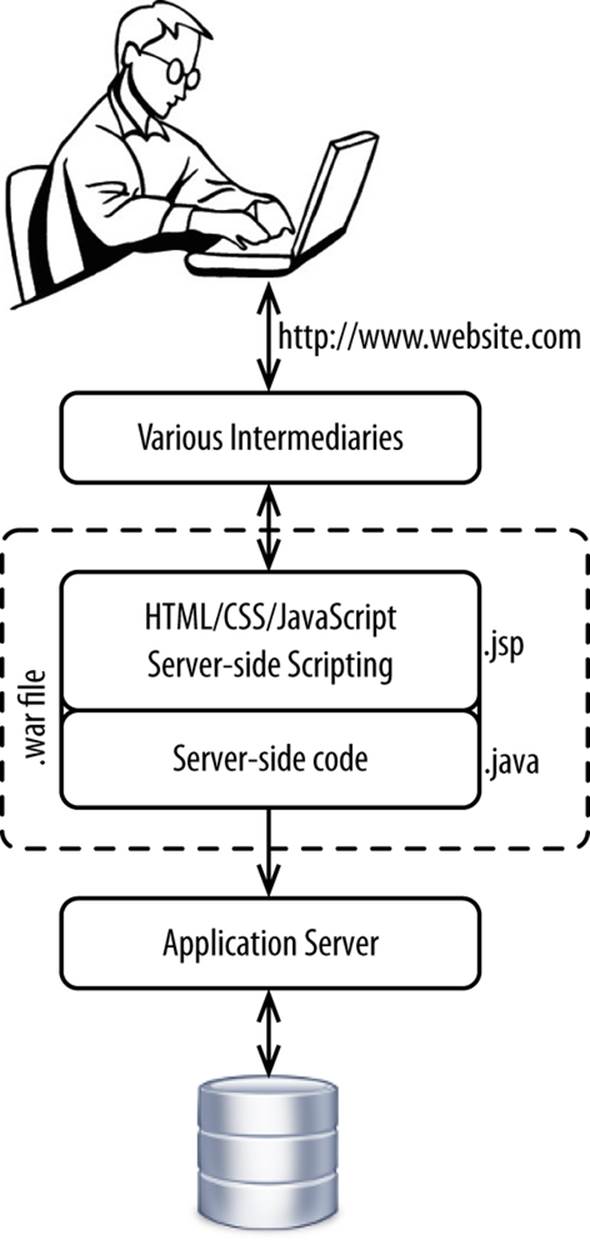

Traditionally, ecommerce applications have been written and deployed in a single package containing the following:

§ HTML/CSS/JavaScript

§ Server-side scripting code like JSP and ASP

§ Server-side code like Java or C#

This package was typically deployed to an application server as a single archive, like a WAR or EAR file. To get to the compiled server-side code, you had to first go through your server-side scripting code. Developers worked on both frontend and backend code. When the Web was the only channel, this worked just fine (see Figure 11-2).

Figure 11-2. Code packaging for one channel

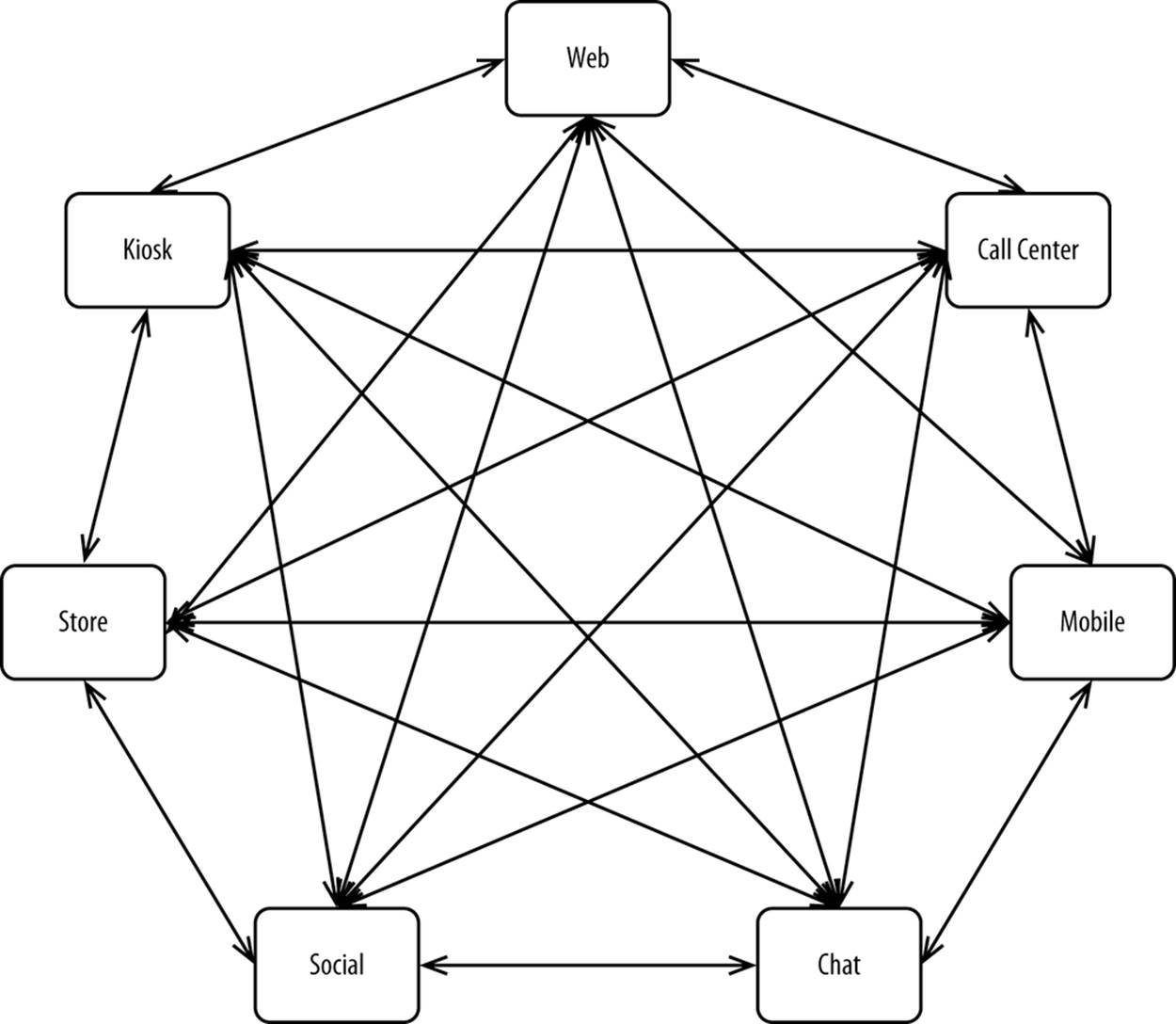

Then multichannel came of age in the mid-2000s as mobile began to take off. No substantial architecture changes were made to support mobile and the new channels that followed it. Additional channels were built alongside the existing stack supporting the web browser–based HTML user interface, with integration gluing everything together. Each channel operated in a silo, unaware of what was going on in another channel unless there was full bidirectional integration between each channel, as shown in Figure 11-3.

Figure 11-3. What multichannel ecommerce evolved into

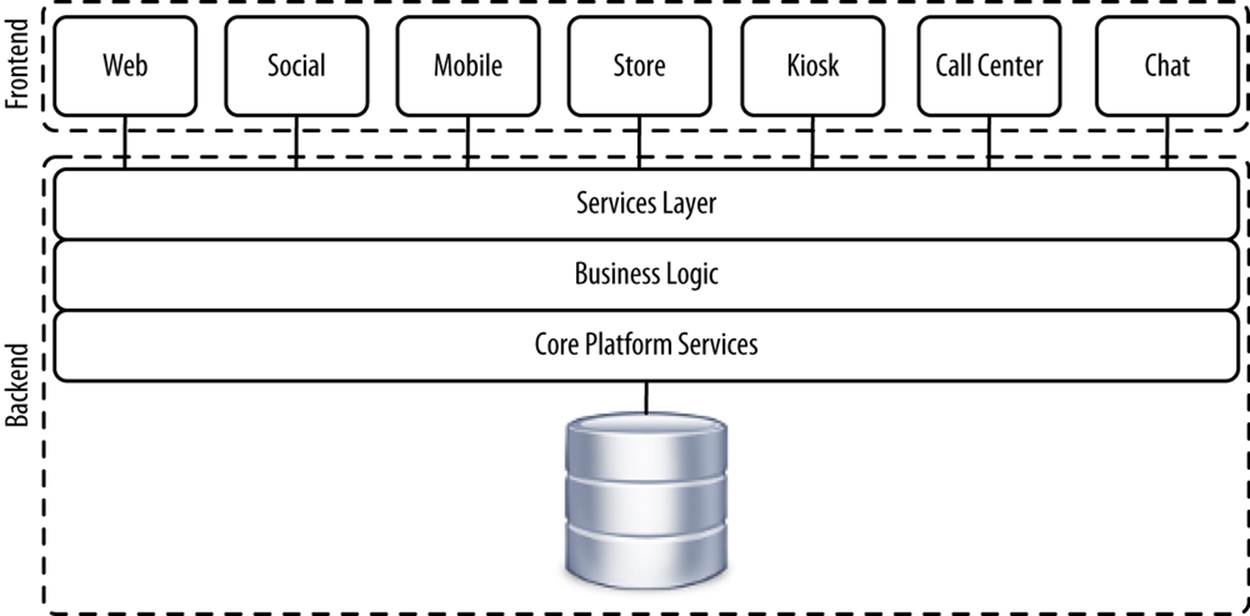

Even with full bidirectional integration, the customer experience was still poor because integrating heterogeneous systems is inherently error prone and updates are always asynchronous. As we’ve discussed in previous chapters, the solution to this problem is to build a single omnichannel platform whereby core functionality (place order, add to cart, register new account) is exposed as a service, with any user interface able to consume those services (see Figure 11-4).

Figure 11-4. Omnichannel-friendly architecture

This architecture entirely eliminates the need to perform any integration, because the various channel-specific user interfaces now serve as conduits to the same backend platform.

NOTE

Both omnichannel retailing and hybrid clouds require the frontend to be split from the backend. The split is a natural outcome of an omnichannel-based architecture.

Once you break the frontend from the backend, you can deploy each tier separately, with all interaction taking place through a clearly defined API, as depicted in Figure 11-5.

Figure 11-5. Frontend in a cloud, backend in a traditional data center

This API can be reused across any channels and frontends, with all clients transacting against the same backend. Customers love the seamless interaction across channels for the following reasons:

§ Pricing, promotions, inventory, product assortment, and all other data is the same across all channels.

§ Customers don’t have to create profiles unique to each channel.

§ Customers can update the same shopping cart across multiple channels. For example, a customer can begin an order on a mobile device and finish it on a desktop at work.

§ Customers can have contact center agents or employees in a physical store help to complete an order started online.

Multichannel inherently leads to fragmentation, which upsets customers. Omnichannel, on the other hand, allows for seamless customer engagements across channels.

Before we can discuss the different approaches to a hybrid cloud and how it intersects with omnichannel retailing, we have to discuss how to best connect your backend to your frontend in a cloud.

Connecting to the Cloud

When your frontend is physically distant from your backend, you need to bridge them together with a connection. Every HTTP request to your frontend may result in between zero and potentially dozens of requests to your backend. Requests are typically HTTP, but they may even be calls to your database. The connection discussed in this chapter is a vitally important link between the two halves of your platform, so it must at least be highly available and offer enough bandwidth. Beyond that, the connection can optionally offer security and low latency.

Although it seems like an important attribute, the security of the connection itself doesn’t matter all that much. The contents of each HTTP request or other communication protocol must be secured (e.g., SSL/TLS in the case of HTTP), but the communication can flow over the Internet. For example, VPNs secure the payload but operate over the Internet. Securing the payload should be your focus, as your assumption should be that your connection is always compromised.

Latency is also a less important attribute, though it depends on your architecture. The more calls you make from your frontends to your backends and the more those calls are synchronous, the more you’ll need low latency. Some applications will require that your frontend be almost colocated with your backend because of the number of lookups that are made to backend systems. To improve performance, strongly consider using a WAN accelerator.

If you serve your backend out of two or more data centers, strongly consider pointing your frontends to your backends through a Global Server Load Balancer with latency-based routing. This will ensure that each frontend is connecting to an available backend that offers the lowest possible latency.
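As a rough illustration of latency-based routing, the sketch below picks the healthy backend with the lowest measured latency. The hostnames, the `healthy` flag, and the latency figures are all invented for the example; a real Global Server Load Balancer gathers these measurements itself and usually makes the decision at the DNS level.

```javascript
// Hypothetical health/latency snapshot for each backend data center.
function pickBackend(backends) {
  const available = backends.filter((b) => b.healthy);
  if (available.length === 0) {
    return null; // nothing to route to; the caller must degrade gracefully
  }
  // Choose the healthy backend with the lowest measured latency.
  return available.reduce((best, b) => (b.latencyMs < best.latencyMs ? b : best));
}

const backends = [
  { host: "dc-east.backend.website.com", healthy: true, latencyMs: 42 },
  { host: "dc-west.backend.website.com", healthy: true, latencyMs: 18 },
  { host: "dc-eu.backend.website.com", healthy: false, latencyMs: 5 },
];

console.log(pickBackend(backends).host); // dc-west.backend.website.com
```

Note that the unhealthy data center is skipped even though it reports the lowest latency: availability is checked before latency.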

When selecting a vendor, the breadth and depth of connectivity options a prospective cloud vendor offers should be heavily weighted. Let’s explore the three broad approaches to connecting your backends to your frontends in a cloud.

Public Internet

The first approach is to use the public Internet. You can expose your backend to the Internet through a domain like backend.website.com. This is exactly what’s done today for every channel but the Web. Data can then be transferred between the frontend and backend over HTTPS, exactly as it is between your customer and the cloud. In other words, data in flight between your frontend and backend is just as secure as it is between your customer and the cloud.

With this approach, sending data with HTTP GET (e.g., https://backend.website.com/AddToCart?orderId=12345&skuId=67890&qty=1) is unsecure. HTTPS encrypts the URL in transit, but full URLs, query strings included, are routinely written to web server, proxy, and load balancer logs. To avoid this issue, HTTP POST the data to https://backend.website.com/AddToCart instead:

    {
        "orderId": "12345",
        "skuId": "67890",
        "qty": 1,
        ...
    }
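To make the POST concrete, here is a minimal sketch of how a frontend might assemble that request. The endpoint and field names come from the example above; the helper name `buildAddToCartRequest` is hypothetical, and the returned object is simply shaped so it could be handed to a fetch-compatible HTTP client.

```javascript
// Builds the arguments for an HTTPS POST so that order details travel in the
// request body rather than in the URL.
function buildAddToCartRequest(orderId, skuId, qty) {
  return {
    url: "https://backend.website.com/AddToCart",
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ orderId, skuId, qty }),
    },
  };
}

const request = buildAddToCartRequest("12345", "67890", 1);
console.log(request.url);          // https://backend.website.com/AddToCart
console.log(request.options.body); // {"orderId":"12345","skuId":"67890","qty":1}

// With a fetch-compatible client: fetch(request.url, request.options)
```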

In addition to HTTPS, you’ll want to use an additional security mechanism to ensure that nobody but your frontend is able to transact with your backend. Otherwise, anybody on the Internet would be able to arbitrarily execute commands against your backend. Certificate-based mutual authentication is a great way of doing this, though there are others. The requirement should be that only authenticated clients are able to issue HTTP requests to your backend.
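One way to picture certificate-based mutual authentication is as a small set of TLS server options. The sketch below assumes a Node.js backend and a private certificate authority that issues certificates only to your frontend servers; the function and parameter names are made up for illustration, while the option names are those accepted by Node's `https.createServer()`.

```javascript
// Options that require every client to present a certificate signed by a CA
// you control (the "frontend CA" is an assumption for this sketch).
function mutualTlsOptions({ serverKeyPem, serverCertPem, frontendCaPem }) {
  return {
    key: serverKeyPem,        // backend's private key
    cert: serverCertPem,      // backend's certificate
    ca: [frontendCaPem],      // only certificates issued by this CA are trusted
    requestCert: true,        // ask each client for a certificate
    rejectUnauthorized: true, // refuse the connection if it is missing or invalid
    minVersion: "TLSv1.2",
  };
}

// Usage (PEM contents would be read from your own key store):
// const https = require("node:https");
// https.createServer(mutualTlsOptions(pems), handler).listen(443);
```

In practice this is often terminated at the backend load balancer rather than in application code, but the required settings are conceptually the same.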

You could configure your backend load balancer to accept only traffic from a range of IP addresses belonging to your cloud vendor, but with ecommerce so elastic, you’ll never be able to configure your backend load balancer to whitelist every IP address. It also wouldn’t take much for a hacker to provision a server from a public cloud and issue HTTP requests from there to bypass your range filter.

VPN

In addition to HTTPS, you can add another layer of security over communication between your frontend and backend by making use of an IPsec-based VPN. Traffic is still going over the Internet, but now you have two layers of security: SSL/TLS for HTTPS, and IPsec. Cloud vendors offer these VPNs as an integrated part of their offering.

As always, you should avoid using HTTP GET to move sensitive data back and forth.

Direct Connections

Many cloud vendors offer colo vendors the ability to run dedicated fiber lines into their data centers. This allows colo vendors to build data centers in the same metro area as the cloud vendors and offer what amounts to private LAN connections into a cloud. With the data centers physically close, you get millisecond-level latency and multigigabit per second throughput. These connections are dedicated and do not touch the Internet, thus adding another layer of security.

Approaches to Hybrid Cloud

Caching Entire Pages

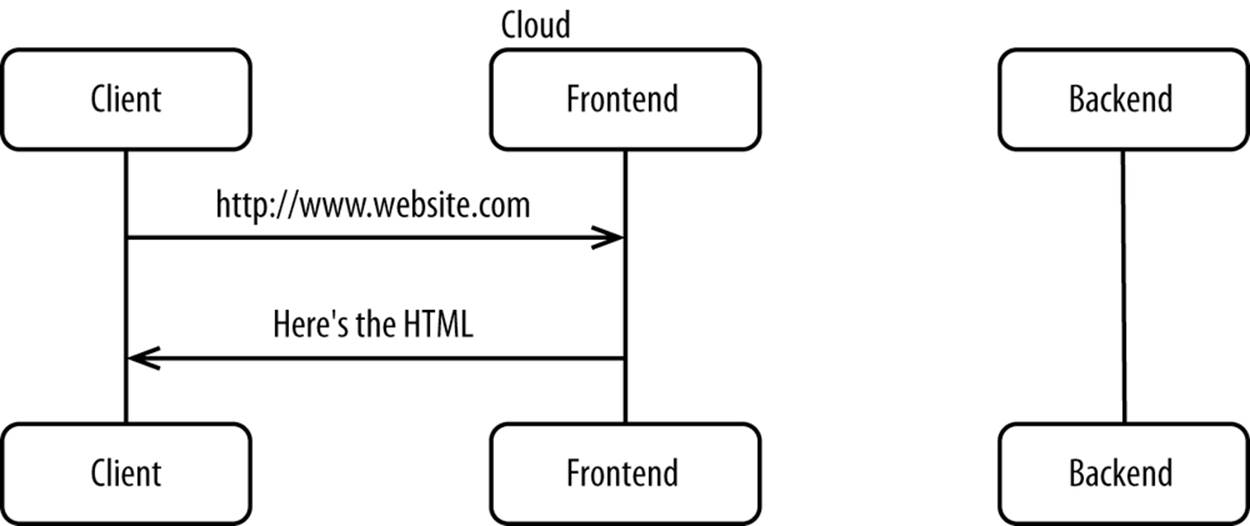

A great first step in adopting cloud computing is to move static pages out to a cloud in much the same way that CDNs serve static pages and static content (e.g., images, JavaScript, and CSS; see Figure 11-6).

Figure 11-6. Serving static pages from your frontend in the cloud

Of course, anything dynamic or not yet cached must be served directly from the origin, as Figure 11-7 shows.

Figure 11-7. Serving static pages from your frontend in the cloud, going back to backend as required

HTTP responses, whether the response type is HTML, XML, JSON, or some other format, work in much the same way, except that the content can vary based on several factors:

§ Whether the customer is logged in

§ Web browser/user agent

§ Physical location (often accurate to zip + 4 within the US or post code outside the US)

§ Internet connection speed

§ Locale

§ Operating system

Provided you can identify the variables that affect HTTP responses and vary the HTTP responses accordingly, this approach works very well for offloading most of your HTTP requests. Caching of this nature is commonly employed, especially in advance of large bursts of traffic, like before a holiday or during a special event like the Super Bowl.

Use this approach to optimize any existing ecommerce website, whether or not it’s adhering to the omnichannel architectural principles. It’s quick and easy to do and works best for pages that don’t change all that much. Home pages, category landing pages, and product detail pages work best for this.

To do this, you need software that does the following:

§ Sits between the client and your endpoint (typically an application server). Proxies and load balancers commonly meet this need.

§ Can examine each HTTP request. In the OSI model, this refers to layer 7. For example, the software should be able to look at the User-Agent HTTP request header.

§ Can accept blacklist rules for what not to cache. For example, you should be able to define a rule that says anything under /checkout should be immediately passed back to the origin.

§ Can understand the variables that affect the response. For example, you may vary your response based on whether the customer is logged in, what the user agent is, and physical location. Each of those attributes would come together to form a unique key. If a cached copy of, say, XML corresponding to that key exists, it should be returned. If not, the request should be passed back to the origin, with the response the origin generates being cached.

§ Can store and retrieve gigabytes worth of cached data quickly and effectively.

§ Can quickly flush caches following updates to the underlying data.

The key is identifying the variables that cause the output of each URL to vary its response. Once you’ve identified those, you can cache the vast majority of HTTP requests.
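The requirements above can be sketched in a few lines. This is an in-memory toy, not a substitute for a proxy or CDN: the request shape (`path`, `loggedIn`, `deviceClass`, `locale`) is an assumption, as are the extra never-cache paths beyond /checkout. It keys on a coarse device class rather than the raw user agent string, on the assumption that raw strings would fragment the cache into too many near-identical entries.

```javascript
// Paths that must always go back to the origin.
const NEVER_CACHE = ["/checkout", "/cart", "/account"];

function isCacheable(path) {
  return !NEVER_CACHE.some((prefix) => path === prefix || path.startsWith(prefix + "/"));
}

// Combine the URL with every variable that changes the response.
function cacheKey(req) {
  return [req.path, req.loggedIn ? "auth" : "anon", req.deviceClass, req.locale].join("|");
}

const cache = new Map();

// Return the cached response if present; otherwise call the origin and
// store what it returns.
function serve(req, fetchFromOrigin) {
  if (!isCacheable(req.path)) return fetchFromOrigin(req);
  const key = cacheKey(req);
  if (!cache.has(key)) cache.set(key, fetchFromOrigin(req));
  return cache.get(key);
}

let originHits = 0;
const origin = (req) => { originHits += 1; return `<html>${req.path}</html>`; };
const req = { path: "/product/12345", loggedIn: false, deviceClass: "mobile", locale: "en-US" };

serve(req, origin);
serve(req, origin);                                   // served from cache
serve({ ...req, path: "/checkout/payment" }, origin); // always passed to origin
console.log(originHits); // 2
```

Flushing after a data update would amount to deleting the affected keys from `cache`; real intermediaries expose that as a purge API or rule.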

Intermediaries of all types are capable of doing this. Load balancers and proxies are common examples, but even many web servers are capable of this. This functionality is built into CDNs that are capable of serving as reverse proxies, but you can also deploy software of your choosing to public IaaS clouds.

It’s generally best to rely on CDNs to provide this functionality, as they have the advantage of pushing your cached pages out to each of their dozens or even hundreds of data centers around the world. Customers are unlikely to be more than a few milliseconds away from an endpoint, meaning most HTTP requests can be served with virtually no latency and no waiting for the response to be generated. In addition to performance, CDNs expose this functionality as SaaS, which frees you up to focus your energy higher up the value chain.

The only case for doing this in a public Infrastructure-as-a-Service cloud is if this is your first foray into more-substantive cloud computing and you want to do this as an educational exercise. This is something that’s fairly easy to do yet provides substantial benefits.

If you’re not yet sold on public clouds, you can also do this in your existing data center(s). The load balancer you use today probably has this functionality. While this is an excellent approach for a fraction of your traffic, to cache more you have to look at the next approach.

Overlaying HTML on Cached Pages

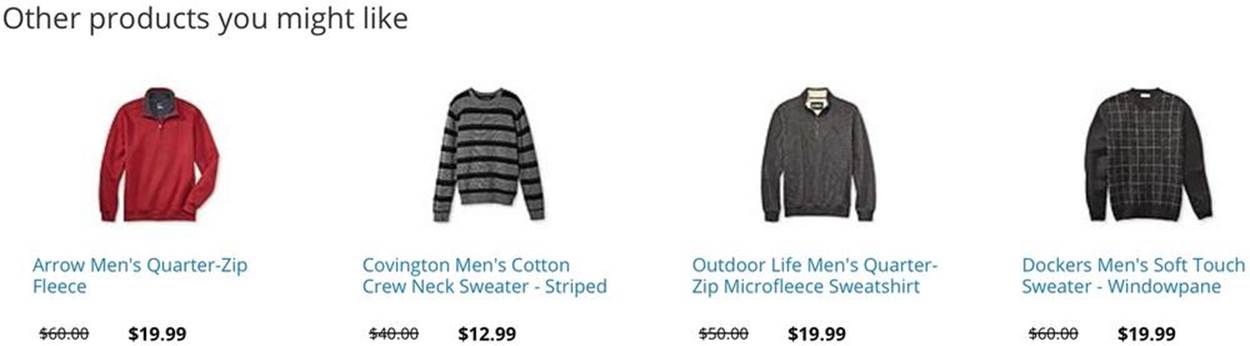

While many HTTP requests can be served directly from cache, some cannot. For example, customers viewing a product detail page often see a list of products recommended based on their browsing history or purchase history. They look like Figure 11-8.

Figure 11-8. You Might Also Like products

These are called You Might Also Like products, or YMAL for short.

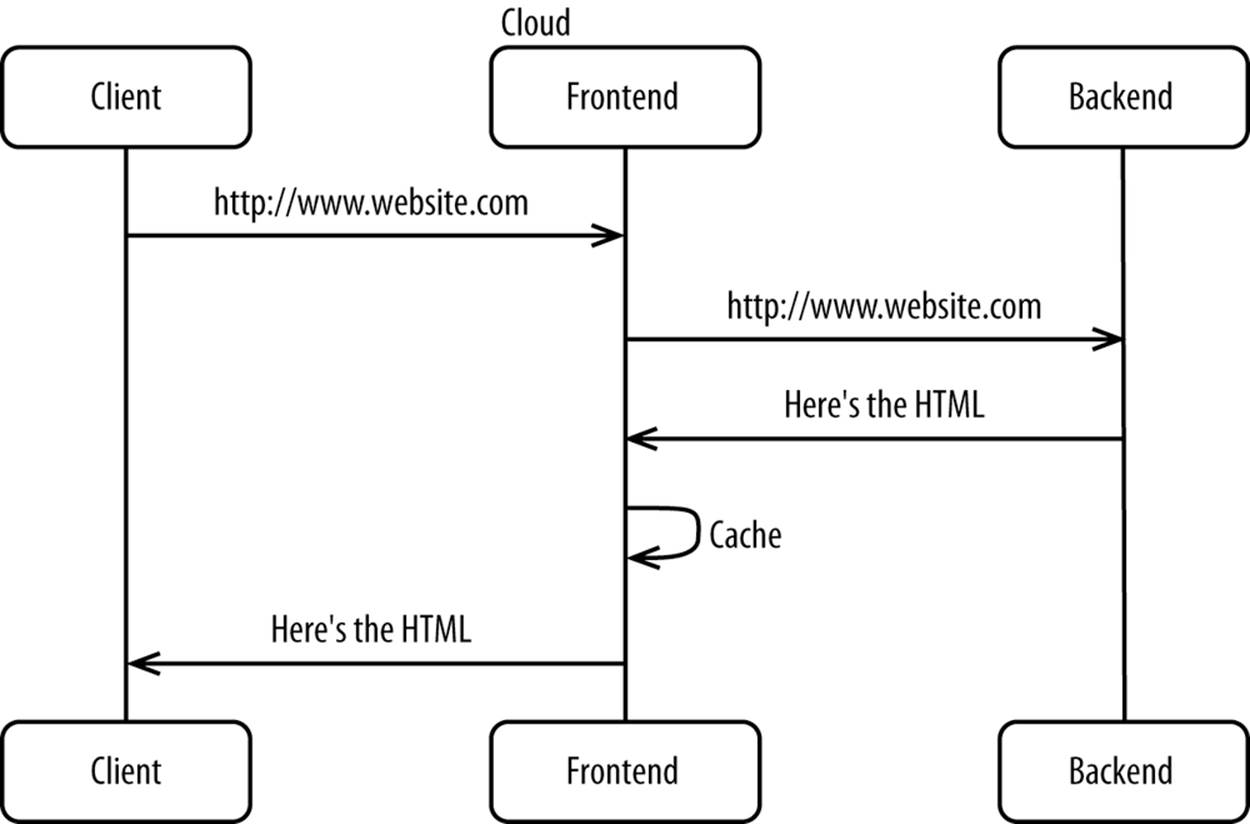

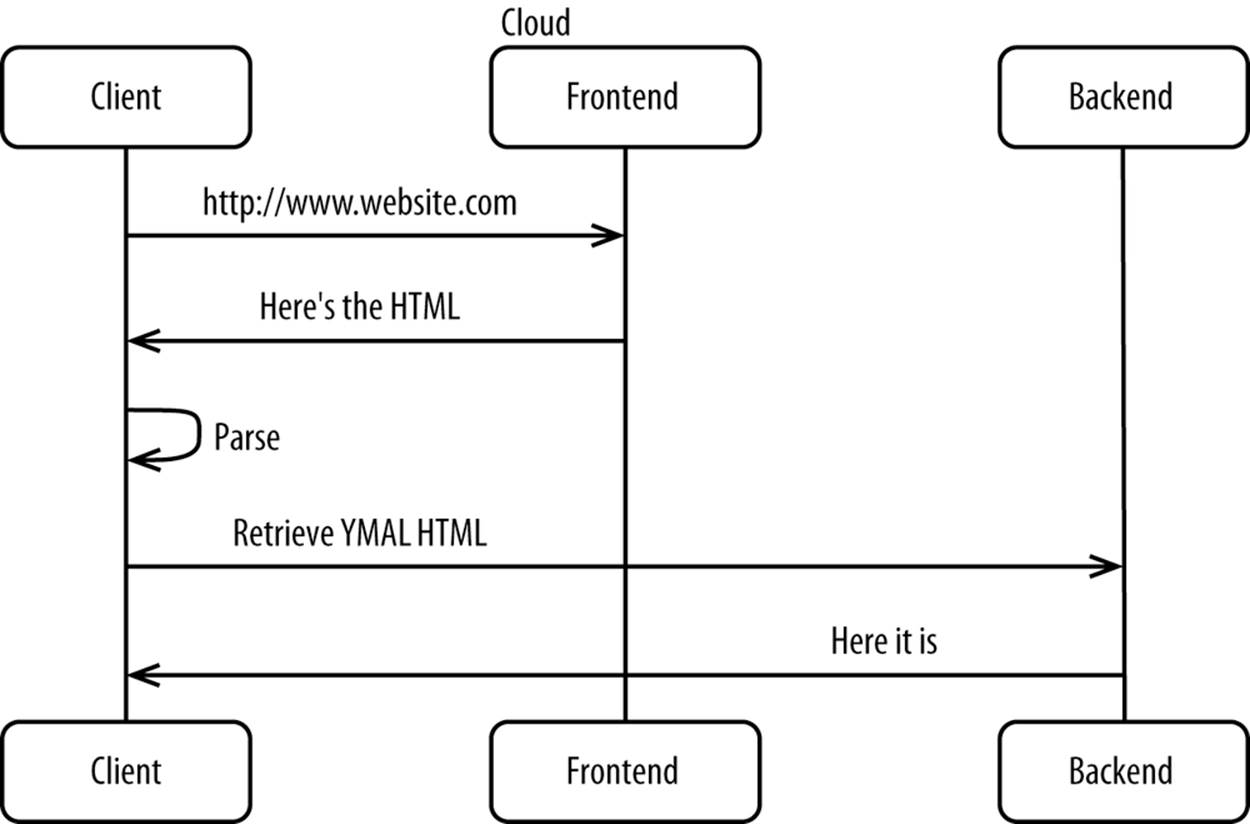

When most of a given page is the same but only a small part varies, you can cache the entire page in an intermediary as per the prior approach, but then dynamically overlay the few fragments of content that actually change on the client side, as in Figure 11-9.

Figure 11-9. Overlaying HTML on cached pages

You can easily apply this technique to the following:

§ Ratings and reviews

§ Shopping carts

§ Breadcrumbs

§ “Hello, <First Name>!” banners in the header

Here’s a very simple example of what the code would look like to do this:

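    <head>
    <script src="http://www.website.com/app/jquery/jquery.min.js"></script>
    <script>
    // Start the request as early as possible so it runs in parallel with page load.
    var ymalRequest = $.ajax({
      url: "http://backend.website.com/app/RetrieveYMALs?productId=12345&customerId=54321"
    });
    // Insert the fragment once both the response and the #YMALs element exist.
    $(function () {
      ymalRequest.done(function (result) {
        $("#YMALs").html(result);
      });
    });
    </script>
    </head>
    <body>
    <!-- Product details... -->
    <div id="YMALs"></div>
    ... rest of web page
    </body>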

To make this work, the response to your asynchronous HTTP request must arrive as quickly as possible. If you wait too long to make the asynchronous request, the customer will see a fully rendered page but without the overlay. In the YMAL example, the customer will see screen repainting or, worse yet, whitespace where the products are supposed to be listed. To avoid this, make the asynchronous call as early as you can when loading the main page to parallelize as much of the loading as possible. Put the call at the top of the header. Also, ensure that the service delivering the content responds as quickly as possible. It should take just a few milliseconds to get a response to avoid the page “jumping around” as various parts of the page are painted.

You also have to design your user interface for failure. If the asynchronous request doesn’t work, the customer should never know. In other words, there shouldn’t be a hole where the content loaded asynchronously is supposed to be.
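A minimal sketch of designing for failure: keep the container hidden unless usable content actually arrives. The helper name `applyOverlay` is hypothetical, and the plain object below stands in for a DOM element so the example runs outside a browser.

```javascript
// Shows the overlay only when usable content arrived; otherwise hides the
// container so the page never shows an empty hole. "container" is any object
// with innerHTML and hidden properties, such as a DOM element.
function applyOverlay(container, html) {
  const hasContent = typeof html === "string" && html.trim().length > 0;
  container.innerHTML = hasContent ? html : "";
  container.hidden = !hasContent;
  return hasContent;
}

// Stand-in for document.getElementById("YMALs").
const ymals = { innerHTML: "", hidden: true };

applyOverlay(ymals, "<ul><li>Product A</li></ul>");
console.log(ymals.hidden); // false

applyOverlay(ymals, null); // the request failed or timed out
console.log(ymals.hidden); // true
```

With jQuery, the success callback would pass the response to this helper and the error callback would pass `null`; setting the `timeout` option on `$.ajax` keeps a slow backend from leaving the request pending indefinitely.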

Finally, design your user interface for graceful fallback. If the client doesn’t support JavaScript, either omit the dynamic content entirely or go back to the origin to render a dynamic version of the page. For example, most search engine bots don’t support JavaScript. You’ll want the entire page to be indexed.

When fully and properly implemented, this technique can substantially decrease the amount of load that hits your backend. The more you serve out of your frontend in the cloud, the less you have to serve out of your backend.

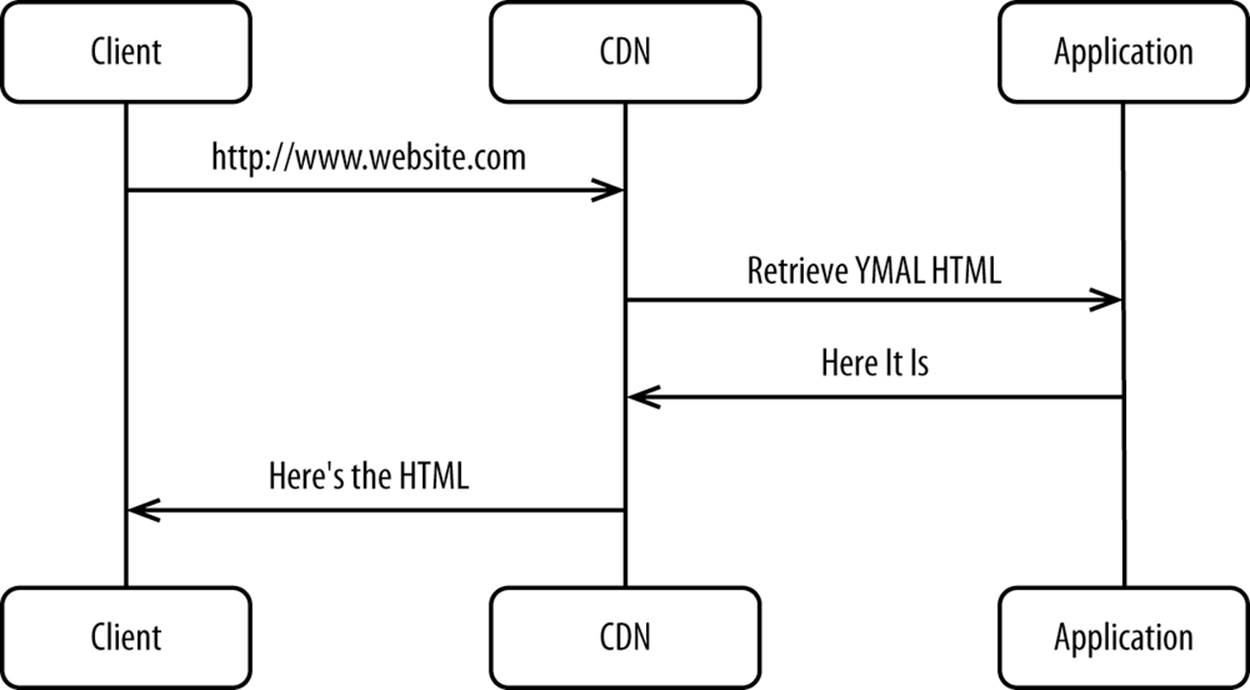

Using Content Delivery Networks to Insert HTML

Rather than overlay your dynamic fragments on the client side as with the previous approach, you can overlay them in a CDN or an equivalent intermediary (see Figure 11-10).

Figure 11-10. Using CDNs to insert HTML

By not doing anything on the client side, you don’t have to worry about gracefully falling back if the client doesn’t support JavaScript or any of the other issues that arise when you’re trying to build a page by asynchronously loading HTML from somewhere else. The other advantage is that you don’t have to forcibly split your frontend from your backend, as we’ll discuss in the next approach. If you’re looking to increase the number of pages you can serve from cache and don’t want to rewrite your application, this is your approach.

As with the client-side overlay, the technology to implement this doesn’t matter all that much. What matters is that you’re able to clearly demarcate where you’d like to insert dynamic content and from what source. The most common framework is Edge Side Includes (ESI). ESI is a simple markup language that closely mimics the capabilities of server-side includes, which we’ll discuss next.

Here’s an example of what the code would look like to do this:

    <body>
    <!-- Product details... -->
    <div id="YMALs">
    <esi:include
        src="http://backend.website.com/app/RetrieveYMALs?productId=12345&customerId=54321" />
    </div>
    ... rest of web page
    </body>

Most of the major CDNs support ESI, as do some load balancers and reverse proxies. While it’s best to use this technique with CDNs because of their ability to cache content and push it to the edge, you can certainly use load balancers and reverse proxies.

The code here is much easier to write because you don’t have to worry about doing anything asynchronously or the problems arising from that. You just insert the dynamic fragments of your page and return the entire HTML document to the client when it’s ready. Some frameworks even allow you to load each of the includes asynchronously, with the entire page not returned to the client until the last include is returned. This improves performance, especially if you have many different includes.
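To show what the intermediary does conceptually, the sketch below substitutes each include tag with a fragment before the page is returned. It only mimics the idea and is not an ESI processor: the function names and the in-memory fragment map are invented for the example, and real intermediaries fetch fragments over HTTP and support more of the ESI language.

```javascript
// Replaces each <esi:include src="..."/> tag with the fragment returned by
// fetchFragment(src).
function assemblePage(template, fetchFragment) {
  const includeTag = /<esi:include\s+src="([^"]+)"\s*\/>/g;
  return template.replace(includeTag, (tag, src) => {
    try {
      return fetchFragment(src);
    } catch (err) {
      return ""; // omit the fragment rather than fail the whole page
    }
  });
}

const template =
  '<div id="YMALs"><esi:include src="http://backend.website.com/app/RetrieveYMALs?productId=12345&customerId=54321" /></div>';

// Hypothetical stand-in for an HTTP call to the backend.
const fragments = new Map([
  ["http://backend.website.com/app/RetrieveYMALs?productId=12345&customerId=54321",
    "<ul><li>Product A</li></ul>"],
]);
const fetchFragment = (src) => {
  if (!fragments.has(src)) throw new Error("fragment unavailable: " + src);
  return fragments.get(src);
};

console.log(assemblePage(template, fetchFragment));
// <div id="YMALs"><ul><li>Product A</li></ul></div>
```

The client receives one complete HTML document, which is why no JavaScript fallback is needed with this approach.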

Again, this approach is a great middle ground that will allow you to cache much more than you otherwise would, but without having to rewrite your application.

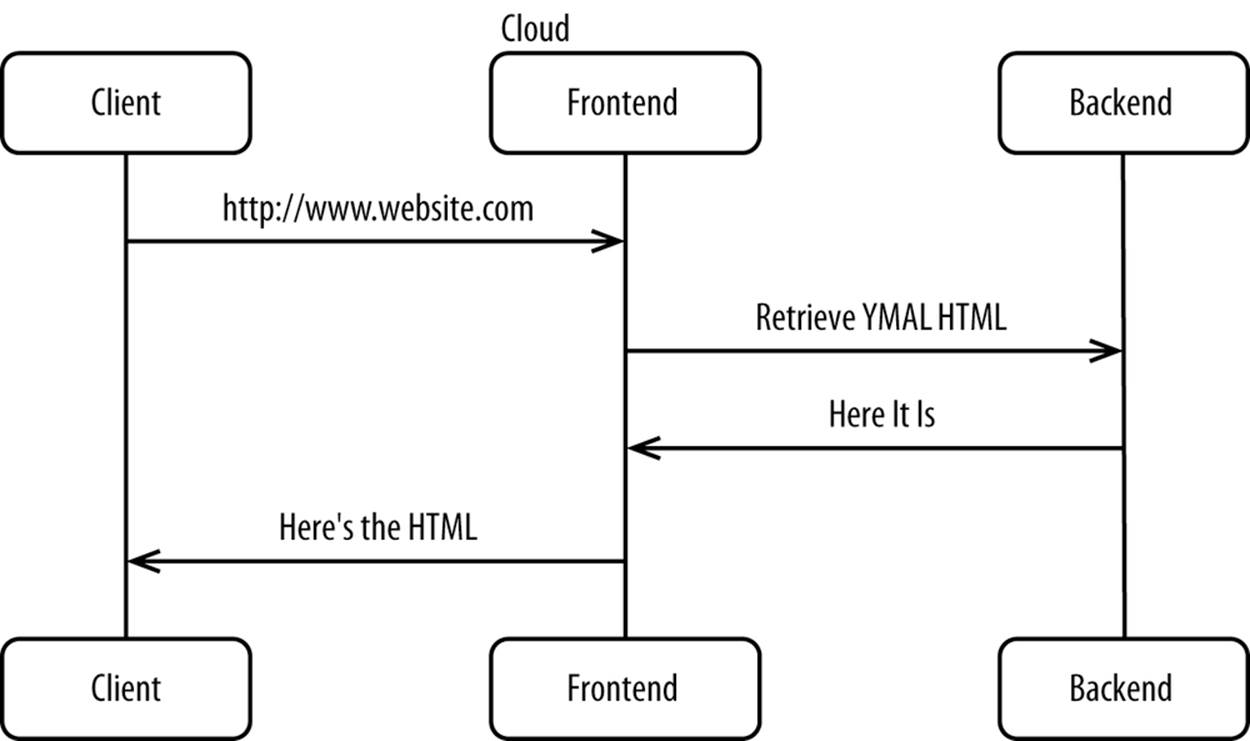

Overlaying HTML on the Server Side

This next approach is where the frontend for your website is independently served out of a cloud, with dynamic content from your backend woven into the page generated by your frontend. This is a fundamentally different approach than using a CDN or client to overlay fragments, because with this model you’re actually serving your frontend independently of your backend, as shown in Figure 11-11.

Figure 11-11. Overlaying HTML on the server side

With the frontend split from the backend, you’re pulling in only small, dynamic fragments from your backend. Your frontend is then free to run wherever you want, as in a public cloud. Your frontend, which handles most of the traffic, can then dynamically scale up and down, pulling in dynamic fragments from your backend as required. Your backend can then be much smaller and hosted traditionally.

The capabilities to insert dynamic content into an existing page have existed since the early days of the Internet, beginning with server-side includes, which are still used today. All scripting tag libraries also have support for this, through import or include tags.

Here’s an example of what the code would look like to do this:

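    <body>
    <!-- Product details... -->
    <div id="YMALs">
    <!-- Some SSI implementations (for example, Apache mod_include) accept only
         a local URL path in "virtual"; in that case, reverse-proxy a local
         path to the backend and include that path instead. -->
    <!--#include
        virtual="http://backend.website.com/app/RetrieveYMALs?productId=12345&customerId=54321" -->
    </div>
    ... rest of web page
    </body>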

While the technology is fairly simple, the architectural implications are enormous. Rather than simply serving a static HTML document and then overlaying the dynamic bits, you’re actually generating a dynamic page for each customer in the cloud and simply including the dynamic fragments that you need from your backend where appropriate. This is a great way to move much of the workload out to a dynamic cloud. In this model, the backend is solely responsible for delivering a small amount of content, and the frontend is responsible for delivering much of the actual content. It’s a big difference, though the technical underpinnings have existed for decades.

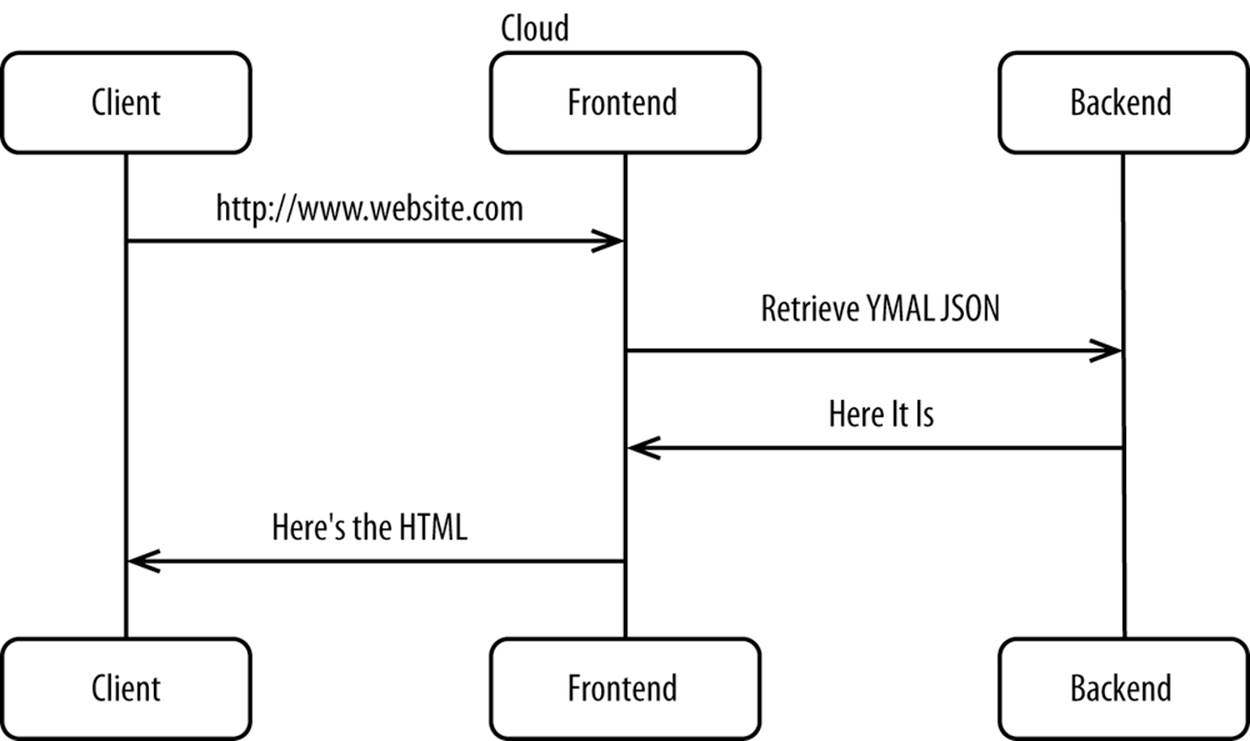

Fully Decoupled Frontends and Backends

All of the approaches documented thus far have assumed that the content that would be overlaid is HTML. But as you recall from earlier in the book, the web channel where HTML is used is rapidly being marginalized in favor of mobile and other channels. Only web browsers use HTML. Every other channel consumes some form of XML or JSON from your origin, making all of the approaches thus far irrelevant to nonweb channels.

With this approach, your frontend is out in a cloud, retrieving small fragments of dynamic content from your backend as required. The difference between this and the previous approach is that the response from the backend should be XML or JSON instead of HTML, as Figure 11-12 shows.

Figure 11-12. Fully decoupled frontends and backends

It’s certainly easier to return responses in HTML, because you don’t have to change your code all that much. But the problem with that is it prevents you from ever reusing your backend services across channels because none of the other channels can consume HTML. In this model, you construct your HTML pages by using data from your backend but not the presentation. It’s a clean separation between presentation and business logic.

Here’s an example of what the code would look like to do this:

    <body>
    <!-- Product details... -->
    <div id="YMALs">
    <%-- Simplified: c:import stores the raw response as a String, so a real
         page would first parse the XML or JSON into an object exposing
         displayText and products. --%>
    <c:import url="http://backend.website.com/app/RetrieveYMALs?productId=12345&customerId=54321"
        var="ymals" />
    <h2><c:out value="${ymals.displayText}" /></h2>
    <c:forEach items="${ymals.products}" var="product">
      <jsp:include page="/app/productDetailYMAL.jsp">
        <jsp:param name="product" value="${product}" />
      </jsp:include>
    </c:forEach>
    </div>
    ... rest of web page
    </body>

Again, this is a fundamental departure from how many web pages are constructed today, with the backend providing only structured data. What’s best about this approach is that the services exposed by the backend (e.g., http://backend.website.com/app/RetrieveYMALs) can be reused across all channels because all you’re exposing is raw data.
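The reuse argument can be illustrated with one payload rendered two ways. In this sketch the `displayText` and `products` fields mirror the JSP example above, while the `name` field, the sample data, and the function names are assumptions made for illustration.

```javascript
// Escapes text before placing it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Web frontend: turns the backend's raw data into HTML.
function renderYmalHtml(ymals) {
  const items = ymals.products
    .map((p) => `<li>${escapeHtml(p.name)}</li>`)
    .join("");
  return `<h2>${escapeHtml(ymals.displayText)}</h2><ul>${items}</ul>`;
}

// Another channel (say, a kiosk) can consume the same data without any HTML.
function renderYmalPlainText(ymals) {
  return [ymals.displayText, ...ymals.products.map((p) => "- " + p.name)].join("\n");
}

// Hypothetical JSON body returned by RetrieveYMALs.
const response =
  '{"displayText":"You Might Also Like","products":[{"name":"Tea & Biscuits"},{"name":"Mug"}]}';
const ymals = JSON.parse(response);

console.log(renderYmalHtml(ymals));
// <h2>You Might Also Like</h2><ul><li>Tea &amp; Biscuits</li><li>Mug</li></ul>
console.log(renderYmalPlainText(ymals));
```

Because presentation lives entirely in the frontend, adding a channel means writing another renderer, not another backend service.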

NOTE

This is the future of ecommerce architecture, regardless of where you deploy your frontends and backends. This approach requires substantial changes to your code and architecture, but the long-term benefits are transformational to the way you do business.

Everything but the Database in the Cloud

The most extreme form of hybrid computing is putting everything out in a public cloud except the database. Your frontend is fully split from your backend to adhere to omnichannel architecture principles, but you deploy both tiers to a cloud, with your frontend-to-backend communication occurring entirely within the cloud. Only your database is outside the cloud, as Figure 11-13 shows.

Figure 11-13. Everything but the database in the cloud

Because latency is so important, this approach must be used in conjunction with the direct-connection approach described earlier in this chapter (see Direct Connections), whereby you host your database in a colo that has a direct physical connection to the cloud you’re operating from. Pulling a single product from the database may result in dozens of SQL queries, because data may be spread out across dozens of tables. When you have potentially dozens of serial SQL statements executed per HTTP request, latency quickly kills performance. With a direct connection, you should have a millisecond or less of latency, making this no longer an issue.
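The arithmetic behind this is simple: serial queries pay the round-trip cost once each. The figures below are illustrative only (30 queries per HTTP request, a 20 ms link versus a 1 ms direct connection) and ignore query execution time.

```javascript
// Time spent purely waiting on the network when queries run one after another.
function serialLatencyMs(queryCount, roundTripMs) {
  return queryCount * roundTripMs;
}

console.log(serialLatencyMs(30, 20)); // 600 (ms over a 20 ms link)
console.log(serialLatencyMs(30, 1));  // 30 (ms over a 1 ms direct connection)
```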

Databases can be deployed in a cloud, but you may find it easier to deploy them in a colo connected to the cloud, as databases have stringent software, hardware, networking, storage, and security requirements that may not be fully offered by a database in a cloud. Monolithic applications with inseparable frontends and backends can also benefit from this approach.

We’ll discuss this more in the next chapter.

Summary

As with everything, there are benefits and trade-offs to each of the approaches listed. Clearly the trend is moving toward a design that supports full omnichannel retailing, whereby the backend is serving snippets of XML and JSON to a frontend in a cloud that builds the HTML responses. Until that goal can be fully realized, the approaches before it are great steps that provide substantial benefits.

Here’s a quick summary of the approaches in Table 11-1.

Table 11-1. Summary of hybrid approaches

| Approach | Where fragment is included | Relative level of backend offload | Format of HTTP response from backend | Requires clean front/backend separation | Channels applicable to | Level of changes to application |
| --- | --- | --- | --- | --- | --- | --- |
| Caching entire pages | N/A | Low | N/A | No | All | None |
| Overlaying HTML on cached pages | Client | Medium | HTML | No | Web | Low |
| Using Content Delivery Networks to insert HTML | Content Delivery Network | High | HTML | No | Web | Medium |
| Overlaying HTML on the server side | Frontend servers | High | HTML | No | Web | High |
| Fully decoupled frontends and backends | Frontend servers | High | XML or JSON | Yes | All | High |
| Everything but database in the cloud | N/A | N/A | N/A | No | All | None |

It’s best to start at the top of this table and work your way down as you build competence.

For more information on hybrid cloud-based architectures, read Bill Wilder’s Cloud Architecture Patterns (O’Reilly).

As the move to smartphones, tablets, and other nonweb browser devices accelerates, HTML-based frontends are going to be increasingly marginalized. The value of a hybrid cloud is that your frontend, which is handling most of the traffic today, can leverage what a cloud has to offer—elasticity, unlimited ability to scale up, and cost savings. But over time, the traffic to your frontend will drop, eventually reaching a point where a majority of traffic is from devices that have thick clients. A hybrid cloud is fundamentally a transitional technology. It will be around for a while, but eventually many workloads, like ecommerce, will shift entirely to the cloud.