SEO For Dummies, 6th Edition (2016)

Part I. Getting Started with SEO

Chapter 3. Your One-Hour, Search Engine–Friendly Web Site Makeover

In This Chapter

![]() Finding your site in the search engines

Finding your site in the search engines

![]() Choosing keywords

Choosing keywords

![]() Examining your pages for problems

Examining your pages for problems

![]() Getting search engines to read and index your pages

Getting search engines to read and index your pages

A few small changes can make a big difference in your site’s position in the search engines. So instead of forcing you to read this entire book before you can get anything done, this chapter helps you identify problems with your site and, with a little luck, shows you how to make a significant difference through quick fixes.

It’s possible that you may not make significant progress in a single hour, as the chapter title promises. You may identify serious problems with your site that can’t be fixed quickly. Sorry, that’s life! The purpose of this chapter is to help you identify a few obvious problems and, perhaps, make some quick fixes with the goal of really getting something done.

Is Your Site Indexed?

It’s important to find out whether your site is actually in a search engine or directory. Your site doesn’t come up when someone searches at Google for rodent racing? Can’t find it in Bing? Have you ever thought that perhaps it simply isn’t there? In the next several sections, I explain how to find out whether your site is indexed in a few different systems.

Various browser add-ons, such as SEOQuake, automatically tell you the number of indexed pages when you visit a site.

Various browser add-ons, such as SEOQuake, automatically tell you the number of indexed pages when you visit a site.

I’ll start with the behemoth: Google. Here’s the quickest and easiest way to see what Google has in its index. Search Google, either at the site or through the Google toolbar (see Chapter 1) for the following:

site:domain.com

Don’t type the www. piece, just the domain name. For instance, say your site’s domain name is RodentRacing.com. You’d search for this:

site:rodentracing.com

Google returns a list of pages it’s found on your site; at the top, underneath the search box (or perhaps somewhere else; it moves around occasionally), you see something like this:

2 results (0.16) seconds

That’s it — quick and easy. You know how many pages Google has indexed on your site and can even see which pages.

Well, you may know. This number is not always accurate; Google will show different numbers in different places. The number you see in the first page of search results may not be the same as the number it shows you on, say, the tenth page of search results, nor the same as the number it shows you in the Google Webmasters Console (see Chapter 13). Still, it gives you a general idea (usually reasonably accurate) of your indexing in Google.

Well, you may know. This number is not always accurate; Google will show different numbers in different places. The number you see in the first page of search results may not be the same as the number it shows you on, say, the tenth page of search results, nor the same as the number it shows you in the Google Webmasters Console (see Chapter 13). Still, it gives you a general idea (usually reasonably accurate) of your indexing in Google.

Here’s another way to see what’s in the index — in this case, a particular page in your site. Simply search for the URL: Type, or copy and paste, the URL into the Google search box and press Enter. Google should return the page in the search results (if it’s in the index).

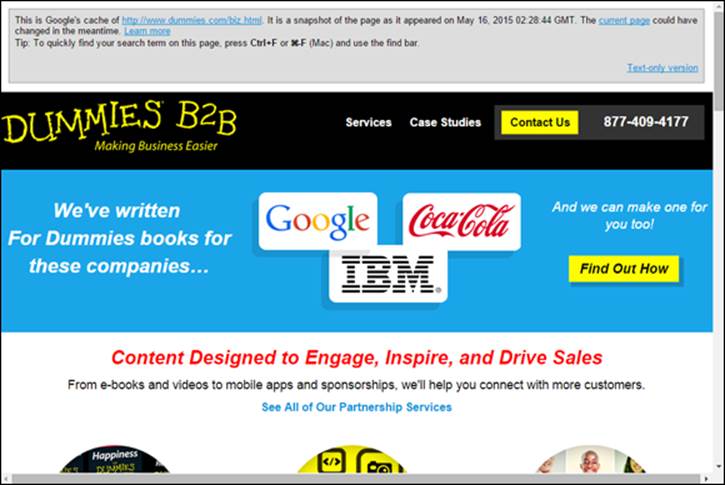

You can also click the little green down triangle at the end of the URL in the search results and select Cached to see the copy of the page that Google has actually stored in its cache (see Figure 3-1). If you’re unlucky, Google tells you that it has nothing in the cache for that page. That doesn’t necessarily mean that Google hasn’t indexed the page, though.

You can also click the little green down triangle at the end of the URL in the search results and select Cached to see the copy of the page that Google has actually stored in its cache (see Figure 3-1). If you’re unlucky, Google tells you that it has nothing in the cache for that page. That doesn’t necessarily mean that Google hasn’t indexed the page, though.

Figure 3-1: A page stored in the Google cache.

A cache is a temporary storage area in which a copy of something is placed. In this context, the search engine cache stores a Web page that shows what the search engine found the last time it downloaded the page. Google, Bing, and Yahoo! keep a copy of many of the pages they index, and they all also tell you the date that they indexed the cached pages. (Bing currently also uses a little green triangle, just like Google, at the end of the URL; click that to see the Cached page link pop up. Yahoo! currently puts a Cached link in gray text immediately after the URL in the search results.)

A cache is a temporary storage area in which a copy of something is placed. In this context, the search engine cache stores a Web page that shows what the search engine found the last time it downloaded the page. Google, Bing, and Yahoo! keep a copy of many of the pages they index, and they all also tell you the date that they indexed the cached pages. (Bing currently also uses a little green triangle, just like Google, at the end of the URL; click that to see the Cached page link pop up. Yahoo! currently puts a Cached link in gray text immediately after the URL in the search results.)

You can also go directly to the cached page on Google. Type the following into the Google search box:

cache:http://yourdomain.com/page.htm

Replace yourdomain.com with your actual domain name, and page.htm with the actual page name, of course. When you click Search, Google checks to see whether it has the page in its cache.

What if Google doesn’t have the page? Does that mean your page isn’t in Google? No, not necessarily. Google may not have gotten around to caching it. Sometimes Google grabs a little information from a page but not the entire page.

You can search for a Web site at Google another way, too. Simply type the domain name into the Google search box and click Search. Google returns that site’s home page at the top of the results, generally followed by more pages from the site.

You can search for a Web site at Google another way, too. Simply type the domain name into the Google search box and click Search. Google returns that site’s home page at the top of the results, generally followed by more pages from the site.

Yahoo! and Bing

And now, here’s a bonus. The search syntax I used to see what Google had in its index for RodentRacing.com — site:rodentracing.com — not only works on Google but also Yahoo! and Bing. That’s right, type the same thing into any of these search sites and you see how many pages on the Web site are in the index (though doing this at Yahoo!, of course, gets you the Bing results because they share the same index).

Open Directory Project

You might also want know whether your site is listed in the Open Directory Project (www.dmoz.org; assuming that the site still functions when you read this). This is a large directory of Web sites, actually owned by AOL although volunteer run; it’s potentially important, because its data is “syndicated” to many different Web sites, providing you with many links back to your site. (You find out about the importance of links in Chapter 16.) If your site isn’t in the directory, it should be (if possible; getting it in there can be quite difficult).

Just type the domain name, without the www. piece. If your site is in the index, the Open Directory Project will tell you. If it isn’t, you should try to list it; see Chapter 14.

Taking Action If You’re Not Listed

What if you search for your site in the search engines and can’t find it? If the site isn’t in Google or Bing, you have a huge problem. (If the site isn’t in the Open Directory Project, see Chapter 14.) Or perhaps your home page, or maybe one or two pages, are indexed, but nothing else within your site is indexed. There are two basic reasons your site isn’t being indexed in the search engines:

· The search engines haven’t found your site yet. The solution is relatively easy, though you won’t get it done in an hour.

· The search engines have found your site but, for several possible reasons, can’t or won’t index it. This is a serious problem, though in some cases you can fix it quickly.

These are the specific reasons your site may not be indexed, in order of likelihood, more or less:

· There are no links pointing to the site, so the search engines don’t know the site exists.

· The Web server is unreliable.

· The robots.txt is blocking search-engine access to the site.

· The robots meta tag is blocking pages individually.

· You bought a garbage domain name.

· The site is using some kind of navigation structure that search engines can’t read, so they can’t find their way through the site.

· The site is creating dynamic pages that search engines choose not to read (this is quite rare today).

· You have a “canonical” tag referencing another Web site (very unlikely, though possible if you are inheriting the site from someone else).

No links

The single most common reason that search engines don’t index sites is that they don’t know the sites exist. I frequently work with clients whose sites, we discover, are not indexed; when I examine the incoming links, I find that…there aren’t any.

The search engines “crawl” the Web, following links from site to site to site. If there are no links from other Web sites, pointing to your site, the search engines will never find your site.

You may have heard that you can “submit” your site to the search engines, and you should definitely do that; not by using “submission services,” but by submitting an XML sitemap through the Google and Bing Webmaster accounts (see Chapter 13 for the details). But this may not be enough. Even if the search engines know your site exists — because you told them it does — if they see no links from other sites, they may not care. In fact, if no other site cares enough to link to your site, why should the search engines care? If you have no links from other sites to yours, the search engines may not index it, or they may take their sweet time getting around to it, or perhaps they’ll index a little piece of it but not much.

So, the solution is simple; get some links right away! Ask all your friends, colleagues, and family members to link to it, from whatever Web sites and social-network accounts they have. Then read Chapters 16 and 17 to learn about links.

Unreliable Web Server

Another reason that search engines may not index a site is that the site is on an unreliable Web server. If your site keeps crashing — or if it’s unavailable when the search engines come by to crawl it — they can’t crawl it. Or maybe they won’t, because even if they can get in now and then, they don’t want unreliable sites listed in the search results. That’s “bad user experience.”

So check your site. Is it incredibly slow to load, or is it down a lot of the time? You might even sign up with a site-checking service such as www.pingdom.com or https://uptimerobot.com (the former has a free trial, the latter has a free, reduced-service account).

robots.txt is blocking your site

Enter this URL into your browser (replacing domain with your actual domain name) and press Enter: www.domain.com/robots.txt.

If a simple text file loads into your browser, you’re looking at the robots.txt file. (If you see a server error instead, move onto the next issue. You don’t have a robots.txt file.)

The robots.txt file provides instructions to “robots” — search as search engines’ search bots — about what areas of your site can and cannot be indexed. If you see a line that says Disallow, read Chapter 21 to find out how robots.txt works. It’s common to block some areas of the site, but sometimes people make mistakes in robots.txt and accidentally block crawling.

robots meta tags are blocking pages

It’s also possible that individual robot meta tags are blocking specific pages — perhaps all your pages. Check out your pages to see if robots tags are causing problems:

1. Open the source code of your Web pages.

In the three primary browsers — Chrome, Firefox, and Internet Explorer — type Ctrl-U (Command-U on the Mac, sometimes) or right-click and select either View Page Source or View Source.

2. Search the page for the text robots.

If your pages are using robots meta tags, you’ll see something like <META NAME=“robots”.

3. If you don’t find this tag in your pages, fuggedaboutit.

4. If you do find a robots meta tag in your pages, see Chapter 21 for information on figuring out problems.

Bad domain name

Did you recently buy a domain name? It’s possible that you purchased a domain name that had previously been blocked by the search engines because a previous owner was using the domain used for various nefarious — “spammy” — practices, attempting to trick the search engines but getting caught. (I talk about these practices in Chapters 9 and 21.)

Firstly, if nobody has ever used the domain name before, then the name can’t be bad; you can use a service such as www.domainhistory.net or www.domaintools.com to see the domain name’s history and find out whether it’s been used before.

If the domain previously has been in use, how do you know if it has problems? How do you know if the site has been penalized by the search engines? There is simply no easy answer to this question, as the search engines don’t have a public list of spammy sites. Google says there are indications of problems that you can look for, but no definitive answer:

· If you are buying a domain name that is currently in use, and the site has links pointing to it but is not indexed, that’s a bad sign.

· If the old site is not up and running — perhaps you are buying an old expired domain name — go to www.archive.org and search the Wayback Machine to see what the Web site on that domain name looked like at various times in the past. Does it look “spammy”? It is an ugly site with little real value or real content, perhaps promoting get-rich-quick schemes, online gambling, pharmaceuticals or the like? Does it have a lot of what looks like auto-generated content, perhaps really clumsily written? Did it use a lot of SEO tricks (see Chapter 9)? Those are big problems.

· Search for the domain name and see what people on the Web are saying about the domain name. Are people complaining about it, stating that it uses SEO tricks? Obviously, another problem.

If you think this is a possible problem, I suggest you Google the phrase matt cutts buy domain spam still rank youtube and see the Matt Cutts video on this issue (I talk a little about Matt Cutts in Chapter 23). It may be possible to “revive” a “damaged” domain name, though you’re probably better off just getting another domain name!

Unreadable navigation

Problems with navigation structures are much less common than they used to be, because search engines today are far better at reading Web pages than they were in the early days. Still, just in case …

A site may have perfectly readable pages, with the exception that the searchbots — the programs search engines use to index Web sites — can’t negotiate the site navigation. The searchbots can reach the home page, index it, and read it, but they can’t go any further.

Why can’t the searchbots find their way through? The navigation system may have been created using JavaScript, and because search engines mostly ignore JavaScript, they don’t find the links in the script. Look at this example:

<SCRIPT TYPE="javascript" SRC="/menu/menu.js"></SCRIPT>

In many sites, this is how navigation bars are placed into each page: Pages call an external JavaScript, held in menu.js in the menu subdirectory. Search engines may not read menu.js, in which case they’ll never read the links in the script. And it’s not just JavaScript; problems can be caused by putting navigation in Flash and Silverlight (Adobe’s and Microsoft’s animation formats).

However, these days Google does read and execute JavaScript, and does a good job with Flash, too, so this sort of thing is unlikely to be a problem. (Google states that they don’t do so well with Silverlight.)

But it’s not all about Google. As recently as May 2015, Bing had these statements in its Webmaster guidelines:

Don’t bury links to content inside JavaScript … don’t bury links in Javascript/flash/Silverlight; keep content out of these as well … avoid housing content inside Flash or JavaScript — these block crawlers from finding the content … The technology used on your website can sometimes prevent Bingbot from being able to find your content. Rich media (Flash, JavaScript, and so forth) can lead to Bing not being able to crawl through navigation, or not see content embedded in a web page.

Of course, there are other search engines, not as big as Google and Bing but worth keeping happy nonetheless.

If you think your navigation system may be causing problems, try these simple ways to help search engines find their way around your site, whether or not your navigation structure is hidden:

If you think your navigation system may be causing problems, try these simple ways to help search engines find their way around your site, whether or not your navigation structure is hidden:

· Create more text links throughout the site. Many Web sites have a main navigation structure and then duplicate the structure by using simple text links at the bottom of the page. You should do the same.

· Add an HTML sitemap page to your site. This page contains links to most or all of the pages on your Web site. Of course, you also want to link to the sitemap page from those little links at the bottom of the home page.

· If working with Flash, use sIFR (Scalable Inman Flash Replacement), which combines Flash with search-engine readable text.

Dealing with dynamic pages

This is another case in which things are much better today than in the past. In the past, dynamic sites often had problems getting indexed — that is pages with long, complicated URLs that are being created on the fly when a browser requests them. The data is pulled from a database, pasted into a Web page template, and sent to the user’s browser. The long, complicated URL is a database query requesting the data; for example, product information that should be placed into a page, like this:

http://yourdomain.com/products/index.html?&DID=18&CATID=13&ObjectGroup_ID=79

Search engines often wouldn’t index such pages, for a variety of reasons explained in Chapter 9.

Such URLs are unlikely to be a problem these days, but there’s still a really good reason for not using URLs like this: It would be better, from an SEO perspective, to have keywords in your URLs, rather than database-query nonsense. There’s a way to do that, using URL rewriting, which I explain in Chapter 7.

Another problem is caused by session IDs — URLs that are different every time the page is displayed. Look at this example:

http://yourdomain.com/buyAHome.do;jsessionid=07D3CCD4D9A6A9F3CF9CAD4F9A728F44

Each time someone visits this site, the server assigns a special ID number to the visitor. That means the URL is never the same, so Google probably won’t index it. In fact, Google used to recommend that sites not use session IDs (there are technical alternatives). It’s still probably a good idea today to avoid ssession IDs, if possible.

Search engines may choose not to index pages with session IDs. If the search engine finds links that appear to have session IDs in them, it quite likely will not index the referenced page, in order to avoid filling the search index with duplicates.

Search engines may choose not to index pages with session IDs. If the search engine finds links that appear to have session IDs in them, it quite likely will not index the referenced page, in order to avoid filling the search index with duplicates.

The Canonical tag

This problem is probably quite rare, though does happen now and then. There’s something called the canonical tag, which looks like this:

<link rel="canonical" href="https://yourdomain.com/rodents/blue-mice.html"/>

This tag is used in situations in which a Web site might deliver the same content using different URLs; articles in a blog might appear in multiple sections, content might be delivered with session IDs, content might be syndicated onto other Web sites, and so on.

So the canonical tag tells the search engines, “This is the original URL for this page; don’t index the page you’re looking at, index this other one.” Clearly, a misplaced canonical tag can cause pages to not get indexed.

Picking Good Keywords

Getting search engines to recognize and index your Web site can be a problem, as the first part of this chapter makes clear. Another huge problem — one that has little or nothing to do with the technological limitations of search engines — is that many companies have no idea whatkeywords (the words people are using at search engines to search for Web sites) they should be using. They try to guess the appropriate keywords, without knowing what people are really using in search engines.

I explain keywords in detail in Chapter 6, but here’s how to do a quick keyword analysis:

1. Point your browser to https://adwords.google.com/select/KeywordToolExternal.

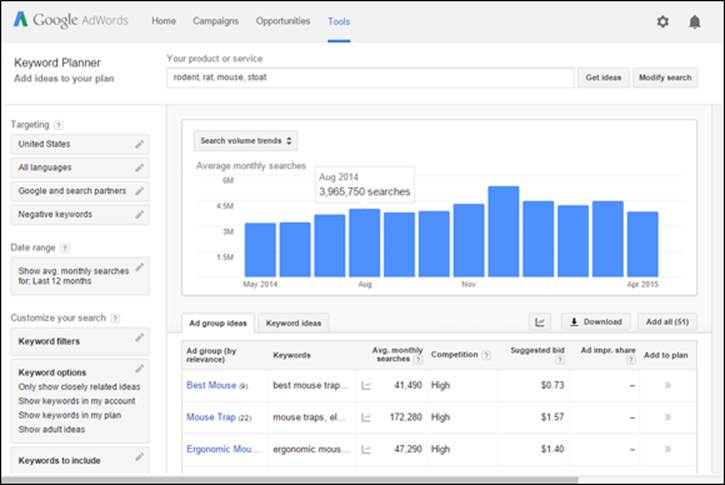

Log into your Google account (yes, you’ll need a Google account). You see the Google AdWords Keyword Planner. AdWords is Google’s PPC (pay per click) division.

2. In the top search box, type a keyword you think people may use to search for your products or services and then click Search.

3. Click the Get Ideas button at the bottom.

The tool returns a list of keywords, showing you how often that term and related terms are used by people searching on Google and partner sites (see Figure 3-2). Click between the Ad Group Ideas and Keyword Ideas to see different groupings of keywords

Figure 3-2: The Google AdWords Keyword Planner provides a quick way to check keywords.

You may find that the keyword you guessed is perfect. Or you may discover better words, or, even if your guess was good, find several other great keywords. A detailed keyword analysis almost always turns up keywords or keyword phrases you need to know about. I often speak with clients whose sites rank really well for the chosen keywords, but they are totally unaware that they are missing some other, very popular, terms; it’s a common problem.

Don’t spend a lot of time on this task right now. See whether you can come up with some useful keywords in a few minutes and then move on; see Chapter 6 for details about this process.

Examining Your Pages

Making your Web pages “search engine–friendly” was probably not uppermost in your mind when you sat down to design your Web site. That means your Web pages — and the Web pages of millions of others — may have a few problems in the search engine–friendly category. Fortunately, such problems are pretty easy to spot; you can fix some of them quickly, but others are more troublesome.

Using frames

To examine your pages for problems, you need to read the pages’ source code. Remember, I said you’d need to be able to understand HTML! To see the source code, choose View ⇒ Source or View ⇒ Page Source in your browser. (Or use a tool such as Firebug, an add-on designed for Firefox but available, in a lite form, for other browsers. See www.getfirebug.com.)

When you first peek at the source code for your site, you may discover that your site is using frames. (Of course, if you built the site yourself, you already know whether it uses frames. However, you may be examining a site built by someone else.) You may see something like this on the page:

<HTML>

<HEAD>

</HEAD>

<FRAMESET ROWS="20%,80%">

<FRAME SRC="navbar.html">

<FRAME SRC="content.html">

</FRAMESET>

<BODY>

</BODY>

</HTML>

When you choose View ⇒ Source or View ⇒ Page Source in your browser, you’re viewing the source of the frame-definition document, which tells the browser how to set up the frames. In the preceding example, the browser creates two frame rows, one taking up the top 20 percent of the browser and the other taking up the bottom 80 percent. In the top frame, the browser places content taken from the navbar.html file; content from content.html goes into the bottom frame.

Framed sites don’t index well. The pages in the internal frames get orphaned in search engines; each page ends up in search results alone, without the navigation frames with which they were intended to be displayed. The good news is that framed sites now are rare; they were very popular at the turn of the century, but seem to have fallen out of favor. Still, I run into them now and then.

Framed sites are bad news for many reasons. I discuss frames in more detail in Chapter 8, but here are a few quick fixes:

Framed sites are bad news for many reasons. I discuss frames in more detail in Chapter 8, but here are a few quick fixes:

· Add TITLE and DESCRIPTION tags between the <HEAD> and </HEAD> tags. (To see what these tags are and how they can help with your frame issues, check out the next two sections.)

· Add <NOFRAMES> and </NOFRAMES> tags between the <BODY> and </BODY> tags and place 200 to 300 words of keyword-rich content between the tags. The NOFRAMES text is designed to be displayed by browsers that can’t work with frames, and search engines will read this text, although they won’t rate it as highly as normal text (because many designers have used <NOFRAMES> tags as a trick to get more keywords into a Web site, and because the NOFRAMES text is almost never seen these days, because almost no users have browsers that don’t work with frames).

· In the text between the <NOFRAMES> tags, include a number of links to other pages in your site to help search engines find their way through.

· Make sure every page in the site contains a link back to the home page, in case it’s found "orphaned" in the search index.

Looking at the TITLE tags

TITLE tags tell a browser what text to display in the browser’s title bar and tabs, and they’re very important to search engines. Quite reasonably, search engines figure that the TITLE tags may indicate the page’s title — and, therefore, its subject.

Open your site’s home page and then choose View ⇒ Source in your browser to see the page source. A window opens, showing you what the page’s HTML looks like. Here’s what you should see at the top of the page:

<HTML>

<HEAD>

<TITLE>Your title text is here</TITLE>

Here are a few problems you may have with your <TITLE> tags:

· They’re not there. Many pages simply don’t have <TITLE> tags. If they don’t, you’re failing to give the search engines one of the most important pieces of information about the page’s subject matter.

· They’re in the wrong position. Sometimes you find the <TITLE> tags, but they’re way down in the page. If they’re too low in the page, search engines may not find them.

· There are two sets. Now and then I see sites that have two sets of <TITLE> tags on each page; in this case, the search engines will probably read the first and ignore the second.

· Every page on the site has the same <TITLE> tag. Many sites use the exact same tag on every single page. Bad idea! Every <TITLE> tag should be different.

· They’re there, but they’re poor. The <TITLE> tags don’t contain the proper keywords.

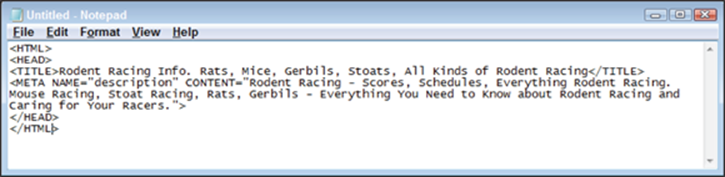

Your TITLE tags should be immediately below the <HEAD> tag and should contain useful keywords. Have 40 to 60 characters between the <TITLE> and </TITLE> tags (including spaces) and, perhaps, repeat the primary keywords once. If you’re working on your Rodent Racing Web site, for example, you might have something like this:

Your TITLE tags should be immediately below the <HEAD> tag and should contain useful keywords. Have 40 to 60 characters between the <TITLE> and </TITLE> tags (including spaces) and, perhaps, repeat the primary keywords once. If you’re working on your Rodent Racing Web site, for example, you might have something like this:

<TITLE>Rodent Racing Info. Rats, Mice, Gerbils, Stoats, All Kinds of Rodent Racing</TITLE>

Find out more about keywords in Chapter 6 and titles in Chapter 7.

Examining the DESCRIPTION tag

The DESCRIPTION tag is important because search engines may index it (under the reasonable assumption that the description describes the contents of the page) and, in many cases, use the DESCRIPTION tag to provide the site description on the search results page. Thus you might think of the DESCRIPTION tag as serving two purposes: to help with search rank and as a "sales pitch" to convince people viewing the search-results page to click your link. (Google says it doesn’t use the tag for indexing, but it’s still important because Google will often display the tag contents in the search-results page.)

Open a Web page, open the HTML source (select View ⇒ Source from your browser’s menu), and then take a quick look at the DESCRIPTION tag. It should look something like this:

<META NAME="description" CONTENT="your description goes here">

Sites often have the same problems with DESCRIPTION tags as they do with <TITLE> tags. The tags aren’t there, are hidden away deep down in the page, are duplicated, or simply aren’t very good.

Place the DESCRIPTION tag immediately below the <TITLE> tags (see Figure 3-3) and create a keyworded description of up to 250 characters (again, including spaces). Here’s an example:

<META NAME="description" CONTENT="Rodent Racing - Scores, Schedules, Everything Rodent Racing. Mouse Racing, Stoat Racing, Rats, Gerbils - Everything You Need to Know about Rodent Racing and Caring for Your Racers.">

Figure 3-3: A clean start to your Web page, showing the <TITLE> and <DESCRIPTION> tags.

Sometimes Web developers switch the attributes in the tag, putting the CONTENT= before the NAME=, like this:

<META CONTENT="your description goes here" NAME="description">

I’m sure the order of the attributes isn’t important for Google or Bing, but I have seen it confuse some smaller systems in the past. There’s no real reason to switch the order of the attributes so I’d recommend not doing it.

I’m sure the order of the attributes isn’t important for Google or Bing, but I have seen it confuse some smaller systems in the past. There’s no real reason to switch the order of the attributes so I’d recommend not doing it.

Giving search engines something to read

You don’t necessarily have to pick through the HTML code of your Web page to evaluate how search engine–friendly it is. You can find out a lot just by looking at the Web page in the browser. Determine whether you have any text on the page. Page content — text that search engines can read — is essential, but many Web sites don’t have any page content on the front page and often have little or none on interior pages. This is often a problem for e-commerce sites, which all too frequently have nothing more than a short blurb for each product they are selling, ending up with a content-light site.

Here are some potential problems:

· Having a (usually pointless) Flash intro on your site

· Creating a totally Flash-based site

· Embedding much of the text on your site into images, rather than relying on readable text

· Banking on flashy visuals to hide the fact that your site is light on content

· Using the wrong keywords (Chapter 6 explains how to pick keywords.)

If you have these types of problems, they can often be time consuming to fix. (Sorry, you may run over the one-hour timetable by several weeks.) The next several sections detail ways you might overcome the problems.

Eliminating Flash and Silverlight

Huh? What’s Flash? You’ve seen those silly animations when you arrive at a Web site, with a little Skip Intro link hidden away in the page. Words and pictures appear and disappear, scroll across the pages, and so on. These are Adobe Flash (formerly Macromedia Flash) files, or perhaps Microsoft Silverlight files.

Huh? What’s Flash? You’ve seen those silly animations when you arrive at a Web site, with a little Skip Intro link hidden away in the page. Words and pictures appear and disappear, scroll across the pages, and so on. These are Adobe Flash (formerly Macromedia Flash) files, or perhaps Microsoft Silverlight files.

I suggest that you kill the Flash intro on your site. They don’t hurt your site in the search engines (unless, of course, you’re removing indexable text and replacing it with Flash), but they don’t help, either, and I rarely see a Flash intro that actually serves any purpose. In most cases, they’re nothing but an irritation to site visitors. (The majority of Flash intros are created because the Web designer likes playing with Flash.) And a site that is nothing but Flash — no real text; everything’s in the Flash file — is a disaster from a search engine perspective. (Indeed, since I’ve been writing this book Flash intros have fallen out of favor to a great degree, but they’re still out there.)

If you’re really wedded to your Flash intro, though — and there are occasionally some that make sense — there are ways to use Flash and still do a good job in the search engines; see Chapter 9 for information on the SWFObject method. Just don’t expect Flash on its own to work well in the search engines.

Replacing images with real text

If you have an image-heavy Web site, in which all or most of the text is embedded onto images, you need to get rid of the images and replace them with real text. If the search engine can’t read the text, it can’t index it.

It may not be immediately clear whether text on the page is real text or images. You can quickly figure it out a couple of ways:

It may not be immediately clear whether text on the page is real text or images. You can quickly figure it out a couple of ways:

· Try to select the text in the browser with your mouse. If it’s real text, you can select it character by character. If it’s not real text, you simply can’t select it — you’ll probably end up selecting an image.

· Right-click the text, and if you see menu options, such as Save Image and Copy Image, you know it’s an image, not text.

Using more keywords

The light-content issue can be a real problem. Some sites are designed to be light on content, and sometimes this approach is perfectly valid in terms of design and usability. However, search engines have a bias for content — that is, for text they can read. (I discuss this issue in more depth in Chapter 11.) In general, the more text — with the right keywords — the better.

Using the right keywords in the right places

Suppose that you do have text, and plenty of it. But does the text have the right keywords? The ones discovered with the Google AdWords Keyword Planner earlier in this chapter? It should.

Where keywords are placed and what they look like is also important. Search engines use position and format as clues to importance. Here are a few simple techniques you can use — but don’t overdo it!

· Use keywords in folder names and filenames, and in page files and image files.

· Use keywords near the top of the page.

· Place keywords into <H> (heading) tags.

· Use bold and italic keywords; search engines take note of these.

· Put keywords into bulleted lists; search engines also take note of this.

· Use keywords multiple times on a page, but don’t use a keyword or keyword phrase too often. If your page sounds really clumsy through over-repetition, it may be too much.

You can avoid over-repetition by using synonyms.

You can avoid over-repetition by using synonyms.

Ensure that the links between pages within your site contain keywords. Think about all the sites you’ve visited recently. How many use links with no keywords in them? They use buttons, graphic navigation bars, short little links that you have to guess at, click here links, and so on. Big mistakes.

Some writers have suggested that you should never use click here because it sounds silly and people know they’re supposed to click. I disagree, and research shows that using the words can sometimes increase the number of clicks on a particular link. However, for search-engine purposes, you should rarely, if ever, use a link with only the words click here in the link text; you should include keywords in the link.

Some writers have suggested that you should never use click here because it sounds silly and people know they’re supposed to click. I disagree, and research shows that using the words can sometimes increase the number of clicks on a particular link. However, for search-engine purposes, you should rarely, if ever, use a link with only the words click here in the link text; you should include keywords in the link.

To reiterate, when you create links, include keywords in the links wherever possible. For example, on your rodent-racing site, if you’re pointing to the scores page, don’t create a link that says To find the most recent rodent racing scores, click here or, perhaps, To find the most recent racing scores, go to the scores page. Instead, get a few more keywords into the links, like this: To find the most recent racing scores, go to the rodent racing scores page. That tells the search engine that the referenced page is about rodent racing scores.

Getting Your Site Indexed

So your pages are ready, but you still have the indexing problem. Your pages are, to put it bluntly, just not in the search engine! How do you fix that problem?

For the Open Directory Project, you have to go to dmoz.org and register directly, but before doing that, you should read Chapter 14. With Google, Bing, and Ask.com, the process is a little more time consuming and complicated.

The best way to get into the search engines is to have them find the pages by following links pointing to the site. In some cases, you can ask the search engines to come to your site and pick up your pages. However, if you ask search engines to index your site, they probably won’t do it. And if they do come and index your site, doing so may take weeks or months. Asking them to come to your site is unreliable.

So how do you get indexed? The good news is that you can often get indexed by some of the search engines very quickly.

Find another Web site to link to your site, right away. I’m not talking about a full-blown link campaign here, with all the advantages I describe in Chapters 16 and 17. You simply want to get search engines — particularly Google, Bing, and Ask.com — to pick up the site and index it. Call friends, colleagues, and relatives who own or control Web sites, and ask them to link to your site; many people have blogs these days, or even social-networking pages they can link from. Of course, you want sites that are already indexed by search engines. The searchbots have to follow the links to your site.

When you ask friends, colleagues, and relatives to link to you, specify what you want the links to say. No click here or company name links for you. You want to place keywords into the link text. Something like Visit this site for all your rodent racing needs - mice, rats, stoats, gerbils, and all other kinds of rodent racing. Keywords in links are a powerful way to tell a search engine what your site is about.

When you ask friends, colleagues, and relatives to link to you, specify what you want the links to say. No click here or company name links for you. You want to place keywords into the link text. Something like Visit this site for all your rodent racing needs - mice, rats, stoats, gerbils, and all other kinds of rodent racing. Keywords in links are a powerful way to tell a search engine what your site is about.

After the sites have links pointing to yours, it can take from a few days to a few weeks to get into the search engines. With Google, if you place the links right before Googlebot indexes one of the sites, you may be in the index in a few days. I once placed some pages on a client’s Web site on a Tuesday and found them in Google (ranked near the top) on Friday. But Google can also take several weeks to index a site. The best way to increase your chances of getting into search engines quickly is to get as many links as you can on as many sites as possible.

You should also create an XML sitemap, submit that to Google and Bing, and add a line in your robots.txt file that points to the sitemap so that search systems — such as Ask.com — that don’t provide a way for you to submit the sitemap can still find it. You find out all about that inChapter 13.