The Core iOS Developer’s Cookbook, Fifth Edition (2014)

Chapter 8. Common Controllers

The iOS SDK provides a wealth of system-supplied controllers that you can use in your day-to-day development tasks. This chapter introduces some of the most popular ones. You’ll read about selecting images from your device library, snapping photos, and recording and editing videos. You’ll discover how to allow users to compose e-mails and text messages, and how to post updates to social services like Twitter and Facebook. Each controller offers a way to leverage prepackaged iOS system functionality. Here’s the know-how you need to get started using them.

Image Picker Controller

The UIImagePickerController class enables users to select images from a device’s media library and to snap pictures with its camera. It is somewhat of a living fossil; its system-supplied interface was created back in the early days of iPhone OS. Over time, as Apple rolled out devices with video recording (iOS 3.1) and front and rear cameras (iOS 4), the class evolved. It introduced photo and video editing, customizable camera view overlays, and more.

Image Sources

The image picker works with three sources:

UIImagePickerControllerSourceTypePhotoLibrary—This source contains all images synced to iOS. Material in this source includes images snapped by the user (Camera Roll), from photo streams, from albums synced from computers, copied via the camera connection kit, and so on.

UIImagePickerControllerSourceTypeSavedPhotosAlbum—This source refers only to the Camera Roll, which consists of pictures and videos captured by the user on units with cameras or to the Saved Photos album for noncamera units. Photo stream items captured on other devices also sync into the Camera Roll.

UIImagePickerControllerSourceTypeCamera—This source enables users to shoot pictures with a built-in iPhone camera. The source provides support for front and back camera selection and both still and video capture.

Although you might want more nuanced access to iCloud and to shared and individual photo streams, for now you can access your entire library, just the Camera Roll, or just the Camera. Submit your enhancement suggestions to http://bugreport.apple.com.

Presenting the Picker on iPhone and iPad

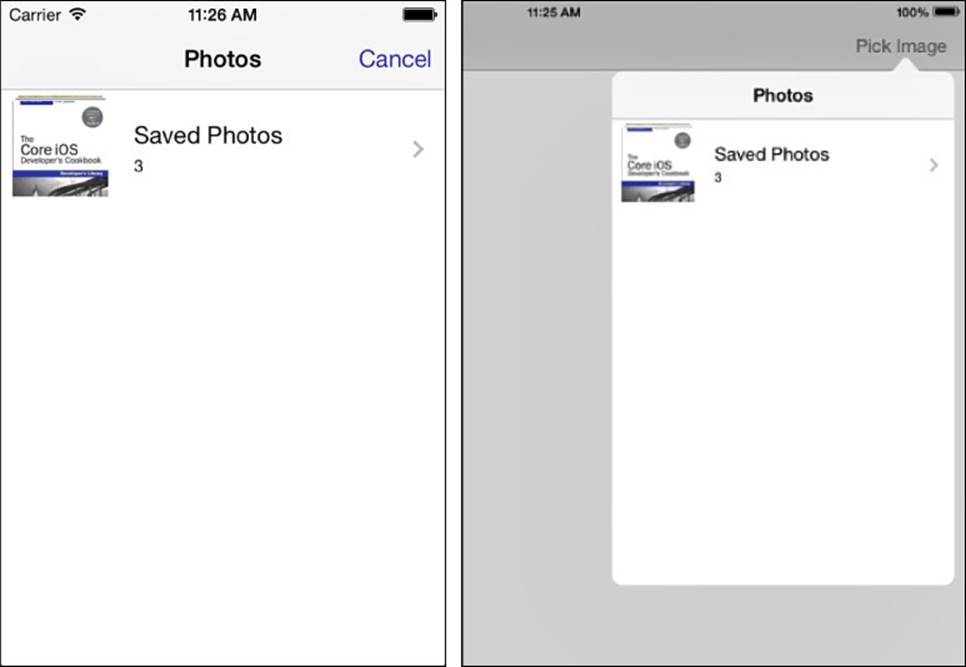

Figure 8-1 shows the image picker presented on an iPhone and iPad, using a library source. The UIImagePickerController class is designed to operate in a modal presentation on iPhone-like devices (left) or a popover on tablets (right).

Figure 8-1 The core image picker allows users to select images from pictures stored in the media library.

On iPhone-like devices, present the picker modally. On the iPad, embed pickers into popovers instead. Never push image pickers onto an existing navigation stack. On older versions of iOS, doing so would create a second navigation bar under the primary one. On modern versions of iOS, it throws a nasty exception: "Pushing a navigation controller is not supported by the image picker".

Recipe: Selecting Images

In its simplest role, the image picker enables users to browse their library and select a stored photo. Recipe 8-1 demonstrates how to create and present a picker and retrieve an image selected by the user. Before proceeding to the recipe itself, you need to know about two preliminaries: how to add photos to the simulator and how to work with the assets library module.

How To: Adding Photos to the Simulator

Before running this recipe on a Mac, you might want to populate the simulator’s photo collection. You can do this in two ways. First, you can drop images onto the simulator from Finder. Each image opens in Mobile Safari, where you can then tap-and-hold and choose Save Image to copy the image to your photo library.

Once you set up your test photo collection as you like, navigate to the Application Support folder in your home library on your Mac. Open the iPhone Simulator folder and then the folder for the iOS version you’re currently using (for example, 7.0). Inside, you’ll find a Media folder. The path to the Media folder will look something like this: /Users/(Your Account)/Library/Application Support/iPhone Simulator/(OS Version)/Media.

Back up the newly populated Media folder to a convenient location. Creating a backup enables you to restore it in the future without having to re-add each photo individually. Each time you reset the simulator’s contents and settings, this material gets deleted. Having a folder on hand that’s ready to drop in and test with can be a huge time saver.

Alternatively, purchase a copy of Ecamm’s PhoneView (http://ecamm.com). PhoneView offers access to a device’s Media folder through the Apple File Connection (AFC) service. Connect an iPhone or iPad, launch the application, and then drag and drop folders from PhoneView to your Mac. Make sure you check Show Entire Disk in PhoneView preferences to see all the relevant folders.

Using PhoneView, copy the DCIM, PhotoData, and Photos folders from a device to a folder on your Macintosh. Once copied, quit the simulator and add the copied folders into the ~/Library/Application Support/iPhone Simulator/(OS Version)/Media destination. When you next launch the simulator, your new media will be waiting for you in the Photos app.

The Assets Library Module

This recipe uses the assets library module. Be sure to add it to your source file with @import AssetsLibrary.

Using the assets library may sound complicated, but there are strong underlying reasons why this is a best practice for working with image pickers. An image picker may return an asset URL without providing a direct image to use. Recipe 8-1 assumes that this is a possibility and offers a method to load an image from the assets library (loadImageFromAssetURL:into:). A typical URL looks something like this:

assets-library://asset/asset.JPG?id=553F6592-43C9-45A0-B851-28A726727436&ext=JPG

This URL provides direct access to media.

Fortunately, Apple has now moved past an extremely annoying assets library issue. Historically, iOS queried the user for permission to use his or her location—permissions that users would often deny. Apps would get stuck because you cannot force the system to ask again. Beginning with iOS 6, the message properly states that the app would like to access a user’s photos rather than location, hopefully leading users to grant access. Determine your authorization situation by querying the class’s authorizationStatus. You can reset these granted privileges by opening Settings > Privacy and updating service-based permissions (like location and photo access) on an app-by-app basis.

Unfortunately, as of the initial release of iOS 7, Apple added a nasty bug related to asset library authorization on the iPad. A crash occurs when returning from the permission request if you display a picker in a popover. Restarting the app resolves the issue but provides a poor first-run experience. A workaround is to request asset library access prior to displaying the popover:

// Force authorization for asset library

[assetsLibrary enumerateGroupsWithTypes:ALAssetsGroupAll

usingBlock:^(ALAssetsGroup *group, BOOL *stop) {

// If authorized, catch the final iteration and display popover

if (group == nil)

{

dispatch_async(dispatch_get_main_queue(), ^{

popover = [[UIPopoverController alloc]

initWithContentViewController:

viewControllerToPresent];

popover.delegate = self;

[popover presentPopoverFromBarButtonItem:

self.navigationItem.rightBarButtonItem

permittedArrowDirections:

UIPopoverArrowDirectionAny

animated:YES];

});

}

*stop = YES;

} failureBlock:nil];
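Before forcing authorization, you can query the current status, as mentioned earlier. The following is a minimal sketch, not part of the recipe; the helper name PhotoAccessGranted is hypothetical, while the status constants come from the assets library module.

```objc
@import Foundation;
@import AssetsLibrary;

// Hypothetical helper: returns YES only when the user has already
// granted this app access to the photo library
static BOOL PhotoAccessGranted(void)
{
    switch ([ALAssetsLibrary authorizationStatus])
    {
        case ALAuthorizationStatusAuthorized:
            return YES;
        case ALAuthorizationStatusNotDetermined: // user not yet asked
        case ALAuthorizationStatusRestricted:    // blocked by device policy
        case ALAuthorizationStatusDenied:        // user refused access
        default:
            return NO;
    }
}
```

A not-determined status is the case that triggers the system permission alert, so that is the one to handle before presenting a popover.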

With the procedural details addressed, the next section introduces the image picker itself.

Presenting a Picker

Create an image picker by allocating and initializing it. Next, set its source type to the library (all images) or Camera Roll (captured images). Recipe 8-1 sets the photo library source type, allowing users to browse through all library images.

UIImagePickerController *picker = [[UIImagePickerController alloc] init];

picker.sourceType = UIImagePickerControllerSourceTypePhotoLibrary;

An optional editing property (allowsEditing) adds a step to the interactive selection process. When enabled, it allows users to scale and frame the image they picked before finishing their selection. When it is disabled, any media selection immediately redirects control to the next phase of the picker’s life cycle.

Be sure to set the picker’s delegate property. The delegate object must conform to the UINavigationControllerDelegate and UIImagePickerControllerDelegate protocols; it receives callbacks after a user has selected an image or cancelled selection. When using an image picker controller with popovers, declare the UIPopoverControllerDelegate protocol as well.
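As a rough illustration of those protocol declarations, a view controller that hosts the picker might be declared like this. The class name TestBedViewController is an assumption made for this sketch.

```objc
@import UIKit;

// TestBedViewController is a hypothetical name. The picker's delegate
// must adopt the first two protocols; the third is needed only when
// the picker is embedded in a popover.
@interface TestBedViewController : UIViewController
    <UINavigationControllerDelegate,
     UIImagePickerControllerDelegate,
     UIPopoverControllerDelegate>
@end
```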

When working on iPhone-like devices, always present the picker modally; check for the active device at runtime. The following test (iOS 3.2 and later) returns true when run on an iPhone and false on an iPad:

#define IS_IPHONE (UI_USER_INTERFACE_IDIOM() == UIUserInterfaceIdiomPhone)

The following snippet shows the typical presentation patterns for image pickers:

if (IS_IPHONE)

{

[self presentViewController:picker animated:YES completion:nil];

}

else

{

if (popover) [popover dismissPopoverAnimated:NO];

popover = [[UIPopoverController alloc]

initWithContentViewController:picker];

popover.delegate = self;

[popover presentPopoverFromBarButtonItem:

self.navigationItem.rightBarButtonItem

permittedArrowDirections:UIPopoverArrowDirectionAny

animated:YES];

}

Handling Delegate Callbacks

Recipe 8-1 considers the following three possible image picker callback scenarios:

The user has successfully selected an image.

The user has tapped Cancel (only available on modal presentations).

The user has dismissed the popover that embeds the picker by tapping outside it.

The last two cases are simple. For a modal presentation, dismiss the controller. For a popover, remove any local references holding onto the instance. Processing a selection takes a little more work.

Pickers finish their lives by returning a custom information dictionary to their assigned delegate. This info dictionary contains key/value pairs related to the user’s selection. Depending on the way the image picker has been set up and the kind of media the user selects, the dictionary may contain few or many of these keys.

For example, when working with images on the simulator dropped in via Safari, expect to see nothing more than a media type and a reference URL. Images shot on a device and then edited through the picker may contain all six keys listed here:

UIImagePickerControllerMediaType—Defines the kind of media selected by the user—normally public.image for images or public.movie for movies. Media types are defined in the Mobile Core Services framework. Media types are primarily used in this context for adding items to the system pasteboard.

UIImagePickerControllerCropRect—Returns the portion of the image selected by the user as an NSValue that stores a CGRect.

UIImagePickerControllerOriginalImage—Offers a UIImage instance with the original (unedited) image contents.

UIImagePickerControllerEditedImage—Provides the edited version of the image, containing the portion of the picture selected by the user. The UIImage returned is small, sized to fit the device screen.

UIImagePickerControllerReferenceURL—Specifies an assets library URL for the selected asset. This URL always points to the original version of an item, regardless of whether a user has cropped or trimmed an asset.

UIImagePickerControllerMediaMetadata—Offers metadata for a photograph taken within the image picker.
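To see which of these keys a given selection actually returns, you can inspect the dictionary in your delegate callback. This sketch assumes a hypothetical helper named DescribePickerInfo; the keys and the kUTTypeImage constant are the system-supplied ones.

```objc
@import UIKit;
@import MobileCoreServices;

// Hypothetical helper: logs the entries of a picker info dictionary.
// Any key may be missing, so each lookup may return nil.
static void DescribePickerInfo(NSDictionary *info)
{
    NSString *mediaType = info[UIImagePickerControllerMediaType];
    BOOL isImage =
        [mediaType isEqualToString:(__bridge NSString *)kUTTypeImage];
    NSLog(@"Media type: %@ (image: %@)",
        mediaType, isImage ? @"YES" : @"NO");

    // The crop rectangle arrives boxed in an NSValue
    NSValue *cropValue = info[UIImagePickerControllerCropRect];
    if (cropValue)
        NSLog(@"Crop rect: %@",
            NSStringFromCGRect([cropValue CGRectValue]));

    NSLog(@"Has original: %@, has edited: %@",
        info[UIImagePickerControllerOriginalImage] ? @"YES" : @"NO",
        info[UIImagePickerControllerEditedImage] ? @"YES" : @"NO");
    NSLog(@"Reference URL: %@",
        info[UIImagePickerControllerReferenceURL]);
    NSLog(@"Metadata: %@",
        info[UIImagePickerControllerMediaMetadata]);
}
```

Calling this from imagePickerController:didFinishPickingMediaWithInfo: during development is a quick way to see how the returned keys vary with source type and editing settings.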

Recipe 8-1 uses several steps to move from the info dictionary contents to produce a recovered image. First, it checks whether the dictionary contains an edited version. If it does not find this, it accesses the original image. If that fails, it retrieves the reference URL and tries to load it through the assets library. Normally, at the end of these steps, the application has a valid image instance to work with. If it does not, it logs an error and returns.

Finally, don’t forget to dismiss modally presented controllers before wrapping up work in the delegate callback.

Note

When it comes to user interaction zoology, UIImagePickerController is a cow. It is slow to load. It eagerly consumes application memory and spends extra time chewing its cud. Be aware of these limitations when designing your apps and do not tip your image picker.

Recipe 8-1 Selecting Images

#define IS_IPHONE (UI_USER_INTERFACE_IDIOM() == \

UIUserInterfaceIdiomPhone)

// Dismiss the picker

- (void)performDismiss

{

if (IS_IPHONE)

[self dismissViewControllerAnimated:YES completion:nil];

else

{

[popover dismissPopoverAnimated:YES];

popover = nil;

}

}

// Present the picker

- (void)presentViewController:

(UIViewController *)viewControllerToPresent

{

if (IS_IPHONE)

{

[self presentViewController:viewControllerToPresent

animated:YES completion:nil];

}

else

{

// Workaround to an Apple crasher when asking for asset

// library authorization with a popover displayed

ALAssetsLibrary * assetsLibrary =

[[ALAssetsLibrary alloc] init];

ALAuthorizationStatus authStatus;

if (NSFoundationVersionNumber >

NSFoundationVersionNumber_iOS_6_0)

authStatus = [ALAssetsLibrary authorizationStatus];

else

authStatus = ALAuthorizationStatusAuthorized;

if (authStatus == ALAuthorizationStatusAuthorized)

{

popover = [[UIPopoverController alloc]

initWithContentViewController:viewControllerToPresent];

popover.delegate = self;

[popover presentPopoverFromBarButtonItem:

self.navigationItem.rightBarButtonItem

permittedArrowDirections:UIPopoverArrowDirectionAny

animated:YES];

}

else if (authStatus == ALAuthorizationStatusNotDetermined)

{

// Force authorization

[assetsLibrary enumerateGroupsWithTypes:ALAssetsGroupAll

usingBlock:^(ALAssetsGroup *group, BOOL *stop){

// If authorized, catch the final iteration

// and display popover

if (group == nil)

{

dispatch_async(dispatch_get_main_queue(), ^{

popover = [[UIPopoverController alloc]

initWithContentViewController:

viewControllerToPresent];

popover.delegate = self;

[popover presentPopoverFromBarButtonItem:

self.navigationItem.rightBarButtonItem

permittedArrowDirections:

UIPopoverArrowDirectionAny

animated:YES];

});

}

*stop = YES;

} failureBlock:nil];

}

}

}

// Popover was dismissed

- (void)popoverControllerDidDismissPopover:

(UIPopoverController *)aPopoverController

{

popover = nil;

}

// Retrieve an image from an asset URL

- (void)loadImageFromAssetURL:(NSURL *)assetURL

into:(UIImage **)image

{

ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];

ALAssetsLibraryAssetForURLResultBlock resultsBlock =

^(ALAsset *asset)

{

ALAssetRepresentation *assetRepresentation =

[asset defaultRepresentation];

CGImageRef cgImage =

[assetRepresentation CGImageWithOptions:nil];

CFRetain(cgImage); // Thanks, Oliver Drobnik

if (image) *image = [UIImage imageWithCGImage:cgImage];

CFRelease(cgImage);

};

ALAssetsLibraryAccessFailureBlock failureBlock =

^(NSError *__strong error)

{

NSLog(@"Error retrieving asset from url: %@",

error.localizedFailureReason);

};

[library assetForURL:assetURL

resultBlock:resultsBlock failureBlock:failureBlock];

}

// Update image and for iPhone, dismiss the controller

- (void)imagePickerController:(UIImagePickerController *)picker

didFinishPickingMediaWithInfo:(NSDictionary *)info

{

// Use the edited image if available

UIImage __autoreleasing *image =

info[UIImagePickerControllerEditedImage];

// If not, grab the original image

if (!image)

image = info[UIImagePickerControllerOriginalImage];

// If still no luck, check for an asset URL

NSURL *assetURL = info[UIImagePickerControllerReferenceURL];

if (!image && !assetURL)

{

NSLog(@"Cannot retrieve an image from the selected item. Giving up.");

}

else if (!image)

{

// Retrieve the image from the asset library

[self loadImageFromAssetURL:assetURL into:&image];

}

// Display the image

if (image)

imageView.image = image;

if (IS_IPHONE)

[self performDismiss];

}

// iPhone-like devices only: dismiss the picker with cancel button

- (void)imagePickerControllerDidCancel:

(UIImagePickerController *)picker

{

[self performDismiss];

}

- (void)pickImage

{

if (popover) return;

// Create and initialize the picker

UIImagePickerController *picker =

[[UIImagePickerController alloc] init];

picker.sourceType =

UIImagePickerControllerSourceTypePhotoLibrary;

picker.allowsEditing = editSwitch.isOn;

picker.delegate = self;

[self presentViewController:picker];

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.
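One caveat about asset loading: assetForURL:resultBlock:failureBlock: reports its result asynchronously, so an image delivered through an out-parameter may not be available when the calling method continues. A possible alternative, sketched here and not taken from the recipe, hands the image back through a completion block. The names LoadImageFromAssetURL and ImageLoadCompletion are hypothetical.

```objc
@import UIKit;
@import AssetsLibrary;

// Hypothetical completion type: receives the image, or nil on failure
typedef void (^ImageLoadCompletion)(UIImage *image);

// Hypothetical helper: loads a full-resolution image from an asset URL
// and delivers it on the main queue
static void LoadImageFromAssetURL(NSURL *assetURL,
    ImageLoadCompletion completion)
{
    if (!completion) return;

    ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];
    [library assetForURL:assetURL resultBlock:^(ALAsset *asset) {
        // Referencing the library captures it in this block, so it
        // stays alive while the asset is read
        (void)library;

        UIImage *image = nil;
        ALAssetRepresentation *rep = [asset defaultRepresentation];
        CGImageRef cgImage = [rep fullResolutionImage];
        if (cgImage)
            image = [UIImage imageWithCGImage:cgImage
                scale:rep.scale
                orientation:(UIImageOrientation)rep.orientation];

        dispatch_async(dispatch_get_main_queue(), ^{
            completion(image);
        });
    } failureBlock:^(NSError *error) {
        NSLog(@"Error retrieving asset from url: %@",
            error.localizedFailureReason);
        dispatch_async(dispatch_get_main_queue(), ^{
            completion(nil);
        });
    }];
}
```

A delegate callback could then call LoadImageFromAssetURL(assetURL, ^(UIImage *image) { if (image) imageView.image = image; }); and update the image view only once the asset has loaded.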

Recipe: Snapping Photos

In addition to selecting pictures, the image picker controller enables you to snap photos with a device’s built-in camera. Because cameras are not available on all iOS units (specifically, older iPod touch and iPad devices), begin by checking whether the device running the application supports camera usage:

if ([UIImagePickerController isSourceTypeAvailable:

UIImagePickerControllerSourceTypeCamera]) ...

The rule is this: Never offer camera-based features for devices that don’t have cameras. Although iOS 7 was deployed only to camera-ready devices, no one but Apple knows what hardware will be released in the future. As unlikely as it sounds, Apple could introduce new models without cameras. Until Apple says otherwise, assume that the possibility exists for a noncamera system, even under modern iOS releases. Further, assume that this method will accurately report state for camera-enabled devices whose source has been disabled through some future system setting.
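One way to honor this rule is to fall back to the photo library whenever the camera source is unavailable. This is only a sketch; the helper name PreferredSourceType is an assumption, not part of the recipe.

```objc
@import UIKit;

// Hypothetical helper: prefer the camera, but fall back to the
// photo library on devices that report no camera source
static UIImagePickerControllerSourceType PreferredSourceType(void)
{
    if ([UIImagePickerController isSourceTypeAvailable:
            UIImagePickerControllerSourceTypeCamera])
        return UIImagePickerControllerSourceTypeCamera;
    return UIImagePickerControllerSourceTypePhotoLibrary;
}
```

Assigning the result to picker.sourceType keeps the rest of the setup code identical for both kinds of devices.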

Setting Up the Picker

You instantiate a camera version of the image picker the same way you create a picture selection one. Just change the source type from the library or Camera Roll to the camera:

picker.sourceType = UIImagePickerControllerSourceTypeCamera;

As with other modes, you can allow or disallow image editing as part of the photo-capture process by setting the allowsEditing property.

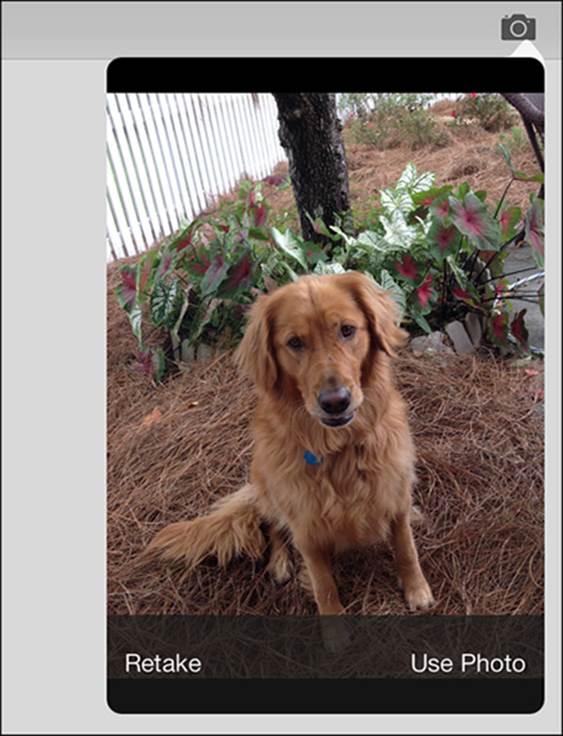

Although the setup with UIImagePickerControllerSourceTypeCamera is the same as with UIImagePickerControllerSourceTypePhotoLibrary, the user experience differs slightly (see Figure 8-2). The camera picker offers a preview that displays after the user taps the camera icon to snap a photo. This preview lets users retake the photo or use the photo as is. Once they tap Use, control passes to the next phase. If you’ve enabled image editing, the user will be able to edit the image. If not, control moves to the standard “did finish picking” method in the delegate.

Figure 8-2 The camera version of the image picker controller offers a distinct user experience for snapping photos.

Most modern devices offer more than one camera. All iOS 7–capable devices ship with both a rear- and front-facing camera. Even among the iOS 6–supported devices, only the iPhone 3GS has a single camera. Assign the cameraDevice property to select which camera you want to use. The rear camera is always the default.

The isCameraDeviceAvailable: class method queries whether a specific camera device is available. This snippet checks to see whether the front camera is available, and if it is, selects it:

if ([UIImagePickerController isCameraDeviceAvailable:

UIImagePickerControllerCameraDeviceFront])

picker.cameraDevice = UIImagePickerControllerCameraDeviceFront;

Here are a few more points about the camera or cameras that you can access through the UIImagePickerController class:

You can query the device’s ability to use flash by using the isFlashAvailableForCameraDevice: class method. Supply either the front or back device constant. This method returns YES for available flash, or otherwise NO.

When a camera supports flash, you can set the cameraFlashMode property directly to auto (UIImagePickerControllerCameraFlashModeAuto, which is the default), to always used (UIImagePickerControllerCameraFlashModeOn), or always off (UIImagePickerControllerCameraFlashModeOff). Selecting off disables the flash regardless of ambient light conditions.

Choose between photo and video capture by setting the cameraCaptureMode property. The picker defaults to photo-capture mode. You can test what modes are available for a device by using availableCaptureModesForCameraDevice:. This returns an array of NSNumber objects, each of which encodes a valid capture mode, either photo (UIImagePickerControllerCameraCaptureModePhoto) or video (UIImagePickerControllerCameraCaptureModeVideo).
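The points above can be combined into a short configuration routine. The following sketch assumes a hypothetical helper named ConfigureCamera and simply logs whether video capture is offered; it does not switch capture modes.

```objc
@import UIKit;

// Hypothetical helper: applies camera settings to a picker when the
// hardware supports them
static void ConfigureCamera(UIImagePickerController *picker)
{
    if (![UIImagePickerController isSourceTypeAvailable:
            UIImagePickerControllerSourceTypeCamera])
        return;

    // Camera-only properties are valid once the source is the camera
    picker.sourceType = UIImagePickerControllerSourceTypeCamera;
    UIImagePickerControllerCameraDevice device = picker.cameraDevice;

    // Turn the flash off only when this camera actually has one
    if ([UIImagePickerController
            isFlashAvailableForCameraDevice:device])
        picker.cameraFlashMode =
            UIImagePickerControllerCameraFlashModeOff;

    // Capture modes come back as boxed NSNumber values
    NSArray *modes = [UIImagePickerController
        availableCaptureModesForCameraDevice:device];
    BOOL canRecordVideo = [modes containsObject:
        @(UIImagePickerControllerCameraCaptureModeVideo)];
    NSLog(@"Video capture available: %@",
        canRecordVideo ? @"YES" : @"NO");
}
```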

Displaying Images

When working with photos, keep image size in mind. Snapped pictures, especially those from high-resolution cameras, can be quite large, even in the age of Retina displays. Pictures captured with front-facing cameras, which use lower-quality sensors, are much smaller.

Content modes provide an in-app solution to displaying large images. They allow image views to scale their embedded images to available screen space. Consider using one of the following modes:

The UIViewContentModeScaleAspectFit mode ensures that the entire image is shown with the aspect ratio retained. The image may be padded with empty rectangles on the sides or the top and bottom to preserve that aspect.

The UIViewContentModeScaleAspectFill mode displays as much of the image as possible, while filling the entire view. Some content may be clipped so that the entire view’s bounds are filled.
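Either mode takes a single property assignment. This sketch uses a hypothetical helper, ImageViewForPhoto, to show both; enabling clipsToBounds for the fill mode is an assumption about the desired result, keeping overflow from drawing outside the view.

```objc
@import UIKit;

// Hypothetical helper: builds an image view that scales a large photo
// to fit inside the view or to fill it completely
static UIImageView *ImageViewForPhoto(UIImage *photo, BOOL fillView)
{
    UIImageView *imageView =
        [[UIImageView alloc] initWithImage:photo];
    imageView.contentMode = fillView ?
        UIViewContentModeScaleAspectFill :
        UIViewContentModeScaleAspectFit;

    // Aspect fill can draw past the view's bounds unless clipped
    imageView.clipsToBounds = fillView;
    return imageView;
}
```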

Saving Images to the Photo Album

Save a snapped image (or any UIImage instance, actually) to the photo album by calling UIImageWriteToSavedPhotosAlbum(). This function takes four arguments. The first is the image to save. The second and third arguments specify a callback target and selector, typically your primary view controller and image:didFinishSavingWithError:contextInfo:. The fourth argument is an optional context pointer. Whatever selector you use, it must take three arguments: an image, an error, and a pointer to the passed context information.

Recipe 8-2 uses the UIImageWriteToSavedPhotosAlbum() function to demonstrate how to snap a new image, allow user edits, and then save the image to the photo album.

Recipe 8-2 Snapping Pictures

// "Finished saving" callback method

- (void)image:(UIImage *)image

didFinishSavingWithError:(NSError *)error

contextInfo:(void *)contextInfo

{

// Handle the end of the image write process

if (!error)

NSLog(@"Image written to photo album");

else

NSLog(@"Error writing to photo album: %@",

error.localizedFailureReason);

}

// Save the returned image

- (void)imagePickerController:(UIImagePickerController *)picker

didFinishPickingMediaWithInfo:(NSDictionary *)info

{

// Use the edited image if available

UIImage __autoreleasing *image =

info[UIImagePickerControllerEditedImage];

// If not, grab the original image

if (!image)

image = info[UIImagePickerControllerOriginalImage];

// If still no luck, check for an asset URL

NSURL *assetURL = info[UIImagePickerControllerReferenceURL];

if (!image && !assetURL)

{

NSLog(@"Cannot retrieve an image from selected item. Giving up.");

}

else if (!image)

{

NSLog(@"Retrieving from Assets Library");

[self loadImageFromAssetURL:assetURL into:&image];

}

if (image)

{

// Save the image

UIImageWriteToSavedPhotosAlbum(image, self,

@selector(image:didFinishSavingWithError:contextInfo:),

NULL);

imageView.image = image;

}

[self performDismiss];

}

- (void)loadView

{

self.view = [[UIView alloc] init];

self.view.backgroundColor = [UIColor whiteColor];

imageView = [[UIImageView alloc] init];

imageView.contentMode = UIViewContentModeScaleAspectFit;

[self.view addSubview:imageView];

PREPCONSTRAINTS(imageView);

STRETCH_VIEW(self.view, imageView);

// Only present the "Snap" option for camera-ready devices

if ([UIImagePickerController isSourceTypeAvailable:

UIImagePickerControllerSourceTypeCamera])

self.navigationItem.rightBarButtonItem =

SYSBARBUTTON(UIBarButtonSystemItemCamera,

@selector(snapImage));

// Set up title view with Edits: ON/OFF

editSwitch = [[UISwitch alloc] init];

UILabel * editLabel =

[[UILabel alloc] initWithFrame:CGRectMake(0, 0, 40, 13)];

editLabel.text = @"Edits";

self.navigationItem.leftBarButtonItems =

@[[[UIBarButtonItem alloc] initWithCustomView:editLabel],

[[UIBarButtonItem alloc] initWithCustomView:editSwitch]];

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: Recording Video

Even in the age of ubiquitous cameras on iOS 7, exercise caution regarding not just the availability but also the kinds of cameras provided by each device. When recording video, your application should check whether a device supports camera-based video recording.

This is a two-step process. It isn’t sufficient to check only for a camera. The first-generation and 3G iPhones had cameras (in contrast to early iPad and iPod touch models, which shipped without them) but could not record video. Only the 3GS and newer units provided video-recording capabilities and, however unlikely, future models could ship without cameras or with still-only cameras.

That means you perform two checks: first, that a camera is available, and second, that the available capture types include video. This method returns a Boolean value indicating whether the device running the application is video-ready:

- (BOOL)videoRecordingAvailable

{

// The source type must be available

if (![UIImagePickerController isSourceTypeAvailable:

UIImagePickerControllerSourceTypeCamera])

return NO;

// And the media type must include the movie type

NSArray *mediaTypes = [UIImagePickerController

availableMediaTypesForSourceType:

UIImagePickerControllerSourceTypeCamera];

return [mediaTypes containsObject:(NSString *)kUTTypeMovie];

}

This method searches for a movie type (kUTTypeMovie, aka public.movie) in the results for the available media types query. Uniform Type Identifiers (UTIs) are strings that identify abstract types for common file formats such as images, movies, and data. UTIs are discussed in further detail in Chapter 11, “Documents and Data Sharing.” These types are defined in the Mobile Core Services module. Be sure to import the module in your source file:

@import MobileCoreServices;

Creating the Video-Recording Picker

Recording video is almost identical to capturing still images with the camera. Recipe 8-3 allocates and initializes a new image picker, sets its delegate, and presents it:

UIImagePickerController *picker =

[[UIImagePickerController alloc] init];

picker.sourceType = UIImagePickerControllerSourceTypeCamera;

picker.videoQuality = UIImagePickerControllerQualityTypeMedium;

picker.mediaTypes = @[(NSString *)kUTTypeMovie]; // public.movie

picker.delegate = self;

Choose the video quality you want to record. As you improve quality, the data stored per second increases. Select from high (UIImagePickerControllerQualityTypeHigh), medium (UIImagePickerControllerQualityTypeMedium), low (UIImagePickerControllerQualityTypeLow), or VGA (UIImagePickerControllerQualityType640x480).

As with image picking, the video version allows you to set an allowsEditing property, as discussed in Recipe 8-5.

Saving the Video

The info dictionary returned by the video picker contains a UIImagePickerControllerMediaURL key. This media URL points to the captured video, which is stored in a temporary folder within the app sandbox. Use the UISaveVideoAtPathToSavedPhotosAlbum() function to store the video to your library.

This save function takes four arguments: the path to the video you want to add to the library, a callback target, a selector with three arguments (basically identical to the selector used during image save callbacks), and an optional context. The function calls the target with that selector after it finishes its work, allowing you to check for success.

Recipe 8-3 Recording Video

- (void)video:(NSString *)videoPath

didFinishSavingWithError:(NSError *)error

contextInfo:(void *)contextInfo

{

if (!error)

self.title = @"Saved!";

else

NSLog(@"Error saving video: %@",

error.localizedFailureReason);

}

- (void)saveVideo:(NSURL *)mediaURL

{

// check if video is compatible with album

BOOL compatible =

UIVideoAtPathIsCompatibleWithSavedPhotosAlbum(

mediaURL.path);

// save

if (compatible)

UISaveVideoAtPathToSavedPhotosAlbum(

mediaURL.path, self,

@selector(video:didFinishSavingWithError:contextInfo:),

NULL);

}

- (void)imagePickerController:(UIImagePickerController *)picker

didFinishPickingMediaWithInfo:(NSDictionary *)info

{

[self performDismiss];

// Save the video

NSURL *mediaURL =

info[UIImagePickerControllerMediaURL];

[self saveVideo: mediaURL];

}

- (void)recordVideo

{

if (popover) return;

self.title = nil;

// Create and initialize the picker

UIImagePickerController *picker =

[[UIImagePickerController alloc] init];

picker.sourceType = UIImagePickerControllerSourceTypeCamera;

picker.videoQuality = UIImagePickerControllerQualityTypeMedium;

picker.mediaTypes = @[(NSString *)kUTTypeMovie];

picker.delegate = self;

[self presentViewController:picker];

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: Playing Video with Media Player

The MPMoviePlayerViewController and MPMoviePlayerController classes simplify video display in your applications. Part of the Media Player framework, these classes allow you to embed video into your views or to play movies back, full screen. With the ready-built full-feature video player shown in Figure 8-3, you do little more than supply a content URL. The player provides the Done button, the time scrubber, the aspect control, and the playback controls, plus the underlying video presentation.

Figure 8-3 The Media Player framework simplifies adding video playback to your applications.

This class allows off-device streaming video as well as fixed-size local assets. Supported video standards include H.264 Baseline Profile Level 3.0 video (up to 640×480 at 30 fps) and MPEG-4 Part 2 video (Simple Profile). Most files with .mov, .mp4, .mpv, and .3gp extensions can be played. Audio support includes AAC-LC audio (up to 48 kHz) and MP3 (MPEG-1 Audio Layer 3, up to 48 kHz) stereo.

Recipe 8-4 builds on the video recording introduced in Recipe 8-3. It adds playback after each recording by switching the Camera button in the navigation bar to a Play button. Once the video finishes playing, the button returns to Camera. This recipe does not save any videos to the library, so you can record, play, and record, play, ad infinitum.

The image picker supplies a media URL, which is all you need to establish the player. Recipe 8-4 instantiates a new player and sets two properties. The first enables AirPlay, letting you stream the recorded video to an AirPlay-enabled receiver like Apple TV or a commercial application like Reflector (http://reflectorapp.com). The second sets the playback style to show the video full screen. It then presents the movie.

The two movie player classes consist of a presentable view controller and the actual player controller, which the view controller owns as a property. This is why Recipe 8-4 makes so many mentions of player.moviePlayer. The view controller class is quite small and easy to launch. The real work takes place in the player controller.

Movie players use notifications rather than delegates to communicate with applications. You subscribe to these notifications to determine when the movie starts playing, when it finishes, and when it changes state (as in pause/play). Recipe 8-4 observes two notifications: when the movie becomes playable and when it finishes.

After the movie loads and its state changes to playable, Recipe 8-4 starts playback. The movie appears full screen and continues playing until the user taps Done or the movie finishes. In either case, the player generates a finish notification. At that time, the app returns to recording mode, presenting its Camera button to allow the user to record the next video sequence.

This recipe demonstrates the basics for playing video in iOS. You are not limited to video you record yourself. The movie player controller is agnostic about its video source. You can set the content URL to a file stored in your sandbox or even point it to a compliant resource on the Internet.

Note

If your movie player opens and immediately closes, check your URLs to make sure they are valid. Do not forget that local file URLs need fileURLWithPath:, whereas remote ones can use URLWithString:.
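The distinction in this note can be sketched with a small helper. This is an illustration under stated assumptions, not code from the recipe: the function name MovieURLFromString() is hypothetical, and it assumes that any string beginning with a slash is an absolute local path.

```objective-c
#import <Foundation/Foundation.h>

// Hypothetical helper: builds a URL from either a local path or a
// remote address, choosing the matching NSURL constructor
static NSURL *MovieURLFromString(NSString *string)
{
    // Assumption: absolute local paths begin with a slash.
    // These need a file URL.
    if ([string hasPrefix:@"/"])
        return [NSURL fileURLWithPath:string];

    // Anything else is treated as a remote address
    return [NSURL URLWithString:string];
}
```

Passing a local path to URLWithString: produces a URL that is not a file URL, which is one way a player can end up with a location it cannot load.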

Recipe 8-4 Video Playback

#define SYSBARBUTTON(ITEM, SELECTOR) [[UIBarButtonItem alloc] \

initWithBarButtonSystemItem:ITEM target:self action:SELECTOR]

- (void)playMovie

{

// Prepare movie player and play

MPMoviePlayerViewController *player =

[[MPMoviePlayerViewController alloc]

initWithContentURL:mediaURL];

player.moviePlayer.allowsAirPlay = YES;

player.moviePlayer.controlStyle = MPMovieControlStyleFullscreen;

[self.navigationController

presentMoviePlayerViewControllerAnimated:player];

// Handle the end of movie playback. Block-based observers are

// removed using the token returned at registration, not self.

__block id finishObserver = [[NSNotificationCenter defaultCenter]

addObserverForName:MPMoviePlayerPlaybackDidFinishNotification

object:player.moviePlayer queue:[NSOperationQueue mainQueue]

usingBlock:^(NSNotification *notification){

// Return to recording mode

self.navigationItem.rightBarButtonItem =

SYSBARBUTTON(UIBarButtonSystemItemCamera,

@selector(recordVideo));

// Stop listening to this notification

[[NSNotificationCenter defaultCenter]

removeObserver:finishObserver];

finishObserver = nil;

}];

// Wait for the movie to load and become playable

[[NSNotificationCenter defaultCenter]

addObserverForName:MPMoviePlayerLoadStateDidChangeNotification

object:player.moviePlayer queue:[NSOperationQueue mainQueue]

usingBlock:^(NSNotification *notification) {

// When the movie sets the playable flag, start playback

if ((player.moviePlayer.loadState &

MPMovieLoadStatePlayable) != 0)

[player.moviePlayer performSelector:@selector(play)

withObject:nil afterDelay:1.0f];

}];

}

// After recording any content, allow the user to play it

- (void)imagePickerController:(UIImagePickerController *)picker

didFinishPickingMediaWithInfo:(NSDictionary *)info

{

[self performDismiss];

// recover video URL

mediaURL = info[UIImagePickerControllerMediaURL];

self.navigationItem.rightBarButtonItem =

SYSBARBUTTON(UIBarButtonSystemItemPlay,

@selector(playMovie));

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: Editing Video

Enabling an image picker’s allowsEditing property for a video source activates the yellow editing bars you’ve seen in the built-in Photos app. (Drag the grips at either side to see them in action.) During the editing step of the capture process, users drag the ends of the scrubbing track to choose the video range they want to use.

Surprisingly, the picker does not trim the video itself. Instead, it returns four items in the info dictionary:

UIImagePickerControllerMediaURL

UIImagePickerControllerMediaType

_UIImagePickerControllerVideoEditingStart

_UIImagePickerControllerVideoEditingEnd

The media URL points to the untrimmed video, which is stored in a temporary folder within the sandbox. The video start and end points are NSNumbers, containing the offsets the user chose with those yellow edit bars. The media type is public.movie.

If you save the video to the library (as shown in Recipe 8-3), it stores the unedited version, which is not what your user expects or you want. The iOS SDK offers two ways to edit video. Recipe 8-5 demonstrates how to use the AV Foundation framework to respond to the edit requests returned by the video image picker. Recipe 8-6 shows you how to pick videos from your library and use UIVideoEditorController to edit.

AV Foundation and Core Media

This recipe requires access to two very specialized modules. The AV Foundation module provides an Objective-C interface that supports media processing. Core Media uses a low-level C interface to describe media properties. Together these provide an iOS version of the Mac’s QuickTime media experience. Import both modules in your source file for this recipe.

Recipe 8-5 begins by recovering the media URL from the image picker’s info dictionary. This URL points to the temporary file in the sandbox created by the image picker. The recipe creates a new AVURLAsset from that URL. Next, it creates the export range, the times within the video that should be saved to the library. It does this by using the Core Media CMTimeRange structure, building it from the info dictionary’s start and end times. The CMTimeMakeWithSeconds() function takes two arguments: a time in seconds and a preferred timescale, the number of time units per second. This recipe uses a timescale of 1, which limits the range to whole-second resolution; pass a larger timescale, such as 600, when you need to preserve fractional offsets.
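Because a CMTime stores an integer value over a timescale, the timescale passed to CMTimeMakeWithSeconds() determines how finely a range can be expressed. The following is a minimal sketch, assuming a timescale of 600 so that sub-second offsets survive the conversion. The helper name ExportRangeFromOffsets() is hypothetical, and the value 600 is an assumption made for illustration rather than the recipe's setting.

```objective-c
#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

// Hypothetical helper: converts start and end offsets in seconds
// into a CMTimeRange. The timescale of 600 is an assumption made
// here to retain fractional seconds.
static CMTimeRange ExportRangeFromOffsets(Float64 start, Float64 end)
{
    const int32_t timescale = 600;
    CMTime startTime = CMTimeMakeWithSeconds(start, timescale);
    CMTime endTime = CMTimeMakeWithSeconds(end, timescale);

    // The range begins at startTime and lasts (endTime - startTime)
    return CMTimeRangeFromTimeToTime(startTime, endTime);
}
```

With this timescale, an offset of 1.5 seconds is stored as 900 units of 1/600 second.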

An export session allows your app to save data back out to the file system. This session does not save video to the library; that is a separate step. The session exports the trimmed video to a local file in the sandbox tmp folder, alongside the originally captured video. To create an export session, allocate it and set its asset and quality.

Recipe 8-5 saves the trimmed video to a new path. This path is identical to the one it read from but with “-trimmed” added to the core filename. The export session uses this path to set its output URL, uses the export range to specify what time range to include, and selects a QuickTime movie output file type. Then it’s ready to process the video. The export session asynchronously performs the file export, using the properties and contents of the passed asset.

When the trimmed movie is complete, save it to the central media library. Recipe 8-5 does so in the export session’s completion block.

Recipe 8-5 Trimming Video with AV Foundation

- (void)trimVideo:(NSDictionary *)info

{

// recover video URL

NSURL *mediaURL =

info[UIImagePickerControllerMediaURL];

AVURLAsset *asset =

[AVURLAsset URLAssetWithURL:mediaURL options:nil];

// Create the export range

CGFloat editingStart =

[info[@"_UIImagePickerControllerVideoEditingStart"]

floatValue];

CGFloat editingEnd =

[info[@"_UIImagePickerControllerVideoEditingEnd"]

floatValue];

CMTime startTime = CMTimeMakeWithSeconds(editingStart, 1);

CMTime endTime = CMTimeMakeWithSeconds(editingEnd, 1);

CMTimeRange exportRange =

CMTimeRangeFromTimeToTime(startTime, endTime);

// Create a trimmed version URL: file:originalpath-trimmed.mov

NSString *urlPath = mediaURL.path;

NSString *extension = urlPath.pathExtension;

NSString *base = [urlPath stringByDeletingPathExtension];

NSString *newPath = [NSString stringWithFormat:

@"%@-trimmed.%@", base, extension];

NSURL *fileURL = [NSURL fileURLWithPath:newPath];

// Establish an export session

AVAssetExportSession *session = [AVAssetExportSession

exportSessionWithAsset:asset

presetName:AVAssetExportPresetMediumQuality];

session.outputURL = fileURL;

session.outputFileType = AVFileTypeQuickTimeMovie;

session.timeRange = exportRange;

// Perform the export

[session exportAsynchronouslyWithCompletionHandler:^(){

if (session.status ==

AVAssetExportSessionStatusCompleted)

[self saveVideo:fileURL];

else if (session.status ==

AVAssetExportSessionStatusFailed)

NSLog(@"AV export session failed: %@", session.error);

else

NSLog(@"Export session status: %ld", (long)session.status);

}];

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: Picking and Editing Video

You can use the image picker class to select videos as well as images, as demonstrated in Recipe 8-6. All it takes is a little editing of the media types property. Set the picker source type as normal, to either the photo library or the saved photos album, but restrict the media types property. The following snippet shows how to set the media types to request a picker that presents video assets only:

picker.sourceType = UIImagePickerControllerSourceTypePhotoLibrary;

picker.mediaTypes = @[(NSString *)kUTTypeMovie];

Once the user selects a video, Recipe 8-6 enters edit mode. Always check that the video asset can be modified. Call the UIVideoEditorController class method canEditVideoAtPath:. This returns a Boolean value that indicates whether the video is compatible with the editor controller:

if (![UIVideoEditorController canEditVideoAtPath:vpath]) ...

If it is compatible, allocate a new video editor. The UIVideoEditorController class provides a system-supplied interface that allows users to interactively trim videos. Set its delegate and videoPath properties and present it. (This class can also be used to re-encode data to a lower quality via the videoQuality property.)

The editor uses a set of delegate callbacks that are similar but not identical to the ones used by the UIImagePickerController class. Callbacks include methods for success, failure, and user cancellation:

videoEditorController:didSaveEditedVideoToPath:

videoEditorController:didFailWithError:

videoEditorControllerDidCancel:

Cancellation occurs only when the user taps the Cancel button within the video editor. Tapping outside a popover dismisses the editor but doesn’t invoke the callback. For both cancellation and failure, Recipe 8-6 responds by resetting its interface, allowing users to pick another video.

A success callback occurs when a user has finished editing the video and taps Use. The controller saves the trimmed video to a temporary path and calls the did-save method. Do not confuse this “saving” with storing items to your photo library; this path resides in the application sandbox’s tmp folder. If you do nothing with the data, iOS deletes it the next time the device reboots. Once past this step, Recipe 8-6 offers a button to save the trimmed data into the shared iOS photo album, using the save-to-library feature introduced in Recipe 8-3.
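If you want the edited file to outlive the tmp folder without adding it to the photo album, one option is to move it elsewhere in the sandbox. The following is a minimal sketch, not part of Recipe 8-6. The helper names MoveFileToDirectory() and DocumentsDirectory() are hypothetical, and the choice of the Documents folder is an assumption.

```objective-c
#import <Foundation/Foundation.h>

// Hypothetical helper: moves the file at path into the given
// directory, keeping its filename. Returns the new URL, or nil
// on failure (for example, when a file of that name exists).
static NSURL *MoveFileToDirectory(NSString *path, NSURL *directory,
    NSError **error)
{
    NSURL *source = [NSURL fileURLWithPath:path];
    NSURL *destination = [directory
        URLByAppendingPathComponent:path.lastPathComponent];
    BOOL moved = [[NSFileManager defaultManager]
        moveItemAtURL:source toURL:destination error:error];
    return moved ? destination : nil;
}

// Hypothetical helper: the app's Documents folder
static NSURL *DocumentsDirectory(void)
{
    return [[[NSFileManager defaultManager]
        URLsForDirectory:NSDocumentDirectory
        inDomains:NSUserDomainMask] firstObject];
}
```

A did-save callback could call MoveFileToDirectory(editedVideoPath, DocumentsDirectory(), &error) and store the returned URL in place of the temporary one.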

Recipe 8-6 Using the Video Editor Controller

// The edited video is now stored in the local tmp folder

- (void)videoEditorController:(UIVideoEditorController *)editor

didSaveEditedVideoToPath:(NSString *)editedVideoPath

{

[self performDismiss];

// Update the working URL and present the Save button

mediaURL = [NSURL fileURLWithPath:editedVideoPath];

self.navigationItem.leftBarButtonItem =

BARBUTTON(@"Save", @selector(saveVideo));

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Pick", @selector(pickVideo));

}

// Handle failed edit

- (void)videoEditorController:(UIVideoEditorController *)editor

didFailWithError:(NSError *)error

{

[self performDismiss];

mediaURL = nil;

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Pick", @selector(pickVideo));

self.navigationItem.leftBarButtonItem = nil;

NSLog(@"Video edit failed: %@", error.localizedFailureReason);

}

// Handle cancel by returning to Pick state

- (void)videoEditorControllerDidCancel:

(UIVideoEditorController *)editor

{

[self performDismiss];

mediaURL = nil;

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Pick", @selector(pickVideo));

self.navigationItem.leftBarButtonItem = nil;

}

// Allow the user to edit the media with a video editor

- (void)editMedia

{

if (![UIVideoEditorController canEditVideoAtPath:mediaURL.path])

{

self.title = @"Cannot Edit Video";

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Pick", @selector(pickVideo));

return;

}

UIVideoEditorController *editor =

[[UIVideoEditorController alloc] init];

editor.videoPath = mediaURL.path;

editor.delegate = self;

[self presentViewController:editor];

}

// The user has selected a video. Offer an edit button.

- (void)imagePickerController:(UIImagePickerController *)picker

didFinishPickingMediaWithInfo:(NSDictionary *)info

{

[self performDismiss];

// Store the video URL and present an Edit button

mediaURL = info[UIImagePickerControllerMediaURL];

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Edit", @selector(editMedia));

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: E-mailing Pictures

The Message UI framework allows users to compose e-mail and text messages within applications. As with camera access and the image picker, first check whether a user’s device has been enabled for these services. A simple test allows you to determine when mail is enabled:

[MFMailComposeViewController canSendMail]

When mail capabilities are enabled, users can send their photographs via instances of MFMailComposeViewController. Texts are sent through MFMessageComposeViewController instances.

Recipe 8-7 uses this composition class to create a new mail item populated with the user-snapped photograph. The mail composition controller works best as a modally presented client on both the iPhone family and iPad. Your primary view controller presents it and waits for results via a delegate callback.

Creating Message Contents

The composition controller’s properties allow you to programmatically build a message including to/cc/bcc recipients and attachments. Recipe 8-7 demonstrates the creation of a simple HTML message with an attachment. Properties are almost universally optional. Define the subject and body contents via setSubject: and setMessageBody:isHTML:. Each method takes a string; the body method also takes a flag that indicates whether the string contains HTML.

Leave To Recipients unassigned to greet the user with an unaddressed message. The times you’ll want to prefill this field include adding call-home features such as Report a Bug or Send Feedback to the Developer or when you allow the user to choose a favorite recipient in your settings.
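A call-home feature of this kind might prefill the recipient as follows. This is a minimal sketch: the helper name FeedbackComposer(), the address, and the subject are placeholders introduced for illustration, while setToRecipients: is the composition controller's own method.

```objective-c
#import <MessageUI/MessageUI.h>

// Hypothetical helper: builds a feedback message addressed to the
// developer. The address and subject are placeholders.
static MFMailComposeViewController *FeedbackComposer(void)
{
    // Return nil when mail has not been enabled on this device
    if (![MFMailComposeViewController canSendMail]) return nil;

    MFMailComposeViewController *mcvc =
        [[MFMailComposeViewController alloc] init];
    [mcvc setToRecipients:@[@"feedback@example.com"]];
    [mcvc setSubject:@"Feedback"];
    [mcvc setMessageBody:@"" isHTML:NO];
    return mcvc;
}
```

The caller is still responsible for setting mailComposeDelegate and presenting the returned controller.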

Creating the attachment requires slightly more work. To add an attachment, you need to provide all the file components expected by the mail client. Supply data (via an NSData object), a MIME type (a string), and a filename (another string). Retrieve the image data using the UIImageJPEGRepresentation() function. This function can take time to work, so expect slight delays before the message view appears.

This recipe uses a hard-coded MIME type of image/jpeg. If you want to send other data types, you can query iOS for MIME types via typical file extensions. Use UTTypeCopyPreferredTagWithClass(), which is defined in the Mobile Core Services framework, as shown in the following method:

#import <MobileCoreServices/UTType.h>

- (NSString *) mimeTypeForExtension: (NSString *) ext

{

// Request the UTI for the file extension

CFStringRef UTI = UTTypeCreatePreferredIdentifierForTag(

kUTTagClassFilenameExtension,

(__bridge CFStringRef) ext, NULL);

if (!UTI) return nil;

// Request the MIME file type for the UTI,

// may return nil for unrecognized MIME types

NSString *mimeType = (__bridge_transfer NSString *)

UTTypeCopyPreferredTagWithClass(UTI, kUTTagClassMIMEType);

CFRelease(UTI);

return mimeType;

}

This method returns a standard MIME type based on the file extension passed to it, such as .jpg, .png, .txt, .html, and so on. Always test to see whether this method returns nil because iOS’s built-in knowledge base of extension-MIME type matches is limited. Alternatively, search the Internet for the proper MIME representations and add them to your project by hand.
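Adding MIME types by hand could take the form of a small lookup table that you consult when the system query returns nil. The following is a sketch under stated assumptions: the function name FallbackMIMETypeForExtension() is hypothetical, the table entries are examples only, and falling back to application/octet-stream for unknown extensions is a design choice made here, not a requirement of the mail composer.

```objective-c
#import <Foundation/Foundation.h>

// Hypothetical fallback: a hand-maintained table of MIME types
// keyed by file extension. The entries are examples only.
// Note: this lazy initialization is not thread-safe.
static NSString *FallbackMIMETypeForExtension(NSString *ext)
{
    static NSDictionary *table = nil;
    if (!table)
        table = @{
            @"jpg"  : @"image/jpeg",
            @"jpeg" : @"image/jpeg",
            @"png"  : @"image/png",
            @"txt"  : @"text/plain",
            @"html" : @"text/html",
        };

    // Match case-insensitively; unknown extensions fall back to a
    // generic binary type
    NSString *type = table[ext.lowercaseString];
    return type ?: @"application/octet-stream";
}
```

One way to combine the two lookups is [self mimeTypeForExtension:ext] ?: FallbackMIMETypeForExtension(ext), which prefers the system answer when one exists.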

The e-mail uses the filename you specify to name the attachment. Use any name you like. Here, the name is set to pickerimage.jpg. Because you’re just sending data, there’s no true connection between the content you send and the name you assign:

[mcvc addAttachmentData:UIImageJPEGRepresentation(image, 1.0f)

mimeType:@"image/jpeg" fileName:@"pickerimage.jpg"];

Note

When you use the iOS mail composer, attachments appear at the end of sent mail. Apple does not provide a way to embed images inside the flow of HTML text. This is due to differences between Apple and Microsoft representations.

Recipe 8-7 Sending Images by E-mail

- (void)mailComposeController:

(MFMailComposeViewController*)controller

didFinishWithResult:(MFMailComposeResult)result

error:(NSError*)error

{

// Wrap up the composer details

[self performDismiss];

switch (result)

{

case MFMailComposeResultCancelled:

NSLog(@"Mail was cancelled");

break;

case MFMailComposeResultFailed:

NSLog(@"Mail failed");

break;

case MFMailComposeResultSaved:

NSLog(@"Mail was saved");

break;

case MFMailComposeResultSent:

NSLog(@"Mail was sent");

break;

default:

break;

}

}

- (void)sendImage

{

UIImage *image = imageView.image;

if (!image) return;

// Customize the e-mail

MFMailComposeViewController *mcvc =

[[MFMailComposeViewController alloc] init];

mcvc.mailComposeDelegate = self;

// Set the subject

[mcvc setSubject:@"Here's a great photo!"];

// Create a prefilled body

NSString *body = @"<h1>Check this out</h1>\

<p>I snapped this image from the\

<code><b>UIImagePickerController</b></code>.</p>";

[mcvc setMessageBody:body isHTML:YES];

// Add the attachment

[mcvc addAttachmentData:UIImageJPEGRepresentation(image, 1.0f)

mimeType:@"image/jpeg" fileName:@"pickerimage.jpg"];

// Present the e-mail composition controller

[self presentViewController:mcvc];

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: Sending a Text Message

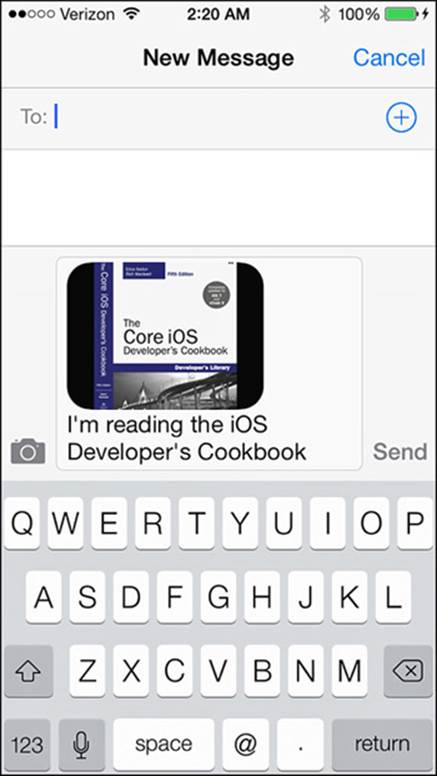

It’s even easier to send a text from your applications than to send an e-mail. This particular controller is shown in Figure 8-4. As with mail, first ensure that the capability exists on the iOS device and declare the MFMessageComposeViewControllerDelegate protocol:

[MFMessageComposeViewController canSendText]

Figure 8-4 The message compose view controller.

Monitor the availability of text support, which may change over time, by listening for the MFMessageComposeViewControllerTextMessageAvailabilityDidChangeNotification notification.
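Listening for that notification might look like the following. This is a minimal sketch: the helper name ObserveTextAvailability() is hypothetical, and it re-queries canSendText when the notification arrives rather than reading the notification's contents.

```objective-c
#import <MessageUI/MessageUI.h>

// Hypothetical helper: registers a block that runs whenever text
// availability changes. Keep the returned token and pass it to
// removeObserver: when updates are no longer needed.
static id ObserveTextAvailability(void (^handler)(BOOL canSend))
{
    return [[NSNotificationCenter defaultCenter]
        addObserverForName:
            MFMessageComposeViewControllerTextMessageAvailabilityDidChangeNotification
        object:nil queue:[NSOperationQueue mainQueue]
        usingBlock:^(NSNotification *note) {
            // Ask the class for the current state
            handler([MFMessageComposeViewController canSendText]);
        }];
}
```

A view controller could use the handler to show or hide its Send button as availability changes.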

Recipe 8-8 creates the new controller and sets its messageComposeDelegate and its body. If you know the intended recipients, you can prepopulate that field by assigning an array of phone number strings to the recipients property. Present the controller however you like and wait for the delegate callback, where you dismiss it.

In iOS 7, the message composer now supports attachments. Be sure to check for availability with the MFMessageComposeViewController class method canSendAttachments before adding yours. Recipe 8-8 adds an image to the message, if supported.

Recipe 8-8 Sending Texts

- (void)messageComposeViewController:

(MFMessageComposeViewController *)controller

didFinishWithResult:(MessageComposeResult)result

{

[self performDismiss];

switch (result)

{

case MessageComposeResultCancelled:

NSLog(@"Message was cancelled");

break;

case MessageComposeResultFailed:

NSLog(@"Message failed");

break;

case MessageComposeResultSent:

NSLog(@"Message was sent");

break;

default:

break;

}

}

- (void)sendMessage

{

MFMessageComposeViewController *mcvc =

[[MFMessageComposeViewController alloc] init];

mcvc.messageComposeDelegate = self;

if ([MFMessageComposeViewController canSendAttachments])

[mcvc addAttachmentData:

UIImagePNGRepresentation([UIImage

imageNamed:@"BookCover"])

typeIdentifier:@"public.png" filename:@"BookCover.png"];

mcvc.body = @"I'm reading the iOS Developer's Cookbook";

[self presentViewController:mcvc];

}

- (void)loadView

{

self.view = [[UIView alloc] init];

self.view.backgroundColor = [UIColor whiteColor];

if ([MFMessageComposeViewController canSendText])

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Send", @selector(sendMessage));

else

self.title = @"Cannot send texts";

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Recipe: Posting Social Updates

The Social framework offers a unified API for integrating applications with social networking services. The framework currently supports Facebook, Twitter, and the China-based Sina Weibo and Tencent Weibo. As with mail and messaging, start by testing whether the service type you want to use is available:

[SLComposeViewController isAvailableForServiceType:SLServiceTypeFacebook]

If it is, you can create a composition view controller for that service:

SLComposeViewController *fbController = [SLComposeViewController

composeViewControllerForServiceType:SLServiceTypeFacebook];

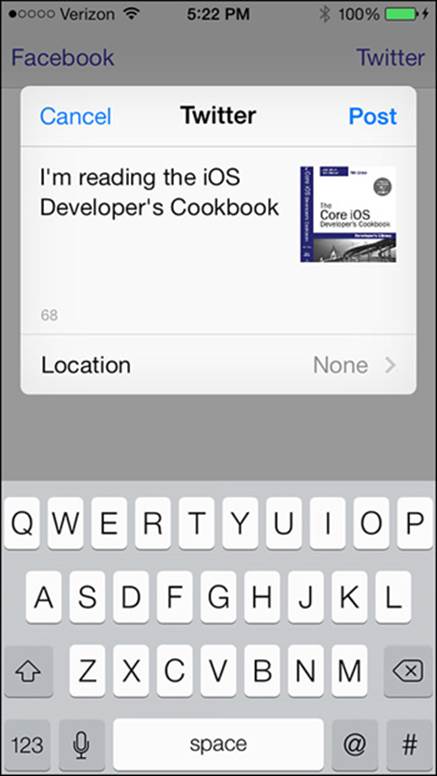

You customize a controller with images, URLs, and initial text. Recipe 8-9 demonstrates the steps to create the interface shown in Figure 8-5.

Figure 8-5 Composing Twitter messages.

Note

With the introduction of SLComposeViewController in iOS 6, the original TWTweetComposeViewController introduced in iOS 5 was deprecated. While the APIs are nearly identical, the deprecated version has quirks worth avoiding. Use the SLComposeViewController variant when Twitter sharing is needed.

Recipe 8-9 Posting Social Updates

- (void)postSocial:(NSString *)serviceType

{

// Establish the controller

SLComposeViewController *controller = [SLComposeViewController

composeViewControllerForServiceType:serviceType];

// Add text and an image

[controller addImage:[UIImage imageNamed:@"BookCover"]];

[controller setInitialText:

@"I'm reading the iOS Developer's Cookbook"];

// Define the completion handler

controller.completionHandler =

^(SLComposeViewControllerResult result){

switch (result)

{

case SLComposeViewControllerResultCancelled:

NSLog(@"Cancelled");

break;

case SLComposeViewControllerResultDone:

NSLog(@"Posted");

break;

default:

break;

}

};

// Present the controller

[self presentViewController:controller];

}

- (void)postToFacebook

{

[self postSocial:SLServiceTypeFacebook];

}

- (void)postToTwitter

{

[self postSocial:SLServiceTypeTwitter];

}

- (void)loadView

{

self.view = [[UIView alloc] init];

self.view.backgroundColor = [UIColor whiteColor];

if ([SLComposeViewController

isAvailableForServiceType:SLServiceTypeFacebook])

self.navigationItem.leftBarButtonItem =

BARBUTTON(@"Facebook", @selector(postToFacebook));

if ([SLComposeViewController

isAvailableForServiceType:SLServiceTypeTwitter])

self.navigationItem.rightBarButtonItem =

BARBUTTON(@"Twitter", @selector(postToTwitter));

}

Get This Recipe’s Code

To find this recipe’s full sample project, point your browser to https://github.com/erica/iOS-7-Cookbook and go to the folder for Chapter 8.

Data Sharing and Viewing

The previous recipes represent individualized methods of sharing information via e-mail, text message, and specific social networks. iOS provides controllers that simplify the sharing and viewing of data for a vastly larger set of data types and sharing targets. UIActivityViewController centralizes the sharing of data, including many built-in activities, such as e-mail, social sharing, printing, and many more, as well as the ability to extend the controller to your own custom activities. QLPreviewController allows the viewing of many types of data that your app likely could not handle itself. Both of these classes are covered fully in Chapter 11.

Summary

This chapter introduces a number of ready-to-use controllers that you can prepare and present to good effect. System-supplied controllers simplify programming for common tasks like tweeting and sending e-mail. Here are a few parting thoughts about the recipes you just encountered:

You can roll your own versions of a few of these controllers, but why bother? System-supplied controllers are the rare cases where enforcing your own design takes a back seat to a consistency of user experience across applications. When a user sends an e-mail, he or she expects that e-mail compose screen to look basically the same, regardless of application. Go ahead and leverage Apple system services to mail, tweet, and interact with the system media library.

The image picker controller has grown to be a bit of a Frankenclass. It has long deserved a proper refresh and redesign. From controlling sources at a fine grain to reducing its memory overhead, the class deserves some loving attention from Apple. Now that so many great media processing classes have made the jump to iOS, we’d love to see better integration with AV Foundation, Core Media, and other key technologies—and not just through a visual controller. Although preserving user privacy is critical, it would be nice if the library opened up a more flexible range of APIs (with user-directed permissions, of course).

The Social framework can do a lot more than post Facebook updates and tweets. The class lets you submit authenticated and unauthenticated service requests using appropriate security. Use the Accounts framework along with Social to retrieve login information for placing credentialed requests.