Emerging Trends in Image Processing, Computer Vision, and Pattern Recognition, 1st Edition (2015)

Part III. Registration, Matching, and Pattern Recognition

Chapter 31. Improving performance with different length templates using both of correlation and absolute difference on similar play estimation

Kyota Aoki; Ryo Aita Graduate school of Engineering, Utsunomiya University, 7-1-2, Yoto, Utsunomiya, Tochigi, Japan

Abstract

Plays in sports are described as the motions of players. This paper proposes a similar play retrieval method based on the motion compensation vectors in MPEG sports videos. This work uses a one-dimensional degenerated description of each motion image between two adjacent frames. Connecting the one-dimensional degenerated descriptions along the time direction yields a space-time image, which describes a sequence of frames as a two-dimensional image. Using this space-time image, this work evaluates the performance of using both correlation and absolute difference to retrieve a small number of plays from a huge number of frames based on a single template play. The best templates for absolute difference and for correlation differ, and we discuss the length of the templates. Our experiment records a recall of 0.899, a precision of 0.922, and an F-measure of 0.910 for 168 plays in 132485 frames with the best mix of two templates of different lengths.

Keywords

Play estimation

Motion compensation vector

MPEG video

Absolute difference

Correlation

1 Introduction

There are many sports videos, and there is a large need for content-based video retrieval. Because the amount of video is huge, an automatic indexing method is needed [1]. We have proposed a method that retrieves shots containing a similar motion based on the similarity to the motion in a sample part of a video [2–5].

In former works, we used the correlation of motions and the correlation of textures [6]. Using both the correlation of motions and the correlation of textures gave good performance.

This paper proposes a method that retrieves plays using only the motion compensation vectors in MPEG videos. Using multiple features increases performance. Many works try to index sports videos using the motions in the videos, and many try to understand the progress of games by tracking the players. Many of these works use the motion vectors in MPEG videos and succeed in finding camera work such as zoom-in, zoom-out, and pan. However, no work retrieves a play of a single player directly from motions alone. Of course, camera work plays an important role in understanding videos, and sound also plays some role. Many works use camera work and sound for understanding sports videos. Those feature-combining methods have had some success in retrieving home runs and other plays. However, those works did not succeed in retrieving plays from motions alone. Recently, many works have focused on retrieval that combines many features and their relations [7,8].

In this paper, we propose a method that retrieves similar plays from motions alone. With the help of texture, the performance of similar play retrieval increases: we showed that the combination of motions and textures gives better performance in similar play retrieval [9]. However, in many games the fields and spectators change, and the colors of playing fields change with the seasons. A motion-based method can work together with textures, sound, and camera work. In a previous paper, we proposed a method that estimates similar plays only from the motions in the motion compensation vectors of MPEG videos [10]. There are many motion estimation methods [11,12], but they need large amounts of computation. Our method gets the motions from the motion compensation vectors in MPEG videos and makes one-dimensional projections from the X motion and the Y motion. A motion compensation vector exists for each 16 × 16 pixel block, so the motion description made from the motion compensation vectors is very sparse. The one-dimensional projection represents the motions between a pair of adjacent frames as a one-dimensional color strip. The method connects the strips in the temporal direction and gets an image that has one space dimension and one time dimension. Like the temporal slice [13–15], the resulting image has a one-dimensional space axis and a one-dimensional time axis. However, our method carries information about all pixels, whereas the temporal slice method carries information only about the cross-sections. We call this image a space-time image. Using these images, the method retrieves parts of videos as fast as image retrieval does, and we can use many features defined on images.

Our previous work uses a single template space-time image and retrieves plays similar to the play described in that template [10]. However, absolute difference and correlation can use different templates, and different templates need not have the same length. In our previous works, the best template length for retrieving pitches in baseball games was 20 frames. However, those experiments were limited.

A long space-time image template is good for retrieving the same play as in the template. However, in similar play retrieval, a long template is not robust against changes in the duration of a play. A short space-time image template is robust against changes in duration, but it has weak discrimination power.

This paper discusses the effects of template duration in similar play retrieval using correlation and absolute difference on motions alone. First, we show the overall structure of the proposed method. Then, the space-time image is discussed. Next, we discuss the similarity measures based on correlation and absolute difference. Then, we show experiments on a video of a real baseball game from a Japanese TV broadcast. Finally, we conclude this work.

2 Structure of the proposed method

The proposed play retrieval method is a composition of a correlation and an absolute difference of motion space-time images [1–6]. We refer to the space-time image as the ST (space-time) image in the following. In MPEG video, each 16 × 16 pixel block has a motion compensation vector. Using the MPEG motion compensation vector as the motion description, the size of the description is 1/64 of the original frames. In other words, the static texture description is 64 times larger than the motion description in size. Taking the vector size into account, the static texture description is 96 times larger than the motion description in total amount.

The color description is suited to describing static scenes, and the motion description is suited to describing dynamic scenes. A play in a sport is a composition of motions. Therefore, the motion description is suited to describing plays in a sport. For retrieving the same play in a video, we can use any similarity measure. However, our goal is the retrieval of similar plays from videos, and the speed of a play may change. In this case, the similarity measure must be robust against the absolute amount of motion. Our motion retrieval method uses simple correlation as the similarity measure, and it works well for similar play retrieval in sports videos. However, correlation gives no hint about the absolute amount of motion: in the relative motion space, there is no difference between large motions and small motions. As a result, there are some erroneous retrievals.

The absolute difference is a widely used difference feature. In similar play retrieval, the absolute difference alone showed poor performance in our preliminary experiments [10]. However, combined with other similarity measures, the absolute difference can play a role in distinguishing among candidates that the correlation judges to be similar.

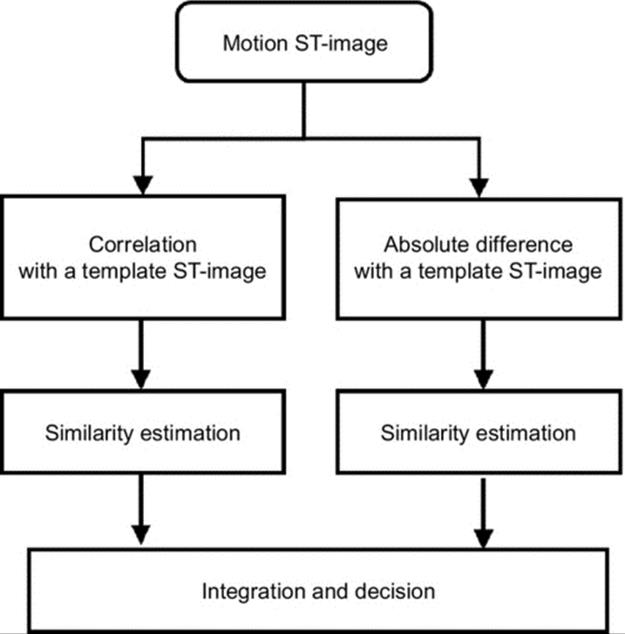

The proposed similar play retrieval method uses both the correlation and the absolute difference between a template ST image and the ST image from videos. The absolute difference easily distinguishes large differences in motion. In sports videos, the absolute difference can find camera motions. Composing the correlation-based measure and the absolute-difference-based measure, we construct the composite similar play retrieval method.

Figure 1 shows the structure of the proposed method. We use absolute difference and correlation separately. Therefore, the two templates need not have the same length.

FIGURE 1 Outline of the proposed similar play estimating method.

3 1D degeneration from videos

There are many degeneration methods. Some works use temporal slices [9,16,17]. Temporal slices are easy to make and represent videos compactly. A temporal slice is the sequence of a set of selected pixels in the frames; it carries no information about the other pixels. These works make the temporal slices from the color and textures in videos.

In this paper, we make a 1D degenerated representation using statistical features of the set of pixels. The main statistical features are the mean, mode, and median. We use the mean for making the 1D degeneration [9]. For treating sports, the motions in videos are important.

3.1 Motion Extraction from MPEG Videos and Construction of Space-Time Image

From an MPEG video, we get a two-dimensional image describing the motion compensation vectors in the video. In the following, we abbreviate space-time image as ST image. The ST image has a space axis and a time axis and represents the sequence of frames in one two-dimensional image. The ST image is a very compact description of a video, and it is easy to handle.

3.2 Motion Compensation Vectors from MPEG Videos

First, we must obtain the motions in an MPEG video. An MPEG video is a sequence of GOPs (Groups of Pictures). Each GOP starts with an I-frame and also contains B-frames and P-frames. The motion compensation vector is block-wise, and the block size is 16 × 16. For a 640 × 480 MPEG video, the image of motion compensation vectors is only 40 × 30 pixels.

3.3 Space-Time Image

We have a two-dimensional motion vector on every motion compensation block. The amount of information is 2/(16 × 16 × 3) of the original color video, which is very small compared with the original color frames. A baseball game can last about 2 h, and such a video has about 200k frames. Comparing frame by frame requires a huge amount of computation, so it is very difficult to retrieve similar parts of a video in that way.

We can retrieve similar parts of videos using a classical video retrieval method based on representative frames. However, it is difficult to retrieve similar parts of videos based on the players' motions, because motion leads to changes in subsequent frames.

We can use many feature-based methods to retrieve similar parts of videos. However, the applicability of a method depends on the features selected, and generality may be lost by using specific features.

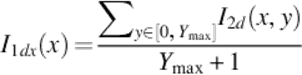

This paper uses one-dimensional degeneration to reduce the amount of information without losing generality. We make the one-dimensional degeneration of each frame as:

(1)

(2)

Equation (1) makes the one-dimensional degenerated description in the X-direction from a two-dimensional image, and Equation (2) makes the one in the Y-direction. In Equations (1) and (2), I2d(x, y) stands for the intensity at pixel (x, y), and (Xmax, Ymax) is the coordinate of the upper-right corner.

The resulting one-dimensional degenerated description is defined as:

(3)

There are two directions in which to make a one-dimensional degeneration. We use both directions, the X-axis and the Y-axis, using Equations (1) and (2). In each color plane, we have a one-dimensional degenerated description. We connect the projection onto the X-axis and the transposed projection onto the Y-axis. We represent the X-direction motion in red and the Y-direction motion in green; blue carries no motion value. Then, we have a one-dimensional degenerated color strip from the motion compensation vectors. For convenience, we set blue to 255 when both the X- and Y-direction motions are zero.
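The construction of one color strip can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the mean is used as the projection statistic, the motion images are plain nested lists indexed as `image[y][x]`, the motion values are kept as raw numbers rather than scaled to a color range, and the blue flag is set on the projected values. The names `project_mean` and `color_strip` are hypothetical.

```python
def project_mean(image):
    """Mean projections of a 2D image (image[y][x]) onto the X-axis and the Y-axis."""
    height = len(image)
    width = len(image[0])
    # Projection onto the X-axis: average each column over y.
    onto_x = [sum(image[y][x] for y in range(height)) / height for x in range(width)]
    # Projection onto the Y-axis: average each row over x.
    onto_y = [sum(row) / width for row in image]
    return onto_x, onto_y


def color_strip(motion_x, motion_y):
    """Build one strip of (red, green, blue) values of length width + height.

    Assumption: red carries the X-direction motion, green the Y-direction
    motion, and blue is 255 only where both projected motions are zero.
    """
    red = [v for part in project_mean(motion_x) for v in part]
    green = [v for part in project_mean(motion_y) for v in part]
    blue = [255 if r == 0 and g == 0 else 0 for r, g in zip(red, green)]
    return list(zip(red, green, blue))


# Tiny 2 x 2 motion image: X-direction motion only in the right column.
motion_x = [[0, 2], [0, 4]]
motion_y = [[0, 0], [0, 0]]
strip = color_strip(motion_x, motion_y)
```

For a 20 × 15 motion image the strip has 35 entries. Because a mean of signed motions can cancel to zero, a real implementation may need to decide whether the blue flag is set before or after projection; the sketch sets it after.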

We connect the one-dimensional color strips describing motion frames along the time direction. Connecting the one-dimensional color strips gives a color image that has one space axis and one time axis; this is the ST image. In the following experiments, we use half-size frames of 320 × 240 pixels. In the MPEG format, each 16 × 16 pixel block holds a motion compensation vector, which reduces the amount of information to 1/256. The resulting motion image is 20 × 15 pixels. The 1D degenerated description has 20 + 15 = 35 pixels, which is 7/60 of the original 20 × 15 pixel image. As a result, using the ST image of motion compensation vectors in MPEG video reduces the amount of information to 0.045% of the original half-size video frames. In the ST image used in this paper, the X-axis holds space and the Y-axis holds time. There is no reduction of information along the time axis.

Similar motion retrieval estimates what kind of motion exists at each place. On an ST image, retrieving similar parts of a video costs the same as image retrieval.

There are many similar-image retrieval methods, and they can be applied to ST images describing the motions in videos. This paper uses the correlation between two images. We normalize the resulting correlations to compensate for the variance among videos. We have two independent correlations between two ST images, one from each color plane. The blue plane exists only as a visual aid. We use the X-direction motion and the Y-direction motion in the red and green planes.
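The reduction ratios quoted above can be checked with exact fractions. The following sketch counts pixels only, as the text does, and ignores the number of channels per pixel; the helper names `motion_grid` and `st_reduction` are hypothetical.

```python
from fractions import Fraction


def motion_grid(width, height, block=16):
    """Size of the motion image when each block x block square holds one vector."""
    return width // block, height // block


def st_reduction(width, height, block=16):
    """Return (block ratio, strip ratio, overall ratio) as exact fractions."""
    grid_x, grid_y = motion_grid(width, height, block)
    # One motion vector per block: motion image pixels / frame pixels.
    block_ratio = Fraction(grid_x * grid_y, width * height)
    # 1D degeneration: (X + Y) strip pixels / (X * Y) motion image pixels.
    strip_ratio = Fraction(grid_x + grid_y, grid_x * grid_y)
    return block_ratio, strip_ratio, block_ratio * strip_ratio


block_ratio, strip_ratio, overall = st_reduction(320, 240)
print(block_ratio, strip_ratio, overall, f"{float(overall):.4%}")
```

For the 320 × 240 half-size frames this gives 1/256 for the block step, 7/60 for the projection step, and 7/15360 overall, which is roughly the 0.045% stated in the text.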

3.4 Matching Between Template ST Image and Retrieved ST Image

All ST images have the same size in the space direction. If the estimated motion image is X × Y pixels, the size of the space axis of the ST images is X + Y pixels. When computing the correlations between the template ST image and any part of the retrieved ST image, there is no freedom along the space axis; there is freedom only along the time axis. If the template ST image is S × t and the retrieved ST image is S × T, the computational cost of the correlations is S × t × T.

In a baseball game, the length t of an interesting play in a video is short. Therefore, the computational cost of the correlations is small enough for large-scale video retrieval. Because the retrieved part is short, there is no need to compensate for its length: there are no very slow or very fast pitches, and no very slow or very fast runs.
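The matching along the time axis can be illustrated with a small sliding-window sketch. It assumes that one color plane of an ST image is stored as a list of rows, one row of S values per frame, and it uses the Pearson correlation coefficient as a stand-in for the correlation, which is an assumption rather than the exact measure of this paper. The names `pearson` and `slide_along_time` are hypothetical.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def slide_along_time(template, st_plane, score=pearson):
    """Score the template against every window of the same length along time.

    There are T - t + 1 windows and each costs about S * t operations,
    which gives the S * t * T order of cost described in the text.
    """
    t = len(template)
    flat_template = [v for row in template for v in row]
    scores = []
    for start in range(len(st_plane) - t + 1):
        window = [v for row in st_plane[start:start + t] for v in row]
        scores.append(score(flat_template, window))
    return scores


# S = 2, T = 6, t = 2; frames 4-5 repeat frames 1-2 with doubled motion.
st_plane = [[0, 0], [1, 2], [3, 1], [0, 0], [2, 4], [6, 2]]
template = [[1, 2], [3, 1]]
scores = slide_along_time(template, st_plane)
```

In this toy example the window that repeats the template with doubled motion scores as high as the exact copy, which is the insensitivity to the absolute amount of motion that motivates adding the absolute difference.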

4 Similarity Measure with Correlation and Absolute Difference in Motion Retrieving Method

4.1 Similarity Measure in Motion Space-Time Image Based on Correlations

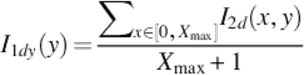

We use the mutual correlation as the measure of similarity. We have a two-dimensional correlation vector whose components are for the X-direction motion and the Y-direction motion. If there is a similar motion between the template and the retrieved part of the ST image, both correlations are large. We use the similarity measure shown as follows:

(4)

(5)

In Equation (4), SC(IT, IV) is the similarity between the ST images IT and IV. IT is a template ST image, and IV is a part of the ST image from a video. NCol(ITp, IVp) is the normalized correlation between ITp and IVp over a video V; that is, the correlation for the pair of the template ST image and the ST image from the video is normalized over the video. Thp is the threshold. p is either x or y, representing the X-direction motion or the Y-direction motion. This similarity measure is a scalar.

Equation (5) is the formal definition of NCol. SD is the standard deviation over the video V. Col is the correlation.
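One way to read the normalization and thresholding is sketched below. The exact forms are assumptions: the correlations computed over the whole video are standardized by subtracting their mean and dividing by their standard deviation, and the scalar similarity is taken as the smaller of the two threshold margins. The names `normalize_over_video` and `correlation_similarity` are hypothetical.

```python
from statistics import mean, pstdev


def normalize_over_video(cols):
    """Standardize raw correlations by their mean and SD over the video (assumed form)."""
    m = mean(cols)
    sd = pstdev(cols)
    if sd == 0:
        return [0.0] * len(cols)
    return [(c - m) / sd for c in cols]


def correlation_similarity(ncol_x, ncol_y, th_x, th_y):
    """Scalar similarity: positive only if both planes exceed their thresholds.

    Taking the minimum of the two margins is an assumption made for this sketch.
    """
    return min(ncol_x - th_x, ncol_y - th_y)


raw_x = [0.1, 0.2, 0.9]          # raw X-plane correlations at three positions
ncol_x = normalize_over_video(raw_x)
```

With this reading, a position is retrieved when `correlation_similarity` is positive, that is, when the normalized correlations of both the X-direction and the Y-direction motion exceed their thresholds.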

4.2 Similarity Measure in Motion Space-Time Image Based on Absolute Differences

We use the absolute difference as the measure of similarity in the motion description. In this case, we have a two-dimensional vector of absolute differences, based on the X-direction motion and the Y-direction motion. If there is a similar motion between the template and the retrieved part of the ST image, both absolute differences are small. We use the similarity measure shown in the following equation:

(6)

(7)

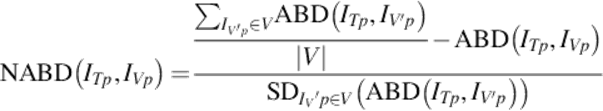

In Equation (6), NABD(I0p, I1p) is the normalized negation of the absolute difference between I0p and I1p, where I0p and I1p are ST images. Thp is the threshold. p is either x or y, representing the X-direction motion or the Y-direction motion. This similarity measure is a scalar.

Equation (7) is the formal definition of NABD. SD is the standard deviation over the video V. ABD is the absolute difference between two ST images. In Equation (7), the terms in the numerator are placed in the reverse of the usual order, which implements the negation.
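The negation by reversed numerator can be illustrated as follows. The sketch assumes that the absolute difference is the sum of element-wise absolute differences and that the normalization subtracts each difference from the mean over the video before dividing by the standard deviation; both are assumptions for illustration. The names `abs_diff` and `normalized_negated_abd` are hypothetical.

```python
from statistics import mean, pstdev


def abs_diff(a, b):
    """Sum of element-wise absolute differences between two flattened ST images."""
    return sum(abs(x - y) for x, y in zip(a, b))


def normalized_negated_abd(abds):
    """Reverse the numerator (mean - value) so that small differences score high."""
    m = mean(abds)
    sd = pstdev(abds)
    if sd == 0:
        return [0.0] * len(abds)
    return [(m - d) / sd for d in abds]


template = [1, 2, 3, 1]
windows = [
    [1, 2, 3, 1],   # same motion
    [2, 4, 6, 2],   # same shape, doubled amount of motion
    [0, 0, 0, 0],   # no motion
]
abds = [abs_diff(template, w) for w in windows]
nabd = normalized_negated_abd(abds)
```

Here the doubled motion is scored as low as the motionless window, so the absolute difference separates a case that a scale-insensitive correlation would accept.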

5 Experiments on baseball games and evaluations

5.1 Baseball Game

This paper treats MPEG videos of baseball games. In baseball games, players' uniforms change between half innings. The pitch is the most frequent play in a baseball game, so there is a large number of pitches. This paper uses a single play of a pitch as a template. Using this template, the proposed method retrieves a large number of pitches by similar motion retrieval.

Motion-based similar video retrieval can find many types of plays based on the template. A few repeated plays are not pitches. This paper distinguishes pitches from other plays.

5.2 Experimental Objects

This paper uses a whole baseball game for the experiments. The game video is 79 min long and has 132485 frames. In the game, there are right-handed pitchers and a left-handed pitcher. There are 168 pitches. The camera work that captures the pitching scenes accounts for 31648 frames.

5.3 Experiment Process

The experimental videos were recorded from Japanese analog TV to DVD. The recorded videos were then reduced to 320 × 240 pixels and encoded in MPEG-1 format. Most pitches are very short, so there is no reduction in the time direction. There are 30 frames per second. The video includes all parts of the broadcast, including telops (on-screen captions), sportscasters, and computer graphics. The first step of our experiment is the extraction of motion compensation vectors. Each 16 × 16 pixel block has a motion compensation vector. The similar play retrieval in motion frames uses a continuous sequence of frames as a template and retrieves the shots of a video that include pitches.

5.4 Correlation-Based Similarity Measure in Pitching Retrieval

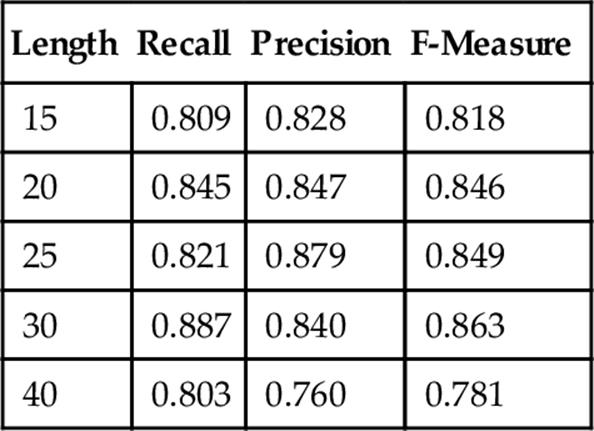

In this experiment, we use template pitches. The pitches include the pitch that showed the best performance in our previous work. Around that pitch, we search for the best length and starting point of the template ST image. In the experiments, the lengths of the template ST images are 15, 20, 25, 30, and 40 frames. The F-measures are 0.818, 0.846, 0.849, 0.863, and 0.781, respectively.

Table 1 shows the relation between performance and template length when correlation is used for calculating similarities. With correlation as the similarity measure, a length of 40 frames is too long for retrieving pitches in baseball games. Compared with the 30-frame template, the F-measure of the 40-frame template is about 10% lower.

Table 1

Performance with Correlation

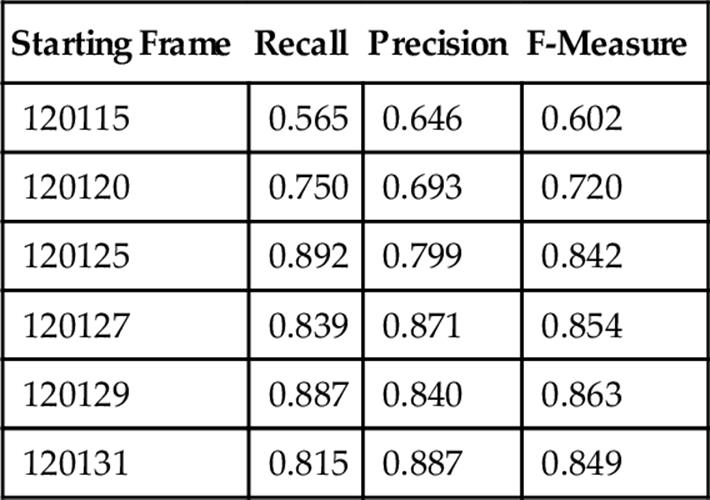

Table 2 shows the relation between performance and the starting point of the 30-frame template ST images. Over a range of six frames, from 120125 to 120131, the F-measure stays above 0.84.

Table 2

Performance with Correlation by Starting Frame in 30 Frames Length Template ST Image

5.5 Absolute Difference Based Similarity Measure in Pitching Retrieval

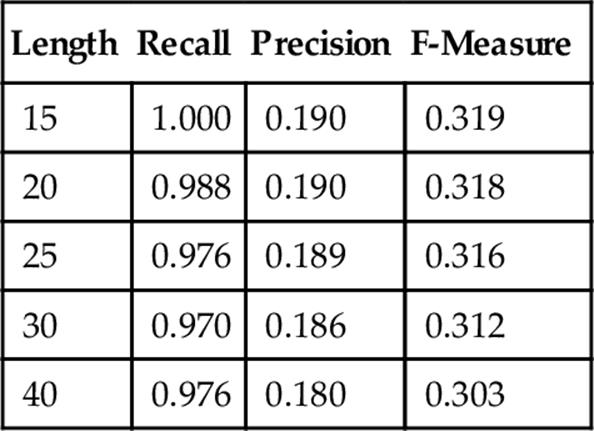

The length of the templates affects the performance only a little. Within the range of the experiments, the shortest template is the best. The 15-frame template retrieves all pitches. Moreover, it has the best F-measure (Table 3).

Table 3

Performance with Absolute Difference

5.6 Combination Both of Correlations and Absolute Differences

For retrieving the precise pitches in videos, we need to use the correlation-based similarity. Combining the correlation-based similarity and the absolute-difference-based similarity, we can improve the performance of similar pitch retrieval.

Using the correlation-based similarity or the absolute-difference-based similarity alone, we have no difficulty finding a proper set of thresholds. In either similarity, two thresholds work, one each for the X- and Y-direction motions.

We use logical conjunction to combine the correlation-based similarity and the absolute-difference-based similarity. We could use logical disjunction instead. However, the minimum is the extension of logical conjunction to real-valued similarities, so we select logical conjunction in this paper. The combination has four thresholds. It is difficult to optimize all thresholds at once in a short processing time, so we divide the optimization into two steps: the threshold optimization for the correlation-based similarity and the one for the absolute-difference-based similarity.

First, we optimize the thresholds of the absolute-difference-based similarity. Then, we optimize those of the correlation-based similarity. In the absolute-difference-based experiments, the recalls are high enough, which led to this order. Of course, there is no difference in the results between optimizing the correlation-based thresholds first and the absolute-difference-based thresholds first.
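The two-step threshold search with a conjunctive combination can be sketched as follows. The sketch assumes that each candidate position has a pair of normalized scores per measure, that ground-truth labels are available, that thresholds are taken from a small grid, and that each step maximizes the F-measure; the selection criterion and all names (`f_measure`, `detect`, `best_thresholds`, `two_step`) are hypothetical.

```python
from itertools import product


def f_measure(pred, truth):
    """F-measure of boolean predictions against boolean ground truth."""
    tp = sum(p and t for p, t in zip(pred, truth))
    fp = sum(p and not t for p, t in zip(pred, truth))
    fn = sum(t and not p for p, t in zip(pred, truth))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def detect(scores, th):
    """A position passes when both its X and Y scores exceed their thresholds."""
    return [sx > th[0] and sy > th[1] for sx, sy in scores]


def best_thresholds(scores, truth, grid, gate=None):
    """Grid search over (th_x, th_y); an optional gate is AND-ed with the result."""
    best_f, best_th = -1.0, None
    for th in product(grid, repeat=2):
        pred = detect(scores, th)
        if gate is not None:
            pred = [p and g for p, g in zip(pred, gate)]
        f = f_measure(pred, truth)
        if f > best_f:
            best_f, best_th = f, th
    return best_f, best_th


def two_step(nabd, ncol, truth, grid):
    """Step 1: absolute-difference thresholds. Step 2: correlation thresholds."""
    _, th_ad = best_thresholds(nabd, truth, grid)
    gate = detect(nabd, th_ad)
    f_all, th_col = best_thresholds(ncol, truth, grid, gate)
    return th_ad, th_col, f_all


truth = [True, False, False, True]
nabd = [(1, 1), (1, 1), (-1, -1), (1, 1)]   # (x, y) normalized negated differences
ncol = [(1, 1), (-1, 1), (1, 1), (1, 1)]    # (x, y) normalized correlations
th_ad, th_col, f_all = two_step(nabd, ncol, truth, [0.0])
```

Searching two pairs of thresholds in sequence costs two small grid searches instead of one search over all four thresholds at once, which is the saving in processing time that motivates the two-step procedure.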

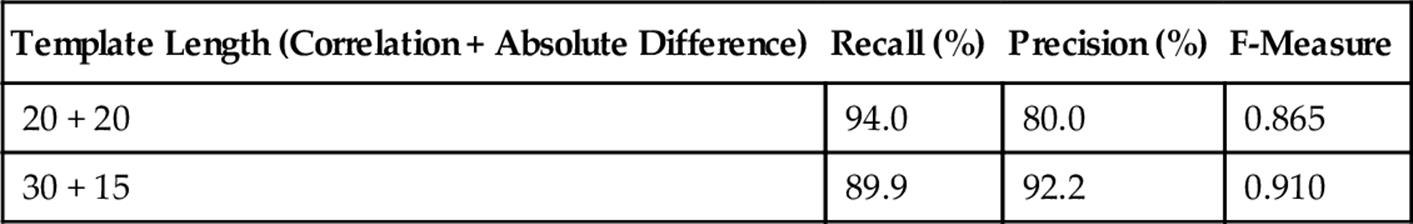

Table 4 shows the results of the experiments. The templates are the same as in the previous experiments. Using templates of the same length for absolute difference and correlation, the F-measure is 0.865, with 158 pitches recalled and 39 errors. The error retrieval rate is 0.000297, which corresponds to 99.97% on a per-frame basis. This is very high. In the best mix of template lengths, the absolute difference measure uses a 15-frame template and the correlation measure uses a 30-frame template. The frame range of the correlation template includes that of the absolute difference template. Using the best mix of absolute difference and correlation, the F-measure is 0.910, with 151 pitches recalled and 13 errors. The error retrieval rate is 0.0000981, which corresponds to 99.99% on a per-frame basis. The number of erroneous retrievals decreases by 26, a 67% decrease.
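The reported counts can be related to recall, precision, and F-measure with the standard definitions. The sketch below assumes that the recalled pitches are true positives, that the errors are false positives, and that the 168 pitches of the game form the relevant set; the function name `retrieval_metrics` is hypothetical.

```python
def retrieval_metrics(correct, errors, total_relevant):
    """Recall, precision, and F-measure from retrieval counts."""
    recall = correct / total_relevant
    precision = correct / (correct + errors)
    f = 2 * precision * recall / (precision + recall)
    return recall, precision, f


# Best mix of template lengths: 151 pitches recalled, 13 errors, 168 pitches.
recall, precision, f = retrieval_metrics(151, 13, 168)
print(f"recall={recall:.3f} precision={precision:.3f} F={f:.3f}")

# Errors per frame over the 132485 frames of the video.
error_rate = 13 / 132485
print(f"error retrieval rate={error_rate:.7f}")
```

Under these assumptions the computed values are close to the figures reported for the best mix.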

Table 4

Performance with the Combination of Correlation and Absolute Difference

The best mix of the two similarities works well. The F-measure of the best mix is 5% higher than that of the correlation similarity alone. The best template length differs between the similarity based on absolute difference and the one based on correlation.

6 Conclusions

This paper discusses the length of templates in the retrieval of similar plays in sports MPEG videos using similar motion retrieval based on both correlation and absolute difference. For recognizing sports videos, motions carry important meaning, so similar video retrieval methods based on the motions described in the videos are needed. The proposed similar play retrieval method combines correlation and absolute difference of motions, based only on the motion compensation vectors in MPEG videos.

The experiments show that similar play retrieval works well using correlation and absolute difference on ST images made from the motion compensation vectors in MPEG videos. Moreover, the best mix of template lengths for absolute difference and correlation is better than using the same template for both. Classical works using MPEG motion compensation vectors use only global-scale motions, whereas the proposed method utilizes local motions. The proposed combination of the correlation-based and absolute-difference-based similarity measures works well in our experiments. Using both similarity measures, the proposed method performs better than a single correlation-based similarity measure on motion-based ST images.

References

[1] Hu W, Xie N, Li L, Zeng X, Maybank S. A survey on visual content-based video indexing and retrieval. IEEE Trans Syst Man Cybern C Appl Rev. 2011;41(6):797–819.

[2] Aoki K. Video retrieval based on motions, JIEICE technical report, IE, 108, 217, pp. 45–50 ; 2008.

[3] Aoki K. Plays from motions for baseball video retrieval. In: Second international conference on computer engineering and applications, 2010; 2010:271–275.

[4] Aoki K. Play estimation using multiple 1D degenerated descriptions of MPEG motion compensation vectors. In: Fifth international conference on computer sciences and convergence information technology, 2010; 2010:176–181.

[5] Aoki K. Play estimation using sequences of multiple 1d degenerated descriptions of mpeg motion compensation vectors. In: Proceedings of the eighth IASTED international conference on signal processing, pattern recognition, and applications; 2011:268–275.

[6] Aoki K, Fukiba T. Play estimation with motions and textures in space-time Map description. In: Workshop on developer-centred computer vision in ACCV 2012; 279–290. Lecture Notes in Computer Science. 2012;vol. 7728.

[7] Fleischman M, Roy D. Situated models of meaning for sports video retrieval. In: Proceedings of the NAACLHLT2009, Companion Volume; 2007:37–40.

[8] Sebe N, Lew MS, Zhou X, Huang TS, Bakker EM. The state of the art in image and video retrieval. Lecture Notes in Computer Science. 2003;vol. 2728 pp. 7–12.

[9] Peng S-L. Temporal slice analysis of image sequences. In: Proceedings CVPR '91. IEEE computer society conference on computer vision and pattern recognition, 1991; 1991:283–288.

[10] Aoki K, Aoyagi R. Improving performance using both of correlation and absolute difference on similar Play estimation. In: 2014 6th international conference on digital image processing, proceedings; 2014:289–295.

[11] Aoki K. Block-wise high-speed reliable motion estimation method applicable in large motion, JIEICE technical report. IE, 106, 536, pp. 95–100 ; 2007.

[12] Nobe M, Ino Y, Aoki K. Pixel-wise motion estimation based on multiple motion estimations in consideration of smooth regions, JIEICE technical report. IE, 106, 423, pp. 7–12 ; 2006.

[13] Ngo C-W, Pong T-C, Zhang H-J. On clustering and retrieval of video shots through temporal slices analysis. IEEE Trans Multimedia. 2002;4(4):446–458.

[14] Bouthemy P, Fablet R. Motion characterization from temporal cooccurrences of local motion-based measures for video indexing. In: 14th international conference on pattern recognition, ICPR’98, Brisbane; August 1998:905–908.

[15] Keogh E, Ratanamahatana CA. Exact indexing of dynamic time warping. Knowl Inf Syst. 2005;7(3):358–386.

[16] Ngo CW, Pong TC, Chin RT. Detection of gradual transitions through temporal slice analysis. 41. IEEE Computer Society conference on computer vision and pattern recognition, 1999. 1999;vol. 1.

[17] Ngo C-W, Pong T-C, Chin RT. Video partitioning by temporal slice coherency. IEEE Trans Circuit Syst Video Technol. 2001;11(8):941–953.

IPCV paper: Improving Performance using both of Correlation and Absolute Difference on Similar Play Estimation