High Performance MySQL (2012)

Chapter 8. Optimizing Server Settings

In this chapter, we’ll explain a process by which you can create a good configuration file for your MySQL server. It is a roundabout trip, with many points of interest and side trips to scenic overlooks. These are necessary, because determining the shortest path to a good configuration doesn’t start with studying configuration options and asking which ones you should set or how you should change them, nor does it start with examining server behavior and asking whether any configuration options can improve it. It’s best to begin with an understanding of MySQL’s internals and behavior. You can then use that knowledge as a guide for how MySQL should be configured. Finally, you can compare the desired configuration to the current configuration and correct any differences that are important and worthwhile.

People often ask, “What’s the optimal configuration file for my server with 32 GB of RAM and 12 CPU cores?” Unfortunately, it’s not that simple. The server should be configured for the workload, data, and application requirements, not just the hardware. MySQL has scores of settings that you can change—but you shouldn’t. It’s usually better to configure the basic settings correctly (and there are only a few that really matter in most cases) and spend more time on schema optimization, indexes, and query design. After you’ve set MySQL’s basic configuration options correctly, the potential gains from further changes are usually small.

On the other hand, the potential downside of fiddling with the configuration can be great. We’ve seen more than one “highly tuned” server that was crashing constantly, stalling, or performing slowly due to unwise settings. We’ll spend a bit of time on why that can happen and what not to do.

So what should you do? Make sure the basics such as the InnoDB buffer pool and log file size are appropriate, set a few safety and sanity options if you wish to prevent bad behavior (but note that these usually won’t improve performance—they’ll only avoid problems), and then leave the rest of the settings alone. If you begin to experience a problem, diagnose it carefully with the techniques shown in Chapter 3. If the problem is caused by a part of the server whose behavior can be corrected with a configuration option, then you might need to change it.

Sometimes you might also need to set specific configuration options that can have a significant performance impact in special cases. However, these should not be part of a basic server configuration file. You should set them only when you find the specific performance problems they address. That’s why we don’t suggest that you approach configuration options by looking for bad things to improve. If something needs to be improved, it should show up in query response times. It’s best to start your search with queries and their response times, not with configuration options. This could save you a lot of time and prevent many problems.

Another good way to save time and trouble is to use the defaults unless you know you shouldn’t. There is safety in numbers, and a lot of people are running with default settings. That makes them the most thoroughly tested settings. Unexpected bugs can arise when you change things needlessly.

How MySQL’s Configuration Works

We’ll begin by explaining MySQL’s configuration mechanisms, before covering what you should configure in MySQL. MySQL is generally pretty forgiving about its configuration, but following these suggestions might save you a lot of work and time.

The first thing to know is where MySQL gets configuration information: from command-line arguments and settings in its configuration file. On Unix-like systems, the configuration file is typically located at /etc/my.cnf or /etc/mysql/my.cnf. If you use your operating system’s startup scripts, this is typically the only place you’ll specify configuration settings. If you start MySQL manually, which you might do when you’re running a test installation, you can also specify settings on the command line. The server actually reads the contents of the configuration file, removes any comment lines and newlines, and then processes it together with the command-line options.

NOTE

A note on terminology: because many of MySQL’s command-line options correspond to server variables, we sometimes use the terms option and variable interchangeably. Most variables have the same names as their corresponding command-line options, but there are a few exceptions. For example, --memlock sets the locked_in_memory variable.

Any settings you decide to use permanently should go into the global configuration file, instead of being specified at the command line. Otherwise, you risk accidentally starting the server without them. It’s also a good idea to keep all of your configuration files in a single place so that you can inspect them easily.

Be sure you know where your server’s configuration file is located! We’ve seen people try unsuccessfully to configure a server with a file it doesn’t read, such as /etc/my.cnf on Debian servers, which look in /etc/mysql/my.cnf for their configuration. Sometimes there are files in several places, perhaps because a previous system administrator was confused as well. If you don’t know which files your server reads, you can ask it:

$ which mysqld

/usr/sbin/mysqld

$ /usr/sbin/mysqld --verbose --help | grep -A 1 'Default options'

Default options are read from the following files in the given order:

/etc/mysql/my.cnf ~/.my.cnf /usr/etc/my.cnf

This applies to typical installations, where there’s a single server on a host. You can design more complicated configurations, but there’s no standard way to do this. The MySQL server distribution used to include a now-deprecated program called mysqlmanager, which could run multiple instances from a single configuration with separate sections. (This was a replacement for the even older mysqld_multi script.) However, many operating system distributions don’t include or use this program in their startup scripts. In fact, many don’t use the MySQL-provided startup script at all.

The configuration file is divided into sections, each of which begins with a line that contains the section name in square brackets. A MySQL program will generally read the section that has the same name as that program, and many client programs also read the client section, which gives you a place to put common settings. The server usually reads the mysqld section. Be sure you place your settings in the correct section in the file, or they will have no effect.
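To make the section rules concrete, here is a minimal Python sketch that parses a small, made-up configuration file and collects the settings a given program would see. The function name settings_for, the sample file contents, and the simplified merge rule (the client section first, then the program's own section) are assumptions for illustration. Real files can also contain directives such as !includedir, which this sketch does not handle.

```python
import configparser

# Made-up file contents, for illustration only.
SAMPLE_MY_CNF = """
[mysqld]
datadir = /var/lib/mysql
port = 3306

[client]
socket = /var/lib/mysql/mysql.sock
port = 3306

[mysqldump]
quick
"""


def settings_for(program, text, is_client):
    """Collect the settings `program` would see under the simplified rule above."""
    # allow_no_value lets bare options such as "quick" parse without "= value".
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(text)
    merged = {}
    # Assumption: clients read [client] first, then their own section.
    sections = (["client"] if is_client else []) + [program]
    for name in sections:
        if parser.has_section(name):
            merged.update(parser.items(name))
    return merged


print(settings_for("mysqld", SAMPLE_MY_CNF, is_client=False))
print(settings_for("mysqldump", SAMPLE_MY_CNF, is_client=True))
```

In this sketch the server sees only the mysqld section, while a client program such as mysqldump sees the shared client settings plus its own. A setting placed in the wrong section simply never appears in the result.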

Syntax, Scope, and Dynamism

Configuration settings are written in all lowercase, with words separated by underscores or dashes. The following are equivalent, and you might see both forms in command lines and configuration files:

/usr/sbin/mysqld --auto-increment-offset=5

/usr/sbin/mysqld --auto_increment_offset=5

We suggest that you pick a style and use it consistently. This makes it easier to search for settings in your files.
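If your files already mix both styles, a small helper can search for a setting regardless of spelling. The following Python sketch is only an illustration; normalize_option and find_setting are hypothetical helper names, and the sample lines are made up.

```python
def normalize_option(name):
    """Map --auto-increment-offset and auto_increment_offset to one spelling."""
    return name.strip().lstrip("-").replace("-", "_")


def find_setting(config_lines, wanted):
    """Return the lines that set `wanted`, whichever spelling they use."""
    target = normalize_option(wanted)
    hits = []
    for line in config_lines:
        # Everything before the first "=" is treated as the option name.
        key = line.split("=", 1)[0]
        if normalize_option(key) == target:
            hits.append(line)
    return hits


lines = [
    "auto-increment-offset = 5",
    "auto_increment_increment = 10",
    "auto_increment_offset=7",
]
print(find_setting(lines, "--auto_increment_offset"))
```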

Configuration settings can have several scopes. Some settings are server-wide (global scope); others are different for each connection (session scope); and others are per-object. Many session-scoped variables have global equivalents, which you can think of as defaults. If you change the session-scoped variable, it affects only the connection from which you changed it, and the changes are lost when the connection closes. Here are some examples of the variety of behaviors of which you should be aware:

§ The query_cache_size variable is globally scoped.

§ The sort_buffer_size variable has a global default, but you can set it per-session as well.

§ The join_buffer_size variable has a global default and can be set per-session, but a single query that joins several tables can allocate one join buffer per join, so there might be several join buffers per query.

In addition to setting variables in the configuration files, you can also change many (but not all) of them while the server is running. MySQL refers to these as dynamic configuration variables. The following statements show different ways to change the session and global values of sort_buffer_size dynamically:

SET sort_buffer_size = <value>;

SET GLOBAL sort_buffer_size = <value>;

SET @@sort_buffer_size := <value>;

SET @@session.sort_buffer_size := <value>;

SET @@global.sort_buffer_size := <value>;

If you set variables dynamically, be aware that those settings will be lost when MySQL shuts down. If you want to keep the settings, you’ll have to update your configuration file as well.

If you set a variable’s global value while the server is running, the values for the current session and any other existing sessions are not affected. This is because the session values are initialized from the global value when the connections are created. You should inspect the output of SHOW GLOBAL VARIABLES after each change to make sure it’s had the desired effect.

Variables use different kinds of units, and you have to know the correct unit for each variable. For example, the table_cache variable specifies the number of tables that can be cached, not the size of the table cache in bytes. The key_buffer_size is specified in bytes, whereas still other variables are specified in number of pages or other units, such as percentages.

Many variables can be specified with a suffix, such as 1M for one megabyte. However, this works only in the configuration file or as a command-line argument. When you use the SQL SET command, you must use the literal value 1048576, or an expression such as 1024 * 1024. You can’t use expressions in configuration files.
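Because SET does not accept suffixes, scripts that copy values from a configuration file into SET statements need to expand them first. Here is a minimal Python sketch of that conversion. The helper names to_bytes and set_statement are hypothetical, only the K, M, and G suffixes are handled, and the variable name is assumed to come from a trusted source because it is interpolated directly into the statement.

```python
# Multipliers for the suffixes handled by this sketch.
SUFFIXES = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def to_bytes(value):
    """Convert a config-file style size such as '1M' into a plain integer."""
    text = value.strip().upper()
    if text and text[-1] in SUFFIXES:
        return int(text[:-1]) * SUFFIXES[text[-1]]
    return int(text)


def set_statement(variable, value, scope="SESSION"):
    """Build a SET statement that uses the literal number, not the suffix."""
    return f"SET {scope} {variable} = {to_bytes(value)};"


print(set_statement("sort_buffer_size", "1M"))
```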

There is also a special value you can assign to variables with the SET command: the keyword DEFAULT. Assigning this value to a session-scoped variable sets that variable to the corresponding globally scoped variable’s value; assigning it to a globally scoped variable sets the variable to the compiled-in default (not the value specified in the configuration file). This is useful for resetting session-scoped variables back to the values they had when you opened the connection. We advise you not to use it for global variables, because it probably won’t do what you want—that is, it doesn’t set the values back to what they were when you started the server.
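The scoping rules described above can be summarized in a toy model. This Python sketch is not how MySQL is implemented; the class names ToyServer and ToySession, the DEFAULT sentinel, and all of the numbers are assumptions chosen only to show which value each operation ends up with.

```python
DEFAULT = object()  # stand-in for the SQL keyword DEFAULT


class ToyServer:
    """Toy model of global scope; not MySQL's actual implementation."""

    def __init__(self, compiled_defaults, config_file=None):
        self.compiled_defaults = dict(compiled_defaults)
        # At startup, configuration file values override compiled-in defaults.
        self.global_values = {**compiled_defaults, **(config_file or {})}

    def connect(self):
        return ToySession(self)

    def set_global(self, name, value):
        # DEFAULT at global scope is the compiled-in default,
        # not the value from the configuration file.
        if value is DEFAULT:
            value = self.compiled_defaults[name]
        self.global_values[name] = value


class ToySession:
    """Toy model of session scope."""

    def __init__(self, server):
        self.server = server
        # Session values are copied from the globals once, at connect time.
        self.values = dict(server.global_values)

    def set_session(self, name, value):
        # DEFAULT at session scope is the current global value.
        if value is DEFAULT:
            value = self.server.global_values[name]
        self.values[name] = value


# Hypothetical numbers: compiled-in default 2000000, config file says 262144.
server = ToyServer({"sort_buffer_size": 2000000}, {"sort_buffer_size": 262144})
existing = server.connect()
server.set_global("sort_buffer_size", 1048576)
print(existing.values["sort_buffer_size"])          # unchanged by SET GLOBAL
print(server.connect().values["sort_buffer_size"])  # new sessions see the new global
```

In this model, an existing session keeps its old value after the global changes, and resetting the global to DEFAULT lands on the compiled-in value rather than the configuration file value.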

Side Effects of Setting Variables

Setting variables dynamically can have unexpected side effects, such as flushing dirty blocks from buffers. Be careful which settings you change online, because this can cause the server to do a lot of work.

Sometimes you can infer a variable’s behavior from its name. For example, max_heap_table_size does what it sounds like: it specifies the maximum size to which implicit in-memory temporary tables are allowed to grow. However, the naming conventions aren’t completely consistent, so you can’t always guess what a variable will do by looking at its name.

Let’s take a look at some commonly used variables and the effects of changing them dynamically:

key_buffer_size

Setting this variable allocates the designated amount of space for the key buffer (or key cache) all at once. However, the operating system doesn’t actually commit memory to it until it is used. Setting the key buffer size to one gigabyte, for example, doesn’t mean you’ve instantly caused the server to actually commit a gigabyte of memory to it. (We discuss how to watch the server’s memory usage in the next chapter.)

MySQL lets you create multiple key caches, as we explain later in this chapter. If you set this variable to 0 for a nondefault key cache, MySQL discards any indexes cached in the specified cache, begins to cache them in the default cache, and deletes the specified cache when nothing is using it anymore. Setting this variable for a nonexistent cache creates it. Setting the variable to a nonzero value for an existing cache will flush the specified cache’s memory. This blocks all operations that try to access the cache until the flush is finished.

table_open_cache (named table_cache before MySQL 5.1.3)

Setting this variable has no immediate effect—the effect is delayed until the next time a thread opens a table. When this happens, MySQL checks the variable’s value. If the value is larger than the number of tables in the cache, the thread can insert the newly opened table into the cache. If the value is smaller than the number of tables in the cache, MySQL deletes unused tables from the cache.

thread_cache_size

Setting this variable has no immediate effect—the effect is delayed until the next time a connection is closed. At that time, MySQL checks whether there is space in the cache to store the thread. If so, it caches the thread for future reuse by another connection. If not, it kills the thread instead of caching it. In this case, the number of threads in the cache, and hence the amount of memory the thread cache uses, does not immediately decrease; it decreases only when a new connection removes a thread from the cache to use it. (MySQL adds threads to the cache only when connections close and removes them from the cache only when new connections are created.)

query_cache_size

MySQL allocates and initializes the specified amount of memory for the query cache all at once when the server starts. If you update this variable (even if you set it to its current value), MySQL immediately deletes all cached queries, resizes the cache to the specified size, and reinitializes the cache’s memory. This can take a long time and stalls the server until it completes, because MySQL deletes all of the cached queries one by one, not instantaneously.

read_buffer_size

MySQL doesn’t allocate any memory for this buffer until a query needs it, but then it immediately allocates the entire chunk of memory specified here.

read_rnd_buffer_size

MySQL doesn’t allocate any memory for this buffer until a query needs it, and then it allocates only as much memory as needed. (The name max_read_rnd_buffer_size would describe this variable more accurately.)

sort_buffer_size

MySQL doesn’t allocate any memory for this buffer until a query needs to do a sort. However, when there’s a sort, MySQL allocates the entire chunk of memory immediately, whether the full size is required or not.

We explain what these variables do in more detail elsewhere, and this isn’t an exhaustive list. Our goal here is simply to show you what behavior to expect when you change a few common variables.
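The delayed effect described for thread_cache_size is easy to misread, so here is a toy Python model of it. It is a sketch of the behavior as described in the text, not of MySQL's internals; the class ToyThreadCache and its method names are hypothetical.

```python
class ToyThreadCache:
    """Toy model: the limit is checked only when a connection closes."""

    def __init__(self, thread_cache_size):
        self.thread_cache_size = thread_cache_size
        self.cached_threads = 0

    def on_disconnect(self):
        # Threads enter the cache only here, and only if there is room.
        if self.cached_threads < self.thread_cache_size:
            self.cached_threads += 1
            return "cached"
        return "destroyed"

    def on_connect(self):
        # Threads leave the cache only when a new connection reuses one.
        if self.cached_threads > 0:
            self.cached_threads -= 1
            return "reused"
        return "created"


cache = ToyThreadCache(thread_cache_size=4)
for _ in range(4):
    cache.on_disconnect()

cache.thread_cache_size = 2   # lowering the limit frees nothing by itself
print(cache.cached_threads)

cache.on_connect()            # only a new connection shrinks the cache
print(cache.cached_threads)
```

After the limit is lowered, the cache still holds its threads. The count falls only as new connections take threads out, and closing connections are discarded until the count is below the new limit.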

You should not raise the value of a per-connection setting globally unless you know it’s the right thing to do. Some buffers are allocated all at once, even if they’re not needed, so a large global setting can be a huge waste. Instead, you can raise the value when a query needs it.

The most common example of a variable that you should probably keep small and raise only for certain queries is sort_buffer_size, which controls how large the sort buffer should be for filesorts. MySQL performs some work to initialize the sort buffer after allocating it.

In addition, the sort buffer is allocated to its full size even for very small sorts, so if you make it much larger than the average sort requires, you’ll be wasting memory and adding allocation cost. This can be surprising to those readers who think of memory allocation as an inexpensive operation. Without digging into all of the technical details, it’s enough to say that memory allocation includes setting up the address space, which can be relatively expensive; in Linux in particular, memory allocation uses a couple of strategies with varying cost depending on the size.

In summary, a large sort buffer can be very expensive, so don’t increase its size unless you know it’s needed.

When you find a query that needs a larger sort buffer to perform well, you can raise the sort_buffer_size value just before the query and then restore it to DEFAULT afterward. Here’s an example of how to do this:

SET @@session.sort_buffer_size := <value>;

-- Execute the query...

SET @@session.sort_buffer_size := DEFAULT;

Wrapper functions can be handy for this type of code. Other variables you might set on a per-connection basis are read_buffer_size, read_rnd_buffer_size, tmp_table_size, and myisam_sort_buffer_size (if you’re repairing tables).

If you need to save and restore a possibly customized value, you can do something like the following:

SET @saved_<unique_variable_name> := @@session.sort_buffer_size;

SET @@session.sort_buffer_size := <value>;

-- Execute the query...

SET @@session.sort_buffer_size := @saved_<unique_variable_name>;
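One possible shape for such a wrapper is a Python context manager that issues the save, set, and restore statements around a query. This is a minimal sketch under several assumptions: session_variable is a hypothetical helper, the cursor is anything with an execute(sql) method, RecordingCursor is a stand-in that only records statements instead of talking to a server, and the variable name is assumed to be trusted because it is interpolated into the SQL text.

```python
from contextlib import contextmanager


@contextmanager
def session_variable(cursor, name, value):
    """Temporarily set a session variable, then restore the saved value."""
    saved = f"@saved_{name}"
    cursor.execute(f"SET {saved} := @@session.{name}")
    cursor.execute(f"SET @@session.{name} := {int(value)}")
    try:
        yield
    finally:
        # Restore the saved value even if the query inside the block failed.
        cursor.execute(f"SET @@session.{name} := {saved}")


class RecordingCursor:
    """Stand-in for a real database cursor; it only records statements."""

    def __init__(self):
        self.statements = []

    def execute(self, sql):
        self.statements.append(sql)


cursor = RecordingCursor()
with session_variable(cursor, "sort_buffer_size", 4 * 1024 * 1024):
    cursor.execute("SELECT 1 /* the query that needs the larger buffer */")

for statement in cursor.statements:
    print(statement)
```

Putting the restore in a finally block means a failed query does not leave the connection with the enlarged buffer, which matters if the connection is returned to a pool.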

NOTE

The sort buffer size is one of the settings that is the focus of far too much “tuning.” Some people seem to have the idea that bigger is better, and we’ve even seen servers with this variable set to 1 GB. Perhaps not surprisingly, this can cause the server to try to allocate too much memory and crash, or simply to burn a lot of CPU time when initializing the sort buffer for a query; see MySQL bug 37359 for more on this.

Don’t assign too much importance to the sort buffer size. Do you really need your queries to allocate 128 MB of memory to sort 10 rows and return them to the client? Think about what kinds of sorting your queries are doing, and how much, and try to avoid them with proper indexing and query design (see Chapter 5 and Chapter 6) rather than trying to make the sorting operation itself faster. And you should definitely profile your queries to see whether sorting is where you should focus your attention anyway; see Chapter 3 for an example of a query that performs a sort but doesn’t spend much of its time sorting.

Getting Started

Be careful when setting variables. More is not always better, and if you set the values too high, you can easily cause problems: you might run out of memory, causing your server to swap, or run out of address space.[109]

You should always have a monitoring system in place to measure whether a change improves or hurts your server’s overall performance in real life. Benchmarks aren’t enough, because they’re not real. If you don’t measure your server’s actual performance, you might hurt performance without knowing it. We’ve seen many cases where someone changed a server’s configuration and thought it improved performance, when in fact the server’s performance worsened overall because of a different workload at a different time of day or day of the week.

If you take notes, perhaps with comments in the configuration file, you might save yourself (and your colleagues) a lot of work. An even better idea is to place your configuration file under version control. This is a good practice anyway, because it lets you undo changes. To reduce the complexity of managing many configuration files, simply create a symbolic link from the configuration file to a central version control repository.

Before you start changing your configuration, you should optimize your queries and your schema, addressing at least the obvious things such as adding indexes. If you get deep into tweaking the configuration and then change your queries or schema, you might need to reevaluate the configuration. Keep in mind that unless your hardware, workload, and data are completely static, chances are you’ll need to revisit your configuration later. And in fact, most people’s servers don’t even have a steady workload throughout the day—meaning that the “perfect” configuration for the middle of the morning is not right for midafternoon! Obviously, chasing the mythical “perfect” configuration is completely impractical. Thus, you don’t need to squeeze every last ounce of performance out of your server; in fact, the return for such an investment of time will probably be very small. We suggest that you stop at “good enough,” unless you have reason to believe you’re forgoing a significant performance improvement.

Iterative Optimization by Benchmarking

You might be expected (or believe that you’re expected) to set up a benchmark suite and “tune” your server by changing its configuration iteratively in search of optimal settings. This usually is not something we advise most people to do. It requires so much work and research, and the potential payoff is so small in most cases, that it can be a huge waste of time. You are probably better off spending that time on other things such as checking your backups, monitoring changes in query plans, and so on.

It’s also very hard to know what side effects your changes might have over the long run. If you change an option and it appears to improve your benchmark, but your benchmark doesn’t measure everything that’s important, or you don’t run it long enough to detect changes in the system’s long-term steady-state behavior, you might cause problems such as periodic server stalls or sporadic slow queries. These can be difficult to detect.

We do sometimes run sets of benchmarks to examine or stress particular parts of the server so we can understand their behavior better. A good example is the many benchmarks we’ve run over the years to understand InnoDB’s flushing behavior, in our quest to develop better flushing algorithms for various workloads and types of hardware. It often happens that we benchmark extensively with different settings to understand their effects and how to optimize them. But this is not a small undertaking—it can take many days or weeks—and it is also not beneficial for most people to do, because such tunnel vision about a specific part of the server often obscures other concerns. For example, sometimes we find that specific combinations of settings enable better performance in edge cases, but the configuration options are not really practical for production usage, due to factors such as wasting a huge amount of memory or optimizing for throughput while ignoring the impact on crash recovery altogether.

If you must do this, we suggest that you develop a custom benchmark suite before you begin configuring your server. You need something that represents your overall workload and includes edge cases such as very large and complex queries. Replaying your actual workload against your actual data is usually a good approach. If you have identified a particular problem spot—such as a single query that runs slowly—you can also try to optimize for that case, but you risk impacting other queries negatively without knowing it.

The best way to proceed is to change one or two variables, a little at a time, and run the benchmarks after each change, being sure to run them long enough to observe the steady-state behavior. Sometimes the results will surprise you; you might increase a variable a little and see an improvement, then increase it a little more and see a sharp drop in performance. If performance suffers after a change, you might be asking for too much of some resource, such as too much memory for a buffer that’s frequently allocated and deallocated. You might also have created a mismatch between MySQL and your operating system or hardware. For example, we’ve found that the optimal sort_buffer_size might be affected by how the CPU cache works, and the read_buffer_size needs to be matched to the server’s read-ahead and general I/O subsystem configuration. Larger is not always better, and can be much worse. Some variables are also dependent on others, which is something you learn with experience and by understanding the system’s architecture.

WHEN BENCHMARKING IS GOOD

There are exceptions to our advice not to benchmark. We sometimes do advise people to run some iterative benchmarks, although usually in a different context than “server tuning.” Here are some examples:

§ If you’re approaching a large investment, such as purchasing a number of new servers, you can run benchmarks to understand your hardware needs. (The context here is capacity planning, not server tuning.) In particular, we like to run benchmarks with different amounts of memory allocated to the InnoDB buffer pool, which helps us draw a “memory curve” that shows how much memory is really needed and how it impacts the demands on the storage systems.

§ If you want to understand how long it will take InnoDB to recover from a crash, you can repeatedly set up a replica, crash it intentionally, and “benchmark” how long InnoDB takes to recover after restarting. The context here is high availability planning.

§ For read-mostly applications, it can be a great idea to capture all queries with the slow query log (or from TCP traffic with pt-query-digest), use pt-log-player to replay it against the server with full slow query logging enabled, and then analyze the resulting log with pt-query-digest. This lets you see how various types of queries perform with different hardware, software, and server settings. For example, we once helped a customer assess the performance changes of a migration to a server with much more memory, but with slower hard drives. Most queries became faster, but some analytical queries slowed down because they remained I/O-bound. The context of this exercise was workload comparison.

[109] A common mistake we’ve seen is to set up a server with twice as much memory as your existing server, and—using the old server’s configuration as a baseline—create the new server’s configuration by multiplying everything by two. This doesn’t work.

What Not to Do

Before we get started with server configuration, we want to encourage you to avoid a few common practices that we’ve found to be risky or harmful. Warning: rants ahead!

First, you should not “tune by ratio.” The classic “tuning ratio” is the rule of thumb that your key cache hit ratio should be higher than some percentage, and you should increase the cache size if the hit rate is too low. This is very wrong advice. Regardless of what anyone tells you, the cache hit ratio has nothing to do with whether the cache is too large or too small. To begin with, the hit ratio depends on the workload—some workloads simply aren’t cacheable no matter how big the cache is—and secondly, cache hits are meaningless, for reasons we’ll explain later. It sometimes happens that when the cache is too small, the hit rate is low, and increasing the cache size increases the hit rate. However, this is an accidental correlation and does not indicate anything about performance or proper sizing of the cache.
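One way to see why a ratio alone says little is to compare it with the absolute number of misses. The Python sketch below uses entirely made-up counter values for two hypothetical servers; the arithmetic mirrors the usual key cache calculation based on the Key_read_requests and Key_reads status counters, and the function names are illustrative only.

```python
def hit_ratio(read_requests, reads_from_disk):
    """Percentage of requests served from the cache."""
    return 100.0 * (read_requests - reads_from_disk) / read_requests


def misses_per_second(reads_from_disk, seconds):
    """Absolute rate of cache misses over the sampling interval."""
    return reads_from_disk / seconds


# Hypothetical counters sampled over 10 seconds on two servers.
servers = {
    "busy": {"requests": 10_000_000, "misses": 100_000},
    "quiet": {"requests": 10_000, "misses": 1_000},
}

for name, c in servers.items():
    print(
        name,
        hit_ratio(c["requests"], c["misses"]),
        misses_per_second(c["misses"], 10),
    )
```

With these numbers the "busy" server has the better-looking ratio (99 percent versus 90 percent) yet performs a hundred times as many misses per second, so the ratio by itself does not say which server is doing more work or which cache is sized appropriately.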

The problem with correlations that sometimes appear to be true is that people begin to believe they will always be true. Oracle DBAs abandoned ratio-based tuning years ago, and we wish MySQL DBAs would follow their lead.[110] We wish even more fervently that people wouldn’t write “tuning scripts” that codify these dangerous practices and teach them to thousands of people. This leads to our second suggestion of what not to do: don’t use tuning scripts! There are several very popular ones that you can find on the Internet. It’s probably best to ignore them.[111]

We also suggest that you avoid the word “tuning,” which we’ve used liberally in the past few paragraphs. We favor “configuration” or “optimization” instead (as long as that’s what you’re actually doing; see Chapter 3). The word “tuning” conjures up images of an undisciplined novice who tweaks the server and sees what happens. We suggested in the previous section that this practice is best left to those who are researching server internals. “Tuning” your server can be a stunning waste of time.

On a related topic, searching the Internet for configuration advice is not always a great idea. You can find a lot of bad advice in blogs, forums, and so on.[112] Although many experts contribute what they know online, it is not always easy to tell who is qualified. We can’t give unbiased recommendations about where to find real experts, of course. But we can say that the credible, reputable MySQL service providers are a safer bet in general than what a simple Internet search turns up, because people who have happy customers are probably doing something right. Even their advice, however, can be dangerous to apply without testing and understanding, because it might have been directed at a situation that differed from yours in a way you don’t understand.

Finally, don’t believe the popular memory consumption formula—yes, the very one that MySQL itself prints out when it crashes. (We won’t repeat it here.) This formula is from an ancient time. It is not a reliable or even useful way to understand how much memory MySQL can use in the worst case. You might see some variations on this formula on the Internet, too. These are similarly flawed, even though they add in more factors that the original formula doesn’t have. The truth is that you can’t put an upper bound on MySQL’s memory consumption. It is not a tightly regulated database server that controls memory allocation. You can prove that very simply by logging into the server and running a number of queries that consume a lot of memory:

mysql> SET @crash_me_1 := REPEAT('a', @@max_allowed_packet);

mysql> SET @crash_me_2 := REPEAT('a', @@max_allowed_packet);

# ... run a lot of these ...

mysql> SET @crash_me_1000000 := REPEAT('a', @@max_allowed_packet);

Run that in a loop, creating new variables each time, and you’ll eventually run the server out of memory and crash it! And it requires no privileges to execute.

The points we’ve tried to illustrate in this section have sometimes made us unpopular with people who perceive us as arrogant, think that we’re trying to discredit others and set ourselves up as the sole authority, or feel that we’re trying to promote our services. It is not our intention to be self-serving. We have simply seen so much bad advice that appears legitimate if you are not experienced enough to know better, and helped clean up the wreckage so many times, that we think it is important to debunk a few myths and warn our readers to be careful whose expertise they trust. We’ll try to avoid ranting from here on.

[110] If you are not convinced that “tuning by ratio” is bad, please read Optimizing Oracle Performance by Cary Millsap (O’Reilly). He even devotes an appendix to the topic, with a tool that can artificially generate any cache hit ratio you wish, no matter how badly your system is performing! Of course, it’s all for the purpose of illustrating how useless the ratio is.

[111] An exception: we maintain a (good) free online configuration tool at http://tools.percona.com. Yes, we’re biased.

[112] Q: How is query formed? A: They need to do way instain DBAs who kill thier querys, becuse these querys cant frigth back?

Creating a MySQL Configuration File

As we mentioned at the beginning of this chapter, we don’t have a one-size-fits-all “best configuration file” for, say, a 4-CPU server with 16 GB of memory and 12 hard drives. You really do need to develop your own configurations, because even a good starting point will vary widely depending on how you’re using the server.

MySQL’s compiled-in default settings aren’t all great, although most of them are fine. They are designed not to use a lot of resources, because MySQL is intended to be very versatile, and it does not assume it is the only thing running on the server on which it is installed. By default, MySQL uses just enough resources to start and run simple queries with a little bit of data. You’ll certainly need to customize it if you have more than a few megabytes of data.

You can start with one of the sample configuration files included with the MySQL server distribution, but they have their own problems. For example, they have a lot of commented-out settings that might tempt you to think that you should choose values and uncomment them (it’s a bit reminiscent of an Apache configuration file). And they have a lot of prose comments that explain the options, but these explanations are not always well-balanced, complete, or even correct. Some of the options don’t even apply to popular operating systems at all! Finally, the samples are perpetually out of date for modern hardware and workloads.

MySQL experts have had many conversations about how to fix these problems over the years, but the issues remain. Here’s our suggestion: don’t use those files as a starting point, and don’t use the samples that ship with your operating system’s packages either. It’s better to start from scratch.

That’s what we’ll do in this chapter. It’s actually a weakness that MySQL is so configurable, because it makes it seem as though you should spend a lot of time on configuration, when in fact most things are fine at their defaults, and you are often better off setting and forgetting. That’s why we’ve created a sane minimal sample configuration file for this book, which you can use as a good starting point for your own servers. You must choose values for a few of the settings; we’ll explain those later in this chapter. Our base file looks like this:

[mysqld]
# GENERAL
datadir = /var/lib/mysql
socket = /var/lib/mysql/mysql.sock
pid_file = /var/lib/mysql/mysql.pid
user = mysql
port = 3306
storage_engine = InnoDB

# INNODB
innodb_buffer_pool_size = <value>
innodb_log_file_size = <value>
innodb_file_per_table = 1
innodb_flush_method = O_DIRECT

# MyISAM
key_buffer_size = <value>

# LOGGING
log_error = /var/lib/mysql/mysql-error.log
log_slow_queries = /var/lib/mysql/mysql-slow.log

# OTHER
tmp_table_size = 32M
max_heap_table_size = 32M
query_cache_type = 0
query_cache_size = 0
max_connections = <value>
thread_cache_size = <value>
table_open_cache = <value>
open_files_limit = 65535

[client]
socket = /var/lib/mysql/mysql.sock
port = 3306

This might seem too minimal in comparison to what you’re used to seeing,[113] but it’s actually more than many people need. There are a few other types of configuration options that you are likely to use as well, such as binary logging; we’ll cover those later in this and other chapters.

The first thing we configured is the location of the data. We chose /var/lib/mysql for this, because it’s a popular location on many Unix variants. There is nothing wrong with choosing another location; you decide. We’ve put the PID file into the same location, but many operating systems will want to place it in /var/run instead. That’s fine, too. We simply needed to have something configured for these settings. By the way, don’t let the socket and PID file be located according to the server’s compiled-in defaults; there are some bugs in various MySQL versions that can cause problems with this. It’s best to set these locations explicitly. (We’re not advising you to choose different locations; we’re just advising you to make sure the my.cnf file mentions those locations explicitly, so they won’t change and break things if you upgrade the server.)

We also specified that mysqld should run as the mysql user account on the operating system. You’ll need to make sure this account exists, and that it owns the data directory. The port is set to the default of 3306, but sometimes that is something you’ll want to change.

We’ve chosen the default storage engine to be InnoDB, and this is worth explaining. We think InnoDB is the best choice in most situations, but that’s not always the case. Some third-party software, for example, might assume the default is MyISAM, and will create tables without specifying the engine. This might cause the software to malfunction if, for example, it assumes that it can create full-text indexes. And the default storage engine is used for explicitly created temporary tables, too, which can cause quite a bit of unexpected work for the server. If you want your permanent tables to use InnoDB but any temporary tables to use MyISAM, you should be sure to specify the engine explicitly in the CREATE TABLE statement.

In general, if you decide to standardize on a storage engine, it’s best to configure it as the default. Many users think they use only a specific storage engine, but then discover another engine has crept into use because of the configured default.

We’ll illustrate the basics of configuration with InnoDB. All InnoDB really needs to run well in most cases is a proper buffer pool size and log file size. The defaults are far too small. All of the other settings for InnoDB are optional, although we’ve enabled innodb_file_per_table for manageability and flexibility reasons. Setting the InnoDB log file size is a topic that we’ll discuss later in this chapter, as is the setting of innodb_flush_method, which is Unix-specific.

There’s a popular rule of thumb that says you should set the buffer pool size to around 75% or 80% of your server’s memory. This is another accidental ratio that seems to work okay sometimes, but isn’t always correct. It’s a better idea to set the buffer pool roughly as follows:

1. Begin with the amount of memory in the server.

2. Subtract out a bit for the operating system and perhaps for other programs, if MySQL isn’t the only thing running on the server.

3. Subtract some more for MySQL’s memory needs; it uses various buffers for per-query operations, for example.

4. Subtract enough for the InnoDB log files, so the operating system has enough memory to cache them, or at least the recently accessed portion thereof. (This advice applies to standard MySQL; in Percona Server, you can configure the log files to be opened with O_DIRECT, bypassing the operating system caches.) It might also be a good idea to leave some memory free for caching at least the tail of the binary logs, especially if you have replicas that are delayed, because they can sometimes read old binary log files on the master, causing some pressure on its memory.

5. Subtract enough for any other buffers and caches that you configure inside MySQL, such as the MyISAM key cache or the query cache.

6. Divide by 105%, which is an approximation of the overhead InnoDB adds on to manage the buffer pool itself.

7. Round the result down to a sensible number. Rounding down won’t change things much, but overallocating can be a bad thing.

We were a bit blasé about some of the amounts of memory involved here—what exactly is “a bit for the operating system,” anyway? That varies, and we’ll discuss it in some depth later in this chapter and the rest of this book. You need to understand your system and estimate how much memory you think it’ll need to run well. This is why one-size-fits-all configuration files are not possible. Experience and sometimes a bit of math will be your guide.

Here’s an example. Suppose you have a server with 192 GB of memory, and you want to dedicate it to MySQL and to use only InnoDB, with no query cache and not very many connections to the server. If your log files are 4 GB in total, you might proceed as follows: “I think that 2 GB or 5% of overall memory, whichever is larger, should be enough for the OS and for MySQL’s other memory needs; subtract 4 GB for the log files; use everything else for InnoDB.” Five percent of 192 GB is 9.6 GB, so the result is about 178 GB, but that is before allowing for the buffer pool’s own overhead, so it’s a good idea to round it down a bit. You might configure the server with 168 GB or so of buffer pool. If the server tends to run with a fair amount of unallocated memory in practice, you might set the buffer pool larger when there is an opportunity to restart it for some other purpose.
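The arithmetic in this example can be sketched in a few lines of Python. This is only an illustration of the seven steps, not a sizing tool: the function name, its parameters, and the choice to round down to a multiple of 8 GB are our own assumptions, while the 5% reserve and the 105% overhead factor come from the text.

```python
def suggest_buffer_pool_gb(total_gb, log_files_gb, other_caches_gb=0.0,
                           overhead=1.05, round_to_gb=8):
    """Return a conservative InnoDB buffer pool size in GB (hypothetical helper)."""
    # Steps 2-3: reserve 2 GB or 5% of RAM, whichever is larger, for the
    # operating system and MySQL's other memory needs.
    os_and_mysql_gb = max(2.0, 0.05 * total_gb)
    # Steps 4-5: leave room for the log files and any other configured caches.
    remaining_gb = total_gb - os_and_mysql_gb - log_files_gb - other_caches_gb
    if remaining_gb <= 0:
        raise ValueError("no memory left for the buffer pool")
    # Step 6: allow for InnoDB's own overhead in managing the buffer pool.
    usable_gb = remaining_gb / overhead
    # Step 7: round down (assumption: to a multiple of round_to_gb).
    return int(usable_gb // round_to_gb) * round_to_gb


pool_gb = suggest_buffer_pool_gb(total_gb=192, log_files_gb=4)
print(pool_gb, "GB, or", 100 * pool_gb / 192, "% of installed memory")
```

For the 192 GB server this yields 168 GB. Treat the output as a starting point to verify against the server's real memory usage, not as a final answer.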

The result would be very different if you had a number of MyISAM tables and needed to cache their indexes, naturally. It would also be quite different on Windows, which has trouble using large amounts of memory in most MySQL versions (although it’s improved in MySQL 5.5), or if you chose not to use O_DIRECT for some reason.

As you can see, it’s not crucial to get this setting precisely right from the beginning. It’s better to start with a safe value that’s larger than the default but not as large as it could be, run the server for a while, and see how much memory it really uses. These things can be hard to anticipate, because MySQL’s memory usage isn’t always predictable: it can depend on factors such as the query complexity and concurrency. With a simple workload, MySQL’s memory needs are pretty minimal—around 256 KB per connection. But complex queries that use temporary tables, sorting, stored procedures, and so forth can use a lot more RAM.

That’s why we chose a pretty safe starting point. You can see that even the conservative setting for InnoDB’s buffer pool is actually 87.5% of the server’s installed RAM—more than 75%, which is why we said that simple ratios aren’t the right approach.

We suggest that when it comes to configuring the memory buffers, you err on the side of caution, rather than making them too large. If you make the buffer pool 20% smaller than it could be, you’ll likely impact performance only a small amount—maybe a few percent. If you set it 20% too large, you’ll probably cause much more severe problems: swapping, thrashing the disks, or even running out of memory and crashing hard.

This InnoDB configuration example illustrates our preferred approach to configuring the server: understand what it does internally and how that interacts with the settings, and then decide.

TIME CHANGES EVERYTHING

The need to configure MySQL’s memory buffers precisely has become less important over time. When a powerful server had 4 GB of memory, we worked hard to balance its resources so it could run a thousand connections. This typically required us to reserve a gigabyte or so for MySQL’s needs, which was a quarter of the server’s total memory and greatly influenced how we sized the buffer pool.

Nowadays a comparable server has 144 GB of memory, but we typically see about the same number of connections in most applications, and the per-connection buffers haven’t really changed much either. As a result, we might generously reserve 4 GB of memory for MySQL, which is a drop in the bucket. It doesn’t impact how we size the buffer pool very much.

Most of the other settings in our sample file are pretty self-explanatory, and many of them are a matter of judgment. We’ll explore several of them in the rest of this chapter. You can see that we’ve enabled logging, disabled the query cache, and so on. We’ll also discuss some safety and sanity settings later in this chapter, which can be very helpful for making your server more robust and helping prevent bad data and other problems. We don’t show those settings here.

One setting to explain here is the open_files_limit option. We’ve set this as large as possible on a typical Linux system. Open filehandles are very cheap on modern operating systems. If this setting isn’t large enough, you’ll see error 24, “too many open files.”

Skipping all the way to the end, the last section in the configuration file is for client programs such as mysql and mysqladmin, and simply lets them know how to connect to the server. You should set the values for client programs to match those you chose for the server.

Inspecting MySQL Server Status Variables

Sometimes you can use the output from SHOW GLOBAL STATUS as input to your configuration to help customize the settings better for your workload. For the best results, look both at absolute values and at how the values change over time, preferably with several snapshots at peak and off-peak times. You can use the following command to see incremental changes to status variables every 60 seconds:

$ mysqladmin extended-status -ri60

We will frequently refer to changes in status variables over time as we explain various configuration settings. We will usually expect you to be examining the output of a command such as the one we just showed. Other helpful tools that can provide a compact display of status counter changes are Percona Toolkit’s pt-mext or pt-mysql-summary.
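If you collect snapshots yourself, turning two of them into per-second rates is simple arithmetic. The following Python sketch assumes you have already loaded two SHOW GLOBAL STATUS snapshots into dictionaries; the function name and the sample numbers are invented for illustration.

```python
def status_rates(before, after, interval_seconds):
    """Per-second change of every counter present in both snapshots."""
    rates = {}
    for name, new_value in after.items():
        if name in before:
            rates[name] = (new_value - before[name]) / interval_seconds
    return rates


# Made-up sample values, taken 60 seconds apart.
first = {"Questions": 1_200_000, "Threads_created": 340, "Key_reads": 9_000}
second = {"Questions": 1_260_000, "Threads_created": 346, "Key_reads": 9_300}

for name, rate in sorted(status_rates(first, second, 60).items()):
    print(f"{name}: {rate:.2f}/s")
```

This only makes sense for counters that increase monotonically; point-in-time values such as Threads_connected should be read directly rather than differenced.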

Now that we’ve shown you the preliminaries, we’ll take you on a guided tour of some server internals, interleaved with advice on configuration. This will give you the background you’ll need to choose appropriate values for configuration options when we return to the sample configuration file later.

[113] Question: where are the settings for the sort buffer size and read buffer size? Answer: they’re off minding their own business. Leave them at their defaults unless you can prove the defaults are not good enough.

Configuring Memory Usage

Configuring MySQL to use memory correctly is vital to good performance. You’ll almost certainly need to customize MySQL’s memory usage for your needs. You can think of MySQL’s memory consumption as falling into two categories: the memory you can control, and the memory you can’t. You can’t control how much memory MySQL uses merely to run the server, parse queries, and manage its internals, but you have a lot of control over how much memory it uses for specific purposes. Making good use of the memory you can control is not hard, but it does require you to know what you’re configuring.

As shown previously, you can approach memory configuration in steps:

1. Determine the absolute upper limit of memory MySQL can possibly use.

2. Determine how much memory MySQL will use for per-connection needs, such as sort buffers and temporary tables.

3. Determine how much memory the operating system needs to run well. Include memory for other programs that run on the same machine, such as periodic jobs.

4. Assuming that it makes sense to do so, use the rest of the memory for MySQL’s caches, such as the InnoDB buffer pool.

We go over each of these steps in the following sections, and then we take a more detailed look at the various MySQL caches’ requirements.

How Much Memory Can MySQL Use?

There is a hard upper limit on the amount of memory that can possibly be available to MySQL on any given system. The starting point is the amount of physically installed memory. If your server doesn’t have it, MySQL can’t use it.

You also need to think about operating system or architecture limits, such as restrictions 32-bit operating systems place on how much memory a given process can address. Because MySQL runs in a single process with multiple threads, the amount of memory it can use overall might be severely limited by such restrictions—for example, 32-bit Linux kernels limit the amount of memory any one process can address to a value that is typically between 2.5 and 2.7 GB. Running out of address space is very dangerous and can cause MySQL to crash. This is pretty rare to see these days, but it used to be common.

There are many other operating system–specific parameters and oddities that must be taken into account, including not just the per-process limits, but also stack sizes and other settings. The system’s glibc libraries can also impose limits per single allocation. For example, you might not be able to set innodb_buffer_pool_size larger than 2 GB if that’s all your glibc libraries support in a single allocation.

Even on 64-bit servers, some limitations still apply. For example, many of the buffers we discuss, such as the key buffer, are limited to 4 GB on a 64-bit server in 5.0 and older MySQL versions. Some of these restrictions are lifted in MySQL 5.1, and the MySQL manual documents each variable’s maximum value.

Per-Connection Memory Needs

MySQL needs a small amount of memory just to hold a connection (thread) open. It also requires a base amount of memory to execute any given query. You’ll need to set aside enough memory for MySQL to execute queries during peak load times. Otherwise, your queries will be starved for memory, and they will run poorly or fail.

It’s useful to know how much memory MySQL will consume during peak usage, but some usage patterns can unexpectedly consume a lot of memory, which makes this hard to predict. Prepared statements are one example, because you can have many of them open at once. Another example is the InnoDB data dictionary (more about this later).

You don’t need to assume a worst-case scenario when trying to predict peak memory consumption. For example, if you configure MySQL to allow a maximum of 100 connections, it theoretically might be possible to simultaneously run large queries on all 100 connections, but in reality this probably won’t happen. Likewise, if you set myisam_sort_buffer_size to 256M, your worst-case usage across those 100 connections is at least 25 GB, but this level of consumption is highly unlikely to actually occur. Queries that use many large temporary tables, or complex stored procedures, are the most likely causes of high per-connection memory consumption.
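The 25 GB figure is just the connection limit multiplied by the buffer size. A minimal Python sketch of that worst-case bound follows; the function name is our own, and the result is a theoretical ceiling, not a prediction.

```python
def worst_case_buffer_gb(max_connections, buffer_mb):
    """Upper bound if every connection allocated the full buffer at once."""
    return max_connections * buffer_mb / 1024


# 100 connections, each using a 256 MB buffer at the same moment.
print(worst_case_buffer_gb(100, 256), "GB")
```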

Rather than calculating worst cases, a better approach is to run your server under a real workload and measure how much memory it uses by watching the process’s virtual memory size. In many Unix-like systems, this is reported in the VIRT column in top, or VSZ in ps. The next chapter has more information on how to monitor memory usage.

Reserving Memory for the Operating System

Just as with queries, you need to reserve enough memory for the operating system to do its work. The best indication that the operating system has enough memory is that it’s not actively swapping (paging) virtual memory to disk. (See the next chapter for more on this topic.)

You should reserve at least a gigabyte or two for the operating system—more for machines with a lot of memory. We suggest starting with 2 GB or 5% of total memory as the baseline, whichever is greater. Add in some extra for safety, and add in some more if you’ll be running periodic memory-intensive jobs on the machine (such as backups). Don’t add any memory for the operating system’s caches, because they can be very large. The operating system will generally use any leftover memory for these caches, and we consider them separately from the operating system’s own needs in the following sections.

Allocating Memory for Caches

If the server is dedicated to MySQL, any memory you don’t reserve for the operating system or for query processing is available for caches.

MySQL needs more memory for caches than anything else. It uses caches to avoid disk access, which is orders of magnitude slower than accessing data in memory. The operating system might cache some data on MySQL’s behalf (especially for MyISAM), but MySQL needs lots of memory for itself, too.

The following are the most important caches to consider for most installations:

§ The InnoDB buffer pool

§ The operating system caches for InnoDB log files and MyISAM data

§ MyISAM key caches

§ The query cache

§ Caches you can’t really configure, such as the operating system’s caches of binary logs and table definition files

There are other caches, but they generally don’t use much memory. We discussed the query cache in detail in the previous chapter, so the following sections concentrate on the caches InnoDB and MyISAM need to work well.

It is much easier to configure a server if you’re using only one storage engine. If you’re using only MyISAM tables, you can disable InnoDB completely, and if you’re using only InnoDB, you need to allocate only minimal resources for MyISAM (MySQL uses MyISAM tables internally for some operations). But if you’re using a mixture of storage engines, it can be very hard to figure out the right balance between them. The best approach we’ve found is to make an educated guess and then observe the server in operation.

The InnoDB Buffer Pool

If you use mostly InnoDB tables, the InnoDB buffer pool probably needs more memory than anything else. The InnoDB buffer pool doesn’t just cache indexes: it also holds row data, the adaptive hash index, the insert buffer, locks, and other internal structures. InnoDB also uses the buffer pool to help it delay writes, so it can merge many writes together and perform them sequentially. In short, InnoDB relies heavily on the buffer pool, and you should be sure to allocate enough memory to it, typically with a process such as that shown earlier in this chapter. You can use variables from SHOW commands or tools such as innotop to monitor your InnoDB buffer pool’s memory usage.

If you don’t have much data, and you know that your data won’t grow quickly, you don’t need to overallocate memory to the buffer pool. It’s not really beneficial to make it much larger than the size of the tables and indexes that it will hold. There’s nothing wrong with planning ahead for a rapidly growing database, of course, but sometimes we see huge buffer pools with a tiny amount of data. This isn’t necessary.

Large buffer pools come with some challenges, such as long shutdown and warmup times. If there are a lot of dirty (modified) pages in the buffer pool, InnoDB can take a long time to shut down, because it writes the dirty pages to the data files upon shutdown. You can force it to shut down quickly, but then it just has to do more recovery when it restarts, so you can’t actually speed up the shutdown and restart cycle time. If you know in advance when you need to shut down, you can change the innodb_max_dirty_pages_pct variable at runtime to a lower value, wait for the flush thread to clean up the buffer pool, and then shut down once the number of dirty pages becomes small. You can monitor the number of dirty pages by watching the Innodb_buffer_pool_pages_dirty server status variable or using innotop to monitor SHOW INNODB STATUS.

Lowering the value of the innodb_max_dirty_pages_pct variable doesn’t actually guarantee that InnoDB will keep fewer dirty pages in the buffer pool. Instead, it controls the threshold at which InnoDB stops being “lazy.” InnoDB’s default behavior is to flush dirty pages with a background thread, merging writes together and performing them sequentially for efficiency. This behavior is called “lazy” because it lets InnoDB delay flushing dirty pages in the buffer pool, unless it needs to use the space for some other data. When the percentage of dirty pages exceeds the threshold, InnoDB will flush pages as quickly as it can to try to keep the dirty page count lower. InnoDB will also go into “furious flushing” mode when there isn’t enough space left in the transaction logs, which is one reason that large logs can improve performance.
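When you are waiting for the buffer pool to drain before a planned shutdown, it helps to express the dirty page count as a percentage of the pool. The Python sketch below assumes you have read the Innodb_buffer_pool_pages_dirty and Innodb_buffer_pool_pages_total status variables into a dictionary; the function names, the sample values, and the 1% target are hypothetical choices for illustration.

```python
def dirty_page_pct(status):
    """Percentage of buffer pool pages that are currently dirty."""
    total = status["Innodb_buffer_pool_pages_total"]
    dirty = status["Innodb_buffer_pool_pages_dirty"]
    return 100.0 * dirty / total if total else 0.0


def ready_for_shutdown(status, target_pct=1.0):
    """True once dirty pages have dropped to the chosen target (assumed 1%)."""
    return dirty_page_pct(status) <= target_pct


# Made-up snapshots: before and after lowering innodb_max_dirty_pages_pct.
busy = {"Innodb_buffer_pool_pages_total": 1_000_000,
        "Innodb_buffer_pool_pages_dirty": 250_000}
drained = {"Innodb_buffer_pool_pages_total": 1_000_000,
           "Innodb_buffer_pool_pages_dirty": 5_000}

print(dirty_page_pct(busy), ready_for_shutdown(busy))
print(dirty_page_pct(drained), ready_for_shutdown(drained))
```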

When you have a large buffer pool, especially in combination with slow disks, the server might take a long time (many hours or even days) to warm up after a restart. In such cases, you might benefit from using Percona Server’s feature to reload the pages after restart. This can reduce warmup times to a few minutes. MySQL 5.6 will introduce a similar feature. This is especially beneficial on replicas, which pay an extra warmup penalty due to the single-threaded nature of replication.

If you can’t use Percona Server’s fast warmup feature, some people issue full-table scans or index scans immediately after a restart to load indexes into the buffer pool. This is crude, but can sometimes be better than nothing. You can use the init_file setting to accomplish this. You can place SQL into a file that’s executed when MySQL starts up. The filename must be specified in the init_file option, and the file can include multiple SQL commands, each on a single line (no comments are allowed).
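As an illustration, the following Python sketch generates the contents of such a file: one statement per line and no comments, as the option requires. The function names, the schema and table names, and the choice of SELECT COUNT(*) as the scanning query are our own assumptions; which index the server actually reads for that query is up to the optimizer, so check that it warms the data you care about.

```python
def quote_identifier(name):
    """Quote a MySQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def build_warmup_sql(tables):
    """Return init_file contents: one scan statement per (schema, table) pair."""
    lines = []
    for schema, table in tables:
        target = quote_identifier(schema) + "." + quote_identifier(table)
        lines.append("SELECT COUNT(*) FROM " + target + ";")
    return "\n".join(lines) + "\n"


# Hypothetical tables to warm up after a restart.
print(build_warmup_sql([("shop", "orders"), ("shop", "customers")]), end="")
```

You would write the returned text to a file and point the init_file option at it.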

The MyISAM Key Caches

The MyISAM key caches are also referred to as key buffers; there is one by default, but you can create more. Unlike InnoDB and some other storage engines, MyISAM itself caches only indexes, not data (it lets the operating system cache the data). If you use mostly MyISAM, you should allocate a lot of memory to the key caches.

The most important option is the key_buffer_size. Any memory not allocated to it will be available for the operating system caches, which the operating system will usually fill with data from MyISAM’s .MYD files. MySQL 5.0 has a hard upper limit of 4 GB for this variable, no matter what architecture you’re running. MySQL 5.1 allows larger sizes. Check the current documentation for your version of the server.

When you’re deciding how much memory to allocate to the key caches, it might help to know how much space your MyISAM indexes are actually using on disk. You don’t need to make the key buffers larger than the data they will cache. You can query the INFORMATION_SCHEMA tables and sum up the INDEX_LENGTH column to find out the size of the files storing the indexes:

SELECT SUM(INDEX_LENGTH) FROM INFORMATION_SCHEMA.TABLES WHERE ENGINE='MYISAM';

If you have a Unix-like system, you can also use a command like the following:

$ du -sch `find /path/to/mysql/data/directory/ -name "*.MYI"`

How big should you set the key caches? No bigger than the total index size or 25% to 50% of the amount of memory you reserved for operating system caches, whichever is smaller.
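That rule can be written down directly. In the Python sketch below, the function name and the sample sizes are hypothetical; the fraction parameter stands for wherever you choose to land in the 25% to 50% range.

```python
GIB = 1024 ** 3


def max_key_buffer_bytes(total_index_bytes, os_cache_bytes, fraction=0.25):
    """Upper bound for the key caches: the smaller of the two limits."""
    if not 0.25 <= fraction <= 0.5:
        raise ValueError("fraction should be between 0.25 and 0.5")
    return min(total_index_bytes, int(fraction * os_cache_bytes))


# 3 GiB of MyISAM indexes, 8 GiB reserved for operating system caches.
print(max_key_buffer_bytes(3 * GIB, 8 * GIB) // GIB, "GiB")
# Only 1 GiB of indexes: there is no point in caching more than that.
print(max_key_buffer_bytes(1 * GIB, 8 * GIB) // GIB, "GiB")
```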

By default, MyISAM caches all indexes in the default key buffer, but you can create multiple named key buffers. This lets you keep more than 4 GB of indexes in memory at once. To create key buffers named key_buffer_1 and key_buffer_2, each sized at 1 GB, place the following in the configuration file:

key_buffer_1.key_buffer_size = 1G
key_buffer_2.key_buffer_size = 1G

Now there are three key buffers: the two explicitly created by those lines and the default buffer. You can use the CACHE INDEX command to map tables to caches. You can tell MySQL to use key_buffer_1 for the indexes from tables t1 and t2 with the following SQL statement:

mysql> CACHE INDEX t1, t2 IN key_buffer_1;

Now when MySQL reads blocks from the indexes on these tables, it will cache the blocks in the specified buffer. You can also preload the tables’ indexes into the cache with the init_file option and the LOAD INDEX command:

mysql> LOAD INDEX INTO CACHE t1, t2;

Any indexes you don’t explicitly map to a key buffer will be assigned to the default buffer the first time MySQL needs to access the .MYI file.

You can monitor key buffer usage with information from SHOW STATUS and SHOW VARIABLES. You can calculate the percentage of the buffer in use with this equation:

100 - ( (Key_blocks_unused * key_cache_block_size) * 100 / key_buffer_size )

If the server doesn’t use all of its key buffer after it’s been running for a long time, you can consider making the buffer smaller.
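Here is the same equation as a small Python function, with made-up numbers plugged in. The function name is our own; the three inputs correspond to the Key_blocks_unused status variable and the key_cache_block_size and key_buffer_size system variables.

```python
def key_buffer_pct_used(key_blocks_unused, key_cache_block_size, key_buffer_size):
    """Percentage of the key buffer in use, per the equation above."""
    unused_bytes = key_blocks_unused * key_cache_block_size
    return 100 - unused_bytes * 100 / key_buffer_size


# Made-up example: a 32 MB buffer with 1 KB blocks, 24,576 of them unused.
print(key_buffer_pct_used(24_576, 1024, 32 * 1024 * 1024))
```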

What about the key buffer hit ratio? As we explained previously, this number is useless. For example, the difference between 99% and 99.9% looks small, but it really represents a tenfold difference in the number of misses. The cache hit ratio is also application-dependent: some applications might work fine at 95%, whereas others might be I/O-bound at 99.9%. You might even be able to get a 99.99% hit ratio with properly sized caches.

The number of cache misses per second is much more empirically useful. Suppose you have a single hard drive that can do 100 random reads per second. Five misses per second will not cause your workload to be I/O-bound, but 80 per second will likely cause problems. You can use the following equation to calculate this value:

Key_reads / Uptime

Calculate the number of misses incrementally over intervals of 10 to 100 seconds, so you can get an idea of the current performance. The following command will show the incremental values every 10 seconds:

$ mysqladmin extended-status -r -i 10 | grep Key_reads
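To relate the miss rate to what the disks can deliver, you can compare it with the drive's random read capacity. This Python sketch uses the single drive from the example above, capable of 100 random reads per second; the function names and the sample Key_reads values are hypothetical.

```python
def key_misses_per_second(key_reads_before, key_reads_after, interval_seconds):
    """Key cache misses per second between two Key_reads samples."""
    return (key_reads_after - key_reads_before) / interval_seconds


def disk_read_share(misses_per_second, disk_reads_per_second=100):
    """Fraction of the disk's random read capacity consumed by misses."""
    return misses_per_second / disk_reads_per_second


# Made-up Key_reads samples taken 10 seconds apart.
light = key_misses_per_second(9_000, 9_050, 10)
heavy = key_misses_per_second(9_000, 9_800, 10)
print(light, disk_read_share(light))
print(heavy, disk_read_share(heavy))
```

The disk also has to serve reads of the data files, so a share that looks moderate for index misses alone can still leave the workload I/O-bound.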

Remember that MyISAM uses the operating system cache for the data files, which are often larger than the indexes. Therefore, it often makes sense to leave more memory for the operating system cache than for the key caches. Even if you have enough memory to cache all the indexes, and the key cache miss rate is very low, cache misses when MyISAM tries to read from the data files (not the index files!) happen at the operating system level, which is completely invisible to MySQL. Thus, you can have a lot of data file cache misses independently of your index cache miss rate.

Finally, even if you don’t have any MyISAM tables, bear in mind that you still need to set key_buffer_size to a small amount of memory, such as 32M. The MySQL server sometimes uses MyISAM tables for internal purposes, such as temporary tables for GROUP BY queries.

The MyISAM key block size

The key block size is important (especially for write-intensive workloads) because of the way it causes MyISAM, the operating system cache, and the filesystem to interact. If the key block size is too small, you might encounter read-around writes, which are writes that the operating system cannot perform without first reading some data from the disk. Here’s how a read-around write happens, assuming the operating system’s page size is 4 KB (typically true on the x86 architecture) and the key block size is 1 KB:

1. MyISAM requests a 1 KB key block from disk.

2. The operating system reads 4 KB of data from the disk and caches it, then passes the desired 1 KB of data to MyISAM.

3. The operating system discards the cached data in favor of some other data.

4. MyISAM modifies the 1 KB key block and asks the operating system to write it back to disk.

5. The operating system reads the same 4 KB of data from the disk into the operating system cache, modifies the 1 KB that MyISAM changed, and writes the entire 4 KB back to disk.

The read-around write was triggered in step 4, when MyISAM asked the operating system to write only part of a 4 KB page, and it forced the extra read in step 5. If MyISAM’s block size had matched the operating system’s, that disk read could have been avoided.[114]

Unfortunately, in MySQL 5.0 and earlier there’s no way to configure the key block size. However, in MySQL 5.1 and later you can avoid read-around writes by making MyISAM’s key block size the same as the operating system’s. The myisam_block_size variable controls the key block size. You can also specify the size for each key with the KEY_BLOCK_SIZE option in a CREATE TABLE or CREATE INDEX statement, but because all keys are stored in the same file, you really need all of them to have blocks as large as or larger than the operating system’s to avoid alignment issues that could still cause read-around writes. (For example, if one key has 1 KB blocks and another has 4 KB blocks, the 4 KB block boundaries might not match the operating system’s page boundaries.)
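The alignment condition can be stated precisely: a write avoids the extra read only if it starts on a page boundary and covers whole pages. The following Python sketch checks that condition for a single write; the function name is our own, and it assumes the affected pages are no longer in the operating system's cache, as in step 3.

```python
def needs_read_before_write(offset, length, page_size=4096):
    """True if the write covers only part of at least one OS page."""
    return offset % page_size != 0 or length % page_size != 0


# A 1 KB key block inside a 4 KB page: the rest of the page must be read.
print(needs_read_before_write(offset=0, length=1024))
# A 4 KB block aligned to a page boundary: no read is needed.
print(needs_read_before_write(offset=0, length=4096))
# A 4 KB block pushed off the boundary by a 1 KB block stored before it.
print(needs_read_before_write(offset=1024, length=4096))
```

The third case is the mixed-block-size problem described above: the block is large enough, but it straddles two pages, so both are only partly overwritten.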

The Thread Cache

The thread cache holds threads that aren’t currently associated with a connection but are ready to serve new connections. When there’s a thread in the cache and a new connection is created, MySQL removes the thread from the cache and gives it to the new connection. When the connection is closed, MySQL places the thread back into the cache, if there’s room. If there isn’t room, MySQL destroys the thread. As long as MySQL has a free thread in the cache, it can respond rapidly to connection requests, because it doesn’t have to create a new thread for each connection.

The thread_cache_size variable specifies the number of threads MySQL can keep in the cache. You probably won’t need to configure this value unless your server gets many connection requests. To check whether the thread cache is large enough, watch the Threads_created status variable. We generally try to keep the thread cache large enough that we see fewer than 10 new threads created each second, but it’s often pretty easy to get this number lower than 1 per second.

A good approach is to watch the Threads_connected variable and try to set thread_cache_size large enough to handle the typical fluctuation in your workload. For example, if Threads_connected usually stays between 100 and 120, you can set the cache size to 20. If it stays between 500 and 700, a thread cache of 200 should be large enough. Think of it this way: at 700 connections, there are probably no threads in the cache; at 500 connections, there are 200 cached threads ready to be used if the load increases to 700 again.
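In other words, the cache needs to cover the gap between the quietest and busiest connection counts you typically see. A minimal Python sketch of that reasoning, assuming you have collected a list of Threads_connected samples (the function name and sample values are hypothetical):

```python
def suggest_thread_cache_size(threads_connected_samples):
    """Size the cache to the observed swing in Threads_connected."""
    return max(threads_connected_samples) - min(threads_connected_samples)


print(suggest_thread_cache_size([100, 112, 120, 105]))
print(suggest_thread_cache_size([500, 700, 640, 560]))
```

Sample over a period long enough to include your normal peaks and troughs; a one-off spike in the samples would inflate the suggestion.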

Making the thread cache very large is probably not necessary for most uses, but keeping it small doesn’t save much memory, so there’s little benefit in doing so. Each thread that’s in the thread cache or sleeping typically uses around 256 KB of memory. This is not very much compared to the amount of memory a thread can use when a connection is actively processing a query. In general, you should keep your thread cache large enough that Threads_created doesn’t increase very often. If that would require a very large cache, however (e.g., many thousand threads), you might want to set it lower because some operating systems don’t handle very large numbers of threads well, even when most of them are sleeping.

The Table Cache

The table cache is similar in concept to the thread cache, but it stores objects that represent tables. Each object in the cache contains the associated table’s parsed .frm file, plus other data. Exactly what else is in the object depends on the table’s storage engine. For example, for MyISAM, it holds the table data and/or index file descriptors. For merge tables it might hold many file descriptors, because merge tables can have many underlying tables.

The table cache can help you reuse resources. For instance, when a query requests access to a MyISAM table, MySQL might be able to give it a file descriptor from the cached object. Although this does avoid the cost of opening a file descriptor, that’s not as expensive as you might think. Opening and closing file descriptors is very fast on local storage; the server should be able to do it a million times a second easily (it’s different on network-attached storage, though). The real benefit of the table cache is for MyISAM tables, where it lets the server avoid modifying the MyISAM file headers to mark a table as “in use.”[115]

The table cache’s design is one of the areas where the separation between the server and the storage engines is not completely clean, for historical reasons. The table cache is a little less important for InnoDB, because InnoDB doesn’t rely on it for as many purposes (such as holding file descriptors; it has its own version of a table cache for this purpose). However, even InnoDB benefits from caching the parsed .frm files.

In MySQL 5.1, the table cache is separated into two parts: a cache of open tables and a table definition cache (configured via the table_open_cache and table_definition_cache variables). Thus, the table definitions (the parsed .frm files) are separated from the other resources, such as file descriptors. Opened tables are still per-thread, per-table-used, but the table definitions are global and can be shared among all connections efficiently. You can generally set table_definition_cache high enough to cache all your table definitions. Unless you have tens of thousands of tables, this is likely to be the easiest approach.

If the Opened_tables status variable is large or increasing, the table cache might not be large enough, and you can consider increasing the table_cache system variable (or table_open_cache, in MySQL 5.1). However, note that this counter increases when you create and drop temporary tables, so if you do that a lot, you’ll never get the counter to stop increasing.

One downside to making the table cache very large is that it might cause longer shutdown times when your server has a lot of MyISAM tables, because the key blocks have to be flushed and the tables have to be marked as no longer open. It can also make FLUSH TABLES WITH READ LOCK take a long time to complete, for the same reason.

More seriously, the algorithms that check the table cache aren’t very efficient; more on this later.

If you get errors indicating that MySQL can’t open any more files (use the perror utility to check what the error number means), you might need to increase the number of files MySQL is allowed to keep open. You can do this with the open_files_limit server variable in your my.cnf file.

The thread and table caches don’t really use much memory, and they can be beneficial when they conserve resources. Although creating a new thread and opening a new table aren’t really expensive compared to other things MySQL might do, the overhead can add up. Caching threads and tables can sometimes improve efficiency.

The InnoDB Data Dictionary

InnoDB has its own per-table cache, variously called a table definition cache or data dictionary, which you cannot configure in current versions of MySQL. When InnoDB opens a table, it adds a corresponding object to the data dictionary. Each table can take up 4 KB or more of memory (although much less space is required in MySQL 5.1). Tables are not removed from the data dictionary when they are closed.

As a result, the server can appear to leak memory over time, due to an ever-increasing number of entries in the dictionary cache. It isn’t truly leaking memory; it just isn’t implementing any kind of cache expiration. This is normally a problem only when you have many (thousands or tens of thousands) large tables. If this is a problem for you, you can use Percona Server, which has an option to limit the data dictionary’s size by removing tables that are unused. There is a similar feature in the yet-to-be-released MySQL 5.6.
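To get a feel for the scale, the sketch below multiplies a table count by the 4 KB-per-table figure mentioned above. Treat the result as a rough lower bound: the text says each table can take "4 KB or more," and the helper name and table count are hypothetical.

```python
def dictionary_memory_mb(table_count, kb_per_table=4):
    """Rough lower bound on data dictionary memory, in megabytes."""
    return table_count * kb_per_table / 1024


# Hypothetical server that has opened 50,000 InnoDB tables since startup.
print(f"{dictionary_memory_mb(50_000):.0f} MB")  # 195 MB
```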

The other performance issue is computing statistics for the tables when opening them for the first time, which is expensive because it requires a lot of I/O. In contrast to MyISAM, InnoDB doesn’t store statistics in the tables permanently; it recomputes them each time it starts, and thereafter when various intervals expire or events occur (changes to the table’s contents, queries against the INFORMATION_SCHEMA, and so on). If you have a lot of tables, your server can take hours to start and fully warm up, during which time it might not be doing much other than waiting for one I/O operation after another. You can enable the innodb_use_sys_stats_table option in Percona Server (also in MySQL 5.6, but called innodb_analyze_is_persistent) to store the statistics persistently on disk and solve this problem.

Even after startup, InnoDB statistics operations can have an impact on the server and on individual queries. You can turn off the innodb_stats_on_metadata option to avoid time-consuming refreshes of table statistics. This can make a big difference when tools such as IDEs are querying the INFORMATION_SCHEMA tables.

If you use InnoDB’s innodb_file_per_table option (described later), there’s a separate limit on the number of .ibd files InnoDB can keep open at any time. This is handled by the InnoDB storage engine, not the MySQL server, and is controlled by innodb_open_files. InnoDB doesn’t open files the same way MyISAM does: whereas MyISAM uses the table cache to hold file descriptors for open tables, in InnoDB there is no direct relationship between open tables and open files. InnoDB uses a single, global file descriptor for each .ibd file. If you can afford it, it’s best to set innodb_open_files large enough that the server can keep all .ibd files open simultaneously.

[114] Theoretically, if you could ensure that the original 4 KB of data was still in the operating system’s cache, the read wouldn’t be needed. However, you have no control over which blocks the operating system decides to keep in its cache. You can find out which blocks are in the cache with the fincore tool, available at http://net.doit.wisc.edu/~plonka/fincore/.

[115] The concept of an “opened table” can be a little confusing. MySQL counts a table as opened many times when different queries are accessing it simultaneously, or even when a single query refers to the same table more than once, as in a subquery or a self-join. MyISAM’s index files contain a counter that MyISAM increments when the table is opened and decrements when it is closed. This lets MyISAM see when the table wasn’t closed cleanly: if it opens a table for the first time and the counter is not zero, the table wasn’t closed cleanly.

Configuring MySQL’s I/O Behavior

A few configuration options affect how MySQL synchronizes data to disk and performs recovery. These can affect performance dramatically, because they involve expensive I/O operations. They also represent a trade-off between performance and data safety. In general, it’s expensive to ensure that your data is written to disk immediately and consistently. If you’re willing to risk the danger that a disk write won’t really make it to permanent storage, you can increase concurrency and/or reduce I/O waits, but you’ll have to decide for yourself how much risk you can tolerate.

InnoDB I/O Configuration

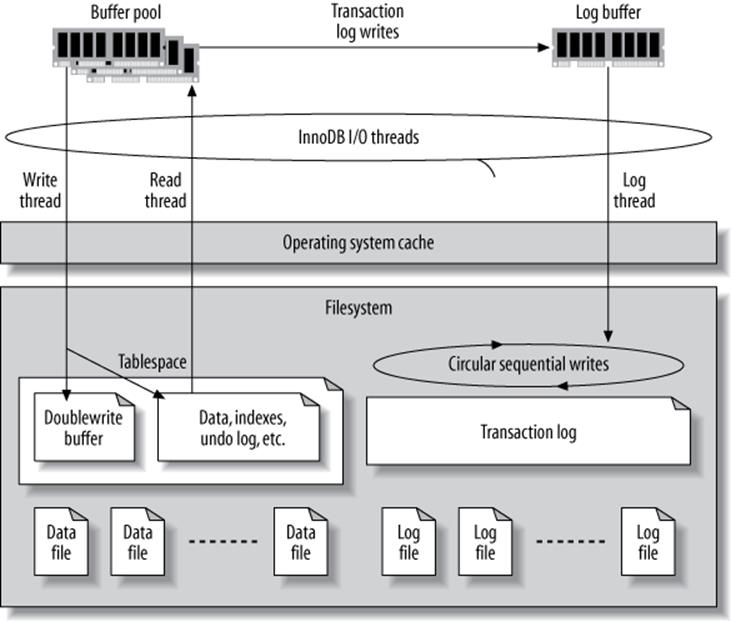

InnoDB permits you to control not only how it recovers, but also how it opens and flushes its data, which greatly affects recovery and overall performance. InnoDB’s recovery process is automatic and always runs when InnoDB starts, though you can influence what actions it takes. Leaving aside recovery and assuming nothing ever crashes or goes wrong, there’s still a lot to configure for InnoDB. It has a complex chain of buffers and files designed to increase performance and guarantee ACID properties, and each piece of the chain is configurable. Figure 8-1 illustrates these files and buffers.

A few of the most important things to change for normal usage are the InnoDB log file size, how InnoDB flushes its log buffer, and how InnoDB performs I/O.

The InnoDB transaction log

InnoDB uses its log to reduce the cost of committing transactions. Instead of flushing the buffer pool to disk when each transaction commits, it logs the transactions. The changes transactions make to data and indexes often map to random locations in the tablespace, so flushing these changes to disk would require random I/O. InnoDB assumes it’s using conventional disks, where random I/O is much more expensive than sequential I/O because of the time it takes to seek to the correct location on disk and wait for the desired part of the disk to rotate under the head.

Figure 8-1. InnoDB’s buffers and files

InnoDB uses its log to convert this random disk I/O into sequential I/O. Once the log is safely on disk, the transactions are permanent, even though the changes haven’t been written to the data files yet. If something bad happens (such as a power failure), InnoDB can replay the log and recover the committed transactions.

Of course, InnoDB does ultimately have to write the changes to the data files, because the log has a fixed size. It writes to the log in a circular fashion: when it reaches the end of the log, it wraps around to the beginning. It can’t overwrite a log record if the changes contained there haven’t been applied to the data files, because this would erase the only permanent record of the committed transaction.
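The wrap-around behavior can be illustrated with a toy model. This is a simplified sketch, not InnoDB's actual implementation: the class and method names are hypothetical, and it tracks only byte counts rather than real log records.

```python
class CircularLog:
    """Toy model of a fixed-size circular log (not InnoDB's real data structure)."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.written = 0  # total bytes ever appended to the log
        self.applied = 0  # total bytes whose changes have reached the data files

    def free_space(self):
        # Space holding records that are not yet applied cannot be reused.
        return self.capacity - (self.written - self.applied)

    def append(self, size):
        """Append a record; return False if it would overwrite unapplied records."""
        if size > self.free_space():
            return False
        self.written += size
        return True

    def write_offset(self):
        # Physical position in the log, wrapping around at the end.
        return self.written % self.capacity

    def apply_to_data_files(self, size):
        """Simulate flushing changes to the data files, which frees log space."""
        self.applied = min(self.written, self.applied + size)


log = CircularLog(capacity_bytes=100)
print(log.append(80))        # True
print(log.append(30))        # False: only 20 bytes are free
log.apply_to_data_files(50)  # flushing frees the oldest 50 bytes
print(log.append(30))        # True: the write wraps around
print(log.write_offset())    # 10
```

The rejected append corresponds to the situation in which writes must wait for changes to be applied to the data files before log space can be reused.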

InnoDB uses a background thread to flush the changes to the data files intelligently. This thread can group writes together and make the data writes sequential, for improved efficiency. In effect, the transaction log converts random data file I/O into mostly sequential log file and data file I/O. Moving flushes into the background makes queries complete more quickly and helps cushion the I/O system from spikes in the query load.

The overall log file size is controlled by innodb_log_file_size and innodb_log_files_in_group, and it’s very important for write performance. The total size is the sum of each file’s size. By default there are two 5 MB files, for a total of 10 MB. This is much too small for a high-performance workload. You need hundreds of megabytes, or even gigabytes, of log files.
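The total is simple arithmetic over the two settings, as in this sketch. The function name and the 256 MB example are hypothetical; only the default figures come from the text:

```python
MB = 1024 * 1024


def total_log_size(innodb_log_file_size, innodb_log_files_in_group):
    """Total InnoDB log capacity in bytes, assuming equally sized log files."""
    return innodb_log_file_size * innodb_log_files_in_group


print(total_log_size(5 * MB, 2) // MB)    # 10: the defaults described above
print(total_log_size(256 * MB, 2) // MB)  # 512: a hypothetical larger setting
```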

InnoDB uses multiple files as a single circular log. You usually don’t need to change the default number of logs, just the size of each log file. To change the log file size, shut down MySQL cleanly, move the old logs away, reconfigure, and restart. Be sure MySQL shuts down cleanly, or the log files will actually have entries that need to be applied to the data files! Watch the MySQL error log when you restart the server. After you’ve restarted successfully, you can delete the old log files.

Log file size and the log buffer

To determine the ideal size for your log files, you’ll have to weigh the overhead of routine data changes against the recovery time required in the event of a crash. If the log is too small, InnoDB will have to do more checkpoints, causing more log writes. In extreme cases, write queries might stall and have to wait for changes to be applied to the data files before there is room to write into the log. On the other hand, if the log is too large, InnoDB might have to do a lot of work when it recovers. This can greatly increase recovery time, although this process is much more efficient in newer MySQL versions.

Your data size and access patterns will influence the recovery time, too. Suppose you have a terabyte of data and 16 GB of buffer pool, and your total log size is 128 MB. If you have a lot of dirty pages (i.e., pages whose changes have not yet been flushed to the data files) in the buffer pool and they are uniformly spread across your terabyte of data, recovery after a crash might take a long time. InnoDB will have to scan through the log, examine the data files, and apply changes to the data files as needed. That’s a lot of reading and writing! On the other hand, if the changes are localized—say, if only a few hundred megabytes of data are updated frequently—recovery might be fast, even when your data and log files are huge. Recovery time also depends on the size of a typical modification, which is related to your average row length. Short rows let more modifications fit in the log, so InnoDB might need to replay more modifications on recovery.[116]

When InnoDB changes any data, it writes a record of the change into its log buffer, which it keeps in memory. InnoDB flushes the buffer to the log files on disk when the buffer gets full, when a transaction commits, or once per second—whichever comes first. Increasing the buffer size, which is 1 MB by default, can help reduce I/O if you have large transactions. The variable that controls the buffer size is called innodb_log_buffer_size.
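The three flush triggers can be expressed as a single condition. This is a simplified model with hypothetical names, not InnoDB's code, and the server's actual behavior at commit also depends on how log flushing is configured:

```python
MB = 1024 * 1024


def should_flush_log_buffer(bytes_buffered, buffer_size, committing, seconds_since_flush):
    """Return True if any of the three flush triggers described above has fired."""
    return (
        bytes_buffered >= buffer_size   # the buffer is full
        or committing                   # a transaction is committing
        or seconds_since_flush >= 1.0   # the once-per-second flush is due
    )


print(should_flush_log_buffer(512 * 1024, 1 * MB, False, 0.2))  # False
print(should_flush_log_buffer(1 * MB, 1 * MB, False, 0.2))      # True: buffer full
print(should_flush_log_buffer(10, 1 * MB, True, 0.0))           # True: commit
print(should_flush_log_buffer(10, 1 * MB, False, 1.0))          # True: one second elapsed
```

A large transaction fills the buffer before it commits, which is why a bigger buffer can reduce the number of flushes for such workloads.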

You usually don’t need to make the buffer very large. The recommended range is 1 to 8 MB, and this usually will be enough unless you write a lot of huge BLOB records. The log entries are very compact compared to InnoDB’s normal data. They are not page-based, so they don’t waste space storing whole pages at a time. InnoDB also makes log entries as short as possible. They are sometimes even stored as the function number and parameters of a C function!

There’s an additional circumstance where a larger value might be beneficial: when it can reduce contention during allocation of space in the buffer. When we’re configuring servers with a large amount of memory, we’ll sometimes allocate 32 to 128 MB of log buffer simply because spending such a relatively small amount of extra memory is not detrimental and it can help avoid pressure on a bottleneck. The bottleneck shows up as contention on the log buffer mutex when it’s a problem.