Magento Search Engine Optimization (2014)

Chapter 7. Technical Rewrites for Search Engines

In previous chapters, we have looked at some of the standard adjustments we can make to our .htaccess and robots.txt files in order to either speed up our website or completely block search engine access to our development site.

In this chapter, we'll be looking at a few crucial adjustments that we can make to these files in order to further enhance our protection against duplicate-content-related penalties and to reduce the number of unnecessary pages being cached by search engines.

The .htaccess and robots.txt files that we are editing in this chapter exist in the root directory of our Magento website.

In this chapter, we will be learning how to:

· Use advanced techniques in the .htaccess file to maintain URL consistency and redirect old URLs to their newer equivalents

· Improve our robots.txt file to disallow areas that should not be visible to search engines

· Use an observer to prevent duplicate content issues on filtered category pages

Additional .htaccess modifications

Apart from the in-built URL rewrite system in Magento, we can use the .htaccess file to perform complex rewrites on any URL that's requested through our website.

A key part of performing well in search engines is to provide consistent URLs. When we alter our categories/products/pages or even when we migrate from an older website to the Magento format, we must try to redirect users to the new URL path using 301 redirects.

A few common problems that we can resolve through modifying our .htaccess file are:

· Domain consistency: Ensuring that our domain is always displayed with or without the www prefix (depending on preference)

· Forcing the removal of index.php and /home from our URLs

· 301 redirecting old pages to their newer equivalents—both static pages and ones that once contained query strings

Maintaining a www or non-www domain prefix

It is important to choose whether we wish to publicly display our domain name with or without the www prefix. Websites such as twitter.com choose to remove it, whereas many other websites always display it.

Although neither version has an SEO advantage over the other, we must not allow both versions of our website to be accessible at the same time, as search engines treat them as two completely separate copies of every page.

The easiest way to resolve this is through the Magento administration panel by:

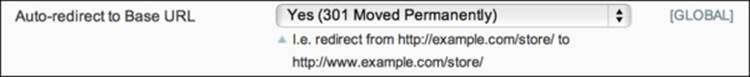

1. Navigating to System | Configuration | Web, and then within Url Options, we change Auto-redirect to Base URL to Yes (301 Moved Permanently) as shown in the following screenshot:

The same result can also be accomplished through the .htaccess file, and we should implement it there too, especially if we are running Magento CE 1.3 or our website contains other publicly accessible pages that are not part of the Magento platform.

To force the www prefix on our domain name, we first set our base URLs in the system configuration to include www, and then add the following code to our .htaccess file (replacing mymagento.com with our own domain name):

<IfModule mod_rewrite.c>
    Options +FollowSymLinks
    RewriteEngine on
    # Redirect any host other than www.mymagento.com (dots escaped so they match literally)
    RewriteCond %{HTTP_HOST} !^www\.mymagento\.com$ [NC]
    RewriteRule ^(.*)$ http://www.mymagento.com/$1 [R=301,L]
</IfModule>

To remove www from the URL instead, we must first change our base URLs in the system configuration so that they do not contain www, and then replace the preceding code with:

<IfModule mod_rewrite.c>
    Options +FollowSymLinks
    RewriteEngine on
    # Redirect any host other than mymagento.com (dots escaped so they match literally)
    RewriteCond %{HTTP_HOST} !^mymagento\.com$ [NC]
    RewriteRule ^(.*)$ http://mymagento.com/$1 [R=301,L]
</IfModule>

In the preceding example, we are telling the server what to do when the requested host name is not exactly mymagento.com. The RewriteCond line performs this check, and its [NC] flag makes the comparison case-insensitive. In that case, the server captures the entire requested path (.*) and 301-redirects it to the same path on our non-www domain, thereby removing the www prefix.

In short, RewriteCond tests a value (in this case, the requested host name), and the RewriteRule that follows performs the redirect only if all of its conditions pass.
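To make the condition's behavior concrete, the following Python sketch models the RewriteCond/RewriteRule pair outside Apache. It is an illustration only, not how mod_rewrite itself works. The function name canonical_host_redirect is hypothetical, and mymagento.com is the placeholder domain used throughout this chapter.

```python
from __future__ import annotations

import re
from typing import Optional


def canonical_host_redirect(host: str, path: str, canonical: str, query: str = "") -> Optional[str]:
    """Model of:  RewriteCond %{HTTP_HOST} !^<canonical>$ [NC]
                  RewriteRule ^(.*)$ http://<canonical>/$1 [R=301,L]

    Returns the 301 target URL, or None when the host is already canonical.
    """
    # [NC] means case-insensitive; re.escape makes the dots literal,
    # just as \. does in the .htaccess pattern.
    if re.fullmatch(re.escape(canonical), host, flags=re.IGNORECASE):
        return None
    # In .htaccess (per-directory) context the path reaches RewriteRule
    # without its leading slash, so ^(.*)$ captures e.g. "furniture.html".
    target = f"http://{canonical}/{path.lstrip('/')}"
    # With no "?" in the substitution, mod_rewrite keeps the original query string.
    return f"{target}?{query}" if query else target
```

Passing www.mymagento.com as canonical models the www-forcing variant instead. The escaped pattern also stops a host such as mymagentoxcom from slipping through, which the unescaped `.` would have allowed.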

Removing the /index.php/ path once and for all

In Chapter 1, Preparing and Configuring Your Magento Website, we looked at enabling Use Web Server Rewrites. By default, however, this only removes the /index.php/ path from our displayed URLs. It doesn't stop pages from being accessible through the old path (for example, http://www.mymagento.com/index.php/furniture.html). Our homepage also remains accessible at www.mymagento.com/index.php.

To resolve this, we can add a few simple lines to our .htaccess file, inside the <IfModule mod_rewrite.c> block after the RewriteEngine on line. The domain should be the www or non-www version that matches our chosen base URL:

# Remove /index.php/ from any URL except admin URLs
RewriteCond %{THE_REQUEST} ^.*/index\.php
RewriteCond %{THE_REQUEST} !^.*/index\.php/admin
RewriteRule ^index\.php/(.*)$ http://www.mymagento.com/$1 [R=301,L]

# Remove our duplicate homepage (/index.php)
RewriteCond %{THE_REQUEST} ^.*/index\.php
RewriteRule ^(.*)index\.php$ http://www.mymagento.com/$1 [R=301,L]

Note

We may notice that the Magento Connect downloader stops working when we add the preceding code. It is therefore recommended to comment out these lines using # whenever we wish to use Magento Connect, and to uncomment them once we have finished.

Also, if our store uses a custom admin path, we must replace admin in the second RewriteCond line (the one ending in /index.php/admin) with that path. Without this exclusion, admin requests would be redirected, and the Magento admin may not save data correctly.
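To check which requests the two index.php rules catch before touching a live server, the following Python sketch models them. It is an illustration, not Apache's implementation. THE_REQUEST is the raw request line the browser sent (for example, GET /index.php/furniture.html HTTP/1.1). The function name index_php_redirect and the admin_path default are hypothetical.

```python
from __future__ import annotations

import re
from typing import Optional

BASE = "http://www.mymagento.com"  # placeholder domain from the text


def index_php_redirect(request_line: str, admin_path: str = "admin") -> Optional[str]:
    """Model the two index.php rules; return the 301 target or None."""
    _method, target, _protocol = request_line.split(" ", 2)
    path, _, query = target.partition("?")
    path = path.lstrip("/")  # .htaccess patterns see the path without "/"

    # Shared condition: the original request line mentioned /index.php.
    if not re.match(r"^.*/index\.php", request_line):
        return None

    def with_query(url: str) -> str:
        # No "?" in the substitution, so the original query string is kept.
        return f"{url}?{query}" if query else url

    # Rule 1: /index.php/<anything> -> /<anything>, unless it is an admin URL.
    is_admin = re.match(rf"^.*/index\.php/{re.escape(admin_path)}", request_line)
    m = re.match(r"^index\.php/(.*)$", path)
    if m and not is_admin:
        return with_query(f"{BASE}/{m.group(1)}")

    # Rule 2: <prefix>index.php -> /<prefix>, e.g. the duplicate homepage.
    m = re.match(r"^(.*)index\.php$", path)
    if m:
        return with_query(f"{BASE}/{m.group(1)}")
    return None
```

In this model, index_php_redirect("GET /downloader/index.php HTTP/1.1") returns http://www.mymagento.com/downloader/. This is one plausible reason why the Magento Connect downloader misbehaves while these rules are active.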

Redirecting /home to our domain

Apart from being accessible through www.mymagento.com/index.php, the home page is also available by default at www.mymagento.com/home. This is because Magento uses a CMS page as the home page (the /home portion of the URL may differ depending on the CMS page used).

To redirect /home, we can simply add a standard 301 redirect to our .htaccess file (replacing www.mymagento.com with our own domain name):

redirect 301 /home http://www.mymagento.com

Redirecting older pages

Similar to redirecting the /home page, we can also redirect any other page to another URL. This is useful if we have an existing website where our old URLs have now been changed.

In these cases, the two most common types of redirect are Redirect 301 directives (for plain paths) and rewrite conditions (for URLs that contain query-string parameters).

In order to set up a standard 301 redirect from an old page to the new page, we would simply add the following to the end of our .htaccess file:

redirect 301 /my-old-page.html http://www.mymagento.com/my-new-page.html
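When migrating a whole site, writing these lines by hand gets error-prone. The following Python sketch shows one way to generate them from a mapping of old paths to new paths. The function name redirect_lines and the example paths are hypothetical. The check on "?" reflects the fact that Redirect matches only the URL path, never the query string.

```python
from __future__ import annotations

from typing import Dict, List


def redirect_lines(mapping: Dict[str, str], domain: str) -> List[str]:
    """Build one 'Redirect 301' line per old path.

    mapping: old path on the old site -> new path on the Magento site.
    domain:  canonical host, e.g. "www.mymagento.com" (placeholder).
    """
    lines = []
    # Sort by old path so the generated block is stable between runs.
    for old, new in sorted(mapping.items()):
        if "?" in old:
            # Redirect never sees the query string, so this line would never
            # fire; such URLs need a RewriteCond on %{QUERY_STRING} instead.
            raise ValueError(f"query strings need mod_rewrite: {old!r}")
        lines.append(f"Redirect 301 {old} http://{domain}/{new.lstrip('/')}")
    return lines
```

For example, redirect_lines({"/my-old-page.html": "/my-new-page.html"}, "www.mymagento.com") produces exactly the line shown above, with the directive capitalized as Redirect (Apache directive names are case-insensitive).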

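When we wish to redirect a URL that used to contain a parameter (something that was quite common in older e-commerce systems), we need a different type of redirect, because the Redirect directive matches only the URL path and ignores the query string.

In the following example, we redirect an old URL with a specific query string to our new product page. This would work for a URL such as http://www.mymagento.com/my-old-product-page.php?product_id=35. As with the earlier rewrite rules, these lines belong inside the <IfModule mod_rewrite.c> block, before Magento's final RewriteRule .* index.php [L] line.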

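# Only redirect the old product script when product_id=35 is requested;
# the trailing ? on the target drops the old query string
RewriteCond %{QUERY_STRING} ^product_id=35$ [NC]
RewriteRule ^my-old-product-page\.php$ http://www.mymagento.com/my-new-product.html? [R=301,L]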
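If an old catalog used many product IDs, the condition/rule pairs can be generated rather than typed. The following Python sketch assumes that all old product URLs share one script name and one parameter. The function name query_string_rules and the example IDs are hypothetical. The pattern is anchored to the old script name, so only that page is redirected rather than any URL carrying the same query string.

```python
from __future__ import annotations

from typing import Dict, List


def query_string_rules(old_script: str, param: str, mapping: Dict[int, str], domain: str) -> List[str]:
    """Emit one RewriteCond/RewriteRule pair per old ID."""
    # Per-directory patterns omit the leading slash; escape dots so they
    # match literally (assumes no other regex metacharacters in the path).
    script = old_script.lstrip("/").replace(".", r"\.")
    lines = []
    for old_id, new_path in sorted(mapping.items()):
        # A RewriteCond applies only to the RewriteRule directly after it,
        # so each condition and rule are emitted together as a pair.
        lines.append(f"RewriteCond %{{QUERY_STRING}} ^{param}={old_id}$ [NC]")
        # The trailing "?" drops the old query string from the redirect target.
        lines.append(
            f"RewriteRule ^{script}$ http://{domain}/{new_path.lstrip('/')}? [R=301,L]"
        )
    return lines
```

Calling query_string_rules("/my-old-product-page.php", "product_id", {35: "my-new-product.html"}, "www.mymagento.com") reproduces the two lines above.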

Note

Please note that editing the .htaccess file is always risky; an improper configuration will cause the server to return an error instead of our pages. It is always recommended that .htaccess modifications be implemented on a test environment first.

For a fantastic rundown of extra modifications, including robot-specific blocking, please see the following .htaccess boilerplate (github.com) goo.gl/Gn5i35.

Improving our robots.txt file

As mentioned in previous chapters, the robots.txt file should only be used to let search engines know which pages/paths on the website we wish or do not wish to be crawled. Ideally, we would only want our main pages to be crawled and cached by search engines (products, categories, and CMS pages).

The robots.txt file should be updated whenever we create a page that we do not wish to be crawled; however, the following list is a good place to start and will help to reduce the number of unnecessary pages cached by search engines.

Inside the robots.txt file, we would add the following options (one per line, under the User-agent: * line):

User-agent: *
# Stop our checkout pages from being crawled
Disallow: /checkout/
# Disallow our product review pages (especially if we also show reviews directly on our product pages)
Disallow: /review/
# Stop our search-results pages from being crawled
Disallow: /catalogsearch/
# A further duplicate route where products can be viewed by passing their ID
Disallow: /catalog/product/view/
# Similar to the preceding rule, but for categories
Disallow: /catalog/category/view/

Note

For a longer list of Magento pages that we may wish to disallow within the robots.txt file, please see the following link (github.com) goo.gl/utyJi0.
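Before uploading a new robots.txt file, it can help to check which URLs it actually blocks. The following Python sketch uses the standard library's urllib.robotparser for this check. That parser implements simple path-prefix matching, which is all these rules need. The helper name is_crawlable and the sample URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /review/
Disallow: /catalogsearch/
Disallow: /catalog/product/view/
Disallow: /catalog/category/view/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str) -> bool:
    """True if a generic crawler ("*") may fetch the URL under these rules."""
    return parser.can_fetch("*", url)
```

For example, is_crawlable("http://www.mymagento.com/checkout/onepage/") is False, while a normal category URL such as /furniture.html stays crawlable.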

Resolving layered-navigation duplicate content

One of the most common duplicate-content-related issues in Magento comes in the form of layered-navigation-enabled category pages. When we set our categories to be "anchored" (see Chapter 2, Product and Category Page Optimization), we allow them to be filtered by certain product attributes.

Magento uses parameters within the URL in order to filter the product collection. The drawback is that each filtered URL can be cached as a separate page, leading to thousands upon thousands of duplicate category pages.

In an ideal world, the canonical link element would take care of this problem, but sometimes our duplicate pages may still be cached by search engines (especially if the website had been live before the canonical elements were turned on).

One of the most effective solutions to this problem is a custom observer: a method that Magento calls whenever a particular event is dispatched. Ours will perform the following tasks:
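A little arithmetic shows how quickly these filtered URLs add up. The following Python sketch assumes single-select layered navigation, where each filterable attribute is either unset or set to exactly one option. It ignores sort-order, pagination, and parameter-ordering variants, so the result is a lower bound. The attribute counts in the example (8 colours, 5 sizes, 12 manufacturers) are hypothetical.

```python
from math import prod
from typing import Sequence


def filtered_url_count(options_per_attribute: Sequence[int]) -> int:
    """Count distinct filtered URLs for one category page (lower bound)."""
    # Each attribute has (options + 1) states: unset, or one of its options.
    # Subtract 1 to exclude the unfiltered category page itself.
    return prod(n + 1 for n in options_per_attribute) - 1
```

With three filterable attributes of 8, 5, and 12 options, filtered_url_count([8, 5, 12]) gives 9 × 6 × 13 − 1 = 701 filtered URLs for a single category. Five attributes with ten options each already give 161,050.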

· Check to see whether we are viewing a category page

· Check to see whether filters have been activated

· Dynamically modify our <meta name="robots"> tag to NOINDEX,FOLLOW

In order to do this, we need to create a very simple module consisting of a config.xml file, an Observer.php file, and a module declaration file.

Due to space limitations within this book, we cannot go into detail about how to create a Magento extension from scratch, so instead we'll assume that a module has already been created and that we are free to edit our config.xml and Observer.php model files.

Note

For a quick tutorial on setting up Magento extensions, please visit the following link (magento.com) goo.gl/uqDsz3.

Also, please note that the following functionality is available for free within the Creare SEO extension covered in Chapter 8, Purpose-built Magento Extensions for SEO/CRO.

Assuming that our app/code/[codePool]/[namespace]/[module]/etc/config.xml file has been created and set up, we need to add the following code (substituting our own module name for [module]):

<frontend>
    <events>
        <controller_action_layout_generate_xml_before>
            <observers>
                <[module]>
                    <type>singleton</type>
                    <class>[module]/observer</class><!-- replace with your module name -->
                    <method>changeRobots</method>
                </[module]>
            </observers>
        </controller_action_layout_generate_xml_before>
    </events>
</frontend>

The preceding code registers an observer for the controller_action_layout_generate_xml_before event on the customer-facing (frontend) side of our Magento installation. This event fires after the page's layout updates have been loaded but before Magento generates the final layout XML, which allows us to insert our own XML dynamically.

To do this, we need to create the method named in our config.xml file, changeRobots, inside our observer class located in the app/code/[codePool]/[namespace]/[module]/Model/Observer.php file.
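The class alias [module]/observer in config.xml is what connects the event to this file. The following Python sketch is a simplified model of how Magento 1 turns such an alias into a class name and a file path. It ignores class rewrites and other fallbacks. The model_groups dictionary stands in for the module's <global><models> declaration, and the names mymodule and Mynamespace_Mymodule_Model are hypothetical.

```python
from __future__ import annotations

from typing import Dict, Tuple


def resolve_model_alias(alias: str, model_groups: Dict[str, str]) -> Tuple[str, str]:
    """Map an alias such as "mymodule/observer" to (class name, path under app/code/<codePool>/)."""
    group, _, rest = alias.partition("/")
    prefix = model_groups[group]  # e.g. "Mynamespace_Mymodule_Model"
    # Like PHP's ucwords() on "_"-separated words: upper-case the first letter
    # of each segment and leave the rest untouched ("observer" -> "Observer").
    suffix = "_".join(part[:1].upper() + part[1:] for part in rest.split("_"))
    class_name = f"{prefix}_{suffix}"
    # The autoloader turns underscores into directory separators.
    path = class_name.replace("_", "/") + ".php"
    return class_name, path
```

With {"mymodule": "Mynamespace_Mymodule_Model"}, the alias mymodule/observer resolves to Mynamespace_Mymodule_Model_Observer in Mynamespace/Mymodule/Model/Observer.php. That path matches the file location described above.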

Inside our Observer.php file, we will add the following method:

public function changeRobots(Varien_Event_Observer $observer)
{
    $action = $observer->getEvent()->getAction();

    // Only act on category pages
    if ($action->getFullActionName() == 'catalog_category_view') {
        $uri = $action->getRequest()->getRequestUri();

        // A "?" in the request URI means parameters (e.g. layered-navigation filters) are present
        if (strpos($uri, '?') !== false) {
            $layout = $observer->getEvent()->getLayout();
            $layout->getUpdate()->addUpdate(
                '<reference name="head"><action method="setRobots"><value>NOINDEX,FOLLOW</value></action></reference>'
            );
            $layout->generateXml();
        }
    }

    return $this;
}

In the preceding code, we first check that the current page uses the full action name catalog_category_view; that is, we are viewing a category page. We then check for a question mark in the request URI (indicating that filters have been activated). If a ? exists, we add our own XML to the head block, setting the robots meta tag to NOINDEX,FOLLOW. Note that this simple check matches any query string, so pagination (?p=2) and sort-order parameters will also trigger it.

With this simple implementation, we will add the NOINDEX directive to our parameter-based category URLs, essentially asking search engines to remove these URLs from their own indexes.
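The decision the observer makes can be checked in isolation. The following Python sketch mirrors the PHP logic: same action name, same literal "?" test, same layout XML string. It does not call any Magento API, and the function name robots_layout_update is hypothetical.

```python
from __future__ import annotations

from typing import Optional

ROBOTS_UPDATE = (
    '<reference name="head"><action method="setRobots">'
    "<value>NOINDEX,FOLLOW</value></action></reference>"
)


def robots_layout_update(full_action_name: str, request_uri: str) -> Optional[str]:
    """Return the layout XML the observer would add, or None."""
    if full_action_name != "catalog_category_view":
        return None  # only category pages are affected
    if "?" not in request_uri:
        return None  # no parameters, so the page keeps its default robots value
    return ROBOTS_UPDATE
```

As the model shows, /furniture.html?p=2 is treated the same way as a filtered URL. If paginated pages should stay indexable, the "?" test would need to look at specific parameters instead.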

Note

For more information on the impact of category filters and duplicate content issues, please visit the following link (creare.co.uk) goo.gl/PfkPAQ.

Summary

In this chapter, we have looked at several ways in which we can keep the URLs on our website consistent, especially the home page. Using the .htaccess file, we can also redirect old URLs to their new counterparts.

We've also looked at how we can use the robots.txt file to stop search engines from crawling unnecessary pages and so avoid duplicate-content-related issues.

Finally, we've seen how category pages can be duplicated by active layered-navigation filters and how we can resolve this with a custom observer that dynamically changes our meta information.

In the next chapter, we will be looking at some of the best Magento extensions available that are specifically designed with search engine optimization or conversion rate optimization in mind.