Oracle PL/SQL Programming (2014)

Part VI. Advanced PL/SQL Topics

Chapter 24. PL/SQL Architecture

In my experience, relatively few PL/SQL developers exhibit a burning curiosity about the underlying architecture of the PL/SQL language. As a community, we seem to mostly be content with learning the basic syntax of PL/SQL, writing our programs, and going home to spend quality time with family and friends. That’s a very healthy perspective!

I suggest, however, that all PL/SQL developers would benefit from a basic understanding of this architecture. Not only will you be able to write programs that utilize memory more efficiently, but you will be able to configure your programs and overall applications to behave in ways that you might have thought were impossible.

You will find in this chapter answers to the following questions, and many more:

§ How does the PL/SQL runtime engine use memory, and what can I do to manage how much memory is used?

§ Should I be running natively compiled code or stick with the default interpreted code? What does native compilation mean, anyway?

§ Why do my programs become INVALID, and how can I recompile them back to health?

§ I have duplicated tables in 20 different schemas. Do I really have to maintain 20 copies of my code, in each of those schemas?

§ And... who or what is DIANA?

DIANA

In earlier editions of this book, the title of this chapter was “Inside PL/SQL.” I decided to change the name to “PL/SQL Architecture” for two reasons:

§ Most of what was in the chapter was not truly in any sense “internals.” In fact, it is very difficult for PL/SQL developers (or anyone outside of Oracle headquarters) to get information about “internal” aspects of PL/SQL.

§ I don’t want to encourage you to try to uncover otherwise hidden aspects of PL/SQL. Developers, I believe, benefit most from learning the syntax of the language, not from trying to “game” or trick the PL/SQL compiler into doing something it wouldn’t do of its own volition.

Having said that, a very common question that touches on the internal structures of the PL/SQL compiler is: “Who or what is DIANA?”

Asking a PL/SQL programmer “Who is Diana?” is like asking a San Francisco resident “Who’s Bart?” The answer to both questions is not so much a who as a what. For the San Francisco Bay Area resident, BART is the Bay Area Rapid Transit system (the subway). For the PL/SQL programmer, DIANA is the Descriptive Intermediate Attributed Notation for Ada and is part of PL/SQL’s heritage as an Ada-derived language. In some Ada compilers, the output of the first part of the compilation is a DIANA. Likewise, PL/SQL was originally designed to output a DIANA in the first part of the compilation process.

As a PL/SQL programmer, however, you never really see or interact with your program’s DIANA. Oracle Corporation may decide, like some Ada compiler publishers, that PL/SQL has outgrown DIANA, and another mechanism may be used. Changes in the internals of the database happen. For example, segment space management used to use free lists, but now uses bitmaps. So, while you might get an error if your DIANA grows too large (how embarrassing—your DIANA is showing!), you don’t really do anything with DIANA, and if she (it) goes away, you probably won’t even know.

Knowing about DIANA might win you a T-shirt in a trivia contest at Oracle OpenWorld, but it probably won’t improve your programming skills, unless you are programming the PL/SQL compiler.

So... enough with internals. Let’s get on with our discussion of critical aspects of the PL/SQL architecture.

How Oracle Executes PL/SQL Code

Before I explore how an Oracle database executes PL/SQL programs, I first need to define a couple of terms of art:[25]

PL/SQL runtime engine (a.k.a. PL/SQL virtual machine)

The PL/SQL virtual machine (PVM) is the database component that executes a PL/SQL program’s bytecode. In this virtual machine, the bytecode of a PL/SQL program is translated to machine code that makes calls to the database server and returns results to the calling environment. The PVM itself is written in C. Historically, Oracle has included a PVM in some client-side tools such as Oracle Forms, where the runtime engine opens a session to a remote database, communicating with the SQL engine over a networking protocol.

Database session

For most (server-side) PL/SQL, the database session is the process and memory space associated with an authenticated user connection (note: with shared server, formerly known as the multithreaded server or MTS, sessions can share the same process and the same session can use different processes). Each session is a logical entity in the database instance memory that represents the state of a current user logged into that database. The sessions connected to a database are visible through the view V$SESSION. Oracle maintains different kinds of memory for a session, including the process global area (PGA), user global area (UGA), and call global area (CGA). These are discussed later in the chapter.
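If you want to look at your own session, a query along the following lines is one way to find it. This is a minimal sketch, assuming your account has been granted SELECT access on V$SESSION (many application accounts have not); the choice of columns is mine, for illustration only.

```sql
-- Show the V$SESSION row for the session running this query.
-- SYS_CONTEXT ('USERENV', 'SID') returns the caller's session ID.
SELECT sid, serial#, username, status, program
  FROM v$session
 WHERE sid = SYS_CONTEXT ('USERENV', 'SID');
```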

To put these terms into context, let’s take a look at several variations on running a trivial program from a very common frontend, SQL*Plus. This is a good representative of a session-oriented tool that gives you direct access to the PL/SQL environment inside the database server. (I introduced SQL*Plus and showed how to use it with PL/SQL back in Chapter 2.) Of course, you may be calling the server from other tools, such as Oracle’s other client-side tools or even a procedural language such as Perl, C, or Java. But don’t worry: processing on the server side is relatively independent of the client environment.

PL/SQL execution launched directly from SQL*Plus always involves a top-level anonymous block. While you may know that the SQL*Plus EXECUTE command converts the call to an anonymous block, did you know that SQL’s CALL statement uses a (simplified) kind of anonymous block? Actually, until Oracle9i Database’s direct invocation of PL/SQL from SQL, all PL/SQL invocations from SQL used anonymous blocks.
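To make the three invocation styles concrete, here is a small sketch that you could try from a SQL*Plus prompt. It assumes SET SERVEROUTPUT ON has been issued so that the output is displayed; the choice of DBMS_OUTPUT.PUT_LINE as the program to call is mine.

```sql
-- 1. SQL*Plus shorthand: SQL*Plus wraps the call in BEGIN ... END; before sending it
EXECUTE DBMS_OUTPUT.PUT_LINE ('Hello')

-- 2. The equivalent anonymous block, written out by hand
BEGIN
   DBMS_OUTPUT.PUT_LINE ('Hello');
END;
/

-- 3. The SQL CALL statement
CALL DBMS_OUTPUT.PUT_LINE ('Hello');
```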

An Example

Let’s begin with a look at the simplest possible anonymous block:

BEGIN

NULL;

END;

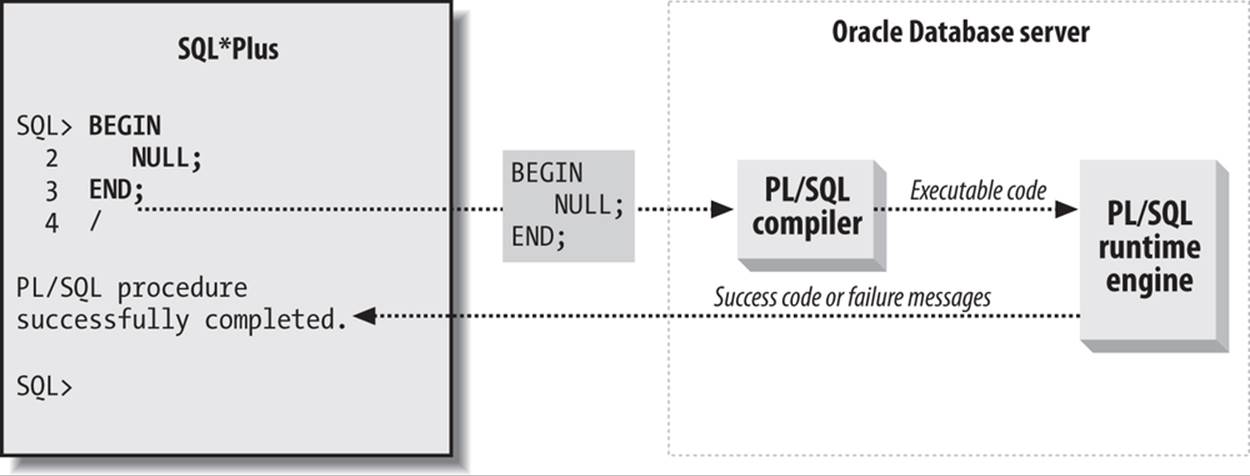

and find out just what happens when you send this block to the database server (Figure 24-1).

Figure 24-1. Execution of a trivial anonymous block

Let’s step through the operations shown in this figure:

1. Starting on the left side of Figure 24-1, the user composes the source code for the block and then gives SQL*Plus the go-ahead command (a slash). As the figure shows, SQL*Plus sends the entire code block, exclusive of the slash, to the server. This transmission occurs over whatever connection the session has established (for example, Oracle Net or interprocess communication).

2. Next, the PL/SQL compiler tries to convert this anonymous block to bytecode.[26] A first phase is to check the syntax to ensure that the program adheres to the grammar of the language. In this simple case, there are no identifiers to figure out, only language keywords. If compilation succeeds, the database puts the block’s compiled form (the bytecode) into a shared memory area; if it fails, the compiler will return error messages to the SQL*Plus session.

3. Finally, the PVM interprets the bytecode and ultimately returns a success or failure code to the SQL*Plus session.

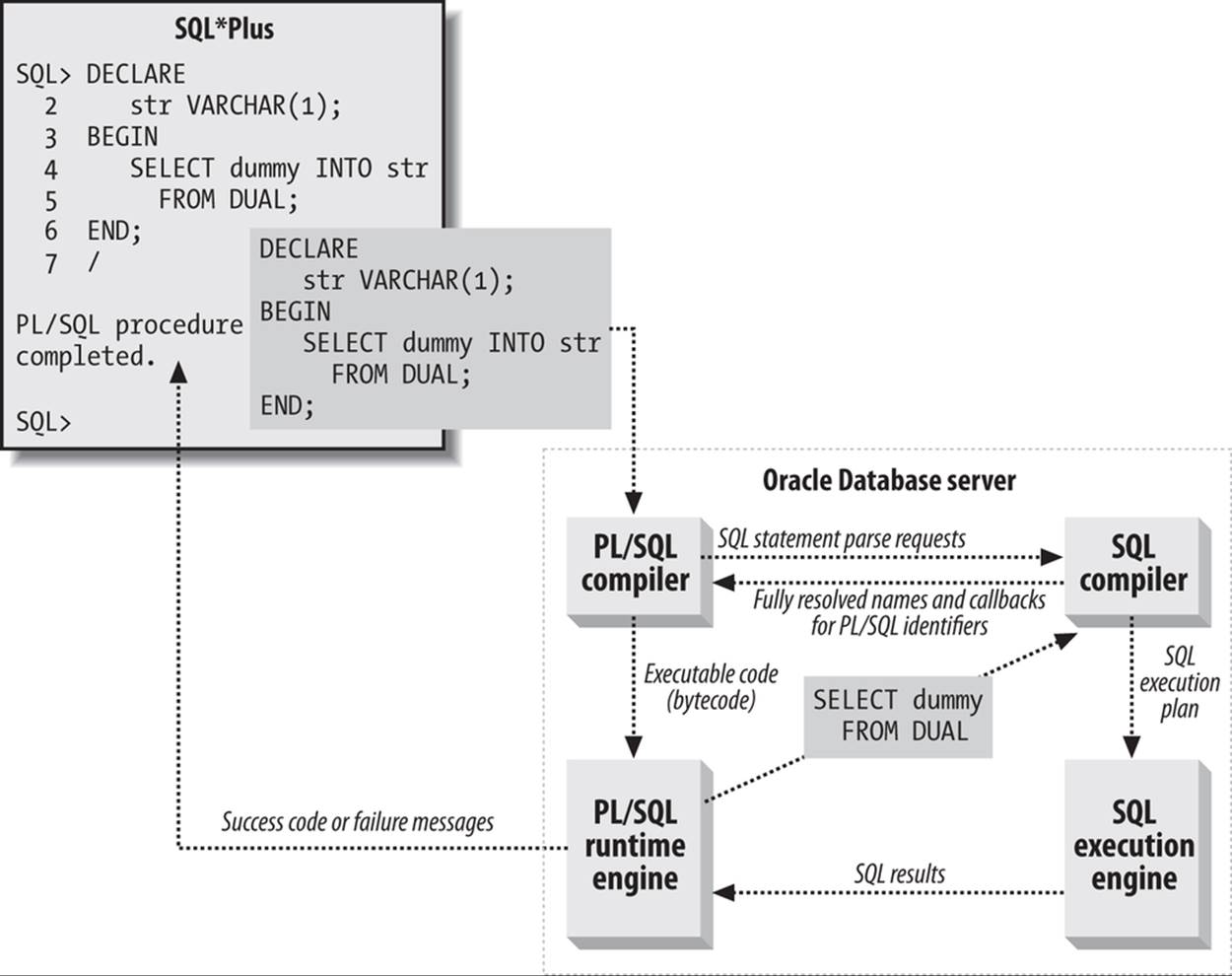

Let’s add an embedded SQL query statement into the anonymous block and see how that changes the picture. Figure 24-2 introduces some of the SQL-related elements of the database server.

Figure 24-2. Execution of an anonymous block that contains SQL

This example fetches a column value from the well-known table DUAL.[27]
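The block in the figure is not reproduced in the text, so here is a minimal sketch of what such a block might look like. The variable name l_dummy is my own; DUAL and its single DUMMY column are standard.

```sql
DECLARE
   l_dummy   VARCHAR2 (1);   -- hypothetical local variable to receive the value
BEGIN
   -- The embedded SELECT is handed off to the SQL engine
   SELECT dummy
     INTO l_dummy
     FROM dual;

   DBMS_OUTPUT.PUT_LINE (l_dummy);
END;
/
```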

After checking that the PL/SQL portions of the code adhere to the language’s syntax, the PL/SQL compiler communicates with the SQL compiler to hand off any embedded SQL for execution. Likewise, PL/SQL programs called from SQL statements cause the SQL compiler to hand off the PL/SQL calls to the PL/SQL compiler. The SQL parser will resolve any expressions, look for opportunities to use the function result cache (starting with Oracle Database 11g), execute semantic and syntax checks, perform name resolution, and determine an optimal execution plan. These steps are all part of the parse phase of the SQL execution and precede any substitution of bind variables and the execution and fetch of the SQL statement.

While PL/SQL shares a SQL compiler with the database, this does not mean that every SQL function is available in PL/SQL. For example, SQL supports the NVL2 function:

SELECT NVL2(NULL, 1, 2) FROM DUAL;

But attempting to use NVL2 directly in PL/SQL results in a PLS-00201: identifier ‘NVL2’ must be declared error:

SQL> EXEC DBMS_OUTPUT.PUT_LINE(NVL2(NULL, 1, 2));

BEGIN DBMS_OUTPUT.PUT_LINE (NVL2(NULL, 1, 2)); END;

*

ERROR at line 1:

ORA-06550: line 1, column 28:

PLS-00201: identifier 'NVL2' must be declared

ORA-06550: line 1, column 7:

PL/SQL: Statement ignored
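If you need NVL2-style logic inside PL/SQL, two common workarounds are to push the call into an embedded SQL statement or to rewrite it as a CASE expression. The following is a sketch only; the variable names are hypothetical.

```sql
DECLARE
   l_input    VARCHAR2 (10) := NULL;   -- hypothetical value to test
   l_result   PLS_INTEGER;
BEGIN
   -- Workaround 1: let the SQL engine evaluate NVL2
   SELECT NVL2 (l_input, 1, 2)
     INTO l_result
     FROM dual;

   DBMS_OUTPUT.PUT_LINE (l_result);

   -- Workaround 2: the same logic as a native PL/SQL CASE expression
   l_result := CASE WHEN l_input IS NOT NULL THEN 1 ELSE 2 END;

   DBMS_OUTPUT.PUT_LINE (l_result);
END;
/
```

The first form costs a context switch to the SQL engine, so the CASE expression is usually the lighter choice when no other SQL is involved.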

When a PL/SQL program is compiled, embedded SQL statements are modified slightly such that INTO clauses are removed, local program variables are replaced with bind variables, comments are removed, and many of the identifiers in the statement are uppercased (including keywords, column names, table names, etc., but not hints and literals). For example, if myvar is a local program variable, PL/SQL will change this:

select dummy into str from dual where dummy = myvar

into something like this:

SELECT DUMMY FROM DUAL WHERE DUMMY = :B1
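One way to observe this rewriting for yourself is to run the PL/SQL block and then look for the normalized statement in the shared SQL area. This is a rough sketch, assuming you have SELECT access on V$SQL and that the statement has not yet aged out of the shared pool; the exact text you find may differ by database version.

```sql
-- Look for the normalized form of the embedded query
SELECT sql_id, sql_text
  FROM v$sql
 WHERE sql_text LIKE 'SELECT DUMMY FROM DUAL WHERE DUMMY = :B%';
```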

There are two other kinds of callouts you can make from PL/SQL:

Java stored procedures

The default database server installation includes not just a PL/SQL virtual machine but also a Java virtual machine. You can write a PL/SQL call spec whose logic is implemented as a static method of a Java class. For details and examples, see Chapter 27 on the book’s website.

External procedures

You can also implement the executable portion of a PL/SQL subprogram in custom C code, and at runtime the database will run your code in a separate process and memory space from the main database server. You are responsible for backing up these binaries and making sure each RAC node has a copy. Chapter 28 discusses external procedures and is also available on the book’s website.

You can learn more about the runtime architecture of these two approaches by consulting their respective chapters.

Compiler Limits

Large PL/SQL programs may encounter the server error PLS-00123: Program too large. This means that the compiler bumped into the maximum allowed number of “nodes” in the parse tree. The normal workaround for this error is to split the program into several smaller programs or reengineer it (for example, to use a temporary table instead of 10,000 parameters). It’s difficult to predict how many nodes a program will need because nodes don’t directly correspond to anything easily measurable, such as tokens or lines of code.

Oracle advises that a “typical” stored program generate four parse tree nodes per line of code; this equates to the approximate upper limits specified in the following table.

| PL/SQL program type | Upper limit (estimated) |
| --- | --- |
| Package and type bodies; standalone functions and procedures | 256 MB |
| Signatures (headers) of standalone functions and procedures | 128 KB |
| Package and type specifications; anonymous blocks | 128 KB |

These are only estimates, though, and there can be a fair amount of variance in either direction.
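If you are worried that a program unit is getting close to these limits, the USER_OBJECT_SIZE data dictionary view offers a rough gauge. The sketch below lists the largest units in the current schema; note that the sizes are reported in bytes, not parse tree nodes, so treat them as a relative indicator rather than a precise measure.

```sql
-- List stored program units in the current schema, largest parsed form first
SELECT name, type, source_size, parsed_size, code_size
  FROM user_object_size
 WHERE type IN ('PACKAGE', 'PACKAGE BODY', 'PROCEDURE',
                'FUNCTION', 'TYPE', 'TYPE BODY')
 ORDER BY parsed_size DESC;
```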

Other documented hard limits in the PL/SQL compiler include those in the following table.

| PL/SQL elements | Upper limit (estimated) |
| --- | --- |
| Levels of block nesting | 255 |
| Parameters you can pass to a procedure or function | 65,536 |
| Levels of record nesting | 64 |
| Objects referenced in a program unit | 65,536 |
| Number of exception handlers in one program | 65,536 |
| Precision of a NUMBER (digits) | 38 |
| Size of a VARCHAR2 (bytes) | 32,767 |

Few of these are likely to cause a problem, but you can find a complete list of them in Oracle’s official PL/SQL documentation, the PL/SQL Language Reference.

The Default Packages of PL/SQL

A true object-oriented language like Java has a root class (in Java it is called Object, not surprisingly) from which all other classes are derived. PL/SQL is, officially, an object-relational language, but at its core it is a relational, procedural programming language, and it has its own “root” package named STANDARD.

The packages you build are not derived from STANDARD, but almost every program you write will depend on and use this package. It is, in fact, one of the two default packages of PL/SQL, the other being DBMS_STANDARD.

To best understand the role that these packages play in your programming environment, it is worth traveling back in time to the late 1980s, before the days of Oracle7 and SQL*Forms 3, before Oracle PL/SQL even existed. Oracle had discovered that while SQL was a wonderful language, it couldn’t do everything. Its customers found themselves writing C programs that executed the SQL statements, but those C programs had to be modified to run on each different operating system.

Oracle decided that it would create a programming language that could execute SQL statements natively and be portable across all operating systems on which the Oracle database was installed. The company also decided that rather than come up with a brand-new language on its own, it would evaluate existing languages and see if any of them could serve as the model for what became PL/SQL.

In the end, Oracle chose Ada as that model. Ada was originally designed for use by the US Department of Defense, and was named after Ada Lovelace, an early and widely respected software programming pioneer. Packages are a construct adopted from Ada. In the Ada language, you can specify a “default package” in any given program unit. When a package is the default, you do not have to qualify references to elements in the package with the package_name dot syntax, as in my_package.call_procedure.

When Oracle designed PL/SQL, it kept the idea of a default package but changed the way it is applied. We (users of PL/SQL) are not allowed to specify a default package in a program unit. Instead, there are just two default packages in PL/SQL, STANDARD and DBMS_STANDARD. They are defaults for the entire language, not for any specific program unit.

You can (and almost always will) reference elements in either of these packages without using the package name as a dot-qualified prefix. Let’s now explore how Oracle uses the STANDARD package (and to a lesser extent, DBMS_STANDARD) to, as stated in the Oracle PL/SQL User Guide, “define the PL/SQL environment.”

STANDARD declares a set of types, exceptions, and subprograms that are automatically available to all PL/SQL programs and would be considered (mistakenly) by many PL/SQL developers to be “reserved words” in the language. When compiling your code, Oracle must resolve all unqualified identifiers. It first checks to see if an element with that name is declared in the current scope. If not, it then checks to see if there is an element with that name defined in STANDARD or DBMS_STANDARD. If a match is found for each identifier in your program, the code can be compiled (assuming there are no syntax errors).

To understand the role of STANDARD, consider the following (very strange-looking) block of PL/SQL code. What do you think will happen when I execute this block?

/* File on web: standard_demo.sql */

1 DECLARE

2 SUBTYPE DATE IS NUMBER;

3 VARCHAR2 DATE := 11111;

4 TO_CHAR PLS_INTEGER;

5 NO_DATA_FOUND EXCEPTION;

6 BEGIN

7 SELECT 1 INTO TO_CHAR

8 FROM SYS.DUAL WHERE 1 = 2;

9 EXCEPTION

10 WHEN NO_DATA_FOUND

11 THEN

12 DBMS_OUTPUT.put_line ('Trapped!');

13 END;

Most PL/SQL developers will say one of two things: “This block won’t even compile,” or “It will display the word ‘Trapped!’ since 1 is never equal to 2.”

In fact, the block will compile, but when you run it you will see an unhandled NO_DATA_FOUND exception:

ORA-01403: no data found

ORA-06512: at line 7

Now isn’t that odd? NO_DATA_FOUND is the only exception I am actually handling, so how can it escape unhandled? Ah, but the question is: which NO_DATA_FOUND am I handling? You see, in this block, I have declared my own exception named NO_DATA_FOUND. This name is not a reserved word in the PL/SQL language (in contrast, BEGIN is a reserved word—you cannot name a variable “BEGIN”). Instead, it is an exception that is defined in the specification of the STANDARD package, as follows:

NO_DATA_FOUND exception;

PRAGMA EXCEPTION_INIT(NO_DATA_FOUND, 100);

Since I have a locally declared exception with the name NO_DATA_FOUND, any unqualified reference to this identifier in my block will be resolved as my exception and not STANDARD’s exception.

If, on the other hand, line 10 in my exception section looked like this:

WHEN STANDARD.NO_DATA_FOUND

then the exception would be handled and the word “Trapped!” displayed.
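Here is a trimmed-down sketch of the same idea, with only the local exception declaration retained and the handler qualified with the package name. The variable name l_value is mine; run it with SET SERVEROUTPUT ON to see the message.

```sql
DECLARE
   NO_DATA_FOUND   EXCEPTION;     -- local declaration that hides STANDARD's exception
   l_value         PLS_INTEGER;   -- hypothetical variable name
BEGIN
   SELECT 1
     INTO l_value
     FROM SYS.DUAL
    WHERE 1 = 2;                  -- returns no rows, so ORA-01403 is raised
EXCEPTION
   -- Qualifying the name bypasses the local declaration
   WHEN STANDARD.NO_DATA_FOUND
   THEN
      DBMS_OUTPUT.put_line ('Trapped!');
END;
/
```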

In addition to the oddness of NO_DATA_FOUND, the lines pointed out in the following table also appear to be rather strange.

| Line(s) | Description |
| --- | --- |
| 2 | Define a new type of data named DATE, which is actually of type NUMBER. |
| 3 | Declare a variable named VARCHAR2 of type DATE and assign it a value of 11111. |
| 4 | Declare a variable named TO_CHAR of type PLS_INTEGER. |

I can “repurpose” these names of “built-in” elements of the PL/SQL language, because they are all defined in the STANDARD package. These names are not reserved by PL/SQL; they are simply and conveniently referenceable without their package name.

The STANDARD package contains the definitions of the supported datatypes in PL/SQL, the predefined exceptions, and the built-in functions, such as TO_CHAR, SYSDATE, and USER. The DBMS_STANDARD package contains transaction-related elements, such as COMMIT, ROLLBACK, and the trigger event functions INSERTING, DELETING, and UPDATING.

Here are a few things to note about STANDARD:

§ You should never change the contents of this package. If you do, I suggest that you not contact Oracle Support and ask for help. You have likely just violated your maintenance agreement! Your DBA should give you read-only authority on the RDBMS/Admin directory, so that you can examine this package along with any of the other supplied packages, like DBMS_OUTPUT (check out dbmsotpt.sql) and DBMS_UTILITY (check out dbmsutil.sql).

§ Oracle even lets you read the package body of STANDARD; unlike most package bodies, such as that for DBMS_SQL, it is not wrapped or pseudoencrypted. Look in the stdbody.sql script and you will see, for instance, that the USER function always executes a SELECT from SYS.dual, while SYSDATE will only execute a query if a C program to retrieve the system timestamp fails.

§ Just because you see a statement in STANDARD doesn’t mean you can write that same code in your own PL/SQL blocks. You cannot, for example, declare a subtype with a range of values, as is done for BINARY_INTEGER.

§ Just because you see something defined in STANDARD doesn’t mean you can use it in PL/SQL. For example, the DECODE function is declared in STANDARD, but it can be called only from within a SQL statement.
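The DECODE restriction is easy to demonstrate. In the following sketch (the variable name is hypothetical), the commented-out assignment would fail to compile, while the same call inside an embedded SELECT works.

```sql
DECLARE
   l_label   VARCHAR2 (10);   -- hypothetical variable name
BEGIN
   -- A native PL/SQL call is rejected at compile time with
   -- PLS-00204: function or pseudo-column 'DECODE' may be used
   -- inside a SQL statement only
   -- l_label := DECODE (1, 1, 'one', 'other');

   -- The same call is fine inside a SQL statement
   SELECT DECODE (1, 1, 'one', 'other')
     INTO l_label
     FROM dual;

   DBMS_OUTPUT.PUT_LINE (l_label);
END;
/
```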

The STANDARD package is defined by the stdspec.sql and stdbody.sql files in $ORACLE_HOME/RDBMS/Admin (in some earlier versions of the database, this package may be found in the standard.sql file). You will find DBMS_STANDARD in dbmsstdx.sql.

If you are curious about which of the many predefined identifiers are actually reserved words in the PL/SQL language, check out the reserved_words.sql script on the book’s website. This script is explained in Chapter 3.

Execution Authority Models

The Oracle database offers two different models for object permissions in your PL/SQL programs. The default (and the only model way back in the days before Oracle8i Database) is definer rights. With this model, a stored program executes under the authority of its owner, or definer.[28] The other permission model uses the privileges of the user invoking the program and is referred to as invoker rights.

You need to understand the nuances of both the definer rights model and the invoker rights model, because many PL/SQL applications rely on a combination of the two. Let’s explore these in a little more detail, so you know when you want to use each model.

The Definer Rights Model

Before a PL/SQL program can be executed from within a database instance, it must be compiled and stored in the database itself. Thus, a program unit is always stored within a specific schema or database account, even though the program might refer to objects in other schemas.

With the definer rights model, keep the following rules in mind:

§ Any external reference in a program unit is resolved at compile time, using the directly granted privileges of the schema in which the program unit is compiled.

§ Database roles are ignored completely when stored programs are compiling. All privileges needed for the program must be granted directly to the definer (owner) of the program.

§ Whenever you run a program compiled with the definer rights model (the default), its SQL executes under the authority of the schema that owns the program.

§ Although direct grants are needed to compile a program, you can grant EXECUTE authority to give other schemas and roles the ability to run your program.

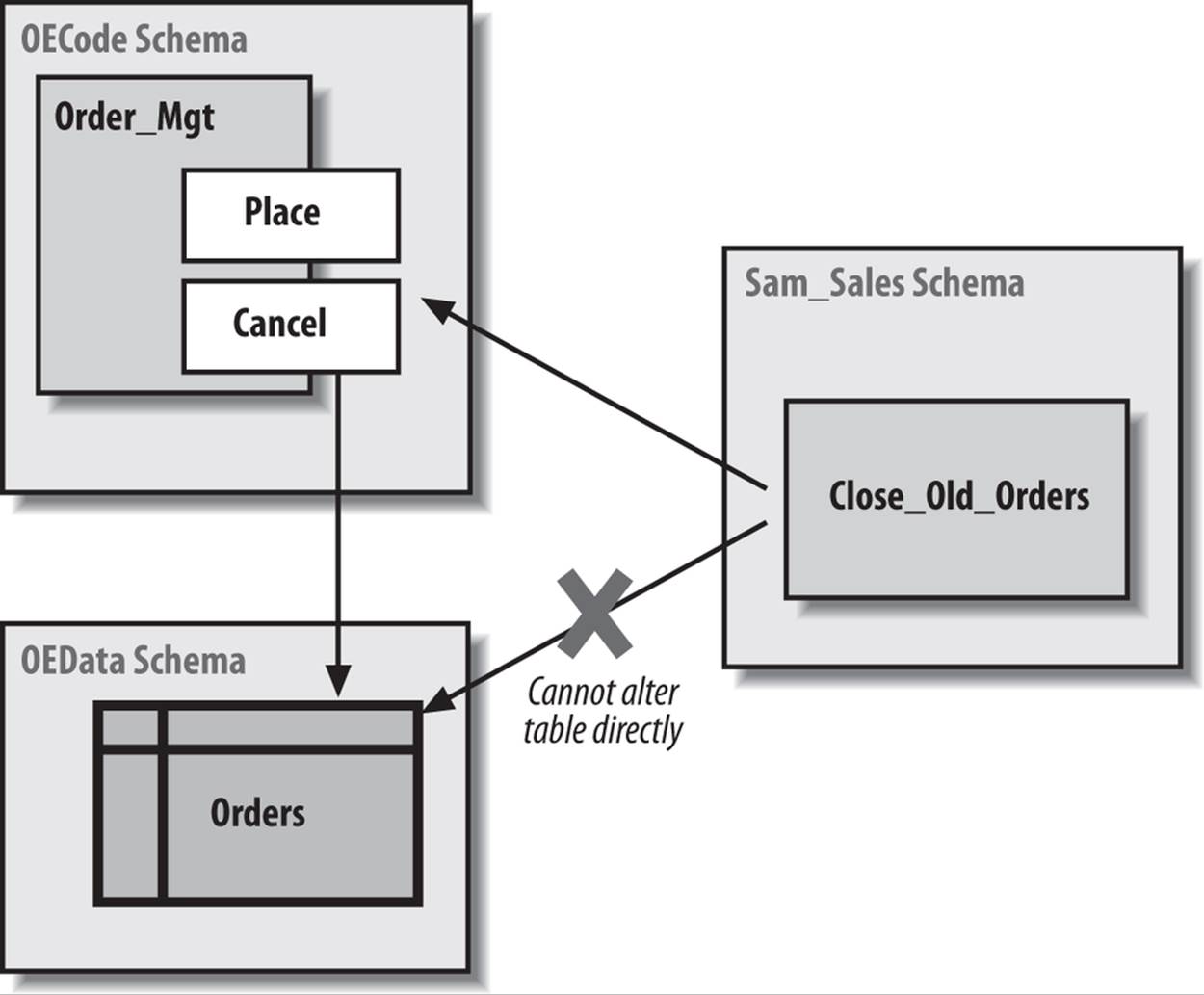

Figure 24-3 shows how you can use the definer rights model to control access to underlying data objects. All the order entry data is stored in the OEData schema. All the order entry code is defined in the OECode schema. OECode has been granted the direct privileges necessary to compile the Order_Mgt package, which allows you to both place and cancel orders.

Figure 24-3. Controlling access to data with the definer rights model

To make sure that the orders table is updated properly, no direct access (either via roles or via privileges) is allowed to that table through any schema other than OECode. Suppose, for example, that the Sam_Sales schema needs to run through all the outstanding orders and close out old ones. Sam will not be able to issue a DELETE statement from the Close_Old_Orders procedure; instead, he will have to call Order_Mgt.cancel to get the job done.
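The arrangement might be set up roughly as follows. This is a sketch under several assumptions of my own: the orders table has an order_id column, the package exposes only a cancel procedure (the real Order_Mgt would also place orders), and the three schemas already exist.

```sql
-- Connected as OEData: grant directly to OECode, not through a role
GRANT SELECT, INSERT, UPDATE, DELETE ON orders TO oecode;

-- Connected as OECode: a definer rights package (the default)
CREATE OR REPLACE PACKAGE order_mgt
IS
   PROCEDURE cancel (order_id_in IN NUMBER);
END order_mgt;
/

CREATE OR REPLACE PACKAGE BODY order_mgt
IS
   PROCEDURE cancel (order_id_in IN NUMBER)
   IS
   BEGIN
      -- Runs with OECode's privileges, whoever the caller is
      DELETE FROM oedata.orders
            WHERE order_id = order_id_in;   -- order_id is a hypothetical column
   END cancel;
END order_mgt;
/

GRANT EXECUTE ON order_mgt TO sam_sales;

-- Connected as Sam_Sales: no privileges on the table, only on the package
CREATE OR REPLACE PROCEDURE close_old_orders (order_id_in IN NUMBER)
IS
BEGIN
   oecode.order_mgt.cancel (order_id_in);
END close_old_orders;
/
```

Because Sam_Sales holds only EXECUTE on the package, every change to the orders table has to pass through whatever rules Order_Mgt enforces.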

Advantages of definer rights

Certain situations cry out for definer rights. This model offers the following advantages:

§ You are better able to control access to underlying data structures. You can guarantee that the only way the contents of a table can be changed is by going through a specific programmatic interface (usually a package).

§ Application performance improves dramatically because the PL/SQL engine does not have to perform checks at runtime to determine if you have the appropriate privileges or—just as important—which object you should actually be manipulating (my accounts table may be quite different from yours!).

§ You don’t have to worry about manipulating the wrong table. With definer rights, your code will work with the same data structure you would be accessing directly in SQL in your SQL*Plus (or other execution) environment. It is simply more intuitive.

Disadvantages of definer rights

But there are problems with the definer rights model as well. These are explored in the remainder of this section.

Where’d my table go?

Let’s see what all those definer rights rules can mean to a PL/SQL developer on a day-to-day basis. In many databases, developers write code against tables and views that are owned by other schemas, with public synonyms created for them to hide the schema. Privileges are then granted via database roles.

This very common setup can result in some frustrating experiences. Suppose that my organization relies on roles to grant access to objects. I am working with a table called accounts, and I can execute this query without any problem in SQL*Plus:

SQL> SELECT account#, name FROM accounts;

Yet when I try to use that same table (and the same query, even) inside a procedure, I get an error:

SQL> CREATE OR REPLACE PROCEDURE show_accounts

2 IS

3 BEGIN

4 FOR rec IN (SELECT account#, name FROM accounts)

5 LOOP

6 DBMS_OUTPUT.PUT_LINE (rec.name);

7 END LOOP;

8 END;

9 /

Warning: Procedure created with compilation errors.

SQL> sho err

Errors for PROCEDURE SHOW_ACCOUNTS:

LINE/COL ERROR

-------- ------------------------------------------------------

4/16 PL/SQL: SQL Statement ignored

4/43 PLS-00201: identifier 'ACCOUNTS' must be declared

This doesn’t make any sense... or does it? The problem is that ACCOUNTS is actually owned by another schema; I was unknowingly relying on a synonym and roles to get at the data. So, if you are ever faced with this seemingly contradictory situation, don’t bang your head against the wall in frustration. Instead, ask the owner of the object or the DBA to grant you the privileges you require to get the job done.
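A quick way to check whether you are relying on a role is to disable your roles for the session and retry the query; the result approximates what the compiler sees for a definer rights program. The sketch below assumes a hypothetical developer account named dev_user, and the GRANT must be issued by the table’s owner or a suitably privileged DBA.

```sql
-- As the developer: turn off all roles for this session, then retry
SET ROLE NONE;

SELECT account#, name FROM accounts;
-- If access came only through a role, this now fails
-- (typically with ORA-00942: table or view does not exist)

-- As the table owner (or DBA): grant the privilege directly
GRANT SELECT ON accounts TO dev_user;   -- dev_user is a hypothetical account
```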

How do I maintain all that code?

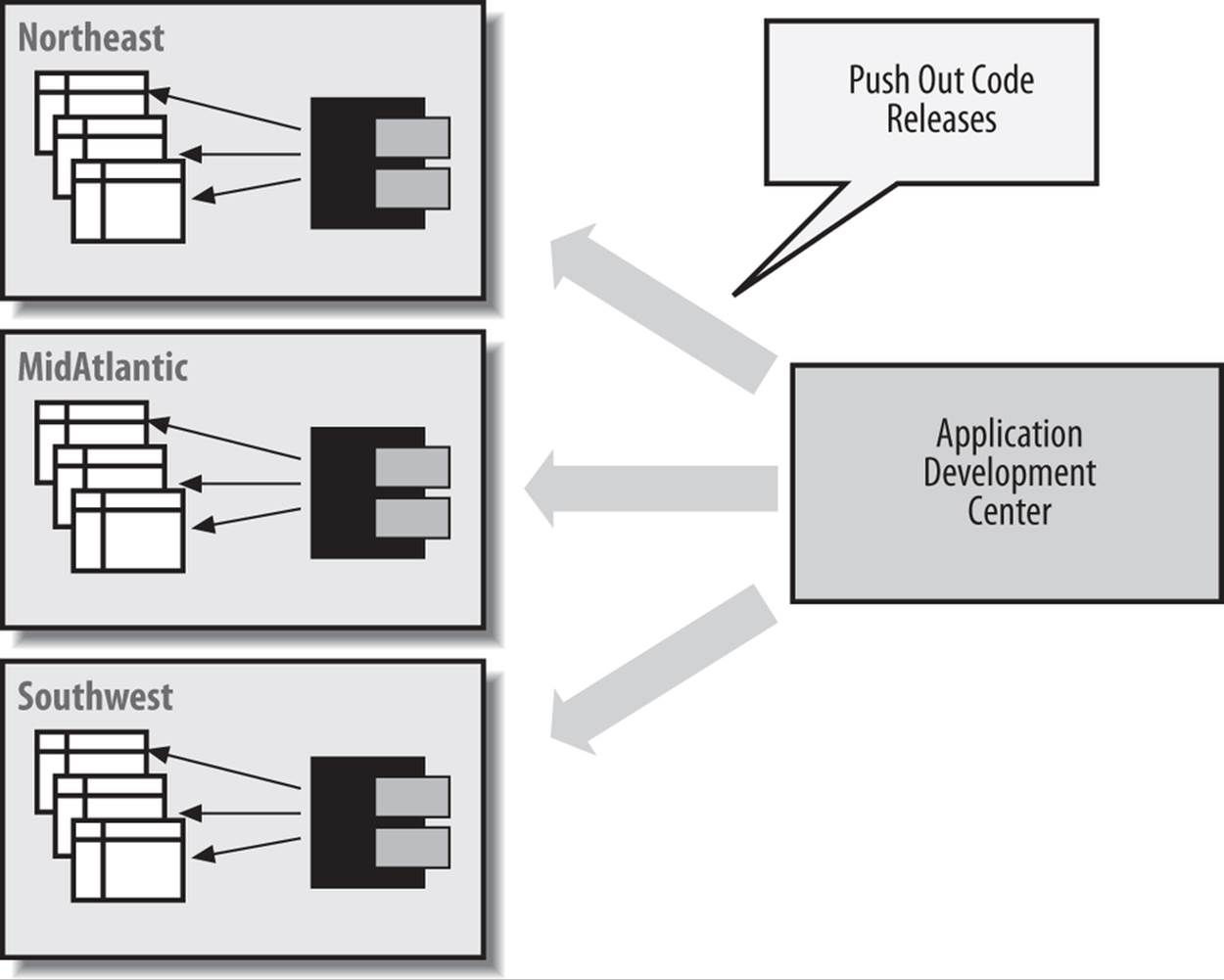

Suppose that my database instance is set up with a separate schema for each of the regional offices in my company. I build a large body of code that each office uses to analyze and maintain its data. Each schema has its own tables with the same structure but different data, a design selected for both data security and ease of movement via transportable tablespaces.

Now, I would like to install this code so that I spend the absolute minimum amount of time and effort setting up and maintaining the application. The way to do that is to install the code in one schema and share that code among all the regional office schemas.

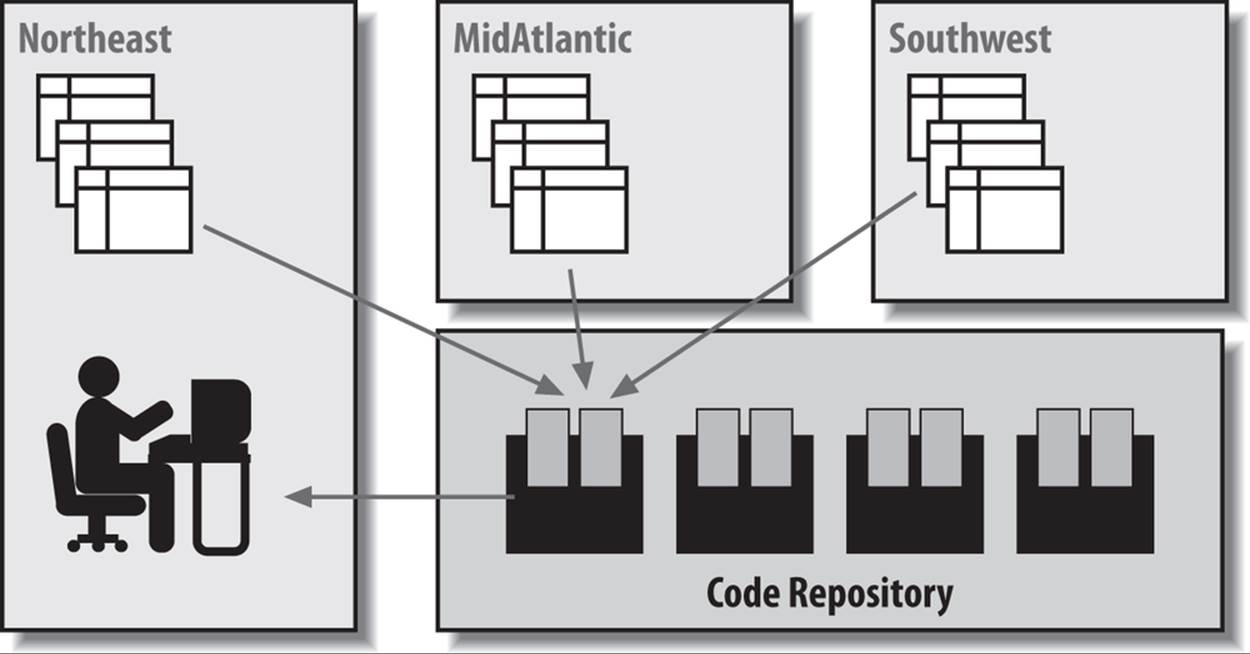

With the definer rights model, unfortunately, this goal and architecture are impossible to achieve. If I install the code in a central schema and grant the EXECUTE privilege to all regional schemas, then all those offices will be working with whatever set of tables is accessible to the central schema (perhaps one particular regional office or, more likely, a dummy set of tables). That’s no good. I must instead install this body of code in each separate regional schema, as shown in Figure 24-4.

Figure 24-4. Repetitive installations of code needed with definer rights

The result is a maintenance and enhancement nightmare. Perhaps invoker rights will give us options for a better solution.

Dynamic SQL and definer rights

Another common source of confusion with definer rights occurs when using dynamic SQL (described in Chapter 16). Suppose that I create a generic “exec DDL” program (yes, this is a really bad idea security-wise, but as a learning exercise it illustrates the unintended consequences of definer rights) as follows:

/* File on web: execddl.sp */

PROCEDURE execDDL (ddl_string IN VARCHAR2)

IS

BEGIN

EXECUTE IMMEDIATE ddl_string;

END;

After testing it in my schema with outstanding results, I decide to share this neat utility with everyone else in my development organization. I compile it into the COMMON schema (where all reusable code is managed), grant EXECUTE to PUBLIC, and create a public synonym. Then I send out an email announcing its availability.

A few weeks later, I start getting calls from my coworkers. “Hey, I asked it to create a table, and it ran without any errors, but I don’t have the table.” “I asked it to drop my table, and the execddl procedure said that there is no such table. But I can do a DESCRIBE on it.” You get the idea. I begin to have serious doubts about sharing my code with other people. Sheesh, if they can’t use something as simple as the execddl procedure without screwing things up... but I decide to withhold judgment and do some research.

I log into the COMMON schema and find that, sure enough, all of the objects people were trying to create or drop or alter are sitting here in COMMON. And then it dawns on me: unless a user of execddl specifies her own schema when she asks to create a table, the results will be most unexpected.

In other words, this call to execddl:

EXEC execddl ('CREATE TABLE newone (rightnow DATE)')

would create the newone table in the COMMON schema. And this call to execddl:

EXEC execddl ('CREATE TABLE scott.newone (rightnow DATE)')

might solve the problem, but would fail with the following error:

ORA-01031: insufficient privileges

unless I grant the CREATE ANY TABLE privilege to the COMMON schema. Yikes... my attempt to share a useful piece of code got very complicated very fast! It sure would be nice to let people run the execddl procedure under their own authority and not that of COMMON, without having to install multiple copies of the code.

Privilege escalation and SQL injection

The time to pause and review your design with a colleague is when your program executes dynamic SQL that relies on the owner’s directly granted system privileges—and you think definer rights is appropriate to use. You should be very reluctant to create procedures like the previous one in the SYS schema or any other schema having system privileges that could serve as a gateway to privilege escalation if unexpected, but legal, input is passed in.

Please review Chapter 16 for information on how to prevent code injection.

The Invoker Rights Model

Sometimes, your programs should execute using the privileges of the person running the program and not the owner of the program. In such cases, you should choose the invoker rights model. With this approach, all external references in the SQL statements in a PL/SQL program unit are resolved according to the privileges of the invoking schema, not those of the owning or defining schema.

Figure 24-5 demonstrates the fundamental difference between the definer and invoker rights models. Recall that in Figure 24-4, I had to push out copies of my application to each regional office so that the code would manipulate the correct tables. With invoker rights, this step is no longer necessary. Now I can compile the code into a single code repository. When a user from the Northeast region executes the centralized program (probably via a synonym), it will work automatically with tables in the Northeast schema.

Figure 24-5. Use of invoker rights model

So that’s the idea behind invoker rights. Let’s see what is involved in terms of code, and then explore how best to exploit the feature.

Invoker rights syntax

The syntax to support this feature is simple enough. You add the following clause before your IS or AS keyword in the program header:

AUTHID CURRENT_USER

Here, for example, is that generic “exec DDL” engine again, this time defined as an invoker rights program:

/* File on web: execddl.sql */

PROCEDURE execddl (ddl_in IN VARCHAR2)

AUTHID CURRENT_USER

IS

BEGIN

EXECUTE IMMEDIATE ddl_in;

END;

The AUTHID CURRENT_USER clause before the IS keyword indicates that when execddl executes, it should run under the authority of the invoker or “current user,” not the authority of the definer. And that’s all you have to do. If you do not include the AUTHID clause, or if you include it and explicitly request definer rights as shown here:

AUTHID DEFINER

then all references in your program will be resolved according to the directly granted privileges of the owning schema.
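If you are not sure which model an existing program was compiled with, the data dictionary can tell you. The following sketch assumes the execddl procedure has been created in the current schema; the AUTHID column of USER_PROCEDURES reports either DEFINER or CURRENT_USER.

```sql
-- Check the rights model recorded for a stored program unit
SELECT object_name, object_type, authid
  FROM user_procedures
 WHERE object_name = 'EXECDDL';
```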

INVOKER RIGHTS FOR DYNAMIC SQL

I have written hundreds of programs using dynamic SQL, and with definer rights I would have to worry about schema issues. Where is the program running? Who is running it? What will happen when someone runs it? These are serious questions to ask about your code!

You may be tempted to use the AUTHID CURRENT_USER clause with every stored program unit that uses any kind of dynamic SQL. Once you take this step, you reason, you can rest assured that no matter where the program is compiled and which schema runs the program, it will always act on the currently connected schema.

The problem with this approach, though, is twofold. First, your users now require the same privileges that the programs have (and you may not want the HR clerk to be able to modify a salary outside the designated program). Second, there is extra runtime checking that the database must perform for invoker rights programs—and that can be a drag on performance. Use invoker rights where it is appropriate—neither model should be adopted blindly. I like to think about which privilege model my program needs and deliberately code to that model.

Rules and restrictions on invoker rights

There are a number of rules and restrictions to keep in mind when you are taking advantage of the invoker rights model:

§ AUTHID DEFINER is the default option.

§ The invoker rights model checks the privileges assigned to the invoker at the time of program execution to resolve any SQL-based references to database objects.

§ With invoker rights, roles are in effect at runtime as long as the invoker rights program hasn’t been called from a definer rights program.

§ The AUTHID clause is allowed only in the header of a standalone subprogram (procedure or function), a package specification, or an object type specification. You cannot apply the AUTHID clause to individual programs or methods within a package or object type. So, the whole package will be invoker rights, or the whole package will be definer rights. If parts of your package should be invoker rights and parts should be definer rights, then you need two packages.

§ Invoker rights resolution of external references will work for the following kinds of statements:

§ SELECT, INSERT, UPDATE, MERGE, and DELETE data manipulation statements

§ LOCK TABLE transaction control statements

§ OPEN and OPEN FOR cursor control statements

§ EXECUTE IMMEDIATE and OPEN FOR USING dynamic SQL statements

§ SQL statements parsed using DBMS_SQL.PARSE

§ Definer rights will always be used to resolve all external references to PL/SQL programs and object type methods at compilation time.

§ You can use invoker rights to change the resolution of static external data element references (tables and views).

You can also use invoker rights to resolve external references to PL/SQL programs. Here is one way to do it:

EXECUTE IMMEDIATE 'BEGIN someprogram; END;';

In this fragment, someprogram would get resolved at runtime according to the rights and namespace of the invoker. Alternatively, I could have used SQL’s CALL statement instead of the anonymous block. (I can’t just use a naked CALL statement because it is not directly supported within PL/SQL.)
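As a sketch of the CALL alternative, the statement can be passed to EXECUTE IMMEDIATE as a string. Here someprogram is the same placeholder name used in the text, and run_someprogram is a hypothetical wrapper invented for this illustration. CALL needs the parentheses even when there are no arguments, and the string carries no trailing semicolon:

```sql
-- Hypothetical wrapper; assumes a parameterless procedure named someprogram
-- is visible to the invoker at runtime.
CREATE OR REPLACE PROCEDURE run_someprogram
   AUTHID CURRENT_USER
IS
BEGIN
   -- CALL is a SQL statement, so it is issued dynamically from PL/SQL.
   EXECUTE IMMEDIATE 'CALL someprogram()';
END run_someprogram;
/
```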

Combining Rights Models

What do you think would happen if a definer rights program called an invoker rights program, or vice versa? The rules are simple:

§ If a definer rights program calls an invoker rights program, the rights of the calling program’s owner apply while the called program executes.

§ If an invoker rights program calls a definer rights program, the rights of the called program’s owner apply while the called program executes. When control returns to the caller, invoker rights resume.

To help keep all of this straight in your head, just remember that definer rights are “stronger” than (take precedence over) invoker rights.
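One way to watch these rules in action is to have an invoker rights function report whose privileges are currently active, and then call it both directly and through a definer rights wrapper. This is only a sketch: whose_rights and whose_rights_via_definer are hypothetical names, both are assumed to be created in HR, and EXECUTE on both is assumed to be granted to SCOTT.

```sql
-- In HR: invoker rights function that reports the user whose
-- privileges are active at the moment it runs.
CREATE OR REPLACE FUNCTION whose_rights
   RETURN VARCHAR2
   AUTHID CURRENT_USER
IS
BEGIN
   RETURN SYS_CONTEXT ('USERENV', 'CURRENT_USER');
END whose_rights;
/

-- In HR: definer rights wrapper around the invoker rights function.
CREATE OR REPLACE FUNCTION whose_rights_via_definer
   RETURN VARCHAR2
   AUTHID DEFINER
IS
BEGIN
   RETURN whose_rights;
END whose_rights_via_definer;
/

-- Connected as SCOTT: compare the two call paths.
BEGIN
   DBMS_OUTPUT.put_line ('Direct call: ' || hr.whose_rights);
   DBMS_OUTPUT.put_line ('Via definer: ' || hr.whose_rights_via_definer);
END;
/
```

If the rules above hold, the direct call should report SCOTT, while the call routed through the definer rights wrapper should report HR.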

Here are some files on the book’s website that you can use to explore the nuances of the invoker rights model in more detail:

invdefinv.sql and invdefinv.tst

Two scripts that demonstrate the impact of the precedence of definer rights over invoker rights

invdef_overhead.tst

Examines the overhead of reliance on invoker rights (hint: runtime resolution is slower than compile-time resolution)

invrole.sql

Demonstrates how a change in roles can affect how object references are resolved at runtime

irdynsql.sql

Explores some of the complexities involved in using invoker and definer rights with dynamic SQL

Granting Roles to PL/SQL Program Units (Oracle Database 12c)

Before Oracle Database 12c, a definer rights program unit (defined with AUTHID DEFINER or no AUTHID clause) always executed with the privileges of the definer of that unit. An invoker rights program unit (defined with AUTHID CURRENT_USER clause) always executed with the privileges of the invoker of that unit.

A consequence of these two distinct AUTHID settings was that any program unit that needed to be executed by all users had to be created as a definer rights unit. It would then execute with all the privileges of the definer, which might not be optimal from a security standpoint.

As of Oracle Database 12c, you can grant roles to PL/SQL packages and schema-level procedures and functions. Role-based privileges for program units allow developers to fine-tune the privileges available to the invoker of a program unit.

We can now define a program unit as invoker rights and then complement the invoker’s privileges with specific, limited privileges granted through the role.

Let’s walk through an example showing how to grant roles to program units and the impact it has. Suppose that the HR schema contains the employees and departments tables, defined and populated with data as follows:

CREATE TABLE departments(

department_id INTEGER,

department_name VARCHAR2 (100),

staff_freeze CHAR (1)

)

/

BEGIN

INSERT INTO departments

VALUES (10, 'IT', 'Y');

INSERT INTO departments

VALUES (20, 'HR', 'N');

COMMIT;

END;

/

CREATE TABLE employees

(

employee_id INTEGER,

department_id INTEGER,

last_name VARCHAR2 (100)

)

/

BEGIN

DELETE FROM employees;

INSERT INTO employees VALUES (100, 10, 'Price');

INSERT INTO employees VALUES (101, 20, 'Sam');

INSERT INTO employees VALUES (102, 20, 'Joseph');

INSERT INTO employees VALUES (103, 20, 'Smith');

COMMIT;

END;

/

The SCOTT schema contains only an employees table:

CREATE TABLE employees

( employee_id INTEGER,

department_id INTEGER,

last_name VARCHAR2 (100)

)

/

BEGIN

DELETE FROM employees;

INSERT INTO employees VALUES (100, 10, 'Price');

INSERT INTO employees VALUES (101, 20, 'Sam');

INSERT INTO employees VALUES (102, 20, 'Joseph');

INSERT INTO employees VALUES (103, 20, 'Smith');

COMMIT;

END;

/

HR also contains a procedure that removes all employees from the specified department as long as the department does not have its staff “frozen.” I will first create this procedure as a definer rights unit:

CREATE OR REPLACE PROCEDURE remove_emps_in_dept (

department_id_in IN employees.department_id%TYPE)

AUTHID DEFINER

IS

l_freeze departments.staff_freeze%TYPE;

BEGIN

SELECT staff_freeze

INTO l_freeze

FROM HR.departments

WHERE department_id = department_id_in;

IF l_freeze = 'N'

THEN

DELETE FROM employees

WHERE department_id =

department_id_in;

END IF;

END;

/

And allow SCOTT to execute this procedure:

GRANT EXECUTE

ON remove_emps_in_dept

TO SCOTT

/

When SCOTT executes the procedure as shown next, it will remove three rows—but from HR’s employees table, since the procedure is a definer rights unit:

BEGIN

HR.remove_emps_in_dept (20);

END;

/

I need to change this procedure so that it will remove rows from SCOTT’s employees table, not HR’s. That is precisely what invoker rights does for us. But if I change the AUTHID clause of this procedure to:

AUTHID CURRENT_USER

and run the procedure again, I see this:

BEGIN

*

ERROR at line 1:

ORA-00942: table or view does not exist

ORA-06512: at "HR.REMOVE_EMPS_IN_DEPT", line 7

ORA-06512: at line 2

The problem is that Oracle is now using the privileges of SCOTT to resolve the references to two tables: HR.departments and employees (which, being unqualified, now resolves to SCOTT.employees). SCOTT has no privileges on HR’s departments table, however, so Oracle raises the ORA-00942 error. Prior to Oracle Database 12c, the DBA would have had to grant the necessary privileges on HR.departments to SCOTT. Now, however, the following steps can be taken instead:

1. From a schema with the necessary privileges, create a role and grant that role to HR:

CREATE ROLE hr_departments

/

GRANT hr_departments TO hr

/

2. Connected to HR, grant the desired privilege to the role and then grant the role to the procedure:

GRANT SELECT

ON departments

TO hr_departments

/

GRANT hr_departments TO PROCEDURE remove_emps_in_dept

/

Now when I execute the following statements from SCOTT, the rows are removed properly:

SELECT COUNT (*)

FROM employees

WHERE department_id = 20

/

COUNT(*)

----------

3

BEGIN

hr.remove_emps_in_dept (20);

END;

/

SELECT COUNT (*)

FROM employees

WHERE department_id = 20

/

COUNT(*)

----------

0

Roles granted to a program unit do not affect compilation. Instead, they affect the privilege checking of SQL statements that the unit issues at runtime. Thus, the procedure or function executes with the privileges of both its own roles and any other currently enabled roles.

This feature will be of most use with invoker rights program units, as just shown. You will likely consider granting roles to a definer rights unit when that unit executes dynamic SQL, since the privileges for that dynamic statement are checked at runtime.
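To review or undo such a grant, something like the following sketch may help. It assumes you are connected as HR, the owner of the procedure, and that the USER_CODE_ROLE_PRIVS data dictionary view (added in Oracle Database 12c) is available in your release:

```sql
-- List the roles currently granted to program units owned by this schema.
SELECT * FROM user_code_role_privs;

-- Take the role away from the procedure again.
REVOKE hr_departments FROM PROCEDURE remove_emps_in_dept;
```

After the REVOKE, an invoker rights call from SCOTT would once again depend solely on SCOTT's own privileges.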

“Who Invoked Me?” Functions (Oracle Database 12c)

Oracle Database 12c offers two new functions (which can only be called from within SQL statements) that tell you the invoking user based on whether invoker rights or definer rights are used:

ORA_INVOKING_USER

Returns the name of the user who is invoking the current statement or view. This function treats the intervening views as specified by their BEQUEATH clauses. If the invoking user is an Oracle Database Real Application Security–defined user (also new in 12.1, the next generation of the virtual private database feature), then this function returns XS$NULL.

ORA_INVOKING_USERID

Returns the identifier (ID) of the user who is invoking the current statement or view. This function treats the intervening views as specified by their BEQUEATH clauses. If the invoking user is an Oracle Database Real Application Security–defined user, then this function returns an ID that is common to all Real Application Security sessions but is different from the ID of any database user.

These functions are demonstrated in the next section, when we explore BEQUEATH CURRENT_USER for views.

BEQUEATH CURRENT_USER for Views (Oracle Database 12c)

Prior to Oracle Database 12c, if your view executed a function, it would always be run under the privileges of the view’s owner, not those of the user querying the view. So if the function was defined as invoker rights, the behavior could be quite different from what you would have expected.

Oracle Database 12c adds the BEQUEATH clause for views so that you can define a view that will accommodate invoker rights functions referenced in the view.

Let’s take a look at how this feature works (all code in this section may be found in the 12c_bequeath.sql file available on the book’s website). Here, I will create a table and a function in the HR schema:

CREATE TABLE c12_emps

(

employee_id INTEGER,

department_id INTEGER,

last_name VARCHAR2 (100)

)

/

BEGIN

INSERT INTO c12_emps VALUES (1, 100, 'abc');

INSERT INTO c12_emps VALUES (2, 100, 'def');

INSERT INTO c12_emps VALUES (3, 200, '123');

COMMIT;

END;

/

CREATE OR REPLACE FUNCTION emps_count (

department_id_in IN INTEGER)

RETURN PLS_INTEGER

AUTHID CURRENT_USER

IS

l_count PLS_INTEGER;

l_user VARCHAR2 (100);

l_userid VARCHAR2 (100);

BEGIN

SELECT COUNT (*)

INTO l_count

FROM c12_emps

WHERE department_id = department_id_in;

/* Show who is invoking the function */

SELECT ora_invoking_user, ora_invoking_userid

INTO l_user, l_userid FROM DUAL;

DBMS_OUTPUT.put_line ('Invoking user=' || l_user);

DBMS_OUTPUT.put_line ('Invoking userID=' || l_userid);

RETURN l_count;

END;

/

Notice that the function calls the two new invoking functions: ORA_INVOKING_USER and ORA_INVOKING_USERID.

Then I create a view, specifying invoker rights for the BEQUEATH setting, and I make sure that SCOTT can query that view:

CREATE OR REPLACE VIEW emp_counts_v

BEQUEATH CURRENT_USER

AS

SELECT department_id, emps_count (department_id) emps_in_dept

FROM c12_emps

/

GRANT SELECT ON emp_counts_v TO scott

/

In the SCOTT schema, I create another c12_emps table, but I populate it with different data:

CREATE TABLE c12_emps

(

employee_id INTEGER,

department_id INTEGER,

last_name VARCHAR2 (100)

)

/

BEGIN

INSERT INTO c12_emps VALUES (1, 200, 'SCOTT.ABC');

INSERT INTO c12_emps VALUES (2, 200, 'SCOTT.DEF');

INSERT INTO c12_emps VALUES (3, 400, 'SCOTT.123');

COMMIT;

END;

/

Then I select all the rows from the view. Here’s the output of the query:

SQL> SELECT * FROM hr.emp_counts_v

2 /

DEPARTMENT_ID EMPS_IN_DEPT

------------- ------------

100 0

100 0

200 2

SCOTT

107

SCOTT

107

SCOTT

107

As you can see, the data returned by the view is from HR’s table (there is a department ID of 100), but the totals returned by the function call reflect data in SCOTT’s table. And the ORA_INVOKING* functions return SCOTT’s information.

Note that BEQUEATH CURRENT_USER does not transform the view itself into an invoker rights object. Name resolution within the view is still handled using the view owner’s schema, and privilege checking for the view is done using the view owner’s privileges.
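For comparison, here is a sketch of the same view defined with the default setting, BEQUEATH DEFINER. The view name emp_counts_definer_v is hypothetical and is not part of the book's script:

```sql
-- In HR: same query as emp_counts_v, but with the default BEQUEATH setting.
CREATE OR REPLACE VIEW emp_counts_definer_v
   BEQUEATH DEFINER
AS
   SELECT department_id, emps_count (department_id) emps_in_dept
     FROM c12_emps
/

GRANT SELECT ON emp_counts_definer_v TO scott
/
```

When SCOTT queries this version, the invoker rights function should run with HR as the current user, so the counts would be expected to come from HR's c12_emps table rather than SCOTT's.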

The primary benefit of this feature is that it allows functions like SYS_CONTEXT and USERENV to return consistent results when these functions are referenced in a view.

Constraining Invoker Rights Privileges (Oracle Database 12c)

When a user runs a procedure or function defined in a PL/SQL program unit that has been created with the AUTHID CURRENT_USER clause, that subprogram temporarily inherits all of the privileges of the invoking user while the procedure runs. In effect, during that time, the owner of the invoker rights unit has access to the invoking user’s privileges.

If the invoker has a more powerful set of privileges than the owner, there is a risk that the subprogram’s owner could misuse the higher privileges of the invoking user. These types of risks increase when application users are given access to a database that uses invoker rights procedures.

Oracle Database 12c adds two new privileges that you can use to control whether the owner’s procedure can access the invoker’s privileges: INHERIT PRIVILEGES and INHERIT ANY PRIVILEGES.

Any user can grant or revoke the INHERIT PRIVILEGES privilege on himself to the users whose invoker rights procedures he wants to run. The INHERIT ANY PRIVILEGES privilege is managed by SYS users.

When a user runs an invoker rights procedure, Oracle Database checks it to ensure that either the procedure owner has the INHERIT PRIVILEGES privilege on the invoking user, or the owner has been granted the INHERIT ANY PRIVILEGES privilege. If the privilege check fails, then Oracle Database returns the following error:

ORA-06598: insufficient INHERIT PRIVILEGES privilege

The benefit of these two privileges is that they give invoking users control over who can access their privileges when they run an invoker rights procedure or query a BEQUEATH CURRENT_USER view.

All users are granted INHERIT PRIVILEGES ON USER newuser TO PUBLIC by default, when their accounts are created or when accounts that were created earlier are upgraded to the current release. This means that if you do not have a risk of “weak owners” maliciously leveraging privileges of “strong invokers,” everything will work the same as it did in earlier versions of Oracle Database.

An invoking user can revoke the INHERIT PRIVILEGES privilege from other users and grant it only to trusted users. The syntax for the INHERIT PRIVILEGES privilege grant is as follows:

GRANT INHERIT PRIVILEGES ON USER invoking_user TO procedure_owner
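As a concrete sketch, suppose SCOTT wants only HR's invoker rights programs to be able to run with SCOTT's privileges. The user names are the ones used in this chapter's examples; the statements would be issued by SCOTT or by a suitably privileged administrator:

```sql
-- Remove the default grant that lets any owner inherit SCOTT's privileges.
REVOKE INHERIT PRIVILEGES ON USER scott FROM PUBLIC;

-- Allow only HR's invoker rights units to run with SCOTT's privileges.
GRANT INHERIT PRIVILEGES ON USER scott TO hr;
```

With these settings in place, an invoker rights procedure owned by any schema other than HR should fail with ORA-06598 when SCOTT runs it, unless that owner holds INHERIT ANY PRIVILEGES.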

Conditional Compilation

Introduced in Oracle Database 10g Release 2, conditional compilation allows the compiler to compile selected parts of a program based on conditions you provide with the $IF directive.

Conditional compilation will come in very handy when you need to:

§ Write a program that will run under different versions of Oracle, taking advantage of features specific to those versions. More specifically, you may want to take advantage of new features of the Oracle database where available, but you also need your program to compile and run in older versions. Without conditional compilation, you would have to maintain multiple files or use complex SQL*Plus substitution variable logic.

§ Run certain code during testing and debugging, but then omit that code from the production code. Prior to conditional compilation, you would need to either comment out lines of code or add some overhead to the processing of your application—even in production.

§ Install/compile different elements of your application based on user requirements, such as the components for which a user is licensed. Conditional compilation greatly simplifies the maintenance of a code base with this complexity.

You implement conditional compilation by placing compiler directives (commands) in your source code. When your program is compiled, the PL/SQL preprocessor evaluates the directives and selects those portions of your code that should be compiled. This pared-down source code is then passed to the compiler for compilation.

There are three types of directives:

Selection directives

Use the $IF directive to evaluate expressions and determine which code should be included or excluded.

Inquiry directives

Use the $$identifier syntax to refer to conditional compilation flags. These inquiry directives can be referenced within an $IF directive or used independently in your code.

Error directives

Use the $ERROR directive to report compilation errors based on conditions evaluated when the preprocessor prepares your code for compilation.

I’ll first show some simple examples, then delve more deeply into the capabilities of each directive. You’ll also learn how to use two packages related to conditional compilation, DBMS_DB_VERSION and DBMS_PREPROCESSOR.

Examples of Conditional Compilation

Let’s start with some examples of different types of conditional compilation.

Use application package constants in $IF directive

The $IF directive can reference constants defined in your own packages. In the following example, I vary the way that a bonus is applied depending on whether or not the location in which this third-party application is installed complies with the Sarbanes-Oxley guidelines. Such a setting is unlikely to change for a long period of time. If I rely on the traditional conditional statement in this case, I will leave in place a branch of logic that should never be applied. With conditional compilation, the code is removed before compilation:

/* File on web: cc_my_package.sql */

PROCEDURE apply_bonus (

id_in IN employee.employee_id%TYPE

,bonus_in IN employee.bonus%TYPE)

IS

BEGIN

UPDATE employee

SET bonus =

$IF employee_rp.apply_sarbanes_oxley

$THEN

LEAST (bonus_in, 10000)

$ELSE

bonus_in

$END

WHERE employee_id = id_in;

NULL;

END apply_bonus;
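The constant referenced in the $IF directive has to be declared in a package specification. The book's own file is not reproduced here, so the following is only an assumed shape for employee_rp:

```sql
-- Assumed specification: only the one constant used by apply_bonus is shown.
CREATE OR REPLACE PACKAGE employee_rp
IS
   -- A static BOOLEAN constant, so it can be evaluated at compile time.
   apply_sarbanes_oxley CONSTANT BOOLEAN := TRUE;
END employee_rp;
/
```

Because the initial value is a literal, the constant qualifies as a static expression and can be used by the preprocessor.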

Toggle tracing through conditional compilation flags

I can now set up my own debug/trace mechanisms and have them conditionally compiled into my code. This means that when my code rolls into production, I can have this code completely removed so that there will be no runtime overhead to this logic. Note that I can specify both Boolean and PLS_INTEGER values through the special PLSQL_CCFLAGS compile parameter:

/* File on web: cc_debug_trace.sql */

ALTER SESSION SET PLSQL_CCFLAGS = 'oe_debug:true, oe_trace_level:10';

PROCEDURE calculate_totals

IS

BEGIN

$IF $$oe_debug AND $$oe_trace_level >= 5

$THEN

DBMS_OUTPUT.PUT_LINE ('Tracing at level 5 or higher');

$END

NULL;

END calculate_totals;

The Inquiry Directive

An inquiry directive is a directive that makes an inquiry of the compilation environment. Of course, that doesn’t really tell you much, so let’s take a closer look at the syntax for inquiry directives and the different sources of information available through the inquiry directive.

The syntax for an inquiry directive is as follows:

$$identifier

where identifier is a valid PL/SQL identifier that can represent any of the following:

Compilation environment settings

The values found in the USER_PLSQL_OBJECT_SETTINGS data dictionary view

Your own custom-named directive

Defined with the ALTER...SET PLSQL_CCFLAGS command, described in Using the PLSQL_CCFLAGS parameter

Implicitly defined directives

$$PLSQL_LINE and $$PLSQL_UNIT, providing you with the line number and program name

Inquiry directives are designed for use within conditional compilation clauses, but they can also be used in other places in your PL/SQL code. For example, I can display the current line number in my program with this code:

DBMS_OUTPUT.PUT_LINE ($$PLSQL_LINE);

I can also use inquiry directives to define and apply application-wide constants in my code. Suppose, for example, that the maximum number of years of data supported in my application is 100. Rather than hardcoding this value in my code, I can do the following:

ALTER SESSION SET PLSQL_CCFLAGS = 'max_years:100';

PROCEDURE work_with_data (num_years_in IN PLS_INTEGER)

IS

BEGIN

IF num_years_in > $$max_years THEN ...

END work_with_data;

Even more valuable, I can use inquiry directives in places in my code where a variable is not allowed. Here are two examples:

DECLARE

l_big_string VARCHAR2($$MAX_VARCHAR2_SIZE);

l_default_app_err EXCEPTION;

PRAGMA EXCEPTION_INIT (l_default_app_err, $$DEF_APP_ERR_CODE);

BEGIN

The DBMS_DB_VERSION package

The DBMS_DB_VERSION built-in package offers a set of constants that give you absolute and relative information about the version of your installed database. The constants defined in the 12.1 version of this package are shown in Table 24-1. With each new version of Oracle, two new relative constants are added, and the values returned by the VERSION and RELEASE constants are updated.

Table 24-1. DBMS_DB_VERSION constants

| Name of packaged constant | Description | Value in Oracle Database 12c Release 1 |
| --- | --- | --- |
| DBMS_DB_VERSION.VERSION | The database version number | 12 |
| DBMS_DB_VERSION.RELEASE | The database release number | 1 |
| DBMS_DB_VERSION.VER_LE_9 | TRUE if the current version is less than or equal to Oracle9i Database | FALSE |
| DBMS_DB_VERSION.VER_LE_9_1 | TRUE if the current version is less than or equal to 9.1 | FALSE |
| DBMS_DB_VERSION.VER_LE_9_2 | TRUE if the current version is less than or equal to 9.2 | FALSE |
| DBMS_DB_VERSION.VER_LE_10 | TRUE if the current version is less than or equal to Oracle Database 10g | FALSE |
| DBMS_DB_VERSION.VER_LE_10_1 | TRUE if the current version is less than or equal to 10.1 | FALSE |
| DBMS_DB_VERSION.VER_LE_10_2 | TRUE if the current version is less than or equal to 10.2 | FALSE |
| DBMS_DB_VERSION.VER_LE_11_1 | TRUE if the current version is less than or equal to 11.1 | FALSE |
| DBMS_DB_VERSION.VER_LE_11_2 | TRUE if the current version is less than or equal to 11.2 | FALSE |
| DBMS_DB_VERSION.VER_LE_12 | TRUE if the current version is less than or equal to Oracle Database 12c | TRUE |
| DBMS_DB_VERSION.VER_LE_12_1 | TRUE if the current version is less than or equal to 12.1 | TRUE |

While this package was designed for use with conditional compilation, you can, of course, use it for your own purposes.

Interestingly, you can write expressions that include references to as-yet-undefined constants in the DBMS_DB_VERSION package. As long as they are not evaluated, as in the following case, they will not cause any errors. Here is an example:

$IF DBMS_DB_VERSION.VER_LE_12_1

$THEN

Use this code.

$ELSIF DBMS_DB_VERSION.VER_LE_13

$THEN

This is a placeholder for the future.

$END
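A compilable variant of a version check might look like the following sketch. The procedure name show_db_version is hypothetical; DBMS_DB_VERSION.VERSION and DBMS_DB_VERSION.RELEASE are the constants listed in Table 24-1:

```sql
CREATE OR REPLACE PROCEDURE show_db_version
IS
BEGIN
   -- Only one of the two branches survives preprocessing.
   $IF DBMS_DB_VERSION.VERSION >= 12
   $THEN
      DBMS_OUTPUT.put_line ('Compiled on Oracle Database 12c or later');
   $ELSE
      DBMS_OUTPUT.put_line ('Compiled on a release earlier than 12c');
   $END

   -- The constants can also be used as ordinary values at runtime.
   DBMS_OUTPUT.put_line (
      'Version ' || DBMS_DB_VERSION.VERSION || '.' || DBMS_DB_VERSION.RELEASE);
END show_db_version;
/
```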

Setting compilation environment parameters

The following information (corresponding to the values in the USER_PLSQL_OBJECT_SETTINGS data dictionary view) is available via inquiry directives:

$$PLSQL_DEBUG

Debug setting for this compilation unit

$$PLSQL_OPTIMIZE_LEVEL

Optimization level for this compilation unit

$$PLSQL_CODE_TYPE

Compilation mode for this compilation unit

$$PLSQL_WARNINGS

Compilation warnings setting for this compilation unit

$$NLS_LENGTH_SEMANTICS

Value set for the NLS length semantics

See the cc_plsql_parameters.sql file on the book’s website for a demonstration that uses each of these parameters.

Referencing unit name and line number

Oracle implicitly defines four very useful inquiry directives for use in $IF and $ERROR directives:

$$PLSQL_UNIT

The name of the compilation unit in which the reference appears. If that unit is an anonymous block, then $$PLSQL_UNIT contains a NULL value.

$$PLSQL_LINE

The line number of the compilation unit where the reference appears.

$$PLSQL_UNIT_OWNER (Oracle Database 12c)

The name of the owner of the current PL/SQL program unit. If that unit is an anonymous block, then $$PLSQL_UNIT_OWNER contains a NULL value.

$$PLSQL_UNIT_TYPE (Oracle Database 12c)

The type of the current PL/SQL program unit—ANONYMOUS BLOCK, FUNCTION, PACKAGE, PACKAGE BODY, PROCEDURE, TRIGGER, TYPE, or TYPE BODY. Inside an anonymous block or non-DML trigger, $$PLSQL_UNIT_TYPE has the value ANONYMOUS BLOCK.

You can call two built-in functions, DBMS_UTILITY.FORMAT_CALL_STACK and DBMS_UTILITY.FORMAT_ERROR_BACKTRACE, to obtain current line numbers, but then you must also parse those strings to find the line number and program unit name. These inquiry directives provide the information more directly. Here is an example:

BEGIN

IF l_balance < 10000

THEN

raise_error (

err_name => 'BALANCE TOO LOW'

,failed_in => $$plsql_unit

,failed_on => $$plsql_line

);

END IF;

...

END;

Run the cc_line_unit.sql file on the book’s website to see a demonstration of using $$PLSQL_UNIT and $$PLSQL_LINE.

Note that when $$PLSQL_UNIT is referenced inside a package, it will return the name of the package, not the name of the individual procedure or function within the package.
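If you do not have the book's file to hand, a minimal sketch along the same lines is shown here. The procedure name show_location is an assumption made purely for illustration:

```sql
CREATE OR REPLACE PROCEDURE show_location
IS
BEGIN
   -- $$PLSQL_UNIT is replaced by the unit name and $$PLSQL_LINE by the
   -- line number on which the directive appears.
   DBMS_OUTPUT.put_line ($$PLSQL_UNIT || ', line ' || $$PLSQL_LINE);
END show_location;
/

BEGIN
   show_location;
END;
/
```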

Using the PLSQL_CCFLAGS parameter

Oracle offers a parameter, PLSQL_CCFLAGS, that you can use with conditional compilation. Essentially, it allows you to define name/value pairs, and the name can then be referenced as an inquiry directive in your conditional compilation logic. Here is an example:

ALTER SESSION SET PLSQL_CCFLAGS = 'use_debug:TRUE, trace_level:10';

The flag name can be set to any valid PL/SQL identifier, including reserved words and keywords (the identifier will be prefixed with $$, so there will be no confusion with normal PL/SQL code). The value assigned to the name must be one of the following: TRUE, FALSE, NULL, or a PLS_INTEGER literal.

The PLSQL_CCFLAGS value will be associated with each program that is then compiled in that session. If you want to keep those settings with the program, then future compilations with the ALTER...COMPILE command should include the REUSE SETTINGS clause.

Because you can change the value of this parameter and then compile selected program units, you can easily define different sets of inquiry directives for different programs.

Note that you can refer to a flag that is not defined in PLSQL_CCFLAGS; this flag will evaluate to NULL. If you enable compile-time warnings, this reference to an undefined flag will cause the database to report a PLW-06003: unknown inquiry directive warning (unless the source code is wrapped).
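The flags can also be supplied for a single program unit as part of the ALTER...COMPILE command, rather than for the whole session. The sketch below assumes a procedure named calculate_totals already exists in the current schema; the flag names are simply the ones used in the example above:

```sql
-- Recompile one unit with its own flags, keeping its other compiler settings.
ALTER PROCEDURE calculate_totals
   COMPILE PLSQL_CCFLAGS = 'use_debug:FALSE, trace_level:0'
   REUSE SETTINGS;

-- Check which flags are now stored with that unit.
SELECT name, plsql_ccflags
  FROM user_plsql_object_settings
 WHERE name = 'CALCULATE_TOTALS';
```

Setting flags this way leaves the session-level PLSQL_CCFLAGS value untouched, which can be convenient when only one unit needs different settings.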

The $IF Directive

Use the selection directive, implemented through the $IF statement, to direct the conditional compilation step in the preprocessor. Here is the general syntax of this directive:

$IF Boolean-expression

$THEN

code_fragment

[$ELSIF Boolean-expression

$THEN

code_fragment]

[$ELSE

code_fragment]

$END

where Boolean-expression is a static expression (it can be evaluated at the time of compilation) that evaluates to TRUE, FALSE, or NULL. The code_fragment can be any set of PL/SQL statements, which will then be passed to the compiler for compilation, as directed by the expression evaluations.

Static expressions can be constructed from any of the following elements:

§ Boolean, PLS_INTEGER, and NULL literals, plus combinations of these literals.

§ Boolean, PLS_INTEGER, and VARCHAR2 static expressions.

§ Inquiry directives (i.e., identifiers prefixed with $$). These directives can be provided by Oracle (e.g., $$PLSQL_OPTIMIZE_LEVEL; the full list is provided in the section The Optimizing Compiler) or set via the PLSQL_CCFLAGS compilation parameter (explained earlier in this chapter).

§ Static constants defined in a PL/SQL package.

§ Most comparison operations (>, <, =, and <> are fine, but you cannot use an IN expression), logical Boolean operations such as AND and OR, concatenations of static character expressions, and tests for NULL.

A static expression may not contain calls to procedures or functions that require execution; they cannot be evaluated during compilation and therefore will render invalid the expression within the $IF directive. You will get a compile error as follows:

PLS-00174: a static boolean expression must be used

Here are a few examples of static expressions in $IF directives:

§ If the user-defined inquiry directive controlling debugging is not NULL, then initialize the debug subsystem:

$IF $$app_debug_level IS NOT NULL $THEN

debug_pkg.initialize;

$END

§ Check the value of a user-defined package constant along with the optimization level:

$IF $$PLSQL_OPTIMIZE_LEVEL = 2 AND appdef_pkg.long_compilation

$THEN

$ERROR 'Do not use optimization level 2 for this program!' $END

$END

NOTE

String literals and concatenations of strings are allowed only in the $ERROR directive; they may not appear in the $IF directive.

The $ERROR Directive

Use the $ERROR directive to cause the current compilation to fail and return the error message provided. The syntax of this directive is:

$ERROR VARCHAR2_expression $END

Suppose that I need to set the optimization level for a particular program unit to 1, so that compilation time will be improved. In the following example, I use the $$ inquiry directive to check the value of the optimization level from the compilation environment. I then raise an error with the $ERROR directive as necessary:

/* File on web: cc_opt_level_check.sql */

SQL> CREATE OR REPLACE PROCEDURE long_compilation

2 IS

3 BEGIN

4 $IF $$plsql_optimize_level != 1

5 $THEN

6 $ERROR 'This program must be compiled with optimization level = 1' $END

7 $END

8 NULL;

9 END long_compilation;

10 /

Warning: Procedure created with compilation errors.

SQL> SHOW ERRORS

Errors for PROCEDURE LONG_COMPILATION:

LINE/COL ERROR

-------- -----------------------------------------------------------------

6/4 PLS-00179: $ERROR: This program must be compiled with

optimization level = 1
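To get past this error, the unit has to be compiled with the required setting. One way to do so, sketched here on the assumption that the procedure from the listing above exists in your schema, is to pass the parameter directly in the ALTER...COMPILE command:

```sql
-- Recompile with the optimization level that the $ERROR check demands.
ALTER PROCEDURE long_compilation COMPILE PLSQL_OPTIMIZE_LEVEL = 1;

-- Confirm the setting that is now stored with the unit.
SELECT name, plsql_optimize_level
  FROM user_plsql_object_settings
 WHERE name = 'LONG_COMPILATION';
```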

Synchronizing Code with Packaged Constants

Use of packaged constants within a selection directive allows you to easily synchronize multiple program units around a specific conditional compilation setting. This is possible because Oracle’s automatic dependency management is applied to selection directives. In other words, if program unit PROG contains a selection directive that references package PKG, then PROG is marked as dependent on PKG. When the specification of PKG is recompiled, all program units using the packaged constant are marked INVALID and must be recompiled.

Suppose I want to use conditional compilation to automatically include or exclude debugging and tracing logic in my code base. I define a package specification to hold the required constants:

/* File on web: cc_debug.pks */

PACKAGE cc_debug

IS

debug_active CONSTANT BOOLEAN := TRUE;

trace_level CONSTANT PLS_INTEGER := 10;

END cc_debug;

I then use these constants in the procedure calc_totals:

PROCEDURE calc_totals

IS

BEGIN

$IF cc_debug.debug_active AND cc_debug.trace_level > 5 $THEN

log_info (...);

$END

...

END calc_totals;

During development, the debug_active constant is initialized to TRUE. When it is time to move the code to production, I change the flag to FALSE and recompile the package. The calc_totals program and all other programs with similar selection directives are marked INVALID and must then be recompiled.
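The move to production described above might be sketched as follows. It assumes the cc_debug package and calc_totals procedure from this section exist in the current schema:

```sql
-- Flip the flag by recompiling the package specification.
CREATE OR REPLACE PACKAGE cc_debug
IS
   debug_active CONSTANT BOOLEAN := FALSE;
   trace_level CONSTANT PLS_INTEGER := 10;
END cc_debug;
/

-- Dependent units should now show a status of INVALID.
SELECT object_name, status
  FROM user_objects
 WHERE object_name = 'CALC_TOTALS';

-- Recompile so that the tracing code is left out of the compiled unit.
ALTER PROCEDURE calc_totals COMPILE;
```

Explicit recompilation is optional in the sense that the database will also try to recompile an invalid unit automatically the next time it is called.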

Program-Specific Settings with Inquiry Directives

Packaged constants are useful for coordinating settings across multiple program units. Inquiry directives, drawn from the compilation settings of individual programs, are a better fit when you need different settings applied to different programs.

Once you have compiled a program with a particular set of values, it will retain those values until the next compilation (from either a file or a simple recompilation using the ALTER...COMPILE command). Furthermore, a program is guaranteed to be recompiled with the same postprocessed source as was selected at the time of the previous compilation if all of the following conditions are TRUE:

§ None of the conditional compilation directives refer to package constants. Instead, they rely only on inquiry directives.

§ When the program is recompiled, the REUSE SETTINGS clause is used and the PLSQL_CCFLAGS parameter is not included in the ALTER...COMPILE command.

This capability is demonstrated by the cc_reuse_settings.sql script (available on the book’s website), whose output is shown next. I first set the value of app_debug to TRUE and then compile a program with that setting. A query against USER_PLSQL_OBJECT_SETTINGS shows that this value is now associated with the program unit:

/* File on web: cc_reuse_settings.sql */
SQL> ALTER SESSION SET plsql_ccflags = 'app_debug:TRUE';

SQL> CREATE OR REPLACE PROCEDURE test_ccflags
  2  IS
  3  BEGIN
  4     NULL;
  5  END test_ccflags;
  6  /

SQL> SELECT name, plsql_ccflags
  2    FROM user_plsql_object_settings
  3   WHERE NAME LIKE '%CCFLAGS%';

NAME                           PLSQL_CCFLAGS
------------------------------ ----------------------------
TEST_CCFLAGS                   app_debug:TRUE

I now alter the session, setting $$app_debug to evaluate to FALSE. I compile a new program with this setting:

SQL> ALTER SESSION SET plsql_ccflags = 'app_debug:FALSE';

SQL> CREATE OR REPLACE PROCEDURE test_ccflags_new
  2  IS
  3  BEGIN
  4     NULL;
  5  END test_ccflags_new;
  6  /

Then I recompile my existing program with REUSE SETTINGS:

SQL> ALTER PROCEDURE test_ccflags COMPILE REUSE SETTINGS;

A query against the data dictionary view now reveals that my settings are different for each program:

SQL> SELECT name, plsql_ccflags
  2    FROM user_plsql_object_settings
  3   WHERE NAME LIKE '%CCFLAGS%';

NAME                           PLSQL_CCFLAGS
------------------------------ ----------------------------
TEST_CCFLAGS                   app_debug:TRUE
TEST_CCFLAGS_NEW               app_debug:FALSE
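The test programs above have empty bodies, so the flag has no visible effect on them. A minimal sketch of a program that actually branches on the inquiry directive might look like the following. The name show_debug_setting is hypothetical, and the session-level setting is included only for illustration.

```sql
ALTER SESSION SET plsql_ccflags = 'app_debug:TRUE';

CREATE OR REPLACE PROCEDURE show_debug_setting
IS
BEGIN
   -- $$app_debug is resolved at compile time from PLSQL_CCFLAGS.
   -- If the flag is not defined, it evaluates to NULL and the
   -- $ELSE branch is the one that gets compiled.
   $IF $$app_debug $THEN
      DBMS_OUTPUT.put_line ('Debugging code was compiled in');
   $ELSE
      DBMS_OUTPUT.put_line ('Debugging code was compiled out');
   $END
END show_debug_setting;
/
```

Because the setting is stored with the program unit, a later ALTER PROCEDURE show_debug_setting COMPILE REUSE SETTINGS should keep the same branch, whatever the session's current PLSQL_CCFLAGS value happens to be.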

Working with Postprocessed Code

You can use the DBMS_PREPROCESSOR package to display or retrieve the source text of your program in its postprocessed form. DBMS_PREPROCESSOR offers two programs, overloaded to allow you to specify the object of interest in various ways, as well as to work with individual strings and collections:

DBMS_PREPROCESSOR.PRINT_POST_PROCESSED_SOURCE

Retrieves the postprocessed source and then displays it with the procedure DBMS_OUTPUT.PUT_LINE

DBMS_PREPROCESSOR.GET_POST_PROCESSED_SOURCE

Returns the postprocessed source as either a single string or a collection of strings

When working with the collection version of either of these programs, you will need to declare that collection based on the following package-defined collection:

TYPE DBMS_PREPROCESSOR.source_lines_t IS TABLE OF VARCHAR2(32767)
   INDEX BY BINARY_INTEGER;

The following sequence demonstrates the capability of these programs. First I compile a very small program with a selection directive based on the optimization level. I then display the postprocessed code, and it shows the correct branch of the $IF statement:

/* File on web: cc_postprocessor.sql */
PROCEDURE post_processed
IS
BEGIN
$IF $$PLSQL_OPTIMIZE_LEVEL = 1
$THEN
   -- Slow and easy
   NULL;
$ELSE
   -- Fast and modern and easy
   NULL;
$END
END post_processed;

SQL> BEGIN
  2     DBMS_PREPROCESSOR.PRINT_POST_PROCESSED_SOURCE (
  3        'PROCEDURE', USER, 'POST_PROCESSED');
  4  END;
  5  /

PROCEDURE post_processed
IS
BEGIN
   -- Fast and modern and easy
   NULL;
END post_processed;

In the following block, I use the “get” function to retrieve the postprocessed code, and then display it using DBMS_OUTPUT.PUT_LINE:

DECLARE
   l_postproc_code   DBMS_PREPROCESSOR.SOURCE_LINES_T;
   l_row             PLS_INTEGER;
BEGIN
   l_postproc_code :=
      DBMS_PREPROCESSOR.GET_POST_PROCESSED_SOURCE (
         'PROCEDURE', USER, 'POST_PROCESSED');
   l_row := l_postproc_code.FIRST;

   WHILE (l_row IS NOT NULL)
   LOOP
      DBMS_OUTPUT.put_line (   LPAD (l_row, 3)
                            || ' - '
                            || RTRIM (l_postproc_code (l_row), CHR (10))
                           );
      l_row := l_postproc_code.NEXT (l_row);
   END LOOP;
END;

Conditional compilation opens up all sorts of possibilities for PL/SQL developers and application administrators. And its usefulness only increases as new versions of the Oracle database are released and the DBMS_DB_VERSION constants can be put to full use, allowing us to take additional advantage of each version’s unique PL/SQL features.
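As a small illustration of version-based selection, the sketch below tests the DBMS_DB_VERSION.VERSION constant in a selection directive. The procedure name show_version_branch and the choice of version 11 as the dividing line are assumptions made for the example, not taken from the book's scripts.

```sql
CREATE OR REPLACE PROCEDURE show_version_branch
IS
BEGIN
   -- DBMS_DB_VERSION.VERSION is a packaged PLS_INTEGER constant, so it
   -- can be tested at compile time in a selection directive.
   $IF DBMS_DB_VERSION.VERSION >= 11 $THEN
      DBMS_OUTPUT.put_line ('Compiled the 11g-or-later branch');
   $ELSE
      DBMS_OUTPUT.put_line ('Compiled the pre-11g branch');
   $END
END show_version_branch;
/
```

In real code, the two branches would typically hold version-specific syntax or calls, so that a single source file compiles cleanly on each supported release.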

PL/SQL and Database Instance Memory

By economizing on its use of machine resources such as memory and CPU, an Oracle database can support tens of thousands of simultaneous users on a single database. Oracle’s memory management techniques have become quite sophisticated over the years, and correspondingly difficult to understand. Fortunately, the picture as it applies to PL/SQL and session-related memory is relatively simple. In addition, while there are a few things developers can do to reduce memory consumption in their PL/SQL code, database memory configuration and allocation is for the most part handled by DBAs.

The SGA, PGA, and UGA

When a client program such as SQL*Plus or SQL Developer interacts with the database, three memory structures may be used.

System global area (SGA)

The SGA is a group of shared memory structures, or SGA components, that contain data and control information for a single Oracle Database instance. All server and background processes share the SGA. Examples of data stored in the SGA include cached data blocks and shared SQL areas.

Process global area (PGA)

A PGA is a memory region that is not shared with multiple sessions and instead contains data and control information exclusively for use by a single Oracle process. Oracle Database creates the PGA when an Oracle process starts. There is one PGA for each server process and background process. The collection of individual PGAs is referred to as the total instance PGA, or instance PGA. Database initialization parameters set the size of the instance PGA, not individual PGAs.

User global area (UGA)

The UGA is memory allocated for storing information associated with a user session, such as logon information, and other information required by a database session. Essentially, the UGA stores the session state.

The location of the UGA in memory depends on whether the session has connected to the database using a dedicated or shared server mode.

Dedicated server mode

The database spawns a dedicated server process for each session. This is appropriate for workloads that either are intensive or involve long-running database calls. The UGA is placed in the PGA because no other server process will need to access it.

Shared server mode

Database calls are queued to a group of shared server processes that can service calls on behalf of any session. This is appropriate if there are many hundreds of concurrent sessions making short calls with a lot of intervening idle time. The UGA is placed in the SGA so that it can be accessed by any of the shared server processes.

The total size of the PGA can vary quite a bit based on what kinds of operations your application requires the server to perform. A PL/SQL package that populates a large PL/SQL collection in a package-level variable may, for example, consume large amounts of UGA memory, which in dedicated server mode counts toward that session's PGA.
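One way to observe this, assuming your account has been granted SELECT access to the V$MYSTAT and V$STATNAME views, is to query the session's own memory statistics before and after running the code of interest. The statistic names used here are standard, though the values you see will depend on platform, version, and workload.

```sql
-- Current and peak UGA/PGA memory for the current session, in bytes.
SELECT n.name, s.value AS value_in_bytes
  FROM v$mystat s
       JOIN v$statname n ON n.statistic# = s.statistic#
 WHERE n.name IN ('session uga memory',
                  'session uga memory max',
                  'session pga memory',
                  'session pga memory max')
 ORDER BY n.name;
```

Running the query, populating a large package-level collection, and then running the query again should show the growth attributable to that collection.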

If your application uses shared server mode, user processes may have to wait in a queue to be serviced. If any of your user processes invoke long-running PL/SQL blocks or SQL statements, the DBA may need to either configure the server with a greater number of shared processes or assign to those sessions a dedicated server.

Let’s next consider what memory looks like to an individual running program.

Cursors, Memory, and More

You may have written hundreds of programs that declare, open, fetch from, and close cursors. It’s impossible to execute SQL or PL/SQL without cursors, and statements often automatically make recursive calls that open more cursors. Because every cursor, whether implicit or explicit, requires an allocation of memory on the database server, tuning the database sometimes involves reducing the number of cursors required by an application.

NOTE

Although this section is devoted to memory, keep in mind that memory is only one aspect of tuning the database; you may actually improve overall performance by increasing the number of cursors to avoid soft parses.

The database assigns cursors to anonymous PL/SQL blocks in much the same way that it assigns cursors to SQL statements. For example, on the first parse call from a session, the database opens an area in UGA memory (the “private SQL area”) where it will put information specific to the run.

When executing a SQL statement or a PL/SQL block, the server first looks in the library cache to see if it can find a reusable parsed representation of it. If it does find such a shared PL/SQL area, the runtime engine establishes an association between the private SQL area and the shared SQL area. The shared cursor has to be found or built before the private SQL area can be allocated. The private SQL area memory requirements are part of what is determined during compilation and cached in the shared cursor. If no reusable shared area exists, the database will “hard parse” the statement or block. (As an aside, note that the database also prepares and caches a simple execution plan for anonymous PL/SQL blocks, which consists of calling the PL/SQL engine to interpret the bytecode.)

The database interprets the simplest of PL/SQL blocks—those that call no subprograms and include no embedded SQL statements—using only the memory allocated for its primary cursor. If your PL/SQL program includes SQL or PL/SQL calls, though, the database requires additional private SQL areas in the UGA. PL/SQL manages these on behalf of your application.

This brings us to another important fact about cursors: there are two ways a cursor can be closed. A soft-closed cursor is one that you can no longer use in your application without reopening it. This is what you get when you close a cursor using a statement such as this one:

CLOSE cursor_name;

or even when an implicit cursor closes automatically. However, PL/SQL does not immediately free the session memory associated with this cursor. Instead, it caches the cursor to avoid a soft parse should the cursor be opened again, as often happens. You will see, if you look in the V$OPEN_CURSOR view, that the CLOSE alone does not reduce the count of this session’s open cursors; as of 11.1, you can also fetch the new CURSOR_TYPE column value to get additional information about a cursor.
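To check this for yourself, a query along the following lines can be run in the same session before and after the CLOSE. It is only a sketch: it assumes SELECT access to V$OPEN_CURSOR and a database version that includes the CURSOR_TYPE column.

```sql
-- Count this session's entries in V$OPEN_CURSOR, grouped by cursor type.
SELECT cursor_type, COUNT (*) AS cursor_count
  FROM v$open_cursor
 WHERE sid = TO_NUMBER (SYS_CONTEXT ('USERENV', 'SID'))
 GROUP BY cursor_type
 ORDER BY cursor_type;
```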

It turns out that PL/SQL maintains its own “session cursor cache”; that is, it decides when to close a cursor for good. This cache can hold a maximum number of cursors, as specified by the OPEN_CURSORS database initialization parameter. A least recently used (LRU) algorithm determines which of the soft-closed cursors need to be hard closed and hence deallocated.

However, PL/SQL’s internal algorithm works optimally only if your programs close their cursors immediately after they are through fetching with them. So remember:

If you explicitly open a cursor, you should explicitly close it as soon as you are through using it (but not sooner).
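A minimal sketch of this rule follows. It queries USER_OBJECTS only so that the block is self-contained; the cursor and variable names are hypothetical. The exception section is there so that the cursor is also closed if an error interrupts the fetch loop.

```sql
DECLARE
   CURSOR obj_cur
   IS
      SELECT object_name
        FROM user_objects
       ORDER BY object_name;

   l_name   user_objects.object_name%TYPE;
BEGIN
   OPEN obj_cur;

   LOOP
      FETCH obj_cur INTO l_name;
      EXIT WHEN obj_cur%NOTFOUND;
      DBMS_OUTPUT.put_line (l_name);
   END LOOP;

   -- Close as soon as fetching is finished, but not before.
   CLOSE obj_cur;
EXCEPTION
   WHEN OTHERS
   THEN
      -- Make sure the cursor does not stay open after a failure.
      IF obj_cur%ISOPEN
      THEN
         CLOSE obj_cur;
      END IF;

      RAISE;
END;
/
```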