Achieving Extreme Performance with Oracle Exadata (Oracle Press) (2011)

PART II

Best Practices

CHAPTER 5

Managing the Exadata Database Machine

Once the Oracle Exadata Database Machine is deployed in your data center, you are required to perform ongoing administration tasks on its software and hardware components, as you would with any other system.

The initial setup and configuration of the Database Machine is typically priced into Exadata proposals by Oracle and performed by Oracle Advanced Customer Services (ACS) upon delivery and installation of the hardware. The setup process includes the installation and configuration of the operating system and the Oracle software, and the configuration of InfiniBand and Ethernet networks. At the end of the setup process, the Database Machine is ready and configured and includes a working Oracle database. You are then able to create additional new databases and start migrating databases from non-Exadata platforms, or start deploying your brand-new database applications. Since you might want to better understand the installation process or might want to configure additional databases on the Exadata Storage Servers on your own, we cover this process in this chapter.

The software components of the Exadata Storage Server, namely the Exadata Storage Server Software and the Oracle Linux operating system, need to be administered on an ongoing basis. The administration tasks include patching and upgrading the operating system and the Exadata Storage Server Software, and monitoring the software and hardware components. Apart from these regular administration activities, you may also set up advanced Exadata Storage Server Software features, such as I/O Resource Manager and Exadata security.

This chapter will discuss the different processes and tools available for performing administration tasks on the Exadata Storage Server components. The topics covered in the remainder of this chapter are focused on the architecture, administration, and monitoring of the Exadata Storage Servers.

NOTE

This chapter does not focus on administration of the database servers in the Exadata Database Machine. The database servers are managed, maintained, and monitored in the same way as a regular Oracle Database 11g Release 2 environment that uses Real Application Clusters (RAC) and Automatic Storage Management (ASM). When required, you patch the Oracle database and the Grid Infrastructure software, perform administration tasks, monitor and tune the databases, back up the database and operating system, and perform database upgrades, just as you would on standard Oracle systems. You also need to manage the operating system of the database server nodes (Oracle Linux or Solaris) and their hardware as you would any other Intel-based servers in your data center. Please also refer to Chapter 2.

Exadata Storage Server Architecture

At a high level, the Exadata Database Machine is composed of a database grid, a storage grid, and the InfiniBand network that provides the unified fabric for storage and database interinstance communication. The Oracle Sun servers that make up the database and storage grids are built using open standards-based hardware and contain the components normally found in enterprise-class servers, such as CPUs, memory, PCIe slots, hard disks, Host Channel Adapters (HCAs), and Ethernet interfaces. The Oracle Exadata Storage Server cells in the storage grid run the Oracle Linux operating system.

In the following section, we will discuss the architecture of the software components of Exadata Database Machine in detail.

Database Server Software Components

The database grid of the Database Machine utilizes Oracle ASM as the storage management layer. Oracle ASM acts as the cluster volume manager for the storage served by the Exadata Storage Servers, and is the only software that can be used to manage the storage grid of the Database Machine. Moreover, you are not allowed to directly attach or mount the storage served by the Exadata Storage Servers to any other server or storage volume manager.

Oracle ASM provides data placement and management services on the Exadata Storage Servers. It stripes and mirrors database files in chunks called ASM allocation units (AUs), which make up the ASM file extents, and spreads them across the disks served by the Exadata Storage Servers. Oracle ASM provides high availability of the extents and eliminates single points of failure by keeping a mirrored copy of each extent available at all times. Striping provides optimal I/O performance, because the I/O requests are parallelized across all the Exadata Storage Servers in the grid that hold the striped data.

Oracle ASM is involved during the initial placement of data and during ongoing management operations, such as rebalancing data across the Exadata Storage Servers. For regular I/O, however, the database server processes send their requests directly to the Exadata Storage Servers and bypass the Oracle ASM instance, which avoids the overhead of involving ASM in every database I/O operation. This direct communication is possible because the database instance caches the extent maps of ASM files in its SGA and uses them to issue read/write requests directly to the Exadata Storage Servers without going through the Oracle ASM instance. I/O requests are initiated the same way when the ASM files reside on non-Exadata storage.
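The direct I/O path can be pictured with a toy model. The Python sketch below is not Oracle code: it assumes each extent is a single 1 MB allocation unit, stripes extents round-robin across three hypothetical grid disk names, and ignores mirroring. It only shows why a database process holding the extent map can turn a file offset into a disk location without asking the ASM instance.

```python
from dataclasses import dataclass
from typing import List, Tuple

AU_SIZE = 1024 * 1024  # assumption: 1 MB allocation units, one AU per extent


@dataclass(frozen=True)
class ExtentLocation:
    disk: str       # grid disk that holds the extent
    au_number: int  # position of the AU on that disk


def build_extent_map(num_extents: int, disks: List[str]) -> List[ExtentLocation]:
    """Stripe extents round-robin across disks (the job ASM does at placement time)."""
    next_au = {disk: 0 for disk in disks}
    extent_map = []
    for i in range(num_extents):
        disk = disks[i % len(disks)]
        extent_map.append(ExtentLocation(disk, next_au[disk]))
        next_au[disk] += 1
    return extent_map


def locate(extent_map: List[ExtentLocation], file_offset: int) -> Tuple[str, int]:
    """Map a byte offset in the file to (disk, byte offset on that disk).

    A database process that has the extent map cached can do this lookup
    itself, so no round trip to the ASM instance is needed for each I/O.
    """
    extent_index, within_extent = divmod(file_offset, AU_SIZE)
    loc = extent_map[extent_index]
    return loc.disk, loc.au_number * AU_SIZE + within_extent


disks = ["DATA_CD_00_cellA", "DATA_CD_00_cellB", "DATA_CD_00_cellC"]  # hypothetical names
extent_map = build_extent_map(6, disks)
print(locate(extent_map, 4 * AU_SIZE + 10))  # extent 4 lands on cellB's second AU
```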

The database server processes and the Oracle ASM instance communicate with the Exadata Storage Servers using the iDB protocol. iDB is a data transfer protocol implemented in the Oracle kernel that provides the intelligence enabling the database and ASM instances to use Exadata-specific features such as offload processing and I/O Resource Manager (IORM). iDB is built on Reliable Datagram Sockets (RDS), the same protocol that Oracle RAC uses for interinstance communication over the InfiniBand network. RDS incorporates optimizations such as Remote Direct Memory Access (RDMA), which minimizes network protocol overhead by allowing distributed applications to transfer data directly between their memory structures without copying it through operating system memory, a capability more commonly referred to as Zero-copy Datagram Protocol (ZDP).

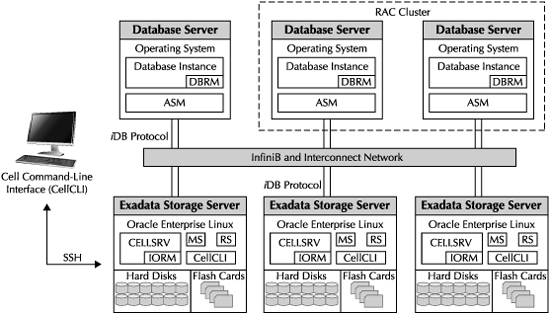

The RDS protocol also enables iDB to facilitate I/O bandwidth allocations for Exadata IORM, and provides bandwidth aggregation and failover of the InfiniBand network. The iDB communication is facilitated by software processes that reside on the database nodes and the Exadata Storage Servers, as shown in Figure 5-1.

FIGURE 5-1. Software components of the Oracle Exadata Database Machine

The Database Resource Manager (DBRM) component of the Oracle database manages CPU and I/O resources. DBRM communicates with Exadata Storage Server processes to perform I/O resource management for intradatabase resource plans: IORM uses the DBRM intradatabase plans created by administrators to regulate I/O utilization among the different consumer groups within the database.
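To make the idea of regulating I/O among consumer groups concrete, here is a simplified Python model. It assumes a single-level plan in which each consumer group has a percentage share and any unused share is redistributed to groups that still have demand. Real IORM and DBRM plans support more options (multiple levels, limits), so treat this as an illustration of proportional sharing, not as IORM's algorithm. The group names OLTP and REPORTS are hypothetical.

```python
from typing import Dict


def allocate_io(capacity: float, shares: Dict[str, float],
                demand: Dict[str, float]) -> Dict[str, float]:
    """Split I/O capacity among consumer groups in proportion to plan shares.

    Groups that need less than their share keep only what they need, and the
    leftover is offered to the groups that still have demand.
    """
    alloc = {group: 0.0 for group in shares}
    active = {group for group in shares
              if demand.get(group, 0.0) > 0 and shares[group] > 0}
    remaining = capacity
    while active and remaining > 1e-9:
        total_share = sum(shares[g] for g in active)
        granted = 0.0
        satisfied = set()
        for group in active:
            offer = remaining * shares[group] / total_share
            give = min(offer, demand[group] - alloc[group])
            alloc[group] += give
            granted += give
            if alloc[group] >= demand[group] - 1e-9:
                satisfied.add(group)
        remaining -= granted
        if not satisfied:
            break  # every active group used its whole offer, so capacity is exhausted
        active -= satisfied
    return alloc


plan = {"OLTP": 75, "REPORTS": 25}  # hypothetical intradatabase plan shares
print(allocate_io(100, plan, {"OLTP": 100, "REPORTS": 100}))  # contended: 75 / 25
print(allocate_io(100, plan, {"OLTP": 30, "REPORTS": 100}))   # unused OLTP share goes to REPORTS
```

The second call shows the design choice: when one group is quiet, the sketch does not leave its share idle but hands it to the groups that are still waiting for I/O.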

Exadata Storage Server Software Components

The storage grid in the Exadata Database Machine is composed of multiple Exadata Storage Servers, with each server providing a network-accessible storage device to be utilized by Oracle ASM. The operating system managing the Exadata Storage Servers is Oracle Linux, and the Oracle software providing the core features of the Exadata Storage Server is Oracle Exadata Storage Server Software.

The Exadata Storage Server Software consists of the following components:

Cell Server (CELLSRV): The CELLSRV process is the main kernel of the Exadata Storage Server Software and is responsible for serving iDB requests initiated by the database and ASM instances. It handles simple block I/O requests as well as offload processing requests. CELLSRV also implements IORM plans, throttling I/O bandwidth based on the I/O priorities the plan defines, and interacts with DBRM to implement intradatabase resource plans.

Management Server (MS): The MS process provides management and monitoring functions for the Exadata Storage Server Software. It raises alerts when the Exadata Storage Server components encounter exceptions, and it communicates with CELLSRV and operating system utilities to check the health of the software and hardware components.

Restart Server (RS): The RS process handles startup and shutdown requests for the Exadata Storage Server Software. It monitors the CELLSRV and MS processes and restarts them if they terminate unexpectedly. A backup RS process, in turn, restarts the primary RS process if the primary itself stops running.

CellCLI: The Cell Command-Line Interface (CellCLI) is the utility you use to perform management and administration functions on the Exadata Storage Server Software. CellCLI is covered in more detail later in this chapter.
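The monitoring relationships among these processes can be illustrated with a toy Python simulation. The polling loop, the shared state dictionary, and the restart counters are assumptions made for illustration; they do not describe how RS is actually implemented.

```python
from typing import Dict, List


class Watchdog:
    """Restarts any watched process that is found not running."""

    def __init__(self, name: str, watched: List[str], state: Dict[str, bool]):
        self.name = name
        self.watched = watched
        self.state = state  # shared map: process name -> is it running?
        self.restarts = {proc: 0 for proc in watched}

    def poll(self) -> None:
        for proc in self.watched:
            if not self.state[proc]:
                self.state[proc] = True  # simulate restarting the dead process
                self.restarts[proc] += 1


state = {"CELLSRV": True, "MS": True, "RS": True}
primary_rs = Watchdog("RS", ["CELLSRV", "MS"], state)
backup_rs = Watchdog("RS backup", ["RS"], state)


def poll_cycle() -> None:
    """The backup RS revives the primary first; the primary then checks CELLSRV and MS."""
    backup_rs.poll()
    primary_rs.poll()


# Simulate CELLSRV and the primary RS dying at the same time.
state["CELLSRV"] = False
state["RS"] = False
poll_cycle()
print(state)  # every process is running again
```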

Exadata Storage Layout

The components of the Exadata Storage Servers that provide persistent storage are the High Performance or High Capacity hard disks and the flash disks provided by the Sun Flash Accelerator F20 PCIe cards. In this section, we discuss best practices for provisioning these storage devices and presenting them to Oracle ASM. Once the storage is presented to Oracle ASM, you can create ASM diskgroups and then create tablespaces in the Oracle database that use those diskgroups to store database objects.

LUNs, Cell Disks, and Grid Disks

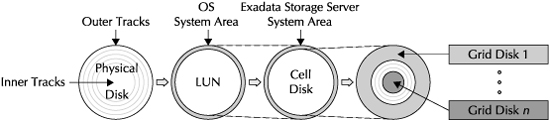

As shown in Figure 5-2, each hard disk gets presented to the Exadata Storage Server Software and the OS as a logical unit (LUN). A LUN is the representation of the hard disk to the OS. The Exadata Storage Server Software will take the LUN and format it to create a cell disk. There is a one-to-one relationship between a LUN and a cell disk.

When the cell disk is created, it reserves a small portion of the available storage on the LUN for a system area. The system area is used to store information about the LUNs, cell disks, and grid disks (discussed next) created on top of the cell disks. The system area on the first two drives is special since it stores the Oracle Linux operating system and the Linux file systems of the Exadata Storage Server.

Grid disks add another layer of logical abstraction over the physical storage and are created on top of cell disks. Grid disks are presented to Oracle ASM as ASM disks, and ASM diskgroups are created across the available ASM disks (that is, grid disks). You can create multiple grid disks per cell disk, and each grid disk carves out a chunk of space from the outermost unused tracks of the physical disk. The first grid disk created on a cell disk allocates space starting at the outer tracks and moving towards the inner tracks, reserving the number of tracks that corresponds to its size. This grid disk provides the fastest performance, since the outer tracks of a hard disk provide the best read/write performance. The next grid disk you create starts where the first one ends, and this process repeats until you exhaust the space on the cell disk or finish creating grid disks.

Creating multiple grid disks per cell disk allows you to create multiple pools of storage on the same Exadata Storage Server. The multiple grid disks can be assigned to separate ASM diskgroups, which can be provisioned to different databases. Even within the same database, you can store your active data on the grid disks created on the outer tracks and the less active data on the grid disks created on the inner tracks. The best practice for creating grid disks is to create a minimum of two grid disks per cell disk. The first grid disk will be used to store user data since it provides the best performance. The second grid disk can be used to store the database Fast Recovery Area (FRA) for storing the database flashback logs, archive logs, and backups.

FIGURE 5-2. Relationship between LUNs, cell disks, and grid disks
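The sequential, outside-in allocation of grid disks can be modeled in a few lines of Python. This sketch treats a cell disk as a range of gigabytes in which offset 0 is the outermost (fastest) track, ignores the system area, and uses hypothetical sizes and grid disk names.

```python
from typing import List, Tuple


def carve_grid_disks(cell_disk_gb: int,
                     requests: List[Tuple[str, int]]) -> List[Tuple[str, int, int]]:
    """Allocate grid disks one after another, starting at the outer tracks.

    Returns (name, start_gb, end_gb) tuples; offset 0 is the outermost track.
    """
    layout = []
    next_free = 0
    for name, size_gb in requests:
        if next_free + size_gb > cell_disk_gb:
            raise ValueError(f"not enough space left on the cell disk for {name}")
        layout.append((name, next_free, next_free + size_gb))
        next_free += size_gb  # the next grid disk starts where this one ends
    return layout


# Hypothetical 1,800 GB cell disk: DATA gets the fast outer tracks, RECOV the inner ones.
print(carve_grid_disks(1800, [("DATA", 1200), ("RECOV", 600)]))
```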

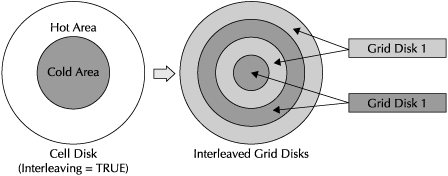

Interleaved Grid Disks

One drawback of creating multiple grid disks per cell disk as just described is that the second grid disk never gets the opportunity to be placed on the faster outer tracks. Moreover, only about half of the space within a grid disk tends to be used for hot data, and the remaining half is used for mirrored blocks or colder data, which does not need the higher performance of the outer tracks. You can mitigate this situation by creating interleaved grid disks instead of regular grid disks, as shown in Figure 5-3.

With interleaved grid disks, the second grid disk also gets the opportunity to be placed towards the faster-performing outer tracks. This mechanism is useful when you set up the Exadata Storage Server for multiple storage pools by creating multiple ASM diskgroups: every ASM diskgroup gets to use the faster-performing areas of the cell disk, which tends to equalize performance across the diskgroups.

FIGURE 5-3. Interleaved grid disks

Interleaving is enabled at the cell disk level by setting the INTERLEAVING attribute when the cell disk is created. Enabling interleaving divides the cell disk into two equal parts: the first is the faster-performing, or hot, part and is allocated towards the outer tracks; the second is the slower-performing, or cold, part and is allocated towards the inner tracks. When you create an interleaved grid disk on the cell disk, half of it is placed in the hot area and the remaining half in the cold area. This is repeated for each additional interleaved grid disk until you exhaust the space on the cell disk or no longer wish to create additional grid disks.
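The effect of interleaving on placement can be modeled with a short Python sketch: the cell disk is split into a hot half and a cold half, and each interleaved grid disk takes half of its size from each. Offset 0 is assumed to be the outermost track, the system area is ignored, and the sizes and grid disk names are hypothetical.

```python
from typing import List, Tuple

Span = Tuple[float, float]  # (start_gb, end_gb); offset 0 is the outermost track


def carve_interleaved(cell_disk_gb: float,
                      requests: List[Tuple[str, float]]) -> List[Tuple[str, Span, Span]]:
    """Place half of each grid disk in the hot (outer) half, half in the cold (inner) half."""
    half = cell_disk_gb / 2
    hot_next, cold_next = 0.0, half
    layout = []
    for name, size_gb in requests:
        piece = size_gb / 2
        if hot_next + piece > half:  # the hot and cold halves fill at the same rate
            raise ValueError(f"not enough space left on the cell disk for {name}")
        layout.append((name, (hot_next, hot_next + piece), (cold_next, cold_next + piece)))
        hot_next += piece
        cold_next += piece
    return layout


for name, hot, cold in carve_interleaved(1800, [("DATA", 1200), ("RECOV", 600)]):
    print(name, "hot:", hot, "cold:", cold)
```

Note that RECOV now receives part of the outer half of the disk, which a purely sequential layout would never give it.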

Flash Disks and Exadata Smart Flash Cache

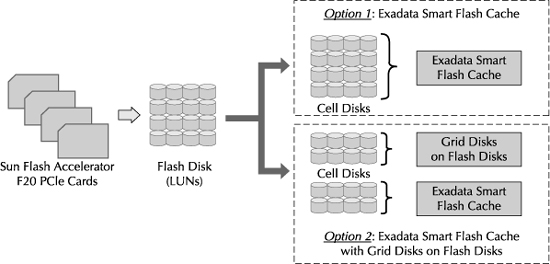

Each Exadata Storage Server in the Exadata Database Machine comes preinstalled with Sun Flash Accelerator F20 PCIe cards. This PCIe-based flash storage is primarily used to speed up access to data, since I/Os against flash are much faster than I/Os against hard disks. Each flash card is divided into multiple modules, and each module is exposed to the operating system as a LUN, categorized as a flash disk LUN, as shown in Figure 5-4.

FIGURE 5-4. Relationship between flash disk, Exadata Smart Flash Cache, and flash-based grid disks

The three main options for managing and utilizing the flash disks in the Exadata Storage Server are highlighted here:

Exadata Smart Flash Cache: The first option is to let the Exadata Storage Server Software manage the flash disks and use them for the Exadata Smart Flash Cache feature, described in detail in Chapter 3. The Exadata Smart Flash Cache stores frequently accessed data (hot data) in the flash cards, substantially improving read performance on hot data, while infrequently accessed data (cold data) resides on cost-effective, hard disk-based storage. When the database server sends read or write requests to the Exadata Storage Server Software, it includes additional information indicating whether the blocks are candidates to be read again. Based on this information, the Exadata Storage Server decides whether to cache the blocks in the Exadata Smart Flash Cache.

When the I/O requests sent to the Exadata Storage Server are random reads or index reads (db file sequential reads), the blocks are likely to be read again and are therefore cached by the Exadata Smart Flash Cache algorithm. For I/Os involving table scans, backups, redo log writes, and ASM mirrored writes, the blocks are unlikely to be read again and bypass the Exadata Smart Flash Cache. There is an exception to this rule, however, for tables and partitions defined with the KEEP qualifier, as described in Chapter 3 and also next.

Exadata Smart Flash Cache is configured by default and is also the best practice for managing the flash disks, since the Exadata Storage Server Software will be responsible for managing the flash without requiring any user intervention or tuning.

Pinning Objects in Exadata Smart Flash Cache: This option is an extension of the first one, and lets you mark database objects, such as tables, indexes, partitions, and LOB columns, to be pinned in the Exadata Smart Flash Cache. Objects marked for pinning are not literally kept in the cache 100 percent of the time; rather, the Exadata Smart Flash Cache algorithm gives them special treatment, keeping them in the cache more aggressively and for longer than other objects. Note that the Flash Cache is not preloaded with the pinned objects; instead, the individual blocks of the object are loaded incrementally as users access them.

The database object-level setting of the CELL_FLASH_CACHE attribute determines whether the object is enabled to be pinned in the Flash Cache. The CELL_FLASH_CACHE storage attribute can be set to KEEP, DEFAULT, or NONE. When set to KEEP, the object will be enabled for pinning, and when set to NONE it will never be cached. The DEFAULT attribute invokes the default caching algorithm, which is what was discussed in the first option.

By default, the caching algorithm skips caching the blocks read by Exadata Smart Scans altogether. However, if CELL_FLASH_CACHE is set to KEEP on a table undergoing a Smart Scan operation, the table's blocks will be cached in the Flash Cache.

You can modify the caching behavior on the object with a simple ALTER statement. In this example, the stocks table is enabled to be pinned in the Flash Cache.

SQL> ALTER TABLE stocks STORAGE (CELL_FLASH_CACHE KEEP);

NOTE

Be aware that changing the CELL_FLASH_CACHE from KEEP to NONE or DEFAULT will not remove the kept blocks from the Flash Cache—this change will simply subject the pinned blocks to the more aggressive aging algorithm used for the NONE or DEFAULT settings.

User Managed Flash Disks: The third option is to have the flash disks partially managed as Exadata Smart Flash Cache and partially managed by the user for manually placing database objects. When the user manages the flash disk, grid disks are created on a portion of the flash-based cell disks and exposed to Oracle ASM. ASM diskgroups created on these grid disks reside on the flash disks and thus provide the extreme I/O performance of flash storage. This option allows you to place hot, or frequently accessed, tables directly in a tablespace created on the flash-based ASM diskgroup.

One drawback of this method is that it requires you to analyze and identify the tables that qualify as “frequently accessed”. Moreover, when data access patterns change, you need to manage the flash disk space on an ongoing basis by moving objects in and out of the flash-based ASM diskgroup.

Another drawback of this method is that mirroring the data with NORMAL or HIGH redundancy on the ASM diskgroup consumes at least half of the flash disk space. You cannot do away with mirroring, since flash-based storage has higher failure rates than hard disks, and mirroring provides a better level of protection.
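The caching rules described for the first two options can be summarized as a small decision function. This Python sketch is a simplification based only on those rules: the I/O type names are labels chosen for illustration, and the assumption that KEEP overrides the default rule only for reads of the object is ours, not a documented Exadata rule. The real Exadata Smart Flash Cache algorithm also weighs hints sent by the database and ages blocks out over time, which this sketch ignores. The third, user-managed option involves no caching decision and is not modeled.

```python
from enum import Enum


class IOType(Enum):
    RANDOM_READ = "random read"
    INDEX_READ = "index read (db file sequential read)"
    TABLE_SCAN = "table scan"
    SMART_SCAN = "Smart Scan"
    BACKUP = "backup"
    REDO_WRITE = "redo log write"
    MIRROR_WRITE = "ASM mirrored write"


LIKELY_REREAD = {IOType.RANDOM_READ, IOType.INDEX_READ}
# Assumption: KEEP only changes the decision for reads of the object itself.
OBJECT_READS = LIKELY_REREAD | {IOType.TABLE_SCAN, IOType.SMART_SCAN}


def should_cache(io_type: IOType, cell_flash_cache: str = "DEFAULT") -> bool:
    """Decide whether blocks from this I/O go into the Smart Flash Cache."""
    setting = cell_flash_cache.upper()
    if setting == "NONE":
        return False                        # the object is never cached
    if setting == "KEEP":
        return io_type in OBJECT_READS      # cached even for scans and Smart Scans
    if setting == "DEFAULT":
        return io_type in LIKELY_REREAD     # only blocks likely to be read again
    raise ValueError(f"unknown CELL_FLASH_CACHE setting: {cell_flash_cache}")


print(should_cache(IOType.INDEX_READ))          # True
print(should_cache(IOType.SMART_SCAN))          # False
print(should_cache(IOType.SMART_SCAN, "KEEP"))  # True
```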

ASM Disks and ASM Diskgroup

An ASM diskgroup is the primary storage entity that ASM exposes to the Oracle database for storing database files. The ASM diskgroup is composed of one or more ASM disks, which are simply the grid disks exposed from each Exadata Storage Server to Oracle ASM. All ASM disks within an ASM diskgroup must reside on Exadata Storage Servers for the database to use Exadata features such as Exadata SQL offload processing.

With Oracle ASM, you can define failure groups in an ASM diskgroup. An ASM failure group consists of the ASM disks within the diskgroup that are likely to fail together because they share the same hardware. Failure groups tell ASM which disks are suitable for storing mirrored copies of data: you do not want the mirror copy of an ASM extent stored on an ASM disk in the same failure group as the primary copy, because both could fail together and you could lose your data. When you create ASM diskgroups in an Exadata environment, the ASM instance creates the failure groups automatically, placing the disks that belong to the same Exadata Storage Server in the same failure group.

An Exadata Grid redundant array of inexpensive disks (Grid RAID) uses ASM mirroring, configured by specifying a redundancy level when creating the ASM diskgroup. For ASM extents to remain available during Exadata Storage Server failures, you must use ASM mirroring with NORMAL or HIGH redundancy diskgroups. NORMAL redundancy allocates one mirror copy of each ASM extent in a failure group different from the one holding the primary copy, whereas HIGH redundancy allocates two mirror copies of the extent in two separate failure groups.

NORMAL redundancy tolerates the failure of a single Exadata Storage Server, or multiple failures within the same Storage Server, at a time, and HIGH redundancy tolerates the failure of two Exadata Storage Servers, or multiple failures confined to two Storage Servers, at a time in the storage grid. When you choose the redundancy level, ensure that the capacity and performance remaining after a failure, with fewer Exadata Storage Servers available in the Database Machine, still provide an acceptable level of service to the applications.
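How failure groups and redundancy levels combine can be checked with a short Python model. It assumes one failure group per Exadata Storage Server (as ASM sets up automatically on Exadata), places copies round-robin across failure groups, and uses hypothetical cell names. Real ASM placement is more sophisticated, but the survival rule is the same: an extent is lost only if every failure group holding one of its copies fails.

```python
from typing import Iterable, List, Set

COPIES = {"NORMAL": 2, "HIGH": 3}  # primary copy plus one or two mirror copies


def place_copies(extent_no: int, failure_groups: List[str], redundancy: str) -> List[str]:
    """Put each copy of an extent in a different failure group (round-robin)."""
    n = COPIES[redundancy]
    if len(failure_groups) < n:
        raise ValueError(f"{redundancy} redundancy needs at least {n} failure groups")
    start = extent_no % len(failure_groups)
    return [failure_groups[(start + k) % len(failure_groups)] for k in range(n)]


def survives(placements: Iterable[List[str]], failed: Set[str]) -> bool:
    """True if every extent still has at least one copy outside the failed groups."""
    return all(any(fg not in failed for fg in copies) for copies in placements)


cells = ["cellA", "cellB", "cellC"]  # hypothetical: one failure group per cell
normal = [place_copies(e, cells, "NORMAL") for e in range(6)]
high = [place_copies(e, cells, "HIGH") for e in range(6)]
print(survives(normal, {"cellA"}))           # True: one cell lost
print(survives(normal, {"cellA", "cellB"}))  # False: some extents had both copies there
print(survives(high, {"cellA", "cellB"}))    # True: a third copy remains
```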

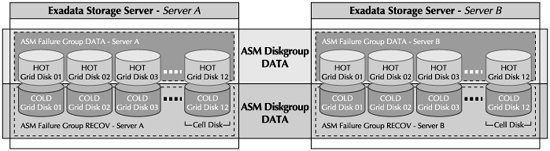

In the example shown in Figure 5-5, there are two Exadata Storage Servers, Server A and Server B. Two ASM diskgroups are created across Server A and Server B. The DATA diskgroup is defined on the faster-performing (hot) grid disks, and the RECOV diskgroup is defined on the slower-performing (cold) grid disks. The diskgroups are created with NORMAL redundancy, which implicitly creates two failure groups per diskgroup, and the extents from Server A’s failure group are mirrored to Server B’s failure group. If Server A fails, Oracle ASM transparently fetches the mirrored extent from Server B and satisfies the I/O request without incurring any downtime.

The names of the ASM disks are the same as those of the Exadata Storage Server grid disks and can be retrieved from the ASM instance by querying the name column of v$asm_disk. By looking at the ASM disk name, you can pinpoint the Exadata Storage Server and the cell disk to which the ASM disk belongs, because the name contains the Exadata Storage Server name and the cell disk name. This system-generated part of the name works well for locating the cell disk to which the grid disk belongs. However, when you create multiple grid disks per cell disk, you also need to tell whether a grid disk was created on the hot or the cold area of the hard disk; for that, you add a user-defined prefix to the system-defined name using the PREFIX attribute of the CREATE GRIDDISK command. Choose a prefix that is meaningful to you and that identifies the location of the grid disk within the cell disk.

FIGURE 5-5. ASM diskgroup architecture
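The naming convention can be put to use in scripts. The Python sketch below assumes grid disk names of the form PREFIX_CD_nn_cellname, for example DATA_CD_00_cel01, where DATA is the user-defined prefix and CD_00_cel01 is the cell disk name; check the actual names in your environment (for example, in v$asm_disk) before relying on this pattern. The mapping from prefix to disk area is likewise a hypothetical convention.

```python
import re
from typing import Dict, List

# Assumed pattern: <PREFIX>_<cell disk name>, with cell disk names like CD_00_cel01.
GRID_DISK_RE = re.compile(r"^(?P<prefix>[A-Za-z0-9]+)_(?P<cell_disk>CD_\d+_(?P<cell>\w+))$")

# Hypothetical convention: the prefix records which area of the disk was used.
AREA_BY_PREFIX = {"DATA": "hot (outer tracks)", "RECOV": "cold (inner tracks)"}


def parse_grid_disk(name: str) -> Dict[str, str]:
    """Split a grid disk (ASM disk) name into prefix, cell disk, cell, and area."""
    match = GRID_DISK_RE.match(name.strip())
    if match is None:
        raise ValueError(f"unexpected grid disk name: {name}")
    info = match.groupdict()
    info["area"] = AREA_BY_PREFIX.get(info["prefix"], "unknown")
    return info


def disks_by_cell(names: List[str]) -> Dict[str, List[str]]:
    """Group ASM disk names by the storage server they live on."""
    grouped: Dict[str, List[str]] = {}
    for name in names:
        grouped.setdefault(parse_grid_disk(name)["cell"], []).append(name)
    return grouped


print(parse_grid_disk("DATA_CD_00_cel01"))
```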

Exadata Storage Server Administration

To perform administration tasks on the Exadata Storage Servers, you first need to establish a communication path to the server’s terminal console. One of the options for accessing the console is through KVM (keyboard, video and mouse) hardware that comes with X2-2 configurations of the Oracle Exadata Database Machine and its predecessors. But to access the KVM hardware, you need to be physically present alongside the Database Machine, and you have no choice but to use KVM when configuring the Database Machine for the first time. Once you perform the initial configuration steps that are outlined in the Exadata Storage Server first boot sequence section later in the chapter, the Exadata Storage Servers are made accessible over the Ethernet network and you can then use alternative methods that allow you to log in remotely.

Once the server is accessible over the network, you can use the Secure Shell (SSH) protocol to log in remotely from an SSH-enabled client. The KVM console can also be redirected over the network to your desktop using the Sun Integrated Lights Out Manager (ILOM) remote client. Another way to access the server console, without graphics, is to redirect the console to a serial port and use ILOM to access the serial port through its web-based interface. Whichever remote method you use, the host you log in from must be able to access the Ethernet management network of the Exadata Database Machine.

Once you are at the OS login prompt, you need an OS user with privileges that are appropriate for performing administration tasks. The OS users and groups that exist on the Exadata Storage Servers are configured during the factory install process, and you will use only these preconfigured users to log in.

After logging in with the appropriate user, you have access to a variety of OS tools and the Cell Command-Line Interface (CellCLI). The CellCLI utility is available only on the Exadata Storage Servers; to run it from a remote host, you can use the Distributed Command Line Interface (dcli). The dcli utility can execute CellCLI commands simultaneously, in parallel, against a set of Exadata Storage Servers, eliminating the need to run the same command separately on each server. The dcli utility is particularly useful for certain configuration and monitoring steps. The CellCLI and dcli utilities are discussed next.
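To illustrate the fan-out that dcli performs, here is a minimal Python sketch that runs one CellCLI command on several cells in parallel over SSH. It is not dcli and does not reproduce its options; it assumes passwordless SSH to each cell as the celladmin user, and the host names are hypothetical. The runner parameter lets you substitute a fake command runner for testing.

```python
import shlex
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Runner = Callable[[List[str]], str]


def ssh_runner(argv: List[str]) -> str:
    """Run a local command (here, an ssh invocation) and return its stdout."""
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


def cellcli_argv(host: str, command: str, user: str = "celladmin") -> List[str]:
    """Build an ssh command line that runs `cellcli -e <command>` on one cell."""
    return ["ssh", f"{user}@{host}", f"cellcli -e {shlex.quote(command)}"]


def run_on_cells(hosts: List[str], command: str,
                 runner: Runner = ssh_runner, user: str = "celladmin") -> Dict[str, str]:
    """Send the same CellCLI command to every cell concurrently; return output per host."""
    with ThreadPoolExecutor(max_workers=max(1, len(hosts))) as pool:
        futures = {host: pool.submit(runner, cellcli_argv(host, command, user))
                   for host in hosts}
        return {host: future.result() for host, future in futures.items()}
```

For example, run_on_cells(["cel01", "cel02", "cel03"], "LIST CELL DETAIL") would return a dictionary mapping each of these hypothetical host names to its CellCLI output, raising an error if any cell's command fails.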

Using CellCLI

Using CellCLI, you can configure the Exadata Storage Server Software components, perform administration tasks such as startup and shutdown of the Exadata Storage Server Software, and perform monitoring and maintenance activities. CellCLI is a command-line executable that comes preinstalled on the Exadata Storage Servers and allows you to administer only the Storage Server on which the utility resides. You can execute CellCLI either by directly logging on to the Storage Server or through a networked server that can access the Storage Server using SSH.

NOTE

The term “Cell” is sometimes used to reference the Exadata Storage Server. The Cell is also an object within the Exadata Storage Server that can be queried through CellCLI. The Cell object refers to the Exadata Storage Server itself and stores its attributes such as the name, status, and network settings.

The commands that can be executed at the CellCLI prompt follow syntactical rules as with any other command-line interface. The lexical syntax of CellCLI commands is

{admin-command | object-command object} [options];

The first part of the command indicates its type, which is either an admin-command or an object-command. The lexical syntax is described in detail here:

admin-command dictates an administration action to CellCLI. These commands will perform operations that are directed to the CellCLI utility. Common admin commands are START, SPOOL, HELP, and QUIT.

object-command will direct administrative actions to be performed on the cell objects. The object command is qualified by an object clause, which is the cell object upon which the command will be applied. Examples of cell objects are the cell disks, grid disks, flash cache, and the cell. Common object commands are CREATE, ALTER, and LIST.

options provides the ability to specify additional parameters or options to the command.
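To make the syntax rule concrete, here is a minimal Python sketch that splits a command line into the parts just described. It is a toy for illustration, not CellCLI's actual parser: the function name `classify` is hypothetical, and the keyword sets contain only the commands named above.

```python
# Toy tokenizer for the CellCLI lexical rule:
#   {admin-command | object-command object} [options];
# Only the commands named in the text are recognized.
ADMIN_COMMANDS = {"START", "SPOOL", "HELP", "QUIT"}
OBJECT_COMMANDS = {"CREATE", "ALTER", "LIST"}


def classify(command_line):
    """Return (kind, command, object, options) for one CellCLI command."""
    text = command_line.strip().rstrip(";").strip()
    words = text.split()
    if not words:
        raise ValueError("empty command")
    verb = words[0].upper()  # keywords are matched case-insensitively in this sketch
    if verb in ADMIN_COMMANDS:
        # Admin commands act on the CellCLI utility itself: no object clause.
        return ("admin-command", verb, None, words[1:])
    if verb in OBJECT_COMMANDS:
        # Object commands must name the cell object they apply to.
        if len(words) < 2:
            raise ValueError(verb + " requires an object clause")
        return ("object-command", verb, words[1].upper(), words[2:])
    raise ValueError("unrecognized command: " + verb)


print(classify("LIST CELL DETAIL;"))  # ('object-command', 'LIST', 'CELL', ['DETAIL'])
```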

CellCLI can be invoked in an interactive mode, which is the default mode and does not require any options to be specified at the command line. The interactive mode allows users to execute multiple commands in a single session until the user executes the QUIT or EXIT command.

The following example invokes the CellCLI utility and runs the LIST command to list the attributes of the Cell object. The Cell object is associated with the Exadata Storage Server and will store its attributes such as the cell name, online/offline status, and the IP addresses configured for the server. The LIST command is explained in detail in the monitoring section of this chapter.

$> cellcli

CellCLI> LIST CELL DETAIL

CellCLI> QUIT

You can also invoke CellCLI with the -e option and supply the CellCLI command directly at the OS command line as shown next. This command will display the same result as the previous example.

$> cellcli -e LIST CELL DETAIL

Exadata Storage Server OS Users and Privileges

The CellCLI utility does not have its own authentication mechanism; it relies on the operating system authentication of the Linux user. CellCLI assigns privileges within the CellCLI domain based on the OS user that invokes it. The pre-created OS users that you will use to log in to the Exadata Storage Server are root, celladmin, and cellmonitor, and these are the only users allowed on the Storage Servers. These users are authorized to perform tasks related to administration, monitoring, and maintenance of the Exadata Storage Server and the Exadata Storage Server Software. Although the root user gives you super-user privileges on the server, remember that Oracle prohibits any modification of the Exadata Storage Server or its components. This includes installing new software or hardware and modifying existing software or hardware components; the only exceptions are the modifications made by applying Exadata Storage Server patches and upgrades.

The Exadata Storage Server OS users have the following characteristics and privileges associated with them:

cellmonitor The cellmonitor user can perform monitoring functions on the Exadata Storage Server using the CellCLI interface. The user is authorized only to execute the CellCLI LIST command, and all other commands that modify the configuration of the Exadata Storage Server, such as CREATE and ALTER commands, are blocked for cellmonitor.

celladmin The celladmin user has the authority to perform administration tasks from the CellCLI interface. The administration tasks include creating, altering, and deleting cell objects, such as grid disks and cell disks. The only CellCLI command that the celladmin user is not authorized to run is CALIBRATE, which captures I/O performance metrics of the hard disks and Exadata Smart Flash Cache by exercising an I/O load against these devices.

At the OS level, the celladmin user can access the Automatic Diagnostic Repository Command Interpreter (adrci) utility to package incidents for sending to Oracle Support.

root The root user has super-user privileges at the OS and the CellCLI levels. The root user can execute OS utilities for performing maintenance and setup of Exadata Storage Server hardware and software components. Root can modify the OS parameter files, which is required for certain configuration steps, such as modifying the Exadata Storage Server IP addresses and setting up Exadata security.

Root is also a super-user on the CellCLI interface and has the authority to run all CellCLI commands, including CALIBRATE.
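The privilege rules above can be summarized as a small decision function. The following Python sketch is a simplified model, not how CellCLI is implemented: it looks only at the command verb, it ignores admin commands such as HELP and QUIT, and the function name `is_allowed` is hypothetical.

```python
# Simplified model of the CellCLI privileges of the three OS users.
# Only the command verb is considered; admin commands are not modeled.


def is_allowed(os_user, command):
    """Return True if os_user may run the given CellCLI command."""
    verb = command.split()[0].upper()
    if os_user == "root":
        return True  # super-user: every command, including CALIBRATE
    if os_user == "celladmin":
        return verb != "CALIBRATE"  # everything except CALIBRATE
    if os_user == "cellmonitor":
        return verb == "LIST"  # monitoring only
    return False  # no other OS users are allowed on the Storage Servers


for user in ("cellmonitor", "celladmin", "root"):
    print(user, [is_allowed(user, c) for c in ("LIST CELL", "ALTER CELL", "CALIBRATE")])
```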

Using dcli

The dcli utility provides a centralized command-line interface for managing multiple Exadata Storage Servers from a single location. The dcli utility allows you to execute an OS command, in parallel, against a set of Exadata Storage Servers. When the command completes, the output of the command from each of the Exadata Storage Servers is combined into one and displayed as a unified result.

By using dcli, you can execute a CellCLI command against a set of Exadata Storage Servers in parallel and observe the output returned by all the Storage Servers from one central location. The dcli utility is extremely handy when you want to execute the same command against multiple servers without having to run it on each server individually; in contrast, the CellCLI utility only lets you run commands on a single Storage Server at a time.
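The fan-out-and-merge pattern behind dcli can be sketched in a few lines of Python. This is an illustration, not dcli's source: the `run_on_host` callable stands in for the SSH call dcli makes, the host names are hypothetical, and the `host: line` prefix mimics the way dcli labels each output line with the cell it came from.

```python
from concurrent.futures import ThreadPoolExecutor


def fan_out(hosts, command, run_on_host):
    """Run command on every host in parallel and merge the output.

    run_on_host(host, command) returns the command's output as a string;
    in dcli this role is played by SSH.
    """
    with ThreadPoolExecutor(max_workers=max(len(hosts), 1)) as pool:
        # map() returns results in input order, so the merge is deterministic.
        outputs = list(pool.map(lambda host: run_on_host(host, command), hosts))
    merged = []
    for host, output in zip(hosts, outputs):
        merged.extend(host + ": " + line for line in output.splitlines())
    return "\n".join(merged)


def fake_runner(host, command):
    # Stand-in for SSH: every cell reports itself online.
    return host + "\tonline"


print(fan_out(["cell01", "cell02"], "cellcli -e LIST CELL", fake_runner))
```

Running the remote calls in threads is what lets the total elapsed time approach that of the slowest server rather than the sum of all of them.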

The dcli utility can be set up on any host, provided the following requirements are met:

The dcli executable residing on the Exadata Storage Server needs to be copied to the host that will execute dcli commands. The Exadata Storage Servers have dcli preinstalled with the factory image.

dcli is a Python script and requires Python to be installed and accessible to the user invoking dcli. Python is preinstalled and configured on the database servers and the Exadata Storage Servers; if you plan to use a server residing outside the Database Machine, ensure that it has Python 2.3 or later installed.

SSH user equivalence must be set up between the user that runs dcli on the host and the Exadata Storage Server user that will execute the commands. This configuration needs to be done on all Exadata Storage Servers that you will be managing from dcli. You can use the dcli utility itself to set up SSH equivalence. Refer to the section “Using the dcli” in the Exadata Storage Server Software User’s Guide for more details.

The dcli utility is invoked from the OS command line and has the usage syntax specified here:

$> dcli [options] [command]

dcli takes two inputs: options and command. The command is the OS command that you will execute on the Exadata Storage Server. CellCLI commands are our focus in this chapter, but you can execute any OS command from dcli (for example, OS-level monitoring commands such as vmstat), as long as the user set up for SSH equivalence has the authority to run the command on the Exadata Storage Server. Note that dcli executes one command at a time and does not allow executing multiple commands in a single invocation.

Some frequently used options of dcli are listed here:

-c cells Allows you to specify the Exadata Storage Server(s) that you want to execute the command on. Multiple servers are separated by a comma.

-g group_file You can add the list of Exadata Storage Servers to group_file and use the file instead of a long, comma-separated list.

-l user This option logs in to the Exadata Storage Server as the specified user (celladmin if the option is omitted). The user needs to be set up for SSH equivalence with the OS user invoking dcli on the remote host.

-k This option is used to set up SSH equivalence. It requires the -c or -g option to specify the Exadata Storage Servers on which to set up SSH equivalence.

-x script If the command is too long to be specified on the command line, you can save the command in a script file and use the -x option to execute the script file.

-h This will display the help on dcli.

When executing CellCLI through dcli, you should use the -e option of CellCLI at the dcli command line.
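The options above combine into an ordinary argument list. The following Python sketch assembles one; `build_dcli_argv` is a hypothetical helper that covers only -c, -g, and -l, and it assumes the CellCLI executable is invoked as `cellcli`. The resulting list could be passed to `subprocess.run` on a host where dcli is installed and SSH equivalence is configured.

```python
def build_dcli_argv(cellcli_command, cells=None, group_file=None, user=None):
    """Assemble a dcli command line that runs one CellCLI command.

    Exactly one of cells (a list of host names) or group_file must be given.
    """
    if (cells is None) == (group_file is None):
        raise ValueError("specify exactly one of cells or group_file")
    argv = ["dcli"]
    if cells is not None:
        argv += ["-c", ",".join(cells)]  # -c takes a comma-separated list
    else:
        argv += ["-g", group_file]
    if user is not None:
        argv += ["-l", user]
    # CellCLI runs non-interactively via -e; dcli receives it as one argument.
    argv.append("cellcli -e " + cellcli_command)
    return argv


print(build_dcli_argv("LIST CELL", cells=["cell01", "cell02"], user="celladmin"))
```

Building a list instead of a single shell string keeps the quoted CellCLI command intact without any extra shell escaping.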

dcli Examples

To set up SSH user equivalence using dcli, create a text file (named group_file for this example) that contains all Exadata Storage Server hostnames or IP addresses, and execute the dcli command with the -k option, as shown next. The command prompts you to enter the password for the user that will have the SSH equivalence set. The password is required to set up the SSH equivalence key files.

$> dcli -k -g group_file

If you want to display the name and status of the grid disks on each Exadata Storage Server, execute the following dcli command. The command will execute the LIST GRIDDISK command on all the Exadata Storage Servers that are specified in the group_file.

$> dcli -g group_file "cellcli -e LIST GRIDDISK ATTRIBUTES name, status"
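Each line of the combined output starts with the name of the cell that produced it, so the result is easy to post-process. The following Python sketch groups the lines by cell and flags grid disks that are not reported as active. `split_by_cell` is a hypothetical helper, and the `cell: text` line format and the sample lines are assumptions for illustration.

```python
from collections import defaultdict


def split_by_cell(dcli_output):
    """Group combined dcli output lines by the cell name before the first colon."""
    per_cell = defaultdict(list)
    for line in dcli_output.splitlines():
        cell, sep, rest = line.partition(":")
        if not sep or not rest.strip():
            continue  # skip blank lines and lines without a cell prefix
        per_cell[cell.strip()].append(rest.strip())
    return dict(per_cell)


# Hypothetical output of the LIST GRIDDISK command above.
sample = """cell01: data_CD_00_cell01 active
cell01: recov_CD_00_cell01 active
cell02: data_CD_00_cell02 inactive"""

for cell, rows in split_by_cell(sample).items():
    # The status is the last whitespace-separated field of each row.
    inactive = [row for row in rows if row.split()[-1] != "active"]
    print(cell, "inactive:", inactive)
```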

Exadata Storage Server Setup

The initial setup and configuration of the Exadata Storage Servers will be performed when the Exadata Database Machine gets installed at the customer site. We will touch upon the initial configuration setup steps briefly in this section.

At a high level, the setup process of the Exadata Storage Servers can be divided into two phases. The first phase configures the Exadata Storage Server and the operating system so that they are accessible via the management network, listening on the appropriate Ethernet IP addresses. This phase also configures the fully qualified domain name (FQDN), the Domain Name System (DNS) settings, and a few other items that we will discuss in the next section.

The steps involved in the second phase configure the Exadata Storage Server Software and carve out the storage devices so that the Exadata Storage Server can present them to Oracle ASM as ASM disks. The ASM disks will be used to configure ASM diskgroups, which in turn will be used to create tablespaces in the Oracle Database.

The next section will first discuss the steps required for configuring the Exadata Storage Server and the OS.

Phase 1: Exadata Storage Server and OS Configuration

The Database Machine gets delivered to the customer site with a preinstalled factory image of the OS and the Exadata Storage Server Software. The OS and the software are not fully configured upon delivery, since the configuration steps require IP addresses of InfiniBand and Ethernet networks to be set up and routable (among other requirements, which we will see later), and this is only possible when the machine is at the customer site and not at the factory.

Exadata Storage Server First Boot Sequence

When the server is booted for the first time using the factory image, it will undergo a first boot sequence. The sequence is a series of prompts requesting your input, and, based on the information you provide, the Exadata Storage Server will be configured to listen on the network using the IP addresses provided at the prompt.

It will be necessary to have the following information ready prior to going through the first boot sequence:

DNS Servers: It is not a requirement to register the Ethernet IP addresses of the Exadata Storage Server in the DNS, but if you are doing so, the Primary and Secondary DNS Server information needs to be provided.

Time zone and location of the Exadata Storage Servers.

Network Time Protocol (NTP) Servers are needed for network time synchronization.

Ethernet and InfiniBand IP addresses: You will require one InfiniBand IP address during the first boot sequence. Even though you have two InfiniBand ports in each Exadata Storage Server, the ports are bonded for high availability on each server and will be presented to the OS as one port requiring one IP address. You also need one Ethernet IP address for the management network that connects the Ethernet ports of the Exadata Storage Server to the Exadata Database Machine’s Ethernet admin switch.

Fully qualified domain name (FQDN) for the server.

Oracle ILOM setup: The Oracle ILOM interface will be set up during the first boot sequence, and its configuration requires one IP address on which its built-in web server will listen. Once ILOM is configured, you will be able to use a web browser to connect to ILOM and perform server-level management functions, such as starting up and shutting down the server, monitoring the hardware, and many other tasks. More details on ILOM are discussed later in this chapter.
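Collecting these values into a worksheet and checking them before the first boot can save a second pass through the prompts. The following Python sketch is a hypothetical pre-check, not an Oracle tool. The field names and every address are placeholders, and the rule that the InfiniBand, management, and ILOM addresses must differ is this sketch's own assumption, based on each being requested separately.

```python
import ipaddress

# Hypothetical first-boot worksheet; all values are illustrative placeholders.
worksheet = {
    "dns_servers": ["10.0.0.10", "10.0.0.11"],  # optional: only if registering in DNS
    "time_zone": "America/Los_Angeles",
    "ntp_servers": ["10.0.0.20"],
    "infiniband_ip": "192.168.10.3",  # one address: the two IB ports are bonded
    "management_ip": "10.0.1.3",      # Ethernet management network
    "ilom_ip": "10.0.1.103",          # ILOM's built-in web server
    "fqdn": "cell01.example.com",
}


def is_ip(value):
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


def check_worksheet(ws):
    """Return a list of problems; an empty list means the worksheet looks complete."""
    problems = []
    single = ("infiniband_ip", "management_ip", "ilom_ip")
    for key in single:
        if not is_ip(ws.get(key)):
            problems.append(key + " is not a valid IP address")
    if len({ws.get(key) for key in single}) != len(single):
        problems.append("InfiniBand, management, and ILOM addresses must differ")
    if not ws.get("ntp_servers"):
        problems.append("at least one NTP server is needed")
    for key in ("dns_servers", "ntp_servers"):
        problems += [key + ": invalid address " + a for a in ws.get(key, []) if not is_ip(a)]
    if "." not in ws.get("fqdn", ""):
        problems.append("fqdn must be fully qualified")
    if not ws.get("time_zone"):
        problems.append("time_zone is missing")
    return problems


print(check_worksheet(worksheet))
```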

After the first boot configuration is complete, the Exadata Storage Server boots to the Linux prompt and you are ready to log in with the built-in users. At this point, the Exadata Storage Server Software processes have been started, but they are not yet fully functional and require additional configuration, which will be discussed in the next phase.

Perform Sanity Checks on the Exadata Storage Server Using CALIBRATE

To make sure that the Exadata Storage Server hardware is performing as advertised, you should execute the CellCLI CALIBRATE command. The CALIBRATE command exercises the storage devices of the server with a prebuilt I/O load profile and monitors the raw I/O performance at the block level. You should compare the metrics reported by CALIBRATE with the advertised values. An example of running the CALIBRATE command is shown next. The FORCE parameter is mandatory if you have already created the Exadata Cell object (the Exadata Cell object is discussed in the next section).

[root@cell01 ~]# cellcli

CellCLI: Release 11.2.1.2.0 - Production on Mon Nov 02 16:42:06 PST 2009

Copyright (c) 2007, 2009, Oracle. All rights reserved.

Cell Efficiency ratio: 1.0

CellCLI> CALIBRATE FORCE

Calibration will take a few minutes…

Aggregate random read throughput across all hard disk luns: 1601 MBPS

Aggregate random read throughput across all flash disk luns: 4194.49 MBPS

Aggregate random read IOs per second (IOPS) across all hard disk luns: 4838

Aggregate random read IOs per second (IOPS) across all flash disk luns: 137588

Controller read throughput: 1615.85 MBPS

Calibrating hard disks (read only) …

Lun 0_0 on drive [20:0] random read throughput: 152.81 MBPS, and 417 IOPS

Lun 0_1 on drive [20:1] random read throughput: 154.72 MBPS, and 406 IOPS

. . .

Lun 0_10 on drive [20:10] random read throughput: 156.84 MBPS, and 421 IOPS

Lun 0_11 on drive [20:11] random read throughput: 151.58 MBPS, and 424 IOPS

Calibrating flash disks (read only, note that writes will be significantly slower).

Lun 1_0 on drive [[10:0:0:0]] random read throughput: 269.06 MBPS, and 19680 IOPS

Lun 1_1 on drive [[10:0:1:0]] random read throughput: 269.18 MBPS, and 19667 IOPS

…

Lun 5_2 on drive [[11:0:2:0]] random read throughput: 269.15 MBPS, and 19603 IOPS

Lun 5_3 on drive [[11:0:3:0]] random read throughput: 268.91 MBPS, and 19637 IOPS

CALIBRATE results are within an acceptable range.

NOTE

The CALIBRATE command will exhaust all the available I/O resources on the Exadata Storage Server. Use caution when running this command, especially if you have databases that are actively using the storage.
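When CALIBRATE is run on many servers, checking the per-LUN lines by eye becomes tedious. The following Python sketch extracts throughput and IOPS for each LUN from output in the format shown above and lists LUNs below a floor. The helper names are hypothetical, and the thresholds are made-up placeholders rather than Oracle's published figures. The `\s+` before IOPS also tolerates lines that wrap, as the flash lines do in some captures.

```python
import re

# Matches per-LUN lines such as:
#   Lun 0_0 on drive [20:0] random read throughput: 152.81 MBPS, and 417 IOPS
LUN_LINE = re.compile(
    r"Lun (?P<lun>\S+) on drive \[+(?P<drive>[\d:]+)\]+ "
    r"random read throughput: (?P<mbps>[\d.]+) MBPS, and (?P<iops>\d+)\s+IOPS"
)


def parse_calibrate(text):
    """Return {lun: (mbps, iops)} for every per-LUN line in CALIBRATE output."""
    return {m.group("lun"): (float(m.group("mbps")), int(m.group("iops")))
            for m in LUN_LINE.finditer(text)}


def below(results, min_mbps, min_iops):
    """List LUNs whose throughput or IOPS fall under the given minimums."""
    return sorted(lun for lun, (mbps, iops) in results.items()
                  if mbps < min_mbps or iops < min_iops)


sample = """Lun 0_0 on drive [20:0] random read throughput: 152.81 MBPS, and 417 IOPS
Lun 0_1 on drive [20:1] random read throughput: 154.72 MBPS, and 406 IOPS
Lun 1_0 on drive [[10:0:0:0]] random read throughput: 269.06 MBPS, and 19680 IOPS"""

res = parse_calibrate(sample)
# In the output above, hard-disk LUNs are numbered 0_n; the floor is hypothetical.
hard_disks = {lun: v for lun, v in res.items() if lun.startswith("0_")}
print(below(hard_disks, 150, 410))
```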

Phase 2: Exadata Storage Server Software Configuration

Before you start configuring the Exadata Storage Server Software, you need to decide on the provisioning model of the grid disks and the flash disks. The points to be considered are the number of grid disks to be created per cell disk, use of interleaved grid disks, and whether to utilize the flash disks as Exadata Smart Flash Cache or as grid disks, or a mix of the two. The best practice is to create at least two grid disks per cell disk, and to configure all the flash disks as Exadata Smart Flash Cache. For more details, refer to the earlier section in this chapter about architecting the storage on the Exadata Storage Servers.

The first step in configuring the Exadata Storage Server Software is to configure the Cell object. The Cell object refers to the Exadata Storage Server Software instance as a whole. Some of the parameters that you will set in this step are the interconnect network that the Exadata Storage Server uses to serve data traffic to the database nodes and the SNMP settings for monitoring and for forwarding alerts. The steps that follow the Cell creation step configure the Exadata cell disks, grid disks, and Exadata Smart Flash Cache.

Create the Exadata Cell Object

When configuring the Cell object, ensure that the interconnect network that the Exadata Storage Server uses to route data to the database nodes is the InfiniBand network. You can do this by verifying the name of the InfiniBand network interface using the Linux ifconfig utility. The dual ports of the InfiniBand network interface are configured during the initial setup process in a bonded mode and given a logical name of bond0.

Some of the other optional settings that you can perform during the Cell creation are to set up the Simple Mail Transfer Protocol (SMTP) server information for forwarding of alerts via e-mails and to set up the Simple Network Management Protocol (SNMP) to forward SNMP traps to a management and monitoring software infrastructure. You can also assign the logical realm name to the Exadata Storage Server. Realms are a logical grouping of Exadata Storage Servers for security purposes, and are discussed later in this chapter.

To create the Cell object, invoke the CellCLI utility and execute the CREATE CELL command.

CellCLI> CREATE CELL cell01 interconnect1=bond0, realmname=prod_cells

Cell cell01 successfully created

Create the Cell Disks and Grid Disks

The LUNs (logical units) presented to the Exadata Storage Server Software are used to create cell disks and grid disks. Use the CREATE CELLDISK command shown next to create cell disks on the LUNs for which the cell disks have not yet been created, using default values. The cell disk is created on the entire LUN, and you are only allowed to create one cell disk per LUN, since there is a one-to-one mapping between a cell disk and a LUN. The CREATE CELLDISK command can be run repeatedly without affecting the LUNs or cell disks that were created earlier. This step should be repeated on all Exadata Storage Servers available in the Database Machine.

CellCLI> CREATE CELLDISK ALL

CellDisk CD_00_cell01 successfully created

. . .

CellDisk CD_10_cell01 successfully created

CellDisk CD_11_cell01 successfully created
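The behavior of CREATE CELLDISK ALL described above (one cell disk per LUN, with no effect on LUNs that already have one) can be modeled in a few lines of Python. This is a sketch of the rule, not of the Storage Server Software: `create_celldisk_all` is hypothetical, and deriving the CD_nn_cellname names from the LUN position is an assumption that simply mirrors the output shown.

```python
def create_celldisk_all(luns, celldisks, cell_name):
    """Model of CREATE CELLDISK ALL: one cell disk per LUN, skipping LUNs
    that already have one. Returns the names created by this call."""
    created = []
    for index, lun in enumerate(luns):
        if lun in celldisks:
            continue  # existing cell disk is left untouched
        name = "CD_%02d_%s" % (index, cell_name)
        celldisks[lun] = name  # one-to-one LUN -> cell disk mapping
        created.append(name)
    return created


luns = ["0_%d" % i for i in range(12)]  # twelve hard-disk LUNs
disks = {}
first = create_celldisk_all(luns, disks, "cell01")
second = create_celldisk_all(luns, disks, "cell01")  # rerun: nothing new
print(first[0], first[-1], len(first), second)
```

Because existing entries are skipped, running the command a second time is a no-op, which is why it is safe to repeat on every Storage Server.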

After creating the cell disks, the next step is to create the grid disks. You can create one or more grid disks on a cell disk, and the first grid disk you create will be placed on the outermost tracks of the cell disk, unless you use interleaved grid disks, as described previously. The outer tracks perform faster than the inner tracks, so data placed on grid disks residing on the outer tracks of the hard disk can be fetched more quickly than data placed on grid disks created on the inner tracks. The best practice is to create a minimum of two grid disks on each cell disk, use the first (faster) grid disk for storing user data, and assign the second (slower) grid disk to the ASM diskgroup that will house the FRA of the Oracle database.

The commands listed next create two grid disks on each cell disk, allocating 300GB to the first grid disk and the remaining space to the second. The PREFIX clause prefixes a text string to the name of each grid disk, which is useful for identifying the grid disks from ASM, as mentioned earlier.

CellCLI> CREATE GRIDDISK ALL PREFIX=data, size=300G

GridDisk data_CD_00_cell01 successfully created

. . .

GridDisk data_CD_11_cell01 successfully created

CellCLI> CREATE GRIDDISK ALL PREFIX=recov

GridDisk recov_CD_00_cell01 successfully created

…

GridDisk recov_CD_11_cell01 successfully created
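The naming and sizing that result from the two commands can be worked out ahead of time. The following Python sketch is a planning aid under stated assumptions: `plan_griddisks` is hypothetical, grid disk names are formed as PREFIX_celldiskname as in the output above, and the 1,800GB usable cell disk size is a made-up figure for illustration, not a product specification.

```python
def plan_griddisks(celldisks, celldisk_size_gb, first_prefix, first_size_gb, second_prefix):
    """Plan two grid disks per cell disk: a fixed-size one created first
    (outer, faster tracks) and a second that takes the remaining space."""
    if first_size_gb >= celldisk_size_gb:
        raise ValueError("first grid disk must leave room for the second")
    plan = []
    for cd in celldisks:
        # Grid disk names are the PREFIX joined to the cell disk name.
        plan.append((first_prefix + "_" + cd, first_size_gb))
        plan.append((second_prefix + "_" + cd, celldisk_size_gb - first_size_gb))
    return plan


celldisks = ["CD_%02d_cell01" % i for i in range(12)]
# 1,800GB per cell disk is a hypothetical usable size, for illustration only.
plan = plan_griddisks(celldisks, 1800, "data", 300, "recov")
print(plan[:2])
print("recov total GB:", sum(size for name, size in plan if name.startswith("recov")))
```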

Create the Exadata Smart Flash Cache

Each Exadata Storage Server has flash disks created on the Sun Flash Accelerator F20 PCIe card modules, with each module exposed as one flash disk. As described earlier, you have a choice of creating Exadata Smart Flash Cache using the flash disks, or a combination of Flash Cache and grid disks. The default and the best practice configuration of flash disks is to create Exadata Smart Flash Cache on all the available flash disks, using the command shown here:

CellCLI> CREATE FLASHCACHE ALL

Flash cache cell01_FLASHCACHE successfully created

ASM and Database Configuration

The Oracle ASM cluster on the database nodes needs to be configured to use the grid disks created by the previous steps. Oracle ASM will be creating ASM diskgroups across the grid disks that are exposed by the Exadata Storage Servers. The database server also needs to be configured to communicate with the Exadata Storage Servers. The list of steps provided here configures the database and ASM nodes.

1. Set up the configuration files on the database and ASM hosts. The database and ASM nodes need the cellip.ora and cellinit.ora files, which the database and the ASM cluster use to route storage traffic to the Exadata Storage Servers over the InfiniBand network. The cellip.ora file stores the IP addresses of the Exadata Storage Servers that will be accessed by the database and ASM clusters. The cellinit.ora file stores the IP address of the local database host’s InfiniBand network interface. These files are located in the /etc/oracle/cell/networkconfig/ directory on the database and ASM hosts. You can display the contents of these files as follows:

$> cat /etc/oracle/cell/networkconfig/cellip.ora

$> cat /etc/oracle/cell/networkconfig/cellinit.ora

2. Configure the database instance. The database cluster initialization parameter file needs to have the COMPATIBLE parameter set to 11.2.0.1.0 in order to ensure communication with the Exadata Storage Server Software. The ASM and database binaries require the COMPATIBLE parameter to be set to the version of the Exadata Storage Server Software. The parameter cannot be dynamically modified and will require a restart of the database. Use this command to set COMPATIBLE:

SQL> ALTER SYSTEM SET COMPATIBLE='11.2.0.1.0' SCOPE=SPFILE;

3. Configure the ASM instance. In order for the ASM instance to be able to discover the grid disks on the Exadata Storage Servers, the ASM instance parameter file needs to be updated with the appropriate ASM_DISKSTRING parameter. The ASM_DISKSTRING parameter will determine the discovery path of the storage devices exposed by the Exadata Storage Servers to ASM. For the grid disks created on the Exadata Storage Servers, the storage path is o/*/*. You can update the ASM_DISKSTRING parameter dynamically with the following alter system command:

SQL> ALTER SYSTEM SET ASM_DISKSTRING='o/*/*' SCOPE=BOTH;

4. Create ASM diskgroups. The Exadata Storage Server grid disks available to Oracle ASM are used to create ASM diskgroups. The grid disks can be queried from the ASM instance using the following SQL against the v$asm_disk view:

SQL> SELECT PATH, header_status STATUS FROM V$ASM_DISK
     WHERE path LIKE 'o/%';

Once you have the list of the available ASM disks, you can use the CREATE DISKGROUP command shown next to create ASM diskgroups. This example creates two ASM diskgroups: DATA and RECOV. Note that you do not need the FAILGROUP clause, since ASM will define a failure group for each Exadata Storage Server. You are required to set the COMPATIBLE.RDBMS and COMPATIBLE.ASM attributes to 11.2.0.1.0, and the CELL.SMART_SCAN_CAPABLE attribute to TRUE. The CELL.SMART_SCAN_CAPABLE attribute enables SQL offload processing on the objects that are placed in the ASM diskgroup.

SQL> CREATE DISKGROUP data NORMAL REDUNDANCY DISK 'o/*/DATA*'
     ATTRIBUTE 'AU_SIZE'='4M',
     'cell.smart_scan_capable'='TRUE',
     'compatible.rdbms'='11.2.0.1.0',
     'compatible.asm'='11.2.0.1.0';

SQL> CREATE DISKGROUP recov NORMAL REDUNDANCY DISK 'o/*/RECOV*'
     ATTRIBUTE 'AU_SIZE'='4M',
     'cell.smart_scan_capable'='TRUE',
     'compatible.rdbms'='11.2.0.1.0',
     'compatible.asm'='11.2.0.1.0';
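The four steps above can be pre-checked with a small Python sketch. It is illustrative only, and several parts rest on labeled assumptions. The `cell="<address>"` and `ipaddress1=<address>/<prefix>` layouts for cellip.ora and cellinit.ora follow the format commonly shown for this software generation and should be verified against your release's documentation. The IP addresses and grid disk names are hypothetical. Python's `fnmatch` only approximates ASM's discovery-string matching, and matching case-insensitively is an assumption made because the example pairs PREFIX=data with 'o/*/DATA*'. Grouping by the address in the `o/<cell>/<grid disk>` path stands in for the per-cell failure groups that ASM defines.

```python
import ipaddress
from collections import defaultdict
from fnmatch import fnmatchcase


# Step 1: render cellip.ora and cellinit.ora.
# ASSUMPTION: the cell="<IP>" / ipaddress1=<IP>/<prefix> layout; verify for your release.
def cellip_ora(cell_ips):
    """One cell="<InfiniBand IP>" line per Exadata Storage Server."""
    # ip_address() raises ValueError for a malformed address.
    return "".join('cell="%s"\n' % ipaddress.ip_address(ip) for ip in cell_ips)


def cellinit_ora(local_ib_interface):
    """The local database host's InfiniBand address with its prefix length."""
    iface = ipaddress.ip_interface(local_ib_interface)
    return "ipaddress1=%s\n" % iface.with_prefixlen


# Step 2: compare release strings numerically; as text, "11.2.0.10" < "11.2.0.2".
def version_tuple(version):
    parts = [int(p) for p in version.split(".")]
    return tuple(parts + [0] * (5 - len(parts)))  # pad to five components


def meets_minimum(compatible, minimum="11.2.0.1.0"):
    return version_tuple(compatible) >= version_tuple(minimum)


# Step 3: approximate discovery-string matching (case-insensitive by assumption).
def discover(pattern, paths):
    return [p for p in paths if fnmatchcase(p.upper(), pattern.upper())]


# Step 4: one failure group per Storage Server, keyed here by the cell address.
def failure_groups(paths):
    groups = defaultdict(list)
    for path in paths:
        prefix, cell, griddisk = path.split("/", 2)
        if prefix != "o":
            raise ValueError("not an Exadata grid disk path: " + path)
        groups[cell].append(griddisk)
    return dict(groups)


cells = ["192.168.10.3", "192.168.10.4"]  # hypothetical cell InfiniBand addresses
print(cellip_ora(cells) + cellinit_ora("192.168.10.1/22"), end="")
print(meets_minimum("11.2.0.1.0"), meets_minimum("11.1.0.7.0"))
paths = ["o/%s/%s_CD_00_cell%02d" % (ip, prefix, n)
         for n, ip in enumerate(cells, 1) for prefix in ("data", "recov")]
data_disks = discover("o/*/DATA*", paths)
print(failure_groups(data_disks))
```

Because NORMAL redundancy keeps the two copies of each extent in different failure groups, grouping by cell means that losing an entire Storage Server still leaves one copy of every extent.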

Exadata Storage Server Security Configuration

The grid disks on the Exadata Storage Servers are the logical entities that will be used by ASM and the database to store database objects. Using the security features that are built into the Exadata Storage Server, you can configure permissions and set access controls on a grid disk and thereby authorize it to be accessed by a specific database or ASM cluster. This feature is useful in a consolidation environment with multiple databases deployed on the Exadata Database Machine, where you need to restrict access to grid disks to a specific database or set of databases.

Exadata Security can be configured using one of the following modes:

Open security In open security (or no security) mode, no access controls are set on the grid disks. Open security is useful when you are running development or test databases on the Exadata Database Machine and do not have stringent security requirements, or when you are running a production database and have secured access to the Database Machine by securing the network and the operating system. This is the default security mode, and it is the mode that gets configured out of the box during the default setup of the Database Machine.

ASM-scoped security Using the ASM-scoped security mode, you can set access controls on a grid disk and make it available to a specific ASM cluster, and to all databases that are accessing the ASM cluster. ASM-scoped security can be used when all databases that utilize an ASM cluster need the same level of access controls on the grid disks.

Database-scoped security The database-scoped security mode allows you to set access controls on a grid disk such that it is made available to a specific database cluster. ASM-scoped security is a prerequisite for setting up database-scoped security. This mode of security is appropriate when you want to control access to grid disks at the individual database cluster level.
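The three modes amount to a layered access check. The following Python sketch models that logic under stated assumptions; it is not Exadata's implementation. CellCLI records the allowed clients on each grid disk (the availableTo attribute), whereas this sketch keeps them in a plain dictionary, and the cluster and database names are hypothetical.

```python
def can_access(grid_disk, asm_cluster, database):
    """Simplified access check for one grid disk.

    grid_disk["asm"]       -> ASM cluster names allowed (ASM-scoped security)
    grid_disk["databases"] -> database names allowed (database-scoped security)
    """
    allowed_asm = grid_disk["asm"]
    allowed_dbs = grid_disk["databases"]
    if not allowed_asm and not allowed_dbs:
        return True  # open security: no access controls on the grid disk
    if asm_cluster not in allowed_asm:
        return False  # ASM-scoped check fails; it is also a prerequisite for DB scope
    if not allowed_dbs:
        return True  # ASM-scoped: every database using this ASM cluster
    return database in allowed_dbs  # database-scoped: only the listed databases


# Hypothetical cluster and database names.
open_disk = {"asm": set(), "databases": set()}
asm_disk = {"asm": {"asm_prod"}, "databases": set()}
db_disk = {"asm": {"asm_prod"}, "databases": {"sales"}}
print(can_access(asm_disk, "asm_prod", "hr"), can_access(db_disk, "asm_prod", "hr"))
```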

Exadata Storage Server Realms

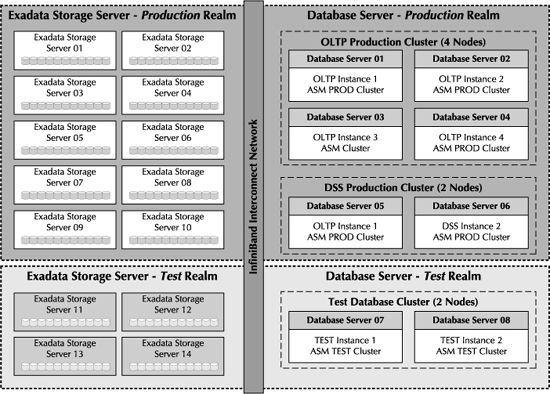

Exadata Storage Server realms are logical groups of Exadata Storage Servers that are associated with a specific database or ASM cluster. Realms are optionally defined at the Storage Server level, with each server being associated with at most one realm, and the grid disks that belong to the server all reside in the same realm. Exadata Storage Servers belonging to a realm will have the same level of security and access controls set for the database and the ASM clusters. Figure 5-6 shows a typical use of realms.

There are multiple reasons for implementing Exadata Storage Server realms. One reason is to create multiple pools of storage in the storage grid. Storage pools are useful when you are consolidating multiple database environments on the Database Machine and you require dedicated storage pools for a particular database or ASM cluster. Another reason to use realms is to group Exadata Storage Servers together based upon similar security and access policy requirements of the database and ASM instances.

FIGURE 5-6. Exadata Storage Server realms
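The grouping rule can be illustrated with a minimal Python sketch that partitions cells into storage pools by realm. The cell names, realm names, and the function group_cells_by_realm are hypothetical. The sketch only models the rule stated above, that each cell belongs to at most one realm, and it does not query any Exadata interface.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def group_cells_by_realm(
    cell_realms: Dict[str, Optional[str]]
) -> Dict[Optional[str], List[str]]:
    """Group cells into storage pools keyed by realm name.

    A dict maps each cell to exactly one value, so the model enforces the
    "at most one realm per cell" rule by construction. Cells without a
    realm are collected under the key None.
    """
    pools: Dict[Optional[str], List[str]] = defaultdict(list)
    for cell, realm in sorted(cell_realms.items()):
        pools[realm].append(cell)
    return dict(pools)


# Hypothetical layout: two cells serve production, one serves test,
# and one has not been assigned to a realm.
cells = {
    "cell01": "prod_realm",
    "cell02": "prod_realm",
    "cell03": "test_realm",
    "cell04": None,
}

for realm, members in group_cells_by_realm(cells).items():
    print(realm, members)
```

Each resulting pool corresponds to one realm, which is how realms let you dedicate storage to a particular database or ASM cluster when consolidating.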

You can associate an Exadata Storage Server with a realm when you execute the CREATE CELL command using CellCLI. Or, you can use the ALTER CELL command to associate a realm with an existing Exadata Storage Server on which the Exadata Cell object was already created, as shown here:

CellCLI> ALTER CELL realmname=my_production_realm

Implementing Exadata Security

The mechanism used for authenticating and authorizing ASM and database clusters to access grid disks is based on security keys. The Exadata Storage Server assigns keys to grid disks, and any entity requesting access to a grid disk must provide the matching key during the security handshake. With ASM-scoped security, the ASM cluster provides the key, and the Exadata Storage Server matches it against the keys assigned to the grid disk. If they match, the ASM cluster is authenticated to access the grid disk. The process is similar for database-scoped security, except that the database cluster provides the key to the Exadata Storage Servers when requesting access to the grid disks. This authentication mechanism is transparent to end users, happens under the covers, and needs to be configured only once, during the initial setup.
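The key-matching step described above can be modeled in a few lines of Python. This is a simplified sketch of the concept, not Exadata's actual protocol: the GridDisk class, its assigned_keys attribute, the authenticate method, and the key value are all hypothetical.

```python
import hmac
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class GridDisk:
    """Simplified model of a grid disk with keys assigned by the cell."""
    name: str
    # Maps each authorized cluster (an ASM cluster or a database cluster)
    # to the security key assigned for this grid disk.
    assigned_keys: Dict[str, str] = field(default_factory=dict)

    def authenticate(self, cluster: str, presented_key: str) -> bool:
        """Return True only if the cluster presents the key assigned to it."""
        expected = self.assigned_keys.get(cluster)
        if expected is None:
            return False  # cluster was never granted access to this grid disk
        # Constant-time comparison so timing does not reveal how much matched.
        return hmac.compare_digest(expected, presented_key)


# Hypothetical key and names, for illustration only.
KEY = "0123456789abcdef0123456789abcdef"
disk = GridDisk("DATA_CD_00_cell01", {"asm_cluster1": KEY})

print(disk.authenticate("asm_cluster1", KEY))        # True: key matches
print(disk.authenticate("asm_cluster1", "bad-key"))  # False: wrong key
print(disk.authenticate("asm_cluster2", KEY))        # False: not authorized
```

For database-scoped security, the dictionary entry would be keyed by the database cluster instead of the ASM cluster; the matching check itself is unchanged in this model.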

The keys for the grid disks are created and assigned using the CREATE KEY and ASSIGN KEY commands at the CellCLI prompt. The keys for the database-scoped and ASM-scoped security will be stored in the cellkey.ora file. The cellkey.ora file is located on the database and ASM cluster nodes, and each ASM and database cluster will have its own cellkey.ora file stored in different locations on the server.
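The cellkey.ora file is a small text file. Assuming it holds one name=value pair per line, with key and asm entries and an optional realm entry (verify the exact layout against the documentation for your software release), a minimal Python sketch for reading and checking it could look like this. The function names parse_cellkey and require_entries, and the sample values, are hypothetical.

```python
from typing import Dict, Iterable


def parse_cellkey(text: str) -> Dict[str, str]:
    """Parse name=value lines, skipping blank lines and '#' comments."""
    entries: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        name, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"malformed line in cellkey.ora: {raw!r}")
        entries[name.strip()] = value.strip()
    return entries


def require_entries(entries: Dict[str, str],
                    required: Iterable[str] = ("key", "asm")) -> None:
    """Raise if any entry assumed to be mandatory is absent."""
    missing = [name for name in required if name not in entries]
    if missing:
        raise ValueError("cellkey.ora is missing: " + ", ".join(missing))


# Hypothetical contents; the key value and names are placeholders.
SAMPLE = """
key=0123456789abcdef0123456789abcdef
asm=asm_cluster1
realm=my_production_realm
"""

entries = parse_cellkey(SAMPLE)
require_entries(entries)
print(entries["asm"], entries["realm"])
```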

Use the following guidelines as best practices for configuring Exadata Security:

The security configuration should be implemented on all database servers that belong to the same realm; you cannot configure only some of the nodes within a realm. Ensure that the cellkey.ora file is identical on all nodes within the realm to avoid configuration mismatches, which could result in errors.

ASM-scoped security and database-scoped security should not be mixed in the same realm; configure each realm to use one or the other, but not both.

When you configure security on the grid disks, use the dcli utility to avoid mismatched access control configurations for grid disks that belong to the same ASM diskgroup. Because dcli runs the same CellCLI command on multiple Exadata Storage Servers in a single invocation, it keeps the access control definitions consistent across the grid disks and minimizes errors.
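To catch the two mismatches these guidelines warn about, you could collect the cellkey.ora entries from every node in a realm, and the access lists of every grid disk in a diskgroup (for example, by gathering the output of a dcli run), and compare them. The following Python sketch shows only the comparison step. The function names, input dictionaries, node names, and grid disk names are hypothetical, and collecting the data is left out.

```python
from collections import Counter
from typing import Dict, FrozenSet, List, Set


def nodes_out_of_sync(node_keys: Dict[str, Dict[str, str]]) -> List[str]:
    """Return nodes whose cellkey.ora entries differ from the first node's.

    node_keys maps each database server in a realm to the name/value
    entries parsed from its cellkey.ora file.
    """
    if not node_keys:
        return []
    nodes = sorted(node_keys)
    reference = node_keys[nodes[0]]
    return [n for n in nodes[1:] if node_keys[n] != reference]


def grid_disks_out_of_sync(access: Dict[str, Set[str]]) -> List[str]:
    """Return grid disks whose access list differs from the most common one.

    access maps each grid disk in one ASM diskgroup to the set of clusters
    that the grid disk is made available to.
    """
    if not access:
        return []
    counts = Counter(frozenset(v) for v in access.values())
    majority: FrozenSet[str] = counts.most_common(1)[0][0]
    return sorted(d for d, v in access.items() if frozenset(v) != majority)


# Hypothetical inventory: db03 carries a different key, and one grid disk
# was given an extra database cluster in its access list.
nodes = {
    "db01": {"key": "abc", "asm": "asm1"},
    "db02": {"key": "abc", "asm": "asm1"},
    "db03": {"key": "xyz", "asm": "asm1"},
}
disks = {
    "DATA_CD_00_cell01": {"asm1"},
    "DATA_CD_00_cell02": {"asm1"},
    "DATA_CD_00_cell03": {"asm1", "db1"},
}

print(nodes_out_of_sync(nodes))       # ['db03']
print(grid_disks_out_of_sync(disks))  # ['DATA_CD_00_cell03']
```

Treating the most common access list as the reference is a design choice of this sketch; an alternative is to compare every grid disk against an explicitly declared expected list.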

Exadata Storage Server Monitoring

The need to monitor the hardware and software components of the Exadata Database Machine is unquestionable. A malfunctioning hardware component or erratic behavior in a software component can cause failures that degrade performance or even affect the availability of the Exadata Database Machine. Being able to proactively detect and fix hardware and software issues helps ensure optimal performance and the healthy functioning of the Database Machine components.

This section discusses the tools and utilities available to monitor the Exadata Storage Servers. These monitoring methods help ensure that the software and hardware components of the Exadata Storage Servers are operating as advertised and, more importantly, performing to their full potential without incurring undue bottlenecks. The monitoring infrastructure captures any anomalies in the behavior of these components and alerts the administrators so that corrective actions can be taken immediately.

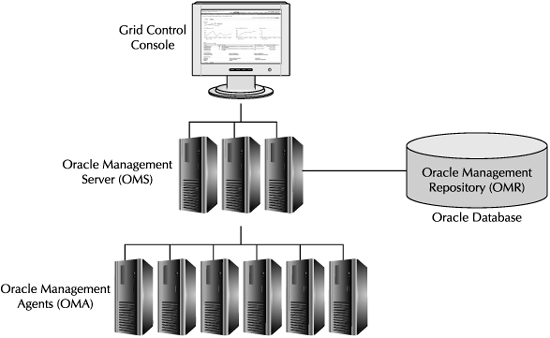

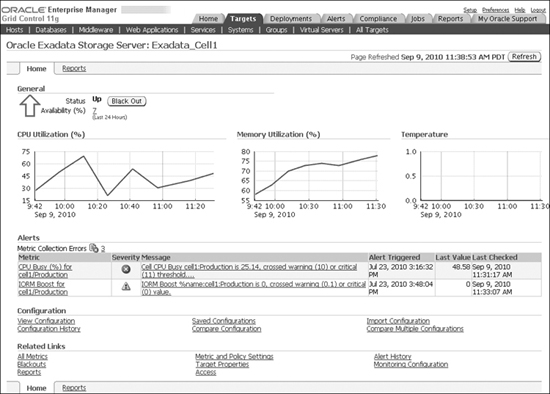

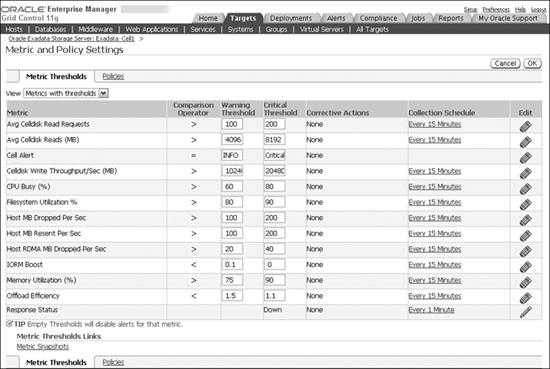

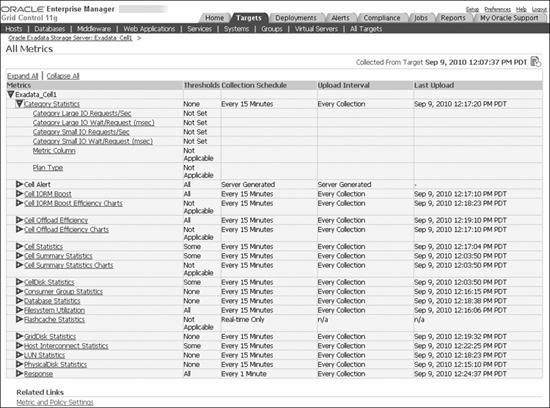

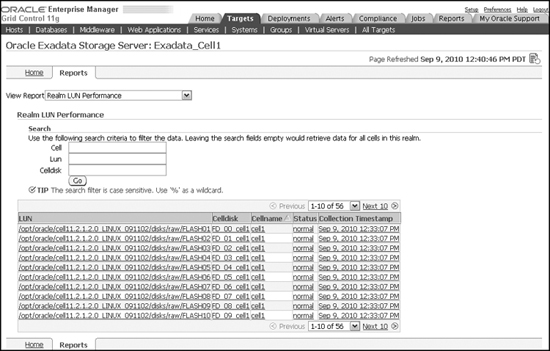

The tools available for monitoring the Exadata Storage Servers are command-line utilities such as CellCLI and SQL*Plus, and the web-based Oracle Enterprise Manager. The following options for monitoring the Exadata Storage Servers are discussed in this section:

Monitoring with metrics and alerts

Monitoring of active requests on the Exadata Storage Server

Monitoring using the Oracle Database v$views

Using Oracle Enterprise Manager for monitoring

Using Oracle Integrated Lights Out Manager

Monitoring with Metrics and Alerts

The Exadata Storage Server Software has a built-in infrastructure to monitor the overall health of the server and the health of its individual hardware and software components. The monitoring infrastructure periodically collects information about certain parameters that are deemed critical for the normal functioning of the Exadata Storage Server. When these parameters cross predefined threshold boundaries, the monitoring infrastructure automatically triggers alerts to the concerned administrators. The alerts help administrators take corrective action in a timely manner and resolve the problem quickly. Left unresolved, these types of problems can hamper the availability of the Exadata Storage Servers and the Exadata Database Machine.

Metrics and alerts form the core infrastructure behind the monitoring of Exadata Storage Servers. These topics are discussed in this section.

Metrics

The software and hardware components that make up the Exadata Storage Server are represented as entities called objects. Examples of objects are cell disks, grid disks, Exadata Smart Flash Cache, the host interconnect network, and the Exadata Storage Server itself. Each object is associated with certain properties that measure its performance or state, and these properties are called metrics. An example of a metric associated with the cell disk object is the property that measures the total megabytes read from the cell disk by large I/O requests (the metric MBytes read from disk for large I/Os).

The metrics are categorized into the following types based on their values:

Instantaneous metrics always capture the current value of the property of the object being measured.

Cumulative metrics measure values of an object property that have accumulated since the metric was created.

Rate metrics record the rate of change of the metric value measured over a given period.

Transition metrics capture a change in the state of the object when the object transitions to a different state.
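The four metric types differ only in how a raw observation is turned into a reported value. The following Python sketch illustrates each type with one small function; the function names and sample readings are hypothetical, and the sketch is not how the Exadata Storage Server Software computes its metrics internally.

```python
from typing import List, Sequence, Tuple


def instantaneous(samples: Sequence[float]) -> float:
    """Instantaneous metric: the most recent observed value."""
    return samples[-1]


def cumulative(increments: Sequence[float]) -> float:
    """Cumulative metric: everything accumulated since the metric was created."""
    return sum(increments)


def rate(total_start: float, total_end: float, seconds: float) -> float:
    """Rate metric: change of a cumulative value per second over an interval."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return (total_end - total_start) / seconds


def transitions(states: Sequence[str]) -> List[Tuple[str, str]]:
    """Transition metric: a (from, to) pair each time the state changes."""
    return [(old, new) for old, new in zip(states, states[1:]) if old != new]


# Hypothetical readings for a cell disk.
print(instantaneous([40.0, 55.5, 61.0]))  # 61.0
print(cumulative([120.0, 80.0, 100.0]))   # 300.0 MB read in total
print(rate(1000.0, 1600.0, 60.0))         # 10.0 MB/s over one minute
print(transitions(["online", "online", "offline", "online"]))
```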

Metric values are categorized into ranges, or domains, that are bounded by thresholds. The system predefines three levels: normal, warning, and critical. When the object associated with the metric is working as expected, the metric value falls in the normal range, below the warning threshold. A metric value above the critical threshold indicates that the Exadata Storage Server has encountered a critical issue with the object, and the object may not be functioning as expected. A metric value that falls between the warning and critical thresholds is considered to be in the warning range.
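The three ranges can be expressed as a simple classification function. This Python sketch assumes that larger values are worse and that a value equal to a threshold counts as having crossed it; both are illustrative conventions, and the names Level and classify are hypothetical.

```python
from enum import Enum


class Level(Enum):
    NORMAL = "normal"
    WARNING = "warning"
    CRITICAL = "critical"


def classify(value: float, warning: float, critical: float) -> Level:
    """Place a metric value in the normal, warning, or critical range.

    Assumes larger values are worse and that reaching a threshold counts
    as crossing it.
    """
    if warning > critical:
        raise ValueError("warning threshold must not exceed critical threshold")
    if value >= critical:
        return Level.CRITICAL
    if value >= warning:
        return Level.WARNING
    return Level.NORMAL


# Hypothetical CPU utilization thresholds, in percent.
for pct in (50.0, 85.0, 97.0):
    print(pct, classify(pct, warning=80.0, critical=95.0).value)
```

With these thresholds, 50 percent falls in the normal range, 85 percent in the warning range, and 97 percent in the critical range.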

Metrics are measured in units that correlate to the type of metric. For example, the CPU utilization metric will be measured as a percentage, and the CPU temperature will be measured in degrees Celsius.

Multiple metrics will be associated with an object, and all the possible metrics that have an effect on the overall functioning and behavior of the Exadata Storage Server have been predefined by the Exadata Storage Server Software. Users are not allowed to modify or add to the system-defined metrics; however, they are allowed to modify the preset thresholds or create new thresholds on the built-in metrics.

The metric collection process in the Exadata Storage Server is similar to the Automatic Workload Repository (AWR) metrics collection of the Oracle database. The CELLSRV process periodically records the metric values in its main process memory. The Management Server (MS) process periodically flushes the metrics accumulated by CELLSRV from memory and persists them to disk, where they are kept for a default period of seven days. This default period can be modified, if needed, by altering the metricHistoryDays attribute using CellCLI. This example modifies the metricHistoryDays attribute to store 12 days’ worth of metric history:

CellCLI> ALTER CELL metricHistoryDays=12
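The division of labor between CELLSRV and MS, and the effect of metricHistoryDays, can be sketched as a small in-memory model. The MetricStore class, its methods, and the metric name cpu_util_pct are hypothetical; the sketch only mirrors the described flow (buffer in memory, flush to persistent history, purge anything older than the retention period).

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Tuple

# (collection time, metric name, value)
Sample = Tuple[datetime, str, float]


@dataclass
class MetricStore:
    history_days: int = 7  # mirrors the default retention described above
    in_memory: List[Sample] = field(default_factory=list)  # CELLSRV's role
    on_disk: List[Sample] = field(default_factory=list)    # MS-persisted history

    def record(self, sample: Sample) -> None:
        """CELLSRV-style step: keep the collected sample in memory."""
        self.in_memory.append(sample)

    def flush(self, now: datetime) -> None:
        """MS-style step: persist buffered samples, then purge expired history."""
        self.on_disk.extend(self.in_memory)
        self.in_memory.clear()
        cutoff = now - timedelta(days=self.history_days)
        self.on_disk = [s for s in self.on_disk if s[0] >= cutoff]


now = datetime(2011, 1, 20)
store = MetricStore(history_days=12)
store.record((now - timedelta(days=13), "cpu_util_pct", 40.0))  # too old
store.record((now - timedelta(days=2), "cpu_util_pct", 55.0))   # retained
store.flush(now)
print([value for _, _, value in store.on_disk])  # [55.0]
```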

NOTE

The complete list of system-defined metrics, objects, alerts, and thresholds is described in the Oracle Exadata Storage Server Software User’s Guide.

Alerts

Alerts are notices of important events occurring in the Exadata Storage Server. The events leading to an alert will generally indicate that the server has encountered an issue that can hamper its normal function. The Exadata Storage Server Software has predefined alerts on critical events that are known to interfere with the normal functioning of the Exadata Storage Server.

The MS process is responsible for triggering alerts. MS will trigger alerts when it encounters the following issues:

Hardware issues Errors due to the failure of a hardware component, such as a flash card.

Software issues Internal errors raised by the CELLSRV process or errors due to a misconfiguration of the Exadata Storage Server Software.

Metrics having critical or warning values When metric values cross into the warning or critical threshold range, alerts can be triggered. These alerts are triggered only after users define thresholds on the metric values.

Alerts can be stateful or stateless. Stateful alerts indicate a condition that can be tested again to see whether the issue still exists. An alert raised because a cumulative metric exceeded a threshold value is an example of a stateful alert. Stateless alerts are events that occurred at a point in time, and the condition that triggered the alert no longer exists. Examples of stateless alerts are the ORA-00600 errors that the CELLSRV process recorded in the Automatic Diagnostic Repository (ADR) because of internal software issues encountered in the past.
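The difference between the two kinds of alerts can be illustrated with a short Python model. A stateful alert re-tests its metric on every evaluation and reports only changes; a stateless alert is simply recorded once. The class and function names, the metric name, and the threshold values are hypothetical, and the sketch assumes that a clear alert is reported when a re-tested condition returns to normal. As the text notes, no metric-based alert fires until thresholds have been defined.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alert:
    name: str
    severity: str  # "critical", "warning", "clear", or "informational"
    stateful: bool


@dataclass
class StatefulMetricAlert:
    """Re-tests a metric condition; raises on entry and clears on exit."""
    metric: str
    warning: Optional[float] = None   # no alert fires until a threshold exists
    critical: Optional[float] = None
    active: Optional[str] = None      # severity currently raised, if any

    def evaluate(self, value: float) -> Optional[Alert]:
        severity: Optional[str] = None
        if self.critical is not None and value >= self.critical:
            severity = "critical"
        elif self.warning is not None and value >= self.warning:
            severity = "warning"
        if severity == self.active:
            return None  # condition unchanged: nothing new to report
        self.active = severity
        return Alert(self.metric, severity or "clear", stateful=True)


def stateless_alert(event: str, severity: str) -> Alert:
    """A point-in-time event, such as a past internal error; never re-tested."""
    return Alert(event, severity, stateful=False)


alert = StatefulMetricAlert("cpu_util_pct")
print(alert.evaluate(99.0))  # None: no thresholds defined yet
alert.warning, alert.critical = 80.0, 95.0
for value in (50.0, 85.0, 86.0, 97.0, 40.0):
    raised = alert.evaluate(value)
    print(value, raised.severity if raised else "-")
```

In this run, 50 reports nothing, 85 raises a warning, 86 reports nothing because the condition is unchanged, 97 escalates to critical, and 40 clears the alert.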

Alerts are associated with a severity level of critical, warning, clear, or informational. The severity level indicates how serious the fault that occurred in the system is.

Critical alerts indicate a condition that can interfere with the normal functioning of the Exadata Storage Server. Critical alerts require immediate attention so that administrators can take corrective action. If the alert definition is based on a metric (rather than being a hardware or internal alert), the critical alert is raised when the metric value crosses into the critical threshold range. For hardware and internal alerts, critical alerts are raised based on predefined conditions.

Warning alerts indicate an issue that is categorized as a warning condition, or a condition that is likely to become critical if it is not addressed promptly. If the alert definition is based on a metric, the warning alert is raised when the metric value crosses into the warning threshold range.