Achieving Extreme Performance with Oracle Exadata (Oracle Press) (2011)

PART II

Best Practices

CHAPTER 6

High Availability and Backup Strategies

The continuous availability of business-critical information is a key requirement for enterprises to survive in today’s information-based economy. Enterprise database systems managing critical data such as financial and customer information, and operational data such as orders, need to be available at all times. Making important business decisions based on these systems requires the databases storing this data to provide high-service uptime with enhanced reliability.

When the loss of data or the database service occurs due to planned events such as software patching and upgrading, or due to unforeseen events such as media failures, data corruption, or disasters, the data and the database service need to be recovered quickly and accurately. If recovery cannot be performed in the optimal timeframe as dictated by the business Service Level Agreements (SLA), the business can incur loss of revenues and customers. Choosing the right high-availability architecture of the Exadata Database Machine will enable the enterprises to safeguard critical data and provide the highest level of service, availability, and reliability.

The basic premise of high-availability architectures is based on redundancies across the hardware and software components in the deployment architecture. Redundant systems provide high availability when remaining active at the same time. High availability can also be built into the software layer by incorporating features that provide continuous availability of data. A few examples are the features that help repair data corruption, perform online maintenance operations, and help you recover from user errors while the database remains available.

Oracle provides a myriad of high-availability technologies for implementing highly available database systems. This chapter highlights such technologies and the recommended practices and guidelines for implementing them. We will also discuss the best-practice methods of performing backup and recovery on the Oracle Exadata Database Machine.

Exadata Maximum Availability Architecture (MAA)

Oracle Maximum Availability Architecture (MAA) is the Oracle-recommended best-practice architecture for achieving the highest availability of service with Oracle products, using Oracle’s proven high-availability technologies.

MAA best practices are published as a series of technical whitepapers and blue prints, and assist in deploying highly available Oracle-based applications and platforms that are capable of meeting and exceeding the business service-level requirements. The MAA architecture covers all components of the technology stack, includes the hardware and the software, and addresses planned and unplanned downtime.

MAA provides a framework for high availability by incorporating redundancies into the components of the technology stack, and also by utilizing certain built-in high-availability features of the Oracle software. For example, the Database Flashback feature enables you to recover from user errors such as an involuntary DROP TABLE statement by using a simple FLASHBACK TABLE command. This cuts down the recovery time drastically, which otherwise would be needed for performing a point-in-time recovery using backups.

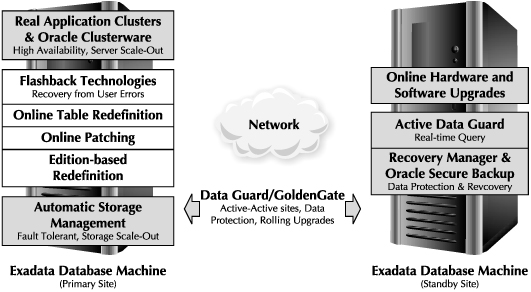

Figure 6-1 represents the MAA architecture using the Exadata Database Machine. The Database Machine MAA architecture has identically sized Database Machines on the primary and standby sites. The primary site contains a production database configured with Oracle Real Application Clusters (RAC). Oracle RAC provides protection from database server and instance failures. The standby site contains a physical standby database that is synchronized with the primary database by using Oracle Data Guard. The Active Data Guard option enables the physical standby database to be open in a read-only state while the database is kept in sync with the primary database. Active Data Guard enables you to offload read-only queries to the standby site and enhances the overall availability of the database by utilizing an otherwise idle standby site for reporting purposes. The figure also depicts the use of Oracle database technologies such as Flashback, RMAN, and ASM for providing high availability during planned and unplanned downtime.

FIGURE 6-1. Exadata Database Machine MAA architecture

We’ll next cover the Oracle high-availability features that address planned and unplanned downtime. The intent is not to cover the complete set of MAA technologies, but only the topics that relate to the Exadata Database Machine and the common tasks that will be performed on the Machine. The topics discussed in this section are listed here:

![]() High availability with Oracle Data Guard

High availability with Oracle Data Guard

![]() Using Oracle GoldenGate with Database Machine

Using Oracle GoldenGate with Database Machine

![]() Database Machine patches and upgrades

Database Machine patches and upgrades

![]() Exadata Storage Server high availability

Exadata Storage Server high availability

![]() Preventing data corruption

Preventing data corruption

High Availability with Oracle Data Guard

Oracle Data Guard is an integral component of MAA and the best-practice solution for ensuring high availability and disaster recovery of the Oracle database. Data Guard provides an extensive set of features that enable you to recover from planned and unplanned downtime scenarios, such as recovering from data corruption, performing near-zero database upgrades, and conducting database migrations.

Data Guard provides the software to manage and maintain one or more standby databases that are configured as a replica of the primary database, and protects from data loss by building and maintaining redundant copies of the database. When a data loss or a complete disaster occurs on the primary database, the copy of the data stored on the standby database can be used to repair the primary database. Data Guard also provides protection from data corruptions by detecting and repairing corruptions automatically as they happen, using the uncorrupted copy on the standby database.

The Data Guard architecture consists of processes that capture and transport transactions occurring on the primary database and applying them on the standby databases. Oracle redo generated on the primary site is used to transmit changes captured from the primary to the standby site(s), using a synchronous or asynchronous mechanism. Once the redo is received by the standby site, it is applied on the standby databases using one of two possible methods: Redo Apply or SQL Apply.

The Redo Apply process uses the database media recovery process to apply the transactions to the standby databases. Redo Apply maintains a block-for-block replica of the primary database, ensuring that the standby database is physically identical to the primary one in all respects. The standby database that uses Redo Apply to sync up with the primary is called a physical standby. The physical standby database can be open and made accessible for running read-only queries while the redo is being applied; this arrangement of the physical standby is called Active Data Guard.

The SQL Apply process mines the redo logs once they are received on the standby host, re-creates the SQL transactions as they occurred on the primary database, and then executes the SQL on the standby database. The standby in this case is open for read-write activity, and contains the same logical information as the primary database. However, the database has its own identity with possibly different physical structures. The standby database that uses the SQL Apply as the synchronization method is called a logical standby.

NOTE

The SQL Apply has some restrictions on the data types, objects, and SQL operations that it supports. Refer to the Oracle Data Guard manuals for further details.

The MAA best practices dictate the use of Data Guard Redo Apply as the synchronization method for achieving the highest availability with the Database Machine. MAA also proposes the use of Active Data Guard to enhance the overall availability of the database machine by utilizing the standby database for reporting and queries.

Data Guard is tightly integrated with the Oracle database and supports high-transaction volumes that are demanded by the Exadata Database Machine deployments. When you follow the best practices for configuring Data Guard, the typical apply rates that you can achieve with Redo Apply can be over 2 TB/hr. Keep in mind that this means 2 TB/hr of database changes, which is a high transaction rate.

Although the primary purpose of Data Guard is to provide high availability and disaster recovery, it can also be utilized for a variety of other business scenarios that address planned and unplanned downtime requirements. A few such scenarios are discussed next:

![]() Database rolling upgrades The Data Guard standby database can be used to perform rolling database upgrades with near-zero downtime. The standby database is upgraded while the primary is still servicing production loads, and the primary database is switched over to the standby, with minimal interruption.

Database rolling upgrades The Data Guard standby database can be used to perform rolling database upgrades with near-zero downtime. The standby database is upgraded while the primary is still servicing production loads, and the primary database is switched over to the standby, with minimal interruption.

![]() Migrate to the Database Machine Data Guard can be used to migrate Oracle databases to the Database Machine with minimal downtime, using the physical or the logical standby database. The standby database will be instantiated on the Exadata Database Machine and then switched over to the primary role, allowing the migration to happen with the flip of a switch. Refer to Chapter 10 for further details.

Migrate to the Database Machine Data Guard can be used to migrate Oracle databases to the Database Machine with minimal downtime, using the physical or the logical standby database. The standby database will be instantiated on the Exadata Database Machine and then switched over to the primary role, allowing the migration to happen with the flip of a switch. Refer to Chapter 10 for further details.

![]() Data corruption detection and prevention The Data Guard Apply process can detect corruptions, and also provide mechanisms to automatically recover from corruptions. This topic is discussed in detail later in the chapter.

Data corruption detection and prevention The Data Guard Apply process can detect corruptions, and also provide mechanisms to automatically recover from corruptions. This topic is discussed in detail later in the chapter.

![]() User error protection You can induce a delay in the Redo Apply process to queue the transactions for a specified period (delay) before they are applied on the standby database. The delay provides you with the ability to recover from user or application errors on the primary database, since the corruption does not immediately get propagated and can be repaired by using a pristine copy from the standby.

User error protection You can induce a delay in the Redo Apply process to queue the transactions for a specified period (delay) before they are applied on the standby database. The delay provides you with the ability to recover from user or application errors on the primary database, since the corruption does not immediately get propagated and can be repaired by using a pristine copy from the standby.

![]() Offload queries and backups Active Data Guard can be used to offload read-only queries and backups to the active standby database. Offloading the queries from the primary database will improve performance and accelerate the return on investment on the standby, which otherwise would be sitting idle. Backups created on the standby can be used to recover the primary database, since the standby will be a block-for-block copy of the primary.

Offload queries and backups Active Data Guard can be used to offload read-only queries and backups to the active standby database. Offloading the queries from the primary database will improve performance and accelerate the return on investment on the standby, which otherwise would be sitting idle. Backups created on the standby can be used to recover the primary database, since the standby will be a block-for-block copy of the primary.

![]() Primary-primary configuration Instead of having a traditional Data Guard setup of a primary site and a standby site, with all primary databases configured on the primary site, you can configure both sites to be primary sites and spread the primary roles across the available machines on both sites. For example, if you have a traditional Data Guard setup of OLTP and DSS / data warehouse databases in the primary roles on site A and site Bhousing their standbys, you can configure site A with the primary OLTP database and the standby DSS database, and site B with standby OLTP and primary DSS. The primary-primary setup allows you to utilize all the systems in the configuration and spread the production load over to the standby, which leads to an overall increase in performance and at the same time, decreases the impact of individual site failures.

Primary-primary configuration Instead of having a traditional Data Guard setup of a primary site and a standby site, with all primary databases configured on the primary site, you can configure both sites to be primary sites and spread the primary roles across the available machines on both sites. For example, if you have a traditional Data Guard setup of OLTP and DSS / data warehouse databases in the primary roles on site A and site Bhousing their standbys, you can configure site A with the primary OLTP database and the standby DSS database, and site B with standby OLTP and primary DSS. The primary-primary setup allows you to utilize all the systems in the configuration and spread the production load over to the standby, which leads to an overall increase in performance and at the same time, decreases the impact of individual site failures.

When configuring Data Guard with the Exadata Database Machine, special consideration needs to be given for Exadata Hybrid Columnar Compression (EHCC) and for transporting the redo traffic over the network. These considerations are discussed next.

Data Guard and Exadata Hybrid Columnar Compression

The Exadata Hybrid Columnar Compression (EHCC) feature is the Oracle compression technology available only to the databases residing on the Exadata Storage Servers. If you plan on using EHCC compression with the Database Machine in a Data Guard configuration, the best practice is to have the primary and the standby databases both reside on the Exadata Storage Servers. Such a Data Guard configuration will provide the best performance and the highest availability of your critical data in the event of failures.

If you intend to configure a standby database on traditional storage (non-Exadata-based) for a primary database residing on Exadata Storage Servers, you need to consider the limitations mentioned next. The limitations arise mainly because EHCC compression is not supported on databases residing on non-Exadata storage.

![]() When performing a switchover operation to the standby on the traditional storage, the EHCC-compressed tables need to be uncompressed on the standby before they can be accessed by the applications. The time to uncompress EHCC tables can significantly affect the availability of the database and add to the total recovery time needed for the switchover. Since EHCC is normally used for less frequently updated or historical data, the inability to access this data momentarily may not be a serious problem for the business.

When performing a switchover operation to the standby on the traditional storage, the EHCC-compressed tables need to be uncompressed on the standby before they can be accessed by the applications. The time to uncompress EHCC tables can significantly affect the availability of the database and add to the total recovery time needed for the switchover. Since EHCC is normally used for less frequently updated or historical data, the inability to access this data momentarily may not be a serious problem for the business.

![]() If you need to uncompress the EHCC tables on the standby database, you should factor in the additional space needed on the standby to accommodate the uncompressed data. The additional space is dependent on the compression ratios provided by EHCC, and could range from 10 to 50 times the original compressed space.

If you need to uncompress the EHCC tables on the standby database, you should factor in the additional space needed on the standby to accommodate the uncompressed data. The additional space is dependent on the compression ratios provided by EHCC, and could range from 10 to 50 times the original compressed space.

![]() If the standby database is going to be utilized for production loads after the switchover, it needs to be able to sustain the throughput and load characteristics of the primary database. The reality is that it probably cannot match the Database Machine performance, in which case, the standby will be running in a reduced performance mode.

If the standby database is going to be utilized for production loads after the switchover, it needs to be able to sustain the throughput and load characteristics of the primary database. The reality is that it probably cannot match the Database Machine performance, in which case, the standby will be running in a reduced performance mode.

![]() Active Data Guard is not supported on the standby if you plan on using EHCC on the primary database.

Active Data Guard is not supported on the standby if you plan on using EHCC on the primary database.

NOTE

A simple way to move an EHCC-compressed table to non-EHCC is by using the ALTER TABLE MOVE command.

Data Guard Network Best Practices

The network utilized to push the Data Guard traffic (redo) from the primary database to the standby should be able to handle the redo rates generated by the primary. Otherwise, you will encounter apply lags, which can add delays to the switchover process and hamper the recoverability of data.

Before deciding on the best method of routing the redo between the primary and the standby, calculate the bandwidth required by the redo generated by the primary database. This can be easily done from the Automatic Workload Repository (AWR) report by looking at the redo size per second metric. The metric should be captured during peak loads on the primary and accumulated from all the instances generating redo in the RAC cluster. You should also add the overhead of the TCP network (~30 percent due to TCP headers) to come up with the final bandwidth requirement.

Once you calculate the bandwidth required, consider the following options for deciding the best method applicable for routing the redo:

![]() Public network Investigate the bandwidth available on the public network to push redo. If the standby is in a remote location, this might be the only option available to you, unless the primary and the standby data centers are networked using dedicated lines. Since the public network is shared with other traffic, consider using quality of service (QoS) features to guarantee the bandwidth allocation required for Data Guard.

Public network Investigate the bandwidth available on the public network to push redo. If the standby is in a remote location, this might be the only option available to you, unless the primary and the standby data centers are networked using dedicated lines. Since the public network is shared with other traffic, consider using quality of service (QoS) features to guarantee the bandwidth allocation required for Data Guard.

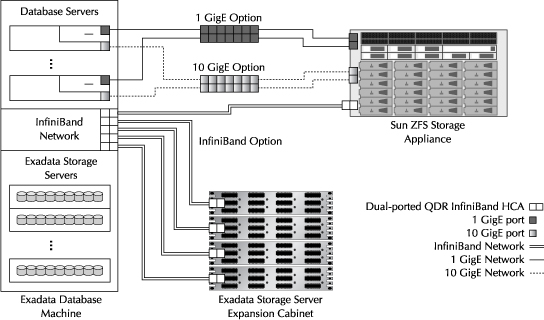

![]() Dedicated network If the public network is unable to handle the required bandwidth, consider isolating the traffic through a dedicated gigabit network. Each database server in the Database Machine has a set of 1 GigE and 10 GigE ports that you can use to route redo. You should be able to get an effective transfer rate of 120 MB/s through a 1 GigE port and 1 GB/s through a 10 GigE port. If you require more bandwidth than what is available from a single port, consider bonding multiple ports together or shipping the redo from multiple servers. For example, using two 1 GigE ports can give you a combined aggregated throughput of 240 MB/s, and two 10 GigE ports can give you a throughput of 2 GB/s.

Dedicated network If the public network is unable to handle the required bandwidth, consider isolating the traffic through a dedicated gigabit network. Each database server in the Database Machine has a set of 1 GigE and 10 GigE ports that you can use to route redo. You should be able to get an effective transfer rate of 120 MB/s through a 1 GigE port and 1 GB/s through a 10 GigE port. If you require more bandwidth than what is available from a single port, consider bonding multiple ports together or shipping the redo from multiple servers. For example, using two 1 GigE ports can give you a combined aggregated throughput of 240 MB/s, and two 10 GigE ports can give you a throughput of 2 GB/s.

![]() InfiniBand network Use the InfiniBand network to route redo when the redo rates cannot be accommodated using the 1 GigE or the 10 GigE ports. InfiniBand cables have a distance limitation (typically 100 m), so the distance between the primary and the standby Database Machines will be one of the deciding factors to determine if InfiniBand can be used. Using the InfiniBand network with TCP communication (IPoIB), you should be able to get a throughput of about 2 GB/s by using one port.

InfiniBand network Use the InfiniBand network to route redo when the redo rates cannot be accommodated using the 1 GigE or the 10 GigE ports. InfiniBand cables have a distance limitation (typically 100 m), so the distance between the primary and the standby Database Machines will be one of the deciding factors to determine if InfiniBand can be used. Using the InfiniBand network with TCP communication (IPoIB), you should be able to get a throughput of about 2 GB/s by using one port.

![]() Data Guard redo compression Consider transmitting the redo in a compressed format by using the Oracle Advanced Compression option. This is useful if none of the options are available to satisfy your bandwidth requirements.

Data Guard redo compression Consider transmitting the redo in a compressed format by using the Oracle Advanced Compression option. This is useful if none of the options are available to satisfy your bandwidth requirements.

Using Oracle GoldenGate with Database Machine

Oracle GoldenGate is a real-time, log-based, change data capture and replication tool that supports Oracle and non-Oracle sources and targets. Oracle GoldenGate replicates committed transactions from the source database in real time while preserving transactional integrity. GoldenGate has the intelligence to interface with the proprietary log formats of different database vendors, and using this intelligence, it can re-create the SQL statement of the transaction as it is executed on the source system and apply the re-created SQL on the destination system. GoldenGate performs these steps in real time and with minimal overhead on the source and thus, provides a solution that enables high-speed and real-time transactional data replication.

Oracle GoldenGate supports a variety of use cases, including real-time business intelligence, query offloading, zero-downtime upgrades and migrations, disaster recovery, and active-active databases with bidirectional replication, data synchronization, and high availability. A few use cases of GoldenGate focused on providing high availability for the Database Machine deployments are discussed here:

![]() GoldenGate can perform migrations of Oracle and non-Oracle databases to the Exadata Database Machine with minimal to zero downtime. The migration process starts by instantiating the source database on the Database Machine, replicating transactions from the source database to the Database Machine, keeping the two systems in sync for a time, and eventually switching the production system to the Oracle database. This method will allow you to perform comprehensive testing of your applications on the Exadata Database Machine, using production data volumes, tune them if necessary, and more importantly, allow you to attain a comfort level with the migration process before performing the actual switchover. Refer to Chapter 10 for further details on performing migrations to the Database Machine using GoldenGate.

GoldenGate can perform migrations of Oracle and non-Oracle databases to the Exadata Database Machine with minimal to zero downtime. The migration process starts by instantiating the source database on the Database Machine, replicating transactions from the source database to the Database Machine, keeping the two systems in sync for a time, and eventually switching the production system to the Oracle database. This method will allow you to perform comprehensive testing of your applications on the Exadata Database Machine, using production data volumes, tune them if necessary, and more importantly, allow you to attain a comfort level with the migration process before performing the actual switchover. Refer to Chapter 10 for further details on performing migrations to the Database Machine using GoldenGate.

![]() GoldenGate supports active-passive replication configurations with which it can replicate data from an active primary database on the Exadata Database Machine to the inactive standby database. GoldenGate can keep the two systems in sync by applying changes in real time. The standby database can be used to provide high availability during planned and unplanned outages, perform upgrades, and accelerate the recovery process on the primary.

GoldenGate supports active-passive replication configurations with which it can replicate data from an active primary database on the Exadata Database Machine to the inactive standby database. GoldenGate can keep the two systems in sync by applying changes in real time. The standby database can be used to provide high availability during planned and unplanned outages, perform upgrades, and accelerate the recovery process on the primary.

NOTE

The term standby database in this context is used to refer to a standby database replicated by GoldenGate, and should not be confused with the Data Guard standby database.

![]() GoldenGate supports active-active replication configurations, which offer bidirectional replication between primary and standby databases. The benefit of active-active is that the standby database (which is also another primary) can be used to offload processing from the primary. The standby database would otherwise be idle and will be utilized only during outages. This setup will allow you to distribute load across the primary and the standby databases based on application types and geographic location. Utilizing an idle database, which is usually considered a “dead” investment by the IT departments, will help you to justify the cost of purchasing a standby Database Machine.

GoldenGate supports active-active replication configurations, which offer bidirectional replication between primary and standby databases. The benefit of active-active is that the standby database (which is also another primary) can be used to offload processing from the primary. The standby database would otherwise be idle and will be utilized only during outages. This setup will allow you to distribute load across the primary and the standby databases based on application types and geographic location. Utilizing an idle database, which is usually considered a “dead” investment by the IT departments, will help you to justify the cost of purchasing a standby Database Machine.

![]() The Database Machine can be used as a real-time data warehouse in which other Oracle and non-Oracle databases can feed real-time transactions using GoldenGate. Real-time data warehouses are used by the enterprises in today’s competitive landscape for performing real-time analytics and business intelligence.

The Database Machine can be used as a real-time data warehouse in which other Oracle and non-Oracle databases can feed real-time transactions using GoldenGate. Real-time data warehouses are used by the enterprises in today’s competitive landscape for performing real-time analytics and business intelligence.

![]() GoldenGate can be used in situations that require the Exadata Database Machine to feed other database systems or data warehouses that require changed data to be captured from the Database Machine and propagated to the destination systems in real time. These types of deployments allow coexistence and integration of the Database Machine with other databases that you may have deployed in the data center.

GoldenGate can be used in situations that require the Exadata Database Machine to feed other database systems or data warehouses that require changed data to be captured from the Database Machine and propagated to the destination systems in real time. These types of deployments allow coexistence and integration of the Database Machine with other databases that you may have deployed in the data center.

Oracle GoldenGate Architecture

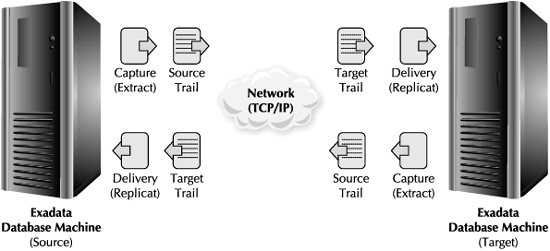

The primary components of the Oracle GoldenGate software are shown in Figure 6-2. Each component is designed so that it can perform its task independently of the other. This design ensures minimal dependencies and at the same time preserves data integrity by eliminating undue interference.

FIGURE 6-2. Oracle GoldenGate architecture

The main components comprising the GoldenGate software architecture are the following:

![]() Extract process The extract process captures new transactions as they occur on the source system. The extract process interfaces with the native transaction logs of the source database and reads the result of insert, update, and delete operations in real time. The extract can push captured changes directly to the replicat process, or generate trail files.

Extract process The extract process captures new transactions as they occur on the source system. The extract process interfaces with the native transaction logs of the source database and reads the result of insert, update, and delete operations in real time. The extract can push captured changes directly to the replicat process, or generate trail files.

![]() Trail files Trail files are generated by the extract process. They contain database transactions captured from the source system in a transportable and platform-independent format. Trail files reside outside the database to ensure platform independence, and to enhance the reliability and availability of transactions. This architecture minimizes the impact on the source system because additional tables or queries are not required on the source for supporting the data capture process.

Trail files Trail files are generated by the extract process. They contain database transactions captured from the source system in a transportable and platform-independent format. Trail files reside outside the database to ensure platform independence, and to enhance the reliability and availability of transactions. This architecture minimizes the impact on the source system because additional tables or queries are not required on the source for supporting the data capture process.

![]() Replicat process The replicat process takes the changed data from the latest trail file and applies it to the target using the native SQL for the appropriate target database system. The replicat process preserves the original sequence of the transactions as they were captured on the source system, and thereby preserves transactional and referential integrity at the target.

Replicat process The replicat process takes the changed data from the latest trail file and applies it to the target using the native SQL for the appropriate target database system. The replicat process preserves the original sequence of the transactions as they were captured on the source system, and thereby preserves transactional and referential integrity at the target.

![]() Manager process The manager process runs on the target and the source systems, and is responsible for starting, monitoring, and restarting other GoldenGate processes, and also for allocating data storage, reporting errors, and logging events.

Manager process The manager process runs on the target and the source systems, and is responsible for starting, monitoring, and restarting other GoldenGate processes, and also for allocating data storage, reporting errors, and logging events.

![]() Checkpoint files Checkpoint files contain the current read and write positions of the extract and replicat processes. Checkpoints provide fault tolerance by preventing the data loss when the system, the network, or the GoldenGate processes need to be restarted.

Checkpoint files Checkpoint files contain the current read and write positions of the extract and replicat processes. Checkpoints provide fault tolerance by preventing the data loss when the system, the network, or the GoldenGate processes need to be restarted.

![]() Discard files Discard files are used by GoldenGate to record failed operations either by the extract or the replicat process. For example, invalid column mappings are logged into the discard file by the replicat process so that they can be further investigated.

Discard files Discard files are used by GoldenGate to record failed operations either by the extract or the replicat process. For example, invalid column mappings are logged into the discard file by the replicat process so that they can be further investigated.

Oracle GoldenGate and Database Machine Best Practices

As discussed previously, GoldenGate requires a set of key files to accomplish its tasks. The best practice for storing these files on the Database Machine is to store them in the Oracle Database File System (DBFS). DBFS is discussed in Chapter 2; in a nutshell, it provides a distributed, NFS-like file system interface that can be mounted on the database servers. DBFS and NFS are the only methods currently available in the Database Machine to allow sharing of files among the database servers. The advantage of DBFS over NFS-like file systems is that DBFS inherits the high availability, reliability, and disaster recovery capabilities of the Oracle database since the files placed in DBFS are stored inside the database as a SecureFiles object.

NOTE

NFS file systems can be mounted on the database servers using the InfiniBand network or the 1 GigE or 10 GigE ports. The underlying transport protocol supported for NFS is TCP (IPoIB for InfiniBand).

The best-practice considerations for implementing GoldenGate with the Exadata Database Machine are as follows:

![]() Use DBFS to store GoldenGate trail files and checkpoint files. If a database server in the Database Machine fails, the GoldenGate extract and replicat processes will continue to function, since the trail and checkpoint files will still be accessible from the other database servers.

Use DBFS to store GoldenGate trail files and checkpoint files. If a database server in the Database Machine fails, the GoldenGate extract and replicat processes will continue to function, since the trail and checkpoint files will still be accessible from the other database servers.

![]() Ensure that the extract and the replicat processes run only on a single database node. This requirement guarantees that multiple extract/replicat processes do not work on the same set of transactions at the same time. One way to prevent them from starting up concurrently from other database nodes is to mount the DBFS file system only on one node and use this node to run these processes. Other nodes cannot accidentally start them, since DBFS will not be mounted on them.

Ensure that the extract and the replicat processes run only on a single database node. This requirement guarantees that multiple extract/replicat processes do not work on the same set of transactions at the same time. One way to prevent them from starting up concurrently from other database nodes is to mount the DBFS file system only on one node and use this node to run these processes. Other nodes cannot accidentally start them, since DBFS will not be mounted on them.

This check is built in when using a regular file system to store the checkpoint and trail files instead of DBFS. Using file locking mechanisms, the first extract/replicat process will exclusively lock the files and ensure that the second process cannot start. DBFS currently does not support such methods and hence, the manual checks are required.

![]() The best practice for storing checkpoint files on DBFS is to create a symbolic link from the GoldenGate home directory to a directory on DBFS, as shown in the following example. Use the same mount point names on all the nodes to ensure consistency and seamless failover.

The best practice for storing checkpoint files on DBFS is to create a symbolic link from the GoldenGate home directory to a directory on DBFS, as shown in the following example. Use the same mount point names on all the nodes to ensure consistency and seamless failover.

# DBFS is mounted on /mnt/dbfs

# GoldenGate is installed on /OGG/v10_4

% mkdir /mnt/dbfs/OGG/dirchk

% cd /OGG/v10_4

% rm -rf dirchk

% ln -s /mnt/dbfs/OGG/dirchk dirchk

![]() The DBFS database should be configured as a separate RAC database on the same nodes that house the databases accessed by GoldenGate. Perform checks to ensure that the DBFS file system is mountable on all database nodes. However as a best practice stated earlier, only one node will mount the DBFS at a time.

The DBFS database should be configured as a separate RAC database on the same nodes that house the databases accessed by GoldenGate. Perform checks to ensure that the DBFS file system is mountable on all database nodes. However as a best practice stated earlier, only one node will mount the DBFS at a time.

![]() Install the GoldenGate software in the same location on all the nodes that are required to run GoldenGate upon a failure of the original node. Also ensure that the manager, extract, and replicat parameter files are up to date on all nodes.

Install the GoldenGate software in the same location on all the nodes that are required to run GoldenGate upon a failure of the original node. Also ensure that the manager, extract, and replicat parameter files are up to date on all nodes.

![]() Configure the extract and replicat processes to start up automatically upon the startup of the manager process.

Configure the extract and replicat processes to start up automatically upon the startup of the manager process.

![]() Configure Oracle Clusterware to perform startup, shutdown, and failover of GoldenGate components. This practice provides high availability of the GoldenGate processes by automating steps required to initiate a failover operation.

Configure Oracle Clusterware to perform startup, shutdown, and failover of GoldenGate components. This practice provides high availability of the GoldenGate processes by automating steps required to initiate a failover operation.

![]() The page and discard files should be set up on the local file system and within the GoldenGate installation directory structure. The page file is a special memory-mapped file and currently not supported by DBFS.

The page and discard files should be set up on the local file system and within the GoldenGate installation directory structure. The page file is a special memory-mapped file and currently not supported by DBFS.

Database Machine Patches and Upgrades

Applying patches and performing upgrades are normal events in the lifecycle of software, and the Exadata Database Machine software components are no exception. The software installed on the database servers, the Exadata Storage Servers, and the InfiniBand switches are candidates for being patched or upgraded when Oracle releases their newer versions.

Oracle Enterprise Manager (OEM) has the capability to patch the Oracle software stack. You can use OEM to simplify the patching experience on the Exadata Database Machine. OEM’s integration with My Oracle Support enables you to identify recommended patches for your system by searching Knowledge Base articles, validating them for any conflicts, finding or requesting merge patches, and lastly but not least, automating the patch deployment process by using OEM Provisioning and Patch Automation features.

We will next describe the Database Machine patching process using methods that provide zero downtimes. Database high-availability features such as RAC and Data Guard, and the built-in high-availability features of Exadata Storage Server, make zero downtime patching achievable.

We will also discuss the methods available for upgrading the database, ASM and Clusterware, with minimal downtime using Data Guard.

NOTE

The patches for the Database Machine are categorized into database software patches, database system patches (which include the OS, firmware, database, and OFED patches), and the Exadata Storage Server patches. A separate system-level patch for the Exadata Storage Server does not exist, since this is bundled into the Exadata Storage Server patch.

Exadata Storage Server Patching

The Exadata Storage Server patches encompass patches to the Exadata Storage Server Software, Oracle Linux OS, firmware updates, and patches to Open Fabrics Enterprise Distribution (OFED) packages. Under no circumstances should users apply OS, firmware, or OFED patches directly on the Exadata Storage Servers. These patches will be supplied by Oracle and will be bundled into an Exadata Storage Server patch. The patch will be applied to all the servers in a rolling fashion and one at a time, without incurring any downtime.

Exadata Storage Server patches are one of two types: overlay and staged. Overlay patches require a restart of the Exadata Storage Server Software processes (CELLSRV, RS, and MS). Upon the restart of the Exadata Storage Server Software, ASM issues an automatic reconnect and the service resumes normal operation. This process does not incur any downtime.

Staged patches require the Exadata Storage Server to be rebooted upon application. The Exadata Storage Server will be taken offline and if any interim updates to the data residing in the offline Exadata Storage Servers occur during this period, they will be tracked by ASM. The updates will be synced when the Exadata Storage Server becomes available, using the ASM fast mirror re-sync feature. Staged patches do not incur any service downtime.

Database Patching with Minimal Downtime

Oracle database patches are broadly categorized into two types—patches and patch sets. A patch is a change to the Oracle software that happens between patch sets or upgrades, whereas a patch set is a mechanism for delivering a set of patches that are combined, integrated, and tested together, and delivered on a regular basis. Patches may be applied to an Oracle database with zero or near-zero downtime, using one of two techniques: the online patching feature introduced in Oracle Database 11g and rolling patching with Oracle RAC. We will describe both features in this section. The process of applying patch sets is treated similar to an upgrade, and you should use the methods outlined in the “Database Rolling Upgrades” section of this chapter to perform minimal downtime upgrades from patch sets.

NOTE

Oracle database patches can be Interim, Merge, Bundle, or Critical Patch Update (CPU) patches.

Rolling Patching with RAC

Oracle provides the ability to apply patches to the individual nodes of a RAC cluster in a rolling fashion, to one RAC node at a time, while the other nodes are still servicing user requests. The process of applying a rolling patch starts with taking one of the RAC nodes out of service, applying the patch to the node, and putting the node back in service. These steps are repeated until all instances in the cluster are patched.

Patches need to be labeled as “rolling” before they can be applied using this method. Usually, patches that modify the database and the shared structures between the RAC instances do not qualify as rolling. Moreover, only patches, and not patch sets, can be labeled as rolling capable.

Rolling patching using RAC guarantees the availability of the database service during the patching process. However, you will be running with one less node in the cluster until the process is completed, so the impact to the business due to reduced capacity should be considered prior to proceeding with this method.

Online Patching

Online patching is the process of applying patches to the Oracle instance while the database instance is up. A patch is capable of being applied online when the scope of changes introduced by the patch is small and the patch does not modify the database or the shared structures, which are also the requirements for rolling patches.

The criteria for qualifying a patch as online is more restrictive than the criteria for the rolling patch. If the patch is not qualified as rolling, then it definitely cannot be qualified as online. Examples of patches that usually get qualified as online are the interim and debug patches.

When applying an online patch, you specify the ORACLE_HOME of the binaries that you like to patch, along with the database instance. The online patch will patch the binaries residing in the Oracle home as with a regular database patch. The online patch will also patch the database instance processes. Each database process periodically checks for patched code and copies the new code into its execution space. This means that the processes might not pick up the new patched code at the exact time the patch is applied and there might be some delay.

You will see an increase in the overall memory utilization of the patched database processes due to the extra space needed in the Program Global Area (PGA) for running patched code. The extra memory is released once the database instance is restarted. You need to consider the total free memory available on the database host before applying the patch so you can investigate the impact of the memory increase to other resources. Typically, each patched process requires about 3 to 5 percent more memory, but the actual memory will depend on the number of changes introduced in the patch. The best way to measure it would be to test the patch on a test system before applying it to the production system.

Apart from using Enterprise Manager, you can also use the opatch utility to apply online patches. Use this opatch command to query whether the patch is qualified as online. If it is, the output from the command will be “Patch is an online patch: true”.

$ opatch query <path to patch directory> -is_online_patch

Oracle Clusterware and Oracle ASM Rolling Upgrades

Oracle Database 11g Release 2 is capable of performing rolling upgrades of Oracle ASM and Oracle Clusterware when configured in a cluster environment, thereby making the cluster available to the database and the applications during the upgrade process.

Starting with Database 11g Release 2, Oracle ASM and Clusterware are both executed from one Oracle home installation called the grid infrastructure. Upgrading the grid infrastructure binaries to the next release takes care of upgrading Oracle ASM and Clusterware software.

With the rolling upgrade feature, you will take one node in the cluster down, upgrade the Oracle grid infrastructure, start up ASM and Clusterware, and put the node back in the cluster. You will repeat this process on all the nodes, one at a time, in a rolling fashion. The cluster is available during this process, and no downtime is incurred to the database.

Oracle ASM and Clusterware can run at different versions of the software until all nodes in the cluster are upgraded. It should be noted that any new features introduced by the new versions of ASM or Clusterware are not enabled until all nodes in the cluster are upgraded.

Database Rolling Upgrades

Using Oracle Data Guard, it is possible for you to upgrade from older versions of the database and apply patch sets, in a rolling fashion and with near-zero downtime, to the end users and applications. Data Guard rolling upgrades were introduced with Oracle Database 10 g Release 1 using the SQL Apply process of the logical standby database. Oracle Database 11g enhances this capability by using a transient logical standby method that utilizes a physical standby database.

Rolling upgrades using the transient logical standby method start by using an existing physical standby database and temporarily converting it to a logical standby for the purpose of the upgrade, followed by the upgrade of the logical standby to the latest release. The logical standby database is then made the new primary by a simple Data Guard switchover process. Keep in mind that there are restrictions, based on data types and other factors, that impact the use of logical standby databases; please refer to the documentation for more details.

NOTE

During rolling upgrades, when converting the physical standby database to the logical standby, you need to keep the identity of the original physical standby database intact by using the KEEP IDENTITY clause of the ALTER DATABASE RECOVER TO LOGICAL STANDBY statement. This clause ensures that the logical standby has the same DBID and DB_NAME as the original physical standby.

During the rolling upgrade process, the synchronization of the redo is paused before the upgrade process is started on the logical standby; the redo from the primary is queued to be applied and resumes after the upgrade completes. The SQL Apply process is capable of synchronizing the redo generated by a lower release of the primary database with a higher release of the upgraded logical standby database, and this ensures that all the transactions are propagated to the standby and there is no data loss. This capability of the SQL Apply process forms the basis of performing rolling upgrades. The primary and the standby databases can remain at different versions until you perform sanity checks on the upgraded logical standby and confirm that the upgrade process has completed successfully.

At this point, you can perform a Data Guard switchover to the standby database, resulting in changing the role of the upgraded logical standby to primary. You can upgrade the old primary database by following a similar process as the one followed for the standby, and initiate a second switchover to the original production system.

There are several benefits of using the transient logical standby method for upgrades. In fact, this method is the Oracle MAA recommended best practice, because the method is simple, reliable, and robust, and requires minimal downtime. Some of the benefits of transient logical standby are highlighted here:

![]() The transient logical standby method utilizes an existing physical standby database for performing the upgrade and does not require the extra space for creating a new logical standby database solely for the purpose of performing the upgrade.

The transient logical standby method utilizes an existing physical standby database for performing the upgrade and does not require the extra space for creating a new logical standby database solely for the purpose of performing the upgrade.

![]() The rolling upgrade process provides the added benefit of testing the upgraded environment prior to performing the switchover. The tests are performed using the new version of the Oracle software, with production data and production-like workloads.

The rolling upgrade process provides the added benefit of testing the upgraded environment prior to performing the switchover. The tests are performed using the new version of the Oracle software, with production data and production-like workloads.

![]() This method greatly reduces the downtime required by running the pre-upgrade, upgrade, and post-upgrade tasks on the standby while the production system is still online and accessible to the users. The downtime incurred by this method is essentially the time it takes for the database to perform a switchover operation to the standby database.

This method greatly reduces the downtime required by running the pre-upgrade, upgrade, and post-upgrade tasks on the standby while the production system is still online and accessible to the users. The downtime incurred by this method is essentially the time it takes for the database to perform a switchover operation to the standby database.

Online Application Upgrades Using Edition-Based Redefinition

Switching gears a bit, this section focuses on providing high availability during database application upgrades, and not Oracle software upgrades as we were discussing earlier.

Upgrading user applications and database objects with zero downtime is possible by using the edition-based redefinition feature of Oracle Database 11g Release 2. Edition-based redefinition allows you to upgrade the application and its associated database objects to a newer edition (or version), while the old edition of the application and the database objects coexist and are still accessible by the users. Once the upgrade process is complete, the new connections from the upgraded application will be accessing the new edition of the objects, and the users that were still connected to the old edition will continue to be serviced until they disconnect the session.

The database concepts that enable edition-based redefinitions are

![]() Editions An edition associates the versions of the database objects that together form a semantically intact code release. The release can include all the objects, which is the case when the code is installed for the first time, or a subset of objects—if the objects are modified using a patched release. Database sessions execute in the context of an edition and a user is associated with an edition upon connect time.

Editions An edition associates the versions of the database objects that together form a semantically intact code release. The release can include all the objects, which is the case when the code is installed for the first time, or a subset of objects—if the objects are modified using a patched release. Database sessions execute in the context of an edition and a user is associated with an edition upon connect time.

![]() Editioning views An editioning view of a database object is the definition of the object as it existed in a particular edition. Editioning views expose different definitions of the database object and allow the users to see the definition that applies to its edition.

Editioning views An editioning view of a database object is the definition of the object as it existed in a particular edition. Editioning views expose different definitions of the database object and allow the users to see the definition that applies to its edition.

![]() Cross-edition triggers Cross-edition triggers are responsible for synchronizing data changes between old and new editions of tables. For example, if a table has new columns added in its latest edition, the users connected to the old edition can still perform updates using the old definition, and the cross-edition trigger will take care of populating the new columns behind the scenes.

Cross-edition triggers Cross-edition triggers are responsible for synchronizing data changes between old and new editions of tables. For example, if a table has new columns added in its latest edition, the users connected to the old edition can still perform updates using the old definition, and the cross-edition trigger will take care of populating the new columns behind the scenes.

Exadata Storage Server High Availability

Ensuring the high availability of the database storage grid is important for enhancing the overall availability of the Database Machine. Oracle ASM provides built-in high-availability features by incorporating Grid RAID, as outlined in Chapter 5. With Grid RAID, when an underlying disk on the Exadata Storage Server fails, the data is still available from the mirrored copy placed on a different Exadata Storage Server. ASM also incorporates built-in protection from data corruption, through which it will transparently access a mirrored copy when the primary copy is found to be corrupt.

Although the ASM redundancy level of NORMAL provides protection from multiple, simultaneous, failures and corruptions occurring in a single Exadata Storage Server, it may not protect you when the failures are spread across multiple Exadata Storage Servers. In such situations, your option for recovery is to perform a restore from a backup or to fail over to the Data Guard standby database (if it is configured).

The best practices outlined next guarantee higher availability and recoverability of the storage grid upon simultaneous, multiple failures that spread across multiple Exadata Storage Servers.

Storage Configuration Best Practices

The recommended ASM diskgroup best-practice configuration as discussed in Chapter 5 is to create a minimum of two diskgroups in the Database Machine. The first diskgroup will store the user and system tablespaces, and the second will store the database Fast Recovery Area (FRA). The database FRA is used for storing archived logs, flashback logs, redo logs, and control files. For the purpose of this discussion, let us assume the diskgroup that stores user data is named DATA and the diskgroup that stores the FRA is named RECOV.

NOTE

A third ASM diskgroup is created by Oracle when configuring the Database Machine. This diskgroup is typically used to store OCR and voting disks required by Oracle Clusterware, and is created on the grid disks residing on the outermost tracks.

The DATA diskgroup should be created on grid disks that reside on the hot or outer tracks of the cell disk, whereas the RECOV diskgroup should reside on the cold or inner tracks of the cell disk. This ensures higher performance for the applications by ensuring faster access to the data blocks from the hot areas of the cell disk.

Storage Configuration with Data Guard

The Data Guard setup ensures that you can tolerate all types of failures on the primary site, up to and including a total disaster of the primary. Also, for failures that are limited to a single Exadata Storage Server, the Grid RAID features will make the data available at all times without requiring the need to fail over to the standby site.

The MAA best practices for configuring DATA and RECOV diskgroups with Data Guard are

![]() Create DATA and RECOV diskgroups using ASM NORMAL redundancy.

Create DATA and RECOV diskgroups using ASM NORMAL redundancy.

![]() The DATA and RECOV diskgroups should be created across all available Exadata Storage Servers in the Database Machine.

The DATA and RECOV diskgroups should be created across all available Exadata Storage Servers in the Database Machine.

![]() This setup guarantees maximum protection due to failures, and at the same time provides the best performance by utilizing all available Exadata Storage Servers in the storage grid.

This setup guarantees maximum protection due to failures, and at the same time provides the best performance by utilizing all available Exadata Storage Servers in the storage grid.

Storage Configuration Without Data Guard

When you do not have Data Guard configured, as stated earlier, you are only protected from single failures or multiple failures confined to one Exadata Storage Server when you use ASM NORMAL redundancy. The MAA best practices for configuring DATA and RECOV diskgroups without Data Guard are

![]() Create the DATA diskgroup using ASM NORMAL redundancy.

Create the DATA diskgroup using ASM NORMAL redundancy.

![]() Create the RECOV diskgroup using HIGH redundancy. The HIGH redundancy ensures protection from double failures occurring across the Exadata Storage Servers and guarantees full recovery with zero data loss.

Create the RECOV diskgroup using HIGH redundancy. The HIGH redundancy ensures protection from double failures occurring across the Exadata Storage Servers and guarantees full recovery with zero data loss.

NOTE

If you are using ASM NORMAL redundancy without a Data Guard setup, there is a chance of data loss when multiple failures occur across a set of Exadata Storage Servers. For example, if you lose both copies of a redo log due to multiple failures, and if the redo was marked “active” by the database indicating that it was an essential component for performing a full recovery, you have essentially lost your data that was in the redo.

Preventing Data Corruption

Data corruption can occur anytime and in any layer of the hardware and the software stack that deals with storing and retrieving data. Data can get corrupted when it is in the storage, during its transmission over the network, in the memory structures, or when the storage layer performs an I/O operation. If data corruption is not prevented or repaired in a timely manner, the database will lose its integrity and can result in irreparable data loss, which can be catastrophic for the business.

In this section, we will discuss the best practices for preventing and detecting corruption, and the methods available for automatically repairing corruption when it occurs. Although the best practices can help you prevent almost all types of corruption, you might still end up with some, as corruption cannot always be completely prevented. If you have discovered corruption, you can use database recovery methods such as block-level recovery, automated backup and recovery, tablespace point-in-time recovery, remote standby databases, and transactional recovery. Keep in mind that the recovery process can be time consuming, so every effort should be taken to minimize the occurrence of corruption.

Detecting Corruption with Database Parameters

The Oracle database has internal checks which help detect corruption occurring in the database block. The checks involve calculating a checksum on the block contents, and storing it along with the block number and a few other fields in the block header. The checksum is used later to validate the integrity of the block, based on the settings of a few database parameters, that we will define shortly.

The database can also help detect lost writes. A lost write is an I/O operation that gets successfully acknowledged by the storage subsystem as being “persisted,” but for some reason the I/O was not saved to the disk. Upon a subsequent read of the block that encountered a lost write, the I/O subsystem returns the stale version of the block, which might be used by the database for performing other updates and thereby instigate the propagation of corruption to other coherent blocks.

Corruption detection is built into Oracle ASM when the ASM diskgroups are configured with NORMAL or HIGH redundancy. ASM is able to detect block corruption by validating the block checksum upon a read, and if it detects a corruption, it transparently reads the block again from the mirrored copy. If the mirrored block is intact, then ASM will return the good block to the database, and also try to write the good block over the bad block to fix the corruption. This process is transparent to the database and does not require any setup or tweaks.

When block corruption is detected by RMAN and database processes, it is recorded in the database dictionary view V$DATABASE_BLOCK_CORRUPTION. The view is continuously updated when corrupted blocks are repaired, thus allowing you to detect and report upon the status of corruption much sooner than was ever possible.

The parameters that you use to configure automatic corruption detection in the database and to prevent lost writes are

![]() DB_BLOCK_CHECKSUM This parameter determines whether the database will calculate a checksum for the block and store it in the header each time it is written to disk. DB_BLOCK_CHECKSUM can have the following values:

DB_BLOCK_CHECKSUM This parameter determines whether the database will calculate a checksum for the block and store it in the header each time it is written to disk. DB_BLOCK_CHECKSUM can have the following values:

![]() FALSE or OFF Checksums are only enabled and verified for the SYSTEM tablespace.

FALSE or OFF Checksums are only enabled and verified for the SYSTEM tablespace.

![]() TYPICAL Checksums are enabled on data blocks and computed only upon initial inserts into the block. This is the default setting.

TYPICAL Checksums are enabled on data blocks and computed only upon initial inserts into the block. This is the default setting.

![]() TRUE or FULL Checksums are enabled on redo and data blocks upon initial inserts and recomputed upon updates/deletes only on data blocks.

TRUE or FULL Checksums are enabled on redo and data blocks upon initial inserts and recomputed upon updates/deletes only on data blocks.

![]() DB_BLOCK_CHECKING This parameter enables the block integrity checks to be performed by Oracle using checksums computed by the DB_BLOCK_CHECKSUM parameter. DB_BLOCK_CHECKING can have the following values:

DB_BLOCK_CHECKING This parameter enables the block integrity checks to be performed by Oracle using checksums computed by the DB_BLOCK_CHECKSUM parameter. DB_BLOCK_CHECKING can have the following values:

![]() FALSE or OFF Semantic checks are performed on the objects residing in SYSTEM tablespace only. This is the default setting.

FALSE or OFF Semantic checks are performed on the objects residing in SYSTEM tablespace only. This is the default setting.

![]() LOW Basic block header checks are performed when blocks change in memory. This includes block changes due to RAC interinstance communication, reads from disk, and updates to data blocks.

LOW Basic block header checks are performed when blocks change in memory. This includes block changes due to RAC interinstance communication, reads from disk, and updates to data blocks.

![]() MEDIUM All LOW-level checks plus full semantic checks are performed on all objects except indexes.

MEDIUM All LOW-level checks plus full semantic checks are performed on all objects except indexes.

![]() TRUE or FULL All MEDIUM-level checks plus full semantic checks are performed on index blocks.

TRUE or FULL All MEDIUM-level checks plus full semantic checks are performed on index blocks.

![]() DB_LOST_WRITE_PROTECT This parameter enables (or disables) logging of buffer cache block reads in the redo log. When set to TYPICAL or FULL, the system change number (SCN) of the block being read from the buffer cache is recorded in the redo. When the redo is used by Data Guard to apply transactions from the primary to the standby database, the redo SCN can be compared to the data block SCN on the standby database and evaluated to detect lost writes.

DB_LOST_WRITE_PROTECT This parameter enables (or disables) logging of buffer cache block reads in the redo log. When set to TYPICAL or FULL, the system change number (SCN) of the block being read from the buffer cache is recorded in the redo. When the redo is used by Data Guard to apply transactions from the primary to the standby database, the redo SCN can be compared to the data block SCN on the standby database and evaluated to detect lost writes.

Lost write detection works best when used in conjunction with Data Guard (this topic is covered in the next section). When you do not use Data Guard, RMAN can detect lost writes when it performs recovery.

DB_LOST_WRITE_PROTECT can have the following values:

![]() NONE This is the default setting and disables buffer cache reads from being recorded in the redo.

NONE This is the default setting and disables buffer cache reads from being recorded in the redo.

![]() TYPICAL Buffer cache reads involving read-write tablespaces are recorded in the redo.

TYPICAL Buffer cache reads involving read-write tablespaces are recorded in the redo.

![]() FULL All buffer cache reads are recorded in the redo, including reads on read-write and read-only tablespaces.

FULL All buffer cache reads are recorded in the redo, including reads on read-write and read-only tablespaces.

As of Oracle Database 11g Release 1, you are not required to individually set each of the three parameters discussed earlier. You can set one single parameter, DB_ULTRA_SAFE, and that takes care of implementing the appropriate level of protection by setting DB_BLOCK_CHECKING, DB_BLOCK_CHECKSUM, and DB_LOST_WRITE_PROTECT. The DB_ULTRA_SAFE can have the following values:

![]() DATA_AND_INDEX This value turns on the following settings for data and index blocks:

DATA_AND_INDEX This value turns on the following settings for data and index blocks:

![]() DB_BLOCK_CHECKSUM to FULL

DB_BLOCK_CHECKSUM to FULL

![]() DB_BLOCK_CHECKING to FULL

DB_BLOCK_CHECKING to FULL

![]() DB_LOST_WRITE_PROTECT to TYPICAL

DB_LOST_WRITE_PROTECT to TYPICAL

![]() DATA_ONLY This value turns on the following settings only for data blocks:

DATA_ONLY This value turns on the following settings only for data blocks:

![]() DB_BLOCK_CHECKSUM to FULL

DB_BLOCK_CHECKSUM to FULL

![]() DB_BLOCK_CHECKING to MEDIUM

DB_BLOCK_CHECKING to MEDIUM

![]() DB_LOST_WRITE_PROTECT to TYPICAL

DB_LOST_WRITE_PROTECT to TYPICAL

![]() OFF This is the default setting. OFF will default to the individual settings of DB_BLOCK_CHECKSUM, DB_BLOCK_CHECKING, and DB_LOST_WRITE_PROTECT.

OFF This is the default setting. OFF will default to the individual settings of DB_BLOCK_CHECKSUM, DB_BLOCK_CHECKING, and DB_LOST_WRITE_PROTECT.

Turning on these parameters at various levels will incur additional overhead on the system. The overhead will typically vary between 1 and 10 percent, depending on the application and the available system resources. It is a best practice to perform sample tests to calculate the overhead as it applies to your system. The overhead should be compared to the benefits of automatically detecting and preventing corruption, especially when dealing with systems requiring high availability.

Using Data Guard to Prevent Corruptions

Oracle Data Guard provides protection from data corruption by keeping an identical copy of the primary database on the standby site. The database on the standby site can be utilized to repair the corruption encountered on the primary database, and vice versa. Moreover, the Data Guard redo apply process performs data block integrity checks while applying the redo, and ensures that the corruption on the primary database does not get propagated to the standby.

Data Guard also helps recover from logical corruption initiated by the user or the application on the primary database by delaying the application of redo to the standby site. With the delay in place, if you notice the corruption before it is propagated to the standby, you can stop the apply process and recover the data from the intact copy on the standby. You can also use the database Flashback features on the primary or the standby to recover from logical corruption.

To enable corruption protection with Data Guard, the best practice is to set the database parameter DB_ULTRA_SAFE to DATA_AND_INDEX on the primary and the standby database. As stated earlier, setting DB_ULTRA_SAFE to DATA_AND_INDEX will incur a slight performance overhead on the system, but the benefits of protecting the critical data from corruptions will surely outweigh the impact.

If performance impact on the primary database becomes an issue, at a minimum, you should enable this parameter on the standby database.

Enhanced Lost Write Protection

The lost write detection feature discussed earlier is most effective when used in conjunction with Data Guard Redo Apply. When this feature is enabled, the apply process on the standby database reads the transaction system change number (SCN) from the redo log shipped from the primary database and compares it with the SCN of the corresponding data block on the standby. If the redo SCN is lower than the data block SCN, it indicates a lost write occurred on the primary. If the redo SCN is higher than the data block SCN, it indicates a lost write occurred on the standby. In both situations, the apply process raises an error, alerting the database administrator to take steps to repair the corruption.

To repair lost writes on the primary database, you must initiate failover to the standby database and restore or recover the corrupt block on the primary. To repair a lost write on a standby database, you must re-create the standby database or restore a backup of the affected files.

Enhanced lost write protection is enabled by the setting DB_LOST_WRITE_PROTECT to TYPICAL as discussed earlier.

Automatic Block Repair Using Physical Standby

The automatic block repair feature enables automatic repairs of corrupt data blocks as soon as the database detects a block corruption, thereby improving the availability of data by fixing corruptions promptly. Without this feature, the corrupt data is unavailable until a block recovery is performed from database backups or Flashback logs. The automatic block repair feature is available when you use Oracle Data Guard physical standby operating in real-time query mode.

When the Data Guard setup is configured with automatic block repair and the primary database encounters a corrupt data block, the block automatically is replaced with an uncorrupt copy from the physical standby. Likewise, when a corrupt block is accessed by the physical standby database, the block is replaced with an uncorrupt copy from the primary database. If, for any reason, the automatic block feature is unable to fix the corruption, it raises ORA-1578 errors.

Automatic block repair is enabled by setting LOG_ARCHIVE_CONFIG or FAL_SERVER parameters. For detailed instructions on setting these parameters, refer to the Oracle Data Guard manuals.

Exadata Storage Server and HARD

The Hardware Assisted Resilient Data (HARD) is an initiative by Oracle to help prevent data corruptions occurring at the storage management layer when writing Oracle data blocks to the hard disk. With the HARD program, the storage vendors implement internal checks to validate the integrity of the Oracle block right before (or after) the block gets written to (or read from) disk. If the integrity of the block is violated, the storage management layer raises I/O errors to the database so it can retry the I/O. This feature is implemented underneath the covers in the storage layer, and is transparent to the end users and the database administrators.

Exadata Storage Server is fully compliant with HARD and provides the most comprehensive protection to prevent corruptions from being propagated to the hard disk. The HARD checks implemented by the Exadata Storage Server are more thorough and provide higher protection than the checks implemented by third-party storage vendors. For example, Exadata HARD performs extensive validation of block locations, magic numbers, head and tail checks, and alignment errors that are not typically performed by non-Exadata HARD implementations.

In order to enable HARD checks on the Exadata Storage Server, you need to set the DB_BLOCK_CHECKSUM to TYPICAL or FULL. Alternatively, you can set DB_ULTRA_SAFE to DATA_AND_INDEX as discussed earlier.

Exadata Database Machine Backup and Recovery Best Practices

A comprehensive and reliable backup and recovery strategy is the most important piece of a database high-availability strategy. Regardless of the type and size of databases, an effective strategy is focused on the methods and procedures for backing up critical data, and more importantly, for recovering the data successfully when required. While recoverability is the ultimate goal, the potential downtime incurred to recover should also be considered. The downtime includes the time to identify the problem, formulate the recovery steps, and perform recovery.

NOTE

Recovering a database using backups is generally used to recover from media failures within a single site. For recovering from a complete disaster of the site, or to recover with minimal downtime, the recommended strategy is to use Oracle Data Guard as discussed earlier in this chapter.

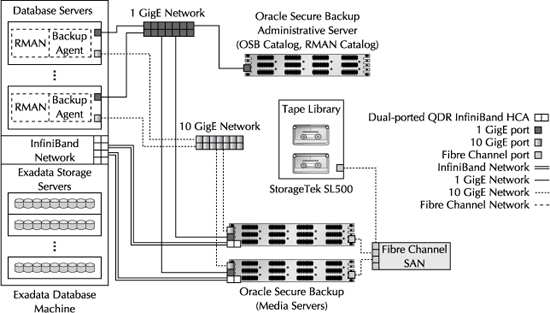

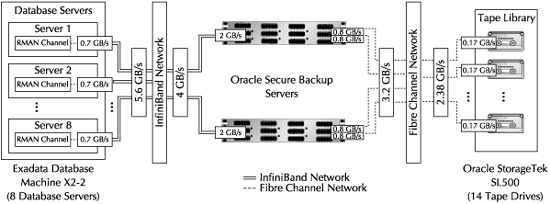

An effective backup and recovery strategy of the Exadata Database Machine should include all of the components that are housed within the Database Machine for which there are no built-in recovery mechanisms. The components that need to be a part of the strategy are the Oracle databases, the operating system (OS) and file systems of the database servers, the InfiniBand switch configuration, and the ILOM configuration.

The OS on the Exadata Storage Server has built-in recovery mechanisms and does not need separate backups. When OS recovery is needed on these servers, they will be restored by using the supplied CELLBOOT USB flash drive. The CELLBOOT USB drive stores the last successful OS boot image of the server. Since Oracle does not allow any deviations from the default OS install and setup of the Exadata Storage Servers, restoring using the CELLBOOT USB will be sufficient to perform OS-level recovery. Moreover, the OS resides on a file system that is configured on the first two disks of the Exadata Storage Server and uses software RAID-1 protection. If one of the first two disks fails, the server will still be available.

NOTE

You should back up the database server OS and the local file systems separately. The local storage on the database server is configured with RAID-5 protection, which can tolerate single drive failures. When two or more drives fail, the OS and the file systems need to be recovered from the backup. You should also back up the ILOM and InfiniBand switch configuration files using the native tools and methods provided for them.