VMware vSphere Design Essentials (2015)

Chapter 2. Designing VMware ESXi Host, Cluster, and vCenter

The core elements that are responsible for the success of virtualizing our datacenter start with an accurate design of ESXi and vCenter. VMware ESXi is a host OS, installed on bare metal, which is a driving factor for virtual datacenters and the cloud. VMware's ESXi hypervisors have changed a lot over the years, and the foundation of the enterprise offering has undergone a structured transition phase.

There are plenty of implementation options for ESXi deployment, which reflects the product's growth and maturity. We'll compare the choices and look at the benefits of each implementation to decide which one is most suitable for different environments. An enterprise can now roll out ESXi in a straightforward manner and manage it consistently with policy management. In this chapter, we'll discuss the essential configurations that are required in VMware vSphere design and how to effectively design the management layer. The heart of any sound vSphere infrastructure is a strong ESXi design.

In this chapter, you will learn about the following topics:

· Design essentials for ESXi

· Designing ESXi

· Design configurations for ESXi

· Design considerations for ESXi with industry inputs

· Design essentials for upgrading ESXi

· Design essentials for migrating ESXi

· Design essentials for the management layer

· Design decisions for the management layer

· Design considerations for the management layer with industry inputs

Design essentials for ESXi

VMware ESXi is the primary component in the VMware integrated environment. ESXi (usually expanded as Elastic Sky X Integrated) is the successor to ESX and is a world-class Type 1 hypervisor developed by VMware for implementing virtual datacenters. As a Type 1 hypervisor, ESXi is not an application; it is an OS that integrates vital OS components such as a kernel.

At the time of writing, ESXi 5.5 Update 2 is the latest update release and includes updated drivers. It is available for public download, and the following table shows the release history, including the patches released since:

| Date of Release | Name of the Release | Version | Build |
| --- | --- | --- | --- |
| December 2, 2014 | ESXi550-201412001 | ESXi 5.5 Express Patch 5 | 2302651 |
| October 15, 2014 | ESXi550-201410001 | ESXi 5.5 Patch 3 | 2143827 |
| September 9, 2014 | VMware ESXi 5.5 Update 2 | ESXi 5.5 Update 2 | 2068190 |
| July 1, 2014 | ESXi550-201407001 | ESXi 5.5 Patch 2 | 1892794 |
| June 10, 2014 | ESXi550-201406001 | ESXi 5.5 Express Patch 4 | 1881737 |
| April 19, 2014 | ESXi550-201404020 | ESXi 5.5 Express Patch 3 | 1746974 |
| April 19, 2014 | ESXi550-201404001 | ESXi 5.5 Update 1a | 1746018 |
| March 11, 2014 | VMware ESXi 5.5.1 Driver Rollup | | 1636597 |
| March 11, 2014 | VMware ESXi 5.5 Update 1 | ESXi 5.5 Update 1 | 1623387 |
| December 22, 2013 | ESXi550-201312001 | ESXi 5.5 Patch 1 | 1474528 |
| November 25, 2013 | vSAN Beta Refresh | | 1439689 |
| September 22, 2013 | VMware ESXi 5.5 | ESXi 5.5 GA | 1331820 |

After version 4.1, VMware discontinued the classic ESX hypervisor and continued with ESXi alone. ESXi replaces the Service Console, which was essentially a general-purpose management OS, with a more tightly integrated management layer.

The decision to remove the Service Console fundamentally changed what was possible with VMware's hypervisor. VMware ESXi has a much smaller footprint on the server, so the hypervisor consumes fewer host resources to perform essentially the same functions that ESX performed, which improves consolidation. ESXi also needs less disk space, whether it is installed on a local disk or booted from a SAN disk.

A major advantage of ESXi is its reduced code base, which is good in terms of security. Previous versions of ESX shipped as a download of about 2 GB, whereas the present ESXi version is about 125 MB. Less code means a smaller attack surface and therefore less to secure and patch. The ESX Service Console required additional security measures of its own, which ESXi avoids.

ESXi patches are released less frequently and are easy to apply across virtual datacenters; this massively reduces the administrative burden from a management standpoint. This is another great advantage of ESXi.

Designing ESXi

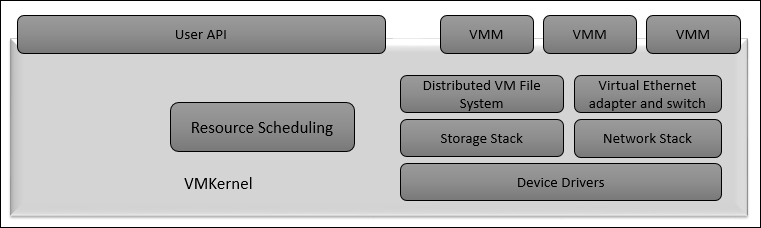

The VMware ESXi design comprises the fundamental OS, called VMkernel, and the processes that run on top of it. VMkernel runs all processes on ESXi, including ESXi agents, management software, and virtual machines. ESXi gains control over hardware devices on the physical servers and manages resources for the virtual machines. The main processes that run on top of the ESXi VMkernel are as follows:

· Direct Console User Interface (DCUI)

· Virtual Machine Monitor (VMM)

· Several agents used to enable high-level VMware infrastructure management from remote applications

· Common Information Model (CIM)

VMkernel is a POSIX-like OS developed by VMware and provides functionality similar to that of other operating systems, such as process creation and control, threads, signals, and filesystems. ESXi is designed specifically to support multiple VMs and provide core functionalities such as resource scheduling, I/O stacks, device drivers, and so on.

VMware ESXi was restructured to let VMware customers scale out through a hypervisor that behaves more like a hardware appliance. The vision was a base OS that is capable of autoconfiguring, receiving its settings remotely, and running from memory without disks. However, it's also an OS that's flexible enough to be installed on hard disks along with a locally saved state and user-defined settings for smaller, ready-to-use installations that don't require additional infrastructure.

Removing the VMware Service Console obviously has a great impact. A number of services and agents that were installed by default had to be rethought. The familiar command-line interface, with its access to management, troubleshooting, and configuration tools, is replaced in ESXi, and the Linux-style third-party agents for backups, hardware monitoring, and so on must be provisioned in different ways.

Design configurations for ESXi

In this section, you will see the key configurations that are required while designing VMware ESXi. All of the VMware ESXi hosts should be identical in terms of hardware specifications and should be built and configured consistently, to reduce the operational effort involved in patch management and to provide a building block solution. The following table lists the configuration maximums to keep in mind while designing ESXi for your infrastructure:

| Category | Item | Maximum supported |
| --- | --- | --- |
| Host CPU maximums | Logical CPUs per ESXi host | 320 |
| | NUMA nodes per ESXi host | 16 |
| Virtual machine maximums | Virtual machines per ESXi host | 512 |
| | Virtual CPUs per ESXi host | 4096 |
| | Virtual CPUs per core | 32 |
| Fault Tolerance maximums | Virtual disks per ESXi host | 16 |
| | Virtual CPUs per VM | 1 |
| | RAM per FT VM | 64 GB |
| | Virtual machines per ESXi host | 4 |
| Memory | RAM per ESXi host | 4 TB |
| | Number of swap files per VM | 1 |
| Virtual disks | Virtual disks per ESXi host | 2048 |
| iSCSI physical | LUNs per ESXi host | 256 |
| | QLogic 1 Gb iSCSI HBA initiator ports per ESXi host | 4 |
| | Broadcom 1 Gb iSCSI HBA initiator ports per ESXi host | 4 |
| | Broadcom 10 Gb iSCSI HBA initiator ports per ESXi host | 4 |
| | NICs that can be associated with software iSCSI stack per ESXi host | 8 |
| | Number of total paths per ESXi host | 1024 |
| | Number of paths to a LUN (software iSCSI and hardware iSCSI) per ESXi host | 8 |
| | QLogic iSCSI: dynamic targets per adapter port per ESXi host | 64 |
| | QLogic iSCSI: static targets per adapter port per ESXi host | 62 |
| | Broadcom 1 Gb iSCSI HBA targets per adapter port per ESXi host | 64 |
| | Broadcom 10 Gb iSCSI HBA targets per adapter port per ESXi host | 128 |
| | Software iSCSI targets per ESXi host | 256 |
| NAS | NFS mounts per ESXi host | 256 |
| Fibre Channel | LUNs per ESXi host | 256 |
| | LUN size | 64 TB |
| | LUN ID | 255 |
| | Number of paths to a LUN | 32 |
| | Number of total paths on an ESXi host | 1024 |
| | Number of HBAs of any type | 8 |
| | HBA ports | 16 |
| | Targets per HBA | 256 |
| VMFS | Volume size | 64 TB |
| | Volumes per host | 256 |
| | Hosts per volume | 64 |
| | Powered-on virtual machines per VMFS volume | 2048 |
| | Concurrent vMotion operations per VMFS volume | 128 |
| Physical NICs | e1000e 1 Gb Ethernet ports (Intel PCI-e) | 24 |
| | igb 1 Gb Ethernet ports (Intel) | 16 |
| | tg3 1 Gb Ethernet ports (Broadcom) | 32 |
| | bnx2 1 Gb Ethernet ports (Broadcom) | 16 |
| | nx_nic 10 Gb Ethernet ports (NetXen) | 8 |
| | be2net 10 Gb Ethernet ports (ServerEngines) | 8 |
| | ixgbe 10 Gb Ethernet ports (Intel) | 8 |
| | bnx2x 10 Gb Ethernet ports (Broadcom) | 8 |
| | Combination of 10 Gb and 1 Gb Ethernet ports | Eight 10 Gb and four 1 Gb ports |
| | mlx4_en 40 Gb Ethernet ports (Mellanox) | 4 |
| VMDirectPath limits | VMDirectPath PCI/PCIe devices per host | 8 |
| | SR-IOV number of virtual functions | 64 |
| | SR-IOV number of 10 Gb pNICs | 8 |
| | VMDirectPath PCI/PCIe devices per virtual machine | 4 |
| vSphere Standard and Distributed Switch | Total virtual network switch ports per host (VDS and VSS ports) | 4096 |
| | Maximum active ports per host (VDS and VSS) | 1016 |
| | Virtual network switch creation ports per standard switch | 4088 |
| | Port groups per standard switch | 512 |
| | Static/dynamic port groups per distributed switch | 6500 |
| | Ephemeral port groups per distributed switch | 1016 |
| | Ports per distributed switch | 60000 |
| | Distributed virtual network switch ports per vCenter | 60000 |
| | Static/dynamic port groups per vCenter | 10000 |
| | Ephemeral port groups per vCenter | 1016 |
| | Distributed switches per vCenter | 128 |
| | Distributed switches per host | 16 |
| | VSS port groups per host | 1000 |
| | LACP - LAGs per host | 64 |
| | LACP - uplink ports per LAG (team) | 32 |
| | Hosts per distributed switch | 1000 |
| | NetIOC resource pools per vDS | 64 |
| | Link aggregation groups per vDS | 64 |
| Cluster (all clusters including HA and DRS) | Hosts per cluster | 32 |
| | Virtual machines per cluster | 4000 |
| | Virtual machines per host | 512 |
| | Powered-on virtual machine config files per data store in an HA cluster | 2048 |
| Resource pool | Resource pools per host | 1600 |
| | Children per resource pool | 1024 |
| | Resource pool tree depth | 8 |
| | Resource pools per cluster | 1600 |
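
A design can be checked against these maximums mechanically before it is signed off. The following is a minimal Python sketch, not a VMware tool: the dictionary keys and the `check_design` function are hypothetical names chosen for illustration, and only a handful of the limits from the table are included.

```python
# A small subset of the ESXi 5.5 maximums listed in the table.
# The key names are illustrative assumptions, not VMware identifiers.
ESXI_55_MAXIMUMS = {
    "logical_cpus_per_host": 320,
    "vms_per_host": 512,
    "vcpus_per_host": 4096,
    "ram_per_host_tb": 4,
    "hosts_per_cluster": 32,
    "vms_per_cluster": 4000,
}


def check_design(design):
    """Return a list of violations; an empty list means no limit is exceeded."""
    violations = []
    for key, planned in design.items():
        limit = ESXI_55_MAXIMUMS.get(key)
        if limit is None:
            continue  # no maximum recorded for this key in the subset above
        if planned > limit:
            violations.append(f"{key}: planned {planned} exceeds maximum {limit}")
    return violations


if __name__ == "__main__":
    planned = {"hosts_per_cluster": 20, "vms_per_cluster": 4500, "vms_per_host": 225}
    for line in check_design(planned):
        print(line)
```

Staying well below a maximum is usually the safer design choice; a check like this only catches plans that cross a hard limit.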

Key design considerations for ESXi

The following are the most important considerations for your VMware ESXi design:

· Consider using VMware Capacity Planner to investigate the performance and usage of existing servers; this will help you to identify the requirements for planning.

· Consider eliminating inconsistency and achieving a controllable and manageable infrastructure by standardizing the physical configuration of the ESXi hosts.

· Never design ESXi without a remote management console such as DRAC or iLO.

· Ensure that syslog is properly set up for your virtual infrastructure and log files are offloaded to non-virtual infrastructures.

· Consult your server vendor on proper memory DIMM size, placement, and type to get optimal performance. While other configurations may work, they can greatly impact memory performance.

· Ensure you choose the right hardware for the ESXi host, aligning the functional requirements with a workload that has a baseline and performance that has a benchmark.

· To maintain higher density and consolidation in the design, scale up the ESXi hosts; this strategy in turn helps to get the best RTO from our distributed architecture.

· While designing, it is worth taking into consideration CPU scheduling for larger VMs, NUMA node size, and the balance between CPU and RAM to avoid underperformance. This will in turn provide sufficient I/O and network throughput for the VM.

· If you plan to deploy SSDs in the ESXi host, make sure you have enough data on their read/write performance. In today's market, most SSDs are heavily read-biased, which is worth taking into account in your design. Other factors that need to be considered are the peak network bandwidth requirements for NICs and the PCI bus. If sizing the ESXi host falls under the scope of work, give due importance to CPU, memory, network, and storage bandwidth as well.

· Ensure your design has sufficient ESXi hosts to align with a scale-out practice; this increases DRS cluster efficiency while providing enough ESXi hosts for our workloads to get optimal performance.

· Give the sizing and scaling of your virtual infrastructure due importance in your design, and evaluate local SSD drives for local caching solutions such as vFlash Read Cache, PernixData, or any other solution of your choice for ESXi hosts.

· When sizing your VMware ESXi hosts, consider having fewer, larger ESXi hosts instead of more, smaller ESXi hosts; this brings benefits in CapEx and OpEx, as well as reducing the datacenter footprint.

· Size ESXi host CPU and RAM keeping the following things in mind—20 percent headroom for spikes and business peaks, VMkernel overhead, planned and unplanned downtimes, and future business growth.
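
The headroom rule in the last bullet can be turned into a quick back-of-the-envelope calculation. The sketch below is one possible way to do it, under stated assumptions: the function names are hypothetical, the 20 percent headroom comes from the text, and the single spare host (to cover planned and unplanned downtime) as well as all demand and capacity figures are illustrative values, not recommendations.

```python
import math


def hosts_for_resource(total_demand, host_capacity, headroom=0.20):
    """Hosts needed when each host may only be filled to (1 - headroom)."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in the range [0, 1)")
    usable = host_capacity * (1 - headroom)
    return math.ceil(total_demand / usable)


def hosts_required(cpu_demand_ghz, ram_demand_gb, host_cpu_ghz, host_ram_gb,
                   headroom=0.20, spare_hosts=1):
    """Size for the more constrained of CPU and RAM, then add spare hosts."""
    by_cpu = hosts_for_resource(cpu_demand_ghz, host_cpu_ghz, headroom)
    by_ram = hosts_for_resource(ram_demand_gb, host_ram_gb, headroom)
    return max(by_cpu, by_ram) + spare_hosts


if __name__ == "__main__":
    # Illustrative numbers only: 400 GHz and 3,000 GB of aggregate demand,
    # hosts with 16 cores at 2.3 GHz (36.8 GHz) and 256 GB of RAM.
    print(hosts_required(400, 3000, 16 * 2.3, 256))
```

VMkernel overhead and future growth are not modeled separately here; one simple approach is to add them to the demand figures before calling the function.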

Designing an ESXi scenario

Let's apply what we have learnt so far to designing ESXi 5.5 in the following scenario. The hardware vendor for our design is Dell, and the Dell PowerEdge R715 server has been chosen as the hardware for designing and implementing VMware ESXi 5.5. The configuration and assembly process for each system should be uniform, with all components installed identically for all VMware ESXi 5.5 hosts. Standardize not only the model but also the physical configuration of the VMware ESXi 5.5 hosts, to provide a simple and supportable infrastructure by eliminating variability. The specifications and configuration for this hardware platform are detailed in the following table:

| Attributes | Specification |
| --- | --- |
| Vendor | Dell |
| Model | PowerEdge R715 |
| Number of CPU sockets | 4 |
| Number of CPU cores | 16 |
| Processor speed | 2.3 GHz |
| Number of network adaptor ports | 8 |
| Network adaptor vendor(s) | Broadcom/Intel |
| Network adaptor model(s) | 2x Broadcom 5709C dual-port onboard, 2x Intel Gigabit ET dual-port |
| Network adaptor speed | Gigabit |
| Installation destination | Dual SD card |
| VMware ESXi server version | VMware ESXi 5.5 server; build: latest |

With the preceding configuration, let's start designing. The first step is to configure the Domain Name System (DNS) for all of the VMware ESXi hosts; every host must be resolvable by its short name and by its Fully Qualified Domain Name (FQDN), using forward and reverse lookup, from all server and client machines that require access to VMware ESXi.
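
The DNS requirement is easy to verify from any machine that needs to reach the hosts. The following Python sketch uses only the standard `socket` module; the `check_dns` function and the host name `esx01.lab.local` are hypothetical, and the resolver functions are passed in as parameters purely so that the logic can be exercised without a live DNS server.

```python
import socket


def check_dns(fqdn, resolve=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Check short-name, FQDN, and reverse resolution for one host.

    Returns (True, ip_address) on success or (False, reason) on failure.
    """
    short_name = fqdn.split(".")[0]
    try:
        ip_from_fqdn = resolve(fqdn)
        ip_from_short = resolve(short_name)
        reverse_name = reverse(ip_from_fqdn)[0]
    except OSError as exc:  # socket.gaierror and socket.herror derive from OSError
        return False, f"lookup failed: {exc}"
    if ip_from_fqdn != ip_from_short:
        return False, f"short name resolves to {ip_from_short}, FQDN to {ip_from_fqdn}"
    if reverse_name.lower().rstrip(".") != fqdn.lower():
        return False, f"reverse lookup returned {reverse_name}"
    return True, ip_from_fqdn


if __name__ == "__main__":
    # Hypothetical host name; replace with the FQDNs of your ESXi hosts.
    print(check_dns("esx01.lab.local"))
```

Running the same check from the vCenter Server and from an administrator workstation covers the "all server and client machines" part of the requirement.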

The next step is to configure Network Time Protocol (NTP). It must be configured on each ESXi host and should be configured to share the same time source as the VMware vCenter Server to ensure consistency across VMware vSphere solutions.

At this moment, VMware ESXi 5.5 offers four different boot options: local disk, USB/SD, booting from SAN, and stateless booting of ESXi. If your infrastructure requirements state that stateless booting is needed, plan your rollouts with minimal associated CapEx and OpEx. Deploying ESXi on SD cards is a sensible choice here, because it permits a cost-saving migration to stateless when required.

While installing VMware ESXi, consider the auto-configuration phase: a 4 GB VFAT scratch partition is created if one is not already present on another disk. In this scenario, SD cards are used as the installation destination, and they do not permit the creation of a scratch partition. Industry experts suggest creating a shared volume that holds the scratch location for all VMware ESXi hosts, with a unique folder per server. We are using one of the NFS data stores in this scenario.

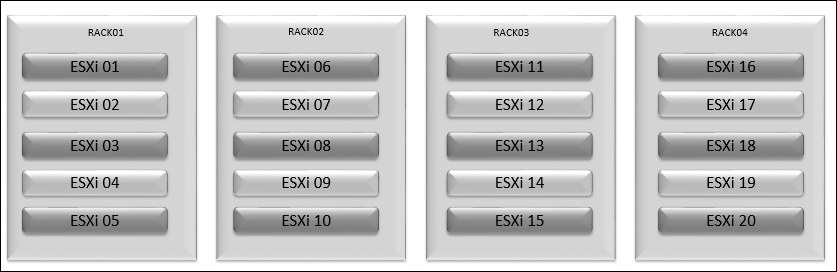

For our scenario, let's consider using a minimum of four racks for the 20 hosts and layering the VMware ESXi hosts across the racks as depicted in the following diagram.

Wiring considerations for our scenario are redundant power and four power distribution units for racks, each connected to separate legs of a distribution panel or isolated on separate panels. Here, the assumption is that the distribution panels are in turn isolated and connected to different continuous power supplies.

Layering the VMware ESXi hosts across the accessible racks diminishes the impact of a single point of failure.
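
The layering of 20 hosts across four racks can be sketched as a simple round-robin assignment. This is only an illustration of the idea: the host and rack names are hypothetical, and a real layout would also account for power, cooling, and cluster membership.

```python
def layer_hosts(hosts, racks):
    """Distribute hosts across racks round-robin so no rack holds a whole group."""
    layout = {rack: [] for rack in racks}
    for index, host in enumerate(hosts):
        rack = racks[index % len(racks)]
        layout[rack].append(host)
    return layout


if __name__ == "__main__":
    hosts = [f"esx{n:02d}" for n in range(1, 21)]  # hypothetical host names
    racks = ["rack-a", "rack-b", "rack-c", "rack-d"]  # hypothetical rack names
    for rack, members in layer_hosts(hosts, racks).items():
        print(rack, members)
```

With this distribution, losing one rack takes out five of the 20 hosts rather than a contiguous block that might make up an entire cluster.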

Design essentials for upgrading ESXi

ESXi hosts can be upgraded from a previous 5.x version or from ESX/ESXi 4.x in several ways. ESXi Installable 4.x hosts can be upgraded in one of three ways:

· An interactive upgrade via an ESXi Installable image

· A scripted upgrade

· vSphere Update Manager (VUM)

ESXi 5.0 and later hosts can be upgraded using these three approaches and, in addition, the esxcli command-line tool.

Note

If we have implemented Auto Deploy, we can directly apply a new image and reboot.

When upgrading VMware ESXi hosts from ESX/ESXi 4.x to the latest 5.x version using any of the three supported approaches, at least 50 MB must be free on the local VMFS volume. This space is used to temporarily store the host's configuration data.

The following table illustrates approaches for upgrading ESXi to the latest version:

| Upgrade approaches | From ESX/ESXi 4.x to ESXi 5.5 | From ESXi 5.0 to ESXi 5.5 | From ESXi 5.1 to ESXi 5.5 |
| --- | --- | --- | --- |
| Using vSphere Update Manager | Possible | Possible | Possible |
| Using interactive upgrade from devices such as CD, DVD, or USB drive | Possible | Possible | Possible |
| Using scripted upgrade | Possible | Possible | Possible |
| Using vSphere Auto Deploy | Not possible | Possible, only if the ESXi 5.0.x host was implemented using Auto Deploy | Possible, only if the ESXi 5.1.x host was implemented using Auto Deploy |
| Via esxcli | Not possible | Possible | Possible |
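
The upgrade matrix lends itself to a small lookup when planning upgrades for a mixed estate. The following Python sketch encodes the table as data; the `supported_methods` function and the version labels are hypothetical names used only for illustration.

```python
# Upgrade approaches to ESXi 5.5, keyed by approach, with the source
# versions each one supports (taken from the table in this section).
UPGRADE_PATHS = {
    "vSphere Update Manager": {"4.x", "5.0", "5.1"},
    "Interactive upgrade": {"4.x", "5.0", "5.1"},
    "Scripted upgrade": {"4.x", "5.0", "5.1"},
    "esxcli": {"5.0", "5.1"},
}

# Auto Deploy is only an option if the host was originally deployed with it.
AUTO_DEPLOY_VERSIONS = {"5.0", "5.1"}


def supported_methods(source_version, deployed_with_auto_deploy=False):
    """Return the sorted list of approaches usable from source_version."""
    methods = [name for name, versions in UPGRADE_PATHS.items()
               if source_version in versions]
    if deployed_with_auto_deploy and source_version in AUTO_DEPLOY_VERSIONS:
        methods.append("Auto Deploy")
    return sorted(methods)


if __name__ == "__main__":
    print(supported_methods("4.x"))
    print(supported_methods("5.1", deployed_with_auto_deploy=True))
```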

The following table illustrates the various scenarios that you will come across while upgrading ESXi to the latest version:

| Scenario for upgrade or migration to ESXi 5.5 | Support status | How to do it |
| --- | --- | --- |
| ESX and ESXi 3.x hosts | Direct upgrade is not possible | We must upgrade ESX/ESXi 3.x hosts to ESX/ESXi version 4.x before we can upgrade them to ESXi 5.5. Refer to the vSphere 4.x upgrade documentation. Alternatively, we might find it simpler and more cost-effective to perform a fresh installation of ESXi 5.5. |
| ESX 4.x host that was upgraded from ESX 3.x with a partition layout incompatible with ESXi 5.x | Not possible | The VMFS partition cannot be preserved. Upgrading or migration is possible only if there is at most one VMFS partition on the disk that is being upgraded and the VMFS partition starts after sector 1843200. Perform a fresh installation. To keep virtual machines, migrate them to a different system. |
| ESX/ESXi 4.x host, migration or upgrade with vSphere Update Manager | Possible | Refer to the Using vSphere Update Manager to Perform Orchestrated Host Upgrades section in the vSphere Upgrade Guide. |
| ESX/ESXi 4.x host, interactive migration or upgrade | Possible | Refer to the Upgrade or Migrate Hosts Interactively section in the vSphere Upgrade Guide. The installer wizard offers the choice to upgrade or perform a fresh installation. If we upgrade, ESX partitions and configuration files are converted to be compatible with ESXi. |
| ESX/ESXi 4.x host, scripted upgrade | Possible | Refer to the Installing, Upgrading, or Migrating Hosts Using a Script section in the vSphere Upgrade Guide. In the upgrade script, specify the particular disk to upgrade on the system. If the system cannot be upgraded correctly because the partition table is incompatible, the installer displays a warning and does not proceed. In this case, perform a fresh installation. Upgrading or migration is possible only if there is at most one VMFS partition on the disk that is being upgraded and the VMFS partition starts after sector 1843200. |
| ESX 4.x host on a SAN or SSD | Partially possible | We can upgrade the host as we would normally upgrade an ESX 4.x host, but no provisions are made to optimize the partitions on the disk. To optimize the partition scheme on the host, perform a fresh installation. |
| ESX 4.x host, missing the Service Console .vmdk file, interactive migration from CD or DVD, scripted migration, or migration with vSphere Update Manager | Not possible | The most likely reasons for a missing Service Console are that the Service Console is corrupted or the VMFS volume is not available, which can occur if the VMFS was installed on a SAN and the LUN is not accessible. In this case, on the disk selection screen of the installer wizard, if we select a disk that has an existing ESX 4.x installation, the wizard prompts us to perform a clean installation. |
| ESX/ESXi 4.x host, asynchronously released driver or other third-party customizations, interactive migration from CD or DVD, scripted migration, or migration with vSphere Update Manager | Not possible | Asynchronously released drivers and third-party customizations on an ESX/ESXi 4.x host are not carried over by the standard ESXi 5.5 installer image. Refer to the Upgrading Hosts That Have Third-Party Custom VIBs section in the vSphere Upgrade Guide. |
| ESXi 5.0.x or 5.1.x host, asynchronously released driver or other third-party customizations, interactive migration from CD or DVD, scripted migration, or migration with vSphere Update Manager | Possible | When we upgrade an ESXi 5.0.x or 5.1.x host that has custom VIBs to version 5.5, the custom VIBs are migrated. Refer to the Upgrading Hosts That Have Third-Party Custom VIBs section in the vSphere Upgrade Guide. |
| ESXi 5.0.x host | Possible | Methods supported for direct upgrade to ESXi 5.5 are vSphere Update Manager; interactive upgrade from CD, DVD, or USB drive; scripted upgrade; esxcli; and Auto Deploy. If the ESXi 5.0.x host was deployed using Auto Deploy, we can use Auto Deploy to provide the host with an ESXi 5.5 image. |
| ESXi 5.1.x host | Possible | Methods supported for direct upgrade to ESXi 5.5 are vSphere Update Manager; interactive upgrade from CD, DVD, or USB drive; scripted upgrade; esxcli; and Auto Deploy. If the ESXi 5.1.x host was deployed using Auto Deploy, we can use Auto Deploy to provide the host with an ESXi 5.5 image. |

Design essentials for migrating ESXi

Migrating VMware ESXi 4.x to the latest version requires careful planning. The migration procedure will be affected by several factors—the version of VMware vCenter Server, the latest version of VMware ESXi, the compatibility of available hardware, boot considerations, and the type and destination state of data stores. We need a clear migration path. The outline of the migration plan is illustrated in the following section, and you can customize it according to your demands:

Pre-migration

The following pre-migration tasks should be performed prior to installing VMware ESXi on the host:

· Validate that all prerequisites are complete and verify that hardware requirements are met

· Document the existing VMware ESX host configuration

· Evacuate virtual machines and templates and put the host into Maintenance Mode

· If running in a VMware HA/VMware DRS cluster, remove the host from the cluster

Migration

After the pre-migration tasks are complete, you are ready to upgrade VMware ESXi and perform the migration.

The actual migration involves seven steps:

· Removing the host from vCenter

· Installing VMware ESXi

· Configuring the management network

· Reconnecting the host in VMware vCenter Server

· Restoring host-specific configuration settings

· Testing/validating the upgraded host

· Moving the host back into the VMware HA/VMware DRS cluster

Post-migration

The following post-migration tasks are worth considering in your design:

· Configuring Direct Console User Interface (DCUI)

· Joining the ESXi host to Active Directory (AD)

· Identifying and configuring a persistent data store for logging

· Backing up the VMware ESXi host configuration

Delivery considerations

Prior to redeploying the ESX hosts as ESXi, you should run a testing phase that looks at the existing setup and examines whether each element is suitable for ESXi. Obviously, a pilot is a good way to test the suitability of ESXi in your existing configuration. However, it's difficult to test every possible scenario via a small pilot, because large enterprises have many different types and versions of hardware, connections to different network and storage devices, backup tools, monitoring tools, and so on.

Provisioning

ESX servers can be upgraded or rebuilt. ESX 4.x server upgrades can be undertaken by the ESXi interactive or scripted install mechanism, or via VUM. If the ESX 4.x servers have previously been upgraded from ESX 3.x, then the partitioning configuration brought across might not allow a subsequent upgrade to ESXi. There needs to be at least 50 MB free in the host's local VMFS volume to store the previous configuration. If you're using VUM to upgrade, the /boot partition needs to have at least 350 MB free. If you don't have this much space, you can still use the interactive update because it doesn't need this space for staging the upgrade files. Any VMFS volumes on the local disk that need to be preserved must start after the first 1 GB; otherwise, the upgrade can't create the ESXi partitions it requires.
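
These space and partition prerequisites can be collected into a simple pre-check before an ESX 4.x host is scheduled for upgrade. The sketch below is an assumption-laden illustration: the function name and parameters are hypothetical, the inputs are expected to be gathered by hand or by your own tooling, and the sector threshold is the value 1843200 quoted in the upgrade scenarios table earlier in this chapter.

```python
MIN_FREE_VMFS_MB = 50            # space for the saved host configuration
MIN_FREE_BOOT_MB_VUM = 350       # staging space on /boot when VUM is used
MIN_VMFS_START_SECTOR = 1843200  # local VMFS must start after this sector


def upgrade_precheck(free_vmfs_mb, free_boot_mb, vmfs_start_sector, method):
    """Return a list of problems that would block an ESX 4.x upgrade.

    method is one of "interactive", "scripted", or "vum" (hypothetical labels).
    vmfs_start_sector may be None if no local VMFS volume must be preserved.
    """
    problems = []
    if free_vmfs_mb < MIN_FREE_VMFS_MB:
        problems.append("less than 50 MB free on the local VMFS volume")
    if method == "vum" and free_boot_mb < MIN_FREE_BOOT_MB_VUM:
        problems.append("less than 350 MB free on /boot for a VUM upgrade")
    if vmfs_start_sector is not None and vmfs_start_sector <= MIN_VMFS_START_SECTOR:
        problems.append("local VMFS volume starts too early on the disk")
    return problems


if __name__ == "__main__":
    print(upgrade_precheck(free_vmfs_mb=120, free_boot_mb=200,
                           vmfs_start_sector=2097152, method="vum"))
```

Assuming 512-byte sectors, sector 1843200 corresponds to 900 MiB, which is consistent with the "first 1 GB" guideline in the text. A host that fails only the /boot check can still be upgraded interactively.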

Operations

One of the more obvious techniques to smooth a transition is to begin using the newer cross-host management tools as early as possible. Most of the utilities available for ESX classic hosts work with ESXi hosts. vSphere Client, vCenter Server, vSphere Web Client, vSphere command-line interface (vCLI), and PowerCLI are host-agnostic and will make any transition less disruptive. There is no reason not to start working with these tools from the outset; even if you decide not to migrate at that stage, you'll be better prepared when ESXi hosts begin to appear in your environment.

The primary management loss when you replace ESX is the Service Console. This is the one tool that, if you use it regularly, must be replaced with an equivalent. There are two main contenders: the vCLI and the ESXi Shell. The vCLI provides the same Linux-style commands as the Service Console. The easiest way to get it is to download the vSphere Management Assistant (vMA) virtual appliance. This includes a Linux shell and all the associated environmental tools you'd expect. Generally, anything you can do at a Service Console prompt, you can do in the vMA. Most scripts can be converted to vMA command syntax relatively easily. The second option is the ESXi Shell. Although it's a stripped-down, bare-bones environment, it provides the majority of vSphere-specific commands that you'd otherwise find in the Service Console. Some of the more Linux-centric commands may not be available, but it provides a more familiar feel than the vMA because it's host-specific, and therefore the syntax is closer to that of the Service Console.

In addition to rewriting scripts, you'll need to replace other services that ESX includes in the Service Console. For ESXi hosts without persistent storage for a scratch partition, it's important to either redirect the logs to a remote data store or configure the host to point to a remote syslog server. ESXi hosts don't have their own direct Web Access as ESX hosts do. It's unlikely that this is a regularly used feature but, if you've relied on it in certain circumstances, then you need to get accustomed to using the Windows-based vSphere Client to connect to the host. Finally, if you've used the Service Console for host monitoring via SNMP or a server vendor's hardware agent, then ESXi has built-in CIM agents for hardware on the HCL. Many vendors can supply enhanced modules for their particular hardware. With these CIM modules, you can set up alerts in vCenter, and some third-party hardware-monitoring software can use the information. ESXi also provides some SNMP support, which you can use to replace any lost functionality of Service Console agents.

Design essentials for the management layer

VMware vCenter Server provides a central platform for managing the VMware vSphere infrastructure. It is designed to automate and deliver a virtual infrastructure with confidence.

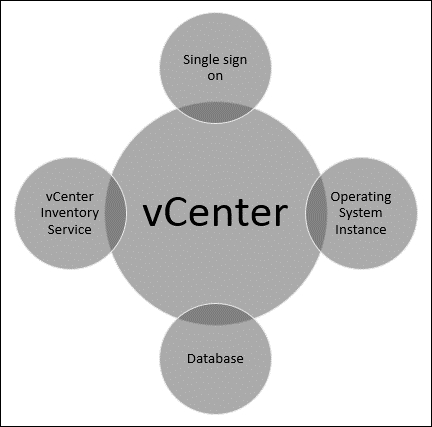

VMware vCenter is a key component in most centrally critical elements of our virtual data center. It's the management point we likely use to manage our virtual data center. We will create data centers, clusters, resource pools, networks, and data stores; assign permissions; configure alerts; and monitor performance. All of this functionality is centrally configured in the vCenter Server. You should therefore dedicate part of your design to building a robust and scalable vCenter Server, and the following diagram illustrates various components of vCenter from vSphere 5.1 and above:

Before vSphere 5.1, vCenter Server was basically a monolithic application; in vSphere 4.x and vSphere 5.0 there were really only two major components to deal with: the OS instance and the database. However, with the introduction of vSphere 5.1, VMware split the monolithic vCenter Server into a number of different components. In addition to the two previous components, there are now two more: vCenter Inventory Service and Single Sign-On.

The introduction of additional components in vSphere 5.1 and 5.5 means we also have to choose whether we will run all these components on a single server or break the roles apart on separate servers. Let's explore each component one by one.

OS instances and database requirements

VMware vCenter Server OS instances are available in two basic formats: as installable software on the Windows platform or as a preinstalled Linux-based virtual appliance, the vCenter Server Appliance (vCSA). A vCenter Server installed on a Windows platform must meet the following hardware requirements:

| vCenter Server Hardware | Requirements |
| --- | --- |
| CPU | Two 64-bit CPUs or one 64-bit dual-core processor. |
| Processor | 2.0 GHz or faster Intel 64 or AMD 64 processor. |
| Memory | Minimum memory depends on your design decision: 4 GB of RAM if vCenter Server is installed on a different server than vCenter SSO and vCenter Inventory Service; 10 GB of RAM if vCenter Server, vCenter SSO, and vCenter Inventory Service are installed on the same server. |
| Disk storage | Minimum storage depends on your design decision: 4 GB if vCenter Server is installed on a different machine than vCenter SSO and vCenter Inventory Service; 40-60 GB if vCenter Server, vCenter SSO, and vCenter Inventory Service are installed on the same machine. Disk storage requirements have to be calculated if the vCenter Server database runs on the same machine as vCenter Server. 450 MB is required for the log. |
| Microsoft SQL Server 2008 R2 Express disk | Up to 2 GB of free disk space to decompress the installation archive. Approximately 1.5 GB is deleted after the installation is complete. |
| Network speed | 1 Gbps |
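The memory and disk figures in the preceding table depend on a single design decision: whether vCenter Server shares a machine with vCenter SSO and vCenter Inventory Service. The following is a minimal sketch of that lookup; the function name and dictionary keys are illustrative assumptions, and the figures are copied from the table.

```python
def windows_vcenter_minimums(colocated: bool) -> dict:
    """Return minimum RAM and disk (in GB) for vCenter Server on Windows.

    colocated=True means vCenter Server, vCenter SSO, and vCenter Inventory
    Service share one server; False means vCenter Server runs on its own.
    The function name and keys are hypothetical, chosen for illustration.
    """
    if colocated:
        # Disk is given as a 40-60 GB range, so both ends are reported.
        return {"ram_gb": 10, "disk_gb_min": 40, "disk_gb_max": 60}
    return {"ram_gb": 4, "disk_gb_min": 4, "disk_gb_max": 4}


if __name__ == "__main__":
    print(windows_vcenter_minimums(colocated=True))
    print(windows_vcenter_minimums(colocated=False))
```

These values are minimums only; a database running on the same machine needs additional disk space calculated separately.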

The vCenter Server Appliance is a management application that needs sufficient resources in order to perform optimally. We should treat the following figures as minimum requirements for a given infrastructure. Sizing vCenter Server above these minimums might result in improved performance, better concurrency in production deployments, and reduced latency. Hardware requirements for the VMware vCenter Server Appliance are as follows:

| VMware vCenter Server Appliance Hardware | Requirements |
| --- | --- |
| Disk storage on the host machine | A minimum of 70 GB and a maximum of 125 GB are required. Disk space depends on the size of our vCenter Server inventory. |
| Memory in the VMware vCenter Server Appliance (embedded PostgreSQL database) | With the embedded PostgreSQL database, the vCenter Server Appliance supports up to 100 ESXi hosts or 3,000 virtual machines and has the following memory requirements: 8 GB for a very small inventory (10 or fewer ESXi hosts, 100 or fewer virtual machines); 16 GB for a small inventory (10 to 50 ESXi hosts or 100 to 1,500 virtual machines); 24 GB for a medium inventory (50 to 100 ESXi hosts or 1,500 to 3,000 virtual machines). |
| Memory in the VMware vCenter Server Appliance (Oracle database) | With an Oracle database, the vCenter Server Appliance supports up to 1,000 ESXi hosts, 10,000 virtual machines, and 10,000 powered-on virtual machines, and has the following memory requirements: 8 GB of RAM for a very small inventory (10 or fewer ESXi hosts, 100 or fewer virtual machines); 16 GB of RAM for a small inventory (10 to 100 ESXi hosts or 100 to 1,000 virtual machines); 24 GB of RAM for a medium inventory (100 to 400 ESXi hosts or 1,000 to 4,000 virtual machines); 32 GB of RAM for a large inventory (more than 400 ESXi hosts or 4,000 virtual machines). |
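The memory tiers for the embedded PostgreSQL database can be expressed as a small lookup. This is a sketch under two assumptions that the table does not state explicitly: the upper bound of each tier is inclusive, and the appliance is sized for the smallest tier that fits both the host count and the VM count. The names `EMBEDDED_TIERS` and `vcsa_embedded_memory_gb` are hypothetical.

```python
# (max ESXi hosts, max VMs, RAM in GB) for the embedded PostgreSQL database,
# taken from the table above. Inclusive upper bounds are an assumption.
EMBEDDED_TIERS = [
    (10, 100, 8),      # very small inventory
    (50, 1500, 16),    # small inventory
    (100, 3000, 24),   # medium inventory
]


def vcsa_embedded_memory_gb(hosts: int, vms: int) -> int:
    """Return the RAM tier that fits both the host count and the VM count."""
    for max_hosts, max_vms, ram_gb in EMBEDDED_TIERS:
        if hosts <= max_hosts and vms <= max_vms:
            return ram_gb
    raise ValueError(
        "inventory exceeds the embedded database limits "
        "(100 ESXi hosts or 3,000 virtual machines)"
    )


if __name__ == "__main__":
    print(vcsa_embedded_memory_gb(hosts=20, vms=400))
```

An inventory beyond the embedded limits raises an error rather than returning a figure, because the table calls for an Oracle database in that range.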

vCenter SSO

With the release of vSphere 5.1, VMware added a new component to vCenter Server known as vCenter Single Sign-On (SSO), which is also part of vSphere 5.5. This component provides a centralized authentication service that vCenter Server uses to authenticate against multiple backend identity sources, such as LDAP and Active Directory (AD).

For smaller environments, vCenter SSO can be installed on the same server as the rest of the vCenter Server components; for larger environments, it can be installed on a separate system for better performance. VMware vCenter SSO also supports a range of topologies, including standalone, cluster, and multisite.

The following table illustrates minimum hardware requirements for vCenter SSO, running on a separate host machine from vCenter Server:

| vCenter SSO Hardware | Requirements |
| --- | --- |
| Processor | Intel or AMD x64 processor with two or more logical cores, each with a speed of 2 GHz |
| Memory | 3 GB |
| Disk storage | 2 GB |
| Network speed | 1 Gbps |

The components of SSO are Security Token Service (STS), administration server, vCenter Lookup Service, and VMware Directory Service; these have to be considered while designing your infrastructure using SSO.

Deploying SSO models

VMware vCenter SSO can be deployed using one of three models: Basic, Multiple SSO instances in the same location, and Multiple SSO instances in different locations.

Basic vCenter SSO

Basic vCenter SSO is the most common deployment mode and meets the requirements of most vSphere 5.1 and 5.5 users. Typically, this deployment model maintains the same architecture as previous vCenter Server infrastructures. In most cases, we can use vCenter Simple Install to deploy vCenter Server with vCenter SSO in the basic mode.

Multiple SSO instances in the same location

Using this deployment model, we install a primary vCenter SSO instance and one or more additional vCenter SSO instances on other nodes. Both the primary and secondary instances are placed behind a third-party network load balancer. Each vCenter SSO instance has its own VMware Directory Service that replicates data with the other vCenter SSO servers in the same location.

Multiple SSO instances in different locations

This deployment model supports region-based deployments. It is needed when a single administrator has to administer vCenter Server instances that are deployed on geographically dispersed sites in Linked Mode. Each site is represented by one vCenter SSO instance, running either as a single vCenter SSO server or as an HA cluster. The vCenter SSO site entry point is the system that other sites communicate with, and it is the only system that needs to be accessible from the other sites. In a clustered deployment, the entry point of the site is the machine where the HA load balancer is installed.

| vCenter Server Deployment | SSO Deployment Mode |
| --- | --- |
| Single vCenter Server | Basic vCenter SSO |
| Multiple local vCenter Servers | Basic vCenter SSO |
| Multiple remote vCenter Servers | Basic vCenter SSO |
| Multiple vCenter Servers in Linked Mode | Multisite vCenter SSO |
| vCenter Servers with high availability | Basic vCenter SSO with VMware vSphere HA, or Basic vCenter SSO with vCenter Server Heartbeat (both provide high availability for vCenter Server and vCenter SSO) |
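The mapping in the preceding table can be captured as a simple lookup that a design checklist or script might consult. This is only a sketch; the dictionary keys and the function name are hypothetical labels, while the values are taken from the table.

```python
# Hypothetical short keys for the vCenter Server deployment types in the table.
SSO_MODE_BY_DEPLOYMENT = {
    "single": "Basic vCenter SSO",
    "multiple_local": "Basic vCenter SSO",
    "multiple_remote": "Basic vCenter SSO",
    "linked_mode": "Multisite vCenter SSO",
    "high_availability": (
        "Basic vCenter SSO with VMware vSphere HA "
        "or vCenter Server Heartbeat"
    ),
}


def recommended_sso_mode(deployment: str) -> str:
    """Return the SSO deployment mode listed for a vCenter deployment type."""
    try:
        return SSO_MODE_BY_DEPLOYMENT[deployment]
    except KeyError:
        raise ValueError(f"unknown deployment type: {deployment!r}") from None


if __name__ == "__main__":
    print(recommended_sso_mode("linked_mode"))
```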

VMware vCenter Inventory Service

VMware also split off the inventory portion of vCenter Server into a separate component. The vCenter Inventory Service now handles the discovery and management of inventory objects across different linked vCenter Server instances. As with vCenter SSO, we can install vCenter Inventory Service on the same system as the other components, or we can move it onto a separate system for greater performance and scalability. The next vCenter release is expected to support cross-vCenter vMotion.

Design decisions for the management layer

In this section, we will discuss some of the key decisions involved in crafting the management layer design for your VMware vSphere infrastructure implementation, such as the following:

· Identifying whether vCenter will be hosted on a physical server or as a VM

· Identifying whether vCenter will be hosted on the Windows platform or as the Linux-based vCSA

· Identifying database server locations either on primary sites or remote sites

· Configuration maximums to be considered

Let's get started straightaway with identifying decision-driving factors for installation and configuration.

Identifying whether vCenter will be hosted on a physical server or as a VM

There is a long-running debate in the VMware blogs and community about whether we should install vCenter Server on a physical server or as a VM. Let's take the case of a large enterprise that has some 200 ESXi hosts and suffers a serious outage. For example, we lose connectivity to the LUN where the vCenter VM is stored, and the VM will not be back up for another 6 to 12 hours. Suppose something else goes wrong in our infrastructure in the meantime. After the VM boots, we may also see high CPU and RAM usage all at once. This is one example of what can happen if our vCenter is a virtual machine. Another arises in a vCloud Director (vCD) or VMware Horizon infrastructure: we cannot deploy any new VMs while vCenter is down, and we cannot perform certain actions to restore our environment because we have no vCenter. As we can see, serious issues may arise if our virtual vCenter Server is not available.

We have discussed reasons for running vCenter on physical hardware. Now, let's look at the other side of the coin: why we should choose to run vCenter as a virtual machine. Note that VMware now lists running vCenter as a virtual machine as a best practice. Although it is a best practice, there are certain risks, and for those we have tabulated a mitigation plan in the following table:

| Failure reason | Design justification |
| --- | --- |
| VMware vCenter is lost when the database fails | Design our SQL instances on a different server and cluster them |
| vCenter and SQL are VMs on the virtual infrastructure | Design vCenter and SQL on separate ESXi hosts with anti-affinity rules set |
| vCenter and SQL belong to the same storage | Design our vCenter on different LUNs from storage devices |
| LUN connection failure to vCenter | Design database snapshots at periodic intervals |
| vCenter and SQL server have a performance challenge | Design our vCenter/SQL so it has adequate resources |
| VMware vCenter crashed | Deploy a new virtual machine to replace the crashed one and do not forget to attach it to the database |

Identifying whether vCenter will be hosted on the Windows platform or vCSA on the Linux platform

Choosing the right vCenter platform is a key factor in your design. VMware introduced the vCenter Server virtual appliance, a Linux-based virtual appliance, in vSphere 5.0 and improved it in vSphere 5.5. Before the virtual appliance was introduced, we had only one option: the Windows-based vCenter Server. Let's see which option to pick for our design.

If we have strong operational support for Microsoft Windows servers, meaning that a solid patching solution is in place, all critical alerts are monitored, and there is a proactive team of Windows administrators, it is best to deploy the Microsoft Windows Server-based version of vCenter Server. This makes more sense than introducing a vCenter Server virtual appliance.

If you are looking for a simple and quick vCenter deployment and the infrastructure is small, the vCenter Server virtual appliance offers real benefits. Simply deploy the Open Virtualization Format (OVF) package, configure the vApp, and then open the web-based management tool.

Identifying the database server location on primary sites or remote sites

Database vendors such as IBM, Microsoft, and Oracle recommend resource allocations for ideal performance of their database servers. For example, Microsoft recommends at least 1 GB of RAM for SQL Server, and 4 GB for ideal performance.

In addition, take into consideration the resources required for the vCenter service, web services, and plugins; we are looking at another 2 GB to 4 GB of RAM for vCenter. In an enterprise infrastructure, it is quite likely that our design already has a properly sized database server that can accommodate the vCenter database without any major issues.

The recommended resources are as follows:

· 1 CPU with 2.0 GHz or any other similar configuration

· 4 GB of RAM

Taking this into account, if we install both vCenter and the database server on the same server, we need 8 GB to 16 GB of RAM and 4 to 8 CPUs. In that case, it is best to go for a local database. Based on your requirements, pick a local or a remote database.

Configuration maximums to be considered

The maximum supported configurations to take into account when you design your vSphere infrastructure are as follows:

| Components | Configuration |
| --- | --- |
| vCenter Server Scalability | |
| ESXi hosts per vCenter Server | 1,000 |
| Powered-on VMs per vCenter Server | 10,000 |
| Registered VMs per vCenter Server | 15,000 |
| Linked vCenter Servers | 10 |
| ESXi hosts in linked vCenter Servers | 3,000 |
| Powered-on VMs in linked vCenter Servers | 30,000 |
| Registered VMs in linked vCenter Servers | 50,000 |
| Concurrent vSphere Client connections to vCenter Server | 100 |
| Concurrent vSphere Web Client connections to vCenter Server | 180 |
| Number of ESXi hosts per datacenter | 500 |
| MAC addresses per vCenter Server | 65,536 |
| User Interface | |
| USB devices connected per vSphere Client | 20 |
| Concurrent operations | |
| vMotion operations per ESXi host (1 Gb/s network) | 4 |
| vMotion operations per ESXi host (10 Gb/s network) | 8 |
| vMotion operations per datastore | 128 |
| Storage vMotion operations per ESXi host | 2 |
| Storage vMotion operations per datastore | 8 |
| vCenter Server Appliance | |
| ESXi hosts (vPostgres database) | 100 |
| VMs (vPostgres database) | 3,000 |
| ESXi hosts (Oracle database) | 1,000 |
| VMs (Oracle database) | 10,000 |
| VMware vCenter Update Manager | |
| VMware Tools scan per ESXi host | 90 |
| VMware Tools upgrade per ESXi host | 24 |
| VM hardware scan per ESXi host | 90 |
| VM hardware upgrade per ESXi host | 24 |
| VMware Tools scan per VUM server | 90 |
| VMware Tools upgrade per VUM server | 75 |
| VM hardware scan per VUM server | 90 |
| VM hardware upgrade per VUM server | 75 |
| ESXi host scan per VUM server | 75 |
| ESXi host remediation per VUM server | 71 |
| ESXi host upgrade per VUM server | 71 |
| ESXi host upgrade per cluster | 1 |
| Cisco VDS update and deployment | 70 |
| VMware vCenter Orchestrator | |
| Associated vCenter Server systems | 20 |
| Associated ESXi instances | 1,280 |
| Associated VMs | 35,000 |
| Associated running workflows | 300 |
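A design can be checked against these maximums before it is finalized. The following is a minimal sketch that compares planned figures with a handful of the vCenter Server limits from the preceding table; the key names and the `check_design` function are hypothetical, and only a subset of the limits is included.

```python
# A subset of the vCenter Server maximums from the table above.
# Key names are hypothetical labels chosen for this sketch.
VCENTER_MAXIMUMS = {
    "hosts_per_vcenter": 1000,
    "powered_on_vms_per_vcenter": 10000,
    "registered_vms_per_vcenter": 15000,
    "linked_vcenters": 10,
    "hosts_per_datacenter": 500,
}


def check_design(planned: dict) -> list:
    """Return one message for every planned value that exceeds its maximum.

    Keys that are not in VCENTER_MAXIMUMS are ignored.
    """
    violations = []
    for key, value in planned.items():
        limit = VCENTER_MAXIMUMS.get(key)
        if limit is not None and value > limit:
            violations.append(f"{key}: planned {value} exceeds maximum {limit}")
    return violations


if __name__ == "__main__":
    plan = {"hosts_per_vcenter": 1200, "hosts_per_datacenter": 300}
    for message in check_design(plan):
        print(message)
```

An empty result only means that none of the listed limits is exceeded; it says nothing about limits that are missing from the dictionary.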

Design considerations for the management layer with industry inputs

Designing VMware vSphere is challenging; to avoid bottlenecks, we need to learn and understand how experienced architects really do it. In this section, we will discuss the values and procedures that need to be followed while designing a VMware vSphere management layer, building on what we discussed in Chapter 1, Essentials of VMware vSphere.

Take into consideration the number of ESXi hosts and VMs we are going to have, followed by the kind of statistics we will need to maintain for our infrastructure. Sizing the server correctly from the ground up is most important, so that we won't need to redeploy as our infrastructure grows. Also, remember that our vCenter Server should be isolated from our database server, providing isolation of roles for the different components of the infrastructure. We should plan for resilience for both components and take into account what kind of outage we can predict. When we have this information, we can plan the level of resilience we need. Don't be afraid to design your vCenter as a VM. In some cases, this can deliver a greater level of resilience with more ease than using a physical server; hence, it is worth considering this option in your design.

vCenter is key to our kingdom of virtual infrastructure. Leaving it unprotected places your kingdom out in the open without security. A great number of attacks today are carried out from within the network.

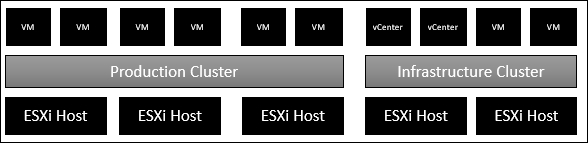

It is always better to run infrastructure VMs on a dedicated virtual infrastructure (cluster), away from production. In each case, we will have to take into consideration what options are available to ensure that SLAs will not be violated. vCenter Server placement will also have an impact on the backup and recovery solution you will implement and on its licensing.

The following table lists the various design values and principles that every design is required to have:

| Principles | Key components to consider in your design |
| --- | --- |
| Readiness | vSphere HA; DRS and Resource Pool; vCenter Heartbeat; Template and vMotion; Alarms and Statistics |
| Manageability | Compatibility and interoperability; Scalability; Linked Mode |
| Performance | OS to be hosted; Database placement on either primary or secondary sites; Update Manager |
| Recoverability | Recovery Time Objective (RTO); Recovery Point Objective (RPO); Mean Time to Repair (MTTR); Disaster Recovery (DR); Business Continuity (BC) |
| Security | Isolation; SSL certificates; Domain certificates; Permission with right access and identity management |
| Justification | Design decision; Risk and implications; Assumption and constraints |

Finally, a monitoring utility needs to be installed in the guest OS. Make sure that your infrastructure monitoring utility supports the vCenter Server Appliance; otherwise, look for options to monitor it via SSH or SNMP. The vCenter Server Appliance was improved in version 5.5, but enterprises must understand these considerations before selecting it as opposed to installing vCenter on Windows. Some further differences are listed in the following table:

| Features | vCenter on Windows platform | vCenter Server Appliance running on Linux platform |
| --- | --- | --- |
| Linked Mode | Yes | No |
| Plugin | Yes | Partial |
| VMware HA | Yes | Yes |
| VMware FT | No | No |
| vCenter Heartbeat | Yes | No |
| VMware Update Manager | Yes | Partial |
| VMware SRM | Yes | Yes |
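The feature comparison in the preceding table can drive the platform choice directly. The following sketch returns the platforms that support every required feature; the `FEATURES` structure, the platform keys `windows` and `vcsa`, and the `allow_partial` flag are assumptions made for illustration.

```python
# Feature support copied from the table above.
# "windows" and "vcsa" are hypothetical keys for the two platforms.
FEATURES = {
    "Linked Mode": {"windows": "Yes", "vcsa": "No"},
    "Plugin": {"windows": "Yes", "vcsa": "Partial"},
    "VMware HA": {"windows": "Yes", "vcsa": "Yes"},
    "VMware FT": {"windows": "No", "vcsa": "No"},
    "vCenter Heartbeat": {"windows": "Yes", "vcsa": "No"},
    "VMware Update Manager": {"windows": "Yes", "vcsa": "Partial"},
    "VMware SRM": {"windows": "Yes", "vcsa": "Yes"},
}


def platforms_supporting(required, allow_partial=False):
    """Return the platforms that support every feature in `required`.

    With allow_partial=True, a "Partial" entry counts as supported.
    """
    accepted = {"Yes", "Partial"} if allow_partial else {"Yes"}
    return [
        platform
        for platform in ("windows", "vcsa")
        if all(FEATURES[feature][platform] in accepted for feature in required)
    ]


if __name__ == "__main__":
    print(platforms_supporting(["VMware HA", "VMware SRM"]))
    print(platforms_supporting(["Linked Mode", "VMware Update Manager"]))
```

An empty list means that neither platform meets the requirement set, which is the case for any requirement that includes VMware FT.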

Here are the various industry practices followed across different verticals and lines of business:

· Size the vCenter database appropriately, following key design principles, to avoid performance issues.

· Never consider a built-in SQL Express database for production. It is configured to support only 5 ESXi hosts and no more than 50 virtual machines.

· Never disable DRS or use VM-Host affinity rules to decrease movements of vCenter.

· Using vSphere 5.5, we can implement SSO in a multisite configuration when vCenter Server Linked Mode is needed.

· Observe the effect of virtualizing vCenter. Ensure it has the highest restart priority, and make sure that the services vCenter Server depends on (DNS, DHCP, AD, and the database) are available before it starts.

· If you are planning to use SRM for DR, it is recommended to consider using separate Windows instances for installation of SRM including Storage Replication Adapters (SRAs).

· If you're planning to install vCenter Server along with other VMs, set CPU and memory shares to the maximum.

· In large enterprise virtual infrastructures, make VMware vCenter as isolated as possible by scaling out rather than having all roles such as Inventory, SSO, VUM, and SRM on the same Windows instance.

· Size vCenter resources properly when adding any other management components, such as vCOPS, SRM, and VUM.

· Implement vCenter as a VM or vCSA to take advantage of virtualization. This will help you achieve server consolidation.

· Justify RTO, RPO, and MTTR for vCenter in your design document.

· Set an appropriate idle timeout on the Windows terminal server so that multiple idle sessions do not accumulate within vCenter.

· Size vCenter with future scalability in mind, including additional clusters and VMs.

· The design should state that the clocks of the machines involved must be synchronized. This will avoid unpredictable results during the installation and configuration of SSO.

· The design should specify that the Managed IP address is configured when vCenter Server is deployed.

· Back up vCenter Server on a periodic basis and test the recovery procedure periodically. This should act as a health check on your design document.
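The restart-priority practice in the preceding list, where DNS, DHCP, AD, and the database must be available before vCenter Server, amounts to a dependency ordering. The following sketch derives a valid startup order with the standard-library `graphlib` module (Python 3.9 or later). The service names are hypothetical labels, and the graph assumes that the four services have no dependencies among themselves, which a real environment may not satisfy.

```python
from graphlib import TopologicalSorter

# Each key maps to the set of services that must be running before it starts.
# Assumption: dns, dhcp, ad, and database do not depend on each other.
DEPENDENCIES = {
    "vcenter": {"dns", "dhcp", "ad", "database"},
}


def startup_order(dependencies: dict) -> list:
    """Return a startup order in which every dependency precedes its dependents."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    print(startup_order(DEPENDENCIES))
```

The relative order of the four prerequisite services is not fixed by this graph; only the position of vCenter Server after all of them is guaranteed.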

Summary

In this chapter, you read about the design essentials for ESXi, design of ESXi, design configurations of ESXi, design considerations for ESXi with industry inputs, design essentials for upgrading ESXi, design essentials for migrating ESXi, designing essentials for management layer, design decisions for the management layer, and design considerations for management layer with industry inputs. In the next chapter, you will learn about designing VMware vSphere Networking.
