Games, Design and Play: A detailed approach to iterative game design (2016)

Part III: Practice

Chapter 12. Evaluating Your Game

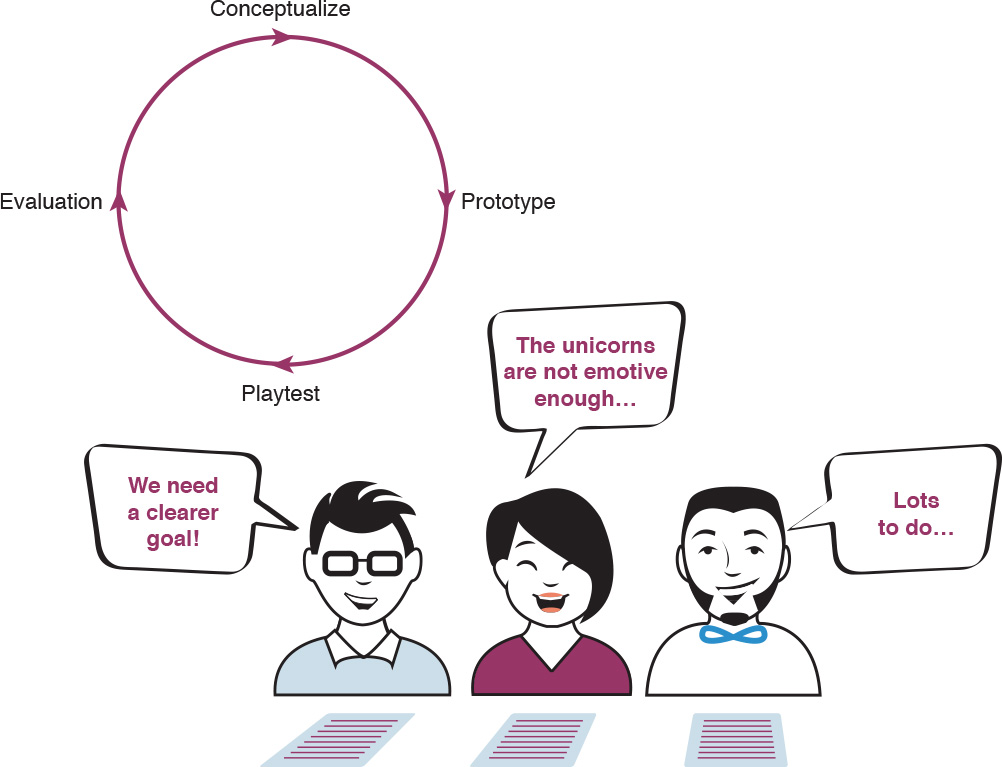

Evaluation is where you consider your game’s design, interpret the outcome of your playtests, and make decisions about how to refine your design plans. This directly flows into the first stage: conceptualization. You are reviewing the questions and answers (both expected and unexpected) and then making decisions about how to improve your game, including what kind of prototype will be next in the process.

Evaluation is the final, and sometimes most challenging, step in the iterative cycle. This is where all of the feedback from playtests gets examined by the team. If prototypes pose questions that are answered through playtests, then the evaluation phase is when those answers are reviewed and turned into actionable ideas for design revisions. The answers from the playtest, however, aren’t always clear. Think of yourself as the doctor, the game as the patient, and the playtest session as the symptoms the patient reports to you. Playtester feedback is what the game is saying to you about the alignment between your design values and your player experience. The team’s observations of the playtest, and what the playtesters do and say, are the evidence considered to diagnose your game’s design. Just as in the medical profession, it takes time to diagnose problems accurately, and treatments can run the gamut from “take two aspirin and call me in the morning” to complex surgery.

Figure 12.1 Evaluating is the fourth phase of the iterative cycle.

Reviewing Playtest Results

To get started with evaluation, think back to the questions posed in the prototype. Was the prototype exploring the play experience? If so, the evaluation should be focused on that aspect of the game. If the prototype was an exploration of art, code, or other elements around play, then make sure the evaluation focuses accordingly. The important thing here is to focus the evaluation on what the prototype actually contained and was meant to test.

Next, consider the answers that emerged from the playtests. This isn’t always as easy as it might seem. With the prototype, playtest notes, and documentation in hand, the team should review what happened during the playtest. This can happen in a couple of ways: individually review and collectively discuss, or collectively review and discuss. Whichever approach the team chooses, everyone should think about issues in a structured way, focusing on what, where, why, and the takeaway.

What: Instead of thinking in broad terms—it was awesome, players had fun, the game was too hard, and so on—break down feedback and observations from the playtest into small moments that highlight the good and the bad of the game, its design, and how that was implemented in the prototype. Focus on what actually happened, not your or a teammate’s analysis. Did a playtester not know how to use a particular action? Did the players consistently laugh or get frustrated at a particular moment? Focus on the evidence, and not yet the team’s interpretations.

Where: An important aspect of evaluating playtest feedback is knowing the context within which the moment was observed. Sometimes knowing the specific moment within the gameplay is important. Was the player trying to achieve a particular goal? Were they dealing with a particularly challenging moment in the game? Other times, problems arise around aspects of the game not yet implemented, like someone complaining about the plainness of the game when art direction hasn’t been implemented.

Why: For each of these moments, diagnose why you think it happened. If the player didn’t know how to use a particular action, was it because the control scheme wasn’t explained? Did the game not provide the experience hoped? Or did the action work in an unexpected way? Try to understand the underlying causes of the feedback on the prototype.

Takeaway: The most important thing in reviewing the playtest is coming to a consensus on what the playtest reveals about the game’s design. Getting to a specific comment like “the paddle controls are too loose,” “players seem to jump instead of climb,” or “the object-oriented storytelling is not introducing the secondary characters well” is what to aim for. This requires a certain degree of diagnostic skill for converting player feedback into decisions for revising the design of the game. Again, this is a lot like what doctors do when reviewing a patient’s symptoms. The feedback may be evidence of something, but the underlying cause still needs to be diagnosed. This diagnosis is especially important when players suggest a specific design change, like, “I think you should make the score bigger on the screen.” Instead of just translating this into the design task “make score bigger,” try to understand why the playtesters suggested that. This is like a patient coming into the doctor’s office saying, “I have a sprained ankle.” The patient is diagnosing themself, but the doctor needs to understand why the patient thinks they have a sprained ankle and look at all of the evidence before the doctor makes a diagnosis. What are the symptoms? It could be that the playtesters are saying that they don’t see the score changing—or that they think the score is important and that in the fast pacing of the game they miss it. A bigger score might not solve the problem. Instead, other forms of feedback about player performance might make more sense, such as a screen between matches emphasizing the score and player achievements.
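One way to keep the four questions separate in the team's notes is to give each observation its own small record. The following is a minimal sketch, not a prescribed tool: the `Observation` class, its field names, and the sample notes (including the "first ladder" context) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One small moment from a playtest (hypothetical record layout)."""
    what: str            # what actually happened: evidence only
    where: str           # context in the game or the session
    why: str = ""        # the team's diagnosis, filled in during discussion
    takeaway: str = ""   # the agreed conclusion about the design

    def is_diagnosed(self) -> bool:
        # An observation is finished only when it has both a cause and a takeaway.
        return bool(self.why.strip()) and bool(self.takeaway.strip())


def undiagnosed(observations):
    """Return the observations the team still needs to discuss."""
    return [o for o in observations if not o.is_diagnosed()]


# Illustrative notes only; the details are assumptions, not playtest data.
notes = [
    Observation(
        what="Playtester asked for a bigger score display",
        where="Mid-match, during fast play",
        why="Players miss score changes at the game's pace",
        takeaway="Try a between-match screen emphasizing the score",
    ),
    Observation(
        what="Players jump instead of climb",
        where="First ladder",
    ),
]

for o in undiagnosed(notes):
    print("Still needs a diagnosis:", o.what)
```

Keeping `why` and `takeaway` empty until the discussion happens mirrors the advice above: record the evidence first, and add interpretation only once the team has reviewed it together.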

Think of yourself as a doctor of game design. There are various ways to consider this feedback from a design perspective. But most importantly, think about it in relation to your design values. This could be an opportunity to bring your game more in line with them. If the team is not careful, this process can turn into a scene from the TV program House, where Hugh Laurie’s character berates and talks over his colleagues. So be careful about keeping things constructive during the evaluation process.

What to Think About

Some of the most important aspects to evaluate about a game’s design are the places where design values translate into player experience. Being able to think about how players are or aren’t getting the intended experience often has to do with a combination of the implementation of basic game design tools and the mechanisms by which players engage with the game. Thinking through each of these is important during the evaluation of the prototype and playtest. Before starting this review, it is important to think back to the design motivations and related design values established for the game. Taking the time to refresh the team’s hopes by looking back over the design values will help focus the team’s evaluation of the playtest.

Actions: Do the players understand what they can and cannot do when playing the game? Are the controls intuitive or easy to learn and master? Are players able to develop skill around the core actions of the game?

Goals: Do the players understand the game’s goals? Are players creating their own goals in addition to, or instead of, the intended ones? How is the game communicating the goals? Are the goals supported by the actions, objects, playspace, story, and so on?

Challenge: Is the game providing the right degree and kind of difficulty or push-back? Does the game keep players engaged? If the challenge comes from the subject, is it coming through during the play experience?

Information spaces: Are players able to make sense of the information provided by the game? Is there too much information given the pace of the play experience? Too little? Are players missing out on essential information?

Feedback: Is the loop between player actions and the game’s response clear? Can players interpret the outcomes of their actions with confidence?

Decision-making: Are players able to make decisions about how to pursue their goals and have the experience they seek?

Player perceptions: Does the way the playspace is represented support the intended play experience?

Contexts of play: Is the place where the game is played having an impact on player experience? How about the time of day? What else is going on around the play session?

Takeaways: Is the game conveying the intended message, concept, or experience?

Emotions: What emotions arise during play? Do they correspond to those hoped for?
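If the team tags each playtest note with one of the aspects listed above, a quick tally can show where the issues cluster. This is only a sketch under that assumption: the `Aspect` enum simply mirrors the list, and the `tally` helper and sample notes are hypothetical.

```python
from collections import Counter
from enum import Enum


class Aspect(Enum):
    """The aspects of the design listed above, used here as tags."""
    ACTIONS = "Actions"
    GOALS = "Goals"
    CHALLENGE = "Challenge"
    INFORMATION_SPACES = "Information spaces"
    FEEDBACK = "Feedback"
    DECISION_MAKING = "Decision-making"
    PLAYER_PERCEPTIONS = "Player perceptions"
    CONTEXTS_OF_PLAY = "Contexts of play"
    TAKEAWAYS = "Takeaways"
    EMOTIONS = "Emotions"


def tally(tagged_notes):
    """Count notes per aspect, most frequent first."""
    counts = Counter(aspect for _, aspect in tagged_notes)
    return [(aspect.value, n) for aspect, n in counts.most_common()]


# Illustrative (note, aspect) pairs; not real playtest data.
tagged = [
    ("Paddle controls feel too loose", Aspect.ACTIONS),
    ("Players jump instead of climb", Aspect.ACTIONS),
    ("Players miss the score changing", Aspect.FEEDBACK),
]

for name, count in tally(tagged):
    print(f"{name}: {count}")
```

A tally like this is a starting point for discussion rather than a verdict. A single note about goals may matter more than several about controls, so the counts should be read alongside the design values.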

Interpreting Observations

Once the team has captured the key observations of the playtest, it is time to think about what these observations say about the game’s design. This breaks down into two key categories: strengths and weaknesses, or even better, intended and unintended strengths and weaknesses. Often, players will respond to things in the game that you hadn’t anticipated or even intended to be part of the play experience. Take careful note of these moments for analysis. Strengths, intended or not, hopefully relate to the design values, but unintended ones may directly contradict them. Keeping the design values in mind as you discuss what you saw is very helpful, as it gives you another marker for evaluation.

Sometimes player responses that seem negative are actually what you were hoping to achieve. Maybe players said the game is too hard, which was intentional, or maybe they lament it is too short, but that was by design. With innovative design, there can often be a tension between player expectations and the design motivations and design values of the game. This particularly comes into play around genre conventions and the expectations players develop around how games will play. Bending and breaking convention is a hallmark of experimental and innovative game design, but it can also be a sign of poorly conceived design. Finding the right balance between intentional innovation and design oversights is a real challenge. Design values are always helpful in reminding the team of what they hope to achieve with a game and whether player expectations are in line with or outside the focus of the game.

We taught a course in game design to a group of high school students once, and after a playtest, one of them said their playtesters “played it wrong.” Of course, that’s one way of looking at the weaknesses in your game that are surfaced in a playtest, but if they played it “wrong,” it’s likely that there’s something in the design that needs to be addressed. Or, it could be that the way players played it is right, and perhaps more intuitive and fun than the way it was designed to be played. Either way, these revelations in a playtest are invaluable. This sounds counterintuitive—players exposing weaknesses is gold—but it’s true. When it becomes apparent something is not working, you can fix it. A playtest helps give clarity about what works and what doesn’t. And it shows the path for improving the game.

As with the strengths of the game, look for intended and unintended problems. Some of that will be anticipated, but there will inevitably be concerns previously not seen. And that is a good thing, as it is always better to find out while problems can still be addressed. Taking the time to focus on the strengths and weaknesses in your prototype will help get to the next step: refining the game.
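The split into intended and unintended strengths and weaknesses can be sketched as a simple two-by-two grouping. This is a simplification that assumes each finding can be reduced to two yes-or-no judgments; the `Finding` class, its fields, and the sample entries are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    note: str
    positive: bool   # did players respond well to it?
    intended: bool   # did the team design for it?


def quadrant(finding):
    """Name the quadrant a finding falls into."""
    kind = "strength" if finding.positive else "weakness"
    prefix = "intended" if finding.intended else "unintended"
    return f"{prefix} {kind}"


def group_findings(findings):
    """Group finding notes by quadrant."""
    groups = defaultdict(list)
    for f in findings:
        groups[quadrant(f)].append(f.note)
    return dict(groups)


# Illustrative findings only; not real playtest data.
findings = [
    Finding("Players laughed at the same moment", positive=True, intended=False),
    Finding("Players said the game is too short", positive=False, intended=True),
    Finding("Players could not find the climb action", positive=False, intended=False),
]

for name, notes in group_findings(findings).items():
    print(f"{name}: {notes}")
```

An entry such as the "too short" complaint lands in the "intended weakness" group, which is a prompt to check it against the design values: if the length is by design, the team may decide it is not a problem to fix at all.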

Conceptualizing Solutions

We have all this feedback now. How do we turn it into actual changes we want to make to the game’s design? This is where we return to conceptualize, the first step in the iterative design cycle. Many of the techniques are the same as outlined in Chapter 9, “Conceptualizing Your Game”—brainstorming, checking in on design motivations, and design values—but the context is different. With a full cycle of prototyping, playtesting, and evaluation complete, we now have seen some aspect of our game through our players’ eyes. We have experienced what works and what doesn’t in the game’s design, or at least the aspects of it captured in the prototype. Because of this, we have much more specific feedback to interpret, and we might need more time to identify what we need to change in our prototype. So we recommend a six-part approach to making decisions about revisions to your game, whether it be early in the design process or later in the playable prototype phase: review, incubate, brainstorm, decide, document, and schedule.

Review

To make sure everyone knows what issues are being worked on, a two-step review process is always helpful. The first step is to review the strengths and weaknesses found during the playtest, making sure the team is clear on them and that there are no misinterpretations. The second step is to revisit the team’s design values to make sure that the way we translate the feedback into actual design tasks helps us get closer to the values that we identified in the beginning. Returning to the design values at every step of the way is like tuning an instrument or balancing the tires on a car: it keeps the design aligned as we make decisions and move forward.

Incubate

With the strengths, weaknesses, and design values in mind, we then mull over the specific issues. Sometimes the issues are interconnected, making a solution that much more complicated to determine. Often, we begin with some time for everyone to think through the problems on their own, writing down all their ideas for a given issue. This is a great way to let everyone think about solutions. How long everyone thinks on their own can vary. Sometimes we give ourselves 10 to 15 minutes. Sometimes we give ourselves a couple of days. Some people incubate best while running, or napping, or washing dishes. It really depends on the particular issues, where the game is in the design process, and other variables. The general rule, though, is the bigger the issue, the more time it can take to solve.

Brainstorm

Once the incubation phase is over, we gather to brainstorm ideas for strengthening our game. Capturing the ideas in a way everyone can see is always helpful. If in person, use a whiteboard, chalkboard, or a computer screen everyone can see. If remote, use a shared document, virtual whiteboard, or other shared method. These discussions should be inclusive, letting everyone share their ideas. (Remember the brainstorm rules?) Once all the ideas for each issue under discussion have been heard, it is time to start thinking about which solutions are best for the next prototype. Often, ideas combine or even lead to new ideas. Make sure all these are captured, too.

Here are a couple of pointers for brainstorming solutions to your design. You’ll likely come up with new ideas at this point to prototype and explore. That’s great, but be careful. This is when you run the risk of overloading your design. It’s key to separate the things that you absolutely need to do for your next prototype and playtest from the ones that are lower in priority. Sometimes the ideas that emerge here are great, but they’re not right for the current game. So write them down, and save them for a future project.

Decide

With all the options discussed and their merits weighed, the team should make decisions about which solutions to implement. How do you know which are the right choices? Sometimes it is obvious, and sometimes it is really hard to figure out. But always keep the design values in mind, and let them help decide what is best for the game. It is better to make a decision than to spend too much time debating among the team. Getting to the next implementable prototype is much better than holding out for the perfect idea, so put emphasis on actionable decisions that are in the spirit of the design values whenever possible.

Document

With decisions made, it is time to divide tasks among the team to realize the solutions. First, capture the revisions that need to be made to the game design document. Then translate this into a series of tasks. Make certain everyone knows what they are responsible for. Using a tracking spreadsheet similar to what we described in Chapter 7, “Game Design Documentation,” is helpful for this.

Schedule

Once everyone knows what they should be doing, the team should agree on a schedule for getting to the next prototype. This may be a couple of hours, it may be a couple of days, or it may even be a couple of months, depending on how big the game is, where you are in the process, and other commitments team members have outside the project. Again, a tracking spreadsheet is really helpful in working through all the details.
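The tracking spreadsheet mentioned in the Document and Schedule steps can be as simple as a CSV file with one row per task. The sketch below shows one possible layout and is not the format described in Chapter 7; the column names, owners, and dates are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass
from datetime import date


@dataclass
class Task:
    revision: str       # the design revision this task implements
    owner: str          # who is responsible for it
    due: date           # agreed date for the next prototype
    done: bool = False


def to_csv(tasks):
    """Render tasks as CSV text, earliest due date first."""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf,
        fieldnames=["revision", "owner", "due", "done"],
        lineterminator="\n",
    )
    writer.writeheader()
    for task in sorted(tasks, key=lambda t: t.due):
        row = asdict(task)
        row["due"] = task.due.isoformat()  # write dates as YYYY-MM-DD
        writer.writerow(row)
    return buf.getvalue()


# Illustrative tasks only; names and dates are assumptions.
tasks = [
    Task("Add between-match score screen", "Sam", date(2024, 3, 8)),
    Task("Tighten paddle controls", "Alex", date(2024, 3, 1)),
]

print(to_csv(tasks))
```

The resulting text can be saved to a file and opened in any spreadsheet program, so the whole team can see who owns each revision and when it is due.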

Summary

Evaluation is one of the more challenging and complex steps in the iterative game design process, but it’s also where your game gets better. It’s important to take your time, review the playtest feedback, and discuss and diagnose with your team. Consider your basic game design tools and the mechanisms by which players engage with your game as you do, and brainstorm solutions before jumping to conclusions. Of course, everything is guided by a review of your design values and documented so that you can stay on the path to finishing and releasing your game.

Following are the steps to evaluating your design:

1. Review: Look at the strengths and weaknesses exposed in the playtest, and review the design values to make sure the design is on track.

2. Incubate: With the strengths, weaknesses, and design values in mind, take some time to consider the feedback and possible solutions.

3. Brainstorm: Brainstorm ideas for strengthening the game, using techniques from Chapter 9.

4. Decide: With all the options discussed and their merits weighed, the team should make decisions about which solutions to implement.

5. Document: Capture the revisions that need to be made to the design document and schematics, and break them down into tasks for the task list.

6. Schedule: Once everyone knows what to do, the team should agree on a schedule for getting to the next prototype.