Pro HTML5 Accessibility: Building an Inclusive Web (2012)

CHAPTER 2

Understanding Disability and Assistive Technology

In this chapter, we will look at a range of different types of disability and examples of some of the assistive technology (AT) that is used by people with disabilities. This chapter will help you to gain a greater understanding of what it means to have a disability and how having a disability changes the way web content is consumed by a user of AT.

Understanding Your Users

One of the hardest things for developers who are trying to build accessible web sites is to successfully understand the needs of their users. You should consider the potential needs from a couple of different angles. First, you must think about the functional requirements of the users, such as the tasks they wish to achieve while using the site. That has an obvious impact on the functionality that the site requires, as well as the approach you should take toward information architecture.

Then there are the devices that your audience will use. Leaving aside AT for a moment, the growth of smartphone usage means that many of us now use the Web on the go, so the mobile space is really important.

Note There is a very tight mapping between accessibility and the mobile Web. So many of the development practices you bring to creating content for mobile devices are also really good for accessibility!

It’s All Me, Me, Me with Some People!

Many designers build things for themselves, or for their friends, whether consciously or unconsciously. However, there has been a greater awareness in the design community about accessibility over the past few years and a buzz about the challenge of building accessible interfaces that has captured the imagination of developers.

The following text will help you come to grips with what it means to have a disability and how this can affect how you perceive the world and interact with it. This understanding can be used to better understand how to accommodate the diverse range of interaction needs that people with disabilities have. It isn’t exhaustive, nor is it meant to be, but it should serve as a good introduction.

Note Keep in mind that you cannot reasonably be expected to know every detail about every kind of disability, every piece of AT, and the corresponding techniques for designing custom Web interfaces that will work with them! So don’t worry. Let common sense be your guide, and learn to embrace feedback, even if it initially appears to be negative. It’s really valuable! When one person has a problem trying to perform a task on your web site, it means that there are many more who are also having problems but just haven’t said anything. So be grateful that someone was grumpy enough to complain! By reacting positively, you will be able to improve the quality of your design projects as well as improve as a developer. So it’s a win-win situation. It might hurt your pride a little, but you’ll get over it.

We’ll look at some user-centered design techniques in Chapter 9, “HTML 5, Usability, and User-Centered Design,” but right now let’s look at some different disability types.

Overview of Blindness

There are many degrees of blindness. The common perception is that a blind person cannot see anything at all, but this is often not the case. A blind person might be able to make out some light, shapes, and other forms.

One friend of mine actually has good sight, but he is registered as legally blind because he has a severe form of dyslexia. The cognitive confusion his condition creates renders him functionally blind. So there are many interesting edge cases.

There are also reasons that a person has to be defined as officially having a disability. These reasons include such things as eligibility for state aid, welfare, and other services.

For example, in the US under the Social Security laws, a person is considered blind if the following applies:

“He has central visual acuity of 20/200 or less in the better eye with the use of a correcting lens.”1

In the UK, the Snellen Test is a common way of measuring visual acuity by using a chart with different sized letters in order to evaluate the sharpness of a person’s vision. If a person is being assessed for a vision impairment, the test looks specifically for

· Those below 3/60 Snellen (most people below 3/60 are severely sight impaired)

· Those better than 3/60 but below 6/60 Snellen (people who have a very contracted field of vision only)

· Those 6/60 Snellen or above (people in this group who have a contracted field of vision especially if the contraction is in the lower part of the field)2
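To see how these measurements relate to each other, it can help to treat a Snellen value as an ordinary fraction. The following is a minimal sketch in Python, not an official assessment tool. The function name uk_acuity_band and its labels are my own illustrative choices, the function ignores field of vision entirely, and placing a value of exactly 3/60 in the middle band is an assumption, because the list above doesn't say where it belongs.

```python
from fractions import Fraction


def uk_acuity_band(acuity):
    """Return which of the three Snellen bands listed above an acuity falls in.

    Illustrative only: a real assessment also considers the field of vision.
    """
    if acuity < Fraction(3, 60):
        return "below 3/60"
    if acuity < Fraction(6, 60):
        # Assumption: exactly 3/60 is treated as part of this middle band.
        return "3/60 to below 6/60"
    return "6/60 or above"


us_threshold = Fraction(20, 200)  # 20/200, distances measured in feet
uk_notation = Fraction(6, 60)     # 6/60, distances measured in metres

print(us_threshold == uk_notation)
print(uk_acuity_band(Fraction(2, 60)))
print(uk_acuity_band(us_threshold))
```

Both fractions reduce to 1/10, which is why the US figure of 20/200 and the UK figure of 6/60 describe the same sharpness of vision in different units.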

__________

1 www.who.int/blindness/causes/en/.

Note The definitions of disability vary from country to country, and there are international efforts to synchronize these definitions under initiatives such as the International Classification of Functioning, Disability and Health (ICF). This is a classification of health and health-related domains from body, individual, and societal perspectives. Classifications are determined by looking at a list of body functions and structure, as well as a person’s level of activity and participation. Because an individual’s functioning and disability occur in a context, the ICF also includes a list of environmental factors. The twin domains of activity and participation are a list of tasks, actions, and life situations that are used to record positive or neutral performance, as well as any activity limitations and participation restrictions that a person may encounter. These are grouped in various ways to give an overview of an individual’s level of ability in areas such as learning and applying knowledge, communication, mobility, self care, community, and social and civic life.

Some of the most common causes of blindness worldwide are the following:

· Cataracts (47.9%)

· Glaucoma (12.3%)

· Age-related macular degeneration (8.7%)

· Corneal opacity (5.1%)

· Diabetic retinopathy (4.8%)

· Childhood blindness (3.9%)

· Trachoma (3.6%)

· Onchocerciasis (0.8%)3

Note In terms of computers and the Web, blind users in particular are a group that have benefitted from technological developments in a very real way. The development of text-to-speech software has allowed blind users of AT to work at a broad range of jobs (and not all IT) and to participate strongly with various online communities. This all helps with the sense of inclusion a person feels.

__________

2 The definition also considers the angle of vision or visual field. You can find out more at www.ssa.gov/OP_Home/ssact/title16b/1614.htm.

3 www.who.int/blindness/causes/en/

Blindness and Accessibility

Most of the time when people think of Web accessibility, they think of blind people! You could be forgiven for thinking this was the case—because blind users are often the most vocal group online.

However, Web accessibility is not solely about blind computer users. When you read online discussions about both subjects, don’t fall into the trap of associating Web accessibility with only blind people and screen readers. The idea that “If it works with a screen reader, it is accessible” is only partially true. It is a little like saying, “Function x is defined in the HTML5 spec like this, therefore you should do it that way and it will just work.” In an ideal world, yes, it would work for sure, but in the real world you have to consider the browser, its support for any given functionality, and the user modality (the method of interaction: is the user sighted, a blind user of AT, and so on?).

There are other aspects to consider for users of more serial devices, such as single-button switches for people with limited mobility. A screen-reader user might have no problems navigating or interacting with a widget or with page content that could be very difficult for someone with limited mobility. Computer users with limited mobility will often tire very easily or even get exhausted tabbing through the 20 links that you thought were a good idea to include at the time!

So try not to just consider blind users (although they are, of course, very important) and screen readers when you are thinking about accessibility. However, because the screen-reading technology in use is very sophisticated, as you shall see later, there is a degree of complexity in both correctly serving content to these devices as well as using them.

Vision Impairment

There is a very broad range of vision impairments. Some of the most common are outlined here, with some photographic examples included that attempt to simulate what vision might be like for a person with the condition. It isn’t really the case that a person with vision impairment just can’t see very well. As these examples hope to illustrate, there can be greater problems for the person with the condition, depending on the impairment itself.

Figure 2-1 is a picture of my desktop before I created any of the simulations that follow.

Figure 2-1. My desktop, as seen by someone with relatively good vision

Glaucoma

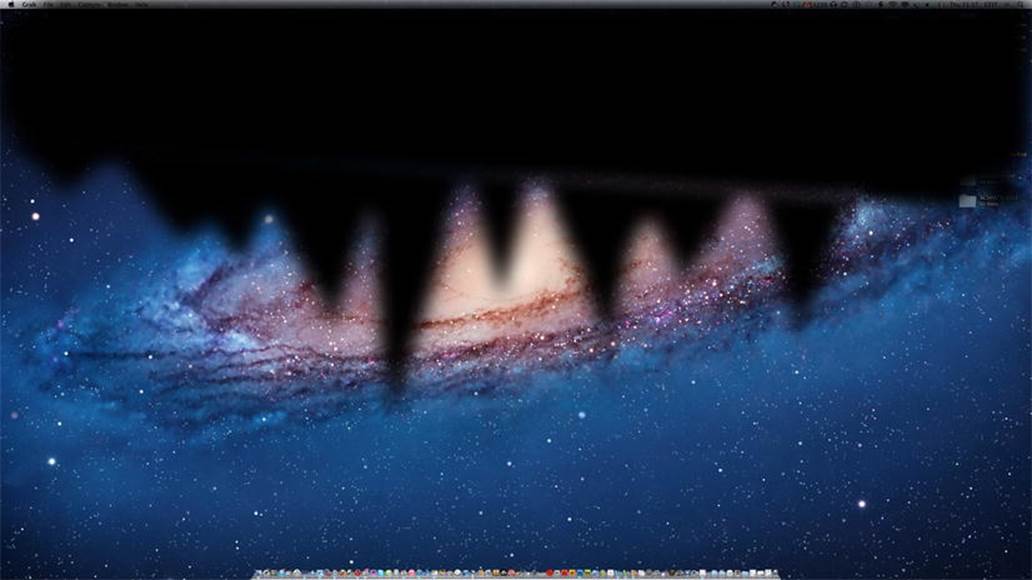

There are many causes for glaucoma, from simple aging to smoking. People with glaucoma might experience a total loss of their peripheral vision, as depicted in Figure 2-2. In the early stages, glaucoma can cause some subtle loss of color contrast, which can lead to difficulties seeing things around the environment or using a computer.

Figure 2-2. My desktop, as seen by someone with glaucoma (residual vision sample)

A person with peripheral sight loss might have difficulty seeing dynamic content updates if they are not near a particular element or widget. This can also be an issue for screen magnification users (more on this later).

Macular Degeneration

Macular degeneration is common among older people and causes a loss of vision in the center of the eye, as shown in Figure 2-3. This makes reading, writing, doing any kind of work on a computer, or performing up-close work very difficult. Recognizing certain colors can also be a problem.

Figure 2-3. My desktop, as seen by someone with macular degeneration (residual vision sample)

Retinopathy

This condition causes a partial blurring of vision or patchy loss of vision, as shown in Figure 2-4. It can be brought on by advanced diabetes. The person’s near vision may be reduced, and they may have difficulty with up-close reading.

Figure 2-4. My desktop, as seen by someone with retinopathy (residual vision sample)

Detached Retina

A detached retina can result in a loss of vision where the retina has been damaged. A detached retina might cause the appearance of dark shadows over part of a person’s vision, or the person might experience bright flashes of light or showers of dark spots. See Figure 2-5.

Figure 2-5. My desktop, as seen by someone with a detached retina

Note There are some useful online tools that can act as vision simulators, such as the one developed by Lighthouse International. The simulator is designed to help inform, educate, and sensitize the public about impaired vision. Using filters that simulate macular degeneration, diabetic retinopathy, glaucoma, hemianopia, and retinitis pigmentosa, over YouTube videos of your choice, the simulator suggests some of the visual problems that a person with eye conditions experiences every day.4

For a more immersive simulation experience, the University of Cambridge has developed a very useful set of glasses that are designed to simulate a general loss of the ability to see fine detail, but are not intended to represent any particular eye condition. This type of loss normally occurs with aging and the majority of eye conditions, as well as not wearing the most appropriate glasses. The Cambridge Simulation Glasses can help to

· Understand how visual acuity loss affects real-world tasks

· Empathize with those who have poor vision

· Assess the visual demand of a task, based on the level of impairment 5

__________

4 www.lighthouse.org/about-low-vision-blindness/vision-simulator/

Physical Disability

There are many kinds of physical disabilities that can manifest in different ways and range from the moderate to the more severe. Physical disabilities can be conditions present from birth, or they can be acquired later on in life due to accident.

Some common mobility problems include either a lack of physical control of movement or unwanted spasms, such as tremors. Often people with physical disabilities become easily exhausted and find many forms of movement very tiring.

When it comes to the use of a computer, people with physical disabilities often cannot use a mouse. The devices that are used (switches, joy sticks, and other AT, which we will look at shortly) can be a great help when trying to interact with a computer.

Some people with physical disabilities might not use any kind of AT but will find it hard or even impossible to use web sites and applications that are not keyboard accessible. In fact, ensuring your web sites are keyboard accessible is probably one of the single greatest things you can do to help users with physical disabilities. Doing this as a rule of thumb is a great idea and will also help to support users of switches and other serial input devices.
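One rough way to spot likely keyboard problems in your own markup is to look for elements that respond to a mouse click but cannot be reached with the TAB key. The sketch below is only a heuristic, written in Python with the standard library's html.parser module. The names ClickTargetAudit and find_mouse_only_controls are hypothetical, the check only sees inline onclick attributes (not handlers attached from JavaScript), and it is no substitute for actually tabbing through the page yourself.

```python
from html.parser import HTMLParser

# Elements that browsers place in the keyboard tab order by default.
NATIVELY_FOCUSABLE = {"button", "input", "select", "textarea"}


class ClickTargetAudit(HTMLParser):
    """Collects (tag, line) for click targets a keyboard user may not reach."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if "onclick" not in attributes:
            return
        tabindex = attributes.get("tabindex")
        # A negative tabindex takes the element out of the tab order.
        in_tab_order = tabindex is not None and not tabindex.strip().startswith("-")
        reachable = (
            tag in NATIVELY_FOCUSABLE
            or (tag == "a" and "href" in attributes)
            or in_tab_order
        )
        if not reachable:
            self.problems.append((tag, self.getpos()[0]))


def find_mouse_only_controls(html):
    """Return the click targets that look unreachable from the keyboard."""
    audit = ClickTargetAudit()
    audit.feed(html)
    audit.close()
    return audit.problems


sample = """<div onclick="openMenu()">Menu</div>
<button onclick="save()">Save</button>
<a href="/help" onclick="track()">Help</a>
<span onclick="closePanel()" tabindex="0">Close</span>"""

print(find_mouse_only_controls(sample))
```

In this sample only the div on line 1 is reported. The button and the link are focusable by default, and the span has been placed in the tab order with tabindex="0".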

Cognitive and Sensory Disabilities

Computer users with cognitive and sensory disabilities are probably the hardest to accommodate. This is pretty much because it is such a new area of research and there is little conclusive evidence about what works and what doesn’t.

Accessibility-related web-development techniques are also underspecified. However, as time goes on, there is a greater understanding of cognitive and sensory disabilities and there will be a greater suite of accessible development methods that can be used to accommodate this user group’s needs.

Some of the challenges that the designer faces when building interfaces that can be used by people with cognitive and sensory disabilities are outlined in the sections that follow.

Perception

This is a visual and auditory difficulty where certain shapes, forms, and sounds can be hard to recognize. Understanding how to serve the needs of users with perception difficulties can be hard, because it is difficult to know just how they might perceive certain items, such as unusual user interfaces.

Care must be taken when combinations of visual and auditory cues are used in rich media interfaces, because this can cause problems such as sequencing (which is explained in an upcoming section).

Consistent and clear design will certainly help, as will the use of distinctly designed components that clearly illustrate their inherent functionality and are easy to understand.

Memory and Attention

Problems with short-term memory and attention can have a profound impact on a person’s ability to perform the most basic tasks, and it can make using more complex technologies very challenging. People experiencing issues with memory loss can find that it severely impacts their ability to understand and react to user-interface feedback (such as form validation) and that it makes responding appropriately or in the required way in any given situation very difficult.

Chunking content into small, related blocks can be a great help to people with memory problems. This is where you divide related content and interface elements into groups of five to seven chunks, which is a useful method to aid comprehension as well as to help users with short attention spans.
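If you want a quick sanity check on your own content, you can count how many items end up in each group. This is a minimal sketch in Python under my own assumptions: the function name oversized_groups, the limit constant, and the sample navigation data are all hypothetical, and the code cannot judge whether the items in a group are actually related. That part remains a design decision.

```python
MAX_ITEMS_PER_GROUP = 7  # upper end of the "five to seven" range mentioned above


def oversized_groups(groups, limit=MAX_ITEMS_PER_GROUP):
    """Return {group name: item count} for every group larger than the limit."""
    return {
        name: len(items)
        for name, items in groups.items()
        if len(items) > limit
    }


navigation = {
    "Account": ["Profile", "Orders", "Addresses", "Payment", "Sign out"],
    "Help": ["FAQ", "Delivery", "Returns", "Sizing", "Contact",
             "Live chat", "Accessibility", "Privacy", "Terms"],
}

print(oversized_groups(navigation))
```

Here the "Help" group holds nine items, so it is the one flagged as a candidate for splitting into smaller chunks.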

Sequencing

Sequencing relates to the ability to associate auditory and visual cues over time, or knowing what steps are required to perform a given task. Difficulties with sequencing can be reduced by providing cues in the interface that, for example, will help your users understand when input is required.

Also, sequencing-related problems can be minimized if you avoid using unnecessary flashing content, animation, or movement that can be distracting to the user and divert their attention away from core functionality, or that can just be distracting when the user is trying to read. Many other users would also thank you for doing this, including me!

Dyslexia

The term dyslexia comprises a range of conditions that relate to difficulty interpreting words and numbers or math.

The use of clear, concise language for Web content can be a great help to people with dyslexia, as can the font you use. People with dyslexia have problems with characters that include ticks or tails, which are found in most serif fonts. The ascenders and descenders on some letters (such as the downstroke on p and the upstroke on b) can get mixed up, so the visual shape of the characters must be clear. Dyslexic people rely on this shape as a visual clue to help them distinguish one letter from another.

Some general advice on fonts to use can be found at Dyslexic.com, specifically www.dyslexic.com/fonts.

Note Comic Sans seems like a great font, but most designers would rather gnaw off their own leg than use it. A groovier and more designer friendly font is the rather nice “Dyslexie: A Typeface for Dyslexics.” This font was developed at the University of Twente in the Netherlands to help dyslexic people read more easily.

It’s based on the notion that many of the 26 letters in the standard Latin-based alphabet, as used in English, look similar—such as v/w, i/j and m/n. Thus, people with dyslexia often confuse these letters. By creating a new typeface where the differences in these letters are emphasized, it was found that dyslexic people made fewer errors.

There is also a really nice video on dyslexia at the following web site: www.studiostudio.nl/project-dyslexie.

What Is Assistive Technology?

The range of assistive technology (AT) devices and controls is really varied. There are also many definitions. I like this one:

“A term used to describe all of the tools, products, and devices, from the simplest to the most complex, that can make a particular function easier or possible to perform.”

US National Multiple Sclerosis Society

You might have noticed that disability isn’t explicitly mentioned at all, and this is important. You don’t think of your glasses or your TV remote controls as assistive technology, but that is exactly what they are.

Tip For a great introduction to AT, watch the AT boogie video by Jeff Moyer with animation by Haik Hoisington. It’s fun and educational, and it can be found at http://inclusive.com/assistive-technology-boogie.

Why can’t technology be used by many different people regardless of their ability? Can good design make this a reality? Good design should enable the user to perform a desired task regardless of the user’s ability.

As a designer or web developer, you don’t need in-depth knowledge of how assistive technology works. In fact, this range and depth of knowledge is likely difficult to achieve because it will take a lot of time and energy.

Many assistive-technology devices are serial input devices. They accept one binary input, on/off. Others are much more complex (like screen readers) and can be used in conjunction with the browser to do very sophisticated things and develop new interaction models. In the next section, we will look at screen readers—what they are, how they work, and how you can use them in your testing (with warnings) of the accessibility of your web sites.

You don’t really need an exhaustive understanding of every type of AT, but a good understanding of screen-reader technology is a valuable foundation for successful accessible design, regardless of the AT used.

What Is a Screen Reader?

Screen readers are mainly used by blind and visually impaired people, but they can also be beneficial to other user groups, such as people with dyslexia or people with literacy issues.

A screen reader will identify what is on the screen and output this data as speech. It is text-to-speech software that literally reads out the contents of the screen to a user as he or she gives focus to items on the screen and navigates by using the keyboard. Screen readers are used to interact with and control a PC, Mac, web browser, and other software.

Screen readers can work very well with the operating system of the host computer itself, and they can give a deep level of interaction with the computer, letting the user perform many complex system administration tasks. In fact, the screen reader usually performs much better when interacting with the host operating system because they are tightly integrated. It’s when the screen reader user goes online that problems often start. The online world isn’t a controlled or well-regulated place, so there are reasons why the safe and well-engineered environment of the operating system is generally much more accessible.

Screen readers can be used to simulate right-mouse clicks, open items, and interrogate objects. Interrogating an object really means to query it, rather like focusing on an object and asking it “What are you, or what properties do you have?” Screen readers also have a range of cursor types and can be used to navigate the Web and control the on-screen cursor, as well as simulate mouse-over events in Web environments that use JavaScript. In short, pretty much everything a sighted user can do can be done using a screen reader.

Note The term screen reader is quite misleading. A screen reader does much more than just read the screen. A more accurate term would be screen navigation and reading device because the software is used to navigate not only the user’s computer but the Web.

Many different screen readers are available, such as JAWS, Window-Eyes, the free open-source Linux screen reader Orca, and the free NVDA, as well as the constantly improving VoiceOver that comes already bundled with Mac OS X. There are others, such as Dolphin Supernova by Dolphin, System Access from Serotek, and ZoomText Magnifier/Reader from Ai Squared. Coming up next is an overview of some of the more commonly used screen readers. It’s not exhaustive coverage of the available products, and the different packages basically do the same thing. What people use is largely determined by budget and preference.

JAWS

JAWS for Windows is one of the most commonly used screen readers and is developed by Freedom Scientific in the US. JAWS stands for Job Access With Speech, and it’s an expensive piece of software that costs about $1,000 for the professional version.

There are also many JAWS scripts available, which expand its functionality to enable access to some custom interfaces and platforms. JAWS was originally a DOS-based program. It gained popularity due to its ability to use macros and quickly access content and functionality.

Around 2002, this ability was brought into the more graphical Windows environment with the addition of being able to navigate around a webpage using quick keys and giving focus to HTML elements like headings. This kind of functionality and user interaction with screen-reading technology has become a cornerstone of accessible web development.

JAWS functionality expanded as the years went by, with the ability to query the fonts used in a page, specify what web elements had focus, and more advanced features like tandem, which allows you to remotely use another person’s screen reader to access a computer. Tandem is very useful for troubleshooting and remote accessibility testing.

JAWS 12 saw the introduction of a virtual ribbon, for use with Microsoft Office and other applications, as well as the introduction of support for WAI-ARIA.

So How Does a Blind Person Access the Web and Use a Screen Reader?

The following will help you come to grips with how the screen reader is used, as well as help you if you decide to manually test your HTML5 interfaces with a screen reader.

First off, JAWS uses what’s called a virtual cursor when interacting with Web content. So you are not actually interacting directly with the webpage itself but with a virtual version or snapshot of the page that is loaded on a page refresh. The virtual cursor is also used for reading and navigating Microsoft Word files and PDF files, so some of the tricks you will learn here can be applied when navigating accessible offline documents.

Note With JAWS, interacting with Web content involves using an off-screen model (OSM), where HTML content from the page is temporarily buffered or stored and the screen reader interacts with that rather than the page directly. There are times, however, when the DOM is used, and other screen readers don’t use an off-screen model anymore because it is seen as slightly outdated and problematic. So interacting with the DOM directly is preferable. Don’t worry too much about this now because I’ll cover it in more detail in Chapter 4, “Understanding Accessibility APIs, Screen Readers, and the DOM.” The details of how screen readers use an OSM and interact with the DOM become important later when we talk about dynamic content and using JavaScript.

Starting with JAWS

The type of JAWS voice that you use—as well as its pitch, its speed, and the amount of punctuation that JAWS outputs—can be controlled via the Options menu > Voices > Voice Adjustment.

Tip You might also wish to make some adjustments like turning off the echo for when you are typing. In my experience, many blind users do this right away; otherwise, when you type a sentence, each character you input will be announced and that can get very annoying, very fast.

Many of the speech functions in JAWS are accessed via the numeric keypad. The INSERT key is very important also (commonly called the JAWS key), because it is used to access some more advanced functions when online.

The number pad is used to query text, and it controls how and what is read. The UP ARROW and DOWN ARROW keys move you up and down through on-screen content and read it, while the LEFT ARROW and RIGHT ARROW keys take you back and forward, respectively, through text.

Note To stop JAWS from talking at any time, press the CTRL key!

The most common keys for interacting with text are

· NUM PAD 5—Say character

· INSERT+NUM PAD 5—Say word

· INSERT+NUM PAD 5 twice—Spell word

· INSERT+LEFT ARROW—Say prior word

· INSERT+RIGHT ARROW—Say next word

· INSERT+UP ARROW—Say line

· INSERT+HOME (the 7 key) —Say to cursor

· INSERT+PAGE UP (the 9 key) —Say from cursor

· INSERT+PAGE DOWN (the 3 key) —Say bottom line of window

· INSERT+END (the 1 key) —Say top line of window

As mentioned earlier, the LEFT ARROW and RIGHT ARROW keys are used to move to and read the previous or next character, respectively. The UP ARROW and DOWN ARROW keys will allow you to move to and read the previous or next line, respectively. If you hold down the ALT key and press the UP ARROW or DOWN ARROW key, you go through a document by sentence. Or you can use CTRL to navigate a document by paragraph.

Using Dialog Boxes with JAWS

To shift between different programs you have open on your PC, you use the ALT+TAB and ALT+SHIFT+TAB keys to toggle forward and back, respectively. To navigate options in a dialog box, you use the TAB key to go forward and SHIFT+TAB to go back.

JAWS and the Web

JAWS provides a killer way to browse the Web easily. When you open your Web browser (for example, Internet Explorer, or IE), you can jump to a particular kind of HTML element on the page by pressing a single key. To find the headings on a page, press H; for tables, press T; for form controls, press F; and so on. Pressing any of these keys again announces and gives focus to the next element of that kind in the document source order. This is a fantastic way to browse the Web: you can use headings to navigate, jump over sections of content, and quickly give focus to whatever element you wish!

Note Being able to browse the Web as I just described is entirely dependent on the page having a suitable semantic structure for the assistive technology to use in the first place. If a webpage has no headings, for example, this method of browsing just won’t work. Given this, the important role that well-formed markup plays in accessibility, as discussed in the previous chapter, should be crystal clear.
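To make this dependence on semantic structure concrete, here is a minimal Python sketch. It is not how JAWS is implemented; it only collects a page's headings in source order, which is roughly the list a screen reader steps through each time H is pressed. The HeadingCollector class, the collect_headings function, and the two sample snippets are made up for illustration.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Collects (level, text) pairs for headings in document source order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None  # level of the heading being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._current = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._current is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._current is not None:
            self.headings.append((self._current, "".join(self._text).strip()))
            self._current = None


def collect_headings(html):
    """Return the headings found in `html`, in source order."""
    parser = HeadingCollector()
    parser.feed(html)
    parser.close()
    return parser.headings


# Hypothetical pages: one with real headings, one styled with plain divs.
structured = "<h1>Shop</h1><p>Welcome</p><h2>Offers</h2><h2>Contact</h2>"
unstructured = "<div class='big'>Shop</div><div>Offers</div>"

print(collect_headings(structured))    # three headings to jump between
print(collect_headings(unstructured))  # empty list: nothing for H to land on
```

The second page may look identical to a sighted user, but because it contains no heading elements there is nothing for heading navigation to find.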

Displaying HTML Items as Lists

Expanding on the preceding method of user interaction, JAWS can also be used to create lists of all the headings, links, and other HTML elements in a page and present them to the user in a dialog box the user can easily navigate through using the cursor keys.

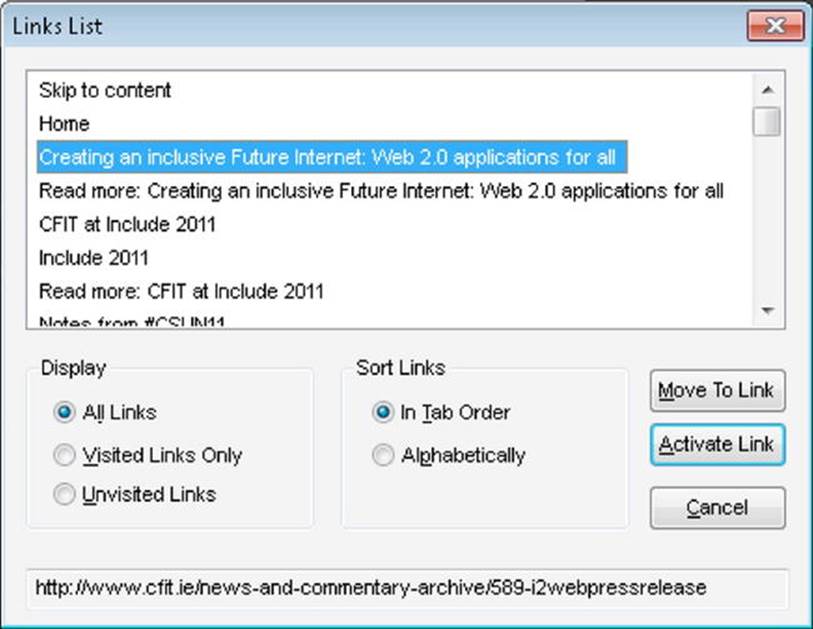

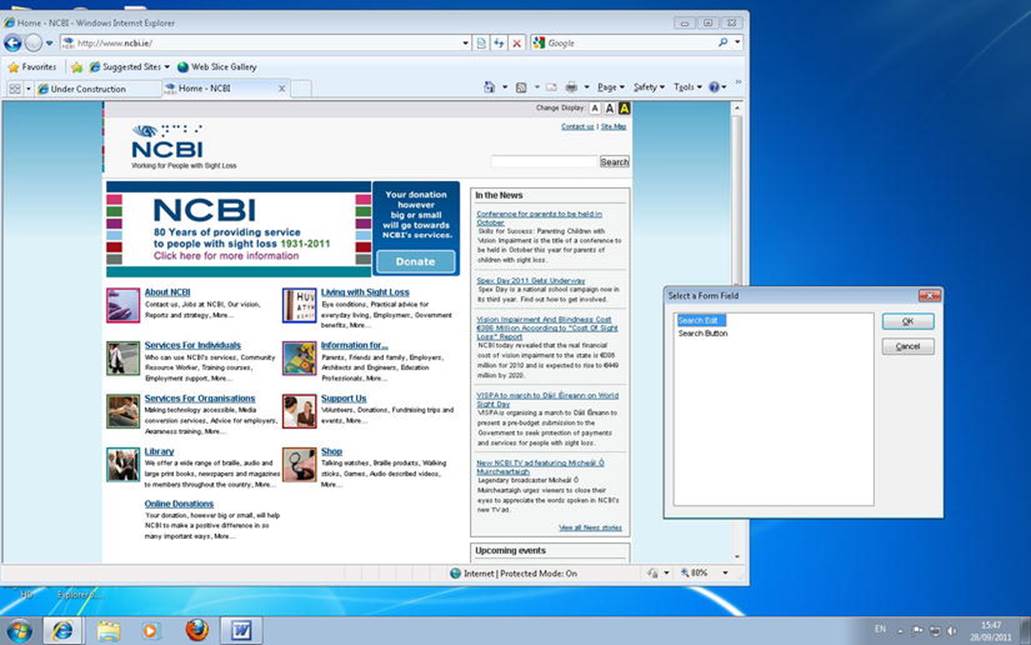

If you press the JAWS (INSERT) key and a corresponding function key, you get a list of HTML elements displayed nicely in a dialog box. For example, you can press INSERT+F7 to display a list of all links on the current page, as shown in the dialog box in Figure 2-6.

Figure 2-6. The Links List dialog box

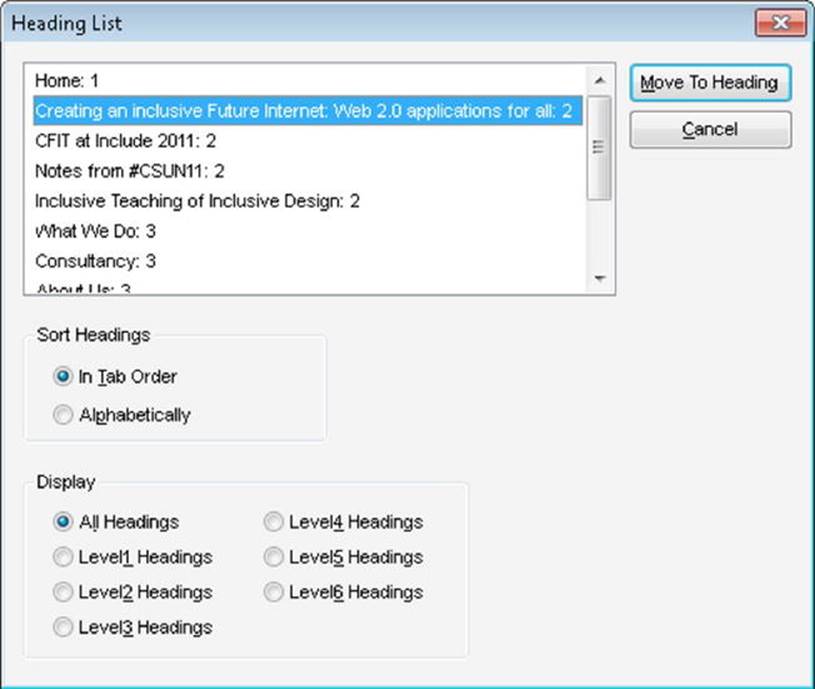

Another example is that you can press INSERT+F6 to display a list of all headings on the current page, as shown in Figure 2-7.

Figure 2-7. The Heading List dialog box

Or you can press INSERT+F5 to display a list of all form fields on the current page, as shown in Figure 2-8.

Figure 2-8. The Select A Form Field dialog box

You can then use the arrow keys to select an item, and press ENTER to activate or give it focus.

Note Once the dialog is open, you can also browse the list alphabetically—a really handy feature. So if you have a long list of links, rather than using the arrow keys to go through them one by one, if you know the name of the link you want you can press the key of its first letter and bounce to that link directly. So (once the link dialog box is open) if you want the Contact link, press C; if you want About Us, press A; or if you want Sales, press S; and so on.
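The links list and its first-letter navigation can be sketched in a few lines of Python. This is only an illustration of the idea, under the assumption that pressing a letter moves to the next matching link and wraps around at the end of the list; the LinkCollector class, the links_list and jump_to_letter functions, and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the text of every <a href="..."> in source order."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._in_link = False
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a" and dict(attrs).get("href") is not None:
            self._in_link = True
            self._text = []

    def handle_data(self, data):
        if self._in_link:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            self.links.append("".join(self._text).strip())
            self._in_link = False


def links_list(html):
    """Return the link texts found in `html`, in source order."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


def jump_to_letter(links, letter, start=-1):
    """Index of the next link after `start` that begins with `letter`.

    Wraps around the end of the list (an assumption) and returns None
    when no link starts with that letter.
    """
    count = len(links)
    for step in range(1, count + 1):
        index = (start + step) % count
        if links[index].lower().startswith(letter.lower()):
            return index
    return None


# Hypothetical navigation bar.
page = (
    '<a href="/">Home</a> <a href="/about">About Us</a> '
    '<a href="/sales">Sales</a> <a href="/contact">Contact</a> '
    '<a href="/support">Support</a>'
)
links = links_list(page)
first_s = jump_to_letter(links, "s")            # lands on "Sales"
second_s = jump_to_letter(links, "s", first_s)  # lands on "Support"
print(links[first_s], links[second_s])
```

The sketch also shows why link text matters: a page full of links that all read "Click here" gives this kind of navigation nothing useful to work with.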

JAWS and Forms

As mentioned earlier, JAWS uses a virtual cursor for interacting with webpages. The screen reader can be thought of as not interacting with the page directly, apart from when the user needs to input some data, such as with a form. This is when the virtual cursor is switched off, and JAWS disables the quick navigation keys and functions and enters Forms Mode.

Tip The quick navigation features that were described earlier are turned off when you are in Forms Mode because you need the keys to type! When using the JAWS virtual cursor (which is the default when browsing the Web), the keyboard input is captured by the screen reader and used to navigate the Web.

In earlier versions of JAWS, when you moved into a form control and wanted to type or select a radio button or other element, you had to manually select Forms Mode. The newer versions of JAWS have an Auto Forms Mode, which is on by default. More advanced users can turn this feature off if they choose and go back to manually selecting Forms Mode in their screen reader. This can give users more control, because they can choose whether or not to enter Forms Mode rather than having the AT decide for them. It is largely a matter of preference; other screen readers, like VoiceOver, allow you to enter text in an input field as soon as it has focus and don’t have a user-controlled Forms Mode.

Note To manually control a form using JAWS, press F to move to the next form control on the page. Press ENTER to enter Forms Mode. Press the TAB key to move between form controls while in Forms Mode. Type in edit fields, select check boxes, and select items from lists and combo boxes. Press NUM PAD PLUS to exit Forms Mode.

There can be problems with the JAWS screen reader not being able to pick up on some content in forms because the virtual cursor is off. You can avoid most of these issues by making sure that your forms are well labeled and by keeping them clear and simple in layout and design. However, user testing your projects with people with disabilities is a great way of highlighting problems that you couldn’t anticipate. We’ll look at this in Chapter 9, “HTML5, Usability, and User-Centered Design.” At this stage, getting into the details of Forms Mode and the various cursors that are used is a little more of a post-graduate topic, but I will try to highlight some of these issues in Chapter 4, “Understanding Accessibility APIs, Screen Readers, and the DOM,” as well as in Chapter 8, “HTML5 and Accessible Forms.”
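As a rough idea of what "well labeled" means in practice, the following Python sketch lists form controls that have no label element pointing at them through a matching for/id pair. It is a deliberately narrow check, not a full audit: it ignores controls wrapped inside a label, as well as the aria-label, aria-labelledby, and title attributes. The LabelAudit class, the unlabeled_controls function, and the sample form are hypothetical.

```python
from html.parser import HTMLParser

LABELABLE = {"input", "select", "textarea"}
# Input types that do not need a visible text label of their own.
SKIPPED_INPUT_TYPES = {"hidden", "submit", "reset", "button", "image"}


class LabelAudit(HTMLParser):
    """Records <label for="..."> targets and the controls on the page."""

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # values of the for attribute
        self.controls = []          # (tag, id or None), in source order

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "label" and attrs.get("for"):
            self.label_targets.add(attrs["for"])
        elif tag in LABELABLE:
            input_type = (attrs.get("type") or "text").lower()
            if tag == "input" and input_type in SKIPPED_INPUT_TYPES:
                return
            self.controls.append((tag, attrs.get("id")))


def unlabeled_controls(html):
    """Return the controls that no <label for> points at."""
    audit = LabelAudit()
    audit.feed(html)
    audit.close()
    return [
        (tag, control_id)
        for tag, control_id in audit.controls
        if control_id not in audit.label_targets
    ]


# Hypothetical form: only the email field is properly labeled.
form = (
    '<form><label for="email">Email</label>'
    '<input type="text" id="email">'
    '<input type="text" id="phone">'
    '<select name="country"></select>'
    '<input type="submit" value="Send"></form>'
)
print(unlabeled_controls(form))
```

A check like this can flag obvious gaps early, but it is no substitute for listening to the form with a real screen reader.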

VoiceOver and the Mac

There have been huge improvements in the quality of the native, out-of-the-box VoiceOver screen reader that comes bundled with every Mac. It is safe to say the quality of the voice, its integration with the operating system, and its usefulness on the Web have resulted in many blind users making the switch to the Mac. The cost of the JAWS screen reader alone is equivalent to buying a lower spec Mac. Also, I have heard some blind friends say that they prefer it because it just “feels nicer.”

VoiceOver is also suitable for you, as a developer, to use in your testing of the accessibility of web sites. In some ways, it is preferable to using JAWS because you can start testing with it right out of the box and the learning curve isn’t as steep.

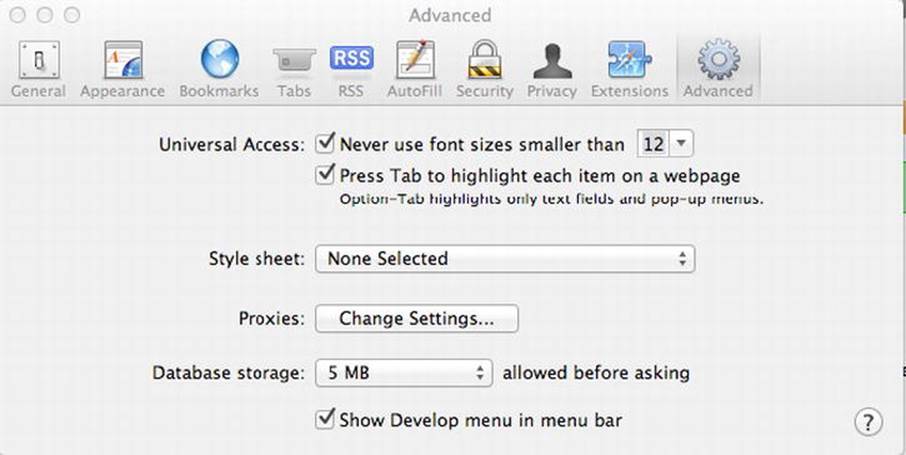

Before we look at VoiceOver in more detail, I need to mention something important that you really should do to configure your Safari browser. To be able to give focus to items on the page such as links (which is very important for accessibility testing), you need to enable this feature in the browser under Universal Access, as shown in Figure 2-9. This feature will work whether VoiceOver is on or off—it’s a pain that it’s not enabled by default.

Figure 2-9. You must select the Press Tab To Highlight Each Item check box

Note If you are vision impaired, VoiceOver can be used to configure your Mac straight out of the box. This is a really useful feature.

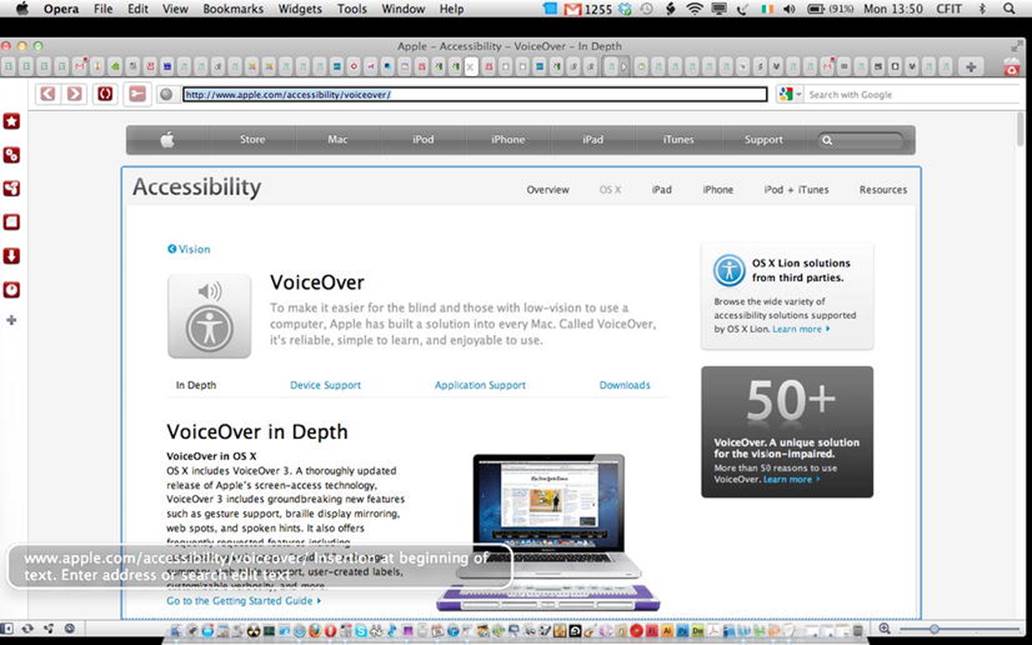

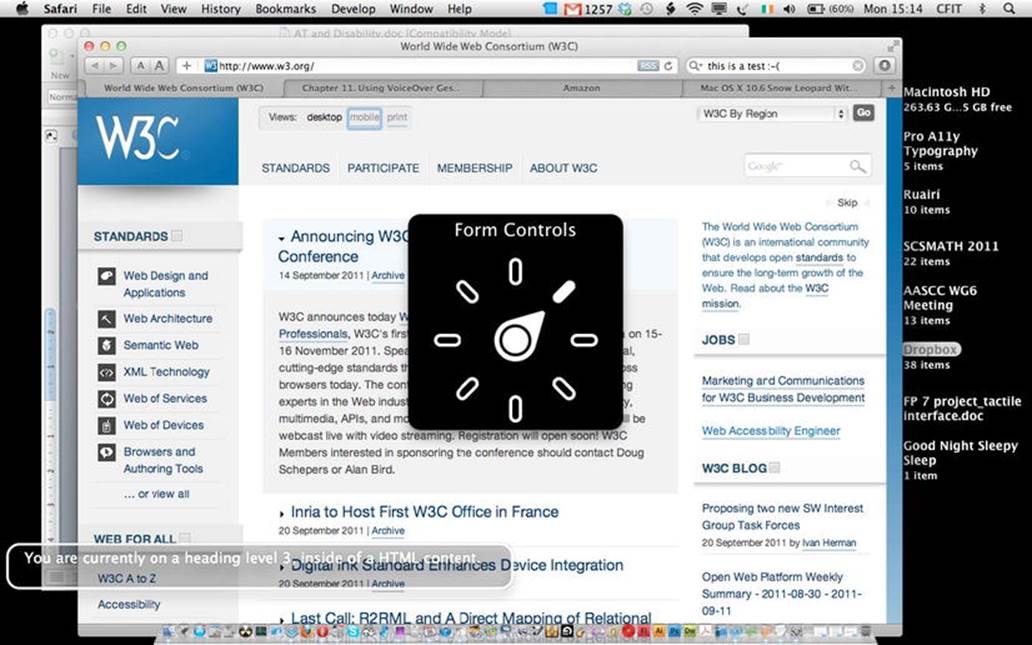

So where to start with VoiceOver? If you are running the Mac already, you can start VoiceOver by pressing CMD+F5. You’ll see a change to your screen on the bottom left, as shown in Figure 2-10.

Figure 2-10. VoiceOver screen reader dialog

This dialog box can be very helpful for testing, because it represents the text output of VoiceOver. So if you are a sighted person testing a page, you can get a visual heads up about what the screen reader will be outputting. Any items you focus on using the keyboard (or the trackpad or mouse) will also have their name (and sometimes certain properties) announced by VoiceOver, and you will be able to see this info in this box.

The Control (Ctrl) and Option (alt) keys are very important when using VoiceOver and are known as the VoiceOver or VO keys. You will pretty much need to press these in conjunction with other keys to get VoiceOver to do stuff, like bounce to a heading, go to links, and other such actions.

You can also assign VO commands to things like trackpad gestures and number pads to perform commonly used tasks with fewer keystrokes. You might want to emulate the swipe gesture to navigate between HTML items, such as when using the iPhone and VoiceOver (which is a really cool way to be able to browse the Web). I’ll say more on that a little later. The VoiceOver Utility is used to tweak the screen reader to your needs.

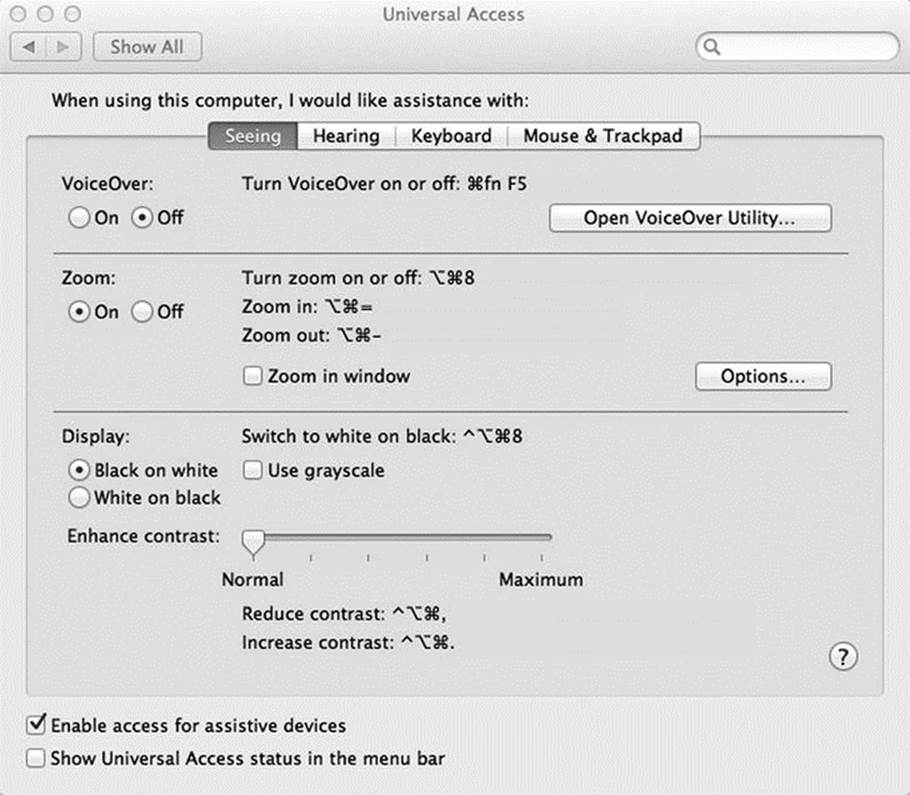

You access the VoiceOver Utility by selecting Universal Access in the System Preferences dialog box, as shown in Figure 2-11.

Figure 2-11. Accessing the Universal Access options

Then you will see the accessibility options relating to the Mac, as shown in Figure 2-12.

Figure 2-12. Universal Access options

Note There are several other accessibility features already on your Mac, such as a pretty good screen magnifier for people with impaired vision. (A screen magnifier is also available on every Windows PC and Linux box.) It’s worth spending some time getting familiar with them.

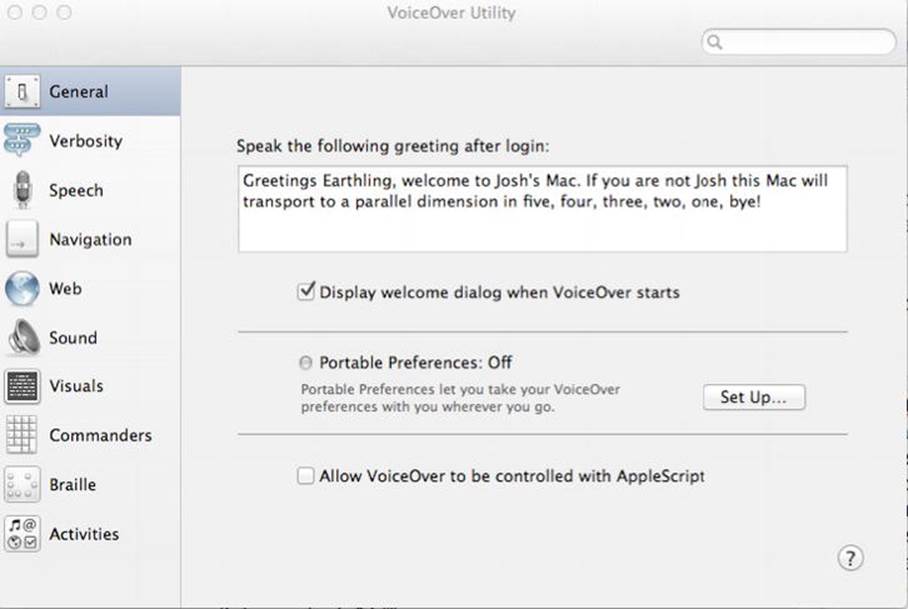

Opening the VoiceOver Utility will bring you into the area where you can customize your screen reader settings, as shown in Figure 2-13.

Figure 2-13. VoiceOver Utility

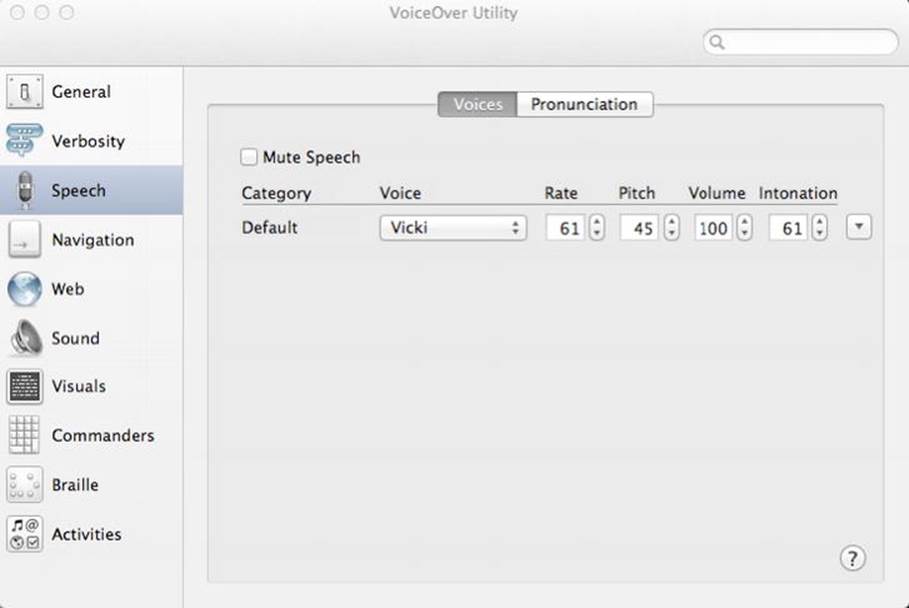

The VoiceOver Utility allows you to select the voice, pitch, speed, and other settings that you want to use. You might want to adjust the voice to speak to you fast or slow, depending on what you are doing. I really like the Vicki voice, set at the values shown in Figure 2-14.

Figure 2-14. VoiceOver Utility—speech configuration options

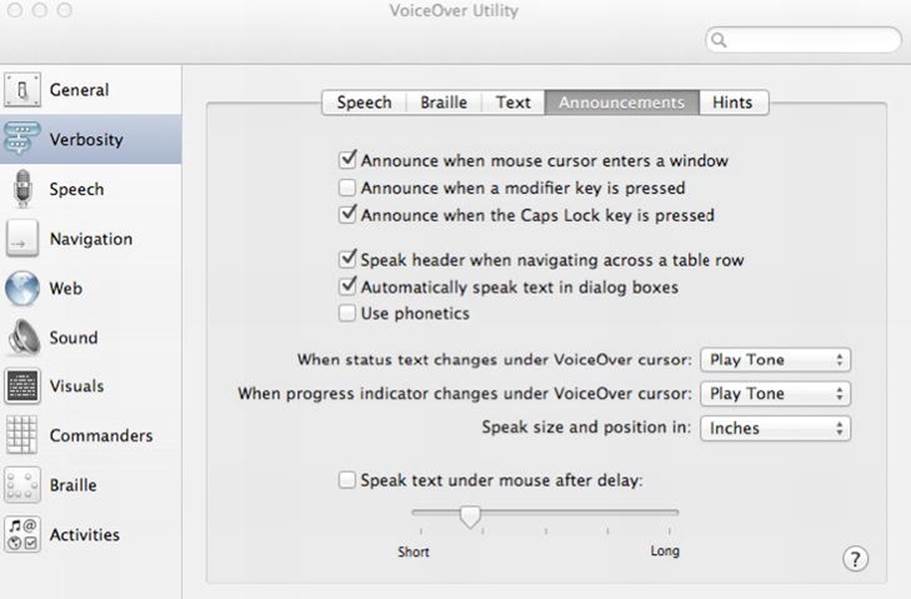

Note There are a couple of other features that you might want to tweak. For example, I like to switch off the Announce When A Modifier Key Is Pressed option and the Announce When The Caps Lock Key Is Pressed option. Although both are important when a visually impaired person is using the screen reader, when testing webpages for accessibility I find the first option really annoying because every time the shift key is pressed (if I am typing) “Shift” gets announced. These options are shown in Figure 2-15, which displays the Verbosity options.

Figure 2-15. VoiceOver Utility—Verbosity options

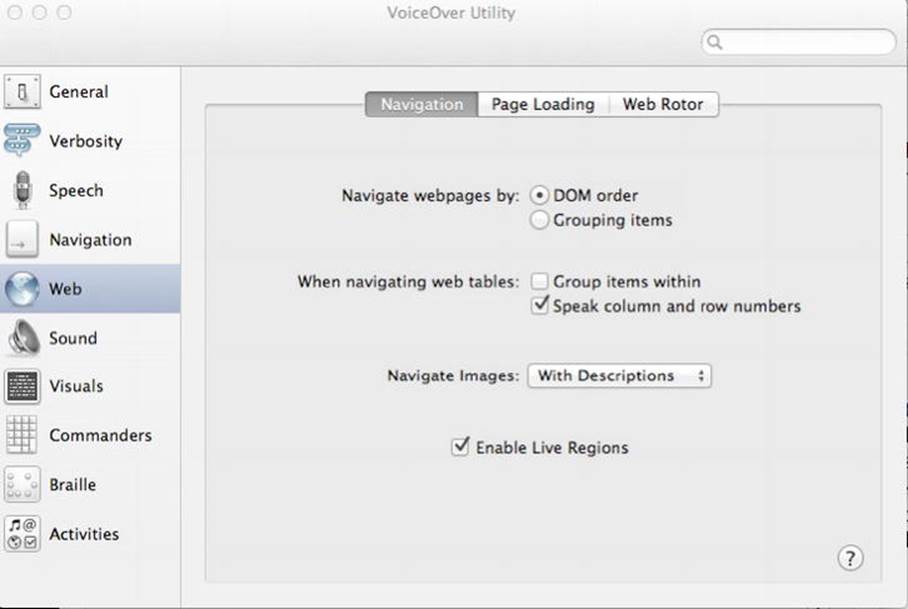

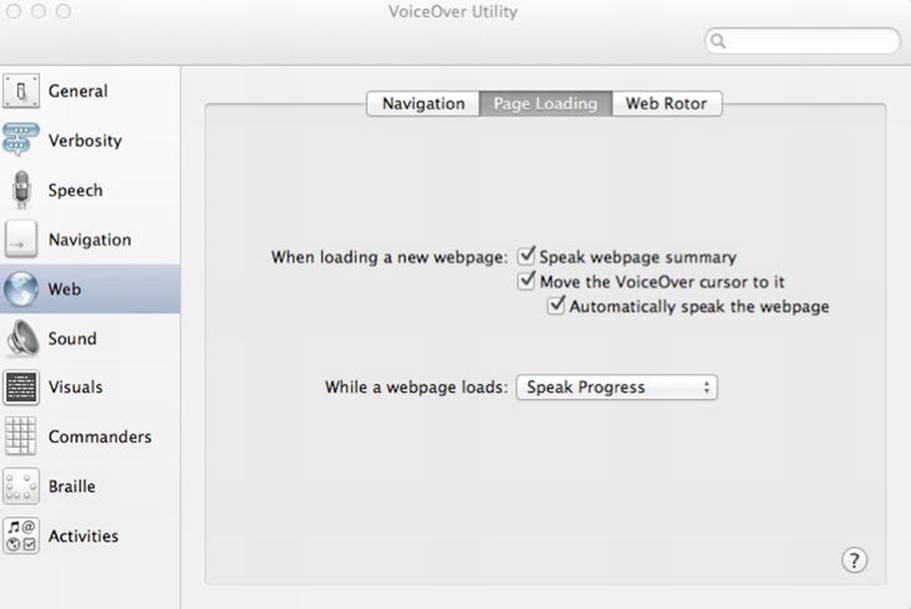

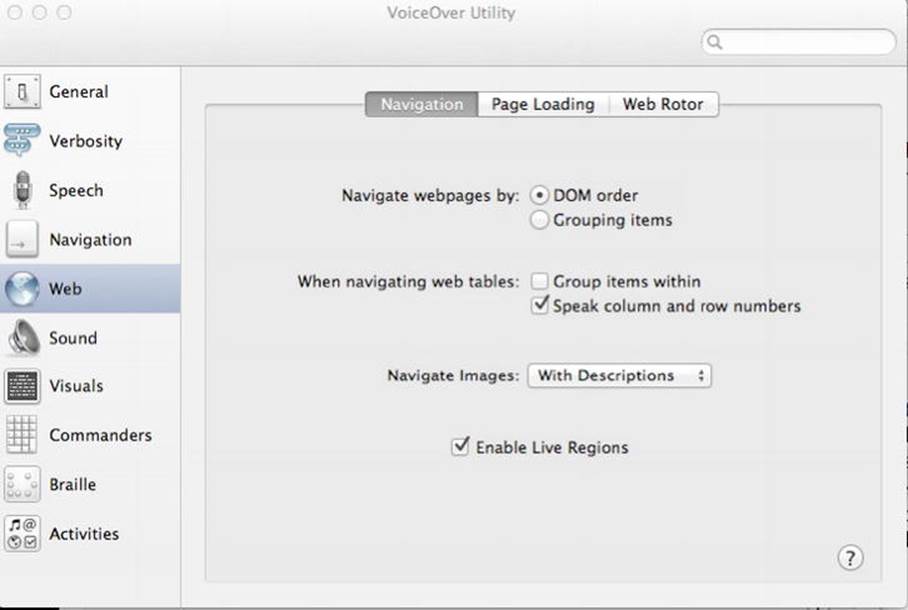

The Web options, shown in Figure 2-16, are also important. I recommend that you have a look and tweak them a little, because this might help with your testing and with browsing the Web.

Figure 2-16. VoiceOver—Web options

You can see three tabs in Figure 2-16: Navigation, Page Loading, and Web Rotor.

Tip VoiceOver offers a couple of ways to navigate webpages. The first is the default DOM Order, which allows you to navigate in the way I outlined previously, such as going from heading to heading or link to link. The navigation order is determined by the source order in which the items appear in the code. The second option is Grouping Items, which lets you use gestures like swiping left and right, or up and down, to get a spatial feel for a page and where items are positioned. Although this might be useful for some blind users, it's not really useful for a developer to test the accessibility of a page. So I would leave the DOM Order option selected.

I recommend leaving the Page Loading options, shown in Figure 2-17, as they are.

Figure 2-17. More VoiceOver Web options

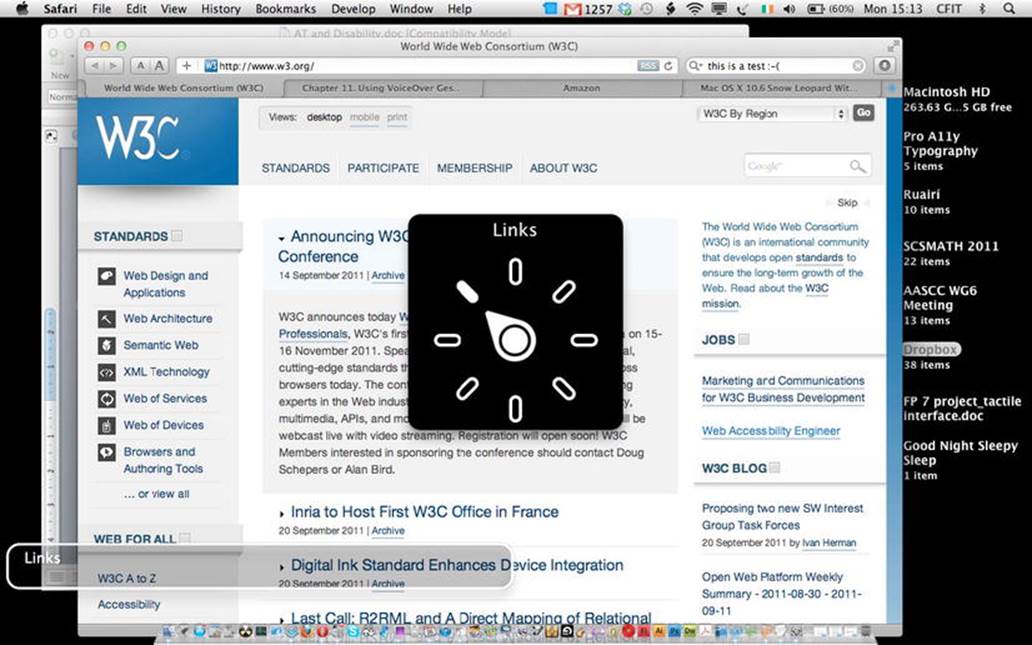

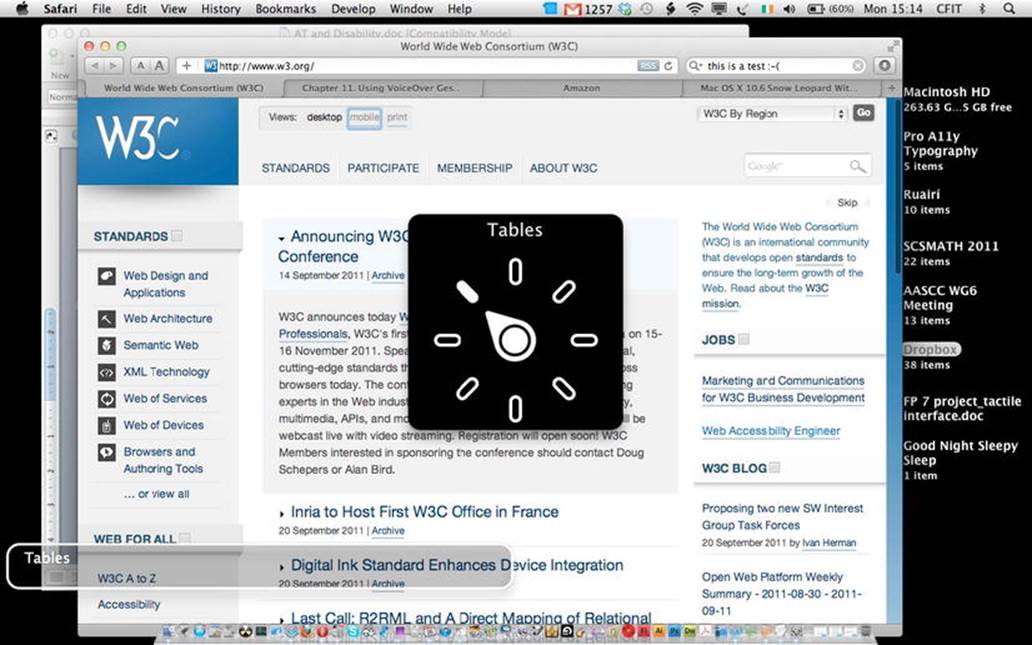

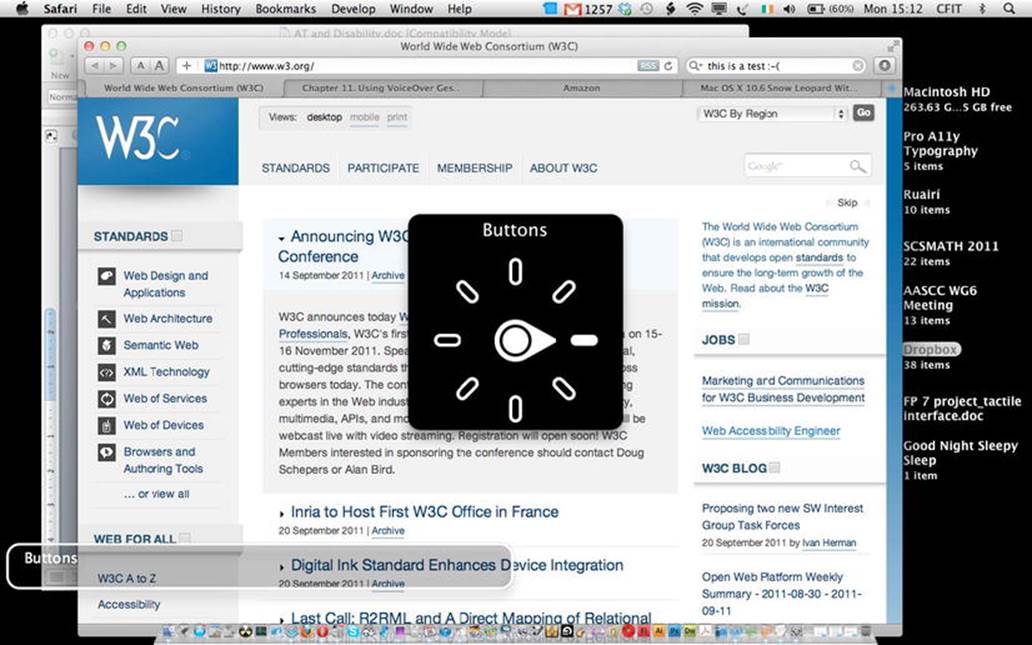

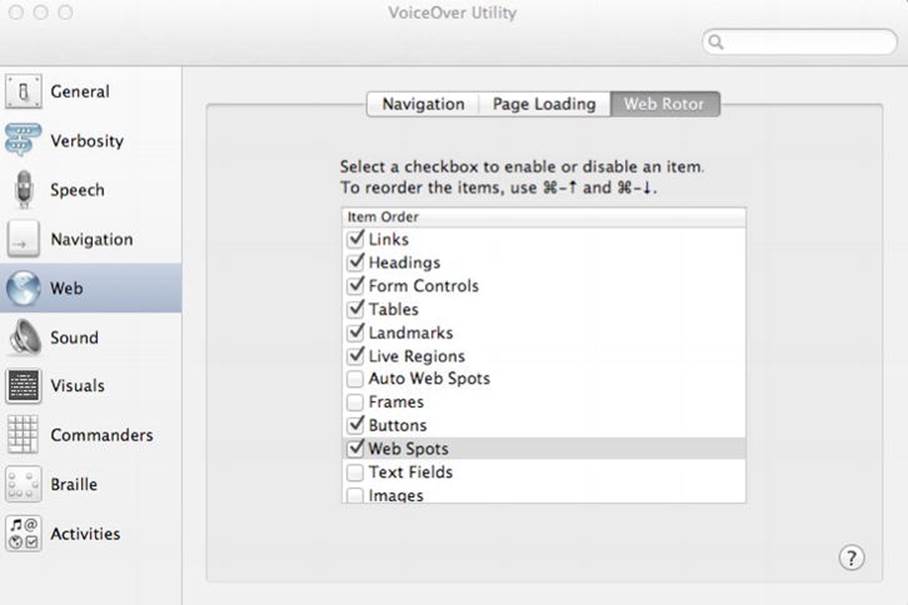

The last option we shall tweak slightly. This is the preferences pane for the Web Rotor, which is an important feature in VoiceOver for you to understand because it is at the core of the new wave of gesture-based interaction.

Using the Web Rotor

The Web Rotor acts as a way to use simple gestures to access certain kinds of HTML and other elements. As shown in Figures 2-18, 2-19, 2-20, and 2-21, it is a virtual dial that you access by pressing your thumb and your forefinger on the trackpad at, say, 6 o’clock and 12 o’clock, respectively, and then turning your fingers clockwise or counterclockwise. You will then see a dial appear that shows the various options for you to choose from as you continue to turn. When you have chosen a particular kind of content (such as links or headings), you can use a simple swipe gesture on the trackpad (to the right or left) to navigate. Each gesture will highlight the next item of that kind as it appears in the source code, outputting it as speech as you swipe. Pretty neat.

Figure 2-18. VoiceOver—Choosing links with the Web Rotor

Figure 2-19. Choosing tables with the Web Rotor

Figure 2-20. Choosing buttons with the Web Rotor

Figure 2-21. Choosing form controls with the Web Rotor

As you can see from those four screen shots, this is an elegant and clever solution that takes full advantage of using the trackpad and some simple gestures. As you play with it, you’ll see that it’s pretty easy to switch between content types and get a feel for how accessible the page is.

Note You must have Trackpad Commander enabled for this to work, and doing so will disable the click functionality of the trackpad. Also, this functionality works best with Safari.

You can customize the rotor so that you can tweak the order in which the rotor items appear, as well as what does or doesn’t appear.

Another adjustment I would make is to check that the Enable Live Regions check box is selected, as shown in Figure 2-22, and to add live regions to the rotor choices. Live regions and landmarks are a part of the WAI-ARIA specification. Live regions are areas of a page that update dynamically, for things like up-to-the-minute weather information, stock prices, and shares data, or anything else that is constantly updating. Landmarks are a way of distinguishing between sections of content like the header, a banner item, and so forth.

Figure 2-22. VoiceOver—Navigation options

Although Enable Live Regions is selected by default, it’s not enabled by default within the Rotor. So if you wish to see this feature in your Web Rotor preferences (and yes, it’s going to be useful), I recommend that you select Web Rotor in your VoiceOver Utility preferences and activate live regions and landmarks, as shown in Figure 2-23. In the figure, I have rearranged things slightly to suit my needs for accessibility testing.

Figure 2-23. Web Rotor display options
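The landmarks and live regions that the rotor can list come from WAI-ARIA attributes in the markup. As a rough illustration, this Python sketch collects elements that carry an explicit landmark role or an aria-live value of polite or assertive. It is a simplification: it ignores the implicit roles of HTML5 elements such as nav, and roles such as status or alert that imply a live region. The AriaCollector class, the find_aria function, and the sample page are hypothetical.

```python
from html.parser import HTMLParser

# Landmark roles defined by WAI-ARIA.
LANDMARK_ROLES = {
    "banner", "navigation", "main", "complementary",
    "contentinfo", "search", "form", "region",
}


class AriaCollector(HTMLParser):
    """Collects explicit landmark roles and aria-live regions."""

    def __init__(self):
        super().__init__()
        self.landmarks = []     # (role, tag), in source order
        self.live_regions = []  # (politeness, tag), in source order

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        role = (attrs.get("role") or "").lower()
        if role in LANDMARK_ROLES:
            self.landmarks.append((role, tag))
        live = (attrs.get("aria-live") or "").lower()
        if live in ("polite", "assertive"):
            self.live_regions.append((live, tag))


def find_aria(html):
    """Return (landmarks, live_regions) found in `html`."""
    collector = AriaCollector()
    collector.feed(html)
    collector.close()
    return collector.landmarks, collector.live_regions


# Hypothetical page with three landmarks and one live region.
page = (
    '<div role="banner">Acme</div>'
    '<div role="navigation"><a href="/">Home</a></div>'
    '<div role="main"><p id="price" aria-live="polite">Share price</p></div>'
)
landmarks, live_regions = find_aria(page)
print(landmarks)
print(live_regions)
```

If a page yields two empty lists here, the corresponding rotor categories are unlikely to have much to offer a VoiceOver user on that page.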

VoiceOver has tons of other great features, such as user-defined Web Spots. By pressing the VO keys, CMD and Shift, and the right brace (}), you can create a list of custom spots (or functions that might get used a lot on a webpage, for example) and then navigate to them using the rotor. This is very useful for repeated use of a page that you visit very often.

Note These examples are all relevant to Mac OS X Lion (10.7.1) and Safari 5.1. The Rotor can also be accessed by pressing the VO keys and U, and then navigated via the arrow keys. You can also start to type an HTML element’s name to get a list of items that can be accessed, rather like a clever search engine feature.

All of the features included with the JAWS screen reader (when using the Web, anyway) pretty much have their functional equivalent in VoiceOver, although there are subtle differences in the realization of the interface. They can’t really be considered exactly the same, because they operate in different ways and JAWS is the older kid on the block and therefore has some more advanced functions.

Tip The new version of VoiceOver that comes with Lion (10.7) has a handy new feature called QuickNav. It’s a way of interacting with the Rotor in a more direct fashion. Press the left and right cursor keys together to enter QuickNav mode. By pressing the left arrow and up arrow together, you can move the Rotor backward and then navigate the webpage by the chosen item with the up/down arrow keys. By using the right arrow and the up arrow, you can move the Rotor forward. For more information, as well as a video on how to use this feature, see www.apple.com/accessibility/voiceover.

Window-Eyes

GW Micro is the developer of Window-Eyes (for Windows operating systems), a screen reader that is similar to JAWS and worth a mention because it was one of the first to support WAI-ARIA. It operates slightly differently than JAWS in that it doesn’t use an off-screen model (OSM) and interacts with the DOM directly, but its core functionality is very similar. I have heard blind friends and colleagues express a preference for it because it is a little faster and more responsive. It is also much cheaper than JAWS, which is a good thing.

NVDA

The NVDA screen reader is also worth a mention because it has many positive things going for it (such as being completely free and open source). It also has strong support for WAI-ARIA.

It can also provide feedback via synthetic speech and Braille. NVDA allows blind and vision-impaired people to access and interact with the Windows operating system and many third-party applications.

Major highlights of NVDA include the following:

· Support for popular applications, including web browsers, email clients, Internet chat programs, and office suites

· Built-in speech synthesizer supporting over 20 languages

· Announcement of textual formatting where available, such as the font name and size, the text style, and spelling errors

· Automatic announcement of text under the mouse, and optional audible indication of the mouse position

· Support for many refreshable Braille displays

· Ability to run entirely from a USB stick or other portable media without the need for installation

· Easy-to-use talking installer

· Translated into many languages

· Support for modern Windows operating systems, including both 32-bit and 64-bit variants

· Ability to run on Windows logon and other secure screens

· Support for common accessibility interfaces such as Microsoft Active Accessibility, Java Access Bridge, IAccessible2, and UI Automation

· Support for Windows Command Prompt and console applications

NVDA has its own speech synthesizer that comes bundled with it (called eSpeak), but it can be plugged into common existing speech synthesis engines such as SAPI 4/5.

Screen Readers and Alternatives

If you don’t want to use a screen reader (and you may have good reasons, because it’s difficult to learn how to use them properly), you could try using a screen-reader emulator such as Fangs.5 Fangs is a plugin for Firefox that demonstrates what the output of a webpage would be to a user of JAWS. This approach, however, isn’t a silver bullet either, because it won’t give you a feel for the user experience of a blind person, which is what really learning the ropes of using a screen reader will do. Having said that, it’s a useful tool you can turn to if using a screen reader doesn’t go well.

Tip Here is a trick you can use with VoiceOver if you don’t want to listen to the entire speech output: VoiceOver displays the text that it is outputting as speech in a little window at the bottom of the screen. For developers, this is useful for understanding what the screen reader will output when any HTML element has focus.

In Chapter 4, “Understanding Accessibility APIs, Screen Readers, and the DOM,” we will take a more advanced look at how the screen reader works under the hood; this will help you understand just what is going on when you use a screen reader. Finally, in Chapter 10, “Tools, Tips, and Tricks: Assessing Your HTML5 Project,” we will further explore some common strategies used by blind people when they’re navigating the Web and outline how you might be able to simulate these browsing strategies in your accessibility testing with a screen reader. We’ll also look at some other tools you can use in your browser to understand what the screen reader “sees” under the hood.

Technologies for Mobile Device Accessibility

This short section on mobile device accessibility is designed to give you a brief introduction to the range of mobile devices that can be used by people with disabilities—in particular, vision impairments. It doesn’t go into developing for these devices, as many of them require platform-specific apps, though they can of course consume your HTML5 content also.

__________

5 www.standards-schmandards.com/projects/fangs/

VoiceOver and the iPhone

The functionality of VoiceOver on the iPhone is pretty much the same as on the Mac. There are a couple of very neat things that it does, however. For example, it has a new way of selecting items on the iPhone: a person using VoiceOver moves her finger over the screen, and any object under her finger is announced. When an onscreen item is active (has been announced) and the user has her finger on the item, tapping anywhere else on the screen will select it.

That’s really clever, and while it can take a little getting used to, it makes a lot of sense. Typing out text messages and emails can be a lot slower than with a dedicated keyboard, but external keyboards can be added. I have also seen a blind friend use a dedicated Braille input device.

The standard swipe gestures, as well as the Web Rotor, also work the same as on the MacBook Pro. You might even find yourself using VoiceOver outside on a sunny day when listening to iTunes. It can be very useful even for a sighted person when it’s hard to see the screen.

Note It is truly amazing to see the quantum leap that is the iPhone. In accessibility terms, it was a total game-changer that introduced a whole new interaction model using touch and gestures. If anyone had told me even four or five years ago that a touch screen device with one button would be used successfully by blind and vision-impaired people as an input device, I would have said they were crazy.

Talks and Symbian

For many years, the main mobile screen reader was Talks. It could be used on Symbian phones (such as Nokia) as a way of accessing the phone, the phone’s functionality, and so forth. It is still reasonably popular but has lost a lot of ground due to the popularity of other, newer mobile operating systems.

RIM and BlackBerry Accessibility

The BlackBerry was never really considered a very accessible device, but that is about to change. I have heard positive things about RIM’s initiative to improve the accessibility of the BlackBerry platform.

Here are some of the accessibility features that are available for the new wave of BlackBerry smartphones:

· Clarity theme for BlackBerry smartphones—These include a simplified Home screen interface, large text-only icons, and a high-contrast screen display.

· Visual, audible, and vibration notifications of incoming phone calls, text messages, emails, and so forth.

· Customizable fonts—You can increase the size, style, and weight of fonts.

· Audible click—A confirming audio tone useful for navigating using the trackpad or trackball.

· Reverse contrast—You can change the device display color from dark on light to light on dark.

· Grayscale—You can convert all colors to their respective shades of gray.

· Browser zoom—A screen-magnification function.

A range of other enhancements on the platform are designed to serve the needs of users with mobility, cognitive, and speech impairments. So it looks really promising. Another big plus is that Aaron Leventhal, who was the chief accessibility architect at Mozilla and was driving Firefox accessibility, is now the senior accessibility product manager at RIM and will bring a lot of skill and experience to the platform.

Android

The Android platform is gaining ground in usage, and as of version 1.6 there have been built-in platform features for people with vision impairments. However, it still requires that you download the appropriate software and configure the phone. This can be a little complicated, but in principle a visually impaired user can access just about any function, including making phone calls, text messaging, emailing, web browsing, and downloading apps via the Android Market.

Caution Talkback is a free screen reader developed by Google. It is important to be aware that to get the most out of using these screen readers, you must use a phone that has a physical navigational controller, for navigation through applications, menus, and options. This can be a trackball, trackpad, and so on. It’s also a good idea to select a phone that has a physical keyboard, because there is very limited accessibility support for touch-screen devices.

I am a fan of the Android platform. I love its customizable nature and its flexibility, and you can twiddle away with it for hours on end. However, from an accessibility perspective, the whole experience leaves a lot to be desired—especially on a touch-screen device. There is a night-and-day difference between the complexity involved in configuring and enabling accessibility for an Android device and the out-of-the-box accessibility experience of the iPhone. The iPhone is far superior, which is a pity because the Android platform has much to recommend it. You can use various screen readers with Android, for example, and there are other useful accessibility features. However, in terms of the user experience, it is currently just very fiddly and is not for the faint hearted.

Speech Synthesis: What Is It and How Does It Work?

Now that you’ve had an introduction to how to use a screen reader, how about a little background on what’s actually going on under the hood?

Text that is to be output as speech is first broken down into very small atomic components called phonemes. These are the building blocks of spoken language (and yes, they are smaller than syllables). For example, the English alphabet has 26 characters, but spoken English uses about 40 phonemes.

The main kinds of synthesis used to actually create the voice output are these:

· Formant synthesis

· Concatenative synthesis

· Articulatory synthesis

Of the three, formant is the earliest used and most common. It is also the easiest method for quickly creating recognizable sounds because formants are real-time generated sounds that represent the main frequency components of the human voice. Formants occupy a rather narrow band of the frequency spectrum (just like the human voice) and are created by combining these commonly used frequencies at various levels of amplitude.

Formant synthesis has a distinct advantage over the other types of synthesis because it can be used to output text at quite high speeds and still be intelligible and understandable, which is more difficult with either concatenative or articulatory synthesis. However, formant-based speech output can sound rather robotic.
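The idea of combining a few characteristic frequencies at different amplitudes can be sketched in Python. This follows the simplified description above and is not how a production synthesizer works (real formant synthesizers typically pass a pitched or noisy source signal through resonant filters). The formant frequencies are approximate textbook values for an "ah"-like vowel, and the relative amplitudes, the sample rate, and the synthesize_vowel function are assumptions chosen for illustration.

```python
import math

SAMPLE_RATE = 16000  # samples per second (an arbitrary choice for this sketch)

# Approximate formant frequencies in Hz for an "ah"-like vowel, each paired
# with an illustrative relative amplitude.
AH_FORMANTS = [(730, 1.0), (1090, 0.5), (2440, 0.25)]


def synthesize_vowel(formants, duration_s=0.25, sample_rate=SAMPLE_RATE):
    """Sum one sine wave per formant and normalise the result to [-1, 1]."""
    total_amplitude = sum(amplitude for _, amplitude in formants)
    sample_count = int(duration_s * sample_rate)
    samples = []
    for i in range(sample_count):
        t = i / sample_rate
        value = sum(
            amplitude * math.sin(2 * math.pi * frequency * t)
            for frequency, amplitude in formants
        )
        samples.append(value / total_amplitude)
    return samples


samples = synthesize_vowel(AH_FORMANTS)
print(len(samples), "samples generated")
```

Because the sound is computed on the fly rather than played back from recordings, speeding it up is just a matter of generating fewer samples per sound, which is one reason this approach copes well with fast speech rates. Writing the samples to a WAV file with Python's standard wave module would let you hear the (very robotic) result.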

Concatenative synthesis is when a database of pre-recorded sounds is used to represent the text output as speech. It can result in a more human voice, but it has some disadvantages—such as loss of clarity at high-speed output (which is important for many screen-reader users who have a preference for high-speed speech output).
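In its simplest form, concatenative synthesis is a lookup followed by a join. The Python sketch below assumes a tiny, made-up database in which each phoneme maps to a short list of sample values standing in for a recording; the phoneme symbols, the lexicon, and the concatenate function are all hypothetical, and real systems use far larger units and smooth the joins between them.

```python
# Hypothetical unit database: phoneme symbol -> placeholder "recording".
UNIT_DATABASE = {
    "K": [0.1, 0.3],
    "AE": [0.5, 0.6, 0.5],
    "T": [0.2, 0.0],
}

# Hypothetical pronunciation lexicon: word -> sequence of phoneme symbols.
LEXICON = {
    "cat": ["K", "AE", "T"],
    "tack": ["T", "AE", "K"],
}


def concatenate(word):
    """Join the stored recordings for each phoneme of `word`, in order."""
    samples = []
    for phoneme in LEXICON[word]:
        samples.extend(UNIT_DATABASE[phoneme])
    return samples


print(concatenate("cat"))
print(concatenate("tack"))
```

The same three recordings produce both words; only the order changes. It also hints at the speed problem mentioned above: the recordings have a fixed natural length, so playing them much faster means distorting them.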

Articulatory synthesis uses a model that copies the human vocal tract and how sounds are actually created. It is more complex, but it leads us nicely into some of the other models, such as HMM-based synthesis, which try to predict what will be coming next on the basis of the previous or current output. These models do this by using hidden Markov models, which is where the abbreviation HMM comes from (and which brings us into the territory of probabilistic modeling, so let’s leave it there!).

Note If you’d like more information, there is an interesting working group looking at HMM-based speech synthesis systems, which you can find at http://hts.sp.nitech.ac.jp. You can also find some real-time HMM generators there.
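
For a rough feel of the probabilistic idea, here is a toy sketch of one prediction step in a hidden Markov model. Everything in it is an assumption made for illustration: the two hidden states, the "loud" and "quiet" outputs, the probabilities, and the function name. It is not taken from any real HMM-based speech system.

```python
# Hypothetical two-state model. Hidden states are not observed directly;
# each state emits an observable output with some probability.
TRANSITION = {  # P(next state | current state)
    "vowel": {"vowel": 0.25, "consonant": 0.75},
    "consonant": {"vowel": 0.5, "consonant": 0.5},
}
EMISSION = {  # P(output | state)
    "vowel": {"loud": 0.75, "quiet": 0.25},
    "consonant": {"loud": 0.25, "quiet": 0.75},
}


def predict_next_output(belief):
    """Given P(current state), return P(next output).

    Step 1: push the belief through the transition table.
    Step 2: weight each state's emissions by that next-state belief.
    """
    next_state = {
        target: sum(belief[source] * TRANSITION[source][target] for source in belief)
        for target in TRANSITION
    }
    outputs = {"loud": 0.0, "quiet": 0.0}
    for state, probability in next_state.items():
        for output, emit_probability in EMISSION[state].items():
            outputs[output] += probability * emit_probability
    return outputs


if __name__ == "__main__":
    # Start out certain that the current hidden state is "vowel".
    print(predict_next_output({"vowel": 1.0, "consonant": 0.0}))
```

The point is only that the model never states what comes next outright; it produces probabilities for what is likely to come next, based on where it believes it is now.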

Screen Magnification

Screen-magnification software is used by people who are vision impaired. These products literally magnify the screen, in whole or in part. This magnified view is then the principal view for the user and can be moved around the screen with the mouse or another device.

You might be aware that this kind of feature is already a part of the Windows operating system and Mac OS X and be wondering why someone would spend their hard-earned money on a dedicated software package. The difference between a dedicated package and the feature in your operating system is one of quality and clarity, and it is very noticeable when you compare a screen-magnification product like SuperNova or ZoomText with the in-built operating system products. The fact is that when you use (and really push) the magnification features of your operating system, you can get artifacts and, more importantly, blurred text that can become illegible at high magnification levels.

A screen-magnification package like SuperNova or ZoomText will redraw the screen at a higher resolution. What’s more, such packages also have other in-built features that provide high-quality anti-aliasing, so any redrawn text that is viewed at high magnification levels is much sharper and clearer. While the in-built screen-magnification features of your chosen operating system can certainly be used by someone with a mild to moderate vision impairment, more severely vision-impaired people will very often need to use an off-the-shelf package.

Note One thing to be aware of when you are building web sites and applications is whether changes to parts of the page can actually be seen by someone using screen-magnification software. A rule of thumb is to avoid things like having a control on the far left of your page that updates something on the far right when activated, because the user can easily miss the change when he or she is looking at a rather narrow part of the screen. It might be more accurate to say that you should avoid such updates when the user might not expect them. If the command updates a shopping cart or similar item, it’s probably fine because the user is primed to expect this to happen. The pattern becomes a problem when the change is unexpected, because the screen magnifier’s view is often rather small. So just bear that in mind.
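
The rule of thumb in the note comes down to simple geometry, sketched below. The sketch assumes full-screen magnification, looks only at the horizontal axis, and uses hypothetical function names and pixel values; real magnifiers offer several view modes and tracking behaviors.

```python
def magnified_viewport(screen_width, zoom, focus_x):
    """Return (left, right) of the screen region visible at `zoom`.

    The region is centered on `focus_x` and clamped to the screen edges.
    """
    visible_width = screen_width / zoom
    left = min(max(focus_x - visible_width / 2, 0), screen_width - visible_width)
    return left, left + visible_width


def is_visible(element_left, element_right, viewport):
    """Return True if any part of the element falls inside the viewport."""
    left, right = viewport
    return element_left < right and element_right > left


if __name__ == "__main__":
    # A 1920-pixel-wide screen at 4x zoom shows only 480 pixels at a time.
    view = magnified_viewport(screen_width=1920, zoom=4, focus_x=100)
    print("Visible region:", view)
    print("Nearby status message seen:", is_visible(200, 400, view))
    print("Far-right update seen:", is_visible(1600, 1900, view))
```

With the user focused on a control near the left edge, an update placed close to that control stays inside the visible region, while an update on the far right of the page falls completely outside it.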

Switch Access

Enhanced informational design is also good for users with very limited physical mobility or movement. Users with physical disabilities often use a device called a switch, shown in Figure 2-24, to interact with their computer and access the Web.

Figure 2-24. A variety of single-button switches