Node.js in Action (2014)

Part 3. Going further with Node

Chapter 13. Beyond web servers

This chapter covers

· Using Socket.IO for real-time cross-browser communication

· Implementing TCP/IP networking

· Using Node’s APIs to interact with the operating system

· Developing and working with command-line tools

Node’s asynchronous nature enables you to perform I/O-intensive tasks that might be difficult or inefficient in a synchronous environment. We’ve mostly covered HTTP applications in this book, but what about other kinds of applications? What else is Node useful for?

The truth is that Node is tailored not only for HTTP, but for all kinds of general-purpose I/O. This means you can build practically any type of application using Node, such as command-line programs, system administration scripts, and real-time web applications.

In this chapter, you’ll learn how to create real-time web servers that go beyond the traditional HTTP server model. You’ll also learn about some of Node’s other APIs that you can use to create other kinds of applications, like TCP servers or command-line programs.

We’ll start by looking at Socket.IO, which enables real-time communication between browsers and the server.

13.1. Socket.IO

Socket.IO (http://socket.io) is arguably the best-known module in the Node community. People who are interested in creating real-time web applications, but have never heard of Node, usually hear about Socket.IO sooner or later, which then brings them to Node itself. Socket.IO allows you to write real-time web applications using a bidirectional communication channel between the server and client.

At its simplest, Socket.IO has an API very similar to the WebSocket API (http://www.websocket.org), but has built-in fallbacks for older browsers where such features did not yet exist. Socket.IO also provides convenient APIs for broadcasting, volatile messages, and a lot more. These features have made Socket.IO very popular for web-based browser games, chat apps, and streaming applications.

HTTP is a stateless protocol, meaning that the client is only able to make single, short-lived requests to the server, and the server has no real notion of connected or disconnected users. This limitation prompted the standardization of the WebSocket protocol, which specifies a way for browsers to maintain a full-duplex connection to the server, allowing both ends to send and receive data simultaneously. WebSocket APIs allow for a whole new breed of web applications utilizing real-time communication between the client and server.

The problem with WebSocket is uneven browser support: although the protocol has been standardized (RFC 6455) and many browsers ship with it, there are still a lot of older versions out there that don’t, especially of Internet Explorer. Socket.IO solves this problem by utilizing WebSocket when it’s available in the browser, and falling back to other browser-specific tricks to simulate the behavior that WebSocket provides, even in older browsers.

In this section, you’ll build two sample applications using Socket.IO:

· A minimal Socket.IO application that pushes the server’s time to connected clients

· A Socket.IO application that triggers page refreshes when CSS files are edited

After you build the example apps, we’ll show you a few more ways you can use Socket.IO by briefly revisiting the upload-progress example from chapter 4. Let’s start with the basics.

13.1.1. Creating a minimal Socket.IO application

Let’s say you want to build a quick little web application that constantly updates the browser in real time with the server’s UTC time. An app like this would be useful to identify differences between the client’s and server’s clocks. Now try to think of how you could build this application using the http module or the frameworks you’ve learned about so far. Although it’s possible to get something working using a trick like long-polling, Socket.IO provides a cleaner interface for accomplishing this. Implementing this app with Socket.IO is about as simple as you can get.

To build it, you can start by installing Socket.IO using npm:

npm install socket.io

The following listing shows the server-side code. Save it as clock-server.js for now; you can try it out once you have the client-side code as well.

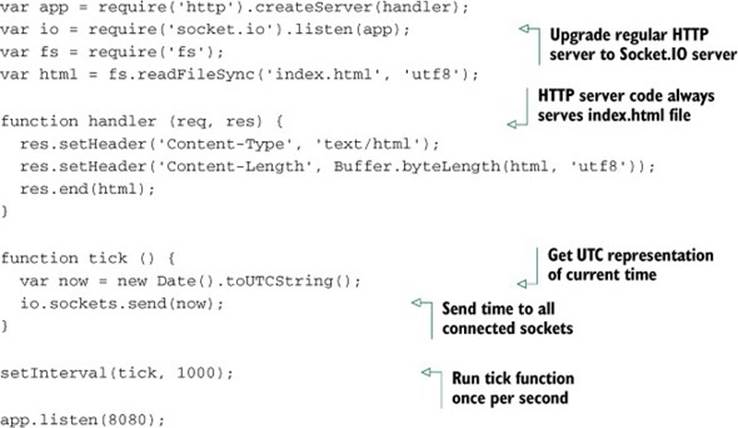

Listing 13.1. A Socket.IO server that updates its clients with the time

As you can see, Socket.IO minimizes the amount of extra code you need to add to the base HTTP server. It only took two lines of code involving the io variable (which is the variable for your Socket.IO server instance) to enable real-time messages between your server and clients. In this clock server example, you invoke the tick() function once per second to notify all the connected clients of the server’s time.

The server code first reads the index.html file into memory, so you need to create that file now. The following listing shows the client side of this application.

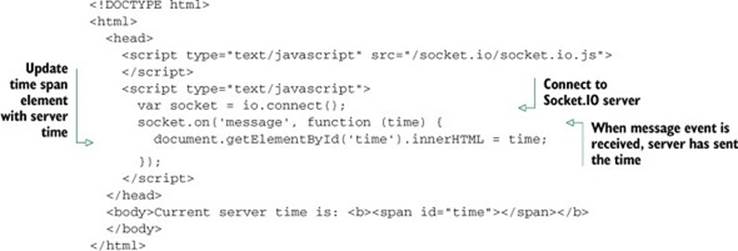

Listing 13.2. A Socket.IO client that displays the server’s broadcasted time

Trying it out

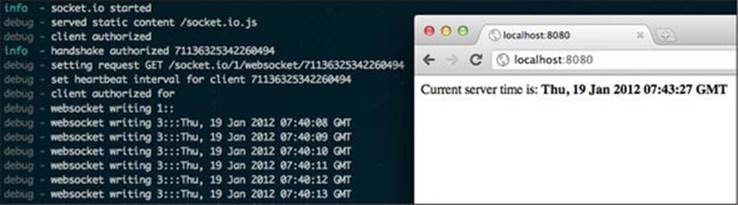

You’re now ready to run the server. Fire it up with node clock-server.js and you’ll see the response “info - socket.io started.” This means that Socket.IO is set up and ready to receive connections, so open up your browser to the URL http://localhost:8080/. With any luck, you’ll be greeted by something that looks like figure 13.1. The time will be updated every second from the messages received from the server. Go ahead and open another browser at the same time to the same URL, and you’ll see the values change together in sync.

Figure 13.1. The clock server running in a terminal window with a client in a browser connected to the server

Just like that, you have real-time communication between the client and server with just a few lines of code, thanks to Socket.IO.

Other kinds of messaging with Socket.IO

Sending a message to all the connected sockets is only one way that Socket.IO enables you to interact with connected users. You can also send messages to individual sockets, broadcast to all sockets except one, send volatile (optional) messages, and a lot more. Be sure to check out Socket.IO’s documentation for more information (http://socket.io/#how-to-use).

Now that you have an idea of the simple things that are possible with Socket.IO, let’s take a look at another example of how events sent from the server can be beneficial to developers.

13.1.2. Using Socket.IO to trigger page and CSS reloads

Let’s quickly take a look at the typical workflow for web page designers:

1. Open the web page in multiple browsers.

2. Look for styling on the page that needs adjusting.

3. Make changes to one or more stylesheets.

4. Manually reload all the web browsers.

5. Go back to step 2.

One step you could automate is step 4, where the designer needs to manually go into each web browser and click the Refresh button. This is especially time-consuming when the designer needs to test different browsers on different computers and various mobile devices.

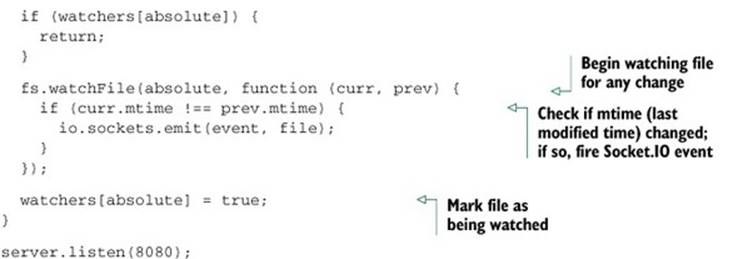

But what if you could eliminate this manual refresh step completely? Imagine that when you save the stylesheet in your text editor, all the web browsers that have that page open automatically reload the changes in the CSS sheet. This would be a huge time-saver for devs and designers alike, and Socket.IO, matched with Node’s fs.watchFile and fs.watch functions, makes it possible in just a few lines of code.

We’ll use fs.watchFile() in this example instead of the newer fs.watch() because we’re assured this code will work the same on all platforms, but we’ll cover the behavior of fs.watch() in depth later.

fs.watchFile() vs. fs.watch()

Node.js provides two APIs for watching files: fs.watchFile() (http://mng.bz/v6dA) is rather expensive resource-wise, but it’s more reliable and works cross-platform. fs.watch() (http://mng.bz/5KSC) is highly optimized for each platform, but it has behavioral differences on certain platforms. We’ll go over these functions in greater detail in section 13.3.2.
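To make the polling approach concrete, here is a minimal sketch of watching a single stylesheet with fs.watchFile(). The helper name watchStylesheet, the 500 ms interval, and the onChange callback are assumptions made for illustration; they are not taken from the chapter’s listing.

```javascript
var fs = require('fs');

// Hypothetical helper: watch one file with fs.watchFile() and call
// onChange(filename) whenever its modification time moves forward.
function watchStylesheet(filename, onChange) {
  function listener(curr, prev) {
    // fs.watchFile() polls the file with stat(); comparing the two
    // mtimes tells you whether the file was edited between polls.
    if (curr.mtime.getTime() > prev.mtime.getTime()) {
      onChange(filename);
    }
  }

  // interval is the polling period in milliseconds (arbitrary choice).
  fs.watchFile(filename, { interval: 500 }, listener);

  // Return a function that stops watching, so the process can exit.
  return function stop() {
    fs.unwatchFile(filename, listener);
  };
}

// Example usage (assumes a styles.css file exists next to the script):
// var stop = watchStylesheet('styles.css', function (name) {
//   console.log('%s changed', name);
// });
```

In a setup like the one described here, the callback is where you would emit a custom event to the connected browsers.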

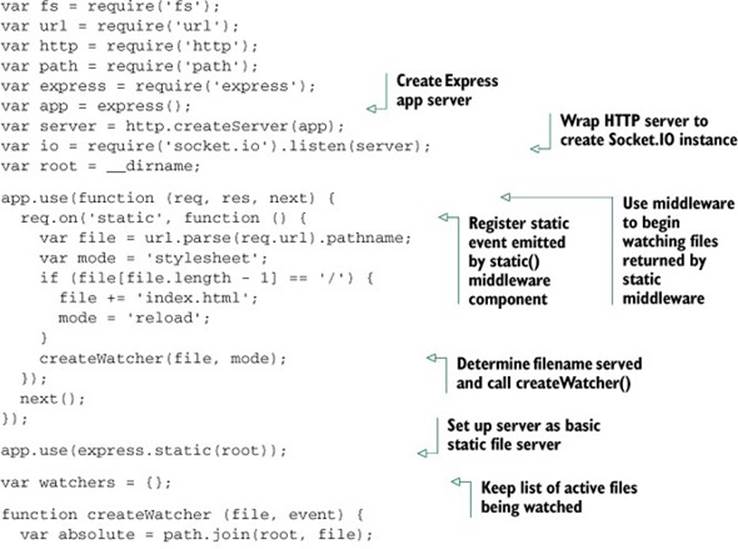

In this example, we’ll combine the Express framework with Socket.IO. They work together seamlessly, just like the regular http.Server in the previous example.

First, let’s look at the server code in its entirety. Save the following code as watch-server.js if you’re interested in running this example at the end.

Listing 13.3. Express/Socket.IO server that triggers events on file change

At this point you have a fully functional static file server that’s prepared to fire reload and stylesheet events across the wire to the client using Socket.IO.

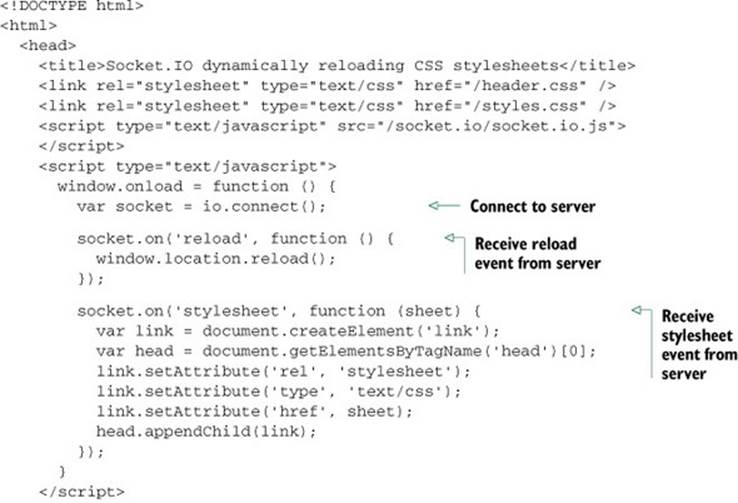

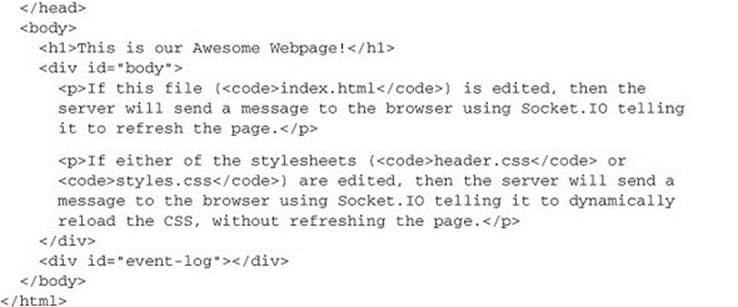

Now let’s take a look at the basic client-side code. Save this as index.html so that it gets served at the root path when you fire up the server next.

Listing 13.4. Client-side code to reload stylesheets after receiving server events

Trying it out

Before this will work, you’ll need to create a couple of CSS files, header.css and styles.css, because the index.html file loads those two stylesheets when it loads.

Now that you have the server code, the index.html file, and the CSS stylesheets that the browser will use, you can try it out. Fire up the server:

$ node watch-server.js

Once the server has started, open your web browser to http://localhost:8080 and you’ll see the simple HTML page being served and rendered. Now try altering one of the CSS files (perhaps tweak the background color of the body tag), and you’ll see the stylesheet reload in the browser right in front of your eyes, without even reloading the page itself. Try opening the page in multiple browsers at once.

In this example, reload and stylesheet are custom events that you have defined in the application; they’re not part of Socket.IO’s API. This demonstrates how the socket object acts like a bidirectional EventEmitter, which you can use to emit events that Socket.IO will transfer across the wire for you.

13.1.3. Other uses of Socket.IO

As you know, HTTP was never originally intended for any kind of real-time communication. But with advances in browser technologies, like WebSocket, and with modules like Socket.IO, this limitation has been lifted, opening a big door for all kinds of new applications that were never before possible in the web browser.

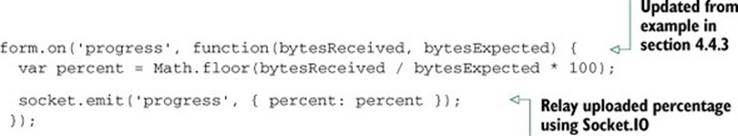

Back in chapter 4 we said that Socket.IO would be great for relaying upload progress events back to the browser for the user to see. Using a custom progress event would work well:

For this relaying to work, you’ll need to get access to the socket instance that matches the browser uploading the file. That’s beyond the scope of this book, but there are resources on the internet that can help you figure that out. (For starters, take a look at Daniel Baulig’s “socket.io and Express: tying it all together” article on his blinzeln blog: www.danielbaulig.de/socket-ioexpress.)

Socket.IO is game changing. As mentioned earlier, developers interested in real-time web applications often hear about Socket.IO before knowing about Node.js, a testament to how influential and important Socket.IO is. It’s consistently gaining traction in web gaming communities and being used for more creative games and applications than one could have thought possible. It’s also a very popular pick for applications written for Node.js competitions, like Node Knockout (http://nodeknockout.com). What awesome thing will you write with it?

13.2. TCP/IP networking in depth

Node is well suited for networking applications, because those typically involve a lot of I/O. Besides the HTTP servers you’ve learned much about already, Node supports any type of TCP-based networking. Node is a good platform for writing an email server, file server, or proxy server, for example, and it can also be used as a client for these kinds of services. Node provides a few tools to aid in writing high quality and performant I/O applications, and you’ll learn about them in this section.

Some networking protocols require values to be read at the byte level—chars, ints, floats, and other data types involving binary data. But JavaScript doesn’t include any native binary data types to work with. The closest you can get is crazy hacks with strings. Node picks up the slack by implementing its own Buffer data type, which acts as a piece of fixed-length binary data, making it possible to access the low-level bytes needed to implement other protocols.

In this section you’ll learn about the following topics:

· Working with buffers and binary data

· Creating a TCP server

· Creating a TCP client

Let’s first take a deeper look at how Node deals with binary data.

13.2.1. Working with buffers and binary data

The Buffer is a special data type that Node provides for developers. It acts as a slab of raw binary data with a fixed length. Buffers can be thought of as the equivalent of the malloc() C function or the new keyword in C++. Buffers are very fast and light objects, and they’re used throughout Node’s core APIs. For example, they’re returned in data events by all Stream classes by default.

Node exposes the Buffer constructor globally, encouraging you to use it as an extension of the regular JavaScript data types. From a programming point of view, you can think of buffers as similar to arrays, except they’re not resizable and can only contain the numbers 0 through 255 as values. This makes them ideal for storing binary data of, well, anything. Because buffers work with raw bytes, you can use them to implement any low-level protocol that you desire.

Text data vs. binary data

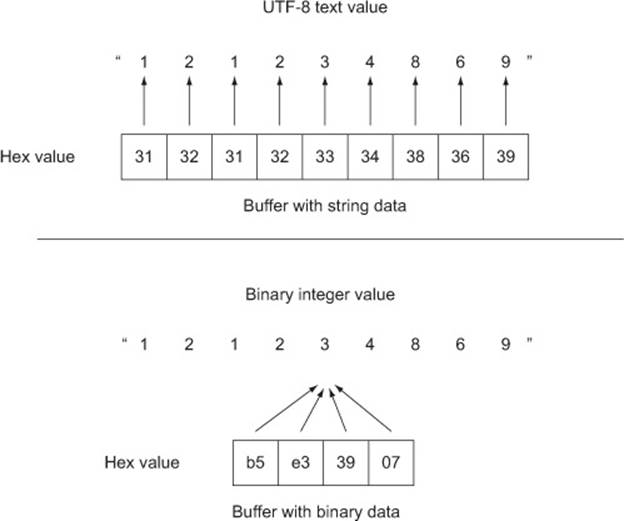

Say you wanted to store the number 121234869 in memory using a Buffer. By default, Node assumes that you want to work with text-based data in buffers, so when you pass the string "121234869" to the Buffer constructor function, a new Buffer object will be allocated with the string value written to it:

var b = new Buffer("121234869");

console.log(b.length);

9

console.log(b);

<Buffer 31 32 31 32 33 34 38 36 39>

In this case, it would return a 9-byte Buffer. This is because the string was written to the Buffer using the default human-readable text-based encoding (UTF-8), where the string is represented with 1 byte per character.

Node also includes helper functions for reading and writing binary (machine-readable) integer data. These are needed for implementing machine protocols that send raw data types (like ints, floats, doubles, and so on) over the wire. Because you want to store a number value in this example, it’s possible to be more efficient by utilizing the helper function writeInt32LE() to write the number 121234869 as a machine-readable binary integer (assuming a little-endian processor) into a 4-byte Buffer.

There are other variations of the Buffer helper functions, as well:

· writeInt16LE() for smaller integer values

· writeUInt32LE() for unsigned values

· writeInt32BE() for big-endian values

There are lots more, so be sure to check the Buffer API documentation page (http://nodejs.org/docs/latest/api/buffer.html) if you’re interested in them all.

In the following code snippet, the number is written using the writeInt32LE binary helper function:

var b = new Buffer(4);

b.writeInt32LE(121234869, 0);

console.log(b.length);

4

console.log(b);

<Buffer b5 e5 39 07>

By storing the value as a binary integer instead of a text string in memory, the data size is decreased by more than half, from 9 bytes down to 4. Figure 13.2 shows the breakdown of these two buffers and essentially illustrates the difference between human-readable (text) protocols and machine-readable (binary) protocols.

Figure 13.2. The difference between representing 121234869 as a text string vs. a little-endian binary integer at the byte level

Regardless of what kind of protocol you’re working with, Node’s Buffer class will be able to handle the proper representation.

Byte endianness

The term endianness refers to the order of the bytes within a multibyte sequence. When bytes are in little-endian order, the least significant byte (LSB) is stored first, and the byte sequence is read from right to left. Conversely, big-endian order is when the first byte stored is the most significant byte (MSB) and the byte sequence is read from left to right. Node.js offers equivalent helper functions for both little-endian and big-endian data types.
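The difference between the two byte orders is easy to see by writing the same number both ways. This sketch assumes a current Node release, where Buffer.alloc() is the replacement for the older new Buffer(size) form used elsewhere in this section.

```javascript
// Buffer.alloc() returns a zero-filled buffer of the given length.
var le = Buffer.alloc(4);
var be = Buffer.alloc(4);

le.writeInt32LE(121234869, 0); // least significant byte first
be.writeInt32BE(121234869, 0); // most significant byte first

console.log(le); // <Buffer b5 e5 39 07>
console.log(be); // <Buffer 07 39 e5 b5>

// Reading with the matching helper recovers the original number...
console.log(le.readInt32LE(0)); // 121234869

// ...while reading with the wrong byte order yields a different value.
console.log(le.readInt32BE(0) === 121234869); // false
```

The bytes are identical in both buffers; only their order differs, which is why both ends of a binary protocol have to agree on endianness.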

Now it’s time to put these Buffer objects to use by creating a TCP server and interacting with it.

13.2.2. Creating a TCP server

Node’s core API sticks to being low-level, exposing only the bare essentials for modules to build on. Node’s http module is a good example of this, building on top of the net module to implement the HTTP protocol. Other protocols, like SMTP for email or FTP for file transfer, need to be implemented on top of the net module as well, because Node’s core API doesn’t implement any other higher-level protocols.

Writing data

The net module offers a raw TCP/IP socket interface for your applications to use. The API for creating a TCP server is very similar to creating an HTTP server: you call net.createServer() and give it a callback function that will be invoked upon each connection. The main difference in creating a TCP server is that the callback function only takes one argument (usually named socket), which is the Socket object, as opposed to the req and res arguments used when creating an HTTP server.

The Socket class

The Socket class is used by both the client and server aspects of the net module in Node. It’s a Stream subclass that’s both readable and writable (bidirectional). That is, it emits data events when input data has been read from the socket, and it has write() and end() functions for sending output data.

Let’s quickly look at a bare-bones net.Server that waits for connections and then invokes a callback function. In this case, the logic inside the callback function simply writes “Hello World!” to the socket and closes the connection cleanly:

var net = require('net');

net.createServer(function (socket) {

socket.write('Hello World!\r\n');

socket.end();

}).listen(1337);

console.log('listening on port 1337');

Fire up the server for some testing:

$ node server.js

listening on port 1337

If you were to try to connect to the server in a web browser, it wouldn’t work because this server doesn’t speak HTTP, only raw TCP. In order to connect to this server and see the message, you need to connect with a proper TCP client, like netcat(1):

$ netcat localhost 1337

Hello World!

Great! Now let’s try using telnet(1):

$ telnet localhost 1337

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

Hello World!

Connection closed by foreign host.

telnet is usually meant to be run in an interactive mode, so it prints out its own stuff as well, but the “Hello World!” message does get printed right before the connection is closed, just as expected.

As you can see, writing data to the socket is easy. You just use write() calls and a final end() call. This API intentionally matches the API for HTTP res objects when writing a response to the client.

Reading data

It’s common for servers to follow the request-response paradigm, where the client connects and immediately sends a request of some sort. The server reads the request and processes a response of some sort to write back to the socket. This is exactly how the HTTP protocol works, as well as the majority of other networking protocols in the wild, so it’s important to know how to read data in addition to writing it.

Fortunately, if you remember how to read a request body from an HTTP req object, reading from a TCP socket should be a piece of cake. Complying with the readable Stream interface, all you have to do is listen for data events containing the input data that was read from the socket:

socket.on('data', function (data) {

console.log('got "data"', data);

});

By default, there’s no encoding set on the socket, so the data argument will be a Buffer instance. Usually, this is exactly how you want it (that’s why it’s the default), but when it’s more convenient, you can call the setEncoding() function to have the data argument be the decoded strings instead of buffers. You also listen for the end event so you know when the client has closed their end of the socket and won’t be sending any more data:

socket.on('end', function () {

console.log('socket has ended');

});

You can easily write a quick TCP client that looks up the version string of the given SSH server by simply waiting for the first data event:

var net = require('net');

var socket = net.connect({ host: process.argv[2], port: 22 });

socket.setEncoding('utf8');

socket.once('data', function (chunk) {

console.log('SSH server version: %j', chunk.trim());

socket.end();

});

Now try it out. Note that this oversimplified example assumes that the entire version string will come in one chunk. Most of the time this works just fine, but a proper program would buffer the input until a \n char was found. Let’s check what the github.com SSH server uses:

$ node client.js github.com

SSH server version: "SSH-2.0-OpenSSH_5.5p1 Debian-6+squeeze1+github8"
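As noted above, a more careful client would buffer its input until a newline arrives. One way to do that is sketched below; the helper name createLineBuffer is hypothetical, and the two feed() calls simulate a version string that arrives split across two chunks.

```javascript
// Hypothetical helper: returns a function that accepts string chunks and
// calls onLine(line) once for every complete "\n"-terminated line.
function createLineBuffer(onLine) {
  var pending = '';
  return function (chunk) {
    pending += chunk;
    var index;
    while ((index = pending.indexOf('\n')) !== -1) {
      // Strip the trailing "\r" that protocols such as SSH send.
      var line = pending.slice(0, index).replace(/\r$/, '');
      pending = pending.slice(index + 1);
      onLine(line);
    }
  };
}

// Simulate a version string arriving in two separate chunks.
var feed = createLineBuffer(function (line) {
  console.log('SSH server version: %j', line);
});
feed('SSH-2.0-Open');
feed('SSH_5.5p1\r\n');
// prints: SSH server version: "SSH-2.0-OpenSSH_5.5p1"
```

With a real socket, you would call socket.setEncoding('utf8') and pass the returned function as the data listener.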

Connecting two streams with socket.pipe()

Using pipe() (http://mng.bz/tuyo) in conjunction with either the readable or writable portions of a Socket object is also a good idea. In fact, if you wanted to write a basic TCP server that echoed everything that was sent to it back to the client, you could do that with a single line of code in your callback function:

socket.pipe(socket);

This example shows that it only takes one line of code to implement the IETF Echo Protocol (http://tools.ietf.org/rfc/rfc862.txt), but more importantly it demonstrates that you can pipe() both to and from the socket object. Of course, you would usually do this with more meaningful stream instances, like a filesystem or gzip stream.

Handling unclean disconnections

The last thing that should be said about TCP servers is that you need to anticipate clients that disconnect but don’t cleanly close the socket. In the case of netcat(1), this would happen when you press Ctrl-C to kill the process, rather than pressing Ctrl-D to cleanly close the connection. To detect this situation, you listen for the close event:

socket.on('close', function () {

console.log('client disconnected');

});

If you have cleanup to do after a socket disconnects, you should do it from the close event, not the end event, because end won’t fire if the connection isn’t closed cleanly.

Putting it all together

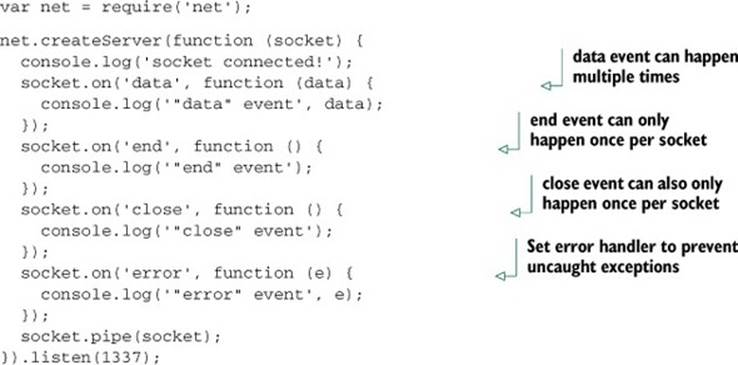

Let’s take all these events and create a simple echo server that logs stuff to the terminal when the various events occur. The server is shown in the following listing.

Listing 13.5. A simple TCP server that echoes any data it receives back to the client

Fire up the server and connect to it with netcat or telnet and play around with it a bit. You should see the console.log() calls for the events being printed to the server’s stdout as you mash around on the keyboard in the client app.
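For reference, an echo server along these lines might look like the following. This is a minimal sketch rather than the book’s exact listing: the createEchoServer name and the optional log argument are assumptions, and the server is only built here, not started.

```javascript
var net = require('net');

// Hypothetical factory: builds (but does not start) an echo server that
// logs each of the socket events discussed in this section.
function createEchoServer(log) {
  log = log || console.log;
  return net.createServer(function (socket) {
    log('socket connected');
    socket.on('data', function (data) {
      log('"data" event', data);
    });
    socket.on('end', function () {
      log('"end" event');
    });
    socket.on('close', function () {
      log('"close" event');
    });
    socket.on('error', function (err) {
      log('"error" event', err.message);
    });
    // Echo everything the client sends straight back to it.
    socket.pipe(socket);
  });
}

// To run it on the port used earlier in this section:
// createEchoServer().listen(1337);
```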

Now that you can build low-level TCP servers in Node, you’re probably wondering how to write a client program in Node to interact with these servers. Let’s do that now.

13.2.3. Creating a TCP client

Node isn’t only about server software; creating client networking programs is just as useful and just as easy in Node.

The key to creating raw connections to TCP servers is the net.connect() function; it accepts an options argument with host and port values and returns a socket instance. The socket returned from net.connect() starts off disconnected from the server, so you’ll usually want to listen for the connect event before doing any work with the socket:

var net = require('net');

var socket = net.connect({ port: 1337, host: 'localhost' });

socket.on('connect', function () {

// begin writing your "request"

socket.write('HELO local.domain.name\r\n');

...

});

Once the socket instance is connected to the server, it behaves just like the socket instances you get inside a net.Server callback function.

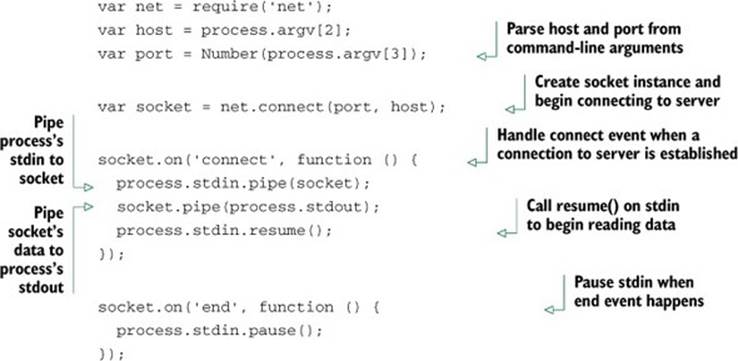

Let’s demonstrate by writing a basic replica of the netcat(1) command, as shown in the following listing. Basically, the program connects to the specified remote server and pipes stdin from the program to the socket, and then pipes the socket’s response to the program’s stdout.

Listing 13.6. A basic replica of the netcat(1) command implemented using Node
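One way such a netcat replica might look is sketched below; it is an illustration under stated assumptions rather than the book’s exact listing. The netcat function name and its input and output parameters are hypothetical, introduced so the same code works with any pair of streams.

```javascript
var net = require('net');

// Hypothetical helper: connect to host:port, send everything read from
// `input` to the server, and copy everything the server sends to `output`.
function netcat(host, port, input, output) {
  var socket = net.connect({ host: host, port: port });
  input.pipe(socket);   // e.g. stdin -> server
  socket.pipe(output);  // e.g. server -> stdout
  return socket;
}

// Command-line usage, for example: node netcat.js localhost 1337
if (process.argv.length >= 4) {
  netcat(process.argv[2], Number(process.argv[3]),
         process.stdin, process.stdout);
}
```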

You can use this client to connect to the TCP server examples you wrote before. Or if you’re a Star Wars fan, try invoking this netcat replica script with the following arguments for a special Easter egg:

$ node netcat.js towel.blinkenlights.nl 23

Sit back and enjoy the output shown in figure 13.3. You deserve a break.

Figure 13.3. Connecting to the ASCII Star Wars server with the netcat.js script

That’s all it takes to write low-level TCP servers and clients using Node.js. The net module provides a simple, yet comprehensive, API, and the Socket class follows both the readable and writable Stream interfaces, as you would expect. Essentially, the net module is a showcase of the core fundamentals of Node.

Let’s switch gears once again and look at Node’s core APIs that allow you to interact with the process’s environment and query information about the runtime and operating system.

13.3. Tools for interacting with the operating system

Often you’ll find yourself wanting to interact with the environment that Node is running in. This might involve checking environment variables to enable debug-mode logging, implementing a Linux joystick driver using the low-level fs functions to interact with /dev/js0 (the device file for a game joystick), or launching an external child process like php to compile a legacy PHP script.

All these kinds of actions require you to use some of the Node core APIs, and we’ll cover some of these modules in this section:

· The global process object— Contains information about the current process, such as the arguments given to it and the environment variables that are currently set

· The fs module— Contains the high-level ReadStream and WriteStream classes that you’re familiar with by now, but also houses low-level functions that we’ll look at

· The child_process module— Contains both low-level and high-level interfaces for spawning child processes, as well as a special way to spawn node instances with a two-way message-passing channel

The process object is one of those APIs that a large majority of programs will interact with, so let’s start with that.

13.3.1. The process global singleton

Every Node process has a single global process object that every module shares access to. Useful information about the process and the context it’s running in can be found in this object. For example, the arguments that Node was invoked with to run the current script can be accessed with process.argv, and you can get or set the environment variables using the process.env object. But the most interesting feature of the process object is that it’s an EventEmitter instance, which emits very special events, such as exit and uncaughtException.

The process object has lots of bells and whistles, and some of the APIs not discussed in this section will be covered later in the chapter. In this section, we’ll focus on the following:

· Using process.env to get and set environment variables

· Listening for special events emitted by process, such as exit and uncaughtException

· Listening for signal events emitted by process, like SIGINT and SIGUSR2

Using process.env to get and set environment variables

Environment variables are great for altering the way your program or module will work. For example, you can use these variables to configure your server, specifying which port to listen on. Or the operating system can set the TMPDIR variable to specify where your programs should output temporary files that can be cleaned up later.

Environment variables?

In case you’re not already familiar with environment variables, they’re a set of key/value pairs that any process can use to affect the way it will behave. For example, all operating systems use the PATH environment variable as a list of file paths to search when looking up a program’s location by name (with ls being resolved to /bin/ls).

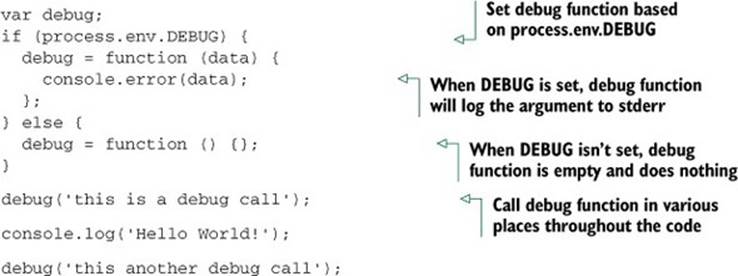

Suppose you wanted to enable debug-mode logging while developing or debugging your module, but not during regular use, because that would be annoying for consumers of your module. A great way to do this is with environment variables. You could look up what the DEBUG variable is set to by checking process.env.DEBUG, as shown in the next listing.

Listing 13.7. Define a debug function based on a DEBUG environment variable

If you try running this script regularly (without the DEBUG environment variable set), you’ll see that calls to debug do nothing, because the empty function is being called:

$ node debug-mode.js

Hello World!

To test out debug mode, you need to set the DEBUG environment variable, which Node exposes as process.env.DEBUG. The simplest way to do this when launching a Node instance is to prepend the command with DEBUG=1. When in debug mode, the messages passed to the debug function are printed to the console alongside the regular output. This is a nice way to get diagnostic reporting to stderr when debugging a problem in your code:

$ DEBUG=1 node debug-mode.js

this is a debug call

Hello World!

this is another debug call

The debug community module by T.J. Holowaychuk (https://github.com/visionmedia/debug) encapsulates precisely this functionality with some additional features. If you like the debugging technique presented here, you should definitely check it out.
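A script that behaves like the debug-mode.js runs shown above could be sketched as follows. The createDebug factory is a hypothetical name chosen for this illustration; taking the environment as an argument makes the behavior easy to try without changing the real process.env.

```javascript
// Hypothetical factory: returns a logging function when env.DEBUG is set,
// and a do-nothing function otherwise.
function createDebug(env) {
  if (env.DEBUG) {
    return function debug() {
      // console.error() writes to stderr, keeping stdout clean.
      console.error.apply(console, arguments);
    };
  }
  return function debug() {};
}

var debug = createDebug(process.env);

debug('this is a debug call');
console.log('Hello World!');
debug('this is another debug call');
```

Deciding once, at startup, which function to hand out means that disabled debug calls cost almost nothing at runtime.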

Special events emitted by process

Normally there are two special events that get emitted by the process object:

· exit gets emitted right before the process exits.

· uncaughtException gets emitted any time an unhandled error is thrown.

The exit event is essential for any application that needs to do something right before the program exits, like clean up an object or print a final message to the console. One important thing to note is that the exit event gets fired after the event loop has already stopped, so you won’t have the opportunity to start any asynchronous tasks during the exit event. The exit code is passed as the first argument, and it’s 0 on a successful exit.

Let’s write a script that listens on the exit event to print an “Exiting...” message:

process.on('exit', function (code) {

console.log('Exiting...');

});
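The restriction on asynchronous work is worth seeing once. In this small sketch, the synchronous console.log() call runs, but the timer scheduled inside the handler never fires because the event loop has already stopped.

```javascript
process.on('exit', function (code) {
  // Asynchronous work scheduled here never runs.
  setTimeout(function () {
    console.log('this line is never printed');
  }, 0);

  // Synchronous work is fine; code is 0 on a successful exit.
  console.log('Exiting with code %d', code);
});
```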

The other special event emitted by process is the uncaughtException event. In the perfect program, there will never be any uncaught exceptions, but in the real world, it’s better to be safe than sorry. The only argument given to the uncaughtException event is the uncaught Error object.

When there are no listeners for uncaughtException events, any uncaught errors will crash the process (this is the default behavior for most applications), but when there’s at least one listener, it’s up to that listener to decide what to do with the error. Node won’t exit automatically, though it is considered mandatory to do so in your own callback. The Node.js documentation explicitly warns that any use of this event should contain a process.exit() call within the callback; otherwise you’ll leave the application in an undefined state, which is bad.

Let’s listen for uncaughtException and then throw an uncaught error to see it in action:

process.on('uncaughtException', function (err) {

console.error('got uncaught exception:', err.message);

process.exit(1);

});

throw new Error('an uncaught exception');

Now when an unexpected error happens, you’re able to catch the error and do any necessary cleanup before you exit the process.

Catching signals sent to the process

UNIX has the concept of signals, which are a basic form of interprocess communication (IPC). These signals are very primitive, allowing for only a fixed set of names to be used and no arguments to be passed.

Node has default behaviors for a few signals, which we’ll go over now:

· SIGINT—Sent by your shell when you press Ctrl-C. Node’s default behavior is to kill the process, but this can be overridden with a single listener for SIGINT on process.

· SIGUSR1—When this signal is received, Node will enter its built-in debugger.

· SIGWINCH—Sent by your shell when the terminal is resized. Node resets process.stdout.rows and process.stdout.columns and emits a resize event when this is received.

Those are the three signals that Node handles by default, but you can override the default behavior for any of them, and invoke a callback function instead, by listening for the signal on the process object.

Say you’ve written a server, but when you press Ctrl-C to kill the server, it’s an unclean shutdown, and any pending connections are dropped. The solution to this is to catch the SIGINT signal and stop the server from accepting connections, letting any existing connections complete before the process completes. This is done by listening for process.on('SIGINT', ...). The name of the event emitted is the same as the signal name:

process.on('SIGINT', function () {

console.log('Got Ctrl-C!');

server.close();

});

Now when you press Ctrl-C on your keyboard, the SIGINT signal will be sent to the Node process from your shell, which will invoke the registered callback instead of killing the process. Because the default behavior of most applications is to exit the process, it’s usually a good idea to do the same in your own SIGINT handler, after any necessary shutdown actions happen. In this case, stopping a server from accepting connections will do the trick. This also works on Windows, despite its lack of proper signals, because Node handles the equivalent Windows actions and simulates artificial signals in Node.

You can apply this same technique to catch any of the UNIX signals that get sent to your Node process. These signals are listed in the Wikipedia article on UNIX signals: http://wikipedia.org/wiki/Unix_signal#POSIX_signals. Unfortunately, signals don’t generally work on Windows, except for the few simulated signals: SIGINT, SIGBREAK, SIGHUP, and SIGWINCH.
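As a minimal sketch of the same technique applied to a different signal, the following script handles SIGTERM. To stay self-contained it sends the signal to itself with process.kill(), which assumes a UNIX-like platform; the setInterval() timer is only a stand-in for a server or other long-running work, and the shuttingDown variable is a name chosen for this illustration.

```javascript
var shuttingDown = false;

// Stands in for a server or other long-running work that keeps the process alive.
var work = setInterval(function () {}, 1000);

process.on('SIGTERM', function () {
  console.log('Got SIGTERM, shutting down');
  shuttingDown = true;
  // With nothing left to do, the process exits on its own.
  clearInterval(work);
});

// Send the signal to this very process so the example is self-contained
// (assumption: a UNIX-like platform; normally another process sends it).
process.kill(process.pid, 'SIGTERM');
```

In a real server, the handler would call server.close() where this sketch clears the timer.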

13.3.2. Using the filesystem module

The fs module provides functions for interacting with the filesystem of the computer that Node is running on. Most of the functions are one-to-one mappings of their C function counterparts, but there are also higher-level abstractions, like the fs.readFile() and fs.writeFile() functions and the fs.ReadStream and fs.WriteStream classes, which build on top of open(), read(), write(), and close().

Nearly all of the low-level functions are identical in use to their C counterparts. In fact, most of the Node documentation refers you to the equivalent man page explaining the matching C function. You can easily identify these low-level functions because they’ll always have a synchronous counterpart. For example, fs.stat() and fs.statSync() are the low-level bindings to the stat(2) C function.

Synchronous functions in Node.js

As you already know, Node’s API is mostly asynchronous functions that never block the event loop, so why bother including synchronous versions of these filesystem functions? The answer is that Node’s own require() function is synchronous, and it’s implemented using the fs module functions, so synchronous counterparts were necessary. Nevertheless, in Node, synchronous functions should only be used during startup, or when your module is initially loaded, and never after that.

Let’s take a look at some examples of interacting with the filesystem.

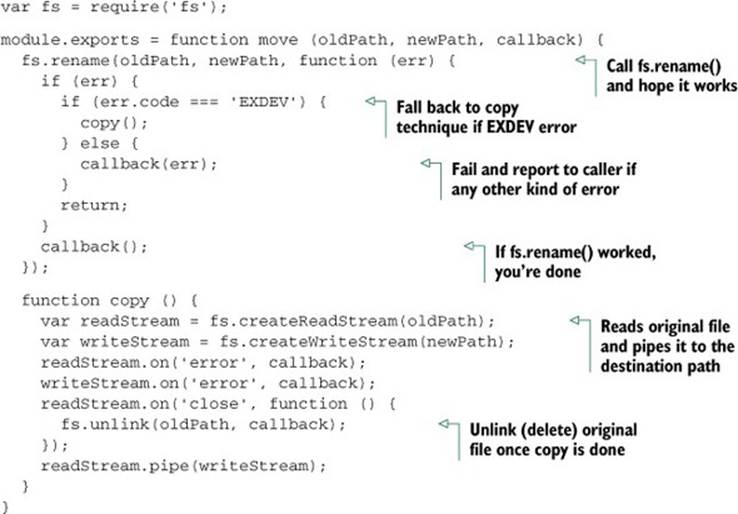

Moving a file

A seemingly simple, yet very common, task when interacting with the filesystem is moving a file from one directory to another. On UNIX platforms you use the mv command for this, and on Windows it’s the move command. Doing the same thing in Node should be similarly simple, right?

Well, if you browse through the fs module in the REPL or in the documentation (http://nodejs.org/api/fs.html), you’ll notice that there’s no fs.move() function. But there is an fs.rename() function, which is the same thing, if you think about it. Perfect!

But not so fast there. fs.rename() maps directly to the rename(2) C function, and one gotcha with this function is that it doesn’t work across physical devices (like two hard drives). That means the following code wouldn’t work properly; the callback would receive an EXDEV error:

fs.rename('C:\\hello.txt', 'D:\\hello.txt', function (err) {

// err.code === 'EXDEV'

});

What do you do now? Well, you can still create new files on D:\ and read files from C:\, so copying the file over will work. With this knowledge, you can create an optimized move() function that calls the very fast fs.rename() when possible and copies the file from one device to another when necessary, using fs.ReadStream and fs.WriteStream. One such implementation is shown in the following listing.

Listing 13.8. A move() function that renames, if possible, or falls back to copying

Note that this copy function only works with files, not directories. To make it work for directories, you’d have to first check if the given path was a directory, and if it was you’d call fs.readdir() and fs.mkdir() as necessary. You can implement that on your own.
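One way such a move() function might look is sketched below. Treat it as an illustration of the rename-then-fall-back idea rather than a definitive implementation: the helper name copyThenUnlink() and the temporary file names in the demonstration are assumptions made for this example.

```javascript
var fs = require('fs');
var os = require('os');
var path = require('path');

// Hypothetical helper: copies src to dest through streams, then removes src.
function copyThenUnlink(src, dest, callback) {
  var done = false;
  function finish(err) {
    if (done) return;
    done = true;
    callback(err);
  }
  var readStream = fs.createReadStream(src);
  var writeStream = fs.createWriteStream(dest);
  readStream.on('error', function (err) {
    writeStream.destroy(); // release the destination file descriptor
    finish(err);
  });
  writeStream.on('error', finish);
  // 'close' fires once the destination has been fully written and closed.
  writeStream.on('close', function () {
    if (done) return;
    fs.unlink(src, finish);
  });
  readStream.pipe(writeStream);
}

function move(oldPath, newPath, callback) {
  fs.rename(oldPath, newPath, function (err) {
    if (!err) return callback(null);          // the fast path worked
    if (err.code !== 'EXDEV') return callback(err);
    copyThenUnlink(oldPath, newPath, callback); // different device: copy instead
  });
}

// Demonstration with a throwaway file in the system's temporary directory.
var demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'move-demo-'));
var from = path.join(demoDir, 'hello.txt');
var to = path.join(demoDir, 'moved.txt');
fs.writeFileSync(from, 'hello');
move(from, to, function (err) {
  if (err) throw err;
  console.log('moved to', to);
});
```

Because both demonstration paths live on the same device, this run only exercises the fs.rename() path; the copy fallback is taken only when the error code is EXDEV.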

fs module error codes

The fs module returns standard UNIX names for the filesystem error codes (www.gnu.org/software/libc/manual/html_node/Error-Codes.html), so some familiarity with those names is required. These names get normalized by libuv even on Windows, so that your application only needs to check for one error code at a time. According to the GNU documentation page, an EXDEV error happens when “an attempt to make an improper link across file systems was detected.”

Watching a directory or file for changes

fs.watchFile() has been around since the early days. It’s expensive on some platforms because it uses polling to see if the file has changed. That is, it stat()s the file, waits a short period of time, and then stat()s again in a continuous loop, invoking the watcher function any time the file has changed.

Suppose you’re writing a module that logs changes from the system log file. To do this, you’d want a callback function to be invoked any time the global system.log file is modified:

var fs = require('fs');

fs.watchFile('/var/log/system.log', function (curr, prev) {

if (curr.mtime.getTime() !== prev.mtime.getTime()) {

console.log('"system.log" has been modified');

}

});

The curr and prev variables are the current and previous fs.Stats objects, which should differ in at least one of the file timestamps they carry. In this example, the mtime values are being compared, because you only want to be notified when the file is modified, not when it’s accessed.

fs.watch() was introduced in the Node v0.6 release. As we mentioned earlier, it’s more optimized than fs.watchFile() because it uses the platform’s native file change notification API for watching files. Because of this, the function is also capable of watching for changes to any file in a directory. In practice, fs.watch() is less reliable than fs.watchFile() because of differences between the various platforms’ underlying file-watching mechanisms. For example, the filename parameter doesn’t get reported on OS X when watching a directory, and it’s up to Apple to change that in a future release of OS X. Node’s documentation keeps a list of these caveats at http://nodejs.org/api/fs.html#fs_caveats.
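As a rough sketch of fs.watch() on a directory, the following script watches a temporary directory, creates a file in it, and stops watching after the first notification. The directory and file names are made up for this example, and the safety timer exists only so the script still terminates on platforms where no event is delivered. Which events are reported, and whether filename is provided, varies by platform, as noted above.

```javascript
var fs = require('fs');
var os = require('os');
var path = require('path');

// A throwaway directory to watch (assumption: illustration only).
var dir = fs.mkdtempSync(path.join(os.tmpdir(), 'watch-demo-'));

var watcher = fs.watch(dir, function (eventType, filename) {
  // filename may be missing on some platforms, so don't rely on it.
  console.log('event:', eventType, 'file:', filename);
  clearTimeout(safety);
  watcher.close(); // stop watching after the first notification
});

// Make sure the script ends even if no event is ever reported.
var safety = setTimeout(function () {
  watcher.close();
}, 1000);

// Trigger a change inside the watched directory.
fs.writeFileSync(path.join(dir, 'hello.txt'), 'hi');
```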

Using community modules: fstream and filed

As you’ve seen, the fs module, like all of Node’s core APIs, is strictly low-level. That means there’s plenty of room to innovate and create awesome abstractions on top of it. Node’s active collection of modules is growing on npm every day, and as you might guess, there are some quality ones that extend the fs module.

For example, the fstream module by Isaac Schlueter (https://github.com/isaacs/fstream) is one of the core pieces of npm itself. This module is interesting because it began life as a part of npm and then got extracted because its general-purpose functionality was useful to many kinds of command-line applications and sysadmin scripts. One of the awesome features that sets fstream apart is its seamless handling of permissions and symbolic links, which are maintained by default when copying files and directories.

By using fstream, you can perform the equivalent of cp -rp sourceDir destDir (copying a directory and its contents recursively, and transferring over ownership and permissions) by simply piping a Reader instance to a Writer instance. In the following example, we also utilize fstream’s filter feature to conditionally exclude files based on a callback function:

var fstream = require('fstream');

fstream

.Reader("path/to/dir")

.pipe(fstream.Writer({ path: "path/to/other/dir", filter: isValid }));

// checks the file that is about to be written and

// returns whether or not it should be copied over

function isValid () {

// ignore temp files from text editors like TextMate

return this.path[this.path.length - 1] !== '~';

}

The filed module by Mikeal Rogers (https://github.com/mikeal/filed) is another influential module, mostly because it was written by the same author as the highly popular request module. These modules made popular a new kind of flow control over Stream instances: listening for the pipe event, and acting differently based on what is being piped to it (or what it is being piped to).

To demonstrate the power of this approach, take a look at how filed turns a regular HTTP server into a full-featured static file server with just one line of code:

http.createServer(function (req, res) {

req.pipe(filed('path/to/static/files')).pipe(res);

});

This code takes care of sending Content-Length along with the proper caching headers. In the case where the browser already has the file cached, filed will respond to the HTTP request with a 304 Not Modified code, skipping the steps of opening and reading the file from disk. These are the kinds of optimizations that acting on the pipe event makes possible, because the filed instance has access to both the req and res objects of the HTTP request.

We’ve demonstrated two examples of good community modules that extend the base fs module to do awesome things or expose beautiful APIs, but there are many more. The npm search command is a good way to find published modules for a given task. Say you wanted to find another module that simplifies copying files from one destination to another: executing npm search copy could bring up some useful results. When you find a published module that looks interesting, you can execute npm info module-name to get information about the module, such as its description, home page, and published versions. Just remember that for any given task, it’s likely that someone has attempted to solve the problem with an npm module, so always check there before writing something from scratch.

13.3.3. Spawning external processes

Node provides the child_process module to create child subprocesses from within a Node server or script. There are two APIs for this: a high-level one, exec(), and a low-level one, spawn(). Either one may be appropriate, depending on your needs. There’s also a special way to create child processes of Node itself, with a special IPC channel built in, called fork(). All of these functions are meant for different use cases:

· cp.exec()—A high-level API for spawning commands and buffering the result in a callback

· cp.spawn()—A low-level API for spawning single commands into a ChildProcess object

· cp.fork()—A special way to spawn additional Node processes with a built-in IPC channel

We’ll look at each of these in turn.

Pros and cons to child processes

There are benefits and drawbacks to using child processes. One obvious downside is that the program being executed needs to be installed on the user’s machine, making it a dependency of your application. The alternative would be to use JavaScript to do whatever the child process did. A good example of this is npm, which originally used the system tar command when extracting Node packages. This caused problems because there were conflicts relating to incompatible versions of tar, and it’s very rare for a Windows computer to have tar installed. These factors led to node-tar (https://github.com/isaacs/node-tar) being written entirely in JavaScript, not using any child processes.

On the flip side, using external applications allows a developer to tap into a wealth of applications written in other languages. For example, gm (http://aheckmann.github.com/gm/) is a module that utilizes the powerful GraphicsMagick and ImageMagick libraries to perform all sorts of image manipulation and conversions within a Node application.

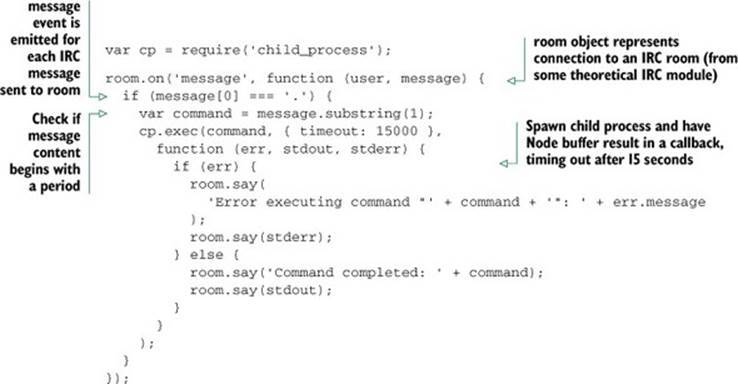

Buffering command results using cp.exec()

The high-level API, cp.exec(), is useful for when you want to invoke a command, and you only care about the final result, not about accessing the data from a child’s stdio streams as they come. This API allows you to enter full sequences of commands, including multiple processes piped to one another.

One good use case for the exec() API is when you’re accepting user commands to be executed. Say you’re writing an IRC bot, and you’d like to execute commands when the user enters something beginning with a period (.). For example, if a user typed .ls as their IRC message, the bot would execute ls and print the output back to the IRC room. As shown in the following listing, you need to set the timeout option, so that any never-ending processes are automatically killed after a certain period of time.

Listing 13.9. Using cp.exec() to run user-entered commands through the IRC bot

There are some good modules already in the npm registry that implement the IRC protocol, so if you’d like to write an IRC bot for real, you should definitely use one of the existing modules (both irc and irc-js in the npm registry are popular).
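The core of the technique, stripped of any IRC handling, might look like the following sketch. The onMessage() function and its reply callback are hypothetical stand-ins for whatever your IRC module provides, and the 15-second timeout is an arbitrary value chosen for illustration.

```javascript
var cp = require('child_process');

// Hypothetical stand-in for the bot's "message received" handler.
// `reply` is whatever function sends text back to the room.
function onMessage(text, reply) {
  // Only messages that begin with a period are treated as commands.
  if (text[0] !== '.') return;
  var command = text.slice(1);

  // The timeout option kills the child if it runs longer than 15 seconds.
  cp.exec(command, { timeout: 15000 }, function (err, stdout, stderr) {
    if (err) return reply('Error: ' + err.message);
    reply(stdout);
  });
}

// Simulate a user typing ".echo hello" into the room.
onMessage('.echo hello', function (output) {
  console.log(output);
});
```

When the timeout elapses, the callback receives an error, so the bot can report the failure instead of waiting forever.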

For times when you need to buffer a command’s output, but you’d like Node to automatically escape the arguments for you, there’s the execFile() function. This function takes four arguments, rather than three, and you pass the executable you want to run, along with an array of arguments to invoke the executable with. This is useful when you have to incrementally build up the arguments that the child process is going to use:

var cp = require('child_process');

cp.execFile('ls', [ '-l', process.cwd() ],

function (err, stdout, stderr) {

if (err) throw err;

console.log(stdout);

});

Spawning commands with a Stream interface using cp.spawn()

The low-level API for spawning child processes in Node is cp.spawn(). This function differs from cp.exec() because it returns a ChildProcess object that you can interact with. Rather than giving cp.spawn() a single callback function when the process completes, cp.spawn() lets you interact with each stdio stream of the child process individually.

The most basic use of cp.spawn() looks like this:

var cp = require('child_process');

var fs = require('fs');

var child = cp.spawn('ls', [ '-l' ]);

// stdout is a regular Stream instance, which emits 'data',

// 'end', etc.

child.stdout.pipe(fs.createWriteStream('ls-result.txt'));

child.on('exit', function (code, signal) {

// emitted when the child process exits

});

The first argument is the program you want to execute. This can be a single program name, which will be looked up in the current PATH, or it can be an absolute path to a program. The second argument is an array of string arguments to invoke the process with. In the default case, a ChildProcess object contains three built-in Stream instances that your script is meant to interact with:

· child.stdin is the writable Stream that represents the child’s stdin.

· child.stdout is the readable Stream that represents the child’s stdout.

· child.stderr is the readable Stream that represents the child’s stderr.

You can do whatever you want with these streams, such as piping them to a file or socket or some other kind of writable stream. You can even completely ignore them if you like.

The other interesting event that happens on ChildProcess objects is the exit event, which is fired when the process has exited. The child’s stdio streams may still be open at that point; the separate close event is fired once they have all ended.

One good example module that abstracts the use of cp.spawn() into helpful functionality is node-cgi (https://github.com/TooTallNate/node-cgi), which allows you to reuse legacy Common Gateway Interface (CGI) scripts in your Node HTTP servers. CGI was really just a standard for responding to HTTP requests by invoking CGI scripts as child processes of an HTTP server with special environment variables describing the request. For example, you could write a CGI script that uses sh as the CGI interface:

#!/bin/sh

echo "Status: 200"

echo "Content-Type: text/plain"

echo

echo "Hello $QUERY_STRING"

If you were to name that file hello.cgi (don’t forget to chmod +x hello.cgi to make it executable), you could easily invoke it as the response logic for HTTP requests in your HTTP server with a single line of code:

var http = require('http');

var cgi = require('cgi');

var server = http.createServer( cgi('hello.cgi') );

server.listen(3000);

With this server set up, when an HTTP request hits the server, node-cgi would handle the request by doing two things:

· Spawning the hello.cgi script as a new child process using cp.spawn()

· Passing the new process contextual information about the current HTTP request using a custom set of environment variables

The hello.cgi script uses one of the CGI-specific environment variables, QUERY_STRING, which contains the query-string portion of the request URL. The script uses this in the response, which gets written to the script’s stdout. If you were to fire up this example server and send an HTTP request to it using curl, you’d see something like this:

$ curl http://localhost:3000/?nathan

Hello nathan

There are a lot of very good use cases for child processes in Node, and node-cgi is one example. As you get your server or application to do what it needs to do, you’ll find that you inevitably have to utilize them at some point.

Distributing the workload using cp.fork()

The last API offered by the child_process module is a specialized way of spawning additional Node processes, but with a special IPC channel built in. Since you’re always spawning Node itself, the first argument passed to cp.fork() is a path to a Node.js module to execute.

Like cp.spawn(), cp.fork() returns a ChildProcess object. The major difference is the API added by the IPC channel: the ChildProcess object now has a child.send(message) function, and the script being invoked by fork() can listen for process.on('message') events.

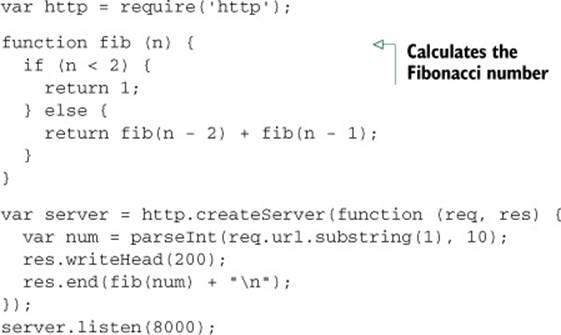

Suppose you want to write a Node HTTP server that calculates the Fibonacci sequence. You might try naively writing the server all in one shot, as shown in the next listing.

Listing 13.10. A non-optimal implementation of a Fibonacci HTTP server in Node.js

If you fire up the server with node fibonacci-naive.js and send an HTTP request to http://localhost:8000, the server will work as expected, but calculating the Fibonacci sequence for a given number is an expensive, CPU-bound computation. While your Node server’s single thread is grinding away at calculating the result, no additional HTTP requests can be served. Additionally, you’re only utilizing one CPU core here, and you likely have others that are sitting there doing nothing. This is bad.

A better solution is to fork Node processes during each HTTP request and have the child process do the expensive calculation and report back. cp.fork() offers a clean interface for doing this.

This solution involves two files:

· fibonacci-server.js will be the server.

· fibonacci-calc.js does the calculation.

First, here’s the server:

var http = require('http');

var cp = require('child_process');

var server = http.createServer(function(req, res) {

var child = cp.fork(__dirname + '/fibonacci-calc.js', [ req.url.substring(1) ]);

child.on('message', function(m) {

res.end(m.result + '\n');

});

});

server.listen(8000);

The server uses cp.fork() to place the Fibonacci calculation logic in a separate Node process, which will report back to the parent process using process.send(), as shown in the following fibonacci-calc.js script:

function fib(n) {

if (n < 2) {

return 1;

} else {

return fib(n - 2) + fib(n - 1);

}

}

var input = parseInt(process.argv[2], 10);

process.send({ result: fib(input) });

You can start the server with node fibonacci-server.js and, again, send an HTTP request to http://localhost:8000.

This is a great example of how dividing up the various components that make up your application into multiple processes can be a great benefit to you. cp.fork() provides child.send() and child.on('message') to send messages to and receive messages from the child. Within the child process itself, you have process.send() and process.on('message') to send messages to and receive messages from the parent. Use them!
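The Fibonacci example only sends messages from the child to the parent. The sketch below shows a round trip in both directions. So that it fits in a single file, it writes a tiny child script to a temporary location before forking it; that step, the file names, and the message shapes ({ value: ... } and { doubled: ... }) are all assumptions made for this illustration.

```javascript
var cp = require('child_process');
var fs = require('fs');
var os = require('os');
var path = require('path');

// The child's source: wait for a message from the parent, reply with a result.
var childSource = [
  "process.on('message', function (m) {",
  "  process.send({ doubled: m.value * 2 });",
  "});"
].join('\n');

// Write the child script to a temporary file (illustration only; normally
// it would be a regular module next to your server script).
var childDir = fs.mkdtempSync(path.join(os.tmpdir(), 'fork-demo-'));
var childPath = path.join(childDir, 'child.js');
fs.writeFileSync(childPath, childSource);

var child = cp.fork(childPath);

// Parent side: receive the reply from the child.
child.on('message', function (m) {
  console.log('child replied:', m.doubled);
  child.disconnect(); // close the IPC channel so both processes can exit
});

// Parent side: send work to the child.
child.send({ value: 21 });
```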

Let’s switch gears once more and look at developing command-line tools in Node.

13.4. Developing command-line tools

Another task commonly fulfilled by Node scripts is building command-line tools. By now, you should be familiar with the largest command-line tool written in Node: the Node Package Manager, a.k.a. npm. As a package manager, it does a lot of filesystem operations and spawning of child processes, and all of this is done using Node and its asynchronous APIs. This enables npm to install packages in parallel, rather than serially, making the overall process faster. And if a command-line tool that complicated can be written in Node, then anything can.

Most command-line programs have common process-related needs, like parsing command-line arguments, reading from stdin, and writing to stdout and stderr. In this section, you’ll learn about the common requirements for writing a full command-line program, including the following:

· Parsing command-line arguments

· Working with stdin and stdout streams

· Adding pretty colors to the output using ansi.js

To get started on building awesome command-line programs, you need to be able to read the arguments the user invoked your program with. We’ll take a look at that first.

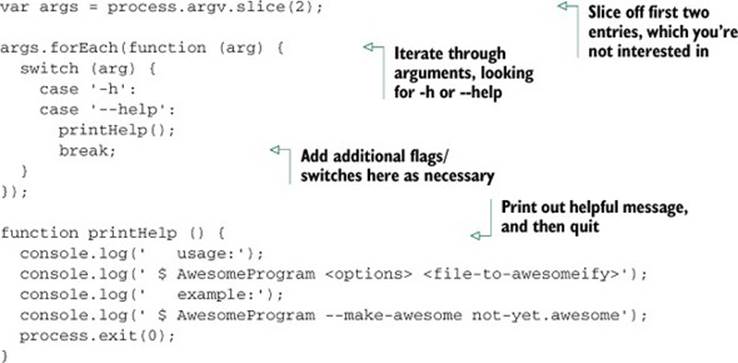

13.4.1. Parsing command-line arguments

Parsing arguments is an easy and straightforward process. Node provides you with the process.argv property, an array of strings holding the arguments that were used when Node was invoked. The first entry of the array is the Node executable, and the second entry is the name of your script. Parsing and acting on these arguments simply requires iterating through the array entries and inspecting each argument.

To demonstrate, let’s write a quick script called args.js that prints out the result of process.argv. Most of the time you won’t care about the first two entries, so you can slice() them off before processing:

var args = process.argv.slice(2);

console.log(args);

When you invoke this script standalone, you’ll get an empty array because no additional arguments were passed in:

$ node args.js

[]

But when you pass along “hello” and “world” as arguments, the array contains string values as you’d expect:

$ node args.js hello world

[ 'hello', 'world' ]

As with any terminal application, you can use quotes around arguments that have spaces in them to combine them into a single argument. This is not a feature of Node, but rather of the shell that you’re using (likely bash on a UNIX platform or cmd.exe on Windows):

$ node args.js "tobi is a ferret"

[ 'tobi is a ferret' ]

By UNIX convention, every command-line program should respond to the -h and --help flags by printing out usage instructions and then exiting. The following listing shows an example of using Array#forEach() to iterate through the arguments and parse them in the callback, printing out the usage instructions when the expected flag is encountered.

Listing 13.11. Parsing process.argv using Array#forEach() and a switch block

You can easily extend that switch block to parse additional switches. Community modules like commander.js, nopt, optimist, and nomnom (to name a few) all solve this problem in their own ways, so don’t feel that using a switch block is the only way to parse the arguments. Like so many things in programming, there’s no single correct way to do it.
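A minimal sketch of the forEach-and-switch approach might look like this. The parseArgs() function, the shape of the object it returns, and the usage text are invented for this example; they aren’t part of Node.

```javascript
// Walks the argument list once and records what the program should do.
function parseArgs(args) {
  var result = { help: false, rest: [] };
  args.forEach(function (arg) {
    switch (arg) {
      case '-h':
      case '--help':
        result.help = true;
        break;
      default:
        // Anything that isn't a recognized flag is kept as a plain value.
        result.rest.push(arg);
    }
  });
  return result;
}

var parsed = parseArgs(process.argv.slice(2));

if (parsed.help) {
  console.log('Usage: node args.js [options] [values...]');
  console.log('  -h, --help   print this message and exit');
  process.exit(0);
}

console.log(parsed.rest);
```

Adding a new flag means adding another case to the switch and another property to the result object.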

Another task that every command-line program will need to deal with is reading input from stdin and writing structured data to stdout. Let’s take a look at how this is done in Node.

13.4.2. Working with stdin and stdout

It’s common for UNIX programs to be small, self-contained, and focused on a single task. These programs are then combined by using pipes, feeding the results of one process to the next, until the end of the command chain. For example, using standard UNIX commands to retrieve the list of unique authors from any given Git repository, you could combine the git log, sort, and uniq commands like this:

$ git log --format='%aN' | sort | uniq

Mike Cantelon

Nathan Rajlich

TJ Holowaychuk

These commands run in parallel, feeding the output of the first process to the next, continuing on until the end. To adhere to this piping idiom, Node provides two Stream objects for your command-line program to work with:

· process.stdin—A ReadStream to read input data from

· process.stdout—A WriteStream to write output data to

These objects act like the familiar stream interfaces that you’ve already learned about.

Writing output data with process.stdout

You’ve been using the process.stdout writable stream implicitly every time you’ve called console.log(). Internally, the console.log() function calls process.stdout.write() after formatting the input arguments. But the console functions are more for debugging and inspecting objects. When you need to write structured data to stdout, you can call process.stdout.write() directly.

Say your program connects to an HTTP URL and writes the response to stdout. Stream#pipe() works well in this context, as shown here:

var http = require('http');

var url = require('url');

var target = url.parse(process.argv[2]);

var req = http.get(target, function (res) {

res.pipe(process.stdout);

});

Voilà! An absolutely minimal curl replica in only six lines of code. Not too bad, huh? Next up let’s cover process.stdin.

Reading input data with process.stdin

Before you can read from stdin, you must call process.stdin.resume() to indicate that your script is interested in data from stdin. After that, stdin acts like any other readable stream, emitting data events as data is received from the output of another process, or as the user enters keystrokes into the terminal window.

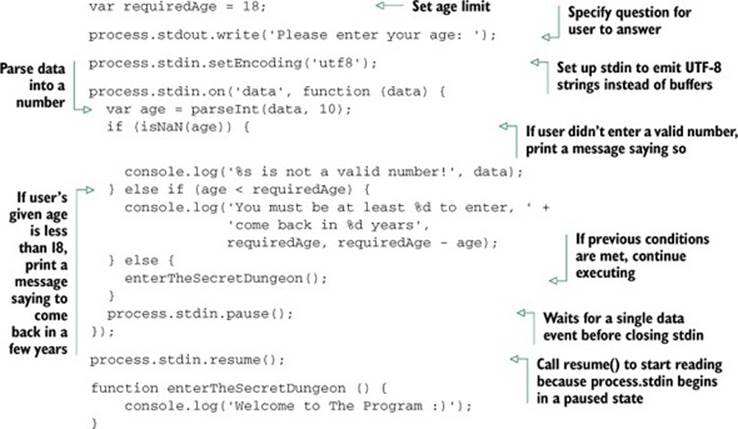

The following listing shows a command-line program that prompts the user for their age before deciding whether to continue executing.

Listing 13.12. An age-restricted program that prompts the user for their age
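One way such a prompt might be written is sketched below, as an illustration rather than a definitive implementation. The askAge() function, the requiredAge value of 18, and the messages are assumptions made for this example. The function takes its input and output streams as arguments so that it can be demonstrated with a PassThrough stream playing the part of the user; a real program would pass process.stdin and process.stdout.

```javascript
var stream = require('stream');

var requiredAge = 18; // arbitrary threshold chosen for illustration

// Prompts on `output`, reads one chunk from `input`, and reports whether
// the entered age is high enough.
function askAge(input, output, callback) {
  output.write('Please enter your age: ');
  input.setEncoding('utf8');
  input.once('data', function (data) {
    input.pause(); // one answer is all we need
    var age = parseInt(data, 10);
    if (isNaN(age)) {
      return callback(new Error('"' + String(data).trim() + '" is not a valid number'));
    }
    callback(null, age >= requiredAge);
  });
  input.resume(); // signal that we're interested in data from the stream
}

// Simulated user: a PassThrough stream stands in for process.stdin.
var fakeStdin = new stream.PassThrough();
askAge(fakeStdin, process.stdout, function (err, allowed) {
  if (err) throw err;
  console.log(allowed ? 'Welcome!' : 'Sorry, you must be at least ' + requiredAge + '.');
});
fakeStdin.write('21\n');
```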

Diagnostic logging with process.stderr

There’s also a process.stderr writable stream in every Node process, which acts exactly like the process.stdout stream, except that it writes to stderr instead. Because stderr is usually reserved for debugging, and not for sending structured data and piping, you’ll generally use console.error() instead of accessing process.stderr directly.

Now that you’re familiar with the built-in stdio streams in Node, which is crucial knowledge for building any command-line program, let’s move on to something a bit more colorful (pun intended).

13.4.3. Adding colored output

Lots of command-line tools use colored text to make things easier to distinguish on the screen. Node itself does this in its REPL, as does npm for its various logging levels. It’s a nice bonus feature that any command-line program can easily benefit from, and adding colored output to your programs is rather easy, especially with the support of community modules.

Creating and writing ANSI escape codes

Colors on the terminal are produced by ANSI escape codes (the ANSI name comes from the American National Standards Institute). These escape codes are simple text sequences written to the stdout that have special meanings to the terminal—they can change the text color, change the position of the cursor, make a beep sound, and more.

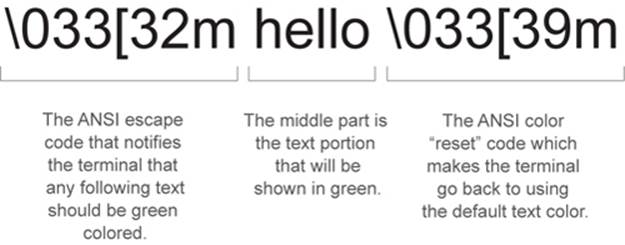

Let’s start simply. To print the word “hello” in the color green in your script, a single console.log() call is all it takes:

console.log('\033[32mhello\033[39m');

If you look closely, you can see the word “hello” in the middle of the string with some weird-looking characters on either side. This may look confusing at first, but it’s rather simple. Figure 13.4 breaks up the green “hello” string into its three distinct pieces.

Figure 13.4. Outputting “hello” in green text using ANSI escape codes

There are a lot of escape codes that terminals recognize, and most developers have better things to do with their time than memorize them all. Thankfully, the Node community comes to the rescue again with multiple modules, such as colors.js, cli-color, and ansi.js, that make using colors in your programs easy and fun.
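If you only need a handful of colors, a few lines of plain JavaScript are enough. The sketch below wraps a string in a foreground color code and the code that resets the foreground to its default. The colorize() function and the FG table are names invented for this example, and the escape character is written as '\x1b', the hexadecimal spelling of the same character as '\033'.

```javascript
// ANSI codes for a few foreground colors; 39 resets to the default foreground.
var FG = { red: 31, green: 32, yellow: 33, blue: 34 };

function colorize(text, colorName) {
  var code = FG[colorName];
  if (code === undefined) return text; // unknown color: leave the text alone
  return '\x1b[' + code + 'm' + text + '\x1b[39m';
}

console.log(colorize('hello', 'green'));
```

The hexadecimal form has the advantage of also being accepted in strict mode, where octal escapes like '\033' are a syntax error.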

ANSI escape codes on Windows

Technically, Windows and its command prompt (cmd.exe) don’t support ANSI escape codes. Fortunately for us, Node interprets the escape codes on Windows when your scripts write them to stdout, and then calls the appropriate Windows functions to produce the same results. This is interesting to know, but not something you’ll have to think about while writing your Node applications.

Formatting foreground colors using ansi.js

Let’s take a look at ansi.js (https://github.com/TooTallNate/ansi.js), which you can install with npm install ansi. This module is nice because it’s a very thin layer on top of the raw ANSI codes, which gives you greater flexibility compared to the other color modules (they only work with a single string at a time). In ansi.js, you set the modes (like “bold”) of the stream, and they’re persistent until cleared by one of the reset() calls. As an added bonus, ansi.js is the first module to support 256 color terminals, and it can convert CSS color codes (such as #FF0000) into ANSI color codes.

The ansi.js module works with the concept of a cursor, which is really just a wrapper around a writable stream instance with lots of convenience functions for writing ANSI codes to the stream, all of which support chaining. To print the word “hello” in green text again, using ansi.js syntax, you would write this:

var ansi = require('ansi');

var cursor = ansi(process.stdout);

cursor

.fg.green()

.write('Hello')

.fg.reset()

.write('\n');

You can see here that to use ansi.js you first have to create a cursor instance from a writable stream. Because you’re interested in coloring your program’s output, you pass process.stdout as the writable stream that the cursor will use. Once you have the cursor, you can invoke any of the methods it provides to alter the way that the text output will be rendered to the terminal. In this case, the result is equivalent to the console.log() call from before:

· cursor.fg.green() sets the foreground color to green

· cursor.write('Hello') writes the text “Hello” to the terminal in green

· cursor.fg.reset() resets the foreground color back to the default

· cursor.write('\n') finishes up with a newline

Programmatically adjusting the output using the cursor provides a clean interface for changing colors.

Formatting background colors using ansi.js

The ansi.js module also supports background colors. To set the background color instead of the foreground color, replace the fg portion of the call with bg. For example, to set a red background color, you’d call cursor.bg.red().

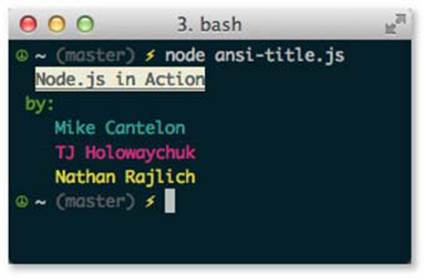

Let’s wrap up with a quick program that prints this book’s title information to the terminal in colors, as shown in figure 13.5.

Figure 13.5. The result of ansi-title.js script printing out the name of this book and the authors in different colors

The code to output fancy colors like these is verbose, but very straightforward, because each function call maps directly to the corresponding escape code being written to the stream. The code shown in the following listing consists of two lines of initialization followed by one really long chain of function calls that end up writing color codes and strings to process.stdout.

Listing 13.13. A simple program that prints this book’s title and authors in pretty colors

    var ansi = require('ansi');
    var cursor = ansi(process.stdout);

    cursor
      .reset()
      .write(' ')
      .bold()
      .underline()
      .bg.white()
      .fg.black()
      .write('Node.js in Action')
      .fg.reset()
      .bg.reset()
      .resetUnderline()
      .resetBold()
      .write(' \n')
      .fg.green()
      .write(' by:\n')
      .fg.cyan()
      .write(' Mike Cantelon\n')
      .fg.magenta()
      .write(' TJ Holowaychuk\n')
      .fg.yellow()
      .write(' Nathan Rajlich\n')
      .reset();

Color codes are only one of the key features of ansi.js. We haven’t touched on the cursor-positioning codes, how to make a beep sound, or how to hide and show the cursor. You can consult the ansi.js documentation and examples to see how those features work.
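As a rough idea of what those features involve at the escape-code level, here is a minimal sketch using raw control sequences. The helper names (moveTo, hideCursor, showCursor, beep) are hypothetical and are not the ansi.js API; consult the module’s documentation for its actual method names.

```javascript
var ESC = '\x1b[';

// Hypothetical helpers that return raw terminal control sequences.
// Rows and columns are 1-based, with row 1, column 1 at the top left.
function moveTo(col, row) { return ESC + row + ';' + col + 'H'; }
function hideCursor() { return ESC + '?25l'; }
function showCursor() { return ESC + '?25h'; }
function beep() { return '\x07'; } // the BEL control character

// Only emit the sequences when stdout is an interactive terminal, so
// piped or redirected output isn't littered with control characters.
if (process.stdout.isTTY) {
  process.stdout.write(hideCursor());
  process.stdout.write(moveTo(1, 1) + 'top-left corner');
  process.stdout.write(beep());
  process.stdout.write(showCursor() + '\n');
}
```

Checking process.stdout.isTTY before writing is a design choice made for this sketch; it keeps the example from corrupting output that is being captured by another program.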

13.5. Summary

Node is primarily designed for I/O-related tasks, such as creating HTTP servers. But as you’ve learned throughout this chapter, Node is well suited to a wide variety of other tasks, such as creating a command-line interface to your application server, a client program that connects to the ASCII Star Wars server, or a program that fetches and displays statistics from stock market servers. The possibilities are limited only by your imagination. Take a look at npm or node-gyp for a couple of complex examples of command-line programs written using Node. They’re great examples to learn from.

In this chapter, we talked about a couple of community modules that could aid in the development of your next application. In the next chapter, we’ll focus on how you can find these awesome modules in the Node community, and how you can contribute modules you’ve developed back to the community for feedback and improvements. The social interaction is the exciting stuff!