Windows Internals, Sixth Edition, Part 1 (2012)

Chapter 5. Processes, Threads, and Jobs

In this chapter, we’ll explain the data structures and algorithms that deal with processes, threads, and jobs in the Microsoft Windows operating system. The first section focuses on the internal structures that make up a process. The second section outlines the steps involved in creating a process (and its initial thread). The internals of threads and thread scheduling are then described. The chapter concludes with a description of jobs.

Because processes and threads touch so many components in Windows, a number of terms and data structures (such as working sets, objects and handles, system memory heaps, and so on) are referred to in this chapter but are explained in detail elsewhere in the book. To fully understand this chapter, you need to be familiar with the terms and concepts explained in Chapter 1 and Chapter 2, such as the difference between a process and a thread, the Windows virtual address space layout, and the difference between user mode and kernel mode.

Process Internals

This section describes the key Windows process data structures maintained by various parts of the system and describes different ways and tools to examine this data.

Data Structures

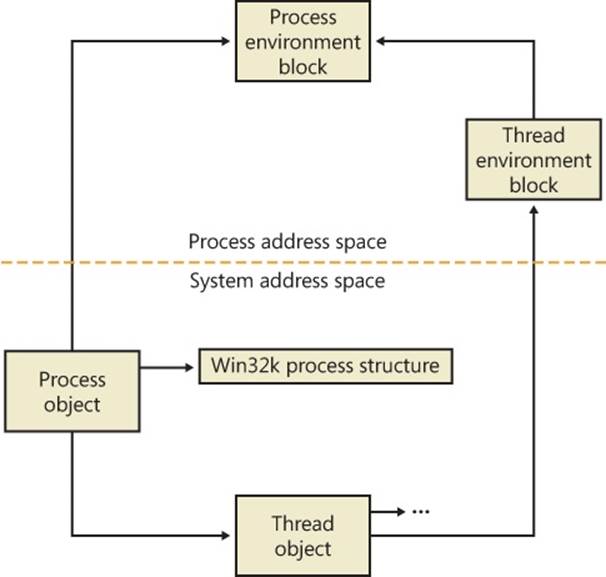

Each Windows process is represented by an executive process (EPROCESS) structure. Besides containing many attributes relating to a process, an EPROCESS contains and points to a number of other related data structures. For example, each process has one or more threads, each represented by an executive thread (ETHREAD) structure. (Thread data structures are explained in the section Thread Internals later in this chapter.)

The EPROCESS and most of its related data structures exist in system address space. One exception is the process environment block (PEB), which exists in the process address space (because it contains information accessed by user-mode code). Additionally, some of the process data structures used in memory management, such as the working set list, are valid only within the context of the current process, because they are stored in process-specific system space. (See Chapter 10, “Memory Management,” in Part 2 for more information on process address space.)

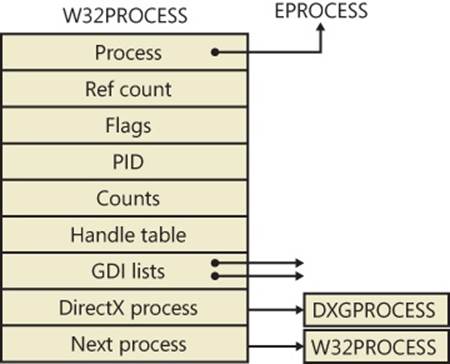

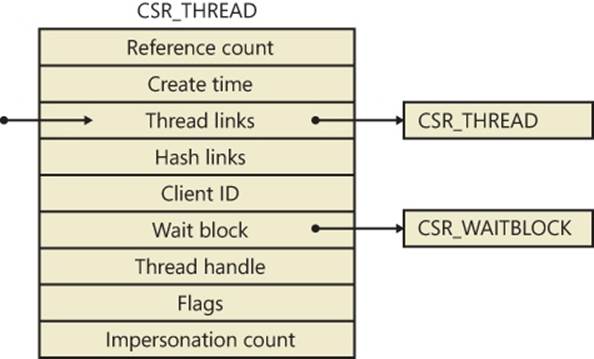

For each process that is executing a Win32 program, the Win32 subsystem process (Csrss) maintains a parallel structure called the CSR_PROCESS. Finally, the kernel-mode part of the Win32 subsystem (Win32k.sys) maintains a per-process data structure, W32PROCESS. The W32PROCESS structure is created the first time a thread calls a Windows USER or GDI function that is implemented in kernel mode.

With the exception of the idle process, every EPROCESS structure is encapsulated as a process object by the executive object manager (described in Chapter 3). Because processes are not named objects, they are not visible in the WinObj tool. You can, however, see the Type object called “Process” in the \ObjectTypes directory. A handle to a process provides, through use of the process-related APIs, access to some of the data in the EPROCESS structure and also in some of its associated structures.

Figure 5-1 is a simplified diagram of the process and thread data structures. Each data structure shown in the figure is described in detail in this chapter.

Figure 5-1. Data structures associated with processes and threads

Many other drivers and system components, by registering process creation notifications, can choose to create their own data structures to track information they store on a per-process basis. When one discusses the overhead of a process, the size of such data structures must often be taken into consideration, although it is nearly impossible to obtain an accurate number.

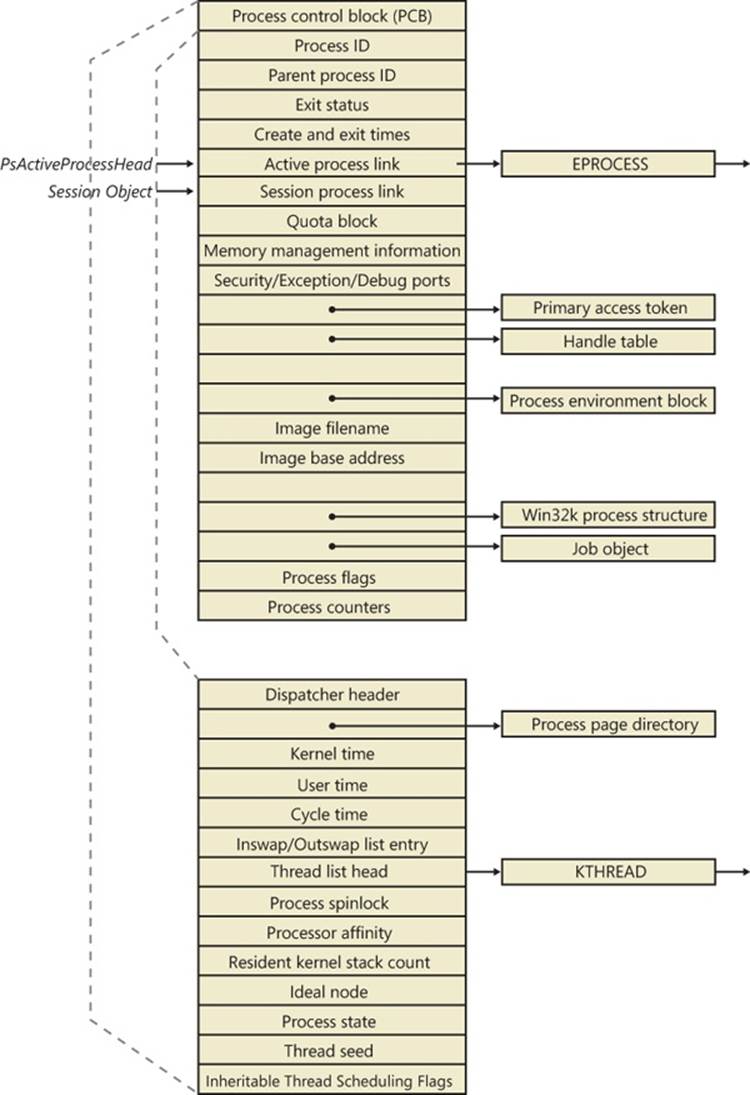

First let’s focus on the process object. (The thread object is covered in the section Thread Internals later in the chapter.) Figure 5-2 shows the key fields in an EPROCESS structure.

Figure 5-2. Important fields of the executive process structure and its embedded kernel process structure

Just as the kernel’s APIs and components are divided into isolated and layered modules with their own naming conventions, the data structures for a process follow a layered design. As shown in Figure 5-2, the first member of the executive process structure is called Pcb, for process control block. It is a structure of type KPROCESS, for kernel process. Although routines in the executive store information in the EPROCESS, the dispatcher, scheduler, and interrupt/time accounting code (being part of the operating system kernel) use the KPROCESS instead. This allows a layer of abstraction to exist between the executive’s high-level functionality and its underlying low-level implementation of certain functions, and it helps prevent unwanted dependencies between the layers.

EXPERIMENT: DISPLAYING THE FORMAT OF AN EPROCESS STRUCTURE AND ITS FIELDS

For a list of the fields that make up an EPROCESS structure and their offsets in hexadecimal, type dt nt!_eprocess in the kernel debugger. (See Chapter 1 for more information on the kernel debugger and how to perform kernel debugging on the local system.) The output (truncated for the sake of space) on a 32-bit system looks like this:

lkd> dt nt!_eprocess

+0x000 Pcb : _KPROCESS

+0x080 ProcessLock : _EX_PUSH_LOCK

+0x088 CreateTime : _LARGE_INTEGER

+0x090 ExitTime : _LARGE_INTEGER

+0x098 RundownProtect : _EX_RUNDOWN_REF

+0x09c UniqueProcessId : Ptr32 Void

...

+0x0dc ObjectTable : Ptr32 _HANDLE_TABLE

+0x0e0 Token : _EX_FAST_REF

...

+0x108 Win32Process : Ptr32 Void

+0x10c Job : Ptr32 _EJOB

...

+0x2a8 TimerResolutionLink : _LIST_ENTRY

+0x2b0 RequestedTimerResolution : Uint4B

+0x2b4 ActiveThreadsHighWatermark : Uint4B

+0x2b8 SmallestTimerResolution : Uint4B

+0x2bc TimerResolutionStackRecord : Ptr32 _PO_DIAG_STACK_RECORD

The first member of this structure (Pcb) is an embedded structure of type KPROCESS. This is where scheduling and time-accounting data is stored. You can display the format of the kernel process structure in the same way as the EPROCESS:

lkd> dt _kprocess

nt!_KPROCESS

+0x000 Header : _DISPATCHER_HEADER

+0x010 ProfileListHead : _LIST_ENTRY

+0x018 DirectoryTableBase : Uint4B

...

+0x074 StackCount : _KSTACK_COUNT

+0x078 ProcessListEntry : _LIST_ENTRY

+0x080 CycleTime : Uint8B

+0x088 KernelTime : Uint4B

+0x08c UserTime : Uint4B

+0x090 VdmTrapcHandler : Ptr32 Void

The dt command also enables you to view the specific contents of one field or multiple fields by typing their names following the structure name—such as dt nt!_eprocess UniqueProcessId, which displays the process ID field. In the case of a field that represents a structure—such as the Pcb field of EPROCESS, which contains the KPROCESS substructure—adding a period after the field name will cause the debugger to display the substructure.

For example, an alternative way to see the KPROCESS is to type dt nt!_eprocess Pcb. You can continue to recurse this way by adding more field names (within KPROCESS) and so on. Finally, to recurse through all the substructures, the –r switch of the dt command allows you to do just that. Adding a number after the switch controls the depth of recursion the command will follow.

The dt command used as shown earlier shows the format of the selected structure, not the contents of any particular instance of that structure type. To show an instance of an actual process, you can specify the address of an EPROCESS structure as an argument to the dt command. You can get the addresses of almost all of the EPROCESS structures in the system by using the !process 0 0 command (the exception being the system idle process). Because the KPROCESS is the first thing in the EPROCESS, the address of an EPROCESS will also work as the address of a KPROCESS with dt _kprocess.
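The reason an EPROCESS address doubles as a KPROCESS address can be illustrated outside the debugger. The following is a minimal sketch using Python's ctypes module; the two structures are toy stand-ins with a handful of fields chosen for illustration, not the real kernel layouts, and the helper name view_as_kprocess is hypothetical.

```python
import ctypes


class KPROCESS(ctypes.Structure):
    # Toy stand-in: two illustrative fields, NOT the real kernel layout.
    _fields_ = [
        ("DirectoryTableBase", ctypes.c_uint32),
        ("BasePriority", ctypes.c_int8),
    ]


class EPROCESS(ctypes.Structure):
    # Pcb is declared first, so the KPROCESS is embedded at offset 0.
    _fields_ = [
        ("Pcb", KPROCESS),
        ("UniqueProcessId", ctypes.c_uint32),
    ]


def view_as_kprocess(eprocess):
    """Reinterpret the memory of an EPROCESS as its embedded KPROCESS."""
    ptr = ctypes.cast(ctypes.pointer(eprocess), ctypes.POINTER(KPROCESS))
    return ptr.contents


proc = EPROCESS()
proc.Pcb.BasePriority = 8
proc.UniqueProcessId = 0x0BCC

kproc = view_as_kprocess(proc)

# The embedded structure starts where the outer structure starts,
# so both views share one address and see the same data.
print(EPROCESS.Pcb.offset)
print(ctypes.addressof(proc) == ctypes.addressof(kproc))
print(kproc.BasePriority)
```

Because Pcb sits at offset 0, no pointer arithmetic is needed to move between the two views, which is the property dt _kprocess relies on when it is handed an EPROCESS address.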

Processes and threads are such integral parts of Windows that it’s impossible to talk about them without referring to many other parts of the system. To keep the length of this chapter manageable, however, those related subjects (such as memory management, security, objects, and handles) are covered elsewhere.

EXPERIMENT: USING THE KERNEL DEBUGGER !PROCESS COMMAND

The kernel debugger !process command displays a subset of the information in a process object and its associated structures. This output is arranged in two parts for each process. First you see the information about the process, as shown here. (When you don’t specify a process address or ID, !process lists information for the process owning the thread currently running on CPU 0, which will be WinDbg itself on a single-processor system.)

lkd> !process

PROCESS 85857160 SessionId: 1 Cid: 0bcc Peb: 7ffd9000 ParentCid: 090c

DirBase: b45b0820 ObjectTable: b94ffda0 HandleCount: 99.

Image: windbg.exe

VadRoot 85a1c8e8 Vads 97 Clone 0 Private 5919. Modified 153. Locked 1.

DeviceMap 9d32ee50

Token ebaa1938

...

PageFaultCount 37066

MemoryPriority BACKGROUND

BasePriority 8

CommitCharge 6242

After the basic process output comes a list of the threads in the process. That output is explained in the EXPERIMENT: Using the Kernel Debugger !thread Command section later in the chapter.

Other commands that display process information include !handle, which dumps the process handle table (which is described in more detail in the section Object Handles and the Process Handle Table in Chapter 3). Process and thread security structures are described in Chapter 6.

Note that the output gives you the address of the PEB, which you can use with the !peb command shown in the next experiment to see the PEB of an arbitrary process. However, because the PEB is in the user-mode address space, it is valid only within the context of its own process. To look at the PEB of another process, you must first switch WinDbg to that process. You can do this with the .process command, followed by the EPROCESS pointer.

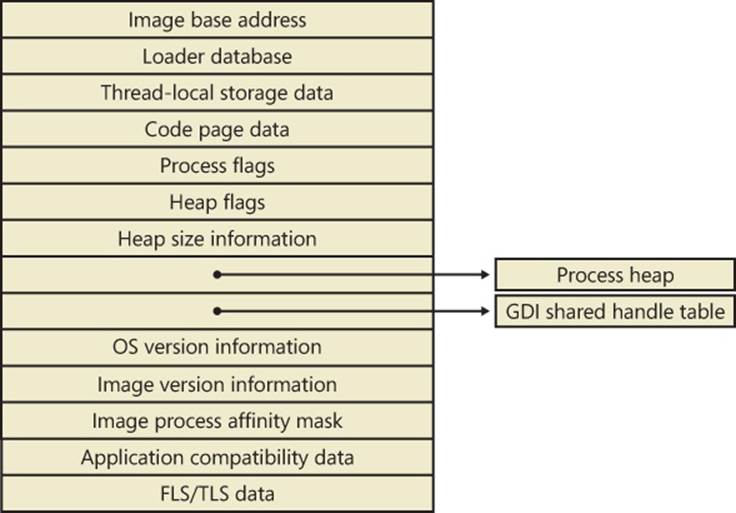

The PEB lives in the user-mode address space of the process it describes. It contains information needed by the image loader, the heap manager, and other Windows components that need to access it from user mode. The EPROCESS and KPROCESS structures are accessible only from kernel mode. The important fields of the PEB are illustrated in Figure 5-3 and are explained in more detail later in this chapter.

Figure 5-3. Fields of the process environment block

EXPERIMENT: EXAMINING THE PEB

You can dump the PEB structure with the !peb command in the kernel debugger, which displays the PEB of the process that owns the currently running thread on CPU 0. By using the information in the previous experiment, you can also use the PEB pointer as an argument to the command.

lkd> !peb 7ffd9000

PEB at 7ffd9000

InheritedAddressSpace: No

ReadImageFileExecOptions: No

BeingDebugged: No

ImageBaseAddress: 002a0000

Ldr 77895d00

...

WindowTitle: 'C:\Users\Alex Ionescu\Desktop\WinDbg.lnk'

ImageFile: 'C:\Program Files\Debugging Tools for Windows\windbg.exe'

CommandLine: '"C:\Program Files\Debugging Tools for Windows\windbg.exe" '

DllPath: 'C:\Program Files\Debugging Tools for Windows;C:\Windows\

system32;C:\Windows\system;C:\Windows

Environment: 001850a8

ALLUSERSPROFILE=C:\ProgramData

APPDATA=C:\Users\Alex Ionescu\AppData\Roaming

...

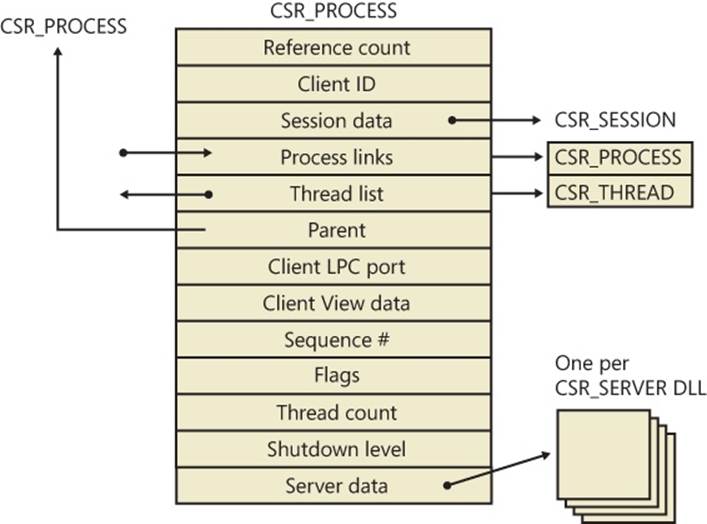

The CSR_PROCESS structure contains information about processes that is specific to the Windows subsystem (Csrss). As such, only Windows applications have a CSR_PROCESS structure associated with them (for example, Smss does not). Additionally, because each session has its own instance of the Windows subsystem, the CSR_PROCESS structures are maintained by the Csrss process within each individual session. The basic structure of the CSR_PROCESS is illustrated in Figure 5-4 and is explained in more detail later in this chapter.

Figure 5-4. Fields of the CSR process structure

EXPERIMENT: EXAMINING THE CSR_PROCESS

You can dump the CSR_PROCESS structure with the !dp command in the user-mode debugger while attached to the Csrss process of the session you want to inspect. Use the File, Attach To A Process option to get a list of processes, and select the Csrss process for the correct session. (You can see the session of the process by expanding the tree item for it.) Make sure to select the Noninvasive check box to avoid freezing your system.

The !dp command takes as input the PID of the process whose CSR_PROCESS structure should be dumped. Alternatively, the structure pointer can be given directly as an argument. Because !dp already performs a dt command internally, there is no need to use dt on your own.

0:000> !dp v 0x1c0aa8-8

PCSR_PROCESS @ 001c0aa0:

+0x000 ClientId : _CLIENT_ID

+0x008 ListLink : _LIST_ENTRY [ 0x1d8618 - 0x1b1b10 ]

+0x010 ThreadList : _LIST_ENTRY [ 0x1c0b80 - 0x1c7638 ]

+0x018 NtSession : 0x001c0bb8 _CSR_NT_SESSION

...

+0x054 Luid : _LUID

+0x05c ServerDllPerProcessData : [1] (null)

Threads:

Thread 001c0b78, Process 001c0aa0, ClientId 198.19c, Flags 0, Ref Count 1

Thread 001c0e78, Process 001c0aa0, ClientId 198.1cc, Flags 0, Ref Count 1

...

The W32PROCESS structure is the final system data structure associated with processes that we’ll look at. It contains all the information that the Windows graphics and window management code in the kernel (Win32k) needs to maintain state information about GUI processes (which were defined earlier as processes that have done at least one USER/GDI system call). The basic structure of the W32PROCESS is illustrated in Figure 5-5 and is explained in more detail later in this chapter.

Figure 5-5. Fields of the Win32k Process structure

EXPERIMENT: EXAMINING THE W32PROCESS

There is no command provided by the debugger extensions to dump the W32PROCESS structure, but it is present in the symbols of the Win32k driver. As such, by using the dt command with the appropriate symbol name win32k!_W32PROCESS, it is possible to dump the fields as long as the pointer is known. Because the !process command does not actually output this pointer (even though it is stored in the EPROCESS object), the field must be inspected manually with dt nt!_EPROCESS Win32Process followed by an EPROCESS pointer.

In the following example, the W32PROCESS structure for the shell, Explorer.exe, is shown:

lkd> dt win32k!_W32PROCESS 0xff991490

+0x000 Process : 0x84a2b030 _EPROCESS

+0x004 RefCount : 1

...

+0x020 W32Pid : 0x590

+0x024 GDIHandleCount : 383

+0x028 GDIHandleCountPeak : 0x239

+0x02c UserHandleCount : 228

+0x030 UserHandleCountPeak : 0x16c

...

+0x088 hSecureGdiSharedHandleTable : 0x84a24159

+0x08c DxProcess : 0xa2c93980

The DxProcess field is a pointer to yet another per-process data structure—in this case, maintained by the DirectX Video Card Port Driver—but its description is beyond the scope of this book.

Protected Processes

In the Windows security model, any process running with a token containing the debug privilege (such as an administrator’s account) can request any access right that it desires to any other process running on the machine—for example, it can read and write arbitrary process memory, inject code, suspend and resume threads, and query information on other processes. Tools such as Process Explorer and Task Manager need and request these access rights to provide their functionality to users.

This logical behavior (which helps ensure that administrators will always have full control of the running code on the system) clashes with the digital rights management requirements that the media industry imposes on operating systems that need to support playback of advanced, high-quality digital content such as Blu-ray and HD-DVD media. To support reliable and protected playback of such content, Windows uses protected processes. These processes exist alongside normal Windows processes, but they add significant constraints to the access rights that other processes on the system (even when running with administrative privileges) can request.

Protected processes can be created by any application; however, the operating system will allow a process to be protected only if the image file has been digitally signed with a special Windows Media Certificate. The Protected Media Path (PMP) in Windows makes use of protected processes to provide protection for high-value media, and developers of applications such as DVD players can make use of protected processes by using the Media Foundation API.

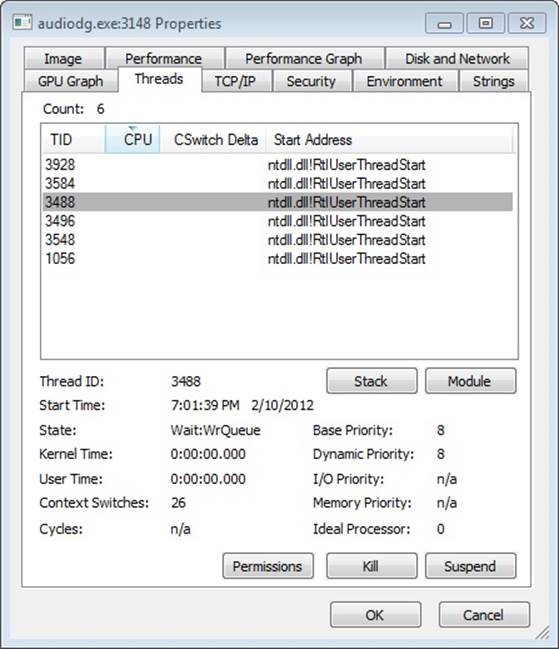

The Audio Device Graph process (Audiodg.exe) is a protected process because protected music content can be decoded through it. Similarly, the Windows Error Reporting (or WER, discussed in Chapter 3) client process (Werfault.exe) can also run protected because it needs to have access to protected processes in case one of them crashes. Finally, the System process itself is protected because some of the decryption information is generated by the Ksecdd.sys driver and stored in its user-mode memory. The System process is also protected to protect the integrity of all kernel handles (because the System process’ handle table contains all the kernel handles on the system).

At the kernel level, support for protected processes is twofold: first, the bulk of process creation occurs in kernel mode to avoid injection attacks. (The flow for both protected and standard process creation is described in detail in the next section.) Second, protected processes have a special bit set in their EPROCESS structure that modifies the behavior of security-related routines in the process manager to deny certain access rights that would normally be granted to administrators. In fact, the only access rights that are granted for protected processes are PROCESS_QUERY/SET_LIMITED_INFORMATION, PROCESS_TERMINATE, and PROCESS_SUSPEND_RESUME. Certain access rights are also disabled for threads running inside protected processes; we will look at those access rights later in this chapter in the section Thread Internals.
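The effect of the protected bit on an access request can be modelled as a simple mask test. The sketch below is an illustration only: the access-right values are those defined in the Windows SDK header winnt.h, but the function access_allowed is hypothetical, and the assumption that a request containing any disallowed right fails as a whole (rather than being trimmed) is ours, not something stated above.

```python
# Process access-right values as defined in the Windows SDK (winnt.h).
PROCESS_TERMINATE = 0x0001
PROCESS_VM_READ = 0x0010
PROCESS_VM_WRITE = 0x0020
PROCESS_SUSPEND_RESUME = 0x0800
PROCESS_QUERY_LIMITED_INFORMATION = 0x1000
PROCESS_SET_LIMITED_INFORMATION = 0x2000

# The only rights the text lists as grantable for a protected process.
ALLOWED_FOR_PROTECTED = (
    PROCESS_QUERY_LIMITED_INFORMATION
    | PROCESS_SET_LIMITED_INFORMATION
    | PROCESS_TERMINATE
    | PROCESS_SUSPEND_RESUME
)


def access_allowed(desired_access, target_is_protected):
    """Hypothetical model of the extra check applied to protected targets.

    Assumption: the request is rejected if it contains any right outside
    the allowed set. Ordinary security checks are not modelled here.
    """
    if not target_is_protected:
        return True
    return (desired_access & ~ALLOWED_FOR_PROTECTED) == 0


# Reading memory of a protected process is refused in this model,
# while terminating or querying limited information is not.
print(access_allowed(PROCESS_VM_READ, target_is_protected=True))
print(access_allowed(PROCESS_TERMINATE, target_is_protected=True))
```

Under this model, a tool that asks for PROCESS_VM_READ together with PROCESS_QUERY_LIMITED_INFORMATION is refused, whereas asking for the limited right alone succeeds.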

Because Process Explorer uses standard user-mode Windows APIs to query information on process internals, it is unable to perform certain operations on such processes. On the other hand, a tool like WinDbg in kernel-debugging mode, which uses kernel-mode infrastructure to obtain this information, will be able to display complete information. See the experiment in the Thread Internals section on how Process Explorer behaves when confronted with a protected process such as Audiodg.exe.

NOTE

As mentioned in Chapter 1, to perform local kernel debugging, you must boot in debugging mode (enabled by using bcdedit /debug on or by using the Msconfig advanced boot options). This protects against debugger-based attacks on protected processes and the Protected Media Path (PMP). When booted in debugging mode, high-definition content playback will not work.

Limiting these access rights reliably allows the kernel to sandbox a protected process from user-mode access. On the other hand, because a protected process is indicated by a flag in the EPROCESS structure, an administrator can still load a kernel-mode driver that disables this bit. However, this would be a violation of the PMP model and considered malicious, and such a driver would likely eventually be blocked from loading on a 64-bit system because the kernel-mode, code-signing policy prohibits the digital signing of malicious code. Even on 32-bit systems, the driver has to be recognized by PMP policy or else the playback will be halted. This policy is implemented by Microsoft and not by any kernel detection. This block would require manual action from Microsoft to identify the signature as malicious and update the kernel.

Flow of CreateProcess

So far, this chapter has shown the various data structures involved in process state manipulation and management, and how various tools and debugger commands can inspect this information. In this section, we’ll see how and when those data structures are created and filled out, as well as the overall creation and termination behaviors behind processes.

A Windows subsystem process is created when an application calls (or eventually ends up in) one of the process-creation functions, such as CreateProcess, CreateProcessAsUser, CreateProcessWithTokenW, or CreateProcessWithLogonW. Creating a Windows process consists of several stages carried out in three parts of the operating system: the Windows client-side library Kernel32.dll (in the case of the CreateProcessAsUser, CreateProcessWithTokenW, and CreateProcessWithLogonW routines, part of the work is first done in Advapi32.dll), the Windows executive, and the Windows subsystem process (Csrss).

Because of the multiple-environment subsystem architecture of Windows, creating an executive process object (which other subsystems can use) is separated from the work involved in creating a Windows subsystem process. So, although the following description of the flow of the Windows CreateProcess function is complicated, keep in mind that part of the work is specific to the semantics added by the Windows subsystem as opposed to the core work needed to create an executive process object.

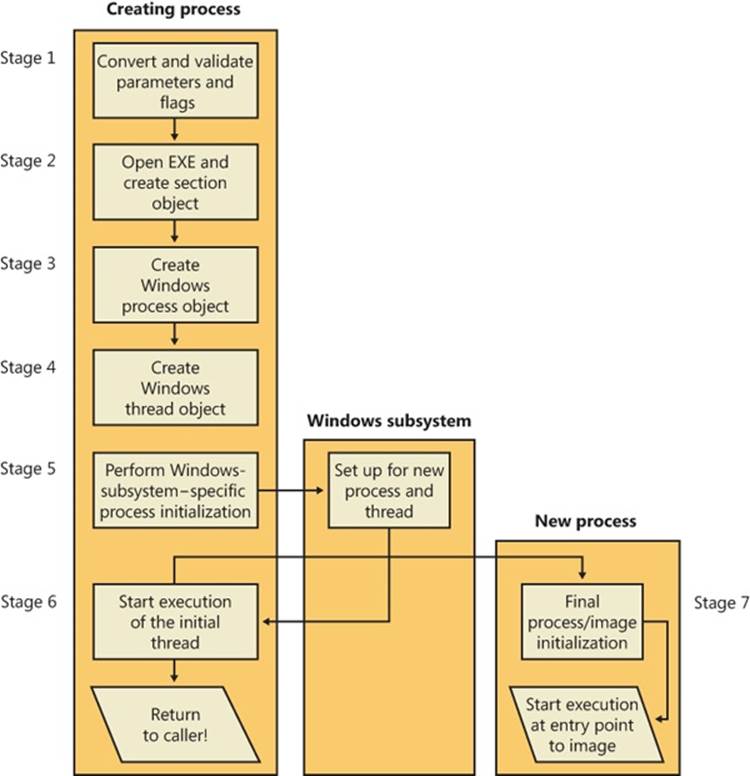

The following list summarizes the main stages of creating a process with the Windows CreateProcess function. The operations performed in each stage are described in detail in the subsequent sections. Some of these operations might be performed by CreateProcess itself (or other helper routines in user mode), while others will be performed by NtCreateUserProcess or one of its helper routines in kernel mode. In our detailed analysis to follow, we will differentiate between the two at each step required.

NOTE

Many steps of CreateProcess are related to the setup of the process virtual address space and therefore refer to many memory management terms and structures that are defined in Chapter 10 in Part 2.

1. Validate parameters; convert Windows subsystem flags and options to their native counterparts; parse, validate, and convert the attribute list to its native counterpart.

2. Open the image file (.exe) to be executed inside the process.

3. Create the Windows executive process object.

4. Create the initial thread (stack, context, and Windows executive thread object).

5. Perform post-creation, Windows-subsystem-specific process initialization.

6. Start execution of the initial thread (unless the CREATE_SUSPENDED flag was specified).

7. In the context of the new process and thread, complete the initialization of the address space (such as load required DLLs) and begin execution of the program.

Figure 5-6 shows an overview of the stages Windows follows to create a process.

Figure 5-6. The main stages of process creation

Stage 1: Converting and Validating Parameters and Flags

Before opening the executable image to run, CreateProcess performs the following steps.

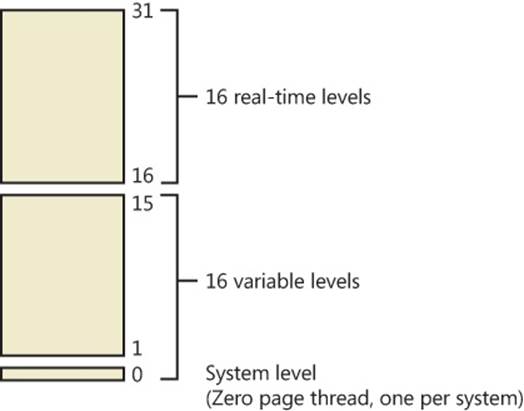

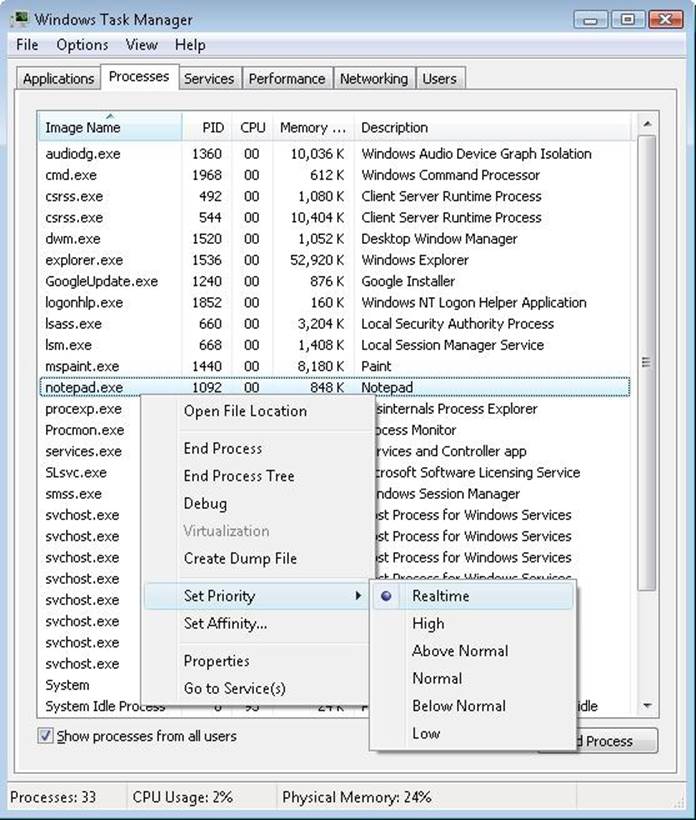

In CreateProcess, the priority class for the new process is specified as independent bits in the CreationFlags parameter. Thus, you can specify more than one priority class for a single CreateProcess call. Windows resolves the question of which priority class to assign to the process by choosing the lowest-priority class set.

If no priority class is specified for the new process, the priority class defaults to Normal unless the priority class of the process that created it is Idle or Below Normal, in which case the new process is given the same priority class as its creator.

If a Real-time priority class is specified for the new process and the process’ caller doesn’t have the Increase Scheduling Priority privilege, the High priority class is used instead. In other words, CreateProcess doesn’t fail just because the caller has insufficient privileges to create the process in the Real-time priority class; the new process just won’t have as high a priority as Real-time.
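The three priority-class rules just described can be combined into one small function. This is a sketch of the rules as stated, not the actual Kernel32 code: the flag values are the documented CreateProcess creation-flag constants, while resolve_priority_class and its parameters are hypothetical names introduced for illustration.

```python
# Priority-class creation flags as documented for CreateProcess.
IDLE_PRIORITY_CLASS = 0x00000040
BELOW_NORMAL_PRIORITY_CLASS = 0x00004000
NORMAL_PRIORITY_CLASS = 0x00000020
ABOVE_NORMAL_PRIORITY_CLASS = 0x00008000
HIGH_PRIORITY_CLASS = 0x00000080
REALTIME_PRIORITY_CLASS = 0x00000100

# Ordered from lowest to highest priority.
LOWEST_TO_HIGHEST = [
    IDLE_PRIORITY_CLASS,
    BELOW_NORMAL_PRIORITY_CLASS,
    NORMAL_PRIORITY_CLASS,
    ABOVE_NORMAL_PRIORITY_CLASS,
    HIGH_PRIORITY_CLASS,
    REALTIME_PRIORITY_CLASS,
]


def resolve_priority_class(creation_flags, parent_class, has_increase_priority):
    """Hypothetical helper applying the three rules from the text."""
    requested = [c for c in LOWEST_TO_HIGHEST if creation_flags & c]
    if requested:
        # Rule 1: several classes may be set; the lowest one wins.
        chosen = requested[0]
    elif parent_class in (IDLE_PRIORITY_CLASS, BELOW_NORMAL_PRIORITY_CLASS):
        # Rule 2: nothing requested and the creator runs at Idle or
        # Below Normal, so the new process inherits that class.
        chosen = parent_class
    else:
        chosen = NORMAL_PRIORITY_CLASS
    # Rule 3: Real-time without the privilege silently becomes High.
    if chosen == REALTIME_PRIORITY_CLASS and not has_increase_priority:
        chosen = HIGH_PRIORITY_CLASS
    return chosen


flags = HIGH_PRIORITY_CLASS | IDLE_PRIORITY_CLASS
print(hex(resolve_priority_class(flags, NORMAL_PRIORITY_CLASS, False)))
```

Note that the function never reports an error for an unprivileged Real-time request, mirroring the point that CreateProcess does not fail in that case.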

All windows are associated with desktops, the graphical representation of a workspace. If no desktop is specified in CreateProcess, the process is associated with the caller’s current desktop.

If the process is part of a job object, but the creation flags requested a separate virtual DOS machine (VDM), the flag is ignored.

If the caller is sending a handle to a monitor as an output handle instead of a console handle, standard handle flags are ignored.

If the creation flags specify that the process will be debugged, Kernel32 initiates a connection to the native debugging code in Ntdll.dll by calling DbgUiConnectToDbg and gets a handle to the debug object from the current thread’s environment block (TEB).

Kernel32.dll sets the default hard error mode if the creation flags specified one.

The user-specified attribute list is converted from Windows subsystem format to native format and internal attributes are added to it. The possible attributes that can be added to the attribute list are listed in Table 5-1, including their documented Windows API counterparts, if any.

NOTE

The attribute list passed on a CreateProcess call permits passing back to the caller information beyond a simple status code, such as the TEB address of the initial thread or information on the image section. This is necessary for protected processes because the parent cannot query this information after the child is created.

Table 5-1. Process Attributes

| Native Attribute | Equivalent Windows Attribute | Type | Description |
| --- | --- | --- | --- |
| PS_CP_PARENT_PROCESS | PROC_THREAD_ATTRIBUTE_PARENT_PROCESS. Also used when elevating | Input | Handle to the parent process. |
| PS_CP_DEBUG_OBJECT | N/A – used when using DEBUG_PROCESS as a flag | Input | Debug object if process is being started debugged. |
| PS_CP_PRIMARY_TOKEN | N/A – used when using CreateProcessAsUser/WithToken | Input | Process token if CreateProcessAsUser was used. |
| PS_CP_CLIENT_ID | N/A – returned by Win32 API as a parameter | Output | Returns the TID and PID of the initial thread and the process. |
| PS_CP_TEB_ADDRESS | N/A – internally used and not exposed | Output | Returns the address of the TEB for the initial thread. |
| PS_CP_FILENAME | N/A – used as a parameter in CreateProcess API | Input | Name of the process that should be created. |
| PS_CP_IMAGE_INFO | N/A – internally used and not exposed | Output | Returns SECTION_IMAGE_INFORMATION, which contains information on the version, flags, and subsystem of the executable, as well as the stack size and entry point. |
| PS_CP_MEM_RESERVE | N/A – internally used by SMSS and CSRSS | Input | Array of virtual memory reservations that should be made during initial process address space creation, allowing guaranteed availability because no other allocations have taken place yet. |
| PS_CP_PRIORITY_CLASS | N/A – passed in as a parameter to the CreateProcess API | Input | Priority class that the process should be given. |
| PS_CP_ERROR_MODE | N/A – passed in through CREATE_DEFAULT_ERROR_MODE flag | Input | Hard error-processing mode for the process. |
| PS_CP_STD_HANDLE_INFO | | Input | Specifies if standard handles should be duplicated, or if new handles should be created. |
| PS_CP_HANDLE_LIST | PROC_THREAD_ATTRIBUTE_HANDLE_LIST | Input | List of handles belonging to the parent process that should be inherited by the new process. |
| PS_CP_GROUP_AFFINITY | PROC_THREAD_ATTRIBUTE_GROUP_AFFINITY | Input | Processor group(s) the thread should be allowed to run on. |
| PS_CP_PREFERRED_NODE | PROC_THREAD_ATTRIBUTE_PREFERRED_NODE | Input | Preferred (ideal) node that should be associated with the process. It affects the node on which the initial process heap and thread stack will be created. |
| PS_CP_IDEAL_PROCESSOR | PROC_THREAD_ATTRIBUTE_IDEAL_PROCESSOR | Input | Preferred (ideal) processor that the thread should be scheduled on. |
| PS_CP_UMS_THREAD | PROC_THREAD_ATTRIBUTE_UMS_THREAD | Input | Contains the UMS attributes, completion list, and context. |
| PS_CP_EXECUTE_OPTIONS | PROC_THREAD_ATTRIBUTE_MITIGATION_POLICY | Input | Contains information on which mitigations (SEHOP, ATL Emulation, NX) should be enabled/disabled for the process. |

Once these steps are completed, CreateProcess performs the initial call to NtCreateUserProcess to attempt creation of the process. Because Kernel32.dll has no idea at this point whether the application image name is a real Windows application or a POSIX, 16-bit, or DOS application, the call might fail—at which point, CreateProcess looks at the error reason and attempts to correct the situation.

Stage 2: Opening the Image to Be Executed

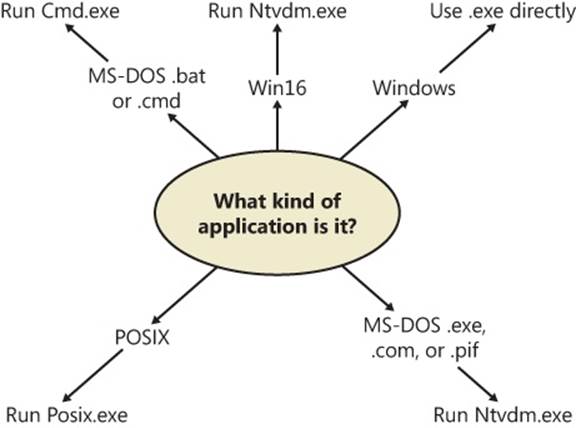

As illustrated in Figure 5-7, the first stage in NtCreateUserProcess is to find the appropriate Windows image that will run the executable file specified by the caller and to create a section object to later map it into the address space of the new process. If the call fails for any reason, it returns to CreateProcess with a failure state (see Table 5-2) that causes CreateProcess to attempt execution again.

If the executable file specified is a Windows .exe, NtCreateUserProcess tries to open the file and create a section object for it. The object isn’t mapped into memory yet, but it is opened. Just because a section object has been successfully created doesn’t mean that the file is a valid Windows image, however; it could be a DLL or a POSIX executable. If the file is a POSIX executable, the image to be run changes to Posix.exe, and CreateProcess restarts from the beginning of Stage 1. If the file is a DLL, CreateProcess fails.

Now that NtCreateUserProcess has found a valid Windows executable image, as part of the process creation code described in Stage 3 it looks in the registry under HKLM\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Image File Execution Options to see whether a subkey with the file name and extension of the executable image (but without the directory and path information; for example, Image.exe) exists there. If it does, PspAllocateProcess looks for a value named Debugger for that key. If this value is present, the image to be run becomes the string in that value and CreateProcess restarts at Stage 1.
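The lookup described above keys on the bare file name, not the full path. The following sketch models that behavior with a plain dictionary standing in for the registry; the helper names ifeo_subkey and image_to_run, the fake_registry contents, and the debugger path C:\Tools\dbg.exe are all hypothetical and exist only for illustration.

```python
import ntpath  # Windows path rules, usable on any platform

IFEO_KEY = (
    r"HKLM\SOFTWARE\Microsoft\Windows NT\CurrentVersion"
    r"\Image File Execution Options"
)


def ifeo_subkey(image_path):
    """Build the subkey path from the file name and extension only."""
    return IFEO_KEY + "\\" + ntpath.basename(image_path)


def image_to_run(image_path, registry):
    """Return (image, restarted) using a dict as a stand-in registry.

    Registry key names are case-insensitive, so keys are lowercased.
    """
    values = registry.get(ifeo_subkey(image_path).lower(), {})
    debugger = values.get("Debugger")
    if debugger:
        # The image to run becomes the Debugger string, and process
        # creation would restart at Stage 1 with that image.
        return debugger, True
    return image_path, False


# Hypothetical registry contents for the demonstration.
fake_registry = {
    ifeo_subkey("notepad.exe").lower(): {"Debugger": r"C:\Tools\dbg.exe"},
}

print(image_to_run(r"C:\Windows\System32\notepad.exe", fake_registry))
print(image_to_run(r"C:\Windows\System32\calc.exe", fake_registry))
```

Because the directory is stripped before the lookup, any copy of an executable with a matching file name is redirected in this model, regardless of where it resides on disk.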

TIP

You can take advantage of this process creation behavior and debug the startup code of Windows services processes before they start rather than attach the debugger after starting a service, which doesn’t allow you to debug the startup code.

On the other hand, if the image is not a Windows .exe (for example, if it’s an MS-DOS, a Win16, or a POSIX application), CreateProcess goes through a series of steps to find a Windows support image to run it. This process is necessary because non-Windows applications aren’t run directly—Windows instead uses one of a few special support images that, in turn, are responsible for actually running the non-Windows program. For example, if you attempt to run a POSIX application, CreateProcess identifies it as such and changes the image to be run to the Windows executable file Posix.exe. If you attempt to run an MS-DOS or a Win16 executable, the image to be run becomes the Windows executable Ntvdm.exe. In short, you can’t directly create a process that is not a Windows process. If Windows can’t find a way to resolve the activated image as a Windows process (as shown in Table 5-2), CreateProcess fails.

Figure 5-7. Choosing a Windows image to activate

Table 5-2. Decision Tree for Stage 1 of CreateProcess

| If the Image . . . | Create State Code | This Image Will Run . . . | . . . and This Will Happen |
| --- | --- | --- | --- |
| Is a POSIX executable file | PsCreateSuccess | Posix.exe | CreateProcess restarts Stage 1. |
| Is an MS-DOS application with an .exe, .com, or .pif extension | PsCreateFailOnSectionCreate | Ntvdm.exe | CreateProcess restarts Stage 1. |
| Is a Win16 application | PsCreateFailOnSectionCreate | Ntvdm.exe | CreateProcess restarts Stage 1. |
| Is a Win64 application on a 32-bit system (or a PPC, MIPS, or Alpha Binary) | PsCreateFailMachineMismatch | N/A | CreateProcess will fail. |
| Has a Debugger key with another image name | PsCreateFailExeName | Name specified in the Debugger key | CreateProcess restarts Stage 1. |
| Is an invalid or damaged Windows EXE | PsCreateFailExeFormat | N/A | CreateProcess will fail. |
| Cannot be opened | PsCreateFailOnFileOpen | N/A | CreateProcess will fail. |
| Is a command procedure (application with a .bat or .cmd extension) | PsCreateFailOnSectionCreate | Cmd.exe | CreateProcess restarts Stage 1. |

Specifically, the decision tree that CreateProcess goes through to run an image is as follows:

§ If the image is an MS-DOS application with an .exe, .com, or .pif extension, a message is sent to the Windows subsystem to check whether an MS-DOS support process (Ntvdm.exe, specified in the registry value HKLM\SYSTEM\CurrentControlSet\Control\WOW\cmdline) has already been created for this session. If a support process has been created, it is used to run the MS-DOS application. (The Windows subsystem sends the message to the VDM [Virtual DOS Machine] process to run the new image.) Then CreateProcess returns. If a support process hasn’t been created, the image to be run changes to Ntvdm.exe and CreateProcess restarts at Stage 1.

§ If the file to run has a .bat or .cmd extension, the image to be run becomes Cmd.exe, the Windows command prompt, and CreateProcess restarts at Stage 1. (The name of the batch file is passed as the first parameter to Cmd.exe.)

§ If the image is a Win16 (Windows 3.1) executable, CreateProcess must decide whether a new VDM process must be created to run it or whether it should use the default sessionwide shared VDM process (which might not yet have been created). The CreateProcess flags CREATE_SEPARATE_WOW_VDM and CREATE_SHARED_WOW_VDM control this decision. If these flags aren’t specified, the registry value HKLM\SYSTEM\CurrentControlSet\Control\WOW\DefaultSeparateVDM dictates the default behavior. If the application is to be run in a separate VDM, the image to be run changes to ntvdm.exe followed by some configuration parameters and the 16-bit process’ name and CreateProcess restarts at Stage 1. Otherwise, the Windows subsystem sends a message to see whether the shared VDM process exists and can be used. (If the VDM process is running on a different desktop or isn’t running under the same security as the caller, it can’t be used and a new VDM process must be created.) If a shared VDM process can be used, the Windows subsystem sends a message to it to run the new image and CreateProcess returns. If the VDM process hasn’t yet been created (or if it exists but can’t be used), the image to be run changes to the VDM support image and CreateProcess restarts at Stage 1.
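The rows of Table 5-2 can be expressed as a lookup table. The sketch below is a simplified model, not the actual CreateProcess logic: `ImageKind`, `TABLE_5_2`, and `next_action` are hypothetical names, and the model ignores the case in which an existing VDM process is reused and CreateProcess simply returns.

```python
from enum import Enum


class ImageKind(Enum):
    POSIX = 1
    MSDOS = 2
    WIN16 = 3
    MACHINE_MISMATCH = 4   # for example, a Win64 image on a 32-bit system
    HAS_DEBUGGER = 5
    BAD_FORMAT = 6
    CANNOT_OPEN = 7
    BATCH = 8


# kind -> (create state code, image that will run).
# None in the second slot means there is no support image and creation fails.
TABLE_5_2 = {
    ImageKind.POSIX: ("PsCreateSuccess", "Posix.exe"),
    ImageKind.MSDOS: ("PsCreateFailOnSectionCreate", "Ntvdm.exe"),
    ImageKind.WIN16: ("PsCreateFailOnSectionCreate", "Ntvdm.exe"),
    ImageKind.MACHINE_MISMATCH: ("PsCreateFailMachineMismatch", None),
    ImageKind.HAS_DEBUGGER: ("PsCreateFailExeName", "<Debugger value>"),
    ImageKind.BAD_FORMAT: ("PsCreateFailExeFormat", None),
    ImageKind.CANNOT_OPEN: ("PsCreateFailOnFileOpen", None),
    ImageKind.BATCH: ("PsCreateFailOnSectionCreate", "Cmd.exe"),
}


def next_action(kind, debugger_image=None):
    """Return (state code, image to run or None, what happens next)."""
    state, image = TABLE_5_2[kind]
    if kind is ImageKind.HAS_DEBUGGER:
        image = debugger_image          # taken from the Debugger value
    if image is None:
        return state, None, "fail"
    return state, image, "restart Stage 1"
```

Keeping the rows in a dictionary makes the two possible outcomes explicit: either a Windows support image is substituted and Stage 1 runs again, or no substitution exists and the call fails.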

Stage 3: Creating the Windows Executive Process Object (PspAllocateProcess)

At this point, NtCreateUserProcess has opened a valid Windows executable file and created a section object to map it into the new process address space. Next it creates a Windows executive process object to run the image by calling the internal system function PspAllocateProcess. Creating the executive process object (which is done by the creating thread) involves the following substages:

§ Setting up the EPROCESS object

§ Creating the initial process address space

§ Initializing the kernel process structure (KPROCESS)

§ Setting up the PEB

§ Concluding the setup of the process address space (which includes initializing the working set list and virtual address space descriptors and mapping the image into address space)

NOTE

The only time there won’t be a parent process is during system initialization. After that point, a parent process is always required to provide a security context for the new process.

Stage 3A: Setting Up the EPROCESS Object

This substage involves the following steps:

1. Inherit the affinity of the parent process, unless it was explicitly set during process creation (through the attribute list).

2. Choose the ideal node that was specified in the attribute list, if any.

3. Inherit the I/O and page priority from the parent process. If there is no parent process, the default page priority (5) and I/O priority (Normal) are used.

4. Set the new process’ exit status to STATUS_PENDING.

5. Choose the hard error processing mode selected by the attribute list; otherwise, inherit the parent’s processing mode if none was given. If no parent exists, use the default processing mode which is to display all errors.

6. Store the parent process’ process ID in the InheritedFromUniqueProcessId field in the new process object.

7. Query the Image File Execution Options key to check if the process should be mapped with large pages. Also, query the key to check if NTDLL has been listed as a DLL that should be mapped with large pages within this process.

8. Query the Image File Execution Options key for a specific NUMA node assignment associated with the process. The assignment can be either based on inheritance (in which the NUMA node will be propagated from the parent) or an explicit NUMA assignment, as long as this assignment does not override the initial NUMA node specified in the attribute list.

9. Disable stack randomization if ASLR was disabled on the executable containing the process.

10. Attempt to acquire all the privileges required for creating the process. Choosing the Real-time process priority class, assigning a token to the new process, mapping the process with large pages, and creating the process within a new session are all operations that require the appropriate privilege.

11. Create the process’ primary access token (a duplicate of its parent’s primary token). New processes inherit the security profile of their parents. If the CreateProcessAsUser function is being used to specify a different access token for the new process, the token is then changed appropriately. This change might happen only if the parent token’s integrity level dominates the integrity level of the access token, and if the access token is a true child or sibling of the parent token. Note that if the parent has the SeAssignPrimaryToken privilege, this will bypass these checks.

12. The session ID of the new process token is now checked to determine if this is a cross-session create, in which case the parent process temporarily attaches to the target session to correctly process quotas and address space creation.

13. Set the new process’ quota block to the address of its parent process’ quota block, and increment the reference count for the parent’s quota block. If the process was created through CreateProcessAsUser, this step won’t occur. Instead, the default quota is created, or a quota matching the user’s profile is selected.

14. The process minimum and maximum working set sizes are set to the values of PspMinimumWorkingSet and PspMaximumWorkingSet, respectively. These values can be overridden if performance options were specified in the PerfOptions key part of Image File Execution Options, in which case the maximum working set is taken from there. Note that the default working set limits are soft limits and are essentially hints, while the PerfOptions working set maximum is a hard limit (that is, the working set will not be allowed to grow past that number).

15. Initialize the address space of the process. (See Stage 3B.) Then detach from the target session if it was different.

16. The group affinity for the process is now chosen if group-affinity inheritance was not used. The default group affinity either will inherit from the parent, if NUMA node propagation was set earlier (the group owning the NUMA node will be used) or be assigned round-robin based on the PspProcessGroupAssignment seed. If the system is in forced group-awareness mode and group 0 was chosen by the selection algorithm, group 1 is chosen instead, as long as it exists.

17. Initialize the KPROCESS part of the process object. (See Stage 3C.)

18. The token for the process is now set.

19. The process’ priority class is set to normal, unless the parent was using idle or the Below Normal process priority class, in which case the parent’s priority is inherited. If a process priority class was set manually through the attribute lists, it is now set.

20. The process handle table is initialized. If the inherit handles flag is set for the parent process, any inheritable handles are copied from the parent’s object handle table into the new process. (For more information about object handle tables, see Chapter 3.) A process attribute can also be used to specify only a subset of handles, which is useful when you are using CreateProcessAsUser to restrict which objects should be inherited by the child process.

21. If performance options were specified through the PerfOptions key, these are now applied. The PerfOptions key includes overrides for the working set limit, I/O priority, page priority, and CPU priority class of the process.

22. The final process priority class and the default quantum for its threads are computed and set.

23. The second stage of address space setup is completed, including the initialization of the PEB (Stage 3D/3E).

24. Mitigation options for No-Execute support are now set.

25. The process PID and creation time are set, although the PID is not yet inserted in the PID handle table, nor is the process inserted in the process lists (that is the job of the insertion stage).
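Two of the inheritance rules in this list (steps 3 and 19) are simple enough to model directly. The sketch below is only an illustration of those rules as stated; the function names and the dictionary shape used for the parent are hypothetical, and later overrides such as the PerfOptions key (step 21) are not modeled.

```python
DEFAULT_PAGE_PRIORITY = 5
DEFAULT_IO_PRIORITY = "Normal"


def inherited_priorities(parent=None):
    """Step 3: I/O and page priority come from the parent, else the defaults."""
    if parent is None:
        return {"page": DEFAULT_PAGE_PRIORITY, "io": DEFAULT_IO_PRIORITY}
    return {"page": parent["page"], "io": parent["io"]}


def initial_priority_class(parent_class=None, requested_class=None):
    """Step 19: Normal, unless the parent is Idle or Below Normal.

    A class requested through the attribute list takes precedence.
    """
    if requested_class is not None:
        return requested_class
    if parent_class in ("Idle", "Below Normal"):
        return parent_class
    return "Normal"
```

Note the asymmetry the model makes visible: a High or Real-time parent does not pass its priority class to its children, whereas a low-priority parent does.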

Stage 3B: Creating the Initial Process Address Space

The initial process address space consists of the following pages:

§ Page directory (there can be more than one on systems whose page tables have more than two levels, such as x86 systems in PAE mode or 64-bit systems)

§ Hyperspace page

§ VAD bitmap page

§ Working set list

To create these pages, the following steps are taken:

1. Page table entries are created in the appropriate page tables to map the initial pages.

2. The number of pages is deducted from the kernel variable MmTotalCommittedPages and added to MmProcessCommit.

3. The systemwide default process minimum working set size (PsMinimumWorkingSet) is deducted from MmResidentAvailablePages.

4. The page table pages for the global system space (that is, other than the process-specific pages we just described, and except session-specific memory) are created.

Stage 3C: Creating the Kernel Process Structure

The next stage of PspAllocateProcess is the initialization of the KPROCESS structure (the Pcb member of the EPROCESS). This work is performed by KeInitializeProcess, which initializes the following:

§ The doubly linked list that connects all threads that are part of the process (initially empty).

§ The initial value (or reset value) of the process default quantum (which is described in more detail in the Thread Scheduling section later in the chapter), which is hard-coded to 6 until it is initialized later (by PspComputeQuantumAndPriority).

NOTE

The default initial quantum differs between Windows client and server systems. For more information on thread quantums, turn to their discussion in the section Thread Scheduling.

§ The process’ base priority is set based on what was computed in Stage 3A.

§ The default processor affinity for the threads in the process is set, as is the group affinity. The group affinity was calculated earlier in Stage 3A or inherited from the parent.

§ The process swapping state is set to resident.

§ The thread seed is based on the ideal processor that the kernel has chosen for this process (which is based on the previously created process’ ideal processor, effectively randomizing this in a round-robin manner). Creating a new process will update the seed in KeNodeBlock (the initial NUMA node block) so that the next new process will get a different ideal processor seed.

Stage 3D: Concluding the Setup of the Process Address Space

Setting up the address space for a new process is somewhat complicated, so let’s look at what’s involved one step at a time. To get the most out of this section, you should have some familiarity with the internals of the Windows memory manager, which are described in Chapter 10 in Part 2.

1. The virtual memory manager sets the value of the process’ last trim time to the current time. The working set manager (which runs in the context of the balance set manager system thread) uses this value to determine when to initiate working set trimming.

2. The memory manager initializes the process’ working set list—page faults can now be taken.

3. The section (created when the image file was opened) is now mapped into the new process’ address space, and the process section base address is set to the base address of the image.

4. Ntdll.dll is mapped into the process; if this is a Wow64 process, the 32-bit Ntdll.dll is also mapped.

5. A new session, if requested, is now created for the process. This special step is mostly implemented for the benefit of the Session Manager (SMSS) when initializing a new session.

6. The standard handles are duplicated, and the new values are written in the process parameters structure.

7. Any memory reservations listed in the attribute list are now processed. Additionally, two flags allow the bulk reservation of the first 1 or 16 MB of the address space. These flags are used internally for mapping real-mode vectors and ROM code, for example (which must be in the low ranges of virtual address space, where normally the heap or other process structures could be located).

8. The user process parameters are written into the process, copied, and fixed up (meaning converted from absolute form to a relative form so that a single memory block is needed).

9. The affinity information is written into the PEB.

10. The MinWin API redirection set is mapped into the process.
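The idea behind the "relative form" mentioned in step 8 is that a block whose internal references are offsets, rather than absolute addresses, can be copied into another address space as a single unit. The sketch below illustrates that idea with a hypothetical layout (a table of offset and length pairs followed by UTF-16 string data); it does not reproduce the real process parameters structure.

```python
import struct


def pack_relative(strings):
    """Pack strings into one block: (offset, length) pairs, then the data.

    Offsets are relative to the start of the block, so the block stays
    valid wherever it is copied.
    """
    header_size = 8 * len(strings)          # two 32-bit fields per string
    table, data = b"", b""
    for s in strings:
        raw = s.encode("utf-16-le")
        table += struct.pack("<II", header_size + len(data), len(raw))
        data += raw
    return table + data


def read_relative(block, index):
    """Read string number 'index' back out of a packed block."""
    offset, length = struct.unpack_from("<II", block, 8 * index)
    return block[offset:offset + length].decode("utf-16-le")
```

Because nothing in the block depends on where it lives, `read_relative(bytes(block), 1)` on a copy returns the same string as on the original.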

NOTE

POSIX processes clone the address space of their parents, so they don’t have to go through these steps to create a new address space. In the case of POSIX applications, the new process’ section base address is set to that of its parent process and the parent’s PEB is cloned for the new process.

Stage 3E: Setting Up the PEB

NtCreateUserProcess calls MmCreatePeb, which first maps the systemwide national language support (NLS) tables into the process’ address space. It next calls MiCreatePebOrTeb to allocate a page for the PEB and then initializes a number of fields, most of them based on internal variables that were configured through the registry, such as MmHeap* values, MmCriticalSectionTimeout, and MmMinimumStackCommitInBytes. Some of these fields can be overridden by settings in the linked executable image, such as the Windows version in the PE header or the affinity mask in the load configuration directory of the PE header.

If the image header characteristics IMAGE_FILE_UP_SYSTEM_ONLY flag is set (indicating that the image can run only on a uniprocessor system), a single CPU (MmRotatingUniprocessorNumber) is chosen for all the threads in this new process to run on. The selection process is performed by simply cycling through the available processors—each time this type of image is run, the next processor is used. In this way, these types of images are spread evenly across the processors.
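The cycling selection for uniprocessor-only images amounts to a round-robin counter. Here is a minimal sketch, assuming the counter is read and then advanced (the exact order in the kernel is not stated in the text); `UniprocessorRotor` is a hypothetical name, and its `next_cpu` field plays the role of MmRotatingUniprocessorNumber.

```python
class UniprocessorRotor:
    """Round-robin choice of a single CPU for uniprocessor-only images."""

    def __init__(self, processor_count):
        self.processor_count = processor_count
        self.next_cpu = 0   # stands in for MmRotatingUniprocessorNumber

    def assign(self):
        """Return a one-bit affinity mask and advance to the next CPU."""
        cpu = self.next_cpu
        self.next_cpu = (self.next_cpu + 1) % self.processor_count
        return 1 << cpu
```

On a four-processor system, successive launches of such images would receive the masks 1, 2, 4, 8, and then 1 again, which is how they end up spread evenly across the processors.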

Stage 3F: Completing the Setup of the Executive Process Object (PspInsertProcess)

Before the handle to the new process can be returned, a few final setup steps must be completed, which are performed by PspInsertProcess and its helper functions:

1. If systemwide auditing of processes is enabled (either as a result of local policy settings or group policy settings from a domain controller), the process’ creation is written to the Security event log.

2. If the parent process was contained in a job, the job is recovered from the job level set of the parent and then bound to the session of the newly created process. Finally, the new process is added to the job.

3. PspInsertProcess inserts the new process object at the end of the Windows list of active processes (PsActiveProcessHead).

4. The process debug port of the parent process is copied to the new child process, unless the NoDebugInherit flag is set (which can be requested when creating the process). If a debug port was specified, it is attached to the new process at this time.

5. Because job objects can now specify restrictions on which group or groups the threads within the processes part of a job can run on, PspInsertProcess must make sure that the group affinity associated with the process would not violate the group affinity associated with the job. An interesting secondary issue to consider is if the job’s permissions grant access to modify the process’ affinity permissions, because a lesser-privileged job object might interfere with the affinity requirements of a more privileged process.

6. Finally, PspInsertProcess creates a handle for the new process by calling ObOpenObjectByPointer, and then returns this handle to the caller. Note that no process creation callback is sent until the first thread within the process is created, and the code always sends process callbacks before sending object manager-based callbacks.

Stage 4: Creating the Initial Thread and Its Stack and Context

At this point, the Windows executive process object is completely set up. It still has no thread, however, so it can’t do anything yet. It’s now time to start that work. Normally, the PspCreateThread routine is responsible for all aspects of thread creation and is called by NtCreateThread when a new thread is being created. However, because the initial thread is created internally by the kernel without user-mode input, the two helper routines that PspCreateThread relies on are used instead: PspAllocateThread and PspInsertThread.

PspAllocateThread handles the actual creation and initialization of the executive thread object itself, while PspInsertThread handles the creation of the thread handle and security attributes and the call to KeStartThread to turn the executive object into a schedulable thread on the system. However, the thread won’t do anything yet—it is created in a suspended state and isn’t resumed until the process is completely initialized (as described in Stage 5).

NOTE

The thread parameter (which can’t be specified in CreateProcess but can be specified in CreateThread) is the address of the PEB. This parameter will be used by the initialization code that runs in the context of this new thread (as described in Stage 6).

PspAllocateThread performs the following steps:

1. It prevents user-mode scheduling (UMS) threads from being created in Wow64 processes, as well as preventing user-mode callers from creating threads in the system process.

2. An executive thread object is created and initialized.

3. If CPU rate limiting is enabled, the CPU quota block is initialized.

4. The various lists used by LPC, I/O Management, and the Executive are initialized.

5. The thread’s create time is set, and its thread ID (TID) is created.

6. Before the thread can execute, it needs a stack and a context in which to run, so these are set up. The stack size for the initial thread is taken from the image—there’s no way to specify another size. If this is a Wow64 process, the Wow64 thread context will also be initialized.

7. The thread environment block (TEB) is allocated for the new thread.

8. The user-mode thread start address is stored in the ETHREAD. This is the system-supplied thread startup function in Ntdll.dll (RtlUserThreadStart). The user’s specified Windows start address is stored in the ETHREAD in a different location so that debugging tools such as Process Explorer can query the information.

9. KeInitThread is called to set up the KTHREAD structure. The thread’s initial and current base priorities are set to the process’ base priority, and its affinity and quantum are set to that of the process. This function also sets the initial thread ideal processor. (See the section Ideal and Last Processor for a description of how this is chosen.) KeInitThread next allocates a kernel stack for the thread and initializes the machine-dependent hardware context for the thread, including the context, trap, and exception frames. The thread’s context is set up so that the thread will start in kernel mode in KiThreadStartup. Finally, KeInitThread sets the thread’s state to Initialized and returns to PspAllocateThread.

10. If this is a UMS thread, PspUmsInitThread is called to initialize the UMS state.

Once that work is finished, NtCreateUserProcess calls PspInsertThread to perform the following steps:

1. A check is made to ensure that the thread’s group affinity does not violate job limitations (which we already described earlier). In the process create path, this check is skipped because it was already done at the earlier stage.

2. Checks are made to ensure that the process hasn’t already been terminated, that the thread hasn’t already been terminated, or that the thread hasn’t even been able to start running. If any of these cases is true, thread creation will fail.

3. The KTHREAD part of the thread object is initialized by calling KeStartThread. This involves inheriting scheduler settings from the owner process, setting the ideal node and processor, updating the group affinity, and inserting the thread in the process list maintained by KPROCESS (a separate list from the one in EPROCESS). Additionally, on x64 systems, another systemwide list of processes, KiProcessListHead, is used by PatchGuard to maintain the integrity of the executive’s PsActiveProcessHead. Finally, the stack count of the process is incremented.

4. The thread count in the process object is incremented, and the owner process’ I/O priority and page priority are inherited. If this is the highest number of threads the process has ever had, the thread count high watermark is updated as well. If this was the second thread in the process, the primary token is frozen (that is, it can no longer be changed, unless the process is a POSIX subsystem process).

5. If the thread is a UMS thread, the count of UMS threads is incremented.

6. The thread is inserted in the process’ thread list, and the thread is suspended if the creating process requested it.

7. If CPU rate limiting is enabled, the rate control APC is initialized and the CpuThrottled bit is set in the KTHREAD.

8. The object is inserted, and any registered thread callbacks are called. If this was the first thread in the process (and therefore, the operation happened as part of the CreateProcess path), the registered kernel process callbacks are also called.

9. The handle is created with ObOpenObjectByPointer.

10. The thread is readied for execution by calling KeReadyThread. It enters the deferred ready queue, the process is paged out, and a page in is requested.
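The bookkeeping in step 4 (thread count, high watermark, and freezing the primary token when the second thread appears) can be modeled in a few lines. This is a sketch only: `ProcessModel` and its field names are hypothetical and do not correspond to actual EPROCESS members.

```python
from dataclasses import dataclass


@dataclass
class ProcessModel:
    active_threads: int = 0
    high_watermark: int = 0      # highest thread count ever reached
    token_frozen: bool = False
    is_posix: bool = False       # POSIX subsystem processes are exempt

    def insert_thread(self):
        self.active_threads += 1
        self.high_watermark = max(self.high_watermark, self.active_threads)
        # The primary token is frozen once a second thread exists.
        if self.active_threads == 2 and not self.is_posix:
            self.token_frozen = True

    def remove_thread(self):
        self.active_threads -= 1
```

The watermark only ever grows, so it still records the peak after threads exit.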

Stage 5: Performing Windows Subsystem–Specific Post-Initialization

Once NtCreateUserProcess returns with a success code, all the necessary executive process and thread objects have been created. Kernel32.dll then performs various operations related to Windows subsystem–specific operations to finish initializing the process.

First of all, various checks are made for whether Windows should allow the executable to run. These checks include validating the image version in the header and checking whether Windows application certification has blocked the process (through a group policy). On specialized editions of Windows Server 2008 R2, such as Windows Web Server 2008 R2 and Windows HPC Server 2008 R2, additional checks are made to see whether the application imports any disallowed APIs.

If software restriction policies dictate, a restricted token is created for the new process. Afterward, the application-compatibility database is queried to see whether an entry exists in either the registry or system application database for the process. Compatibility shims will not be applied at this point—the information will be stored in the PEB once the initial thread starts executing (Stage 6).

At this point, Kernel32.dll sends a message to the Windows subsystem so that it can set up SxS information (see the end of this section for more information on side-by-side assemblies) such as manifest files, DLL redirection paths, and out-of-process execution for the new process. It also initializes the Windows subsystem structures for the process and initial thread. The message includes the following information:

§ Process and thread handles

§ Entries in the creation flags

§ ID of the process’ creator

§ Flag indicating whether the process belongs to a Windows application (so that Csrss can determine whether or not to show the startup cursor)

§ UI language information

§ DLL redirection and .local flags

§ Manifest file information

The Windows subsystem performs the following steps when it receives this message:

1. CsrCreateProcess duplicates a handle for the process and thread. In this step, the usage count of the process and the thread is incremented from 1 (which was set at creation time) to 2.

2. If a process priority class isn’t specified, CsrCreateProcess sets it according to the algorithm described earlier in this section.

3. The Csrss process structure (CSR_PROCESS) is allocated.

4. The new process’ exception port is set to be the general function port for the Windows subsystem so that the Windows subsystem will receive a message when a second-chance exception occurs in the process. (For further information on exception handling, see Chapter 3.)

5. The Csrss thread structure (CSR_THREAD) is allocated and initialized.

6. CsrCreateThread inserts the thread in the list of threads for the process.

7. The count of processes in this session is incremented.

8. The process shutdown level is set to 0x280 (the default process shutdown level—see SetProcessShutdownParameters in the MSDN Library documentation for more information).

9. The new Csrss process structure is inserted into the list of Windows subsystem-wide processes.

10. The per-process data structure used by the kernel-mode part of the Windows subsystem (W32PROCESS) is allocated and initialized.

11. The application start cursor is displayed. This cursor is the familiar rolling doughnut shape, the way that Windows says to the user, “I’m starting something, but you can use the cursor in the meantime.” If the process doesn’t make a GUI call after two seconds, the cursor reverts to the standard pointer. If the process does make a GUI call in the allotted time, CsrCreateProcess waits five seconds for the application to show a window. After that time, CsrCreateProcess resets the cursor again.
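The two timeouts that govern the start cursor in step 11 can be summarized in a small function. This sketch rests on an assumption the text does not settle: the five-second wait is taken to begin at the moment of the first GUI call. The function and constant names are hypothetical.

```python
NO_GUI_TIMEOUT = 2.0    # seconds allowed for the first GUI call
WINDOW_TIMEOUT = 5.0    # further seconds allowed for a window to appear


def cursor_reset_deadline(first_gui_call_at=None):
    """Latest time, in seconds after process start, at which the start
    cursor reverts to the standard pointer in this model.

    Assumption: the five-second wait is counted from the first GUI call.
    """
    if first_gui_call_at is None or first_gui_call_at > NO_GUI_TIMEOUT:
        return NO_GUI_TIMEOUT
    return first_gui_call_at + WINDOW_TIMEOUT
```

A console program that never makes a GUI call therefore loses the start cursor after two seconds, while a GUI program that calls in promptly keeps it for a few seconds longer.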

After Csrss has performed these steps, CreateProcess checks whether the process was run elevated (which means it was executed through ShellExecute and elevated by the AppInfo service after the consent dialog box was shown to the user). This includes checking whether the process was a setup program. If it was, the process’ token is opened, and the virtualization flag is turned on so that the application is virtualized. (See the information on UAC and virtualization in Chapter 6.) If the application contained elevation shims or had a requested elevation level in its manifest, the process is destroyed and an elevation request is sent to the AppInfo service. (See Chapter 6 for more information on elevation.)

Note that most of these checks are not performed for protected processes; because these processes must have been designed for Windows Vista or later, there’s no reason why they should require elevation, virtualization, or application-compatibility checks and processing. Additionally, allowing mechanisms such as the shim engine to use its usual hooking and memory-patching techniques on a protected process would result in a security hole if someone could figure out how to insert arbitrary shims that modify the behavior of the protected process. Moreover, because the Shim Engine is installed by the parent process, which might not have access to its child protected process, even legitimate shimming cannot work.

Stage 6: Starting Execution of the Initial Thread

At this point, the process environment has been determined, resources for its threads to use have been allocated, the process has a thread, and the Windows subsystem knows about the new process. Unless the caller specified the CREATE_SUSPENDED flag, the initial thread is now resumed so that it can start running and perform the remainder of the process initialization work that occurs in the context of the new process (Stage 7).

Stage 7: Performing Process Initialization in the Context of the New Process

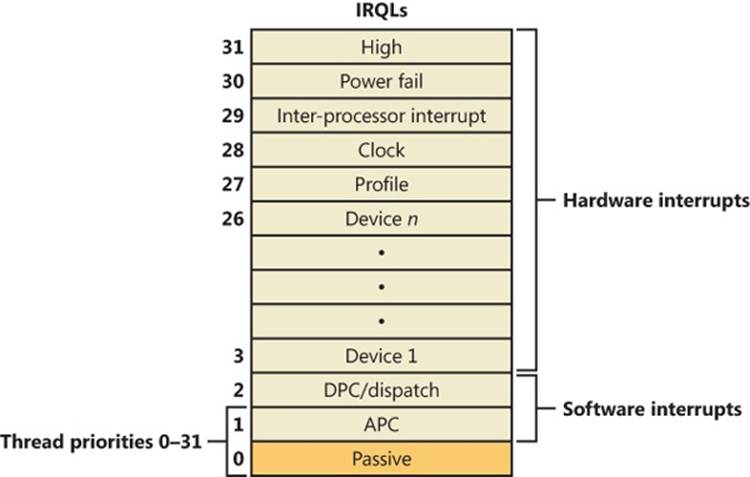

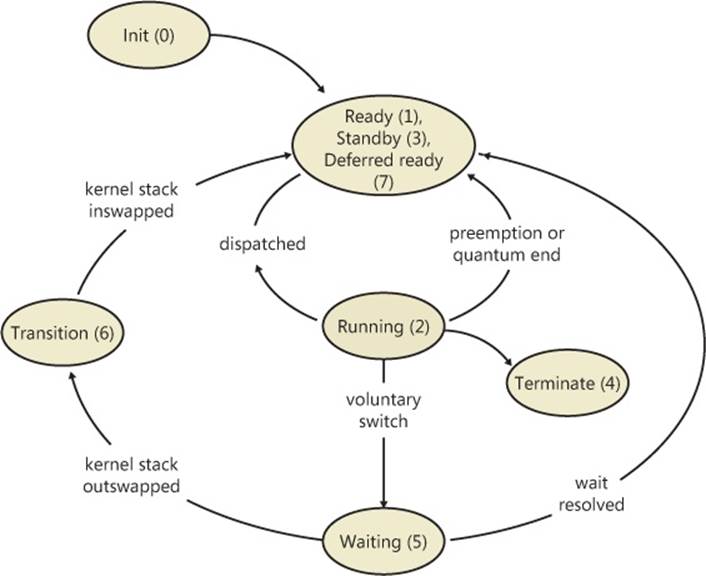

The new thread begins life running the kernel-mode thread startup routine KiThreadStartup. KiThreadStartup lowers the thread’s IRQL level from deferred procedure call (DPC)/dispatch level to APC level and then calls the system initial thread routine, PspUserThreadStartup. The user-specified thread start address is passed as a parameter to this routine.

First, this function disables the ability to swap the primary process token at runtime, which is reserved for POSIX support only (to emulate setuid behavior). It then sets the Locale ID and the ideal processor in the TEB, based on the information present in kernel-mode data structures, and then it checks whether thread creation actually failed. Next it calls DbgkCreateThread, which checks whether image notifications were sent for the new process. If they weren’t, and notifications are enabled, an image notification is sent first for the process and then for the image load of Ntdll.dll. Note that this is done in this stage rather than when the images were first mapped because the process ID (which is required for the kernel callouts) is not yet allocated at that time.

Once those checks are completed, another check is performed to see whether the process is a debuggee. If it is, PspUserThreadStartup checks whether the debugger notifications have already been sent for this process. If not, a create process message is sent through the debug object (if one is present) so that the process startup debug event (CREATE_PROCESS_DEBUG_INFO) can be sent to the appropriate debugger process. This is followed by a similar thread startup debug event and by another debug event for the image load of Ntdll.dll. DbgkCreateThread then waits for a reply from the debugger (via the ContinueDebugEvent function).

Now that the debugger has been notified, PspUserThreadStartup looks at the result of the initial check on the thread’s life. If it was killed on startup, the thread is terminated. This check is done after the debugger and image notifications to be sure that the kernel-mode and user-mode debuggers don’t miss information on the thread, even if the thread never got a chance to run.

Otherwise, the routine checks whether application prefetching is enabled on the system and, if so, calls the prefetcher (and Superfetch) to process the prefetch instruction file (if it exists) and prefetch pages referenced during the first 10 seconds the last time the process ran. (For details on the prefetcher and Superfetch, see Chapter 10 in Part 2.)

PspUserThreadStartup then checks whether the systemwide cookie in the SharedUserData structure has been set up yet. If it hasn’t, it generates it based on a hash of system information such as the number of interrupts processed, DPC deliveries, and page faults. This systemwide cookie is used in the internal decoding and encoding of pointers, such as in the heap manager to protect against certain classes of exploitation. (For more information on heap manager security, see Chapter 10 in Part 2.)
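To illustrate why a secret cookie helps protect stored pointers, the following sketch derives a cookie from a few counters and uses it to obscure a pointer value. Everything here is an assumption made for illustration: the hash function, the 32-bit width, and the plain XOR encoding are stand-ins, not the algorithm Windows actually uses.

```python
import hashlib

MASK32 = 0xFFFFFFFF   # the model treats pointers as 32-bit values


def make_cookie(interrupts, dpcs, page_faults):
    """Derive a cookie from hard-to-predict system counters (illustrative hash)."""
    seed = f"{interrupts}:{dpcs}:{page_faults}".encode()
    return int.from_bytes(hashlib.sha256(seed).digest()[:4], "little")


def encode_pointer(ptr, cookie):
    """Obscure a pointer so that its stored form is not directly usable."""
    return (ptr ^ cookie) & MASK32


def decode_pointer(encoded, cookie):
    """Recover the original pointer; XOR with the same cookie undoes the encoding."""
    return (encoded ^ cookie) & MASK32
```

An attacker who overwrites an encoded pointer without knowing the cookie cannot choose what it decodes to, which is the class of protection the text refers to.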

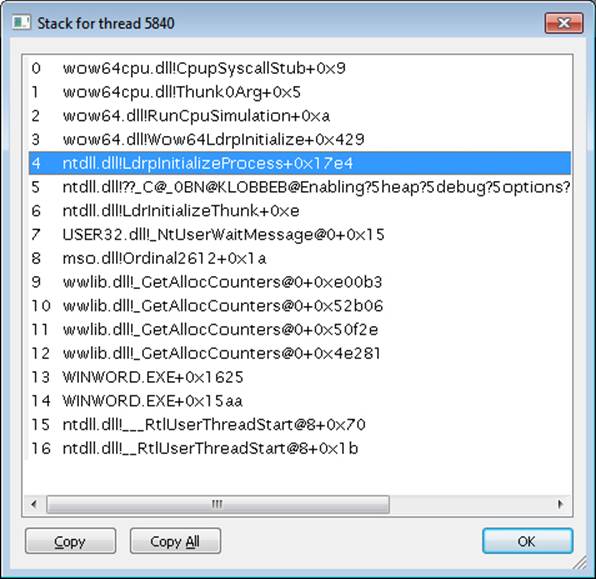

Finally, PspUserThreadStartup sets up the initial thunk context to run the image-loader initialization routine (LdrInitializeThunk in Ntdll.dll), as well as the systemwide thread startup stub (RtlUserThreadStart in Ntdll.dll). These steps are done by editing the context of the thread in place and then issuing an exit from system service operation, which loads the specially crafted user context. The LdrInitializeThunk routine initializes the loader, the heap manager, NLS tables, thread-local storage (TLS) and fiber-local storage (FLS) arrays, and critical section structures. It then loads any required DLLs and calls the DLL entry points with the DLL_PROCESS_ATTACH function code.

Once the function returns, NtContinue restores the new user context and returns to user mode—thread execution now truly starts.

RtlUserThreadStart uses the address of the actual image entry point and the start parameter and calls the application’s entry point. These two parameters have also already been pushed onto the stack by the kernel. This complicated series of events has two purposes. First, it allows the image loader inside Ntdll.dll to set up the process internally and behind the scenes so that other user-mode code can run properly. (Otherwise, it would have no heap, no thread-local storage, and so on.)

Second, having all threads begin in a common routine allows them to be wrapped in exception handling so that when they crash, Ntdll.dll is aware of that and can call the unhandled exception filter inside Kernel32.dll. It is also able to coordinate thread exit on return from the thread’s start routine and to perform various cleanup work. Application developers can also call SetUnhandledExceptionFilter to add their own unhandled exception-handling code.
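The benefit of a common start routine can be modeled in a few lines of Python. This is only an analogy: common_thread_start, create_thread, and the module-level filter variable are hypothetical names, and Python exceptions stand in for the structured exception handling that Ntdll.dll and Kernel32.dll actually use.

```python
import threading

# Hypothetical process-wide filter, standing in for the one that
# SetUnhandledExceptionFilter installs.
_unhandled_exception_filter = None


def set_unhandled_exception_filter(new_filter):
    """Install a filter and return the previous one."""
    global _unhandled_exception_filter
    previous = _unhandled_exception_filter
    _unhandled_exception_filter = new_filter
    return previous


def common_thread_start(entry_point, parameter, record):
    """Every thread begins here, so crashes and exits are seen in one place."""
    try:
        # Normal return: the start routine's result becomes the exit code.
        record["exit_code"] = entry_point(parameter)
    except Exception as exc:
        # Crash: hand the exception to the filter (or use a default code).
        handler = _unhandled_exception_filter
        record["exit_code"] = handler(exc) if handler else 1
        record["crashed"] = True
    finally:
        # Cleanup runs whether the thread returned or crashed.
        record["cleaned_up"] = True


def create_thread(entry_point, parameter):
    """Start entry_point(parameter) underneath the common wrapper."""
    record = {"crashed": False, "cleaned_up": False}
    thread = threading.Thread(
        target=common_thread_start, args=(entry_point, parameter, record)
    )
    thread.start()
    return thread, record


if __name__ == "__main__":
    set_unhandled_exception_filter(lambda exc: 99)
    thread, record = create_thread(lambda p: 1 // p, 0)  # divides by zero
    thread.join()
    print(record)
```

Because the application's start routine is never called directly, the wrapper sees both the crash and the normal-return paths without any cooperation from application code.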

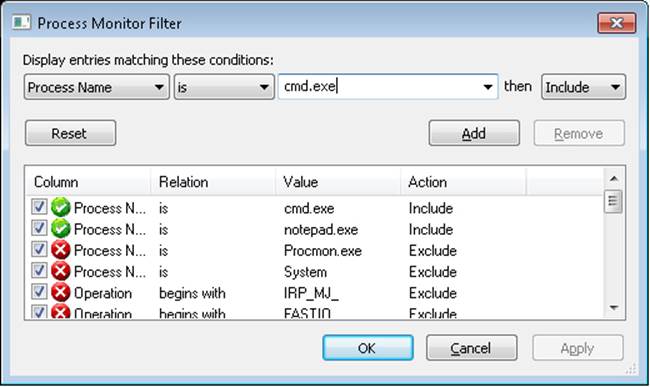

EXPERIMENT: TRACING PROCESS STARTUP

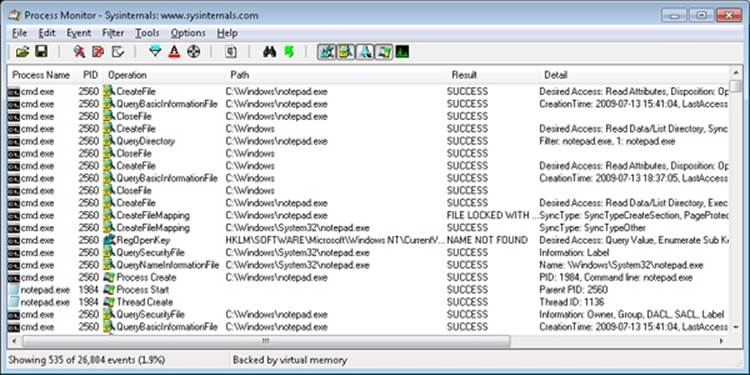

Now that we’ve looked in detail at how a process starts up and the different operations required to begin executing an application, we’re going to use Process Monitor to look at some of the file I/O and registry keys that are accessed during this process.

Although this experiment will not provide a complete picture of all the internal steps we’ve described, you’ll be able to see several parts of the system in action, notably prefetch and Superfetch, image-file execution options and other compatibility checks, and the image loader’s DLL mapping.

We’ll look at a very simple executable—Notepad.exe—and launch it from a Command Prompt window (Cmd.exe). It’s important that we look both at the operations inside Cmd.exe and those inside Notepad.exe. Recall that a lot of the user-mode work is performed by CreateProcess, which is called by the parent process before the kernel has created a new process object.

To set things up correctly, add two filters to Process Monitor: one for Cmd.exe, and one for Notepad.exe—these are the only two processes you should include. Be sure that you don’t have any currently running instances of these two processes so that you know you’re looking at the right events. The filter window should look like this:

Next, make sure that event logging is currently disabled (clear File, Capture Events), and then start up the command prompt. Enable event logging (using the File menu again, or simply press CTRL+E or click the magnifying glass icon on the toolbar), and then type Notepad.exe and press Enter. On a typical Windows system, you should see anywhere between 500 and 1500 events appear. Hide the Sequence and Time Of Day columns so that you can focus your attention on the columns of interest. Your window should look similar to the one shown next.

Just as described in Stage 1 of the CreateProcess flow, one of the first things to notice is that just before the process is started and the first thread is created, Cmd.exe does a registry read at HKLM\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Image File Execution Options. Because there were no image-execution options associated with Notepad.exe, the process was created as is.
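To check by hand what the registry read would find, a small script can query the same location. The sketch below assumes that per-image options live in a subkey named after the executable's file name and that a Debugger value is the option of interest; the helper names ifeo_subkey and read_ifeo_debugger are hypothetical. It relies on Python's standard winreg module, which exists only on Windows, so on other platforms the lookup simply returns None.

```python
try:
    import winreg  # standard library, available only on Windows
except ImportError:
    winreg = None

IFEO_ROOT = (
    r"SOFTWARE\Microsoft\Windows NT\CurrentVersion"
    r"\Image File Execution Options"
)


def ifeo_subkey(image_name):
    """Build the HKLM-relative subkey path for an image.

    Assumes the subkey is named after the file name alone, so any
    directory portion of image_name is stripped.
    """
    base_name = image_name.replace("/", "\\").rsplit("\\", 1)[-1]
    return IFEO_ROOT + "\\" + base_name


def read_ifeo_debugger(image_name):
    """Return the Debugger value for the image, or None if absent."""
    if winreg is None:
        return None  # not running on Windows
    try:
        with winreg.OpenKey(
            winreg.HKEY_LOCAL_MACHINE, ifeo_subkey(image_name)
        ) as key:
            value, _value_type = winreg.QueryValueEx(key, "Debugger")
            return value
    except OSError:
        # Missing key or value: no option of this kind is configured.
        return None


if __name__ == "__main__":
    print(ifeo_subkey("Notepad.exe"))
    print(read_ifeo_debugger("Notepad.exe"))
```

On a system with no options configured for Notepad.exe, the lookup returns None, which corresponds to the process being created as is.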

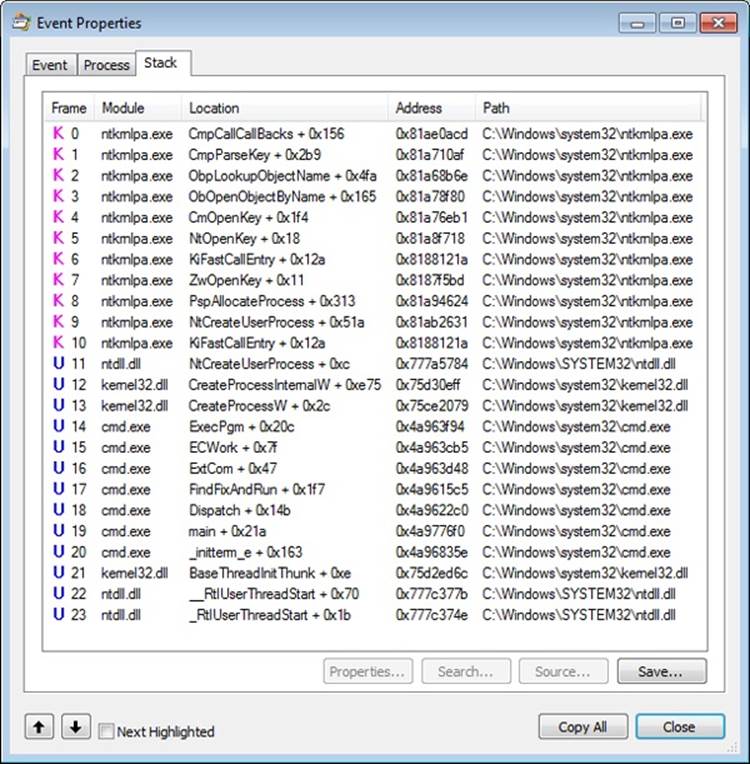

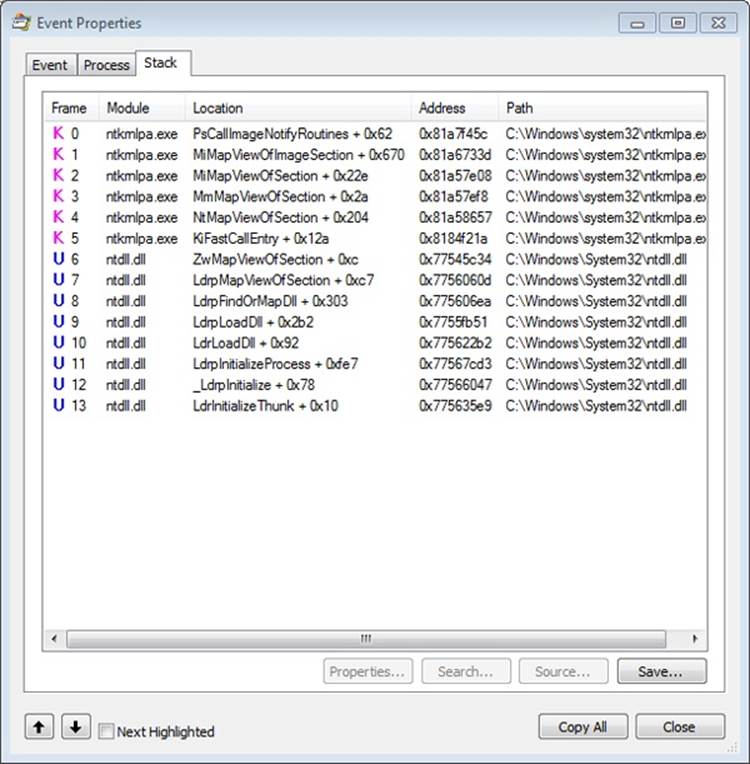

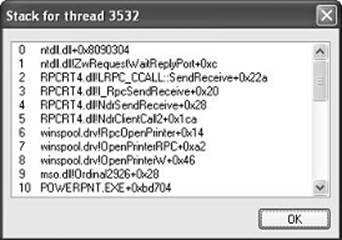

As with this and any other event in Process Monitor’s log, you have the ability to see whether each part of the process creation flow was performed in user mode or kernel mode, and by which routines, by looking at the stack of the event. To do this, double-click on the RegOpenKey event and switch to the Stack tab. The following screen shows the standard stack on a 32-bit Windows machine.

This stack shows that you already reached the part of process creation performed in kernel mode (through NtCreateUserProcess) and that the helper routine PspAllocateProcess is responsible for this check.

Going down the list of events after the thread and process have been created, you will notice three groups of events. The first is a simple check for application-compatibility flags, which will let the user-mode process creation code know if checks inside the application-compatibility database are required through the shim engine.

This check is followed by multiple reads to Side-By-Side, Manifest, and MUI/Language keys, which are part of the assembly framework mentioned earlier. Finally, you might see file I/O to one or more .sdb files, which are the application-compatibility databases on the system. This I/O is where additional checks are done to see if the shim engine needs to be invoked for this application. Because Notepad is a well-behaved Microsoft program, it doesn’t require any shims.

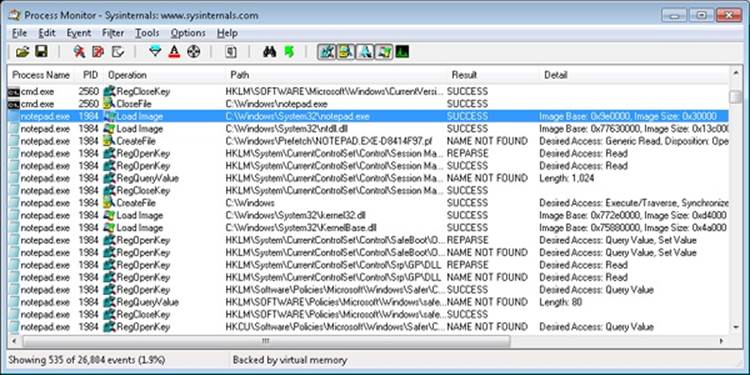

The following screen shows the next series of events, which happen inside the Notepad process itself. These actions are initiated in kernel mode by the user-mode thread startup wrapper (PspUserThreadStartup), which performs the steps described earlier. The first two are the Notepad.exe and Ntdll.dll image load debug notification messages, which can be generated only now that code is running inside Notepad’s process context and not the context for the command prompt.

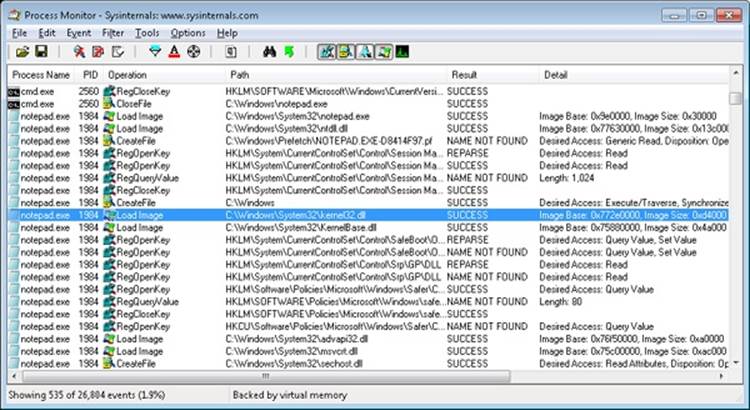

Next, the prefetcher kicks in, looking for a prefetch database file that has already been generated for Notepad. (For more information on the prefetcher, see Chapter 10 in Part 2.) On a system where Notepad has already been run at least once, this database will exist, and the prefetcher will begin executing the commands specified inside it. If this is the case, scrolling down you will see multiple DLLs being read and queried. Unlike typical DLL loading, which is done by the user-mode image loader by looking at the import tables or when an application manually loads a DLL, these events are being generated by the prefetcher, which is already aware of the libraries that Notepad will require. Typical image loading of the DLLs required happens next, and you will see events similar to the ones shown here:

These events are now being generated from code running inside user mode, which was called once the kernel-mode wrapper function finished its work. Therefore, these are the first events coming from LdrpInitializeProcess, which we mentioned is the internal system wrapper function for any new process, before the start address wrapper is called. You can confirm this on your own by looking at the stack of these events—for example, the kernel32.dll image load event, which is shown in the next screen:

Further events are generated by this routine and its associated helper functions until you finally reach events generated by the WinMain function inside Notepad, which is where code under the developer’s control is now being executed. Describing in detail all the events and user-mode components that come into play during process execution would fill up this entire chapter, so exploration of any further events is left as an exercise for the reader.

Thread Internals

Now that we’ve dissected processes, let’s turn our attention to the structure of a thread. Unless explicitly stated otherwise, you can assume that anything in this section applies to both user-mode threads and kernel-mode system threads (which are described in Chapter 2).

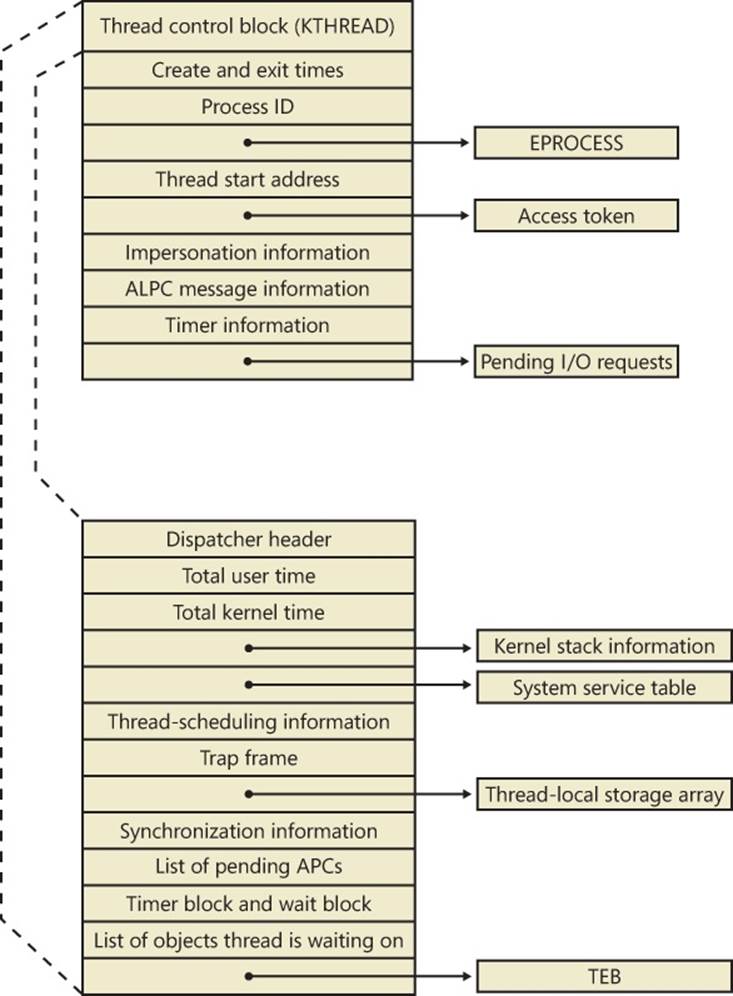

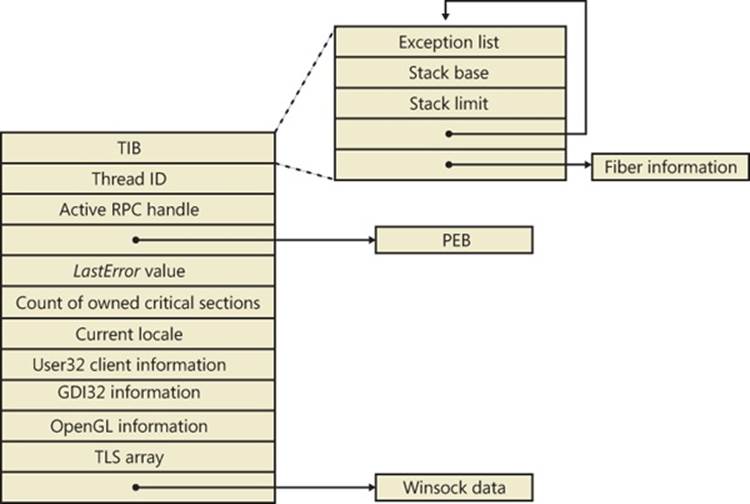

Data Structures

At the operating-system level, a Windows thread is represented by an executive thread object. The executive thread object encapsulates an ETHREAD structure, which in turn contains a KTHREAD structure as its first member. These are illustrated in Figure 5-8. The ETHREAD structure and the other structures it points to exist in the system address space, with the exception of the thread environment block (TEB), which exists in the process address space (again, because user-mode components need to access it).

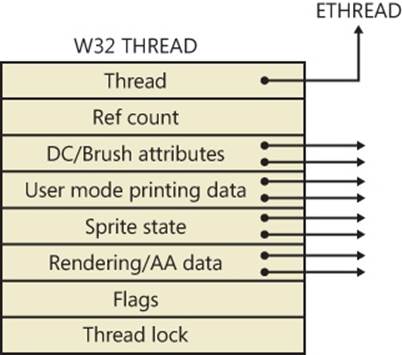

The Windows subsystem process (Csrss) maintains a parallel structure for each thread created in a Windows subsystem application, called the CSR_THREAD. For threads that have called a Windows subsystem USER or GDI function, the kernel-mode portion of the Windows subsystem (Win32k.sys) maintains a per-thread data structure (called the W32THREAD) that the KTHREAD structure points to.

NOTE

The fact that the executive, high-level, graphics-related, Win32k thread structure is pointed to by the KTHREAD, instead of the ETHREAD, appears to be a layer violation or oversight in the standard kernel’s abstraction architecture—the scheduler and other low-level components do not use this field.

Figure 5-8. Important fields of the executive thread structure and its embedded kernel thread structure

Most of the fields illustrated in Figure 5-8 are self-explanatory. The first member of the ETHREAD is called the Tcb, for “Thread control block”; this is a structure of type KTHREAD. Following that are the thread identification information, the process identification information (including a pointer to the owning process so that its environment information can be accessed), security information in the form of a pointer to the access token and impersonation information, and finally, fields relating to Asynchronous Local Procedure Call (ALPC) messages and pending I/O requests. Some of these key fields are covered in more detail elsewhere in this book. For more details on the internal structure of an ETHREAD structure, you can use the kernel debugger dt command to display its format.
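One consequence of placing the KTHREAD first is that a pointer to an ETHREAD is also a valid pointer to its KTHREAD. The following sketch uses Python's ctypes module to show this with two heavily abbreviated, hypothetical structure definitions. Only a few field names are borrowed from the real structures, and the sizes and offsets here do not match any Windows build.

```python
import ctypes


class KTHREAD(ctypes.Structure):
    # Abbreviated stand-in; the real KTHREAD has many more fields.
    _fields_ = [
        ("CycleTime", ctypes.c_uint64),
        ("WaitStatus", ctypes.c_int32),
    ]


class ETHREAD(ctypes.Structure):
    # The embedded KTHREAD (Tcb) is the first member, so it sits at offset 0.
    _fields_ = [
        ("Tcb", KTHREAD),
        ("CreateTime", ctypes.c_int64),
        ("ExitStatus", ctypes.c_int32),
    ]


def kthread_view(ethread):
    """Reinterpret an ETHREAD's address as a KTHREAD without copying."""
    pointer = ctypes.cast(ctypes.pointer(ethread), ctypes.POINTER(KTHREAD))
    return pointer.contents


if __name__ == "__main__":
    thread = ETHREAD()
    thread.Tcb.WaitStatus = 0x102
    print(ETHREAD.Tcb.offset)                  # offset of the Tcb member
    print(kthread_view(thread).WaitStatus)     # same memory, seen as KTHREAD
```

Embedding the structure, rather than pointing to it, lets lower-level code work with the KTHREAD portion without knowing anything about the enclosing ETHREAD.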

Let’s take a closer look at two of the key thread data structures referred to in the preceding text: the KTHREAD and the TEB. The KTHREAD structure (which is the Tcb member of the ETHREAD) contains information that the Windows kernel needs to perform thread scheduling, synchronization, and timekeeping functions.

EXPERIMENT: DISPLAYING ETHREAD AND KTHREAD STRUCTURES

The ETHREAD and KTHREAD structures can be displayed with the dt command in the kernel debugger. The following output shows the format of an ETHREAD on a 32-bit system:

lkd> dt nt!_ethread

nt!_ETHREAD

+0x000 Tcb : _KTHREAD

+0x1e0 CreateTime : _LARGE_INTEGER

+0x1e8 ExitTime : _LARGE_INTEGER

+0x1e8 KeyedWaitChain : _LIST_ENTRY

+0x1f0 ExitStatus : Int4B

...

+0x270 AlpcMessageId : Uint4B

+0x274 AlpcMessage : Ptr32 Void

+0x274 AlpcReceiveAttributeSet : Uint4B

+0x278 AlpcWaitListEntry : _LIST_ENTRY

+0x280 CacheManagerCount : Uint4B

The KTHREAD can be displayed with a similar command or by typing dt nt!_ETHREAD Tcb, as was shown in the EPROCESS/KPROCESS experiment earlier:

lkd> dt nt!_kthread

nt!_KTHREAD

+0x000 Header : _DISPATCHER_HEADER

+0x010 CycleTime : Uint8B

+0x018 HighCycleTime : Uint4B

+0x020 QuantumTarget : Uint8B

...

+0x05e WaitIrql : UChar

+0x05f WaitMode : Char

+0x060 WaitStatus : Int4B

EXPERIMENT: USING THE KERNEL DEBUGGER !THREAD COMMAND

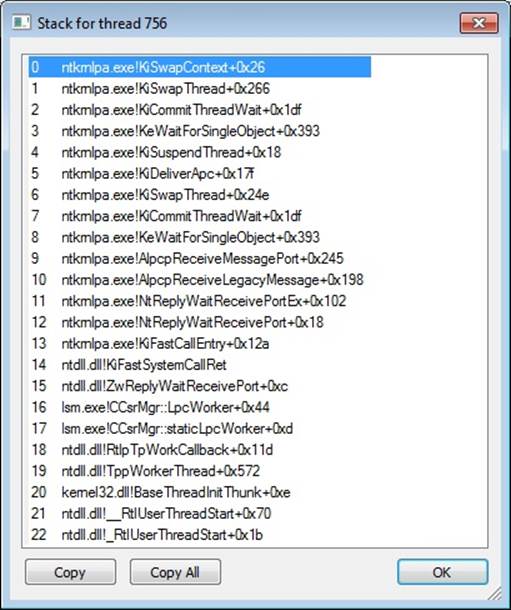

The kernel debugger !thread command dumps a subset of the information in the thread data structures. Some key elements of the information the kernel debugger displays can’t be displayed by any other utility, including the following information: internal structure addresses; priority details; stack information; the pending I/O request list; and, for threads in a wait state, the list of objects the thread is waiting for.

To display thread information, use either the !process command (which displays all the threads of a process after displaying the process information) or the !thread command with the address of a thread object to display a specific thread.

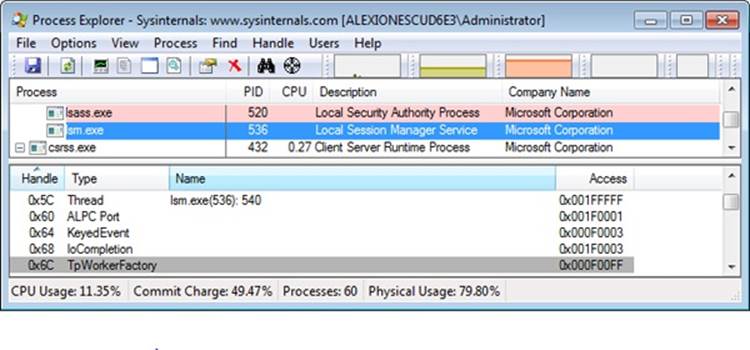

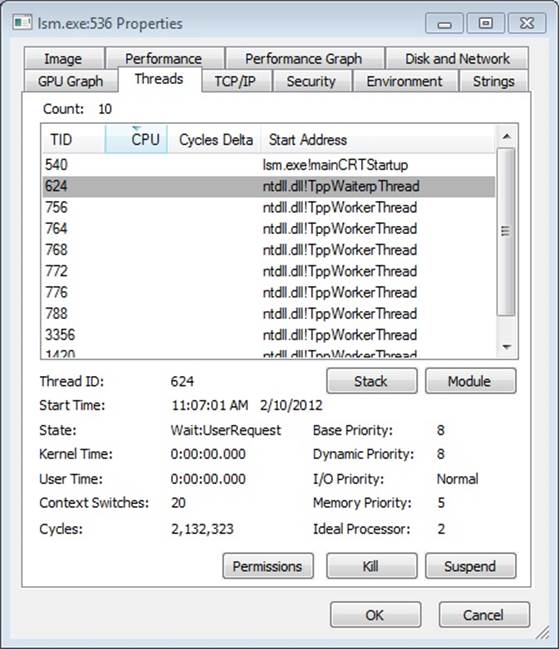

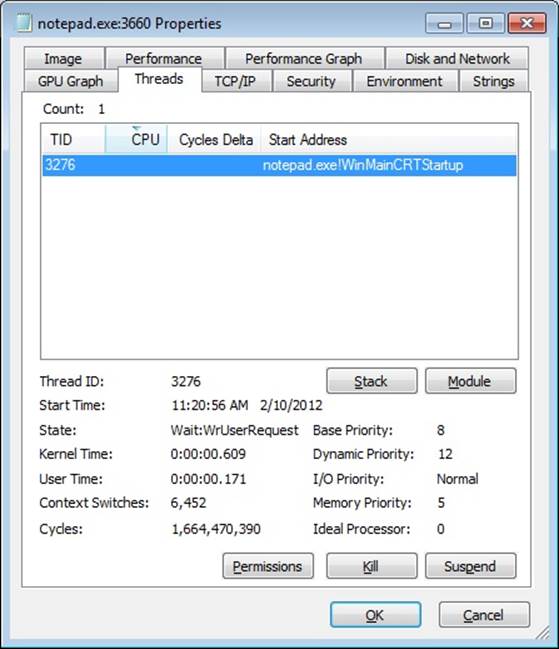

EXPERIMENT: VIEWING THREAD INFORMATION

The following output is the detailed display of a process produced by using the Tlist utility in the Debugging Tools for Windows. Notice that the thread list shows Win32StartAddr. This is the address passed to the CreateThread function by the application. All the other utilities, except Process Explorer, that show the thread start address show the actual start address (a function in Ntdll.dll), not the application-specified start address.

C:\Program Files\Windows Kits\8.0\Debuggers\x86>tlist winword

3232 WINWORD.EXE 648739_Chap05.docx - Microsoft Word

CWD: C:\Users\Alex Ionescu\Documents\

CmdLine: "C:\Program Files\Microsoft Office\Office14\WINWORD.EXE" /n "C:\Users\Alex Ionescu\Documents\Chapter5.docx

VirtualSize: 531024 KB PeakVirtualSize: 585248 KB

WorkingSetSize:122484 KB PeakWorkingSetSize:181532 KB

NumberOfThreads: 12

2104 Win32StartAddr:0x2fde10ec LastErr:0x00000000 State:Waiting

2992 Win32StartAddr:0x7778fd0d LastErr:0x00000000 State:Waiting

3556 Win32StartAddr:0x3877e970 LastErr:0x00000000 State:Waiting

2436 Win32StartAddr:0x3877e875 LastErr:0x00000000 State:Waiting

3136 Win32StartAddr:0x3877e875 LastErr:0x00000000 State:Waiting

3412 Win32StartAddr:0x3877e875 LastErr:0x00000000 State:Waiting

1096 Win32StartAddr:0x3877e875 LastErr:0x00000000 State:Waiting

912 Win32StartAddr:0x74497832 LastErr:0x00000000 State:Waiting

1044 Win32StartAddr:0x389b0926 LastErr:0x00000583 State:Waiting

1972 Win32StartAddr:0x694532fb LastErr:0x00000000 State:Waiting

4056 Win32StartAddr:0x75f9c83e LastErr:0x00000000 State:Waiting

1124 Win32StartAddr:0x777903e9 LastErr:0x00000000 State:Waiting

14.0.5123.5000 shp 0x2FDE0000 C:\Program Files\Microsoft Office\Office14\WINWORD.EXE

6.1.7601.17725 shp 0x77760000 C:\Windows\SYSTEM32\ntdll.dll

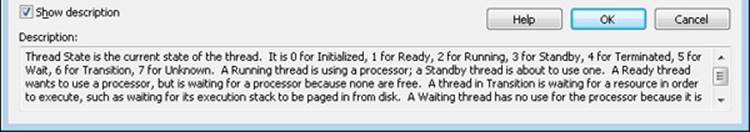

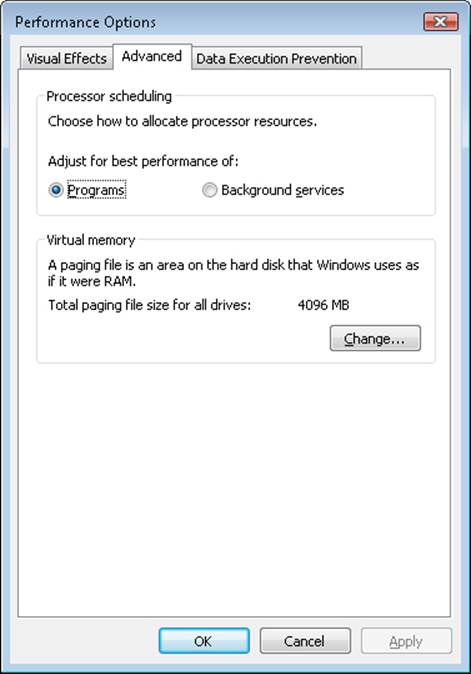

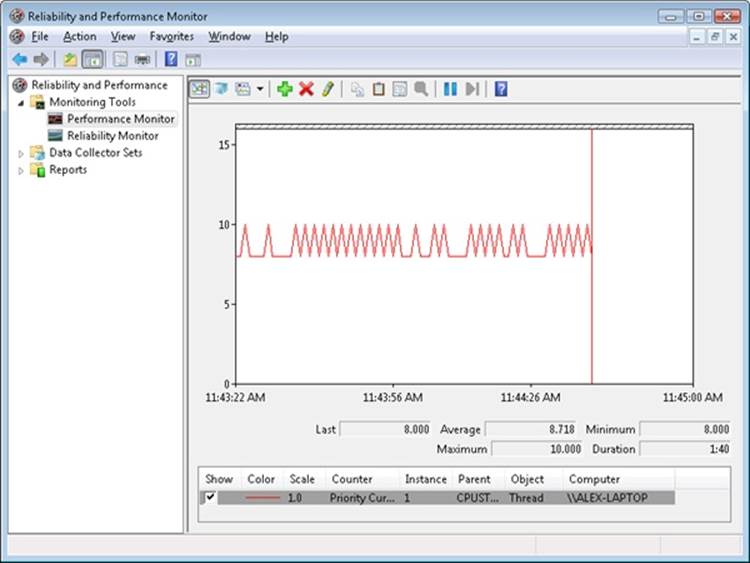

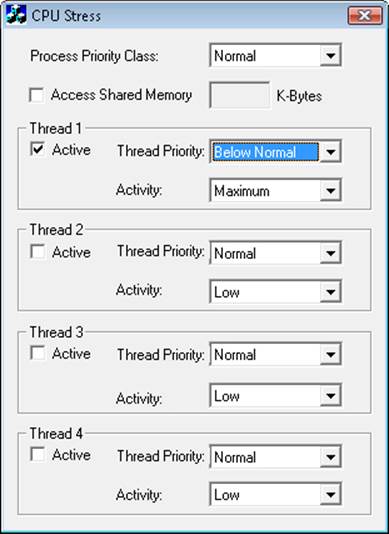

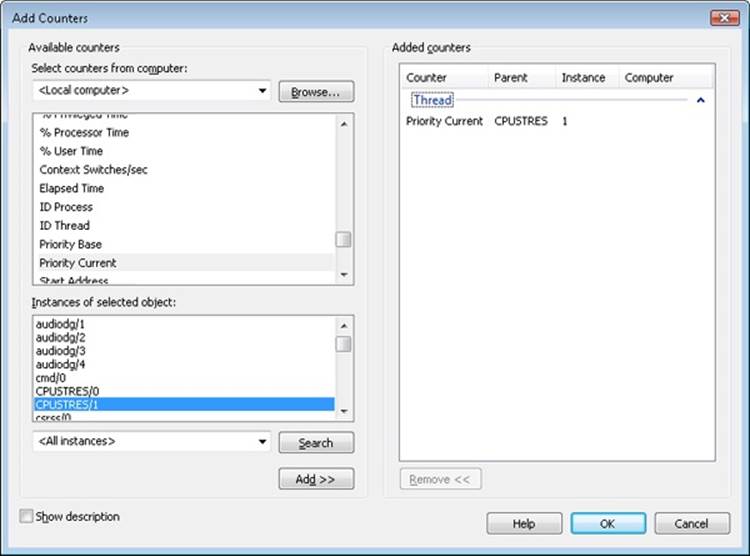

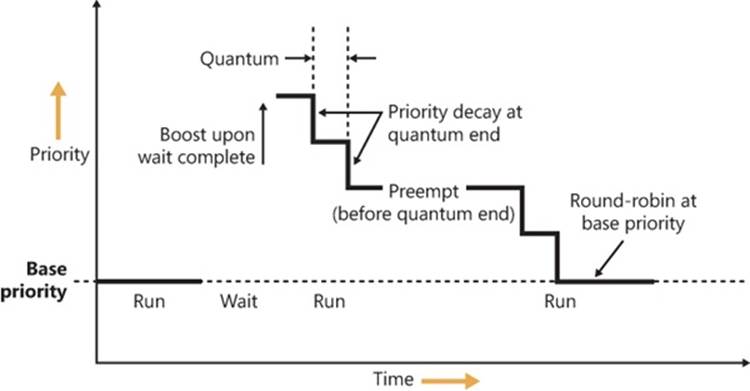

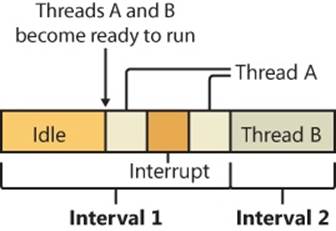

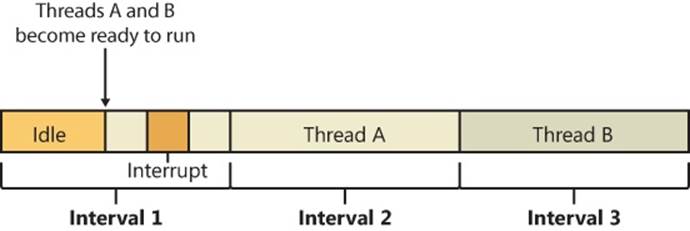

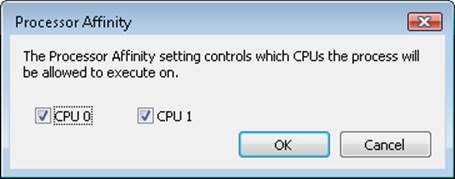

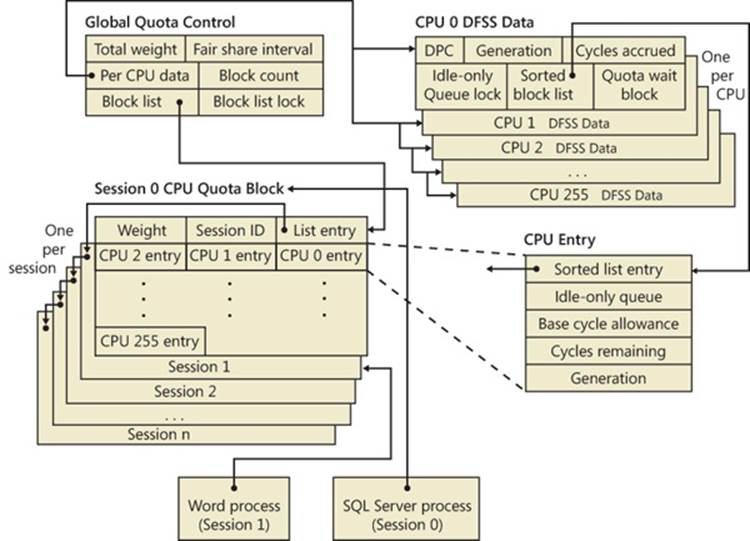

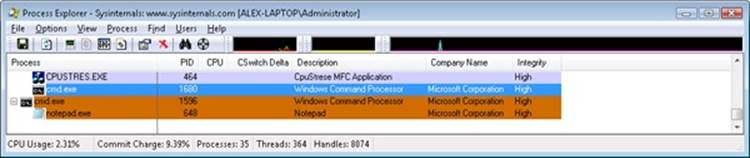

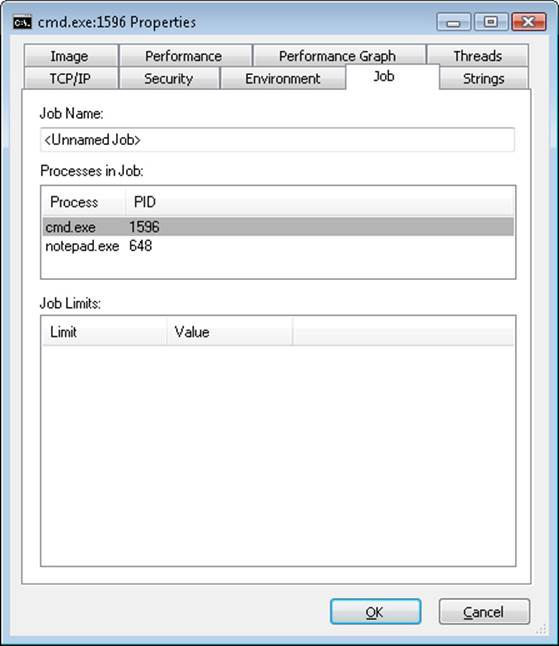

6.1.7601.17651 shp 0x75CE0000 C:\Windows\system32\kernel32.dll