T-SQL Querying (2015)

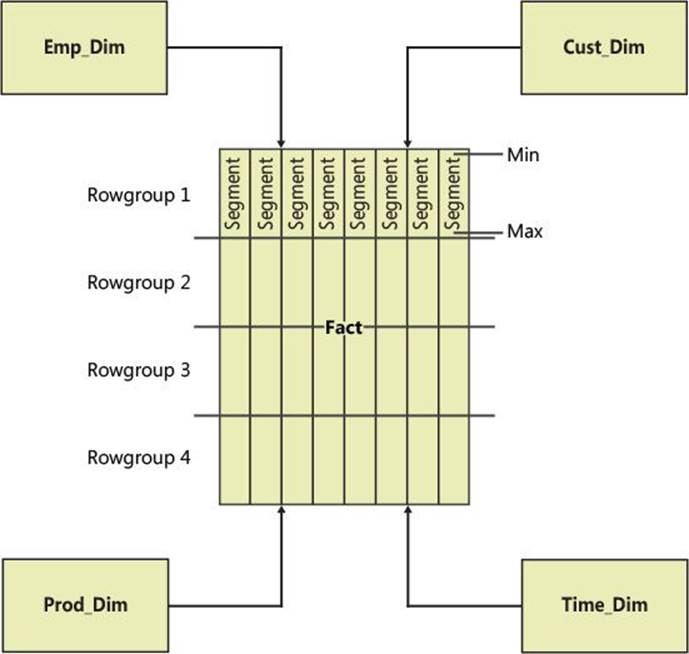

Chapter 2. Query tuning

Chapter 1, “Logical query processing,” focused on the logical design of SQL. This chapter focuses on the physical implementation in the Microsoft SQL Server platform. Because SQL is based on a standard, you will find that the core language elements look the same in the different database platforms. However, because the physical layer is not based on a standard, there you will see bigger differences between the different platforms. Therefore, to be good at query tuning you need to get intimately familiar with the physical layer in the particular platform you are working with. This chapter covers various aspects of the physical layer in SQL Server to give you a foundation for query tuning.

To tune queries, you need to analyze query execution plans and look at performance measures that different performance tools give you. A critical step in this process is to try and identify which activities in the plan represent more work, and somehow connect the relevant parts of the performance measures to those activities. Once you have this part figured out, you need to try and eliminate or reduce the activities that represent the majority of the work.

To understand what you see in the query execution plans, you need to be very familiar with access methods. To understand access methods, well, you need to be very familiar with the internal data structures that SQL Server uses.

This chapter starts by covering internal data structures. It then describes tools you will use to measure query performance. Those topics are followed by a detailed discussion of access methods and an examination of query-execution plans involving those. This chapter also covers cardinality estimates, indexing features, prioritizing queries for tuning, index and query information and statistics, temporary tables, set-based versus iterative solutions, query tuning with query revisions, and parallel query execution.

This chapter focuses on the traditional disk-based tables. Memory-optimized tables are covered separately in Chapter 10, “In-Memory OLTP.”

Internals

This section focuses on internal data structures in SQL Server. It starts with a description of pages and extents. It then goes into a discussion about organizing tables as a heap versus as a B-tree and nonclustered indexes.

Pages and extents

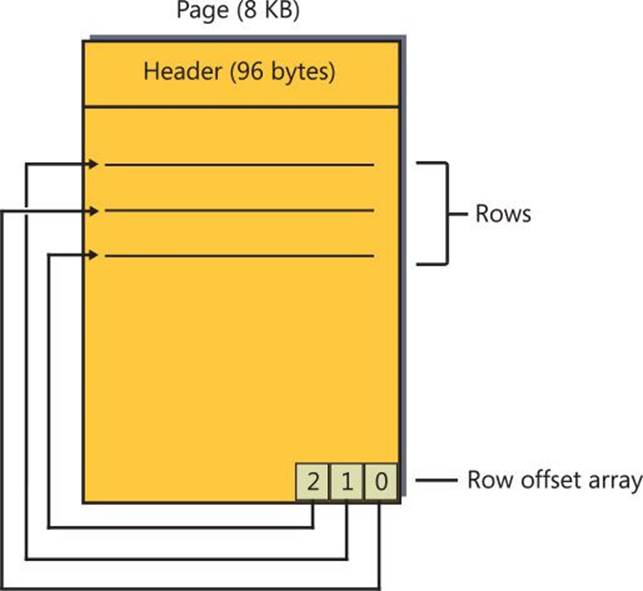

A page is an 8-KB unit where SQL Server stores data. With disk-based tables, the page is the smallest I/O unit that SQL Server can read or write. Things change in this respect with memory-optimized tables, but as mentioned, those issues are discussed separately in Chapter 10. Figure 2-1shows an illustration of the page structure.

FIGURE 2-1 A diagram of a page.

The 96-byte header of the page holds information such as which allocation unit the page belongs to, pointers to the previous and next pages in the linked list in case the page is part of an index, the amount of free space in the page, and more. Based on the allocation unit, you can eventually figure out which object the page belongs to through the views sys.allocation_units and sys.partitions. For data and index pages, the body of the page contains the data or index records. At the end of the page, there’s the row-offset array, which is an array of two-byte pointers that point to the individual records in the page. The combination of the previous and next pointers in the page header and the row-offset array enforce index key order.

To work with a page for read or write purposes, SQL Server needs the page in the buffer pool (the data cache in memory). SQL Server cannot interact with a page directly in the data file on disk. If SQL Server needs to read a page and the page in question is already in the data cache as the result of some previous activity, it will perform only a logical read (read from memory). If the page is not already in memory, SQL Server will first perform a physical read, which pulls the page from the data file to memory, and then it will perform the logical read. Similarly, if SQL Server needs to write a page and the page is already in memory, it will perform a logical write. It will also set the dirty flag in the header of the page in memory to indicate that the state in memory is more recent than the state in the data file.

SQL Server periodically runs processes called lazywriter and checkpoint to write dirty pages from cache to disk, and it will sometimes use other threads to aid in this task. SQL Server uses an algorithm called LRU-K2 to prioritize which pages to free from the data cache based on the last two visits (recorded in the page header in memory). SQL Server uses mainly the lazywriter process to mark pages as free based on this algorithm.

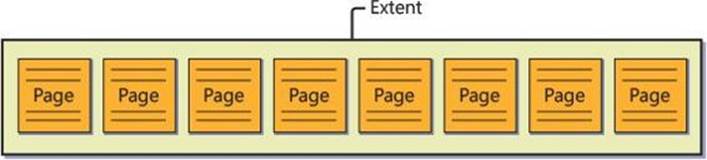

An extent is a unit that contains eight contiguous pages, as shown in Figure 2-2.

FIGURE 2-2 A diagram of an extent.

SQL Server supports two types of extents, called mixed and uniform. For new objects (tables or indexes), SQL Server allocates one page at a time from mixed extents until they reach a size of eight pages. So different pages of the same mixed extent can belong to different objects. SQL Server uses bitmap pages called shared global allocation maps (SGAMs) to keep track of which extents are mixed and have a page available for allocation. Once an object reaches a size of eight pages, SQL Server applies all future allocations of pages for it from uniform extents. A uniform extent is entirely owned by the same object. SQL Server uses bitmap pages called global allocation maps (GAMs) to keep track of which extents are free for allocation.

Table organization

A table can be organized in one of two ways—either as a heap or as a B-tree. Technically, the table is organized as a B-tree when it has a clustered index defined on it and as a heap when it doesn’t. You can create a clustered index directly using the CREATE CLUSTERED INDEX command or, starting with SQL Server 2014, using an inline clustered index definition INDEX <index_name> CLUSTERED. You can also create a clustered index indirectly through a primary key or unique constraint definition. When you add a primary-key constraint to a table, SQL Server will enforce it using a clustered index unless either you specify the NONCLUSTERED keyword explicitly or a clustered index already exists on the table. When you add a unique constraint to a table, SQL Server will enforce it using a nonclustered index unless you specify the CLUSTERED keyword. Either way, as mentioned, with a clustered index the table is organized as a B-tree and without one it is organized as a heap. Because a table must be organized in one of these two ways—heap or B-tree—the table organization is known as HOBT.

It’s far more common to see tables organized as B-trees in SQL Server. The main reason is probably that when you define a primary-key constraint, SQL Server enforces it with a clustered index by default. So, in cases where people create tables without thinking of the physical organization and define primary keys with their defaults, they end up with B-trees. As a result, there’s much more experience in SQL Server with B-trees than with heaps. Also, a clustered index gives a lot of control both on insertion patterns and on read patterns. For these reasons, with all other things being equal, I will generally prefer the B-tree organization by default.

There are cases where a heap can give you benefits. For example, in Chapter 6, “Data modification,” I describe bulk-import tools and the conditions you need to meet for the import to be processed with minimal logging. If the target table is a B-tree, it must be empty when you start the import if you want to get minimal logging. If it’s a heap, you can get minimal logging even if it already contains data. So, when the target table already contains some data and you’re adding a lot more, you can get better import performance if you first drop the indexes, do the import into a heap, and then re-create the indexes. In this example, you use a heap as a temporary state.

Regardless of how the table is organized, it can have zero or more nonclustered indexes defined on it. Nonclustered indexes are always organized as B-trees. The HOBT, as well as the nonclustered indexes, can be made of one or more units called partitions. Technically, the HOBT and each of the nonclustered indexes can be partitioned differently. Each partition of each HOBT and nonclustered index stores data in collections of pages known as allocation units. The three types of allocation units are known as IN_ROW_DATA, ROW_OVERFLOW_DATA, and LOB_DATA.

IN_ROW_DATA holds all fixed-length columns; it also holds variable-length columns as long as the row size does not exceed the 8,060-byte limit. ROW_OVERFLOW_DATA holds VARCHAR, NVARCHAR, VARBINARY, SQL_VARIANT, or CLR user-defined typed data that does not exceed 8,000 bytes but was moved from the original row because it exceeded the 8,060-row size limit. LOB_DATA holds large object values—VARCHAR(MAX), NVARCHAR(MAX), VARBINARY(MAX) that exceed 8,000 bytes, XML, or CLR UDTs. The system view sys.system_internals_allocation_units holds the anchors pointing to the page collections stored in the allocation units.

In the following sections, I describe the heap, clustered index, and nonclustered index structures. For simplicity’s sake, I’ll assume that the data is nonpartitioned; but if it is partitioned, the description is still applicable to a single partition.

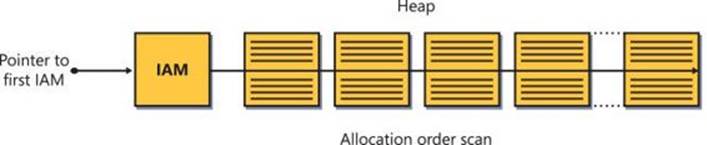

Heap

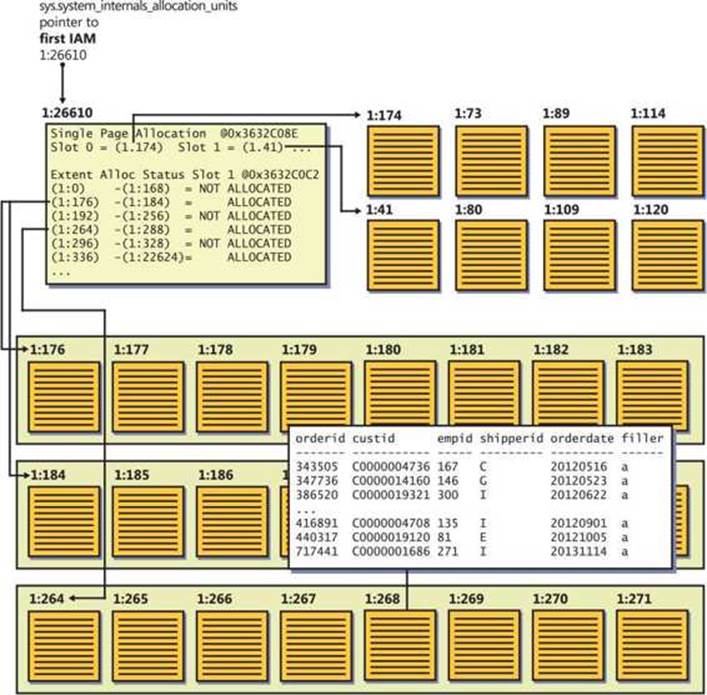

A heap is a table that has no clustered index. The structure is called a heap because the data is not organized in any order; rather, it is laid out as a bunch of pages and extents. The Orders table in the sample database PerformanceV3 (see this book’s intro for details on how to install the sample database) is actually organized as a B-tree, but Figure 2-3 illustrates what it might look like when organized as a heap.

FIGURE 2-3 A diagram of a heap.

SQL Server maps the data that belongs to a heap using one or more bitmap pages called index allocation maps (IAMs). The header of the IAM page has pointers to the first eight pages that SQL Server allocated for the heap from mixed extents. The header also has a pointer to a starting location of a range of 4 GB that the IAM page maps in the data file. The body of the IAM page has a representative bit for each extent in that range. The bit is 0 if the extent it represents does not belong to the object owning the IAM page and 1 if it does. If one IAM is not enough to cover all the object’s data, SQL Server will maintain a chain of IAM pages. When SQL Server needs to scan a heap, it relies on the information in the IAM pages to figure out which pages and extents it needs to read. I will refer to this type of scan as an allocation order scan. This scan is done in file order and, therefore, when the data is not cached it usually applies sequential reads.

As you can see in Figure 2-3, SQL Server maintains internal pointers to the first IAM page and the first data page of a heap. Those pointers can be found in the system view sys.system_internals_allocation_units.

Because a heap doesn’t maintain the data in any particular order, new rows that are added to the table can go anywhere. SQL Server uses bitmap pages called page free space (PFS) to keep track of free space in pages so that it can quickly find a page with enough free space to accommodate a new row or allocate a new one if no such page exists.

When a row expands as a result of an update to a variable-length column and the page has no room for the row to expand, SQL Server moves the expanded row to a page with enough space to accommodate it and leaves behind what’s known as a forwarding pointer that points to the new location of the row. The purpose of forwarding pointers is to avoid the need to modify pointers to the row from nonclustered indexes when data rows move.

I didn’t yet explain a concept called a page split (because page splits can happen only in B-trees), but it suffices to say for now that heaps do not incur page splits.

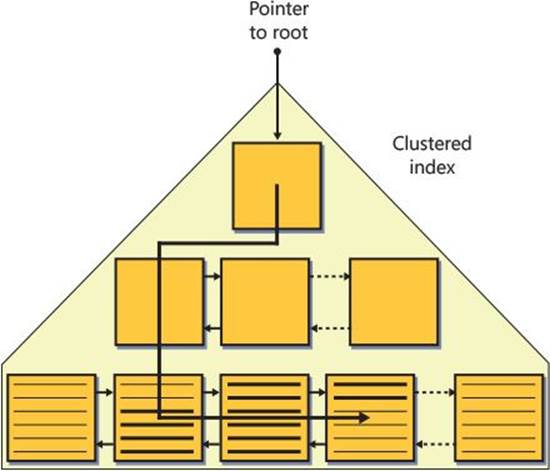

B-tree (clustered index)

All indexes in SQL Server on disk-based tables are structured as B-trees, which are a special case of balanced trees. A balanced tree is a tree where no leaf is much farther away from the root than any other leaf.

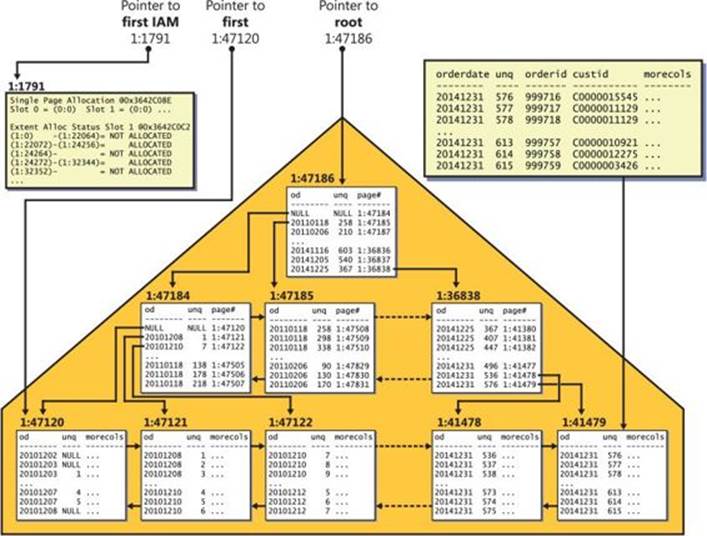

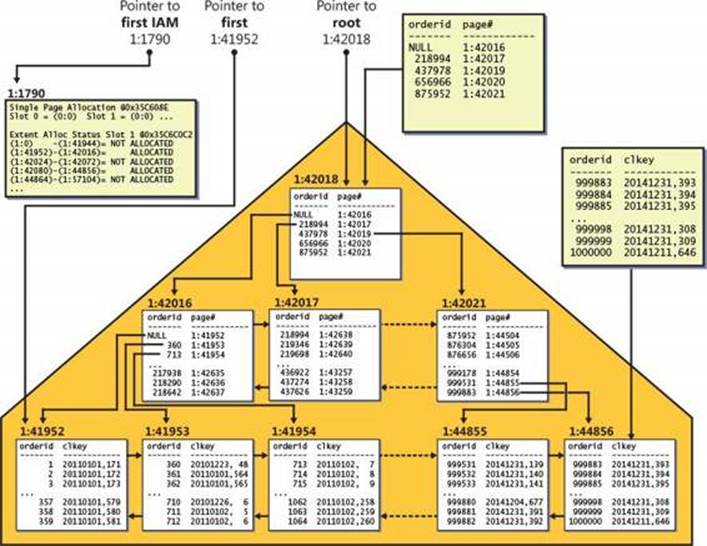

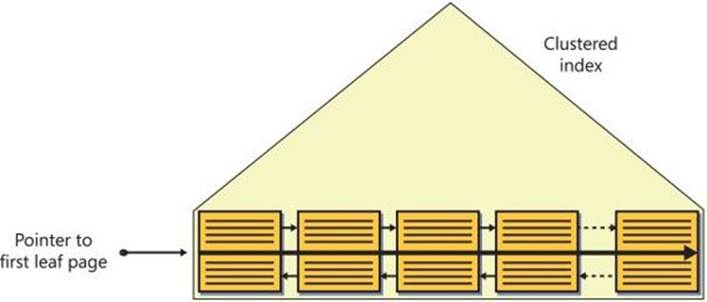

A clustered index is structured as a B-tree, and it maintains the entire table’s data in its leaf level. The clustered index is not a copy of the data; rather, it is the data. Figure 2-4 illustrates how the Orders table might look when organized as a B-tree with the orderdate column defined as the key.

FIGURE 2-4 An illustration of a B-tree.

As you can see in the figure, the full data rows of the Orders table are stored in the index leaf level. The data rows are organized in the leaf in key order (orderdate in our case). A doubly linked list maintains the key order between the pages, and the row-offset array at the end of the page maintains this order within the page. When SQL Server needs to perform an ordered scan (or a range scan) operation in the leaf level of the index, it does so by following the linked list. I’ll refer to this type of scan as an index order scan.

Notice in Figure 2-4 that with each leaf row, the index maintains a column called uniquifier (abbreviated to unq in the illustration). The value in this column enumerates rows that have the same key value, and it is used together with the key value to uniquely identify rows when the index’s key columns are not unique. I’ll elaborate on the purpose of the uniquifier later when discussing nonclustered indexes.

When the optimizer optimizes a query, it needs to choose algorithms to handle operations such as joins, grouping and aggregating, distinct, window functions, and presentation order. Some operations can be processed only by using an order-based algorithm, like presentation order (ORDER BY clause in query). Some operations can be processed using more than one algorithm, including an order-based one. For example, grouping/aggregating and distinct can be processed either with an order-based algorithm or a hash-based one. Joins can be processed with an order-based algorithm, a hash-based algorithm, or a loop-based one. To use an order-based algorithm, the data needs to be arranged as ordered. One way for the optimizer to get the data ordered is to add an explicit sort operation to the plan. However, an explicit sort operation requires a memory grant and doesn’t scale well (N log N scaling).

Another way to get the data ordered is to perform an index order scan, assuming the right index exists. This option tends to be more efficient than the alternatives. Also, it doesn’t require a memory grant like explicit sort and hash operators do. When an index doesn’t exist, the optimizer is then left with just the remaining options. For operations that can be processed only with an order-based algorithm, like presentation order, the optimizer will have to add an explicit sort operation. For operations that can be processed with more than one algorithm, like grouping and aggregating, the optimizer will make a choice based on cost estimates. Because sorting doesn’t scale well, the optimizer tends to choose a strategy based on sorting with a small number of rows. Hash algorithms tend to scale better than sorting, so the optimizer tends to prefer hashing with a large number of rows.

Regarding the data in the leaf level of the index, the order of the pages in the file might not match the index key order. If page x points to next page y, and page y appears before page x in the file, page y is considered an out-of-order page. Logical scan fragmentation (also known asaverage fragmentation in percent) is measured as the percentage of out-of-order pages. So if there are 25,000 pages in the leaf level of the index and 5,000 of them are out of order, you have 20 percent of logical scan fragmentation. The main cause of logical scan fragmentation is page splits, which I’ll describe shortly. The higher the logical scan fragmentation of an index is, the slower an index order scan operation is when the data isn’t cached. This fragmentation tends to have little if any impact on index order scans when the data is cached.

Interestingly, in addition to the linked list, SQL Server also maintains IAM pages to map the data stored in the index in file order like it does in the heap organization. Recall that I referred to a scan of the data that is based on IAM pages as an allocation order scan. SQL Server might use this type of scan when it needs to perform unordered scans of the index’s leaf level. Because an allocation order scan reads the data in file order, its performance is unaffected by logical scan fragmentation, unlike with index order scans. Therefore, allocation order scans tend to be more efficient than index order scans, especially when the data isn’t cached and there’s a high level of logical scan fragmentation.

As mentioned, the main cause of logical scan fragmentation is page splits. A split of a leaf page occurs when a row needs to be inserted into the page and the target page does not have room to accommodate the row. Remember that an index maintains the data in key order. A row must enter a certain page based on its key value. If the target page is full, SQL Server will split the page. SQL Server supports two kinds of splits: one for an ascending key pattern and another for a nonascending key pattern.

If the new key is greater than or equal to the maximum key, SQL Server assumes that the insert pattern is an ascending key pattern. It allocates a new page and inserts the new row into that page. An ascending key pattern tends to be efficient when a single session adds new rows and the disk subsystem has a small number of drives. The new page is allocated in cache and filled with new rows. However, the ascending key pattern is not efficient when multiple sessions add new rows and your disk subsystem has many drives. With this pattern, there’s constant page-latch contention on the rightmost index page and insert performance tends to be suboptimal. An insert pattern with a random distribution of new keys tends to be much more efficient in such a scenario.

If the new key is less than the maximum key, SQL Server assumes that the insert pattern is a non-ascending key pattern. It allocates a new page, moves half the rows from the original page to the new one, and adjusts the linked list to reflect the right logical order of the pages. It inserts the new row either to the original page or to the new one based on its key value. The new page is not guaranteed to come right after the one that split—it could be somewhere later in the file, and it could also be somewhere earlier in the file.

Page splits are expensive, and they tend to result in logical scan fragmentation. You can measure the level of fragmentation in your index by querying the avg_fragmentation_in_percent attribute of the function sys.dm_db_index_physical_stats. To remove fragmentation, you rebuild the index using an ALTER INDEX REBUILD command. You can specify an option called FILLFACTOR with the percent that you want to fill leaf-level pages at the end of the rebuild. For example, using FILLFACTOR = 70 you request to fill leaf pages to 70 percent. With indexes that have a nonascending key insert pattern, it could be a good idea to leave some free space in the leaf pages to reduce occurrences of splits and to not let logical scan fragmentation evolve to high levels. You decide which fill factor value to use based on the rate of additions and frequency of rebuilds. With indexes that have an ascending key insert pattern, it’s pointless to leave empty space in the leaf pages because all new rows go to the right edge of the index anyway.

On top of the leaf level of the index, the index maintains additional levels, each summarizing the level below it. Each row in a nonleaf index page points to a whole page in the level below it, and together with the next row has information about the range of keys that the target page is responsible for. The row contains two elements: the minimum key column value in the index page being pointed to (assuming an ascending order) and a 6-byte pointer to that page. This way, you know that the target page is responsible for the range of keys that are greater than or equal to the key in the current row and less than the key in the next row. Note that in the row that points to the first page in the level below, the first element is NULL to indicate that there’s no minimum. As for the page pointer, it consists of the file number in the database and the page number in the file. When SQL Server builds an index, it starts from the leaf level and adds levels on top. It stops as soon as a level contains a single page, also known as the root page.

When SQL Server needs to find a certain key or range of keys at the leaf level of the index, it performs an access method called index seek. The access method starts by reading the root page and identifies the row that represents the range that contains the key being sought. It then navigates down the levels, reading one page in each level, until it reaches the page in the leaf level that contains the first matching key. The access method then performs a range scan in the leaf level to find all matching keys. Remember that all leaf pages are the same distance from the root. This means that the access method will read as many pages as the number of levels in the index to reach the first matching key. The I/O pattern of these reads is random I/O, as opposed to sequential I/O, because naturally the pages read by a seek operation until it reaches the leaf level will seldom reside next to each other.

In terms of our performance estimations, it is important to know the number of levels in an index because that number will be the cost of a seek operation in terms of page reads, and some execution plans invoke multiple seek operations repeatedly (for example, a Nested Loops join operator). For an existing index, you can get this number by invoking the INDEXPROPERTY function with the IndexDepth property. But for an index that you haven’t created yet, you need to be familiar with the calculations you can use to estimate the number of levels the index will contain.

The operands and steps required for calculating the number of levels in an index (call it L) are as follows (remember that these calculations apply to clustered and nonclustered indexes unless explicitly stated otherwise):

![]() The number of rows in the table (call it num_rows) This is 1,000,000 in our case.

The number of rows in the table (call it num_rows) This is 1,000,000 in our case.

![]() The average gross leaf row size (call it leaf_row_size) In a clustered index, this is actually the data row size. By “gross,” I mean that you need to take into consideration the internal overhead of the row and the 2-byte pointer in the row-offset array. The row overhead typically involves a few bytes. In our Orders table, the gross average data row size is roughly 200 bytes.

The average gross leaf row size (call it leaf_row_size) In a clustered index, this is actually the data row size. By “gross,” I mean that you need to take into consideration the internal overhead of the row and the 2-byte pointer in the row-offset array. The row overhead typically involves a few bytes. In our Orders table, the gross average data row size is roughly 200 bytes.

![]() The average leaf page density (call it page_density) This value is the average percentage of population of leaf pages. Page density is affected by things like data deletion, page splits, and rebuilding the index with a fillfactor value that is lower than 100. The clustered index in our table is based on an ascending key pattern; therefore, page_density in our case is likely going to be close to 100 percent.

The average leaf page density (call it page_density) This value is the average percentage of population of leaf pages. Page density is affected by things like data deletion, page splits, and rebuilding the index with a fillfactor value that is lower than 100. The clustered index in our table is based on an ascending key pattern; therefore, page_density in our case is likely going to be close to 100 percent.

![]() The number of rows that fit in a leaf page (call it rows_per_leaf_page) The formula to calculate this value is FLOOR((page_size – header_size) * page_density / leaf_row_size). In our case, rows_per_leaf_page amount to FLOOR((8192 – 96) * 1 / 200) = 40.

The number of rows that fit in a leaf page (call it rows_per_leaf_page) The formula to calculate this value is FLOOR((page_size – header_size) * page_density / leaf_row_size). In our case, rows_per_leaf_page amount to FLOOR((8192 – 96) * 1 / 200) = 40.

![]() The number of leaf pages (call it num_leaf_pages) This is a simple formula: num_rows / rows_per_leaf_page. In our case, it amounts to 1,000,000 / 40 = 25,000.

The number of leaf pages (call it num_leaf_pages) This is a simple formula: num_rows / rows_per_leaf_page. In our case, it amounts to 1,000,000 / 40 = 25,000.

![]() The average gross nonleaf row size (call it non_leaf_row_size) A nonleaf row contains the key columns of the index (in our case, only orderdate, which is 3 bytes); the 4-byte uniquifier (which exists only in a clustered index that is not unique); the page pointer, which is 6 bytes; a few additional bytes of internal overhead, which total 5 bytes in our case; and the row offset pointer at the end of the page, which is 2 bytes. In our case, the gross nonleaf row size is 20 bytes.

The average gross nonleaf row size (call it non_leaf_row_size) A nonleaf row contains the key columns of the index (in our case, only orderdate, which is 3 bytes); the 4-byte uniquifier (which exists only in a clustered index that is not unique); the page pointer, which is 6 bytes; a few additional bytes of internal overhead, which total 5 bytes in our case; and the row offset pointer at the end of the page, which is 2 bytes. In our case, the gross nonleaf row size is 20 bytes.

![]() The number of rows that fit in a nonleaf page (call it rows_per_non_leaf_page) The formula to calculate this value is similar to calculating rows_per_leaf_page. It’s FLOOR((page_size – header_size) / non_leaf_row_size), which in our case amounts to FLOOR((8192 – 96) / 20) = 404.

The number of rows that fit in a nonleaf page (call it rows_per_non_leaf_page) The formula to calculate this value is similar to calculating rows_per_leaf_page. It’s FLOOR((page_size – header_size) / non_leaf_row_size), which in our case amounts to FLOOR((8192 – 96) / 20) = 404.

![]() The number of levels above the leaf (call it L–1) This value is calculated with the following formula: CEILING(LOG(num_leaf_pages, rows_per_non_leaf_page)). In our case, L–1 amounts to CEILING(LOG(25000, 404)) = 2.

The number of levels above the leaf (call it L–1) This value is calculated with the following formula: CEILING(LOG(num_leaf_pages, rows_per_non_leaf_page)). In our case, L–1 amounts to CEILING(LOG(25000, 404)) = 2.

![]() The index depth (call it L) To get L you simply add 1 to L–1, which you computed in the previous step. The complete formula to compute L is CEILING(LOG(num_leaf_pages, rows_per_non_leaf_page)) + 1. In our case L amounts to 3.

The index depth (call it L) To get L you simply add 1 to L–1, which you computed in the previous step. The complete formula to compute L is CEILING(LOG(num_leaf_pages, rows_per_non_leaf_page)) + 1. In our case L amounts to 3.

![]() The number of rows that can be represented by an index with L levels (call it N) Suppose you are after the inverse computation of L. Namely, how many rows can be represented by an index with L levels. You compute rows_per_non_leaf_page and rows_per_leaf_page just like before. Then to compute N, use the formula: POWER(rows_per_non_leaf_page, L–1) * rows_per_leaf_page. For L = 3, rows_per_non_leaf_page = 404, and rows_per_leaf_page = 40, you get 6,528,540 rows.

The number of rows that can be represented by an index with L levels (call it N) Suppose you are after the inverse computation of L. Namely, how many rows can be represented by an index with L levels. You compute rows_per_non_leaf_page and rows_per_leaf_page just like before. Then to compute N, use the formula: POWER(rows_per_non_leaf_page, L–1) * rows_per_leaf_page. For L = 3, rows_per_non_leaf_page = 404, and rows_per_leaf_page = 40, you get 6,528,540 rows.

You can play with the preceding formulas for L and N and see that with up to about 16,000 rows, our index will have two levels. Three levels would support up to about 6,500,000 rows, and four levels would support up to about 2,600,000,000 rows. With nonclustered indexes, the formulas are the same—it’s just that you can fit more rows in each leaf page, as I will describe later. So with nonclustered indexes, the upper bound for each number of levels covers even more rows in the table. Our table has 1,000,000 rows, and all current indexes on our table have three levels. Therefore, the cost of a seek operation in any of the indexes on our table is three reads. Remember this number for later performance-related discussions in the chapter. As you can understand from this discussion, the number of levels in the index depends on the row size and the key size. But unless we’re talking about extreme sizes, as ballpark numbers you will probably get two levels with small tables (up to a few dozens of thousands of rows), three levels with medium tables (up to a few million rows), and four levels with large ones (up to a few billion rows).

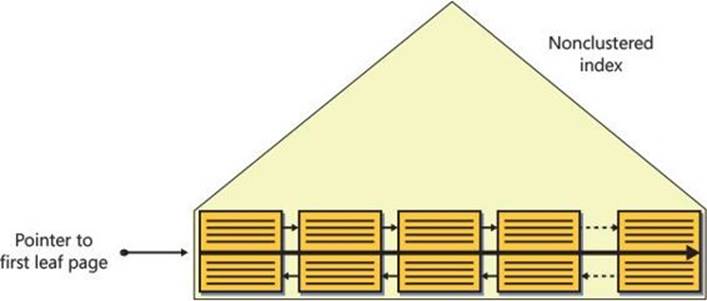

Nonclustered index on a heap

A nonclustered index is also structured as a B-tree and in many respects is similar to a clustered index. The main difference is that a leaf row in a nonclustered index contains only the index key columns and a row locator value representing a particular data row. The content of the row locator depends on whether the underlying table is organized as a heap or as a B-tree. This section describes non-clustered indexes on a heap, and the following section will describe nonclustered indexes on a B-tree (clustered table).

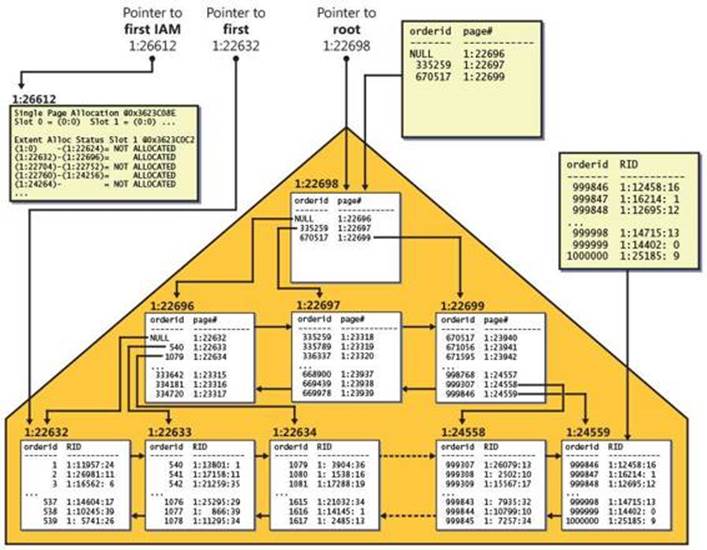

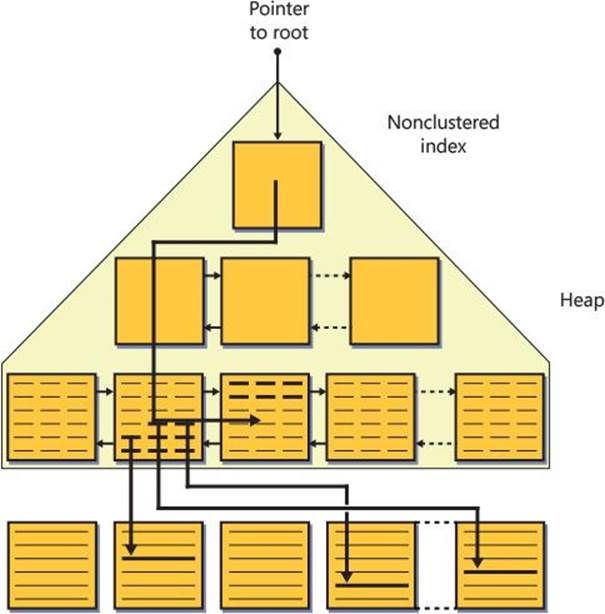

Figure 2-5 illustrates the nonclustered index that SQL Server created to enforce our primary-key constraint (PK_Orders) with the orderid column as the key column. SQL Server assigned the index with the same name as the constraint, PK_Orders.

FIGURE 2-5 Nonclustered index on a heap.

The row locator used by a nonclustered index leaf row to point to a data row is an 8-byte physical pointer called a row identifier, or RID for short. It consists of the file number in the database, the target page number in the file, and the zero-based entry number in the row-offset array in the target page. When looking for a data row based on a given nonclustered index key, SQL Server first performs a seek operation in the index to find the leaf row with the key being sought. SQL Server then performs a RID lookup operation, which translates to reading the page that contains the data row, and then it pulls the row of interest from that page. Therefore, the cost of a RID lookup is one page read.

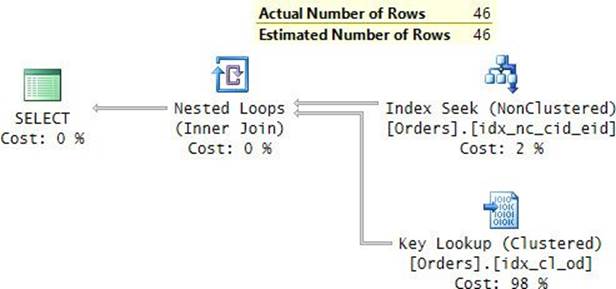

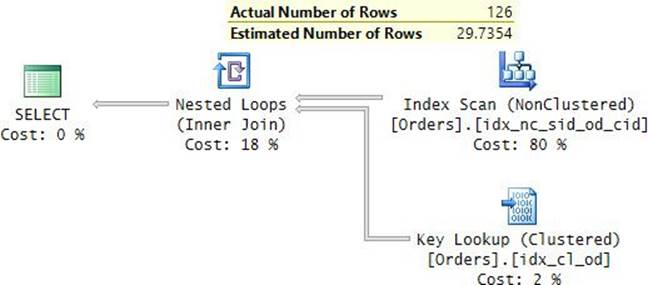

If you’re looking for a range of keys, SQL Server will perform a range scan in the index leaf and then a RID lookup per matching key. For a single lookup or a small number of lookups, the cost is not high, but for a large number of lookups, the cost can be very high because SQL Server ends up reading one whole page for each row being sought. For range queries that use a nonclustered index and a series of lookups—one per qualifying key—the cumulative cost of the lookup operations typically makes up the bulk of the cost of the query. I’ll demonstrate this point in the “Access methods” section. As for the cost of a seek operation, remember that the formulas I provided earlier for clustered indexes are just as relevant to nonclustered indexes. It’s just that the leaf_row_size is smaller, and therefore the rows_per_leaf_page will be higher. But the formulas are the same.

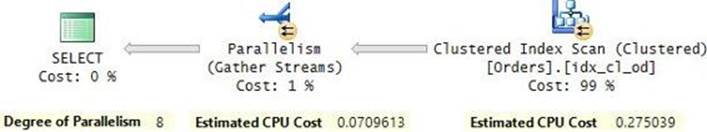

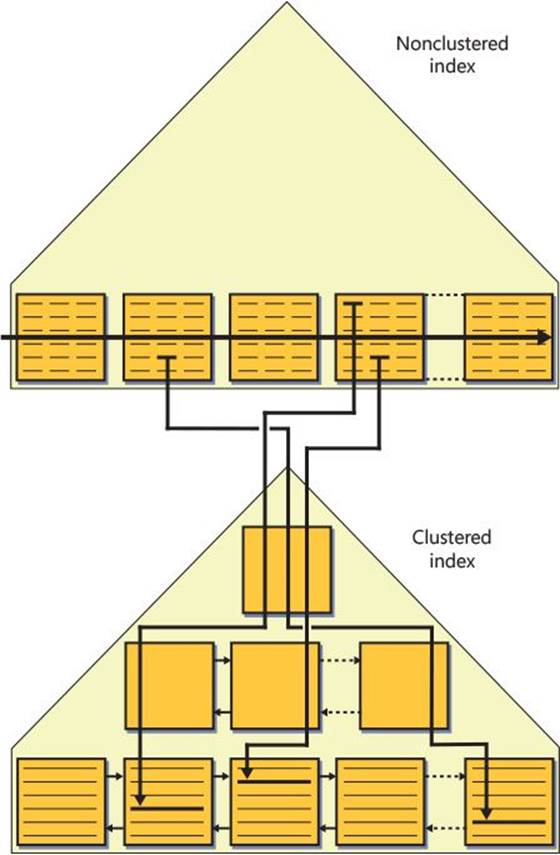

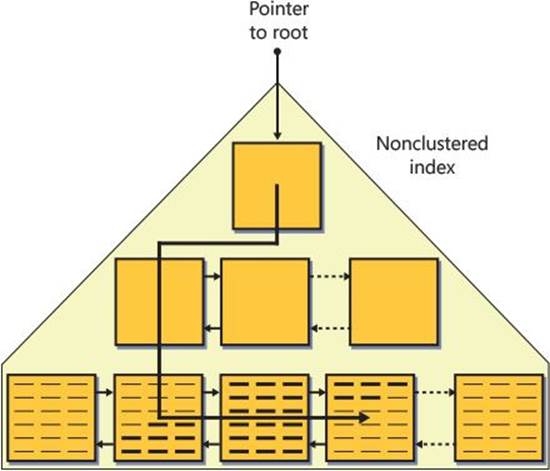

Nonclustered index on a B-tree

Nonclustered indexes created on a B-tree (clustered table) are architected differently than on a heap. The difference is that the row locator in a nonclustered index created on a B-tree is a value called a clustering key, as opposed to being a RID. The clustering key consists of the values of the clustered index keys from the row being pointed to and the uniquifier (if present). The idea is to point to a row logically as opposed to physically. This architecture was designed mainly for OLTP systems, where clustered indexes tend to incur frequent page splits upon data insertions and updates. If nonclustered indexes used RIDs as row locators, all pointers to the data rows that moved would have to be changed to reflect their new RIDs. Imagine the performance impact this would have with multiple nonclustered indexes defined on the table. Instead, SQL Server maintains logical pointers that don’t change when data rows move between leaf pages in the clustered index.

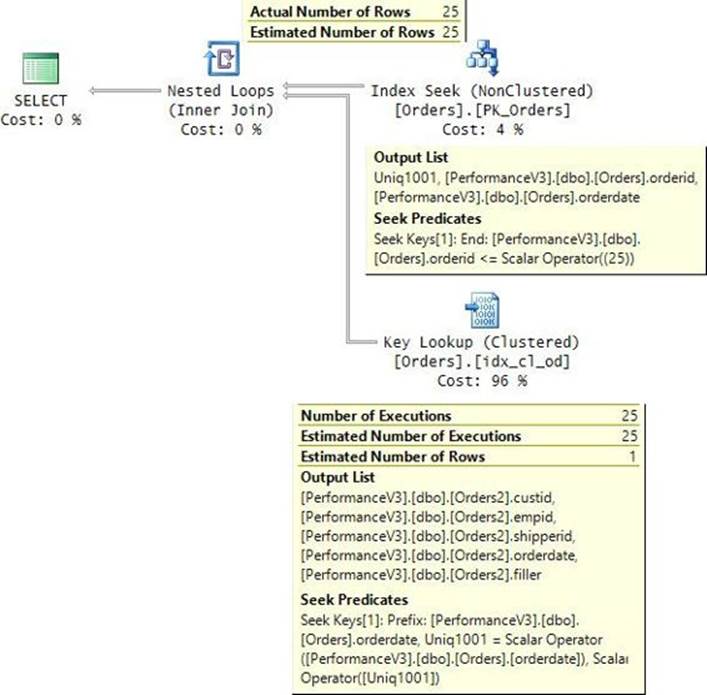

Figure 2-6 illustrates what the PK_Orders nonclustered index might look like; the index is defined with orderid as the key column, and the Orders table has a clustered index defined with orderdate as the key column.

FIGURE 2-6 Nonclustered index on a B-tree.

A seek operation looking for a particular key in the nonclustered index (some orderid value) will end up reaching the relevant leaf row and have access to the row locator. The row locator in this case is the clustering key of the row being pointed to. To actually get to the row being pointed to, the lookup operation will need to perform a seek in the clustered index based on the acquired clustering key. This type of lookup is known as a key lookup, as opposed to a RID lookup. I will demonstrate this access method later in the chapter. The cost of each lookup operation (in terms of the number of page reads) is as high as the number of levels in the clustered index (3 in our case). That’s compared to a single page read for a RID lookup when the table is a heap. Of course, with range queries that use a nonclustered index and a series of lookups, the ratio between the number of logical reads in a heap case and a clustered table case will be close to 1:L, where L is the number of levels in the clustered index.

Tools to measure query performance

SQL Server gives you a number of tools to measure query performance, but one thing to keep in mind is that ultimately what matters is the user experience. The user mainly cares about two things: response time (the time it takes the first row to return) and throughput (the time it takes the query to complete). Naturally, as technical people we want to identify different performance measures that we believe are the major contributors to the eventual user experience. So we tend to look at things like number of reads (mainly interesting in I/O intensive activities), as well as CPU time and elapsed time.

The examples I will use in this chapter will be against a sample database called PerformanceV3. (See the book’s intro for details on how to install it.) Run the following code to connect to the sample database:

SET NOCOUNT ON;

USE PerformanceV3;

I will use the following query to demonstrate tools to measure query performance:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

WHERE orderid <= 10000;

When you want to measure query performance in a test environment, you need to think about whether you expect the production environment to have hot or cold cache for the query. If the former, you want to execute the query twice and measure the performance of the second execution. The first execution will cause all pages to be brought into the data cache, and therefore the second execution will run against hot cache. If the latter, before you run your query, you want to run a manual checkpoint to write dirty buffers to disk and then drop all clean buffers from cache, like so:

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

Note, though, that you should manually clear the data cache only in isolated test environments, because obviously this action will have a negative impact on the performance of queries. Both commands require elevated permissions.

I use three main, built-in tools to analyze and measure query performance: a graphical execution plan, the STATISTICS IO and STATISTICS TIME session options, and an Extended Events session with statement completed events. I use the graphical execution plan to analyze the plan that the optimizer created for the query. I use the session options when I need to measure the performance of a single query or a small number of queries. I use an Extended Events session when I need to measure the performance of a large number of queries.

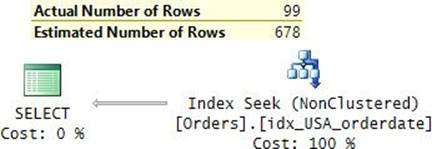

Regarding the execution plan, you request to see the estimated plan in SQL Server Management Studio (SSMS) by highlighting your query and clicking the Display Estimated Execution Plan (Ctrl+L) button on the SQL Editor toolbar. You request to see the actual plan by enabling the Include Actual Execution Plan (Ctrl+M) button and executing the query. I generally prefer to analyze the actual plan because it includes run-time information like the actual number of rows returned by, and the actual number of executions of, each operator. Suboptimal choices made by the optimizer are often a result of inaccurate cardinality estimates, and those can be detected by comparing estimated and actual numbers in an actual execution plan.

Use the following code to enable measuring query performance with the session options STATISTICS IO (for I/O information) and STATISTICS TIME (for time information):

SET STATISTICS IO, TIME ON;

When you run a query, SSMS will report performance information in the Messages pane.

To measure the performance of ad hoc queries that you submit from SSMS using an Extended Events session, capture the event sql_statement_completed and filter the session ID of the SSMS session that you’re submitting the queries from. Use the following code to create and start such an Extended Events session after replacing the session ID with that of your SSMS session:

CREATE EVENT SESSION query_performance ON SERVER

ADD EVENT sqlserver.sql_statement_completed(

WHERE (sqlserver.session_id=(53))); -- replace with your session ID;

ALTER EVENT SESSION query_performance ON SERVER STATE = START;

To watch the event information, go to Object Explorer in SSMS. Navigate through the folder hierarchy Management\Extended Events\Sessions. Expand the Sessions folder, right-click the session query_performance, and choose Watch Live Data.

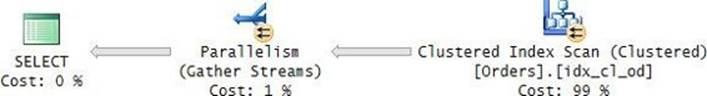

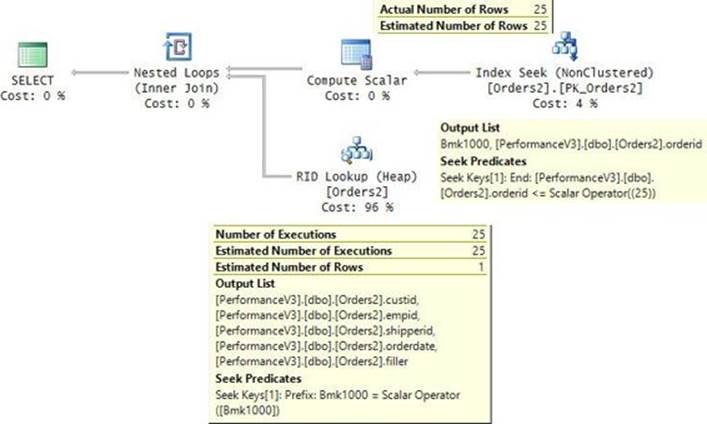

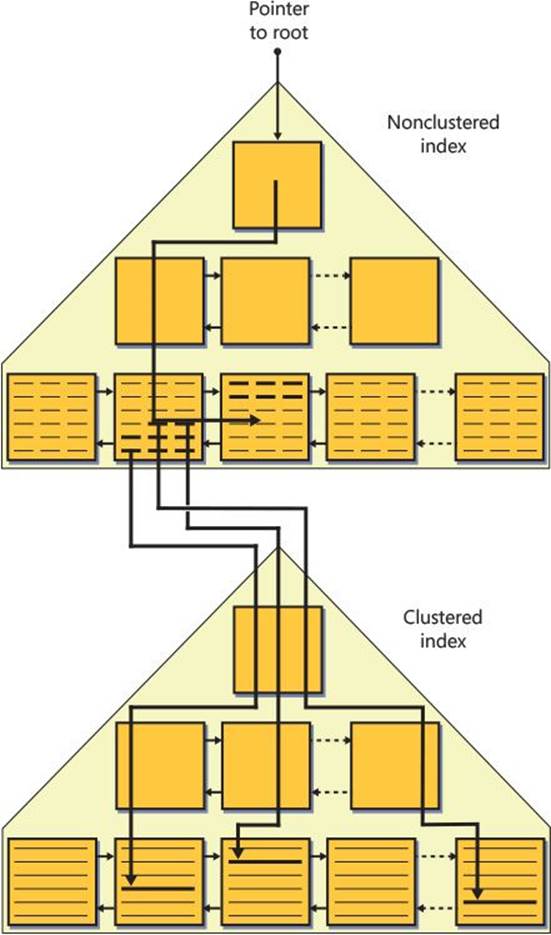

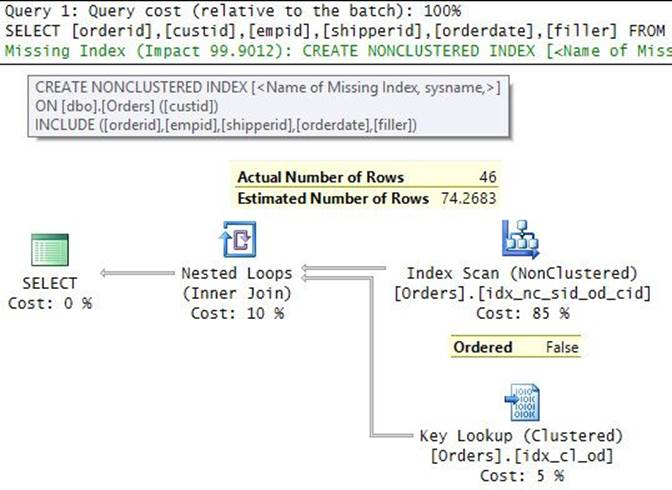

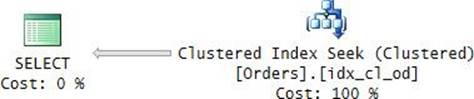

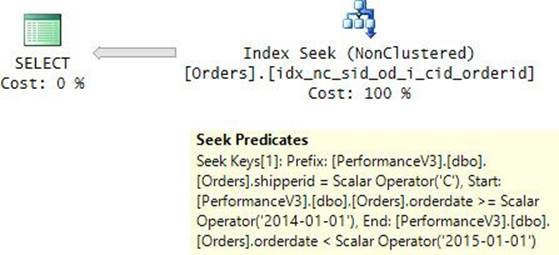

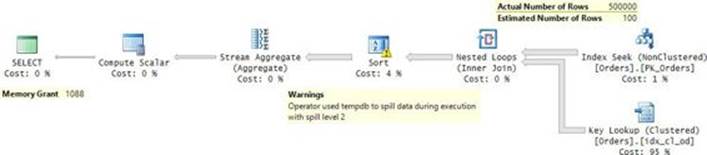

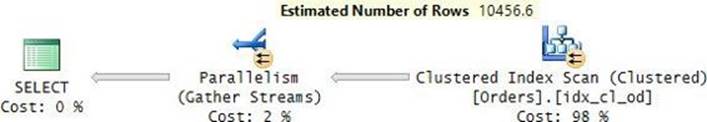

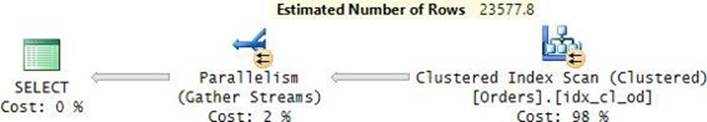

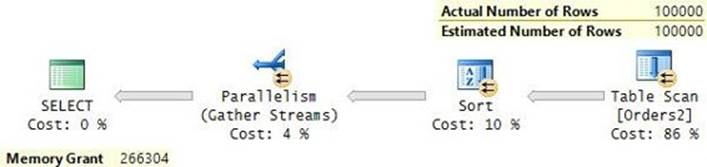

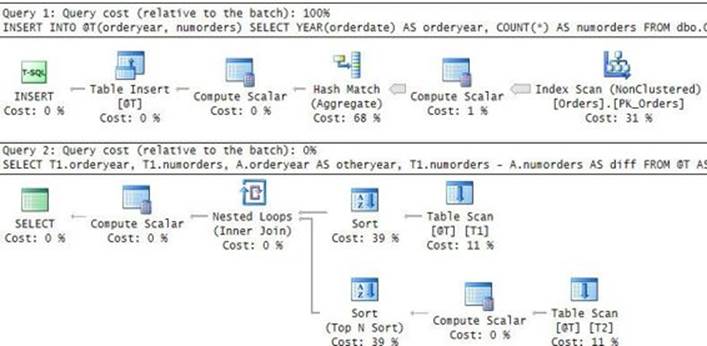

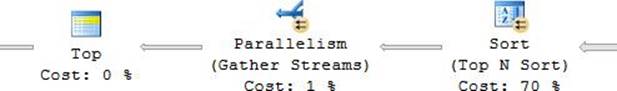

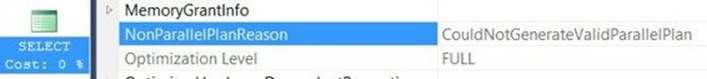

To show the output of the different performance-measuring tools, run the sample query after clearing the data cache. I got the actual query plan shown in Figure 2-7.

FIGURE 2-7 Execution plan for the sample query.

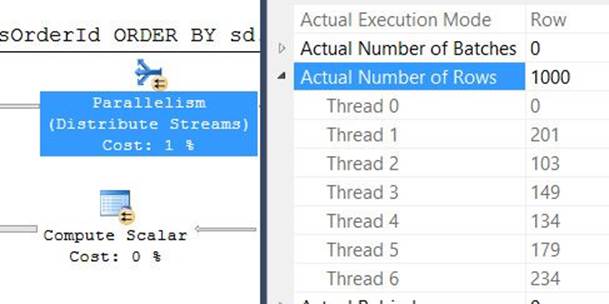

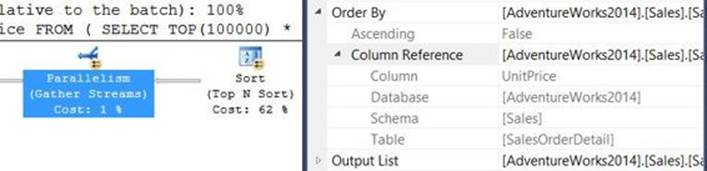

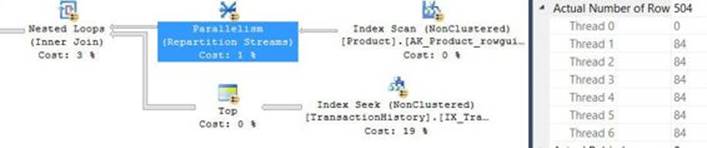

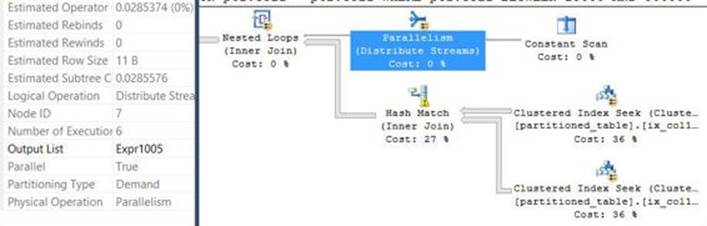

The plan is parallel. You can tell that an operator uses parallelism if it has a yellow circle with two arrows. The plan performs a parallel clustered index scan, which also applies a filter, and then it gathers the streams of rows returned by the different threads. An interesting point to note here is that the data flows in the plan from right to left as depicted by the direction of the arrows; however, the internal execution of the plan starts with the leftmost node, which is known as the root node. This node executes the node to its right requesting rows, and in turn that node executes the node to its right, requesting rows.

Following is the output generated by the STATISTICS IO option for this query:

Table 'Orders'. Scan count 9, logical reads 25339, physical reads 1, read-ahead reads 25138, lob

logical reads 0, lob physical reads 0, lob read-ahead reads 0.

The measure Scan count indicates how many times the object was accessed during the processing of the query. I think that the name of this measure is a bit misleading because any kind of access to the object adds to the count, not necessarily a scan. A name like Access count probably would have been more appropriate. In our case, the scan count is 9 because there were 8 threads that accessed the object in the parallel scan, plus a separate thread accessed the object for the serial parts of the plan.

The logical reads measure (25,339 in our case) indicates how many pages were read from the data cache. Note that if a page is read twice from cache, it is counted twice. Unfortunately, the STATISTICS IO tool does not indicate how many distinct pages were read. The measures physical reads (1 in our case) and read-ahead reads (25,138 in our case) indicate how many pages were read physically from disk into the data cache. As you can imagine, a physical read is much more expensive than a logical read. The physical reads measure represents a regular read mechanism. This mechanism uses synchronous reads of single-page units. By synchronous, I mean that the query processing cannot continue until the read is completed. The read-ahead reads measure represents a specialized read mechanism that anticipates the data that is needed for the query. This mechanism is referred to as read-ahead or prefetch. SQL Server usually uses this mechanism when a large-enough scan is involved. This mechanism applies asynchronous reads and might read chunks greater than a single page, all the way up to 512 KB per read. To help with troubleshooting, you can disable read-ahead by using trace flag 652. (Turn it on with DBCC TRACEON, and turn it off with DBCC TRACEOFF.) To find out the total number of physical reads that were involved in the processing of the query, sum the measures physical reads and read-ahead reads. In our case, the total is 25,139.

The STATISTICS IO output also shows logical reads, physical reads, and read-ahead reads for large object types separately. In our case, there are no large object types involved; therefore, all three measures are zeros.

The STATISTICS TIME option reports the following time statistics for our query:

SQL Server Execution Times:

CPU time = 170 ms, elapsed time = 765 ms.

Note that you will get multiple outputs from this option. In addition to getting the output for the execution of the query, you will also get output for the parsing and compilation of the query. You also will get outputs for every element you enable in SSMS that involves work that is submitted behind the scenes to SQL Server, like when you enable the graphical execution plan. The STATISTICS TIME output row that appears right after the STATISTICS IO output row is the one representing the query execution. To process our query against cold cache, SQL Server used 170 ms of CPU time and it took the query 765 ms of wall-clock time to complete.

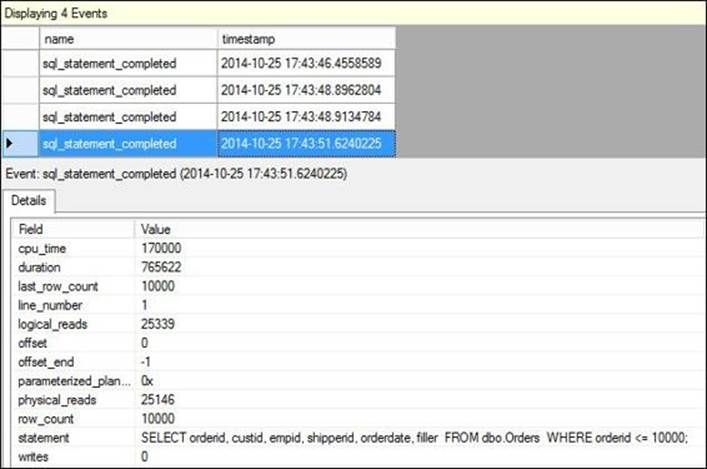

Figure 2-8 shows the Watch Live Data window for the Extended Events session query_performance, with the execution of the sample query against cold cache highlighted.

FIGURE 2-8 Information from the Extended Events session.

Now that the cache is hot, run the query a second time. I got the following output from STATISTICS IO:

Table 'Orders'. Scan count 9, logical reads 25339, physical reads 0, read-ahead reads 0, lob

logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Notice that the measures representing physical reads are zeros. I got the following output from STATISTICS TIME:

CPU time = 265 ms, elapsed time = 299 ms.

Naturally, with hot cache the query finishes more quickly.

Access methods

This section provides a description of the various methods SQL Server uses to access data; it is designed to be used as a reference for discussions throughout this book involving the analysis of execution plans.

The examples in this section will use queries against the Orders table in the PerfromanceV3 database. This table is organized as a B-tree with the clustered index defined based on orderdate as the key. Use the following code to create a copy of the Orders table called Orders2, organized as a heap:

IF OBJECT_ID(N'dbo.Orders2', N'U') IS NOT NULL DROP TABLE dbo.Orders2;

SELECT * INTO dbo.Orders2 FROM dbo.Orders;

ALTER TABLE dbo.Orders2 ADD CONSTRAINT PK_Orders2 PRIMARY KEY NONCLUSTERED (orderid);

When I want to show access methods against a B-tree–organized table, I’ll query Orders. When I want to show access methods against a heap-organized table, I’ll query Orders2.

![]() Important

Important

I used randomization to generate the sample data in the PerformanceV3 database. This means that when you query objects in this database, your results probably will be slightly different than mine.

Most of the cost associated with access methods is related to I/O activity. Therefore, I’ll provide the number of logical reads as the main measure of the access methods’ performance.

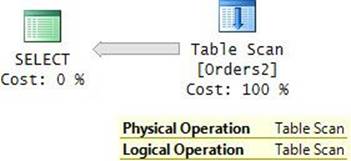

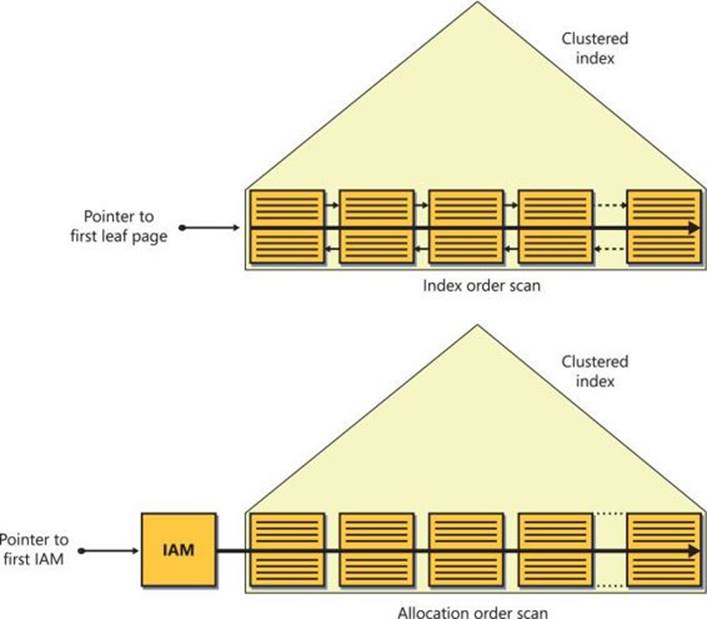

Table scan/unordered clustered index scan

The first access method I’ll describe is a full scan of the table when there’s no requirement to return the data in any particular order. You get such a full scan in two main cases. One is when you really need all rows from the table. Another is when you need only a subset of the rows but don’t have a good index to support your filter. The tricky thing is that the query plan for such a full scan looks different depending on the underlying table’s organization (heap or B-tree). When the underlying table is a heap, the plan will show an operator called Table Scan. When the underlying table is a B-tree, the plan will show an operator called Clustered Index Scan with an Ordered: False property. I’ll refer to the former as a table scan and to the latter as an unordered clustered index scan.

A table scan or an unordered clustered index scan involves a scan of all data pages that belong to the table. The following query against the Orders2 table, which is structured as a heap, would require a table scan:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders2;

Figure 2-9 shows the graphical execution plan produced by the relational engine’s optimizer for this query, and Figure 2-10 shows an illustration of the way this access method is processed by the storage engine.

FIGURE 2-9 Heap scan (execution plan).

FIGURE 2-10 Heap scan (storage engine).

SQL Server reported 24,396 logical reads for this query on my system.

If you find it a bit confusing that both the Physical Operation and Logical Operation properties show Table Scan, you’re not alone. To me, the logical operation is a table scan and the physical operation is a heap scan.

The only option that the storage engine has to process a Table Scan operator is to use an allocation order scan. Recall from the section “Table organization” that an allocation order scan is performed based on IAM pages in file order.

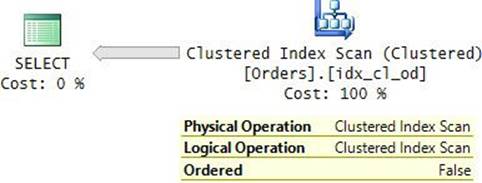

The following query against the Orders table, which is structured as a B-tree, would require an unordered clustered index scan:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders;

Figure 2-11 shows the execution plan that the optimizer will produce for this query. Notice that the Ordered property of the Clustered Index Scan operator indicates False. Figure 2-12 shows an illustration of the two ways that the storage engine can carry out this access method.

FIGURE 2-11 B-tree scan (execution plan).

FIGURE 2-12 B-tree scan (storage engine).

SQL Server reported 25,073 logical reads for this query on my system.

Also, here I find it a bit confusing that the plan shows the same value in the Physical Operation and Logical Operation properties. This time, both properties show Clustered Index Scan. To me, the logical operation is still a table scan and the physical operation is a clustered index scan.

The fact that the Ordered property of the Clustered Index Scan operator indicates False means that as far as the relational engine is concerned, the data does not need to be returned from the operator in key order. This doesn’t mean that it is a problem if it is returned ordered; instead, it means that any order would be fine. This leaves the storage engine with some maneuvering space in the sense that it is free to choose between two types of scans: an index order scan (a scan of the leaf of the index following the linked list) and an allocation order scan (a scan based on IAM pages). The factors that the storage engine takes into consideration when choosing which type of scan to employ include performance and data consistency. I’ll provide more details about the storage engine’s decision-making process after I describe ordered index scans (Clustered Index Scan and Index Scan operators with the property Ordered: True).

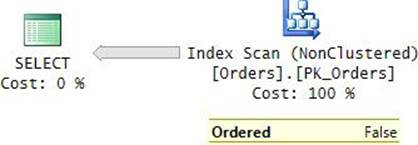

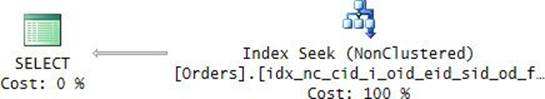

Unordered covering nonclustered index scan

An unordered covering nonclustered index scan is similar to an unordered clustered index scan. The concept of a covering index means that a nonclustered index contains all columns specified in a query. In other words, a covering index is not an index with special properties; rather, it becomes a covering index with respect to a particular query. SQL Server can find all the data it needs to satisfy the query by accessing solely the index data, without needing to access the full data rows. Other than that, the access method is the same as an unordered clustered index scan, only, obviously, the leaf level of the covering nonclustered index contains fewer pages than the leaf of the clustered index because the row size is smaller and more rows fit in each page. I explained earlier how to calculate the number of pages in the leaf level of an index (clustered or nonclustered).

As an example of this access method, the following query requests all orderid values from the Orders table:

SELECT orderid

FROM dbo.Orders;

Our Orders table has a nonclustered index on the orderid column (PK_Orders), meaning that all the table’s order IDs reside in the index’s leaf level. The index covers our query. Figure 2-13 shows the graphical execution plan you would get for this query, and Figure 2-14 illustrates the two ways in which the storage engine can process it.

FIGURE 2-13 Unordered covering nonclustered index scan (execution plan).

FIGURE 2-14 Unordered covering nonclustered index scan (storage engine).

SQL Server reported 2,611 logical reads for this query on my system. This is the number of pages making the leaf level of the PK_Orders index.

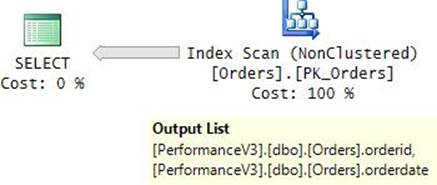

As a small puzzle for you, add the orderdate column to the query, like so:

SELECT orderid, orderdate

FROM dbo.Orders;

Examine the execution plan for this query as shown in Figure 2-15.

FIGURE 2-15 Index that includes a clustering key.

Observe that the PK_Orders index is still considered a covering one with respect to this query. The question is, how can this be the case when the index was defined explicitly only on the orderid column as the key? The answer is the table has a clustered index defined with the orderdatecolumn as the key. As you might recall, SQL Server uses the clustered index key as the row locator in nonclustered indexes. So, even though you defined the PK_Orders index explicitly only with the orderid column, SQL Server internally defined it with the columns orderid and orderdate. Never mind that SQL Server added the orderdate column to be used as the row locator—once it’s there, SQL Server can use it for other query-processing purposes.

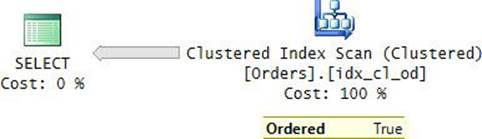

Ordered clustered index scan

An ordered clustered index scan is a full scan of the leaf level of the clustered index that guarantees that the data will be returned to the next operator in index order. For example, the following query, which requests all orders sorted by orderdate, will get such an access method in its plan:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

ORDER BY orderdate;

You can find the execution plan for this query in Figure 2-16 and an illustration of how the storage engine carries out this access method in Figure 2-17.

FIGURE 2-16 Ordered clustered index scan (execution plan).

FIGURE 2-17 Ordered clustered index scan (storage engine).

SQL Server reported 25,073 logical reads for this query on my system.

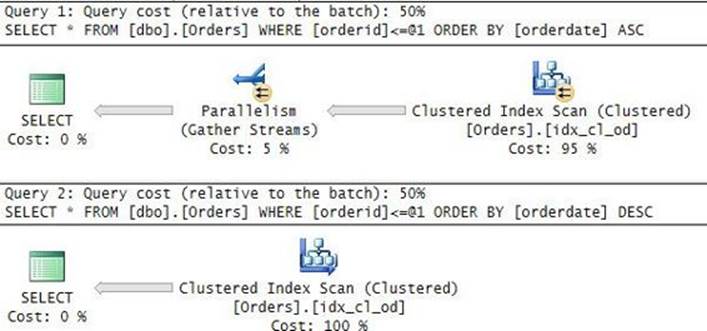

Notice in the plan that the Ordered property is True. This indicates that the data needs to be returned from the operator ordered. When the operator has the property Ordered: True, the scan can be carried out by the storage engine only in one way—by using an index order scan (a scan based on the index linked list), as shown in Figure 2-17. Unlike an allocation order scan, the performance of an index order scan depends on the fragmentation level of the index. With no fragmentation at all, the performance of an index order scan should be very close to the performance of an allocation order scan because both will end up reading the data in file order sequentially. However, with cold cache, as the fragmentation level grows, the performance difference will be more substantial—in favor of the allocation order scan, of course. The natural deductions are that you shouldn’t request the data sorted if you don’t need it sorted, to allow the potential for using an allocation order scan, and that you should resolve fragmentation issues in indexes that incur large index order scans against cold cache. I’ll elaborate on fragmentation and its treatment later.

Note that the optimizer is not limited to ordered-forward scans. Remember that the linked list is a doubly linked list, where each page contains both a next pointer and a previous pointer. Had you requested a descending sort order, you would have still gotten an ordered index scan, only ordered backward (from tail to head) instead of ordered forward (from head to tail). Interestingly, though, as of SQL Server 2014, the storage engine will consider using parallelism only with an ordered-forward scan. It always processes an ordered-backward scan serially.

SQL Server also supports descending indexes. One reason to use those is to enable parallel scans when parallelism is an important factor in the performance of the query. Another reason to use those is to support multiple key columns that have opposite directions in their sort requirements—for example, sorting by col1, col2 DESC. I’ll demonstrate using descending indexes later in the chapter in the section “Indexing features.”

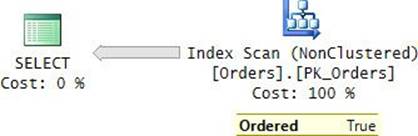

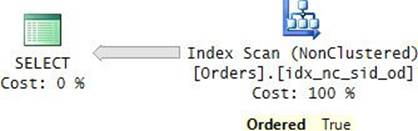

Ordered covering nonclustered index scan

An ordered covering nonclustered index scan is similar to an ordered clustered index scan, with the former performing the access method in a nonclustered index—typically, when covering a query. The cost is, of course, lower than a clustered index scan because fewer pages are involved. For example, the PK_Orders index on our clustered Orders table covers the following query, and it has the data in the desired order:

SELECT orderid, orderdate

FROM dbo.Orders

ORDER BY orderid;

Figure 2-18 shows the query’s execution plan, and Figure 2-19 illustrates the way the storage engine processes the access method.

FIGURE 2-18 Ordered covering nonclustered index scan (execution plan).

FIGURE 2-19 Ordered covering nonclustered index scan (storage engine).

Notice in the plan that the Ordered property of the Index Scan operator shows True.

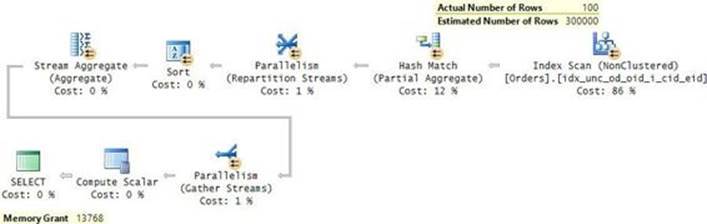

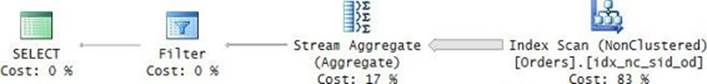

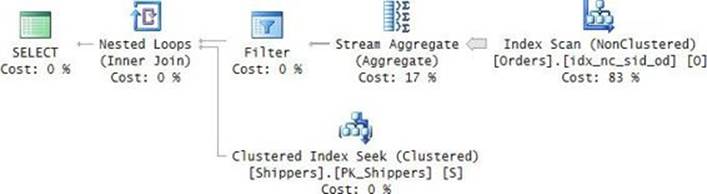

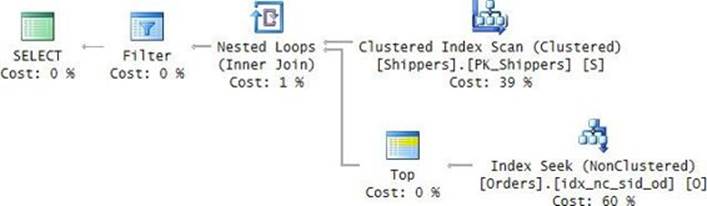

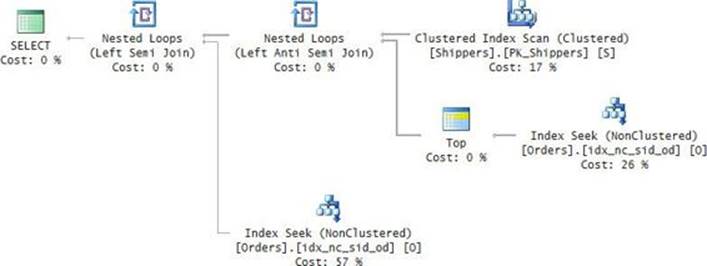

An ordered index scan is used not only when you explicitly request the data sorted, but also when the plan uses an operator that can benefit from sorted input data. This can be the case when processing GROUP BY, DISTINCT, joins, and other requests. This can also happen in less obvious cases. For example, check out the execution plan shown in Figure 2-20 for the following query:

SELECT orderid, custid, empid, orderdate

FROM dbo.Orders AS O1

WHERE orderid =

(SELECT MAX(orderid)

FROM dbo.Orders AS O2

WHERE O2.orderdate = O1.orderdate);

FIGURE 2-20 Ordered covering nonclustered index scan with segmentation.

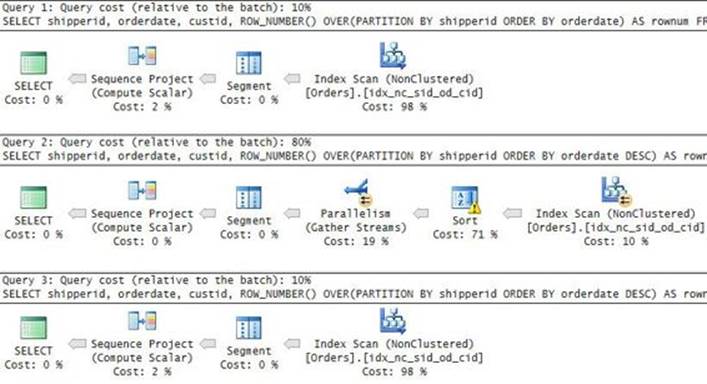

The Segment operator arranges the data in groups and emits a group at a time to the next operator (Top in our case). Our query requests the orders with the maximum orderid per orderdate. Fortunately, we have a covering index for the task (idx_unc_od_oid_i_cid_eid), with the key columns being (orderdate, orderid) and included nonkey columns being (custid, empid). I’ll elaborate on included nonkey columns later in the chapter. The important point for our discussion is that the Segment operator organizes the data by groups of orderdate values and emits the data, a group at a time, where the last row in each group is the maximum orderid in the group; because orderid is the second key column right after orderdate. Therefore, the plan doesn’t need to sort the data; rather, the plan just collects it with an ordered scan from the covering index, which is already sorted by orderdate and orderid. The Top operator has a simple task of just collecting the last row (TOP 1 descending), which is the row of interest for the group. The number of rows reported by the Top operator is 1490, which is the number of unique groups (orderdate values), each of which got a single row from the operator. Because our nonclustered index covers the query by including in its leaf level all other columns that are mentioned in the query (custid, empid), there’s no need to look up the data rows; the query is satisfied by the index data alone.

The storage engine’s treatment of scans

Before I continue the coverage of additional access methods, I’m going to explain the way the storage engine treats the relational engine’s instructions to perform scans. The relational engine is like the brains of SQL Server; it includes the optimizer, which is in charge of producing execution plans for queries. The storage engine is like the muscles of SQL Server; it needs to carry out the instructions provided to it by the relational engine in the execution plan and perform the actual row operations. Sometimes the optimizer’s instructions leave the storage engine with some room for maneuvering, and then the storage engine determines the best of several possible options based on factors such as performance and consistency.

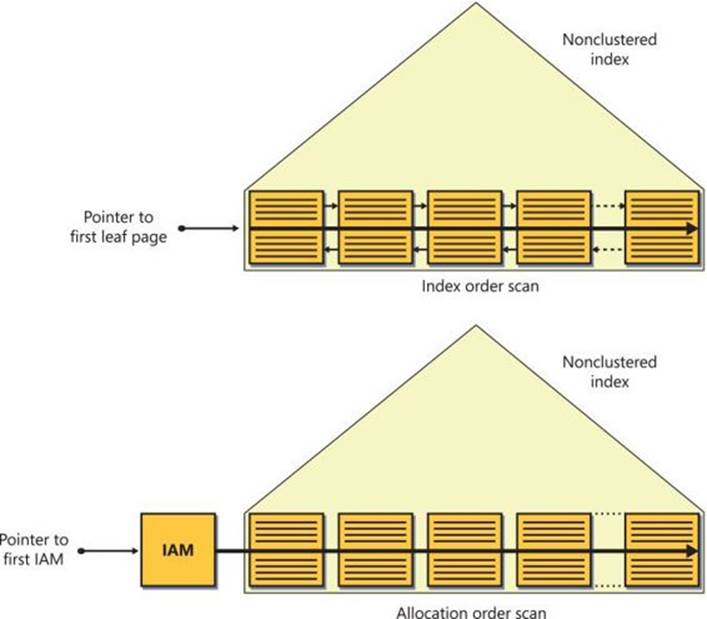

Allocation order scans vs. index order scans

When the plan shows a Table Scan operator, the storage engine has only one option: to use an allocation order scan. When the plan shows an Index Scan operator (clustered or nonclustered) with the property Ordered: True, the storage engine can use only an index order scan.

When the plan shows an Index Scan operator with Ordered: False, the relational engine doesn’t care in what order the rows are returned. In this case, there are two options to scan the data: allocation order scan and index order scan. It is up to the storage engine to determine which to employ. Unfortunately, the storage engine’s actual choice is not indicated in the execution plan, or anywhere else. I will explain the storage engine’s decision-making process, but it’s important to understand that what the plan shows is the relational engine’s instructions and not what the storage engine did.

The performance of an allocation order scan is not affected by logical fragmentation in the index because it’s done in file order, anyway. However, the performance of an index order scan that involves physical reads is affected by fragmentation—the higher the fragmentation, the slower the scan. Therefore, as far as performance is concerned, the storage engine considers the allocation order scan the preferable option. The exception is when the index is very small (up to 64 pages). In that case, the cost of interpreting IAM pages becomes significant with respect to the rest of the work, and the storage engine considers the index order scan to be preferable. Small tables aside, in terms of performance the allocation order scan is considered preferable.

However, performance is not the only aspect that the storage engine needs to take into consideration; it also needs to account for data-consistency expectations based on the effective isolation level. When there’s more than one option to carry out a request, the storage engine opts for the fastest option that meets the consistency requirements.

In certain circumstances, scans can end up returning multiple occurrences of rows or even skipping rows. Allocation order scans are more prone to such behavior than index order scans. I’ll first describe how such a phenomenon can happen with allocation order scans and in which circumstances. Then I’ll explain how it can happen with index order scans.

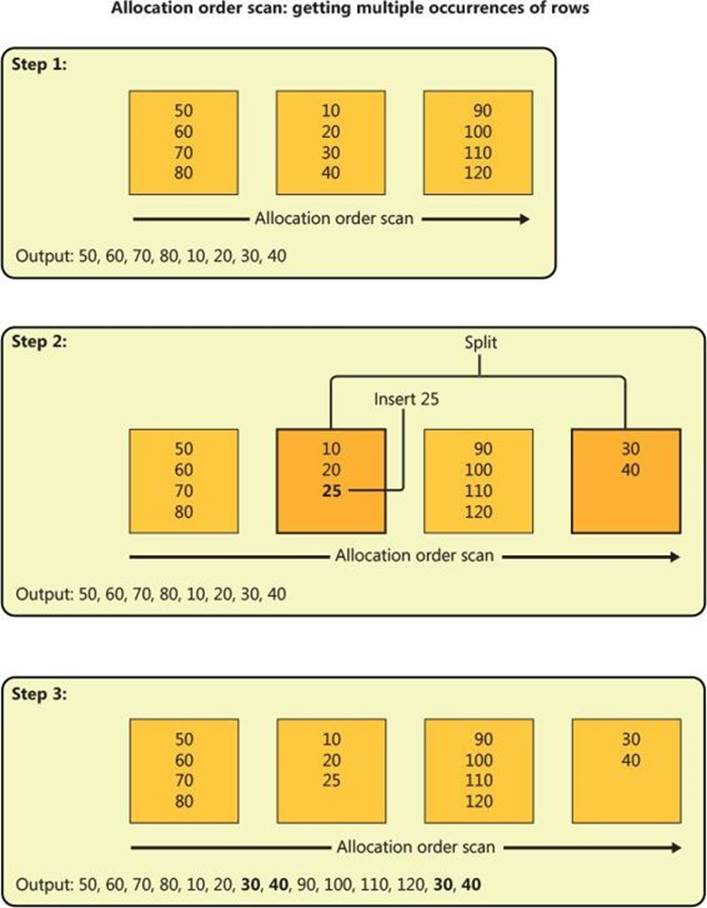

Allocation order scans

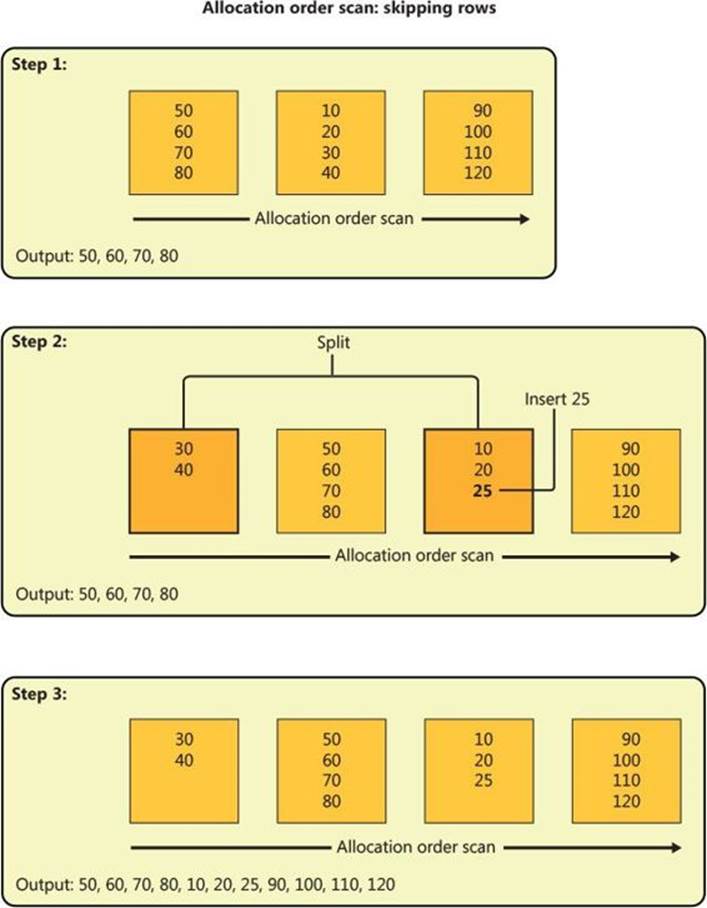

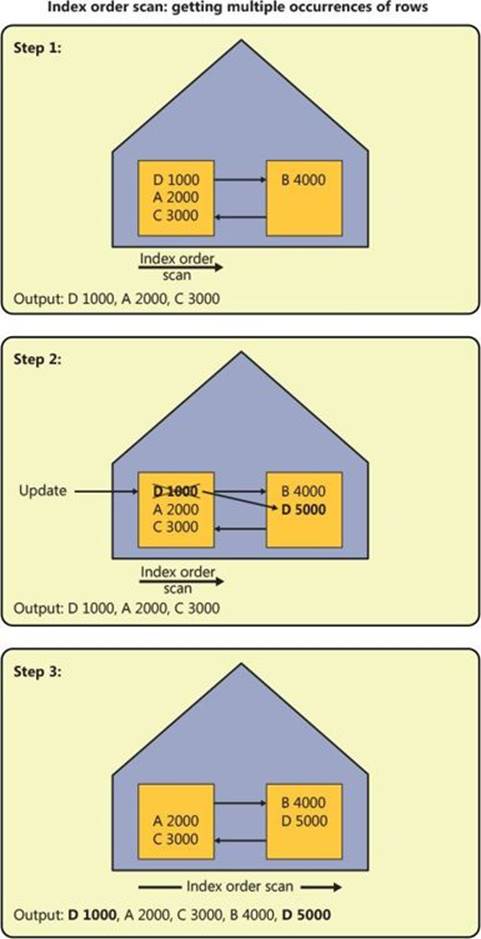

Figure 2-21 demonstrate in three steps how an allocation order scan can return multiple occurrences of rows.

FIGURE 2-21 Allocation order scan: getting multiple occurrences of rows.

Step 1 shows an allocation order scan in progress, reading the leaf pages of some index in file order (not index order). Two pages were already read (keys 50, 60, 70, 80, 10, 20, 30, 40). At this point, before the third page of the index is read, someone inserts a row into the table with key 25.

Step 2 shows a split that took place in the page that was the target for the insert because it was full. As a result of the split, a new page was allocated—in our case, later in the file at a point that the scan did not yet reach. Half the rows from the original page move to the new page (keys 30, 40), and the new row with key 25 was added to the original page because of its key value.

Step 3 shows the continuation of the scan: reading the remaining two pages (keys 90, 100, 110, 120, 30, 40), including the one that was added because of the split. Notice that the rows with keys 30 and 40 were read a second time.

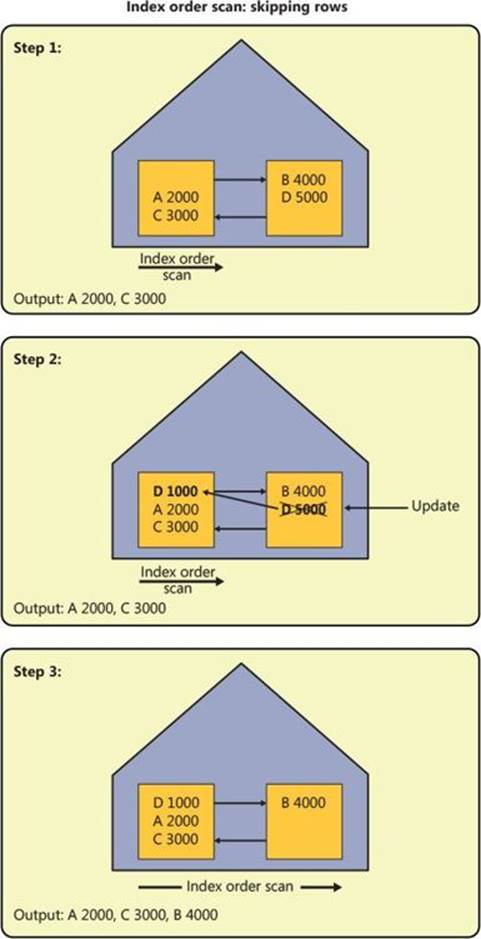

Of course, in a similar fashion, depending on how far the scan reaches by the time this split happens and where the new page is allocated, the scan might end up skipping rows. Figure 2-22 demonstrates how this can happen in three steps.

FIGURE 2-22 Allocation order scan: skipping rows.

Step 1 shows an allocation order scan in progress that manages to read one page (keys 50, 60, 70, 80) before the insert takes place.

Step 2 shows the split of the target page, only this time the new page is allocated earlier in the file at a point that the scan already passed. Like in the previous split example, the rows with keys 30 and 40 move to the new page, and the new row with key 25 is added to the original page.

Step 3 shows the continuation of the scan: reading the remaining two pages (keys 10, 20, 25, 90, 100, 110, 120). As you can see, the rows with keys 30 and 40 were completely skipped.

In short, an allocation order scan can return multiple occurrences of rows and skip rows resulting from splits that take place during the scan. A split can take place because of an insert of a new row, an update of an index key causing the row to move, or an update of a variable-length column causing the row to expand. Remember that splits take place only in indexes; heaps do not incur splits. Therefore, such phenomena cannot happen in heaps.

An index order scan is safer in the sense that it won’t read multiple occurrences of the same row or skip rows because of splits. Remember that an index order scan follows the index linked list in order. If a page that the scan hasn’t yet reached splits, the scan ends up reading both pages; therefore, it won’t skip rows. If a page that the scan already passed splits, the scan doesn’t read the new one; therefore, it won’t return multiple occurrences of rows.

The storage engine is well aware that allocation order scans are prone to such inconsistent reads because of splits, while index order scans aren’t. It will carry out an Index Scan Ordered: False with an allocation order scan in one of two categories of cases that I will refer to as the unsafeand safe categories.

The unsafe category is when the scan actually can return multiple occurrences of rows or skip rows because of splits. The storage engine opts for this option when the index size is greater than 64 pages and the request is running under the Read Uncommitted isolation level (for example, when you specify NOLOCK in the query). Most people’s perception of Read Uncommitted is simply that the query does not request a shared lock and therefore it can read uncommitted changes (dirty reads). This perception is true, but unfortunately most people don’t realize that to the storage engine, Read Uncommitted is also an indication that pretty much all bets are off in terms of consistency. In other words, it will opt for the faster option even at the cost of returning multiple occurrences of rows or skipping rows. When the query is running under the default Read Committed isolation level or higher, the storage engine will opt for an index order scan to prevent such phenomena from happening because of splits. To recap, the storage engine employs allocation order scans of the unsafe category when all the following conditions are true:

![]() The index size is greater than 64 pages.

The index size is greater than 64 pages.

![]() The plan shows Index Scan, Ordered: False.

The plan shows Index Scan, Ordered: False.

![]() The query is running under the Read Uncommitted isolation level.

The query is running under the Read Uncommitted isolation level.

![]() Changes are allowed to the data.

Changes are allowed to the data.

In terms of the safe category, the storage engine also opts for allocation order scans with higher isolation levels than Read Uncommitted when it knows that it is safe to do so without sacrificing the consistency of the read (at least as far as splits are concerned). For example, when you run the query using the TABLOCK hint, the storage engine knows that no one can change the data while the read is in progress. Therefore, it is safe to use an allocation order scan. Of course, this means that attempts by other sessions to modify the table will be blocked during the read. Another example where the storage engine knows that it is safe to employ an allocation order scan is when the index resides in a read-only filegroup or database. To summarize, the storage engine will use an allocation order scan of the safe category when the index size is greater than 64 pages and the data is read-only (because of the TABLOCK hint, read-only filegroup, or database).

Keep in mind that logical fragmentation has an impact on the performance of index order scans but not on that of allocation order scans. And based on the preceding information, you should realize that the storage engine will sometimes use index order scans to process an Index Scan operator with the Ordered: False property.

The next section will demonstrate both unsafe and safe allocation order scans.

Run the following code to create a table called T1:

SET NOCOUNT ON;

USE tempdb;

GO

-- Create table T1

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

CREATE TABLE dbo.T1

(

clcol UNIQUEIDENTIFIER NOT NULL DEFAULT(NEWID()),

filler CHAR(2000) NOT NULL DEFAULT('a')

);

GO

CREATE UNIQUE CLUSTERED INDEX idx_clcol ON dbo.T1(clcol);

A unique clustered index is created on clcol, which will be populated with random GUIDs by the default expression NEWID(). Populating the clustered index key with random GUIDs should cause a large number of splits, which in turn should cause a high level of logical fragmentation in the index.

Run the following code to insert rows into the table using an infinite loop, and stop it after a few seconds (say 5, to allow more than 64 pages in the table):

SET NOCOUNT ON;

USE tempdb;

TRUNCATE TABLE dbo.T1;

WHILE 1 = 1

INSERT INTO dbo.T1 DEFAULT VALUES;

Run the following code to check the fragmentation level of the index:

SELECT avg_fragmentation_in_percent FROM sys.dm_db_index_physical_stats

(

DB_ID(N'tempdb'),

OBJECT_ID(N'dbo.T1'),

1,

NULL,

NULL

);

When I ran this code on my system, I got more than 99 percent fragmentation, which of course is very high. If you need more evidence to support the fact that the order of the pages in the linked list is different from their order in the file, you can use the undocumented DBCC IND command, which gives you the B-tree layout of the index:

DBCC IND(N'tempdb', N'dbo.T1', 0);

I prepared the following piece of code to spare you from having to browse through the output of DBCC IND in an attempt to figure out the index leaf layout:

CREATE TABLE #DBCCIND

(

PageFID INT,

PagePID INT,

IAMFID INT,

IAMPID INT,

ObjectID INT,

IndexID INT,

PartitionNumber INT,

PartitionID BIGINT,

iam_chain_type VARCHAR(100),

PageType INT,

IndexLevel INT,

NextPageFID INT,

NextPagePID INT,

PrevPageFID INT,

PrevPagePID INT

);

INSERT INTO #DBCCIND

EXEC (N'DBCC IND(N''tempdb'', N''dbo.T1'', 0)');

CREATE CLUSTERED INDEX idx_cl_prevpage ON #DBCCIND(PrevPageFID, PrevPagePID);

WITH LinkedList

AS

(

SELECT 1 AS RowNum, PageFID, PagePID

FROM #DBCCIND

WHERE IndexID = 1

AND IndexLevel = 0

AND PrevPageFID = 0

AND PrevPagePID = 0

UNION ALL

SELECT PrevLevel.RowNum + 1,

CurLevel.PageFID, CurLevel.PagePID

FROM LinkedList AS PrevLevel

JOIN #DBCCIND AS CurLevel

ON CurLevel.PrevPageFID = PrevLevel.PageFID

AND CurLevel.PrevPagePID = PrevLevel.PagePID

)

SELECT

CAST(PageFID AS VARCHAR(MAX)) + ':'

+ CAST(PagePID AS VARCHAR(MAX)) + ' ' AS [text()]

FROM LinkedList

ORDER BY RowNum

FOR XML PATH('')

OPTION (MAXRECURSION 0);

DROP TABLE #DBCCIND;

The code stores the output of DBCC IND in a temp table, then it uses a recursive query to follow the linked list from head to tail, and then it uses a technique based on the FOR XML PATH option to concatenate the addresses of the leaf pages into a single string in linked list order. I got the following output on my system:

1:132098 1:111372 1:133098 1:125591 1:137567 1:118198 1:128938 1:117929 1:136036 1:128595...

It’s easy to observe logical fragmentation here. For example, page 1:132098 points to the page 1:111372, which is earlier in the file.

Next, run the following code to query T1:

SELECT SUBSTRING(CAST(clcol AS BINARY(16)), 11, 6) AS segment1, *

FROM dbo.T1;

The last 6 bytes of a UNIQUEIDENTIFIER value represent the first segment that determines ordering; therefore, I extracted that segment with the SUBSTRING function so that it would be easy to see whether the rows are returned in index order. The execution plan of this query indicates a Clustered Index Scan, Ordered: False. However, because the environment is not read-only and the isolation level is the default Read Committed, the storage engine uses an index order scan. This query returns the rows in the output in index order. For example, here’s the output that I got on my system:

segment1 clcol filler

-------------- ------------------------------------ -------

0x0000ED83A06E F5F5CA72-48F6-4716-BBDC-0000ED83A06E a

0x0002672771DF 7B3D64FE-9197-487E-A354-0002672771DF a

0x0002EE4AF130 7D4A671D-5FBD-4B37-831C-0002EE4AF130 a

0x000395E30408 2A670CC5-5459-4506-9DCE-000395E30408 a

0x0004BD69D4ED 40CB1A42-48C7-4D9C-A5F4-0004BD69D4ED a

0x0005E14203C0 DCFFE73A-2125-490F-913B-0005E14203C0 a

0x00067DD63977 B49C5103-01E7-4745-B1C3-00067DD63977 a

0x0007B82DD187 1157F2E9-AD7E-4795-850F-0007B82DD187 a

0x0007BC012CC0 93ED4A5C-3AAD-4686-8CA1-0007BC012CC0 a

0x0007E73BEFB8 732072E7-A767-48A3-B2CF-0007E73BEFB8 a

...

Query the table again, this time with the NOLOCK hint:

SELECT SUBSTRING(CAST(clcol AS BINARY(16)), 11, 6) AS segment1, *

FROM dbo.T1 WITH (NOLOCK);

This time, the storage engine employs an allocation order scan of the unsafe category. Here’s the output I got from this code on my system:

segment1 clcol filler

-------------- ------------------------------------ -------

0x03D5CA43F8AE 7E1A28DE-B712-4F71-A553-03D5CA43F8AE a

0x426CDFD8DDB3 003EFE48-180E-4A8D-B6E1-426CDFD8DDB3 a

0x426D6A3FFC2C 9239BF31-1AA2-47F0-8B50-426D6A3FFC2C a

0x426E5C9673AD 2F0A49B8-2A7E-4C20-A22E-426E5C9673AD a

0x5EEBC295A84A 3743277C-0C72-48CF-A6B5-5EEBC295A84A a

0x97A52864E1D8 131BDF97-E015-42E2-B884-97A52864E1D8 a

0x78D967590D8C 7AA0F5BF-0BF1-4689-B851-78D967590D8C a

0x78DA17132327 F40CC88B-FC08-4534-842C-78DA17132327 a

0x78DACE8A159E 90BE7781-301F-48AB-A398-78DACE8A159E a

0x20C225A8A10D 547BD309-7804-4969-8A7F-20C225A8A10D a

...

Notice that this time the rows are not returned in index order. If splits occur while such a read is in progress, the read might end up returning multiple occurrences of rows and skipping rows.

As an example of an allocation order scan of the safe category, run the query with the TABLOCK hint:

SELECT SUBSTRING(CAST(clcol AS BINARY(16)), 11, 6) AS segment1, *

FROM dbo.T1 WITH (TABLOCK);

Here, even though the code is running under the Read Committed isolation level, the storage engine knows that it is safe to use an allocation order scan because no one can change the data during the read. I got the following output back from this query:

segment1 clcol filler

-------------- ------------------------------------ -------

0x03D5CA43F8AE 7E1A28DE-B712-4F71-A553-03D5CA43F8AE a

0x426CDFD8DDB3 003EFE48-180E-4A8D-B6E1-426CDFD8DDB3 a

0x426D6A3FFC2C 9239BF31-1AA2-47F0-8B50-426D6A3FFC2C a

0x426E5C9673AD 2F0A49B8-2A7E-4C20-A22E-426E5C9673AD a

0x5EEBC295A84A 3743277C-0C72-48CF-A6B5-5EEBC295A84A a

0x97A52864E1D8 131BDF97-E015-42E2-B884-97A52864E1D8 a

0x78D967590D8C 7AA0F5BF-0BF1-4689-B851-78D967590D8C a

0x78DA17132327 F40CC88B-FC08-4534-842C-78DA17132327 a

0x78DACE8A159E 90BE7781-301F-48AB-A398-78DACE8A159E a

0x20C225A8A10D 547BD309-7804-4969-8A7F-20C225A8A10D a

...

Next I’ll demonstrate how an unsafe allocation order scan can return multiple occurrences of rows. Open two connections (call them connection 1 and connection 2). Run the following code in connection 1 to insert rows into T1 in an infinite loop, causing frequent splits:

SET NOCOUNT ON;

USE tempdb;

TRUNCATE TABLE dbo.T1;

WHILE 1 = 1

INSERT INTO dbo.T1 DEFAULT VALUES;

Run the following code in connection 2 to read the data in a loop while connection 1 is inserting data:

SET NOCOUNT ON;

USE tempdb;

WHILE 1 = 1

BEGIN

SELECT * INTO #T1 FROM dbo.T1 WITH(NOLOCK);

IF EXISTS(

SELECT clcol

FROM #T1

GROUP BY clcol

HAVING COUNT(*) > 1) BREAK;

DROP TABLE #T1;

END

SELECT clcol, COUNT(*) AS cnt

FROM #T1

GROUP BY clcol

HAVING COUNT(*) > 1;

DROP TABLE #T1;

The SELECT statement uses the NOLOCK hint, and the plan shows Clustered Index Scan, Ordered: False, meaning that the storage engine will likely use an allocation order scan of the unsafe category. The SELECT INTO statement stores the output in a temporary table so that it will be easy to prove that rows were read multiple times. In each iteration of the loop, after reading the data into the temp table, the code checks for multiple occurrences of the same GUID in the temp table. This can happen only if the same row was read more than once. If duplicates are found, the code breaks from the loop and returns the GUIDs that appear more than once in the temp table. When I ran this code, after a few seconds I got the following output in connection 2 showing all the GUIDs that were read more than once:

clcol cnt

------------------------------------ -----------

F144911F-44B8-4AC7-9396-D2C26DBB9E2E 2

990B829E-739D-4AA1-BD59-F55DFF8E9530 2

9A02C46B-389E-45A1-AC07-90DB4115D43B 2

B5BAF81C-B2B5-492A-9E9A-DB566E4A5A98 2

132255C8-63F5-4DB7-9126-D37C1F321868 2

69A77E96-B748-48A8-94A2-85B6A270F5D1 2

7F3C7E7A-8181-44BB-8D0F-0F39AA001DC2 2

B5C5C70D-B721-4225-8455-F56910DA2CD1 2

9E2588DA-CB57-4CA0-A10D-F08B0306B6A6 2

F50AA680-E754-4B61-8335-F08A3C965924 2

...

At this point, you can stop the code in connection 1.

If you want, you can rerun the test without the NOLOCK hint and see that the code in connection 2 doesn’t stop, because duplicate GUIDs are not found.

Next I’ll demonstrate an unsafe allocation order scan that skips rows. Run the following code to create the tables T1 and MySequence:

-- Create table T1

SET NOCOUNT ON;

USE tempdb;

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

CREATE TABLE dbo.T1

(

clcol UNIQUEIDENTIFIER NOT NULL DEFAULT(NEWID()),

seqval INT NOT NULL,

filler CHAR(2000) NOT NULL DEFAULT('a')

);

CREATE UNIQUE CLUSTERED INDEX idx_clcol ON dbo.T1(clcol);

-- Create table MySequence

IF OBJECT_ID(N'dbo.MySequence', N'U') IS NOT NULL DROP TABLE dbo.MySequence;

CREATE TABLE dbo.MySequence(val INT NOT NULL);

INSERT INTO dbo.MySequence(val) VALUES(0);

The table T1 is similar to the one used in the previous demonstration, but this one has an additional column called seqval that will be populated with sequential integers. The table MySequence holds the last-used sequence value (populated initially with 0), which will be incremented by 1 before each insert to T1. To prove that a scan skipped rows, you simply need to show that the output of the scan has gaps between contiguous values in the seqval column. I’m not using the built-in sequence object in my test because SQL Server doesn’t guarantee that you won’t have gaps between sequence values, regardless of the cache setting you use. To demonstrate this behavior, open two connections (again, call them connection 1 and connection 2). Run the following code from connection 1 to insert rows into T1 in an infinite loop, and increment the sequence value by 1 in each iteration:

SET NOCOUNT ON;

USE tempdb;

UPDATE dbo.MySequence SET val = 0;

TRUNCATE TABLE dbo.T1;

DECLARE @nextval AS INT;

WHILE 1 = 1

BEGIN

UPDATE dbo.MySequence SET @nextval = val += 1;

INSERT INTO dbo.T1(seqval) VALUES(@nextval);

END

Run the following code in connection 2 while the inserts are running in connection 1:

SET NOCOUNT ON;

USE tempdb;

DECLARE @max AS INT;

WHILE 1 = 1

BEGIN

SET @max = (SELECT MAX(seqval) FROM dbo.T1);

SELECT * INTO #T1 FROM dbo.T1 WITH(NOLOCK);

CREATE NONCLUSTERED INDEX idx_seqval ON #T1(seqval);

IF EXISTS(

SELECT *

FROM (SELECT seqval AS cur,

(SELECT MIN(seqval)

FROM #T1 AS N

WHERE N.seqval > C.seqval) AS nxt

FROM #T1 AS C

WHERE seqval <= @max) AS D

WHERE nxt - cur > 1) BREAK;

DROP TABLE #T1;

END