T-SQL Querying (2015)

Chapter 3. Multi-table queries

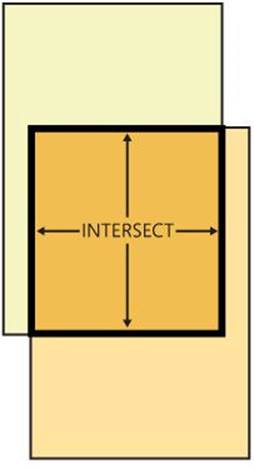

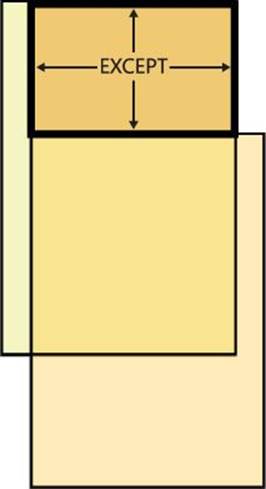

This chapter’s focus is multi-table queries. It covers subqueries, table expressions, the APPLY operator, joins, and the relational operators UNION, EXCEPT, and INTERSECT.

Chapters 1 and 2 provided the foundation for the rest of the book. Chapter 1, “Logical query processing,” described the logical layer of T-SQL, and Chapter 2, “Query tuning,” described the physical layer. Each layer was described separately to emphasize its unique role—the logical layer’s role is to define meaning, and the physical layer’s role is to implement, or carry out, what the logical layer defines. The physical layer is not supposed to define meaning. Starting with this chapter, the coverage relies on the foundation provided in the previous chapters, with discussions revolving around both the logical and the physical aspects of the code.

Subqueries

Subqueries are a classic feature in SQL that you use to nest queries. Using subqueries is a convenient capability in the language when you want one query to operate on the result of another, and if you prefer not to use intermediate objects like variables for this purpose.

This section covers different aspects of subqueries. It covers self-contained versus correlated subqueries, the EXISTS predicate, and misbehaving subqueries. Subqueries can return three shapes of results: single-valued (scalar), multi-valued, and table-valued. This section covers the first two. The last type is covered later in the chapter in the “Table expressions” section.

Self-contained subqueries

A self-contained subquery is, as it sounds, independent of the outer query. In contrast, a correlated subquery has references (correlations) to columns of tables from the outer query. One of the advantages of self-contained subqueries compared to correlated ones is the ease of troubleshooting. You can always copy the inner query to a separate window and troubleshoot it independently. When you’re done troubleshooting and fixing what you need, you paste the subquery back in the host query. I’ll start by providing examples for scalar and multi-valued self-contained subqueries, and then transition to correlated ones.

The first example I’ll discuss is of a self-contained, scalar subquery. A scalar subquery returns a single value and is allowed where a single-valued expression is expected—for example, as an operand in a comparison in the WHERE clause. As an example, the following query returns customers for whom all employees in the organization handled orders:

SET NOCOUNT ON;

USE TSQLV3;

SELECT custid

FROM Sales.Orders

GROUP BY custid

HAVING COUNT(DISTINCT empid) = (SELECT COUNT(*) FROM HR.Employees);

As an aside, this query handles a simple case of what relational algebra refers to as relational division. You are dividing the set of orders in the Orders table (which connects customers and employees) by the set of employees in the Employees table, and you get back qualifying customers.

The subquery returns the count of employees in the Employees table. The outer query groups the orders from the Orders table by customer ID, and it filters only customer groups having a distinct count of employee IDs that is equal to the count of employees returned by the subquery. Obviously, this query assumes that proper referential integrity is enforced in the database, preventing an order from having an employee ID that doesn’t appear in the Employees table. The subquery returns 9 as the count of employees in the Employees table. The outer query returns customer ID 71 because that’s the only customer with orders handled by 9 distinct employees.

Note that if a scalar subquery returns an empty set, it’s converted to a NULL. If it returns more than one value, it fails at run time. Neither option is a possibility in our case, because the scalar aggregate subquery is guaranteed to return exactly one value, even if the source table is empty.

The next example is of a self-contained, multi-valued subquery. A multi-valued subquery returns multiple values in one column. It can be used where a multi-valued result is expected—for example, with the IN predicate.

The task in our example is to return orders that were placed on the last date of activity of the month. The last date of activity is not necessarily the last possible date of the month—for example, if the company doesn’t handle orders on weekends and holidays. The first step in the solution is to write a grouped query that returns the last date of activity for each year and month group, like so:

SELECT MAX(orderdate) AS lastdate

FROM Sales.Orders

GROUP BY YEAR(orderdate), MONTH(orderdate);

This query returns the following output:

lastdate

----------

2014-01-31

2014-07-31

2015-05-06

2014-04-30

2014-10-31

2015-02-27

2013-12-31

2014-05-30

2015-03-31

2013-09-30

...

This query is a bit unusual for a grouped query. Usually, you group by certain elements and return the grouped elements and aggregates. Here you group by the order year and month, and you return only the aggregate without the group elements. But if you think about it, the maximum date for a year and month group preserves within it the year and month information, so the group information isn’t really lost here.

The second and final step is to query the Orders table and filter only orders with order dates that appear in the set of dates returned by the query from the previous step. Here’s the complete solution query:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders

WHERE orderdate IN

(SELECT MAX(orderdate)

FROM Sales.Orders

GROUP BY YEAR(orderdate), MONTH(orderdate));

This query generates the following output:

orderid orderdate custid empid

----------- ---------- ----------- -----------

10269 2013-07-31 89 5

10294 2013-08-30 65 4

10317 2013-09-30 48 6

10343 2013-10-31 44 4

10368 2013-11-29 20 2

...

Correlated subqueries

Correlated subqueries have references known as correlations to columns from tables in the outer query. They tend to be trickier to troubleshoot when problems occur because you cannot run them independently. If you copy the inner query and paste it in a new window (to make it runnable), you have to substitute the correlations with constants representing sample values from your data. But then when you’re done troubleshooting and fixing what you need, you have to replace the constants back with the correlations. This makes troubleshooting correlated subqueries more complex and more prone to errors.

As the first example for a correlated subquery, consider a task similar to the last one in the previous section. The task was to return the orders that were placed on the last date of activity of the month. Our new task is to return the orders that were placed on the last date of activity of thecustomer. Seemingly, the only difference is that instead of the group being the year and month, it’s now the customer. So if you take the last query and replace the grouping set from YEAR(orderdate), MONTH(orderdate) with custid, you get the following subquery.

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders

WHERE orderdate IN

(SELECT MAX(orderdate)

FROM Sales.Orders

GROUP BY custid);

However, now there is a bug in the solution. The values returned by the subquery this time don’t preserve the group (customer) information. Suppose that for customer 1 the maximum date is some d1 date, and for customer 2, the maximum date is some later d2 date; if customer 2 happens to place orders on d1, you will get those too, even though you’re not supposed to. To fix the bug, you need the inner query to operate only on the orders that were placed by the customer from the outer row. For this, you need a correlation, like so:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders AS O1

WHERE orderdate IN

(SELECT MAX(O2.orderdate)

FROM Sales.Orders AS O2

WHERE O2.custid = O1.custid

GROUP BY custid);

Now the solution is correct, but there are a couple of awkward things about it. For one, you’re filtering only one customer and also grouping by the customer, so you will get only one group. In such a case, it’s more natural to express the aggregate as a scalar aggregate without the explicit GROUP BY clause. For another, the subquery will return only one value. So, even though the IN predicate is going to work correctly, it’s more natural to use an equality operator in such a case. After applying these two changes, you get the following solution:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders AS O1

WHERE orderdate =

(SELECT MAX(O2.orderdate)

FROM Sales.Orders AS O2

WHERE O2.custid = O1.custid);

This code generates the following output:

orderid orderdate custid empid

----------- ---------- ----------- -----------

11044 2015-04-23 91 4

11005 2015-04-07 90 2

11066 2015-05-01 89 7

10935 2015-03-09 88 4

11025 2015-04-15 87 6

...

10972 2015-03-24 40 4

10973 2015-03-24 40 6

11028 2015-04-16 39 2

10933 2015-03-06 38 6

11063 2015-04-30 37 3

...

Currently, if a customer placed multiple orders on its last date of activity, the query returns all those orders. As an example, observe that the query output contains multiple orders for customer 40. Suppose you are required to return only one order per customer. In such a case, you need to define a rule for breaking the ties in the order date. For example, the rule could be to break the ties based on the maximum primary key value (order ID, in our case). In the case of the two orders that were placed by customer 40 on March 24, 2015, the maximum primary key value is 10973. Note that that’s the maximum primary key value from the customer’s orders with the maximum order date—not from all the customer’s orders.

You can use a number of methods to apply the tiebreaking logic. One method is to use a separate scalar aggregate subquery for each ordering and tiebreaking element. Here’s a query demonstrating this technique:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders AS O1

WHERE orderdate =

(SELECT MAX(orderdate)

FROM Sales.Orders AS O2

WHERE O2.custid = O1.custid)

AND orderid =

(SELECT MAX(orderid)

FROM Sales.Orders AS O2

WHERE O2.custid = O1.custid

AND O2.orderdate = O1.orderdate);

The first subquery is the same as in the solution without the tiebreaker. It’s responsible for returning the maximum order date for the outer customer. The second subquery returns the maximum order ID for the outer customer and order date. The problem with this approach is that you need as many subqueries as the number of ordering and tiebreaking elements. Each subquery needs to be correlated by all elements you correlated in the previous subqueries plus a new one. So, especially when multiple tiebreakers are involved, this solution becomes long and cumbersome.

To simplify the solution, you can collapse all subqueries into one by using TOP instead of scalar aggregates. The TOP filter has two interesting advantages in its design compared to aggregates. One advantage is that TOP can return one element while ordering by another, whereas aggregates consider the same element as both the ordering and returned element. Another advantage is that TOP can have a vector of elements defining order, whereas aggregates are limited to a single input element. These two advantages allow you to handle all ordering and tiebreaking elements in the same subquery, like so:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders AS O1

WHERE orderid =

(SELECT TOP (1) orderid

FROM Sales.Orders AS O2

WHERE O2.custid = O1.custid

ORDER BY orderdate DESC, orderid DESC);

The outer query filters only the orders where the key is equal to the key obtained by the TOP subquery representing the customer’s most recent order, with the maximum key used as a tiebreaker. To obtain the qualifying key, the subquery filters the orders for the outer customer, orders them by orderdate DESC, orderid DESC, and returns the key of the very top row.

This type of task is generally known as the top N per group task and is quite common in practice in different variations. It is discussed in a number of places in the book, with the most complete coverage conducted in Chapter 5, “TOP and OFFSET-FETCH.” Here it is discussed mainly to demonstrate subqueries.

At this point, you have a simple and intuitive solution using the TOP filter. The question is, how well does it perform? In terms of indexing guidelines, solutions for top N per group tasks usually benefit from a specific indexing pattern that I like to think of as the POC pattern. That’s an acronym for the elements involved in the task. P stands for the partitioning (the group) element, which in our case is custid. O stands for the ordering element, which in our case is orderdate DESC, orderid DESC. The PO elements make the index key list. C stands for the covered elements—namely, all remaining elements in the query that you want to include in the index to get query coverage. In our case, the C element is empid. If the index is nonclustered, you specify the C element in the index INCLUDE clause. If the index is clustered, the C element is implied. Run the following code to create a POC index to support your solution:

CREATE UNIQUE INDEX idx_poc

ON Sales.Orders(custid, orderdate DESC, orderid DESC) INCLUDE(empid);

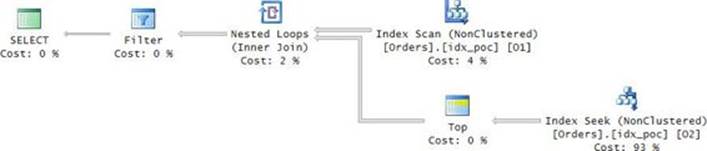

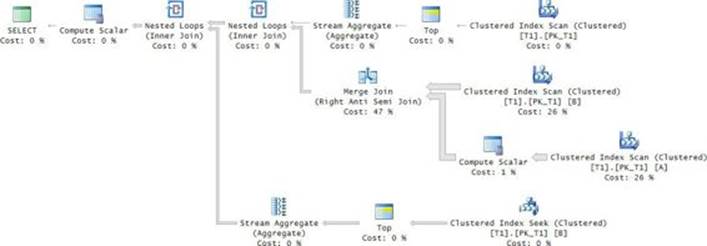

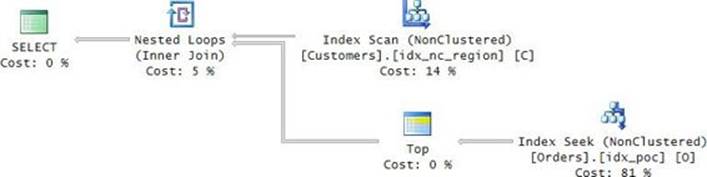

With the index created, you get the plan shown in Figure 3-1 for the solution with the TOP subquery.

FIGURE 3-1 Plan with a seek per order.

The plan does a full scan of the POC index representing the instance O1 of the Orders table (the outer instance in the query). Then for each row from O1, the plan performs a seek in the POC index representing the instance O2 of the Orders table (the inner instance in the query). The seek reaches the beginning of the section in the leaf of the index for the current customer and scans one row to collect the key. The plan then filters only the rows from O1 where the O1 key is equal to the O2 key.

There is a certain inefficiency in this plan that can be optimized. Think about how many times the Index Seek operator is executed. Currently, you get a seek per order, but you are after only one row per customer. So a more efficient strategy is to apply a seek per customer. The denser thecustid column, the fewer seeks such a strategy would require. To make the performance discussion more concrete, suppose the data has 10,000 customers with an average of 1,000 orders each, giving you 10,000,000 orders in total. The existing plan performs 10,000,000 seeks. With three levels in the index B-tree, this translates to 30,000,000 random reads. That’s extremely inefficient. If you figure out a way to get a plan that performs a seek per customer, you would get only 10,000 seeks. Such a plan can be achieved using the CROSS APPLY operator, as I will demonstrate later in this chapter. For now, I’ll limit the solution to using only plain subqueries because that’s the focus of this section.

To get a more efficient plan using subqueries, you implement the solution in two steps. In one step, you query the Customers table, and with a TOP subquery similar to the previous one you return the qualifying order ID for each customer, like so:

SELECT

(SELECT TOP (1) orderid

FROM Sales.Orders AS O

WHERE O.custid = C.custid

ORDER BY orderdate DESC, orderid DESC) AS orderid

FROM Sales.Customers AS C;

This query generates the following output showing just the qualifying order IDs:

orderid

-----------

11011

10926

10856

11016

10924

11058

10826

10970

11076

11023

...

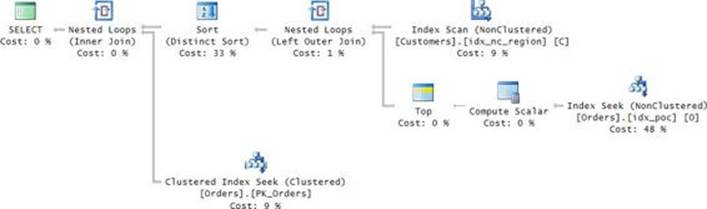

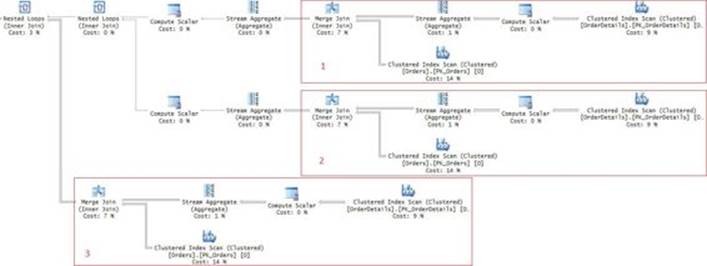

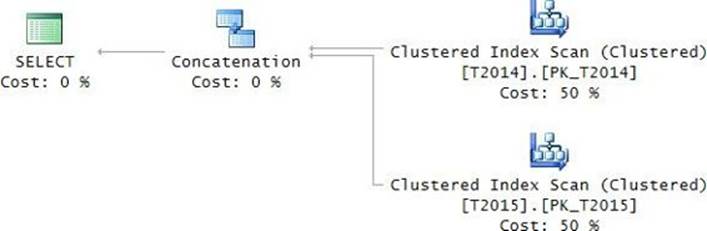

The plan for this query is shown in Figure 3-2.

FIGURE 3-2 Plan with a seek per customer.

The critical difference between this plan and the one in Figure 3-1 is that this one applies a seek per customer, not per order. This plan performs 10,000 seeks, costing you 30,000 random reads.

The second step is to query the Orders table to return information about orders whose keys appear in the set of keys returned by the query implementing the first step, like so:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders

WHERE orderid IN

(SELECT

(SELECT TOP (1) orderid

FROM Sales.Orders AS O

WHERE O.custid = C.custid

ORDER BY orderdate DESC, orderid DESC)

FROM Sales.Customers AS C);

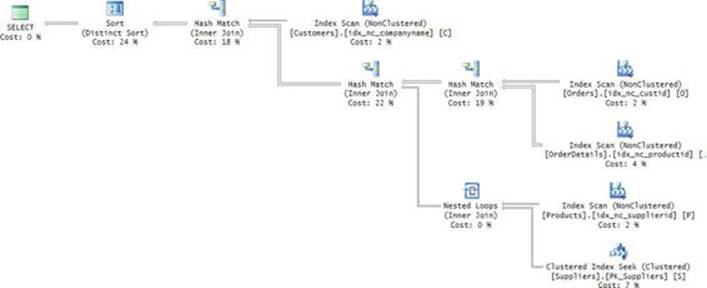

The query plan for the complete solution is shown in Figure 3-3.

FIGURE 3-3 Complete plan with a seek per customer.

In addition to the work described for the first step of the solution (retrieving the qualifying keys), this plan also performs 10,000 seeks in the index PK_Orders to obtain the desired order attributes for the qualifying orders. That’s after sorting the keys to optimize the seeks. This part adds 10,000 more seeks, giving you a total of 20,000 seeks and resulting in 60,000 random reads. That’s a factor of 500 compared to the previous solution!

When you’re done, run the following code for cleanup:

DROP INDEX idx_poc ON Sales.Orders;

The EXISTS predicate

The EXISTS predicate accepts a subquery as input and returns true or false, depending on whether the subquery returns a nonempty set or an empty one, respectively. As a basic example, the following query returns customers who placed orders:

SELECT custid, companyname

FROM Sales.Customers AS C

WHERE EXISTS (SELECT * FROM Sales.Orders AS O

WHERE O.custid = C.custid);

The predicate is natural to use because it allows you to express requests like the preceding one in SQL in a manner that is similar to the way you express them in a spoken language.

A curious thing about EXISTS is that, unlike most predicates in SQL, it uses two-valued logic. It returns either true or false. It cannot return unknown because there’s no situation where it doesn’t know whether the subquery returns at least one row or none. Pardon the triple-negative form of the last sentence, but for the purposes of this chapter, you want to practice this kind of logic. If the inner query involves a filter, the rows for which the filter predicate evaluates to unknown because of the presence of NULLs are discarded. Still, the EXISTS predicate always knows with certainty whether the result set is empty or not. In contrast to EXISTS, the IN predicate, for example, uses three-valued logic. Consequently, a query using EXISTS can have a different logical meaning than a query using IN when you negate the predicate and NULLs are possible in the data. I’ll demonstrate this later in the chapter.

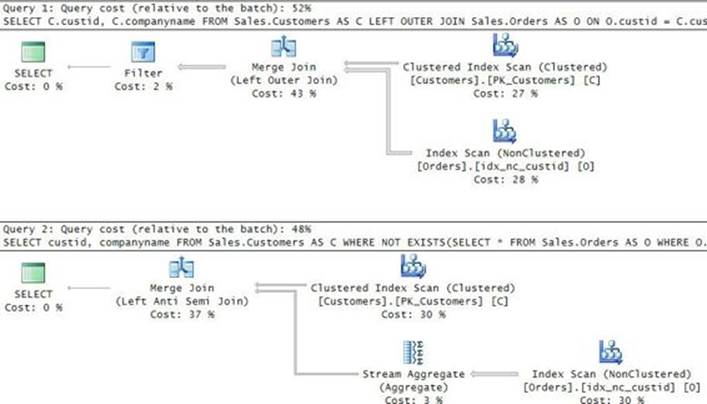

Another interesting thing about EXISTS is that, from an indexing selection perspective, the optimizer ignores the subquery’s SELECT list. For example, the Orders table has an index called idx_nc_custid defined on the custid column as the key with no included columns. Observe the query plan shown in Figure 3-4 for the last EXISTS query.

FIGURE 3-4 Plan for EXISTS with SELECT *.

Notice that the only index the plan uses from the Orders table is idx_nc_custid, despite the fact that the subquery uses SELECT *. In other words, this index is considered a covering one for the purposes of this subquery. That’s proof that the SELECT list is ignored for index-selection purposes.

Next I’ll demonstrate a couple of classic tasks that can be handled using the EXISTS predicate. I’ll use a table called T1, which you create and populate by running the following code:

SET NOCOUNT ON;

USE tempdb;

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

CREATE TABLE dbo.T1(col1 INT NOT NULL CONSTRAINT PK_T1 PRIMARY KEY);

INSERT INTO dbo.T1(col1) VALUES(1),(2),(3),(7),(8),(9),(11),(15),(16),(17),(28);

The key column in the table is col1. Suppose you have a key generator that generates consecutive keys starting with 1, but because of deletions you have gaps. One classic challenge is to identify the minimum missing value. To test the correctness of your solution, you can use the small set of sample data that the preceding code generates. For performance purposes, you want to populate the table with a much bigger set. Use the following code to populate it with 10,000,000 rows:

TRUNCATE TABLE dbo.T1;

INSERT INTO dbo.T1 WITH (TABLOCK) (col1)

SELECT n FROM TSQLV3.dbo.GetNums(1, 10000000) AS Nums WHERE n % 10000 <> 0

OPTION(MAXDOP 1);

You can use a CASE expression that returns 1 if 1 doesn’t already exist in the table. The trickier part is identifying the minimum missing value when 1 does exist. One way to achieve this is using the following query:

SELECT MIN(A.col1) + 1 AS missingval

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE B.col1 = A.col1 + 1);

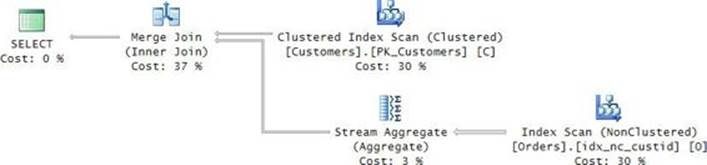

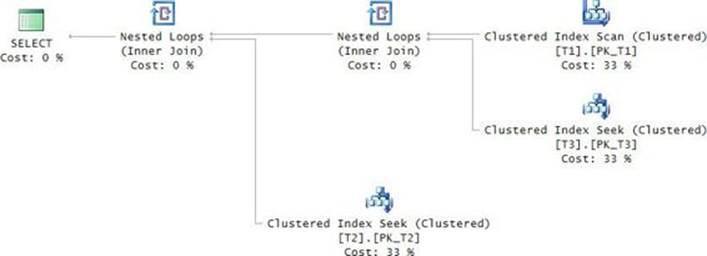

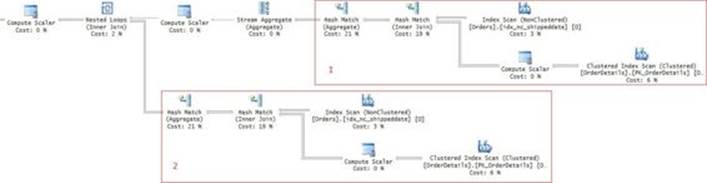

The first step in this code is to filter the rows from T1 (aliased as A) where the value in A appears before a missing value. That way, you know the value in A appears before a missing value because you cannot find another value in T1 (aliased as B) where the B value is greater than the A value by 1. Then, from all remaining values in A, you return the minimum plus one. Perhaps you expected the query to finish instantly; after all, there is an index on col1 (PK_T1). Theoretically, you should be able to scan the index in order and short-circuit the work as soon as the first point before a missing value is found. But it takes this query a good few seconds to complete against the large set of sample data. On my system, it took it five seconds to complete. To understand why the query is slow, examine the query plan shown in Figure 3-5.

FIGURE 3-5 Plan for a minimum missing value, first attempt.

Observe that the index on col1 is fully scanned twice. The Merge Join operator is used to apply a right anti semi join to detect nonmatches. The Stream Aggregate operator identifies the minimum. The critical thing in this plan that makes it inefficient is that the input is scanned twice fully. There’s no short-circuit even though the scans are ordered.

One attempt to optimize the query is to use the TOP filter instead of the MIN aggregate, like so:

SELECT TOP (1) A.col1 + 1 AS missingval

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE B.col1 = A.col1 + 1)

ORDER BY A.col1;

But again, the query is slow. Examine the execution plan of this query, as shown in Figure 3-6.

FIGURE 3-6 Plan for a minimum missing value, second attempt.

The Merge Join predicate considers the A input as sorted by Expr1003 (col1 + 1) and the B input by col1 (T1.col1). Because the outer query orders the rows by A.col1 and not by A.col1 + 1, the optimizer doesn’t realize the rows are already sorted and adds a Sort (Top N Sort) operator that consumes the entire input to determine the top 1 row. To fix this, simply change the outer query’s ORDER BY to A.col1 + 1, like so:

SELECT TOP (1) A.col1 + 1 AS missingval

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE B.col1 = A.col1 + 1)

ORDER BY A.col1 + 1;

Observe the plan shown in Figure 3-7.

FIGURE 3-7 Plan for a minimum missing value, third attempt.

Now the optimizer realizes that the Merge Join operator returns the rows sorted the same way the TOP filter needs them, so it can short-circuit as soon as the first matching row is found. The query finishes in sub-second time.

Alternatively, you will also get an optimal plan if you change the inner query’s WHERE filter from B.col1 = A.col1 + 1 to A.col1 = B.col1 – 1, and the ORDER BY clause from A.col1 + 1 to A.col1, like so:

SELECT TOP (1) A.col1 + 1 AS missingval

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE A.col1 = B.col1 - 1)

ORDER BY A.col1;

Now add the logic with the CASE expression to return 1 when 1 doesn’t exist and to return the result of the subquery (using one of the efficient solutions) otherwise. You get the following complete solution:

SELECT

CASE

WHEN NOT EXISTS(SELECT * FROM dbo.T1 WHERE col1 = 1) THEN 1

ELSE (SELECT TOP (1) A.col1 + 1 AS missingval

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE B.col1 = A.col1 + 1)

ORDER BY missingval)

END AS missingval;

Another classic task involving a sequence of values is to identify all ranges of missing values. In our case, we’ll look for the ones missing between the minimum and maximum that exist in the table. This task is generally known as identifying gaps. Here, unlike with the minimum missing-value task, there’s no potential for a short circuit. As the first step, you can use the same query you used in the previous task to find all values that appear before a gap:

SELECT col1

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE B.col1 = A.col1 + 1);

You get the following output against the small set of sample data:

col1

-----------

3

9

11

17

28

Observe that you also get the maximum value in the table (28) because that value plus 1 doesn’t exist. But this value doesn’t represent a gap you want to return. To exclude it from the output, add the following predicate to the outer query’s filter:

AND col1 < (SELECT MAX(col1) FROM dbo.T1)

Now that you are left with only values that appear before gaps, you need to identify for each current value the related value that appears right after the gap. You can do this in the outer query’s SELECT list with the following subquery, which returns for each current value the minimum value that is greater than the current one:

(SELECT MIN(B.col1)

FROM dbo.T1 AS B

WHERE B.col1 > A.col1)

You might think it’s a bit strange to use the alias B both here and in the subquery that checks that the value plus 1 doesn’t exist. However, because the two subqueries have independent scopes, there’s no problem with that.

Last, you add one to the value before the gap and subtract one from the value after the gap to get the actual gap information. Putting it all together, here’s the complete solution:

SELECT col1 + 1 AS range_from,

(SELECT MIN(B.col1)

FROM dbo.T1 AS B

WHERE B.col1 > A.col1) - 1 AS range_to

FROM dbo.T1 AS A

WHERE NOT EXISTS

(SELECT *

FROM dbo.T1 AS B

WHERE B.col1 = A.col1 + 1)

AND col1 < (SELECT MAX(col1) FROM dbo.T1);

This query generates the following output:

range_from range_to

----------- -----------

4 6

10 10

12 14

18 27

The execution plan for this solution is shown in Figure 3-8.

FIGURE 3-8 Plan for the gaps query.

The plan is quite efficient, especially when there’s a small number of gaps. The three rightmost operators in the upper branch handle the computation of the maximum col1 value in the table. The value is obtained from the tail of the index, so the work is negligible. The middle section uses a Merge Join (Right Anti Semi Join) operator to identify the values that appear before gaps. The merge algorithm relies on two ordered scans of the index on col1. This strategy is much more efficient than the alternative, which is to apply an index seek per original value in the sequence. Finally, the bottom branch applies an index seek per filtered value (a value that appears before a gap) to identify the respective value after the gap. What’s important here is that you get an index seek per filtered value (namely, per gap) and not per original value. So, especially when the number of gaps is small, you get a small number of seeks. For example, the large set of sample data has 10,000,000 rows with 1,000 gaps. With this sample data, the query finishes in five seconds on my system. Chapter 4, “Grouping, pivoting, and windowing,” continues the discussion about identifying gaps and shows additional solutions.

When you’re done, run the following code for cleanup:

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

One of the interesting things about T-SQL querying tasks is that, for any given problem, there are usually many possible solutions. Often, different solutions get different execution plans. So it’s important to develop the skill of solving querying tasks in different ways and observing carefully in each case how the optimizer handles things. Later, when you tune a query and need to achieve a certain effect on the plan, it’s easier if you already know which change is likely to give you such an effect—for example, by comparing how SQL Server handles a solution based on a subquery versus one that uses a join, how it handles a solution using an aggregate versus one that uses the TOP filter, and so on. Another example for different approaches is applying positive logic versus negative logic. For instance, recall from the beginning of the chapter the task to identify customers for whom all employees handled orders. The solution I presented for the task applied positive logic:

USE TSQLV3;

SELECT custid

FROM Sales.Orders

GROUP BY custid

HAVING COUNT(DISTINCT empid) = (SELECT COUNT(*) FROM HR.Employees);

Another approach is to apply negative logic, but because what you’re after is positive—customers for whom all employees handled orders—you will need two negations to get the same meaning. It’s just like saying that something is common is the same as saying that it is not uncommon. In our case, saying customers for whom all employees handled orders is like saying customers for whom no employees handled no orders. This translates to the following query with two NOT EXISTS predicates, one nested inside the other:

SELECT custid, companyname

FROM Sales.Customers AS C

WHERE NOT EXISTS

(SELECT * FROM HR.Employees AS E

WHERE NOT EXISTS

(SELECT * FROM Sales.Orders AS O

WHERE O.custid = C.custid

AND O.empid = E.empid));

Perhaps the double-negative form is not very intuitive initially, but the good news is that this kind of thinking can improve with practice.

Now you can examine and compare the plans for the positive solutions versus the negative solutions to learn how the optimizer handles things in the different cases. Note, though, that the tables in the TSQLV3 database are very small, so you can’t really do any performance testing with them. You will need much bigger tables to do proper performance analysis and tuning. I demonstrate an example with bigger volumes of data where the double-negative approach enables a very efficient solution in the article “Identifying a Subsequence in a Sequence, Part 2,” which you can find here: http://sqlmag.com/t-sql/identifying-subsequence-in-sequence-part-2.

I found the double-negative approach to be handy in a number of other cases. For example, suppose you have a character string column called sn (for serial number) that is supposed to allow only digits. You want to enforce this rule with a CHECK constraint. You can phrase a predicate that is based on positive logic, but I find the double-negative form to be the most elegant and economic in this case. The positive rule says that every character in the column is a digit. The double-negative form is that no character in the column is not a digit. In T-SQL, this rule translates to the following predicate:

sn NOT LIKE '%[^0-9]%'

Nice and simple!

Misbehaving subqueries

There are common tasks that are handled with subqueries for which you can easily get into trouble with bugs when you don’t follow certain best practices. I’ll describe two such problems: one involving a substitution error in a subquery column name, and another involving unintuitive handling of NULLs in a subquery. I’ll also provide best practices that, if followed, can help you avoid getting into such trouble.

Consider the following T1 and T2 tables and the sample data they are populated with:

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

IF OBJECT_ID(N'dbo.T2', N'U') IS NOT NULL DROP TABLE dbo.T2;

GO

CREATE TABLE dbo.T1(col1 INT NOT NULL);

CREATE TABLE dbo.T2(col2 INT NOT NULL);

INSERT INTO dbo.T1(col1) VALUES(1);

INSERT INTO dbo.T1(col1) VALUES(2);

INSERT INTO dbo.T1(col1) VALUES(3);

INSERT INTO dbo.T2(col2) VALUES(2);

Suppose you need to return the values in T1 that also appear in the set of values in T2. You write the following query (but don’t run it yet).

SELECT col1 FROM dbo.T1 WHERE col1 IN(SELECT col1 FROM dbo.T2);

Before you execute this query, examine the values in both tables and answer the question, which values do you expect to see in the result? Naturally, you expect to see only the value 2 in the result. Now execute the query. You get the following output:

col1

-----------

1

2

3

Can you explain why you’re getting all values from T1 and not just 2?

A close examination of the table definitions and the query code will reveal that the subquery against T2 refers to col1 by mistake, instead of col2. Now the question is, why didn’t the code fail? The way SQL resolves which table the column belongs to is it first looks for the column in the table in the immediate query. If it cannot find it there, like in our case, it looks for it in the table in the outer query. In our case, there is a column named col1 in the outer table; therefore, that one is used. Unintentionally, the inner reference to col1 became a correlated reference. So when the outer col1 value is 1, the inner query selects a 1 for every row in T2. When the outer col1 value is 2, the inner query selects a 2 for every row in T2. You realize that as long as there are rows in T2, and the col1 value is not NULL, you’ll always get a match.

Usually, this type of problem happens when you are not consistent in naming attributes the same way in different tables when they represent the same thing. For example, suppose that in a Customers table you name the column holding the customer ID custid, and in a related Orders table you name the column customerid. Then you write a query similar to the query in our example looking for customers who placed orders, but by mistake you specify custid against both tables. You will get all customers back instead of just the ones who truly placed orders. When the outer statement is SELECT, the query returns an incorrect result with all rows, but you realize that when the outer statement is DELETE, you end up deleting all rows.

There are two best practices that can help you avoid such bugs in your code: one is a long-term recommendation, and the other is a short-term one. The long-term best practice is to pay more attention to naming attributes in different tables the same way when they represent the same thing. The short-term recommendation is to simply prefix the column name with the table name (or alias, if you assigned one), and then you’re not allowing implied resolution. Apply this practice to our sample query:

SELECT col1 FROM dbo.T1 WHERE col1 IN(SELECT T2.col1 FROM dbo.T2);

Now the query fails with a resolution error saying there’s no column called col1 in T2:

Msg 207, Level 16, State 1, Line 278

Invalid column name 'col1'.

Seeing this error, you will of course figure out the problem and fix the query by specifying the right column name, col2, in the subquery.

Another classic case where people get into trouble with subqueries has to do with the complexities that are related to the NULL treatment. To see the problem, re-create and repopulate the T1 and T2 tables with the following code:

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

IF OBJECT_ID(N'dbo.T2', N'U') IS NOT NULL DROP TABLE dbo.T2;

GO

CREATE TABLE dbo.T1(col1 INT NULL);

CREATE TABLE dbo.T2(col1 INT NOT NULL);

INSERT INTO dbo.T1(col1) VALUES(1);

INSERT INTO dbo.T1(col1) VALUES(2);

INSERT INTO dbo.T1(col1) VALUES(NULL);

INSERT INTO dbo.T2(col1) VALUES(2);

INSERT INTO dbo.T2(col1) VALUES(3);

Observe the values in both tables. Suppose you want to return only the values that appear in T2 but not in T1. You write the following code in an attempt to achieve this (but don’t run it yet):

SELECT col1 FROM dbo.T2 WHERE col1 NOT IN(SELECT col1 FROM dbo.T1);

Before you run the code, answer the question, which values do you expect to see in the result? Most people expect to get the value 3 back. Now run the query. You get an empty set back:

col1

-----------

A key to understanding why you get an empty set is remembering that for a WHERE filter to return a row, the predicate needs to evaluate to true; getting false or unknown causes the row to be discarded. It’s clear why you don’t get the value 2 back—because the value 2 does appear in T1 (the IN predicate returns true), you don’t want to see it (the NOT IN is false). The trickier part is to figure out why you don’t get the value 3 back. The answer to the question whether 3 appears in T1 is unknown because the NULL can represent any value. More technically, the IN predicate translates to 3=1 OR 3=2 OR 3=NULL, and the result of this disjunction of predicates is the logical value unknown. Now apply NOT to the result. When you negate unknown, you still get unknown. In other words, just like it’s unknown that 3 appears in T1, it’s also unknown that 3 doesn’t appear in T1.

All this means is that when you use NOT IN with a subquery and at least one of the members of the subquery’s result is NULL, the query will return an empty set. From a SQL perspective, you don’t want to see the values from T2 that appear in T1 exactly, because you know with certainty that they appear in T1, and you don’t want to see the rest of the values from T2, because you don’t know with certainty that they don’t appear in T1. This is one of the absurd implications of NULL handling and the three-valued logic.

You can do certain things to avoid getting into such trouble. For one, if a column is not supposed to allow NULLs, make sure you enforce a NOT NULL constraint. If you don’t, chances are that NULLs will find their way into that column. For another, if the column is indeed supposed to allow NULLs, but for example those NULLs represent a missing and inapplicable value, you need to revise your solution to ignore them. You can achieve this behavior in a couple of ways. One option is to explicitly remove the NULLs in the inner query by adding the filter WHERE col1 IS NOT NULL, like so:

SELECT col1 FROM dbo.T2 WHERE col1 NOT IN(SELECT col1 FROM dbo.T1 WHERE col1 IS NOT NULL);

This time, you get the result you probably expected to begin with:

col1

-----------

3

The other option is to use NOT EXISTS instead of NOT IN, like so:

SELECT col1

FROM dbo.T2

WHERE NOT EXISTS(SELECT * FROM dbo.T1 WHERE T1.col1 = T2.col1);

Here, when the inner query’s filter compares a NULL with anything, the result of the filter predicate is unknown, and rows for which the predicate evaluates to unknown are discarded. In other words, the implicit removal of the NULLs by the filter in this solution has the same effect as the explicit removal of the NULLs in the previous solution.

Table expressions

Table expressions are named queries you interact with similar to the way you interact with tables. T-SQL supports four kinds of table expressions: derived tables, common table expressions (CTEs), views, and inline table-valued functions (inline TVFs). The first two are visible only in the scope of the statement that defines them. With the last two, the definition of the table expression is stored as a permanent object in the database, and anyone with the right permissions can interact with them.

A query against a table expression involves three parts in the code: the inner query, the name that you assign to the table expression (and when relevant, its columns), and the outer query. The inner query is supposed to generate a table result. This means it needs to satisfy three requirements:

![]() The inner query cannot have a presentation ORDER BY clause. It can have an ORDER BY clause to support a TOP or OFFSET-FETCH filter, but then the outer query doesn’t give you assurance that the rows will be presented in any particular order, unless it has its own ORDER BY clause.

The inner query cannot have a presentation ORDER BY clause. It can have an ORDER BY clause to support a TOP or OFFSET-FETCH filter, but then the outer query doesn’t give you assurance that the rows will be presented in any particular order, unless it has its own ORDER BY clause.

![]() All columns must have names. So, if you have a column that results from a computation, you must assign it with a name using an alias.

All columns must have names. So, if you have a column that results from a computation, you must assign it with a name using an alias.

![]() All column names must be unique. So, if you join tables that have columns with the same name and you need to return the columns with the same name, you’ll need to assign them with different aliases. Table names or aliases that are used as prefixes for the column names in intermediate logical-processing phases are removed when a table expression is defined based on the query. Therefore, the column names in the table expression have to be unique as standalone, unqualified, ones.

All column names must be unique. So, if you join tables that have columns with the same name and you need to return the columns with the same name, you’ll need to assign them with different aliases. Table names or aliases that are used as prefixes for the column names in intermediate logical-processing phases are removed when a table expression is defined based on the query. Therefore, the column names in the table expression have to be unique as standalone, unqualified, ones.

As long as a query satisfies these three requirements, it can be used as the inner query in a table expression.

From a physical processing perspective, the inner query’s result doesn’t get persisted anywhere; rather, it is inlined. This means that the outer query and the inner query are merged. When you look at a plan for a query against a table expression, you see the plan interacting with the underlying physical structures directly after the query got inlined. In other words, there’s no physical side to table expressions; rather, they are virtual and should be considered to be a logical tool. The one exception to this rule is when using indexed views—in which case, the view result gets persisted in a B-tree. For details see http://msdn.microsoft.com/en-us/library/ms191432.aspx).

The following sections describe the different kinds of table expressions.

Derived tables

A derived table is probably the type of table expression that most closely resembles a subquery. It is a table subquery that is defined in the FROM clause of the outer query. You use the following form to define and query a derived table:

SELECT <col_list> FROM (<inner_query>) AS <table_alias>[(<target_col_list>)];

Remember that all columns of a table expression must have names; therefore, you have to assign column aliases to all columns that are results of computations. Derived tables support two different syntaxes for assigning column aliases: inline aliasing and external aliasing. To demonstrate the syntaxes, I’ll use a table called T1 that you create and populate by running the following code:

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

GO

CREATE TABLE dbo.T1(col1 INT);

INSERT INTO dbo.T1(col1) VALUES(1);

INSERT INTO dbo.T1(col1) VALUES(2);

Suppose you need the inner query to define a column alias called expr1 for the computation col1 + 1, and then in the outer query refer to expr1 in one of the expressions. Using the inline aliasing form, you specify the computation followed by the AS clause followed by the alias, like so:

SELECT col1, exp1 + 1 AS exp2

FROM (SELECT col1, col1 + 1 AS exp1

FROM dbo.T1) AS D;

Using the external aliasing form, you specify the target column aliases in parentheses right after the derived table name, like so:

SELECT col1, exp1 + 1 AS exp2

FROM (SELECT col1, col1 + 1

FROM dbo.T1) AS D(col1, exp1);

With this syntax, you have to specify names for all columns, even the ones that already have names.

Both syntaxes are standard. Each has its own advantages. What I like about the inline aliasing form is that with long and complex queries it’s easy to identify which alias represents which computation. What I like about the external form is that it’s easy to see in one place which columns the table expression contains. You need to make a decision about which syntax is more convenient for you to use. If you want, you can combine both, like so:

SELECT col1, exp1 + 1 AS exp2

FROM (SELECT col1, col1 + 1 AS exp1

FROM dbo.T1) AS D(col1, exp1);

In case of a conflict, the alias assigned externally is used.

From a language-design perspective, derived tables have two weaknesses. One has to do with nesting and the other with multiple references. Both are results of the derived table being defined in the FROM clause of the outer query. Regarding nesting: if you need to make references from one derived table to another, you need to nest those tables. The problem with nesting is that it can make it hard to follow the logic when you need to review or troubleshoot the code. As an example, consider the following query where derived tables are used to enable the reuse of column aliases:

SELECT orderyear, numcusts

FROM (SELECT orderyear, COUNT(DISTINCT custid) AS numcusts

FROM (SELECT YEAR(orderdate) AS orderyear, custid

FROM Sales.Orders) AS D1

GROUP BY orderyear) AS D2

WHERE numcusts > 70;

The query returns order years and the distinct number of customers handled in each year for years that had more than 70 distinct customers handled.

Trying to figure out what the code does by reading it from top to bottom is tricky because the outer query is interrupted in the middle by the derived table D2, and then the query defining D2 is interrupted in the middle by the derived table D1. Human brains are not very good at analyzing nested units; they are better at analyzing independent modules where you focus your attention on one unit at a time, from start to end, in an uninterrupted way. So, typically what you do to try and make sense out of such nested code is analyze it from the innermost derived table (D1, in this example), and then gradually work outward, rather than analyzing it in a more natural order.

As for physical processing, as mentioned, table expressions don’t get persisted anywhere; instead, they get inlined. This can be seen clearly in the execution plan of the query, as shown in Figure 3-9.

FIGURE 3-9 Table expressions that are inlined.

There are no spool operators representing work tables; rather, the plan interacts with the Orders table directly.

In addition to the nesting aspect, another weakness of derived tables relates to cases where you need multiple references to the same table expression. Take the following query as an example:

SELECT CUR.orderyear, CUR.numorders, CUR.numorders - PRV.numorders AS diff

FROM (SELECT YEAR(orderdate) AS orderyear, COUNT(*) AS numorders

FROM Sales.Orders

GROUP BY YEAR(orderdate)) AS CUR

LEFT OUTER JOIN

(SELECT YEAR(orderdate) AS orderyear, COUNT(*) AS numorders

FROM Sales.Orders

GROUP BY YEAR(orderdate)) AS PRV

ON CUR.orderyear = PRV.orderyear + 1;

The query computes the number of orders that were handled in each year, and the difference from the count of the previous year. There are actually simpler and more efficient ways to handle this task—for example, with the LEAD function—but this code is used for illustration purposes. Observe that the outer query joins two derived tables that are based on the exact same query that computes the yearly order counts. SQL doesn’t allow you to define and name a derived table once and then refer to it multiple times in the same FROM clause that defines it. Unfortunately, you have to repeat the code. This makes the code longer and harder to maintain. Every time you need to make a change in the derived table query, you need to remember to make it in all copies, which increases the likelihood of errors.

CTEs

Common table expressions (CTEs) are another kind of table expression that, like derived tables, are visible only to the statement that defines them. There are no session-scoped or batch-scoped CTEs. The nice thing about CTEs is that they were designed to resolve the two weaknesses that derived tables have. Here’s an example of a CTE definition representing yearly order counts:

WITH OrdCount

AS

(

SELECT

YEAR(orderdate) AS orderyear,

COUNT(*) AS numorders

FROM Sales.Orders

GROUP BY YEAR(orderdate)

)

SELECT orderyear, numorders

FROM OrdCount;

You will find here the same components you have in code interacting with a derived table—namely, there’s the CTE name, the inner query, and the outer query. The difference between CTEs and derived tables is in how these three components are arranged with respect to each another. Observe that the code starts with naming the CTE, continues with the inner query expressed from start to end uninterrupted, and then the outer query also is expressed from start to end uninterrupted. This design makes CTEs easier to work with than derived tables.

Recall the example with the nesting of derived tables. Here’s the alternative using CTEs:

WITH C1 AS

(

SELECT YEAR(orderdate) AS orderyear, custid

FROM Sales.Orders

),

C2 AS

(

SELECT orderyear, COUNT(DISTINCT custid) AS numcusts

FROM C1

GROUP BY orderyear

)

SELECT orderyear, numcusts

FROM C2

WHERE numcusts > 70;

As you can see, in the same WITH statement you can define multiple CTEs separated by commas. Each can refer in the inner query to all previously defined CTEs. Then the outer query can refer to all CTEs defined by that WITH statement. The nice thing about this approach is that the units are not nested; rather, they are expressed one at a time in an uninterrupted manner. So, if you need to figure out the logic of the code, you analyze it in a more natural order from top to bottom.

The one thing I find a bit tricky when troubleshooting a WITH statement with multiple CTEs is that, if you want to check the result of an intermediate CTE, you cannot just highlight the code down to that part and run it. You need to inject a SELECT * FROM <ctename> right after that CTE and then run the code down to that part. Derived tables have an advantage in this respect in the sense that, without any code changes, you can highlight a query against a derived table including all the units you want to test and run it.

From a physical-processing perspective, the treatment of CTEs is the same as that for derived tables. The results of the inner queries don’t get persisted anywhere; rather, the code gets inlined. This code generates the same plan as the one shown earlier for derived tables in Figure 3-9.

The other advantage of using CTEs rather than derived tables is that, because you name the CTE before using it, you are allowed to refer to the CTE name multiple times in the outer query without needing to repeat the code. Recall the example shown earlier with two copies of the same derived table to return yearly counts of orders and the difference from the previous yearly count. Here’s the CTE-based alternative:

WITH OrdCount

AS

(

SELECT

YEAR(orderdate) AS orderyear,

COUNT(*) AS numorders

FROM Sales.Orders

GROUP BY YEAR(orderdate)

)

SELECT CUR.orderyear, CUR.numorders,

CUR.numorders - PRV.numorders AS diff

FROM OrdCount AS CUR

LEFT OUTER JOIN OrdCount AS PRV

ON CUR.orderyear = PRV.orderyear + 1;

Clearly, this is a better design.

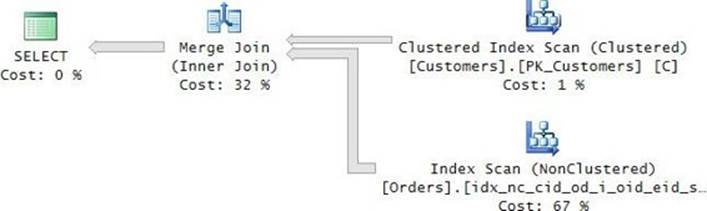

Note that because SQL Server doesn’t persist the table expression’s result anywhere, whether you use derived tables or CTEs, all references to the table expression’s name will be expanded, and the work will be repeated. This can be seen clearly in the plan shown in Figure 3-10.

FIGURE 3-10 Work that is repeated with multiple references.

You can see the work happening twice. A covering index from the Orders table is scanned twice, and then the rows are sorted, grouped, and aggregated twice. If the table is large and you want to avoid repeating all this work, you should consider using a temporary table or a table variable instead.

Recursive CTEs

T-SQL supports a specialized form of a CTE with recursive syntax. To describe and demonstrate recursive CTEs, I’ll use a table called Employees that you create and populate by running the following code:

SET NOCOUNT ON;

USE tempdb;

IF OBJECT_ID(N'dbo.Employees', N'U') IS NOT NULL DROP TABLE dbo.Employees;

CREATE TABLE dbo.Employees

(

empid INT NOT NULL

CONSTRAINT PK_Employees PRIMARY KEY,

mgrid INT NULL

CONSTRAINT FK_Employees_Employees FOREIGN KEY REFERENCES dbo.Employees(empid),

empname VARCHAR(25) NOT NULL,

salary MONEY NOT NULL

);

INSERT INTO dbo.Employees(empid, mgrid, empname, salary)

VALUES(1, NULL, 'David' , $10000.00),

(2, 1, 'Eitan' , $7000.00),

(3, 1, 'Ina' , $7500.00),

(4, 2, 'Seraph' , $5000.00),

(5, 2, 'Jiru' , $5500.00),

(6, 2, 'Steve' , $4500.00),

(7, 3, 'Aaron' , $5000.00),

(8, 5, 'Lilach' , $3500.00),

(9, 7, 'Rita' , $3000.00),

(10, 5, 'Sean' , $3000.00),

(11, 7, 'Gabriel', $3000.00),

(12, 9, 'Emilia' , $2000.00),

(13, 9, 'Michael', $2000.00),

(14, 9, 'Didi' , $1500.00);

CREATE UNIQUE INDEX idx_nc_mgr_emp_i_name_sal

ON dbo.Employees(mgrid, empid) INCLUDE(empname, salary);

Recursive CTEs have a body with not just one member like regular CTEs, but rather multiple members. Usually, you will have one anchor member and one recursive member, but the syntax certainly allows having multiple anchor members and multiple recursive members. I’ll explain the roles of the members through an example. The following code returns all direct and indirect subordinates of employee 3:

WITH EmpsCTE AS

(

SELECT empid, mgrid, empname, salary

FROM dbo.Employees

WHERE empid = 3

UNION ALL

SELECT C.empid, C.mgrid, C.empname, C.salary

FROM EmpsCTE AS P

JOIN dbo.Employees AS C

ON C.mgrid = P.empid

)

SELECT empid, mgrid, empname, salary

FROM EmpsCTE;

The first query you see in the CTE body is the anchor member. The anchor member is a regular query that can run as a self-contained one and executes only once. This query creates the initial result set that is then used by the recursive member. In our case, the anchor query returns the row for employee 3. Then, in the CTE body, the code uses a UNION ALL operator to indicate that the result of the anchor query should be unified with the results of the recursive query that comes next. The recursive member executes repeatedly until it returns an empty set. What makes it recursive is the reference that it has to the CTE name. This reference represents the immediate previous result set. In our case, the recursive query joins the immediate previous result set holding the managers from the previous round with the Employees table to obtain the direct subordinates of those managers. As soon as the recursive query cannot find another level of subordinates, it stops executing. Then the reference to the CTE name in the outer query represents the unified result sets of the anchor and recursive members. Here’s the output generated by this code:

empid mgrid empname salary

------ ------ -------- --------

3 1 Ina 7500.00

7 3 Aaron 5000.00

9 7 Rita 3000.00

11 7 Gabriel 3000.00

12 9 Emilia 2000.00

13 9 Michael 2000.00

14 9 Didi 1500.00

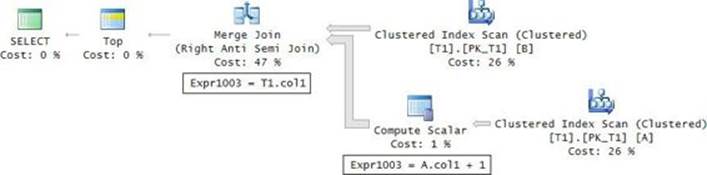

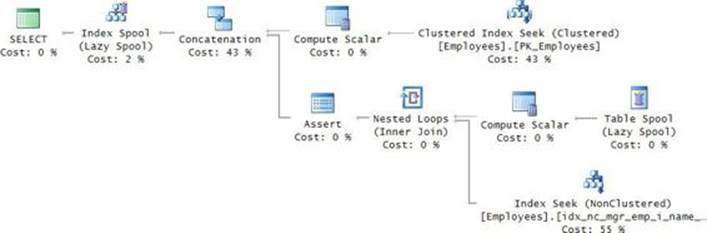

The execution plan for this query is shown in Figure 3-11.

FIGURE 3-11 Plan for a recursive query.

The plan creates a B-tree-based worktable (Index Spool operator) where it stores the intermediate result sets. The plan performs a seek in the clustered index to retrieve the row for employee 3 and writes it to the spool. The plan then repeatedly reads the last round’s result from the spool, joining it with the covering index from the Employees table to retrieve the employees from the next level, and it writes those to the spool. The plan keeps a count of how many times the recursive member was executed. It does so by using two Compute Scalar operators. The first initializes a variable with 0, and the second increments the variable by 1 in each iteration. Then the Assert operator fails the query if the counter is greater than 100. In other words, by default the recursive member is allowed to run up to 100 times. You can change the default to the limit you want to set using a query option called MAXRECURSION. To remove the limit, set it to 0. You specify the option in an OPTION clause at the end of the outer query—for example, OPTION(MAXRECURSION 10).

The main benefits I see in recursive queries are the brevity of the code and the ability to traverse graph structures based only on the parent and child IDs (mgrid and empid, in our example). The main drawback of recursive queries is performance. They tend to perform less efficiently than alternative methods, even your own loop-based solutions. With recursive queries, you don’t have any control over the worktable; for example, you can’t define your own indexes on it, you can’t define how to filter the rows from the previous round, and so on. If you know how to optimize T-SQL code, you can usually get better performance with your own solution.

For more information about recursive queries and handling graph structures, see Chapter 11, “Graphs and recursive queries.”

Views

Derived tables and CTEs are available only to the statement that defines them. Once the defining statement finishes, they are gone. If you need the ability to reuse the table expression beyond the single statement, you can use views or inline TVFs. Views and inline TVFs are stored as an object in the database and therefore are reusable by users with the right permissions. What gets stored in the database is the query definition and metadata information, not the data (with the exception of an indexed view as mentioned earlier). When submitting a query or a modification against the table expression, SQL Server inlines the table expression and applies the query or modification to the underlying object.

As an example, suppose you have different branches in your organization. You want users from the USA branch to be able to interact only with customers from the USA. SQL Server doesn’t have built-in support for row-level permissions. So you create the following view querying the Customers table and filtering only customers from the USA:

USE TSQLV3;

IF OBJECT_ID(N'Sales.USACusts', N'V') IS NOT NULL DROP VIEW Sales.USACusts;

GO

CREATE VIEW Sales.USACusts WITH SCHEMABINDING

AS

SELECT

custid, companyname, contactname, contacttitle, address,

city, region, postalcode, country, phone, fax

FROM Sales.Customers

WHERE country = N'USA'

WITH CHECK OPTION;

You grant the users from the USA permissions against the view but not against the underlying table.

The SCHEMABINDING option ensures that structural changes against referenced objects and columns will be rejected. This option requires schema-qualifying object names and explicit enumeration of the column list (no *). The CHECK OPTION ensures that modifications against the view that contradict the inner query’s filter are rejected. For example, with this option you won’t be allowed to insert a customer from the UK through the view. You also won’t be allowed to update the country/region to one other than the USA through the view.

Now when you query the view, you interact only with customers from the USA, like in the following example:

SELECT custid, companyname

FROM Sales.USACusts

ORDER BY region, city;

As mentioned, behind the scenes SQL Server will inline the inner query, so the execution plan you get will be the same as the one you will get for this query:

SELECT custid, companyname

FROM Sales.Customers

WHERE country = N'USA'

ORDER BY region, city;

Note that despite the fact that the view’s inner query gets inlined, sometimes SQL Server cannot avoid doing work even when, intuitively, you think it should. Consider an example where you perform vertical partitioning of a wide table, storing in each narrower table the key and a different subset of the original columns. Then you use a view to join the tables to make it look like one relation. Use the following code to create a basic example for such an arrangement:

IF OBJECT_ID(N'dbo.V', N'V') IS NOT NULL DROP VIEW dbo.V;

IF OBJECT_ID(N'dbo.T3', N'U') IS NOT NULL DROP TABLE dbo.T3;

IF OBJECT_ID(N'dbo.T2', N'U') IS NOT NULL DROP TABLE dbo.T2;

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

GO

CREATE TABLE dbo.T1

(

keycol INT NOT NULL CONSTRAINT PK_T1 PRIMARY KEY,

col1 INT NOT NULL

);

CREATE TABLE dbo.T2

(

keycol INT NOT NULL

CONSTRAINT PK_T2 PRIMARY KEY

CONSTRAINT FK_T2_T1 REFERENCES dbo.T1,

col2 INT NOT NULL

);

CREATE TABLE dbo.T3

(

keycol INT NOT NULL

CONSTRAINT PK_T3 PRIMARY KEY

CONSTRAINT FK_T3_T1 REFERENCES dbo.T1,

col3 INT NOT NULL

);

GO

CREATE VIEW dbo.V WITH SCHEMABINDING

AS

SELECT T1.keycol, T1.col1, T2.col2, T3.col3

FROM dbo.T1

INNER JOIN dbo.T2

ON T1.keycol = T2.keycol

INNER JOIN dbo.T3

ON T1.keycol = T3.keycol;

GO

Note that I’m not getting into a discussion here about whether this is a good design or a bad one—I’m just trying to explain how optimization of code against table expressions works. Suppose you know there’s a one-to-one relationship between all tables. Every row in one table has exactly one matching row in each of the other tables. You issue a query against the view requesting columns that originate in only one of the tables:

SELECT keycol, col1 FROM dbo.V;

Some people intuitively expect the optimizer to be smart enough to know that only T1 should be accessed. The problem is that SQL Server doesn’t know what you know—that there’s a one-to-one relationship between the tables. As far as SQL Server is concerned, there could be rows in T1 that don’t have related rows in the other tables and therefore shouldn’t be returned. Also, there could be rows in T1 that have multiple related rows in the other tables and therefore should be returned multiple times. In other words, the optimizer has to create a plan where it joins T1 with T2 and T3 as shown in Figure 3-12.

FIGURE 3-12 Plan that shows access to all tables.

To access only T1 to get what you want, you have to query T1 directly and not through the view. I’m using this example to emphasize that sometimes issuing a query through a view (or any other type of table expression) will involve more work than querying only what you think the relevant underlying objects are. That’s despite the fact that the inner query gets inlined.

A quick puzzle

Query only col2 from the view and, before looking at the plan, try to guess which tables should be accessed. Then examine the plan and try to explain what you see. Perhaps you expected all three tables to be accessed based on the previous example, but you will find that T2 and T3 are accessed but not T1. The optimizer realizes that, thanks to the existing foreign-key relationships, every row in T2 and T3 has exactly one matching row in T1. The other way around is not necessarily true; theoretically, a row in T1 can have zero, one, or more matching rows in T2 and T3. Also, inner joins are commutative, meaning that (T1 JOIN T2) JOIN T3 is equivalent to T1 JOIN (T2 JOIN T3). So when asking only for a column from T2, it’s enough to join T2 with T3 to get the correct result. The join to T1 becomes redundant. It’s nice to see that the optimizer is capable of such tricks. But you do still pay for the join to T3 because, theoretically, this join can affect the result. As mentioned, if you want the optimal plan, you need to access the underlying table directly and not through the view. The same optimization considerations apply to all types of table expressions, not just views.

When you’re done, run the following code for cleanup:

IF OBJECT_ID(N'dbo.V', N'V') IS NOT NULL DROP VIEW dbo.V;

IF OBJECT_ID(N'dbo.T3', N'U') IS NOT NULL DROP TABLE dbo.T3;

IF OBJECT_ID(N'dbo.T2', N'U') IS NOT NULL DROP TABLE dbo.T2;

IF OBJECT_ID(N'dbo.T1', N'U') IS NOT NULL DROP TABLE dbo.T1;

Inline table-valued functions

Suppose you need a reusable table expression like a view, but you also need to be able to pass input parameters to the table expression. Views do not support input parameters. For this purpose, SQL Server provides you with inline table-valued functions (TVFs). As an example, the following code creates an inline TVF called GetTopOrders that accepts as inputs a customer ID and a number and returns the requested number of most-recent orders for the input customer:

IF OBJECT_ID(N'dbo.GetTopOrders', N'IF') IS NOT NULL DROP FUNCTION dbo.GetTopOrders;

GO

CREATE FUNCTION dbo.GetTopOrders(@custid AS INT, @n AS BIGINT) RETURNS TABLE

AS

RETURN

SELECT TOP (@n) orderid, orderdate, empid

FROM Sales.Orders

WHERE custid = @custid

ORDER BY orderdate DESC, orderid DESC;

GO

The following code queries the function to return the three most recent orders for customer 1:

SELECT orderid, orderdate, empid

FROM dbo.GetTopOrders(1, 3) AS O;

SQL Server inlines the inner query and performs parameter embedding, meaning that it replaces the parameters with constants. After inlining and embedding, the query that actually gets optimized is the following:

SELECT TOP (3) orderid, orderdate, empid

FROM Sales.Orders

WHERE custid = 1

ORDER BY orderdate DESC, orderid DESC;

I find inline TVFs to be a great tool, allowing for the encapsulation of the logic and reusability without any performance penalties. I’m afraid I cannot say the same thing about the other types of user-defined functions (UDFs). I elaborate on performance problems of UDFs in Chapter 9, “Programmable objects.”

Generating numbers

One of my favorite tools in T-SQL, if not the favorite, is a function that returns a sequence of integers in a requested range. Such a function has so many practical uses that I’m surprised SQL Server doesn’t provide it as a built-in tool. Fortunately, by employing a few magical tricks, you can create a very efficient version of such a function yourself as an inline TVF.

Before looking at my version, I urge you to try and come up with yours. It’s a great challenge. Create a function called GetNums that accepts the inputs @low and @high and returns a sequence of integers in the requested range. The goal is best-possible performance. You will use the function to generate millions of rows in some cases, so naturally you will want it to perform well.

There are three critical things I rely on to get a performant solution:

![]() Generating a large number of rows with cross joins

Generating a large number of rows with cross joins

![]() Generating row numbers efficiently without sorting

Generating row numbers efficiently without sorting

![]() Short-circuiting the work with TOP when the requested number of rows is reached

Short-circuiting the work with TOP when the requested number of rows is reached

The first step is to generate a large number of rows. It doesn’t really matter what you will have in those rows because eventually you’ll produce the numbers with the ROW_NUMBER function. A great way in T-SQL to generate a large number of rows is to apply cross joins. But you need a table with at least two rows as a starting point. You can create such a table as a virtual one by using the VALUES clause, like so:

SELECT c FROM (VALUES(1),(1)) AS D(c);

This query generates the following output showing that the virtual table has two rows:

c

-----------

1

1

The next move is to define a CTE based on the last query (call it L0, for level 0), and apply a self-cross join between two instances of L0 to get four rows, like so:

WITH

L0 AS (SELECT c FROM (VALUES(1),(1)) AS D(c))

SELECT 1 AS c FROM L0 AS A CROSS JOIN L0 AS B;

This query generates the following output:

c

-----------

1

1

1

1

The next move is to define a CTE called L1 based on the last query, and then join two instances of L1 to get 16 rows. Repeating this pattern, by the time you get to L5, you have potentially 4,294,967,296 rows! Here’s the expression to compute this number:

SELECT POWER(2., POWER(2., 5));

The next step is to query L5 and compute row numbers (call the column rownum) without causing a sort operation to take place. This is done by specifying ORDER BY (SELECT NULL). You define a CTE called Nums based on this query.

Finally, the last step is to query Nums, and with the TOP option filter @high – @low + 1 rows, ordered by rownum. You compute the result number column (call it n) as @low + rownum – 1.

Here’s the complete solution code applied to the sample input range 11 through 20:

DECLARE @low AS BIGINT = 11, @high AS BIGINT = 20;

WITH

L0 AS (SELECT c FROM (VALUES(1),(1)) AS D(c)),

L1 AS (SELECT 1 AS c FROM L0 AS A CROSS JOIN L0 AS B),

L2 AS (SELECT 1 AS c FROM L1 AS A CROSS JOIN L1 AS B),

L3 AS (SELECT 1 AS c FROM L2 AS A CROSS JOIN L2 AS B),

L4 AS (SELECT 1 AS c FROM L3 AS A CROSS JOIN L3 AS B),

L5 AS (SELECT 1 AS c FROM L4 AS A CROSS JOIN L4 AS B),

Nums AS (SELECT ROW_NUMBER() OVER(ORDER BY (SELECT NULL)) AS rownum

FROM L5)

SELECT TOP(@high - @low + 1) @low + rownum - 1 AS n

FROM Nums

ORDER BY rownum;

This code generates the following output:

n

--------------------

11

12

13

14

15

16

17

18

19

20

For reusability, encapsulate the solution in an inline TVF called GetNums, like so:

IF OBJECT_ID(N'dbo.GetNums', N'IF') IS NOT NULL DROP FUNCTION dbo.GetNums;

GO

CREATE FUNCTION dbo.GetNums(@low AS BIGINT, @high AS BIGINT) RETURNS TABLE

AS

RETURN

WITH

L0 AS (SELECT c FROM (VALUES(1),(1)) AS D(c)),

L1 AS (SELECT 1 AS c FROM L0 AS A CROSS JOIN L0 AS B),

L2 AS (SELECT 1 AS c FROM L1 AS A CROSS JOIN L1 AS B),

L3 AS (SELECT 1 AS c FROM L2 AS A CROSS JOIN L2 AS B),

L4 AS (SELECT 1 AS c FROM L3 AS A CROSS JOIN L3 AS B),

L5 AS (SELECT 1 AS c FROM L4 AS A CROSS JOIN L4 AS B),

Nums AS (SELECT ROW_NUMBER() OVER(ORDER BY (SELECT NULL)) AS rownum

FROM L5)

SELECT TOP(@high - @low + 1) @low + rownum - 1 AS n

FROM Nums

ORDER BY rownum;

GO

Use the following code to test the function:

SELECT n FROM dbo.GetNums(11, 20);

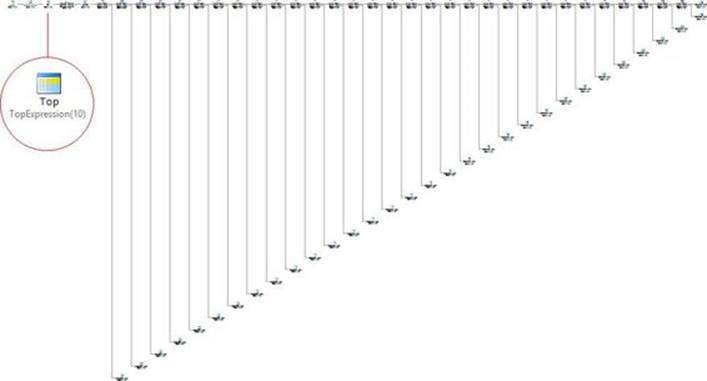

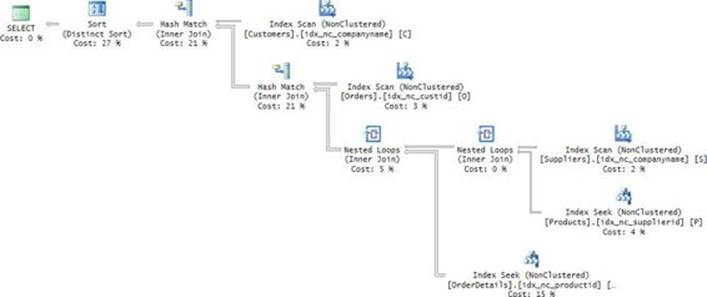

My friend Laurent Martin refers to this function as the harp function. This nickname might seem puzzling until you look at the query plan, which is shown in Figure 3-13.

FIGURE 3-13 The harp function.

The beauty of this plan is that the Top operator is the one requesting the rows from the branch to the right of it, and it short-circuits as soon as the requested number of rows is achieved. The Sequence Project and Nested Loops operators, which handle the row-number calculation and cross joins, respectively, respond to the request for rows from the Top operator. As soon as the Top operator stops requesting rows, they stop producing rows. In other words, even though potentially this solution can generate over four billion rows, in practice it generates only the requested number and then short-circuits. Testing the performance of the function on my laptop, it finished generating 10,000,000 numbers in four seconds, with results discarded. That’s not so bad.

The APPLY operator

The APPLY operator is one of the most powerful tools I know of in T-SQL, and yet, it seems to be unnoticed by many. Often when I teach about T-SQL, I ask the students who’s using APPLY and, in many cases, I get only a small percent saying they do. What’s interesting about this operator is that it can be used in very creative ways to solve all kinds of querying tasks.

There are three flavors of APPLY: CROSS APPLY, OUTER APPLY, and implicit APPLY. The following sections describe these flavors.

The CROSS APPLY operator

One way to think of the CROSS APPLY operator is as a hybrid of a cross join and a correlated subquery. Think for a moment of a regular cross join. The inputs can be tables or table expressions, but then the table expressions have to be self-contained. The inner queries are not allowed to be correlated ones. The CROSS APPLY operator is similar to a cross join, only the inner query in the right input is allowed to have correlations to elements from the left input. The left input, like in a cross join, has to be self-contained.

So, T1 CROSS JOIN T2 is equivalent to T1 CROSS APPLY T2. Also, T1 CROSS JOIN (self_contained_query) AS Q is equivalent to T1 CROSS APPLY (self_contained_query) AS Q. However, unlike a cross join, CROSS APPLY allows the right input to be a correlated table subquery, as in T1 CROSS APPLY (SELECT ... FROM T2 WHERE T2.col1 = T1.col1) AS Q. This means that for every row in T1, Q is evaluated separately based on the current value in T1.col1.

Interestingly, standard SQL doesn’t support the APPLY operator but has a similar feature called a lateral derived table. The standard’s parallel to T-SQL’s CROSS APPLY is a CROSS JOIN with a lateral derived table, allowing the right table expression to be a correlated one. So what in T-SQL is expressed as T1 CROSS APPLY (SELECT ... FROM T2 WHERE T2.col1 = T1.col1) AS Q, in standard SQL is expressed as T1 CROSS JOIN LATERAL (SELECT ... FROM T2 WHERE T2.col1 = T1.col1) AS Q.

To demonstrate a practical use case for CROSS APPLY, recall the earlier discussion in this chapter about the top N per group task. The specific example was filtering the most recent orders per customer. The order for the filter was based on orderdate descending with orderid descending used as the tiebreaker. I suggested creating the following POC index to support your solution:

CREATE UNIQUE INDEX idx_poc

ON Sales.Orders(custid, orderdate DESC, orderid DESC)

INCLUDE(empid);

I used the following solution based on a regular correlated subquery:

SELECT orderid, orderdate, custid, empid

FROM Sales.Orders

WHERE orderid IN

(SELECT

(SELECT TOP (1) orderid

FROM Sales.Orders AS O

WHERE O.custid = C.custid

ORDER BY orderdate DESC, orderid DESC)

FROM Sales.Customers AS C);

The problem with a regular subquery is that it’s limited to returning only one column. So this solution used a subquery to return the qualifying order ID per customer, but then another layer was needed with a query against Orders to retrieve the rest of the order information per qualifying order ID. So the plan for this solution shown earlier in Figure 3-3 involved a seek in the POC index per customer to retrieve the qualifying order ID, and another seek per customer in the POC index to retrieve the rest of the order information.

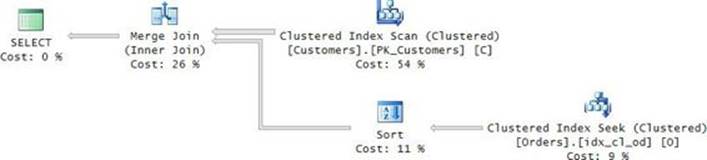

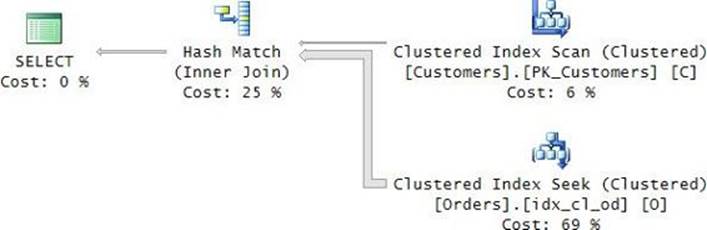

Because CROSS APPLY allows you to apply a correlated table expression, you’re not limited to returning only one column. So you can simplify the solution by removing the need for the extra layer, and instead of doing two seeks per customer, do only one. Here’s what the solution using the CROSS APPLY operator looks like (this time requesting the three most recent orders per customer):

SELECT C.custid, A.orderid, A.orderdate, A.empid

FROM Sales.Customers AS C

CROSS APPLY ( SELECT TOP (3) orderid, orderdate, empid

FROM Sales.Orders AS O

WHERE O.custid = C.custid

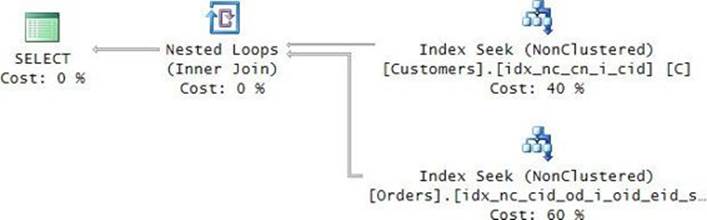

ORDER BY orderdate DESC, orderid DESC ) AS A;

It’s both simpler than the previous one and more efficient, as can be seen in the query plan shown in Figure 3-14.

FIGURE 3-14 Plan for a query with the CROSS APPLY operator.

In this example, the inner query is quite simple, so it’s no big deal to embed it directly. But in cases where the inner query is more complex, you would probably want to encapsulate it in an inline TVF, like so:

IF OBJECT_ID(N'dbo.GetTopOrders', N'IF') IS NOT NULL DROP FUNCTION dbo.GetTopOrders;

GO

CREATE FUNCTION dbo.GetTopOrders(@custid AS INT, @n AS BIGINT)

RETURNS TABLE

AS

RETURN

SELECT TOP (@n) orderid, orderdate, empid

FROM Sales.Orders

WHERE custid = @custid

ORDER BY orderdate DESC, orderid DESC;

GO

Then you can replace the correlated table expression with a call to the function, passing C.custid as the input customer ID (the correlation) and 3 as the number of rows to filter per customer, like so:

SELECT C.custid, A.orderid, A.orderdate, A.empid

FROM Sales.Customers AS C

CROSS APPLY dbo.GetTopOrders( C.custid, 3 ) AS A;

You can find a more extensive coverage of efficient handling of the top N per group task in Chapter 5.

The OUTER APPLY operator

The CROSS APPLY operator has something in common with an inner join in that left rows that don’t have matches on the right side are discarded. So, in the previous query, customers who didn’t place orders were discarded. Similar to a left outer join operator, which preserves all left rows, there’s an OUTER APPLY operator that preserves all left rows. Like with an outer join, the OUTER APPLY operator uses NULLs as placeholders for nonmatches on the right side.

As an example, to alter the last query to preserve all customers, replace CROSS APPLY with OUTER APPLY, like so:

SELECT C.custid, A.orderid, A.orderdate, A.empid

FROM Sales.Customers AS C