T-SQL Querying (2015)

Chapter 4. Grouping, pivoting, and windowing

This chapter focuses on performing data-analysis calculations with T-SQL for various purposes, such as reporting. A data-analysis calculation is one you apply to a set of rows and that returns a single value. For example, aggregate calculations fall into this category.

T-SQL supports numerous features and techniques you can use to perform data-analysis calculations, such as window functions, pivoting and unpivoting, computing custom aggregations, and working with multiple grouping sets. This chapter covers such features and techniques.

Window functions

You use window functions to perform data-analysis calculations elegantly and, in many cases, efficiently. In contrast to group functions, which are applied to groups of rows defined by a grouped query, window functions are applied to windows of rows defined by a windowed query. Where a GROUP BY clause determines groups, an OVER clause determines windows. So think of the window as the set of rows, or the context, for the function to apply to.

The design of window functions is quite profound, enabling you to handle data-analysis calculations in many cases more elegantly and more easily than with other tools. Standard SQL acknowledges the immense power in window functions, providing extensive coverage for those. T-SQL implements a subset of the standard, and I hope Microsoft will continue that investment in the future.

The coverage of window functions in this chapter is organized based on their categories: aggregate, ranking, offset, and statistical. There’s also a section with solutions to gaps and islands problems using window functions.

![]() Note

Note

Some of the windowing features described in this section were introduced in Microsoft SQL Server 2012. So if you are using an earlier version of SQL Server, you won’t be able to run the related code samples. Those features include frame specification for aggregate window functions (ORDER BY and ROWS or RANGE window frame units), offset window functions (FIRST_VALUE, LAST_VALUE, LAG, and LEAD), and statistical window functions (PERCENT_RANK, CUME_DIST, PERCENTILE_CONT, and PERCENTILE_DISC).

Aggregate window functions

Aggregate window functions are the same functions you know as grouped functions (SUM, AVG, and others), only you apply them to a window instead of to a group. To demonstrate aggregate window functions, I’ll use a couple of small tables called OrderValues and EmpOrders, and a large table called Transactions. Run the following code to create the tables and fill them with sample data:

SET NOCOUNT ON;

USE tempdb;

-- OrderValues table

IF OBJECT_ID(N'dbo.OrderValues', N'U') IS NOT NULL DROP TABLE dbo.OrderValues;

SELECT * INTO dbo.OrderValues FROM TSQLV3.Sales.OrderValues;

ALTER TABLE dbo.OrderValues ADD CONSTRAINT PK_OrderValues PRIMARY KEY(orderid);

GO

-- EmpOrders table

IF OBJECT_ID(N'dbo.EmpOrders', N'U') IS NOT NULL DROP TABLE dbo.EmpOrders;

SELECT empid, ISNULL(ordermonth, CAST('19000101' AS DATE)) AS ordermonth, qty, val, numorders

INTO dbo.EmpOrders

FROM TSQLV3.Sales.EmpOrders;

ALTER TABLE dbo.EmpOrders ADD CONSTRAINT PK_EmpOrders PRIMARY KEY(empid, ordermonth);

GO

-- Transactions table

IF OBJECT_ID('dbo.Transactions', 'U') IS NOT NULL DROP TABLE dbo.Transactions;

IF OBJECT_ID('dbo.Accounts', 'U') IS NOT NULL DROP TABLE dbo.Accounts;

CREATE TABLE dbo.Accounts

(

actid INT NOT NULL CONSTRAINT PK_Accounts PRIMARY KEY

);

CREATE TABLE dbo.Transactions

(

actid INT NOT NULL,

tranid INT NOT NULL,

val MONEY NOT NULL,

CONSTRAINT PK_Transactions PRIMARY KEY(actid, tranid)

);

DECLARE

@num_partitions AS INT = 100,

@rows_per_partition AS INT = 20000;

INSERT INTO dbo.Accounts WITH (TABLOCK) (actid)

SELECT NP.n

FROM TSQLV3.dbo.GetNums(1, @num_partitions) AS NP;

INSERT INTO dbo.Transactions WITH (TABLOCK) (actid, tranid, val)

SELECT NP.n, RPP.n,

(ABS(CHECKSUM(NEWID())%2)*2-1) * (1 + ABS(CHECKSUM(NEWID())%5))

FROM TSQLV3.dbo.GetNums(1, @num_partitions) AS NP

CROSS JOIN TSQLV3.dbo.GetNums(1, @rows_per_partition) AS RPP;

The OrderValues table has a row per order with total order values and quantities. The EmpOrders table keeps track of monthly totals per employee. The Transactions table has 2,000,000 rows with bank account transactions (100 accounts, with 20,000 transactions in each).

Limitations of data analysis calculations without window functions

Before delving into the details of window functions, you first should realize the limitations of alternative tools that perform data-analysis calculations. Knowing those limitations is a good way to appreciate the ingenious design of window functions.

Consider the following grouped query:

SELECT custid, SUM(val) AS custtotal

FROM dbo.OrderValues

GROUP BY custid;

Compared to analyzing the detail, the grouped query gives you new insights into the data in the form of aggregates. However, the grouped query also hides the detail. For example, the following attempt to return a detail element in a grouped query fails:

SELECT custid, val, SUM(val) AS custtotal

FROM dbo.OrderValues

GROUP BY custid;

You get the following error:

Msg 8120, Level 16, State 1, Line 70

Column 'dbo.OrderValues.val' is invalid in the select list because it is not contained in either

an aggregate function or the GROUP BY clause.

The reference to the val column in the SELECT list is not allowed because it is not part of the grouping set and not contained in a group aggregate function. But what if you do need to return details and aggregates? For example, suppose you want to query the OrderValues table and return, in addition to the order information, the percent of the current order value out of the grand total and also out of the customer total.

You could achieve this with scalar aggregate subqueries, like so:

SELECT orderid, custid, val,

val / (SELECT SUM(val) FROM dbo.OrderValues) AS pctall,

val / (SELECT SUM(val) FROM dbo.OrderValues AS O2

WHERE O2.custid = O1.custid) AS pctcust

FROM dbo.OrderValues AS O1;

Or, if you want formatted output:

SELECT orderid, custid, val,

CAST(100. *

val / (SELECT SUM(val) FROM dbo.OrderValues)

AS NUMERIC(5, 2)) AS pctall,

CAST(100. *

val / (SELECT SUM(val) FROM dbo.OrderValues AS O2

WHERE O2.custid = O1.custid)

AS NUMERIC(5, 2)) AS pctcust

FROM dbo.OrderValues AS O1

ORDER BY custid;

The problem with this approach is that each subquery gets a fresh view of the data as its starting point. Typically, the desired starting point for the set calculation is the underlying query’s result set after most logical query processing phases (table operators, filters, and others) were applied.

For example, suppose you need to filter only orders placed on or after 2015. To achieve this, you add a filter in the underlying query, like so:

SELECT orderid, custid, val,

CAST(100. *

val / (SELECT SUM(val) FROM dbo.OrderValues)

AS NUMERIC(5, 2)) AS pctall,

CAST(100. *

val / (SELECT SUM(val) FROM dbo.OrderValues AS O2

WHERE O2.custid = O1.custid)

AS NUMERIC(5, 2)) AS pctcust

FROM dbo.OrderValues AS O1

WHERE orderdate >= '20150101'

ORDER BY custid;

This filter affects only the underlying query, not the subqueries. The subqueries still query all years. This code generates the following output:

orderid custid val pctall pctcust

-------- ------- -------- ------- --------

10835 1 845.80 0.07 19.79

10952 1 471.20 0.04 11.03

11011 1 933.50 0.07 21.85

10926 2 514.40 0.04 36.67

10856 3 660.00 0.05 9.40

10864 4 282.00 0.02 2.11

10953 4 4441.25 0.35 33.17

10920 4 390.00 0.03 2.91

11016 4 491.50 0.04 3.67

10924 5 1835.70 0.15 7.36

...

There’s a bug in the code. Observe that the percentages per customer don’t sum up to 100 like they should, and the same applies to the percentages out of the grand total. To have the subqueries operate on the underlying query’s result set, you have to either duplicate code elements or use a table expression.

Window functions were designed with the limitations of grouped queries and subqueries in mind, and that’s just the tip of the iceberg in terms of their capabilities!

Unlike grouped queries, windowed queries don’t hide the detail. A window function’s result is returned in addition to the detail.

Unlike the fresh view of the data exposed to a scalar aggregate in a subquery, an OVER clause exposes the underlying query result as the starting point for the window function. More precisely, in terms of logical query processing, window functions operate on the set of rows exposed to the SELECT phase as input—after the handling of FROM, WHERE, GROUP BY, and HAVING. For this reason, window functions are allowed only in the SELECT and ORDER BY clauses of a query. Using an empty specification in the OVER clause defines a window with the entire underlying query result set. So, SUM(val) OVER() gives you the grand total. Adding a window partition clause like PARTITION BY custid restricts the window to only the rows that have the same customer ID as in the current row. So SUM(val) OVER(PARTITION BY custid) gives you the customer total.

The following query then gives you the detail along with the percent of the current order value out of the grand total as well as out of the customer total:

SELECT orderid, custid, val,

val / SUM(val) OVER() AS pctall,

val / SUM(val) OVER(PARTITION BY custid) AS pctcust

FROM dbo.OrderValues;

Here’s a revised version with formatted percentages:

SELECT orderid, custid, val,

CAST(100. * val / SUM(val) OVER() AS NUMERIC(5, 2)) AS pctall,

CAST(100. * val / SUM(val) OVER(PARTITION BY custid) AS NUMERIC(5, 2)) AS pctcust

FROM dbo.OrderValues

ORDER BY custid;

Add a filter to the query:

SELECT orderid, custid, val,

CAST(100. * val / SUM(val) OVER() AS NUMERIC(5, 2)) AS pctall,

CAST(100. * val / SUM(val) OVER(PARTITION BY custid) AS NUMERIC(5, 2)) AS pctcust

FROM dbo.OrderValues

WHERE orderdate >= '20150101'

ORDER BY custid;

Unlike subqueries, window functions get as their starting point the underlying query result after filtering. Observe the query output:

orderid custid val pctall pctcust

-------- ------- -------- ------- --------

10835 1 845.80 0.19 37.58

10952 1 471.20 0.11 20.94

11011 1 933.50 0.21 41.48

10926 2 514.40 0.12 100.00

10856 3 660.00 0.15 100.00

10864 4 282.00 0.06 5.03

10953 4 4441.25 1.01 79.24

10920 4 390.00 0.09 6.96

11016 4 491.50 0.11 8.77

10924 5 1835.70 0.42 27.18

...

Notice that this time the percentages per customer (pctcust) sum up to 100, and the same goes for the percentages out of the grand total when summing those up in all rows.

Window elements

A window function is conceptually evaluated per row, and the elements in the window specification can implicitly or explicitly relate to the underlying row. Aggregate window functions support a number of elements in their specification, as demonstrated by the following query, which computes a running total quantity per employee and month:

SELECT empid, ordermonth, qty,

SUM(qty) OVER(PARTITION BY empid

ORDER BY ordermonth

ROWS BETWEEN UNBOUNDED PRECEDING

AND CURRENT ROW) AS runqty

FROM dbo.EmpOrders;

The window specification can define a window partition and a window frame. Both elements provide ways to restrict a subset of rows from the underlying query’s result set for the function to operate on. The window partition is defined using a window partition clause (PARTITION BY ...). The window frame is defined using a window order clause (ORDER BY ...) and a window frame clause (ROWS | RANGE ...). The following sections describe these window elements in detail.

Window partition

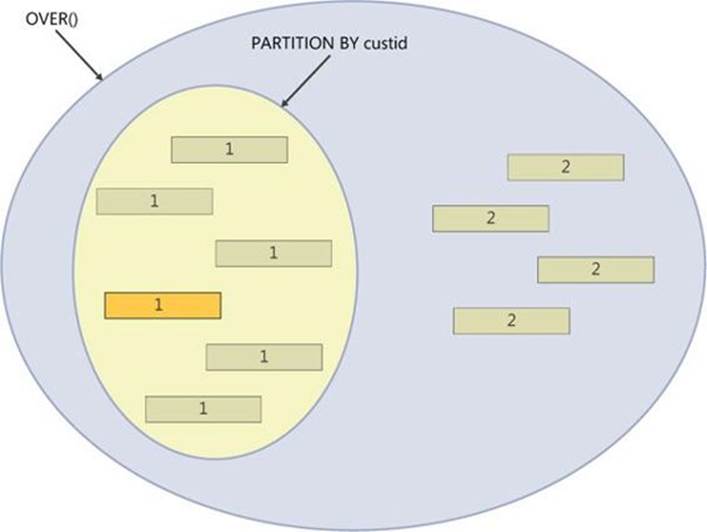

A window partition is a subset of the underlying query’s result set defined by a window partition clause. The window partition is the subset of rows that have the same values in the window partition elements as in the current row. (Remember, the function is evaluated per row.) For example,PARTITION BY empid restricts the rows to only those that have the same empid value as in the current row. Similarly, PARTITION BY custid restricts the rows to only those that have the same custid value as in the current row. Absent a window partition clause, the underlying query result set is considered one big partition. So, for any given row, OVER() exposes to the function the underlying query’s entire result set. Figure 4-1 illustrates what’s considered the qualifying set of rows for the highlighted row with both an empty OVER clause and one containing a window partition clause. The numbers represent customer IDs.

FIGURE 4-1 Window partition.

As an example, the following query computes for each order the percent of the current order value out of the grand total, as well as out of the customer total:

SELECT orderid, custid, val,

CAST(100. * val / SUM(val) OVER() AS NUMERIC(5, 2)) AS pctall,

CAST(100. * val / SUM(val) OVER(PARTITION BY custid) AS NUMERIC(5, 2)) AS pctcust

FROM dbo.OrderValues

ORDER BY custid;

This query generates the following output:

orderid custid val pctall pctcust

-------- ------- ------- ------- --------

10643 1 814.50 0.06 19.06

10692 1 878.00 0.07 20.55

10702 1 330.00 0.03 7.72

10835 1 845.80 0.07 19.79

10952 1 471.20 0.04 11.03

11011 1 933.50 0.07 21.85

10926 2 514.40 0.04 36.67

10759 2 320.00 0.03 22.81

10625 2 479.75 0.04 34.20

10308 2 88.80 0.01 6.33

...

There are clear advantages in terms of simplicity and elegance to using such a windowed query compared to, say, using multiple grouped queries and joining their results. But what about performance? In this respect, I’m afraid the news is not so good. Before I elaborate, I’d like to stress that the inefficiencies I’m about to describe are limited to window aggregate functions without a frame. As I will explain later, window aggregate functions with a frame, window ranking functions, and window offset functions are optimized differently.

To discuss performance aspects, I’ll use the bigger Transactions table, which has 2,000,000 rows. Consider the following query:

SELECT actid, tranid, val,

val / SUM(val) OVER() AS pctall,

val / SUM(val) OVER(PARTITION BY actid) AS pctact

FROM dbo.Transactions;

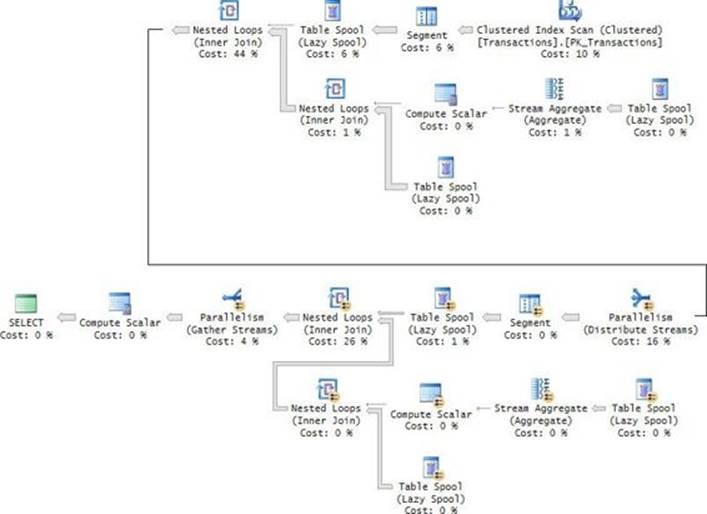

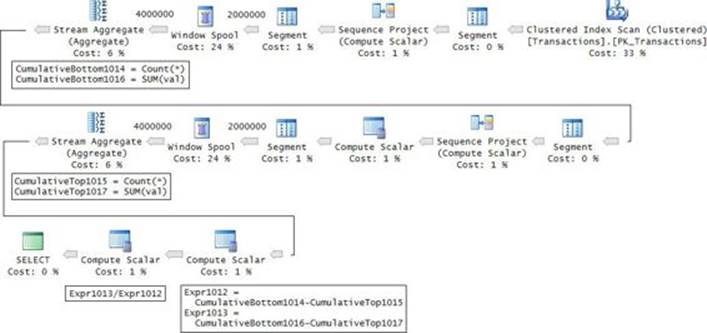

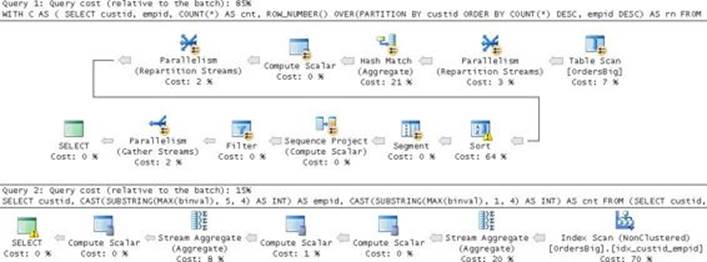

The execution plan for this query is shown in Figure 4-2.

FIGURE 4-2 Optimization with window aggregates.

Recall that the initial set that is exposed to the window function is the underlying query result, after the logical processing phases prior to the SELECT phase have been applied (FROM, WHERE, GROUP BY, and HAVING). Furthermore, the window function’s results are returned in addition to the detail, not instead of it. To ensure both requirements, for each of the window functions, the query plan writes the rows to a spool and then reads from the spool twice—once to compute the aggregate and once to read the detail—and then joins the results. Because the query has two unique window specifications, this work happens twice in the plan.

Clearly, there’s room for improved optimization, especially in cases like ours where there are no table operators, filters, or grouping involved, and there’s an optimal covering index in place (PK_Transactions). Logic can be added to the optimizer to detect cases where there’s no need to spool data, and instead the data is read directly from the index.

With the current optimization resulting in the plan shown in Figure 4-2, on my system, it took the query 13.76 seconds to complete, using 16.485 CPU seconds and 10,053,178 logical reads. That’s a lot for the amount of data involved!

If you rewrite the solution using grouped queries, you will get much better performance. Here’s the version using grouped queries:

WITH GrandAgg AS

(

SELECT SUM(val) AS sumall FROM dbo.Transactions

),

ActAgg AS

(

SELECT actid, SUM(val) AS sumact

FROM dbo.Transactions

GROUP BY actid

)

SELECT T.actid, T.tranid, T.val,

T.val / GA.sumall AS pctall,

T.val / AA.sumact AS pctact

FROM dbo.Transactions AS T

CROSS JOIN GrandAgg AS GA

INNER JOIN ActAgg AS AA

ON AA.actid = T.actid;

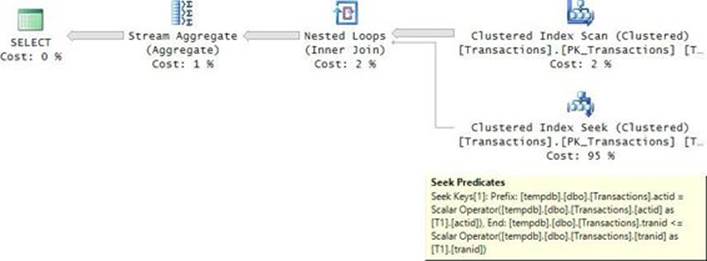

Examine the plan shown in Figure 4-3. Observe that there’s no spooling at all, and that efficient order-based aggregates (Stream Aggregate) are computed based on index order.

FIGURE 4-3 Optimization with group aggregates.

The performance statistics I got for this solution on my system are as follows:

![]() Run time: 2.01 seconds

Run time: 2.01 seconds

![]() CPU time: 4.97 seconds

CPU time: 4.97 seconds

![]() Logical reads: 19,368

Logical reads: 19,368

That’s quite an improvement.

Because there are great language design benefits in using window functions, we hope to see future investment in better optimization of such functions.

Usually, grouping and windowing are considered different methods to achieve a task, but there are interesting ways to combine them. They are not mutually exclusive. The easiest way to understand this is to think of logical query processing. Remember from Chapter 1, “Logical query processing,” that the GROUP BY clause is evaluated in step 3 and the SELECT clause is evaluated in step 5. Therefore, if the query is a grouped query, the SELECT phase operates on the grouped state of the data and not the detailed state. So if you use a window function in the SELECT or ORDER BY phase, the window function also operates on the grouped state.

As an example, consider the following grouped query:

SELECT custid, SUM(val) AS custtotal

FROM dbo.OrderValues

GROUP BY custid;

The query groups the rows by custid, and it returns the total values per customer (custtotal).

Before looking at the next query, try to answer the following question: Can you add the val column directly to the SELECT list in the preceding query? The answer is, “Of course not.” Because the query is a grouped query, a reference to a column name in the SELECT list is allowed only if the column is part of the GROUP BY list or contained in a group aggregate function.

With this in mind, suppose you need to add to the query a calculation returning the percent of the current customer total out of the grand total. The customer total is expressed with the grouped function SUM(val). Normally, to express the grand total in a detailed query you use a windowed function, SUM(val) OVER(). Now try dividing one by the other, like so:

SELECT custid, SUM(val) AS custtotal,

SUM(val) / SUM(val) OVER() AS pct

FROM dbo.OrderValues

GROUP BY custid;

You get the same error as when trying to add the val column directly to the SELECT list:

Msg 8120, Level 16, State 1, Line 229

Column 'dbo.OrderValues.val' is invalid in the select list because it is not contained in either

an aggregate function or the GROUP BY clause.

Unlike grouped functions, which operate on the detail rows per group, windowed functions operate on the grouped data. Windowed functions can see what the SELECT phase can see. The error message is a bit misleading in that it says “...because it is not contained in either an aggregate function...” and you’re probably thinking that it is. This error message was added to SQL Server well before window functions were introduced. What it means to say is “...because it is not contained in either a group aggregate function....”

If the window function cannot refer to the val column directly, what can it refer to? An attempt to refer to the alias custtotal also fails because of the all-at-once concept in SQL. The answer is that the windowed SUM should be applied to the grouped SUM, like so:

SELECT custid, SUM(val) AS custtotal,

SUM(val) / SUM(SUM(val)) OVER() AS pct

FROM dbo.OrderValues

GROUP BY custid;

This idea takes a bit of getting used to, but when thinking in terms of logical query processing, it makes perfect sense.

Window frame

Just as a partition is a filtered portion of the underlying query’s result set, a frame is a filtered portion of the partition. A frame requires ordering specifications using a window order clause. Based on that order, you define two delimiters that frame the rows. Then the window function is applied to that frame of rows. You can use one of two window frame units: ROWS or RANGE.

I’ll start with ROWS. Using this unit, you can define three types of delimiters:

![]() UNBOUNDED PRECEDING | FOLLOWING: First or last row in the partition, respectively.

UNBOUNDED PRECEDING | FOLLOWING: First or last row in the partition, respectively.

![]() CURRENT ROW: Current row.

CURRENT ROW: Current row.

![]() N ROWS PRECEDING | FOLLOWING: An offset in terms of the specified number of rows (N) before or after the current row, respectively.

N ROWS PRECEDING | FOLLOWING: An offset in terms of the specified number of rows (N) before or after the current row, respectively.

The first delimiter has to be on or before the second delimiter.

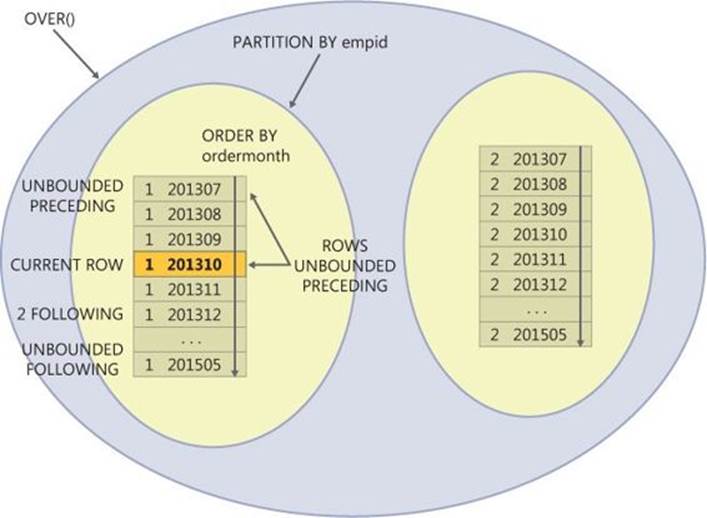

Figure 4-4 illustrates the window frame concept. The numbers 1 and 2 represent employee IDs; the values 201307, 201308, and so on represent order months. A sample row is highlighted in bold as the current row.

FIGURE 4-4 Window frame.

As an example, suppose you want to query the EmpOrders table and compute for each employee and order month the running total quantity. In other words, you want to see the cumulative performance of the employee over time. You define the window partition clause based on the empidcolumn, the window order clause based on the ordermonth column, and the window frame clause as ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW, like so:

SELECT empid, ordermonth, qty,

SUM(qty) OVER(PARTITION BY empid

ORDER BY ordermonth

ROWS BETWEEN UNBOUNDED PRECEDING

AND CURRENT ROW) AS runqty

FROM dbo.EmpOrders;

Because this frame specification is so common, the standard defines the equivalent shorter form ROWS UNBOUNDED PRECEDING. Here’s the shorter form used in our query:

SELECT empid, ordermonth, qty,

SUM(qty) OVER(PARTITION BY empid

ORDER BY ordermonth

ROWS UNBOUNDED PRECEDING) AS runqty

FROM dbo.EmpOrders;

This query generates the following output:

empid ordermonth qty runqty

------ ---------- ----- -------

1 2013-07-01 121 121

1 2013-08-01 247 368

1 2013-09-01 255 623

1 2013-10-01 143 766

1 2013-11-01 318 1084

1 2013-12-01 536 1620

...

1 2015-05-01 299 7812

2 2013-07-01 50 50

2 2013-08-01 94 144

2 2013-09-01 137 281

2 2013-10-01 248 529

2 2013-11-01 237 766

2 2013-12-01 319 1085

...

2 2015-04-01 1126 5915

...

Using a window function to compute a running total is both elegant and, as you will soon see, very efficient. An alternative technique is to use a query with a join and grouping, like so:

SELECT O1.empid, O1.ordermonth, O1.qty,

SUM(O2.qty) AS runqty

FROM dbo.EmpOrders AS O1

INNER JOIN dbo.EmpOrders AS O2

ON O2.empid = O1.empid

AND O2.ordermonth <= O1.ordermonth

GROUP BY O1.empid, O1.ordermonth, O1.qty;

This technique is both more complex and gets optimized extremely inefficiently.

To evaluate the optimization of the different techniques, I’ll use the bigger Transactions table. Suppose you need to compute the balance after each transaction for each account. Using a windowed SUM function, you apply the calculation to the val column, partitioned by the actid column, ordered by the tranid column, with the frame ROWS UNBOUNDED PRECEDING.

The optimal index for window functions is what I like to think of as a POC index. This acronym stands for Partitioning (actid, in our case), Ordering (tranid), and Covering (val). The P and O parts should make the index key list, and the C part should be included in the index leaf row. The clustered index PK_Orders on the Transactions table is based on this pattern, so we already have the optimal index in place.

The following query computes the aforementioned bank account balances using a window function:

SELECT actid, tranid, val,

SUM(val) OVER(PARTITION BY actid

ORDER BY tranid

ROWS UNBOUNDED PRECEDING) AS balance

FROM dbo.Transactions;

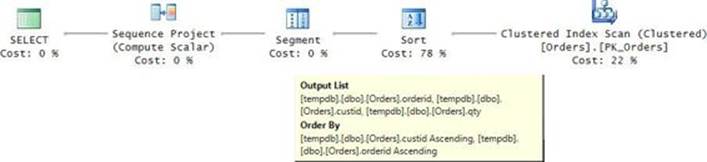

The plan for this query is shown in Figure 4-5.

![]()

FIGURE 4-5 Plan with fast-track optimization of window function for running totals.

The plan starts by scanning the POC index in order. That’s a good start. The next pair of Segment and Sequence Project operators are responsible for computing row numbers. The row numbers are used to indicate which rows fit in each underlying row’s window frame. The Segment operator flags the next operator when a new partition starts. The Sequence Project operator assigns the row numbers—1 if the row is the first in the partition, and the previous value plus 1 if it is not. The next Segment operator, again, is responsible for flagging the operator to its left when a new partition starts.

Then the next pair of Window Spool and Stream Aggregate operators represent the frame of rows that need to be aggregated and the aggregate applied to the frame, respectively. If it were not for specialized optimization called fast-track that the optimizer employs here, for a partition with N rows, the spool would have to store 1 + 2 + ... + N = (N + N2)/2 rows. As an example, for an account with just 20,000 transactions, the number would be 200,010,000. Fortunately, when the first delimiter of the frame is the first row in the partition (UNBOUNDED PRECEDING), the optimizer detects the case as a “fast-track” case and uses specialized optimization. For each row, it takes the previous row’s accumulation, adds the current row’s value, and writes that to the spool.

With this sort of optimization, you would expect the number of rows going out of the spool to be the same as the number of rows going into it (2,000,000 in our case); however, the plan shows that the number of rows going out of the spool is twice the number going in. The reason for this has to do with the fact that the Stream Aggregate operator was designed well before window functions were introduced. Originally, it was used only to compute group functions in grouped queries, which return the aggregates but hide the detail. So the trick Microsoft used to use the operator to compute a window aggregate is that besides the row with the accumulated aggregate, they also add the detail row to the spool so as not to lose it. So, with fast-track optimization, if N rows go into the Window Spool operator, you should see 2N rows going out.

It took four seconds for the query to complete on my system with results discarded.

Here’s the alternative solution using a join and grouping:

SELECT T1.actid, T1.tranid, T1.val, SUM(T2.val) AS balance

FROM dbo.Transactions AS T1

INNER JOIN dbo.Transactions AS T2

ON T2.actid = T1.actid

AND T2.tranid <= T1.tranid

GROUP BY T1.actid, T1.tranid, T1.val;

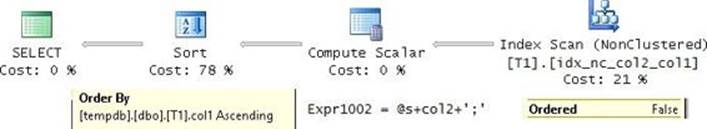

Running the query and waiting for it to complete is a good exercise in patience. To understand why, examine the query plan shown in Figure 4-6.

FIGURE 4-6 Plan for running totals with a join and a grouped query.

Here the optimizer doesn’t employ any specialized optimization. You see the POC index scanned once for the T1 instance of the table, and then for each row, it performs a seek in the index representing the instance T2 to the beginning of the current account section, and then a range scan of all rows where T2.actid <= T1.actid. Besides the cost of the full scan of the index and the cost of the seeks, the range scan processes in total (N + N2)/2 rows per account, where N is the number of transactions per account. (In our sample data, N is equal to 20,000.) It took over an hour and a half for the query to finish on my system.

While the plan for the query with the join and grouping has quadratic scaling (N2), the plan for the window function has linear scaling. Furthermore, when the optimizer knows that per underlying row there are no more than 10,000 rows in the window frame, it uses a special optimized in-memory spool (as opposed to a more expensive on-disk spool). Remember that when the unit is ROWS and the optimizer uses fast-track optimization, it writes only two rows to the spool per underlying row—one with the aggregate and one with the detail. In such a case, the conditions for using the in-memory spool are satisfied.

Still, with certain frame specifications like ROWS UNBOUNDED PRECEDING, which are the more commonly used ones, there’s potential for improvements in the optimizer. Theoretically, spooling could be avoided in those cases. Instead, the optimizer could use streaming operators, like the Sequence Project operator it uses to calculate the ROW_NUMBER function. As an example, when replacing the windowed SUM in the query computing balances with the ROW_NUMBER function just to check the performance, the query completes in one second. That’s compared to four seconds for the original query. This is the performance improvement potential for calculations like running totals if Microsoft decides to invest further in this area in the future.

As mentioned, you can use the ROWS option to specify delimiters as an offset in terms of a number of rows before (PRECEDING) or after (FOLLOWING) the current row. As an example, the following query computes for each employee and month the moving average quantity of the last three recorded months.

SELECT empid, ordermonth,

AVG(qty) OVER(PARTITION BY empid

ORDER BY ordermonth

ROWS BETWEEN 2 PRECEDING AND CURRENT ROW) AS avgqty

FROM dbo.EmpOrders;

This query generates the following output:

empid ordermonth avgqty

------ ---------- -------

1 2013-07-01 121

1 2013-08-01 184

1 2013-09-01 207

1 2013-10-01 215

1 2013-11-01 238

1 2013-12-01 332

1 2014-01-01 386

1 2014-02-01 336

1 2014-03-01 249

1 2014-04-01 154

...

As another example, the following query computes the moving average value of the last 100 transactions:

SELECT actid, tranid, val,

AVG(val) OVER(PARTITION BY actid

ORDER BY tranid

ROWS BETWEEN 99 PRECEDING AND CURRENT ROW) AS avg100

FROM dbo.Transactions;

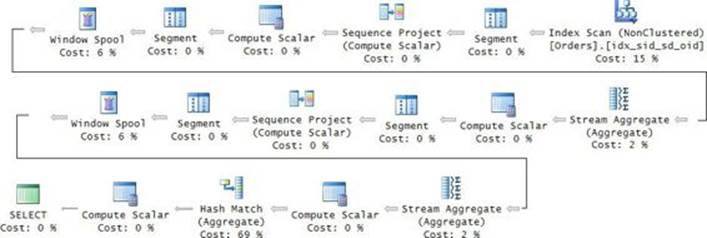

What’s interesting about the optimization of frames that don’t start at the beginning of the partition is that you would expect the optimizer to create a plan that writes all frame rows to the window spool and aggregates them. In the last example, this would mean 100 × 2,000,000 = 2,000,000,000 rows. However, the optimizer has a trick that it can use under certain conditions to avoid such work. If the frame has more than four rows and the aggregate is a cumulative one like SUM, COUNT, or AVG, the optimizer creates a plan with two fast-track running aggregate computations: one starting at the beginning of the partition and ending with the last row in the desired frame (call it CumulativeBottom) and another starting at the beginning of the partition and ending with the row before the start of the desired frame (call it CumulativeTop). Then it derives the final aggregate as a calculation between the two. You can see this strategy employed in the plan for the last query shown in Figure 4-7.

FIGURE 4-7 Plan for moving average.

It took this query 12 seconds to complete on my system. This is not so bad considering the alternative.

If the computation is not cumulative—namely, it cannot be derived from bottom and top calculations—the optimizer will not be able to use any tricks and all applicable rows have to be written to the spool and aggregated. Examples of such aggregates are MIN and MAX. To demonstrate this, the following query uses the MAX aggregate to compute the maximum value among the last 100 transactions:

SELECT actid, tranid, val,

MAX(val) OVER(PARTITION BY actid

ORDER BY tranid

ROWS BETWEEN 99 PRECEDING AND CURRENT ROW) AS max100

FROM dbo.Transactions;

The plan for this query is shown in Figure 4-8.

![]()

FIGURE 4-8 Plan for moving maximum.

Observe the number of rows flowing into and from the Window Spool operator. This query took 64 seconds to complete.

Note that even though the plan chosen is a parallel plan, there’s a trick developed by Adam Machanic you can use that can improve the treatment of parallelism. When the calculation is partitioned (like in our case, where it is partitioned by actid), you can issue a query against the table holding the partitions (Accounts, in our case) and then, with the CROSS APPLY operator, apply a query similar to the original but filtered by the current partition value.

To apply this method to our example, use the following query:

SELECT A.actid, D.tranid, D.val, D.max100

FROM dbo.Accounts AS A

CROSS APPLY (SELECT tranid, val,

MAX(val) OVER(ORDER BY tranid

ROWS BETWEEN 99 PRECEDING AND CURRENT ROW) AS max100

FROM dbo.Transactions AS T

WHERE T.actid = A.actid) AS D;

The plan for this query is shown in Figure 4-9.

FIGURE 4-9 Optimization with the APPLY operator.

It took this query 30 seconds to complete on my system—half the time of the original query.

Aggregate window functions support another frame unit, called RANGE. Unfortunately though, SQL Server has a limited implementation of this option. In standard SQL, the RANGE option allows you to express the delimiters as an offset from the current row’s ordering value. That’s in contrast to ROWS, which defines the offset in terms of a number of rows. So with RANGE, the definition of the frame is more dynamic.

As an example, suppose you need to compute for each employee and month the moving average quantity of the last three months—not the last three recorded months. The problem is that you don’t have any assurance that every employee had activity in every month. If an employee did not have activity in a certain month, there won’t be a row in the EmpOrders table for that employee in that month. For example, employee 9 had activity in July 2013 and then no activity in August and September, activity in October, no activity in November, and then activity in December. If you use the frame ROWS BETWEEN 2 PRECEDING AND CURRENT ROW to compute the moving average of the last three recorded months, for employee 9 in December 2013 you will capture a period of half a year. The RANGE option is supposed to help you if you need a period of the last three months, never mind how many rows are included. According to standard SQL, you are supposed to use the following query (don’t run it in SQL Server because it’s not supported):

SELECT empid, ordermonth, qty,

SUM(qty) OVER(PARTITION BY empid

ORDER BY ordermonth

RANGE BETWEEN INTERVAL '2' MONTH PRECEDING

AND CURRENT ROW) AS sum3month

FROM dbo.EmpOrders;

As a result, you are supposed to get the following output for employee 9:

empid ordermonth qty sum3month

----------- ---------- ----------- -----------

...

9 2013-07-01 294 294

9 2013-10-01 256 256

9 2013-12-01 25 281

9 2014-01-01 74 99

9 2014-03-01 137 211

9 2014-04-01 52 189

9 2014-05-01 8 197

9 2014-06-01 161 221

9 2014-07-01 4 173

9 2014-08-01 98 263

...

Unfortunately, SQL Server is missing two things to allow such a query. One, SQL Server is missing the INTERVAL feature. Two, SQL Server supports only UNBOUNDED and CURRENT ROW as delimiters for the RANGE unit.

To achieve the task in SQL Server, you have a few options. One is to pad the data with missing employee and month entries, and then use the ROWS option, like so:

DECLARE

@frommonth AS DATE = '20130701',

@tomonth AS DATE = '20150501';

WITH M AS

(

SELECT DATEADD(month, N.n, @frommonth) AS ordermonth

FROM TSQLV3.dbo.GetNums(0, DATEDIFF(month, @frommonth, @tomonth)) AS N

),

R AS

(

SELECT E.empid, M.ordermonth, EO.qty,

SUM(EO.qty) OVER(PARTITION BY E.empid

ORDER BY M.ordermonth

ROWS BETWEEN 2 PRECEDING AND CURRENT ROW) AS sum3month

FROM TSQLV3.HR.Employees AS E CROSS JOIN M

LEFT OUTER JOIN dbo.EmpOrders AS EO

ON E.empid = EO.empid

AND M.ordermonth = EO.ordermonth

)

SELECT empid, ordermonth, qty, sum3month

FROM R

WHERE qty IS NOT NULL;

The other option is to use a query with a join and grouping like the one I showed for the running total example:

SELECT O1.empid, O1.ordermonth, O1.qty,

SUM(O2.qty) AS sum3month

FROM dbo.EmpOrders AS O1

INNER JOIN dbo.EmpOrders AS O2

ON O2.empid = O1.empid

AND O2.ordermonth

BETWEEN DATEADD(month, -2, O1.ordermonth)

AND O1.ordermonth

GROUP BY O1.empid, O1.ordermonth, O1.qty

ORDER BY O1.empid, O1.ordermonth;

The question is, why even bother supporting RANGE with only UNBOUNDED and CURRENT ROW as delimiters? As it turns out, there is a subtle logical difference between ROWS and RANGE when they use the same frame specification. But that difference manifests itself only when the order is not unique. The ROWS option doesn’t include peers (ties in the ordering value), whereas RANGE does. Consider the following query as an example:

SELECT orderid, orderdate, val,

SUM(val) OVER(ORDER BY orderdate ROWS UNBOUNDED PRECEDING) AS sumrows,

SUM(val) OVER(ORDER BY orderdate RANGE UNBOUNDED PRECEDING) AS sumrange

FROM dbo.OrderValues;

This query generates the following output:

orderid orderdate val sumrows sumrange

-------- ---------- -------- ----------- -----------

10248 2013-07-04 440.00 440.00 440.00

10249 2013-07-05 1863.40 2303.40 2303.40

10250 2013-07-08 1552.60 3856.00 4510.06

10251 2013-07-08 654.06 4510.06 4510.06

10252 2013-07-09 3597.90 8107.96 8107.96

...

11070 2015-05-05 1629.98 1257012.06 1263014.56

11071 2015-05-05 484.50 1257496.56 1263014.56

11072 2015-05-05 5218.00 1262714.56 1263014.56

11073 2015-05-05 300.00 1263014.56 1263014.56

11074 2015-05-06 232.09 1263246.65 1265793.22

11075 2015-05-06 498.10 1263744.75 1265793.22

11076 2015-05-06 792.75 1264537.50 1265793.22

11077 2015-05-06 1255.72 1265793.22 1265793.22

The orderdate column isn’t unique. Observe the difference between the results of the functions in orders 10250 and 10251. Notice that in the case of ROWS peers in the orderdate values were not included, making the calculation nondeterministic. Access order to the rows determines which values are included in the calculation. With RANGE, peers were included, so both rows get the same results.

While the logical difference between the two options is subtle, the performance difference is not subtle at all. For the Window Spool operator, the optimizer can use either a special optimized in-memory spool or an on-disk spool like the one used for temporary tables with all the I/O and latching overhead. The risk with the in-memory spool is memory consumption. If the optimizer knows that per underlying row there can’t be more than 10,000 rows in the frame, it uses the in-memory spool. If it either knows that there could be more or is not certain, it will use the on-disk spool. With ROWS, when the fast-track case is detected you have exactly two rows stored in the spool per underlying row, so you always get the in-memory spool. You have a couple of ways to check what kind of spool was used. One is using an extended event calledwindow_spool_ondisk_warning, which is fired when an on-disk spool is used. The other is through STATISTICS IO. The in-memory spool results in zeros in the I/O counters against the worktable, whereas the on-disk spool shows nonzero values.

As an example, run the following query after turning on STATISTICS IO:

SELECT actid, tranid, val,

SUM(val) OVER(PARTITION BY actid

ORDER BY tranid

ROWS UNBOUNDED PRECEDING) AS balance

FROM dbo.Transactions;

It took this query four seconds to complete on my system. Its plan is similar to the one shown earlier in Figure 4-5. Notice that the I/O statistics reported for the worktable are zeros, telling you that the in-memory spool was used:

Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Transactions'. Scan count 1, logical reads 6208, physical reads 168,

read-ahead reads 6200, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Remember that RANGE includes peers, so when using this option, the optimizer cannot know ahead how many rows will need to be included. So whenever you use RANGE, the optimizer always chooses the on-disk spool. Here’s an example using the RANGE option:

SELECT actid, tranid, val,

SUM(val) OVER(PARTITION BY actid

ORDER BY tranid

RANGE UNBOUNDED PRECEDING) AS balance

FROM dbo.Transactions;

Observe that the I/O statistics against the worktable are quite significant this time, telling you that the on-disk spool was used:

Table 'Worktable'. Scan count 2000100, logical reads 12044701, physical reads 0,

read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Transactions'. Scan count 1, logical reads 6208, physical reads 0, read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

It took this query 37 seconds to complete on my system. That’s an order of magnitude slower than with the ROWS option.

![]() Important

Important

There are two important things you should make a note of. One is that if you specify a window order clause but do not indicate an explicit frame, the default frame is the much slower RANGE UNBOUNDED PRECEDING. The second point is that when the order is unique, ROWS and RANGE that have the same frame specification return the same results, but still the former will be much faster. Unfortunately, the optimizer doesn’t internally convert the RANGE option to ROWS when the ordering is unique to allow better optimization. So unless you have a nonunique order and need to include peers, make sure you indicate ROWS explicitly; otherwise, you will just pay for the unnecessary work.

A classic data-analysis calculation called year-to-date (YTD) is a variation of a running total calculation. It’s just that you need to reset the calculation at the beginning of each year. To achieve this, you specify the expression representing the year, like YEAR(orderdate), in the window partition clause in addition to any other elements you need there.

For example, suppose you need to query the OrderValues table and return for each order the current order info, plus a YTD calculation of the order values per customer based on order date ordering, including peers. This means that your window partition clause will be based on the elements custid, YEAR(orderdate). The window order clause will be based on orderdate. The window frame unit will be RANGE because you are requested not to break ties but rather include them. So even though the RANGE option is the more expensive one, that’s exactly the special case where you are supposed to use it. Here’s the complete query:

SELECT custid, orderid, orderdate, val,

SUM(val) OVER(PARTITION BY custid, YEAR(orderdate)

ORDER BY orderdate

RANGE UNBOUNDED PRECEDING) AS YTD_val

FROM dbo.OrderValues;

This query generates the following output:

custid orderid orderdate val YTD_val

------- -------- ---------- -------- --------

1 10643 2014-08-25 814.50 814.50

1 10692 2014-10-03 878.00 1692.50

1 10702 2014-10-13 330.00 2022.50

1 10835 2015-01-15 845.80 845.80

1 10952 2015-03-16 471.20 1317.00

1 11011 2015-04-09 933.50 2250.50

...

10 10389 2013-12-20 1832.80 1832.80

10 10410 2014-01-10 802.00 1768.80

10 10411 2014-01-10 966.80 1768.80

10 10431 2014-01-30 1892.25 3661.05

10 10492 2014-04-01 851.20 4512.25

...

Observe that customer 10 placed two orders on January 10, 2014, and that the calculation produces the same YTD values in both rows, as it should.

If you need to return only one row per distinct customer and date, you want to first group the rows by the two, and then apply the windowed SUM function to the grouped SUM function. Now that the combination of partitioning and ordering elements is unique, you should use the ROWS unit. Here’s the complete query:

SELECT custid, orderdate,

SUM(SUM(val)) OVER(PARTITION BY custid, YEAR(orderdate)

ORDER BY orderdate

ROWS UNBOUNDED PRECEDING) AS YTD_val

FROM dbo.OrderValues

GROUP BY custid, orderdate;

This query generates the following output:

custid orderdate YTD_val

------- ---------- --------

1 2014-08-25 814.50

1 2014-10-03 1692.50

1 2014-10-13 2022.50

1 2015-01-15 845.80

1 2015-03-16 1317.00

1 2015-04-09 2250.50

...

10 2013-12-20 1832.80

10 2014-01-10 1768.80

10 2014-01-30 3661.05

10 2014-04-01 4512.25

10 2014-11-14 7630.25

...

Observe that now there’s only one row for each unique customer and date, like in the case of customer 10, on January 10, 2014.

Ranking window functions

Ranking calculations are implemented in T-SQL as window functions. When you rank a value, you don’t rank it alone, but rather with respect to some set of values, based on certain order. The windowing concept lends itself to such calculations. The set is defined by the OVER clause. Absent a window partition clause, you rank the current row’s value against all rows in the underlying query’s result set. With a window partition clause, you rank the row against the rows in the same window partition. A mandatory window order clause defines the order for the ranking.

To demonstrate ranking window functions, I’ll use the following Orders table.

SET NOCOUNT ON;

USE tempdb;

IF OBJECT_ID(N'dbo.Orders', N'U') IS NOT NULL DROP TABLE dbo.Orders;

CREATE TABLE dbo.Orders

(

orderid INT NOT NULL,

orderdate DATE NOT NULL,

empid INT NOT NULL,

custid VARCHAR(5) NOT NULL,

qty INT NOT NULL,

CONSTRAINT PK_Orders PRIMARY KEY (orderid)

);

GO

INSERT INTO dbo.Orders(orderid, orderdate, empid, custid, qty)

VALUES(30001, '20130802', 3, 'B', 10),

(10001, '20131224', 1, 'C', 10),

(10005, '20131224', 1, 'A', 30),

(40001, '20140109', 4, 'A', 40),

(10006, '20140118', 1, 'C', 10),

(20001, '20140212', 2, 'B', 20),

(40005, '20140212', 4, 'A', 10),

(20002, '20140216', 2, 'C', 20),

(30003, '20140418', 3, 'B', 15),

(30004, '20140418', 3, 'B', 20),

(30007, '20140907', 3, 'C', 30);

T-SQL supports four ranking functions, called ROW_NUMBER, RANK, DENSE_RANK, and NTILE. Here’s a query demonstrating all four against the Orders table, without a window partition clause, ordered by the qty column:

SELECT orderid, qty,

ROW_NUMBER() OVER(ORDER BY qty) AS rownum,

RANK() OVER(ORDER BY qty) AS rnk,

DENSE_RANK() OVER(ORDER BY qty) AS densernk,

NTILE(4) OVER(ORDER BY qty) AS ntile4

FROM dbo.Orders;

This query generates the following output:

orderid qty rownum rnk densernk ntile4

-------- ---- ------- ---- --------- -------

10001 10 1 1 1 1

10006 10 2 1 1 1

30001 10 3 1 1 1

40005 10 4 1 1 2

30003 15 5 5 2 2

30004 20 6 6 3 2

20001 20 7 6 3 3

20002 20 8 6 3 3

10005 30 9 9 4 3

30007 30 10 9 4 4

40001 40 11 11 5 4

The ROW_NUMBER function is the most commonly used of the four. It computes unique incrementing integers in the target partition (the entire query result in this example), based on the specified order. When the ordering is not unique within the partition, like in our example, the calculation is nondeterministic. Meaning, if you run the query again, you’re not guaranteed to get repeatable results. For example, observe the three rows with the quantity 20. They got the row numbers 6, 7, and 8. What determines which of the three gets which row number is a matter of data layout, optimization, and actual access order. Those things are not guaranteed to be repeatable.

There are cases where you need to guarantee determinism (repeatable results). For example, row numbers can be used for paging purposes, as I will demonstrate in Chapter 5, “TOP and OFFSET-FETCH.” For each page, you submit a query filtering only a certain range of row numbers. You do not want the same row to end up in two different pages even though the underlying data didn’t change just because in one query it got row number 25 and in the other 26. To force a deterministic calculation of row numbers, add a tiebreaker to the window order clause. In our example, extending it to qty, orderid makes the ordering unique, and therefore the calculation deterministic.

RANK and DENSE_RANK differ from ROW_NUMBER in that they produce the same rank value for rows with the same ordering value (quantity, in our case). The difference between RANK and DENSE_RANK is that the former computes one more than the count of rows with lower ordering values than the current, and the latter computes one more than the count of distinct ordering values that are lower than the current. For example, the rows that got row numbers 6, 7, and 8 all got rank values 6 (1 + 5 rows with quantities lower than 20) and dense rank values 3 (1 + 2 distinct quantities that are lower than 20). As a result, rank values can have gaps between them, as is the case between the ranks 1 and 5, whereas dense rank values cannot.

Finally, the NTILE function assigns tile numbers to the rows in the partition based on the specified number of tiles and ordering. In our case, the requested number of tiles is 4 and the ordering is based on the qty column. So, based on quantity ordering, the first fourth of the rows in the result is assigned with tile number 1, the next with 2, and so on. If the count of rows doesn’t divide evenly by the specified number of tiles, assuming R is the remainder, the first R tiles will get an extra row. In our case, we have 11 rows, resulting in a tile size of 2 and a remainder of 3. So the first three tiles will have three rows instead of two. The NTILE calculation is the least commonly used out of the four ranking calculations. Its main use cases are in statistical analysis of data.

Recall that the optional window partition clause is available to all window functions. Here’s an example of a computation of row numbers, partitioned by custid, and ordered by orderid:

SELECT custid, orderid, qty,

ROW_NUMBER() OVER(PARTITION BY custid ORDER BY orderid) AS rownum

FROM dbo.Orders

ORDER BY custid, orderid;

This query generates the following output, where you can see the row numbers are independent for each customer:

custid orderid qty rownum

------ ----------- ----------- --------------------

A 10005 30 1

A 40001 40 2

A 40005 10 3

B 20001 20 1

B 30001 10 2

B 30003 15 3

B 30004 20 4

C 10001 10 1

C 10006 10 2

C 20002 20 3

C 30007 30 4

The query defines presentation order by custid, orderid. Remember that absent a presentation ORDER BY clause, presentation order is not guaranteed.

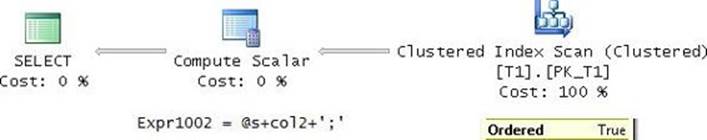

From an optimization perspective, like with window aggregate functions, the ideal index for a query with a ranking function like ROW_NUMBER is a POC index. Figure 4-10 has the plan for the query when a POC index does not exist.

FIGURE 4-10 Optimization of ROW_NUMBER without a POC index.

Because a POC index isn’t present in our case, the optimizer chooses a clustered index scan with an Ordered: False property. It then applies a Sort operator to sort the data by custid, orderid. This order supports both the calculation of the ROW_NUMBER function and the presentation order, which happen to be aligned. The Segment operator flags the Sequence Project operator when a new segment (partition) starts. In turn, the latter returns 1 when the row is the first in the partition, and adds 1 to the previous value when it isn’t.

If you want to avoid the sort, you need to create a POC index, like so:

CREATE UNIQUE INDEX idx_cid_oid_i_qty ON dbo.Orders(custid, orderid) INCLUDE(qty);

Rerun the query, and you get the plan shown in Figure 4-11.

FIGURE 4-11 Optimization of ROW_NUMBER with a POC index.

This time you get an ordered scan of the POC index, and the sort disappears from the plan.

Compared to the previously described optimization of aggregate window functions, ROW_NUMBER, RANK, and DENSE_RANK are optimized using superfast streaming operators; there’s no spooling. It would certainly be great to see in the future similar optimization of aggregate functions that use common specialized frames like ROWS UNBOUNDED PRECEDING.

As for NTILE, the optimizer needs to know the count of rows in the query result in order to compute the tile size. For this, it uses optimization similar to adding the window aggregate COUNT(*) OVER(), with the spooling technique demonstrated earlier in Figure 4-2.

There’s a curious thing about the ROW_NUMBER function and window ordering. A window order clause is mandatory, and it cannot be a constant; though there’s no requirement for the window ordering to be unique. These requirements impose a challenge when you want to compute row numbers just for uniqueness purposes and you don’t care about order. You also don’t want to pay any unnecessary costs that are related to arranging the data in the required order. If you attempt to omit the window order clause, you get an error. If you try to specify a constant likeORDER BY NULL, you get an error. Surprisingly, SQL Server is happy when you provide a subquery returning a constant, as in ORDER BY (SELECT NULL). Here’s an example for a query using this trick:

SELECT orderid, orderdate, custid, empid, qty,

ROW_NUMBER() OVER(ORDER BY (SELECT NULL)) AS rownum

FROM dbo.Orders;

The optimizer realizes that because all rows will have the same ordering value, it doesn’t really need the data in any order. So it assigns the row numbers simply based on access order (data layout, optimization).

Offset window functions

You use offset window functions to request an element from a row that is at the beginning or end of the window frame or is in a certain offset from the current row. I’ll first describe a pair of offset window functions called FIRST_VALUE and LAST_VALUE, and then I’ll describe the pair LAG and LEAD.

FIRST_VALUE and LAST_VALUE

You can use the FIRST_VALUE and LAST_VALUE functions to return an element from the first or last row in the window frame, respectively. The frame specification is the same as what I described in the “Window frame” section in the “Aggregate window functions” topic. This includes the discussion about the critical performance difference between ROWS and RANGE.

You will usually use the FIRST_VALUE and LAST_VALUE functions when you need to return something from the first or last row in the partition. In such a case, you will want to use FIRST_VALUE with the frame ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW, and LAST_VALUE with the frame ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING.

As an example, the following query against the Orders table returns with each order the quantity of the same customer’s first order (firstqty) and last order (lastqty):

SELECT custid, orderid, orderdate, qty,

FIRST_VALUE(qty) OVER(PARTITION BY custid

ORDER BY orderdate, orderid

ROWS BETWEEN UNBOUNDED PRECEDING

AND CURRENT ROW) AS firstqty,

LAST_VALUE(qty) OVER(PARTITION BY custid

ORDER BY orderdate, orderid

ROWS BETWEEN CURRENT ROW

AND UNBOUNDED FOLLOWING) AS lastqty

FROM dbo.Orders

ORDER BY custid, orderdate, orderid;

This query generates the following output:

custid orderid orderdate qty firstqty lastqty

------ ----------- ---------- ----------- ----------- -----------

A 10005 2013-12-24 30 30 10

A 40001 2014-01-09 40 30 10

A 40005 2014-02-12 10 30 10

B 30001 2013-08-02 10 10 20

B 20001 2014-02-12 20 10 20

B 30003 2014-04-18 15 10 20

B 30004 2014-04-18 20 10 20

C 10001 2013-12-24 10 10 30

C 10006 2014-01-18 10 10 30

C 20002 2014-02-16 20 10 30

C 30007 2014-09-07 30 10 30

Typically, you will use these functions combined with other elements in the calculation. For example, compute the difference between the current order quantity and the quantity of the same customer’s first order, as in qty – FIRST_VALUE(qty) OVER(...).

As with all window functions, the window partition clause is optional. As you could probably guess, the window order clause is mandatory. An explicit window frame specification is optional, but recall that once you have a window order clause, there is a frame; and the default frame isRANGE UNBOUNDED PRECEDING. So you want to make sure you specify the frame explicitly; otherwise, you will have problems with both functions. With the FIRST_VALUE function, the default frame will give you the element from the first row in the partition. However, remember that the default RANGE option is much more expensive than ROWS. As for LAST_VALUE, the last row in the default frame is the current row, and not the last row in the partition.

As for optimization, the FIRST_VALUE and LAST_VALUE functions are optimized like aggregate window functions with the same frame specification.

LAG and LEAD

You can use the LAG and LEAD functions to return an element from the previous or next row, respectively. As you might have guessed, a window order clause is mandatory with these functions. Typical uses for these functions include trend analysis (the difference between the current and previous months’ values), recency (the difference between the current and previous orders’ supply dates), and so on.

As an example, the following query returns along with order information the quantities of the same customer’s previous and next orders:

SELECT custid, orderid, orderdate, qty,

LAG(qty) OVER(PARTITION BY custid

ORDER BY orderdate, orderid) AS prevqty,

LEAD(qty) OVER(PARTITION BY custid

ORDER BY orderdate, orderid) AS nextqty

FROM dbo.Orders

ORDER BY custid, orderdate, orderid;

This query generates the following output:

custid orderid orderdate qty prevqty nextqty

------ ----------- ---------- ----------- ----------- -----------

A 10005 2013-12-24 30 NULL 40

A 40001 2014-01-09 40 30 10

A 40005 2014-02-12 10 40 NULL

B 30001 2013-08-02 10 NULL 20

B 20001 2014-02-12 20 10 15

B 30003 2014-04-18 15 20 20

B 30004 2014-04-18 20 15 NULL

C 10001 2013-12-24 10 NULL 10

C 10006 2014-01-18 10 10 20

C 20002 2014-02-16 20 10 30

C 30007 2014-09-07 30 20 NULL

As you can see, the LAG function returns a NULL for the first row in the partition and the LEAD function returns a NULL for the last.

These functions support a second parameter indicating the offset in case you want it to be other than NULL, and a third parameter indicating the default instead of a NULL in case a row doesn’t exist in the requested offset. For example, the expression LAG(qty, 3, 0) returns the quantity from three rows before the current row and 0 if a row doesn’t exist in that offset.

As for optimization, the LAG and LEAD functions are internally converted to the LAST_VALUE function with a frame made of one row. In the case of LAG, the frame used is ROWS BETWEEN 1 PRECEDING AND 1 PRECEDING; in the case of LEAD, the frame is ROWS BETWEEN 1 FOLLOWING AND 1 FOLLOWING.

Statistical window functions

T-SQL supports two pairs of statistical window functions that provide information about distribution of data. One pair of functions provides rank distribution information and another provides inverse distribution information.

Rank distribution functions

Rank distribution functions give you the relative rank of a row expressed as a percent (in the range 0 through 1) in the target partition based on the specified order. T-SQL supports two such functions, called PERCENT_RANK and CUME_DIST. Each of the two computes the rank a bit differently.

Following are the elements involved in the formulas for the two computations:

![]() rk = Rank of the row based on the same specification as the distribution function.

rk = Rank of the row based on the same specification as the distribution function.

![]() nr = Count of rows in the partition.

nr = Count of rows in the partition.

![]() np = Number of rows that precede or are peers of the current row.

np = Number of rows that precede or are peers of the current row.

The formula used to compute PERCENT_RANK is (rk – 1) / (nr – 1).

The formula used to compute CUME_DIST is np / nr.

As an example, the following query uses these functions to compute rank distribution information of student test scores:

USE TSQLV3;

SELECT testid, studentid, score,

CAST( 100.00 *

PERCENT_RANK() OVER(PARTITION BY testid ORDER BY score)

AS NUMERIC(5, 2) ) AS percentrank,

CAST( 100.00 *

CUME_DIST() OVER(PARTITION BY testid ORDER BY score)

AS NUMERIC(5, 2) ) AS cumedist

FROM Stats.Scores;

This query generates the following output:

testid studentid score percentrank cumedist

---------- ---------- ----- ------------ ---------

Test ABC Student E 50 0.00 11.11

Test ABC Student C 55 12.50 33.33

Test ABC Student D 55 12.50 33.33

Test ABC Student H 65 37.50 44.44

Test ABC Student I 75 50.00 55.56

Test ABC Student B 80 62.50 77.78

Test ABC Student F 80 62.50 77.78

Test ABC Student A 95 87.50 100.00

Test ABC Student G 95 87.50 100.00

Test XYZ Student E 50 0.00 10.00

Test XYZ Student C 55 11.11 30.00

Test XYZ Student D 55 11.11 30.00

Test XYZ Student H 65 33.33 40.00

Test XYZ Student I 75 44.44 50.00

Test XYZ Student B 80 55.56 70.00

Test XYZ Student F 80 55.56 70.00

Test XYZ Student A 95 77.78 100.00

Test XYZ Student G 95 77.78 100.00

Test XYZ Student J 95 77.78 100.00

Inverse distribution functions

Inverse distribution functions compute percentiles. A percentile N is the value below which N percent of the observations fall. For example, the 50th percentile (aka median) test score is the score below which 50 percent of the scores fall.

There are two distribution models that can be used to compute percentiles: discrete and continuous. When the requested percentile exists as an exact value in the population, both models return that value. For example, when computing the median with an odd number of elements, both models result in the middle element. The two models differ when the requested percentile doesn’t exist as an actual value in the population. For example, when computing the median with an even number of elements, there is no middle point. In such a case, the discrete model returns the element closest to the missing one. In the median’s case, you get the value just before the missing one. The continuous model interpolates the missing value from the two surrounding it, assuming continuous distribution. In the median’s case, it’s the average of the two middle values.

T-SQL provides two window functions, called PERCENTILE_DISC and PERCENTILE_CONT, that implement the discrete and continuous models, respectively. You specify the percentile you’re after as an input value in the range 0 to 1. Using a clause called WITHIN GROUP, you specify the order. As is usual with window functions, you can apply the calculation against the entire query result set or against a restricted partition using a window partition clause.

As an example, the following query computes the median student test scores within the same test using both models:

SELECT testid, studentid, score,

PERCENTILE_DISC(0.5) WITHIN GROUP(ORDER BY score)

OVER(PARTITION BY testid) AS mediandisc,

PERCENTILE_CONT(0.5) WITHIN GROUP(ORDER BY score)

OVER(PARTITION BY testid) AS mediancont

FROM Stats.Scores;

Because a window function is not supposed to hide the detail, you get the results along with the detail rows. The same results get repeated for all rows in the same partition. Here’s the output of this query:

testid studentid score mediandisc mediancont

---------- ---------- ----- ---------- ----------------------

Test ABC Student E 50 75 75

Test ABC Student C 55 75 75

Test ABC Student D 55 75 75

Test ABC Student H 65 75 75

Test ABC Student I 75 75 75

Test ABC Student B 80 75 75

Test ABC Student F 80 75 75

Test ABC Student A 95 75 75

Test ABC Student G 95 75 75

Test XYZ Student E 50 75 77.5

Test XYZ Student C 55 75 77.5

Test XYZ Student D 55 75 77.5

Test XYZ Student H 65 75 77.5

Test XYZ Student I 75 75 77.5

Test XYZ Student B 80 75 77.5

Test XYZ Student F 80 75 77.5

Test XYZ Student A 95 75 77.5

Test XYZ Student G 95 75 77.5

Test XYZ Student J 95 75 77.5

Observe that for Test ABC the two functions return the same results because there’s an odd number of rows. For Test XYZ, the results are different because there’s an even number of elements. Remember that in the case of median, when there’s an even number of elements the two models use different calculations.

Standard SQL supports inverse distribution functions as grouped ordered set functions. That’s convenient when you need to return the percentile calculation once per group (test, in our case) and not repeated with all detail rows. Unfortunately, ordered set functions are not supported yet in SQL Server. But just to give you a sense, here’s how you would compute the median in both models per test (don’t try running this in SQL Server):

SELECT testid,

PERCENTILE_DISC(0.5) WITHIN GROUP(ORDER BY score) AS mediandisc,

PERCENTILE_CONT(0.5) WITHIN GROUP(ORDER BY score) AS mediancont

FROM Stats.Scores

GROUP BY testid;

![]() Note

Note

You can find a SQL Server feature enhancement request about ordered set functions here: https://connect.microsoft.com/SQLServer/feedback/details/728969. We hope we will see this feature implemented in the future. The concept is applicable not just to inverse-distribution functions but to any set function that has ordering relevance.

The alternative in SQL Server is to use the window functions and eliminate duplicates with a DISTINCT clause, like so:

SELECT DISTINCT testid,

PERCENTILE_DISC(0.5) WITHIN GROUP(ORDER BY score)

OVER(PARTITION BY testid) AS mediandisc,

PERCENTILE_CONT(0.5) WITHIN GROUP(ORDER BY score)

OVER(PARTITION BY testid) AS mediancont

FROM Stats.Scores;

This query generates the following output:

testid mediandisc mediancont

---------- ---------- ----------------------

Test ABC 75 75

Test XYZ 75 77.5

From an optimization perspective, these functions are optimized very inefficiently. The query plan has a number of rounds where the rows are spooled, and then the spool is read once to compute an aggregate and once for the detail. See Chapter 5 for a discussion about more efficient alternatives.

Gaps and islands

Gaps and islands are classic problems in T-SQL that involve a sequence of values. The type of the values is usually date and time, but sometimes integer and others. The gaps task involves identifying the ranges of missing values, and the islands task involves identifying the ranges of existing values. Examples for gaps and islands tasks are identifying periods of inactivity and periods of activity, respectively. Many gaps and islands tasks can be solved efficiently using window functions.

I’ll first demonstrate solutions using a sequence of integers simply because it’s much easier to explain the logic with integers. In reality, most gaps and islands tasks involve date and time sequences. Once you figure out a technique to solve the task using a sequence of integers, you need to perform fairly minor adjustments to apply it to a date-and-time sequence.

For sample data, I’ll use the following table T1:

SET NOCOUNT ON;

USE tempdb;

IF OBJECT_ID('dbo.T1', 'U') IS NOT NULL DROP TABLE dbo.T1;

CREATE TABLE dbo.T1(col1 INT NOT NULL CONSTRAINT PK_T1 PRIMARY KEY);

GO

INSERT INTO dbo.T1(col1) VALUES(1),(2),(3),(7),(8),(9),(11),(15),(16),(17),(28);

Gaps

The gaps task is about identifying the ranges of missing values in the sequence as intervals. I’ll demonstrate a technique to handle the task against the table T1.

One of the simplest and most efficient techniques involves two steps. The first is to return for each current col1 value (call it cur) the immediate next value using the LEAD function (call it nxt). This is achieved with the following query:

SELECT col1 AS cur, LEAD(col1) OVER(ORDER BY col1) AS nxt

FROM dbo.T1;

This query generates the following output:

cur nxt

----------- -----------

1 2

2 3

3 7

7 8

8 9

9 11

11 15

15 16

16 17

17 28

28 NULL

We have an index on col1, so the plan for this query does a single ordered scan of the index to support the LEAD calculation.

Examine the pairs of cur-nxt values, and observe that the pairs where the difference is greater than one represent gaps. The second step involves defining a CTE based on the last query, and then filtering the pairs representing gaps, adding one to cur and subtracting one from nxt to return the actual gap information. Here’s the complete solution query:

WITH C AS

(

SELECT col1 AS cur, LEAD(col1) OVER(ORDER BY col1) AS nxt

FROM dbo.T1

)

SELECT cur + 1 AS range_from, nxt - 1 AS range_to

FROM C

WHERE nxt - cur > 1;

This query generates the following output:

range_from range_to

----------- -----------

4 6

10 10

12 14

18 27

Notice also that the pair with the NULL in nxt was naturally filtered out because the difference was NULL, NULL > 1 is unknown, and unknown gets filtered out.

Islands

The islands task is the inverse of the gaps task. Identifying islands means returning the ranges of consecutive values. I’ll first demonstrate a solution using the generic sequence of integers in T1.col1, and then I’ll use a more realistic example using a sequence of dates.

One of the most beautiful and efficient techniques to identify islands involves computing row numbers based on the order of the sequence column, and using those to compute an island identifier. To see how this can be achieved, first compute row numbers based on col1 order, like so:

SELECT col1, ROW_NUMBER() OVER(ORDER BY col1) AS rownum

FROM dbo.T1;

This query generates the following output:

col1 rownum

----------- --------------------

1 1

2 2

3 3

7 4

8 5

9 6

11 7

15 8

16 9

17 10

28 11

Look carefully at the two sequences and see if you can identify an interesting relationship between them that can help you identify islands. For this, focus your attention on one of the islands. If you do enough staring at the output, you will figure it out at some point—within an island, both sequences keep incrementing by a fixed interval of 1 integer; therefore, the difference between the two is the same within the island. Also, when switching from one island to the next rownum increases by 1, whereas col1 increases by more than 1; therefore, the difference keeps growing from one island to the next and hence is unique per island. This means you can use the difference as the island, or group, identifier. Run the following query to compute the group identifier (grp) based on this logic:

SELECT col1, col1 - ROW_NUMBER() OVER(ORDER BY col1) AS grp

FROM dbo.T1;

This query generates the following output:

col1 grp

----------- --------------------

1 0

2 0

3 0

7 3

8 3

9 3

11 4

15 7

16 7

17 7

28 17

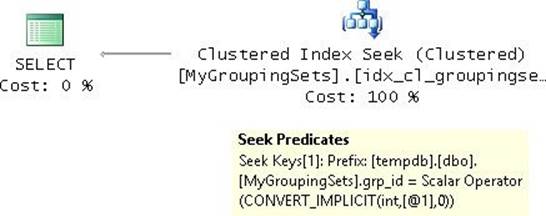

Because there’s an index defined on col1, the computation of the row numbers doesn’t require explicit sorting, as can be seen in the plan for this query in Figure 4-12.

FIGURE 4-12 Plan for query computing group identifier.

Most of the cost of this plan is in the ordered scan of the index PK_T1. The rest of the work involving the calculation of the row numbers and the computation of the difference between the sequences is negligible.

Finally, you need to define a CTE based on the last query, and then in the outer query group the rows by grp, return the minimum col1 value as the start of the island, and return the maximum as the end. Here’s the complete solution query:

WITH C AS

(

SELECT col1, col1 - ROW_NUMBER() OVER(ORDER BY col1) AS grp

FROM dbo.T1

)

SELECT MIN(col1) AS range_from, MAX(col1) AS range_to

FROM C

GROUP BY grp;

This query generates the following output:

range_from range_to

----------- -----------

1 3

7 9

11 11

15 17

28 28

The col1 sequence in T1 has unique values, but you should be aware that if duplicate values were possible, the solution would not work correctly anymore. That’s because the ROW_NUMBER function generates unique values in the target partition, even for rows with the same ordering values. For the logic to be correct, you need a ranking function that assigns the same ranking values for all rows with the same ordering values, with no gaps between distinct ranking values. Fortunately, such a ranking function exists—that’s the DENSE_RANK function. Here’s the revised solution using DENSE_RANK:

WITH C AS

(

SELECT col1, col1 - DENSE_RANK() OVER(ORDER BY col1) AS grp

FROM dbo.T1

)

SELECT MIN(col1) AS range_from, MAX(col1) AS range_to

FROM C

GROUP BY grp;

Because the DENSE_RANK function works correctly both when the sequence is unique and when it isn’t, it makes sense to always use DENSE_RANK and not ROW_NUMBER. It’s just easier to explain the logic first with a unique sequence using the ROW_NUMBER function, and then examine a nonunique sequence, and then explain why DENSE_RANK is preferred.

As mentioned, in most cases in reality you will need to identify islands involving date and time sequences. As an example, suppose you need to query the Sales.Orders table in the TSQLV3 database, and identify for each shipper the islands of shipped date values. The shipped date values are stored in the shippeddate column. Clearly you can have duplicate values in this column. Also, not all orders recorded in the table are shipped orders; those that were not yet shipped have a NULL shipped date. For the purpose of identifying islands, you should simply ignore those NULLs. To support your solution, you need an index on the partitioning column shipperid, sequence column shippeddate, and tiebreaker column orderid as the keys. Because NULLs are possible and should be ignored, you add a filter that excludes NULLs from the index definition. Run the following code to create the recommended index:

USE TSQLV3;

CREATE UNIQUE INDEX idx_sid_sd_oid

ON Sales.Orders(shipperid, shippeddate, orderid)

WHERE shippeddate IS NOT NULL;

When computing the group identifier for the islands in col1 in T1, both the col1 values and the dense rank values were integers with the same interval 1. With the shippeddate sequence, things are trickier because the shipped date values and the dense rank values are of different types; one is temporal and the other is integer. Still, the interval between consecutive values in each is fixed, only in one case it’s the temporal interval 1 day and in the other it’s the integer interval 1. So, to apply the logic in this case, you subtract from the temporal shipped date value the dense rank value times the temporal interval (1 day). This way, the group identifier will be a temporal value, but like before it will be the same for all members of the same island.

Here’s the complete solution query implementing this logic:

WITH C AS

(

SELECT shipperid, shippeddate,

DATEADD(

day,

-1 * DENSE_RANK() OVER(PARTITION BY shipperid ORDER BY shippeddate),

shippeddate) AS grp

FROM Sales.Orders

WHERE shippeddate IS NOT NULL

)

SELECT shipperid,

MIN(shippeddate) AS fromdate,

MAX(shippeddate) AS todate,

COUNT(*) as numorders

FROM C

GROUP BY shipperid, grp;

A good way to understand the logic behind computing the group identifier is to first run the inner query, which defines the CTE C, and examine the result. You will get the following output:

shipperid shippeddate grp

----------- ----------- ----------

1 2013-07-10 2013-07-09

1 2013-07-15 2013-07-13

1 2013-07-23 2013-07-20

1 2013-07-29 2013-07-25

1 2013-08-02 2013-07-28

1 2013-08-06 2013-07-31

1 2013-08-09 2013-08-02

1 2013-08-09 2013-08-02

...

1 2014-01-10 2013-12-07

1 2014-01-13 2013-12-09

1 2014-01-14 2013-12-09

1 2014-01-14 2013-12-09

1 2014-01-22 2013-12-16

...

You will notice that the dates that belong to the same island get the same group identifier.

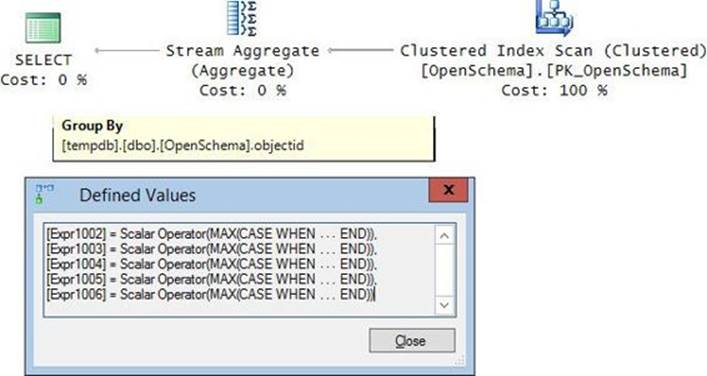

The plan for the inner query defining the CTE C can be seen in Figure 4-13.

FIGURE 4-13 Plan for inner query computing group identifier with dates.

This plan is very efficient. It performs an ordered scan of the index you prepared for this solution to support the computation of the dense rank values. The cost of the rest of the work in the plan is negligible.

The complete solution generates the ranges of shipped date values as the following output shows: