T-SQL Querying (2015)

Chapter 9. Programmable objects

This chapter focuses on the programmatic constructs in T-SQL. It covers dynamic SQL, user-defined functions, stored procedures, triggers, SQLCLR programming, transactions and concurrency, and exception handling. Because the book’s focus is on querying, most of the discussions involving programmatic constructs will tend to center on their use to support querying, with emphasis on the performance of the code.

Dynamic SQL

Dynamic SQL is, in essence, T-SQL code that constructs and executes T-SQL code. You usually build the batch of code as a character string that you store in a variable, and then you execute the code stored in the variable using one of two tools: the EXEC command (short for EXECUTE) or the sp_executesql stored procedure. The latter is the more flexible tool and, therefore, the recommended one to use.

The code you execute dynamically operates in a batch that is considered separate from the calling batch. That’s important to keep in mind because the batch is the unit for a number of things in Microsoft SQL Server. It’s the unit of parsing, binding, and optimization. The batch is also the scope for variables and parameters. You cannot access a variable declared in one batch within another. This means that if you have a variable in the calling batch, it is not visible to the code in the dynamic batch, and the other way around. So if you need to be able to pass variable values between the batches, and you want to avoid writing to and reading from tables for this purpose, you will need an interface in the form of input and output parameters. Between the EXEC and sp_executesql tools, only the latter supports such an interface; therefore, it is the recommended tool to use. I’ll demonstrate using both tools.

Using the EXEC command

As mentioned, the EXEC command doesn’t support an interface. You pass the character string holding the batch of code that you want to run as input, and the command executes the code within it. The lack of support for an interface introduces a challenge if in the dynamic batch you need to refer to a value in a variable or a parameter from the calling batch.

For example, suppose that the calling batch has a variable called @s that holds the last name of an employee. You need to construct a dynamic batch that, among other things, queries the HR.Employees table in the TSQLV3 database and returns the employee or employees with that last name. What some do in such a case is concatenate the content of the variable (the actual last name) as a literal in the code and then execute the constructed code with the EXEC command, like so:

SET NOCOUNT ON;

USE TSQLV3;

DECLARE @s AS NVARCHAR(200);

SET @s = N'Davis'; -- originates in user input

DECLARE @sql AS NVARCHAR(1000);

SET @sql = N'SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = N''' + @s + N''';';

PRINT @sql; -- for debug purposes

EXEC (@sql);

Running this code produces the following output, starting with the generated batch of code for troubleshooting purposes:

SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = N'Davis';

empid firstname lastname hiredate

----------- ---------- -------------------- ----------

1 Sara Davis 2012-05-01

There are two problems with this approach—one related to performance and the other to security. The performance-related problem is that for each distinct last name, the generated query string will be different. This means that unless SQL Server decides to parameterize the code—and it’s quite conservative about the cases that it automatically parameterizes—it will end up creating and caching a separate plan for each distinct string. This behavior can result in flooding the memory with all those ad hoc plans, which will rarely get reused.

As for the security-related problem, concatenating user inputs as constants directly into the code exposes your environment to SQL injection attacks. Imagine that the user providing the last name that ends up in your code is a hacker. Imagine that instead of providing just a last name, they pass the following (by running the preceding code and replacing just the variable assignment with the following one):

SET @s = N'abc''; PRINT ''SQL injection!''; --';

Observe the query string and the outputs that are generated:

SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = N'abc'; PRINT 'SQL injection!'; --';

empid firstname lastname hiredate

----------- ---------- -------------------- ----------

SQL injection!

Notice the last bit of output. It tells you that you ended up running code in your system that the hacker injected and you didn’t intend to run. In this example, the injected code is a harmless PRINT command, but it could have been code with much worse implications, as the following comic strip will attest: http://xkcd.com/327/.

Often hackers will not try to inject code that does direct damage, because such an attempt is likely to be discovered quickly. Instead, they will try to steal information from your environment. Using the employee-name example to demonstrate, a hacker will first pass any last name just to see the structure of the result. The hacker will see that it contains four columns: the first is an integer, the second and third are character strings, and the fourth is a date. The hacker then submits the following “last name” to collect information about the objects in the database:

SET @s = N'abc'' UNION ALL SELECT object_id, SCHEMA_NAME(schema_id), name, NULL

FROM sys.objects WHERE type IN (''U'', ''V''); --';

Instead of getting employee information, the hacker gets the following information about the object IDs, schema, and object names of all objects in the database (the object IDs in your case will be different, of course):

SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = N'abc' UNION ALL

SELECT object_id, SCHEMA_NAME(schema_id), name, NULL

FROM sys.objects WHERE type IN ('U', 'V'); --';

empid firstname lastname hiredate

----------- ----------- ------------------ ----------

245575913 HR Employees NULL

309576141 Production Suppliers NULL

341576255 Production Categories NULL

373576369 Production Products NULL

485576768 Sales Customers NULL

517576882 Sales Shippers NULL

549576996 Sales Orders NULL

645577338 Sales OrderDetails NULL

805577908 Stats Tests NULL

837578022 Stats Scores NULL

901578250 dbo Nums NULL

933578364 Sales OrderValues NULL

949578421 Sales OrderTotalsByYear NULL

965578478 Sales CustOrders NULL

981578535 Sales EmpOrders NULL

Because the hacker is interested in stealing customer information, his next move is to query sys.columns to ask for the metadata information about the columns in the Customers table. (Again, the hacker will need to use the object ID representing the Customers table in your database.) Here’s the query he will use:

SET @s = N'abc'' UNION ALL

SELECT NULL, name, NULL, NULL FROM sys.columns WHERE object_id = 485576768; --';

The hacker gets the following output:

SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = N'abc' UNION ALL

SELECT NULL, name, NULL, NULL FROM sys.columns WHERE object_id = 485576768; --';

empid firstname lastname hiredate

----------- ------------- --------- ----------

NULL custid NULL NULL

NULL companyname NULL NULL

NULL contactname NULL NULL

NULL contacttitle NULL NULL

NULL address NULL NULL

NULL city NULL NULL

NULL region NULL NULL

NULL postalcode NULL NULL

NULL country NULL NULL

NULL phone NULL NULL

NULL fax NULL NULL

Then the hacker’s next move is to collect the phone numbers of the customers by using the following code:

SET @s = N'abc'' UNION ALL SELECT NULL, companyname, phone, NULL FROM Sales.Customers; --';

He gets the following output:

SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = N'abc' UNION ALL

SELECT NULL, companyname, phone, NULL FROM Sales.Customers; --';

empid firstname lastname hiredate

----------- --------------- --------------- ----------

NULL Customer NRZBB 030-3456789 NULL

NULL Customer MLTDN (5) 789-0123 NULL

NULL Customer KBUDE (5) 123-4567 NULL

NULL Customer HFBZG (171) 456-7890 NULL

NULL Customer HGVLZ 0921-67 89 01 NULL

...

All of this was possible because you concatenated the user input straight into the code. If you’re thinking you can check the inputs by looking for common elements used in injection, you need to be aware that it’s hard to cover all possible injection methods. Hackers keep reinventing themselves. Like my friend and colleague Richard Waymire once said, “They’re not necessarily smarter than you, but they have more time than you.” The only real way to avoid injection is not to concatenate user inputs into your code; rather, pass them as parameters. However, to do this, you need a tool that supports an interface, and unfortunately the EXEC command doesn’t. Fortunately, the sp_executesql procedure does support such an interface, as I will demonstrate shortly.

The EXEC command has an interesting capability you can use to concatenate multiple variables within the parentheses, like so:

EXEC(@v1 + @v2 + @v2);

Even if the inputs are character strings with a limited size—for example, VARCHAR(8000)—you are allowed to exceed 8,000 characters in the combined string. With legacy versions of SQL Server prior to the support for VARCHAR(MAX) and NVARCHAR(MAX), this used to be an important capability. But with these types, you can pass an input batch that is up to 2 GB in size. EXEC supports both regular character strings and Unicode ones as inputs, unlike sp_executesql, which supports only the latter kind.

Using EXEC AT

In addition to supporting an EXEC command that executes a dynamic batch locally, SQL Server also supports a command called EXEC AT that executes a dynamic batch against a linked server. What’s interesting about this tool is that if the provider you use to connect to the linked server supports parameters, you can pass inputs to the dynamic batch through parameters.

As an example, assuming you have access to another SQL Server instance called YourServer, run the following code to create a linked server:

EXEC sp_addlinkedserver

@server = N'YourServer',

@srvproduct = N'SQL Server';

Assuming you installed the sample database TSQLV3 in that instance, run the following code to test the EXEC AT command:

DECLARE @sql AS NVARCHAR(1000), @pid AS INT;

SET @sql =

N'SELECT productid, productname, unitprice

FROM TSQLV3.Production.Products

WHERE productid = ?;';

SET @pid = 3;

EXEC(@sql, @pid) AT [YourServer];

The code constructs a batch that queries the Production.Products table, filters a product ID that is provided as a parameter, and then executes the batch against the linked server, passing the value 3 as the input. This code generates the following output:

productid productname unitprice

----------- -------------- ----------

3 Product IMEHJ 10.00

Using the sp_executesql procedure

Unlike the EXEC command, the sp_executesql procedure supports defining input and output parameters, much like a stored procedure does. This tool accepts three parts as its inputs. The first part, called @stmt, is the input batch of code you want to run (with the references to the input and output parameters). The second part, called @params, is where you provide the declaration of your parameters. The third part is where you assign values to the dynamic batch’s input parameters and collect values from the output parameters into variables.

Back to the original task of querying the Employees table based on a last name that originated in user input, here’s how you achieve it with a parameterized query using sp_executesql:

DECLARE @s AS NVARCHAR(200);

SET @s = N'Davis';

DECLARE @sql AS NVARCHAR(1000);

SET @sql = 'SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = @lastname;';

PRINT @sql; -- For debug purposes

EXEC sp_executesql

@stmt = @sql,

@params = N'@lastname AS NVARCHAR(200)',

@lastname = @s;

The code generates the following query string and output:

SELECT empid, firstname, lastname, hiredate

FROM HR.Employees WHERE lastname = @lastname;

empid firstname lastname hiredate

----------- ---------- -------------------- ----------

1 Sara Davis 2012-05-01

This solution doesn’t have the performance and security problems you had with EXEC. Regarding performance, the query gets optimized in the first execution of the code and the plan is cached. Subsequent executions of the code can potentially reuse the cached plan regardless of which last name is passed. This behavior is quite similar to the way plans for parameterized queries in stored procedures are cached and reused.

Regarding security, because the actual user input is not embedded in the code, there’s absolutely no exposure to SQL injection. The user input is always considered as a value in the parameter and is never made an actual part of the code.

Dynamic pivot

In Chapter 4, “Grouping, pivoting, and windowing,” I covered pivoting methods and provided the following example demonstrating how to handle pivoting dynamically:

USE TSQLV3;

DECLARE

@cols AS NVARCHAR(1000),

@sql AS NVARCHAR(4000);

SET @cols =

STUFF(

(SELECT N',' + QUOTENAME(orderyear) AS [text()]

FROM (SELECT DISTINCT YEAR(orderdate) AS orderyear

FROM Sales.Orders) AS Years

ORDER BY orderyear

FOR XML PATH(''), TYPE).value('.[1]', 'VARCHAR(MAX)'), 1, 1, '')

SET @sql = N'SELECT custid, ' + @cols + N'

FROM (SELECT custid, YEAR(orderdate) AS orderyear, val

FROM Sales.OrderValues) AS D

PIVOT(SUM(val) FOR orderyear IN(' + @cols + N')) AS P;';

EXEC sys.sp_executesql @stmt = @sql;

This example constructs a pivot query that returns a row per customer, a column per order year, and the sum of all order values for each intersection of customer and year. The code returns the following output:

custid 2013 2014 2015

------- -------- --------- ---------

1 NULL 2022.50 2250.50

2 88.80 799.75 514.40

3 403.20 5960.78 660.00

4 1379.00 6406.90 5604.75

5 4324.40 13849.02 6754.16

6 NULL 1079.80 2160.00

7 9986.20 7817.88 730.00

8 982.00 3026.85 224.00

9 4074.28 11208.36 6680.61

10 1832.80 7630.25 11338.56

...

Dynamic SQL is used here to avoid the need to hard code the years into the query. Instead, you query the distinct years from the data. Using an aggregate string concatenation method that is based on the FOR XML PATH option, you construct the comma-separated list of years for the pivot query’s IN clause. This way, when orders are recorded from a new year, the next time you execute the code that year is automatically included. If you’re reading the book’s chapters out of order and are not familiar with pivoting and aggregate string concatenation methods, you can find those in Chapter 4 in the sections “Pivoting” and “Custom aggregations,” respectively. In the next section, I’ll assume you are familiar with both.

The dynamic pivot example from Chapter 4 handled a specific pivoting task. If you need a similar solution for a different pivot task, you need to duplicate the code and change the pivoting elements to the new ones. The following sp_pivot stored procedure provides a more generalized solution for dynamic pivoting:

USE master;

GO

IF OBJECT_ID(N'dbo.sp_pivot', N'P') IS NOT NULL DROP PROC dbo.sp_pivot;

GO

CREATE PROC dbo.sp_pivot

@query AS NVARCHAR(MAX),

@on_rows AS NVARCHAR(MAX),

@on_cols AS NVARCHAR(MAX),

@agg_func AS NVARCHAR(257) = N'MAX',

@agg_col AS NVARCHAR(MAX)

AS

BEGIN TRY

-- Input validation

IF @query IS NULL OR @on_rows IS NULL OR @on_cols IS NULL

OR @agg_func IS NULL OR @agg_col IS NULL

THROW 50001, 'Invalid input parameters.', 1;

-- Additional input validation goes here (SQL injection attempts, etc.)

DECLARE

@sql AS NVARCHAR(MAX),

@cols AS NVARCHAR(MAX),

@newline AS NVARCHAR(2) = NCHAR(13) + NCHAR(10);

-- If input is a valid table or view

-- construct a SELECT statement against it

IF COALESCE(OBJECT_ID(@query, N'U'), OBJECT_ID(@query, N'V')) IS NOT NULL

SET @query = N'SELECT * FROM ' + @query;

-- Make the query a derived table

SET @query = N'(' + @query + N') AS Query';

-- Handle * input in @agg_col

IF @agg_col = N'*' SET @agg_col = N'1';

-- Construct column list

SET @sql =

N'SET @result = ' + @newline +

N' STUFF(' + @newline +

N' (SELECT N'',['' + '

+ 'CAST(pivot_col AS sysname) + '

+ 'N'']'' AS [text()]' + @newline +

N' FROM (SELECT DISTINCT('

+ @on_cols + N') AS pivot_col' + @newline +

N' FROM' + @query + N') AS DistinctCols' + @newline +

N' ORDER BY pivot_col'+ @newline +

N' FOR XML PATH('''')),'+ @newline +

N' 1, 1, N'''');'

EXEC sp_executesql

@stmt = @sql,

@params = N'@result AS NVARCHAR(MAX) OUTPUT',

@result = @cols OUTPUT;

-- Create the PIVOT query

SET @sql =

N'SELECT *' + @newline +

N'FROM (SELECT '

+ @on_rows

+ N', ' + @on_cols + N' AS pivot_col'

+ N', ' + @agg_col + N' AS agg_col' + @newline +

N' FROM ' + @query + N')' +

+ N' AS PivotInput' + @newline +

N' PIVOT(' + @agg_func + N'(agg_col)' + @newline +

N' FOR pivot_col IN(' + @cols + N')) AS PivotOutput;'

EXEC sp_executesql @sql;

END TRY

BEGIN CATCH

;THROW;

END CATCH;

GO

The stored procedure accepts the following input parameters:

![]() The @query parameter is either a table or view name or an entire query whose result you want to pivot—for example, N‘Sales.OrderValues’.

The @query parameter is either a table or view name or an entire query whose result you want to pivot—for example, N‘Sales.OrderValues’.

![]() The @on_rows parameter is the pivot grouping element—for example, N‘custid’.

The @on_rows parameter is the pivot grouping element—for example, N‘custid’.

![]() The @on_cols parameter is the pivot spreading element—for example, N‘YEAR(orderdate)’.

The @on_cols parameter is the pivot spreading element—for example, N‘YEAR(orderdate)’.

![]() The @agg_func parameter is the pivot aggregate function—for example, N‘SUM’.

The @agg_func parameter is the pivot aggregate function—for example, N‘SUM’.

![]() The @agg_col parameter is the input expression to the pivot aggregate function—for example, N‘val’.

The @agg_col parameter is the input expression to the pivot aggregate function—for example, N‘val’.

![]() Important

Important

This stored procedure supports SQL injection by definition. The input parameters are injected directly into the code to form the pivot query. For this reason, you want to be extremely careful with how and for what purpose you use it. For example, it could be a good idea to restrict the use of this procedure only to developers to aid in constructing pivot queries. Having an application invoke this procedure after collecting user inputs puts your environment at risk.

Because the stored procedure is created in the master database with the sp_ prefix, it can be executed in the context of any database. This is done either by calling it without the database prefix while connected to the desired database or by invoking it using the three-part name with the desired database as the database prefix, as in mydb.dbo.sp_pivot.

The stored procedure performs some basic input validation to ensure all inputs were provided. The procedure then checks if the input @query contains an existing table or view name in the target database. If it does, the procedure replaces the content of @query with a query against the object; if it does not, the procedure assumes that @query already contains a query. Then the procedure replaces the contents of @query with the definition of a derived table called Query based on the input query. This derived table will be considered as the input for finding both the distinct spreading values and the final pivot query.

The next step in the procedure is to check if the input @agg_col is *. It’s common for people to use * as the input to the aggregate function COUNT to count rows. However, the PIVOT operator doesn’t support * as an input to COUNT. So the code replaces * with the constant 1, and it will later define a column called agg_col based on what’s stored in @agg_col. This column will eventually be used as the input to the aggregate function stored in @agg_func.

The next two steps represent the heart of the stored procedure. These steps construct two different dynamic batches. The first creates a string with the comma-separated list of distinct spreading values that will eventually appear in the PIVOT operator’s IN clause. This batch is executed with sp_executesql and, using an output parameter called @result, the result string is stored in a local variable called @cols.

The second dynamic batch holds the final pivot query. The code constructs a derived table called PivotInput from a query against the derived table stored in @query. All pivoting elements (grouping, spreading, and aggregation) are injected into the inner query’s SELECT list. Then the PIVOT operator’s specification is constructed from the input aggregate function applied to agg_col and the IN clause with the comma-separated list of spreading values stored in @cols. The final pivot query is then executed using sp_executesql.

As an example of using the procedure, the following code computes the count of orders per employee and order year pivoted by order month:

EXEC TSQLV3.dbo.sp_pivot

@query = N'Sales.Orders',

@on_rows = N'empid, YEAR(orderdate) AS orderyear',

@on_cols = N'MONTH(orderdate)',

@agg_func = N'COUNT',

@agg_col = N'*';

This code generates the following output:

empid orderyear 1 2 3 4 5 6 7 8 9 10 11 12

------- ---------- --- --- --- --- --- --- --- --- --- --- --- ---

1 2014 3 2 5 1 5 4 7 3 8 7 3 7

5 2013 0 0 0 0 0 0 3 0 1 2 2 3

2 2015 7 3 9 18 2 0 0 0 0 0 0 0

6 2014 2 2 2 4 2 2 2 2 1 4 5 5

8 2014 5 8 6 2 4 3 6 5 3 7 2 3

9 2015 5 4 6 4 0 0 0 0 0 0 0 0

2 2013 0 0 0 0 0 0 1 2 5 2 2 4

3 2014 7 9 3 5 5 6 2 4 4 7 8 11

7 2013 0 0 0 0 0 0 0 1 2 5 3 0

4 2015 6 14 12 10 2 0 0 0 0 0 0 0

...

As another example, the following code computes the sum of order values (quantity * unit price) per employee pivoted by order year:

EXEC TSQLV3.dbo.sp_pivot

@query = N'SELECT O.orderid, empid, orderdate, qty, unitprice

FROM Sales.Orders AS O

INNER JOIN Sales.OrderDetails AS OD

ON OD.orderid = O.orderid',

@on_rows = N'empid',

@on_cols = N'YEAR(orderdate)',

@agg_func = N'SUM',

@agg_col = N'qty * unitprice';

This code generates the following output:

empid 2013 2014 2015

------ --------- ---------- ---------

9 11365.70 29577.55 42020.75

3 19231.80 111788.61 82030.89

6 17731.10 45992.00 14475.00

7 18104.80 66689.14 56502.05

1 38789.00 97533.58 65821.13

4 53114.80 139477.70 57594.95

5 21965.20 32595.05 21007.50

2 22834.70 74958.60 79955.96

8 23161.40 59776.52 50363.11

When you’re done, run the following code for cleanup:

USE master;

IF OBJECT_ID(N'dbo.sp_pivot', N'P') IS NOT NULL DROP PROC dbo.sp_pivot;

Dynamic search conditions

Dynamic search conditions, also known as dynamic filtering, are a common need in applications. The idea is that the application provides the user with an interface to filter data by various attributes, and the user chooses which attributes to filter by with each request. To demonstrate techniques to handle dynamic search conditions, I’ll use a table called Orders in my examples. Run the following code to create the Orders table in tempdb as a copy of TSQLV3.Sales.Orders, along with a few indexes to support common filters:

SET NOCOUNT ON;

USE tempdb;

IF OBJECT_ID(N'dbo.GetOrders', N'P') IS NOT NULL DROP PROC dbo.GetOrders;

IF OBJECT_ID(N'dbo.Orders', N'U') IS NOT NULL DROP TABLE dbo.Orders;

GO

SELECT orderid, custid, empid, orderdate,

CAST('A' AS CHAR(200)) AS filler

INTO dbo.Orders

FROM TSQLV3.Sales.Orders;

CREATE CLUSTERED INDEX idx_orderdate ON dbo.Orders(orderdate);

CREATE UNIQUE INDEX idx_orderid ON dbo.Orders(orderid);

CREATE INDEX idx_custid_empid ON dbo.Orders(custid, empid) INCLUDE(orderid, orderdate, filler);

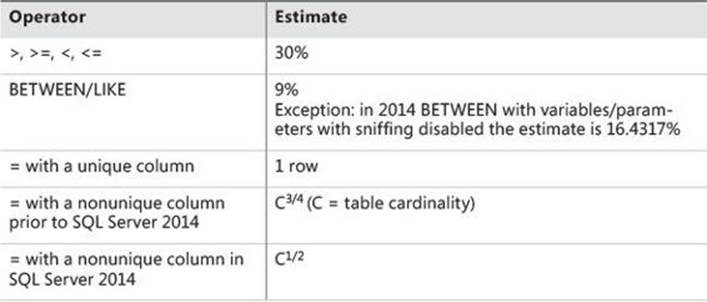

Your task is to create a stored procedure called dbo.GetOrders that accepts four optional inputs, called @orderid, @custid, @empid, and @orderdate. The procedure is supposed to query the Orders table and filter the rows based only on the parameters that get non-NULL input values.

The following implementation of the stored procedure represents one of the most commonly used techniques to handle the task:

IF OBJECT_ID(N'dbo.GetOrders', N'P') IS NOT NULL DROP PROC dbo.GetOrders;

GO

CREATE PROC dbo.GetOrders

@orderid AS INT = NULL,

@custid AS INT = NULL,

@empid AS INT = NULL,

@orderdate AS DATE = NULL

AS

SELECT orderid, custid, empid, orderdate, filler

FROM dbo.Orders

WHERE (orderid = @orderid OR @orderid IS NULL)

AND (custid = @custid OR @custid IS NULL)

AND (empid = @empid OR @empid IS NULL)

AND (orderdate = @orderdate OR @orderdate IS NULL);

GO

The procedure uses a static query that, for each parameter, uses the following disjunction of predicates (OR’d predicates): column = @parameter OR @parameter IS NULL. If a value isn’t provided for the parameter, the right predicate is true. With a disjunction of predicates when one of the operands is true, the whole thing is true, so no filtering happens based on this column. If a value is specified, the right predicate is false; therefore, the left predicate is the only one that counts for determining which rows to filter.

This solution is simple and easy to maintain; however, absent an extra element, it tends to result in suboptimal query plans. The reason for this is that when you execute the procedure for the first time, the optimizer optimizes the parameterized form of the code, including all predicates from the query. This means that both the predicates that are relevant for the current execution of the query and the ones that aren’t relevant are included in the plan. This approach is used to promote plan reuse behavior to save the time and resources that would have been associated with the creation of a new plan with every execution. This topic is covered in detail later in the chapter in the “Compilations, recompilations, and reuse of execution plans” section. This approach guarantees that if the procedure is called again with a different set of relevant parameters, the cached plan would still be valid and therefore can be reused. But if the plan has to be correct while taking into consideration all predicates in the query, including the irrelevant ones for the current execution, you realize that it’s unlikely to be efficient.

As an example, run the following code to execute the procedure with an input value provided only to the @orderdate parameter:

EXEC dbo.GetOrders @orderdate = '20140101';

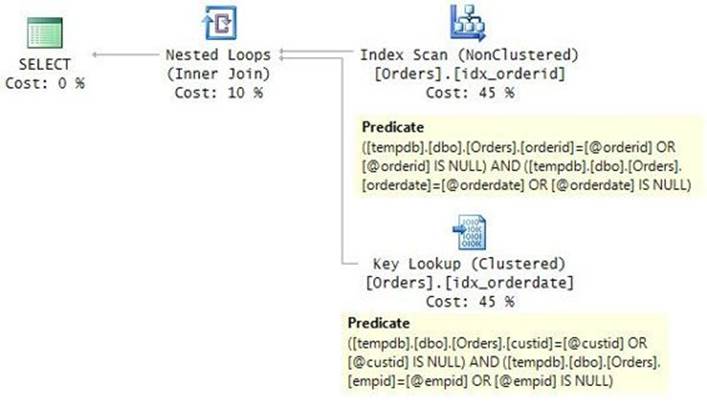

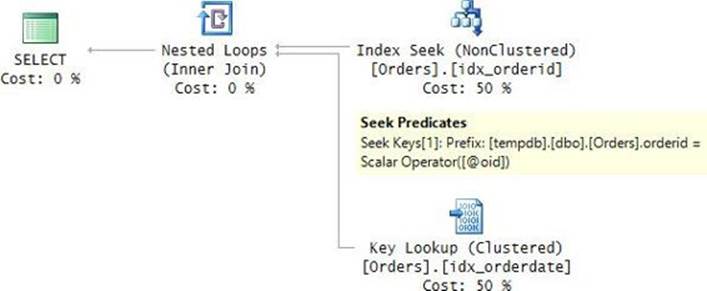

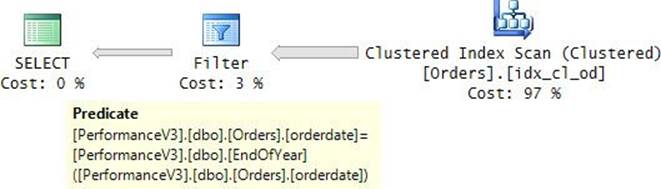

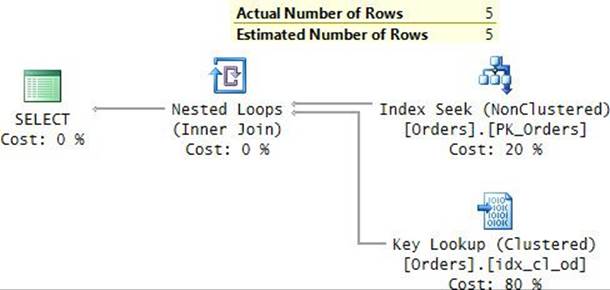

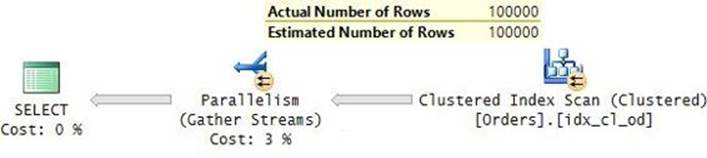

Observe the plan that the optimizer created for the query as shown in Figure 9-1.

FIGURE 9-1 Plan for a solution using a static query.

There are different places in the plan where the different predicates are processed, but the point is that all predicates are processed. With a filter based only on the orderdate column, the most efficient plan would be one that performs a seek and a range scan in the clustered index. But what you see here is an entirely different plan that is very inefficient for this execution.

You can do a number of things to get efficient plans. If you are willing to forgo the benefits of plan reuse, add OPTION(RECOMPILE) at the end of the query, which forces SQL Server to create a new plan with every execution. It’s critical, though, to use the statement-level RECOMPILE option and not the procedure-level one. With the former, the parser performs parameter embedding, where it replaces the parameters with the constants and removes the redundant parts of the query before passing it to the optimizer. As a result, the query that gets optimized contains only the predicates that are relevant to the current execution, so the likelihood of getting an efficient plan is quite high.

Run the following code to re-create the GetOrders procedure, adding the RECOMPILE option to the query:

IF OBJECT_ID(N'dbo.GetOrders', N'P') IS NOT NULL DROP PROC dbo.GetOrders;

GO

CREATE PROC dbo.GetOrders

@orderid AS INT = NULL,

@custid AS INT = NULL,

@empid AS INT = NULL,

@orderdate AS DATE = NULL

AS

SELECT orderid, custid, empid, orderdate, filler

FROM dbo.Orders

WHERE (orderid = @orderid OR @orderid IS NULL)

AND (custid = @custid OR @custid IS NULL)

AND (empid = @empid OR @empid IS NULL)

AND (orderdate = @orderdate OR @orderdate IS NULL)

OPTION (RECOMPILE);

GO

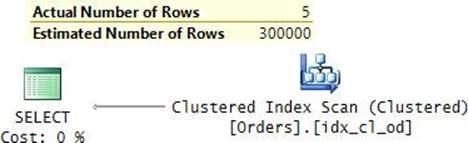

Run the following code to test the procedure with a filter based on the orderdate column:

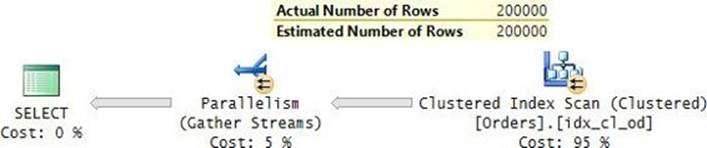

EXEC dbo.GetOrders @orderdate = '20140101';

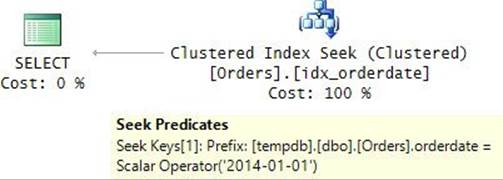

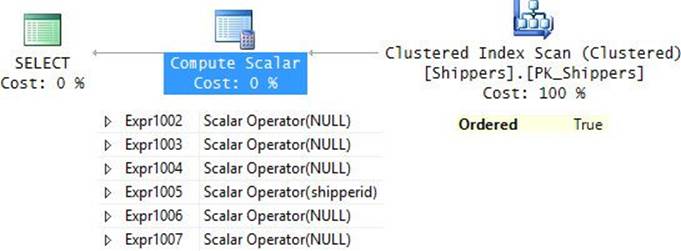

The plan for this execution is shown in Figure 9-2.

FIGURE 9-2 Plan for a query with RECOMPILE filtering by orderdate.

The plan is optimal, with a seek in the clustered index, which is defined with orderdate as the key. Observe that only the predicate involving the orderdate column appears in the plan, and that the reference to the parameter was replaced with the constant.

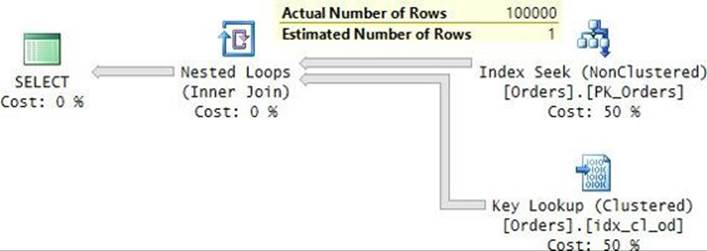

Execute the procedure again, this time with a filter based on the orderid column:

EXEC dbo.GetOrders @orderid = 10248;

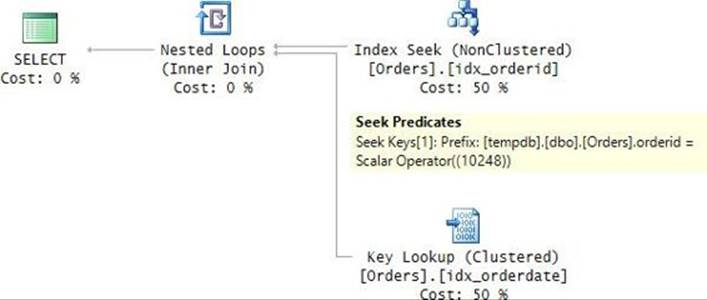

The plan for this execution is shown in Figure 9-3.

FIGURE 9-3 Plan for a query with RECOMPILE filtering by orderid.

The plan is, again, optimal, performing a seek in the index on orderid, followed by a lookup. The only predicate appearing in this plan is the one based on the orderid column—again, with the parameter replaced with the constant.

Because of the forced recompiles and parameter embedding that takes place, you get very efficient plans. The solution uses static SQL, so it’s not exposed to SQL injection attacks. It’s simple and easy to maintain. The one drawback of this solution is that, by definition, it doesn’t reuse plans. But what if the procedure is called frequently with common combinations of input parameters and you would like to get both efficient plans and efficient plan reuse? There are a couple of ways to achieve this goal.

You could create a separate procedure for each unique combination of parameters and have the GetOrders procedure use nested IF-ELSE IF statements to invoke the right procedure based on which parameters are specified. Each procedure will have a unique query with only the relevant predicates, without the RECOMPILE option. With this solution, the likelihood of getting efficient plans is high. Also, the plans can be cached and reused. Because the solution uses static SQL, it has no exposure to SQL injection. However, as you can imagine, it can be a maintenance nightmare. With P parameters, the number of procedures you will need is 2P. For example, with 8 parameters, you will have 256 procedures. Imagine that whenever you need to make a change, you will need to apply it to all procedures. Not a picnic.

Another strategy is to use dynamic SQL, but with a parameterized form of the code to promote efficient plan reuse behavior and avoid exposure to SQL injection. You construct the query string starting with the known SELECT and FROM clauses of the query. You start the WHERE clause with WHERE 1 = 1. This way, you don’t need to maintain a flag to know whether you need a WHERE clause and whether you need an AND operator before concatenating a predicate. The parser will eliminate this redundant predicate. Then, for each procedure parameter whose value was specified, you concatenate a parameterized predicate to the WHERE clause. Then you execute the parameterized query using sp_executesql, passing the query string to the @stmt input, declaring the four dynamic batch parameters in the @params input, and assigning the procedure parameters to the respective dynamic batch parameters.

Here’s the code to re-create the procedure based on the new strategy:

IF OBJECT_ID(N'dbo.GetOrders', N'P') IS NOT NULL DROP PROC dbo.GetOrders;

GO

CREATE PROC dbo.GetOrders

@orderid AS INT = NULL,

@custid AS INT = NULL,

@empid AS INT = NULL,

@orderdate AS DATE = NULL

AS

DECLARE @sql AS NVARCHAR(1000);

SET @sql =

N'SELECT orderid, custid, empid, orderdate, filler'

+ N' /* 27702431-107C-478C-8157-6DFCECC148DD */'

+ N' FROM dbo.Orders'

+ N' WHERE 1 = 1'

+ CASE WHEN @orderid IS NOT NULL THEN

N' AND orderid = @oid' ELSE N'' END

+ CASE WHEN @custid IS NOT NULL THEN

N' AND custid = @cid' ELSE N'' END

+ CASE WHEN @empid IS NOT NULL THEN

N' AND empid = @eid' ELSE N'' END

+ CASE WHEN @orderdate IS NOT NULL THEN

N' AND orderdate = @dt' ELSE N'' END;

EXEC sp_executesql

@stmt = @sql,

@params = N'@oid AS INT, @cid AS INT, @eid AS INT, @dt AS DATE',

@oid = @orderid,

@cid = @custid,

@eid = @empid,

@dt = @orderdate;

GO

Observe the GUID that I planted in a comment in the query. I created it by invoking the NEWID function. The point is to make it easy later to track down the cached plans that are executed by the procedure to demonstrate plan caching and reuse behavior. I’ll demonstrate this shortly.

Run the following code to execute the procedure twice with a filter based on the orderdate column, but with two different dates:

EXEC dbo.GetOrders @orderdate = '20140101';

EXEC dbo.GetOrders @orderdate = '20140102';

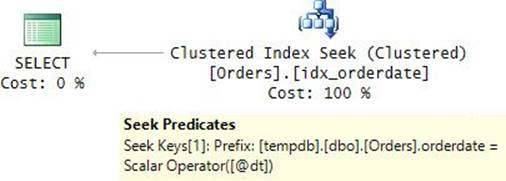

The plan for these executions is shown in Figure 9-4.

FIGURE 9-4 Plan for a dynamic query filtering by orderdate.

The plan is very efficient because the query that was optimized contains only the relevant predicates. Furthermore, unlike when using the RECOMPILE query option, there’s no parameter embedding here, which is good in this case. The parameterized form of the query gets optimized and cached, allowing plan reuse when the procedure is executed with the same combination of parameters. In the first execution of the procedure with a filter based on orderdate, SQL Server couldn’t find an existing plan in cache, so it optimized the query and cached the plan. For the second execution, it found a cached plan for the same query string and therefore reused it.

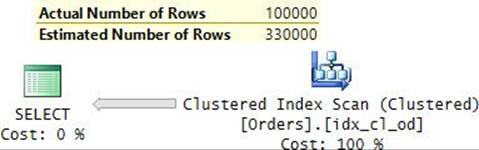

Execute the procedure again, this time with a filter based on the orderid column:

EXEC dbo.GetOrders @orderid = 10248;

This time the constructed query string contains a parameterized filter based on the orderid column. Because there was no plan in cache for this query string, SQL Server created a new plan and cached it too. Figure 9-5 shows the plan that SQL Server created for this query string.

FIGURE 9-5 Plan for a dynamic query filtering by orderid.

Again, you get a highly efficient plan for this query string, with only the relevant predicates in a parameterized form. Now you have two plans in cache for the two unique query strings that were executed by the stored procedure. Use the following query to examine the cached plans and their reuse behavior, filtering only plans for queries that contain the GUID you planted in the code:

SELECT usecounts, text

FROM sys.dm_exec_cached_plans AS CP

CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) AS ST

WHERE ST.text LIKE '%27702431-107C-478C-8157-6DFCECC148DD%'

AND ST.text NOT LIKE '%sys.dm_exec_cached_plans%'

AND CP.objtype = 'Prepared';

This code generates the following output:

usecounts text

----------- -----------------------------------------------------

1 (@oid AS INT, @cid AS INT, @eid AS INT, @dt AS DATE)

SELECT orderid, custid, empid, orderdate, filler

/* 27702431-107C-478C-8157-6DFCECC148DD */

FROM dbo.Orders WHERE 1 = 1 AND orderid = @oid

2 (@oid AS INT, @cid AS INT, @eid AS INT, @dt AS DATE)

SELECT orderid, custid, empid, orderdate, filler

/* 27702431-107C-478C-8157-6DFCECC148DD */

FROM dbo.Orders WHERE 1 = 1 AND orderdate = @dt

Observe that two plans were created because there were two unique query strings—one with a filter on orderid, so far used once, and another with a filter on orderdate, so far used twice.

This solution supports efficient plans and efficient plan reuse. Because the dynamic code uses parameters rather than injecting the constants into the code, it is not exposed to SQL injection attacks. Compared to the solution with the multiple procedures, this one is much easier to maintain. It certainly seems like this solution has strong advantages compared to the others.

There’s an excellent paper written on the topic of dynamic search conditions by my friend and fellow SQL Server MVP Erland Sommarskog. If this topic is important to you, be sure to check out Erland’s paper. You can find it at his website: http://www.sommarskog.se/dyn-search.html.

Dynamic sorting

Similar to dynamic search conditions, dynamic sorting is another common application need. As an example, suppose you need to develop a stored procedure called dbo.GetSortedShippers in the TSQLV3 database. The procedure accepts an input parameter called @colname, and it is supposed to return the rows from the Sales.Shippers table sorted by the input column name.

One of the most common first attempts at a solution for the task is to use a static query with an ORDER BY clause based on a CASE expression that looks like this:

ORDER BY

CASE @colname

WHEN N'shipperid' THEN shipperid

WHEN N'companyname' THEN companyname

WHEN N'phone' THEN phone

END

If you try running the procedure passing N‘companyname’ as input, you get a type-conversion error. The reason for the error is that CASE is an expression, and as such, the type of the result is determined by the data type precedence among the expression’s operands (the possible returned values in this case). Among the types of the three operands, INT is the one with the highest data type precedence; hence, it’s predetermined that the CASE expression’s type is INT. So when you pass N‘companyname’ as input, SQL Server tries to convert the companyname values to integers and, of course, fails.

One possible workaround is to add the following ELSE clause to the expression:

ORDER BY

CASE @colname

WHEN N'shipperid' THEN shipperid

WHEN N'companyname' THEN companyname

WHEN N'phone' THEN phone

ELSE CAST(NULL AS SQL_VARIANT)

END

This clause is not supposed to be activated because the procedure will be called by the application with names of columns that exist in the table; however, from SQL Server’s perspective, it theoretically could be activated. The SQL_VARIANT type is considered stronger than the other types; therefore, it’s chosen as the CASE expression’s type. The thing with the SQL_VARIANT type is that it can hold within it most other base types, including all those that participate in our expression, while preserving the information about the base type and its ordering semantics. So when the user calls the procedure with, say, N‘companyname’ as input, the CASE expression returns the companyname values as SQL_VARIANT values with NVARCHAR as the base type. So you get correct NVARCHAR-based ordering semantics.

With this trick, you get a solution that is correct. However, similar to non SARGable filters, because you’re applying manipulation to the ordering element, the optimizer will not rely on index order even if you have a supporting covering index. Curiously, as described by Paul White in the article “Parameter Sniffing, Embedding, and the RECOMPILE Options” (http://sqlperformance.com/2013/08/t-sql-queries/parameter-sniffing-embedding-and-the-recompile-options), as long as you use a separate CASE expression for each input and add the RECOMPILE query option, you get a plan that can rely on index order. That’s thanks to the parameter embedding employed by the parser that’s similar to what I explained in the discussion about dynamic search conditions. So, instead of using one CASE expression, you will use multiple expressions, like so:

ORDER BY

CASE WHEN @colname = N'shipperid' THEN shipperid END,

CASE WHEN @colname = N'companyname' THEN companyname END,

CASE WHEN @colname = N'phone' THEN phone END

OPTION(RECOMPILE)

Say the user executes the procedure passing N‘companyname’ as input; after parsing, the ORDER BY clause becomes the following (although NULL constants are normally not allowed in the ORDER BY directly): ORDER BY NULL, companyname, NULL. As long as you have a covering index in place, the optimizer can certainly rely on its order and avoid explicit sorting in the plan.

For the procedure to be more flexible, you might also want to support a second parameter called @sortdir to allow the user to specify the sort direction (‘A’ for ascending and ‘D’ for descending). Supporting this second parameter will require you to double the number of CASE expressions in the query.

Run the following code to create the GetSortedShippers procedure based on this strategy:

USE TSQLV3;

IF OBJECT_ID(N'dbo.GetSortedShippers', N'P') IS NOT NULL DROP PROC dbo.GetSortedShippers;

GO

CREATE PROC dbo.GetSortedShippers

@colname AS sysname, @sortdir AS CHAR(1) = 'A'

AS

SELECT shipperid, companyname, phone

FROM Sales.Shippers

ORDER BY

CASE WHEN @colname = N'shipperid' AND @sortdir = 'A' THEN shipperid END,

CASE WHEN @colname = N'companyname' AND @sortdir = 'A' THEN companyname END,

CASE WHEN @colname = N'phone' AND @sortdir = 'A' THEN phone END,

CASE WHEN @colname = N'shipperid' AND @sortdir = 'D' THEN shipperid END DESC,

CASE WHEN @colname = N'companyname' AND @sortdir = 'D' THEN companyname END DESC,

CASE WHEN @colname = N'phone' AND @sortdir = 'D' THEN phone END DESC

OPTION (RECOMPILE);

GO

Run the following code to test the procedure:

EXEC dbo.GetSortedShippers N'shipperid', N'D';

After parsing, the ORDER BY clause became the following:

ORDER BY NULL, NULL, NULL, shipperid DESC, NULL DESC, NULL DESC

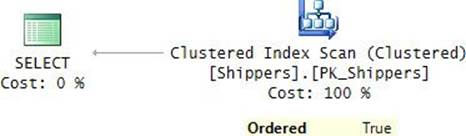

The plan for this execution is shown in Figure 9-6.

FIGURE 9-6 Plan for a query with multiple CASE expressions and RECOMPILE.

Observe that the plan performs an ordered scan of the clustered index on shipperid, avoiding the need for explicit sorting.

This solution produces efficient plans. Because it uses a static query, it’s not exposed to SQL injection attacks. However, it has two drawbacks. First, by definition, it does not reuse query plans. Second, it doesn’t lend itself to supporting multiple sort columns as inputs; you’d just end up with many CASE expressions.

A solution that doesn’t have these drawbacks is one based on dynamic SQL. You construct a query string with an ORDER BY clause with an injected sort column and direction. The obvious challenge with this solution is the exposure to SQL injection. Then, again, table structures tend to be pretty stable. So you could incorporate a test to ensure that the input column name appears in the hard-coded set of sort columns you want to support from the table. If the column doesn’t appear in the set, you abort the query, suspecting an attempted SQL injection. If the set of supported sort columns needs to change, you alter the procedure definition. Most likely, such changes will be infrequent enough to make this approach viable.

Use the following code to re-create the procedure based on this strategy:

IF OBJECT_ID(N'dbo.GetSortedShippers', N'P') IS NOT NULL DROP PROC dbo.GetSortedShippers;

GO

CREATE PROC dbo.GetSortedShippers

@colname AS sysname, @sortdir AS CHAR(1) = 'A'

AS

IF @colname NOT IN(N'shipperid', N'companyname', N'phone')

THROW 50001, 'Column name not supported. Possibly a SQL injection attempt.', 1;

DECLARE @sql AS NVARCHAR(1000);

SET @sql = N'SELECT shipperid, companyname, phone

FROM Sales.Shippers

ORDER BY '

+ QUOTENAME(@colname) + CASE @sortdir WHEN 'D' THEN N' DESC' ELSE '' END + ';';

EXEC sys.sp_executesql @stmt = @sql;

GO

The QUOTENAME function is used to delimit the sort column name with square brackets. In our specific example, delimiters aren’t required, but in case you need to support column names that are considered irregular identifiers, you will need the delimiters.

Use the following code to test the procedure:

EXEC dbo.GetSortedShippers N'shipperid', N'D';

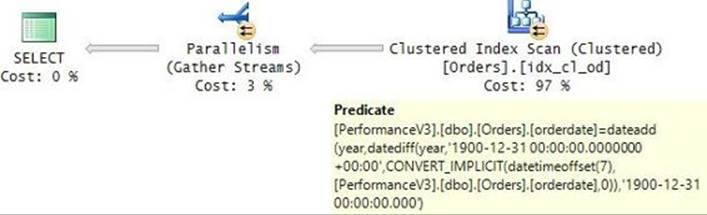

The plan for this execution is shown in Figure 9-7.

FIGURE 9-7 Plan for a dynamic query.

It’s an efficient plan that relies on index order. Unlike the previous solution, this one can efficiently reuse previously cached plans. This solution can also be easily extended if you need to support multiple input sort columns, as the following revised definition demonstrates:

IF OBJECT_ID(N'dbo.GetSortedShippers', N'P') IS NOT NULL DROP PROC dbo.GetSortedShippers;

GO

CREATE PROC dbo.GetSortedShippers

@colname1 AS sysname, @sortdir1 AS CHAR(1) = 'A',

@colname2 AS sysname = NULL, @sortdir2 AS CHAR(1) = 'A',

@colname3 AS sysname = NULL, @sortdir3 AS CHAR(1) = 'A'

AS

IF @colname1 NOT IN(N'shipperid', N'companyname', N'phone')

OR @colname2 IS NOT NULL AND @colname2 NOT IN(N'shipperid', N'companyname', N'phone')

OR @colname3 IS NOT NULL AND @colname3 NOT IN(N'shipperid', N'companyname', N'phone')

THROW 50001, 'Column name not supported. Possibly a SQL injection attempt.', 1;

DECLARE @sql AS NVARCHAR(1000);

SET @sql = N'SELECT shipperid, companyname, phone

FROM Sales.Shippers

ORDER BY '

+ QUOTENAME(@colname1) + CASE @sortdir1 WHEN 'D' THEN N' DESC' ELSE '' END

+ ISNULL(N',' + QUOTENAME(@colname2) + CASE @sortdir2 WHEN 'D' THEN N' DESC' ELSE '' END, N'')

+ ISNULL(N',' + QUOTENAME(@colname3) + CASE @sortdir3 WHEN 'D' THEN N' DESC' ELSE '' END, N'')

+ ';';

EXEC sys.sp_executesql @stmt = @sql;

GO

Regarding security, it’s important to know that normally the user executing the stored procedure will need direct permissions to run the code that executes dynamically. If you don’t want to grant such direct permissions, you can use the EXECUTE AS clause to impersonate the security context of the user specified in that clause. You can find the details about how to do this in Books Online: http://msdn.microsoft.com/en-us/library/ms188354.aspx.

As an extra resource, you can find excellent coverage of dynamic SQL in the paper “The Curse and Blessings of Dynamic SQL” by Erland Sommarskog: http://www.sommarskog.se/dynamic_sql.html.

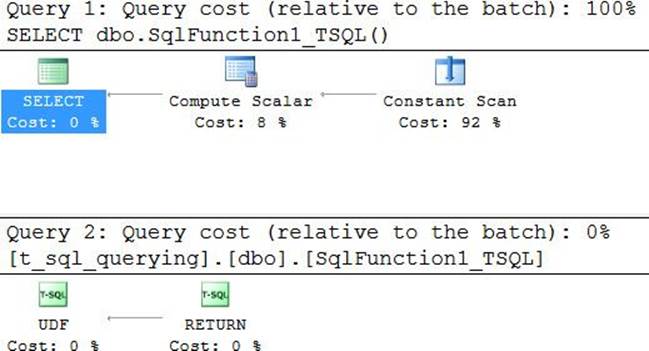

User-defined functions

User-defined functions (UDFs) give you powerful encapsulation and reusability capabilities. They are convenient to use because they can be embedded in queries. However, they also incur certain performance-related penalties you should be aware of. For this purpose, I’m referring to scalar UDFs and multistatement, table-valued functions (TVFs). I’m not referring to inline TVFs, which I covered in Chapter 3, “Multi-table queries.” Inline TVFs, by definition, get inlined, so they don’t incur any performance penalties.

This section covers T-SQL UDFs. Later, the chapter covers CLR UDFs in the “SQLCLR programming” section.

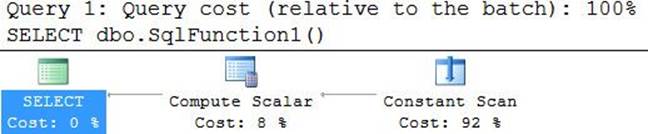

Scalar UDFs

Scalar UDFs accept arguments and return a scalar value. They can be incorporated where single-valued expressions are allowed—for example, in queries, constraints, and computed columns.

T-SQL UDFs (not just scalar) are limited in a number of ways. They are not allowed to have side effects on the database or the system. Therefore, you are not allowed to apply data or structural changes to database objects other than variables (including table variables) defined within the function. You’re also not allowed to invoke activities that have indirect side effects, like when invoking the RAND and NEWID functions. For example, one invocation of the RAND function will determine the seed that will be used in a subsequent seedless invocation of RAND. You’re not allowed to use dynamic SQL, and you’re not allowed to include exception handling with the TRY-CATCH construct.

Scalar T-SQL UDFs are implemented in two main ways. In one, the function invokes a single expression that returns a scalar result. In the other, the function invokes multiple statements and eventually returns a scalar result. Either way, you need to be aware that there are performance penalties associated with the use of scalar UDFs. I’ll start with an example for a scalar UDF that is based on a single expression. I’ll compare the performance of a query that contains the expression directly to one where you encapsulate the expression in a UDF.

The following query filters only orders that were placed on the last day of the year:

USE PerformanceV3;

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

WHERE orderdate = DATEADD(year, DATEDIFF(year, '19001231', orderdate), '19001231');

If you’re not familiar with the method used here to compute the last day of the year, you can find the details in Chapter 7, “Working with date and time.”

On my system, this query got the execution plan shown in Figure 9-8.

FIGURE 9-8 Plan for a query without a function.

Observe that the plan uses a parallel scan of the clustered index, mainly to process the filter using multiple threads. When running this query against hot cache in my system, the query took 281 milliseconds to complete, and it used 704 milliseconds of CPU time.

To check the impact of parallelism here, I forced a serial plan by specifying the hint MAXDOP 1, like so:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

WHERE orderdate = DATEADD(year, DATEDIFF(year, '19001231', orderdate), '19001231')

OPTION(MAXDOP 1);

This time, it took the query 511 milliseconds to complete. So, on my system, the execution time of the parallel plan was half the execution time of the serial plan.

Without a doubt, from a programming perspective, it’s beneficial to encapsulate the expression that computes the end-of-year date in a scalar UDF. This encapsulation helps you hide the complexity and enables reusability. Run the following code to encapsulate the expression in theEndOfYear UDF.

IF OBJECT_ID(N'dbo.EndOfYear') IS NOT NULL DROP FUNCTION dbo.EndOfYear;

GO

CREATE FUNCTION dbo.EndOfYear(@dt AS DATE) RETURNS DATE

AS

BEGIN

RETURN DATEADD(year, DATEDIFF(year, '19001231', @dt), '19001231');

END;

GO

Now run the query after you replace the direct expression with a call to the UDF, like so:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

WHERE orderdate = dbo.EndOfYear(orderdate);

There’s no question about the programmability benefits you get here compared to not using the UDF. The code is clearer and more concise. However, the impact of the use of the UDF on performance is quite severe. Observe the plan for this query shown in Figure 9-9.

FIGURE 9-9 Plan for a query with a scalar UDF.

Unfortunately, even when the UDF is based on a single expression, SQL Server doesn’t inline it. This means that the UDF is invoked per row in the underlying table, as you can see in the Filter operator’s Predicate property in the plan. In our case, the UDF gets invoked 1,000,000 times. There’s overhead in each invocation, and with a million invocations this overhead amounts to something substantial. Also, observe that the query plan is serial. Any use of T-SQL scalar UDFs prevents parallelism.

It took this query over four seconds to complete on my system, with a total CPU cost of over four seconds. That’s about 16 times more than the query without the UDF (with the default parallel plan), and 8 times more than when I forced the serial plan. Either way, as you can see, the penalty for using the UDF is quite high.

Curiously, there’s a workaround that will allow you to encapsulate your logic in a function while not incurring the performance penalty. Instead of using a scalar UDF, use an inline TVF. The tricky part is that an inline TVF has to return a query. So specify the expression along with a column alias in a FROM-less SELECT query, and return that query from the function. Here’s the code to drop the scalar UDF and create an inline TVF instead:

IF OBJECT_ID(N'dbo.EndOfYear') IS NOT NULL DROP FUNCTION dbo.EndOfYear;

GO

CREATE FUNCTION dbo.EndOfYear(@dt AS DATE) RETURNS TABLE

AS

RETURN

SELECT DATEADD(year, DATEDIFF(year, '19001231', @dt), '19001231') AS endofyear;

GO

To invoke a table function in a query and pass a column from the outer table as input, you need to use either the explicit APPLY operator or an implicit one in a subquery. Here’s an example using the implicit form:

SELECT orderid, custid, empid, shipperid, orderdate, filler

FROM dbo.Orders

WHERE orderdate = (SELECT endofyear FROM dbo.EndOfYear(orderdate));

This time, the expression in the function does get inlined. SQL Server creates the same plan as the one I showed earlier in Figure 9-8 for the original query without the UDF. Thus, there’s no performance penalty for using the inline TVF.

As for scalar UDFs that are based on multiple statements, those are usually used to implement iterative logic. As discussed earlier in the book in Chapter 2, “Query tuning,” T-SQL iterations are slow. So, especially when you need to apply many iterations, you’ll tend to get better performance using a CLR UDF instead of a T-SQL one. Furthermore, as long as a CLR UDF isn’t marked as applying data access, it doesn’t prevent parallelism.

As an example for a T-SQL UDF that uses iterative logic, consider the following definition of the RemoveChars UDF:

USE TSQLV3;

IF OBJECT_ID(N'dbo.RemoveChars', N'FN') IS NOT NULL DROP FUNCTION dbo.RemoveChars;

GO

CREATE FUNCTION dbo.RemoveChars(@string AS NVARCHAR(4000), @pattern AS NVARCHAR(4000))

RETURNS NVARCHAR(4000)

AS

BEGIN

DECLARE @pos AS INT;

SET @pos = PATINDEX(@pattern, @string);

WHILE @pos > 0

BEGIN

SET @string = STUFF(@string, @pos, 1, N'');

SET @pos = PATINDEX(@pattern, @string);

END;

RETURN @string;

END;

GO

This function accepts two parameters as inputs. One is @string, representing an input string. Another is @pattern, representing the pattern of a single character. The UDF’s purpose is to return a string representing the input string after the removal of all occurrences of the input character pattern.

The function’s code starts by using the PATINDEX function to compute the first position of the character pattern in @string and stores it in @pos. The code then enters a loop that keeps running while there are still occurrences of the character pattern in the string. In each iteration, the code uses the STUFF function to remove the first occurrence from the string and then looks for the position of the next occurrence. Once the loop is done, the code returns what’s left in @string.

Here’s an example for using the UDF in a query against the Sales.Customers table to return clean phone numbers (by removing all nonmeaningful characters):

SELECT custid, phone, dbo.RemoveChars(phone, N'%[^0-9]%') AS cleanphone

FROM Sales.Customers;

This function returns the following output:

custid phone cleanphone

------- --------------- -----------

1 030-3456789 0303456789

2 (5) 789-0123 57890123

3 (5) 123-4567 51234567

4 (171) 456-7890 1714567890

5 0921-67 89 01 0921678901

6 0621-67890 062167890

7 67.89.01.23 67890123

8 (91) 345 67 89 913456789

9 23.45.67.89 23456789

10 (604) 901-2345 6049012345

...

You will find coverage of CLR UDFs later in the chapter in the “SQLCLR programming” section. In that section, you will find the definition of a CLR UDF called RegExReplace. This UDF applies regex-based replacement to the input string based on the input regex pattern. With this UDF, you handle the task using the following code:

SELECT custid, phone, dbo.RegExReplace(N'[^0-9]', phone, N'') AS cleanphone

FROM Sales.Customers;

You get two main advantages by using the CLR-based solution rather than the T-SQL solution. First, regular expressions are much richer compared to the primitive patterns you can use with the PATINDEX function and the LIKE predicate. Second, you get better performance. I tested the query against a table with 1,000,000 rows. Even though there aren’t many iterations required to clean phone numbers, the query ran for 16 seconds with the T-SQL UDF and 8 seconds with the CLR one.

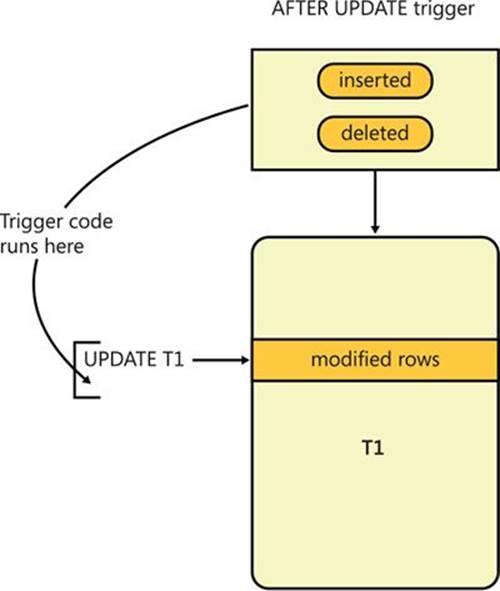

Multistatement TVFs

Multistatement TVFs have multiple statements in their body, and they return a table variable as their output. The returned table variable is defined in the function’s header. The purpose of the body is to fill the table variable with data. When you query such a function, SQL Server declares the table variable, runs the flow to fill it with data, and then hands it to the calling query.

To demonstrate using multistatement TVFs, I’ll use a table called Employees that you create and populate by running the following code:

SET NOCOUNT ON;

USE tempdb;

GO

IF OBJECT_ID(N'dbo.Employees', N'U') IS NOT NULL DROP TABLE dbo.Employees;

GO

CREATE TABLE dbo.Employees

(

empid INT NOT NULL CONSTRAINT PK_Employees PRIMARY KEY,

mgrid INT NULL CONSTRAINT FK_Employees_Employees REFERENCES dbo.Employees,

empname VARCHAR(25) NOT NULL,

salary MONEY NOT NULL,

CHECK (empid <> mgrid)

);

INSERT INTO dbo.Employees(empid, mgrid, empname, salary)

VALUES(1, NULL, 'David', $10000.00),

(2, 1, 'Eitan', $7000.00),

(3, 1, 'Ina', $7500.00),

(4, 2, 'Seraph', $5000.00),

(5, 2, 'Jiru', $5500.00),

(6, 2, 'Steve', $4500.00),

(7, 3, 'Aaron', $5000.00),

(8, 5, 'Lilach', $3500.00),

(9, 7, 'Rita', $3000.00),

(10, 5, 'Sean', $3000.00),

(11, 7, 'Gabriel', $3000.00),

(12, 9, 'Emilia' , $2000.00),

(13, 9, 'Michael', $2000.00),

(14, 9, 'Didi', $1500.00);

CREATE UNIQUE INDEX idx_unc_mgr_emp_i_name_sal ON dbo.Employees(mgrid, empid)

INCLUDE(empname, salary);

Following is the definition of a function called GetSubtree:

IF OBJECT_ID(N'dbo.GetSubtree', N'TF') IS NOT NULL DROP FUNCTION dbo.GetSubtree;

GO

CREATE FUNCTION dbo.GetSubtree (@mgrid AS INT, @maxlevels AS INT = NULL)

RETURNS @Tree TABLE

(

empid INT NOT NULL PRIMARY KEY,

mgrid INT NULL,

empname VARCHAR(25) NOT NULL,

salary MONEY NOT NULL,

lvl INT NOT NULL

)

AS

BEGIN

DECLARE @lvl AS INT = 0;

-- Insert subtree root node into @Tree

INSERT INTO @Tree

SELECT empid, mgrid, empname, salary, @lvl

FROM dbo.Employees

WHERE empid = @mgrid;

WHILE @@ROWCOUNT > 0 AND (@lvl < @maxlevels OR @maxlevels IS NULL)

BEGIN

SET @lvl += 1;

-- Insert children of nodes from prev level into @Tree

INSERT INTO @Tree

SELECT E.empid, E.mgrid, E.empname, E.salary, @lvl

FROM dbo.Employees AS E

INNER JOIN @Tree AS T

ON E.mgrid = T.empid AND T.lvl = @lvl - 1;

END;

RETURN;

END;

GO

The function returns a subtree of employees below an input manager (@mgrid), with an optional input level limit (@maxlevels). The function defines a table variable called @Tree as the returned output. The variable holds employee information as well as a column called lvl representing the distance in levels from the input subtree root manager (0 for the root, 1 for the level below, and so on).

The code in the function’s body starts by declaring a level counter called @lvl and initializes it with zero. The code then inserts the row for the input manager into the table variable along with the just-initialized level zero. The code then runs a loop that keeps iterating as long as the last insert has at least one row and, if the user provided a level limit, that limit wasn’t exceeded. In each iteration, the code increments the level counter and then inserts into @Tree the next level of subordinates. Once the loop is done, the code returns. At that point, the function hands the table variable @Tree to the calling query.

As an example of using the function, the following code requests the subtree of manager 3 without a level limit:

SELECT empid, empname, mgrid, salary, lvl

FROM GetSubtree(3, NULL);

This code generates the following output:

empid empname mgrid salary lvl

------ -------- ------ -------- ----

3 Ina 1 7500.00 0

7 Aaron 3 5000.00 1

9 Rita 7 3000.00 2

11 Gabriel 7 3000.00 2

12 Emilia 9 2000.00 3

13 Michael 9 2000.00 3

14 Didi 9 1500.00 3

One thing to remember about the use of multistatement TVFs is that they return a table variable. Remember that, unlike it does with temporary tables, SQL Server doesn’t maintain histograms for table variables. As a result, when the optimizer needs to make cardinality estimates related to the table variable, it is more limited in the tools that it can use. The lack of histograms can result in suboptimal choices. This is true both for the queries against the table variable within the function’s body and for the outer queries against the table function. If you identify performance problems that you connect to the lack of histograms, you’ll need to reevaluate your solution.

An alternative option is to use a stored procedure with temporary tables. The downside with this approach is that it’s not as convenient to interact with the result of a stored procedure as querying the result of a table function. You will need to figure out your priorities and do some testing to see if there’s a performance difference and how big it is.

Stored procedures

In this section, I cover the use of T-SQL stored procedures. As mentioned, because the focus of the book is querying, I will cover mainly how to use stored procedures to execute queries and the tuning aspects of the code. I’ll first describe the advantages stored procedures have over ad hoc code. I’ll then cover the way SQL Server handles compilations, recompilations, and the reuse of execution plans. I’ll also cover the use of table types and table-valued parameters, as well as the EXECUTE WITH RESULT SETS clause.

Stored procedures are an important programming tool that gives you a number of benefits compared to implementing your solutions with ad hoc code. Like in other programming environments, encapsulating the logic in a routine enables reusability and allows you to hide the complexity.

Compared to deploying changes in the application, deploying changes in a stored procedure is much simpler. Whether you have a bug to fix or a more efficient way to achieve the task, you issue an ALTER PROC command, and everyone immediately starts using the altered version.

With stored procedures, you tend to reduce a lot of the network traffic. All you pass through the network is the procedure name and the input parameters. The logic is executed in the database engine, and only the final outcome needs to be transmitted back to the caller. Implementing the logic in the application tends to result in more round trips between the application and the database, causing more network traffic.

The use of parameterized queries in stored procedures promotes efficient plan caching and reuse behavior. That’s the focus of the next section. I should note, though, that this capability is not exclusive to queries in stored procedures. You can get similar benefits when using sp_executesqlwith parameterized queries.

Compilations, recompilations, and reuse of execution plans

When you need to tune stored procedures that have performance problems, you should focus your efforts on two main areas. One is tuning the queries within the procedure based on what you’ve learned so far in the book. For this purpose, it doesn’t matter if the query resides in a stored procedure or not. Another is related to plan caching, reuse, parameter sniffing, variable sniffing, and recompilations.

![]() Note

Note

The examples in this section assume you have a clean copy of the sample database PerformanceV3. If you don’t, run the script PerformanceV3.sql from the book’s source code first.

Reuse of execution plans and parameter sniffing

When you create a stored procedure, SQL Server doesn’t optimize the queries within it. It does so the first time you execute the procedure. The initial compilation, which mainly involves optimization of the queries, takes place at the entire batch (procedure) level. SQL Server caches the query plans to enable reuse in subsequent executions of the procedure. When SQL Server triggers a recompilation, it does so at the statement level.

The reason for plan caching and reuse is to save the time, CPU, and memory resources that are involved in the creation of a new plan. How long it takes SQL Server to create a new plan varies. I’ve seen plans that took a few milliseconds to create and also plans that took minutes to create. SQL Server takes the sizes of the tables involved into consideration to determine time and cost thresholds for the optimization process. You can find details about the compilation in the properties of the root node (in a SELECT query, it’s the SELECT node) in a graphical or XML query plan. You will find the following properties: CompileTime (in ms), CompileCPU (in ms), CompileMemory (in KB), Optimization Level (TRIVIAL or FULL), Reason For Early Termination (Good Enough Plan Found if a plan with a cost below the cost threshold is found, or Time Out if the time threshold is reached), and others.

The assumption that SQL Server makes is that if a valid cached plan exists, normally it’s beneficial to reuse it. So, by default, it will try to. Under certain conditions, SQL Server will trigger a recompilation, causing a new plan to be created. I’ll discuss this topic in the section “Recompilations.”

I’ll use a stored procedure called GetOrders to demonstrate plan caching and reuse behavior. Run the following code to create the procedure in the PerformanceV3 database:

USE PerformanceV3;

IF OBJECT_ID(N'dbo.GetOrders', N'P') IS NOT NULL DROP PROC dbo.GetOrders;

GO

CREATE PROC dbo.GetOrders( @orderid AS INT )

AS

SELECT orderid, custid, empid, orderdate, filler

/* 703FCFF2-970F-4777-A8B7-8A87B8BE0A4D */

FROM dbo.Orders

WHERE orderid >= @orderid;

GO

The procedure accepts an order ID as input and returns information about all orders that have an order ID that is greater than or equal to the input one. I planted a GUID as a comment in the code to easily track down the plans in cache that are associated with this query.

There is a nonclustered, noncovering index called PK_Orders defined on the orderid column as the key. Depending on the selectivity of the filter, different plans are considered optimal. For a filter with high selectivity, the optimal plan is one that uses the PK_Orders index and applies lookups. For low selectivity, a serial plan that scans the clustered index is optimal. For a filter with medium selectivity, a parallel plan that scans the clustered index is optimal. (There needs to be few enough filtered rows that when the gather streams cost is added to the scan cost it does not exceed the serial plan cost.)

Say you execute the procedure and currently there’s no reusable cached plan for the query. SQL Server sniffs the current parameter value (also known as the parameter compiled value) and optimizes the plan accordingly. SQL Server then caches the plan and will reuse it for subsequent executions of the procedure until the conditions for a recompilation are met. This strategy assumes that the sniffed value represents the typical input.

To see a demonstration of this behavior, enable the inclusion of the actual execution plan in SQL Server Management Studio (SSMS) and execute the procedure for the first time with a selective input, like so:

EXEC dbo.GetOrders @orderid = 999991;

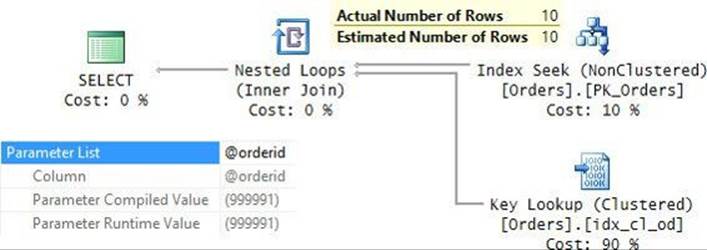

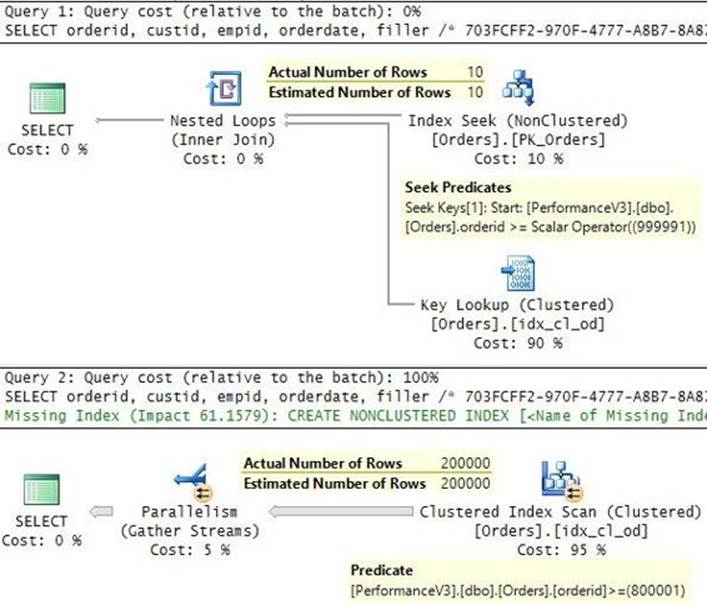

The plan for this execution is shown in Figure 9-10.

FIGURE 9-10 Plan for the first execution of the procedure.

Observe in the properties of the root node that both the compiled value and the run-time value of the @orderid parameter is 999991. Based on this sniffed value, the cardinality estimate for the filter is 10 rows. Consequently, the optimizer chose a plan that performs a seek and a range scan in the index PK_Orders and applies lookups for the qualifying rows. With 10 lookups, the execution of this plan performed only 33 logical reads in total.

If, indeed, subsequent executions of the procedure will be done with high selectivity, the default plan reuse behavior will be beneficial to you. To see an example, execute the procedure again providing another value with high selectivity:

EXEC dbo.GetOrders @orderid = 999996;

Figure 9-11 shows the graphical execution plan for this execution.

FIGURE 9-11 Plan for the second execution of the procedure.

Observe that the parameter compiled value and, hence, the estimated number of rows returned by the Index Seek operation are unchanged because they reflect the compile-time information. After all, SQL Server reused the cached plan. The parameter run-time value this time is 999996, and the actual number of rows returned is 5. With 5 matches, the execution of this plan performs only 18 reads.

You can analyze plan reuse behavior by querying the plan cache, filtering only plans for the query containing our planted GUID, like so:

SELECT CP.usecounts, CP.cacheobjtype, CP.objtype, CP.plan_handle, ST.text

FROM sys.dm_exec_cached_plans AS CP

CROSS APPLY sys.dm_exec_sql_text(CP.plan_handle) AS ST

WHERE ST.text LIKE '%703FCFF2-970F-4777-A8B7-8A87B8BE0A4D%'

AND ST.text NOT LIKE '%sys.dm_exec_cached_plans%';

At this point, the usecounts column should show a use count of 2 for our query plan.

![]() Note

Note

In an active system with lots of cached query plans, this query can be expensive because it needs to scan the text to look for the GUID. Starting with SQL Server 2012, you can label your queries using the query hint OPTION(LABEL = ‘some label’). Unfortunately, though, this label is not exposed in dynamic management views (DMVs) like sys.dm_exec_cached_plans as a separate column. I hope this capability will be added in the future to allow interesting queries to be filtered in a less expensive way. See the following Microsoft Connect item with such a feature enhancement request to Microsoft: https://connect.microsoft.com/SQLServer/feedback/details/833055.

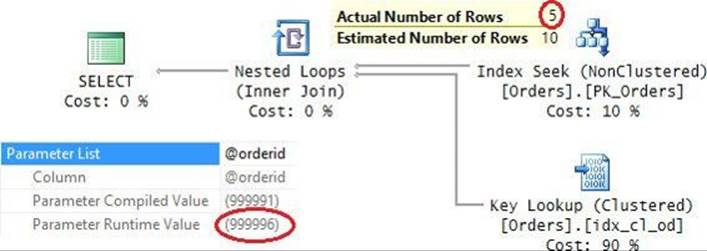

As you can see, plan reuse is beneficial when the different executions of the procedure provide inputs of a similar nature. But what if that’s not the case? For example, execute the procedure with an input parameter that has medium selectivity:

EXEC dbo.GetOrders @orderid = 800001;

Normally, this query would benefit from a plan that performs a full scan of the clustered index. But because there’s a plan in cache, it’s reused, as you can see in Figure 9-12.

FIGURE 9-12 Plan for the third execution of the procedure.

The parameter compiled value is the one provided when the plan was created (999991), but the run-time value is 800001. The cost percentages of the operators and estimated row counts are the same as when the plan was created because they reflect the parameter compiled value. But the arrows are much thicker than before because they reflect the actual row counts in this execution. The Index Seek operator returns 200,000 rows, and therefore the plan performs that many key lookups. This plan execution performed 600,524 logical reads on my system. That’s a lot compared to how much a clustered index scan would cost you!

The query in our stored procedure is pretty simple; all it has is just a filter and a SELECT list with a few columns. Still, this simple example helps illustrate the concept of inaccurate cardinality estimates and some of its possible implications, like choosing an index seek and lookups versus an index scan, and choosing a serial plan versus a parallel one. You can feel free to experiment with more query elements to demonstrate other implications. For example, if you add grouping and aggregation, you will also be able to observe the implications of inaccurate cardinality estimates on choosing the aggregate algorithm, as well as on computing the required memory grant (for sort and hash operators). Use the following code to alter the procedure, adding grouping and aggregation to the query:

ALTER PROC dbo.GetOrders( @orderid AS INT )

AS

SELECT empid, COUNT(*) AS numorders

/* 703FCFF2-970F-4777-A8B7-8A87B8BE0A4D */

FROM dbo.Orders

WHERE orderid >= @orderid

GROUP BY empid;

GO

Normally, with high selectivity (for example, with the input @orderid = 999991) the optimal plan is a serial plan with an index seek and key lookups, a sort operator, and an order-based aggregate (Stream Aggregate). With medium selectivity (for example, with the input @orderid = 800001), the optimal plan is a parallel plan with an index scan, a local hash aggregate, and a global order aggregate.

Which plan you will get in practice depends on the input you provide in the first execution. For example, execute the procedure first with high selectivity. You will get a serial plan with an index seek and key lookups, a sort operator, and an order-based aggregate. Also, the query will request a small memory grant for the sort activity. (See the properties of the root SELECT node of the actual query plan.) This plan will be cached. Then execute the procedure again with medium selectivity. The cached plan will be reused. Not only that, the cached plan will be inefficient for the second execution, very likely the memory grant for the sort operation won’t be sufficient, and it will have to spill to tempdb. (The Sort operator in the actual query plan will show a warning to that effect.)

Adding grouping and aggregation to the query is just one idea of how you can experiment with different query elements to observe the implications of inaccurate cardinality estimates. Of course, there are many other things you can try, like joins, ordering, and so on.

Preventing reuse of execution plans

When facing situations like in the previous section, where plan reuse is not beneficial to you, you usually can do a number of things. If all you have is just the query with the filter (no grouping and aggregation or other elements), one option is to create a covering index, causing the plan for the query to be a trivial one. The optimizer will choose a plan that performs a seek and a range scan in the covering index regardless of the cardinality estimate. Regardless of the selectivity of the input, the same plan is always the optimal one. But you might not be able to create such an index. Also, if your query does involve additional activities like grouping and aggregating, joining, and so on, even with a covering index an accurate cardinality estimate might still be important to determine things like which algorithms to use and how much of a memory grant is required.

Another option is to force SQL Server to recompile the query in every execution by adding the RECOMPILE query hint, like so:

ALTER PROC dbo.GetOrders( @orderid AS INT )

AS

SELECT orderid, custid, empid, orderdate, filler

/* 703FCFF2-970F-4777-A8B7-8A87B8BE0A4D */

FROM dbo.Orders

WHERE orderid >= @orderid

OPTION(RECOMPILE);

GO

The tradeoff between using the RECOMPILE query option and reusing plans is that you’re likely to get efficient plans at the cost of compiling the query in every execution. You should be able to tell whether it makes sense for you to use this approach based on the benefit versus cost in your case.

![]() Tip

Tip

In case you are familiar with the procedure-level RECOMPILE option, I should point out that it’s less recommended to use. For one, it will affect all queries in the procedure, and perhaps you want to prevent reuse only for particular queries. For another, it doesn’t benefit from parameter embedding (replacing the parameters with constants) like the statement option does. I described this capability earlier in the chapter in the section “Dynamic search conditions.”

In terms of caching, an interesting difference between the two is that when using the procedure-level option, SQL Server doesn’t cache the plan. When using the statement-level option, SQL Server does cache the last plan, but it doesn’t reuse that plan. So you will find information about the last plan in DMVs like sys.dm_exec_cached_plans and sys.dm_exec_query_stats.

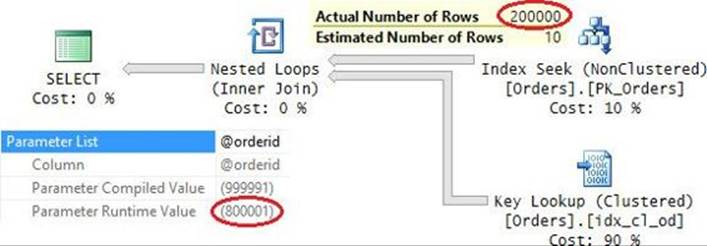

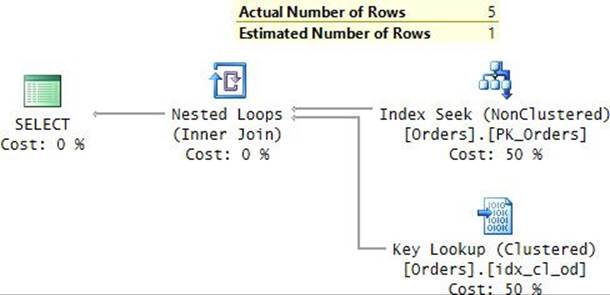

To test the revised procedure, execute it with both high and medium selectivity:

EXEC dbo.GetOrders @orderid = 999991;

EXEC dbo.GetOrders @orderid = 800001;

The plans for the two executions are shown in Figure 9-13.

FIGURE 9-13 Plans for executions with a query using RECOMPILE.