Effective MySQL: Backup and Recovery (2012)

Chapter 3. Understanding Business Requirements for Disaster Recovery

“No one cares about your backup; they only care if you can restore.”

Adapted from W. Curtis Preston - Backup & Recovery (O’Reilly, 2009)

One of the factors in choosing a backup methodology is the business's requirements for data recovery. For some businesses the loss of a single transaction has a substantive impact; for others, recovering to last night at midnight meets the business requirements for acceptable loss. Defining these requirements and classes of data is not strictly a technical problem; however, doing so is important to prevent very difficult conversations about mismatched expectations.

In this chapter we will discuss:

• Defining requirements

• Determining responsibilities

• Understanding business terminology

• Planning for situations


Defining Requirements

The requirements of the business can dictate how your database backup and recovery strategy is implemented. The business may accept a four-hour recovery time, in which case additional hard drive space may be the only additional physical need for an existing system. Alternatively, a requirement of no downtime may dictate multiple geographically dispersed servers, many smaller servers rather than fewer larger ones, and an application designed to support the partitioning necessary to satisfy these business requirements.

Being prepared for any level of disaster is just as important as supporting a growing system; however, it rarely receives the prestige of improving system performance. Many of the requirements you need to put in place are safety nets that may never be used; however, it would be disastrous for your business viability if they were not in place.

Basic hardware redundancies, including multiple servers, hard drive RAID configurations, network bonding, and duplicate power supplies, are basic necessities. Redundancy is designed to prevent a recovery requirement and to enable systems to maintain a level of availability, generally in a degraded mode, e.g., a disk failure in a RAID array, a NIC failure in a network bond, or a slave failure in a MySQL topology. In these situations, redundancy through either replication or active/passive usage can ensure seamless operations. The system is considered degraded because the lack of further redundancy is a point of failure, e.g., a RAID 5 array that has already lost one disk cannot survive another. Furthermore, restoring the system to full operation generally places additional load on it.
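The difference between "operational" and "degraded" can be made concrete with a small model. The following is a minimal sketch, not a monitoring tool: the function names are hypothetical, and "guaranteed tolerance" means the number of disk failures a RAID level always survives (RAID 10 can survive more, but only when the failed disks sit in different mirror pairs).

```python
def guaranteed_tolerance(level: str, disks: int) -> int:
    """Disk failures a RAID level is guaranteed to survive (hypothetical helper)."""
    if level == "RAID 0":
        return 0
    if level == "RAID 1":
        return disks - 1  # every disk holds a full mirror copy
    if level in ("RAID 5", "RAID 10"):
        return 1
    raise ValueError(f"unsupported RAID level: {level}")


def array_state(level: str, disks: int, failed: int) -> str:
    """Classify an array as optimal, degraded, or failed."""
    tolerance = guaranteed_tolerance(level, disks)
    if failed == 0:
        return "optimal"
    if failed <= tolerance:
        return "degraded"
    return "failed"


def remaining_redundancy(level: str, disks: int, failed: int) -> int:
    """How many more failures the array is still guaranteed to survive."""
    return max(guaranteed_tolerance(level, disks) - failed, 0)


# A four-disk RAID 5 array that has lost one disk still serves data,
# but has no redundancy left.
print(array_state("RAID 5", 4, 1), remaining_redundancy("RAID 5", 4, 1))
```

The zero returned by `remaining_redundancy` is the point of the paragraph above: a degraded system keeps running, but the next failure is an outage.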

Advanced considerations include placing servers in different racks to limit the damage from fire, theft, or other serious incidents. These decisions could include working with multiple external providers, which adds complexity to the decision-making and support processes.

However, it may be impossible to fully plan for an explosion that takes out the power supply and backup power options of an entire data center of 10,000 servers. Recently, the seizure by FBI agents of servers totally unrelated to the original warrant, a seizure upheld by a U.S. district court, showed that physical servers in proximity to alleged illegal activities are not immune to unexpected loss.

Are you prepared? What is important is that you are aware of and consider all of these factors.

What is the cost of downtime? Having an actual figure of $X per hour, combined with the potential loss of reputation, is a powerful motivator when requesting investment in additional servers or other hardware to implement a successful failure strategy.
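The arithmetic behind such a figure is simple, but writing it down makes it easy to compare recovery options side by side. The sketch below assumes a hypothetical revenue of $5,000 per hour; the reputation cost is a business estimate supplied by decision makers, not something the code can measure.

```python
def downtime_cost(hours: float, revenue_per_hour: float,
                  reputation_cost: float = 0.0) -> float:
    """Estimate the cost of an outage: lost revenue plus an estimated
    reputation cost (a business estimate, not a measurement)."""
    if hours < 0 or revenue_per_hour < 0 or reputation_cost < 0:
        raise ValueError("inputs must be non-negative")
    return hours * revenue_per_hour + reputation_cost


# Hypothetical figure: $5,000 of revenue per hour.
for hours in (3, 8):
    print(f"{hours} hours down: ${downtime_cost(hours, 5000):,.0f}")
```

Even this rough model shows that every hour removed from a recovery plan has a price that can be weighed against the cost of additional hardware.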


Determining Responsibilities

This book and the Effective MySQL series provide highly practical and technical content. This chapter, while one of the least technical sections of any book, contains the single most important business information for any system that records information, regardless of the choice of product. Disaster preparedness is too often overlooked in organizations of every size, from single-person startups to Fortune 500 companies.

What is important is that both the business and technical decision makers have clear guidelines and agree on them. For example, what does the statement “no downtime” mean in your context? The decision maker may say “no downtime,” but what that really means is serving page content and serving ads. This then implies that user management, adding comments, placing orders, and other functions are all services that can afford limited outages. These considerations differ depending on your type of business: a media organization would consider serving ads critical, while an online store would consider placing orders critical.

The most important component of any business is a disaster recovery (DR) plan. This is especially important when your data is your primary business asset. A total loss of data will most likely result in a loss of business viability, including your job and possibly your reputation. What is the acceptable loss of data, also known as the recovery point objective (RPO)?

Terminology

The following terms are used in defining business requirements for disaster situations.

| Term | Description |
|---|---|
| DR | Disaster recovery (DR) is the plan, including steps, actions, responsibilities, and timelines, needed to return your business to successful operations. A significant component of the DR plan is the successful and timely recovery of all information, which depends on a suitable backup strategy. |
| MTTR | The mean time to recover (MTTR) is the average time taken to recover successfully from a failure. It is not a guarantee that a system will be operational within this time. Individual components and types of failure may have very different MTTR values: replacing a failed hard drive is different from a loss of network connectivity caused by an upstream provider or by a denial of service attack. |
| MTTD | The mean time to detect (MTTD) is often overlooked in a strategy; however, the time taken to detect a problem can significantly affect the type of recovery and/or the acceptable loss of data. |
| RPO | The recovery point objective (RPO) is the point in time to which your organization requires data to be recovered. It defines what the organization considers acceptable loss in a disaster situation. Not all environments require an up-to-the-minute recovery plan. More information at http://en.wikipedia.org/wiki/Recovery_point_objective. |
| RTO | The recovery time objective (RTO) is the acceptable amount of time to recover in order to ensure business continuity. This is generally defined in a Service Level Agreement (SLA). |
| Data classes | Not all data has the same value or net worth. Some information is more important, and this classification can affect how your backup and recovery strategy operates. In a disaster situation, certain data is more critical, and the system may be considered operational without all data available. Defining data classes identifies these types of data. |
| SLA | A Service Level Agreement (SLA) should be considered within your own organization, not just with external suppliers. An SLA should also define both the technical and the business decision responsibilities in response to any important situation. |

A formal SLA within an organization may vary for each system, with different values for these terms for each specific system.
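Per-system targets become more useful when they are checked against what your strategy can actually deliver. The following is a minimal sketch under stated assumptions: the class and field names are hypothetical, the worst-case data loss is taken to be the interval at which changes are copied off the server, and the expected outage is MTTD plus MTTR (both means, so this is an expected rather than a worst case). The example capability borrows the case study's five-minute binary log copies and three-hour restore; the 30-minute MTTD and the SLA targets are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ServiceLevel:
    system: str
    rpo_minutes: int  # maximum acceptable data loss
    rto_minutes: int  # maximum acceptable time to restore service


@dataclass
class RecoveryCapability:
    copy_interval_minutes: int  # how often changes are copied off the server
    mttd_minutes: int           # mean time to detect a failure
    mttr_minutes: int           # mean time to recover once detected


def sla_gaps(sla: ServiceLevel, cap: RecoveryCapability) -> list[str]:
    """List the ways a recovery capability falls short of an SLA."""
    gaps = []
    # Worst case: everything written since the last copy is lost.
    if cap.copy_interval_minutes > sla.rpo_minutes:
        gaps.append(f"{sla.system}: RPO missed "
                    f"({cap.copy_interval_minutes} > {sla.rpo_minutes} min)")
    # Expected outage: time to notice the failure plus time to recover.
    outage = cap.mttd_minutes + cap.mttr_minutes
    if outage > sla.rto_minutes:
        gaps.append(f"{sla.system}: RTO missed ({outage} > {sla.rto_minutes} min)")
    return gaps


# Hypothetical targets for two classes of data on the same platform.
orders = ServiceLevel("orders", rpo_minutes=5, rto_minutes=120)
reporting = ServiceLevel("reporting", rpo_minutes=1440, rto_minutes=1440)
cap = RecoveryCapability(copy_interval_minutes=5, mttd_minutes=30, mttr_minutes=180)

for sla in (orders, reporting):
    print(sla_gaps(sla, cap) or f"{sla.system}: OK")
```

Separating detection from recovery makes the MTTD visible; reducing it is often cheaper than reducing the restore time.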

Technical Resource Responsibilities

In most significant disasters you will never be given the opportunity to explain the impact, your possible options, or even how hard the solution may be. The questions will be very precise and generally include:

• When will our system be available?

• What information has been lost?

• Why did this happen?

The decision makers will discuss the potential revenue that was lost and the total business impact. Knowing these facts is important in determining what you need to plan and prepare for, how to project confidence at any time, and how to justify additional physical and human resources.

Decision Maker Responsibilities

The role of the decision maker is to ensure the ongoing business viability of your organization. This includes many factors a technical resource may not consider, such as the ongoing media impact, shareholder responsibilities, acquiring additional staff resources, dealing with third-party suppliers, and much more. Do you know how to reverse an online transaction, send an e-mail blast, and change the message on your customer support phone system? What is important is that you are prepared to support the decisions made. The most effective preparation you can do to provide a level of confidence to your organization includes:

• Have a backup and recovery strategy in place.

• Have actual timings, test results, and daily reports of the success of your strategy freely available to anybody in your organization.

• Consider the extent of possible disaster recovery situations. You may not be able to address all issues; however, be able to think outside of the normal database operations for creative solutions to complex and business threatening conditions.

• Be proactive in providing information to build confidence in advance.
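A daily report does not need to be elaborate to be useful. The sketch below assumes your backup jobs already record a start time, an end time, and a success flag somewhere; the `BackupRun` record and the host name are hypothetical. It reports a missing backup as loudly as a failed one, because a job that silently stopped running is a common way for a strategy to decay.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass
class BackupRun:
    host: str
    started: datetime
    finished: datetime
    succeeded: bool


def daily_report(runs, day: date) -> str:
    """Summarize the backup runs that started on `day`, oldest first."""
    todays = sorted((r for r in runs if r.started.date() == day),
                    key=lambda r: r.started)
    lines = [f"Backup report for {day.isoformat()}"]
    if not todays:
        # A missing backup is as important to report as a failed one.
        lines.append("WARNING: no backup ran")
    for r in todays:
        minutes = (r.finished - r.started).total_seconds() / 60
        status = "OK" if r.succeeded else "FAILED"
        lines.append(f"{r.host}: {status} in {minutes:.0f} min")
    return "\n".join(lines)


runs = [BackupRun("slave2", datetime(2012, 5, 1, 1, 0),
                  datetime(2012, 5, 1, 1, 45), True)]
print(daily_report(runs, date(2012, 5, 1)))
```

Publishing the recorded durations every day also gives you the actual timings the decision makers will ask for during an incident.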

Knowing the decision makers and building a rapport over time is less about technical ability and more about professional development.

Identifying Dependencies

As you will see in the following case study, however good the database administrator's plans for handling a disaster, there are dependencies on other resources and operations outside of your control.


Case Study

The following case study, based on a real-world example, illustrates the important technical and business factors in a complex business situation.

The MySQL Topology

Your MySQL topology includes one master server and two slave servers using MySQL replication. This was implemented because past issues with read scalability and reporting justified the additional servers. Your environment supports a dedicated read slave and a dedicated reporting slave.

Your Backup and Recovery Strategy

Your backup strategy involves using one database slave to take a full copy of all of your data. You also realize the importance of the master binary logs for a point-in-time recovery, and you have a secondary process that keeps copies of these at five-minute intervals.
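A secondary process like this can be very small. The following sketch is one possible shape for it, assuming it runs every five minutes from a scheduler such as cron and that binary logs use the hypothetical basename `mysql-bin.`; the directory paths in the comment are also hypothetical. Because the active binary log keeps growing, any file whose size has changed since the last run is copied again.

```python
import os
import shutil


def copy_new_binlogs(src_dir: str, dest_dir: str,
                     prefix: str = "mysql-bin.") -> list:
    """Copy binary logs that are new or have grown since the last run."""
    os.makedirs(dest_dir, exist_ok=True)
    copied = []
    for name in sorted(os.listdir(src_dir)):
        # Binary logs are named <prefix><sequence number>; skip the .index
        # file and anything else that does not match.
        suffix = name[len(prefix):]
        if not name.startswith(prefix) or not suffix.isdigit():
            continue
        src = os.path.join(src_dir, name)
        dest = os.path.join(dest_dir, name)
        # The active log keeps growing, so re-copy any file whose size changed.
        if not os.path.exists(dest) or os.path.getsize(dest) != os.path.getsize(src):
            shutil.copy2(src, dest)
            copied.append(name)
    return copied


# Example call with hypothetical paths:
# copy_new_binlogs("/var/lib/mysql", "/backup/binlogs")
```

In practice the destination should be on a different server, so that losing the master does not also lose the copies.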

The current backup and recovery strategy supports many situations that have occurred in the past.

• You direct reads to your primary slave and reporting to the second slave.

• You can redirect reads to a different slave or the master.

• You can redirect or disable reporting.

• You test your backup. You are confident that you can perform a full restore of your system in two to three hours, provided the necessary hardware is functional.

• In a multiple-database disaster situation you can restore all data with a total loss of at most five minutes, and generally you can limit data loss to a few seconds.

The current strategy is not perfect. You have some requests in process to support a controlled fail-over using a virtual IP (VIP) rather than a specific domain name; however, this involves implementing application and system changes. These needs have not been given the priority within your organization that you would wish.

A Real Life Disaster

Your slave server has stopped applying transactions. There is no error message in replication. However, your additional monitoring of important business metrics detects that no orders have been placed in the past 30 minutes.
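A business-metric check like this catches failures that replication monitoring misses, because nothing in replication reports an error. The sketch below is a minimal version: it assumes the time of the most recent order has already been fetched, for example with a query against a hypothetical `orders` table, and it uses the case study's 30-minute threshold. A real check would likely vary the threshold by time of day.

```python
from datetime import datetime, timedelta

# Threshold taken from the case study: 30 minutes without an order.
ORDER_SILENCE_LIMIT = timedelta(minutes=30)


def orders_alert(last_order_at: datetime, now: datetime,
                 limit: timedelta = ORDER_SILENCE_LIMIT):
    """Return an alert message if no order has been placed within `limit`."""
    silence = now - last_order_at
    if silence >= limit:
        return f"ALERT: no orders for {int(silence.total_seconds() // 60)} minutes"
    return None


print(orders_alert(datetime(2012, 5, 1, 12, 0), datetime(2012, 5, 1, 12, 31)))
```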

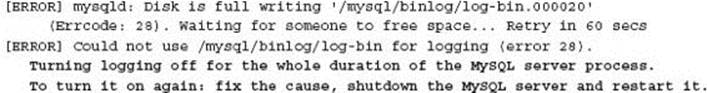

Meanwhile, on the master server, multiple disk alerts have gone unnoticed and unaddressed by the system administrator. As a result, the partition holding the MySQL binary logs fills up. This results in the following error in the master error log, which, ironically, is not actively monitored:

For more information on the environment conditions that caused this error see http://ronaldbradford.com/blog/never-let-your-binlog-directory-fill-up-2009-07-15/.
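Both gaps in this scenario, the unwatched disk and the unwatched error log, can be covered with very little code. The following sketch assumes a hypothetical 10 percent free-space threshold and hypothetical paths; it relies on MySQL tagging error entries with `[ERROR]` in the error log. In production these checks would feed an existing monitoring system that the DBA group can see, rather than run standalone.

```python
import shutil


def disk_alert(path: str, min_free_fraction: float = 0.10):
    """Return an alert if the filesystem holding `path` is nearly full."""
    usage = shutil.disk_usage(path)
    free_fraction = usage.free / usage.total
    if free_fraction < min_free_fraction:
        return f"ALERT: {free_fraction:.1%} free on filesystem holding {path}"
    return None


def error_log_lines(log_path: str) -> list:
    """Return the error log lines tagged [ERROR]."""
    with open(log_path, encoding="utf-8", errors="replace") as log:
        return [line.rstrip("\n") for line in log if "[ERROR]" in line]


# Example calls with hypothetical paths:
# disk_alert("/var/lib/mysql-binlogs")
# error_log_lines("/var/log/mysql/mysqld.err")
```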

Your backup strategy relies on the slave server being up to date, that is, applying all binary log statements from the master. As binary logging on the master is now disabled, your slave server is missing important business transactions and is inconsistent with your master. You cannot use your primary recovery process, that is, simply restoring your last successful static backup and applying the master binary logs for a point-in-time recovery. Your only option to recover all data is to stop your master database and take a backup, something you have never done on this server.
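The primary recovery process that is no longer available here depends on knowing which binary logs to replay after a restore. The sketch below assumes the backup recorded the master's binary log file and position when it was taken; the file names and position are hypothetical. It selects the logs from that file onward and builds a `mysqlbinlog` argument list, where `--start-position` applies to the first file named. The output would be piped into the `mysql` client; the sketch only builds the command and does not run it.

```python
def binlog_seq(name: str) -> int:
    """Numeric suffix of a binary log name, e.g. mysql-bin.000042 -> 42."""
    return int(name.rsplit(".", 1)[1])


def binlogs_to_replay(available, backup_file: str) -> list:
    """Binary logs to apply after restoring a backup, oldest first.

    `backup_file` is the master binlog file recorded when the backup was
    taken; earlier files are already reflected in the backup."""
    logs = [n for n in available if n.rsplit(".", 1)[-1].isdigit()]
    start = binlog_seq(backup_file)
    return sorted((n for n in logs if binlog_seq(n) >= start), key=binlog_seq)


def replay_command(files, start_position: int) -> list:
    """mysqlbinlog arguments; --start-position applies to the first file."""
    return ["mysqlbinlog", f"--start-position={start_position}", *files]


available = ["mysql-bin.000009", "mysql-bin.000010",
             "mysql-bin.000011", "mysql-bin.index"]
files = binlogs_to_replay(available, "mysql-bin.000010")
print(replay_command(files, 4567))
```

If binary logging was disabled partway through, as in this case study, the replayed logs simply end early, which is why this path cannot recover every transaction.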

This new backup also requires cleaning up disk space to hold a copy of the database on this system. You also need to install the latest backup script, as it has never been run on this system. Do you risk modifying the backup script to back up across the network and avoid cleaning up disk space? How long will that take? Even after this new backup is taken, you then have to restore it on both slaves.

The late notification and initial investigation took two hours. The only technical option that ensures no data loss requires a further six hours just to reach a recoverable situation, and several more hours to complete the recovery of both slave servers. You are required to give regular business updates, yet you have no prior information on which to base them. The result for the business is no new customer orders for over eight hours.

Your best-laid plans as a DBA are undone by a part-time system administrator who is replacing a person on vacation. They did not notice or respond to a disk alert before it was too late. In the past, the DBA group you belong to requested access to these important system alerts, but the system admin group would not give you access, as that is not your responsibility.

It does not really matter who is to blame: the database was unavailable for eight hours during peak time, and you are the highly paid DBA responsible for ensuring the database is operational.

Technical Outcomes

There are many good points to take away from this experience. Your environment has system monitoring in place; many organizations fail at this most basic step. A MySQL backup and recovery strategy is in place, tested, documented, and timed. There are multiple MySQL instances to support some failure situations.

Some simple technical steps were not implemented: open access to all alerts, and the requested change to use IP addresses rather than domain names for database connections. Both are simple technical tasks, but they were caught up in the bureaucracy of the business and its decision makers.

What other options could have been considered if you had more time to investigate or discuss with peers? You could have promoted the most current of your slaves to master. This would involve changing the MySQL configuration to enable binary logging and modifying your application servers to point to the new server. You could reconfigure the second slave server to use this new master. You could then back up the old master, having accepted the loss of the sales transactions recorded during this time. Your downtime is now reduced to three hours; however, you have a mismatch between the money received and the orders recorded, and potentially very annoyed customers if they do not get their orders. How long would it take to undertake data forensics on the processed orders and then reapply these orders to the system? There is no easy way, because you do not want to double charge customers. What additional staff time and cost are needed? More importantly, do you even need to consider this? We will answer this specific question in the following section.
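Choosing "the most current" slave can be done mechanically from `SHOW SLAVE STATUS`. This sketch compares the master coordinates each slave's SQL thread has applied (`Relay_Master_Log_File`, `Exec_Master_Log_Pos`) as a (file sequence, position) pair. The host names and values are hypothetical, the comparison only makes sense when both slaves replicate from the same master, and in practice you would first let each slave finish applying its relay log.

```python
def executed_coordinates(status: dict) -> tuple:
    """Master binlog coordinates this slave has applied, taken from
    SHOW SLAVE STATUS (Relay_Master_Log_File, Exec_Master_Log_Pos)."""
    seq = int(status["Relay_Master_Log_File"].rsplit(".", 1)[1])
    return (seq, int(status["Exec_Master_Log_Pos"]))


def most_current_slave(statuses: dict) -> str:
    """Pick the host whose applied coordinates are furthest along."""
    return max(statuses, key=lambda host: executed_coordinates(statuses[host]))


# Hypothetical SHOW SLAVE STATUS excerpts for the two slaves in the case study.
statuses = {
    "read-slave": {"Relay_Master_Log_File": "mysql-bin.000042",
                   "Exec_Master_Log_Pos": "98000"},
    "report-slave": {"Relay_Master_Log_File": "mysql-bin.000042",
                     "Exec_Master_Log_Pos": "1200"},
}
print(most_current_slave(statuses))
```

Comparing tuples means a later binary log file always wins, regardless of position, which matches how MySQL numbers its logs.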

Without knowledge of your system and its various options, how could you give multiple options and time estimates to everybody who wants answers?

The mean time to detect (MTTD) is very important in this situation. The mean time to recover (MTTR) is also important. Which matters more may depend on your point of view and responsibilities.

That item on your pending to-do list about a more documented, tested, and streamlined fail-over process could have been a saving grace. Unfortunately, the pending requests to the application team to change the database connections, to the system group to enable a virtual IP (VIP), and for the necessary MySQL configuration changes are no help now.

The Decision Process

As a DBA, what action to take is not always your decision; it is the decision of the business owner. Who is that in your organization? What is important is how you structure information so that the responsible person can make an informed decision.

In this situation, while three hours of downtime is less than eight hours, is this the best decision? Each hour is not just lost revenue but a loss of business reputation. Will further bad press about being down all day hurt more? Again, it is important to understand the business requirements and to know who is ultimately responsible. In the previous section you considered, from a technical perspective, the additional impacts of the three-hour recovery option that accepts data loss. However, you did not consider the business approach of processing a full refund for all affected customer orders. The functionality to process refunds already exists. The business could also send a specific e-mail apology about the situation and ask customers to re-order, even offering a discount code for the inconvenience. This additional process also already exists. The result would be no lost data to restore and no additional manual work by staff. The only technical requirement would be identifying the affected customers and the details of their now-refunded orders.
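That remaining technical requirement amounts to filtering orders by time window and grouping them by customer. Because the orders may be missing from the promoted database, this sketch assumes the records come from another source, such as a hypothetical export from the payment processor; the `Order` fields, e-mail addresses, and window times are invented for illustration. Amounts are kept in integer cents to avoid floating-point rounding in refunds.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Order:
    order_id: int
    customer_email: str
    placed_at: datetime
    amount_cents: int  # integer cents avoid floating-point rounding


def affected_orders(orders, lost_from: datetime, lost_until: datetime) -> list:
    """Orders placed inside the half-open window [lost_from, lost_until)."""
    return [o for o in orders if lost_from <= o.placed_at < lost_until]


def refunds_by_customer(orders) -> dict:
    """Group affected order IDs per customer for refunds and apology e-mails."""
    grouped = {}
    for o in orders:
        grouped.setdefault(o.customer_email, []).append(o.order_id)
    return grouped


payments = [
    Order(1, "a@example.com", datetime(2012, 5, 1, 11, 0), 2500),
    Order(2, "b@example.com", datetime(2012, 5, 1, 12, 10), 4000),
    Order(3, "c@example.com", datetime(2012, 5, 1, 12, 40), 1500),
    Order(4, "a@example.com", datetime(2012, 5, 1, 13, 30), 900),
]
lost = affected_orders(payments, datetime(2012, 5, 1, 12, 0), datetime(2012, 5, 1, 13, 0))
print(refunds_by_customer(lost))
```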

A disaster is the one thing that should keep an organization's executives awake at night. As a responsible DBA or data architect, your single greatest asset to an organization is knowing what to do when something goes wrong: being prepared. Being proactive and actively simulating and testing disaster recovery situations, documenting, timing, and reporting is the knowledge that separates skilled technical resources from expert resources with a holistic business view.

Essential External Communication

While internal communication is critical in any disaster scenario, external communication is just as important. Having a public-facing status page, a forum, and a feedback loop for customers is essential. It is also critical that information is transparent and open. In previous online disasters, information that was forthcoming promptly reduced additional stress.

It is critical that the status and feedback options are not part of your primary infrastructure. As detailed with worst case examples in the following section, it is important this infrastructure is in a different data center, and preferably a different host provider.


Planning for the Worst Situation

It is impossible to plan for every possible disaster. Knowing what is possible can help identify how your business may be able to cope, and how it may not. The following are some real-life situations that can happen. As a technical resource it is important that you share these situations with decision makers to ensure they are aware of the potential issues. Even the largest companies are not immune to an unexpected disaster. One public failure of the 365 Main data center due to a power incident on July 24, 2007, affected giant Internet sites including Craigslist, GameSpot, Yelp, Technorati, Typepad, and Netflix.

For more information about the real-life disasters summarized here, visit http://effectiveMySQL.com/article/real-life-disasters/.

Total SAN Failure

A SAN is not a backup solution. If anything, a SAN increases the likelihood of a larger cascading failure. Losing a single server to multiple hard drive failures (RAID 1, RAID 5, and RAID 10 configurations can all operate after a single disk loss) has far less impact in a hundred-server environment than a SAN failing and dropping all mounts for all systems. There are many actual occurrences of SAN failures. With one client, a routine replacement of several failed hard drives and a software upgrade, performed by an employee of a large SAN service provider on a multi-million dollar SAN investment, caused an internal panic that shut down the SAN and 160 different mount points. This corrupted 30+ database servers and took the entire website (a top 20 traffic site by Alexa) offline for several days.

In this example, relying on a single SAN for all production servers is a situation that should be avoided.

Power Disruption

Even with backup generators holding four days of fuel supply, and a DR procedure tested and used in the past five years, three out of ten (i.e., 30 percent) backup generators failed to operate when power was lost at a prominent San Francisco data center. As a result, 40 percent of customers lost power to their equipment. This was a serious disaster causing cascading system failures.

In this example, hosting your entire infrastructure with a premier hosting provider, even one with impressive uptime, is no guarantee against a total system outage. Using multiple locations is advisable for critical functionality.

Explosion

In June 2008, an electrical explosion at a data center took approximately 10,000 servers offline, including the primary web and database server for the author of this book. Under direction from the fire department, due to safety issues and the seriousness of the incident, the backup generators located adjacent to the complex were also powered down. This resulted in an outage of several days. The situation was more complicated because the service provider also had its own management servers, domain management, SSL management, client management, and communication tools all in the same location without redundancy.

In this example, knowing your DR plan also includes understanding the DR plans of applicable service providers. An infrastructure for a web presence has many moving parts, some of which you may not consider; in this case, suitable DNS and network fail-safes.

This was one example where the host provider managed the situation for customers well. The issue was addressed in a timely manner with regular updates published on a status page and forums. The phone greetings for support also included status information of the problem.

FBI Seizure

In a recent FBI raid for specific hardware, additional servers and important networking equipment were also seized under the same warrant. Much like a serious physical failure, the host provider was given no notice, and unrelated systems were also affected, ultimately leading to a cascading failure. In addition, unlike a physical failure that can be addressed by repairing or replacing faulty equipment, this equipment was removed for an undetermined time.

Blackout

Large statewide blackouts are uncommon; however, as a recent incident in September 2011 highlighted, a simple failure of one component (a transformer) caused a cascading system failure that resulted in the shutdown of multiple nuclear power plants. In this situation power was lost for 15 hours across a large region spanning several states, leaving entire cities without power. Even with a high availability solution across data centers, insufficient geographical redundancy could still result in a total loss.

In addition, a loss of power could mean no operation of your customer support telephone system, or facilities for your office staff to operate during the situation. Even with a geographical deployment there may be no way to implement a controlled fail-over, monitor, or update customers easily.

Human Factors

Being hacked, malicious intent by a disgruntled employee, and a failed system-wide rollout of software by an employee that disables access to thousands of servers are all very possible situations. For many organizations, changing the keys to your front door, that is, the system passwords for your servers, is an important process that needs to be known, documented, tested, and able to be implemented instantly.
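Scripting the password change in advance is one way to make it instant. The sketch below generates random passwords with Python's `secrets` module and builds statements in the `ALTER USER ... IDENTIFIED BY` form supported by MySQL 5.7.6 and later; older versions such as MySQL 5.5 use `SET PASSWORD FOR ... = PASSWORD(...)` instead. The account names are hypothetical, account names containing quotes are rejected rather than escaped to keep the sketch simple, and the new passwords must still be stored securely and rolled out to application configuration, which this code does not do.

```python
import secrets


def new_password(num_bytes: int = 24) -> str:
    """Random URL-safe password; 24 random bytes encode to 32 characters."""
    return secrets.token_urlsafe(num_bytes)


def rotation_statements(accounts) -> list:
    """Return (account, statement) pairs for (user, host) tuples."""
    statements = []
    for user, host in accounts:
        if "'" in user or "'" in host:
            raise ValueError(f"unsupported account name: {user}@{host}")
        password = new_password()
        statements.append(
            (f"{user}@{host}",
             f"ALTER USER '{user}'@'{host}' IDENTIFIED BY '{password}';"))
    return statements


# Hypothetical accounts; the statements contain the new passwords in clear text.
stmts = rotation_statements([("app", "10.0.0.%"), ("backup", "localhost")])
print([account for account, _ in stmts])
```

Rehearsing a script like this during a DR test is what turns "able to be implemented instantly" from a hope into a timed, documented procedure.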

Human Resources

In addition to these situations, the impact of human resources may be the most overlooked situation. The case study in this chapter highlighted that less skilled technical resources caused a cascading failure situation. Vacation, sickness, accidents, and even overworked employees are all situations that have to be understood as possible disaster situations.

A commonly overlooked situation is a regular need for 24/7 support. While key resources may need to be on call for emergency support after hours, if this is always occurring, productivity can be significantly impacted. The increased likelihood of error when issues are handled without appropriate procedures, and during constant extended after-hours work, is a factor not to be ignored.

What is your “red bus” policy? The author first heard this term some 20 years ago when a decision maker was talking about the author's potential demise. Simply put, what happens when your critical resource is hit by a “red bus”? While this term sounds harsh, it covers all possible situations, from sickness, vacations, and accidents to the resignation of critical technical resources. While this is the responsibility of an organization, every individual can be proactive in ensuring the organization has some procedures or contact details for support services, emergency consulting services, or peers to step in.


Developing a Strategic Plan

Defining the requirements and responsibilities as discussed is necessary to determine what is acceptable to the business. In his book High Availability and Disaster Recovery (Springer, 2006), Klaus Schmidt describes two properties important for any failure scenario: the probability of the failure and the damage caused by the failure. Mapping the types of failure on an XY chart, with Damage on the X-axis and Probability on the Y-axis (pg 83), places failures into three categories: fault protection or recovery by high availability, fault recovery by disaster recovery, and the forbidden zone (pg 84). While the final category may appear inappropriately named, any failure in this category requires a system redesign to remove this limitation and provide a robust solution. This technical approach can be valuable for identifying the risk to the business and contributing to an appropriate strategic plan. In some situations, the addition of applicable redundancy is sufficient to avoid a loss of service situation.
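A rough, rectangular version of this mapping can help when walking decision makers through a list of scenarios. This sketch assumes both properties are scored from 0 to 1 and uses hypothetical 0.5 thresholds; it treats failures that are both likely and highly damaging as the forbidden zone, and it simplifies the regions of Schmidt's chart rather than reproducing his exact boundaries. The scenario scores are invented for illustration.

```python
def classify_failure(probability: float, damage: float,
                     probability_limit: float = 0.5,
                     damage_limit: float = 0.5) -> str:
    """Place a failure scenario in one of three zones.

    Both inputs are normalized scores in [0, 1]. The rectangular thresholds
    are hypothetical simplifications of the chart's regions."""
    if not (0 <= probability <= 1 and 0 <= damage <= 1):
        raise ValueError("scores must be between 0 and 1")
    if probability >= probability_limit and damage >= damage_limit:
        return "forbidden zone"  # likely and damaging: redesign needed
    if damage >= damage_limit:
        return "disaster recovery"
    return "high availability"


# Hypothetical scores for scenarios from this chapter.
scenarios = {
    "single disk failure": (0.9, 0.1),
    "data center explosion": (0.01, 0.95),
}
for name, (p, d) in scenarios.items():
    print(f"{name}: {classify_failure(p, d)}")
```

The value of the exercise is less in the numbers than in forcing an explicit conversation about which scenarios land in the forbidden zone.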

Not discussed in this chapter, but also important in the overall data management plan, are any retention policies for legal or auditing requirements. Your backup plan may require keeping several months of backups on a weekly or monthly cycle.
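A weekly and monthly cycle can be expressed as a simple "grandfather-father-son" rotation. In this sketch, which assumes one backup date per entry and hypothetical defaults of 7 daily, 4 weekly, and 6 monthly backups, every backup from the last week is kept, plus the newest backup in each of the most recent ISO weeks and calendar months that have backups. Actual retention periods should come from your legal and auditing requirements.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today: date,
                    daily: int = 7, weekly: int = 4, monthly: int = 6) -> list:
    """Grandfather-father-son retention sketch.

    Keeps every backup from the last `daily` days, plus the newest backup of
    each of the most recent `weekly` ISO weeks and `monthly` calendar months
    that have backups."""
    newest_first = sorted(backup_dates, reverse=True)
    keep = {d for d in newest_first if (today - d).days < daily}

    newest_per_week, newest_per_month = {}, {}
    for d in newest_first:
        # setdefault keeps the first (newest) date seen for each period.
        newest_per_week.setdefault(tuple(d.isocalendar()[:2]), d)
        newest_per_month.setdefault((d.year, d.month), d)

    for period in sorted(newest_per_week, reverse=True)[:weekly]:
        keep.add(newest_per_week[period])
    for period in sorted(newest_per_month, reverse=True)[:monthly]:
        keep.add(newest_per_month[period])
    return sorted(keep)


# Daily backups from January 1 to March 31, 2012.
dates = [date(2012, 1, 1) + timedelta(days=i) for i in range(91)]
kept = backups_to_keep(dates, date(2012, 3, 31))
print(len(kept), kept[0], kept[-1])
```

Everything not returned is a candidate for deletion, so the function doubles as a check that the backups auditors may ask for have not been removed.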


Conclusion

System failures and disasters are inevitable; however, a catastrophe that affects your business, your career, and your reputation is avoidable. Many disaster situations have far greater business implications than finding and implementing a technical solution. A formal SLA is a driving factor in making well-informed decisions during these situations. The Boy Scouts’ very simple motto is the most applicable advice for any technologist: Be prepared.