Apache Hadoop YARN: Moving beyond MapReduce and Batch Processing with Apache Hadoop 2 (2014)

5. Installing Apache Hadoop YARN

A cluster-wide installation of Hadoop 2 YARN is necessary to harness the parallel processing capability of the Hadoop ecosystem. HDFS and YARN form the core components of Hadoop version 2. The familiar MapReduce process is still part of YARN, but it has become its own application framework. The installation methods described in this chapter enable you to fully install the base components needed for YARN functionality. Recall that in YARN, the JobTracker has been replaced by the ResourceManager and the per-node TaskTrackers have been replaced by the NodeManager. The basic HDFS installation using a NameNode and DataNodes remains unchanged.

We describe two methods of installation here: a script-based install and a GUI-based install using Apache Ambari. In both cases, a certain minimum amount of user input is required for successful installation.

The Basics

Of all the many ways to install Hadoop version 2, one of the more involved ways is to simply download the distribution from the Apache Software Foundation (ASF) site. This process requires the creation of a few directories, possibly creation and editing of the configuration files, and maybe even creation of your own scripts. Such a process is a great way to learn the basics of Hadoop administration, but for those wanting a fast and flexible route to Hadoop installation, we present two methods of automated installation in this chapter.

The system requirements for a Hadoop installation are somewhat basic. The installation of the ASF distribution still relies on a Linux file system such as ext3, ext4, or xfs. A Java Development Kit 1.6 (or greater) is required as well. The OpenJDK that comes with most popular Linux distributions should work for most installation procedures. Production systems should have processors, memory, disk, and network sufficient for the production use cases of each organization. To just get started, however, all we need are Linux servers and the right version of Java.

Hadoop 2 offers significant improvements beyond YARN, namely improvements in HDFS (the Hadoop Distributed File System) that can influence infrastructure decisions. Whether to use NameNode federation and NameNode HA (high availability) are the two important decisions that must be made by most organizations. NameNode federation significantly improves the scalability and performance of HDFS by introducing the ability to deploy multiple NameNodes for a single cluster. In addition, HDFS introduces built-in high availability for the NameNode via a new feature called the Quorum Journal Manager (QJM). QJM-based HA features an active NameNode and a standby NameNode. The standby NameNode can become active either by a manual process or automatically. Automatic failover works in coordination with ZooKeeper. Hadoop 2 HDFS introduces the ZKFailoverController, which uses ZooKeeper’s election functionality to determine the active NameNode. Other features are also available in HDFS, but a complete description of HDFS installation and configuration is beyond the scope of this book. More information on HDFS options can be found at http://hadoop.apache.org/docs/stable/hdfs_user_guide.html.

For the purposes of describing the YARN installation process, a simple HDFS installation familiar to Hadoop 1 users will be used. It consists of a single NameNode, a SecondaryNameNode, and multiple DataNodes.

System Preparation

Once your system requirements are confirmed and you have downloaded the latest version of Hadoop 2, you will need some information that will make the scripted installation easier. The workhorse of this method is the open-source tool Parallel Distributed Shell (http://sourceforge.net/projects/pdsh), commonly referred to as simply pdsh, which describes itself as “an efficient, multithreaded remote shell client which executes commands on multiple remote hosts in parallel.” In simple terms, pdsh will execute commands remotely on hosts specified either on the command line or in a file. As Hadoop is a distributed system that potentially spans thousands of hosts, pdsh can be a very valuable tool for managing systems. Also included in the pdsh distribution is the pdcp command, which performs distributed copying of files. We’ll use both the pdsh and pdcp commands to install Hadoop 2.

Note

The following procedure describes an RPM (Red Hat)-based installation. The scripts described here are available for download from the book repository (see Appendix A), along with instructions for Ubuntu installation.

Step 1: Install EPEL and pdsh

The pdsh tool is easily installed using an existing RPM package. It is also possible to install pdsh by downloading prebuilt binaries or by compiling the tool from its source files. In most cases, this tool will be available through your system’s existing software installation or update mechanism. For Red Hat–based systems, this is the yum RPM repository; for SUSE systems, it is the zypper RPM repository.

For the purposes of this installation, we will use a Red Hat RPM-based system. A version of the pdsh package is distributed in the Extra Packages for Enterprise Linux (EPEL) repository. EPEL has extra packages not in the standard RPM repositories for distributions based on Red Hat Linux. The following steps, performed as root, are needed to install the EPEL repository and the pdsh RPM.

# rpm -Uvh http://download.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm

...

# yum install pdsh

Step 2: Generate and Distribute ssh Keys

For pdsh to work effectively, we need to configure “password-less” ssh (secure shell). When pdsh executes remote commands, it will attempt to do so without the need for a password, similar to a user executing an ssh command. The first step is to generate the public and private keys for any user executing pdsh commands (at a minimum, for the root user). On Linux, the OpenSSH package is generally used for this task. This package includes the ssh-keygen command shown later in this subsection. For the easiest installation, as root we execute the ssh-keygen command and accept all the defaults, making sure not to enter a passphrase. If we did specify a passphrase, we would need to enter this passphrase every time we used pdsh or any other tool that accessed the private key.

After generating the keys, we need to copy the public key to the hosts to which we want to log in via ssh without a password. While this might seem like a painstaking task, OpenSSH has the tools to make things easier. The OpenSSH clients package, which is usually installed by default, provides ssh-copy-id, a command that copies a public key to another host and adds the host to the ssh known_hosts file. During an installation using pdsh, we’ll want to use the host’s fully qualified domain name (FQDN), as this is also the hostname we’ll use in Hadoop configuration files.

# ssh-keygen -t rsa

...

# ssh-copy-id -i /root/.ssh/id_rsa.pub my.fqdn.tld

...

Once pdsh is installed and password-less ssh is working, the following type of command should be possible. See the pdsh man page for more information.

# pdsh -w my.fqdn.tld hostname

One feature of pdsh that will be useful to us is the ability to use host lists. For example, if we create a file called all_hosts and include the FQDNs of all the nodes in the cluster, one per line, then pdsh can use this list as follows:

# pdsh -w ^all_hosts uptime

This feature will be used extensively in the installation script.
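Because pdsh uses the host list as written, a quick sanity check of the file can catch typos before any remote command is run. The following is a minimal sketch, assuming one hostname per line; the function name check_host_list is our own invention (it is not part of pdsh), and "contains a dot" is only a rough test for an FQDN.

```shell
# check_host_list FILE
# Prints a warning for every entry that is duplicated or does not look like
# an FQDN (no dot in the name). Returns non-zero if any warning was printed.
# Hypothetical helper for illustration; it is not part of pdsh.
check_host_list() {
    awk '
        /^[[:space:]]*$/ { next }                              # skip blank lines
        seen[$1]++       { print "duplicate: " $1; bad = 1; next }
        $1 !~ /\./       { print "not an FQDN: " $1; bad = 1 }
        END              { exit bad + 0 }
    ' "$1"
}

# Example: only run the remote command if the list looks sane.
#   check_host_list all_hosts && pdsh -w ^all_hosts uptime
```

The check looks only at the text of the file; it does not confirm that the hosts resolve or accept ssh connections.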

Script-based Installation of Hadoop 2

To simplify the installation process, we will use an installation script to perform the series of tasks required for a typical Hadoop 2 installation. The script is available for review in Appendix B, and can serve as a guide to the steps necessary to install Hadoop 2. The script and all other files are also available from the book repository; see Appendix A for details. The installation script is designed to be flexible and customizable to your needs. Modification for your specific needs is encouraged.

Before we begin, there are some assumptions and some prerequisites that you will need to provide. First, we assume that all nodes have a current Red Hat distribution installed (or Red Hat–like distribution). Second, we assume that adequate memory, cores, network, and disk space are available to meet your needs. Best practices for selecting hardware can be found on the web: http://hortonworks.com/blog/best-practices-for-selecting-apache-hadoop-hardware. Finally, we assume that pdsh is working across the cluster.

You will need to choose a version of Hadoop. As of this book’s writing, version 2.2.0 was the most current version available from Apache Hadoop’s website. To obtain the current version, go to http://hadoop.apache.org/releases.html and follow the links to the current version. For this install, we downloaded hadoop-2.2.0.tar.gz (there is no need to use the “src” version).

JDK Options

There are two options for installing a Java JDK. The first is to install the OpenJDK that is part of the distribution. You can test whether the OpenJDK is installed by issuing the following command. If the OpenJDK is installed, the packages will be listed.

# rpm -qa|grep jdk

java-1.6.0-openjdk-devel-1.6.0.0-1.62.1.11.11.90.el6_4.x86_64

java-1.6.0-openjdk-1.6.0.0-1.62.1.11.11.90.el6_4.x86_64

Both the base and devel versions should be installed. The other recommended JVM is jdk-6u31-linux-x64-rpm.bin from Oracle. This version can be downloaded from http://www.oracle.com/technetwork/java/javasebusiness/downloads/java-archive-downloads-javase6-419409.html.

If you choose to use the Oracle version, make sure you have removed the OpenJDK from all your systems using the following command:

# rpm -e java-1.6.0-openjdk java-1.6.0-openjdk-devel

You may need to add the “--nodeps” option to remove the packages. Keep in mind that if there are dependencies on the OpenJDK, you may need to change some settings to use the Oracle JDK.
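The check that both the base and devel OpenJDK packages are present can be automated. The sketch below is one possible way to do it, assuming the package names follow the java-1.6.0-openjdk pattern shown in the listing above; has_openjdk_pair is a hypothetical helper name. It reads the package list from standard input so that it can be fed from rpm -qa on any node.

```shell
# has_openjdk_pair
# Reads a package list (one name per line, as printed by "rpm -qa") on stdin
# and succeeds only if both the base and the devel OpenJDK 1.6 packages appear.
# Hypothetical helper for illustration.
has_openjdk_pair() {
    awk '
        /^java-1\.6\.0-openjdk-devel-/ { devel = 1; next }   # devel package
        /^java-1\.6\.0-openjdk-/       { base = 1 }          # base package
        END { exit !(base && devel) }
    '
}

# Example:
#   rpm -qa | has_openjdk_pair && echo "OpenJDK base and devel found"
```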

Step 1: Download and Extract the Scripts

The install scripts and supporting files are available from the book repository (see Appendix A). You can simply use wget to pull down the hadoop2-install-scripts.tgz file.

As root, extract the file and move to the hadoop2-install-scripts working directory:

# tar xvzf hadoop2-install-scripts.tgz

# cd hadoop2-install-scripts

If you have not done so already, download your Hadoop version and place it in this directory (do not extract it). Also, if you are using the Oracle JDK, place it in this directory as well.

Step 2: Set the Script Variables

The main script is called install-hadoop2.sh. The following is a list of the user-defined variables that appear at the beginning of this script. You will want to make sure the version you downloaded matches the HADOOP_VERSION variable. You can also change the install path by changing HADOOP_HOME (in this case, the path is /opt). Next, there are various directories that are used by the various services. The following example assumes that /var has sufficient capacity to hold all the Hadoop 2 data and log files. These paths can also be changed to suit your hardware. It is a good idea to keep the default values for HTTP_STATIC_USER and YARN_PROXY_PORT. Finally, JAVA_HOME needs to be defined. If you are using the OpenJDK, make sure this definition corresponds to the OpenJDK path. If you are using the Oracle JDK, then download jdk-6u31-linux-x64-rpm.bin to this directory and define JAVA_HOME as empty: JAVA_HOME="".

# Basic environment variables. Edit as necessary

HADOOP_VERSION=2.2.0

HADOOP_HOME="/opt/hadoop-${HADOOP_VERSION}"

NN_DATA_DIR=/var/data/hadoop/hdfs/nn

SNN_DATA_DIR=/var/data/hadoop/hdfs/snn

DN_DATA_DIR=/var/data/hadoop/hdfs/dn

YARN_LOG_DIR=/var/log/hadoop/yarn

HADOOP_LOG_DIR=/var/log/hadoop/hdfs

HADOOP_MAPRED_LOG_DIR=/var/log/hadoop/mapred

YARN_PID_DIR=/var/run/hadoop/yarn

HADOOP_PID_DIR=/var/run/hadoop/hdfs

HADOOP_MAPRED_PID_DIR=/var/run/hadoop/mapred

HTTP_STATIC_USER=hdfs

YARN_PROXY_PORT=8081

# If using local OpenJDK, it must be installed on all nodes.

# If using jdk-6u31-linux-x64-rpm.bin, then

# set JAVA_HOME="" and place jdk-6u31-linux-x64-rpm.bin in this directory

JAVA_HOME=/usr/lib/jvm/java-1.6.0-openjdk-1.6.0.0.x86_64/
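Before running the installer, it can help to confirm that the variables above agree with the files actually present. The following pre-flight check is a sketch only: check_install_inputs is a hypothetical function that install-hadoop2.sh does not call, and it inspects only the local machine (the OpenJDK must still be installed on every node).

```shell
# check_install_inputs DIR HADOOP_VERSION JAVA_HOME
# Verifies that DIR holds the Hadoop tarball for the given version and that
# either JAVA_HOME points at an existing directory or, when JAVA_HOME is
# empty, the Oracle JDK installer is present in DIR.
# Hypothetical pre-flight helper; install-hadoop2.sh does not call it.
check_install_inputs() {
    dir=$1
    version=$2
    java_home=$3
    rc=0
    if [ ! -f "$dir/hadoop-$version.tar.gz" ]; then
        echo "missing $dir/hadoop-$version.tar.gz"
        rc=1
    fi
    if [ -n "$java_home" ]; then
        if [ ! -d "$java_home" ]; then
            echo "JAVA_HOME does not exist: $java_home"
            rc=1
        fi
    elif [ ! -f "$dir/jdk-6u31-linux-x64-rpm.bin" ]; then
        echo "JAVA_HOME is empty but jdk-6u31-linux-x64-rpm.bin is not in $dir"
        rc=1
    fi
    return $rc
}

# Example, run from the hadoop2-install-scripts directory:
#   check_install_inputs . 2.2.0 /usr/lib/jvm/java-1.6.0-openjdk-1.6.0.0.x86_64/
```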

Step 3: Provide Node Names

Once you have set the options, it is time to perform the installation. Keep in mind that the script relies heavily on pdsh and pdcp. If these two commands are not working across the cluster, then the installation procedure will not work. You can get help for the script by running

# ./install-hadoop2.sh -h

The script offers two options: file based or interactive. In file-based mode, the script needs the following list of files with the appropriate node names for your cluster:

nn_host: HDFS NameNode hostname

rm_host: YARN ResourceManager hostname

snn_host: HDFS SecondaryNameNode hostname

mr_history_host: MapReduce Job History server hostname

yarn_proxy_host: YARN Web Proxy hostname

nm_hosts: YARN NodeManager hostnames

dn_hosts: HDFS DataNode hostnames, separated by a space

If you use the interactive method, the files will be created automatically. If you choose the file-based method, you can edit the files yourself. With the exception of nm_hosts and dn_hosts, all of the files require one hostname. Depending on your installation, some of these hosts may be the same physical machine. The nm_hosts and dn_hosts files take a space-delimited list of hostnames, which will identify HDFS data nodes and/or YARN worker nodes.
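If you prepare the host files by hand, the rules just described (one hostname in each single-host file, one or more in nm_hosts and dn_hosts) are easy to verify mechanically. The sketch below shows one way to do so; count_hosts and check_node_files are hypothetical helper names and are not part of the install scripts.

```shell
# count_hosts FILE
# Prints the number of whitespace-separated hostnames in FILE
# (0 if FILE does not exist).
count_hosts() {
    if [ -f "$1" ]; then
        awk '{ n += NF } END { print n + 0 }' "$1"
    else
        echo 0
    fi
}

# check_node_files DIR
# Checks the host files used by the file-based install mode: each of the five
# single-host files must contain exactly one hostname, and nm_hosts and
# dn_hosts must each contain at least one.
# Hypothetical helper for illustration.
check_node_files() {
    dir=$1
    rc=0
    for f in nn_host rm_host snn_host mr_history_host yarn_proxy_host; do
        n=$(count_hosts "$dir/$f")
        if [ "$n" -ne 1 ]; then
            echo "$f: expected 1 hostname, found $n"
            rc=1
        fi
    done
    for f in nm_hosts dn_hosts; do
        n=$(count_hosts "$dir/$f")
        if [ "$n" -lt 1 ]; then
            echo "$f: expected at least 1 hostname, found $n"
            rc=1
        fi
    done
    return $rc
}

# Example, run from the hadoop2-install-scripts directory:
#   check_node_files .
```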

Step 4: Run the Script

After you have checked over everything, you can run the script as follows, using tee to keep a record of the install:

# ./install-hadoop2.sh -f |tee install-hadoop2-results

Some steps may take longer than others. If everything worked correctly, a MapReduce job (the classic “calculate pi” example) will be run. If it is successful, the installation process is complete. You may wish to install other tools like Pig, Hive, or HBase as well. For your reference, the script does the following:

1. Copies the Hadoop tar file to all hosts.

2. Optionally copies and installs Oracle JDK 1.6.0_31 to all hosts.

3. Sets the JAVA_HOME and HADOOP_HOME environment variables on all hosts.

4. Extracts the Hadoop distribution on all hosts.

5. Creates system accounts and groups on all hosts (Group: hadoop, Users: yarn, hdfs, and mapred).

6. Creates HDFS data directories on the NameNode host, SecondaryNameNode host, and DataNode hosts.

7. Creates log directories on all hosts.

8. Creates pid directories on all hosts.

9. Edits Hadoop environment scripts for log directories on all hosts.

10. Edits Hadoop environment scripts for pid directories on all hosts.

11. Creates the base Hadoop XML config files (core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml).

12. Copies the base Hadoop XML config files to all hosts.

13. Creates configuration, command, and script links on all hosts.

14. Formats the NameNode.

15. Copies start-up scripts to all hosts (hadoop-datanode, hadoop-historyserver, hadoop-namenode, hadoop-nodemanager, hadoop-proxyserver, hadoop-resourcemanager, and hadoop-secondarynamenode).

16. Starts Hadoop services on all hosts.

17. Creates MapReduce Job History directories.

18. Runs the YARN pi MapReduce job.

Step 5: Verify the Installation

There are a few points in the script-based installation process where problems may occur. One important step is formatting the NameNode (Step 14 in the preceding list). The results of this command will show up in the script output. Make sure there were no errors with this command.
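If you saved the script output with tee, you can search the saved file rather than scrolling through it. The sketch below assumes that failures show up as lines containing ERROR, FATAL, or Exception; that marker list is our assumption, not an exhaustive set of Hadoop messages, and scan_install_log is a hypothetical helper name.

```shell
# scan_install_log FILE
# Prints, with line numbers, every line of the saved install output that
# contains a common failure marker. Succeeds only if no such line is found.
# The marker list is an assumption, not an exhaustive set of Hadoop messages.
scan_install_log() {
    if [ ! -r "$1" ]; then
        echo "cannot read $1"
        return 2
    fi
    if grep -n -E 'ERROR|FATAL|Exception' "$1"; then
        return 1
    fi
    return 0
}

# Example:
#   scan_install_log install-hadoop2-results
```

A clean result from this scan does not prove the install succeeded; it only means none of the listed markers appeared in the output.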

If you note other errors, such as when starting the Hadoop daemons, check the log files located under $HADOOP_HOME/logs. The final part of the script runs the following example “pi” MapReduce job command.

hadoop jar \

$HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-$HADOOP_VERSION.jar \

pi -Dmapreduce.clientfactory.class.name=org.apache.hadoop.mapred.YarnClientFactory \

-libjars $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-$HADOOP_VERSION.jar \

16 10000

If this test is successful, you should see the following at the end of the output. (Your run time will vary.)

Job Finished in 25.854 seconds

Estimated value of Pi is 3.14127500000000000000
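When the test job is run from a script, the estimate can be checked automatically instead of by eye. The following sketch reads the job output on standard input and looks for the "Estimated value of Pi is" line shown above; pi_estimate_ok is a hypothetical helper, and the accepted range of 3.1 to 3.2 is an arbitrary choice for this example.

```shell
# pi_estimate_ok
# Reads the job output on stdin, finds the "Estimated value of Pi is" line,
# and succeeds only if the value lies between 3.1 and 3.2.
# The tolerance is an arbitrary choice for this sketch.
pi_estimate_ok() {
    awk '
        /Estimated value of Pi is/ { value = $NF + 0; found = 1 }
        END { exit !(found && value > 3.1 && value < 3.2) }
    '
}

# Example, assuming the job output was saved to a file named pi-output:
#   pi_estimate_ok < pi-output && echo "pi test passed"
```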

You can also examine the HDFS file system using the following command:

# hdfs dfs -ls /

Found 6 items

drwxr-xr-x - hdfs hdfs 0 2013-02-06 21:17 /apps

drwxr-xr-x - hdfs hadoop 0 2013-02-06 22:26 /benchmarks

drwx------ - mapred hdfs 0 2013-04-25 16:20 /mapred

drwxr-xr-x - hdfs hdfs 0 2013-05-16 11:53 /system

drwxrwxr-- - hdfs hadoop 0 2013-05-14 14:54 /tmp

drwxrwxr-x - hdfs hadoop 0 2013-04-26 02:00 /user

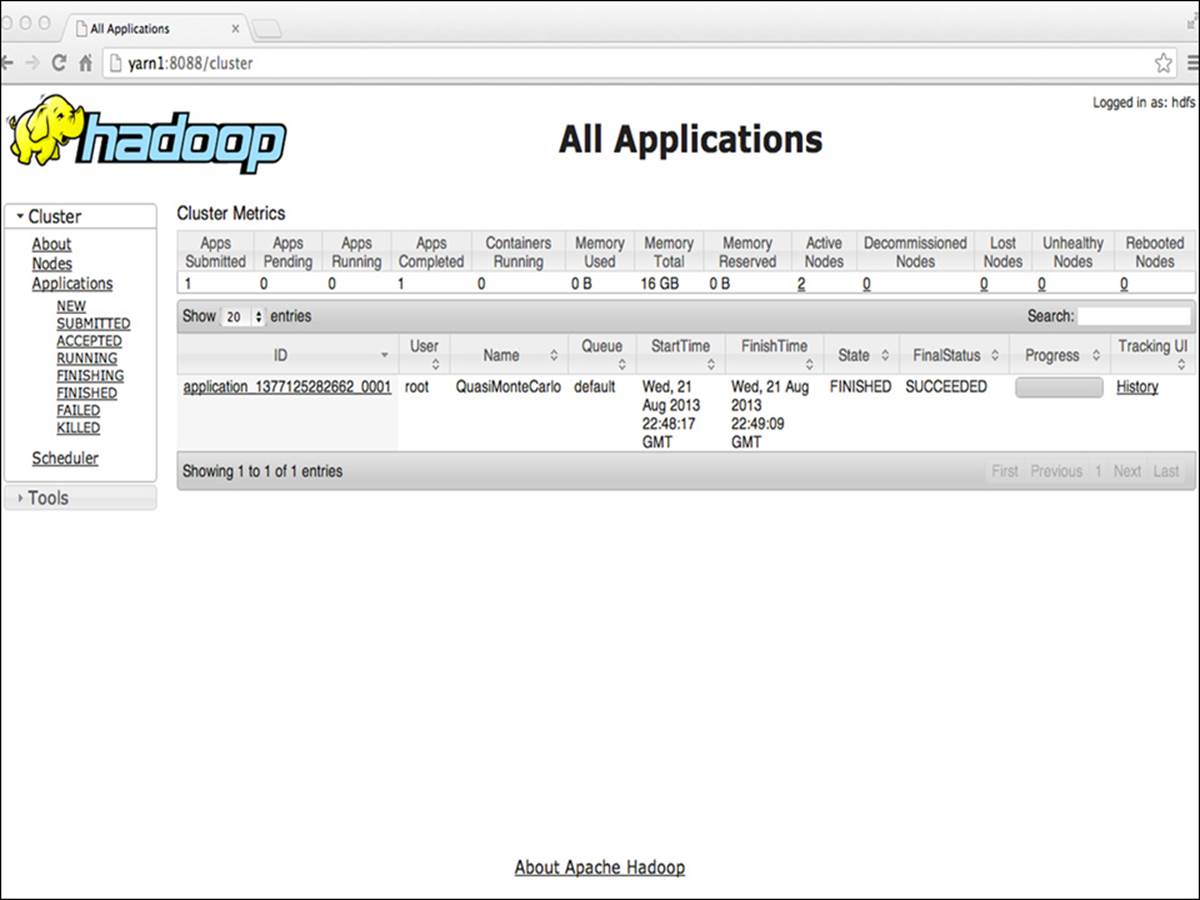

Similar to Hadoop version 1, many aspects of Hadoop version 2 are available via the built-in web UI. The web UI can be accessed by directly accessing the following URL in your favorite browser or by issuing the following command as the user hdfs (using your local hostname):

$ firefox http://hostname:8088/cluster

An example of the UI is shown in Figure 5.1.

Figure 5.1 YARN web UI

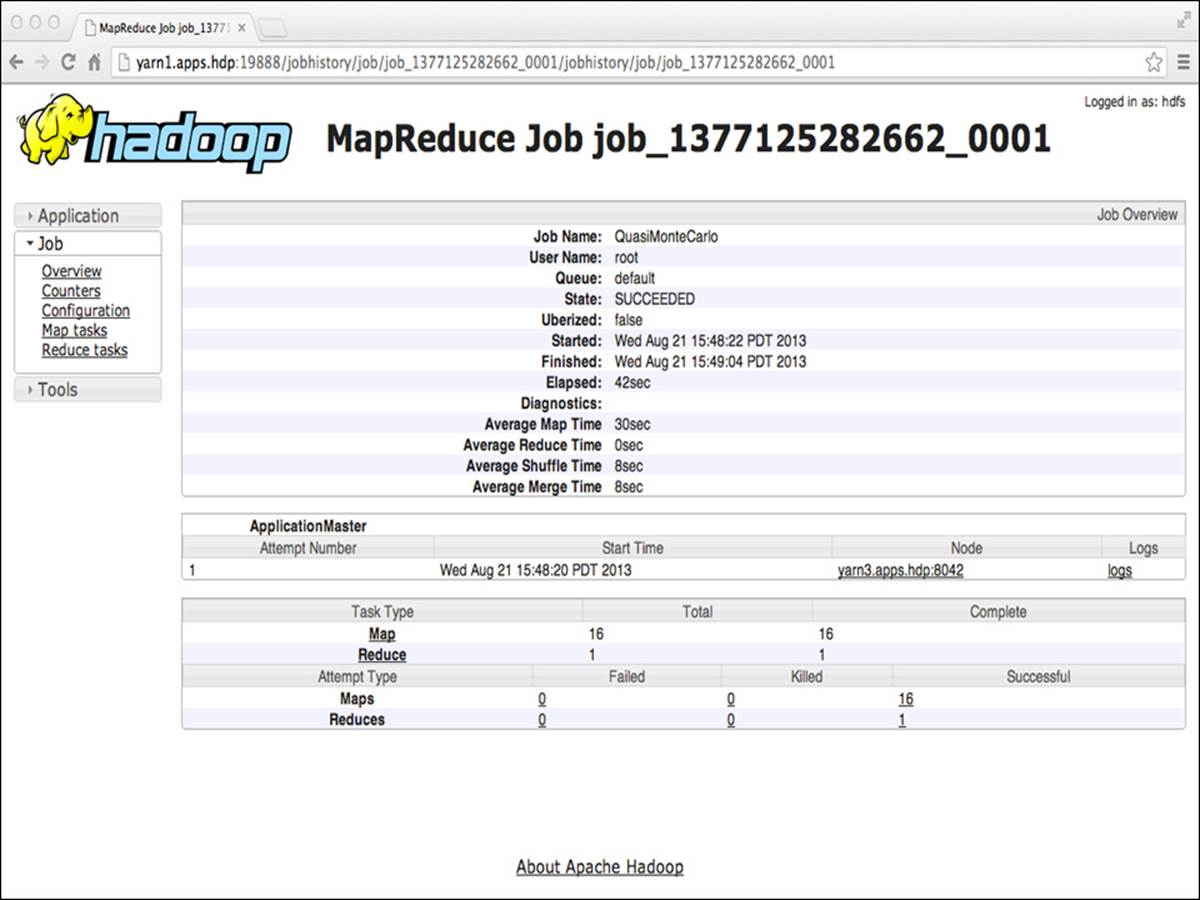

The test job should be listed in the UI window. You can find out about the job history by clicking the History link on the right side of the job summary. When you do so, the window shown in Figure 5.2 should be displayed.

Figure 5.2 YARN pi example job history

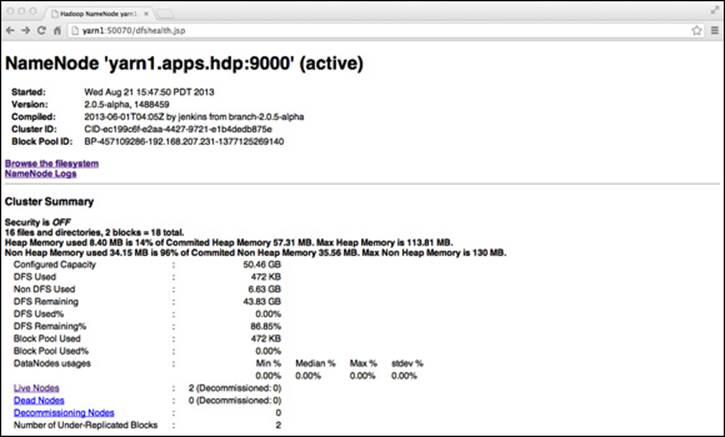

To check the status of your HDFS file system, enter hostname:50070 into the browser. A page similar to Figure 5.3 should be displayed.

Figure 5.3 HDFS NameNode web UI

Note

The output with Hadoop version 2.2.0 may look slightly different than it does in Figures 5.1, 5.2, and 5.3.

If the HDFS file system looks fine and there are no dead nodes, then your Hadoop cluster should be fully functional. You can investigate other aspects of your Hadoop 2 cluster by exploring some of the links on both the HDFS and YARN UI pages.

Script-based Uninstall

To uninstall the Hadoop 2 installation, use the uninstall-hadoop2.sh script. Make sure any changes you made to the install-hadoop2.sh script, such as the Hadoop version number (HADOOP_VERSION), are copied to the uninstall script.

Configuration File Processing

The install script provides some commands that you will find useful for processing Hadoop XML files. If you examine the install-hadoop2.sh script, you should notice that several commands are used to create the Hadoop XML configuration files:

create_config --file filename

put_config --file filename --property pname --value pvalue

del_config --file filename --property pname

where filename is the name of the XML file, pname is the property to be defined, and pvalue is the actual value. The functions are defined in hadoop-xml-conf.sh, which is part of the hadoop2-install-scripts.tgz archive. See the book repository (Appendix A) for download instructions. Basically, these scripts facilitate the creation of Hadoop XML configuration files. If you want to customize the installation or make changes to the XML files, these scripts may be useful.

Configuration File Settings

The following is a brief description of the settings specified in the Hadoop configuration files by the installation script. These files are located in $HADOOP_HOME/etc/hadoop.

core-site.xml

In this file, we define two essential properties for the entire system.

hdfs://$nn:9000 --> fs.default.name

$HTTP_STATIC_USER --> hadoop.http.staticuser.user

First, we define the name of the default file system we wish to use. Because we are using HDFS, we will set this value to hdfs://$nn:9000 ($nn is the NameNode we specified in the script and 9000 is the standard HDFS port). Next we add the hadoop.http.staticuser.user (hdfs) that we defined in the install script. This login is used as the default user for the built-in web user interfaces.
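To make the result concrete, the sketch below prints what a core-site.xml holding these two properties could look like. The functions emit_property and emit_core_site are hypothetical stand-ins written for this illustration; they are not the put_config function from hadoop-xml-conf.sh, they perform no XML escaping, and the file the install script actually writes may differ in layout.

```shell
# emit_property NAME VALUE
# Prints one Hadoop <property> element. Hypothetical stand-in used only to
# show the resulting XML; it is not the put_config function from
# hadoop-xml-conf.sh and does no XML escaping.
emit_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# emit_core_site NAMENODE_HOST STATIC_USER
# Prints a core-site.xml body with the two properties described above.
emit_core_site() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    emit_property fs.default.name "hdfs://$1:9000"
    emit_property hadoop.http.staticuser.user "$2"
    printf '</configuration>\n'
}

# Example (nn.example.com is a placeholder hostname):
#   emit_core_site nn.example.com hdfs
```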

hdfs-site.xml

The hdfs-site.xml file holds information about the Hadoop HDFS file system. Most of these values were set at the beginning of the script. They are copied as follows:

$NN_DATA_DIR --> dfs.namenode.name.dir

$SNN_DATA_DIR --> fs.checkpoint.dir

$SNN_DATA_DIR --> fs.checkpoint.edits.dir

$DN_DATA_DIR --> dfs.datanode.data.dir

The remaining two values are set to the standard default port numbers ($nn is the NameNode and $snn is the SecondaryNameNode we input to the script):

$nn:50070 --> dfs.namenode.http-address

$snn:50090 --> dfs.namenode.secondary.http-address

mapred-site.xml

Users who are familiar with Hadoop version 1 may notice that this is a known configuration file. Given that MapReduce is just another YARN framework, it needs its own configuration file. The script specifies the following settings:

yarn --> mapreduce.framework.name

$mr_hist:10020 --> mapreduce.jobhistory.address

$mr_hist:19888 --> mapreduce.jobhistory.webapp.address

/mapred --> yarn.app.mapreduce.am.staging-dir

The first property is mapreduce.framework.name. For this property, there are three valid values: local (default), classic, or yarn. Specifying “local” for this value means that the MapReduce Application is run locally in a process on the client machine, without using any cluster resources. This local process will execute the map and reduce tasks for a given job; because it is local, it doesn’t need to shuffle data from map task output on one server to reduce task input on another server. Typically, this means that there will be one map task and one reduce task.

Specifying “classic” for the mapreduce.framework.name property is appropriate when there is a Hadoop 1.x JobTracker running in your cluster where Hadoop can submit the job. This property exists to accommodate unforeseen situations where there are backward-compatibility problems with users’ MapReduce jobs and the need for a classic job submission process to a JobTracker is unavoidable.

As we are interested in using yarn, we will set mapreduce.framework.name to “yarn” and use the new MapReduce framework provided by YARN.

The next two properties, mapreduce.jobhistory.address and mapreduce.jobhistory.webapp.address, may seem similar but have some subtle differences. The mapreduce.jobhistory.address property is the host and port where the MapReduce application will send job history via its own internal protocol. The mapreduce.jobhistory.webapp.address is where an administrator or a user can view the details of MapReduce jobs that have completed.

Finally, we specify a property for a MapReduce staging directory. When a MapReduce job is submitted to YARN, the MapReduce ApplicationMaster will create temporary data in HDFS for the job and will need a staging area for this data. The property yarn.app.mapreduce.am.staging-dir is where we designate such a directory in HDFS (i.e., /mapred).

This staging area will also be used by the job history server. The installation script will make sure that the proper permissions and subdirectories are created (i.e., /mapred/history/done_intermediate).

yarn-site.xml

The final configuration file is yarn-site.xml. The script sets the following values:

mapreduce.shuffle --> yarn.nodemanager.aux-services

org.apache.hadoop.mapred.ShuffleHandler --> yarn.nodemanager.aux-services.mapreduce.shuffle.class

$yarn_proxy:$YARN_PROXY_PORT --> yarn.web-proxy.address

$rmgr:8030 --> yarn.resourcemanager.scheduler.address

$rmgr:8031 --> yarn.resourcemanager.resource-tracker.address

$rmgr:8032 --> yarn.resourcemanager.address

$rmgr:8033 --> yarn.resourcemanager.admin.address

$rmgr:8088 --> yarn.resourcemanager.webapp.address

The yarn.nodemanager.aux-services property tells the NodeManager that a MapReduce container will have to do a shuffle from the map tasks to the reduce tasks. Previously, the shuffle step was part of the monolithic MapReduce TaskTracker. With YARN, the shuffle is an auxiliary service and must be set in the configuration file. In addition, the yarn.nodemanager.aux-services.mapreduce.shuffle.class property tells YARN which class to use to do the actual shuffle. The class we use for the shuffle handler is org.apache.hadoop.mapred.ShuffleHandler. Although it’s possible to write your own shuffle handler by extending this class, it is recommended that the default class be used.

The next property is the yarn.web-proxy.address. This property is part of the installation process because we decided to run the YARN Proxy Server as a separate process. If we didn’t configure it this way, the Proxy Server would run as part of the ResourceManager process. The Proxy Server aims to lessen the possibility of security issues. An ApplicationMaster will send to the ResourceManager a link for the application’s web UI but, in reality, this link can point anywhere. The YARN Proxy Server lessens the risk associated with this link, but it doesn’t eliminate it.

The remaining settings are the default ResourceManager port addresses.

Start-up Scripts

The Hadoop distribution includes a lot of convenient scripts to start and stop services such as the ResourceManager and NodeManagers. In a production cluster, however, we want the ability to integrate our scripts with the system’s services management. On most Linux systems today, that means integrating with the init system.

Instead of relying on the built-in scripts that ship with Hadoop, we provide a set of init scripts that can be placed in /etc/init.d and used to start, stop, and monitor the Hadoop services. The files are as follows, with each service being identified by name:

hadoop-namenode

hadoop-datanode

hadoop-secondarynamenode

hadoop-resourcemanager

hadoop-nodemanager

hadoop-proxyserver

hadoop-historyserver

Of course, not all services run on all nodes; thus the installation script places the correct files on the requisite nodes. Before starting the service, the script also registers each service with chkconfig. Once these services are installed, they can be easily managed with commands like the following:

# service hadoop-namenode start

# service hadoop-resourcemanager status

# service hadoop-proxyserver restart

# service hadoop-historyserver stop
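Because each node receives only the init scripts for the services it hosts, a short loop can report the status of whatever Hadoop services are present on a given node. The sketch below assumes the scripts were installed under /etc/init.d with the hadoop- names listed earlier; list_hadoop_services is a hypothetical helper name.

```shell
# list_hadoop_services [DIR]
# Prints the names of the hadoop-* init scripts found in DIR
# (default /etc/init.d), one per line. Hypothetical helper for illustration.
list_hadoop_services() {
    dir=${1:-/etc/init.d}
    for script in "$dir"/hadoop-*; do
        [ -e "$script" ] || continue      # the glob matched nothing
        basename "$script"
    done
}

# Example: report the status of every Hadoop service installed on this node.
#   for svc in $(list_hadoop_services); do service "$svc" status; done
```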

Installing Hadoop with Apache Ambari

A script-based manual Hadoop installation can turn out to be a challenging process as it scales out from tens of nodes to thousands of nodes. Because of this complexity, a tool with the ability to manage installation, configuration, and monitoring of the Hadoop cluster became a much-needed addition to the Hadoop ecosystem. Apache Ambari provides the means to handle these simple, yet highly valuable tasks by utilizing an agent on each node to install required components, change configuration files, and monitor performance or failures of nodes either individually or as an aggregate. Both administrators and developers will find many of the Ambari features useful.

Installation with Ambari is faster, easier, and less error prone than manually setting up each service’s configuration file. As an example, a 12-node cluster install of Hortonworks HDP2.X services (HDFS, MRv2, YARN, Hive, Sqoop, ZooKeeper, HCatalog, Oozie, HBase, and Pig) was accomplished in less than one hour with this tool. Ambari can dramatically cut down on the number of people required to install large clusters and increase the speed with which development environments can be created.

Configuration files are maintained by an Ambari server acting as the primary interface to make changes to the cluster. Ambari guarantees that the configuration files on all nodes are the same by automatically redistributing them to the nodes every time a service’s configuration changes. From an operational perspective, this approach provides peace of mind; you know that the entire cluster—from 10 to 4000-plus nodes—is always in sync. For developers, it allows for rapid performance tuning because the configuration files can be easily manipulated.

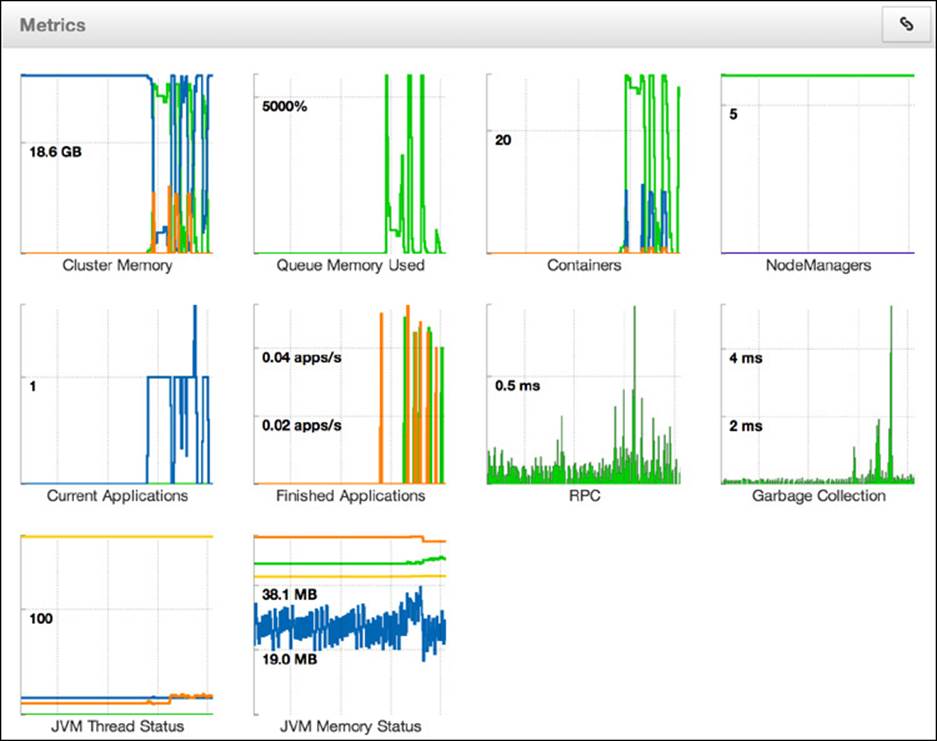

Monitoring encompasses the starting and stopping of services, along with reporting on whether a service is up or down, network usage, HDFS, YARN, and a multitude of other load metrics. Ganglia and Nagios report back to the Ambari server monitoring cluster health on issues ranging from utilization of services such as HDFS storage to failures of stack components or entire nodes. Users can also take advantage of the ability to monitor a number of YARN metrics such as cluster memory, total containers, NodeManagers, garbage collection, and JVM metrics. An example of the Ambari dashboard is shown in Figure 5.4.

Figure 5.4 YARN metrics dashboard

Performing an Ambari-based Hadoop Installation

Compared to a manual installation of Hadoop 2, an Ambari-based installation has significantly fewer software requirements and involves fewer operating system tasks, such as creating users, groups, and directory structures. To manage the cluster with Ambari, we install two components:

1. The Ambari-Server, which should run on its own node, separate from the rest of the cluster

2. An Ambari-Agent on each node of the rest of the cluster that requires managing

For the purposes of this installation, we will reference the HDP 2.0 (Hortonworks Data Platform) documentation for the Ambari Automated Install (see http://docs.hortonworks.com/#2.0 for additional information). In addition, although Ambari may eventually work with other Hadoop installations, we will use the freely available HDP version to ensure a successful installation.

Step 1: Check Requirements

As of this writing, RHEL 5 and 6, CentOS 5 and 6, OEL (Oracle Enterprise Linux) 5 and 6, and SLES 11 are supported by HDP 2; Ubuntu is not supported at this time. Ensure that each node has yum, rpm, scp, curl, and wget. Additionally, ntpd should be running. Hive/HCatalog, Oozie, and Ambari all require their own internal databases; we can control these databases during installation, but they will need to be installed. If your cluster nodes do not have access to the Internet, you will have to mirror the HDP repository and set up your own local repositories.
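The tool requirement is simple to verify on each node. The following sketch reports any of the named commands that cannot be found on the PATH; missing_commands is a hypothetical helper, and it does not check whether ntpd is running.

```shell
# missing_commands CMD...
# Prints each named command that cannot be found on the PATH and returns
# non-zero if any is missing. Hypothetical helper for illustration.
missing_commands() {
    rc=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            rc=1
        fi
    done
    return $rc
}

# Example, run on each node:
#   missing_commands yum rpm scp curl wget
```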

Step 2: Install the Ambari Server

Perform the following actions to download the Ambari repository, add it to your existing yum.repos.d on the server node, and install the packages:

# wget http://public-repo-1.hortonworks.com/ambari-beta/centos6/1.x/beta/ambari.repo

# cp ambari.repo /etc/yum.repos.d

# yum -y install ambari-server

Next we set up the server. At this point, you can decide whether you want to customize the Ambari-Server database; the default is PostgreSQL. You will also be prompted to accept the JDK license unless you specify the --java-home option with the path of the JDK on all nodes in the cluster. For this installation, we will use the default values as signified by the silent install option. If the following command is successful, you should see “Ambari Server ‘setup’ completed successfully.”

# ambari-server setup --silent

Step 3: Install and Start Ambari Agents

Many enterprise security teams have a hard time accepting some of Hadoop’s more unusual requirements, such as root password-less ssh between all nodes in the cluster. Root password-less ssh is used only to automate installation of the Ambari agents and is not required for day-to-day operation. To stay within many security guidelines, we will be performing a manual install of the Ambari-Agent on each node in the cluster. Be sure to set up the repository files on each node as described in Step 2. Optionally, you can use pdsh and pdcp with password-less root to help automate the installation of Ambari agents across the cluster. To install the agent, enter the following for each node:

# yum -y install ambari-agent

Next, configure the Ambari-Agent ini file with the FQDN of the Ambari-Server. By default, this value is localhost. This task can be done using sed.

# sed -i 's/hostname=localhost/hostname=yourAmbariServerFQDNhere/g' /etc/ambari-agent/conf/ambari-agent.ini
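Before touching the real configuration, the substitution can be tried on a scratch file. The sketch below assumes GNU sed (as shipped with the supported Linux distributions); the sample file contents are a simplified stand-in for ambari-agent.ini, and ambari.example.com is a hypothetical server name.

```shell
#!/bin/sh
# Work on a scratch file so the real agent configuration is untouched.
tmp_ini=$(mktemp)
# Simplified stand-in for ambari-agent.ini (illustrative contents only).
printf '[server]\nhostname=localhost\n' > "$tmp_ini"

# Hypothetical server name; substitute the FQDN of your Ambari-Server.
AMBARI_SERVER_FQDN="ambari.example.com"

# Same substitution as in the text, applied to the scratch file.
sed -i "s/hostname=localhost/hostname=${AMBARI_SERVER_FQDN}/g" "$tmp_ini"

# Show the resulting hostname line to confirm the change.
grep '^hostname=' "$tmp_ini"
```

The same grep command, pointed at /etc/ambari-agent/conf/ambari-agent.ini, is a quick way to confirm the edit on each node.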

On all nodes, start the Ambari agent with the following command:

# ambari-agent start
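For sites that do permit password-less root ssh, the per-node commands in this step can be wrapped with pdsh and pdcp. The following is a sketch under stated assumptions: the node names and server FQDN are hypothetical, the run and install_agents helpers are our own, and the script only prints the commands unless DRY_RUN is set to 0.

```shell
#!/bin/sh
# Hypothetical values; replace with your own hosts and server name.
NODES="node1,node2,node3"
AMBARI_SERVER_FQDN="ambari.example.com"

# Default to a dry run that only prints each command.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode; otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "+ $*"
    else
        "$@"
    fi
}

# Repeat the manual agent steps from the text on every node in NODES.
install_agents() {
    # Copy the repository file from Step 2 to each node.
    run pdcp -w "$NODES" /etc/yum.repos.d/ambari.repo /etc/yum.repos.d/
    # Install the agent package.
    run pdsh -w "$NODES" "yum -y install ambari-agent"
    # Point each agent at the Ambari-Server.
    run pdsh -w "$NODES" "sed -i 's/hostname=localhost/hostname=$AMBARI_SERVER_FQDN/g' /etc/ambari-agent/conf/ambari-agent.ini"
    # Start the agents.
    run pdsh -w "$NODES" "ambari-agent start"
}

install_agents
```

Reviewing the dry-run output first is a cheap safeguard, because a mistake in the node list would otherwise be repeated on every host at once.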

Step 4: Start the Ambari Server

To start the Ambari-Server, enter the following command:

# ambari-server start

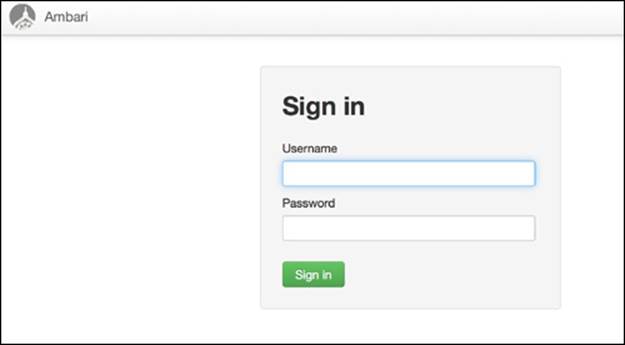

Log into the Ambari-Server web console using http://AmbariServerFQDN:8080. If everything is working properly, you should see the login screen shown in Figure 5.5. The default login is username = admin and password = admin.

Figure 5.5 Ambari sign-in screen

Step 5: Install an HDP2.X Cluster

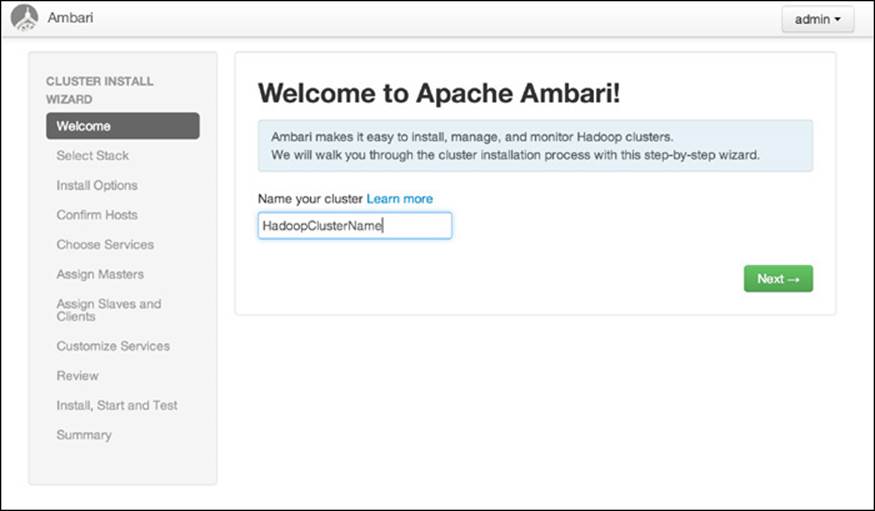

The first task is to name your cluster.

1. Enter a name for your cluster and click the green “Next” button (see Figure 5.6).

Figure 5.6 Enter a cluster name

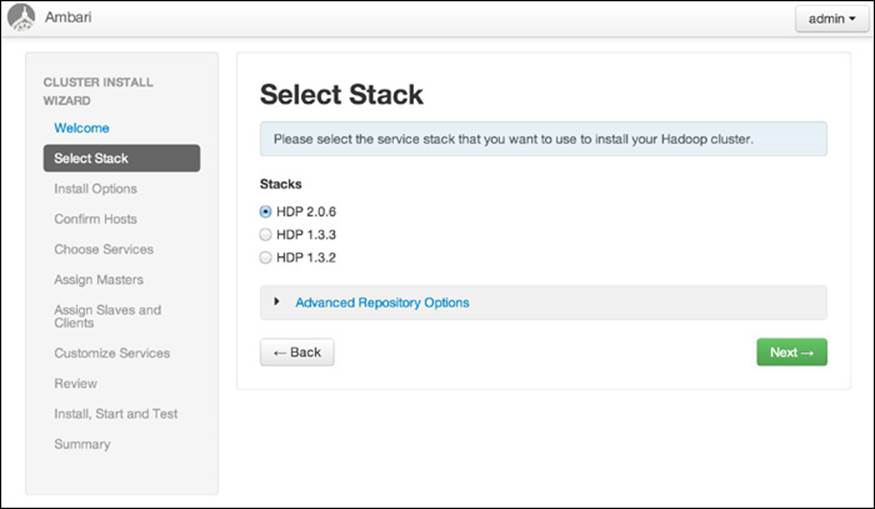

2. The next option is to select the version of the HDP software stack. Currently, the options include only recent versions of HDP. Select the 2.X stack option and click Next, as shown in Figure 5.7.

Figure 5.7 Select a Hadoop 2.X software stack

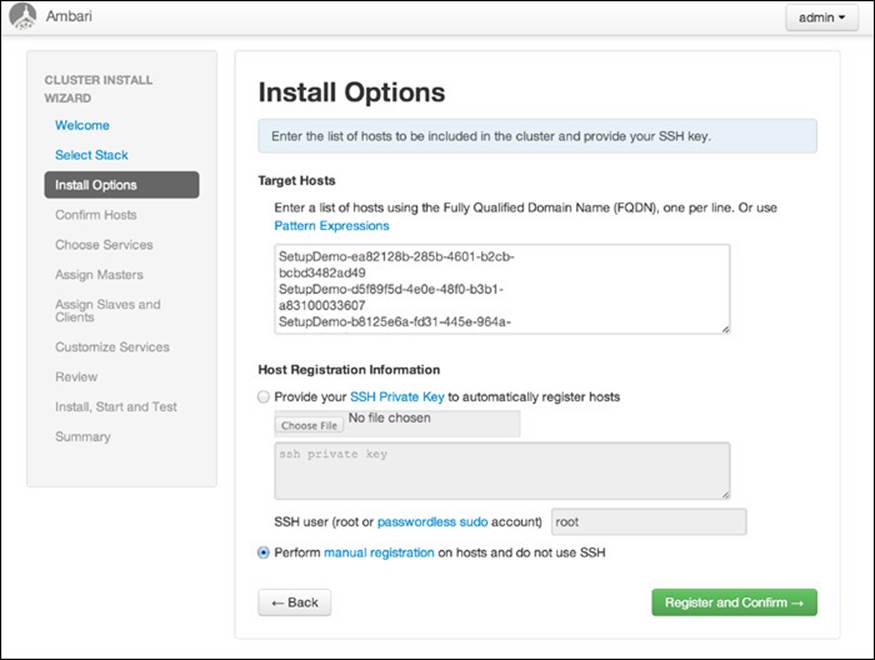

3. Next, Ambari will ask for your list of nodes, one per line, and ask you to select manual or private key registration. In this installation, we are using the manual method (see Figure 5.8).

Figure 5.8 Hadoop install options

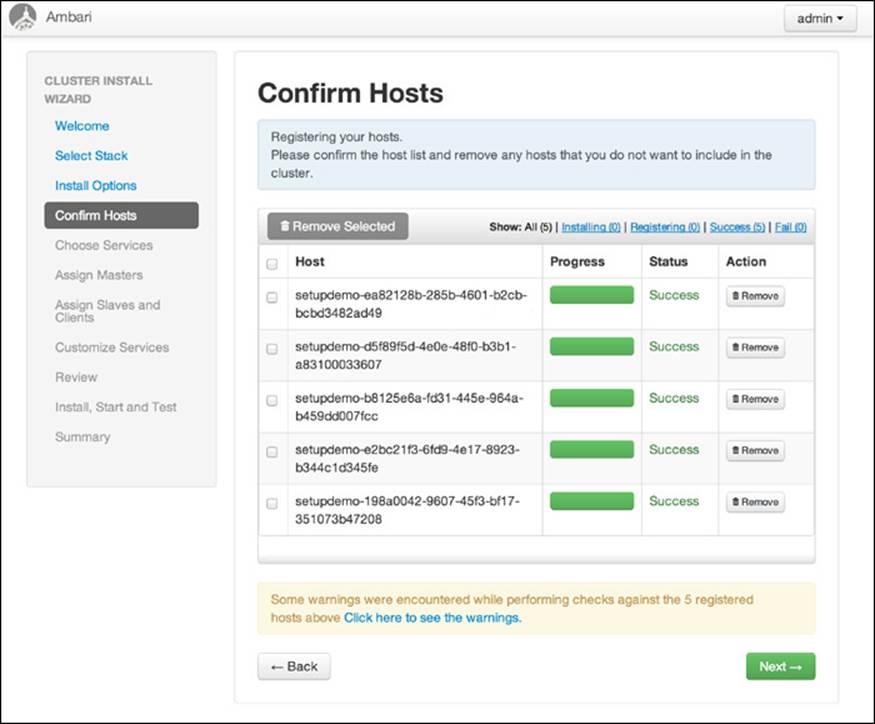

The installed Ambari-Agents should register with the Ambari-Server at this point. Any install warnings will also be displayed here, such as ntpd not running on the nodes. If there are issues or warnings, the registration window will indicate these as shown in Figure 5.9. Note that the example is installing a “cluster” of one node.

Figure 5.9 Ambari host registration screen

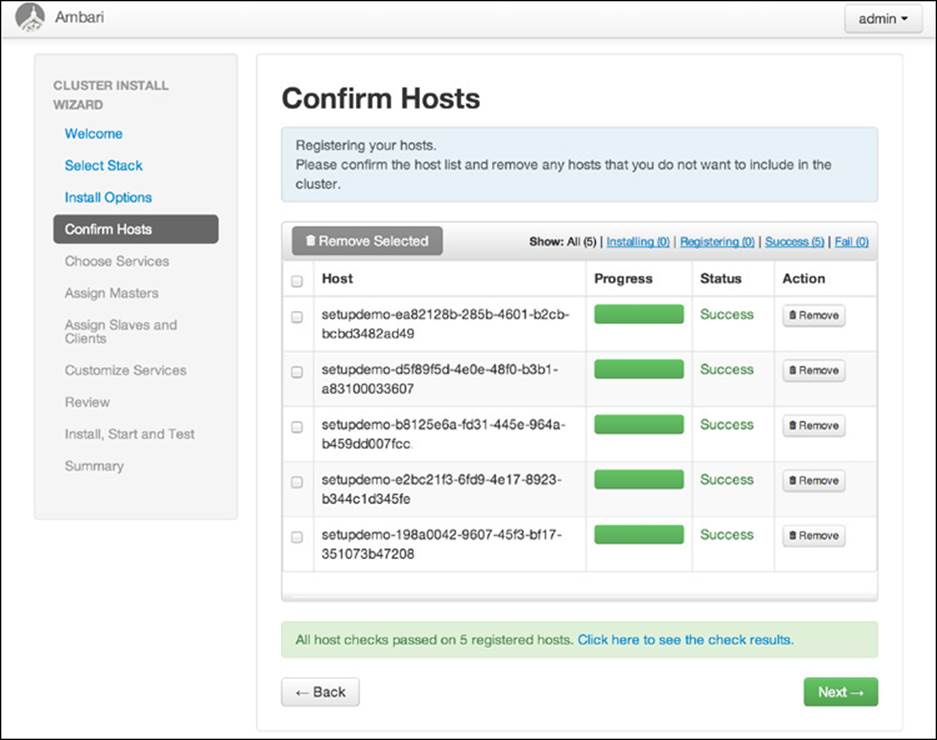

If everything is set correctly, your window should look like Figure 5.10.

Figure 5.10 Successful Ambari host registration screen

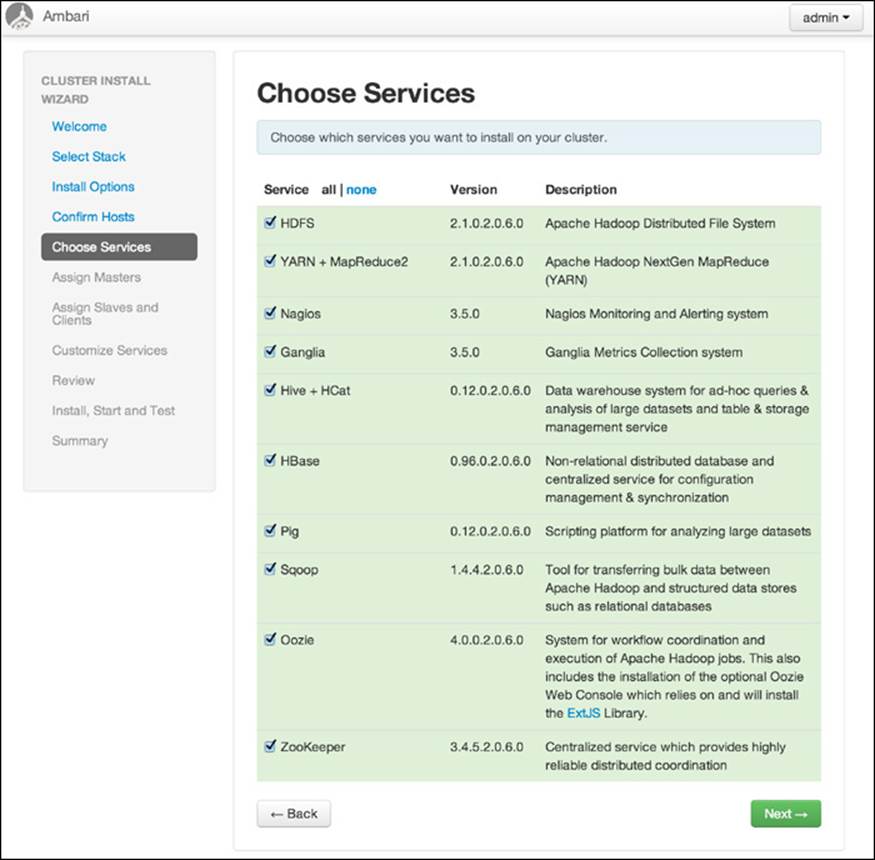

4. The next step is to select which components of the HDP2.X stack you want to install. At the very least, you will want to install HDFS, YARN, and MapReduceV2. In the example shown in Figure 5.11, we install everything.

Figure 5.11 Ambari services selection

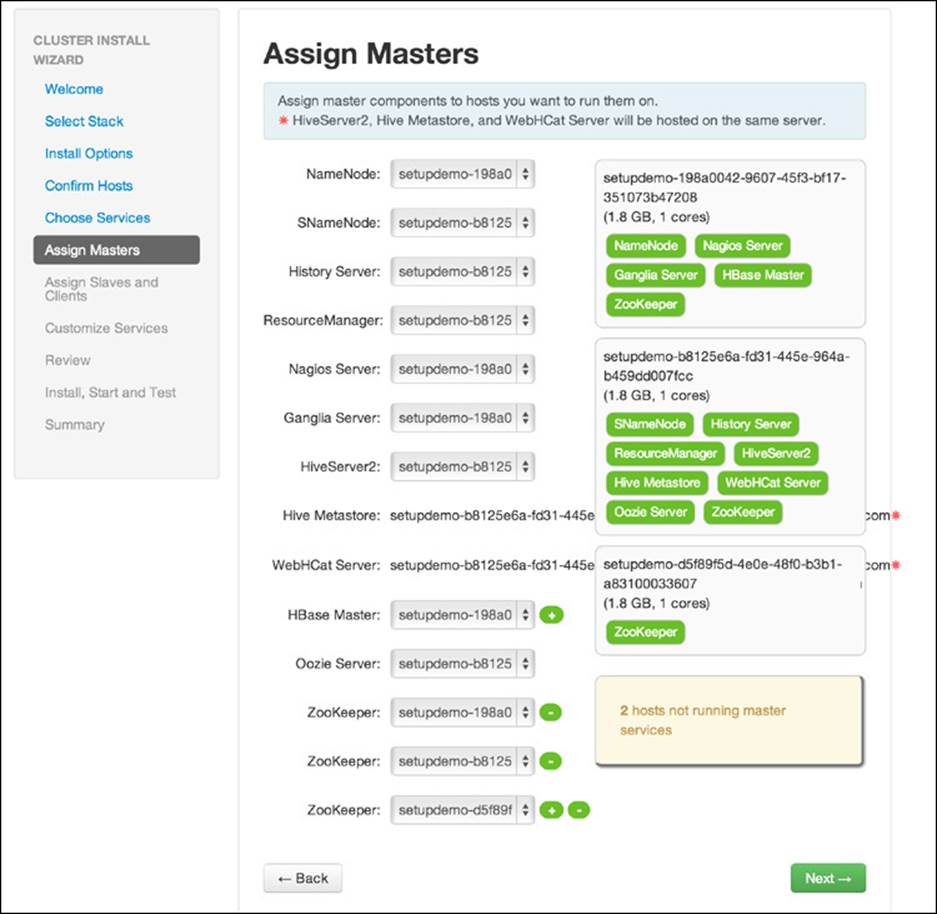

5. The next step is to assign functions to the various nodes in your cluster (see Figure 5.12). The Assign Masters window allows for the selection of master nodes—that is, NameNode, ResourceManager, HBaseMaster, Hive Server, Oozie Server, etc. All nodes that have registered with the Ambari-Server will be available in the drop-down selection box. Remember that the ResourceManager has replaced the JobTracker from Hadoop version 1 and in a multi-node installation should always be given its own dedicated node.

Figure 5.12 Ambari host assignment

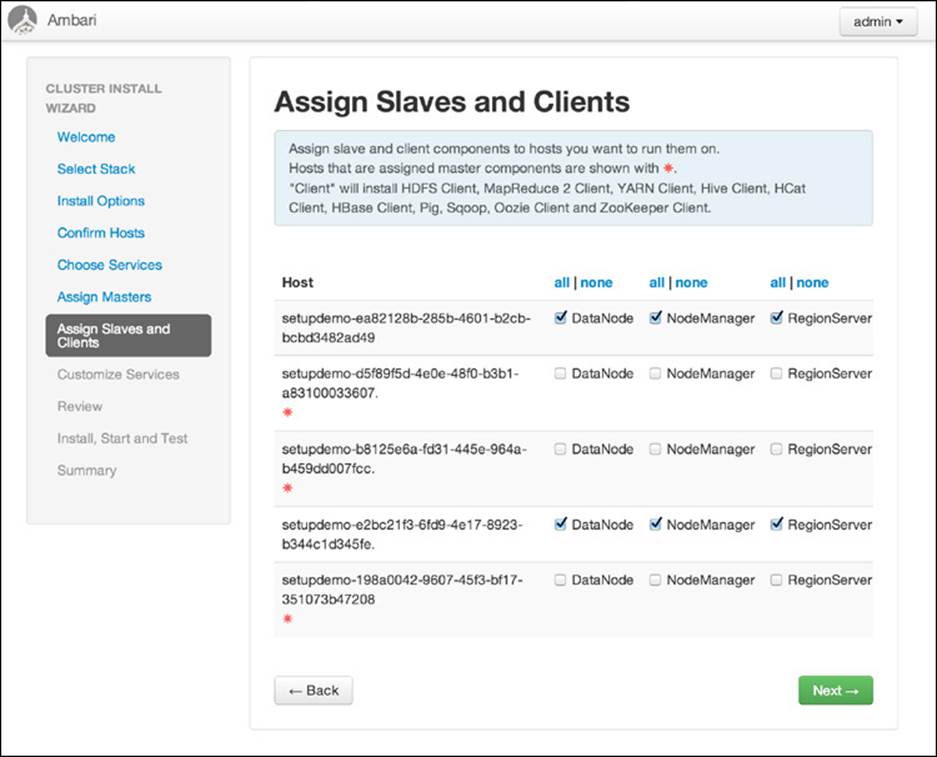

6. In this step, you assign NodeManagers (which run YARN containers), RegionServers, and DataNodes (HDFS). Remember that the NodeManager has replaced the TaskTracker from Hadoop version 1, so you should always co-locate a NodeManager with each DataNode to ensure that local data is available for YARN containers. The selection window is shown in Figure 5.13. Again, this example has only a single node.

Figure 5.13 Ambari slave and client component assignment

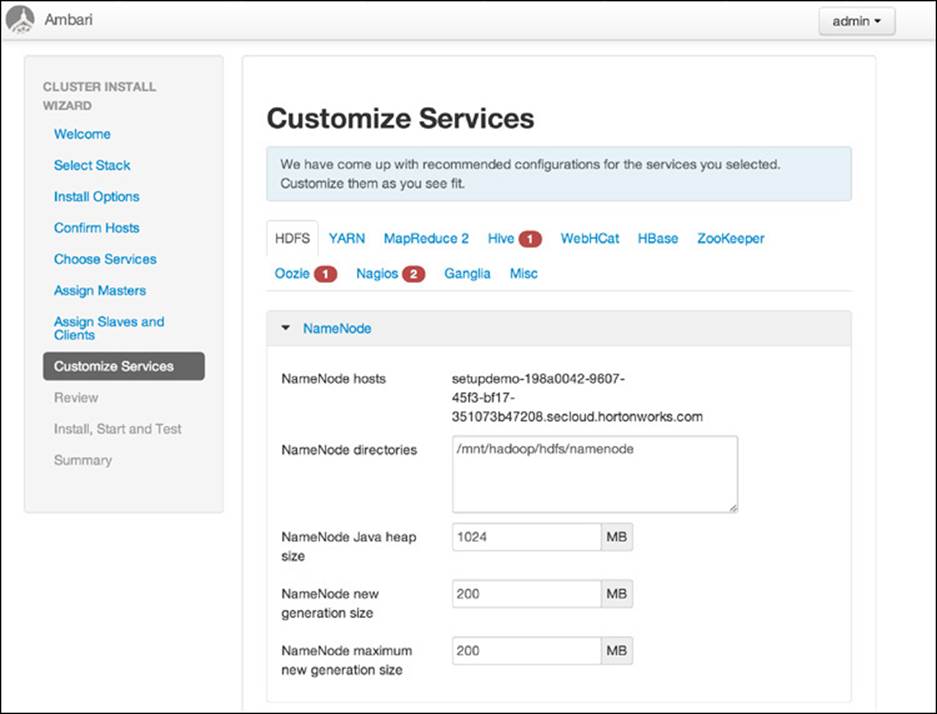

7. The next set of screens allows you to define any initial parameter changes and usernames for the services selected for installation (e.g., Hive, Oozie, and Nagios). You must set the database passwords and the alert reporting email address before continuing. The Hive database setup is pictured in Figure 5.14.

Figure 5.14 Ambari customized services window

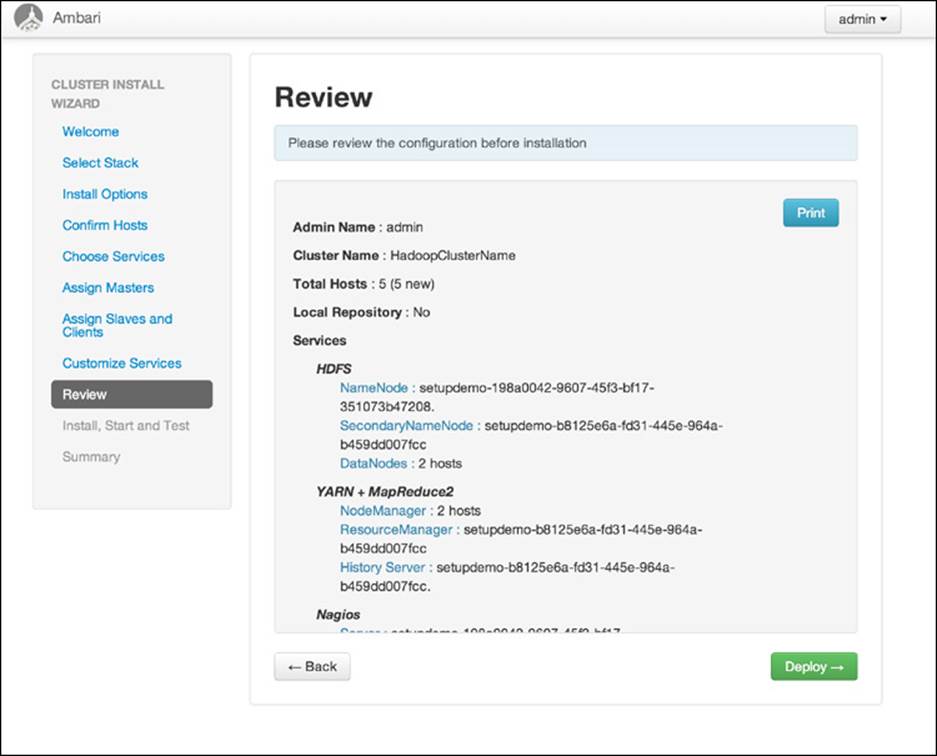

8. The final step before installing Hadoop is a review of your configuration. Figure 5.15 summarizes the actions that are about to take place. Be sure to double-check all settings before you commit to an install.

Figure 5.15 Ambari final review window

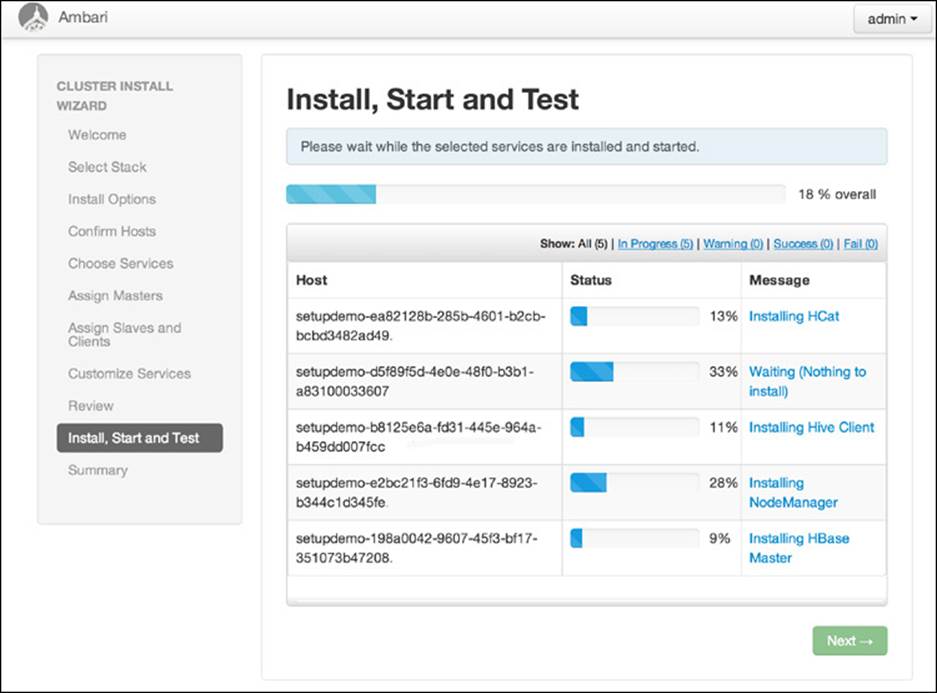

9. During the installation step shown in Figure 5.16, the cluster is actually provisioned with the various software packages. By clicking on the node name, you can drill down into the installation status of every component. Should the installation encounter any errors on specific nodes, these errors will be highlighted on this screen.

Figure 5.16 Ambari deployment process

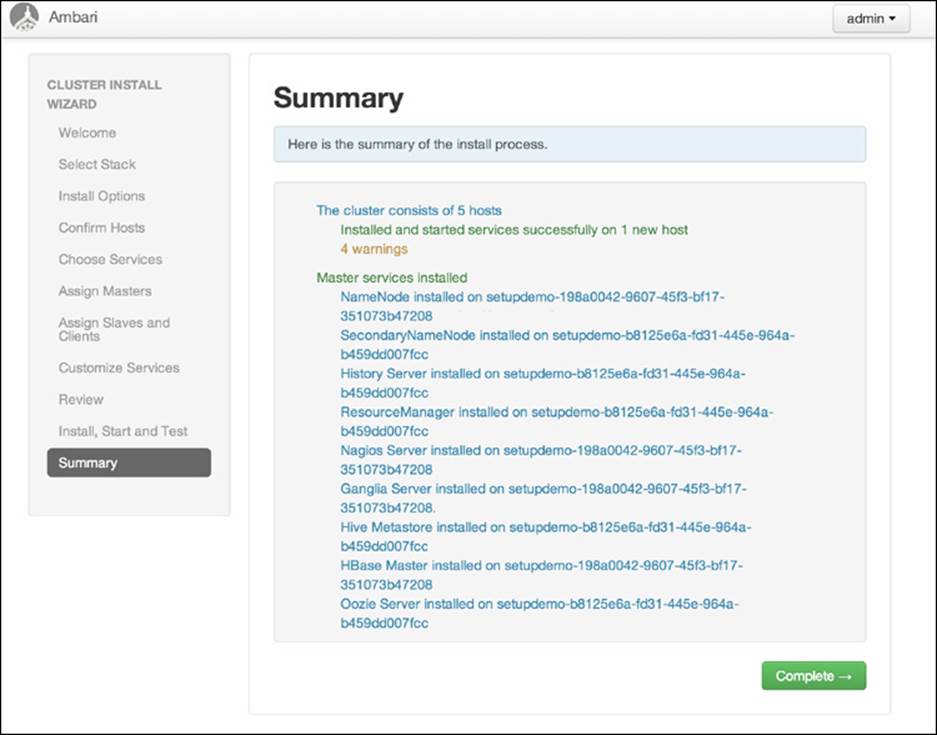

10. Once the installation of the nodes is complete, a summary window similar to Figure 5.17 will be displayed. The screen indicates which tasks were completed and identifies any preliminary testing of cluster services.

Figure 5.17 Ambari summary window

Congratulations! You have just completed installing HDP2.X with Ambari. Consult the online Ambari documentation (http://docs.hortonworks.com/#2) for further details on the installation process.

If you are using Hive and have an FQDN longer than 60 characters (as is common in some cloud deployments), please note that this can cause authentication issues with the MySQL database that Hive installs by default with Ambari. To work around this issue, start the MySQL database with the “--skip-name-resolve” option to disable FQDN resolution and authenticate based only on IP address.
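One way to make this option persistent is to place it in the [mysqld] section of the MySQL option file, where skip-name-resolve has the same effect as the command-line flag. The sketch below assumes GNU sed; the helper name enable_skip_name_resolve is our own, and it is demonstrated on a scratch file rather than on the real option file (commonly /etc/my.cnf on Red Hat–based systems, although the location may differ on your nodes).

```shell
#!/bin/sh
# Add skip-name-resolve under [mysqld] in the given option file,
# unless the option is already present. Hypothetical helper.
enable_skip_name_resolve() {
    cnf="$1"
    if grep -q '^skip-name-resolve' "$cnf"; then
        return 0
    fi
    # GNU sed: append the option on the line after the [mysqld] header.
    sed -i '/^\[mysqld\]/a skip-name-resolve' "$cnf"
}

# Demonstrate on a scratch file with illustrative contents.
tmp_cnf=$(mktemp)
printf '[mysqld]\ndatadir=/var/lib/mysql\n' > "$tmp_cnf"
enable_skip_name_resolve "$tmp_cnf"
cat "$tmp_cnf"
```

MySQL must be restarted for the change to take effect. Note that with name resolution disabled, any MySQL account that is defined by host name rather than by IP address will no longer match.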

Wrap-up

It is possible to perform an automated script-based install of moderate to very large clusters. The use of parallel distributed shell and copy commands (pdsh and pdcp, respectively) makes a fully remote installation on any number of nodes possible. The script-based install process is designed to be flexible, and users can easily modify it for their specific needs on Red Hat (and derivative)–based distributions.

In addition to the install script, some useful functions for creating and changing the Hadoop XML property files are made available to users. To aid with start-up and shutdown of Hadoop services, the scripted install also provides SysV init scripts for Red Hat–based systems.

Finally, a graphical install process using Apache Ambari was described in this chapter. With Ambari, the entire Hadoop installation process can be automated with a powerful point-and-click interface. As we will see in Chapter 6, “Apache Hadoop YARN Administration,” Ambari can also be used for administration purposes.

Installing Hadoop 2 YARN from scratch is also easy. The single-machine installation outlined in Chapter 2, “Apache Hadoop YARN Install Quick Start,” can be used as a guide. Again, in custom scenarios, pdsh and pdcp can be very valuable.