Apache Hadoop YARN: Moving beyond MapReduce and Batch Processing with Apache Hadoop 2 (2014)

7. Apache Hadoop YARN Architecture Guide

Chapter 4 provided a functional overview of YARN components and a brief description of how a YARN application flows through the system. In this chapter, we will delve deeper into the inner workings of YARN and describe how the system is implemented from the ground up.

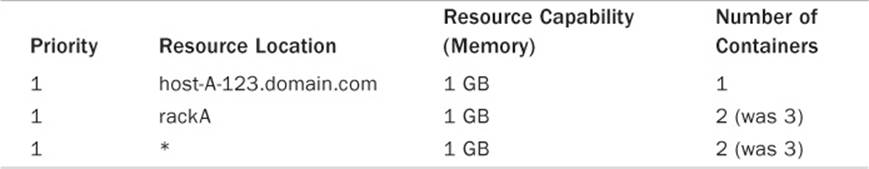

YARN separates all of its functionality into two layers: a platform layer responsible for resource management and what is called first-level scheduling, and a framework layer that coordinates application execution and second-level scheduling. Specifically, a per-cluster ResourceManager tracks usage of resources, monitors the health of various nodes in the cluster, enforces resource-allocation invariants, and arbitrates conflicts among users. By separating these multiple duties that were previously shouldered by a single daemon, the JobTracker, in Hadoop version 1, the ResourceManager can simply allocate resources centrally based on a specification of an application’s requirements, but ignore how the application makes use of those resources. That responsibility is delegated to an ApplicationMaster, which coordinates the logical execution of a single application by requesting resources from the ResourceManager, generating a physical plan of its work, making use of the resources it receives, and coordinating the execution of such a physical plan.

Overview

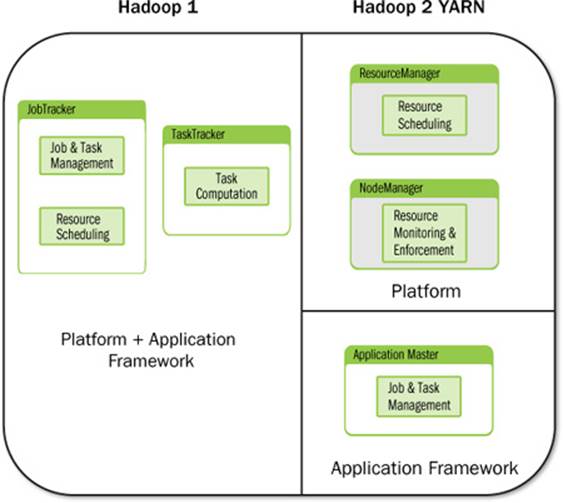

The ResourceManager and NodeManagers running on individual nodes come together to form the core of YARN and constitute the platform. ApplicationMasters and the corresponding containers come together to form a YARN application. This separation of concerns is shown in Figure 7.1. From YARN’s point of view, all users interact with it by submitting applications that then make use of the resources offered by the platform. From the end-users’ perspective, they may either (1) interact with YARN directly by running applications on the platform or (2) interact with a framework, which in turn runs as an application on top of YARN. Frameworks may expose higher-level functionality to the end-users. As an example, the MapReduce code that comes bundled with Apache Hadoop can be looked at as a framework running on top of YARN. On the one hand, MapReduce gives users a map and reduce abstraction that they can code against, with the framework taking care of the gritty details of running smoothly on a distributed system: failure handling, reliability, resource allocation, and so on. On the other hand, MapReduce uses the underlying platform’s APIs to implement such functionality.

Figure 7.1 Hadoop version 1 with integrated platform and applications framework versus Hadoop version 2 with separate platform and application framework

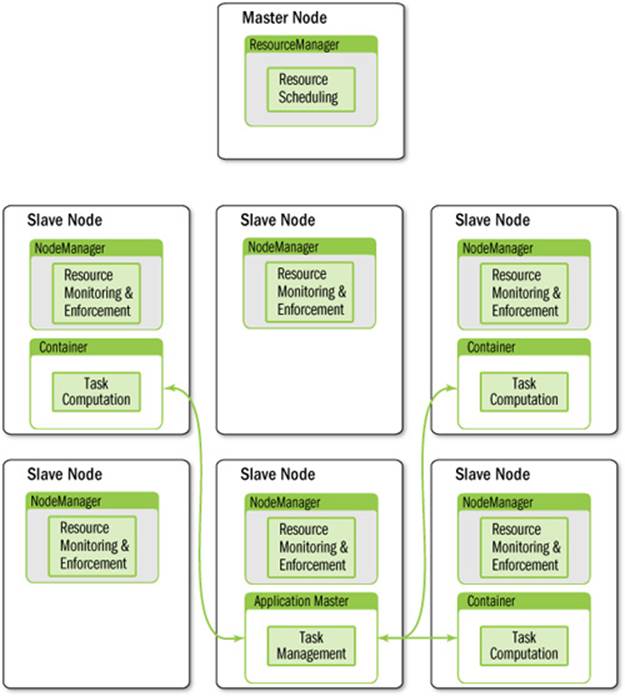

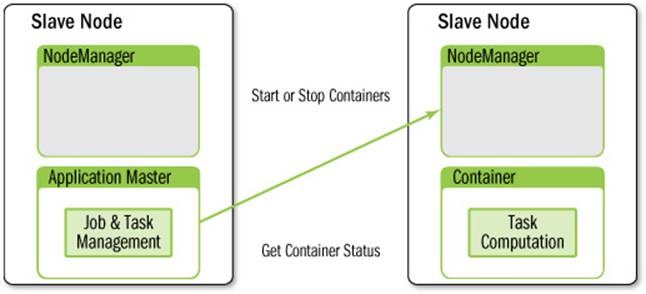

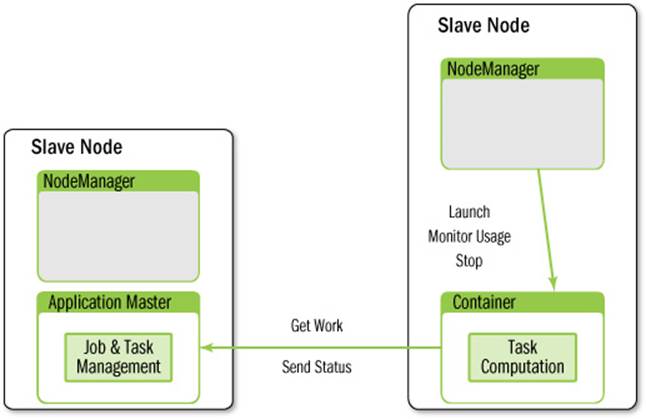

The overall architecture is described in Figure 7.2. The ResourceManager provides scheduling of applications. Each application is managed by an ApplicationMaster (a per-application manager) that requests per-task computation resources in the form of containers. Containers are scheduled by the ResourceManager and locally managed by the per-node NodeManager.

Figure 7.2 YARN architectural overview

A detailed description of the responsibilities and components of the ResourceManager, NodeManager, and ApplicationMaster follows.

ResourceManager

As previously described, the ResourceManager is the master that arbitrates all the available cluster resources, thereby helping manage the distributed applications running on the YARN platform. It works together with the following components:

- The per-node NodeManagers, which take instructions from the ResourceManager, manage resources available on a single node, and accept container requests from ApplicationMasters
- The per-application ApplicationMasters, which are responsible for negotiating resources with the ResourceManager and for working with the NodeManagers to start, monitor, and stop the containers

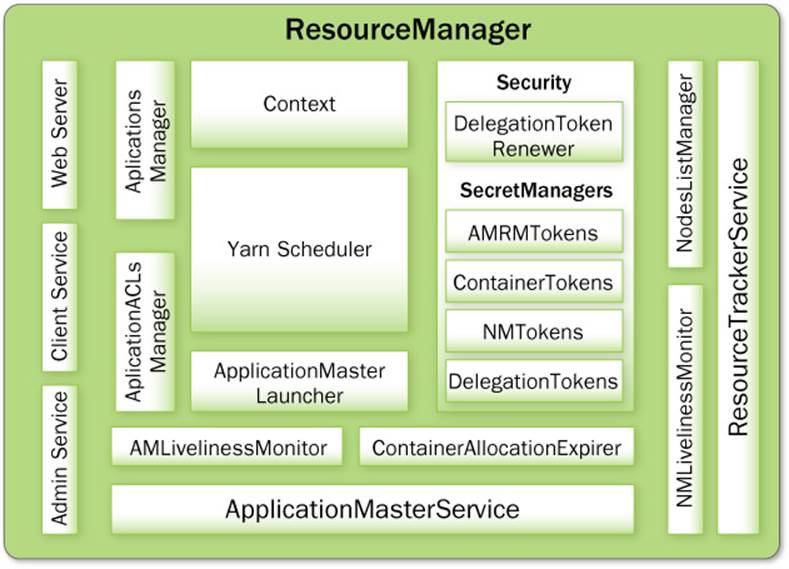

Overview of the ResourceManager Components

The ResourceManager components are illustrated in Figure 7.3. To better describe the workings of each component, we introduce them in groups according to the external entity they serve: clients, the NodeManagers, the ApplicationMasters, or other internal core components.

Figure 7.3 ResourceManager components

Client Interaction with the ResourceManager

The first interaction point of a user with the platform comes in the form of a client to the ResourceManager. The following components in ResourceManager interact with the client.

Client Service

This service implements ApplicationClientProtocol, the basic client interface to the ResourceManager. This component handles all the remote procedure call (RPC) communications to the ResourceManager from the clients, including operations such as the following:

- Application submission
- Application termination
- Exposing information about applications, queues, cluster statistics, user ACLs, and more

Client Service provides additional protection to the ResourceManager depending on whether the administrator configured YARN to run in secure or nonsecure mode. In secure mode, the Client Service makes sure that all incoming requests from users are authenticated (for example, by Kerberos) and then authorizes each user by looking up application-level Access Control Lists (ACLs) and subsequently queue-level ACLs. For all clients that cannot be authenticated with Kerberos directly, this service also exposes APIs to obtain what are known as the ResourceManager delegation tokens. Delegation tokens are special objects that a Kerberos-authenticated client can first obtain by securely communicating with the ResourceManager and then pass along to its nonauthenticated processes. Any client process that has a handle to these delegation tokens can communicate with the ResourceManager securely without separately authenticating with Kerberos first.

Administration Service

While Client Service is responsible for typical user invocations like application submission and termination, there is a list of activities that administrators of a YARN cluster have to perform from time to time. To make sure that administration requests don’t get starved by the regular users’ requests and to give the operators’ commands a higher priority, all of the administrative operations are served via a separate interface called Administration Service. ResourceManagerAdministrationProtocol is the communication protocol that is implemented by this component. Some of the important administrative operations are highlighted here:

- Refreshing queues: for example, adding new queues, stopping existing queues, and reconfiguring queues to change some of their properties like capacities, limits, and more
- Refreshing the list of nodes handled by the ResourceManager: for example, adding newly installed nodes or decommissioning existing nodes for various reasons
- Adding new user-to-group mappings, adding/updating administrator ACLs, modifying the list of superusers, and so on

Both Client Service and Administration Service work closely with ApplicationManager for ACL enforcement.

Application ACLs Manager

The ResourceManager needs to gate the user-facing APIs like the client and administrative requests so that they are accessible only to authorized users. This component maintains the ACLs per application and enforces them. Application ACLs are enabled on the ResourceManager by setting to true the configuration property yarn.acl.enable. There are two types of application accesses: (1) viewing and (2) modifying an application. ACLs against the view access determine who can “view” some or all of the application-related details on the RPC interfaces, web UI, and web services. The modify-application ACLs determine who can “modify” the application (e.g., kill the application).

An ACL is a list of users and groups who can perform a specific operation. Users can specify the ACLs for their submitted application as part of the ApplicationSubmissionContext. These ACLs are tracked per application by the ACLsManager and used for access control whenever a request comes in. Note that irrespective of the ACLs, all administrators (determined by the configuration property yarn.admin.acl) can perform any operation.

The same ACLs are transferred over to the ApplicationMaster so that the ApplicationMaster itself can use them for users accessing various services running inside the ApplicationMaster. The NodeManager also receives the same ACLs as part of the ContainerLaunchContext (discussed later in this chapter) when a container is launched. It then uses them for access control when serving requests about the applications and containers, mainly about their status and application logs.
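The access check described in this section can be illustrated with a minimal sketch. It is not YARN's actual implementation: the class `AppAclSketch`, its nested types, and the `setOf` helper are hypothetical names, and the rule that disabled ACLs allow every request is an assumption made for the example. The two constructor arguments stand in for the `yarn.acl.enable` and `yarn.admin.acl` properties.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical sketch of a per-application ACL check; not YARN's real classes. */
final class AppAclSketch {
    /** The two kinds of application access named in the text. */
    enum Access { VIEW, MODIFY }

    /** An ACL is a list of users and groups allowed to perform one operation. */
    static final class Acl {
        final Set<String> users;
        final Set<String> groups;

        Acl(Set<String> users, Set<String> groups) {
            this.users = users;
            this.groups = groups;
        }

        boolean allows(String user, Set<String> userGroups) {
            if (users.contains(user)) {
                return true;
            }
            for (String group : userGroups) {
                if (groups.contains(group)) {
                    return true;
                }
            }
            return false;
        }
    }

    private final boolean aclsEnabled; // stands in for yarn.acl.enable
    private final Acl adminAcl;        // stands in for yarn.admin.acl

    AppAclSketch(boolean aclsEnabled, Acl adminAcl) {
        this.aclsEnabled = aclsEnabled;
        this.adminAcl = adminAcl;
    }

    /** Returns true if the caller may perform the requested kind of access. */
    boolean checkAccess(String user, Set<String> userGroups, Access access,
                        Acl viewAcl, Acl modifyAcl) {
        if (!aclsEnabled) {
            return true; // assumption: with ACLs disabled nothing is gated
        }
        if (adminAcl.allows(user, userGroups)) {
            return true; // administrators can perform any operation
        }
        Acl acl = (access == Access.VIEW) ? viewAcl : modifyAcl;
        return acl.allows(user, userGroups);
    }

    /** Small helper for building sets in examples. */
    static Set<String> setOf(String... values) {
        return new HashSet<>(Arrays.asList(values));
    }
}
```

In this sketch the view and modify ACLs are passed in per call, mirroring the way they would travel with each application's submission context rather than living in cluster-wide configuration.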

ResourceManager Web Application and Web Services

The ResourceManager has a web application that exposes information about the state of the cluster; metrics; lists of active, healthy, and unhealthy nodes; lists of applications, their state and status; hyperlinks to the ApplicationMaster web interfaces; and a scheduler-specific interface.

Application Interaction with the ResourceManager

Once an application goes past the client-facing services in the ResourceManager and is accepted into the system, it travels through the internal machinery of the ResourceManager that is responsible for launching the ApplicationMaster. The following describes how the ApplicationMasters interact with the ResourceManager once they have started.

ApplicationMasters Service

This component responds to requests from all the ApplicationMasters. It implements ApplicationMasterProtocol, which is the one and only protocol that ApplicationMasters use to communicate with the ResourceManager. It is responsible for the following tasks:

- Registration of new ApplicationMasters
- Handling termination/unregistration requests from any finishing ApplicationMasters
- Authorizing all requests from various ApplicationMasters to make sure that only valid ApplicationMasters are sending requests to the corresponding Application entity residing in the ResourceManager
- Obtaining container allocation and deallocation requests from all running ApplicationMasters and forwarding them asynchronously to the YarnScheduler

The ApplicationMasterService has additional logic to make sure that—at any point in time—only one thread in any ApplicationMaster can send requests to the ResourceManager. All the RPCs from ApplicationMasters are serialized on the ResourceManager, so it is expected that only one thread in the ApplicationMaster will make these requests.

This component works closely with the ApplicationMaster liveliness monitor, described next.

ApplicationMaster Liveliness Monitor

To help manage the list of live ApplicationMasters and dead/non-responding ApplicationMasters, this monitor keeps track of each ApplicationMaster and its last heartbeat time. Any ApplicationMaster that does not produce a heartbeat within a configured interval of time—by default, 10 minutes—is deemed dead and is expired by the ResourceManager. All containers currently running/allocated to an expired ApplicationMaster are marked as dead. The ResourceManager reschedules the same application to run a new Application Attempt on a new container, allowing up to a maximum of two such attempts by default.
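The heartbeat bookkeeping described above can be sketched in a few lines. This is only an illustration under stated assumptions: `LivelinessMonitorSketch` and its methods are hypothetical names, time is passed in explicitly as milliseconds so the behavior is easy to follow, and the real monitor's threading and event dispatch are left out. The same pattern applies to any entity that is expired after missing heartbeats for a configured interval.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of a heartbeat-based liveliness monitor. */
final class LivelinessMonitorSketch {
    private final long expiryIntervalMs;
    // Last heartbeat time for every entity currently considered alive.
    private final Map<String, Long> lastHeartbeatMs = new HashMap<>();

    LivelinessMonitorSketch(long expiryIntervalMs) {
        this.expiryIntervalMs = expiryIntervalMs;
    }

    /** Records a heartbeat (or a first registration) at the given time. */
    void heartbeat(String id, long nowMs) {
        lastHeartbeatMs.put(id, nowMs);
    }

    /** Removes and returns every entity whose last heartbeat is too old. */
    List<String> expire(long nowMs) {
        List<String> expired = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastHeartbeatMs.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (nowMs - entry.getValue() > expiryIntervalMs) {
                expired.add(entry.getKey());
                it.remove(); // an expired entity is no longer tracked
            }
        }
        return expired;
    }
}
```

With a 10-minute interval (600,000 ms), an ApplicationMaster that last reported at time 0 is returned by `expire` once the clock passes 600,000 ms, while one that reported at 300,000 ms is kept. A caller would then mark the expired attempt's containers as dead and schedule a new attempt.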

Interaction of Nodes with the ResourceManager

The following components in the ResourceManager interact with the NodeManagers running on cluster nodes.

Resource Tracker Service

NodeManagers periodically send heartbeats to the ResourceManager, and this component of the ResourceManager is responsible for responding to such RPCs from all the nodes. It implements the ResourceTracker interface to which all NodeManagers communicate. Specifically, it is responsible for the following tasks:

- Registering new nodes
- Accepting node heartbeats from previously registered nodes
- Ensuring that only “valid” nodes can interact with the ResourceManager and rejecting any other nodes

Several steps take place before and during the registration of a new node with the system. Administrators are expected to install YARN on the node along with any other dependencies, and to configure the node to communicate with its ResourceManager using settings similar to those of the existing nodes. If needed, the node should also be removed from the ResourceManager’s list of excluded nodes.

The ResourceManager will reject requests from any invalid or decommissioned nodes. Nodes that don’t respect the ResourceManager’s configuration for minimum resource requirements will also be rejected.

Following a successful registration, in its registration response the ResourceManager will send security-related master keys needed by NodeManagers to authenticate container-related requests from the ApplicationMasters. NodeManagers need to be able to validate NodeManager tokens and container tokens that are submitted by ApplicationMasters as part of container-launch requests. The underlying master keys are rolled over every so often for security purposes; thus, on further heartbeats, NodeManagers will be notified of such updates whenever they happen.

The Resource Tracker Service forwards a valid node-heartbeat to the YarnScheduler, which then makes scheduling decisions based on freely available resources on that node and the resource requirements from various applications.

In addition, the Resource Tracker Service works closely with the NodeManager liveliness monitor and nodes-list manager, described next.

NodeManagers Liveliness Monitor

To keep track of live nodes and specifically identify any dead nodes, this component keeps track of each node’s identifier (ID) and its last heartbeat time. Any node that doesn’t send a heartbeat within a configured interval of time (10 minutes by default) is deemed dead and is expired by the ResourceManager. All the containers currently running on an expired node are marked as dead, and no new containers are scheduled on such a node. Once such a node restarts (either automatically or through an administrator’s intervention) and reregisters, it will again be considered for scheduling.

Nodes-List Manager

The nodes-list manager is a collection in the ResourceManager’s memory of both valid and excluded nodes. It is responsible for reading the host configuration files specified via the yarn.resourcemanager.nodes.include-path and yarn.resourcemanager.nodes.exclude-path configuration properties and seeding the initial list of nodes based on those files. It also keeps track of nodes that are explicitly decommissioned by administrators as time progresses.
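A small sketch may help make the include/exclude bookkeeping concrete. It is hypothetical throughout: `NodesListSketch` is not a YARN class, the host lists are passed in directly rather than read from the files named by the two properties, and treating an empty include list as "every host is allowed" is an assumption of this example.

```java
import java.util.Collection;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical sketch of an in-memory list of valid and excluded nodes. */
final class NodesListSketch {
    private final Set<String> included;
    private final Set<String> excluded;

    /** Seeds the lists, for example from the contents of the two host files. */
    NodesListSketch(Collection<String> includedHosts, Collection<String> excludedHosts) {
        this.included = new HashSet<>(includedHosts);
        this.excluded = new HashSet<>(excludedHosts);
    }

    /** A node is valid if it is allowed by the include list and not excluded. */
    boolean isValidNode(String host) {
        // Assumption: an empty include list places no restriction on hosts.
        boolean allowed = included.isEmpty() || included.contains(host);
        return allowed && !excluded.contains(host);
    }

    /** Records a node that an administrator decommissions at runtime. */
    void decommission(String host) {
        excluded.add(host);
    }
}
```

A registration or heartbeat handler could call `isValidNode` before accepting a request, which corresponds to the Resource Tracker Service rejecting invalid or decommissioned nodes.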

Core ResourceManager Components

So far, we have described various components of the ResourceManager that interact with the outside world—namely, clients, ApplicationMasters, and NodeManagers. In this section, we’ll present the core ResourceManager components that bind all of them together.

ApplicationsManager

The ApplicationsManager is responsible for maintaining a collection of submitted applications. After application submission, it first validates the application’s specifications and rejects any application that requests unsatisfiable resources for its ApplicationMaster (i.e., there is no node in the cluster that has enough resources to run the ApplicationMaster itself). It then ensures that no other application was already submitted with the same application ID—a scenario that can be caused by an erroneous or a malicious client. Finally, it forwards the admitted application to the scheduler.
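The admission steps in the preceding paragraph can be sketched as follows. The class `AdmissionSketch`, its `Result` values, and the use of a single "largest node" capacity to decide whether a request is satisfiable are simplifying assumptions for illustration, not the way the ResourceManager actually performs the check.

```java
import java.util.HashSet;
import java.util.Set;

/** Hypothetical sketch of the admission checks applied at submission time. */
final class AdmissionSketch {
    enum Result { ADMITTED, REJECTED_UNSATISFIABLE, REJECTED_DUPLICATE_ID }

    private final int largestNodeMemoryMb;
    private final int largestNodeVcores;
    private final Set<String> submittedIds = new HashSet<>();

    AdmissionSketch(int largestNodeMemoryMb, int largestNodeVcores) {
        this.largestNodeMemoryMb = largestNodeMemoryMb;
        this.largestNodeVcores = largestNodeVcores;
    }

    Result submit(String appId, int amMemoryMb, int amVcores) {
        // Step 1: no node could ever host this ApplicationMaster.
        if (amMemoryMb > largestNodeMemoryMb || amVcores > largestNodeVcores) {
            return Result.REJECTED_UNSATISFIABLE;
        }
        // Step 2: the same application ID was already submitted.
        if (!submittedIds.add(appId)) {
            return Result.REJECTED_DUPLICATE_ID;
        }
        // Step 3: a real system would now hand the application to the scheduler.
        return Result.ADMITTED;
    }
}
```

Because the resource check runs first, a rejected submission never records its ID, so a corrected resubmission under the same ID is not mistaken for a duplicate in this sketch.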

This component is also responsible for recording and managing finished applications for a while before they are completely evicted from the ResourceManager’s memory. When an application finishes, it places an ApplicationSummary in the daemon’s log file. The ApplicationSummary is a compact representation of application information at the time of completion.

Finally, the ApplicationsManager keeps a cache of completed applications long after applications finish to support users’ requests for application data (via web UI or command line). The configuration property yarn.resourcemanager.max-completed-applications controls the maximum number of such finished applications that the ResourceManager remembers at any point of time. The cache is a first-in, first-out list, with the oldest applications being moved out to accommodate freshly finished applications.
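A bounded first-in, first-out cache of this kind is straightforward to sketch with the standard `LinkedHashMap`. The class name `CompletedAppsCacheSketch` and the use of a plain string as the stored summary are hypothetical; the `maxCompleted` argument plays the role of `yarn.resourcemanager.max-completed-applications`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch of a FIFO cache of completed applications. */
final class CompletedAppsCacheSketch {
    private final LinkedHashMap<String, String> completed;

    CompletedAppsCacheSketch(final int maxCompleted) {
        // A LinkedHashMap iterates in insertion order by default, so the
        // "eldest" entry is always the application that finished first.
        this.completed = new LinkedHashMap<String, String>() {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, String> eldest) {
                return size() > maxCompleted;
            }
        };
    }

    /** Remembers a freshly finished application, evicting the oldest if full. */
    void applicationFinished(String appId, String summary) {
        completed.put(appId, summary);
    }

    /** Returns the stored summary, or null if the application was evicted. */
    String lookup(String appId) {
        return completed.get(appId);
    }

    int size() {
        return completed.size();
    }
}
```

With a limit of two, finishing applications A, B, and C in that order leaves B and C in the cache, and a lookup of A returns null.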

ApplicationMaster Launcher

In YARN, while every other container’s launch is initiated by an ApplicationMaster, the ApplicationMaster itself is allocated and prepared for launch on a NodeManager by the ResourceManager itself. The ApplicationMaster Launcher is responsible for this job. This component maintains a thread pool to set up the environment and to communicate with NodeManagers so as to launch ApplicationMasters of newly submitted applications as well as applications for which previous ApplicationMaster attempts failed for some reason. It is also responsible for talking to NodeManagers about cleaning up the ApplicationMaster—mainly killing the process by signaling the corresponding NodeManager when an application finishes normally or is forcefully terminated.

YarnScheduler

The YarnScheduler is responsible for allocating resources to the various running applications subject to constraints of capacities, queues, and so on. It performs its scheduling function based on the resource requirements of the applications, such as memory, CPU, disk, and network needs. Currently, memory and CPU cores are the supported resources. Chapter 4, “Functional Overview of YARN Components,” briefly covered the various YARN scheduling options. The default scheduler that is packaged with YARN, the Capacity scheduler, is discussed in Chapter 8.

ContainerAllocationExpirer

This component is in charge of ensuring that all allocated containers are eventually used by ApplicationMasters and subsequently launched on the corresponding NodeManagers. ApplicationMasters run as untrusted user code and may potentially hold on to allocations without using them; as such, they can lead to under-utilization and abuse of a cluster’s resources. To address this, the ContainerAllocationExpirer maintains a list of containers that are allocated but still not used on the corresponding NodeManagers. For any container, if the corresponding NodeManager doesn’t report to the ResourceManager that the container has started running within a configured interval of time (by default, 10 minutes), the container is deemed dead and is expired by the ResourceManager.

In addition, NodeManagers independently check this expiry time, which is encoded in the ContainerToken tied to a container, and reject containers that are submitted for launch after the expiry time elapses. This feature depends on the system clocks being synchronized across the ResourceManager and all NodeManagers in the system.

Security-related Components in the ResourceManager

The ResourceManager has a collection of components called SecretManagers that are charged with managing the tokens and secret keys that are used to authenticate/authorize requests on various RPC interfaces. A brief summary of the tokens, secret keys, and the secret managers follows.

ContainerToken SecretManager

This SecretManager is responsible for managing ContainerTokens—a special set of tokens issued by the ResourceManager to an ApplicationMaster so that it can use an allocated container on a specific node. This ResourceManager-specific component keeps track of the underlying secret keys and rolls the keys over every so often.

ContainerTokens are a security tool used by the ResourceManager to send vital information related to starting a container to NodeManagers through the ApplicationMaster. This information cannot be sent directly to a NodeManager without causing significant latencies. The ResourceManager can construct ContainerTokens only after a container is allocated, and the information to be encoded in a ContainerToken is available only after this allocation. Waiting for NodeManagers to acknowledge the token before ApplicationMasters can get the allocated container is a nonstarter. For this reason, they are routed to the NodeManagers through the ApplicationMasters.

From a security point of view, we cannot trust the ApplicationMaster to pass along correct information to the NodeManagers before starting a container. For example, it may just fabricate the amount of memory or cores before passing along this information to the NodeManager. To avoid this problem, the ResourceManager encrypts vital container-related information into a container token before sending it to the ApplicationMaster. A container token consists of the following fields:

- Container ID: This uniquely identifies a container. The NodeManager uses this information to bind the container to a specific application or application attempt. This binding is important because any user may have multiple applications running concurrently and one ApplicationMaster should not start containers for another application.
- NodeManager address: The container token encodes the target NodeManager’s address so that abusive ApplicationMasters cannot use container tokens for containers allocated on one NodeManager to start containers on another, unrelated NodeManager.
- Application submitter: This is the name of the user who submitted the application to the ResourceManager. It is important because, for security reasons, the NodeManager needs to perform all container-related activities, such as localizing resources, starting a process for the container, and creating log directories, as that user.
- Resource: This informs the NodeManager about the amount of each resource (e.g., memory, virtual cores) that the ResourceManager has authorized an ApplicationMaster to start. The NodeManager uses this information both to account for used resources and to monitor containers so that they do not use resources beyond the corresponding limits.
- Expiry timestamp: NodeManagers look at this timestamp to determine if the container token passed is still valid. Any containers that are not used by the ApplicationMasters before this expiry time is reached will be automatically cancelled by YARN.
  - For this feature to work, the clocks on the nodes running the ResourceManager and the NodeManagers must be in sync.
  - When the ResourceManager allocates a container, it also determines and sets its expiry time based on a cluster configuration, defaulting to 10 minutes.
  - When administrators set the expiry interval configuration, it should not be set (1) to a very low value, because ApplicationMasters may not have enough time to start containers before they are expired, or (2) to a very high value, because doing so permits rogue ApplicationMasters to allocate containers but not use them, which hurts cluster utilization.
  - If a container is not used before it expires, then the NodeManager will simply reject any start-container requests using this token. The NodeManager also has a cache of recently started containers to prevent ApplicationMasters from reusing the same token in quick succession for very short-lived containers.
- Master key identifier: This is used by NodeManagers to validate the container tokens that are sent to them.
  - The ResourceManager generates a secret key and assigns a key ID to uniquely identify this key. This secret key, along with its ID, is shared with every NodeManager, first as a part of each node’s registration and then during subsequent heartbeats whenever the ResourceManager rolls over the keys for security reasons. The key rollover period is a ResourceManager configurable value, but defaults to a day.
  - Whenever the ResourceManager rolls over the underlying keys, they aren’t immediately used to generate new tokens; thus there is enough time for all the NodeManagers in the cluster to learn about the rollover. As NodeManagers emit heartbeats and learn about the new key, or once the activation period expires, the ResourceManager replaces its older key with the newly created key. Thereafter, it uses only the new key for generating container tokens. This activation period is set to be 1.5 times the node-expiry interval.
  - As you can see, there will be times before key activation when NodeManagers may receive tokens generated using different keys. In such a case, even when the ResourceManager instructs NodeManagers that a key has rolled over, NodeManagers continue to remember both the current (new) key and the previous (old) key, and use the correct key based on the master key ID present in the token.
- ResourceManager identifier: It is possible that the ResourceManager might restart after allocating a container but before the ApplicationMaster can reach the NodeManager to start the container. To ensure both the new ResourceManager and the NodeManagers are able to recognize containers from the old instance of ResourceManager separately from the ones allocated by the new instance, the ResourceManager identifier is encoded into the container token. At the time of this writing, the ResourceManager on restart will kill all of the previously running containers; in a similar vein, NodeManagers simply reject containers issued by the older ResourceManager.
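To make the roles of these fields concrete, the following sketch shows one way a NodeManager could check a token that has passed through an untrusted ApplicationMaster. It is an illustration under stated assumptions, not YARN's wire format or code: all class and method names are hypothetical, the token is protected with an HMAC-SHA256 signature as a stand-in for whatever protection the ResourceManager actually applies, and the virtual-core and ResourceManager-identifier fields are omitted for brevity.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import java.util.HashMap;
import java.util.Map;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical sketch of issuing and validating a container token. */
final class ContainerTokenSketch {

    /** A subset of the token fields listed in the text, plus a signature. */
    static final class Token {
        final String containerId;
        final String nmAddress;
        final String user;
        final int memoryMb;
        final long expiryMs;
        final int keyId;
        final byte[] signature;

        Token(String containerId, String nmAddress, String user, int memoryMb,
              long expiryMs, int keyId, byte[] signature) {
            this.containerId = containerId;
            this.nmAddress = nmAddress;
            this.user = user;
            this.memoryMb = memoryMb;
            this.expiryMs = expiryMs;
            this.keyId = keyId;
            this.signature = signature;
        }

        /** Everything that must not be altered on the way to the node. */
        String identifier() {
            return containerId + "|" + nmAddress + "|" + user + "|"
                    + memoryMb + "|" + expiryMs + "|" + keyId;
        }
    }

    /** Assumption: an HMAC over the identifier, keyed by the master key. */
    static byte[] sign(byte[] masterKey, String identifier) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(masterKey, "HmacSHA256"));
            return mac.doFinal(identifier.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** ResourceManager side: build a token for an allocated container. */
    static Token issue(String containerId, String nmAddress, String user,
                       int memoryMb, long expiryMs, int keyId, byte[] masterKey) {
        Token unsigned = new Token(containerId, nmAddress, user, memoryMb, expiryMs, keyId, null);
        return new Token(containerId, nmAddress, user, memoryMb, expiryMs, keyId,
                sign(masterKey, unsigned.identifier()));
    }

    /** NodeManager side: remembers the current and the previous master key. */
    static final class NodeManagerVerifier {
        private final String myAddress;
        private final Map<Integer, byte[]> keysById = new HashMap<>();
        private int currentKeyId = -1;
        private int previousKeyId = -1;

        NodeManagerVerifier(String myAddress) {
            this.myAddress = myAddress;
        }

        /** Called on registration and whenever a heartbeat announces a rollover. */
        void learnKey(int keyId, byte[] key) {
            if (keyId == currentKeyId) {
                return;
            }
            keysById.remove(previousKeyId); // forget the key before the previous one
            previousKeyId = currentKeyId;
            currentKeyId = keyId;
            keysById.put(keyId, key);
        }

        boolean accept(Token token, long nowMs) {
            byte[] key = keysById.get(token.keyId);
            if (key == null) {
                return false; // unknown or retired master key
            }
            if (!myAddress.equals(token.nmAddress)) {
                return false; // token was issued for another node
            }
            if (nowMs > token.expiryMs) {
                return false; // container was not started in time
            }
            return MessageDigest.isEqual(sign(key, token.identifier()), token.signature);
        }
    }
}
```

In this sketch, an ApplicationMaster that changes the memory amount before forwarding the token produces an identifier that no longer matches the signature, so `accept` returns false. A token signed with the previous key keeps working for one rollover, which mirrors the two-key behavior described for the activation period.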

AMRMToken SecretManager

Only ApplicationMasters can initiate requests for resources in the form of containers. To avoid the possibility of arbitrary processes maliciously imitating a real ApplicationMaster and sending scheduling requests to the ResourceManager, the ResourceManager uses per-ApplicationAttempt tokens called AMRMTokens. This secret manager saves each token locally in memory until an ApplicationMaster finishes and uses it to authenticate any request coming from a valid ApplicationMaster process.

ApplicationMasters can obtain this token by loading a credentials file localized by YARN. The location of this file is determined by the public constant ApplicationConstants.CONTAINER_TOKEN_FILE_ENV_NAME.

Unlike the container tokens, the underlying master key for AMRMTokens doesn’t need to be shared with any other entity in the system. Like the container tokens, the keys are rolled every so often for security reasons, but there are no corresponding activation periods.

NMToken SecretManager

Container tokens are in a way used for authorization of start-container requests from the ApplicationMasters. They are valid only during the connection to the NodeManager that is created for starting the container. Further, if there is no other authentication mechanism, a connection created using a container token cannot be used to start other containers. The whole point of a container token is to prevent resource abuse, which would be possible with shared connections.

Besides starting a container, NodeManagers allow ApplicationMasters to stop a container or get the status of a container. These requests can be submitted long after containers are allocated, so requiring ApplicationMasters to keep a separate persistent connection per container with each NodeManager is not practical.

NMTokens serve this purpose. ApplicationMasters use NMTokens to manage one connection per NodeManager and use it to send all requests to that node.

The ResourceManager generates one NMToken per application attempt per NodeManager.

Whenever a new container is created, ResourceManager issues the ApplicationMaster an NMToken corresponding to that node. ApplicationMasters will get NMTokens only for those NodeManagers on which they started containers.

As a network optimization, NMTokens are not sent to the ApplicationMasters for each and every allocated container, but only for the first time or if NMTokens have to be invalidated due to the rollover of the underlying master key.

Whenever an ApplicationMaster receives a new NMToken, it should replace the existing token, if present, for that NodeManager with the newer token. A library, NMTokenCache, is available for the token management.

ApplicationMasters are always expected to use the latest NMToken, and each NodeManager accepts only one NMToken from any ApplicationMaster. If a new NMToken is received from the ResourceManager, then older connections for corresponding NodeManagers should be closed and a new connection should be created with the latest NMToken. If connections created with older NMTokens are then used for launching newly assigned containers, the NodeManagers simply reject them.

As with container tokens, NMTokens issued for one ApplicationMaster cannot be used by another. To make this happen, the application attempt ID is encoded into the NMTokens.
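The replace-on-arrival rule for NMTokens can be illustrated with a small bookkeeping class. The sketch below is a hypothetical stand-in written in plain Java; it does not reproduce the API of the NMTokenCache library mentioned above, and tokens are modeled as opaque strings purely for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical per-NodeManager token bookkeeping inside an ApplicationMaster. */
final class NodeTokenCacheSketch {
    // One token per NodeManager address; a newer token always overwrites the older one.
    private final Map<String, String> tokenByNode = new ConcurrentHashMap<>();

    /**
     * Records a token received from the ResourceManager.
     * Returns true if an older, different token was replaced, in which case the
     * caller should close its existing connection to that node and reconnect.
     */
    boolean update(String nodeAddress, String token) {
        String old = tokenByNode.put(nodeAddress, token);
        return old != null && !old.equals(token);
    }

    /** Returns the latest token for the node, or null if none was ever issued. */
    String tokenFor(String nodeAddress) {
        return tokenByNode.get(nodeAddress);
    }
}
```

The boolean returned by `update` is a design choice of this sketch: it gives the caller a single place to decide when a connection made with an older token must be torn down.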

RMDelegationToken SecretManager

This component is a ResourceManager-specific delegation token secret manager. It is responsible for generating delegation tokens to clients, which can be passed on to processes that wish to be able to talk to the ResourceManager but are not Kerberos authenticated.

DelegationToken Renewer

In secure mode, the ResourceManager is Kerberos authenticated and so provides the service of renewing file system tokens on behalf of the applications. This component renews tokens of submitted applications as long as the application runs and until the tokens can no longer be renewed.

NodeManager

A NodeManager is YARN’s per-node agent that takes care of the individual compute nodes in a Hadoop YARN cluster and uses the physical resources on the nodes to run containers as requested by YARN applications. It is essentially the “worker” daemon in YARN. Its responsibilities include the following tasks:

Keeping up-to-date with the ResourceManager

Tracking node health

Overseeing containers’ life-cycle management; monitoring resource usage (e.g., memory, CPU) of individual containers

Managing the distributed cache (a local file system cache of files such as jars and libraries that are used by containers)

Managing the logs generated by containers

Providing auxiliary services that different YARN applications may use

We’ll now give a brief overview of NodeManagers’ functionality before describing the components in more detail.

Overview of the NodeManager Components

Among the previously listed responsibilities, container management is the core responsibility of a NodeManager. From this point of view, the NodeManager accepts requests from ApplicationMasters to start and stop containers, authenticates container tokens (a security mechanism to make sure applications can appropriately use resources as given out by the ResourceManager), manages libraries that containers depend on for execution, and monitors containers’ execution. Operators configure each NodeManager with a certain amount of memory, number of CPUs, and other resources available at the node by way of configuration files (yarn-default.xml and/or yarn-site.xml). After registering with the ResourceManager, the NodeManager periodically sends a heartbeat with its current status and receives instructions, if any, from the ResourceManager. When the scheduler gets to process the node’s heartbeat (which can happen some time after the heartbeat arrives), containers are allocated against that NodeManager and are subsequently returned to the ApplicationMasters when the ApplicationMasters themselves send a heartbeat to the ResourceManager.

All containers in YARN—including ApplicationMasters—are described by a Container Launch Context (CLC). This request object includes environment variables, library dependencies (which may be present on remotely accessible storage), security tokens that are needed both for downloading libraries required to start a container and for usage by the container itself, container-specific payloads for NodeManager auxiliary services, and the command necessary to create the process. After validating the authenticity of a start-container request, the NodeManager configures the environment for the container, forcing any administrator-provided settings that may be configured.

Before actually launching a container, the NodeManager copies all the necessary libraries—data files, executables, tarballs, jar files, shell scripts, and so on—to the local file system. The downloaded libraries may be shared between containers of a specific application via a local application-level cache, between containers launched by the same user via a local user-level cache, and even between users via a public cache, as can be specified in the CLC. The NodeManager eventually garbage-collects libraries that are not in use by any running containers.

The NodeManager may also kill containers as directed by the ResourceManager. Containers may be killed in the following situations:

The ResourceManager sends a signal that an application has completed.

The scheduler decides to preempt it for another application or user.

The NodeManager detects that the container exceeded the resource limits as specified by its ContainerToken.

Whenever a container exits, the NodeManager will clean up its working directory in local storage. When an application completes, all resources owned by its containers are cleaned up.

In addition to starting and stopping containers, cleaning up after exited containers, and managing local resources, the NodeManager offers other local services to containers running on the node. For example, the log aggregation service uploads everything the application’s containers wrote to stdout and stderr to a file system once the application completes.

As described in the ResourceManager section, when any NodeManager fails (which may occur for various reasons), the ResourceManager detects this failure using a timeout, and reports the failure to all running applications. If the fault or condition causing the timeout is transient, the NodeManager will resynchronize with the ResourceManager, clean up its local state, and continue. Similarly, when a new NodeManager joins the cluster, the ResourceManager notifies all ApplicationMasters about the availability of new resources for spawning containers.

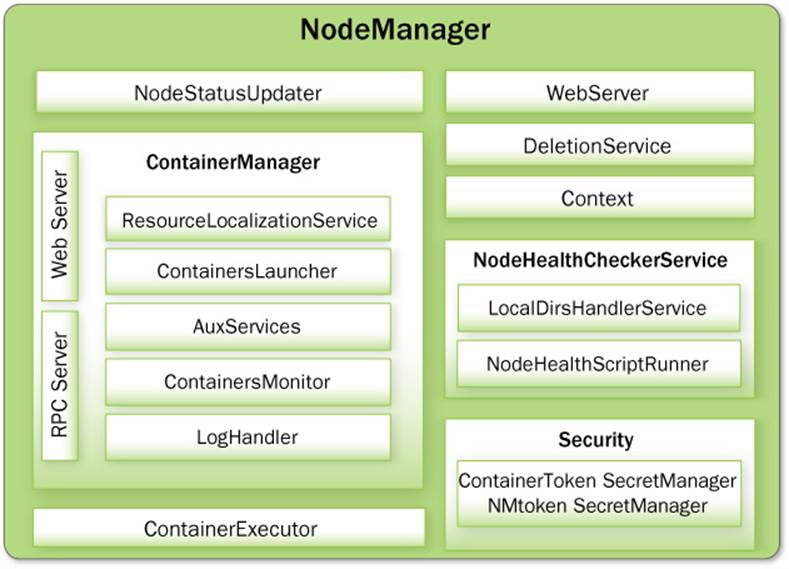

NodeManager Components

Similar to the ResourceManager, the NodeManager is divided internally into a host of nested components, each of which has a clear responsibility. Figure 7.4 gives an overview of the NodeManager components.

Figure 7.4 NodeManager components

NodeStatusUpdater

On start-up, this component registers with the ResourceManager, sends information about the resources available on this node, and identifies the ports at which the NodeManager’s web server and the RPC server are listening. As part of the registration, the ResourceManager sends the NodeManager security-related keys needed by the NodeManager to authenticate future container requests from the ApplicationMasters. Subsequent NodeManager–ResourceManager communication provides the ResourceManager with any updates on existing containers’ status, new containers started on the node by the ApplicationMasters, containers that have completed, and so on.

In addition, the ResourceManager may signal the NodeManager via this component to kill currently running containers (because of, say, a scheduling policy), to shut down the NodeManager in situations such as explicit decommissioning by the operator, or to resynchronize the NodeManager in case of network issues. Finally, when any application finishes on the ResourceManager, the ResourceManager signals the NodeManager to clean up various application-specific entities on the NodeManager (for example, internal per-application data structures and application-level local resources) and then initiate and finish the per-application logs’ aggregation onto a file system.

ContainerManager

This component is the core of the NodeManager. It is composed of the following subcomponents, each of which performs a subset of the functionality that is needed to manage the containers running on the node.

RPC Server

ContainerManager accepts requests from ApplicationMasters to start new containers, or to stop running ones. It works with NMToken SecretManager and ContainerToken SecretManager (described later) to authenticate and authorize all requests. All the operations performed on containers running on this node are recorded in an audit log, which can be postprocessed by security tools.

Resource Localization Service

Resource localization is one of the important services offered by NodeManagers to user applications. Overall, the resource localization service is responsible for securely downloading and organizing various file resources needed by containers. It tries its best to distribute the files across all the available disks. It also enforces access control restrictions on the downloaded files and puts appropriate usage limits on them. To understand how localization happens inside NodeManager, a brief recap of some definitions related to resource localization from Chapter 4, “Functional Overview of YARN Components,” follows.

Localization: Localization is the process of copying/downloading remote resources onto the local file system. Instead of always accessing a resource remotely, that resource is copied to the local machine, which can then be accessed locally.

LocalResource: LocalResource represents a file/library required to run a container. The localization service is responsible for localizing the resource prior to launching the container. For each LocalResource, applications can specify the following information:

URL: Remote location from where a LocalResource has to be downloaded.

Size: Size in bytes of the LocalResource.

Creation timestamp: Resource creation time on the remote file system.

LocalResourceType: The type of a resource localized by the NodeManager—FILE, ARCHIVE, or PATTERN.

Pattern: The pattern that should be used to extract entries from the archive (used only when the type is PATTERN).

LocalResourceVisibility: Specifies the visibility of a resource localized by the NodeManager. The visibility can be one of PUBLIC, PRIVATE, or APPLICATION.

DeletionService: A service that runs inside the NodeManager and deletes local paths as and when instructed to do so.

Localizer: The actual thread or process that does localization. There are two types of localizers: PublicLocalizer for PUBLIC resources and ContainerLocalizers for PRIVATE and APPLICATION resources.

LocalCache: NodeManager maintains and manages several local caches of all the files downloaded. The resources are uniquely identified based on the remote URL originally used while copying that file.
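To make these definitions concrete, the following sketch models a LocalResource request and a cache keyed by remote URL in plain Java. It is a simplified illustration rather than YARN's own classes: `LocalResourceSketch`, its field names, and the nested `Cache` type are hypothetical, and the rule that a PATTERN resource must carry a pattern is an assumption of the sketch.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical, simplified model of a LocalResource request. */
final class LocalResourceSketch {
    enum Type { FILE, ARCHIVE, PATTERN }
    enum Visibility { PUBLIC, PRIVATE, APPLICATION }

    final String url;              // remote location to download from
    final long sizeBytes;          // size of the remote resource
    final long creationTimestamp;  // creation time on the remote file system
    final Type type;
    final String pattern;          // only meaningful when type is PATTERN
    final Visibility visibility;

    LocalResourceSketch(String url, long sizeBytes, long creationTimestamp,
                        Type type, String pattern, Visibility visibility) {
        // Assumption of this sketch: a PATTERN resource must supply a pattern.
        if (type == Type.PATTERN && pattern == null) {
            throw new IllegalArgumentException("PATTERN resources need a pattern");
        }
        this.url = url;
        this.sizeBytes = sizeBytes;
        this.creationTimestamp = creationTimestamp;
        this.type = type;
        this.pattern = (type == Type.PATTERN) ? pattern : null;
        this.visibility = visibility;
    }

    /** Toy local cache: resources are identified by the remote URL they came from. */
    static final class Cache {
        private final Map<String, String> localPathByUrl = new HashMap<>();

        /** Returns the already-cached local path, or records localPath as the new copy. */
        String localize(LocalResourceSketch resource, String localPath) {
            return localPathByUrl.computeIfAbsent(resource.url, u -> localPath);
        }
    }
}
```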

The Localization Process

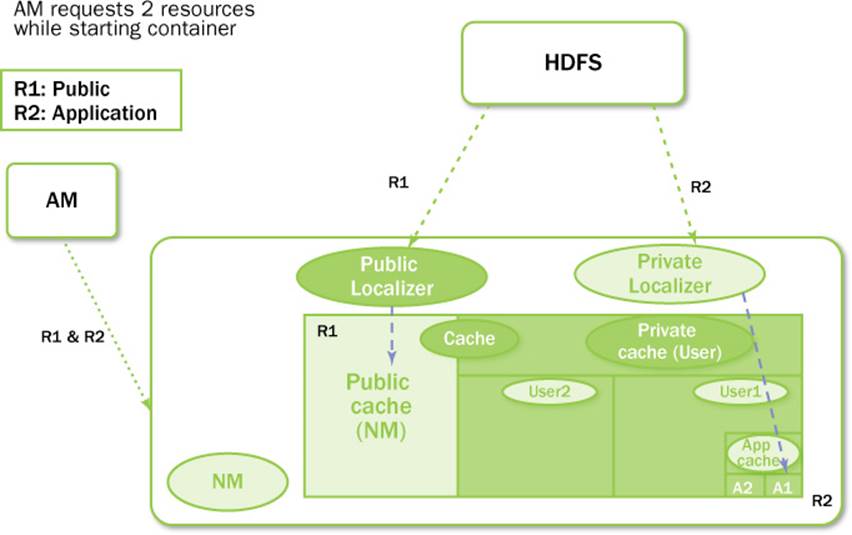

As you will recall from Chapter 4, “Functional Overview of YARN Components,” there are three types of LocalResources: PUBLIC, PRIVATE, and APPLICATION. For security reasons, the NodeManager localizes PRIVATE/APPLICATION LocalResources in a completely different manner than PUBLIC LocalResources. Figure 7.5 gives an overview of where and how resource localization happens.

Figure 7.5 Resource-localization process inside the NodeManager

Localization of PUBLIC Resources

Localization of PUBLIC resources is taken care of by a pool of threads called PublicLocalizers. PublicLocalizers run inside the address space of the NodeManager itself. The number of PublicLocalizer threads is controlled by the configuration property yarn.nodemanager.localizer.fetch.thread-count, which sets the maximum parallelism during downloading of PUBLIC resources to this thread count. While localizing PUBLIC resources, the localizer validates that all the requested resources are, indeed, PUBLIC by checking their permissions on the remote file system. Any LocalResource that doesn’t match that condition is rejected for localization. Each PublicLocalizer uses credentials passed as part of ContainerLaunchContext (discussed later) to securely copy the resources from the remote file system.

Localization of PRIVATE/APPLICATION Resources

Localization of PRIVATE/APPLICATION resources is not done inside the NodeManager and, therefore, is not centralized. The process is a little involved and is outlined here.

Localization of these resources happens in a separate process called ContainerLocalizer.

Every ContainerLocalizer process is managed by a single thread in NodeManager called LocalizerRunner. Every container will trigger one LocalizerRunner if it has any resources that are not yet downloaded.

LocalResourcesTracker is a per-user or per-application object that tracks all the LocalResources for a given user or an application.

When a container first requests a PRIVATE/APPLICATION LocalResource, if it is not found in LocalResourcesTracker (or is found but is in the INITIALIZED state), it is added to the pending resources list.

A LocalizerRunner may (or may not) be created depending on the need for downloading something new.

The LocalResource is added to its LocalizerRunner’s pending resources list.

One requirement for the NodeManager in secure mode is to download/copy these resources as the application submitter, rather than as a yarn-user (privileged user). Therefore, the LocalizerRunner starts a LinuxContainerExecutor (LCE). The LCE is a process running as the application submitter, which then executes a ContainerLocalizer. The ContainerLocalizer works as follows:

Once started, the ContainerLocalizer starts a heartbeat with the NodeManager process.

On each heartbeat, the LocalizerRunner either assigns one resource at a time to a ContainerLocalizer or asks it to die. The ContainerLocalizer informs the LocalizerRunner about the status of the download.

If it fails to download a resource, then that particular resource is removed from LocalResourcesTracker and the container eventually is marked as failed. When this happens, the LocalizerRunner stops the running ContainerLocalizers and exits.

If the download succeeds, the LocalizerRunner keeps handing the ContainerLocalizer one more resource at a time until all pending resources are successfully downloaded.

As of this writing, each ContainerLocalizer doesn’t support parallel downloading of multiple PRIVATE/APPLICATION resources. In addition, the maximum parallelism is the number of containers requested for the same user on the same NodeManager at that point in time. The worst case for this process occurs when an ApplicationMaster itself is starting. If the ApplicationMaster needs any resources to be localized, they will be downloaded serially before its container starts.
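The heartbeat exchange between a LocalizerRunner and its ContainerLocalizer can be simulated in a few lines. The sketch below is a single-threaded model under stated assumptions: the class and method names are hypothetical, the two sides talk through direct method calls rather than RPC, and a `Predicate` stands in for the actual download.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical simulation of the LocalizerRunner / ContainerLocalizer heartbeat. */
final class LocalizerProtocolSketch {
    enum Action { FETCH, DIE }

    /** What the runner tells the localizer in reply to a heartbeat. */
    static final class Response {
        final Action action;
        final String resource; // null when action is DIE

        Response(Action action, String resource) {
            this.action = action;
            this.resource = resource;
        }
    }

    /** NodeManager side: hands out one pending resource per heartbeat. */
    static final class Runner {
        private final Deque<String> pending;
        private boolean failed;
        final List<String> localized = new ArrayList<>();

        Runner(List<String> pendingResources) {
            this.pending = new ArrayDeque<>(pendingResources);
        }

        /** lastResource is null on the very first heartbeat. */
        Response heartbeat(String lastResource, boolean lastSucceeded) {
            if (lastResource != null) {
                if (lastSucceeded) {
                    localized.add(lastResource);
                } else {
                    failed = true; // the container will be marked as failed
                }
            }
            if (failed || pending.isEmpty()) {
                return new Response(Action.DIE, null);
            }
            return new Response(Action.FETCH, pending.poll());
        }

        boolean containerFailed() {
            return failed;
        }
    }

    /** Localizer side: downloads serially; returns true if everything was localized. */
    static boolean run(Runner runner, Predicate<String> download) {
        String last = null;
        boolean ok = true;
        while (true) {
            Response r = runner.heartbeat(last, ok);
            if (r.action == Action.DIE) {
                return !runner.containerFailed();
            }
            last = r.resource;
            ok = download.test(last);
        }
    }
}
```

Because only one resource is outstanding per heartbeat, downloads within a single localizer are strictly serial, which mirrors the limitation noted above.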

Target Locations of LocalResources

On each of the NodeManager machines, LocalResources are ultimately localized in the following target directories, under each local directory:

PUBLIC: <local-dir>/filecache

PRIVATE: <local-dir>/usercache/<username>/filecache

APPLICATION: <local-dir>/usercache/<username>/appcache/<app-id>/

Irrespective of the application type, once the resources are downloaded and the containers are running, the containers can access these resources locally by making use of the symbolic links created by the NodeManager in each container’s working directory.
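The mapping from visibility to target directory listed above is mechanical enough to express as a small function. The following is only an illustrative sketch; `LocalizationPathsSketch` and `targetDir` are hypothetical names, and the paths are built by simple string concatenation rather than by any NodeManager code.

```java
/** Hypothetical helper that mirrors the target-directory layout listed above. */
final class LocalizationPathsSketch {
    enum Visibility { PUBLIC, PRIVATE, APPLICATION }

    /** user and appId may be null when the visibility does not need them. */
    static String targetDir(String localDir, Visibility visibility, String user, String appId) {
        switch (visibility) {
            case PUBLIC:
                return localDir + "/filecache";
            case PRIVATE:
                return localDir + "/usercache/" + user + "/filecache";
            case APPLICATION:
                return localDir + "/usercache/" + user + "/appcache/" + appId;
            default:
                throw new IllegalArgumentException("unknown visibility: " + visibility);
        }
    }
}
```

For example, `targetDir("/data/1/yarn", Visibility.PRIVATE, "alice", null)` yields `/data/1/yarn/usercache/alice/filecache`; the directory and user names here are made up for the example.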

Resource Localization Configuration

Administrators can control various aspects of resource localization by setting or changing certain configuration parameters in yarn-site.xml when starting a NodeManager:

yarn.nodemanager.local-dirs: A comma-separated list of local directories that one can configure to be used for copying files during localization. The idea behind allowing multiple directories is to use multiple disks for localization so as to provide both fail-over (one or a few disks going bad doesn’t affect all containers) and load balancing (no single disk is bottlenecked with writes) capabilities. Thus, individual directories should be configured if possible on different local disks.

yarn.nodemanager.local-cache.max-files-per-directory: Limits the maximum number of files that will be localized in each of the localization directories (separately for PUBLIC, PRIVATE, and APPLICATION resources). The default value is 8192 and this parameter should, in general, not be assigned a large value (configure a value that is sufficiently less than the per-directory maximum file limit of the underlying file system, such as ext3).

yarn.nodemanager.localizer.address: The network address where ResourceLocalizationService listens for requests from various localizers.

yarn.nodemanager.localizer.client.thread-count: Limits the number of RPC threads in ResourceLocalizationService that are used for handling localization requests from localizers. The default is 5, which means that at any point of time, only five localizers will be processed while others wait in the RPC queues.

yarn.nodemanager.localizer.fetch.thread-count: Configures the number of threads used for localizing PUBLIC resources. Recall that localization of PUBLIC resources happens inside the NodeManager address space; thus this property limits how many threads will be spawned inside the NodeManager for localization of PUBLIC resources. The default is 4.

yarn.nodemanager.delete.thread-count: Controls the number of threads used by DeletionService for deleting files. This DeletionService is used all over the NodeManager for deleting log files as well as local cache files. The default is 4.

yarn.nodemanager.localizer.cache.target-size-mb: This property decides the maximum disk space to be used for localizing resources. (As of this book’s writing, there was no individual limit for PRIVATE, APPLICATION, or PUBLIC caches.) Once the total disk size of the cache exceeds this value, the DeletionService will try to remove files that are not used by any running containers. This limit is applicable to all the disks and is not used on a per-disk basis.

yarn.nodemanager.localizer.cache.cleanup.interval-ms: After the interval specified by this configuration property elapses, ResourceLocalizationService will try to delete any unused resources if the total cache size exceeds the configured maximum cache size. Unused resources are those resources that are not referred to by any running container. Every time a container requests a resource, that container is added to the resource’s reference list. It will remain there until the container finishes, thereby preventing accidental deletion of this resource. As a part of container resource cleanup (when the container finishes), the container will be removed from the resource’s reference list. When the reference count drops to zero, it is an ideal candidate for deletion. The resources will be deleted on a least recently used (LRU) basis until the current cache size drops below the target size.
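The cleanup policy just described (skip anything still referenced, then delete least recently used entries until the cache fits the target size) can be sketched as follows. This is a minimal model, not the ResourceLocalizationService code: the `Entry` type, its fields, and the `cleanup` method are hypothetical, and sizes and timestamps are plain numbers supplied by the caller.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical model of reference-counted, LRU-ordered cache cleanup. */
final class CacheCleanupSketch {

    static final class Entry {
        final String path;
        final long sizeBytes;
        final long lastUsed; // larger means used more recently
        int refCount;        // number of running containers referring to this resource

        Entry(String path, long sizeBytes, long lastUsed, int refCount) {
            this.path = path;
            this.sizeBytes = sizeBytes;
            this.lastUsed = lastUsed;
            this.refCount = refCount;
        }
    }

    /** Removes unused entries, oldest first, until the total is at or below the target. */
    static List<Entry> cleanup(List<Entry> cache, long targetBytes) {
        long total = 0;
        List<Entry> candidates = new ArrayList<>();
        for (Entry e : cache) {
            total += e.sizeBytes;
            if (e.refCount == 0) {
                candidates.add(e); // only unreferenced resources may be deleted
            }
        }
        candidates.sort(Comparator.comparingLong((Entry e) -> e.lastUsed));

        List<Entry> deleted = new ArrayList<>();
        for (Entry e : candidates) {
            if (total <= targetBytes) {
                break;
            }
            deleted.add(e);
            total -= e.sizeBytes;
        }
        cache.removeAll(deleted);
        return deleted;
    }
}
```

Note that if every remaining entry is still referenced, the cache stays above the target; the sketch, like the policy described above, never deletes a resource that a running container depends on.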

Containers Launcher

The Containers Launcher maintains a pool of threads to prepare and launch containers as quickly as possible. It also cleans up the containers’ processes when the ResourceManager sends such a request through the NodeStatusUpdater or when the ApplicationMasters send requests via the RPC server. The launch or cleanup of a container happens in one thread of the thread pool, which will return only when the corresponding operation finishes. Consequently, launch or cleanup of one container doesn’t affect any other operations and all container operations are isolated inside the NodeManager process.

Auxiliary Services

An administrator may configure the NodeManager with a set of pluggable, auxiliary services. The NodeManager provides a framework for extending its functionality by configuring these services. This feature allows per-node custom services that specific frameworks may require, yet places them in a local “sandbox” separate from the rest of the NodeManager. These services must be configured before the NodeManager starts. Auxiliary services are notified when an application’s first container starts on the node, whenever a container starts or finishes, and finally when the application is considered to be complete.

While a container’s local storage will be cleaned up after it exits, it can promote some output so that it will be preserved until the application finishes. In this way, a container may produce data that persists beyond the life of the container, to be managed by the node. This property of output persistence, together with auxiliary services, enables a powerful feature. One important use-case that takes advantage of this feature is Hadoop MapReduce. For Hadoop MapReduce applications, the intermediate data are transferred between the map and reduce tasks using an auxiliary service called ShuffleHandler. As mentioned earlier, the CLC allows ApplicationMasters to address a payload to auxiliary services. MapReduce applications use this channel to pass tokens that authenticate reduce tasks to the shuffle service.

When a container starts, the service information for auxiliary services is returned to the ApplicationMaster so that the ApplicationMaster can use this information to take advantage of any available auxiliary services. As an example, the MapReduce framework gets the ShuffleHandler’s port information, which it then passes on to the reduce tasks for shuffling map outputs.
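The notification sequence and the return of service information can be sketched as follows. This is a simplified Python analogue, not the NodeManager’s actual interface: the class names, method names, service name, and port number are all assumptions made for illustration.

```python
class AuxiliaryService:
    """Hypothetical analogue of a per-node auxiliary service."""

    def __init__(self, name, port):
        self.name = name
        self.port = port
        self.events = []
        self.app_payloads = {}

    def application_started(self, app_id, payload):
        # Called when the application's first container starts on this node.
        # The payload is the opaque data that the ApplicationMaster addressed
        # to this service in the container launch context (e.g., a token).
        self.app_payloads[app_id] = payload
        self.events.append(("app_start", app_id))

    def container_started(self, container_id):
        self.events.append(("container_start", container_id))

    def container_finished(self, container_id):
        self.events.append(("container_finish", container_id))

    def application_finished(self, app_id):
        self.app_payloads.pop(app_id, None)
        self.events.append(("app_finish", app_id))

    def metadata(self):
        # Service information handed back to the ApplicationMaster.
        return {"port": self.port}


class Node:
    """Minimal node that dispatches lifecycle events to its services."""

    def __init__(self, services):
        # The set of services is fixed before the node starts.
        self.services = {s.name: s for s in services}
        self.apps_seen = set()

    def start_container(self, app_id, container_id, service_data):
        if app_id not in self.apps_seen:
            self.apps_seen.add(app_id)
            for name, payload in service_data.items():
                self.services[name].application_started(app_id, payload)
        for svc in self.services.values():
            svc.container_started(container_id)
        return {name: svc.metadata() for name, svc in self.services.items()}

    def finish_container(self, container_id):
        for svc in self.services.values():
            svc.container_finished(container_id)

    def finish_application(self, app_id):
        self.apps_seen.discard(app_id)
        for svc in self.services.values():
            svc.application_finished(app_id)


shuffle = AuxiliaryService("shuffle", port=13562)  # illustrative name and port
node = Node([shuffle])
info = node.start_container("app_1", "container_1", {"shuffle": b"job-token"})
node.start_container("app_1", "container_2", {"shuffle": b"job-token"})
node.finish_container("container_1")
node.finish_container("container_2")
node.finish_application("app_1")
```

In this sketch, `info` plays the role of the service information that the ApplicationMaster would forward to its tasks.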

Containers Monitor

After a container is launched, this component starts observing its resource utilization while the container is running. To enforce isolation and fair sharing of resources like memory, each container is allocated some amount of such a resource by the ResourceManager. The ContainersMonitor monitors each container’s usage continuously. If a container exceeds its allocation, this component signals the container to be killed. This check is done to prevent any runaway container from adversely affecting other well-behaved containers running on the same node.
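A single pass of such a check might look like the sketch below. The function name, the use of megabytes, and the sample figures are assumptions; how usage is actually measured and how a container is killed are outside the scope of this illustration.

```python
def find_containers_to_kill(allocated_mb, used_mb):
    """Return the IDs of containers whose memory use exceeds their allocation.

    allocated_mb and used_mb both map a container ID to a size in megabytes.
    """
    return sorted(
        cid for cid, used in used_mb.items() if used > allocated_mb[cid]
    )


# Hypothetical snapshot of three containers on one node.
allocated = {"container_01": 1024, "container_02": 2048, "container_03": 1024}
observed = {"container_01": 900, "container_02": 2300, "container_03": 1024}

to_kill = find_containers_to_kill(allocated, observed)
```

A container that sits exactly at its allocation, like `container_03` here, is left alone; only usage beyond the allocation triggers a kill in this sketch.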

Log Handler

The LogHandler is a pluggable component that offers the option of either keeping the containers’ logs on the local disks or zipping them together and uploading them onto a file system. We describe this feature in Chapter 6 under the heading “User Log Management.”

Container Executor

This NodeManager component interacts with the underlying operating system to securely place files and directories needed by containers and subsequently to launch and clean up processes corresponding to containers in a secure manner.

Node Health Checker Service

The NodeHealthCheckerService checks the health of a node by running a configured script periodically. It also monitors the health of the disks by creating temporary files on them at regular intervals. Any changes in the health of the system are sent to the NodeStatusUpdater (described earlier), which in turn passes the information to the ResourceManager.
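The disk check described here, which probes a directory by creating a temporary file, can be sketched in a few lines. The function names are hypothetical, and the sketch covers only the temporary-file probe, not the configured health script.

```python
import os
import tempfile


def disk_is_healthy(directory):
    """Probe a local directory by creating and removing a temporary file."""
    try:
        with tempfile.NamedTemporaryFile(dir=directory) as probe:
            probe.write(b"health-check")
            probe.flush()
        return True
    except OSError:
        # Missing, read-only, or full directories all surface as OSError.
        return False


def check_disks(directories):
    """Map each directory to the result of its health probe."""
    return {d: disk_is_healthy(d) for d in directories}


good_dir = tempfile.mkdtemp()
bad_dir = os.path.join(good_dir, "missing")  # deliberately nonexistent
report = check_disks([good_dir, bad_dir])
```

A periodic caller would compare successive reports and forward only the changes.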

NodeManager Security Components

This section outlines the NodeManager security components.

Application ACLs Manager in the NodeManager

The NodeManager needs to gate the user-facing APIs to allow specific users to access them. For instance, container logs can be displayed on the web interface. This component maintains the ACL for each application and enforces the access permissions whenever such a request is received.
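A minimal sketch of such a per-application check follows. The class and method names are hypothetical, and the rule that the application owner and cluster administrators are always allowed is an assumption of this sketch.

```python
class ApplicationACLs:
    """Hypothetical per-application view ACLs kept by a node."""

    def __init__(self, admins=()):
        self.admins = set(admins)
        self.acls = {}

    def add_application(self, app_id, owner, view_users=()):
        self.acls[app_id] = {"owner": owner, "view": set(view_users)}

    def remove_application(self, app_id):
        self.acls.pop(app_id, None)

    def can_view(self, user, app_id):
        acl = self.acls.get(app_id)
        if acl is None:
            return False  # unknown application: deny
        # Assumption: owner and administrators are always allowed.
        return user in self.admins or user == acl["owner"] or user in acl["view"]


acls = ApplicationACLs(admins=["yarn-admin"])
acls.add_application("app_1", owner="alice", view_users=["bob"])
```

A request to display the logs of `app_1` would then be served only if `acls.can_view(user, "app_1")` returns true.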

ContainerToken SecretManager in the NodeManager

In the NodeManager, this component mirrors the corresponding functionality in the ResourceManager. It verifies various incoming requests to ensure that all of the start-container requests are properly authorized by the ResourceManager.
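The idea of one party issuing a token and another verifying it with a shared secret can be sketched with a keyed hash. This is only an illustration of the principle: the field layout, the key, and the choice of HMAC-SHA256 are assumptions of this sketch and do not describe YARN’s actual token format.

```python
import hashlib
import hmac


def sign_token(master_key, container_id, node_address, memory_mb):
    """Issuer side: build an identifier and a password derived from it."""
    identifier = f"{container_id}|{node_address}|{memory_mb}".encode()
    password = hmac.new(master_key, identifier, hashlib.sha256).digest()
    return identifier, password


def verify_token(master_key, identifier, password):
    """Verifier side: recompute the password and compare in constant time."""
    expected = hmac.new(master_key, identifier, hashlib.sha256).digest()
    return hmac.compare_digest(expected, password)


key = b"shared-master-key"  # hypothetical secret known to both sides
identifier, password = sign_token(key, "container_01", "node7:45454", 1024)

accepted = verify_token(key, identifier, password)
# A request that inflates its resource claim no longer matches the password.
tampered = verify_token(key, identifier.replace(b"1024", b"8192"), password)
```

Because the password depends on every field of the identifier, a start-container request cannot change the container, node, or resource size without being rejected.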

NMToken SecretManager in the NodeManager

This component also mirrors the corresponding functionality in the ResourceManager. It verifies all incoming API calls to ensure that the requests are properly authenticated using NMTokens.

Web Server

This component exposes the list of applications, containers running on the node at a given point of time, node-health-related information, and the logs produced by the containers.

Important NodeManager Functions

The flow of a few important NodeManager functions with respect to running a YARN application is summarized next.

Container Launch

To facilitate container launch, the NodeManager expects to receive detailed information about a container’s run time, as part of the total container specification. This includes the container’s command line, environment variables, a list of (file) resources required by the container, and any security tokens.

On receiving a container-launch request, the NodeManager first verifies the request, if security is enabled, to confirm that the user is authorized, that the resource assignment is correct, and that the other aspects of the request are valid. The NodeManager then performs the following steps to launch the container.

1. A local copy of all the specified resources is created (distributed cache).

2. Isolated work directories are created for the container, and the local resources are made available in these directories by way of symbolic links to the downloaded resources.

3. The launch environment and command line are used to start the actual container.
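The three steps above can be sketched as a small local simulation. Everything here is illustrative: the function name, the directory names `filecache` and `work`, and the sample command are assumptions, and a plain file copy stands in for downloading resources from a remote file system.

```python
import os
import shutil
import subprocess
import sys
import tempfile


def launch_container(resources, command, env, node_root):
    """Localize resources, link them into a work directory, run the command.

    resources maps the link name seen by the container to a source path.
    """
    cache_dir = os.path.join(node_root, "filecache")
    work_dir = os.path.join(node_root, "work")
    os.makedirs(cache_dir, exist_ok=True)
    os.makedirs(work_dir, exist_ok=True)

    for link_name, source in resources.items():
        # Step 1: create a local copy of the resource.
        local_copy = os.path.join(cache_dir, os.path.basename(source))
        shutil.copy(source, local_copy)
        # Step 2: expose the copy in the work directory via a symbolic link.
        # (Creating symlinks may need extra privileges on some platforms.)
        os.symlink(local_copy, os.path.join(work_dir, link_name))

    # Step 3: start the process with the launch environment and command line.
    completed = subprocess.run(
        command, cwd=work_dir, env={**os.environ, **env},
        capture_output=True, text=True, check=True,
    )
    return completed.stdout


node_root = tempfile.mkdtemp()
source = os.path.join(node_root, "remote-data.txt")
with open(source, "w") as f:
    f.write("payload")

script = "import os; print(os.environ['GREETING'], open('data.txt').read())"
output = launch_container(
    {"data.txt": source}, [sys.executable, "-c", script],
    {"GREETING": "hi"}, node_root,
)
```

The launched process sees only the link name `data.txt` in its working directory, not the location of the cached copy.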

User Log Management and Aggregation

Hadoop version 2 has much improved user log management, including log aggregation in HDFS. A full discussion of user log management can be found in Chapter 6, “Apache Hadoop YARN Administration.”

MapReduce Shuffle Auxiliary Service

The shuffle functionality required to run a MapReduce application is implemented as an auxiliary service. This service starts up a Netty web server, and knows how to handle MapReduce-specific shuffle requests from reduce tasks. The MapReduce ApplicationMaster specifies the service ID for the shuffle service, along with security tokens that may be required. The NodeManager provides the ApplicationMaster with the port on which the shuffle service is running; this information is then passed to the reduce tasks.

In YARN, the NodeManager is primarily limited to managing abstract containers (i.e., only processes corresponding to a container) and does not concern itself with per-application state management like MapReduce tasks. It also does away with the notion of named slots, such as map and reduce slots. Because of this clear separation of responsibilities coupled with the modular architecture described previously, the NodeManager can scale much more easily and its code is much more maintainable.

ApplicationMaster

The per-application ApplicationMaster is the bootstrap process that kicks off everything for a YARN application once it gets past the application submission and achieves its own launch. If one compares this approach to the Hadoop 1 architecture, the ApplicationMaster is in essence the per-application JobTracker. We start with a brief overview of the ApplicationMaster and then describe each of its chief responsibilities in detail.

Overview

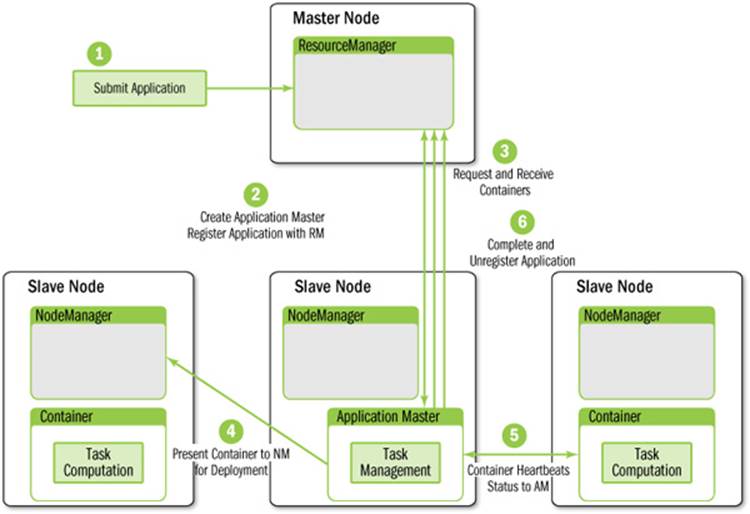

Once an application is submitted, the application’s representation in the ResourceManager negotiates for a container to spawn this bootstrap process. Once such a container is allocated, as described in the ResourceManager section, the ApplicationMaster’s launcher directly communicates with the ApplicationMaster container’s NodeManager to set up and launch the container. Thus begins the life of an ApplicationMaster. A brief overview of its overall interaction with the rest of YARN is shown in Figure 7.6.

Figure 7.6 ApplicationMaster interactions with YARN

The process starts when (1) an application submits a request to the ResourceManager. Next, the ApplicationMaster is started and registers with the ResourceManager (2). The ApplicationMaster then requests containers (3) from the ResourceManager to perform actual work. The assigned containers are presented to the NodeManager for use by the ApplicationMaster (4). Computation takes place in the containers, which keep in contact (5) with the ApplicationMaster (not the ResourceManager) as the job progresses. When the application is complete, containers are stopped and the ApplicationMaster is unregistered (6) from the ResourceManager.

Once successfully launched, the ApplicationMaster is responsible for the following tasks:

- Initializing the process of reporting liveliness to the ResourceManager
- Computing the resource requirements of the application
- Translating the requirements into ResourceRequests that are understood by the YARN scheduler
- Negotiating those resource requests with the scheduler
- Using allocated containers by working with the NodeManagers
- Tracking the status of running containers and monitoring their progress
- Reacting to container or node failures by requesting alternative resources from the scheduler if needed

In the remainder of this section, we’ll describe these individual responsibilities in greater detail.

Liveliness

The first operation that any ApplicationMaster has to perform is to register with the ResourceManager. As part of the registration, ApplicationMasters can inform the ResourceManager about an IPC address and/or a web URL. The IPC address refers to a client-facing service address—a location that the application’s client can visit to obtain nongeneric information about the running application. The communication on the IPC server is application specific: It can be RPC, a simple socket connection, or something else. ApplicationMasters can also report an HTTP tracking URL that points to either an embedded web application running inside the ApplicationMaster’s address space or an external web server. This feature enables the clients to obtain application status and information via HTTP.

In the registration response, the ResourceManager returns information that the ApplicationMaster can use, such as the minimum and maximum sizes of resources that YARN accepts, and the ACLs associated with the application that are set by the user during application submission. The ApplicationMaster can use these ACLs for authorizing user requests on its own client-facing service.

Once registered, an ApplicationMaster must periodically send heartbeats to the ResourceManager to affirm its liveliness and health. Any ApplicationMaster that fails to report within the interval set by the yarn.am.liveness-monitor.expiry-interval-ms property (a configuration property of the ResourceManager, whose default is 10 minutes) is deemed dead and is killed by the platform. This configuration is controlled by administrators and should always be less than the value of the nodes’ expiry interval governed by the yarn.nm.liveness-monitor.expiry-interval-ms property. Otherwise, in situations involving network partitions, nodes may be marked as dead long before ApplicationMasters are marked as such, which may lead to correctness issues on the ResourceManager.
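The expiry rule can be sketched as a simple bookkeeping structure. The class and its method names are hypothetical; only the 10-minute interval comes from the text above.

```python
class LivenessMonitor:
    """Hypothetical tracker that expires attempts that stop reporting."""

    def __init__(self, expiry_interval_ms):
        self.expiry_interval_ms = expiry_interval_ms
        self.last_heartbeat_ms = {}

    def register(self, attempt_id, now_ms):
        self.last_heartbeat_ms[attempt_id] = now_ms

    def heartbeat(self, attempt_id, now_ms):
        # Heartbeats from unknown or already expired attempts are ignored.
        if attempt_id in self.last_heartbeat_ms:
            self.last_heartbeat_ms[attempt_id] = now_ms

    def expire(self, now_ms):
        """Remove and return attempts silent for longer than the interval."""
        expired = sorted(
            attempt for attempt, last in self.last_heartbeat_ms.items()
            if now_ms - last > self.expiry_interval_ms
        )
        for attempt in expired:
            del self.last_heartbeat_ms[attempt]
        return expired


monitor = LivenessMonitor(expiry_interval_ms=600_000)  # 10 minutes
monitor.register("am_1", now_ms=0)
monitor.register("am_2", now_ms=0)
monitor.heartbeat("am_1", now_ms=500_000)

first_sweep = monitor.expire(now_ms=700_000)     # am_2 silent for 700 s
second_sweep = monitor.expire(now_ms=1_200_000)  # am_1 silent for 700 s
```

Passing the clock in as `now_ms` keeps the sketch deterministic; a real monitor would read the time itself and sweep on a timer.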

Resource Requirements

Once the liveliness reports with the ResourceManager are taken care of, the application/framework needs to figure out its own resource requirements. It may need either a static definition of resources or a dynamic one.

Resource requirements are referred to as static when they are decided at the time of application submission (in most cases, by the client) and when, once the ApplicationMaster starts running, there is no change in that specification. For example, in the case of Hadoop MapReduce, the number of maps is based on the input splits for MapReduce applications and the number of reducers on user input; thus this number depends on a static set of resources selected before the application’s submission.

Even if the requirements are static, there is another differentiating characteristic in terms of how the scheduling of those resources happens:

- All of the allocated containers may be required to run together, a kind of gang scheduling where resource usage follows a static all-or-nothing model.
- Alternatively, resource usage may change elastically, such that containers can proceed with their work as they are allocated independently of the availability of resources for the remaining containers.

When dynamic resource requirements are applied, the ApplicationMaster may choose how many resources to request at run time based on criteria such as user hints, availability of cluster resources, and business logic.

In either case, once a set of resource requirements is clearly defined, the ApplicationMaster can begin sending the requests across to the scheduler and then schedule the allocated containers to do the desired work.
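The difference between the two styles can be made concrete with a short sketch. Both functions are hypothetical simplifications: the static one assumes one map per fixed-size chunk of input, which ignores how real input splits are computed, and the dynamic one assumes that cluster headroom and an optional user cap are the only criteria.

```python
def static_map_count(input_size_bytes, split_size_bytes):
    """Static: fixed before submission from the input size (ceiling division)."""
    return -(-input_size_bytes // split_size_bytes)


def dynamic_container_count(pending_tasks, headroom_mb, container_mb,
                            user_cap=None):
    """Dynamic: decided at run time from current headroom and user hints."""
    affordable = headroom_mb // container_mb
    count = min(pending_tasks, affordable)
    if user_cap is not None:
        count = min(count, user_cap)
    return max(count, 0)


MIB = 2 ** 20
maps_exact = static_map_count(1024 * MIB, 128 * MIB)
maps_spill = static_map_count(1024 * MIB + 1, 128 * MIB)

ask_now = dynamic_container_count(100, headroom_mb=16384, container_mb=2048)
ask_capped = dynamic_container_count(100, headroom_mb=16384,
                                     container_mb=2048, user_cap=5)
```

The static count never changes once the application is submitted, whereas the dynamic count would be recomputed whenever headroom or pending work changes.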

Scheduling

When an ApplicationMaster accumulates enough resource requests or a timer expires, it can send the requests in a heartbeat message, via the allocate API, to the ResourceManager. The allocate call is the single most important API between the ApplicationMaster and the scheduler. It is used by the ApplicationMaster to inform the ResourceManager about its requests; it is also used as the liveliness signal. At any point in time, only one thread in the ApplicationMaster should invoke the allocate API; all such calls are serialized on the ResourceManager per ApplicationAttempt. As a result, if multiple threads ask for resources via the allocate API, each thread may get an inconsistent view of the overall resource requests.
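One way to respect the single-caller guidance is to let worker threads queue their requests and have one heartbeat thread send them. The sketch below assumes a hypothetical `allocate_fn` callable in place of the real allocate RPC; the class and request names are likewise illustrative.

```python
import threading


class AllocateClient:
    """Funnels all resource requests through one heartbeat caller."""

    def __init__(self, allocate_fn):
        self._allocate_fn = allocate_fn  # hypothetical stand-in for the RPC
        self._pending = []
        self._lock = threading.Lock()

    def add_request(self, request):
        # Any worker thread may queue a request; none of them calls allocate.
        with self._lock:
            self._pending.append(request)

    def heartbeat(self):
        # Intended to be called from a single heartbeat thread only.
        with self._lock:
            batch, self._pending = self._pending, []
        # An empty batch is still sent, since the call doubles as the
        # liveliness signal.
        return self._allocate_fn(batch)


calls = []


def fake_allocate(batch):
    calls.append(list(batch))
    return len(batch)


client = AllocateClient(fake_allocate)
workers = [
    threading.Thread(target=client.add_request, args=(f"request-{i}",))
    for i in range(3)
]
for w in workers:
    w.start()
for w in workers:
    w.join()

granted = client.heartbeat()  # sends the three queued requests together
idle = client.heartbeat()     # nothing pending, still a heartbeat
```

Because every request passes through the same queue, the heartbeat caller always sends one consistent view of what the application is asking for.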