OpenGL SuperBible: Comprehensive Tutorial and Reference, Sixth Edition (2013)

Part II: In Depth

Chapter 9. Fragment Processing and the Framebuffer

What You’ll Learn in This Chapter

• How data is passed into fragment shaders, how to control the way it’s sent there, and what to do with it once it gets there

• How to create your own framebuffers and control the format of data that they store

• How to produce more than just one output from a single fragment shader

• How to get data out of your framebuffer and into textures, buffers, and your application’s memory

This chapter is all about the back end — everything that happens after rasterization. We will take an in-depth look at some of the interesting things you can do with a fragment shader, what happens to your data once it leaves the fragment shader, and how to get it back into your application. We’re also going to look at ways to improve the quality of the images that your applications produce, from rendering in high dynamic range, to antialiasing techniques (compensating from the pixelating effect of the display) and alternative color spaces that you can render into.

Fragment Shaders

You have already been introduced to the fragment shader stage. It is the stage in the pipeline where your shader code determines the color of each fragment before it is sent for composition into the framebuffer. The fragment shader runs once per fragment, where a fragment is a virtual element of processing that might end up contributing to the final color of a pixel. Its inputs are generated by the fixed-function interpolation phase that executes as part of rasterization. By default, all members of the input blocks to the fragment shader are smoothly interpolated across the primitive being rasterized, with the endpoints of that interpolation being fed by the last stage in the front end (which may be the vertex, tessellation evaluation, or geometry shader stages). However, you have quite a bit of control over how that interpolation is performed and even whether interpolation is performed at all.

Interpolation and Storage Qualifiers

You already read about some of the storage qualifiers supported by GLSL in earlier chapters. There are a few storage qualifiers that can be used to control interpolation that you can use for advanced rendering. They include the flat and noperspective, and we quickly go over each of these here.

Disabling Interpolation

When you declare an input to your fragment shader, that input is generated, or interpolated, across the primitive being rendered. However, whenever you pass an integer from the front end to the back end, interpolation must be disabled — this is done automatically for you because OpenGL isn’t capable of smoothly interpolating integers. It is also possible to explicitly disable interpolation for floating-point fragment shader inputs. Fragment shader inputs for which interpolation has been disabled are known as flat inputs (in contrast to smooth inputs, referring to the smooth interpolation normally performed by OpenGL). To create a flat input to the fragment shader for which interpolation is not performed, declare it using the flat storage1 qualifier, as in

1. It’s actually legal to explicitly declare floating-point fragment shader inputs with the smooth storage qualifier, although this is normally redundant as this is the default.

flat in vec4 foo;

flat in int bar;

flat in mat3 baz;

You can also apply interpolation qualifiers to input blocks, which is where the smooth qualifier comes in handy. Interpolation qualifiers applied to blocks are inherited by its members — that is, they are applied automatically to all members of the block. However, it’s possible to apply a different qualifier to individual members of the block. Thus, consider this snippet:

flat in INPUT_BLOCK

{

vec4 foo;

int bar;

smooth mat3 baz;

};

Here, foo has interpolation disabled because it inherits flat qualification from the parent block. bar is automatically flat because it is an integer. However, even though baz is a member of a block that has the flat interpolation qualifier, it is smoothly interpolated because it has the smoothinterpolation qualifier applied at the member level.

Don’t forget that while we are describing this in terms of fragment shader inputs, storage and interpolation qualifiers used on the corresponding outputs in the front end must match those used at the input of the fragment shader. This means that whatever the last stage in your front end, whether it’s a vertex, tessellation evaluation, or geometry shader, you should also declare the matching output with the flat qualifier.

When flat inputs to a fragment are in use, their value comes from only one of the vertices in a primitive. When the primitives being rendered are single points, then there is only one choice as to where to get the data. However, when the primitives being rendered are lines or triangles, either the first or last vertex in the primitive is used. The vertex from which the values for flat fragment shader inputs are taken is known as the provoking vertex, and you can decide whether that should be the first or last vertex by calling

void glProvokingVertex(GLenum provokeMode);

Here, provokeMode indicates which vertex should be used, and valid values are GL_FIRST_VERTEX_CONVENTION and GL_LAST_VERTEX_CONVENTION. The default is GL_LAST_VERTEX_CONVENTION.

Interpolating without Perspective Correction

As you have learned, OpenGL interpolates the values of fragment shader inputs across the face of primitives, such as triangles, and presents a new value to each invocation of the fragment shader. By default, the interpolation is performed smoothly in the space of the primitive being rendered. That means that if you were to look at the triangle flat on, the steps that the shader inputs take across its surface would be equal. However, OpenGL performs interpolation in screen space as it steps from pixel to pixel. Very rarely is a triangle seen directly face on, and so perspective foreshortening means that the step in each varying from pixel to pixel is not constant — that is, they are not linear in screen space. OpenGL corrects for this by using perspective-correct interpolation. To implement this, it interpolates values that are linear in screen space and uses those to derive the actual values of the shader inputs at each pixel.

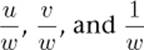

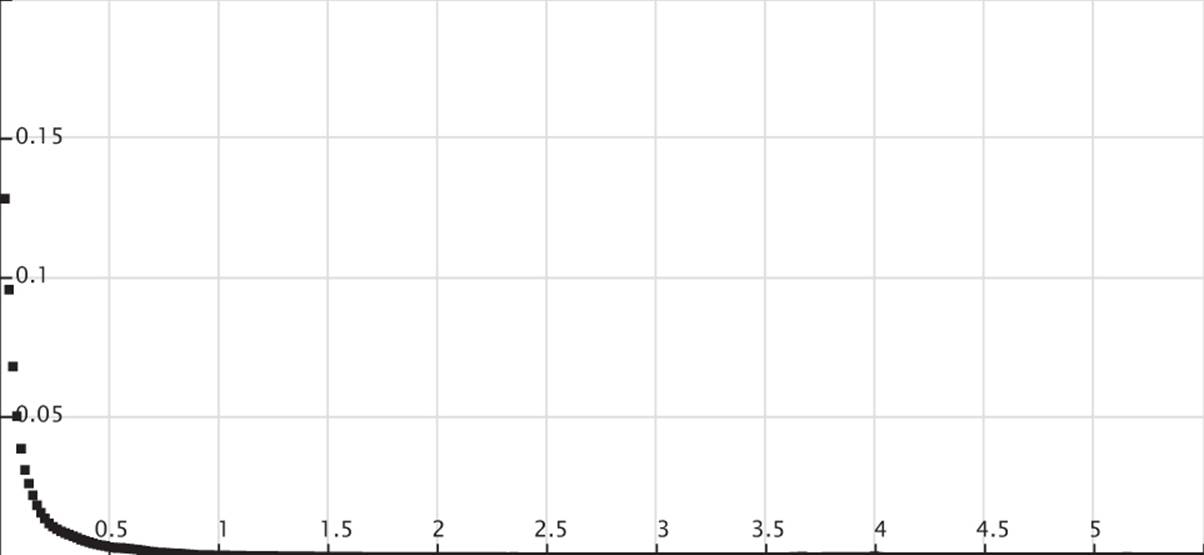

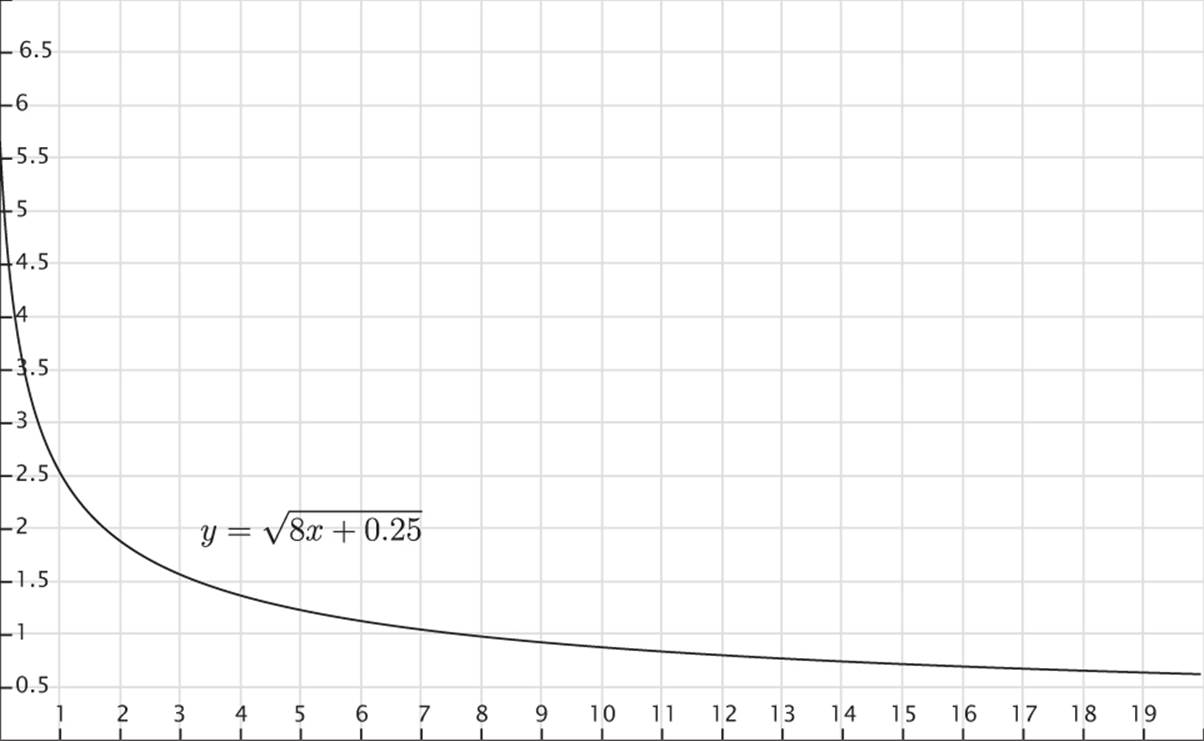

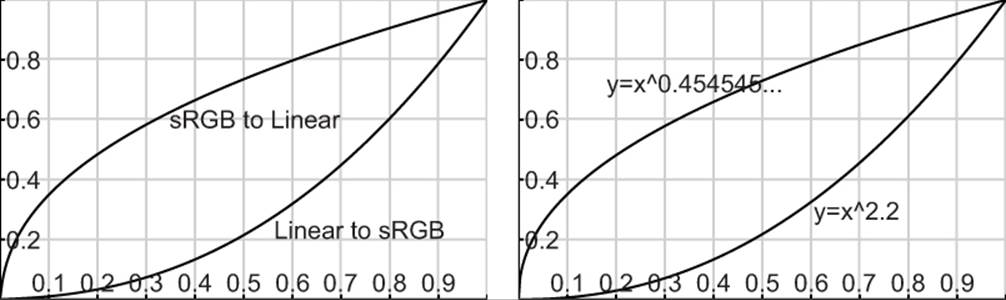

Consider a texture coordinate, uv, that is to be interpolated across a triangle. Neither u nor v is linear in screen space. However (due to some math that is beyond the scope of this section), ![]() and

and ![]() are linear in screen space, as is

are linear in screen space, as is ![]() (the fourth component of the fragment’s coordinate). So, what OpenGL actually interpolates is

(the fourth component of the fragment’s coordinate). So, what OpenGL actually interpolates is

At each pixel, it reciprocates ![]() to find w and then multiplies

to find w and then multiplies ![]() and

and ![]() by w to find u and v. This provides perspective-correct values of the interpolants to each instance of the fragment shader.

by w to find u and v. This provides perspective-correct values of the interpolants to each instance of the fragment shader.

Normally, this is what you want. However, there may be times when you don’t want this. If you actually want interpolation to be carried out in screen space regardless of the orientation of the primitive, you can use the noperspective storage qualifier, like this:

noperspective out vec2 texcoord;

in the vertex shader (or whatever shader is last in the front end of your pipeline), and

noperspective in vec2 texcoord;

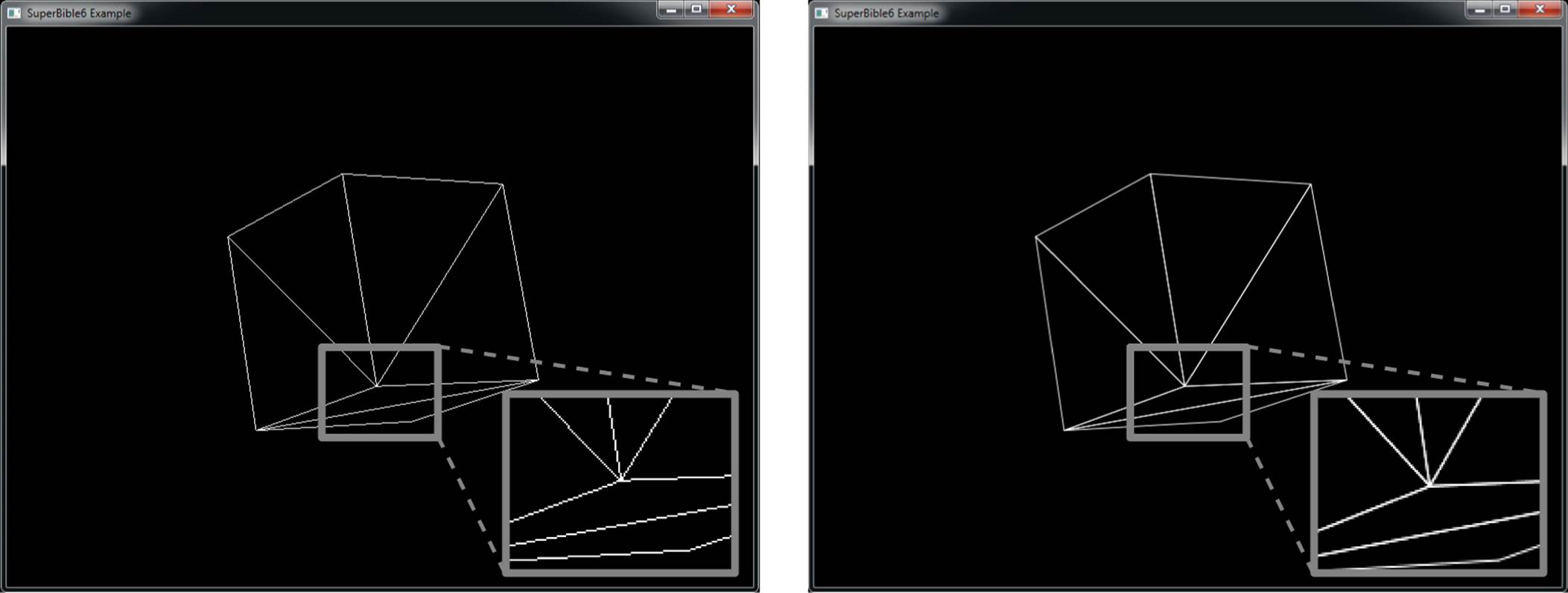

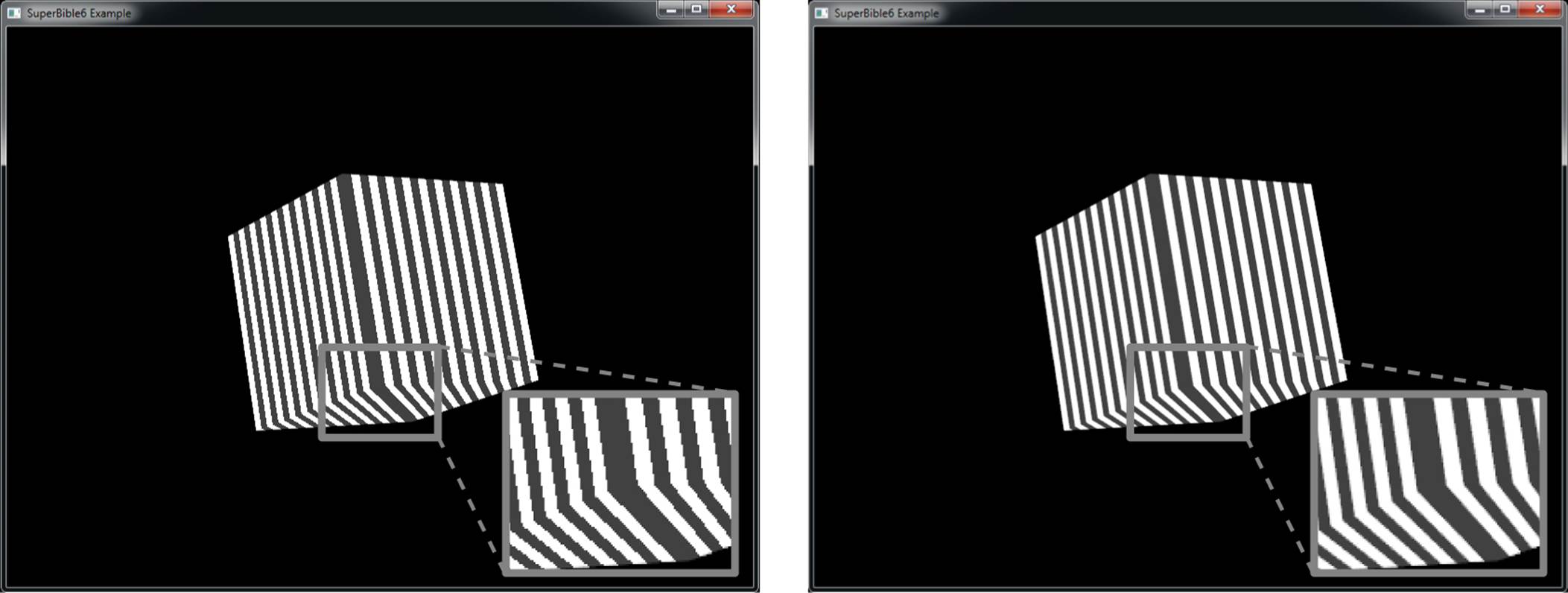

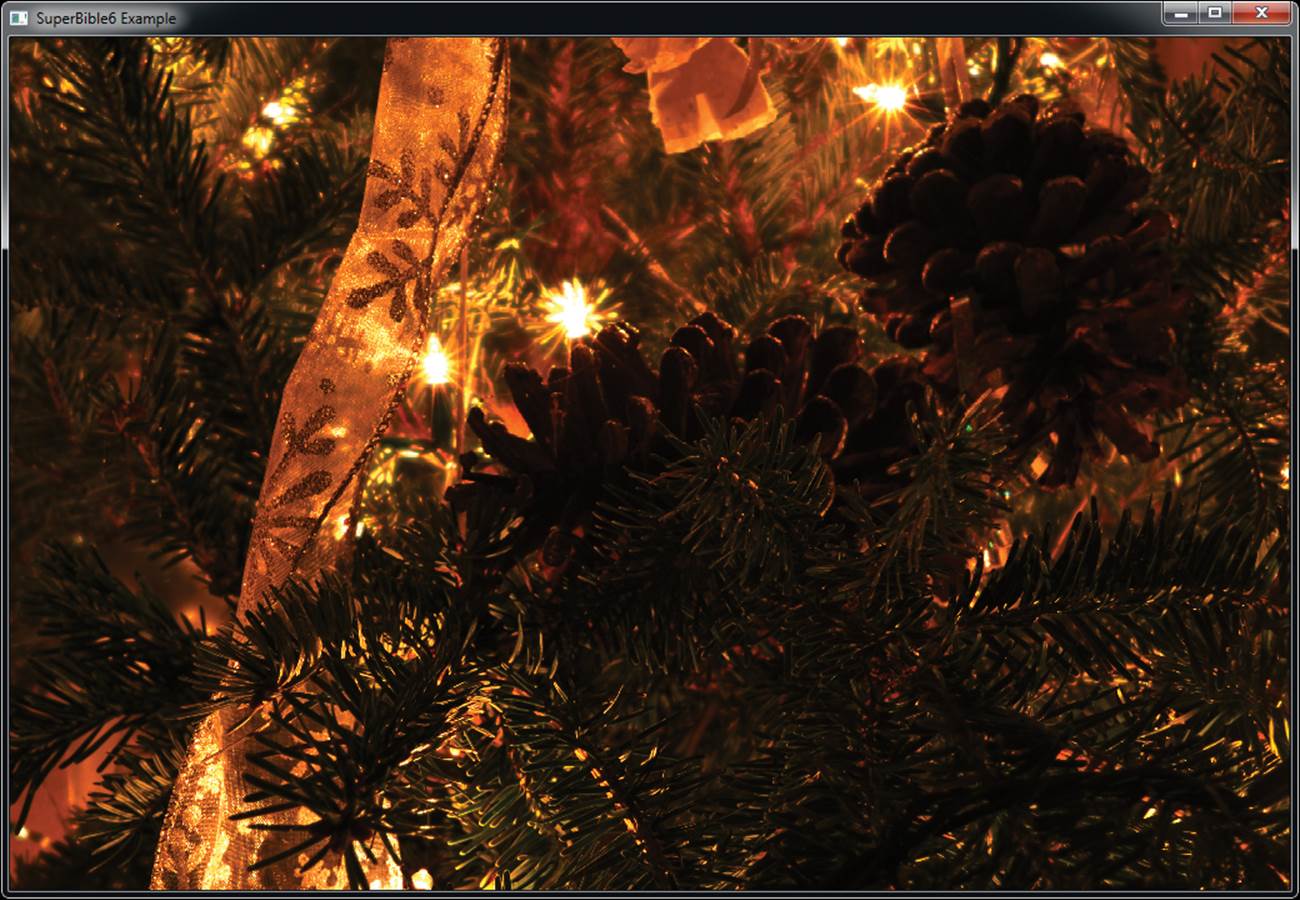

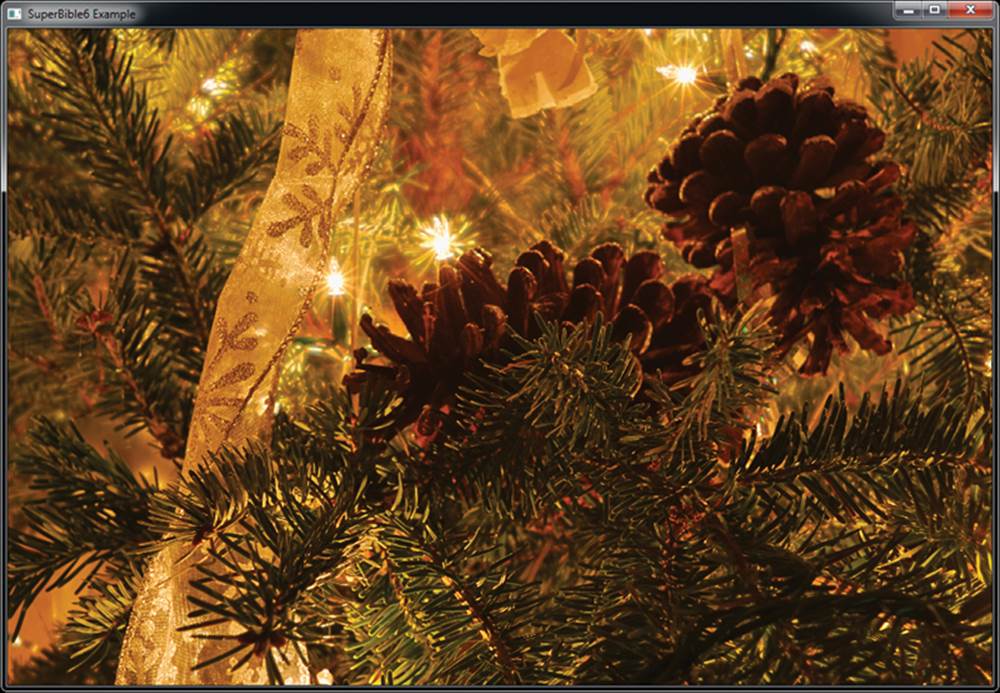

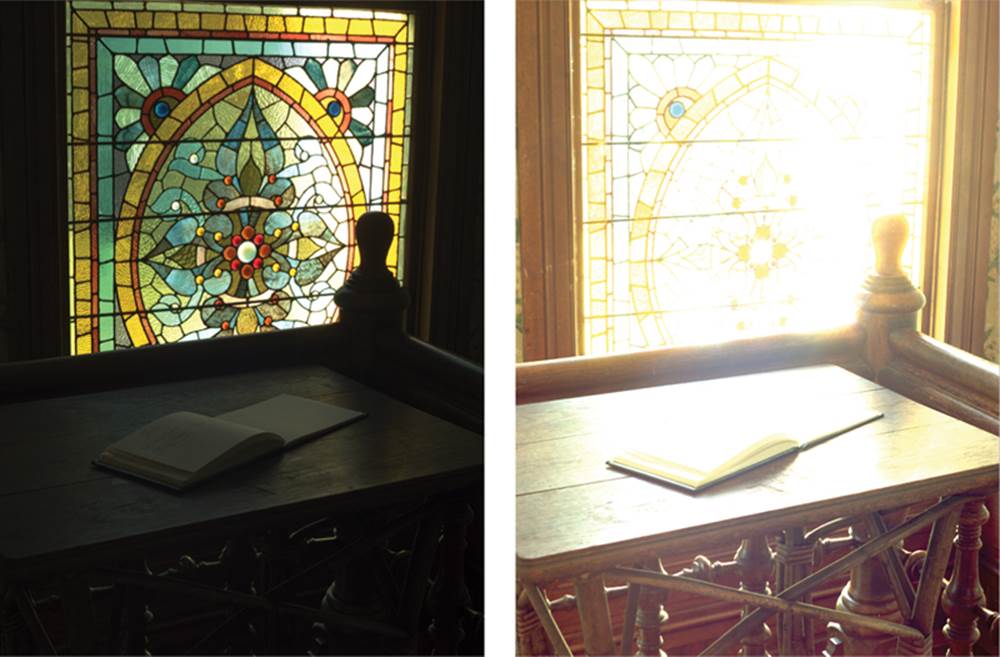

in the fragment shader, for example. The results of using perspective-correct and screen-space linear (noperspective) rendering are shown in Figure 9.1.

Figure 9.1: Contrasting perspective-correct and linear interpolation

The top image of Figure 9.1 shows perspective-correct interpolation applied to a pair of triangles as its angle to the viewer changes. Meanwhile, the bottom image of Figure 9.1 shows how the noperspective storage qualifier has affected the interpolation of texture coordinates. As the pair of triangles moves to a more and more oblique angle relative to the viewer, the texture becomes more and more skewed.

Per-Fragment Tests

Once the fragment shader has run, OpenGL needs to figure what do to with the fragments that are generated. Geometry has been clipped and transformed into normalized device space, and so all of the fragments that are produced by rasterization are known to be on the screen (or inside the window). However, OpenGL then performs a number of other tests on the fragment to determine if and how it should be written to the framebuffer. These tests (in logical order) are the scissor test, the stencil test, and the depth test. These are covered in pipeline order in the following section.

Scissor Testing

The scissor rectangle is an arbitrary rectangle that you can specify in screen coordinates that allows you to further clip rendering to a particular region.

Unlike the viewport, geometry is not clipped directly against the scissor rectangle, but rather individual fragments are tested against the rectangle as part of post-rasterization2 processing. As with viewport rectangles, OpenGL supports an array of scissor rectangles. To set them up, you can call glScissorIndexed() or glScissorIndexedv(), whose prototypes are

2. It may be the case that some OpenGL implementations either apply scissoring at the end of the geometry stage, or in an early part of rasterization. Here, we are describing the logical OpenGL pipeline, though.

void glScissorIndexed(GLuint index,

GLint left,

GLint bottom,

GLsizei width,

GLsizei height);

void glScissorIndexedv(GLuint index,

const GLint * v);

For both functions, the index parameter specifies which scissor rectangle you want to change. The left, bottom, width, and height parameters describe a region in window coordinates that defines the scissor rectangle. For glScissorIndexedv(), the left, bottom, width, and height parameters are stored (in that order) in an array whose address is passed in v.

To select a scissor rectangle, the gl_ViewportIndex built-in output from the geometry shader is used (yes, the same one that selects the viewport). That means that given an array of viewports and an array of scissor rectangles, the same index is used for both arrays. To enable scissor testing, call

glEnable(GL_SCISSOR_TEST);

To disable it, call

glDisable(GL_SCISSOR_TEST);

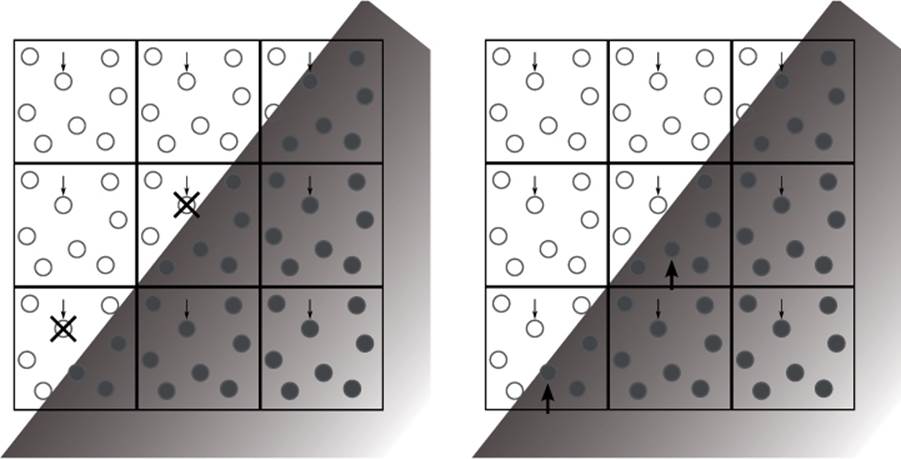

The scissor test starts off disabled, so unless you need to use it, you don’t need to do anything. If we again use the shader of Listing 8.36, which employs an instanced geometry shader to write to gl_ViewportIndex, enable the scissor test, and set some scissor rectangles, we can mask off sections of rendering. Listing 9.1 shows part of the code from the multiscissor, which is to set up our scissor rectangles, and Figure 9.2 shows the result of rendering with this code.

// Turn on scissor testing

glEnable(GL_SCISSOR_TEST);

// Each rectangle will be 7/16 of the screen

int scissor_width = (7 * info.windowWidth) / 16;

int scissor_height = (7 * info.windowHeight) / 16;

// Four rectangles - lower left first...

glScissorIndexed(0, 0, 0, scissor_width, scissor_height);

// Lower right...

glScissorIndexed(1,

info.windowWidth - scissor_width, 0,

info.windowWidth - scissor_width, scissor_height);

// Upper left...

glScissorIndexed(2,

0, info.windowHeight - scissor_height,

scissor_width, scissor_height);

// Upper right...

glScissorIndexed(3,

info.windowWidth - scissor_width,

info.windowHeight - scissor_height,

scissor_width, scissor_height);

Listing 9.1: Setting up scissor rectangle arrays

Figure 9.2: Rendering with four different scissor rectangles

An important point to remember about the scissor test is that when you clear the framebuffer using glClear() or glClearBufferfv(), the first scissor rectangle is applied as well. This means that you can clear an arbitrary rectangle of the framebuffer using the scissor rectangle, but it can also lead to errors if you leave the scissor test enabled at the end of a frame and then try to clear the framebuffer ready for the next frame.

Stencil Testing

The next step in the fragment pipeline is the stencil test. Think of the stencil test as cutting out a shape in cardboard and then using that cutout to spray-paint the shape on a mural. The spray paint only hits the wall in places where the cardboard is cut out (just like a real stencil). If pixel format of the framebuffer includes a stencil buffer, you can similarly mask your draws to the framebuffer. You can enable stenciling by calling glEnable() and passing GL_STENCIL_TEST in the cap parameter. Most implementations only support stencil buffers that contain 8 bits, but some configurations may support fewer bits (or more, but this is extremely uncommon).

Your drawing commands can have a direct effect on the stencil buffer, and the value of the stencil buffer can have a direct effect on the pixels you draw. To control interactions with the stencil buffer, OpenGL provides two commands: glStencilFuncSeparate() and glStencilOpSeparate(). OpenGL lets you set both of these separately for front- and back-facing geometry. The prototypes of glStencilFuncSeparate() and glStencilOpSeparate() are

void glStencilFuncSeparate(GLenum face,

GLenum func,

GLint ref,

GLuint mask);

void glStencilOpSeparate(GLenum face,

GLenum sfail,

GLenum dpfail,

GLenum dppass);

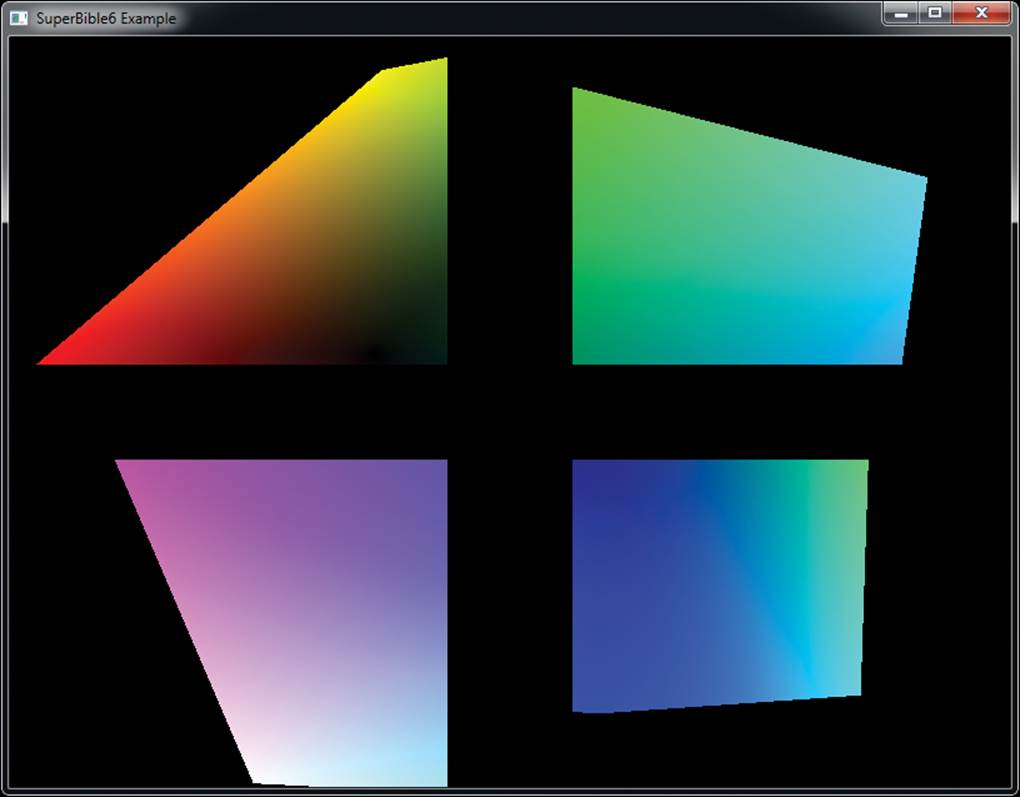

First let’s look at glStencilFuncSeparate(), which controls the conditions under which the stencil test passes or fails. The test is applied separately for front-facing and back-facing primitives, each has its own state, and you can pass GL_FRONT, GL_BACK, or GL_FRONT_AND_BACK for face, signifying which geometry will be affected. The value of func can be any of the values in Table 9.1. These specify under what conditions geometry will pass the stencil test.

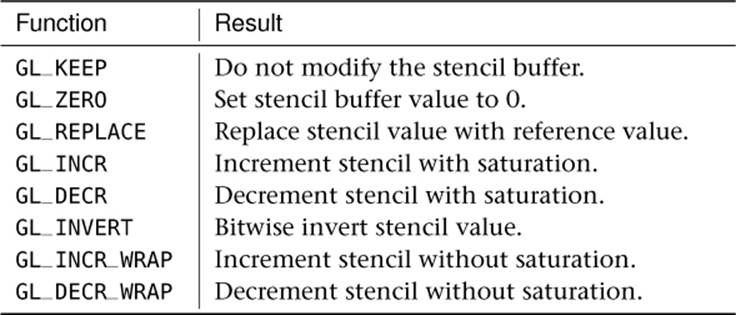

Table 9.1. Stencil Functions

The ref value is the reference used to compute the pass or fail result, and the mask parameter lets you control which bits of the reference and the buffer are compared. In pseudo-code, the operation of the stencil test is effectively implemented as

GLuint current = GetCurrentStencilContent(x, y);

if (compare(current & mask,

ref & mask,

front_facing ? front_op : back_op))

{

passed = true;

}

else

{

passed = false;

}

The next step is to tell OpenGL what to do when the stencil test passes or fails by using glStencilOpSeparate(). This function takes four parameters, with the first specifying which faces will be affected. The next three parameters control what happens after the stencil test is performed and can be any of the values in Table 9.2. The second parameter, sfail, is the action taken if the stencil test fails. The dpfail parameter specifies the action taken if the depth buffer test fails, and the final parameter, dppass, specifies what happens if the depth buffer test passes. Note that because stencil testing comes before depth testing (which we’ll get to in a moment), should the stencil test fail, the fragment is killed right there and no further processing is performed — which explains why there are only three operations here rather than four.

Table 9.2. Stencil Operations

So how does this actually work out? Let’s look at a simple example of typical usage shown in Listing 9.2. The first step is to clear the stencil buffer to 0 by calling glClearBufferiv() with buffer set to GL_STENCIL, drawBuffer set to 0, and value pointing to a variable containing zero. Next, a window border is drawn that may contain details such as a player’s score and statistics. Set up the stencil test to always pass with the reference value being 1 by calling glStencilFuncSeparate(). Next, tell OpenGL to replace the value in the stencil buffer only when the depth test passes by calling glStencilOpSeparate() followed by rendering the border geometry. This turns the border area pixels to 1 while the rest of the framebuffer remains at 0. Finally, set up the stencil state so that the stencil test will only pass if the stencil buffer value is 0, and then render the rest of the scene. This causes all pixels that would overwrite the border we just drew to fail the stencil test and not be drawn to the framebuffer. Listing 9.2 shows an example of how stencil can be used.

// Clear stencil buffer to 0

const GLint zero;

glClearBufferiv(GL_STENCIL, 0, &zero);

// Setup stencil state for border rendering

glStencilFuncSeparate(GL_FRONT, GL_ALWAYS, 1, 0xff);

glStencilOpSeparate(GL_FRONT, GL_KEEP, GL_ZERO, GL_REPLACE);

// Render border decorations

. . .

// Now, border decoration pixels have a stencil value of 1

// All other pixels have a stencil value of 0.

// Setup stencil state for regular rendering,

// fail if pixel would overwrite border

glStencilFuncSeparate(GL_FRONT_AND_BACK, GL_LESS, 1, 0xff);

glStencilOpSeparate(GL_FRONT, GL_KEEP, GL_KEEP, GL_KEEP);

// Render the rest of the scene, will not render over stenciled

// border content

. . .

Listing 9.2: Example stencil buffer usage, border decorations

There are also two other stencil functions: glStencilFunc() and glStencilOp(). These behave just as glStencilFuncSeparate() and glStencilOpSeparate() would if you were to set the face parameter to GL_FRONT_AND_BACK.

Controlling Updates to the Stencil Buffer

By clever manipulation of the stencil operation modes (setting them all to the same value, or judicious use of GL_KEEP, for example), you can perform some pretty flexible operations on the stencil buffer. However, beyond this, it’s possible to control updates to individual bits of the stencil buffer. The glStencilMaskSeparate() function takes a bitfield of which bits in the stencil buffer should be updated and which should be left alone. Its prototype is

void glStencilMaskSeparate(GLenum face, GLuint mask);

As with the stencil test function, there are two sets of state — one for front-facing and one for back-facing primitives. Just like glStencilFuncSeparate(), the face parameter specifies which types of primitives should be affected. The mask parameter is a bitfield that maps to the bits in the stencil buffer — if the stencil buffer has less than 32 bits (8 is the maximum supported by most current OpenGL implementations), only that many of the least significant bits of mask are used. If a mask bit is set to 1, the corresponding bit in the stencil buffer can be updated. But if the mask bit is 0, the corresponding stencil bit will not be written to. For instance, consider the following code:

GLuint mask = 0x000F;

glStencilMaskSeparate(GL_FRONT, mask);

glStencilMaskSeparate(GL_BACK, ~mask);

In the preceding example, the first call to glStencilMaskSeparate() affects front-facing primitives and enables the lower four bits of the stencil buffer for writing while leaving the rest disabled. The second call to glStencilMaskSeparate() sets the opposite mask for back-facing primitives. This essentially allows you to pack two stencil values together into an 8-bit stencil buffer — the lower four bits being used for front-facing primitives, and the upper four bits being used for back-facing primitives.

Depth Testing

After stencil operations are complete and if depth testing is enabled, OpenGL tests the depth value of a fragment against the existing content of the depth buffer. If depth writes are also enabled and the fragment has passed the depth test, the depth buffer is updated with the depth value of the fragment. If the depth test fails, the fragment is discarded and does not pass to the following fragment operations.

The input to primitive assembly is a set of vertex positions that make up primitives. Each has a z coordinate. This coordinate is scaled and biased such that the normal3 visible range of values lies between zero and one. This is the value that’s usually stored in the depth buffer. During depth testing, OpenGL reads the depth value of the fragment from the depth buffer at the current fragment’s coordinate and compares it to the generated depth value for the fragment being processed.

3. It’s possible to turn off this visibility check and consider all fragments visible, even if they lie outside the zero-to-one range that is stored in the depth buffer.

You can choose what comparison operator is used to figure out if the fragment “passed” the depth test. To set the depth comparison operator (or depth function), call glDepthFunc(), whose prototype is

void glDepthFunc(GLenum func);

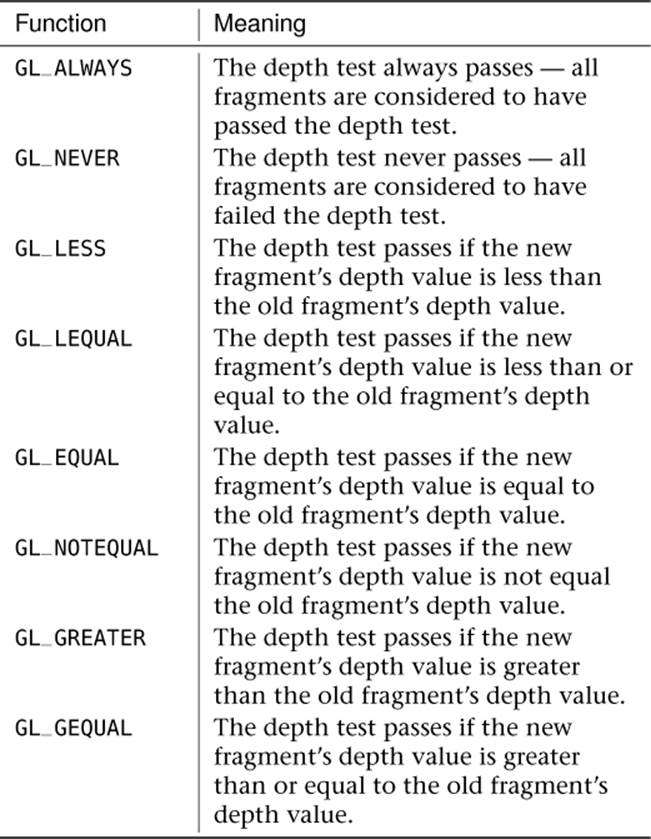

Here, func is one of the available depth comparison operators. The legal values for func and what they mean are shown in Table 9.3.

Table 9.3. Depth Comparison Functions

If the depth test is disabled, it is as if the depth test always passes (i.e., the depth function is set to GL_ALWAYS), with one exception: The depth buffer is only updated when the depth test is enabled. If you want your geometry to be written into the depth buffer unconditionally, you must enable the depth test and set the depth function to GL_ALWAYS. By default, the depth test is disabled. To turn it on, call

glEnable(GL_DEPTH_TEST);

To turn it off again, simply call glDisable() with the GL_DEPTH_TEST parameter. It is a very common mistake to disable the depth test and expect it to be updated. Again, the depth buffer is not updated unless the depth test is also enabled.

Controlling Updates of the Depth Buffer

Writes to the depth buffer can be turned on and off, regardless of the result of the depth test. Remember, the depth buffer is only updated if the depth test is turned on (although the test function can be set to GL_ALWAYS if you don’t actually need depth testing and only wish to update the depth buffer). The glDepthMask() function takes a Boolean flag that turns writes to the depth buffer on if it’s GL_TRUE and off if GL_FALSE. For example,

glDepthMask(GL_FALSE);

will turn writes to the depth buffer off, regardless of the result of the depth test. You can use this, for example, to draw geometry that should be tested against the depth buffer, but that shouldn’t update it. By default, the depth mask is set to GL_TRUE, which means you won’t need to change it if you want depth testing and writing to behave as normal.

Depth Clamping

OpenGL represents the depth of each fragment as a finite number, scaled between zero and one. A fragment with a depth of zero is intersecting the near plane (and would be jabbing you in the eye if it were real), and a fragment with a depth of one is at the farthest representable depth but not infinitely far away. To eliminate the far plane and draw things at any arbitrary distance, we would need to store arbitrarily large numbers in the depth buffer — something that’s not really possible. To get around this, OpenGL has the option to turn off clipping against the near and far planes and instead clamp the generated depth values to the range zero to one. This means that any geometry that protrudes behind the near plane or beyond the far plane will essentially be projected onto that plane.

To enable depth clamping (and simultaneously turn off clipping against the near and far planes), call

glEnable(GL_DEPTH_CLAMP);

and to disable depth clamping, call

glDisable(GL_DEPTH_CLAMP);

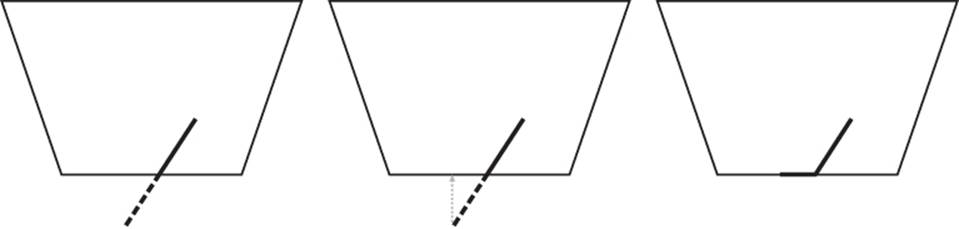

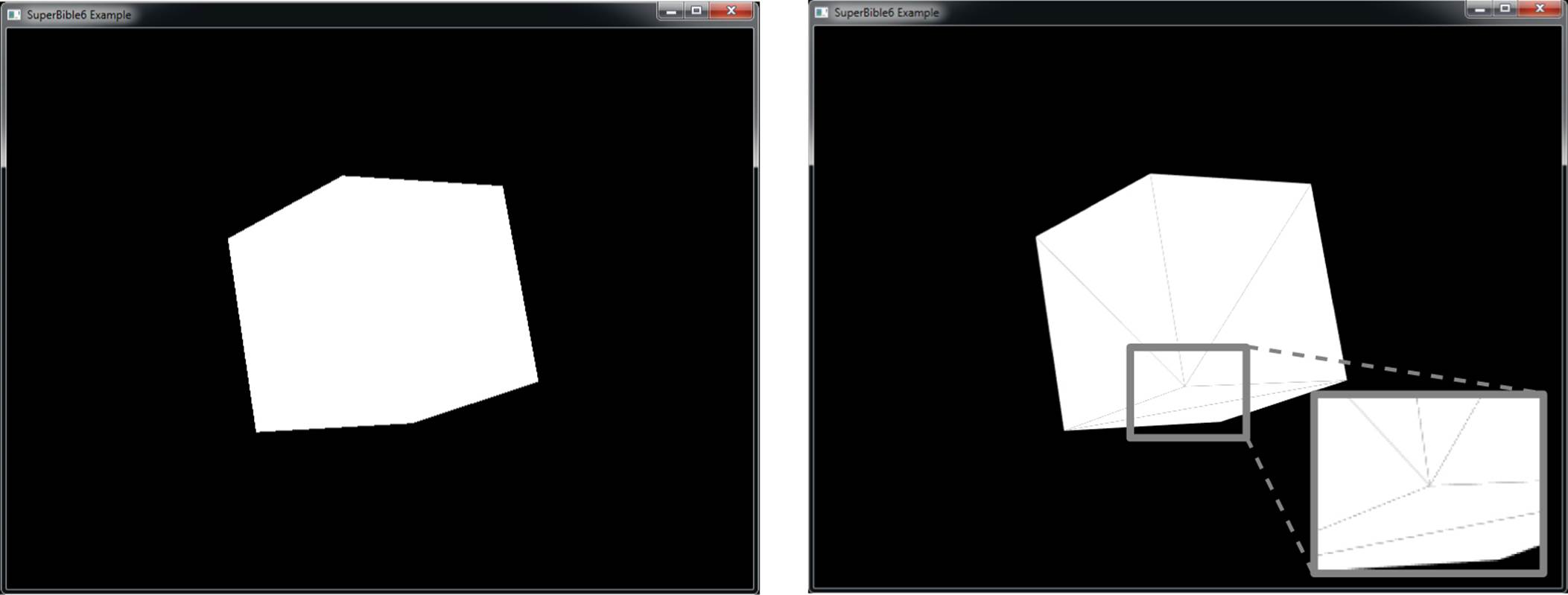

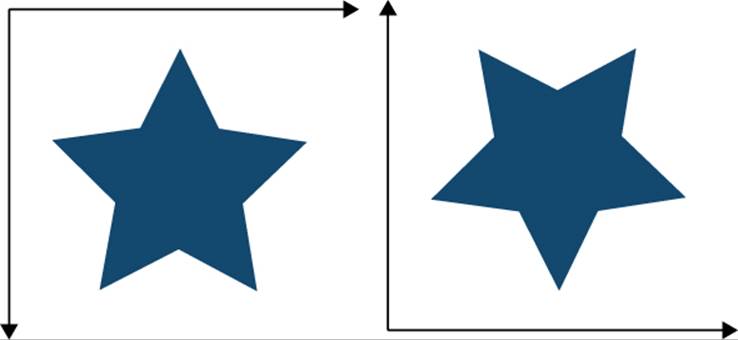

Figure 9.3 illustrates the effect of enabling depth clamping and drawing a primitive that intersects the near plane.

Figure 9.3: Effect of depth clamping at the near plane

It is simpler to demonstrate this in two dimensions, and so on the left of Figure 9.3, the view frustum is displayed as if we were looking straight down on it. The dark line represents the primitive that would have been clipped against the near plane, and the dotted line represents the portion of the primitive that was clipped away. When depth clamping is enabled, rather than clipping the primitive, the depth values that would have been generated outside the range zero to one are clamped into that range, effectively projecting the primitive onto the near plane (or the far plane, if the primitive would have clipped that). The center of Figure 9.3 shows this projection. What actually gets rendered is shown on the right of Figure 9.3. The dark line represents the values that eventually get written into the depth buffer. Figure 9.4 shows how this translates to a real application.

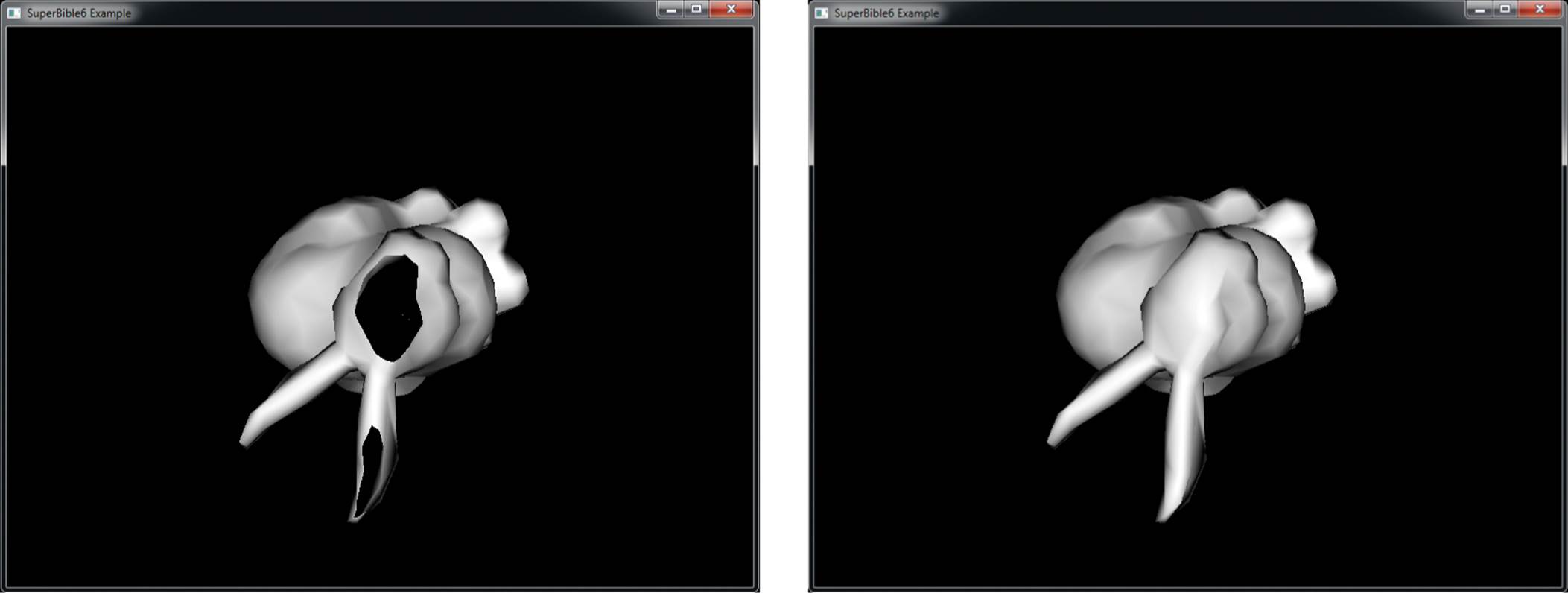

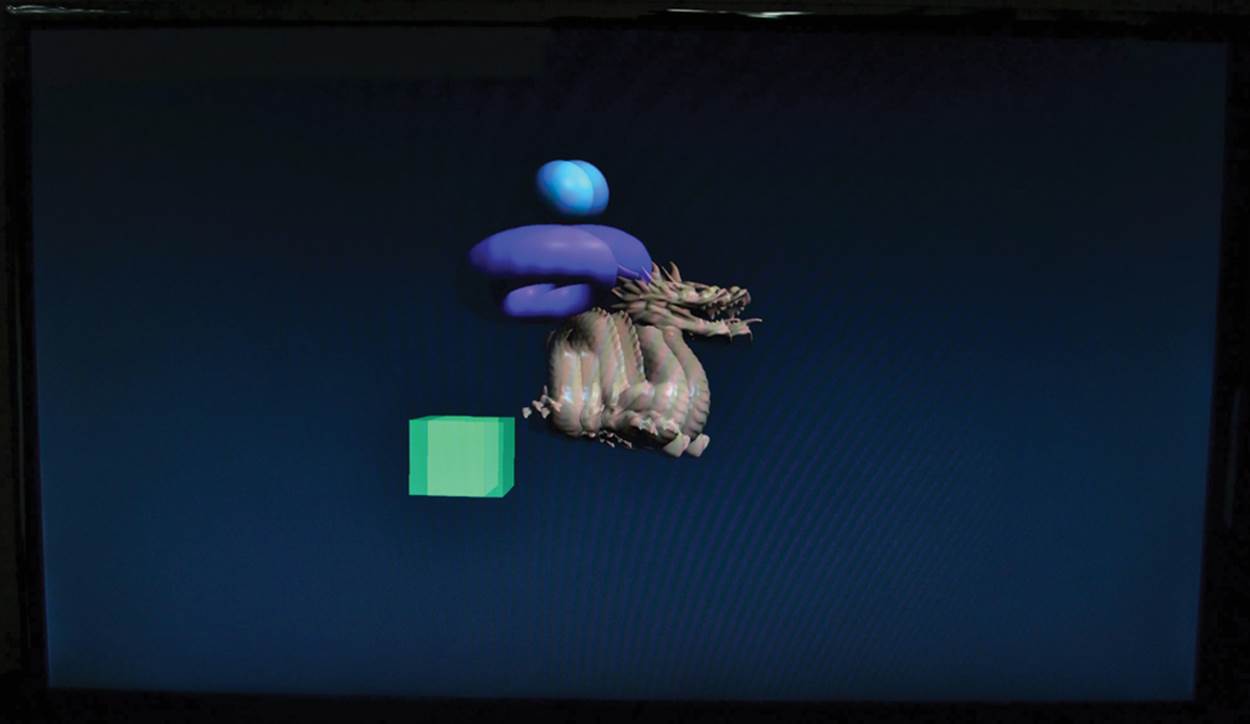

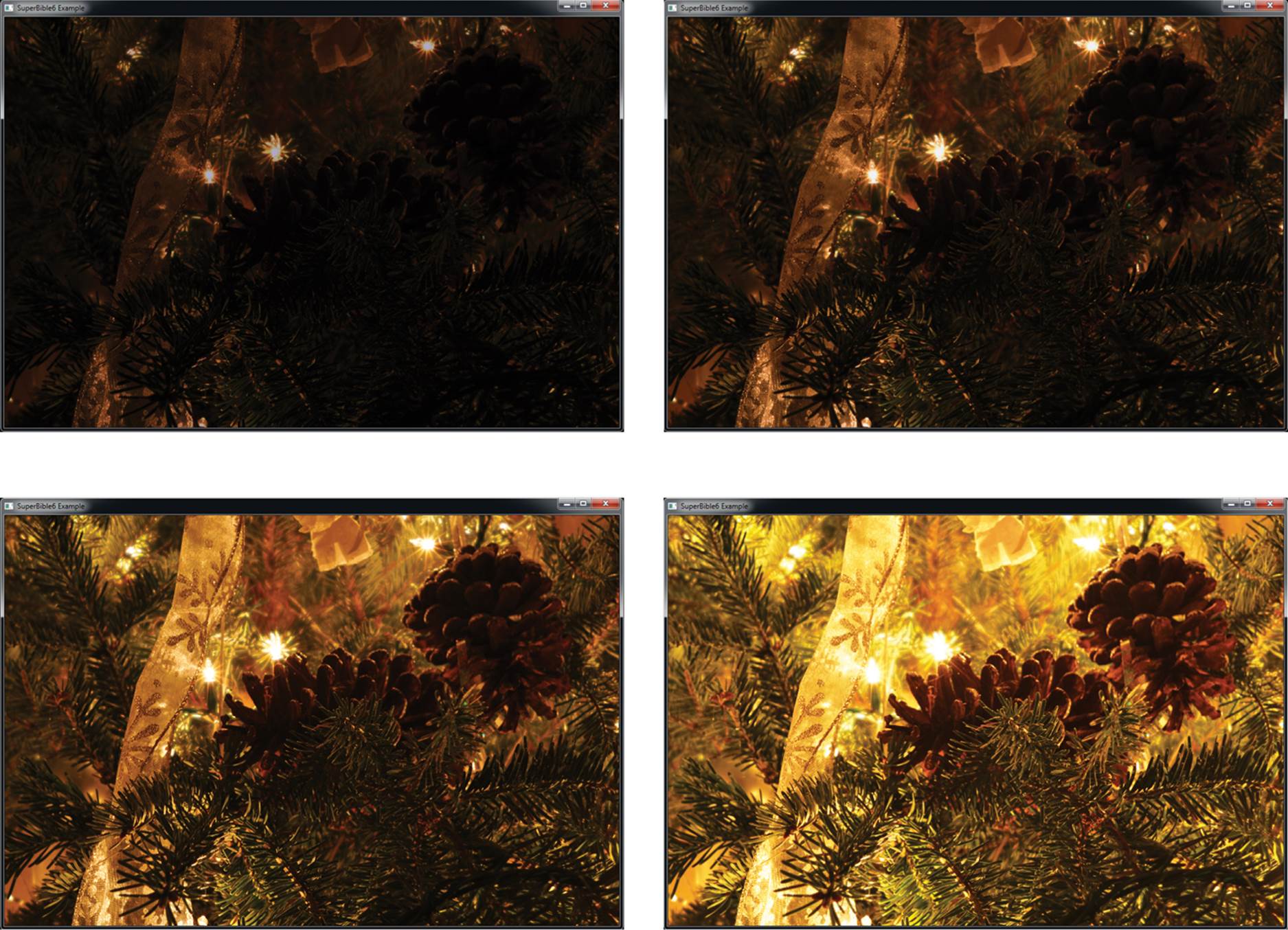

Figure 9.4: A clipped object with and without depth clamping

In the left image of Figure 9.4, the geometry has become so close to the viewer that it is partially clipped against the near plane. As a result, the portions of the polygons that would have been behind the near plane are simply not drawn, and so they leave a large hole in the model. You can see right through to the other side of the object, and the image is quite visibly incorrect. On the right of Figure 9.4, depth clamping has been enabled. As you can see, the geometry that was lost in the left image is back and fills the hole in the object. The values in the depth buffer aren’t technically correct, but this hasn’t translated to visual anomalies, and the picture produced looks better than that in the left image.

Early Testing

Logically, the depth and stencil tests occur after the fragment has been shaded, but most graphics hardware is capable of performing the test before your shader runs and avoiding the cost of executing that shader if the ownership test would fail. However, if a shader has side effects (such as directly writing to a texture) or would otherwise effect the outcome of the test, OpenGL can’t perform the tests first, and must always run your shader. Not only that, but it must always wait for the shader to finish executing before it can perform depth testing or update the stencil buffer.

One particular example of something you can do in your shader that would stop OpenGL from performing the depth test before executing it is writing to the built-in gl_FragDepth output.

The special built-in variable gl_FragDepth is available for writing an updated depth value to. If the fragment shader doesn’t write to this variable, the interpolated depth generated by OpenGL is used as the fragment’s depth value. Your fragment shader can either calculate an entirely new value for gl_FragDepth, or it can derive one from the value gl_FragCoord.z. This new value is subsequently used by OpenGL both as the reference for the depth test and as the value written to the depth buffer should the depth test pass. You can use this functionality, for example, to slightly perturb the values in the depth buffer and create physically bumpy surfaces. Of course, you’d need to shade such surfaces appropriately to make them appear bumpy, but when new objects were tested against the content of the depth buffer, the result would match the shading.

Because your shader changes the fragment’s depth value when you write to gl_FragDepth, there’s no way that OpenGL can perform the depth test before the shader runs because it doesn’t know what you’re going to put there. For this scenario, OpenGL provides some layout qualifiers that let you tell it what you plan to do with the depth value.

Now, remember that the range of values in the depth buffer is between 0.0 and 1.0, and that the depth test comparison operators include functions such as GL_LESS and GL_GREATER. Now, if you set the depth test function to GL_LESS, for example (which would pass for any fragment that is closerto the viewer than what is currently in the framebuffer), then if you only ever set gl_FragDepth to a value that is less than it would have been otherwise, then the fragment will pass the depth test regardless of whatever the shader does, and so the original test result remains valid. In this case, OpenGL now knows that it can perform the depth test before running your fragment shader, even though the logical pipeline has it running afterwards.

The layout qualifier you use to tell OpenGL what you’re going to do to depth is applied to a redeclaration of gl_FragDepth. The redeclaration of gl_FragDepth can take the form of any of the following:

layout (depth_any) out float gl_FragDepth;

layout (depth_less) out float gl_FragDepth;

layout (depth_greater) out float gl_FragDepth;

layout (depth_unchanged) out float gl_FragDepth;

If you use the depth_any layout qualifier, you’re telling OpenGL that you might write any value to gl_FragDepth. This is effectively the default — if OpenGL sees that your shader writes to gl_FragDepth, it has no idea what you did to it and assumes that the result could be anything. If you specify depth_less, you’re effectively saying that whatever you write to gl_FragDepth will result in the fragment’s depth value being less than it would have been otherwise. In this case, results from the GL_LESS and GL_LEQUAL comparison functions remain valid. Similarly, using depth_greaterindicates that your shader will only make the fragment’s depth greater than it would have been and, therefore, the result of the GL_GREATER and GL_GEQUAL tests remain valid.

The final qualifier, depth_unchanged, is somewhat unique. This tells OpenGL that whatever you do to gl_FragDepth, it’s free to assume you haven’t written anything to it that would change the result of the depth test. In the case of depth_any, depth_less, and depth_greater, although OpenGL becomes free to perform depth testing before your shader executes under certain circumstances, there are still times when it must run your shader and wait for it to finish. With depth_unchanged you are telling OpenGL that no matter what you do with the fragment’s depth value, the original result of the test remains valid. You might choose to use this if you plan to perturb the fragment’s depth slightly, but not in a way that would make it intersect any other geometry in the scene (or if you don’t care if it does).

Regardless of the layout qualifier you apply to a redeclaration of gl_FragDepth and what OpenGL decides to do about it, the value you write into gl_FragDepth will be clamped into the range 0.0 to 1.0 and then written into the depth buffer.

Color Output

The color output stage is the last part of the OpenGL pipeline before fragments are written to the framebuffer. It determines what happens to your color data between when it leaves your fragment shader and when it is finally displayed to the user.

Blending

For fragments that pass the per-fragment tests, blending is performed. Blending allows you to combine the incoming source color with the color already in the color buffer or with other constants using one of the many supported blend equations. If the buffer you are drawing to is fixed point, the incoming source colors will be clamped to 0.0 to 1.0 before any blending operations occur. Blending is enabled by calling

glEnable(GL_BLEND);

and disabled by calling

glDisable(GL_BLEND);

The blending functionality of OpenGL is powerful and highly configurable. It works by multiplying the source color (the value produced by your shader) by the source factor, then multiplying the color in the framebuffer by the destination factor, and then combining the results of these multiplications using an operation that you can choose called the blend equation.

Blend Functions

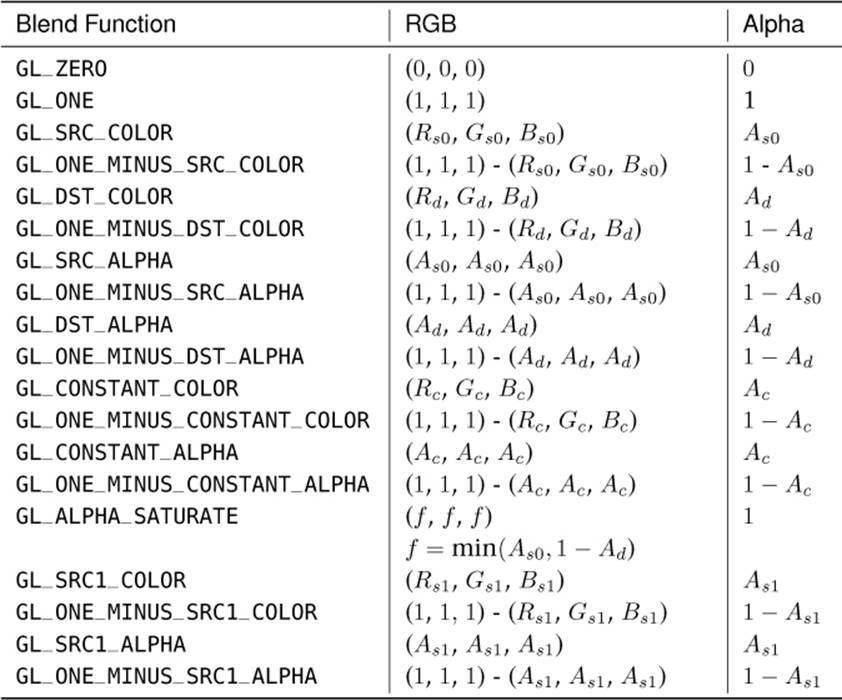

To choose the source and destination factors by which OpenGL will multiply the result of your shader and the value in the framebuffer, respectively, you can call glBlendFunc() or glBlendFuncSeparate(). glBlendFunc() lets you set the source and destination factors for all four channels of data (red, green, blue, and alpha). glBlendFuncSeparate(), on the other hand, allows you to set a source and destination factor for the red, green, and blue channels and another for the alpha channel.

glBlendFuncSeparate(GLenum srcRGB, GLenum dstRGB,

GLenum srcAlpha, GLenum dstaAlpha);

glBlendFunc(GLenum src, GLenum dst);

The possible values for these calls can be found in Table 9.4. There are four sources of data that might be used in a blending function. These are the first source color (Rs0, Gs0, Bs0, and As0), the second source color (Rs1, Gs1, Bs1, and As1), the destination color (Rd, Gd, Bd, and Ad), and the constant blending color (Rc, Gc, Bc, and Ac). The last value, the constant blending color, can be set by calling glBlendColor():

glBlendColor(GLfloat red, GLfloat green,

GLfloat blue, GLfloat alpha);

Table 9.4. Blend Functions

In addition to all of these sources, the constant values zero and one can be used as any of the product terms.

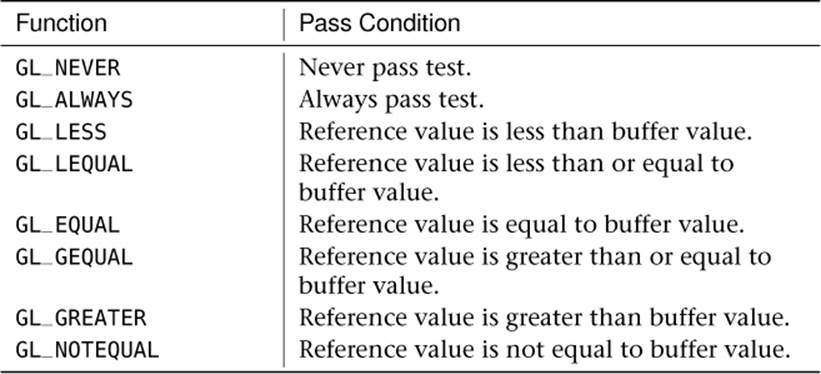

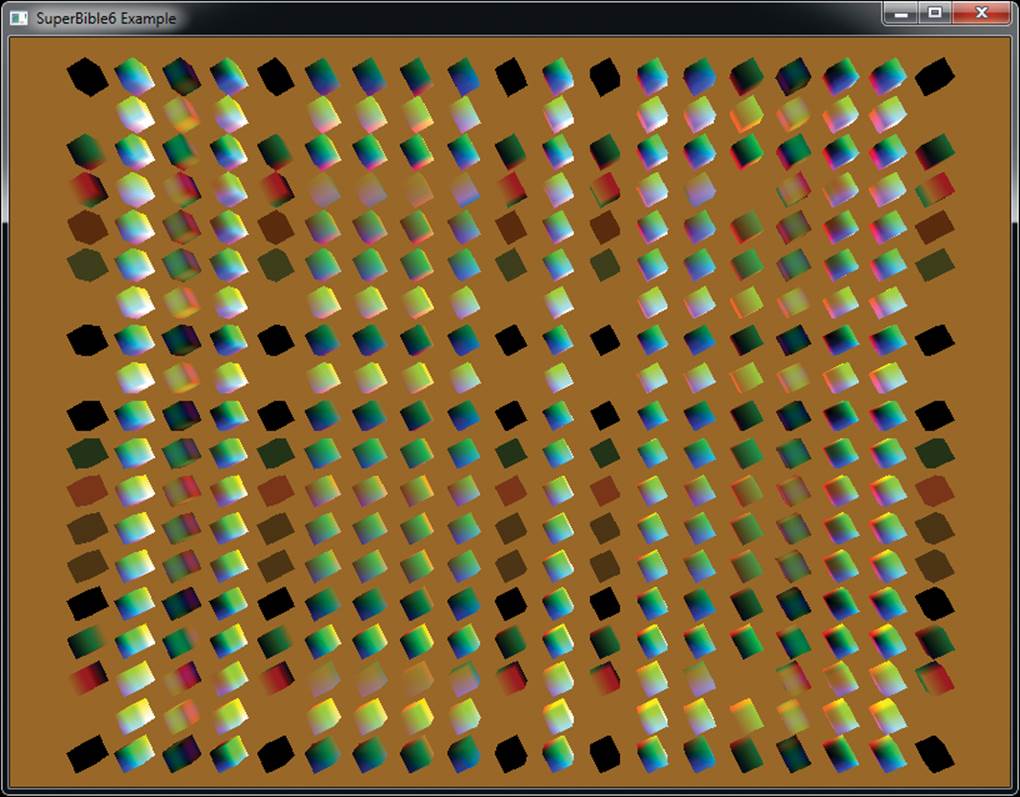

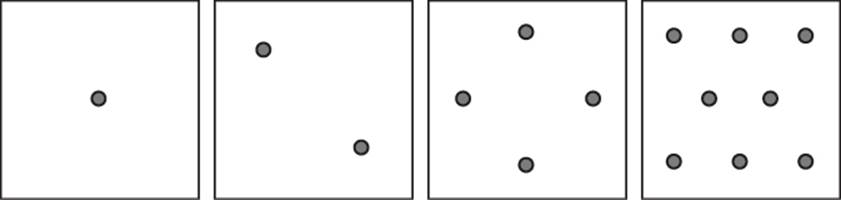

As a simple example, consider the code shown in Listing 9.3. This code clears the framebuffer to a mid-orange color, turns on blending, sets the blend color to a mid-blue color, and then draws a small cube with every possible combination of source and destination blending function.

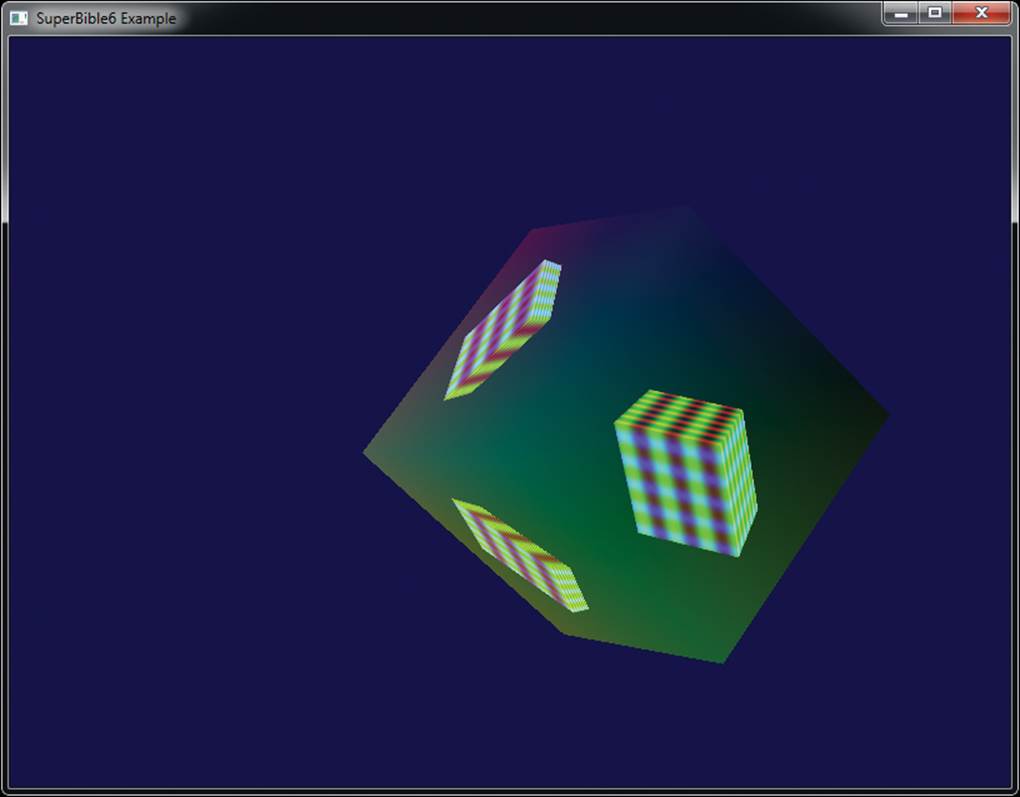

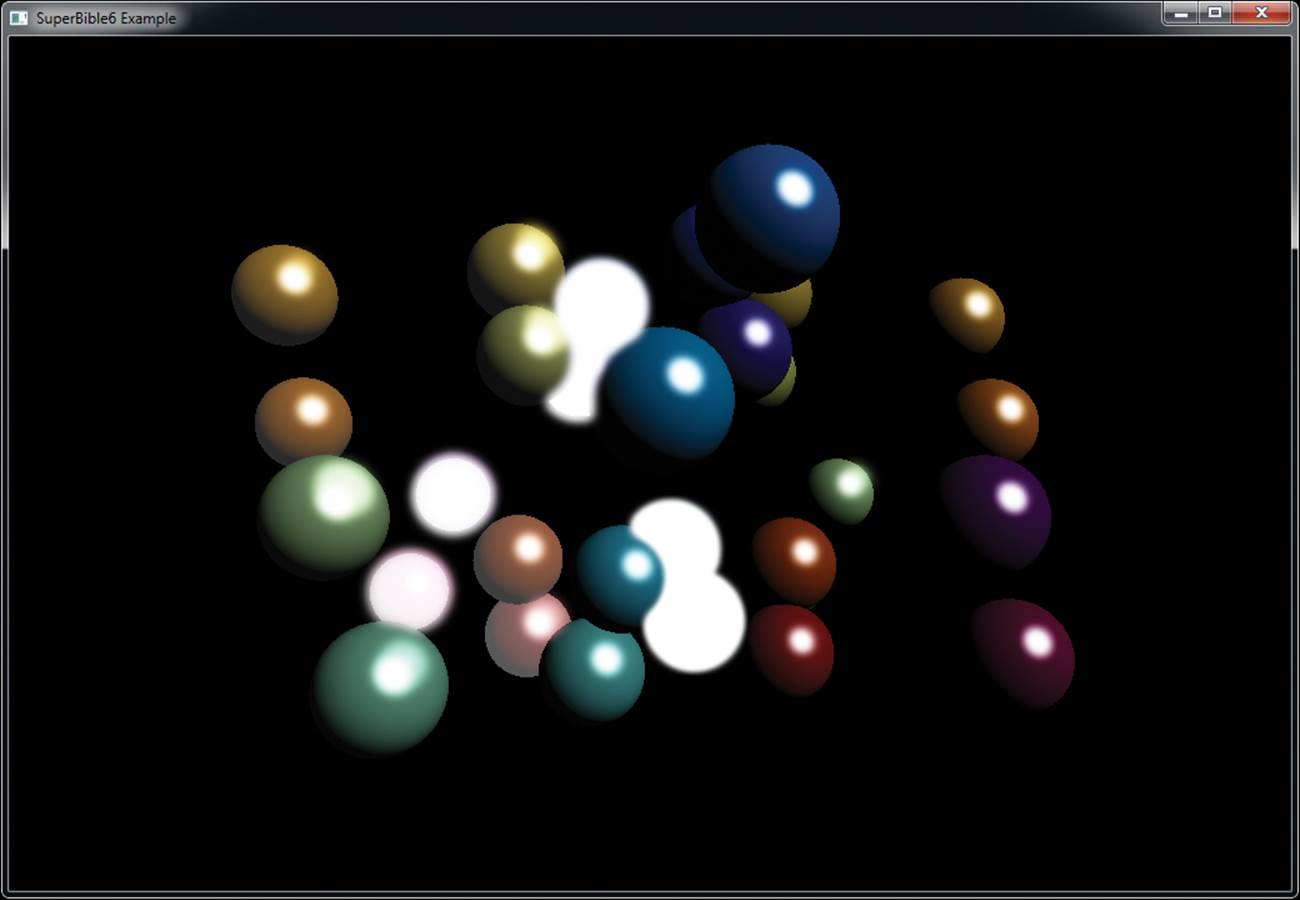

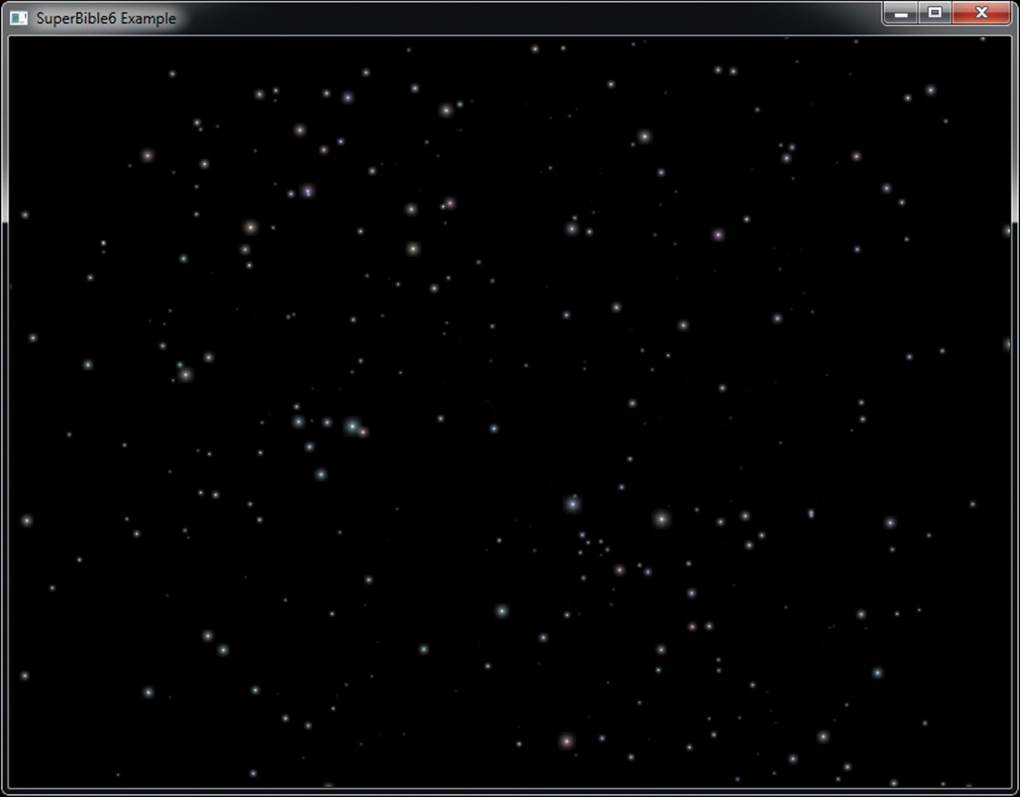

The result of rendering with the code shown in Listing 9.3 is shown in Figure 9.5. This image is also shown in Color Plate 1 and was generated by the blendmatrix sample application.

Figure 9.5: All possible combinations of blending functions

static const GLfloat orange[] = { 0.6f, 0.4f, 0.1f, 1.0f };

glClearBufferfv(GL_COLOR, 0, orange);

static const GLenum blend_func[] =

{

GL_ZERO,

GL_ONE,

GL_SRC_COLOR,

GL_ONE_MINUS_SRC_COLOR,

GL_DST_COLOR,

GL_ONE_MINUS_DST_COLOR,

GL_SRC_ALPHA,

GL_ONE_MINUS_SRC_ALPHA,

GL_DST_ALPHA,

GL_ONE_MINUS_DST_ALPHA,

GL_CONSTANT_COLOR,

GL_ONE_MINUS_CONSTANT_COLOR,

GL_CONSTANT_ALPHA,

GL_ONE_MINUS_CONSTANT_ALPHA,

GL_SRC_ALPHA_SATURATE,

GL_SRC1_COLOR,

GL_ONE_MINUS_SRC1_COLOR,

GL_SRC1_ALPHA,

GL_ONE_MINUS_SRC1_ALPHA

};

static const int num_blend_funcs = sizeof(blend_func) /

sizeof(blend_func[0]);

static const float x_scale = 20.0f / float(num_blend_funcs);

static const float y_scale = 16.0f / float(num_blend_funcs);

const float t = (float)currentTime;

glEnable(GL_BLEND);

glBlendColor(0.2f, 0.5f, 0.7f, 0.5f);

for (j = 0; j < num_blend_funcs; j++)

{

for (i = 0; i < num_blend_funcs; i++)

{

vmath::mat4 mv_matrix =

vmath::translate(9.5f - x_scale * float(i),

7.5f - y_scale * float(j),

-50.0f) *

vmath::rotate(t * -45.0f, 0.0f, 1.0f, 0.0f) *

vmath::rotate(t * -21.0f, 1.0f, 0.0f, 0.0f);

glUniformMatrix4fv(mv_location, 1, GL_FALSE, mv_matrix);

glBlendFunc(blend_func[i], blend_func[j]);

glDrawElements(GL_TRIANGLES, 36, GL_UNSIGNED_SHORT, 0);

}

}

Listing 9.3: Rendering with all blending functions

Dual-Source Blending

You may have noticed that some of the factors in Table 9.4 use source 0 colors (Rs0, Gs0, Bs0, and As0), and others use source 1 colors (Rs1, Gs1, Bs1, and As1). Your shaders can export more than one final color for a given color buffer by setting up the outputs used in your shader by assigning them indices using the index layout qualifier. An example is shown below:

layout (location = 0, index = 0) out vec4 color0;

layout (location = 0, index = 1) out vec4 color1;

Here, color0_0 will be used for the GL_SRC_COLOR factor, and color0_1 will be used for the GL_SRC1_COLOR. When you use dual source blending functions, the number of separate color buffers that you can use might be limited. You can find out how many dual output buffers are supported by querying the value of GL_MAX_DUAL_SOURCE_DRAW_BUFFERS.

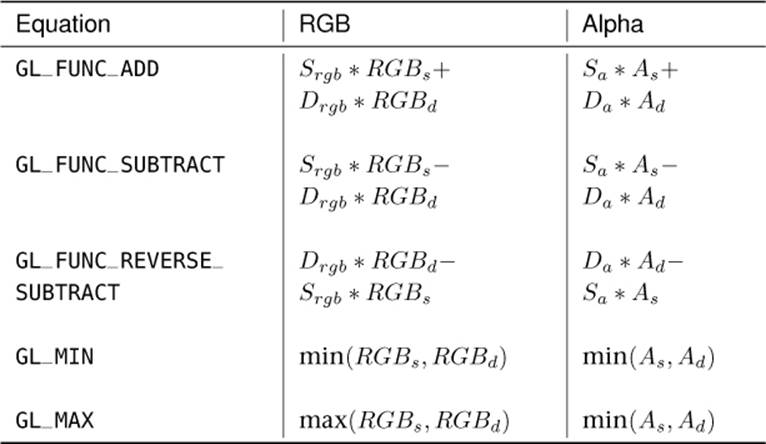

Blend Equation

Once the source and destination factors have been multiplied by the source and destination colors, the two products need to be combined together. This is done using an equation that you can set by calling glBlendEquation() or glBlendEquationSeparate(). As with the blend functions, you can choose one blend equation for the red, green, and blue channels and another for the alpha channel — use glBlendEquationSeparate() to do this. If you want both equations to be the same, you can call glBlendEquation():

glBlendEquation(GLenum mode);

glBlendEquationSeparate(GLenum modeRGB,

GLenum modeAlpha);

For glBlendEquation(), the one parameter, mode, selects the same mode for all of the red, green, blue, and alpha channels. For glBlendEquationSeparate(), an equation can be chosen for the red, green, and blue channels (specified in modeRGB) and another for the alpha channel (specified inmodeAlpha). The values you pass to the two functions are shown in Table 9.5.

Table 9.5. Blend Equations

In Table 9.5, RGBs represents the source red, green, and blue values; RGBd represents the destination red, green, and blue values; As and Ad represent the source and destination alpha values; Srgb and Drgb represent the source and destination blend factors; and Sa and Da represent the source and destination alpha factors (chosen by glBlendFunc() or glBlendFuncSeparate()).

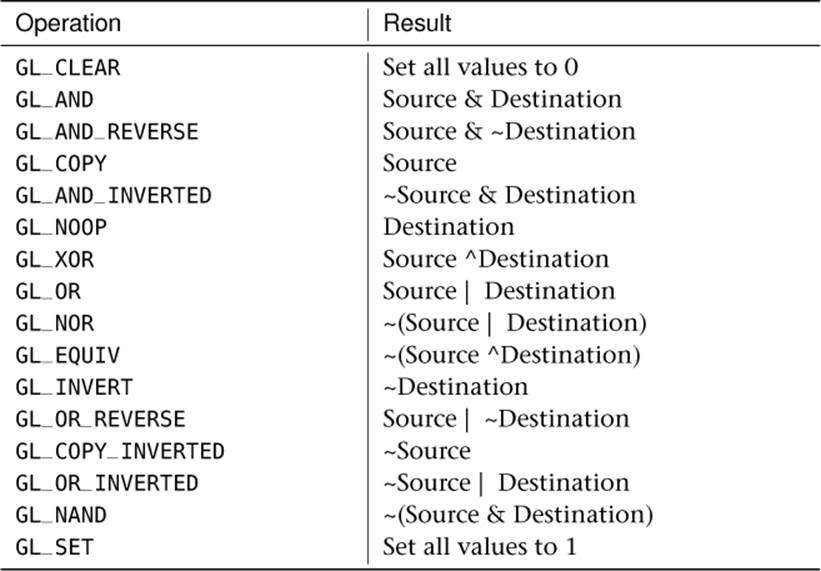

Logical Operations

Once the pixel color is in the same format and bit depth as the framebuffer, there are two more steps that can affect the final result. The first allows you to apply a logical operation to the pixel color before it is passed on. When enabled, the effects of blending are ignored. Logic operations do not affect floating-point buffers. You can enable logic ops by calling

glEnable(GL_COLOR_LOGIC_OP);

and disable it by calling

glDisable(GL_COLOR_LOGIC_OP);

Logic operations use the values of the incoming pixel and the existing framebuffer to compute a final value. You can pick the operation that computes the final value by calling glLogicOp(). The possible options are listed in Table 9.6. The prototype of glLogicOp() is

glLogicOp(GLenum op);

Table 9.6. Logic Operations

where op is one of the values from Table 9.6.

Logic operations are applied separately to each color channel, and operations that combine source and destination are performed bitwise on the color values. Logic ops are not commonly used in today’s graphics applications but still remain part of OpenGL because the functionality is still supported on common GPUs.

Color Masking

One of the last modifications that can be made to a fragment before it is written is masking. By now you recognize that three different types of data can be written by a fragment shader: color, depth, and stencil data. Just as you can mask off updates to the stencil and depth buffers, you can also apply a mask to the updates of the color buffer.

To mask color writes or prevent color writes from happening, you can use glColorMask() and glColorMaski(). We briefly introduced glColorMask() back in Chapter 5 where we turned on and off writing to the framebuffer. However, you don’t have to mask all color channels at once; for instance, you can choose to mask the red and green channels while permitting writes to the blue channel. Each function takes four Boolean parameters that control updates to each of the red, green, blue, and alpha channels of the color buffer. You can pass in GL_TRUE to one of these parameters to allow writes for the corresponding channel to occur, or GL_FALSE to mask these writes off. The first function, glColorMask(), allows you to mask all buffers currently enabled for rendering, while the second function, glColorMaski(), allows you to set the mask for a specific color buffer (there can be many if you’re rendering off screen). The prototypes of these two functions are

glColorMask(GLboolean red,

GLboolean green,

GLboolean blue,

GLboolean alpha);

glColorMaski(GLuint index,

GLboolean red,

GLboolean green,

GLboolean blue,

GLboolean alpha);

For both functions, red, green, blue, and alpha can be set to either GL_TRUE or GL_FALSE to determine whether the red, green, blue, or alpha channels should be written to the framebuffer. For glColorMaski(), index is the index of the color attachment to which masking should apply. Each color attachment can have its own color mask settings. So, for example, you could write only the red channel to attachment 0, only the green channel to attachment 1, and so on.

Mask Usage

Write masks can be useful for many operations. For instance, if you want to fill a shadow volume with depth information, you can mask off all color writes because only the depth information is important. Or if you want to draw a decal directly to screen space, you can disable depth writes to prevent the depth data from being polluted. The key point about masks is you can set them and immediately call your normal rendering paths, which may set up necessary buffer state and output all color, depth, and stencil data you would normally use without needing any knowledge of the mask state. You don’t have to alter your shaders to not write some value, detach some set of buffers, or change the enabled draw buffers. The rest of your rendering paths can be completely oblivious and still generate the right results.

Off-Screen Rendering

Until now, all of the rendering your programs have performed has been directed into a window, or perhaps the computer’s main display. The output of your fragment shader goes into the back buffer, which is normally owned by the operating system or window system that your application is running on, and is eventually displayed to the user. Its parameters are set when you choose a format for the rendering context. As a platform-specific operation, this means that you have little control over what the underlying storage format really is. Also, in order for the samples in this book to run on many platforms, the book’s application framework takes care of setting this up for you, hiding many of the details.

However, OpenGL includes features that allow you to set up your own framebuffer and use it to draw directly into textures. You can then use these textures later for further rendering or processing. You also have a lot of control over the format and layout of the framebuffer. For example, when you use the default framebuffer, it is implicitly sized to the size of the window or display, and rendering outside the display (if the window is obscured or dragged off the side of the screen, for example) is undefined as the corresponding pixels’ fragment shaders might not run. However, with user-supplied framebuffers, the maximum size of the textures you render to is only limited by the maximums supported by the implementation of OpenGL you’re running on, and rendering to any location in it is always defined.

User-supplied framebuffers are represented by OpenGL as framebuffer objects. As with most objects in OpenGL, each framebuffer object has a name that must be reserved before it is created — the actual object is initialized when it is first bound. So, the first thing to do is to reserve a name for a framebuffer object and bind it to the context to initialize it. To generate names for framebuffer objects, call glGenFramebuffers(), and to bind a framebuffer to the context, call glBindFramebuffer(). The prototypes of these functions are

void glGenFramebuffers(GLsizei n,

GLuint * ids);

void glBindFramebuffer(GLenum target,

GLuint framebuffer);

The glGenFramebuffers() function takes a count in n and hands you back a list of names in ids that you are able to use as framebuffer objects. The glBindFramebuffer() function makes your application-supplied framebuffer object the current framebuffer (instead of the default one). Theframebuffer is one of the names that you got from a call to glGenFramebuffers(), and target parameter will normally be GL_FRAMEBUFFER. However, it’s possible to bind two framebuffers at the same time — one for reading and one for writing.

To bind a framebuffer for reading only, set target to GL_READ_FRAMEBUFFER. Likewise, to bind a framebuffer just for rendering to, set target to GL_DRAW_FRAMEBUFFER. The framebuffer bound for drawing will be the destination for all of your rendering (including stencil and depth values used during their respective tests and colors read during blending). The framebuffer bound for reading will be the source of data if you want to read back pixel data or copy data from the framebuffer into textures, as we’ll explain shortly. Setting target to just GL_FRAMEBUFFER actually binds the object to both the read and draw framebuffer targets, and this is normally what you want.

Once you have created a framebuffer object and bound it, you can attach textures to it to serve as the storage for the rendering you’re going to do. There are three types of attachment supported by the framebuffer — the depth, stencil, and color attachments, which serve as the depth, stencil, and color buffers. To attach a texture to a framebuffer, we can call glFramebufferTexture(), whose prototype is

void glFramebufferTexture(GLenum target,

GLenum attachment,

GLuint texture,

GLint level);

For glFramebufferTexture(), target is the binding point where the framebuffer object you want to attach a texture to is bound. This should be GL_READ_FRAMEBUFFER, GL_DRAW_FRAMEBUFFER, or just GL_FRAMEBUFFER. In this case, GL_FRAMEBUFFER is considered to be equivalent toGL_DRAW_FRAMEBUFFER, and so if you use this token, OpenGL will attach the texture to the framebuffer object bound the GL_DRAW_FRAMEBUFFER target.

attachment tells OpenGL which attachment you want to attach the texture to. It can be GL_DEPTH_ATTACHMENT to attach the texture to the depth buffer attachment, or GL_STENCIL_ATTACHMENT to attach it to the stencil buffer attachment. Because there are several texture formats that include depth and stencil values packed together, OpenGL also allows you to set attachment to GL_DEPTH_STENCIL_ATTACHMENT to indicate that you want to use the same texture for both the depth and stencil buffers.

To attach a texture as the color buffer, set attachment to GL_COLOR_ATTACHMENT0. In fact, you can set attachment to GL_COLOR_ATTACHMENT1, GL_COLOR_ATTACHMENT2, and so on to attach multiple textures for rendering to. We’ll get to that momentarily, but first, we’ll look at an example of how to set up a framebuffer object for rendering to. Lastly, texture is the name of the texture you want to attach to the framebuffer, and level is the mipmap level of the texture you want to render into. Listing 9.4 shows a complete example of setting up a framebuffer object with a depth buffer and a texture to render into.

// Create a framebuffer object and bind it

glGenFramebuffers(1, &fbo);

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

// Create a texture for our color buffer

glGenTextures(1, &color_texture);

glBindTexture(GL_TEXTURE_2D, color_texture);

glTexStorage2D(GL_TEXTURE_2D, 1, GL_RGBA8, 512, 512);

// We're going to read from this, but it won't have mipmaps,

// so turn off mipmaps for this texture.

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

// Create a texture that will be our FBO's depth buffer

glGenTextures(1, &depth_texture);

glBindTexture(GL_TEXTURE_2D, depth_texture);

glTexStorage2D(GL_TEXTURE_2D, 1, GL_DEPTH_COMPONENT32F, 512, 512);

// Now, attach the color and depth textures to the FBO

glFramebufferTexture(GL_FRAMEBUFFER,

GL_COLOR_ATTACHMENT0,

color_texture, 0);

glFramebufferTexture(GL_FRAMEBUFFER,

GL_DEPTH_ATTACHMENT,

depth_texture, 0);

// Tell OpenGL that we want to draw into the framebuffer's color

// attachment

static const GLenum draw_buffers[] = { GL_COLOR_ATTACHMENT0 };

glDrawBuffers(1, draw_buffers);

Listing 9.4: Setting up a simple framebuffer object

After this code has executed, all we need to do is call glBindFramebuffer() again and pass our newly created framebuffer object, and all rendering will be directed into the depth and color textures. Once we’re done rendering into our own framebuffer, we can use the resulting texture as a regular texture and read from it in our shaders. Listing 9.5 shows an example of doing this.

// Bind our off-screen FBO

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

// Set the viewport and clear the depth and color buffers

glViewport(0, 0, 512, 512);

glClearBufferfv(GL_COLOR, 0, green);

glClearBufferfv(GL_DEPTH, 0, &one);

// Activate our first, non-textured program

glUseProgram(program1);

// Set our uniforms and draw the cube.

glUniformMatrix4fv(proj_location, 1, GL_FALSE, proj_matrix);

glUniformMatrix4fv(mv_location, 1, GL_FALSE, mv_matrix);

glDrawArrays(GL_TRIANGLES, 0, 36);

// Now return to the default framebuffer

glBindFramebuffer(GL_FRAMEBUFFER, 0);

// Reset our viewport to the window width and height, clear the

// depth and color buffers.

glViewport(0, 0, info.windowWidth, info.windowHeight);

glClearBufferfv(GL_COLOR, 0, blue);

glClearBufferfv(GL_DEPTH, 0, &one);

// Bind the texture we just rendered to for reading

glBindTexture(GL_TEXTURE_2D, color_texture);

// Activate a program that will read from the texture

glUseProgram(program2);

// Set uniforms and draw

glUniformMatrix4fv(proj_location2, 1, GL_FALSE, proj_matrix);

glUniformMatrix4fv(mv_location2, 1, GL_FALSE, mv_matrix);

glDrawArrays(GL_TRIANGLES, 0, 36);

// Unbind the texture and we're done.

glBindTexture(GL_TEXTURE_2D, 0);

Listing 9.5: Rendering to a texture

The code shown in Listing 9.5 is taken from the basicfbo sample and first binds our user-defined framebuffer, sets the viewport to the dimensions of the framebuffer, and clears the color buffer with a dark green color. It then proceeds to draw our simple cube model. This results in the cube being rendered into the texture we previously attached to the GL_COLOR_ATTACHMENT0 attachment point on the framebuffer. Next, we unbind our FBO, returning to the default framebuffer that represents our window. We render the cube again, this time with a shader that uses the texture we just rendered to. The result is that an image of the first cube we rendered is shown on each face of the second cube. Output of the program is shown in Figure 9.6.

Figure 9.6: Result of rendering into a texture

Multiple Framebuffer Attachments

In the last section, we introduced the concept of user-defined framebuffers, which are also known as FBOs. An FBO allows you to render into textures that you create in your application. Because the textures are owned and allocated by OpenGL, they are decoupled from the operating or window system and so can be extremely flexible. The upper limit on their size depends only on OpenGL and not on the attached displays, for example. You also have full control over their format.

Another extremely useful feature of user-defined framebuffers is that they support multiple attachments. That is, you can attach multiple textures to a single framebuffer and render into them simultaneously with a single fragment shader. Recall that to attach your texture to your FBO, you called glFramebufferTexture() and passed GL_COLOR_ATTACHMENT0 as the attachment parameter, but we mentioned that you can also pass GL_COLOR_ATTACHMENT1, GL_COLOR_ATTACHMENT2, and so on. In fact, OpenGL supports attaching at least eight textures to a single FBO. Listing 9.6 shows an example of setting up an FBO with three color attachments.

static const GLenum draw_buffers[] =

{

GL_COLOR_ATTACHMENT0,

GL_COLOR_ATTACHMENT1,

GL_COLOR_ATTACHMENT2

};

// First, generate and bind our framebuffer object

glGenFramebuffers(1, &fbo);

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

// Generate three texture names

glGenTextures(3, &color_texture[0]);

// For each one...

for (int i = 0; i < 3; i++)

{

// Bind and allocate storage for it

glBindTexture(GL_TEXTURE_2D, color_texture[i]);

glTexStorage2D(GL_TEXTURE_2D, 9, GL_RGBA8, 512, 512);

// Set its default filter parameters

glTexParameteri(GL_TEXTURE_2D,

GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D,

GL_TEXTURE_MAG_FILTER, GL_LINEAR);

// Attach it to our framebuffer object as color attachments

glFramebufferTexture(GL_FRAMEBUFFER,

draw_buffers[i], color_texture[i], 0);

}

// Now create a depth texture

glGenTextures(1, &depth_texture);

glBindTexture(GL_TEXTURE_2D, depth_texture);

glTexStorage2D(GL_TEXTURE_2D, 9, GL_DEPTH_COMPONENT32F, 512, 512);

// Attach the depth texture to the framebuffer

glFramebufferTexture(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT,

depth_texture, 0);

// Set the draw buffers for the FBO to point to the color attachments

glDrawBuffers(3, draw_buffers);

Listing 9.6: Setting up an FBO with multiple attachments

To render into multiple attachments from a single fragment shader, we must declare multiple outputs in the shader and associate them with the attachment points. To do this, we use a layout qualifier to specify each output’s location, which is a term used to refer to the index of the attachment to which that output will be sent. Listing 9.7 shows an example of this.

layout (location = 0) out vec4 color0;

layout (location = 1) out vec4 color1;

layout (location = 2) out vec4 color2;

Listing 9.7: Declaring multiple outputs in a fragment shader

Once you have declared multiple outputs in your fragment shader, you can write different data into each of them and that data will be directed into the framebuffer color attachment indexed by the output’s location. Remember, the fragment shader still only executes once for each fragment produced during rasterization, and the data written to each of the shader’s outputs will be written at the same position within each of the corresponding framebuffer attachments.

Layered Rendering

In “Array Textures” in Chapter 5, we described a form of texture called the array texture, which represents a stack of 2D textures arranged as an array of layers that you can index into in a shader. It’s also possible to render into array textures by attaching them to a framebuffer object and using a geometry shader to specify which layer you want the resulting primitives to be rendered into. Listing 9.8 is taken from the gslayered sample and illustrates how to set up a framebuffer object that uses a 2D array texture as a color attachment. Such a framebuffer is known as a layered framebuffer. In addition to creating an array texture to use as a color attachment, you can create an array texture with a depth or stencil format and attach that to the depth or stencil attachment points of the framebuffer object. That texture will then become your depth or stencil buffer, allowing you to perform depth and stencil testing in a layered framebuffer.

// Create a texture for our color attachment, bind it, and allocate

// storage for it. This will be 512 × 512 with 16 layers.

GLuint color_attachment;

glGenTextures(1, &color_attachment);

glBindTexture(GL_TEXTURE_2D_ARRAY, color_attachment);

glTexStorage3D(GL_TEXTURE_2D_ARRAY, 1, GL_RGBA8, 512, 512, 16);

// Do the same thing with a depth buffer attachment.

GLuint depth_attachment;

glGenTextures(1, &depth_attachment);

glBindTexture(GL_TEXTURE_2D_ARRAY, depth_attachment);

glTexStorage3D(GL_TEXTURE_2D_ARRAY, 1, GL_DEPTH_COMPONENT, 512, 512, 16);

// Now create a framebuffer object, and bind our textures to it

GLuint fbo;

glGenFramebuffers(1, &fbo);

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

glFramebufferTexture(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0,

color_attachment, 0);

glFramebufferTexture(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT,

depth_attachment, 0);

// Finally, tell OpenGL that we plan to render to the color

// attachment

static const GLuint draw_buffers[] = { GL_COLOR_ATTACHMENT0 };

glDrawBuffers(1, draw_buffers);

Listing 9.8: Setting up a layered framebuffer

Once you have created an array texture and attached it to a framebuffer object, you can then render into it as normal. If you don’t use a geometry shader, all rendering goes into the first layer of the array — the slice at index zero. However, if you wish to render into a different layer, you will need to write a geometry shader. In the geometry shader, the built-in variable gl_Layer is available as an output. When you write a value into gl_Layer, that value will be used to index into the layered framebuffer to select the layer of the attachments to render into. Listing 9.9 shows a simple geometry shader that renders 16 copies of the incoming geometry, each with a different model-view matrix, into an array texture and passes a per-invocation color along to the fragment shader.

#version 430 core

// 16 invocations of the geometry shader, triangles in

// and triangles out

layout (invocations = 16, triangles) in;

layout (triangle_strip, max_vertices = 3) out;

in VS_OUT

{

vec4 color;

vec3 normal;

} gs_in[];

out GS_OUT

{

vec4 color;

vec3 normal;

} gs_out;

// Declare a uniform block with one projection matrix and

// 16 model-view matrices

layout (binding = 0) uniform BLOCK

{

mat4 proj_matrix;

mat4 mv_matrix[16];

};

void main(void)

{

int i;

// 16 colors to render our geometry

const vec4 colors[16] = vec4[16](

vec4(0.0, 0.0, 1.0, 1.0), vec4(0.0, 1.0, 0.0, 1.0),

vec4(0.0, 1.0, 1.0, 1.0), vec4(1.0, 0.0, 1.0, 1.0),

vec4(1.0, 1.0, 0.0, 1.0), vec4(1.0, 1.0, 1.0, 1.0),

vec4(0.0, 0.0, 0.5, 1.0), vec4(0.0, 0.5, 0.0, 1.0),

vec4(0.0, 0.5, 0.5, 1.0), vec4(0.5, 0.0, 0.0, 1.0),

vec4(0.5, 0.0, 0.5, 1.0), vec4(0.5, 0.5, 0.0, 1.0),

vec4(0.5, 0.5, 0.5, 1.0), vec4(1.0, 0.5, 0.5, 1.0),

vec4(0.5, 1.0, 0.5, 1.0), vec4(0.5, 0.5, 1.0, 1.0)

);

for (i = 0; i < gl_in.length(); i++)

{

// Pass through all the geometry

gs_out.color = colors[gl_InvocationID];

gs_out.normal = mat3(mv_matrix[gl_InvocationID]) * gs_in[i].normal;

gl_Position = proj_matrix *

mv_matrix[gl_InvocationID] *

gl_in[i].gl_Position;

// Assign gl_InvocationID to gl_Layer to direct rendering

// to the appropriate layer

gl_Layer = gl_InvocationID;

EmitVertex();

}

EndPrimitive();

}

Listing 9.9: Layered rendering using a geometry shader

The result of running the geometry shader shown in Listing 9.9 is that we have an array texture with a different view of a model in each slice. Obviously, we can’t directly display the contents of an array texture, so we must now use our texture as the source of data in another shader. The vertex shader in Listing 9.10, along with the corresponding fragment shader in Listing 9.11, displays the contents of an array texture.

#version 430 core

out VS_OUT

{

vec3 tc;

} vs_out;

void main(void)

{

int vid = gl_VertexID;

int iid = gl_InstanceID;

float inst_x = float(iid % 4) / 2.0;

float inst_y = float(iid >> 2) / 2.0;

const vec4 vertices[] = vec4[](vec4(-0.5, -0.5, 0.0, 1.0),

vec4( 0.5, -0.5, 0.0, 1.0),

vec4( 0.5, 0.5, 0.0, 1.0),

vec4(-0.5, 0.5, 0.0, 1.0));

vec4 offs = vec4(inst_x - 0.75, inst_y - 0.75, 0.0, 0.0);

gl_Position = vertices[vid] *

vec4(0.25, 0.25, 1.0, 1.0) + offs;

vs_out.tc = vec3(vertices[vid].xy + vec2(0.5), float(iid));

}

Listing 9.10: Displaying an array texture — vertex shader

#version 430 core

layout (binding = 0) uniform sampler2DArray tex_array;

layout (location = 0) out vec4 color;

in VS_OUT

{

vec3 tc;

} fs_in;

void main(void)

{

color = texture(tex_array, fs_in.tc);

}

Listing 9.11: Displaying an array texture — fragment shader

The vertex shader in Listing 9.10 simply produces a quad based on the vertex index. In addition, it offsets the quad using a function of the instance index such that rendering 16 instances will produce a 4 × 4 grid of quads. Finally, it also produces a texture coordinate using the x and ycomponents of the vertex along with the instance index as the third component. Because we will use this to fetch from an array texture, this third component will select the layer. The fragment shader in Listing 9.11 simply reads from the array texture using the supplied texture coordinates and sends the result to the color buffer.

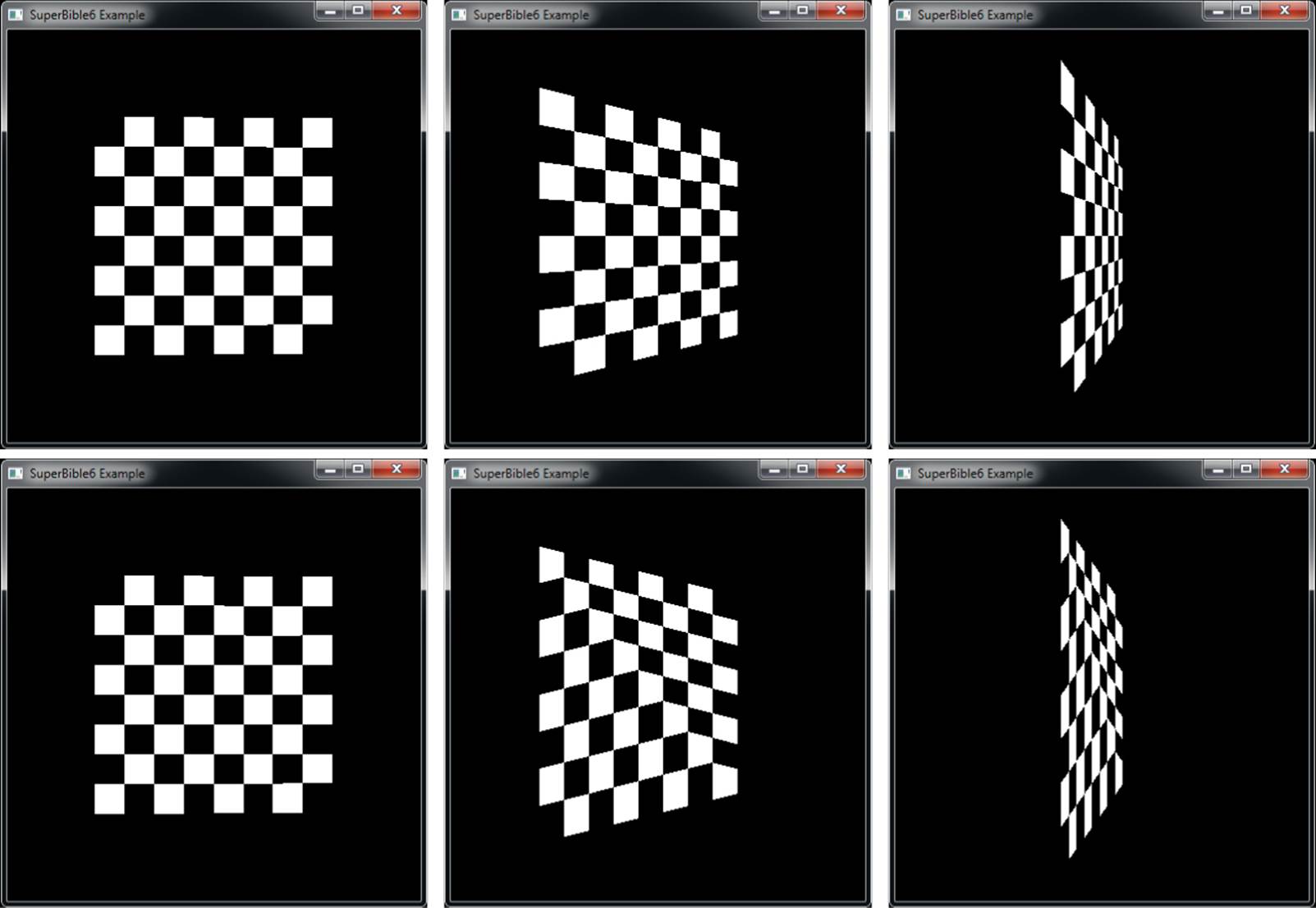

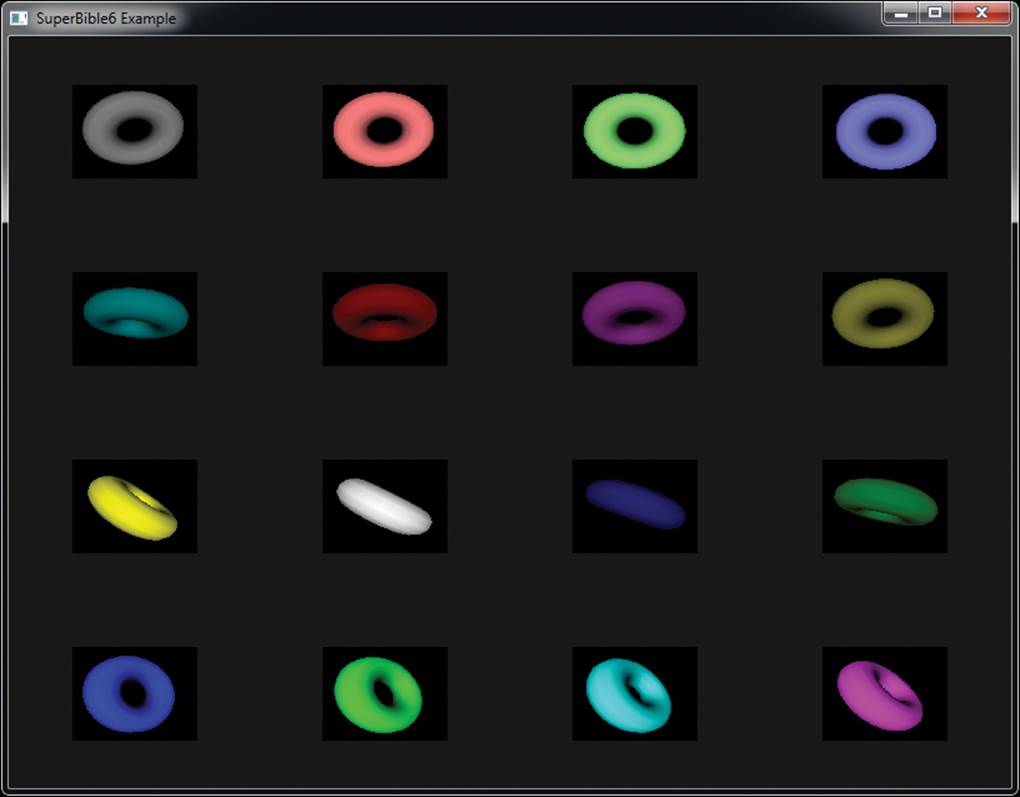

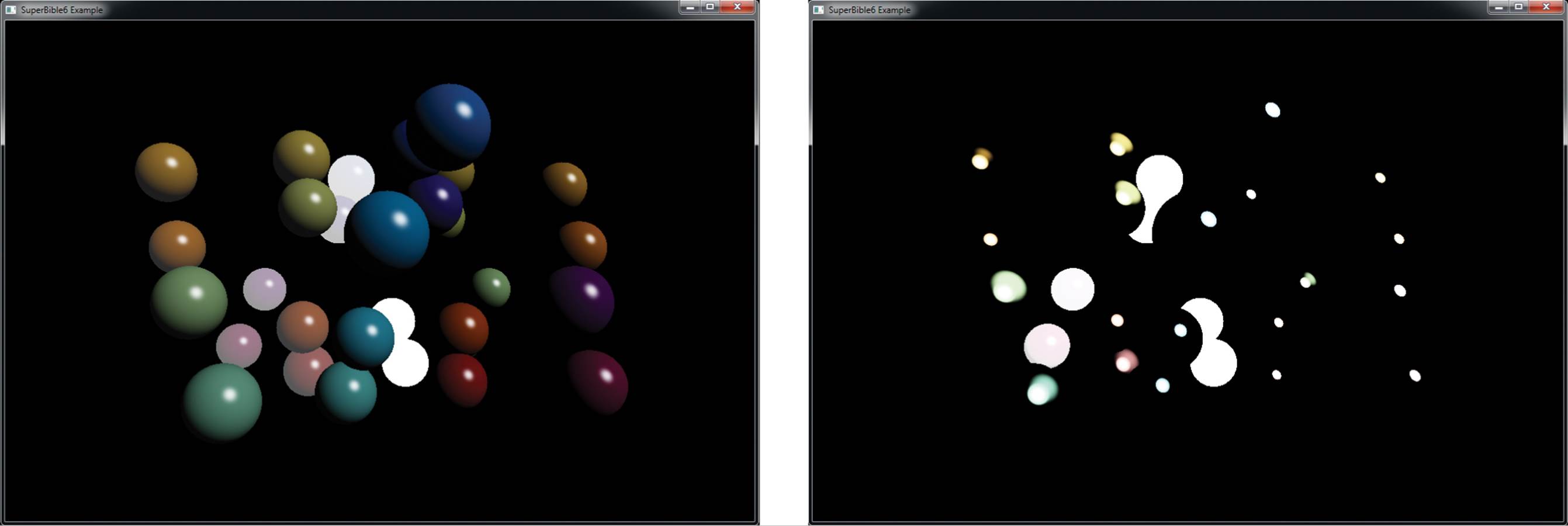

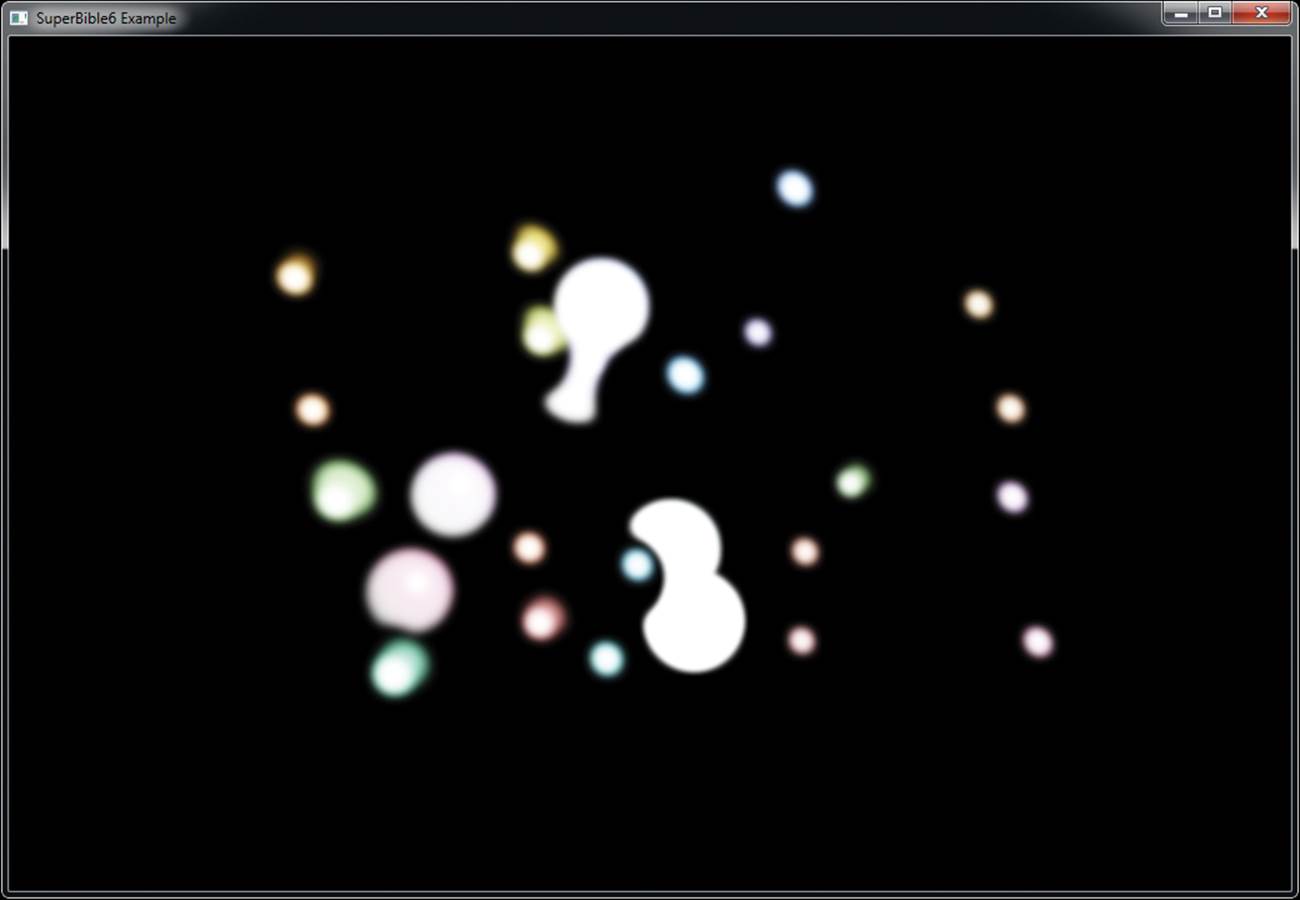

The result of the program is shown in Figure 9.7. As you can see, 16 copies of the torus have been rendered, each with a different color and orientation. Each of the 16 copies is then drawn into the window by reading from a separate layer of the array texture.

Figure 9.7: Result of the layered rendering example

Rendering into a 3D texture works in almost exactly the same way. You simply attach the whole 3D texture to a framebuffer object as one of its color attachments and then set the gl_Layer output as normal. The value written to gl_Layer becomes the z coordinate of the slice within the 3D texture where data produced by the fragment shader will be written. It’s even possible to render into multiple slices of the same texture (array or 3D) at the same. To do this, call glFramebufferTextureLayer(), whose prototype is

void glFramebufferTextureLayer(GLenum target,

GLenum attachment,

GLuint texture,

GLint level,

GLint layer);

The glFramebufferTextureLayer() function works just like glFramebufferTexture(), except that it takes one additional parameter, layer, which specifies the layer of the texture that you wish to attach to the framebuffer. For instance, the code in Listing 9.12 creates a 2D array texture with eight layers and attaches each of the layers to the corresponding color attachment of a framebuffer object.

GLuint tex;

glGenTextures(1, &tex);

glBindTexture(GL_TEXTURE_2D_ARRAY, tex);

glTexStorage3D(GL_TEXTURE_2D_ARRAY, 1, GL_RGBA8, 256, 256, 8);

GLuint fbo;

glGenFramebuffers(1, &fbo);

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

int i;

for (i = 0; i < 8; i++)

{

glFramebufferTextureLayer(GL_FRAMEBUFFER,

GL_COLOR_ATTACHMENT0 + i,

tex,

0,

i);

}

static const GLenum draw_buffers[] =

{

GL_COLOR_ATTACHMENT0, GL_COLOR_ATTACHMENT1,

GL_COLOR_ATTACHMENT2, GL_COLOR_ATTACHMENT3,

GL_COLOR_ATTACHMENT4, GL_COLOR_ATTACHMENT5,

GL_COLOR_ATTACHMENT6, GL_COLOR_ATTACHMENT7

};

glDrawBuffers(8, &draw_buffers[0]);

Listing 9.12: Attaching texture layers to a framebuffer

Now, when you render into the framebuffer created in Listing 9.12, your fragment shader can have up to eight outputs, and each will be written to a different layer of the texture.

Rendering to Cube Maps

As far as OpenGL is concerned, a cube map is really a special case of an array texture. A single cube map is just an array of six slices, and a cube map array texture is an array of an integer multiple of six slices. You attach a cube map texture to a framebuffer object in exactly the same way as shown in Listing 9.8, except that rather than creating a 2D array texture, you create a cube map texture. The cube map has six faces, which are known as positive and negative x, positive and negative y, and positive and negative z, and they appear in that order in the array texture. When you write 0 into gl_Layer in your geometry shader, rendering will go to the positive x face of the cube map. Writing 1 into gl_Layer sends output to the negative x face, writing 2 sends output to the positive y face, and so on, until eventually, writing 5 sends output to the negative z face.

If you create a cube map array texture and attach it to a framebuffer object, writing to the first six layers will render into the first cube, writing the next six layers will write into the second cube, and so on. So, if you set gl_Layer to 6, you will write to the positive x face of the second cube in the array. If you set gl_Layer to 1234, you will render into the positive z face of the 205th face.

Just as with 2D array textures, it’s also possible to attach individual faces of a cube map to the various attachment points of a single framebuffer object. In this case, we use the glFramebufferTexture2D() function, whose prototype is

void glFramebufferTexture2D(GLenum target,

GLenum attachment,

GLenum textarget,

GLuint texture,

GLint level);

Again, this function works just like glFramebufferTexture(), except that it has one additional parameter, textarget. This can be set to specify which face of the cube map you want to attach to the attachment. To attach the cube map’s positive x face, set this to GL_CUBE_MAP_POSITIVE_X; for the negative x face, set it to GL_CUBE_MAP_NEGATIVE_X. Similar tokens are available for the y and z faces, too. Using this, you could bind all of the faces of a single cube map4 to the attachment points on a single framebuffer and render into all of them at the same time.

4. While this is certainly possible, rendering the same thing to all faces of a cube map has limited utility.

Framebuffer Completeness

Before we can finish up with framebuffer objects, there is one last important topic. Just because you are happy with the way you set up your FBO doesn’t mean your OpenGL implementation is ready to render. The only way to find out if your FBO is set up correctly and in a way that the implementation can use it is to check for framebuffer completeness. Framebuffer completeness is similar in concept to texture completeness. If a texture doesn’t have all required mipmap levels specified with the right sizes, formats, and so on, that texture is incomplete and can’t be used.

There are two categories of completeness: attachment completeness and whole framebuffer completeness.

Attachment Completeness

Each attachment point of an FBO must meet certain criteria to be considered complete. If any attachment point is incomplete, the whole framebuffer will also be incomplete. Some of the cases that cause an attachment to be incomplete are

• No image is associated with the attached object.

• Width or height of zero for attached image.

• A non-color renderable format is attached to a color attachment.

• A non-depth renderable format is attached to a depth attachment.

• A non-stencil renderable format is attached to a stencil attachment.

Whole Framebuffer Completeness

Not only does each attachment point have to be valid and meet certain criteria, but the framebuffer object as a whole must also be complete. The default framebuffer, if one exists, will always be complete. Common cases for the whole framebuffer being incomplete are

• glDrawBuffers() has mapped an output to an FBO attachment where no image is attached.

• The combination of internal formats is not supported by the OpenGL driver.

Checking the Framebuffer

When you think you are finished setting up an FBO, you can check to see whether it is complete by calling

GLenum fboStatus = glCheckFramebufferStatus(GL_DRAW_FRAMEBUFFER);

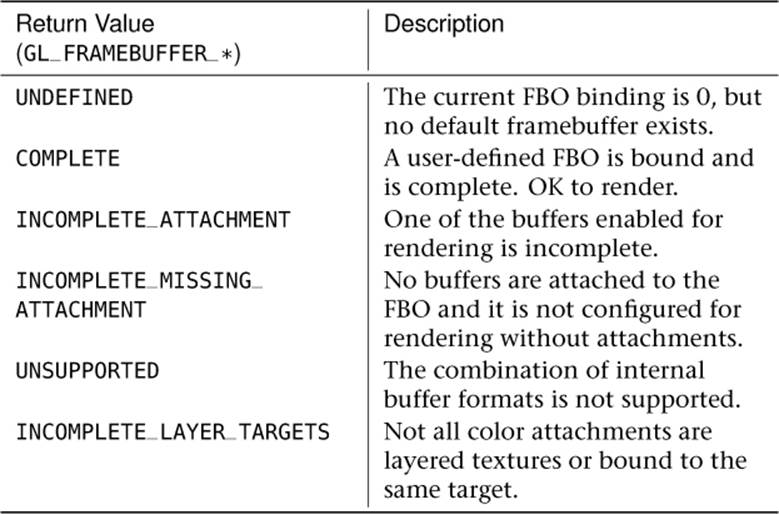

If glCheckFramebufferStatus() returns GL_FRAMEBUFFER_COMPLETE, all is well, and you may use the FBO. The return value of glCheckFramebufferStatus() provides clues to what might be wrong if the framebuffer is not complete. Table 9.7 describes all possible return conditions and what they mean.

Table 9.7. Framebuffer Completeness Return Values

Many of these return values are helpful when debugging an application but are less useful after an application has shipped. Nonetheless, the first sample application checks to make sure none of these conditions occurred. It pays to do this check in applications that use FBOs, making sure your use case hasn’t hit some implementation-dependent limitation. An example of how this might look is shown in Listing 9.13.

GLenum fboStatus = glCheckFramebufferStatus(GL_DRAW_FRAMEBUFFER);

if(fboStatus != GL_FRAMEBUFFER_COMPLETE)

{

switch (fboStatus)

{

case GL_FRAMEBUFFER_UNDEFINED:

// Oops, no window exists?

break;

case GL_FRAMEBUFFER_INCOMPLETE_ATTACHMENT:

// Check the status of each attachment

break;

case GL_FRAMEBUFFER_INCOMPLETE_MISSING_ATTACHMENT:

// Attach at least one buffer to the FBO

break;

case GL_FRAMEBUFFER_INCOMPLETE_DRAW_BUFFER:

// Check that all attachments enabled via

// glDrawBuffers exist in FBO

case GL_FRAMEBUFFER_INCOMPLETE_READ_BUFFER:

// Check that the buffer specified via

// glReadBuffer exists in FBO

break;

case GL_FRAMEBUFFER_UNSUPPORTED:

// Reconsider formats used for attached buffers

break;

case GL_FRAMEBUFFER_INCOMPLETE_MULTISAMPLE:

// Make sure the number of samples for each

// attachment is the same

break;

case GL_FRAMEBUFFER_INCOMPLETE_LAYER_TARGETS:

// Make sure the number of layers for each

// attachment is the same

break;

}

}

Listing 9.13: Checking completeness of a framebuffer object

If you attempt to perform any command that reads from or writes to the framebuffer while an incomplete FBO is bound, the command simply returns after throwing the error GL_INVALID_FRAMEBUFFER_OPERATION, retrievable by calling glGetError().

Read Framebuffers Need to Be Complete, Too!

In the previous examples, we test the FBO attached to the draw buffer binding point, GL_DRAW_FRAMEBUFFER. But a framebuffer attached to GL_READ_FRAMEBUFFER also has to be attachment complete and whole framebuffer complete for reads to work. Because only one read buffer can be enabled at a time, making sure an FBO is complete for reading is a little easier.

Rendering in Stereo

Most5 human beings have two eyes. We use these two eyes to help us judge distance by providing parallax shift — a slight difference between the images our two eyes see. There are many depth queues, including depth from focus, from differences in lighting and the relative movement of objects as we move our point of view. OpenGL is able to produce pairs of images that, depending on the display device used, can be presented separately to your two eyes and increase the sense of depth of the image. There are plenty of display devices available including binocular displays (devices with a separate physical display for each eye), shutter and polarized displays that require glasses to view, and autostereoscopic displays that don’t require that you put anything on your face. OpenGL doesn’t really care about how the image is displayed, only that you wish to render two views of the scene — one for the left eye and one for the right.

5. Those readers with less than two eyes may wish to skip to the next section.

To display images in stereo requires some cooperation from the windowing or operating system, and therefore the mechanism to create a stereo display is platform specific. The gory details of this are covered for a number of platforms in Chapter 14. For now, we can use the facilities provided by the sb6 application framework to create our stereo window for us. In your application, you can override sb6::application::init, call the base class function, and then set info.flags.stereo to 1 as shown in Listing 9.14. Because some OpenGL implementations may require your application to cover the whole display (which is known as full-screen rendering), you can also set the info.flags.fullscreen flag in your init function to make the application use a full-screen window.

void my_application::init()

{

info.flags.stereo = 1;

info.flags.fullscreen = 1; // Set this if your OpenGL

// implementation requires

// fullscreen for stereo rendering.

}

Listing 9.14: Creating a stereo window

Remember, not all displays support stereo output, and not all OpenGL implementations will allow you to create a stereo window. However, if you have access to the necessary display and OpenGL implementation, you should have a window that runs in stereo. Now we need to render into it. The simplest way to render in stereo is to simply draw the entire scene twice. Before rendering into the left eye image, call

glDrawBuffer(GL_BACK_LEFT);

When you want to render into the right eye image, call

glDrawBuffer(GL_BACK_RIGHT);

In order to produce a pair of images with a compelling depth effect, you need to construct transformation matrices representing the views observed by the left and right eyes. Remember, our model matrix transforms our model into world space, and world space is global, applying the same way regardless of the viewer. However, the view matrix essentially transforms the world into the frame of the viewer. As the viewer is in a different location for each of the eyes, the view matrix must be different for each of the two eyes. Therefore, when we render to the left view, we use the left view matrix, and when we’re rendering to the right view, we use the right view matrix.

The simplest form of stereo view matrix pairs simply translates the left and right views away from each other on the horizontal axis. Optionally, you can also rotate the view matrices inwards towards the center of view. Alternatively, you can use the vmath::lookat function to generate your view matrices for you. Simply place your eye at the left eye location (slightly left of the viewer position) and the center of the object of interest to create the left view matrix, and then do the same with the right eye position to create the right view matrix. Listing 9.15 shows how this is done.

void my_application::render(double currentTime)

{

static const vmath::vec3 origin(0.0f);

static const vmath::vec3 up_vector(0.0f, 1.0f, 0.0f);

static const vmath::vec3 eye_separation(0.01f, 0.0f, 0.0f);

vmath::mat4 left_view_matrix =

vmath::lookat(eye_location - eye_separation,

origin,

up_vector);

vmath::mat4 right_view_matrix =

vmath::lookat(eye_location + eye_separation,

origin,

up_vector);

static const GLfloat black[] = { 0.0f, 0.0f ,0.0f, 0.0f };

static const GLfloat one = 1.0f;

// Setting the draw buffer to GL_BACK ends up drawing in

// both the back left and back right buffers. Clear both

glDrawBuffer(GL_BACK);

glClearBufferfv(GL_COLOR, 0, black);

glClearBufferfv(GL_DEPTH, 0, &one);

// Now, set the draw buffer to back left

glDrawBuffer(GL_BACK_LEFT);

// Set our left model-view matrix product

glUniformMatrix4fv(model_view_loc, 1,

left_view_matrix * model_matrix);

// Draw the scene

draw_scene();

// Set the draw buffer to back right

glDrawBuffer(GL_BACK_RIGHT);

// Set the right model-view matrix product

glUniformMatrix4fv(model_view_loc, 1,

right_view_matrix * model_matrix);

// Draw the scene... again.

draw_scene();

}

Listing 9.15: Drawing into a stereo window

Clearly, the code in Listing 9.15 renders the entire scene twice. Depending on the complexity of your scene, that could be very, very expensive — literally doubling the cost of rendering the scene. One possible tactic is to switch between the GL_BACK_LEFT and GL_BACK_RIGHT draw buffers between each and every object in your scene. This can mean that updates to state (such as binding textures or changing the current program) can be performed only once, but changing the draw buffer can be as expensive as any other state-changing function. As we learned earlier in the chapter, though, it’s possible to render into more than one buffer at a time by outputting two vectors from your fragment shader. In fact, consider what would happen if you used a fragment shader with two outputs and then call

static const GLenum buffers[] = { GL_BACK_LEFT, GL_BACK_RIGHT }

glDrawBuffers(2, buffers);

After this, the first output of your fragment shader will be written to the left eye buffer, and the second will be written to the right eye buffer. This is great! Now we can render both eyes at the same time! Well, not so fast. Remember, even though the fragment shader can output to a number of different draw buffers, the location within each of those buffers will be the same. How do we draw a different image into each of the buffers?

What we can do is use a geometry shader to render into a layered framebuffer with two layers, one for the left eye and one for the right eye. We will use geometry shader instancing to run the geometry shader twice, and write the invocation index into the layer to direct the two copies of the data into the two layers of the framebuffer. In each invocation of the geometry shader, we can select one of two model-view matrices and essentially perform all of the work of the vertex shader in the geometry shader. Once we’re done rendering the whole scene, the framebuffer’s two layers will contain the left and right eye images. All that is needed now is to render a full-screen quad with a fragment shader that reads from the two layers of the array texture and writes the result into its two outputs, which are directed into the left and right eye views.

Listing 9.16 shows the simple geometry shader that we’ll use in our application to render both views of our stereo scene in a single pass.

#version 430 core

layout (triangles, invocations = 2) in;

layout (triangle_strip, max_vertices = 3) out;

uniform matrices

{

mat4 model_matrix;

mat4 view_matrix[2];

mat4 projection_matrix;

};

in VS_OUT

{

vec4 color;

vec3 normal;

vec2 texture_coord;

} gs_in[];

out GS_OUT

{

vec4 color;

vec3 normal;

vec2 texture_coord;

} gs_out;

void main(void)

{

// Calculate a model-view matrix for the current eye

mat4 model_view_matrix = view_matrix[gl_InvocationID] *

model_matrix;

for (int i = 0; i < gl_in.length(); i++)

{

// Output layer is invocation ID

gl_Layer = gl_InvocationID;

// Multiply by the model matrix, view matrix for the

// appropriate eye and then the projection matrix.

gl_Position = projection_matrix *

model_view_matrix *

gl_in[i].gl_Position;

gs_out.color = gs_in[i].color;