OpenGL SuperBible: Comprehensive Tutorial and Reference, Sixth Edition (2013)

Part I: Foundations

Chapter 5. Data

What You’ll Learn in This Chapter

• How to create buffers and textures that you can use to store data that your program can access

• How to get OpenGL to supply the values of your vertex attributes automatically

• How to access textures from your shaders for both reading and writing

In the examples you’ve seen so far, we have either used hard-coded data directly in our shaders, or we have passed values to shaders one at a time. While sufficient to demonstrate the configuration of the OpenGL pipeline, this is hardly representative of modern graphics programming. Recent graphics processors are designed as streaming processors that consume and produce huge amounts of data. Passing a few values to OpenGL at a time is extremely inefficient. To allow data to be stored and accessed by OpenGL, we include two main forms of data storage — buffers and textures. In this chapter, we first introduce buffers, which are linear blocks of un-typed data and can be seen as generic memory allocations. Next, we introduce textures, which are normally used to store multi-dimensional data, such as images or other data types.

Buffers

In OpenGL, buffers are linear allocations of memory that can be used for a number of purposes. They are represented by names, which are essentially opaque handles that OpenGL uses to identify them. Before you can start using buffers, you have to ask OpenGL to reserve some names for you and then use them to allocate memory and put data into that memory. The memory allocated for a buffer object is called its data store. Once you have the name of a buffer, you can attach it to the OpenGL context by binding it to a buffer binding point. Binding points are sometimes referred to as targets,1 and the terms may be used interchangeably. There are a large number of buffer binding points in OpenGL, and each has a different use. For example, you can use the contents of a buffer to automatically supply the inputs of a vertex shader, to store the values of variables that will be used by your shaders, or as a place for shaders to store the data they produce.

1. It’s not technically correct to conflate target and binding point as a single target may have multiple binding points. However, for most use cases, it is well understood what is meant.

Allocating Memory using Buffers

The function that is used to allocate memory using a buffer object is glBufferData(), whose prototype is

void glBufferData(GLenum target,

GLsizeiptr size,

const GLvoid * data,

GLenum usage);

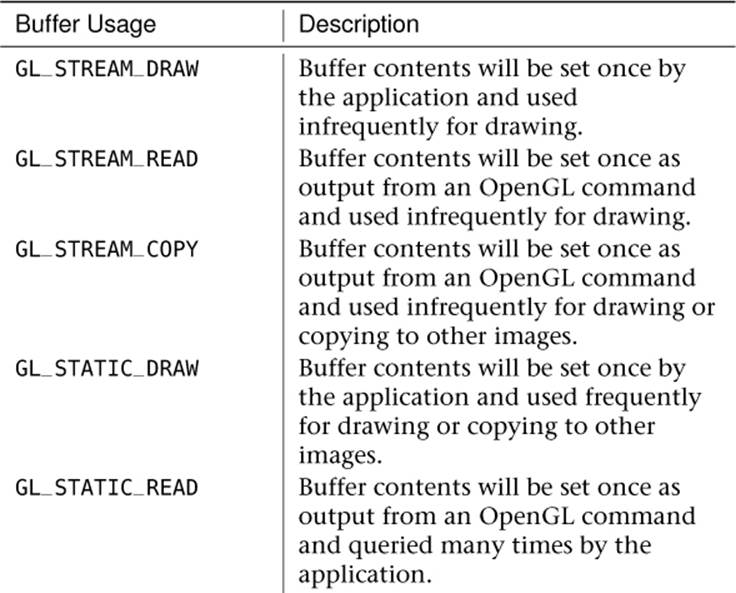

The target parameter tells OpenGL which target the buffer you want to allocate storage for is bound to. For example, the binding point that is used when you want to use a buffer to store data that OpenGL can put into your vertex attributes is called the GL_ARRAY_BUFFER binding point. Although you may hear the term vertex buffer or uniform buffer, unlike some graphics libraries, OpenGL doesn’t really assign types to buffers — a buffer is just a buffer and can be used for any purpose at any time (and even multiple purposes at the same time, if you like). The size parameter tells OpenGL how big the buffer should be, and data is a pointer to some initial data for the buffer (it can be NULL if you don’t have data to put in the buffer right away). Finally, usage tells OpenGL how you plan to use the buffer. There are a number of possible values for usage, which are listed in Table 5.1.

Table 5.1. Buffer Object Usage Models

Listing 5.1 shows how a name for a buffer is reserved by calling glGenBuffers(), how it is bound to the context using glBindBuffer(), and how storage for it is allocated by calling glBufferData().

// The type used for names in OpenGL is GLuint

GLuint buffer;

// Generate a name for the buffer

glGenBuffers(1, &buffer);

// Now bind it to the context using the GL_ARRAY_BUFFER binding point

glBindBuffer(GL_ARRAY_BUFFER, buffer);

// Specify the amount of storage we want to use for the buffer

glBufferData(GL_ARRAY_BUFFER, 1024 * 1024, NULL, GL_STATIC_DRAW);

Listing 5.1: Generating, binding, and initializing a buffer

After the code in Listing 5.1 has executed, buffer contains the name of a buffer object that has been initialized to represent one megabyte of storage for whatever data we choose. Using the GL_ARRAY_BUFFER target to refer to the buffer object suggests to OpenGL that we’re planning to use this buffer to store vertex data, but we’ll still be able to take that buffer and bind it to some other target later. There are a handful of ways to get data into the buffer object. You may have noticed the NULL pointer that we pass as the third argument to glBufferData() in Listing 5.1. Had we instead supplied a pointer to some data, that data would have been used to initialize the buffer object. Another way to get data into a buffer is to give it to OpenGL and tell it to copy data there. To do this, we call glBufferSubData(), passing the size of the data we want to put into the buffer, the offset in the buffer where we want it to go, and a pointer to the data in memory that should be put into the buffer. glBufferSubData() is declared as

void glBufferSubData(GLenum target,

GLintptr offset,

GLsizeiptr size,

const GLvoid * data);

Listing 5.2 shows how we can put the data originally used in Listing 3.1 into a buffer object, which is the first step in automatically feeding a vertex shader with data.

// This is the data that we will place into the buffer object

static const float data[] =

{

0.25, -0.25, 0.5, 1.0,

-0.25, -0.25, 0.5, 1.0,

0.25, 0.25, 0.5, 1.0

};

// Put the data into the buffer at offset zero

glBufferSubData(GL_ARRAY_BUFFER, 0, sizeof(data), data);

Listing 5.2: Updating the content of a buffer with glBufferSubData()

Another method for getting data into a buffer object is to ask OpenGL for a pointer to the memory that the buffer object represents and then copy the data there yourself. Listing 5.3 shows how to do this using the glMapBuffer() function.

// This is the data that we will place into the buffer object

static const float data[] =

{

0.25, -0.25, 0.5, 1.0,

-0.25, -0.25, 0.5, 1.0,

0.25, 0.25, 0.5, 1.0

};

// Get a pointer to the buffer's data store

void * ptr = glMapBuffer(GL_ARRAY_BUFFER, GL_WRITE_ONLY);

// Copy our data into it...

memcpy(ptr, data, sizeof(data));

// Tell OpenGL that we're done with the pointer

glUnmapBuffer(GL_ARRAY_BUFFER);

Listing 5.3: Mapping a buffer’s data store with glMapBuffer()

The glMapBuffer() function is useful if you don’t have all the data handy when you call the function. For example, you might be about to generate the data, or to read it from a file. If you wanted to use glBufferSubData() (or the initial pointer passed to glBufferData()), you’d have to generate or read the data into a temporary memory and then get OpenGL to make another copy of it into the buffer object. If you map a buffer, you can simply read the contents of the file directly into the mapped buffer. When you unmap it, if OpenGL can avoid making a copy of the data, it will. Regardless of whether we used glBufferSubData() or glMapBuffer() and an explicit copy to get data into our buffer object, it now contains a copy of data[] and we can use it as a source of data to feed our vertex shader.

Filling and Copying Data in Buffers

After allocating storage space for your buffer object using glBufferData(), one possible next step is to fill the buffer with known data. Whether you use the initial data parameter of glBufferData(), use glBufferSubData() to put the initial data in the buffer, or use glMapBuffer() to obtain a pointer to the buffer’s data store and fill it with your application, you will need to overwrite the entire buffer. If the data you want to put into a buffer is a constant value, it is probably much more efficient to call glClearBufferSubData(), whose prototype is

void glClearBufferSubData(GLenum target,

GLenum internalformat,

GLintptr offset,

GLsizeiptr size,

GLenum format,

GLenum type,

const void * data);

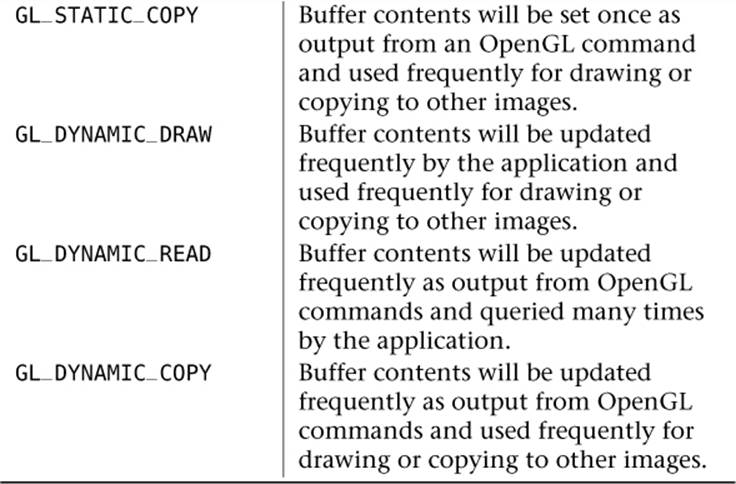

The glClearBufferSubData() function takes a pointer to a variable containing the values that you want to clear the buffer object to and, after converting it to the format specified in internalformat, replicates it across the range of the buffer’s data store specified by offset and size, both of which are measured in bytes. format and type tell OpenGL about the data pointed to by data. Format can be one of GL_RED, GL_RG, GL_RGB, or GL_RGBA to specify 1-, 2-, 3-, or 4-channel data, for example. Meanwhile, type should represent the data type of the components. For instance, it could beGL_UNSIGNED_BYTE or GL_FLOAT to specify unsigned bytes or floating-point data. The most common types supported by OpenGL and their corresponding C data types are listed in Table 5.2.

Table 5.2. Basic OpenGL Type Tokens and Their Corresponding C Types

Once your data has been sent to the GPU, it’s entirely possible you may want to share that data between buffers or copy the results from one buffer into another. OpenGL provides an easy-to-use way of doing that. glCopyBufferSubData() lets you specify which buffers are involved as well as the size and offsets to use.

void glCopyBufferSubData(GLenum readtarget,

GLenum writetarget,

GLintptr readoffset,

GLintptr writeoffset,

GLsizeiptr size);

The readtarget and writetarget are the targets where the two buffers you want to copy data between are bound. These can be buffers bound to any of the available buffer binding points. However, since buffer binding points can only have one buffer bound at a time, you couldn’t copy between two buffers both bound to the GL_ARRAY_BUFFER target, for example. This means that when you perform the copy, you need to pick two targets to bind the buffers to, which will disturb OpenGL state.

To resolve this, OpenGL provides the GL_COPY_READ_BUFFER and GL_COPY_WRITE_BUFFER targets. These targets were added specifically to allow you to copy data from one buffer to another without any unintended side effects. They are not used for anything else in OpenGL, and so you can bind your read and write buffers to these binding points without affecting any other buffer target. The readoffset and writeoffset parameters tell OpenGL where in the source and destination buffers to read or write the data, and the size parameter tells it how big the copy should be. Be sure that the ranges you are reading from and writing to remain within the bounds of the buffers; otherwise, your copy will fail.

You may notice the types of readoffset, writeoffset, and size, which are GLintptr and GLsizeiptr. These types are special definitions of integer types that are at least wide enough to hold a pointer variable.

Feeding Vertex Shaders from Buffers

Back in Chapter 2, you were briefly introduced to the vertex array object (VAO) where we explained how it represented the inputs to the vertex shader — even though at the time, we didn’t use any real inputs to our vertex shaders and opted instead for hard-coded arrays of data. Then, inChapter 3 we introduced the concept of vertex attributes, but we only discussed how to change their static values. Although the vertex array object stores these static attribute values for you, it can do a whole lot more. Before we can proceed, we need to create a vertex array object to store our vertex array state:

GLuint vao;

glGenVertexArrays(1, &vao);

glBindVertexArray(vao);

Now that we have our VAO created and bound, we can start filling in its state. Rather than using hard-coded data in the vertex shader, we can instead rely entirely on the value of a vertex attribute and ask OpenGL to fill it automatically using the data stored in a buffer object that we supply. To tell OpenGL where in the buffer object our data is, we use the glVertexAttribPointer() function2 to describe the data, and then enable automatic filling of the attribute by calling glEnableVertexAttribArray(). The prototypes of glVertexAttribPointer() andglEnableVertexAttribArray() are

2. glVertexAttribPointer() is so named for historical reasons. Way back in times of yore, OpenGL didn’t have buffer objects and all of the data it read was from your application’s memory. When you called glVertexAttribPointer(), you really did give it a pointer to real data. On modern architectures, that’s horribly inefficient, especially if the data will be read more than once, and so now OpenGL only supports reading data from buffer objects. Although the name of the function remains to this day, the pointer parameter is really interpreted as an offset into a buffer object.

void glVertexAttribPointer(GLuint index,

GLint size,

GLenum type,

GLboolean normalized,

GLsizei stride,

const GLvoid * pointer);

void glEnableVertexAttribArray(GLuint index);

For glVertexAttribPointer(), the first parameter, index, is the index of the vertex attribute. You can define a large number of attributes as input to a vertex shader and then refer to them by their index as explained in “Vertex Attributes” in Chapter 3. size is the number of components that are stored in the buffer for each vertex, and type is the type of the data, which would normally be one of the types in Table 5.2.

The normalized parameter tells OpenGL whether the data in the buffer should be normalized (scaled between 0.0 and 1.0) before being passed to the vertex shader or if it should be left alone and passed as is. This is ignored for floating-point data, but for integer data types such asGL_UNSIGNED_BYTE or GL_INT, it is important. For example, if GL_UNSIGNED_BYTE data is normalized, it is divided by 255 (the maximum value representable by an unsigned byte) before being passed to a floating-point input to the vertex shader. The shader will therefore see values of the input attribute between 0.0 and 1.0. However, if the data is not normalized, it is simply casted to floating point and the shader will receive numbers between 0.0 and 255.0, even though the input to the vertex shader is floating-point.

The stride parameter tells OpenGL how many bytes are between the start of one vertex’s data and the start of the next, but you can set this to zero to let OpenGL calculate it for you based on the values of size and type.

Finally, pointer is, despite its name, the offset into the buffer that is currently bound to GL_ARRAY_BUFFER where the vertex attribute’s data starts.

An example showing how to use glVertexAttribPointer() to configure a vertex attribute is shown in Listing 5.4. Notice that we also call glEnableVertexAttribArray() after setting up the pointer. This tells OpenGL to use the data in the buffer to fill the vertex attribute rather than using data we give it using one of the glVertexAttrib*() functions.

// First, bind our buffer object to the GL_ARRAY_BUFFER binding

// The subsequent call to glVertexAttribPointer will reference this buffer

glBindBuffer(GL_ARRAY_BUFFER, buffer);

// Now, describe the data to OpenGL, tell it where it is, and turn on

// automatic vertex fetching for the specified attribute

glVertexAttribPointer(0, // Attribute 0

4, // Four components

GL_FLOAT, // Floating-point data

GL_FALSE, // Not normalized

// (floating-point data never is)

0, // Tightly packed

NULL); // Offset zero (NULL pointer)

glEnableVertexAttribArray(0);

Listing 5.4: Setting up a vertex attribute

After Listing 5.4 has been executed, OpenGL will automatically fill the first attribute in the vertex shader with data it has read from the buffer that was bound when glVertexAttribPointer() was called. We can modify our vertex shader to use only its input vertex attribute rather than a hard-coded array. This updated shader is shown in Listing 5.5.

#version 430 core

layout (location = 0) in vec4 position;

void main(void)

{

gl_Position = position;

}

Listing 5.5: Using an attribute in a vertex shader

As you can see, the shader of Listing 5.5 is greatly simplified over the original shader shown in Chapter 2. Gone is the hard-coded array of data, and as an added bonus, this shader can be used with an arbitrary number of vertices. You can literally put millions of vertices worth of data into your buffer object and draw them all with a single command such as a call to glDrawArrays().

If you are done using data from a buffer object to fill a vertex attribute, you can disable that attribute again with a call to glDisableVertexAttribArray(), whose prototype is

void glDisableAttribArray(GLuint index);

Once you have disabled the vertex attribute, it goes back to being static and passing the value you specify with glVertexAttrib*() to the shader.

Using Multiple Vertex Shader Inputs

As you have learned, you can get OpenGL to feed data into your vertex shaders for you and using data you’ve placed in buffer objects. You can also declare multiple inputs to your vertex shaders and assign each one a unique location that can be used to refer to it. Combining these things together means that you can get OpenGL to provide data to multiple vertex shader inputs simultaneously. Consider the input declarations to a vertex shader shown in Listing 5.6

layout (location = 0) in vec3 position;

layout (location = 1) in vec3 color;

Listing 5.6: Declaring two inputs to a vertex shader

If you have a linked program object whose vertex shader has multiple inputs, you can determine the locations of those inputs by calling

GLint glGetAttribLocation(GLuint program,

const GLchar * name);

Here, program is the name of the program object containing the vertex shader, and name is the name of the vertex attribute. In our example declarations of Listing 5.6, passing "position" to glGetAttribLocation() will cause it to return 0, and passing "color" will cause it to return 1. Passing something that is not the name of a vertex shader input will cause glGetAttribLocation() to return -1. Of course, if you always specify locations for your vertex attributes in your shader code, then glGetAttribLocation() should return whatever you specified. If you don’t specify locations in shader code, OpenGL will assign locations for you, and those locations will be returned by glGetAttribLocation().

There are two ways to connect vertex shader inputs to your application’s data, and they are referred to as separate attributes and interleaved attributes.

When attributes are separate, that means that they are either located in different buffers, or at least at different locations in the same buffer. For example, if you want to feed data into two vertex attributes, you could create two buffer objects, bind the first to the GL_ARRAY_BUFFER target and callglVertexAttribPointer(), then bind the second buffer to the GL_ARRAY_BUFFER target and call glVertexAttribPointer() again for the second attribute. Alternatively, you can place the data at different offsets within the same buffer, bind it to the GL_ARRAY_BUFFER target, then callglVertexAttribPointer() twice — once with the offset to the first chunk of data and then again with the offset of the second chunk of data. Code demonstrating this is shown in Listing 5.7

GLuint buffer[2];

static const GLfloat positions[] = { ... };

static const GLfloat colors[] = { ... };

// Get names for two buffers

glGenBuffers(2, &buffers);

// Bind the first and initialize it

glBindBuffer(GL_ARRAY_BUFFER, buffer[0]);

glBufferData(GL_ARRAY_BUFFER, sizeof(positions), positions, GL_STATIC_DRAW);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, NULL);

glEnableVertexAttribArray(0);

// Bind the second and initialize it

glBindBuffer(GL_ARRAY_BUFFER, buffer[1]);

glBufferData(GL_ARRAY_BUFFER, sizeof(colors), colors, GL_STATIC_DRAW);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 0, NULL);

glEnableVertexAttribArray(1);

Listing 5.7: Multiple separate vertex attributes

In both cases of separate attributes, we have used tightly packed arrays of data to feed both attributes. This is effectively structure-of-arrays (SoA) data. We have a set of tightly packed, independent arrays of data. However, it’s also possible to use an array-of-structures form of data. Consider how the following structure might represent a single vertex:

struct vertex

{

// Position

float x;

float y;

float z;

// Color

float r;

float g;

float b;

};

Now we have two inputs to our vertex shader (position and color) interleaved together in a single structure. Clearly, if we make an array of these structures, we have an array-of-structures (AoS) layout for our data. To represent this with calls to glVertexAttribPointer(), we have to use itsstride parameter. The stride parameter tells OpenGL how far apart in bytes the start each vertex’s data is. If we leave it as zero, it’s a signal to OpenGL that the data is tightly packed and that it can work it out for itself given the type and stride parameters. However, to use the vertexstructure declared above, we can simply use sizeof(vertex) for the stride parameter and everything will work out. Listing 5.8 shows the code to do this.

GLuint buffer;

static const vertex vertices[] = { ... };

// Allocate and initialize a buffer object

glGenBuffers(1, &buffer);

glBindBuffer(GL_ARRAY_BUFFER, buffer);

glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);

// Set up two vertex attributes - first positions

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE,

sizeof(vertex), (void *)offsetof(vertex, x));

glEnableVertexAttribArray(0);

// Now colors

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE,

sizeof(vertex), (void *)offsetof(vertex, r));

glEnableVertexAttribArray(1);

Listing 5.8: Multiple interleaved vertex attributes

Loading Objects from Files

As you can see, you could potentially use a large number of vertex attributes in a single vertex shader, and as we progress through various techniques, you will see that we’ll regularly use four or five, possibly more. Filling buffers with data to feed all of these attributes and then setting up the vertex array object and all of the vertex attribute pointers can be a chore. Further, encoding all of your geometry data directly in your application just simply isn’t practical for anything but the simplest models. Therefore, it makes sense to store model data in files and load it into your application. There are plenty of model file formats out there, and most modeling programs support several of the more common formats.

For the purpose of this book, we have devised a simple object file definition called an .SBM file that stores the information we need without being either too simple or too over-engineered. Complete documentation for the format is contained in Appendix B. The sb6 framework also includes a loader for this model format, called sb6::object. To load an object file, create an instance of sb6::object, and call its load function as follows:

sb6::object my_object;

my_object.load("filename.sbm");

If successful, the model will be loaded into the instance of sb6::object, and you will be able to render it. During loading, the class will create and set up the object’s vertex array object and then configure all of the vertex attributes contained in the model file. The class also includes a renderfunction that binds the object’s vertex array object and calls the appropriate drawing command. For example, calling

my_object.render();

will render a single copy of the object with the current shaders. In many of the examples in the remainder of this book, we’ll simply use our object loader to load object files (several of which are included with the book’s source code) and render them.

Uniforms

Although not really a form of storage, uniforms are an important way to get data into shaders and to hook them up to your application. You have already seen how to pass data to a vertex shader using vertex attributes, and you have seen how to pass data from stage to stage using interface blocks. Uniforms allow you to pass data directly from your application into any shader stage. There are two flavors of uniforms that depend on how they are declared. The first are uniforms declared in the default block, and the second are uniform blocks, whose values are stored in buffer objects. We will discuss both now.

Default Block Uniforms

While attributes are needed for per-vertex positions, surface normals, texture coordinates, and so on, a uniform is how we pass data into a shader that stays the same — is uniform — for an entire primitive batch or longer. Probably the single most common uniform for a vertex shader is the transformation matrix. We use transformation matrices in our vertex shaders to manipulate vertex positions and other vectors. Any shader variable can be specified as a uniform, and uniforms can be in any of the shader stages (even though we only talk about vertex and fragment shaders in this chapter). Making a uniform is as simple as placing the keyword uniform at the beginning of the variable declaration:

uniform float fTime;

uniform int iIndex;

uniform vec4 vColorValue;

uniform mat4 mvpMatrix;

Uniforms are always considered to be constant, and they cannot be assigned to by your shader code. However, you can initialize their default values at declaration time in a manner such as

uniform answer = 42;

If you declare the same uniform in multiple shader stages, each of those stages will “see” the same value of that uniform.

Arranging Your Uniforms

After a shader has been compiled and linked into a program object, you can use one of many functions defined by OpenGL to set their values (assuming you don’t want the defaults defined by the shader). Just as with vertex attributes, these functions refer to uniforms by their location within their program object. It is possible to specify the locations of uniforms in your shader code by using a location layout qualifier. When you do this, OpenGL will try to assign the locations that you specify to the uniforms in your shaders. The location layout qualifier looks like

layout (location = 17) uniform vec4 myUniform;

You’ll notice the similarity between the location layout qualifier for uniforms and the one we’ve used for vertex shader inputs. In this case, myUniform will be allocated to location 17. If you don’t specify a location for your uniforms in your shader code, OpenGL will automatically assign locations to them for you. You can figure out what locations were assigned by calling the glGetUniformLocation() function, whose prototype is

GLint glGetUniformLocation(GLuint program,

const GLchar* name);

This function returns a signed integer that represents the location of the variable named by name in the program specified by program. For example, to get the location of a uniform variable named vColorValue, we would do something like this:

GLint iLocation = glGetUniformLocation(myProgram, "vColorValue");

In the previous example, passing "myUniform" to glGetUniformLocation() would result in the value 17 being returned. If you know a priori where your uniforms are because you assigned locations to them in your shaders, then you don’t need to find them and you can avoid the calls toglGetUniformLocation(). This is the recommended way of doing things.

If the return value of glGetUniformLocation() is -1, it means the uniform name could not be located in the program. You should bear in mind that even if a shader compiles correctly, a uniform name may still “disappear” from the program if it is not used directly in at least one of the attached shaders — even if you assign it a location explicitly in your shader source code. You do not need to worry about uniform variables being optimized away, but if you declare a uniform and then do not use it, the compiler will toss it out. Also, know that shader variable names are case sensitive, so you must get the case right when you query their locations.

Setting Scalars and Vector Uniforms

OpenGL supports a large number of data types both in the shading language and in the API, and in order to allow you to pass all this data around, it includes a huge number of functions just for setting the value of uniforms. A single scalar or vector data type can be set with any of the following variations on the glUniform*() function:

void glUniform1f(GLint location, GLfloat v0);

void glUniform2f(GLint location, Glfloat v0, GLfloat v1);

void glUniform3f(GLint location, GLfloat v0, GLfloat v1,

GLfloat v2);

void glUniform4f(GLint location, GLfloat v0, GLfloat v1,

GLfloat v2, GLfloat v3);

void glUniform1i(GLint location, GLint v0);

void glUniform2i(GLint location, GLint v0, GLint v1);

void glUniform3i(GLint location, GLint v0, GLint v1,

GLint v2);

void glUniform4i(GLint location, GLint v0, GLint v1,

GLint v2, GLint v3);

void glUniform1ui(GLint location, GLuint v0);

void glUniform2ui(GLint location, GLuint v0, GLuint v1);

void glUniform3ui(GLint location, GLuint v0, GLuint v1,

GLuint v2);

void glUniform4ui(GLint location, GLuint v0, GLuint v1,

GLuint v2, GLint v3);

For example, consider the following four variables declared in a shader:

uniform float fTime;

uniform int iIndex;

uniform vec4 vColorValue;

uniform bool bSomeFlag;

To find and set these values in the shader, your C/C++ code might look something like this:

GLint locTime, locIndex, locColor, locFlag;

locTime = glGetUniformLocation(myShader, "fTime");

locIndex = glGetUniformLocation(myShader, "iIndex");

locColor = glGetUniformLocation(myShader, "vColorValue");

locFlag = glGetUniformLocation(myShader, "bSomeFlag");

...

...

glUseProgram(myShader);

glUniform1f(locTime, 45.2f);

glUniform1i(locIndex, 42);

glUniform4f(locColor, 1.0f, 0.0f, 0.0f, 1.0f);

glUniform1i(locFlag, GL_FALSE);

Note that we used an integer version of glUniform*() to pass in a bool value. Booleans can also be passed in as floats, with 0.0 representing false, and any non-zero value representing true.

Setting Uniform Arrays

The glUniform*() function also comes in flavors that take a pointer, potentially to an array of values.

void glUniform1fv(GLint location, GLuint count, const GLfloat* value);

void glUniform2fv(GLint location, GLuint count, const Glfloat* value);

void glUniform3fv(GLint location, GLuint count, const GLfloat* value);

void glUniform4fv(GLint location, GLuint count, const GLfloat* value);

void glUniform1iv(GLint location, GLuint count, const GLint* value);

void glUniform2iv(GLint location, GLuint count, const GLint* value);

void glUniform3iv(GLint location, GLuint count, const GLint* value);

void glUniform4iv(GLint location, GLuint count, const GLint* value);

void glUniform1uiv(GLint location, GLuint count, constGLuint* value);

void glUniform2uiv(GLint location, GLuint count, constGLuint* value);

void glUniform3uiv(GLint location, GLuint count, constGLuint* value);

void glUniform4uiv(GLint location, GLuint count, constGLuint* value);

Here, the count value represents how many elements are in each array of x number of components, where x is the number at the end of the function name. For example, if you had a uniform with four components, such as one shown here:

uniform vec4 vColor;

then in C/C++, you could represent this as an array of floats:

GLfloat vColor[4] = { 1.0f, 1.0f, 1.0f, 1.0f };

But this is a single array of four values, so passing it into the shader would look like this:

glUniform4fv(iColorLocation, 1, vColor);

On the other hand, if you had an array of color values in your shader,

uniform vec4 vColors[2];

then in C++, you could represent the data and pass it in like this:

GLfloat vColors[4][2] = { { 1.0f, 1.0f, 1.0f, 1.0f } ,

{ 1.0f, 0.0f, 0.0f, 1.0f } };

...

glUniform4fv(iColorLocation, 2, vColors);

At its simplest, you can set a single floating-point uniform like this:

GLfloat fValue = 45.2f;

glUniform1fv(iLocation, 1, &fValue);

Setting Uniform Matrices

Finally, we see how to set a matrix uniform. Shader matrix data types only come in the single and double-precision floating-point variety, and thus we have far less variation. The following functions set the values of 2 × 2, 3 × 3, and 4 × 4 single-precision floating-point matrix uniforms, respectively:

glUniformMatrix2fv(GLint location, GLuint count,

GLboolean transpose, const GLfloat *m);

glUniformMatrix3fv(GLint location, GLuint count,

GLboolean transpose, const GLfloat *m);

glUniformMatrix4fv(GLint location, GLuint count,

GLboolean transpose, const GLfloat *m);

Similarly, the following functions set the values of 2 × 2, 3 × 3, and 4 × 4 double-precision floating-point matrix uniforms:

glUniformMatrix2dv(GLint location, GLuint count,

GLboolean transpose, const GLdouble *m);

glUniformMatrix3dv(GLint location, GLuint count,

GLboolean transpose, const GLdouble *m);

glUniformMatrix4dv(GLint location, GLuint count,

GLboolean transpose, const GLdouble *m);

In all of these functions, the variable count represents the number of matrices stored at the pointer parameter m (yes, you can have arrays of matrices!). The Boolean flag transpose is set to GL_FALSE if the matrix is already stored in column-major ordering (the way OpenGL prefers). Setting this value to GL_TRUE causes the matrix to be transposed when it is copied into the shader. This might be useful if you are using a matrix library that uses a row-major matrix layout instead (for example, some other graphics APIs use row-major ordering and you may wish to use a library designed for one of them).

Uniform Blocks

Eventually, the shaders you’ll be writing will become very complex. Some of them will require a lot of constant data, and passing all this to the shader using uniforms can become quite inefficient. If you have a lot of shaders in an application, you’ll need to set up the uniforms for every one of those shaders, which means a lot of calls to the various glUniform*() functions. You’ll also need to keep track of which uniforms change. Some change for every object, some change once per frame, while others may only require initializing once for the whole application. This means that you either need to update different sets of uniforms in different places in your application (making it more complex to maintain) or update all the uniforms all the time (costing performance).

To alleviate the cost of all the glUniform*() calls, to make updating a large set of uniforms simpler, and to be able to easily share a set of uniforms between different programs, OpenGL allows you to combine a group of uniforms into a uniform block and store the whole block in a buffer object. The buffer object is just like any other that has been described earlier. You can quickly set the whole group of uniforms by either changing your buffer binding or overwriting the content of a bound buffer. You can also leave the buffer bound while you change programs, and the new program will see the current set of uniform values. This functionality is called the uniform buffer object, or UBO. In fact, the uniforms you’ve used up until now live in the default block. Any uniform declared at the global scope in a shader ends up in the default uniform block. You can’t keep the default block in a uniform buffer object; you need to create one or more named uniform blocks.

To declare a set of uniforms to be stored in a buffer object, you need to use a named uniform block in your shader. This looks a lot like the interface blocks described in the section “Interface Blocks” back in Chapter 3, but it uses the uniform keyword instead of in or out. Listing 5.9 shows what the code looks like in a shader.

uniform TransformBlock

{

float scale; // Global scale to apply to everything

vec3 translation; // Translation in X, Y, and Z

float rotation[3]; // Rotation around X, Y, and Z axes

mat4 projection_matrix; // A generalized projection matrix to apply

// after scale and rotate

} transform;

Listing 5.9: Example uniform block declaration

This code declares a uniform block whose name is TransformBlock. It also declares a single instance of the block called transform. Inside the shader, you can refer to the members of the block using its instance name, transform (e.g., transform.scale or transform.projection_matrix). However, to set up the data in the buffer object that you’ll use to back the block, you need to know the location of a member of the block, and for that, you need the block name, TransformBlock. If you wanted to have multiple instances of the block, each with its own buffer, you could maketransform an array. The members of the block will have the same locations within each block, but there will now be several instances of the block that you can refer to in the shader. Querying the location of members within a block is important when you want to fill the block with data, which is explained in the following section.

Building Uniform Blocks

Data accessed in the shader via named uniform blocks can be stored in buffer objects. In general, it is the application’s job to fill the buffer objects with data using functions like glBufferData() or glMapBuffer(). The question is, then, what is the data in the buffer supposed to look like? There are actually two possibilities here, and whichever one you choose is a trade-off.

The first method is to use a standard, agreed upon layout for the data. This means that your application can just copy data into the buffers and assume specific locations for members within the block — you can even store the data on disk ahead of time and simply read it straight into a buffer that’s been mapped using glMapBuffer(). The standard layout may leave some empty space between the various members of the block, making the buffer larger than it needs to be, and you might even trade some performance for this convenience, but even so, using the standard layout is probably safe in almost all situations.

Another alternative is to let OpenGL decide where it would like the data. This can produce the most efficient shaders, but it means that your application needs to figure out where to put the data so that OpenGL can read it. Under this scheme, the data stored in uniform buffers is arranged in ashared layout. This is the default layout and is what you get if you don’t explicitly ask OpenGL for something else. With the shared layout, the data in the buffer is laid out however OpenGL decides is best for runtime performance and access from the shader. This can sometimes allow for greater performance to be achieved by the shaders, but requires more work from the application. The reason this is called the shared layout is that while OpenGL has arranged the data within the buffer, that arrangement will be the same between multiple programs and shaders sharing the same declaration of the uniform block. This allows you to use the same buffer object with any program. To use the shared layout, the application must determine the locations within the buffer object of the members of the uniform block.

First, we’ll describe the standard layout, which is what we would recommend that you use for your shaders (even though it’s not the default). To tell OpenGL that you want to use the standard layout, you need to declare the uniform block with a layout qualifier. A declaration of ourTransformBlock uniform block, with the standard layout qualifier, std140, is shown in Listing 5.10.

layout(std140) uniform TransformBlock

{

float scale; // Global scale to apply to everything

vec3 translation; // Translation in X, Y, and Z

float rotation[3]; // Rotation around X, Y, and Z axes

mat4 projection_matrix; // A generalized projection matrix to

// apply after scale and rotate

} transform;

Listing 5.10: Declaring a uniform block with the std140 layout

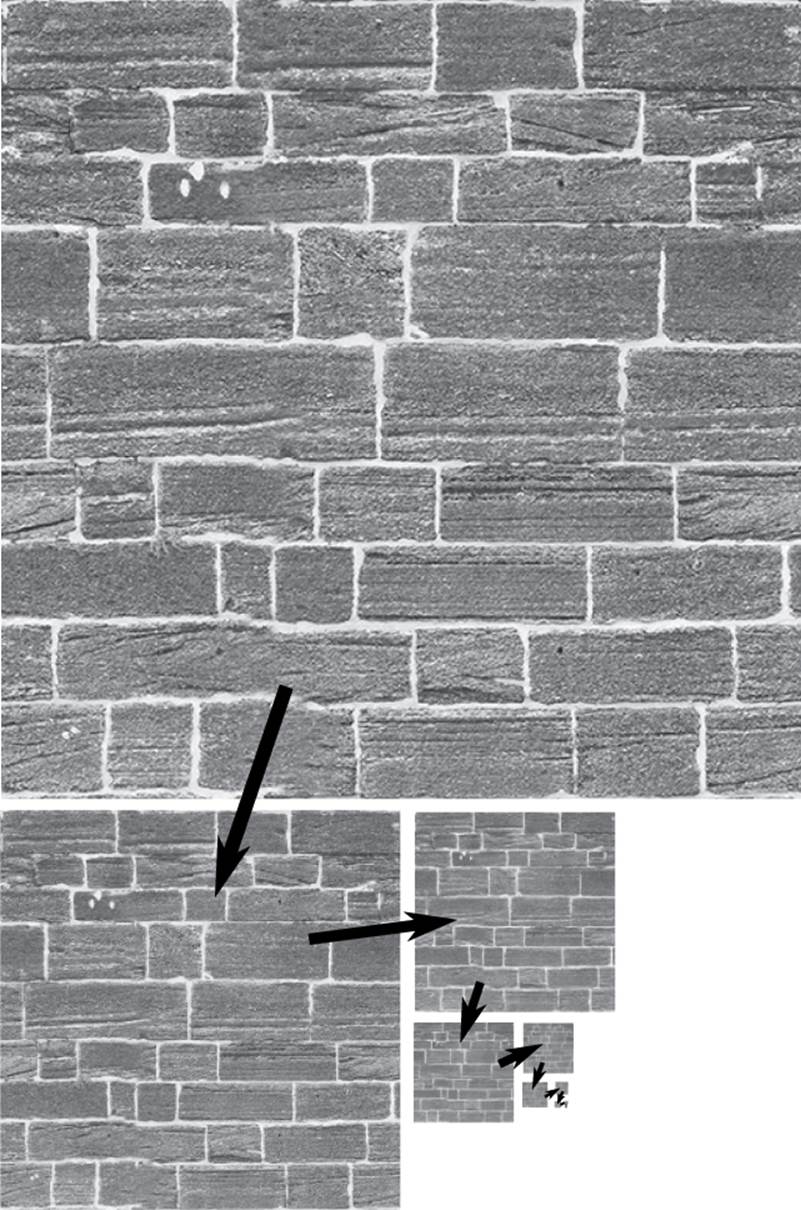

Once a uniform block has been declared to use the standard, or std140, layout, each member of the block consumes a predefined amount of space in the buffer and begins at an offset that is predictable by following a set of rules. A summary of the rules is as follows:

Any type consuming N bytes in a buffer begins on an N-byte boundary within that buffer. That means that standard GLSL types such as int, float, and bool (which are all defined to be 32-bit or four-byte quantities) begin on multiples of four bytes. A vector of these types of length two always begins on a 2N-byte boundary. For example, that means a vec2, which is eight bytes long in memory, always starts on an eight-byte boundary. Three- and four-element vectors always start on a 4N-byte boundary; so vec3 and vec4 types start on 16-byte boundaries, for instance. Each member of an array of scalar or vector types (int s or vec3 s, for example) always start boundaries defined by these same rules, but rounded up to the alignment of a vec4. In particular, this means that arrays of anything but vec4 (and N × 4 matrices) won’t be tightly packed, but instead there will be a gap between each of the elements. Matrices are essentially treated like short arrays of vectors, and arrays of matrices are treated like very long arrays of vectors. Finally, structures and arrays of structures have additional packing requirements; the whole structure starts on the boundary required by its largest member, rounded up to the size of a vec4.

Particular attention must be paid to the difference between the std140 layout and the packing rules that are often followed by your C++ (or other application language) compiler of choice. In particular, an array in a uniform block is not necessarily tightly packed. This means that you can’t create, for example, an array of float in a uniform block and simply copy data from a C array into it because the data from the C array will be packed, and the data in the uniform block won’t be.

This all sounds complex, but it is logical and well defined, and allows a large range of graphics hardware to implement uniform buffer objects efficiently. Returning to our TransformBlock example, we can figure out the offsets of the members of the block within the buffer using these rules.Listing 5.11 shows an example of a uniform block declaration along with the offsets of its members.

layout(std140) uniform TransformBlock

{

// Member base alignment offset aligned offset

float scale; // 4 0 0

vec3 translation; // 16 4 16

float rotation[3]; // 16 28 32 (rotation[0])

// 48 (rotation[1])

// 64 (rotation[2])

mat4 projection_matrix; // 16 80 80 (column 0)

// 96 (column 1)

// 112 (column 2)

// 128 (column 3)

} transform;

Listing 5.11: Example of a uniform block with offsets

There is a complete example of the alignments of various types in the original ARB_uniform_buffer_object extension specification.

If you really want to use the shared layout, you can determine the offsets that OpenGL assigned to your block members. Each member of a uniform block has an index that is used to refer to it to find its size and location within the block. To get the index of a member of a uniform block, call

void glGetUniformIndices(GLuint program,

GLsizei uniformCount,

const GLchar ** uniformNames,

GLuint * uniformIndices);

This function allows you to get the indices of a large set of uniforms — perhaps even all of the uniforms in a program with a single call to OpenGL, even if they’re members of different blocks. It takes a count of the number of uniforms you’d like the indices for (uniformCount) and an array of uniform names (uniformNames) and puts their indices in an array for you (uniformIndices). Listing 5.12 contains an example of how you would retrieve the indices of the members of TransformBlock, which we declared earlier.

static const GLchar * uniformNames[4] =

{

"TransformBlock.scale",

"TransformBlock.translation",

"TransformBlock.rotation",

"TransformBlock.projection_matrix"

};

GLuint uniformIndices[4];

glGetUniformIndices(program, 4, uniformNames, uniformIndices);

Listing 5.12: Retrieving the indices of uniform block members

After this code has run, you have the indices of the four members of the uniform block in the uniformIndices array. Now that you have the indices, you can use them to find the locations of the block members within the buffer. To do this, call

void glGetActiveUniformsiv(GLuint program,

GLsizei uniformCount,

const GLuint * uniformIndices,

GLenum pname,

GLint * params);

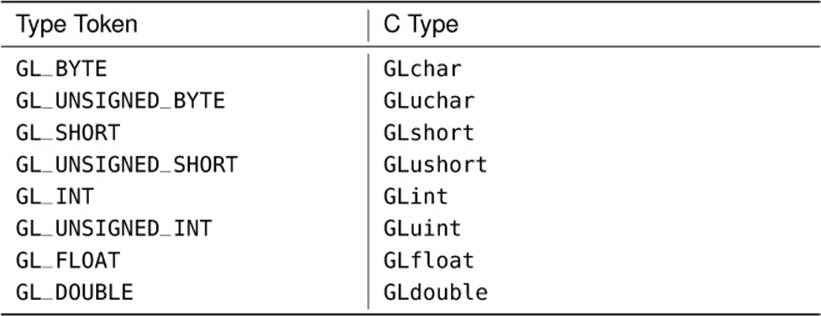

This function can give you a lot of information about specific uniform block members. The information that we’re interested in is the offset of the member within the buffer, the array stride (for TransformBlock.rotation), and the matrix stride (for TransformBlock.projection_matrix). These values tell us where to put data within the buffer so that it can be seen in the shader. We can retrieve these from OpenGL by setting pname to GL_UNIFORM_OFFSET, GL_UNIFORM_ARRAY_STRIDE, and GL_UNIFORM_MATRIX_STRIDE, respectively. Listing 5.13 shows what the code looks like.

GLint uniformOffsets[4];

GLint arrayStrides[4];

GLint matrixStrides[4];

glGetActiveUniformsiv(program, 4, uniformIndices,

GL_UNIFORM_OFFSET, uniformOffsets);

glGetActiveUniformsiv(program, 4, uniformIndices,

GL_UNIFORM_ARRAY_STRIDE, arrayStrides);

glGetActiveUniformsiv(program, 4, uniformIndices,

GL_UNIFORM_MATRIX_STRIDE, matrixStrides);

Listing 5.13: Retrieving the information about uniform block members

Once the code in Listing 5.13 has run, uniformOffsets contains the offsets of the members of the TransformBlock block, arrayStrides contains the strides of the array members (only rotation, for now), and matrixStrides contains the strides of the matrix members (only projection_matrix).

The other information that you can find out about uniform block members includes the data type of the uniform, the size in bytes that it consumes in memory, and layout information related to arrays and matrices within the block. You need some of that information to initialize a buffer object with more complex types, although the size and types of the members should be known to you already if you wrote the shaders. The other accepted values for pname and what you get back are listed in Table 5.3.

Table 5.3. Uniform Parameter Queries via glGetActiveUniformsiv()

If the type of the uniform you’re interested in is a simple type such as int, float, bool, or even vectors of these types (vec4 and so on), all you need is its offset. Once you know the location of the uniform within the buffer, you can either pass the offset to glBufferSubData() to load the data at the appropriate location, or you can use the offset directly in your code to assemble the buffer in memory. We demonstrate the latter option here because it reinforces the idea that the uniforms are stored in memory, just like vertex information can be stored in buffers. It also means fewer calls to OpenGL, which can sometimes lead to higher performance. For these examples, we assemble the data in the application’s memory and then load it into a buffer using glBufferData(). You could alternatively use glMapBuffer() to get a pointer to the buffer’s memory and assemble the data directly into that.

Let’s start by setting the simplest uniform in the TransformBlock block, scale. This uniform is a single float whose location is stored in the first element of our uniformIndices array. Listing 5.14 shows how to set the value of the single float.

// Allocate some memory for our buffer (don't forget to free it later)

unsigned char * buffer = (unsigned char *)malloc(4096);

// We know that TransformBlock.scale is at uniformOffsets[0] bytes

// into the block, so we can offset our buffer pointer by that value and

// store the scale there.

*((float *)(buffer + uniformOffsets[0])) = 3.0f;

Listing 5.14: Setting a single float in a uniform block

Next, we can initialize data for TransformBlock.translation. This is a vec3, which means it consists of three floating-point values packed tightly together in memory. To update this, all we need to do is find the location of the first element of the vector and store three consecutive floats in memory starting there. This is shown in Listing 5.15.

// Put three consecutive GLfloat values in memory to update a vec3

((float *)(buffer + uniformOffsets[1]))[0] = 1.0f;

((float *)(buffer + uniformOffsets[1]))[1] = 2.0f;

((float *)(buffer + uniformOffsets[1]))[2] = 3.0f;

Listing 5.15: Retrieving the indices of uniform block members

Now, we tackle the array rotation. We could have also used a vec3 here, but for the purposes of this example, we use a three-element array to demonstrate the use of the GL_UNIFORM_ARRAY_STRIDE parameter. When the shared layout is used, arrays are defined as a sequence of elements separated by an implementation-defined stride in bytes. This means that we have to place the data at locations in the buffer defined both by GL_UNIFORM_OFFSET and GL_UNIFORM_ARRAY_STRIDE, as in the code snippet of Listing 5.16.

// TransformBlock.rotations[0] is at uniformOffsets[2] bytes into

// the buffer. Each element of the array is at a multiple of

// arrayStrides[2] bytes past that

const GLfloat rotations[] = { 30.0f, 40.0f, 60.0f };

unsigned int offset = uniformOffsets[2];

for (int n = 0; n < 3; n++)

{

*((float *)(buffer + offset)) = rotations[n];

offset += arrayStrides[2];

}

Listing 5.16: Specifying the data for an array in a uniform block

Finally, we set up the data for TransformBlock.projection_matrix. Matrices in uniform blocks behave much like arrays of vectors. For column-major matrices (which is the default), each column of the matrix is treated like a vector, the length of which is the height of the matrix. Likewise, row-major matrices are treated like an array of vectors where each row is an element in that array. Just like normal arrays, the starting offset for each column (or row) in the matrix is determined by an implementation defined quantity. This can be queried by passing theGL_UNIFORM_MATRIX_STRIDE parameter to glGetActiveUniformsiv(). Each column of the matrix can be initialized using similar code to that which was used to initialize the vec3 TransformBlock.translation. This setup code is given in Listing 5.17.

// The first column of TransformBlock.projection_matrix is at

// uniformOffsets[3] bytes into the buffer. The columns are

// spaced matrixStride[3] bytes apart and are essentially vec4s.

// This is the source matrix - remember, it's column major so

const GLfloat matrix[] =

{

1.0f, 2.0f, 3.0f, 4.0f,

9.0f, 8.0f, 7.0f, 6.0f,

2.0f, 4.0f, 6.0f, 8.0f,

1.0f, 3.0f, 5.0f, 7.0f

};

for (int i = 0; i < 4; i++)

{

GLuint offset = uniformOffsets[3] + matrixStride[3] * i;

for (j = 0; j < 4; j++)

{

*((float *)(buffer + offset)) = matrix[i * 4 + j];

offset += sizeof(GLfloat);

}

}

Listing 5.17: Setting up a matrix in a uniform block

This method of querying offsets and strides works for any of the layouts. With the shared layout, it is the only option. However, it’s somewhat inconvenient, and as you can see, you need quite a lot of code to lay out your data in the buffer in the correct way. This is why we recommend that you use the standard layout. This allows you to determine where in the buffer data should be placed based on a set of rules that specify the size and alignments for the various data types supported by OpenGL. These rules are common across all OpenGL implementations, and so you don’t need to query anything to use it (although, should you query offsets and strides, the results will be correct). There is some chance that you’ll trade a small amount of shader performance for its use, but the savings in code complexity and application performance are well worth it.

Regardless of which packing mode you choose, you can bind your buffer full of data to a uniform block in your program. Before you can do this, you need to retrieve the index of the uniform block. Each uniform block in a program has an index that is compiler assigned. There is fixed maximum number of uniform blocks that can be used by a single program, and a maximum number that can be used in any given shader stage. You can find these limits by calling glGetIntegerv() with the GL_MAX_UNIFORM_BUFFERS parameter (for the total per program) and eitherGL_MAX_VERTEX_UNIFORM_BUFFERS, GL_MAX_GEOMETRY_UNIFORM_BUFFERS, GL_MAX_TESS_CONTROL_UNIFORM_BUFFERS, GL_MAX_TESS_EVALUATION_UNIFORM_BUFFERS, or GL_MAX_FRAGMENT_UNIFORM_BUFFERS for the vertex, tessellation control and evaluation, geometry, and fragment shader limits, respectively. To find the index of a uniform block in a program, call

GLuint glGetUniformBlockIndex(GLuint program,

const GLchar * uniformBlockName);

This returns the index of the named uniform block. In our example uniform block declaration here, uniformBlockName would be "TransformBlock". There is a set of buffer binding points to which you can bind a buffer to provide data for the uniform blocks. It is essentially a two-step process to bind a buffer to a uniform block. Uniform blocks are assigned binding points, and then buffers can be bound to those binding points, matching buffers with uniform blocks. This way, different programs can be switched in and out without changing buffer bindings, and the fixed set of uniforms will automatically be seen by the new program. Contrast this to the values of the uniforms in the default block, which are per-program state. Even if two programs contain uniforms with the same names, their values must be set for each program and will change when the active program is changed.

To assign a binding point to a uniform block, call

void glUniformBlockBinding(GLuint program,

GLuint uniformBlockIndex,

GLuint uniformBlockBinding);

where program is the program where the uniform block you’re changing lives. uniformBlockIndex is the index of the uniform block you’re assigning a binding point to. You just retrieved that by calling glGetUniformBlockIndex(). uniformBlockBinding is the index of the uniform block binding point. An implementation of OpenGL supports a fixed maximum number of binding points, and you can find out what that limit is by calling glGetIntegerv() with the GL_MAX_UNIFORM_BUFFER_BINDINGS parameter.

Alternatively, you can specify the binding index of your uniform blocks right in your shader code. To do this, we again use the layout qualifier, this time with the binding keyword. For example, to assign our TransformBlock block to binding 2, we could declare it as

layout(std140, binding = 2) uniform TransformBlock

{

...

} transform;

Notice that the binding layout qualifier can be specified at the same time as the std140 (or any other) qualifier. Assigning bindings in your shader source code avoids the need to call glUniformBlockBinding(), or even to determine the block’s index from your application, and so is usually the best method of assigning block location. Once you’ve assigned binding points to the uniform blocks in your program, whether through the glUniformBlockBinding() function or through a layout qualifier, you can bind buffers to those same binding points to make the data in the buffers appear in the uniform blocks. To do this, call

glBindBufferBase(GL_UNIFORM_BUFFER, index, buffer);

Here, GL_UNIFORM_BUFFER tells OpenGL that we’re binding a buffer to one of the uniform buffer binding points. index is the index of the binding point and should match what you specified either in your shader or in uniformBlockBinding in your call to glUniformBlockBinding(). buffer is the name of the buffer object that you want to attach. It’s important to note that index is not the index of the uniform block (uniformBlockIndex in glUniformBlockBinding()), but the index of the uniform buffer binding point. This is a common mistake to make and is easy to miss.

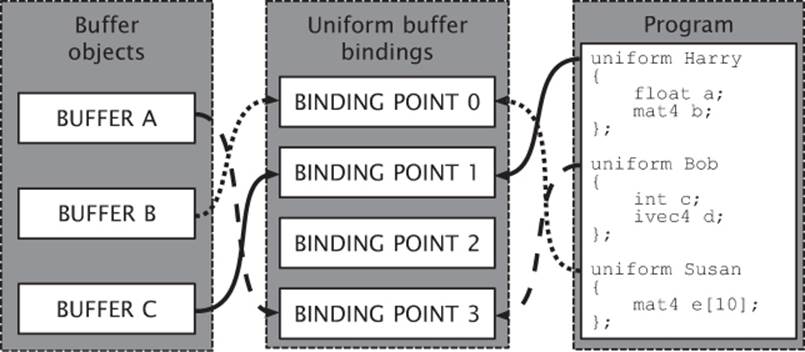

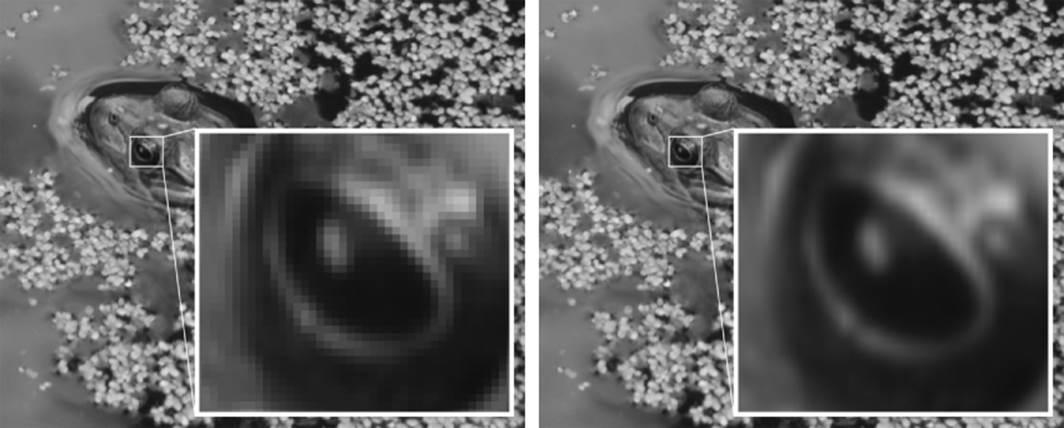

This mixing and matching of binding points with uniform block indices is illustrated in Figure 5.1.

Figure 5.1: Binding buffers and uniform blocks to binding points

In Figure 5.1, there is a program with three uniform blocks (Harry, Bob, and Susan) and three buffer objects (A, B, and C). Harry is assigned to binding point 1, and buffer C is bound to binding point 1, so Harry’s data comes from buffer C. Likewise, Bob is assigned to binding point 3, to which buffer A is bound, and so Bob’s data comes from buffer A. Finally, Susan is assigned to binding point 0, and buffer B is bound to binding point 0, so Susan’s data comes from buffer B. Notice that binding point 2 is not used.

That doesn’t matter. There could be a buffer bound there, but the program doesn’t use it.

The code to set this up is simple and is given in Listing 5.18.

// Get the indices of the uniform blocks using glGetUniformBlockIndex

GLuint harry_index = glGetUniformBlockIndex(program, "Harry");

GLuint bob_index = glGetUniformBlockIndex(program, "Bob");

GLuint susan_index = glGetUniformBlockIndex(program, "Susan");

// Assign buffer bindings to uniform blocks, using their indices

glUniformBlockBinding(program, harry_index, 1);

glUniformBlockBinding(program, bob_index, 3);

glUniformBlockBinding(program, susan_index, 0);

// Bind buffers to the binding points

// Binding 0, buffer B, Susan's data

glBindBufferBase(GL_UNIFORM_BUFFER, 0, buffer_b);

// Binding 1, buffer C, Harry's data

glBindBufferBase(GL_UNIFORM_BUFFER, 1, buffer_c);

// Note that we skipped binding 2

// Binding 3, buffer A, Bob's data

glBindBufferBase(GL_UNIFORM_BUFFER, 3, buffer_a);

Listing 5.18: Specifying bindings for uniform blocks

Again, if we had set the bindings for our uniform blocks in our shader code by using the binding layout qualifier, we could avoid the calls to glUniformBlockBinding() in Listing 5.18. This example is shown in Listing 5.19.

layout (binding = 1) uniform Harry

{

// ...

};

layout (binding = 3) uniform Bob

{

// ...

};

layout (binding = 0) uniform Susan

{

// ...

};

Listing 5.19: Uniform blocks binding layout qualifiers

After a shader containing the declarations shown in Listing 5.19 is compiled and linked into a program object, the bindings for the Harry, Bob, and Susan uniform blocks will be set to the same things as they would be after executing Listing 5.18. Setting the uniform block binding in the shader can be useful for a number of reasons. First is that it reduces the number of calls to OpenGL that your application must make. Second, it allows the shader to associate a uniform block with a particular binding point without the application needing to know its name. This can be helpful if you have some data in a buffer with a standard layout, but want to refer to it with different names in different shaders.

A common use for uniform blocks is to separate steady state from transient state. By setting up the bindings for all your programs using a standard convention, you can leave buffers bound when you change the program. For example, if you have some relatively fixed state — say the projection matrix, the size of the viewport, and a few other things that change once a frame or less often — you can leave that information in a buffer bound to binding point zero. Then, if you set the binding for the fixed state to zero for all programs, whenever you switch program objects using glUseProgram(), the uniforms will be sitting there in the buffer, ready to use.

Now let’s say that you have a fragment shader that simulates some material (e.g., cloth or metal); you could put the parameters for the material into another buffer. In your program that shades that material, bind the uniform block containing the material parameters to binding point 1. Each object would maintain a buffer object containing the parameters of its surface. As you render each object, it uses the common material shader and simply binds its parameter buffer to buffer binding point 1.

A final significant advantage of uniform blocks is that they can be quite large. The maximum size of a uniform block can be determined by calling glGetIntegerv() and passing the GL_MAX_UNIFORM_BLOCK_SIZE parameter. Also, the number of uniform blocks that you can access from a single program can be retrieved by calling glGetIntegerv() and passing the GL_MAX_UNIFORM_BLOCK_BINDINGS. OpenGL guarantees that at least 64KB in size, and you can have at least 14 of them referenced by a single program. Taking the example of the previous paragraph a little further, you could pack all of the properties for all of the materials used by your application into a single, large uniform block containing a big array of structures. As you render the objects in your scene, you only need to communicate the index within that array of the material you wish to use. You can achieve that with a static vertex attribute or traditional uniform, for example. This could be substantially faster than replacing the contents of a buffer object or changing uniform buffer bindings between each object. If you’re really clever, you can even render objects made up from multiple surfaces with different materials using a single drawing command.

Using Uniforms to Transform Geometry

Back in Chapter 4, “Math for 3D Graphics,” you learned how to construct matrices that represent several common transformations including scale, translation, and rotation, and how to use the sb6::vmath library to do the heavy lifting for you. You also saw how to multiply matrices to produce a composite matrix that represents the whole transformation sequence. Given a point of interest and the camera’s location and orientation, you can build a matrix that will transform objects into the coordinate space of the viewer. Also, you can build matrices that represent perspective and orthographic projections onto the screen.

Furthermore, in this chapter you have seen how to feed a vertex shader with data from buffer objects, and how to pass data into your shaders through uniforms (whether in the default uniform block, or in a uniform buffer). Now it’s time to put all this together and build a program that does a little more than pass vertices through un-transformed.

Our example program will be the classic spinning cube. We’ll create geometry representing a unit cube located at the origin and store it in buffer objects. Then, we will use a vertex shader to apply a sequence of transforms to it to move it into world space. We will construct a basic view matrix, multiply our model and view matrices together to produce a model-view matrix, and create a perspective transformation matrix representing some of the properties of our camera. Finally, we will pass these into a simple vertex shader using uniforms and draw the cube on the screen.

First, let’s set up the cube geometry using a vertex array object. The code to do this is shown in Listing 5.20.

// First, create and bind a vertex array object

glGenVertexArrays(1, &vao);

glBindVertexArray(vao);

static const GLfloat vertex_positions[] =

{

-0.25f, 0.25f, -0.25f,

-0.25f, -0.25f, -0.25f,

0.25f, -0.25f, -0.25f,

0.25f, -0.25f, -0.25f,

0.25f, 0.25f, -0.25f,

-0.25f, 0.25f, -0.25f,

/* MORE DATA HERE */

-0.25f, 0.25f, -0.25f,

0.25f, 0.25f, -0.25f,

0.25f, 0.25f, 0.25f,

0.25f, 0.25f, 0.25f,

-0.25f, 0.25f, 0.25f,

-0.25f, 0.25f, -0.25f

};

// Now generate some data and put it in a buffer object

glGenBuffers(1, &buffer);

glBindBuffer(GL_ARRAY_BUFFER, buffer);

glBufferData(GL_ARRAY_BUFFER,

sizeof(vertex_positions),

vertex_positions,

GL_STATIC_DRAW);

// Set up our vertex attribute

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 0, NULL);

glEnableVertexAttribArray(0);

Listing 5.20: Setting up cube geometry

Next, on each frame, we need to calculate the position and orientation of our cube and calculate the matrix that represents them. We also build the camera matrix by simply translating in the z direction. Once we have built these matrices, we can multiply them together and pass them as uniforms into our vertex shader. The code to do this is shown in Listing 5.21.

float f = (float)currentTime * (float)M_PI * 0.1f;

vmath::mat4 mv_matrix =

vmath::translate(0.0f, 0.0f, -4.0f) *

vmath::translate(sinf(2.1f * f) * 0.5f,

cosf(1.7f * f) * 0.5f,

sinf(1.3f * f) * cosf(1.5f * f) * 2.0f) *

vmath::rotate((float)currentTime * 45.0f, 0.0f, 1.0f, 0.0f) *

vmath::rotate((float)currentTime * 81.0f, 1.0f, 0.0f, 0.0f);

Listing 5.21: Building the model-view matrix for a spinning cube

The projection matrix can be rebuilt whenever the window size changes. The sb6::application framework provides a function called onResize that handles resize events. If we override this function, then when the window size changes it will be called and we can projection matrix. We can load that into a uniform as well in our rendering loop. If the window size changes, we’ll also need to update our viewport with a call to glViewport(). Once we have put all our matrices into our uniforms, we can draw the cube geometry with the glDrawArrays() function. The code to update the projection matrix is shown in Listing 5.22 and the remainder of the rendering loop is shown in Listing 5.23.

void onResize(int w, int h)

{

sb6::application::onResize(w, h);

aspect = (float)info.windowWidth / (float)info.windowHeight;

proj_matrix = vmath::perspective(50.0f,

aspect,

0.1f,

1000.0f);

}

Listing 5.22: Updating the projection matrix for the spinning cube

// Clear the framebuffer with dark green

static const GLfloat green[] = { 0.0f, 0.25f, 0.0f, 1.0f };

glClearBufferfv(GL_COLOR, 0, green);

// Activate our program

glUseProgram(program);

// Set the model-view and projection matrices

glUniformMatrix4fv(mv_location, 1, GL_FALSE, mv_matrix);

glUniformMatrix4fv(proj_location, 1, GL_FALSE, proj_matrix);

// Draw 6 faces of 2 triangles of 3 vertices each = 36 vertices

glDrawArrays(GL_TRIANGLES, 0, 36);

Listing 5.23: Rendering loop for the spinning cube

Before we can actually render anything, we’ll need to write a simple vertex shader to transform the vertex positions using the matrices we’ve been given and to pass along the color information so that the cube isn’t just a flat blob. The vertex shader is shown in Listing 5.24 and the fragment shader is shown in Listing 5.25.

#version 430 core

in vec4 position;

out VS_OUT

{

vec4 color;

} vs_out;

uniform mat4 mv_matrix;

uniform mat4 proj_matrix;

void main(void)

{

gl_Position = proj_matrix * mv_matrix * position;

vs_out.color = position * 2.0 + vec4(0.5, 0.5, 0.5, 0.0);

}

Listing 5.24: Spinning cube vertex shader

#version 430 core

out vec4 color;

in VS_OUT

{

vec4 color;

} fs_in;

void main(void)

{

color = fs_in.color;

}

Listing 5.25: Spinning cube fragment shader

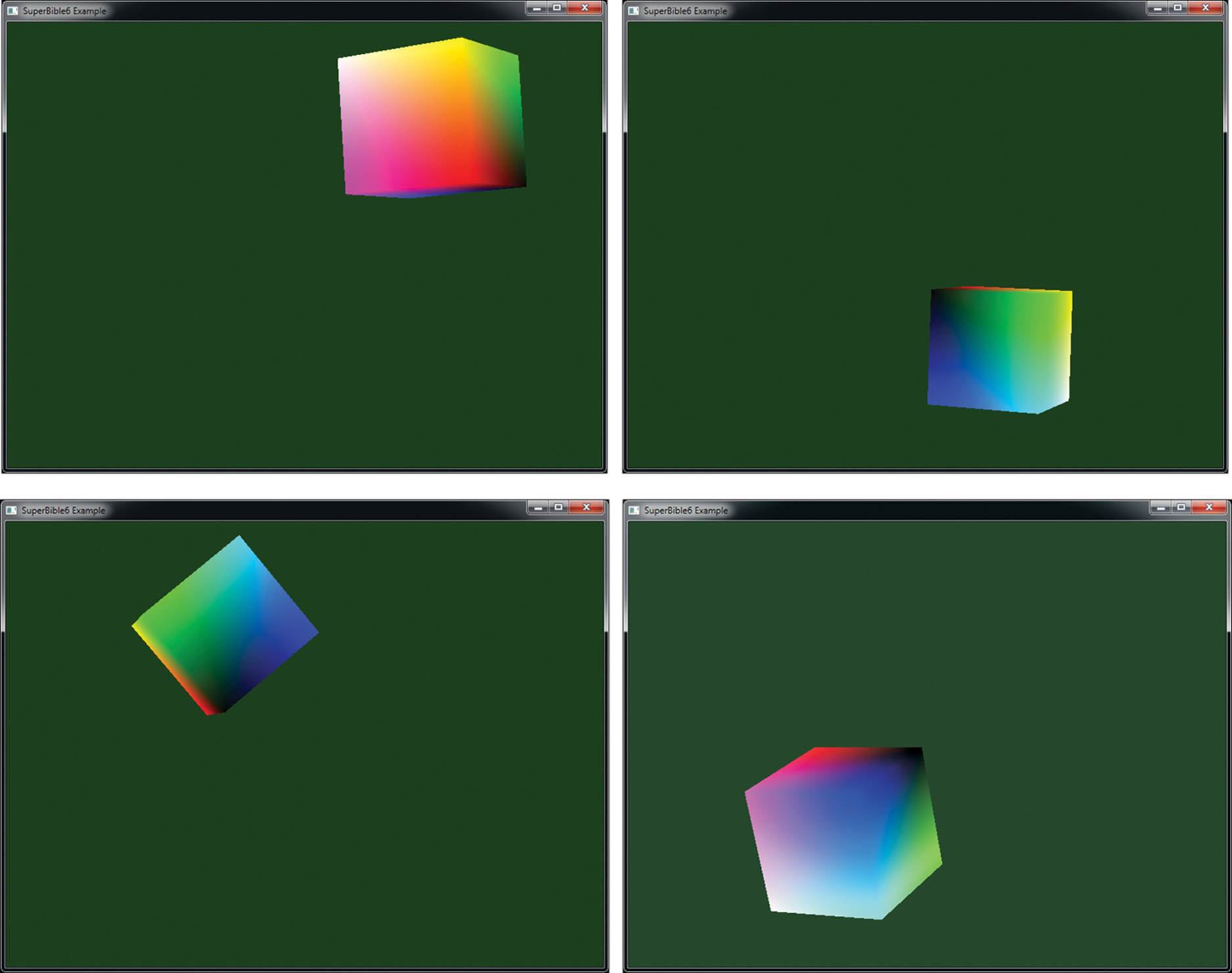

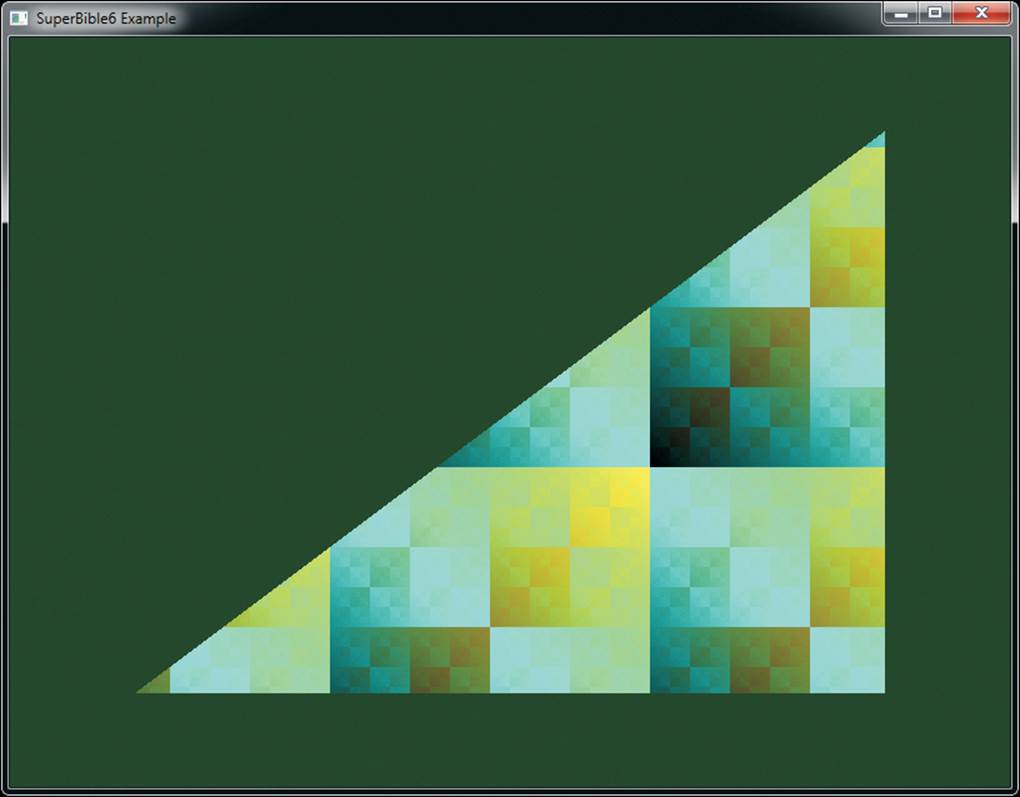

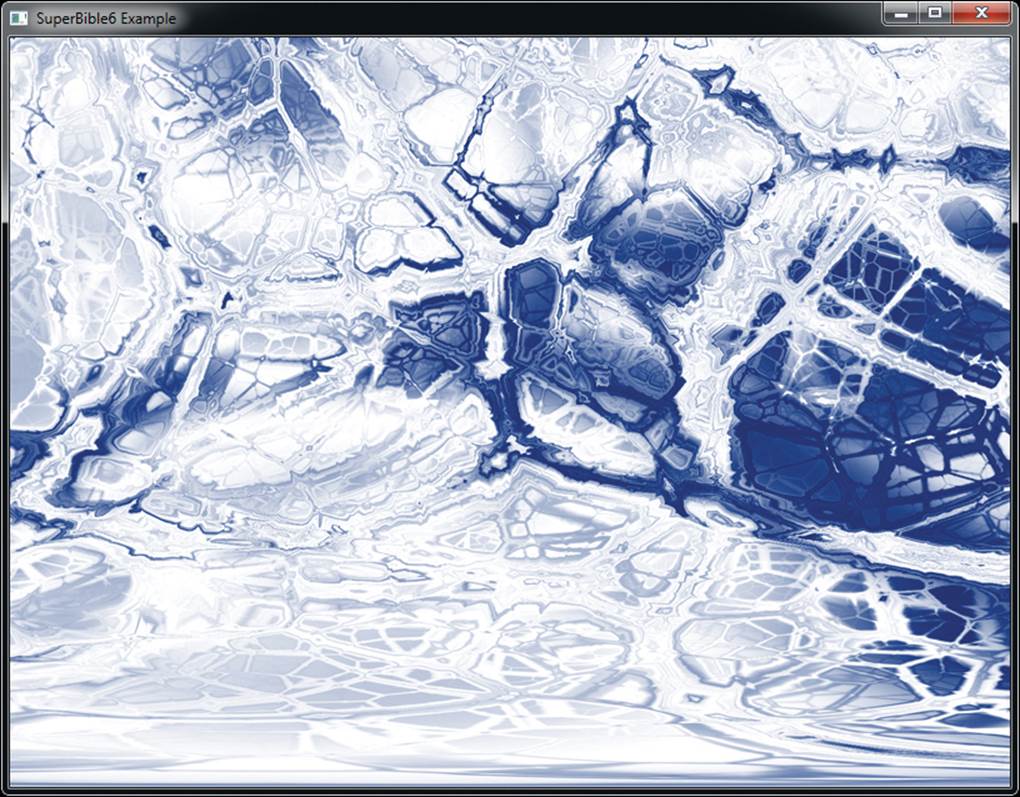

A few frames of the resulting application are shown in Figure 5.2.

Figure 5.2: A few frames from the spinning cube application

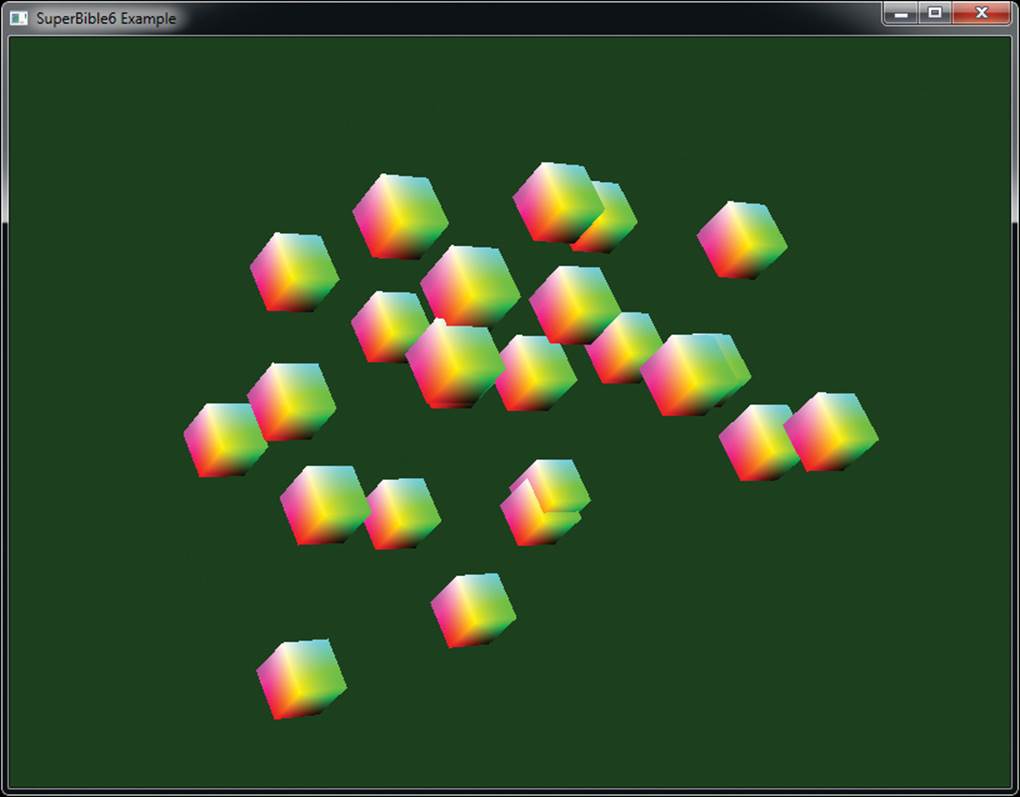

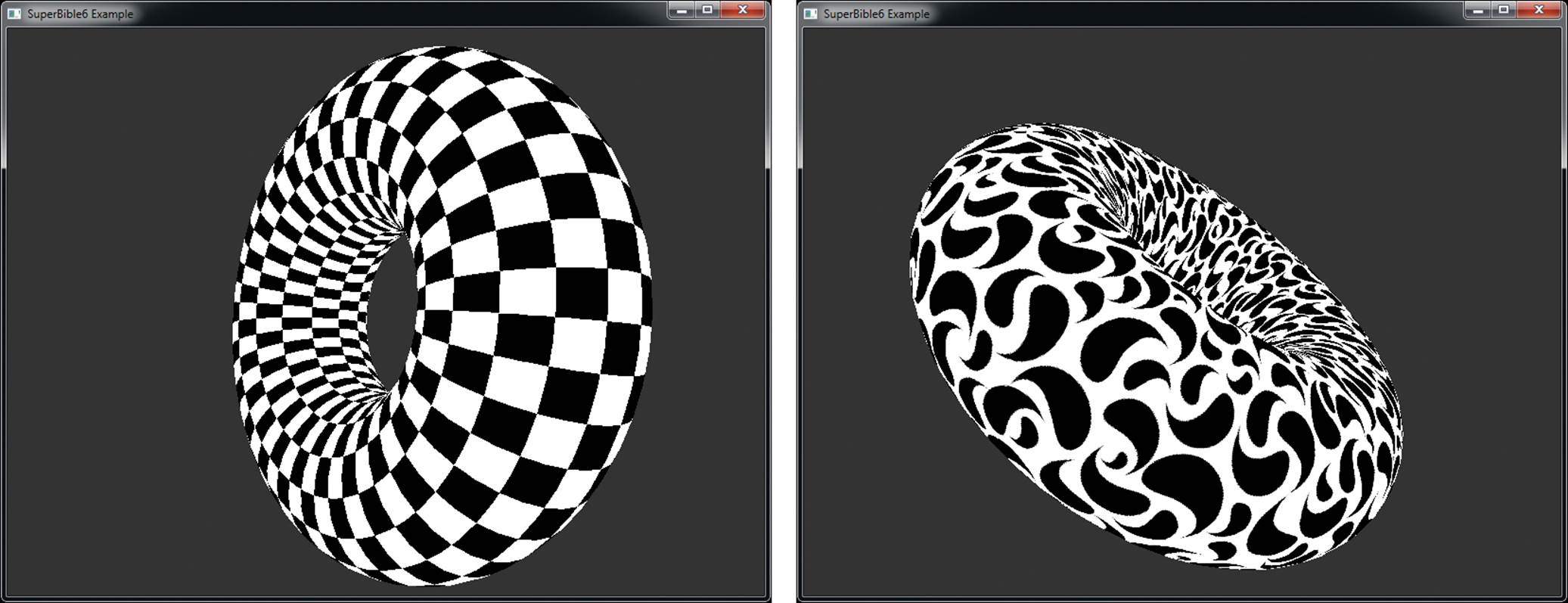

Of course, now that we have our cube geometry in a buffer object and a model-view matrix in a uniform, there’s nothing to stop us from updating the uniform and drawing many copies of the cube in a single frame. In Listing 5.26 we’ve modified the rendering function to calculate a new model-view matrix many times and repeatedly draw our cube. Also, because we’re going to render many cubes in this example, we’ll need to clear the depth buffer before rendering the frame. Although not shown here, we also modified our startup function to enable depth testing and set the depth test function to GL_LEQUAL. The result of rendering with our modified program is shown in Figure 5.3.

Figure 5.3: Many cubes!

// Clear the framebuffer with dark green and clear

// the depth buffer to 1.0

static const GLfloat green[] = { 0.0f, 0.25f, 0.0f, 1.0f };

static const GLfloat one = 1.0f;

glClearBufferfv(GL_COLOR, 0, green);

glClearBufferfv(GL_DEPTH, 0, &one);

// Activate our program

glUseProgram(program);

// Set the model-view and projection matrices

glUniformMatrix4fv(proj_location, 1, GL_FALSE, proj_matrix);

// Draw 24 cubes...

for (i = 0; i < 24; i++)

{

// Calculate a new model-view matrix for each one

float f = (float)i + (float)currentTime * 0.3f;

vmath::mat4 mv_matrix =

vmath::translate(0.0f, 0.0f, -20.0f) *

vmath::rotate((float)currentTime * 45.0f, 0.0f, 1.0f, 0.0f) *

vmath::rotate((float)currentTime * 21.0f, 1.0f, 0.0f, 0.0f) *

vmath::translate(sinf(2.1f * f) * 2.0f,

cosf(1.7f * f) * 2.0f,

sinf(1.3f * f) * cosf(1.5f * f) * 2.0f);

// Update the uniform

glUniformMatrix4fv(mv_location, 1, GL_FALSE, mv_matrix);

// Draw - notice that we haven't updated the projection matrix

glDrawArrays(GL_TRIANGLES, 0, 36);

}

Listing 5.26: Rendering loop for the spinning cube

Shader Storage Blocks

In addition to the read-only access to buffer objects that is provided by uniform blocks, buffer objects can also be used for general storage from shaders using shader storage blocks. These are declared in a similar manner to uniform blocks and backed in the same way by binding a range of buffer objects to one of the indexed GL_SHADER_STORAGE_BUFFER targets. However, the biggest difference between a uniform block and a shader storage block is that your shader can write into the shader storage block and, furthermore, it can even perform atomic operations on members of a shader storage block. Shader storage blocks also have a much higher upper size limit.

To declare a shader storage block, simply declare a block in the shader just like you would a uniform block, but rather than use the uniform keyword, use the buffer qualifier. Like uniform blocks, shader storage blocks support the std140 packing layout qualifier, but also support the std4303packing layout qualifier, which allows arrays of integers and floating-point variables (and structures containing them) to be tightly packed (something that is sorely lacking from std140). This allows better efficiency of memory use and tighter cohesion with structure layouts generated by compilers for languages such as C++. An example shader storage block declaration is shown in Listing 5.27.

3. The std140 and std430 packing layouts are named for the version of the shading language with which they were introduced — std140 with GLSL 1.40 (which was part of OpenGL 3.1), and std430 with GLSL 4.30, which was the version released with OpenGL 4.3.

#version 430 core

struct my_structure

{

int pea;

int carrot;

vec4 potato;

};

layout (binding = 0, std430) buffer my_storage_block

{

vec4 foo;

vec3 bar;

int baz[24];

my_structure veggies;

};

Listing 5.27: Example shader storage block declaration

The members of a shader storage block can be referred to just as any other variable. To read from them, you could, for example use them as a parameter to a function, and to write into them you simply assign to them. When the variable is used in an expression, the source of data will be the buffer object, and when the variable is assigned to, the data will be written into the buffer object. You can place data into the buffer using functions like glBufferData() just as you would with a uniform block. Because the buffer is writable by the shader, if you call glMapBuffer() withGL_READ_ONLY (or GL_READ_WRITE) as the access mode, you will be able read the data produced by your shader.

Shader storage blocks and their backing buffer objects provide additional advantages over uniform blocks. For example, their size is not really limited. Of course, if you go overboard, OpenGL may fail to allocate memory for you, but there really isn’t a hard-wired practical upper limit to the size of a shader storage block. Also, the newer packing rules for std430 allow an application’s data to be more efficiently packed and directly accessed than would a uniform block. It is worth noting, though, that due to the stricter alignment requirements of uniform blocks and smaller minimum size, some hardware may handle uniform blocks differently than shader storage blocks and execute more efficiently when reading from them. Listing 5.28 shows how you might use a shader storage block in place of regular inputs in a vertex shader.

#version 430 core

struct vertex

{

vec4 position;

vec3 color;

};

layout (binding = 0, std430) buffer my_vertices

{

vertex vertices[];

};

uniform mat4 transform_matrix;

out VS_OUT

{

vec3 color;

} vs_out;

void main(void)

{

gl_Position = transform_matrix * vertices[gl_VertexID].position;

vs_out.color = vertices[gl_VertexID].color;

}

Listing 5.28: Using a shader storage block in place of vertex attributes

Although it may seem that shader storage blocks offer so many advantages that they almost make uniform blocks and vertex attributes redundant, you should be aware that all of this additional flexibility makes it difficult for OpenGL to make access to storage blocks truly optimal. For example, some OpenGL implementations may be able to provide faster access to uniform blocks given the knowledge that their content will always be constant. Also, reading the input data for vertex attributes may happen long before your vertex shader runs, letting OpenGL’s memory subsystem keep up. Reading vertex data right in the middle of your shader might well slow it down quite a bit.

Atomic Memory Operations

In addition to simply reading and writing of memory, shader storage blocks allow you to perform atomic operations on memory. An atomic operation is a sequence of a read from memory potentially followed by a write to memory that must be uninterrupted for the result to be correct. Consider a case where two shader invocations perform the operation m = m + 1; using the same memory location represented by m. Each invocation will load the current value stored in the memory location represented by m, add one to it, and then write it back to memory at the same location.

If each invocation operates in lockstep, then we will end up with the wrong value in memory unless the operation can be made atomic. This is because the first invocation will load the value from memory, and then the second invocation will read the same value from memory. Both invocations will increment their copy of the value. The first invocation will write its incremented value back to memory, and then finally, the second invocation will overwrite that value with the same, incremented value that it calculated. This problem only gets worse when there are many more than two invocations running at a time.

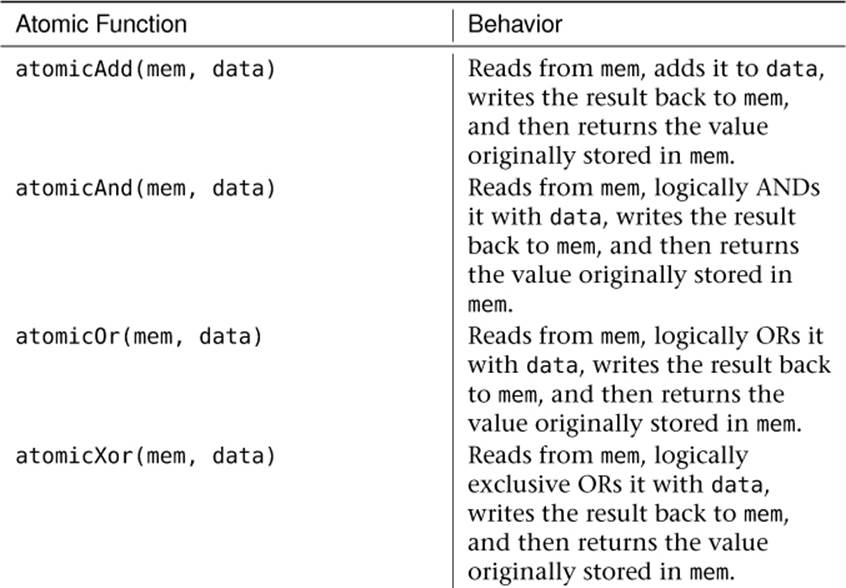

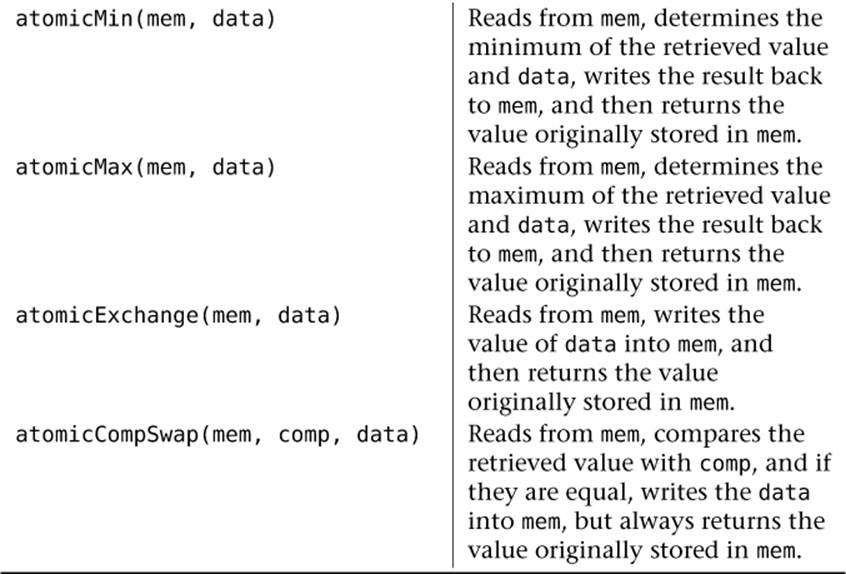

To get around this problem, atomic operations cause the complete read-modify-write cycle to complete for one invocation before any other invocation gets a chance to even read from memory. In theory, if multiple shader invocations perform atomic operations on different memory locations, then everything should run nice and fast and work just as if you had written the naïve m = m + 1; code in your shader. If two invocations access the same memory locations (this is known as contention), then they will be serialized and only one will get to go at one time. To execute an atomic operation on a member of a shader storage block, you call one of the atomic memory functions listed in Table 5.4.

Table 5.4. Atomic Operations on Shader Storage Blocks

In Table 5.4, all of the functions have an integer (int) and unsigned integer (uint) version. For the integer versions, mem is declared as inout int mem, data and comp (for atomicCompSwap) are declared as int data, and int comp and the return value of all functions is int. Likewise, for the unsigned integer versions, all parameters are declared using uint and the return type of the function is uint. Notice that there are no atomic operations on floating-point variables, vectors, or matrices or integer values that are not 32 bits wide. All of the atomic memory access functions shown in Table 5.4 return the value that was in memory prior to the atomic operation taking place. When an atomic operation is attempted by multiple invocations of your shader to the same location at the same time, they are serialized, which means that they take turns. This means that you’re not guaranteed to receive any particular return value of an atomic memory operation.

Synchronizing Access to Memory

When you are only reading from a buffer, data is almost always going to be available when you think it should be and you don’t need to worry about the order in which your shaders read from it. However, when your shader starts writing data into buffer objects, either through writes to variables in shader storage blocks or through explicit calls to the atomic operation functions that might write to memory, there are cases where you need to avoid hazards.

Memory hazards fall roughly into three categories:

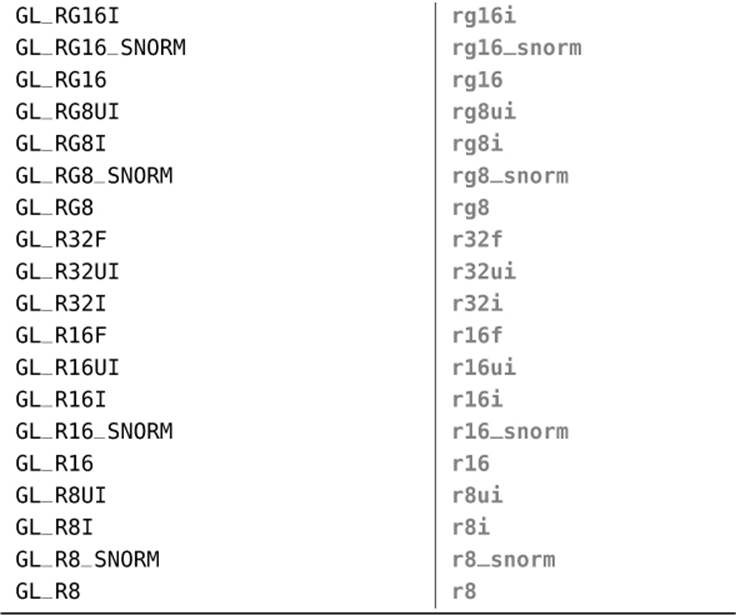

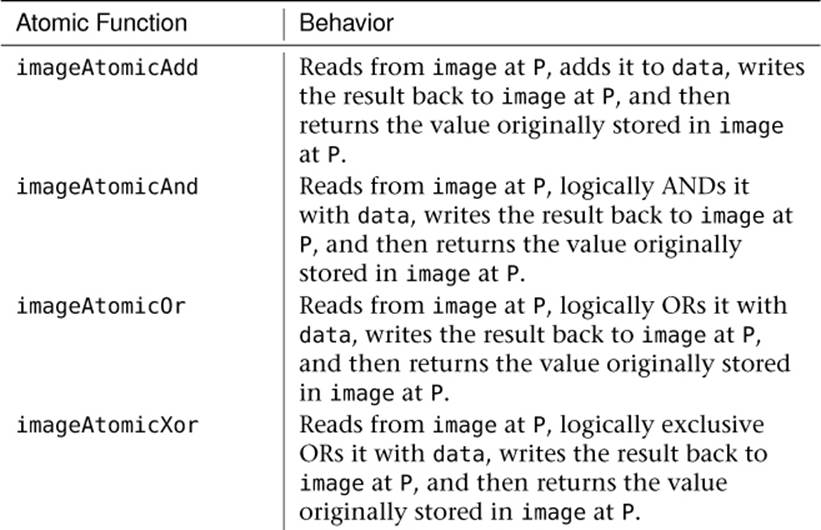

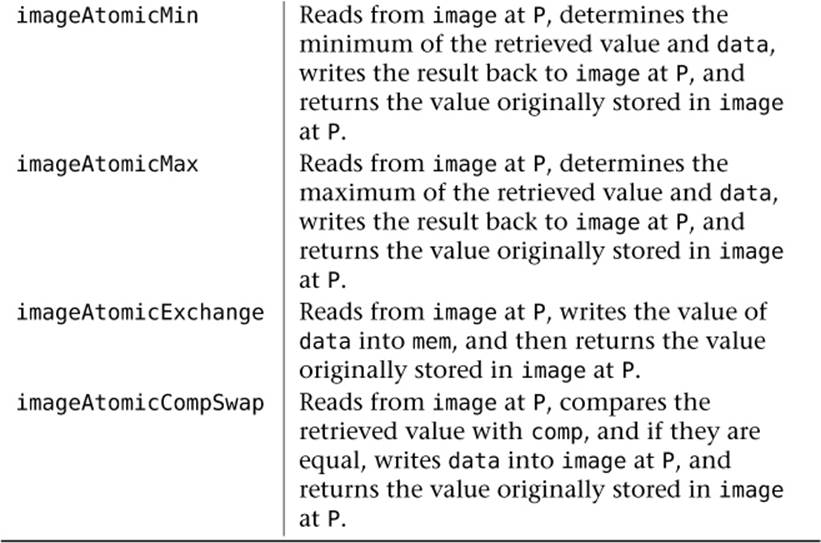

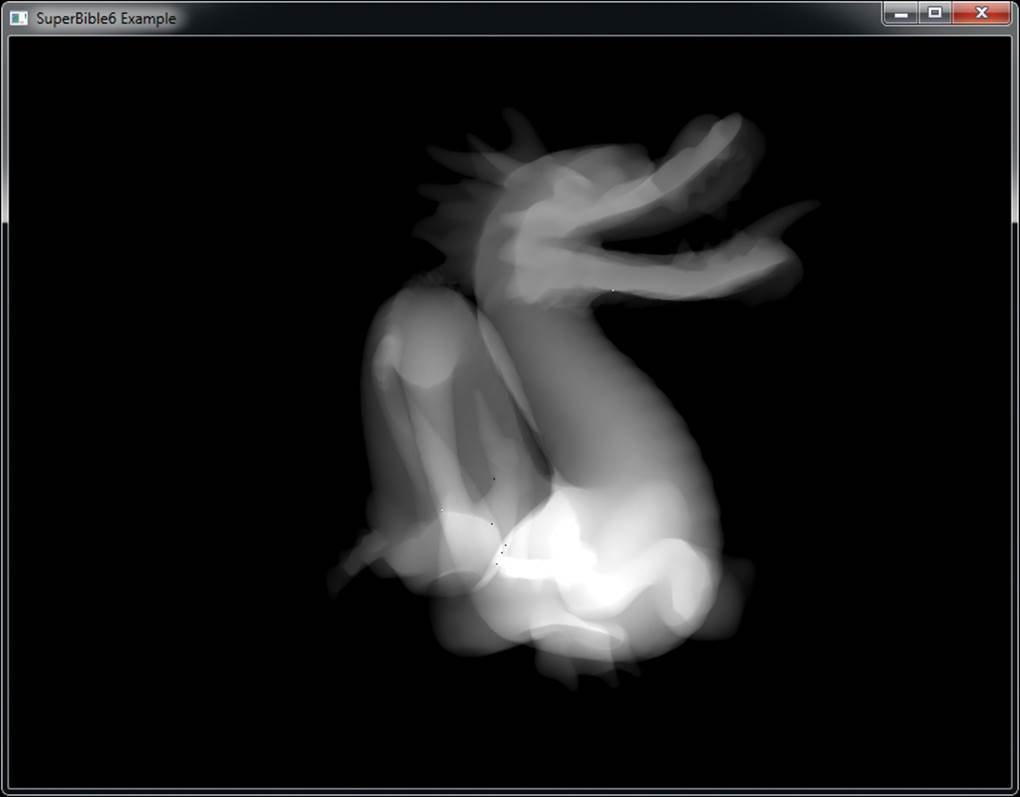

• A Read-After-Write (RAW) hazard can occur when your program attempts to read from a memory location right after it’s written to it. Depending on the system architecture, the read and write may be re-ordered such that the read actually ends up being executed before the write is complete, resulting in the old data being returned to the application.