Functional Programming in Scala (2015)

Part 3. Common structures in functional design

Chapter 12. Applicative and traversable functors

In the previous chapter on monads, we saw how a lot of the functions we’ve been writing for different combinator libraries can be expressed in terms of a single interface, Monad. Monads provide a powerful interface, as evidenced by the fact that we can use flatMap to essentially write imperative programs in a purely functional way.

In this chapter, we’ll learn about a related abstraction, applicative functors, which are less powerful than monads, but more general (and hence more common). The process of arriving at applicative functors will also provide some insight into how to discover such abstractions, and we’ll use some of these ideas to uncover another useful abstraction, traversable functors. It may take some time for the full significance and usefulness of these abstractions to sink in, but you’ll see them popping up again and again in your daily work with FP if you pay attention.

12.1. Generalizing monads

By now we’ve seen various operations, like sequence and traverse, implemented many times for different monads, and in the last chapter we generalized the implementations to work for any monad F:

def sequence[A](lfa: List[F[A]]): F[List[A]]

traverse(lfa)(fa => fa)

def traverse[A,B](as: List[A])(f: A => F[B]): F[List[B]]

as.foldRight(unit(List[B]()))((a, mbs) => map2(f(a), mbs)(_ :: _))

Here, the implementation of traverse is using map2 and unit, and we’ve seen that map2 can be implemented in terms of flatMap:

def map2[A,B,C](ma: F[A], mb: F[B])(f: (A,B) => C): F[C] =

flatMap(ma)(a => map(mb)(b => f(a,b)))

What you may not have noticed is that a large number of the useful combinators on Monad can be defined using only unit and map2. The traverse combinator is one example—it doesn’t call flatMap directly and is therefore agnostic to whether map2 is primitive or derived. Furthermore, for many data types, map2 can be implemented directly, without using flatMap.

All this suggests a variation on Monad—the Monad interface has flatMap and unit as primitives, and derives map2, but we can obtain a different abstraction by letting unit and map2 be the primitives. We’ll see that this new abstraction, called an applicative functor, is less powerful than a monad, but we’ll also see that limitations come with benefits.

12.2. The Applicative trait

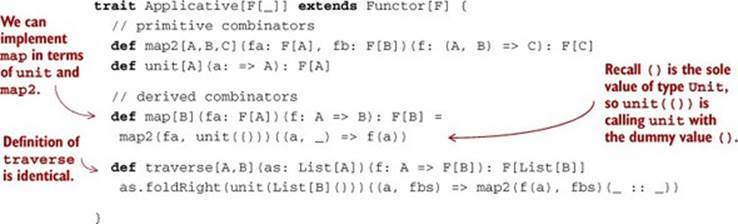

Applicative functors can be captured by a new interface, Applicative, in which map2 and unit are primitives.

Listing 12.1. Creating the Applicative interface

This establishes that all applicatives are functors. We implement map in terms of map2 and unit, as we’ve done before for particular data types. The implementation is suggestive of laws for Applicative that we’ll examine later, since we expect this implementation of map to preserve structure as dictated by the Functor laws.

Note that the implementation of traverse is unchanged. We can similarly move other combinators into Applicative that don’t depend directly on flatMap or join.

Exercise 12.1

![]()

Transplant the implementations of as many combinators as you can from Monad to Applicative, using only map2 and unit, or methods implemented in terms of them.

def sequence[A](fas: List[F[A]]): F[List[A]]

def replicateM[A](n: Int, fa: F[A]): F[List[A]]

def product[A,B](fa: F[A], fb: F[A]): F[(A,B)]

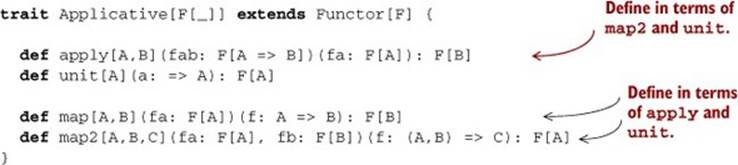

Exercise 12.2

![]()

Hard: The name applicative comes from the fact that we can formulate the Applicative interface using an alternate set of primitives, unit and the function apply, rather than unit and map2. Show that this formulation is equivalent in expressiveness by defining map2 and map in terms ofunit and apply. Also establish that apply can be implemented in terms of map2 and unit.

Exercise 12.3

![]()

The apply method is useful for implementing map3, map4, and so on, and the pattern is straightforward. Implement map3 and map4 using only unit, apply, and the curried method available on functions.[1]

1 Recall that given f: (A,B) => C, f.curried has type A => B => C. A curried method exists for functions of any arity in Scala.

def map3[A,B,C,D](fa: F[A],

fb: F[B],

fc: F[C])(f: (A, B, C) => D): F[D]

def map4[A,B,C,D,E](fa: F[A],

fb: F[B],

fc: F[C],

fd: F[D])(f: (A, B, C, D) => E): F[E]

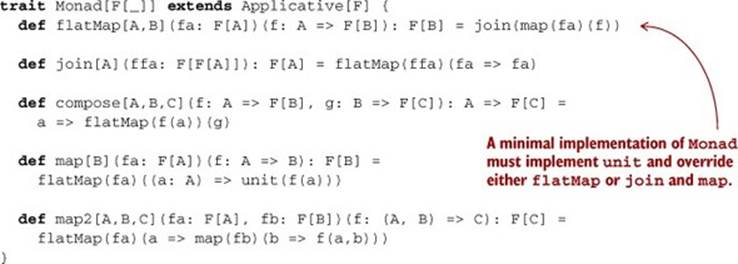

Furthermore, we can now make Monad[F] a subtype of Applicative[F] by providing the default implementation of map2 in terms of flatMap. This tells us that all monads are applicative functors, and we don’t need to provide separate Applicative instances for all our data types that are already monads.

Listing 12.2. Making Monad a subtype of Applicative

So far, we’ve been just rearranging the functions of our API and following the type signatures. Let’s take a step back to understand the difference in expressiveness between Monad and Applicative and what it all means.

12.3. The difference between monads and applicative functors

In the last chapter, we noted there were several minimal sets of operations that defined a Monad:

· unit and flatMap

· unit and compose

· unit, map, and join

Are the Applicative operations unit and map2 yet another minimal set of operations for monads? No. There are monadic combinators such as join and flatMap that can’t be implemented with just map2 and unit. To see convincing proof of this, take a look at join:

def join[A](f: F[F[A]]): F[A]

Just reasoning algebraically, we can see that unit and map2 have no hope of implementing this function. The join function “removes a layer” of F. But the unit function only lets us add an F layer, and map2 lets us apply a function within F but does no flattening of layers. By the same argument, we can see that Applicative has no means of implementing flatMap either.

So Monad is clearly adding some extra capabilities beyond Applicative. But what exactly? Let’s look at some concrete examples.

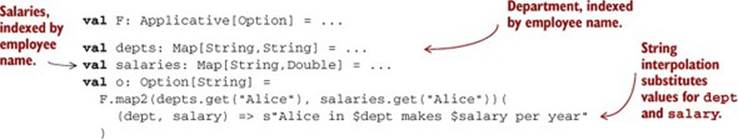

12.3.1. The Option applicative versus the Option monad

Suppose we’re using Option to work with the results of lookups in two Map objects. If we simply need to combine the results from two (independent) lookups, map2 is fine.

Listing 12.3. Combining results with the Option applicative

Here we’re doing two lookups, but they’re independent and we merely want to combine their results within the Option context. If we want the result of one lookup to affect what lookup we do next, then we need flatMap or join, as the following listing shows.

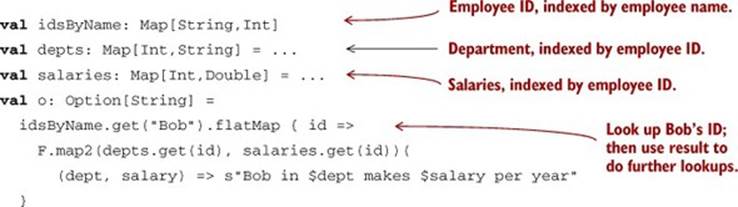

Listing 12.4. Combining results with the Option monad

Here depts is a Map[Int,String] indexed by employee ID, which is an Int. If we want to print out Bob’s department and salary, we need to first resolve Bob’s name to his ID, and then use this ID to do lookups in depts and salaries. We might say that with Applicative, the structure of our computation is fixed; with Monad, the results of previous computations may influence what computations to run next.

“Effects” in FP

Functional programmers often informally call type constructors like Par, Option, List, Parser, Gen, and so on effects. This usage is distinct from the term side effect, which implies some violation of referential transparency. These types are called effects because they augment ordinary values with “extra” capabilities. (Par adds the ability to define parallel computation, Option adds the possibility of failure, and so on.) Related to this usage of effects, we sometimes use the terms monadic effects or applicative effects to mean types with an associated Monad orApplicative instance.

12.3.2. The Parser applicative versus the Parser monad

Let’s look at one more example. Suppose we’re parsing a file of comma-separated values with two columns: date and temperature. Here’s an example file:

1/1/2010, 25

2/1/2010, 28

3/1/2010, 42

4/1/2010, 53

...

If we know ahead of time the file will have the date and temperature columns in that order, we can just encode this order in the Parser we construct:

case class Row(date: Date, temperature: Double)

val F: Applicative[Parser] = ...

val d: Parser[Date] = ...

val temp: Parser[Double] = ...

val row: Parser[Row] = F.map2(d, temp)(Row(_, _))

val rows: Parser[List[Row]] = row.sep("\n")

If we don’t know the order of the columns and need to extract this information from the header, then we need flatMap. Here’s an example file where the columns happen to be in the opposite order:

# Temperature, Date

25, 1/1/2010

28, 2/1/2010

42, 3/1/2010

53, 4/1/2010

...

To parse this format, where we must dynamically choose our Row parser based on first parsing the header (the first line starting with #), we need flatMap:

case class Row(date: Date, temperature: Double)

val F: Monad[Parser] = ...

val d: Parser[Date] = ...

val temp: Parser[Double] = ...

val header: Parser[Parser[Row]] = ...

val rows: Parser[List[Row]] =

F.flatMap (header) { row => row.sep("\n") }

Here we’re parsing the header, which gives us a Parser[Row] as its result. We then use this parser to parse the subsequent rows. Since we don’t know the order of the columns up front, we’re selecting our Row parser dynamically, based on the result of parsing the header.

There are many ways to state the distinction between Applicative and Monad. Of course, the type signatures tell us all we really need to know and we can understand the difference between the interfaces algebraically. But here are a few other common ways of stating the difference:

· Applicative computations have fixed structure and simply sequence effects, whereas monadic computations may choose structure dynamically, based on the result of previous effects.

· Applicative constructs context-free computations, while Monad allows for context sensitivity.[2]

2 For example, a monadic parser allows for context-sensitive grammars while an applicative parser can only handle context-free grammars.

· Monad makes effects first class; they may be generated at “interpretation” time, rather than chosen ahead of time by the program. We saw this in our Parser example, where we generated our Parser[Row] as part of the act of parsing, and used this Parser[Row] for subsequent parsing.

12.4. The advantages of applicative functors

The Applicative interface is important for a few reasons:

· In general, it’s preferable to implement combinators like traverse using as few assumptions as possible. It’s better to assume that a data type can provide map2 than flatMap. Otherwise we’d have to write a new traverse every time we encountered a type that’s Applicative but not a Monad! We’ll look at examples of such types next.

· Because Applicative is “weaker” than Monad, this gives the interpreter of applicative effects more flexibility. To take just one example, consider parsing. If we describe a parser without resorting to flatMap, this implies that the structure of our grammar is determined before we begin parsing. Therefore, our interpreter or runner of parsers has more information about what it’ll be doing up front and is free to make additional assumptions and possibly use a more efficient implementation strategy for running the parser, based on this known structure. AddingflatMap is powerful, but it means we’re generating our parsers dynamically, so the interpreter may be more limited in what it can do. Power comes at a cost. See the chapter notes for more discussion of this issue.

· Applicative functors compose, whereas monads (in general) don’t. We’ll see how this works later.

12.4.1. Not all applicative functors are monads

Let’s look at two examples of data types that are applicative functors but not monads. These are certainly not the only examples. If you do more functional programming, you’ll undoubtedly discover or create lots of data types that are applicative but not monadic.[3]

3 Monadic is the adjective form of monad.

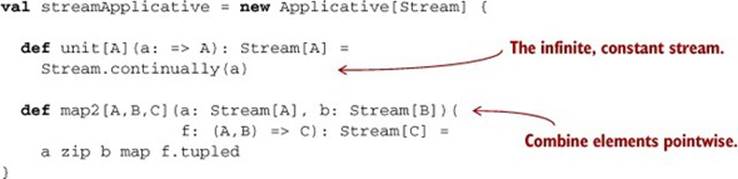

The Applicative for Streams

The first example we’ll look at is (possibly infinite) streams. We can define map2 and unit for these streams, but not flatMap:

The idea behind this Applicative is to combine corresponding elements via zipping.

Exercise 12.4

![]()

Hard: What is the meaning of streamApplicative.sequence? Specializing the signature of sequence to Stream, we have this:

def sequence[A](a: List[Stream[A]]): Stream[List[A]]

Validation: An Either Variant that Accumulates Errors

In chapter 4, we looked at the Either data type and considered the question of how such a data type would have to be modified to allow us to report multiple errors. For a concrete example, think of validating a web form submission. Only reporting the first error means the user would have to repeatedly submit the form and fix one error at a time.

This is the situation with Either if we use it monadically. First, let’s actually write the monad for the partially applied Either type.

Exercise 12.5

![]()

Write a monad instance for Either.

def eitherMonad[E]: Monad[({type f[x] = Either[E, x]})#f]

Now consider what happens in a sequence of flatMap calls like the following, where each of the functions validName, validBirthdate, and validPhone has type Either[String, T] for a given type T:

validName(field1) flatMap (f1 =>

validBirthdate(field2) flatMap (f2 =>

validPhone(field3) map (f3 => WebForm(f1, f2, f3))

If validName fails with an error, then validBirthdate and validPhone won’t even run. The computation with flatMap inherently establishes a linear chain of dependencies. The variable f1 will never be bound to anything unless validName succeeds.

Now think of doing the same thing with map3:

map3(

validName(field1),

validBirthdate(field2),

validPhone(field3))(

WebForm(_,_,_))

Here, no dependency is implied between the three expressions passed to map3, and in principle we can imagine collecting any errors from each Either into a List. But if we use the Either monad, its implementation of map3 in terms of flatMap will halt after the first error.

Let’s invent a new data type, Validation, that is much like Either except that it can explicitly handle more than one error:

sealed trait Validation[+E, +A]

case class Failure[E](head: E, tail: Vector[E] = Vector())

extends Validation[E, Nothing]

case class Success[A](a: A) extends Validation[Nothing, A]

Exercise 12.6

![]()

Write an Applicative instance for Validation that accumulates errors in Failure. Note that in the case of Failure there’s always at least one error, stored in head. The rest of the errors accumulate in the tail.

To continue the example, consider a web form that requires a name, a birth date, and a phone number:

case class WebForm(name: String, birthdate: Date, phoneNumber: String)

This data will likely be collected from the user as strings, and we must make sure that the data meets a certain specification. If it doesn’t, we must give a list of errors to the user indicating how to fix the problem. The specification might say that name can’t be empty, that birthdate must be in the form "yyyy-MM-dd", and that phoneNumber must contain exactly 10 digits.

Listing 12.5. Validating user input in a web form

def validName(name: String): Validation[String, String] =

if (name != "") Success(name)

else Failure("Name cannot be empty")

def validBirthdate(birthdate: String): Validation[String, Date] =

try {

import java.text._

Success((new SimpleDateFormat("yyyy-MM-dd")).parse(birthdate))

} catch {

Failure("Birthdate must be in the form yyyy-MM-dd")

}

def validPhone(phoneNumber: String): Validation[String, String] =

if (phoneNumber.matches("[0-9]{10}"))

Success(phoneNumber)

else Failure("Phone number must be 10 digits")

And to validate an entire web form, we can simply lift the WebForm constructor with map3:

def validWebForm(name: String,

birthdate: String,

phone: String): Validation[String, WebForm] =

map3(

validName(name),

validBirthdate(birthdate),

validPhone(phone))(

WebForm(_,_,_))

If any or all of the functions produce Failure, the whole validWebForm method will return all of those failures combined.

12.5. The applicative laws

This section walks through the laws for applicative functors.[4] For each of these laws, you may want to verify that they’re satisfied by some of the data types we’ve been working with so far (an easy one to verify is Option).

4 There are various other ways of presenting the laws for Applicative. See the chapter notes for more information.

12.5.1. Left and right identity

What sort of laws should we expect applicative functors to obey? Well, we should definitely expect them to obey the functor laws:

map(v)(id) == v

map(map(v)(g))(f) == map(v)(f compose g)

This implies some other laws for applicative functors because of how we’ve implemented map in terms of map2 and unit. Recall the definition of map:

def map[B](fa: F[A])(f: A => B): F[B] =

map2(fa, unit(()))((a, _) => f(a))

Of course, there’s something rather arbitrary about this definition—we could have just as easily put the unit on the left side of the call to map2:

def map[B](fa: F[A])(f: A => B): F[B] =

map2(unit(()), fa)((_, a) => f(a))

The first two laws for Applicative might be summarized by saying that both these implementations of map respect the functor laws. In other words, map2 of some fa: F[A] with unit preserves the structure of fa. We’ll call these the left and right identity laws (shown here in the first and second lines of code, respectively):

map2(unit(()), fa)((_,a) => a) == fa

map2(fa, unit(()))((a,_) => a) == fa

12.5.2. Associativity

To see the next law, associativity, let’s look at the signature of map3:

def map3[A,B,C,D](fa: F[A],

fb: F[B],

fc: F[C])(f: (A, B, C) => D): F[D]

We can implement map3 using apply and unit, but let’s think about how we might define it in terms of map2. We have to combine our effects two at a time, and we seem to have two choices—we can combine fa and fb, and then combine the result with fc. Or we could associate the operation the other way, grouping fb and fc together and combining the result with fa. The associativity law for applicative functors tells us that we should get the same result either way. This should remind you of the associativity laws for monoids and monads:

op(a, op(b, c)) == op(op(a, b), c)

compose(f, op(g, h)) == compose(compose(f, g), h)

The associativity law for applicative functors is the same general idea. If we didn’t have this law, we’d need two versions of map3, perhaps map3L and map3R, depending on the grouping, and we’d get an explosion of other combinators based on having to distinguish between different groupings.

We can state the associativity law in terms of product.[5] Recall that product just combines two effects into a pair, using map2:

5 product, map, and unit are an alternate formulation of Applicative. Can you see how map2 can be implemented using product and map?

def product[A,B](fa: F[A], fb: F[B]): F[(A,B)] =

map2(fa, fb)((_,_))

And if we have pairs nested on the right, we can always turn those into pairs nested on the left:

def assoc[A,B,C](p: (A,(B,C))): ((A,B), C) =

p match { case (a, (b, c)) => ((a,b), c) }

Using these combinators, product and assoc, the law of associativity for applicative functors is as follows:

product(product(fa,fb),fc) == map(product(fa, product(fb,fc)))(assoc)

Note that the calls to product are associated to the left on one side and to the right on the other side of the == sign. On the right side we’re then mapping the assoc function to make the resulting tuples line up.

12.5.3. Naturality of product

Our final law for applicative functors is naturality. To illustrate, let’s look at a simple example using Option.

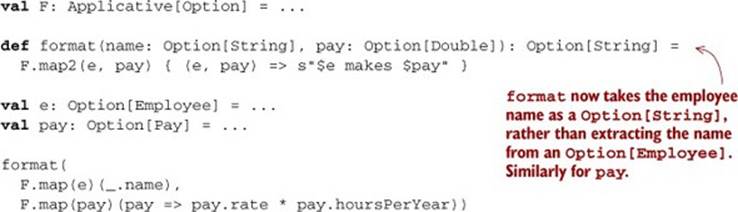

Listing 12.6. Retrieving employee names and annual pay

val F: Applicative[Option] = ...

case class Employee(name: String, id: Int)

case class Pay(rate: Double, hoursPerYear: Double)

def format(e: Option[Employee], pay: Option[Pay]): Option[String] =

F.map2(e, pay) { (e, pay) =>

s"${e.name} makes ${pay.rate * pay.hoursPerYear}"

}

val e: Option[Employee] = ...

val pay: Option[Pay] = ...

format(e, pay)

Here we’re applying a transformation to the result of map2—from Employee we extract the name, and from Pay we extract the yearly wage. But we could just as easily apply these transformations separately, before calling format, giving format an Option [String] andOption[Double] rather than an Option[Employee] and Option[Pay]. This might be a reasonable refactoring, so that format doesn’t need to know the details of how the Employee and Pay data types are represented.

Listing 12.7. Refactoring format

We’re applying the transformation to extract the name and pay fields before calling map2. We expect this program to have the same meaning as before, and this sort of pattern comes up frequently. When working with Applicative effects, we generally have the option of applying transformations before or after combining values with map2. The naturality law states that it doesn’t matter; we get the same result either way. Stated more formally,

map2(a,b)(productF(f,g)) == product(map(a)(f), map(b)(g))

Where productF combines two functions into one function that takes both their arguments and returns the pair of their results:

def productF[I,O,I2,O2](f: I => O, g: I2 => O2): (I,I2) => (O,O2) =

(i,i2) => (f(i), g(i2))

The applicative laws are not surprising or profound. Just like the monad laws, these are simple sanity checks that the applicative functor works in the way that we’d expect. They ensure that unit, map, and map2 behave in a consistent and reasonable manner.

Exercise 12.7

![]()

Hard: Prove that all monads are applicative functors by showing that if the monad laws hold, the Monad implementations of map2 and map satisfy the applicative laws.

Exercise 12.8

![]()

Just like we can take the product of two monoids A and B to give the monoid (A, B), we can take the product of two applicative functors. Implement this function:

def product[G[_]](G: Applicative[G]):

Applicative[({type f[x] = (F[x], G[x])})#f]

Exercise 12.9

![]()

Hard: Applicative functors also compose another way! If F[_] and G[_] are applicative functors, then so is F[G[_]]. Implement this function:

def compose[G[_]](G: Applicative[G]):

Applicative[({type f[x] = F[G[x]]})#f]

Exercise 12.10

![]()

Hard: Prove that this composite applicative functor meets the applicative laws. This is an extremely challenging exercise.

Exercise 12.11

![]()

Try to write compose on Monad. It’s not possible, but it is instructive to attempt it and understand why this is the case.

def compose[G[_]](G: Monad[G]): Monad[({type f[x] = F[G[x]]})#f]

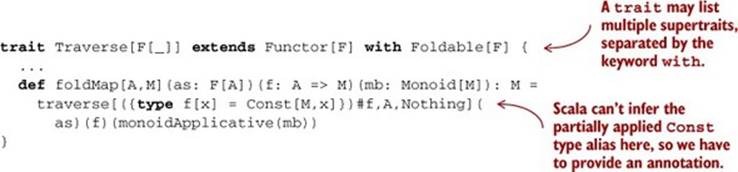

12.6. Traversable functors

We discovered applicative functors by noticing that our traverse and sequence functions (and several other operations) didn’t depend directly on flatMap. We can spot another abstraction by generalizing traverse and sequence once again. Look again at the signatures of traverseand sequence:

def traverse[F[_],A,B](as: List[A])(f: A => F[B]): F[List[B]]

def sequence[F[_],A](fas: List[F[A]]): F[List[A]]

Any time you see a concrete type constructor like List showing up in an abstract interface like Applicative, you may want to ask the question, “What happens if I abstract over this type constructor?” Recall from chapter 10 that a number of data types other than List are Foldable. Are there data types other than List that are traversable? Of course!

Exercise 12.12

![]()

On the Applicative trait, implement sequence over a Map rather than a List:

def sequenceMap[K,V](ofa: Map[K,F[V]]): F[Map[K,V]]

But traversable data types are too numerous for us to write specialized sequence and traverse methods for each of them. What we need is a new interface. We’ll call it Traverse:[6]

6 The name Traversable is already taken by an unrelated trait in the Scala standard library.

trait Traverse[F[_]] {

def traverse[G[_]:Applicative,A,B](fa: F[A])(f: A => G[B]): G[F[B]] =

sequence(map(fa)(f))

def sequence[G[_]:Applicative,A](fga: F[G[A]]): G[F[A]] =

traverse(fga)(ga => ga)

}

The interesting operation here is sequence. Look at its signature closely. It takes F[G[A]] and swaps the order of F and G, so long as G is an applicative functor. Now, this is a rather abstract, algebraic notion. We’ll get to what it all means in a minute, but first, let’s look at a few instances ofTraverse.

Exercise 12.13

![]()

Write Traverse instances for List, Option, and Tree.

case class Tree[+A](head: A, tail: List[Tree[A]])

We now have instances for List, Option, Map, and Tree. What does this generalized traverse/sequence mean? Let’s just try plugging in some concrete type signatures for calls to sequence. We can speculate about what these functions do, just based on their signatures:

· List[Option[A]] => Option[List[A]] (a call to Traverse[List].sequence with Option as the Applicative) returns None if any of the input List is None; otherwise it returns the original List wrapped in Some.

· Tree[Option[A]] => Option[Tree[A]] (a call to Traverse[Tree].sequence with Option as the Applicative) returns None if any of the input Tree is None; otherwise it returns the original Tree wrapped in Some.

· Map[K, Par[A]] => Par[Map[K,A]] (a call to Traverse[Map[K,_]].sequence with Par as the Applicative) produces a parallel computation that evaluates all values of the map in parallel.

There turns out to be a startling number of operations that can be defined in the most general possible way in terms of sequence and/or traverse. We’ll explore these in the next section.

A traversal is similar to a fold in that both take some data structure and apply a function to the data within in order to produce a result. The difference is that traverse preserves the original structure, whereas foldMap discards the structure and replaces it with the operations of a monoid. Look at the signature Tree[Option[A]] => Option[Tree[A]], for instance. We’re preserving the Tree structure, not merely collapsing the values using some monoid.

12.7. Uses of Traverse

Let’s now explore the large set of operations that can be implemented quite generally using Traverse. We’ll only scratch the surface here. If you’re interested, follow some of the references in the chapter notes to learn more, and do some exploring on your own.

Exercise 12.14

![]()

Hard: Implement map in terms of traverse as a method on Traverse[F]. This establishes that Traverse is an extension of Functor and that the traverse function is a generalization of map (for this reason we sometimes call these traversable functors). Note that in implementing map, you can call traverse with your choice of Applicative[G].

trait Traverse[F[_]] extends Functor[F] {

def traverse[G[_],A,B](fa: F[A])(f: A => G[B])(

implicit G: Applicative[G]): G[F[B]] =

sequence(map(fa)(f))

def sequence[G[_],A](fga: F[G[A]])(

implicit G: Applicative[G]): G[F[A]] =

traverse(fga)(ga => ga)

def map[A,B](fa: F[A])(f: A => B): F[B] = ???

}

But what is the relationship between Traverse and Foldable? The answer involves a connection between Applicative and Monoid.

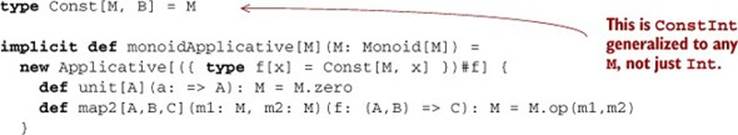

12.7.1. From monoids to applicative functors

We’ve just learned that traverse is more general than map. Next we’ll learn that traverse can also express foldMap and by extension foldLeft and foldRight! Take another look at the signature of traverse:

def traverse[G[_]:Applicative,A,B](fa: F[A])(f: A => G[B]): G[F[B]]

Suppose that our G were a type constructor ConstInt that takes any type to Int, so that ConstInt[A] throws away its type argument A and just gives us Int:

type ConstInt[A] = Int

Then in the type signature for traverse, if we instantiate G to be ConstInt, it becomes

def traverse[A,B](fa: F[A])(f: A => Int): Int

This looks a lot like foldMap from Foldable. Indeed, if F is something like List, then what we need to implement this signature is a way of combining the Int values returned by f for each element of the list, and a “starting” value for handling the empty list. In other words, we only need a Monoid[Int]. And that’s easy to come by.

In fact, given a constant functor like we have here, we can turn any Monoid into an Applicative.

Listing 12.8. Turning a Monoid into an Applicative

This means that Traverse can extend Foldable and we can give a default implementation of foldMap in terms of traverse:

Note that Traverse now extends both Foldable and Functor! Importantly, Foldable itself can’t extend Functor. Even though it’s possible to write map in terms of a fold for most foldable data structures like List, it’s not possible in general.

Exercise 12.15

![]()

Answer, to your own satisfaction, the question of why it’s not possible for Foldable to extend Functor. Can you think of a Foldable that isn’t a functor?

So what is Traverse really for? We’ve already seen practical applications of particular instances, such as turning a list of parsers into a parser that produces a list. But in what kinds of cases do we want the generalization? What sort of generalized library does Traverse allow us to write?

12.7.2. Traversals with State

The State applicative functor is a particularly powerful one. Using a State action to traverse a collection, we can implement complex traversals that keep some kind of internal state.

An unfortunate amount of type annotation is necessary in order to partially apply State in the proper way, but traversing with State is common enough that we can create a special method for it and write those type annotations once and for all:

def traverseS[S,A,B](fa: F[A])(f: A => State[S, B]): State[S, F[B]] =

traverse[({type f[x] = State[S,x]})#f,A,B](fa)(f)(Monad.stateMonad)

To demonstrate this, here’s a State traversal that labels every element with its position. We keep an integer state, starting with 0, and add 1 at each step.

Listing 12.9. Numbering the elements in a traversable

def zipWithIndex[A](ta: F[A]): F[(A,Int)] =

traverseS(ta)((a: A) => (for {

i <- get[Int]

_ <- set(i + 1)

} yield (a, i))).run(0)._1

This definition works for List, Tree, or any other traversable.

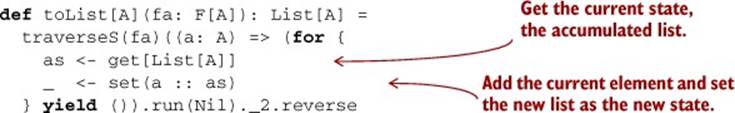

Continuing along these lines, we can keep a state of type List[A], to turn any traversable functor into a List.

Listing 12.10. Turning traversable functors into lists

We begin with the empty list Nil as the initial state, and at every element in the traversal, we add it to the front of the accumulated list. This will of course construct the list in the reverse order of the traversal, so we end by reversing the list that we get from running the completed state action. Note that we yield () because in this instance we don’t want to return any value other than the state.

Of course, the code for toList and zipWithIndex is nearly identical. And in fact most traversals with State will follow this exact pattern: we get the current state, compute the next state, set it, and yield some value. We should capture that in a function.

Listing 12.11. Factoring out our mapAccum function

def mapAccum[S,A,B](fa: F[A], s: S)(f: (A, S) => (B, S)): (F[B], S) =

traverseS(fa)((a: A) => (for {

s1 <- get[S]

(b, s2) = f(a, s1)

_ <- set(s2)

} yield b)).run(s)

override def toList[A](fa: F[A]): List[A] =

mapAccum(fa, List[A]())((a, s) => ((), a :: s))._2.reverse

def zipWithIndex[A](fa: F[A]): F[(A, Int)] =

mapAccum(fa, 0)((a, s) => ((a, s), s + 1))._1

Exercise 12.16

![]()

There’s an interesting consequence of being able to turn any traversable functor into a reversed list—we can write, once and for all, a function to reverse any traversable functor! Write this function, and think about what it means for List, Tree, and other traversable functors.

def reverse[A](fa: F[A]): F[A]

It should obey the following law, for all x and y of the appropriate types:

toList(reverse(x)) ++ toList(reverse(y)) ==

reverse(toList(y) ++ toList(x))

Exercise 12.17

![]()

Use mapAccum to give a default implementation of foldLeft for the Traverse trait.

12.7.3. Combining traversable structures

It’s the nature of a traversal that it must preserve the shape of its argument. This is both its strength and its weakness. This is well demonstrated when we try to combine two structures into one.

Given Traverse[F], can we combine a value of some type F[A] and another of some type F[B] into an F[C]? We could try using mapAccum to write a generic version of zip.

Listing 12.12. Combining two different structure types

def zip[A,B](fa: F[A], fb: F[B]): F[(A, B)] =

(mapAccum(fa, toList(fb)) {

case (a, Nil) => sys.error("zip: Incompatible shapes.")

case (a, b :: bs) => ((a, b), bs)

})._1

Note that this version of zip is unable to handle arguments of different “shapes.” For example, if F is List, then it can’t handle lists of different lengths. In this implementation, the list fb must be at least as long as fa. If F is Tree, then fb must have at least the same number of branches asfa at every level.

We can change the generic zip slightly and provide two versions so that the shape of one side or the other is dominant.

Listing 12.13. A more flexible implementation of zip

def zipL[A,B](fa: F[A], fb: F[B]): F[(A, Option[B])] =

(mapAccum(fa, toList(fb)) {

case (a, Nil) => ((a, None), Nil)

case (a, b :: bs) => ((a, Some(b)), bs)

})._1

def zipR[A,B](fa: F[A], fb: F[B]): F[(Option[A], B)] =

(mapAccum(fb, toList(fa)) {

case (b, Nil) => ((None, b), Nil)

case (b, a :: as) => ((Some(a), b), as)

})._1

These implementations work out nicely for List and other sequence types. In the case of List, for example, the result of zipR will have the shape of the fb argument, and it will be padded with None on the left if fb is longer than fa.

For types with more interesting structures, like Tree, these implementations may not be what we want. Note that in zipL, we’re simply flattening the right argument to a List[B] and discarding its structure. For Tree, this will amount to a preorder traversal of the labels at each node. We’re then “zipping” this sequence of labels with the values of our left Tree, fa; we aren’t skipping over nonmatching subtrees. For trees, zipL and zipR are most useful if we happen to know that both trees share the same shape.

12.7.4. Traversal fusion

In chapter 5, we talked about how multiple passes over a structure can be fused into one. In chapter 10, we looked at how we can use monoid products to carry out multiple computations over a foldable structure in a single pass. Using products of applicative functors, we can likewise fuse multiple traversals of a traversable structure.

Exercise 12.18

![]()

Use applicative functor products to write the fusion of two traversals. This function will, given two functions f and g, traverse fa a single time, collecting the results of both functions at once.

def fuse[G[_],H[_],A,B](fa: F[A])(f: A => G[B], g: A => H[B])

(G: Applicative[G], H: Applicative[H]):

(G[F[B]], H[F[B]])

12.7.5. Nested traversals

Not only can we use composed applicative functors to fuse traversals, traversable functors themselves compose. If we have a nested structure like Map[K,Option[List[V]]], then we can traverse the map, the option, and the list at the same time and easily get to the V value inside, becauseMap, Option, and List are all traversable.

Exercise 12.19

![]()

Implement the composition of two Traverse instances.

def compose[G[_]](implicit G: Traverse[G]):

Traverse[({type f[x] = F[G[x]]})#f]

12.7.6. Monad composition

Let’s now return to the issue of composing monads. As we saw earlier in this chapter, Applicative instances always compose, but Monad instances do not. If you tried before to implement general monad composition, then you would have found that in order to implement join for nested monads F and G, you’d have to write something of a type like F[G[F[G[A]]]] => F[G[A]]. And that can’t be written generally. But if G also happens to have a Traverse instance, we can sequence to turn G[F[_]] into F[G[_]], leading to F[F[G[G[A]]]]. Then we can join the adjacent F layers as well as the adjacent G layers using their respective Monad instances.

Exercise 12.20

![]()

Hard: Implement the composition of two monads where one of them is traversable.

def composeM[F[_],G[_]](F: Monad[F], G: Monad[G], T: Traverse[G]):

Monad[({type f[x] = F[G[x]]})#f]

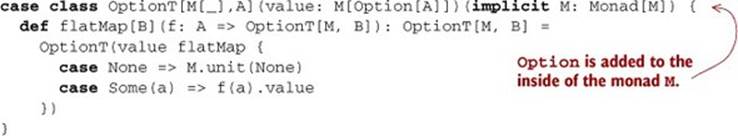

Expressivity and power sometimes come at the price of compositionality and modularity. The issue of composing monads is often addressed with a custom-written version of each monad that’s specifically constructed for composition. This kind of thing is called a monad transformer. For example, the OptionT monad transformer composes Option with any other monad:

The flatMap definition here maps over both M and Option, and flattens structures like M[Option[M[Option[A]]]] to just M[Option[A]]. But this particular implementation is specific to Option. And the general strategy of taking advantage of Traverse works only with traversable functors. To compose with State (which can’t be traversed), for example, a specialized StateT monad transformer has to be written. There’s no generic composition strategy that works for every monad.

See the chapter notes for more information about monad transformers.

12.8. Summary

In this chapter, we discovered two new useful abstractions, Applicative and Traverse, simply by playing with the signatures of our existing Monad interface. Applicative functors are a less expressive but more compositional generalization of monads. The functions unit and map allow us to lift values and functions, whereas map2 and apply give us the power to lift functions of higher arities. Traversable functors are the result of generalizing the sequence and traverse functions we’ve seen many times. Together, Applicative and Traverse let us construct complex nested and parallel traversals out of simple elements that need only be written once. As you write more functional code, you’ll learn to spot instances of these abstractions and how to make better use of them in your programs.

This is the final chapter in part 3, but there are many abstractions beyond Monad, Applicative, and Traverse, and you can apply the techniques we’ve developed here to discover new structures yourself. Functional programmers have of course been discovering and cataloguing for a while, and there is by now a whole zoo of such abstractions that captures various common patterns (arrows, categories, and comonads, just to name a few). Our hope is that these chapters have given you enough of an introduction to start exploring this wide world on your own. The material linked in the chapter notes is a good place to start.

In part 4 we’ll complete the functional programming story. So far we’ve been writing libraries that might constitute the core of a practical application, but such applications will ultimately need to interface with the outside world. In part 4 we’ll see that referential transparency can be made to apply even to programs that perform I/O operations or make use of mutable state. Even there, the principles and patterns we’ve learned so far allow us to write such programs in a compositional and reusable way.