Computer Science: A Very Short Introduction (2016)

Chapter 4 The art, science, and engineering of programming

To repeat, algorithmic thinking is central to computer science. Yet, algorithms are abstract artefacts. Computer scientists can live quite contentedly (if they so desired) in the rarified world of algorithms and never venture into the ‘real world’, much as ‘pure’ mathematicians might do. But if we desire real, physical computers to carry out computations on our behalf, if we want physical computers to not only do the kinds of computations we find too tedious (though necessary) but also those that are beyond our normal cognitive capacities, then algorithmic thinking alone does not suffice. They must be implemented in a form that can be communicated to physical computers, interpreted, and executed by them on their own terms rather than on human terms.

This is where programming enters the computing scene. A computer program is the specification of a desired computation in a language communicable to physical computers. The act of constructing such computations is called programming and the languages for specifying programs are called programming languages.

Programs are liminal artefacts

The concept of a program is elusive, subtle, and rather strange. For one thing, as I explain shortly, the same computation can be described at several abstraction levels depending on the language in which it is expressed, thus allowing for multiple equivalent programs. Secondly, a program is Janus-faced: on the one hand the program is a piece of static text, that is, a symbol structure that has all the characteristics of an abstract artefact. On the other hand, a program is a dynamic process—that is, it causes things to happen within a physical computer, and such processes consume physical time and physical space; thus it has a material substrate upon which it acts. Moreover, it requires a material medium for it to work.

Thus programs have an abstract and a material face, and for this, we may call programs liminal artefacts. The consequences of this liminality are both huge and controversial.

First, within the computer science community, some are drawn to the abstractness of programs and they hold the view that programs are mathematical objects. To them, programming is a kind of mathematical activity involving axioms, definitions, theorems, and proofs. Other computer scientists insist on its material facet and hold that programs are empirical objects. Programming to them is an empirical engineering activity involving the classical engineering tasks of specification of requirements, design, implementation, and conducting experiments that test and evaluate the resulting artefacts.

Secondly, the analogy between programs and the mind is often drawn. If a program is a liminal artificial object, the mind is a liminal natural object. On the one hand, mental (or cognitive) processes such as remembering, thinking, perceiving, planning, language understanding and mastering, etc., can be (and have been for centuries) examined as if the mind is a purely abstract thing interacting autonomously with the ‘real’ world. Yet the mind has a ‘seat’. Unless one is an unrepentant dualist who completely separates mind from body, one does not believe that the mind can exist outside the brain—a physical object. And so, while some philosophers and cognitive scientists study the mind as if it is an abstract entity, neuroscientists seek strictly physical explanations of mental phenomena in terms of brain processes.

Indeed, the scientific study of cognition has been profoundly influenced by the analogy of mind with programs. One consequence has been the development of the branch of computer science called artificial intelligence (AI) which attempts to create mind-like and brain-like computational artefacts. Another has been the transformation of cognitive psychology into a broader discipline called cognitive science at the core of which is the hypothesis that mental processes can be modelled as program-like computations.

Thus, the intellectual influence of computer science has extended well beyond the discipline itself. Much as the Darwinian theory of evolution has extended its reach beyond biology so also because of the mind–program analogy computer science’s influence has gone beyond computing itself. Or rather (as we will see in a later chapter) the very idea of computing has extended well beyond the scope of physical computers and automatic computation. I think it is fair to say that few sciences of the artificial have had such intellectual consequences outside their own domains.

Yet another consequence of the liminality of programs is that programs and programming are inexorably entwined with artificial languages called programming languages. One can design algorithms using natural language perhaps augmented with some artificial notation (as is seen in the case of the algorithms presented in Chapter 3). But no one can become a programmer without mastering at least one programming language. A pithy (if only rough) formula could well be:

![]()

This itself has several implications.

One is the development of the theory of programming languages as a branch of computer science. Inevitably, this has led to a relationship between this theory and the science of linguistics, which is concerned with the structure of natural languages.

A second implication is the never-ending quest for the ‘dream’ programming language which can do what is required of any language better than any of its predecessors or its contemporary competitors. This is the activity of language design. The challenge to language designers is twofold: to facilitate communication of computations to physical computers which could then execute these computations with minimal human intervention; and also to facilitate communication with other human beings so that they can comprehend the computation, analyse it, criticize it, and offer suggestions for improvement, just as people do with any text. This dual challenge has been the source of an abiding obsession of computer scientists with programming and other languages of computation.

Closely related to language design is a third outcome: the study and development of programs called compilers that translate programs written in a programming language into the machine code of particular physical computers. Compiler design and implementation is yet another branch of computer science.

Finally, there has been the effort to design features of physical computers that facilitate the compiler’s task. This activity is called ‘language-oriented computer design’ and has historically been of great interest within the branch of computer science called computer architecture (see Chapter 5).

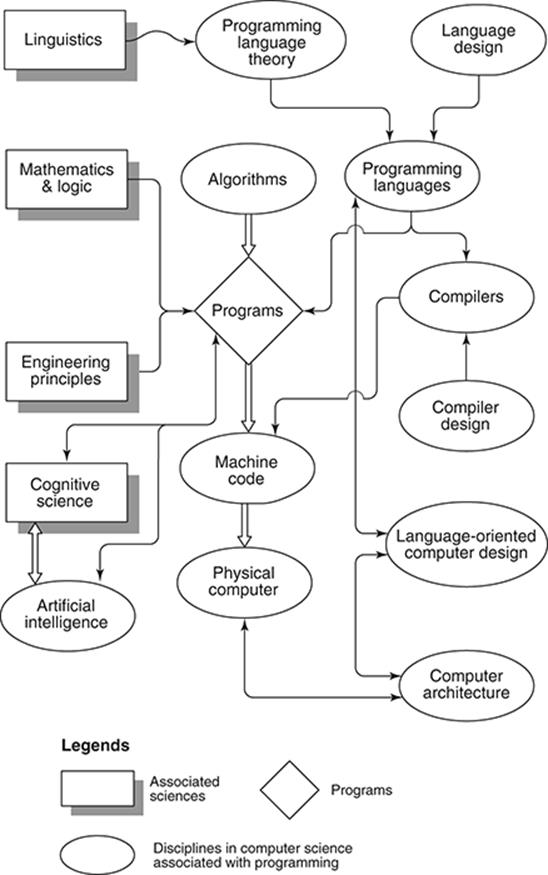

Figure 3 shows schematically the many relationships of programs and programming with these other entities and disciplines. The entities enclosed in rectangles are contributing disciplines outside computer science; the entities enclosed in ovals are disciplines within computer science.

3. Programming, related disciplines, and associated sciences.

Language, thought, reality, and programming

Notice, I say ‘language’ in the preceding section, not ‘notation’. Notation refers to symbols invented for writing about something. Thus, chemical or mathematical notation. Language goes beyond notation in that it affords a symbol system for thinking about something. Language is embroiled with thought itself.

There is a famous issue which linguists and anthropologists have debated over: is thought defined by language? There are those who assert that this is so, that the language we use determines the way we think about the world and what we think about, indeed, that it defines our conceptualization of reality itself. Its most extreme implication is that since language is a defining element of culture, thoughts, percepts or concepts are not translatable from one language culture to another. We are each trapped in our own language-defined reality. A very post-modern condition. Others take the more moderate view that language influences, but does not define, how we think about the world. The proposition that language defines or influences thought is called the Sapir–Whorf hypothesis after the anthropological linguists Edward Sapir and Benjamin Lee Whorf who developed it. (It is also called the ‘principle of linguistic relativity’.)

The Sapir–Whorf hypothesis addressed natural languages. Our concern is with artificial languages, specifically those invented to express computations. No one to my knowledge has framed an analogue to the Sapir–Whorf hypothesis for the computational realm but the obsession computer scientists have shown from the earliest days of electronic computers with programming and other computational languages strongly suggest that some form of the hypothesis is tacitly accepted by the computer science community. More accurately, we may say with some confidence that the languages of computing (in particular, programming) are intimately entwined with the nature of the computing environment; and that programming languages influence programmers’ mentality.

So let us consider, first, programming languages. The nature of programs will naturally emerge from this discussion.

Programming languages as abstract artefacts

It was mentioned earlier that a computation can be specified as programs at different abstraction levels that vary in ‘distance’ from the physical computers that can execute the programs. Correspondingly, programming languages can be conceived at different abstraction levels. A crude dichotomy separates high-level from low-level languages. The former enable programs to be written independent of all physical computers that would execute them, and the latter refers to languages designed with respect to specific families of computers or even more specifically to a particular computer.

Thus, high-level languages are ‘machine-independent’ and low-level ones are ‘machine-dependent’ with the caveat that the degree of independence or dependence may well vary. The lowest level languages are called assembly languages and they are so specific to particular (family of, or individual) physical computers that the assembly language programmer literally manipulates the features of the computers themselves.

There was a time in the history of computing when almost all programming was done using assembly languages. Such programs were still symbol structures (and to a very moderate extent abstract) but translators called ‘assemblers’ (themselves programs) would convert them into the target computers’ machine code. However, because of the tedium, difficulty, amount of human time required, and error-proneness of assembly language programming the focus shifted to the invention and design of increasingly higher level, machine-independent programming languages, and the task of translating programs in such languages into executable machine code for specific computers was delegated to compilers.

In this chapter hereafter, and in the remainder of this book, unless stated explicitly, the term ‘programming language’ will always refer to high-level languages.

Programming languages, in contrast to natural ones, are invented or designed. They are, thus, artefacts. They entail the use of notation as symbols. As we will see, a programming language is actually a set of symbol structures and, being independent of physical computers, are abstract in exactly the same sense that algorithms are abstract. We thus have the curious situation that while programs written in such languages are liminal, the languages of programming themselves are abstract.

Language = notation + concepts & categories

Let us revisit the notation/language distinction. The world of computing is populated by hundreds of computational languages. A large majority of these are for programming but others have been created for other purposes, in particular for the design and description of physical computers at various levels of abstraction (see Chapter 5). The latter are generically called ‘computer hardware description languages’ (CHDLs) or ‘computer design and description languages’ (CDDLs).

These computational languages employ different notations—symbols—and a part of the mental effort in learning a new computational language goes into mastering the notation—that is, what the symbols symbolize. This entails mapping the notational signs onto fundamental computationalconcepts and linguistic categories. A particular language, then, comprises a body of concepts and categories together with the notation that represents them. Again, as a rough formula:

![]()

In computing, different signs have been deployed in different languages to symbolize the same concept. Conversely, the same sign may symbolize different concepts in different languages.

For example, the fundamental programming concept known as the assignment (already encountered in Chapter 3) may be denoted by such signs as ‘=>’, ‘=’, ‘←’, ‘:=’ in different languages. Thus the assignment statements:

X + 1 => X

X = X + 1

X:= X + 1

X ← X + 1

X += 1

all mean the same thing: the current value of the variable X is incremented by 1 and the result is assigned to (or copied back into) X. Assignment is a computational concept, and the assignment statement is a linguistic category, and is present in most programming languages. The ways of representing it differ from one language to another depending on the taste and predilection of the language designers.

Concepts and categories in programming languages

So what are these concepts and categories? Computing, recall, is symbol processing; in more common parlance it is information processing. ‘In the beginning is information’—or, as language designers prefer to call it, data—which is to be processed. Thus a fundamental concept embedded in all programming languages is what is called the data type. As stated in Chapter 1, a data type defines the nature of the values a data object (otherwise called ‘a variable’) can hold along with the operations that are permissible (‘legal’) on such values. Data types are either ‘primitive’ (or ‘atomic’) or ‘structured’ (or ‘composite’), composed from more basic data types.

In the factorial example of Chapter 3, there is only the primitive data type ‘non-negative integer’, meaning that integers greater than or equal to 0 are admissible as values of variables of this type; and only integer arithmetic operations can be performed on variables of this type. It also means that only integer values can be assigned to variables of this type. For example, an assignment such as

![]()

is a legal statement if x is defined as of data type integer. If x has not been declared as such, if instead it is declared as (say) a character string (representing a name), then the assignment will be illegal.

A number itself, is not necessarily an integer unless it is defined as such. Thus, from a computational point of view, a telephone number is not an integer; it is a numeric character string; one cannot add or multiply two telephone numbers. So, if x is declared as a numeric character string, the earlier assignment will not be valid.

The linear search algorithm of Chapter 3 includes both primitive and structured data types. The variable i is of the primitive type integer; the variable given is of structured type ‘character string’, itself composed out of the primitive type ‘character’. The list student is also a structured data type, sometimes called ‘linear list’, sometimes ‘array’. The i-th element of student is itself a structured data type (called by different names in different programming languages, including ‘record’ and ‘tuple’) comprising here of two data types, one (name) of type character string, the other (email) also of type character string. So a variable such as student is a hierarchically organized data structure: characters composed into character strings; character strings composed into tuples or records; tuples composed into lists or arrays.

But data or information is only the beginning of a computation. Moreover the variables themselves are passive. Computation involves action and the composition of actions into processes. A programming language, thus, must not only have the facility for specifying data objects, they must also include statements that specify actions and processes.

In fact, we have already encountered several times the most fundamental statement types in the preceding chapter. One is the assignment statement: its execution invokes a process that involves both a direction and a temporal flow of information. For example, in executing the assignment statement

![]()

where A and B are both variables, information flows from B to A. But it isn’t like water flowing from one container to another. The value of B is not changed or reduced or emptied after the execution of this statement. Rather, the value of B is ‘read’ and ‘copied’ into A so that at the end, the values of A and B are equal. However, in executing the statement

![]()

the value of A does change: new value of A = old value of A + value of B. The value of B remains unchanged.

The general form of the assignment statement is

![]()

where E is an expression (such as the arithmetic expressions Y + 1, (X – Y) * (Z/W)). The execution of the assignment in general is a two-step process: E is first evaluated; then this value is assigned to X.

The assignment statement, then, specifies the unit of action in a computation. It is the atomic process of computations. But just as in the natural world atoms combine into molecules and molecules into larger molecules so also in the computational world. Assignments combine to form larger segments, and the latter combine to form still larger segments until complete programs obtain. There is hierarchy at work in the computational world as there is in nature.

Thus, a major task of computer scientists has been to discover the rules of composition, invent statement types that represent these rules, and design notations for each statement type. While the rules of composition and statement types may be quite universal, different programming languages may use different notations to represent them.

One such statement type is the sequential statement: two or more (simpler) statements are composed sequentially so that when executed they are executed in the order of the component statements. The notation I used in Chapter 3 to denote sequencing was the ‘;’. Thus, in Euclid’s algorithm we find the sequential statement

m ← n;

n ← r;

goto step 1

in which the flow of control proceeds through the three statements according to the order shown.

But computations may also require making choices between one of several alternatives. The if … then … else statements used in Chapter 3 in several of the algorithms are instances of the conditional statement type in programming languages. In the general form if C then S1 else S2, the condition C is evaluated, and if true then control goes to S1, otherwise control flows to S2.

Sometimes, we need to return flow of control to an earlier part of the computation and repeat it. The notations while … do and repeat … until used in the linear search and nonrecursive factorial algorithms in Chapter 3 exemplify these instances of the iteration statement type.

These three statement types, the sequential, conditional, and iterative, are the building blocks for the construction of programs. Every programming language provides notation to represent these categories. In fact, in principle, any computation can be specified by a program involving a combination of just these three statement types. (This was proved in 1966 by two Italian computer theorists, Corrado Böhm and Giuseppe Jacopini.) In practice many other rules of composition and corresponding statement types have been proposed to facilitate programming (such as theunconditional branch exemplified by the goto statement used in Euclid’s algorithm in Chapter 3).

Programming as art

Programming is an act of design and like all design activities, it entails judgement, intuition, aesthetic taste, and experience. It is for this that Donald Knuth entitled his celebrated and influential series of texts The Art of Computer Programming (1968–9). Almost a decade later Knuth elaborated on this theme in a lecture. In speaking of the art of programming, he wrote, he was alluding to programming as an art form. Programs should be aesthetically satisfying; they should be beautiful. The experience of writing a program should be akin to composing poetry or music. Thus, the idea of style, so intimately a part of the discourses of art, music, and literature, must be an element of programming aesthetics. Recall the Dutch computer scientist Edsger Dijkstra’s remark mentioned in Chapter 3: in devising algorithms, ‘Beauty is our business’. The Russian computer scientist A.P. Ershov has echoed these sentiments.

Along this same theme, Knuth later proposed that programs should be works of literature, that one can gain pleasure in writing programs in such a way that the programs will give pleasure on being read by others. (He called this philosophy ‘literate programming’, though I think ‘literary programming’ would have been more apt as an expression of his sentiment.)

Programming as a mathematical science

But, of course, computer scientists (including Knuth) seek to discover more objective and formal foundations for programming. They want a science of programming. The Böhm–Jacopini result mentioned earlier is the sort of formal, mathematical result computer scientists yearn for. In fact, by a ‘science’ of programming many computer scientists mean a mathematical science.

The view of programming as a mathematical science has been most prominently manifested in three other ways—all having to do with the abstract face of programs or, as I noted early in this chapter, the view held by some that programs are mathematical objects.

One is the discovery of rules of syntax of programming languages. These are rules that determine the grammatical correctness of programs and have a huge practical bearing, since one of the first tasks of compilers (automatic translators of high level programs into machine code) is to ensure that the program it is translating is grammatically or syntactically correct. The theory of programming language syntax owes its beginnings to the linguist Noam Chomsky’s work on the theory of syntax (for natural languages).

The second contribution to the science of programming is the development of rules of semantics—that is, principles that define the meaning of the different statement types. Its importance should be quite evident: in order to use a programming language the programmer must be quite clear about the meaning of its component statement types. So also, the compiler writer must understand unambiguously the meaning of each statement type in order to translate programs into machine code. But semantics, as the term is used in linguistics, is a thorny problem since it involves relating linguistic categories to what they refer to in the world, and the theory of programming language semantics mirrors these same difficulties. It is fair to say that the theory of semantics in programming, despite its sophisticated development, has not had the same kind of acceptance by the computer science community, nor has it been used so effectively as the theory of syntax.

The third contribution to the science of programming is closely related to the semantics issue. This contribution is founded on the conviction of such computer scientists as the Englishman C.A.R. Hoare and the Dutchman Edsger Dijkstra that computing is akin to mathematics and that the same principles that mathematicians use, such as axioms, rules of deductive logic, theorems, and proofs, are applicable to programming. This philosophy was stated quite unequivocally and defiantly by Hoare who announced, in 1985, the following manifesto:

(a) Computers are mathematical machines. That is, their behaviour can be mathematically defined and every detail is logically derivable from the definition.

(b) Programs are mathematical expressions. They describe precisely and in detail the behaviour of the computer on which they are executed.

(c) A programming language is a mathematical theory. It is a formal system which helps the programmer in both developing a program and proving that the program satisifies the specification of its requirements.

(d) Programming is a mathematical activity. Its practice requires application of the traditional methods of mathematical understanding and proof.

In the venerable axiomatic tradition in mathematics, one begins with axioms (propositions that are taken to be ‘self-evidently’ true about the domain of interest, such as the principle of mathematical induction mentioned in Chapter 3), and definitions of basic concepts, and provesprogressively, new insights and propositions (collectively called theorems) from these axioms, definitions and already proved theorems, using rules of deduction. Inspired by this tradition, the third contribution to a mathematical science of programming concerns the construction of axiomatic proofs of the correctness of programs based on axioms, definitions and rules of deduction defining the semantics of the relevant programming language. The semantics is called axiomatic semantics, and their application is known as axiomatic proofs of correctness.

As in the axiomatic approach in mathematics (and its use in such disciplines as mathematical physics and economics), there is much formal elegance and beauty in the mathematical science of programming. However, it is only fair to point out that while a formidable body of knowledge has been developed in this realm, a goodly number of academic computer scientists and industrial practitioners remain skeptical of their practical applicability in the hurly-burly ‘real world’ of computing.

Programming as (software) engineering

This is because of the view held by many that programs are not ‘just’ beautiful abstract artefacts. Even Hoare’s manifesto recognized that programs must describe the behaviour of the computers which execute them. The latter is the material face of programs and which confers liminality to them. To many, in fact, programs are technological products, hence programming is an engineering activity.

The word software appears to have entered the computing vocabulary in 1960. Yet its connotation remains uncertain. Some use ‘software’ and ‘program’ as synonyms. Some think of software to mean the special and essential set of programs (such as operating systems and other tools and ‘utilities’ called, collectively, ‘system programs’) that are built to execute atop a physical computer to create the virtual machines (or computer systems) that others can use more efficaciously (see Figure 1 in Chapter 2). Still others consider software to mean not only programs but the associated documentation that is essential to the development, operation, maintenance and modification of large programs. And there are some who would include human expertise and knowledge in this compendium.

At any rate, ‘software’ has the following significant connotations: it is that part of a computer system that is not itself physical; it requires the physical computer to make it operational; and there is a sense in which software is very much an industrial product with all that the adjective implies.

Software is, then, a computational artefact that facilitates the usage of a computer system by many (possibly millions, even billions of )users. Most times (though not always) it is a commercially produced artefact which manifests certain levels of robustness and reliability we have come to expect of industrial systems.

Perhaps in analogy with other industrial systems, a software development project is associated with a life cycle. And so, like many other complex, engineering projects (e.g. a new space satellite launch project) the development of a software system is regarded as an engineering project, and it is in this context that the term software engineering (first coined in the mid-1960s) seems particularly apposite. This has led, naturally, to the idea of the ‘software engineer’. It is not a coincidence that a large portion of thinking about software engineering has originated in the industrial sector.

Various models of the software life cycle have been proposed over the past fifty years. Collectively, they all recognize that the development of a software system involves a number of stages:

(a) Analysis of the requirements the software is intended to serve.

(b) Development of precise functional, performance and cost specifications of the different components (‘modules’) that can be identified from requirements analysis.

(c) The design of the software system that will (hopefully) meet the specifications. This activity may itself consist of conceptual and detailed design stages.

(d) Implementing the design as an operational software system specified in a programming language and compiled for execution on the target computer system(s).

(e) Verification and validation of the implemented set of programs to ensure that they meet the specifications.

(f) Once verified and validated, the maintenance of the system and, if and whenever necessary, its modification.

These stages do not, of course, follow in a rigidly linear way. There is always the possibility of returning to an earlier stage from a later one if flaws and faults are discovered. Moreover, this software life cycle also requires an infrastructure—of tools, methodology, documentation standards, and human expertise which collectively constitute a software engineering environment.

One must also note that one or more of these stages will entail solid bodies of scientific theories as part of their deployment. Specification and design may involve the use of languages having their own syntax and semantics; detailed design and implementation will involve programming languages and, possibly, the use of axiomatic proof techniques. Verification and validation will invariably demand sophisticated modes of proving and experimentally testing the software. Much as the classical fields of engineering (such as structural or mechanical engineering) entail engineering sciences as components, so also software engineering.