Understanding Context: Environment, Language, and Information Architecture (2014)

Part IV. Digital Information

Chapter 14. Digital Environment

No metaphor is more misleading than “smart.”

—MARK WEISER

Variant Modes and Digital Places

THE RULE-DRIVEN MODES AND simulated affordances of interfaces are also the objects, events, and layouts that function as places, whether on a screen alone or in the ambient digital activity in our surroundings. So, changing the mode of objects can affect the mode of places as well, especially when objects work as parts of interdependent systems.

For example, let’s take a look at a software-based place: Google’s search site. Suppose that we want to research what qualities to look for in a new kitchen knife. When we run a query using Google’s regular mobile Web search, we see the sort of general results to which we’ve become accustomed: supposedly neutral results ordered by Google’s famed algorithm, which ranks pages by their general “rank” of authority, determined by analyzing links across the Web, and by Google’s own definition of “quality” for sites in general. On the desktop Google site, there are paid AdWords links that are clearly labeled as sponsored results, so the main results are easy to distinguish from the paid ones.

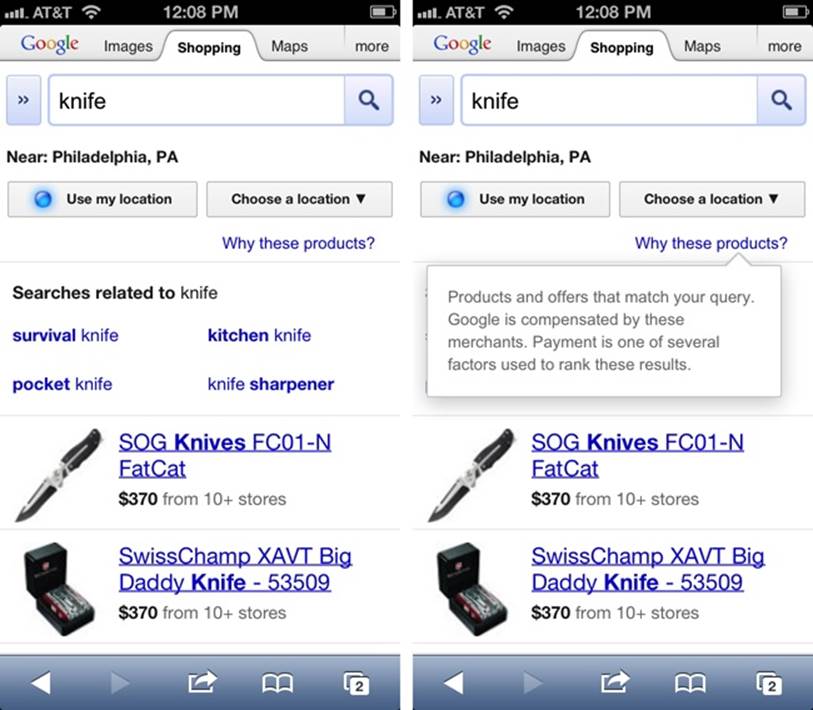

But Google’s Shopping site is a different story: when searching for products there, all of the results are affected by paid promotion, as illustrated in Figure 14-1. I actually wasn’t aware of this until a colleague pointed it out to me at lunch one day.[273]

Figure 14-1. When in the Shopping tab, search results are driven by different rules, which you can see by clicking the “Why these products?” link

Several factors are in play here:

§ Google’s long-standing web-search function has established cultural expectations in its user base: Google is providing the most relevant results based on the search terms entered. Although it’s debatable whether these results are effective—or whether they’ve been corrupted by site owners’ search-engine manipulation—we still tend to equate Google with no-nonsense, just-the-facts search results, as a cultural invariant.

§ Google’s Shopping tab is listed right next to the Images, Maps, and “more” tabs—which, when expanded, show other Google services. The way these are displayed together implies that the rules behind how they give us results are equivalent. Objects that look the same are perceived as having similar properties. So, we aren’t expecting results for Images to be prioritized by advertising dollar, just as we don’t assume searching for a town in Maps will take us to a different-but-similar town because of someone’s marketing plan.

§ In the object layout of the search results view, Google Shopping provides no clear indication of this tab’s different mode. The “Near: Philadelphia, PA” label indicates location is at work in some way, but the only way to know about paid-priority results is to tap the vaguely named “Why these products?” link at the upper right. It’s nice of Google to provide this explanation, but users might never tap it, because they assume they already know the answer, based on learned experience within Google’s other environments.

There’s nothing inherently wrong with making a shopping application function differently from a search application. But the invariant features of the environment need to make the difference more clear by using semantic function to better establish context.[274]

Foraging for Information

When we use these information environments, we’re not paying explicit attention to a lot of these factors. In fact, we’re generally feeling our way through with little conscious effort. So, the finer points of logical difference between one mode of an environment and another are easily lost on us. According to a number of related theories on information-seeking, humans look for information by using behavior patterns similar to those used by other terrestrial animals when foraging for food. Research has shown that people don’t formulate fully logical queries and then go about looking in a rationally efficient manner; instead, they tend to move in a direction that feels right based on various semantic or visual cues, wandering through the environment along a nonlinear, somewhat unconsciously driven path, “sniffing out” whatever seems related to what they’re looking for.

We take action in digital-semantic environments using the same bodies that we evolved to use in physical environments. Instead of using a finger to poke a mango to see if it’s ripe, or cocking an ear to listen for the sound of water, we poke at the environment with words, either by tapping or clicking words and pictures, or giving our own words to the environment through search queries, to see what it says back to us.

Marcia Bates’ influential article from 1989, “The Design of Browsing and Berrypicking Techniques for the Online Search Interface,” argues that people look for information by using behaviors that are repurposed from early human evolution. Bates points out that the classic information retrieval model, which assumes a linear, logical approach to matching a query with the representation of a document, was becoming inadequate as technology was presenting users with information environments of ever greater scale and complexity.[275]

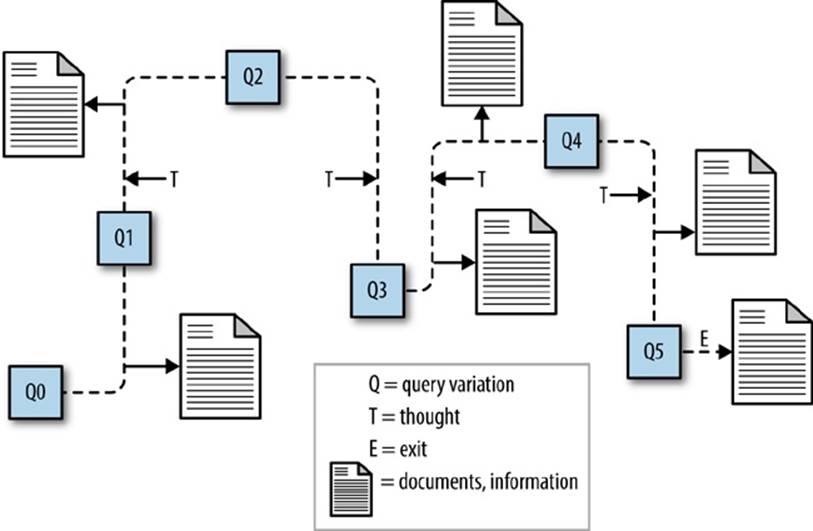

Bates has gone on to further develop her theoretical framework by folding “berrypicking” (see Figure 14-2) into a more comprehensive approach, notably in her 2002 article, “Toward an Integrated Model of Information Seeking and Searching.” In that article, Bates argues that an “enormous part of all we know and learn...comes to us through passive undirected behavior.”[276] That is, most search activity is really tacit, nondeliberate environmental action, not unlike the way we find our way through a city. Bates also points out that people tend to arrange their physical and social surroundings in ways to help them find information, essentially extending their cognition into the structures of their environment.

Bates’ work often references another, related theoretical strand called information foraging theory. Introduced in a 1999 article by Stuart Card and Peter Pirolli, information foraging makes use of anthropological research on food-foraging strategies. It also borrows from theories developed in ecological psychology. Card and Pirolli propose several mathematical models for describing these behaviors, including information scent models. These models “address the identification of information value from proximal cues.”[277] Card, Pirolli, and others have continued developing information foraging theory and have been influential in information science and human-computer interaction fields.
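
To make “information scent” a little more concrete, here is a toy sketch in Python. It is not Pirolli and Card’s model, which estimates scent through spreading activation over learned word associations; it simply assumes that scent can be approximated by how many of a searcher’s goal words appear in a link’s label, the proximal cue. The function names and the example link labels are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens: a crude stand-in for the cues a reader notices."""
    return set(re.findall(r"[a-z]+", text.lower()))


def scent(goal: str, cue: str) -> float:
    """Toy scent score: the fraction of goal words that appear in a link's label.

    Word overlap is an illustrative simplification, not Pirolli and Card's
    spreading-activation estimate of information scent.
    """
    goal_words = tokens(goal)
    if not goal_words:
        return 0.0
    return len(goal_words & tokens(cue)) / len(goal_words)


def follow_strongest(goal: str, links: list[str]) -> str:
    """Follow the link whose label 'smells' most like the goal (ties: first listed)."""
    return max(links, key=lambda label: scent(goal, label))


links = ["Kitchen gadgets", "How to judge knife steel", "Shopping"]
print(follow_strongest("kitchen knife steel quality", links))  # prints: How to judge knife steel
```

Nobody computes anything like this consciously, of course; the sketch only shows how the words on a label stand in for a page the searcher cannot yet see.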

Figure 14-2. Bates’ “berrypicking” model

I should point out that information foraging theory originated from a traditional cognitive-science perspective, assuming the brain works like a computer to sort out all the mathematics of how much energy might be conserved by using one path over another. However, even though Bates, Card, and Pirolli come from that tradition, it’s arguable that their work ends up being more in line with embodiment than not. The essential issue these theories address is that environments made mostly of semantic information lack most of the physical cues our perceptual systems evolved within; so our perception does what it can with what information is available, still using the same old mechanisms our cognition relied on long before writing existed.

Inhabiting Two Worlds at Once

There are also on-screen capabilities that change the meaning of physical places, without doing anything physical to those places. Here’s a relatively harmless example: in the podcast manager and player app called Downcast, you can instruct the software to update podcast feeds based on various modality settings, including geolocation, as illustrated in Figure 14-3.

Figure 14-3. Refresh podcasts by geolocation in the Downcast podcast app

This feature allows Downcast to save on cellular data usage by updating only at preselected locations that have WiFi. It’s a wonderful convenience. But we should keep in mind that it adds a layer of digital behavior that changes, if only slightly, the functional meaning of one physical context versus another. Even without a legion of intelligent objects, our smartphones make every place we inhabit potentially “smart.”
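
The location rule behind a feature like this can be sketched in a few lines. This is not Downcast’s code, whose internals aren’t described here; it assumes the app knows the device’s coordinates and a user-chosen list of places, each with a radius, and uses the haversine great-circle distance to decide whether the device is “at” one of them. Place, distance_m, and should_refresh are hypothetical names.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, in meters


@dataclass
class Place:
    """A user-chosen location (hypothetical), such as home or office WiFi."""
    name: str
    lat: float
    lon: float
    radius_m: float  # how close the device must be to count as "at" this place


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two coordinates, via the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def should_refresh(lat: float, lon: float, places: list[Place]) -> bool:
    """Refresh feeds only while the device is inside one of the chosen places."""
    return any(distance_m(lat, lon, p.lat, p.lon) <= p.radius_m for p in places)
```

Under this rule, two street corners a block apart can mean entirely different things to the app, which is the quiet shift in a place’s functional meaning described above.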

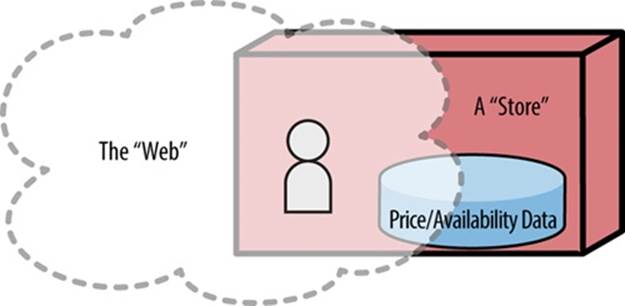

Another way digital has changed how we experience “place” is by replicating on the Web much of the semantic information we’d find in a physical store, and allowing us to shop online instead of in a building. These on-screen places for shopping are expected more and more to be integrated with physical store locations. For big-box retailers that want to grow business across all channels, this means interesting challenges for integrating those dimensions.

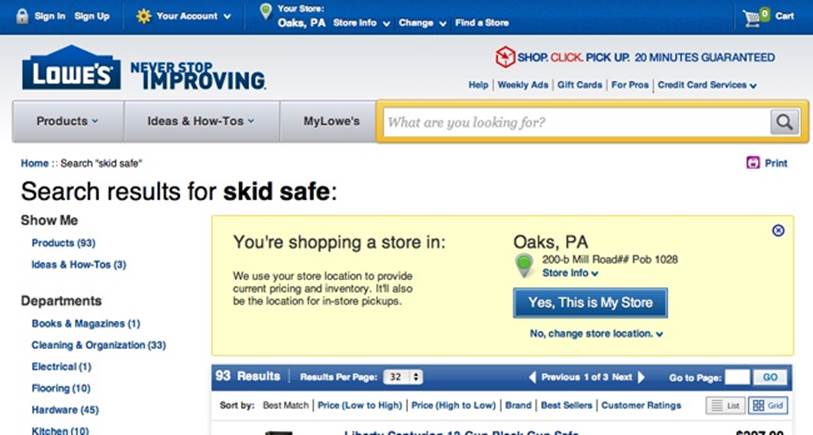

Large traditional retailers are in the midst of major transition. Most of them were already big corporations long before the advent of the Web, so they have deeply entrenched, legacy infrastructures, supply chains, and organizational silos. These structures evolved in an environment in which everything about the business was based on terrestrially bound, local stores. Since the rise of e-commerce, most have struggled with how to exist in both dimensions at once. For example, if a customer wants to order something to be delivered or picked up in the store, it means the system must know where that user is and what store is involved to provide accurate price and availability information, as illustrated in Figure 14-4.

Figure 14-4. The user begins shopping on the Web—a place without location—but must be placed in a “store” to see necessary information about products

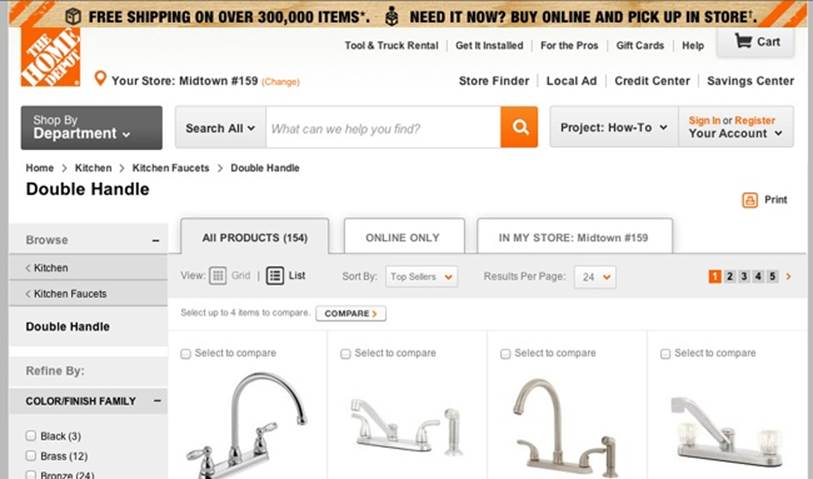

Conventions are starting to emerge for disambiguating the context of product availability. The approach adopted by Home Depot, shown in Figure 14-5, is becoming more common: for the product-listing view, the interface presents options to view All Products as well as Online Only and In My Store. This strategy seems pretty straightforward, but it turns out there are numerous complications when we unpack what these contextual labels really mean.

Figure 14-5. Home Depot’s attempts to give semantic structure to the overlap of online and in-store shopping

All Products

In many online stores, “all products” can actually mean “all products we list online, but not everything you could buy in the store.” This issue is improving, but lots of big retailers are still working to get their services infrastructure to handle the same inventory across stores and online. For stores that sell regionally sourced products such as lumber, this can be a special challenge.

Online Only

At first glance, this is a clear label that indicates products displayed on that tab are available only by ordering from the website. However, on some retail sites, what it really means is “these aren’t available in ‘Your Store,’ so you need to order them from the site instead,” even though they might be available in a store only a few miles away from “Your Store.”
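
The gap between what the label says and what the rule does can be sketched with two hypothetical labeling functions. Both are illustrations under assumed data (a per-store stock count and a sold-online flag), not any retailer’s actual logic: the first reads “Online Only” literally, and the second applies the looser rule described above, in which anything missing from “Your Store” is labeled “Online Only.”

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    name: str
    sold_online: bool
    stock_by_store: dict[str, int] = field(default_factory=dict)  # store -> units on hand


def label_strict(p: Product) -> str:
    """'Online Only' in the literal sense: no physical store carries the item."""
    if any(qty > 0 for qty in p.stock_by_store.values()):
        return "Available in stores"
    return "Online Only" if p.sold_online else "Unavailable"


def label_my_store(p: Product, my_store: str) -> str:
    """The looser rule: anything not stocked in 'my' store is called 'Online Only',
    even if another store a few miles away has it on the shelf."""
    if p.stock_by_store.get(my_store, 0) > 0:
        return "In My Store"
    return "Online Only" if p.sold_online else "Unavailable"


drill = Product("Cordless drill", True, {"Store A": 0, "Store B": 12})
print(label_strict(drill))               # prints: Available in stores
print(label_my_store(drill, "Store A"))  # prints: Online Only
```

The same product gets two different labels depending on which rule is running, and the customer sees only the label.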

In My Store

I never selected a store and agreed to make it “mine.” The website chose a store for me, using a third-party service that guesses my location based on the IP address the system thinks is assigned to my device’s net connection. I know this only because I’ve learned it in my consulting work. However, many regular customers don’t have that insider knowledge; they might not notice the choice was made for them at all. Sometimes, when systems make these choices for us, it’s convenient. But it works only when it’s accurate. Unfortunately, these geolocation-by-IP services are notoriously flaky, because there’s no guarantee my IP address reflects my real location. For example, many customers shop from their workplace or via a coffee shop’s WiFi connection. Corporate computers often use proxy connections for security reasons, and the proxy can be located many miles away. You can easily buy something thinking it will be ready for pickup at the store on the way home, only to find that it’s actually waiting for you in another city.

The language is trying to create stability where systems are unable to promise it. There’s always a point at which simplifying the environment obscures too much important information. The best way to handle a situation like this is not to fake simplicity but to embrace the complexity and clarify it by making it more understandable. For example, Lowe’s Home Improvement has been experimenting with how to make the complex rules more clear, without obscuring important information that the customer really should understand, as depicted in Figure 14-6.

When the customer gets to a point on the site at which accurate location is relevant to action—such as in product lists with prices and availability displayed, or in the shopping cart—the site gently but unmistakably provides a message panel that urges the customer to take a moment to confirm the store choice. It’s not a pop-up window that interrupts and obscures—the customer can keep shopping whether dismissing the embedded message or not, but it will continue to show up at important moments to ensure that the customer has confirmed the store before buying anything.
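
The rule described here can be expressed as a small piece of logic. This is a hypothetical sketch, not Lowe’s implementation: it assumes a session that holds a store (initially a guess) plus a flag recording whether the customer has confirmed it, and an assumed set of views where store accuracy affects price and availability.

```python
from dataclasses import dataclass

# Views where an accurate store changes price or availability (an assumed list).
LOCATION_SENSITIVE_VIEWS = {"product_list", "product_detail", "cart"}


@dataclass
class Session:
    store: str                    # initially a guess, e.g., from IP geolocation
    store_confirmed: bool = False


def show_store_prompt(session: Session, view: str) -> bool:
    """Embed a non-modal 'confirm your store' panel wherever location matters.
    Dismissing the panel does not confirm anything, so it keeps coming back."""
    return view in LOCATION_SENSITIVE_VIEWS and not session.store_confirmed


def confirm_store(session: Session, store: str) -> Session:
    """Record the customer's explicit choice; only this stops the prompts."""
    session.store = store
    session.store_confirmed = True
    return session
```

Keeping the panel embedded rather than modal lets the customer keep browsing, while the confirmation flag, not the dismissal, is what the rule actually checks.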

This is a digitally encoded business rule that the user needs to understand in order to use the environment. Like the ontology of “calendar” that we looked at earlier, here the ontologies of “store” and “shopping” and “purchase,” and even “product,” are still being sorted out for retailers who need customers to shop both in the cloud and in a store. Until systems can magically not only read your mind but know the exact location you want to get a product from, some version of this translation will be needed.

Figure 14-6. Lowe’s goes to some trouble to explain the complexity and avoid costly confusion about location

LOCATION, LOCATION, LOCATION

We see digital influence at work even in subtle ways in our various online platforms. For example, as demonstrated in Figure 14-7, there are many ways to describe the location of a person.

Figure 14-7. My Twitter profile, temporarily sporting an iPhone coordinate location

Physically

If I can see the friend, the friend is an object in the environment, exhibiting surface patterns that I recognize as that friend’s face and body, and event-specifying information in the way the friend moves. The friend could be out of sight—occluded by another environmental surface—but I might still be able to hear the friend speak or make some other recognizable sound. Either way, the friend is within a relatively close distance to where I am.

Semantically

Semantic information gives us the ability to extend our sensemaking of location far beyond our human-scale perception. Because of the cultural system I’m part of, I understand that if my friend is “in Detroit,” Detroit is a city in Michigan, which is situated on a map that I’ve seen thousands of times in my life. I probably couldn’t tell you exactly where, but I know it’s in the Midwest and close to the Canadian border. It’s not precise, but it’s a good-enough, satisficing concept that gets the job done.

Digitally

Suppose that I’ve met this person only online, over Twitter. At some point I wonder where he lives, so I check his Twitter profile only to find that it lists the person’s location as iPhone: 37.404922,-5.992203, as in Figure 14-7. This is coordinate information that doesn’t correspond with any semantic or physical reference point for me. Technically, this is semantic information, but in the context in which I’m seeing it, it’s bound up with digital influence—it requires that I tap something to ask the software to translate it into a city name or a simple map.

Ambient Agents

There are physical objects and events in our surroundings that are digitally enabled; and these physical-digital agents are proliferating at a wild rate. Digital agent-objects are already more prevalent than most people realize, because they’ve been introduced into our lives in mostly mundane ways. For years we’ve been getting comfortable with on-screen digital agents such as an email client that decides on its own which messages are spam and which aren’t. Thus, it’s a small step to having a clothes dryer that stops when it senses the moisture is gone, or a thermostat that learns our habits and makes best-guess adjustments to home temperature. The consumer space is already awash in these gadgets, and many are networked so that we can control them from a kitchen console or personal smartphone.
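
Even a mundane “learning” agent runs on rules that the people around it can’t see. Here is a toy sketch of a thermostat that learns habits, assuming it simply keeps, for each hour of the day, an exponentially weighted average of the temperatures people set by hand. Real products use their own, more elaborate and mostly undisclosed methods; the class, its default, and its learning rate are hypothetical.

```python
class LearningThermostat:
    """Toy habit-learning agent: per hour of day, a running average of manual settings."""

    def __init__(self, default_c: float = 20.0, learning_rate: float = 0.5):
        self.default_c = default_c          # used for hours with no history yet
        self.learning_rate = learning_rate  # weight given to the newest manual setting
        self.learned: dict[int, float] = {}  # hour (0-23) -> learned setpoint, in °C

    def record_manual_setting(self, hour: int, temp_c: float) -> float:
        """Nudge the learned setpoint for this hour toward what a person chose."""
        old = self.learned.get(hour, temp_c)  # the first setting is taken as-is
        self.learned[hour] = old + self.learning_rate * (temp_c - old)
        return self.learned[hour]

    def best_guess(self, hour: int) -> float:
        """The temperature the agent will choose on its own at this hour."""
        return self.learned.get(hour, self.default_c)


t = LearningThermostat()
t.record_manual_setting(7, 22.0)
t.record_manual_setting(7, 24.0)
print(t.best_guess(7))  # prints: 23.0
```

Nothing on the device’s surface reveals that a setting made on one morning is quietly averaged into what happens on every later morning.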

These are all seemingly benign agents on their own, but as they add up, they become a layer of digital sentience pervading our surroundings. This can have a downside, as consumers discovered when refrigerators and other smart devices were hijacked by spam bots in late 2013,[278] or when electronics manufacturer LG had to admit it was collecting private viewing-habits data without customer knowledge.[279] These digital-information undercurrents transform our very dwellings from private enclaves to porous nodes on global networks—they change what “home” means. Generally, these technologies are created with the best of intentions. Yet, as with all technologies, we have to learn their limits and consequences, and improve them along the way.

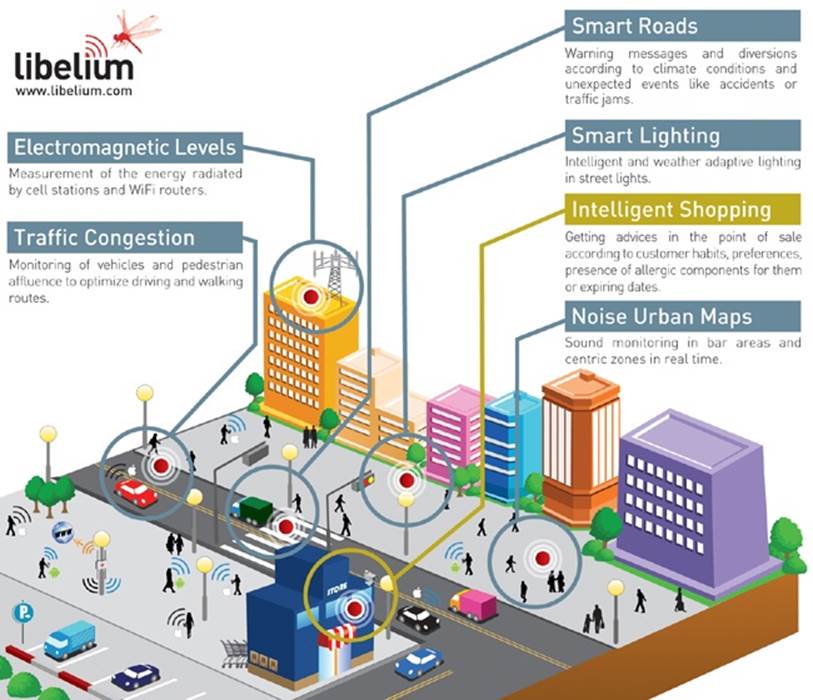

Likewise, the networked objects of the urban landscape transform what cities are to us. In William Mitchell’s ever-prescient words, “Rooms and buildings will henceforth be seen as sites where bits meet the body—where digital information is translated into visual, auditory, tactile, or otherwise perceptible form, and, conversely, where bodily actions are sensed and converted into digital information.”[280] Since those words were published in 1996, much of the world has found itself in exactly the situation Mitchell describes: our inhabited places are fundamentally different, whether online or offline, through the emergence of networked consumer technology and government infrastructures. We treat them like the invariant processes of nature, even though digital influence means these processes don’t have to stay true to any natural laws at all.

Our cities and towns are becoming sensor-studded, agent-suffused environments that can improve our lives immensely, such as the cityscape of systems depicted in Figure 14-8. But they also require careful design and translation into people’s everyday, tacitly aware understanding.

As inhabitants of these places satisfice their way through each day, they need the environment to let them know when objects aren’t going to behave the way natural objects do. Retail store shelves can track body movements, age, and gender.[281] Safety-aware, cooperatively smart chemical drums can track how well their handlers are following safe-handling policies.[282] These are creatures of a sort that are not especially legible to us.

Figure 14-8. Detail portion of an infographic about smart cities, from Internet-of-Things platform provider, Libelium[283]

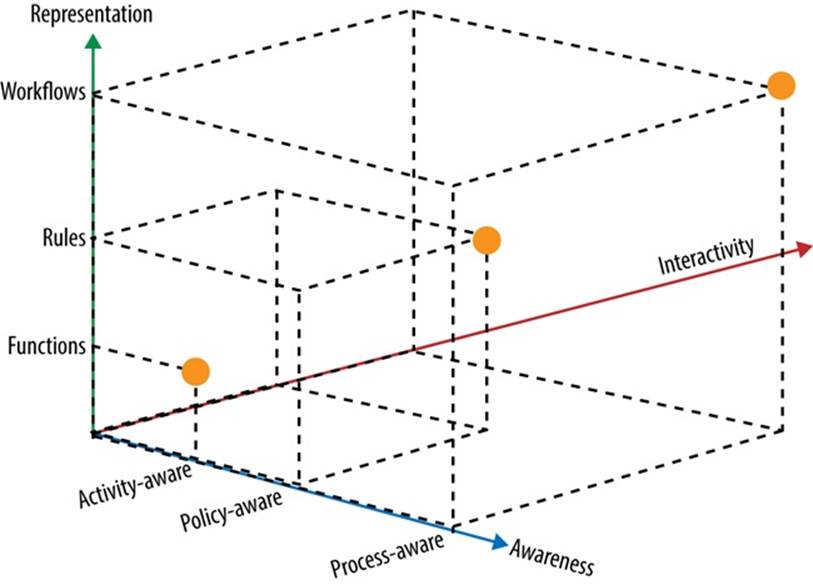

These are known challenges in computing fields. In a 2010 IEEE paper titled “Smart Objects as Building Blocks for the Internet of Things,” the authors raise important questions about digital-agent objects:

The vision of an Internet of Things built from smart objects raises several important research questions in terms of system architecture, design and development, and human involvement. For example, what is the right balance for the distribution of functionality between smart objects and the supporting infrastructure? How do we model and represent smart objects’ intelligence? What are appropriate programming models? And how can people make sense of and interact with smart physical objects?[284]

The model, presented in Figure 14-9, describes smart objects across several dimensions, one of which the authors describe as “fundamental design and architectural principles: activity-aware objects, policy-aware objects, and process-aware objects.”

Figure 14-9. A model describing smart objects across several dimensions[285]

This and other models like it will be important for breaking down the types of agency these objects have, informing the design approaches we should take in creating and including them in designed environments.

In their book Code/Space: Software and Everyday Life, Kitchin and Dodge explore a particularly important idea: not only do hardware and software objects have this kind of agency, but the “space” (in our terms, the places) we inhabit can have agency as well—a quasi-sentience that’s woven into the surfaces and layouts around us. Robots aren’t only in the form of objects that behave like people or animals; entire buildings and cities can be “robots” of a sort.

This might sound ominous, and for good reason. Without diligent attention and foresight from its creators and users, the agency of pervasive digital infrastructures can erode human agency, independence, and privacy. In Everyware: The Dawning Age of Ubiquitous Computing, Adam Greenfield offers a thorough analysis of the implications of pervasively digital environments. One of Greenfield’s theses states: “Everyware produces a wide belt of circumstances where human agency, judgment, and will are progressively supplanted by compliance with external, frequently algorithmically-applied, standards and norms.”[286] We are used to living in environments in which, other than nature, everything around us happens based on the present or planned choices of human beings, but that arrangement is rapidly changing. We’ve created systems that use light-speed decision logic based on rules that are increasingly written by the systems themselves. Greenfield has dedicated himself to ongoing efforts at bridging the “black box” dimension of digital agency with the human-scale world that people can actually perceive and understand. The socio-political implications of “everyware” are deeply important. Although that’s not our focus for this discussion, Greenfield’s work is a good starting place for considering these issues.

When designing a system that depends on the perception of a human agent, the system should present invariant information that meets the expectations that human perception brings to the experience—the human umwelt (one’s perceived environment). It’s difficult enough to understand what all the “levers” in our lives actually do. Now, though, we are faced with a world bristling with levers that do not look like levers; they are hidden from view or look and behave like something else entirely. These levers can create events too fast for our slow, embodied perception to pick up, much less understand.

Likewise, when designing a system that needs to perceive human context in helpful and accurate ways, the system itself should clearly signal its own umwelt—how it perceives and understands the human trying to interact with it. People tend to assume a digital agent is going to know more than it actually is capable of knowing; users of these agents risk making too many assumptions about the agents’ intelligence. That’s partly because intelligence is, itself, a reification. There is no actual thing we can point to that is “intelligence.” But reification means we see intelligence where there often isn’t any, perhaps to our great disappointment or risk. Digital agents need to be transparent about their limitations rather than present a simplified front that inaccurately promises human-like coherence.
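
One small way an agent can signal its umwelt is to report not just what it believes but how it came to believe it, and how uncertain that belief is. The sketch below is hypothetical: it assumes a location guess that carries its source and a rough accuracy radius, plus an arbitrary 5 km threshold beyond which the agent asks the person to check rather than acting as if it knows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocationGuess:
    place: str          # what the agent thinks
    source: str         # how it came to think so, e.g., "your IP address"
    accuracy_km: float  # rough radius of uncertainty


def describe(guess: LocationGuess, max_trusted_km: float = 5.0) -> str:
    """Phrase the agent's belief together with its basis, instead of stating it as fact."""
    text = f"We think you're near {guess.place} (based on {guess.source})."
    if guess.accuracy_km > max_trusted_km:
        text += f" This could be off by about {guess.accuracy_km:g} km, so please check it."
    return text


print(describe(LocationGuess("Philadelphia, PA", "your IP address", 50.0)))
```

The design choice is simply to expose the agent’s basis and limits in the interface, so that a person can calibrate how much to trust it.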

This is why it’s so important to understand how humans experience context. The parts of our environment that have been given digital agency should have an ontology that the human agent can comprehend, so users can understand what sort of creature the agent is, how to interact with it, and what can be expected of it.

[273] Thanks to Richard Dalton (http://mauvyrusset.com) for this tidbit.

[274] An informative take on the filtered-place problem is in the book, The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think, by Eli Pariser. Penguin Books, 2011.

[275] Bates, Marcia J. “The design of browsing and berrypicking techniques for the online search interface.” Online Review. October, 1989;13(5):407-24.

[276] Bates, Marcia J. “Toward an Integrated Model of Information Seeking and Searching.” (Keynote Address, Fourth International Conference on Information Needs, Seeking and Use in Different Contexts, Lisbon, Portugal, September 11, 2002.) New Review of Information Behaviour Research. 2002; Volume 3:1-15.

[277] Pirolli, Peter, and Stuart Card. “Information Foraging.” Xerox Palo Alto Research Center. Psychological Review. 1999;106(4):643-75.

[278] “Fridge sends spam emails as attack hits smart gadgets.” BBC News Technology January 17, 2014 (http://www.bbc.com/news/technology-25780908).

[279] Wakefield, Jane. “LG promises update for ‘spying’ smart TV.” BBC News Technology November 21, 2013 (http://www.bbc.com/news/technology-25042563).

[280] Mitchell, William J. City of Bits: Space, Place, and the Infobahn (On Architecture). Cambridge, MA: MIT Press, 1996:913-14, Kindle locations.

[281] Ungerleider, Neal. “The Future of Shopping: Shelves That Track the Age and Gender of Passing Customers,” Fast Company (fastcompany.com) October 15, 2013 (http://bit.ly/1r8RsSw).

[282] Kortuem, Gerd et al. “Smart objects as building blocks for the Internet of things,” Internet Computing, IEEE. 2010;14(1):44-51 (http://usir.salford.ac.uk/2735/1/w1iot.pdf).

[283] Courtesy, Libelium (libelium.com)

[284] Kortuem, Gerd et al. “Smart objects as building blocks for the Internet of things.” Internet Computing, IEEE. 2010;14(1):44-51.

[285] ———. “Smart objects as building blocks for the Internet of things.” Internet Computing, IEEE. 2010;14(1):44-51.

[286] Greenfield, Adam. Everyware: The Dawning Age of Ubiquitous Computing. Berkeley, CA: New Riders; 2006:148.