Understanding Context: Environment, Language, and Information Architecture (2014)

Part V. The Maps We Live In

Chapter 18. The Social Map

Talk is essential to the human spirit. It is the human spirit. Speech. Not silence.

—WILLIAM GASS

Conversation

IMAGINE A BUSY DINER ON A SATURDAY MORNING AND ALL THE CONVERSATIONS GOING ON THERE: a family at a table where parent and child negotiate about eating the eggs before the waffle; a couple making plans for the rest of the weekend; a man on a cell phone reconnecting with whomever he met on a date the previous night. There are also visible gestures, facial expressions, and body language woven into the activity of talk. Not to mention the newspapers and magazines being perused over coffee—conversations mediated by publishers and writers. There are also people texting via SMS, reading news and sharing stories via email, checking their “feeds” of friends and family on Facebook and Twitter, or gazing at pictures on Instagram and Flickr. They’re using these threads of information in their table talk, showing friends at the table what is on their phones as part of the topic at hand, and using it the other way around—taking pictures of food and friends, and posting them to the cloud. Conversation is still about people talking with people, but it’s now an everyday thing to not just be in one place at a time, but in two or more simultaneously.

We tend to think of conversation as something that fills the gaps between actually doing things. By now, though, it’s hopefully clear that talking is actually a quite tangible form of “doing,” and that we wouldn’t be doing much at all without language, which exists because of the need to converse.

Conversation is made manifest all around us. All human-made environments are conversations in action. Cities and their structures exist because people had conversations, and they instantiate the meanings of those conversations in stone, concrete, glass, and steel. Before print and literacy became widespread, buildings such as cathedrals were the primary medium for telling stories and broadcasting messages. Later, libraries became the meta structures that house the published artifacts of the slow-moving print-based conversations of our culture.

Structures are conversations, but of course, conversations also have structures. Watch two people talking, and you’ll pick up on tacitly informal patterns of tonality, inflection, facial expression, posture, and gesture that add up to a sort of punctuation, signaling whose turn it is to speak and the structural relationships between statements. There are formal structures for conversations as well, such as Robert’s Rules of Order, a system invented in the nineteenth century for better organizing discussions by deliberative bodies such as parliamentary gatherings or board meetings.

There used to be a fairly clear distinction between real-time, spoken conversation and a written or published one via postal mail or other printed media such as books and newspapers. But now, that distinction is dissolving under our button-pushing fingers, as we publish our communiques without need of paper, printing presses, or supply-chain distribution.

Conversation doesn’t exist in a vacuum; it is inextricable from the properties of its media. Just as the joints in a creature’s limbs evolved to suit the invariant structures of the environment, the way we converse also evolves within—and is shaped by—our environment. To paraphrase Marshall McLuhan, the medium really is an intrinsic property of the message.[344] So, when digitally enabled environmental properties are added to our environment, those shape the message, too.

One thing the emergence of persistent, pervasive digital networks has done is remove a lot of the environmental context that conversation evolved in, changing the nature of how conversation works. We need to learn new properties of the environments we use to communicate; as we’ve seen, many of those properties don’t necessarily work like the world used to.

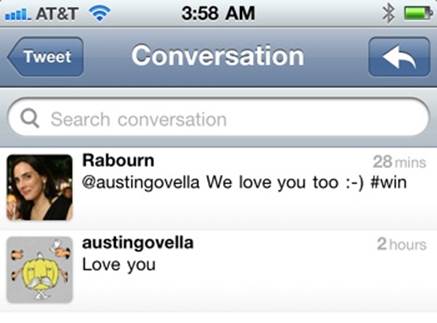

Social Architectures

Those of us who use Twitter a great deal have become accustomed to occasionally committing a faux pas known as a “DM Fail.” This is when a user means to send a direct message (DM) but instead posts a tweet publicly. For example, Figure 18-1 shows one in which two information-architecture community members had an exchange they allowed me to capture. Austin Govella accidentally tweeted “Love you”: a message he meant to be a private communication to a family member. Of course, part of the now-familiar tradition of the DM Fail is to gently poke a bit of fun at the mishap, as Tanya Rabourn happily did in this example, with a delightful “#win” hashtag celebrating the moment.[345]

Figure 18-1. A garden variety “mistweet” and a gently snarky response

This is not an error we would normally commit in the nondigital environment, where so much ecological information is available to let us know both for whom a message is intended and who else might be able to hear it. Even in a phone conversation, there’s an assumed context of privacy that’s specified in the environment: a phone receiver pressed to your ear whereby you hear only one other person’s voice, close to the microphone of his own receiver. What is it, then, about the Twitter environment that confuses the context enough for people to make this error?

When Twitter was first launched in 2006, it was mainly as a supplemental service for cell phone “texting” via Short Message Service (SMS),[346] allowing friends to subscribe to one another’s messages when sent to the Twitter SMS address of 40404. For example, rather than my having to text something like “Heading to the bar on my corner to watch the game” to a bunch of different people individually (some of whom might not be interested in my status), I could just send it to 40404. Then, Twitter would forward it to friends who have subscribed to (or “followed”) my Twitter messages. The SMS origin is why tweets are limited to 140 characters: SMS protocol has a limit of 160 characters per message, and 140 leaves room for adding contextual information such as the username of the “tweeter.”
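The arithmetic behind the 140-character limit can be sketched in a few lines. This is only an illustration under stated assumptions: the `forwarded_sms` function and the `"username: tweet"` layout are hypothetical, not Twitter's documented format, and Python's `len` counts characters rather than the encoded units a real SMS gateway would count.

```python
SMS_LIMIT = 160    # characters per SMS message, as stated in the text
TWEET_LIMIT = 140  # characters allowed per tweet, as stated in the text
HEADROOM = SMS_LIMIT - TWEET_LIMIT  # room left for contextual information


def forwarded_sms(username: str, tweet: str, separator: str = ": ") -> str:
    """Build the SMS a follower might receive.

    The "username: tweet" layout is an assumption made for illustration.
    """
    if len(tweet) > TWEET_LIMIT:
        raise ValueError("tweet exceeds 140 characters")
    sms = f"{username}{separator}{tweet}"
    if len(sms) > SMS_LIMIT:
        raise ValueError("username and tweet do not fit in one SMS")
    return sms


if __name__ == "__main__":
    print(HEADROOM)
    print(forwarded_sms("andrew", "Heading to the bar on my corner to watch the game"))
```

The point of the sketch is that the tweet limit is a property of the underlying medium: the space for the message is whatever the SMS container leaves over after the context is added.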

As part of the protocol for Twitter, users can use commands of a sort to follow or unfollow people, change other preferences, and even make a specific message be a direct message instead of a public tweet, with the syntax “d username message”—“d” being the command for “make the rest of this a direct message.”

So, even when Twitter was mainly SMS based, there were plenty of opportunities for misdirected private messages. If you receive a message from a friend or loved one on your cell phone, the natural, learned reaction is to just text her a response. It’s a conversation: we’re so used to having them without thinking about using special commands, it’s hard to learn the new habit of checking what sort of message it is you’ve received and then remembering to add “d username” before your response. Adding to the problem, the phone’s environment doesn’t provide enough information to differentiate the context. In the physical environment, we have to make a major change in our bodily position (or the volume of our voice) to go from a private conversation to a public proclamation. But, in a digitally generated environment, it takes only the difference of a typed character or two.
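To make concrete how small that difference is, here is a minimal sketch of a router that follows the “d username message” convention described above. The `Routed` class and the `route` function are hypothetical names invented for this illustration; Twitter's actual parsing rules are not given in the text and may well have differed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Routed:
    audience: str             # "public" or "direct"
    recipient: Optional[str]  # set only for direct messages
    body: str


def route(sms_text: str) -> Routed:
    """Classify an incoming SMS using the 'd username message' convention."""
    text = sms_text.strip()
    parts = text.split(maxsplit=2)
    # A direct message needs all three pieces: the command, a name, a body.
    if len(parts) == 3 and parts[0].lower() == "d":
        return Routed("direct", parts[1], parts[2])
    # Anything else, including a forgotten prefix, is broadcast to followers.
    return Routed("public", None, text)


if __name__ == "__main__":
    print(route("d mom Love you"))
    print(route("Love you"))
```

In this sketch, omitting two characters and a name is all it takes to turn a private note into a public proclamation, and nothing in the reply field itself signals which of the two is about to happen.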

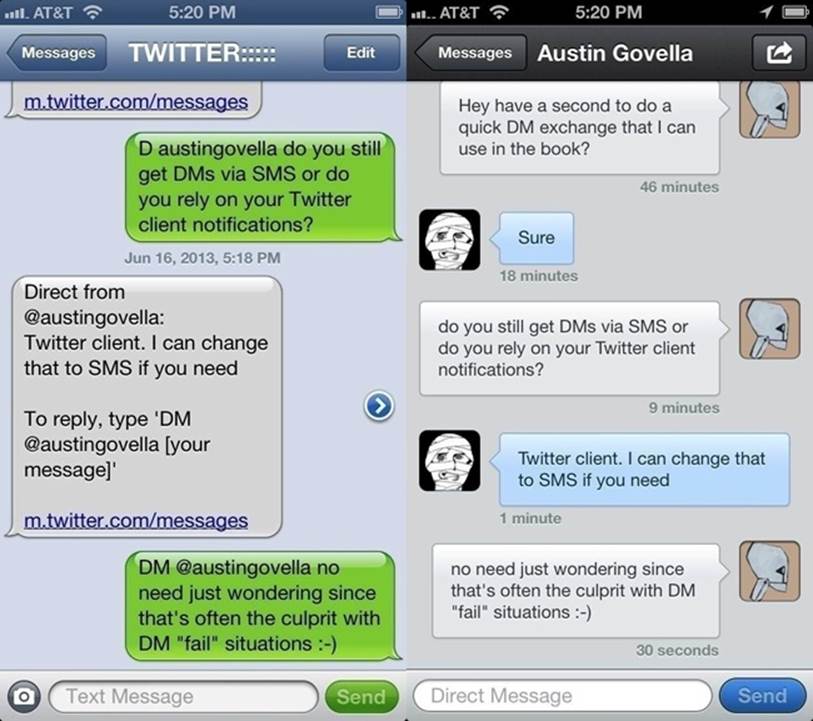

Even when using a more recent smartphone and a Twitter client, it can be easy to make the mistake of responding in the wrong way. On an iPhone, for example, my Twitter client’s direct-message interface is almost identical to my phone’s native SMS interface (Figure 18-2). Recall that a digital user interface is basically graphical semantic information role-playing as physical information—a simulated structural environment.

Figure 18-2. A direct-message exchange, on Twitter (via Tweetbot) and SMS

If you’re explicitly attending to the details of these views as interfaces, it’s not hard to spot their differences. In Heidegger’s terms from Chapter 6, that would be the much less natural and efficient “unreadiness-to-hand.” However, when we’re having a conversation, we’re not paying much attention to the interface; we’re treating it as “ready-to-hand” by default by attending to the communication, not its container. This is another example of the tacit way in which we engage our environment, satisficing in the moment. For the act of replying to someone, my perceptual system concerns itself with only a few bits of information: the words written by the other person, the field where I can put words to answer him, and the Send button. These are the invariant elements of the environment that matter to me in the midst of the conversation. The rest of it becomes clutter, unless I’ve worked to train myself to think twice and look at the other clues. In this case, I have to check whether Twitter sent me the message, which makes the difference between a public conveyance and a private communication.

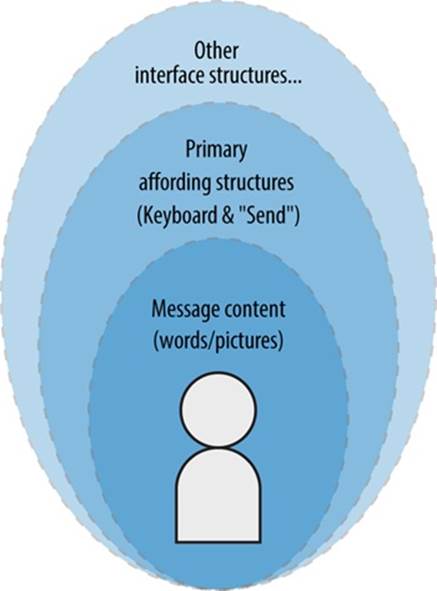

The way the object is designed for interaction can have a disproportionate effect on how the place is experienced as architecture. Twitter provides an environment for communication, structured by its architecture of labels and rules, and instantiated by various client interfaces, as illustrated in Figure 18-3.

Figure 18-3. The layers involved in a Twitter direct-message exchange

The client application is actually an important factor; rules embodied in the client can change the context of what Twitter is to a particular user. Twitter has historically been more of a service that informs disparate objects and places rather than a place itself. It has its own website and app, but even then, many people never use the official Twitter interfaces. Back when Twitter would show up to only a hundred or so posts in a feed, I recall how confused I would be when people I followed would tweet so much it kept me from seeing what others were saying. I later discovered that they were using client applications that cached thousands of tweets from their feed—removing this environmental limitation. Spamming Twitter didn’t look like a problem from their point of view.

The client also changes what our actions mean for one another. Twitter’s API provides the ability to mark tweets as “favorites.” Early in my own Twitter usage, I didn’t use this feature much, other than to mark tweets that contained links I wanted to read later. (Unfortunately, this ended up being a dustbin I never managed to revisit.)

But, after I began using a smartphone app as my Twitter client, I could set the app to give me immediate notification when one of my tweets was “favorited” by someone else. I then realized that I began using favorites differently, as if everyone else also had their client set like mine. Tapping the little “favorite” star became a way to give a sort of “nod” to the tweet’s writer. I set a mode that created a new invariant, and it sets my expectation that everyone else is in the same umwelt, or environmental perspective, that I’ve created for myself. I might be having a half-imagined moment of conversation with the other person and never know it.

The rules-architecture of a social platform has a huge impact on determining what kind of places it instantiates and what sorts of conversations and actions happen there. The rules we make manifest in social software require our explicit, careful attention. Even a simple Listserv mailing list has rule structures that have been shown to encourage and allow trolling and “flame wars” because they lack the social environmental feedback mechanisms available to us in nondigital conversation.[347]

In his book Here Comes Everybody: The Power of Organizing Without Organizations (Penguin), Clay Shirky uses an ecological analogy that nicely speaks to the environmental perspective we’ve been exploring:

When we change the way we communicate, we change society. The tools that a society uses to create and maintain itself are as central to human life as a hive is to a bee. Though the hive is not part of any individual bee, it is part of the colony, both shaped by and shaping the lives of its inhabitants. The hive is a social device, a piece of bee information technology that provides a platform, literally, for the communication and coordination that keeps the colony viable. Individual bees can’t be understood separately from the colony or from their shared, cocreated environment. So it is with human networks; bees make hives, we make mobile phones.[348]

Mobile phones certainly are a factor. Just as the invention of elevators helped make skyscrapers a possibility, a whole new networked-device category enables new structures and rules that accommodate new sorts of activity.

Regardless of device, though, place structures can still result in different sorts of conversations. An often-mentioned difference between Facebook and Twitter is that Facebook requires symmetrical friending—mutual agreement to be “friends.” But Twitter employs the looser asymmetrical following: I can follow someone, but that someone doesn’t have to follow me in return.

That doesn’t keep many people from obsessing about who is following them or not, but it does create a contextual expectation that differs from Facebook or other symmetrical connection platforms. Of course, on Facebook, this anxiety is only increased after users catch onto the fact that even its “Most Recent” mode for its News Feed is being filtered by a hidden, undisclosed algorithm; nobody really knows who is seeing their posts, or if they’re seeing all of them from their friends. When a label that sounds so solidly invariant turns out to be procedurally generated, it tends to make us question every other supposedly solid element.
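The structural difference between symmetrical friending and asymmetrical following can be sketched as two small data structures. Both classes below are hypothetical illustrations of the rules as described here, not models of either platform's real implementation.

```python
class AsymmetricGraph:
    """Twitter-style rule: following is one-directional."""

    def __init__(self):
        self.following = {}  # user -> set of users they follow

    def follow(self, follower, followed):
        self.following.setdefault(follower, set()).add(followed)

    def sees(self, reader, author):
        return author in self.following.get(reader, set())


class SymmetricGraph:
    """Facebook-style rule: a connection exists only after both agree."""

    def __init__(self):
        self.requests = set()  # pending (sender, receiver) pairs
        self.friends = set()   # frozenset({a, b}) for each confirmed pair

    def request(self, sender, receiver):
        if (receiver, sender) in self.requests:
            # The other side already asked, so this completes the agreement.
            self.requests.discard((receiver, sender))
            self.friends.add(frozenset((sender, receiver)))
        else:
            self.requests.add((sender, receiver))

    def sees(self, reader, author):
        return frozenset((reader, author)) in self.friends


if __name__ == "__main__":
    twitter_like = AsymmetricGraph()
    twitter_like.follow("me", "someone")
    print(twitter_like.sees("me", "someone"), twitter_like.sees("someone", "me"))

    facebook_like = SymmetricGraph()
    facebook_like.request("me", "someone")
    print(facebook_like.sees("me", "someone"))
    facebook_like.request("someone", "me")
    print(facebook_like.sees("me", "someone"))
```

In the first structure a relationship is a single action by one person; in the second it is a state that does not exist until two people have acted. That is the rule-level source of the differing contextual expectations.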

Extending Shirky’s analogy, we do not inhabit just one hive with one set of structures and rules. Although we’ve always, in a sense, lived in multiple “hives,” each with its own set of rules (home, work, school, club, and so on), we now need to be simultaneously present in them all. It’s like trying to play baseball, football, and tennis at the same time, while making breakfast and having intelligent conversations about each activity.

Throughout any given day, a regular person might be “present” and attending to conversations in any number of places:

§ A conversation about a project in a workplace email thread

§ A management discussion on an employer’s intranet

§ Keeping up with the news feed from friends and family on Facebook

§ Discussing professional topics with other practitioners on LinkedIn

§ Bantering with other fans of a sports team, under a Twitter hashtag

§ Engaging in photo-sharing on Instagram

§ Texting with family via phone SMS

This isn’t an extreme example; the chances are that if you’re reading this book, you’re simultaneously plugged into roughly this many places. Each has its own interaction design conventions, and each has its own architectural “rules of order” and structural constraints as well as its own cultural norms and nuances of expression. For example, you can’t assume a “favorite” in Twitter will be interpreted the same way as a “like” on Facebook, and your sense of humor on Instagram might come across as unprofessional on LinkedIn.

It’s important to remember, though: conversation isn’t only about “social software” or “social media.” Conversation has been the main activity of social context since long before the invention of cities, much less the arrival of the Internet. Software and its capabilities are new arrivals, but they affect the nature of the conversations we have as well as their content.[349]

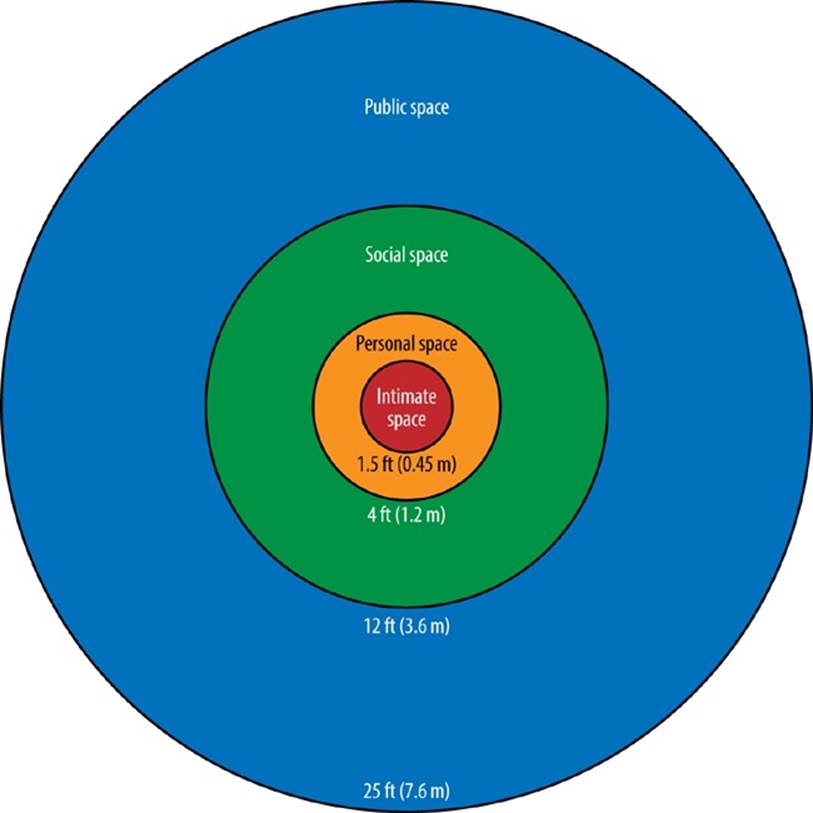

“Proxemics” as a Structural Model

Architectures are generally social environments, so they build on the behavior patterns humans embody in social life. These are tacitly constructed, but identifiable, and they can inform how we should design all sorts of places. In the 1960s, the cultural anthropologist Edward T. Hall described how physical distance affects social communication and coined the term proxemics. Hall believed that by studying the way people interact spatially, we can learn things not only about social activity but about how to create environments that better accommodate or even encourage different sorts of sociality, such as “the organization of space in...houses and buildings, and ultimately the layout of...towns.”[350]

Hall developed a model for distinguishing different levels of intimacy based on physical space. He stressed that the distances are not necessarily the same in all cultures, only that the emotional and social engagement between people can be correlated with physical distance, as illustrated in Figure 18-4.[351]

Hall’s model works nicely with an embodiment perspective because the distinctions emerge from what sorts of communication are physically afforded by various proximities. Additionally, the structure is spatially nested, which fits with how we perceive the environment. Here are the communication “levels” by layer:

Intimate

Allows whispering, embracing

Personal

Face-to-face and high-touch conversations with close friends and family

Social

Interactions with acquaintances or friends-of-friends, such as a handshake, and the ability to hear one another clearly at a conversational volume

Public

Performing or speaking in public, which requires a louder voice and larger gestures

Figure 18-4. Proxemics model of personal distance[352]

Because none of these interactions happen without motion and activity, Hall also broke each of these into “close” and “far” phases to indicate the transitional stages we move through as we socialize through our day.

This model isn’t all there is to proxemics, but it provides us a helpful structure for thinking through the way we create architectures online, both for social media and for other interactions, such as the overtures of a retail website to gather personal information, or make product recommendations.

We might learn from this that we should make structures that let users gradually build trust and intimacy with one another and the environment. For example, don’t expose personal information to social-platform “friends” by default; instead, let users establish distinctions over time. Or, don’t allow a digital agent to be too helpful too soon, because it can feel like presumptuous overfamiliarity (and the assumptions can often be flat-out wrong).

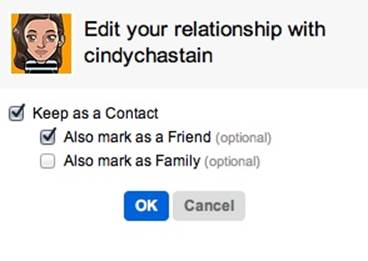

The way we define categories for social relationships determines fundamental structures of social architecture. Flickr has always had a simple, three-tier construct that roughly aligns with proxemic distance: Contact, Friend, Family (Figure 18-5). I assume others, like me, use these with some interpretive flexibility; for example, keeping some family members as contacts and some close lifelong friends as family. But Flickr lets us interpret this for ourselves.

Figure 18-5. Flickr’s dialog box for choosing among contact categories

When creating a Flickr account and becoming a new user of the platform, it’s pretty clear that these three simple tiers are nested: Family sees everything; Friends see a subset of that; Contacts see a subset of what Friends see. But, it would be even better if that were reflected here as a reminder.
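The nesting described here can be expressed as a simple ordering. What follows is a minimal sketch of the three tiers as this chapter describes them, assuming a strictly nested model; `Tier`, `can_view`, and `visible_photos` are hypothetical names, and Flickr's actual permission system may not work this way.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Relationship tiers, ordered from most distant to closest."""
    CONTACT = 1
    FRIEND = 2
    FAMILY = 3


def can_view(viewer_tier: Tier, required_tier: Tier) -> bool:
    """A closer tier sees everything a more distant tier can see."""
    return viewer_tier >= required_tier


def visible_photos(viewer_tier: Tier, photos: dict) -> list:
    """Return the titles a viewer may see; photos maps title -> required tier."""
    return [title for title, required in photos.items()
            if can_view(viewer_tier, required)]


if __name__ == "__main__":
    album = {
        "conference talk": Tier.CONTACT,
        "birthday party": Tier.FRIEND,
        "new baby": Tier.FAMILY,
    }
    for tier in Tier:
        print(tier.name, visible_photos(tier, album))
```

Because the tiers are ordered, a single comparison captures the whole rule. A set of categories that overlap along several dimensions at once has no such ordering for an interface to reflect back to the user.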

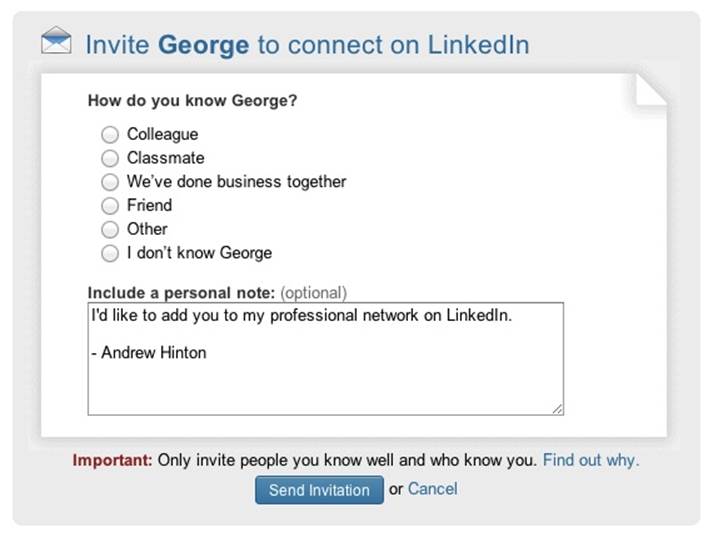

LinkedIn doesn’t have an especially clear proxemic progression (Figure 18-6) represented in its relationship categories.

The interface says, “Only invite people you know well and who know you,” but we know LinkedIn is a professional-profile-based site—so what does “know well” really mean in this context? And what if categories overlap? For example, what if we are both friends and colleagues? There are multiple dimensions at work here and not much context for why we’d choose one over another.

Figure 18-6. LinkedIn’s dialog box for categorizing a new contact

Hall also developed a model that explained two different ways that cultures tend to communicate and understand information: “high context” and “low context.” High-context cultures rely more on the surrounding signifiers and tacit information that informs a communication. These cultures count on consistent, invariant meanings that they assume everyone will “get” even if not spelled out explicitly. By contrast, low-context cultures need much more explicit communication; they can’t rely as much on tacit information to clarify meaning.[353]

Any social environment’s architecture can constrain its users to being more on the high- or low-context scale, in terms of how well they understand one another. Architectures that demand all communication and context-setting be only through highly structured, predefined limits can, perhaps ironically, manage to strain out all the “noise” that would otherwise add meaningful nuance to communication. The limits of these structures can stifle social connection in the name of engineered purity. At the same time, allowing a lot of high-context communication can open up room for chaos and cruft, making it difficult to monetize or manage a social software platform. These are tough challenges, either way, but the dynamics should be understood and worked through purposefully, not ignored.

Identity

Each digital place has environmental elements that nudge or outright constrain us into being different sides of ourselves—our personalities, interests, and modes of expression. Not unlike the Luna Blue Hotel example in Chapter 17—in which vacancy disappeared on the Web even while it had empty rooms—our identities are also partially constructed from the databases across the Internet. For everyone who knows us only by that information, we are what it says we are.

There are arguments and evidence from many disciplines, from philosophy to psychology, contending that a person’s individual identity is not a singular, stable thing. Whether one believes there is some immutable core to the human self, it is clear that such a core—if it exists—is not all that matters for defining who we are. Indeed, we don’t exist in a vacuum. We’re part of an environment, including many other people who experience us and have an impression of who we are. And at the same time, they influence us and our actions, beliefs, and preferences in ways we’re only recently fully coming to understand.

Back in the early days of the public Internet, researcher and theorist Sherry Turkle described how living online, in digital information structures, has made the facets of our identities explicitly delineated. This is in contrast to our offline selves, which have historically been defined more tacitly and subtly. In her 1995 book Life on the Screen: Identity in the Age of the Internet (Simon & Schuster), she explains how the Internet has brought us to a literal culmination of what postmodern theorists had been saying about identity for the prior several decades: the self is a “multiple, distributed system...a decentered self that exists in many worlds and plays many roles at the same time.” Turkle then goes on to show how these “worlds” and “roles” have gone from being tacitly emergent entities that don’t necessarily have clear boundaries, to being things we can enact in an explicitly defined way in specific, clearly bounded contexts. Using Multi-User Domains (MUDs) for her ethnographic field work, she shows how people explore different sides of themselves, acting out facets of their personalities in separate, clearly defined places: in one, a hyper-masculine and demanding warrior, while in another a fey, androgynous elf. She makes the point that this provides many options for habitation and life experience, leaving “real life” as just “one more window.”[354]

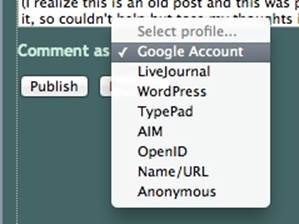

There are still places where role-players get to put on various personalities and digital bodies. But in one sense, this ability has become mainstreamed into the many social platforms people inhabit. We have online profiles at many different sites, each of which is engineered around a specific set of information about us. We’re often finding ourselves choosing which platform is best for a particular picture or personal moment, or choosing which of our identities to use for making a comment somewhere, as shown in Figure 18-7.

And these choices matter. For example, LinkedIn doesn’t ask what my favorite bands are or what five things I would take to a desert island, or what sort of people I want to date. It asks for business-related information such as work and education history—its context as a career-oriented place is largely defined by the semantic categories it gathers from us.

Figure 18-7. Blogspot gives users the ability to log in using one of a number of net-defined “identities” to comment on a post

So, LinkedIn defines the role one plays on LinkedIn, and in turn, that’s the identity one has when visiting there. The same goes for a dating site, or a personal journaling platform. Even an e-commerce site provides constraints for our role and identity; for example, Amazon, where the information categories constrain my role to being a consumer among other consumers, is a place where I can engage in conversations and self-expression, but always about products that I and others might consume.

What has changed since Turkle’s writing in 1995: there’s no longer a neat division between “real life” and life “on the screen.” Screens are now everywhere, as apertures into the pervasive, networked information dimension. There is no stepping away from the computer to live in “meatspace” versus “cyberspace” anymore.[355] These other contexts are always-on and invariantly available. It’s hard to think of any networked experience that is still a fully walled garden; they all have tendrils working their way into syndicated feeds, email alerts, live chats, and other channels.

Sometimes, a service is polite enough to ask if we want to connect one context to another, which is good. But these connections still come with complications.

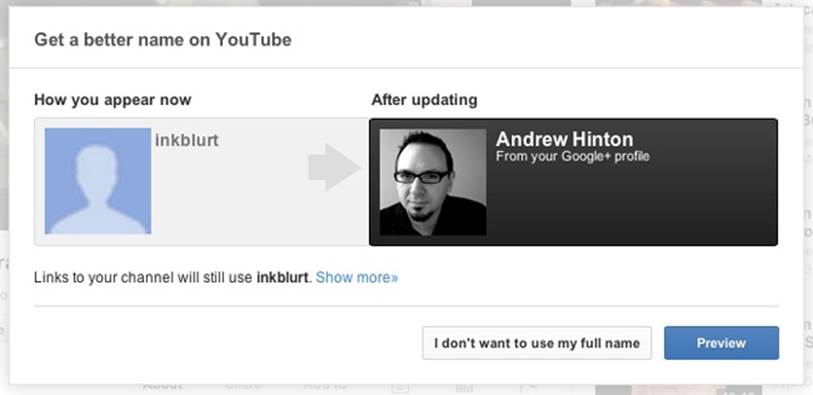

For example, lately, Google has been nudging users to consolidate their profiles, sweetening the encouragement by showing you how much “better” your identity will look and sound if you combine them, as is shown in Figure 18-8. Sounds fine on the face of it, but notice the disclaimer in the figure: “Links to your channel will still use [your YouTube username].”

Figure 18-8. Google suggests I merge my personal Google account and the Google Plus account I have from my employer’s Google Apps platform

One problem with an environment made of names is that you can’t easily just shirk one and move on to another. Then how will my identity be nested if I say yes to this? In which ways will it be merged and in which ways will it not? It’s not clear.

I’ve wrestled with a similar issue just within Google’s Gmail platform. As Google grew its services portfolio, I created a new “Google Apps” identity so that I could use Gmail with my own andrewhinton.com domain; however, when I did, I already had years of email archives and history with my existing email address at gmail.com. Then, I joined a consultancy that was using Google’s Apps for Business service. Google has integrated all three of these into its Plus social platform, but I’ve found no useful way to merge or coordinate the three identities. Even in the Google ecosystem, I am represented by at least three different profiles. On the Internet, it’s a challenge to be represented as a single object, with a single label, even when you want to.

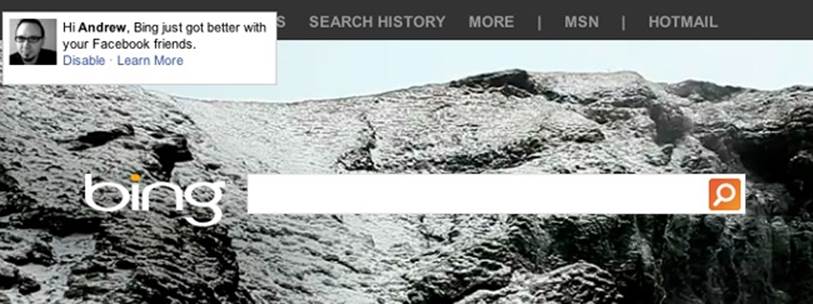

My Facebook identity is another version of me online; and around the time of the Beacon experiment, I realized my Facebook identity was being recognized on other sites, far beyond Facebook. That’s because Facebook recognizes me through embedded code in websites—such as online retailers or magazines—that have nothing explicitly to do with Facebook. So many places I go, I see my face or the faces of Facebook friends embedded out of context.

When I first encountered this pattern, it was disconcerting because it wasn’t clear if my face was something other people were seeing or if it was just showing up for me (see Figure 18-9). It’s one of those things that took explicit, reflective attention—and repeated exposure—to accept this new pseudo-presence of my “self” in all these other places. Even now, though, if it’s embedded in some way new to me, it can still take me off guard.

This contextual cloning and splintering of my online self is happening far beyond Facebook’s many connections. For example, my American Express account is embedded in the digital context of my accounts at Amazon and Delta Airlines. These digitally formed places are not merely supplemental to our identities, because we rely on them as primary infrastructure for our social contexts.

Figure 18-9. How is it that my Facebook profile is integrated into Microsoft’s Bing? The rules make sense to the network, but not to me

In one interview, a teenager explains what it cost a friend of hers to be absent, not from a “social network” site, but from a phone texting channel:

Not having an iPhone can be social suicide, notes Casey. One of her friends found herself effectively exiled from their circle for six months because her parents dawdled in upgrading her to an iPhone. Without it, she had no access to the iMessage group chat, where it seemed all their shared plans were being made.

“She wasn’t in the group chat, so we stopped being friends with her,” Casey says. “Not because we didn’t like her, but we just weren’t in contact with her.”[356]

At one time, having a listing in the phone book was an essential bit of semantic information, providing an interface between a person or business and civic life. If you weren’t in the phone book, you were disconnected from a huge dimension of social infrastructure. But now we need an account in every digital place where our friends, family, or customers expect us to be. If we aren’t represented in those places, we don’t exist in them.

These places can become so necessary in our lives that they can actually go from being pleasant to oppressive. As the currently reigning Übernetwork, Facebook serves again as a good example. Although Facebook’s user numbers have continued to increase, many people are finding that if they keep an account at all, it’s only out of necessity. They are afraid of disappearing entirely from the place where so much of what defines them and their community happens, but they consider it more of a chore than a delight.

One recent study found many factors in play for why Facebook has become a contextually complicated place. The report finds that teens can’t freely express their identities with their peers the way they might otherwise, because so many parents, teachers, and other adult community figures have joined Facebook, as well.[357] But even among peers, “looking good, both physically and in reputation, is a big deal.” There’s pressure to uphold a positive image, in part because there’s pressure for one’s shared information to be “liked” in order to know if it’s valued or appreciated by peers. Everything can be “watched and judged.”

Many teens are finding respite by creating accounts in other platforms, such as Twitter, Tumblr, Instagram, or Reddit. For many, there’s a clear awareness that it’s because those places have different architectures through which they can express themselves with less restriction and oversight, and with less pressure to comprehensively define so much of themselves for consumption by every “role” they play in their lives.

For example, Tumblr has exploded recently as a teen social platform. One teen in the study said he uses it more because, “I don’t have to present a specific or false image of myself and I don’t have to interact with people I don’t necessarily want to talk to.” That is, Tumblr is a different kind of place whose structures are less demanding about defining oneself or compelling a user to take so many actions to be part of the system.

As with Twitter, Reddit, and other less-demanding platforms, one benefit of Tumblr is that you’re not compelled to reveal your real name to viewers. You can experiment with what you’re interested in culturally and socially, and tell only trusted friends your username. A common usage pattern on Reddit is the “throwaway” username: users will create a temporary login just to ask a single embarrassing or private question, often actually putting some version of “throwaway” in the name itself. Reddit makes it easy to do this, or it wouldn’t be so common. The rules of the environment shape the behavior of its inhabitants.

A principle we keep returning to is that we’re part of a nested, evolutionary ecology. We are the way we are largely because of the structures and affordances of our environment. So, if that’s the case, all the stuff that makes up our identities (personalities, social connections, personal history, and so on) works the way it does with the assumption that the world around us works in a particular way, as well. Facebook has grown, in part, by providing mechanisms for every facet of our social lives. It sounds like a fine goal, until we realize that Facebook, in trying to be so many different places at once, disrupts our cognitive ability to distinguish what sort of place we are in, which in turn disrupts our ability to know which side of ourselves we should be presenting to others.

As other social platforms continue to innovate and expand their scope in order to grow their user base, they create similar disruptions. This social dissonance is not just in our heads but is directly coupled with the dissonance of structure in these environments. Our identities are coupled, for good or ill, with the structure of place. Unstable or collapsed context architectures result in unstable or collapsed identities.

Collisions and Fronts

The way social structures can collapse or be unstable isn’t a sign that we’re disingenuous or duplicitous; having these sides of ourselves is just how we are. Erving Goffman, one of the most influential sociologists of the twentieth century, argued that we naturally put on “fronts” that we use to control the way others perceive us in various facets of our lives. He framed these personas in theatrical terms: we have a “back-stage” self that is more relaxed and personally open, a “front-stage” self that is more controlled and cultivated for public or professional interaction, as well as a “core” self that is mostly internal, the way we relate to ourselves. And, of course, there can be various facets of each, depending on the situation one is in.

I suspect these fronts are natural adaptations to the complexity of human relationships. We’re usually not conscious we’re doing it, because it’s not something we plan to do; it’s just how we behave. This dynamic works just fine as long as our fronts align with the environment we’re in—acting in one way when among our best friends, in another way when at work, and in another when visiting elderly relatives. When in each of those contexts, there is unambiguous information specified in the environment informing us who we’re with, and where.

When Facebook’s Beacon was causing waves, sociologist and ethnographic researcher Sam Ladner explained that what was going wrong with Beacon (referencing Goffman’s concept) was what she calls a “collision of fronts.”

Facebook’s Beacon didn’t work because it forces people to use multiple fronts AT THE SAME TIME. If I tag a recipe from Epicurious.com, but I broadcast that fact to friends that perceive me to be a party girl, I have a collision of fronts. If my boss demands to be my friend, I have a collision of fronts. If I rent The Notebook on Netflix, and my friends think I am a Goth, I have a collision of fronts.[358]

Imagine if all the places you thought of as important places in your city or town were somehow merged into one place: at a smaller scale, it might be your bedroom, kitchen, and front porch; simultaneously, at a larger scale, it could be your workplace, your home, your gym, and—in a weirdly timeless way—your high school reunion, one that doesn’t last only a weekend, but goes on and on as you try conducting the rest of your life. In its need to grow without boundaries, Facebook collapses contexts by creating many doorways that feel as though they will take us to separate places, but they all drop us into the same big place at the same time.[359]

Soon after Beacon, Facebook added tools for creating and managing “groups,” but they weren’t especially good for privacy. A few years later, they overhauled what the platform meant by “groups” entirely, to allow more private management between smaller clusters of friends, but the settings for them (as well as for all the privacy controls) are hard to find and understand, in spite of Facebook’s almost-annual attempts to improve their usability. As late as 2012, Consumer Reports was calling Facebook’s privacy controls “labyrinthian.” Changing the rules so often only further confounded users.

For Mark Zuckerberg, Facebook’s creator, the labyrinth hit close to home in 2012 when his sister Randi discovered that a photo from her personal Facebook status feed was shared with an audience not only outside her own Facebook page, but on Twitter—outside of Facebook entirely.

Ms. Zuckerberg complained to the Twitter user (named Callie Schweitzer), saying that sharing her photo in such a way was “way uncool,” to which Callie responded as depicted in Figure 18-10.

Figure 18-10. A Zuckerberg brouhaha[360]

But according to the Buzzfeed article about this brouhaha, Ms. Schweitzer more likely saw it not because she was subscribed to Randi’s feed, but because she’s friends with Randi’s sister, who was tagged by name in the photo, which made the picture show up in the sister’s news feed, as well.[361]

There was a hearty round of schadenfreude among web dwellers when this occurred; people frustrated with the platform’s convoluted privacy controls were more than happy to see a member of Facebook aristocracy suffer from the same contextual collision that had vexed the common folk for years.

The problem arose in part because Facebook’s environment uses names in ways we don’t use them in regular conversation. When typing a name into a status or photo post, Facebook automatically looks for names in your friends list that match, and provides an auto-suggest-and-complete interaction. Facebook also makes it a default setting for users to allow being tagged in such a way, and for items tagged thusly to show up in the news feeds of friends of the tagged person—and it’s hard to tell that the tagged person didn’t post it herself.
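The tagging rule described above can be sketched as a small audience calculation. This is a hypothetical model, not Facebook’s actual implementation: the friend graph, the placeholder names, and the `audience` function are all assumptions made for illustration. It shows how a default that extends a post to friends of tagged people widens the audience beyond what the poster’s own friends list suggests.

```python
# Hypothetical friend graph; names and structure are illustrative only.
FRIENDS = {
    "ann": {"bea", "dee"},
    "bea": {"ann", "cal"},
    "cal": {"bea"},
    "dee": {"ann"},
}


def audience(poster, tagged, include_friends_of_tagged=True):
    """Return the set of people who can see a post.

    Assumed rule: the poster's friends always see the post; when the
    default is on, friends of each tagged person see it too.
    """
    viewers = set(FRIENDS.get(poster, set()))
    if include_friends_of_tagged:
        for person in tagged:
            viewers |= FRIENDS.get(person, set())
    viewers.discard(poster)  # the poster is not part of her own audience
    return viewers


# What the poster probably expects: only her own friends.
expected = audience("ann", tagged=[])
# What the assumed default produces once a friend is tagged.
actual = audience("ann", tagged=["bea"])
# People the poster never chose to share with.
unexpected = actual - expected
```

In this sketch, tagging `bea` adds `cal` to the audience even though `cal` is not one of the poster’s friends, which is the kind of contextual leak the Zuckerberg photo illustrates.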

A similar issue occurs on Flickr: the system publishes any pictures tagged with your name in a primary place in your profile. In the mobile app (Figure 18-11), tapping the Photos Of button reveals a gallery of such pictures; in my case, because I never get around to tagging pictures of me with my own name, these are nearly all pictures made by other people.

Figure 18-11. Flickr’s mobile profile presentation

Someone new to Flickr could easily assume the Photos Of area is actually curated by me—after all, I’m the curator of the other structures: Sets, Groups, Favorites, and Contacts. The convention in most social networks has been that the user controls what represents him in his profile. Adding to the confusion, these icons are all presented as if they are similar in kind—apples with apples—even though one is not like the others.

So, even though social platforms claim to be giving users ways to manage context, the walls and windows keep shifting; the rules are so slippery, we environmentally learn that we can’t trust key structures to be invariant. Like some of the teens studied by Pew, one way users have combatted this confusion is to just create multiple accounts, one for their “front-stage” self, and another for their “back-stage” persona—a practice Facebook actively condemns. According to Facebook’s official policies, multiple profiles can cause you to be exiled from Facebook:

On Facebook, people connect using their real names and identities. We ask that you refrain from publishing the personal information of others without their consent. Claiming to be another person, creating a false presence for an organization, or creating multiple accounts undermines community and violates Facebook’s terms.[362]

What this policy misses is the fact that people are not just one thing in all places and all times, among all people. Real “community” leaves room for the multiple fronts of one’s identity. It’s only because Facebook has created such a muddle within the shell structure of a singular profile that people have given up on making sense of it, and decided instead to create their own separately defined contexts for their social fronts.

The Ontology of Self

What we’re witnessing in social software platforms is a clash between different definitions of the self and what an identity actually is, not to mention what a “friend” is. Recall that the word ontology can be used in two different ways: first, it means the conceptual, human question of being, and what something “is”; and, second, it means the formal, technological definition of an entity (what attributes does it have that define what it is in the system?).

Digital logic leaves little or no room for the tacit nuances of organic, nondigital life. To achieve anything even close to the rich complexity of natural human meaning is one of the most challenging things computer science can attempt—hence the still-awkward interactions we have with voice-controlled computers, or the erratic usefulness of “natural language search.” Those capabilities require many expensive layers of artificial intelligence, which is still far from being consistently reliable outside of narrowly defined use-cases.

Facebook puts a lot of the work on users’ shoulders to figure out how to make the many dimensions of one’s identity fit the very few “slots” provided by its data models and controlled vocabularies.

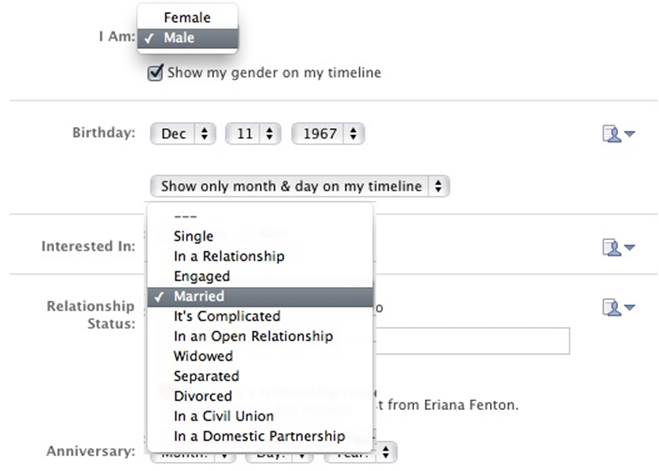

For example, Figure 18-12 shows the top portion of Facebook’s form for gathering one’s “Basic” profile information, as it stood circa 2012. I’ve exposed the selections in two of the drop-down menus to illustrate how Facebook was using a limiting ontology of identity.

Figure 18-12. Facebook’s previous gender and relationship choice lists

First, note gender. In the twenty-first century, much of the world has come to realize that we are gendered in ways that are far more complex than the binary setting of male or female, and that gender is (like identity itself) largely constructed by cultural and social context. It’s good to know that a few months after I first drafted this section, Facebook changed to a more inclusive approach for gender specification: the menu choices are Male, Female, and Custom; selecting Custom reveals additional fields, as demonstrated in Figure 18-13, with even the lovely nuance of pronoun choice.

Figure 18-13. Facebook’s updated Gender selection interface

Also note the drop-down selection for Relationship Status in Figure 18-12. The choices present a list of mutually exclusive categories that don’t accommodate the complexity of actual relationships. For example, what marriage isn’t “complicated” in one way or another? The list also implies that there is a sort of linear progression to a romantic relationship: from Single to “In a relationship” to Engaged and then to Married. (Let’s set aside the unsettling feeling we get if we extend that implied line through “It’s Complicated” and the rest.) But the truth is, a real romantic relationship doesn’t move in a linear fashion through these clearly defined states. Not to mention the more legally specific constructions, many of which are defined differently from one municipality to another.

And yet, for anyone who has dated and had even a semi-serious relationship in the age of Facebook, this drop-down list can be vexing. Why? Because Facebook has become an official part of how couples define their relationships. The changing of one’s relationship status has become part of the prevailing cultural environment, as much as wearing a partner’s varsity-letter jacket in high school or offering an engagement ring in a marriage proposal. Because there are already culturally conventional markers for engagement and marriage, those selections are somewhat less problematic than others. But the really difficult one is Single versus “In a relationship”—at what point in one’s dating life does an individual (or a couple) switch from one to the other? In analog, semantic life, this is a tacit inflection point that emerges uniquely for everyone. But here, it has to be a clearly defined signal—a flipped switch.

This issue seems trivial at first, but Facebook is such a major factor in how others in the world understand who we are that this choice has a great deal of pressure on it. I personally know people who have stopped dating because of arguments over this tiny semantic choice.

There’s an obvious question underlying this entire issue: Why does the platform require answering this question with a selection from a defined list? Why can’t there just be a free-text field, with perhaps a list of suggested phrases, the way Facebook added the Custom field for gender?

The answer is probably because the information isn’t only for regular users. The collected data needs to be structured as attributes for data-mining advertisers. Facebook is asking us to identify ourselves within the definitions of a map that is partly not intended for us. In doing so—as we rely more and more heavily on infrastructures such as Facebook to mediate our identities—they structure what and who we are to one another.
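The difference between a defined list and a free-text field can be made concrete with a minimal sketch. Everything here is an assumption for illustration: the `RelationshipStatus` enum holds only the labels named in the text, and the profile records and `count_by_status` helper are hypothetical, not Facebook’s data model. The point is only that a closed vocabulary aggregates trivially, whereas free text does not.

```python
from collections import Counter
from enum import Enum


class RelationshipStatus(Enum):
    # Only the labels named in the text; the full menu is not reproduced.
    SINGLE = "Single"
    IN_A_RELATIONSHIP = "In a relationship"
    ENGAGED = "Engaged"
    MARRIED = "Married"
    COMPLICATED = "It's Complicated"


def count_by_status(profiles):
    """Tally profiles per status value, whatever type that value is."""
    return Counter(profile["status"] for profile in profiles)


# Hypothetical profiles using the closed vocabulary.
structured = [
    {"name": "user_a", "status": RelationshipStatus.SINGLE},
    {"name": "user_b", "status": RelationshipStatus.SINGLE},
    {"name": "user_c", "status": RelationshipStatus.MARRIED},
]

# The same hypothetical users describing themselves in their own words.
free_text = [
    {"name": "user_a", "status": "seeing someone, early days"},
    {"name": "user_b", "status": "Seeing someone (early days)"},
    {"name": "user_c", "status": "married, mostly"},
]

structured_counts = count_by_status(structured)
free_text_counts = count_by_status(free_text)
```

Here the enumerated values group cleanly into two buckets, whereas the free-text answers produce three buckets of one each, even though two of them describe nearly the same situation. The structured version is easier to mine and segment; the free-text version is closer to how people actually describe themselves.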

So, on Facebook, filling out your personal profile isn’t just about telling other people about yourself on Facebook. It’s also connecting you with semantic information categories that can be structurally searched and analyzed in ways that would not occur to you. And why would it? It’s happening in an abstracted process outside of your perception.

Now that Facebook has introduced what it calls Graph Search, all those public factors are fodder for analysis to identify you in whatever ways searchers wish to interpret the data. People can use that information to profile users based on religion, gender, age, and even sexual orientation.[363] And because “Big Data” can be used to find patterns we would never dream of, these factors are discoverable through triangulation, even if users haven’t made those fields in their profile explicitly public.[364] It’s not just Facebook that’s using this sort of shadow-of-yourself analysis to target messages. The Barack Obama election campaign used these techniques with such skill that they had a better sense of how individuals would vote than the individuals had themselves.[365] And big-box retailer Target can accurately market baby products to customers before they have told anyone they’re pregnant.[366] Keep in mind, these last two examples aren’t driven only by web behaviors, but by data gathered from many other now-publicly-available sources. The information modes we’re familiar with (our everyday semantic utterances and bodily activities) are increasingly tethered to digital-information data stores, ready to be harvested.

Digital theorist, futurist, and philosopher Jaron Lanier has railed against such misappropriations of personal information. In You Are Not a Gadget: A Manifesto (Vintage Books), Lanier reminds us that the Shannon construction of information (as discussed in Chapter 12), by its nature, creates limitations in how we describe ourselves and our environment:

Recall that the motivation of the invention of the bit was to hide and contain the thermal, chaotic aspect of physical reality for a time in order to have a circumscribed, temporary, programmable nook within the larger theater. Outside of the conceit of the computer, reality cannot be fully known or programmed.

Poorly conceived digital systems can erase the numinous nuances that make us individuals. The all-or-nothing nature of the bit is reflected at all layers in a digital information system, just like the quantum nature of elementary particles is reflected in the uncertainty of complex systems in macro physical reality, like the weather. If we associate human identity with the digital reduction instead of reality at large, we will reduce ourselves.

The all-or-nothing conceit of the bit should not be amplified to become the social principle of the human world, even though that’s the lazy thing to do from an engineering point of view. It’s equally mistaken to build digital culture, which is gradually becoming all culture, on a foundation of anonymity or single-persona antiprivacy. Both are similar affronts to personhood.[367]

Lanier is pointing out the ways in which the digital mode of information can influence and ultimately warp the way we identify ourselves. Of course, a business is a business, and advertising is what drives revenue for Facebook and many other social platforms, which wouldn’t exist but for that revenue. So, the answer isn’t to vilify the business as a business. A good start would be doing a better job of providing transparency around user information, ideally giving users some level of agency in how their information is being used, other than having to leave the platform altogether.[368]

As designers of these inhabited, living maps, we need to realize how deeply we are affecting the lives of people who use what we make. The defined attributes we wrap around people (as well as objects, events, and places) that we might assume are only supplementary can easily become fundamental in their effects. Facebook is only one obvious example of these issues; they’re just as important when designing a corporate intranet or a patient database for a healthcare system.

Networked Publics

Digital places, structured by systems of labels and rules, are not just something we visit as an optional distraction anymore. And, when an environment becomes more of a requirement, it’s more about civics than mere socializing. Author and professor Clay Shirky states in clear terms how the structures and rules we create in these places affect our lives:

Social software is the experimental wing of political philosophy, a discipline that doesn’t realize it has an experimental wing. We are literally encoding the principles of freedom of speech and freedom of expression in our tools. We need to have conversations about the explicit goals of what it is that we’re supporting and what we are trying to do, because that conversation matters.[369]

We are co-inhabiting digital governance structures legislated by software engineers, counseled by marketers, advertisers, and corporate board members.

In her dissertation on social software, Taken Out of Context: American Teen Sociality in Networked Publics, social media scholar danah boyd (her capitalization) explains that there is not really just one “public.” Rather than saying “the public” it’s more accurate to refer to “a public” among many. She states, “Using the indefinite article allows us to recognize that there are different collections of people depending on the situation and context. This leaves room for multiple ‘publics.’” She explains that publics are not necessarily separate from one another: they overlap, and are nested with smaller publics inside larger ones. (From a Gibsonian perspective, this might suggest that people group together in an overlapping, nested way similar to how we perceive the environment; this would make sense, given that people are part of the environment, as well.) In addition, boyd mentions that there are also emergent collectives working against the grain of the status quo, referred to as “counterpublics.”[370]

Publics are shaped in part by how they are mediated, and boyd argues that “networked” publics are different from the “broadcast” and “unmediated” publics that came before; she says the proper frame for the structures and rules we put into the networked environment is architecture: “Physical structures are a collection of atoms, while digital structures are built out of bits. The underlying properties of bits and atoms fundamentally distinguish these two types of environments, define what types of interactions are possible, and shape how people engage in these spaces.”[371] In fact, citing William J. Mitchell’s City of Bits: Space, Place, and the Infobahn, boyd explains:

Mitchell (1995) argued that bits do not simply change the flow of information, but they alter the very architecture of everyday life. Through networked technology, people are no longer shaped just by their dwellings but by their networks (Mitchell 1995: 49). The city of bits that Mitchell lays out is not configured just by the properties of bits but by the connections between them.[372]

The way we use semantic function to make environments adds up to a sort of urban planning and architectural practice, and not merely in a metaphorical sense. This is architecture that we literally live in together.

To elaborate, boyd lays out four “Properties of Networked Publics” that make them different from the other sorts of mediated publics:[373]

§ Persistence: Online expressions are automatically recorded and archived.

§ Replicability: Content made out of bits can be duplicated.

§ Scalability: The potential visibility of content in networked publics is great.

§ Searchability: Content in networked publics can be accessed through search.

Additionally, “the properties of networked publics lead to a dynamic in which people are forced to contend with a loss of context.”[374]

In ecological terms, these are new invariant properties of our environment that don’t behave in the way in which our embodied perceptual system expects. As anthropologist Michael Wesch explained in Chapter 2 about the experience of looking at a webcam and trying to understand what sort of social interaction one is experiencing, we don’t have any gut-level grasp of what expression means in such a disembodied, wide-scaled context.

For an online environment like Facebook or Google’s Buzz and Plus, there are no intrinsic physical structures for us to rely upon for knowing where we are, where the objects we create (such as photos or status updates) are, and who can see them. The system has to simulate those structures for us, not only with graphical simulation of surface structures, but also with semantic relationships among labels.

It also has to build in structures for giving us a sense of others’ behavior, or attempts to meet or learn more about us; but such structures struggle to behave like physical social life. On LinkedIn, for example, there are mechanisms for knowing who looked at your profile (Figure 18-14), if you allow others to know you looked at theirs; it’s something that wouldn’t really exist outside of digital information.

Figure 18-14. Checking who viewed your profile in LinkedIn

Just because anything can be linked to anything doesn’t mean it’s the right thing to do. The environment might make perfect sense in its requirements and its engineered execution, but it isn’t truly habitable until, in the words of information architect Jorge Arango, it “preserves the integrity of meaning across contexts.”[375] It must make sense not just to the map itself, but to the people who live in it.

[344] Marshall McLuhan famously posited that “the medium is the message” in his 1964 book Understanding Media: The Extensions of Man (MIT Press).

[345] Thanks to Tanya Rabourn and Austin Govella for permission to bring this exchange into the book.

[346] SMS is actually the most widely used data channel on the planet; for much of the developing world, SMS is their equivalent of the Internet.

[347] Clay Shirky makes this and other surprisingly still-relevant points in his 2004 essay, “Group as User: Flaming and the Design of Social Software,” (http://bit.ly/1uzVZOZ).

[348] Shirky, Clay. Here Comes Everybody: The Power of Organizing Without Organizations. New York: Penguin Press, 2008:17.

[349] It means we talk about “content” more than we used to, because it’s semantic material that’s so easily detached from the origin. This means that we must explicitly plan and manage how that content is governed and published, as in the practice of Content Strategy.

[350] Hall, Edward T. “A System for the Notation of Proxemic Behavior.” American Anthropologist October, 1963; 65(5):1003-26.

[351] Some details gathered from the Wikipedia article on Proxemics (http://bit.ly/1t9Hl4c).

[352] Wikimedia Commons: http://commons.wikimedia.org/wiki/File:Personal_Space.svg

[353] Hall, Edward T. Beyond Culture. New York: Anchor Books, 1976.

[354] Turkle, Sherry. Life on the Screen: Identity in the Age of the Internet. New York: Touchstone, 1995:14.

[355] Turkle, in fact, came to see this, as well, and the point is part of her argument in her later book, Alone Together: Why We Expect More from Technology and Less from Each Other. She sees it as part of an unhealthy corrosion of human communication. Personally, I see it not as necessarily corrosive, but a sort of phase transition from one mode of community to another.

[356] “What Really Happens On A Teen Girl’s iPhone.” Huffington Post, June 5, 2013 (http://huff.to/1wiaBWx).

[357] Madden, et al. “Teens, Social Media, and Privacy,” Pew Research Internet Project, May 21, 2013 (http://pewrsr.ch/1w3flBK).

[358] Ladner, Sam. “What Designers Can Learn From Facebook’s Beacon: the collision of ‘fronts’,” Posted at copernicusconsulting.net November 30, 2007 (http://bit.ly/10iKhAW).

[359] “Facebook & your privacy,” Consumer Reports magazine June 2012. (http://bit.ly/ZELYHC).

[360] Screenshot by author.

[361] http://www.buzzfeed.com/jpmoore/mark-zuckerbergs-sister-complains-of-facebook-pri

[362] https://www.facebook.com/communitystandards/

[363] van Ess, Henk. “The Creepy Side of Facebook Graph Search” PBS.org Mediashift January 24, 2013 (http://to.pbs.org/1AoOYYV).

[364] Green, Jon. “Facebook knows you’re gay before you do,” Americablog, March 20, 2013 (http://americablog.com/2013/03/facebook-might-know-youre-gay-before-you-do.html).

[365] Issenberg, Sasha. “A More Perfect Union: How President Obama’s campaign used big data to rally individual voters, Part 1.” MIT Technology Review December 16, 2012 (http://bit.ly/1wwD6AN).

[366] Duhigg, Charles. “How Companies Learn Your Secrets,” The New York Times, February 16, 2012 (http://nyti.ms/1CQFQsQ).

[367] Lanier, Jaron. You Are Not a Gadget: A Manifesto. New York: Vintage Books, 2011:201.

[368] The question of whether people must agree to give up their personal data in order to participate in the platform is related to a bigger question: at what point does something like Facebook become a kind of monopoly utility, to which people have a right to access?

[369] As reported by Nat Torkington in his notes of Shirky’s talk (http://oreil.ly/1t9HBA9).

[370] boyd, danah. Taken Out of Context: American Teen Sociality in Networked Publics. Dissertation, University of California, Berkeley, 2008, p. 18.

[371] boyd, p. 24

[372] boyd, p. 25

[373] boyd, p. 27

[374] boyd, p. 36

[375] Arango, Jorge. “Links, Nodes & Order: A Unified Theory of Information Architecture,” (http://www.jarango.com/blog/2013/04/07/links-nodes-order/).