Understanding Context: Environment, Language, and Information Architecture (2014)

Part V. The Maps We Live In

Chapter 17. Virtual and Ambient Places

It’s funny how the colors of the real world only seem really real when you viddy them on the screen.

—ALEX, IN A CLOCKWORK ORANGE, ANTHONY BURGESS

Of Dungeons and Quakes

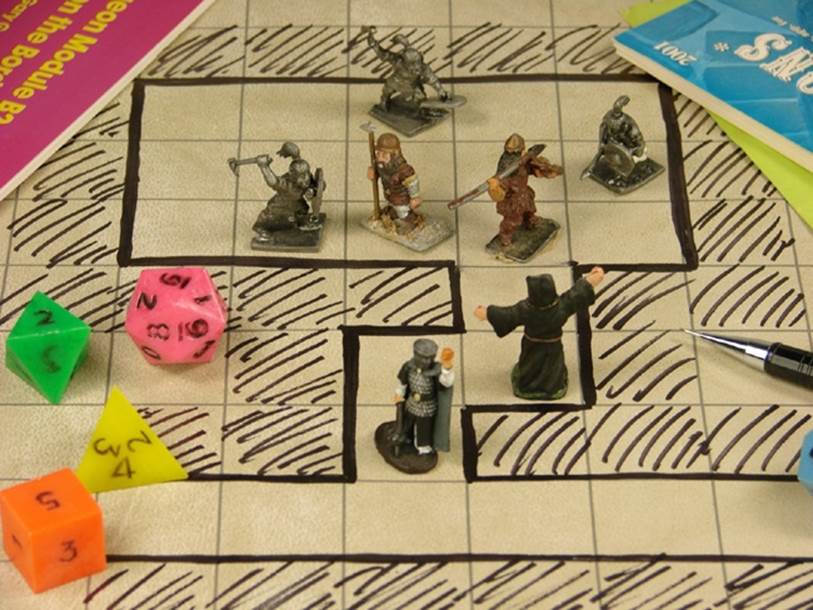

SEMANTIC INFORMATION CAN BE TRULY IMMERSIVE. Whether it’s an all-night dorm conversation or losing yourself in an engrossing novel, language can swallow our attention whole. When you add more layers to the semantic environment, meaningful experiences of place can emerge. Take a role-playing game such as Dungeons & Dragons (Figure 17-1). For the players, the physical surroundings—a friend’s kitchen table or the back of a hobby shop—recede into mist as the shared story of the campaign becomes more palpable and compelling. Even as a teenager, when I was an active player, I marveled at how all it took was some scribbling on paper, some rules, and some dice to create a fully engaging environment that my friends and I could inhabit until dawn.

Digital technology is turning the sorts of rules and maps we find in a tabletop game into actively inhabited virtual places as well as radically transformed physical ones. We find one example in text-based Multi-User Dungeons (or Domains), more commonly known by their acronym “MUDs” (and variants MUSH, MOO, and so on). Invented almost as soon as computers with command-line interfaces could be networked, MUDs establish immersive environments in which players can interact as they find treasure and slay monsters, or in some cases just socialize and build new places and objects.

Figure 17-1. A game of Dungeons & Dragons, in progress[318]

In one such “social” MUD, called LambdaMOO (MOO standing for “MUD, Object Oriented”), writer Julian Dibbell witnessed the power of that immersive experience himself and wrote about it in his 1999 book, My Tiny Life: Crime and Passion in a Virtual World (Henry Holt and Company). At one point, he tried creating a map to help him fully comprehend the MOO, but found that it couldn’t fully encompass all the wonders that had been created by LambdaMOO’s denizens. He had an epiphany: “It occurred to me that there was in fact one map that represented the width, breadth, and depth of the MOO with absolute and unapologetic reliability—and that map was the MOO itself.”[319]

LambdaMOO and other similar MUDs have a built-in scripting language that players use to create new parts of the environment; and the environment itself is often referred to as the game’s map. The MOO has nested structures, all described strictly with written language, including the rules that govern the environment’s programming. A typed command can create an object; when an entryway is added—an attribute that creates the ability to enter the object—it becomes a room. Rooms can be connected, and filled with other objects, which can be programmed to interact with players.
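To make that nesting concrete, here is a minimal sketch in Python of how an object might become a room and then hold other objects. It is not LambdaMOO's actual scripting language; the class name `MooObject`, its methods, and the cockatoo's reaction text are all hypothetical, chosen only to mirror the description above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # objects are compared by identity, like things in a world
class MooObject:
    """A generic thing in the world (hypothetical model, not real MOO code)."""
    name: str
    enterable: bool = False  # the "entryway" attribute
    contents: list[MooObject] = field(default_factory=list)
    exits: dict[str, MooObject] = field(default_factory=dict)
    reactions: dict[str, str] = field(default_factory=dict)

    @property
    def is_room(self) -> bool:
        # An object counts as a room once it can be entered.
        return self.enterable

    def add_entryway(self) -> None:
        self.enterable = True

    def connect(self, direction: str, other: MooObject, back: str) -> None:
        """Link two rooms with a pair of named exits."""
        if not (self.is_room and other.is_room):
            raise ValueError("only rooms can be connected")
        self.exits[direction] = other
        other.exits[back] = self

    def place(self, thing: MooObject) -> None:
        """Put another object (a prop or an avatar) inside this room."""
        if not self.is_room:
            raise ValueError(f"{self.name} cannot be entered")
        self.contents.append(thing)

    def react(self, verb: str) -> str:
        """Return the programmed response to a player's action, if any."""
        default = f"Nothing happens when you {verb} the {self.name}."
        return self.reactions.get(verb, default)


def build_demo_world() -> MooObject:
    closet = MooObject("Coat Closet")
    living_room = MooObject("Living Room")
    closet.add_entryway()
    living_room.add_entryway()
    closet.connect("out", living_room, back="closet")

    cockatoo = MooObject(
        "cockatoo",
        reactions={
            "feed": "The cockatoo gobbles the seed and squawks happily.",
            "gag": "The cockatoo falls silent, glaring at you.",
        },
    )
    living_room.place(cockatoo)
    living_room.place(MooObject("guest avatar"))  # a player is just another object
    return closet
```

Calling `build_demo_world().exits["out"].contents[0].react("feed")` walks from the closet into the living room and triggers the bird's programmed response. The point of the sketch is that rooms, props, and avatars are all instances of the same kind of thing, distinguished only by attributes and relationships.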

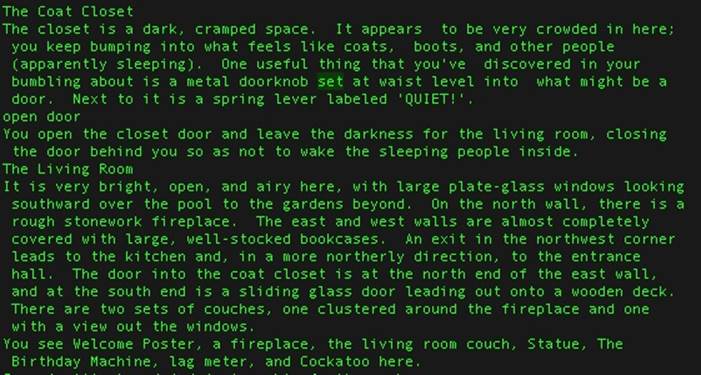

LambdaMOO in particular has a central gathering place—the Living Room, shown in Figure 17-2—where a loquacious cockatoo “object” is programmed to respond to actions such as being bathed, fed, or even gagged when it becomes too noisy. The player is represented as an avatar, which is essentially another object in the environment. The object-oriented approach to the environment works like a digitally reified, more strictly hierarchical version of James J. Gibson’s elements: objects, places, layouts, and the rest.

Figure 17-2. The Coat Closet and Living Room in LambdaMOO

In his LambdaMOO adventures, Dibbell found that the MOO, populated mainly by grad-school academics and computer scientists, was an emotionally significant place for its users, where they were exploring sides of their identity and social life that might have been impossible otherwise. In a storyline that threads throughout the book, Dibbell shows how the brutal violation of an in-game character by one or more hackers (going by the name “Mr. Bungle”) had an unsettling effect on the user whose in-game avatar was harmed. She was more surprised than anyone that the experience felt so traumatic that it brought her to tears; and the administrators who ran the MOO found themselves conducting a sort of Constitutional Congress to figure out how the MOO should be governed.[320] Reading the account now, one has to be struck by the questions the MOO leaders wrestled with, because they still sound so familiar and relevant for all our shared online environments, from intranets to social media platforms. The “cyber-bullying” and cruel “trolling” that have become epidemic in recent years all have early, awful seeds in the actions of Mr. Bungle.

Text-based MUDs and their ilk are still around and still have immersive power. But technology soon advanced to the point at which three-dimensional visual game spaces went mainstream. In the mid-to-late 1990s, I was obsessed with Quake, a genre-defining first-person-shooter video game. In particular, I was interested in the multiplayer variant that allowed players to compete in real time, in various versions of the game rules, from “Deathmatch” to “Capture the Flag.” At the time, it was cutting-edge technology. The studio that created Quake, id Software, invented techniques for game design, decentralized networking, open APIs, 3D rendering, latency handling, and countless other infrastructure innovations that we take for granted today, and which are in use far outside game software.[321] It was also one of the first games to inspire a massive online community outside of the actual game itself.

I’ll admit that I wasn’t a very good player. I ended up spending more of my time setting up and “modding” game servers, and designing websites for teams (Quake “Clans”), complete with real-time scoreboards and sprawling, threaded discussions. Some of these experiences are what formed my own foundational ideas about what it means to make information environments.

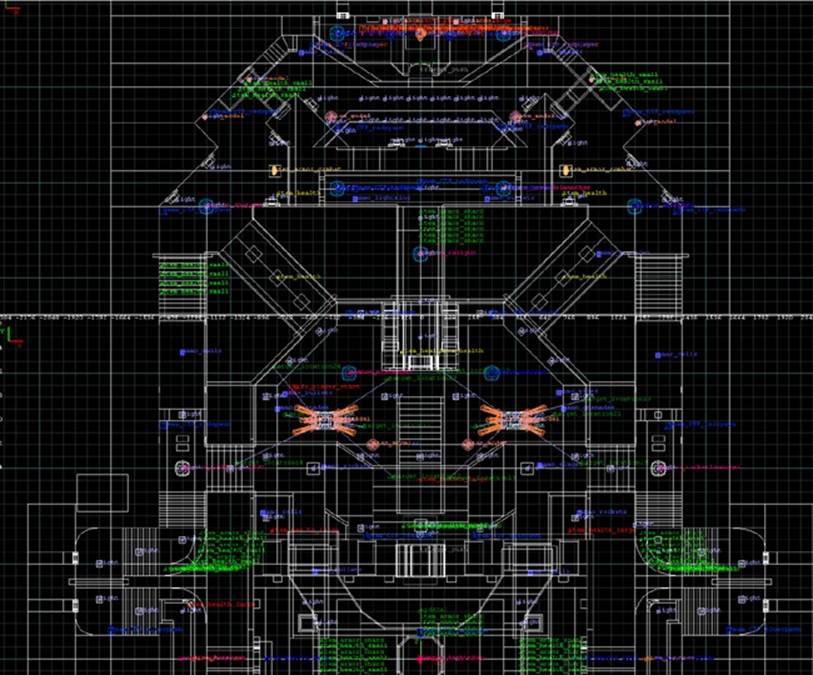

Quake’s action takes place in game levels referred to as “maps,” of which you can see a later-version example in Figure 17-3. The open approach employed by id Software made it possible for creative people all over the Internet to create their own maps and game variants, some of which became much more popular than the ones that shipped with the game.

Figure 17-3. A Quake “map” as it looks as a wireframe view in a map editor

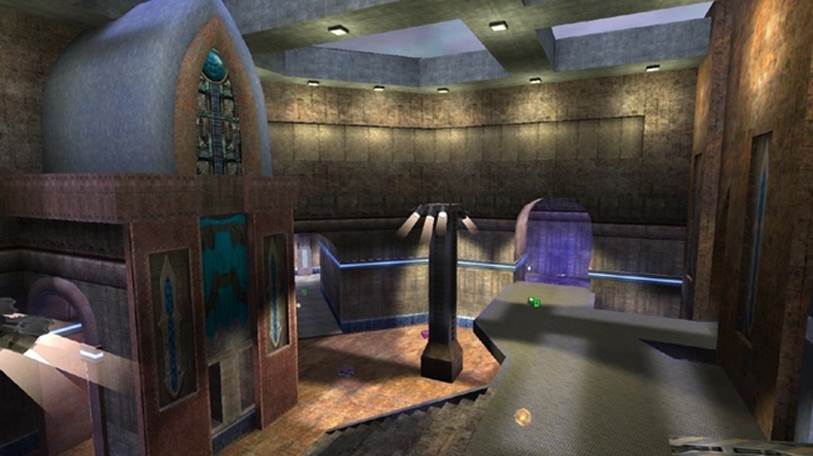

In the words of one pair of players I chatted with back then, while they were running practice sprints from flag to flag on a particularly challenging map, “We live here!” It was a self-deprecating jest about the amount of time they were spending perfecting their game, but it struck me then—and still does—as a fundamentally true statement. Whether it’s the literally architectural simulation of a Quake map (see Figure 17-4), the word-based simulation of LambdaMOO, or the more subtly place-making qualities of a website or instant messenger platform, our lives meaningfully “take place” in these environments.

Figure 17-4. How one room of a Quake map looks as rendered in the game (map designed by Tom Boeckx for Quake 3 Arena, a more recent version of the game)[322]

The Porous Nature of Cyberplaces

Every retail website, social network, email platform, corporate intranet and smartphone app establishes structure that we come to understand as places—because that’s how all terrestrial creatures self-organize their understanding of the environment. Even if we resist calling them cyberspace, they are certainly cyberplaces; and there are more of them than ever. Each one is its own more-or-less contained contextual experience within the vast array of nested contexts in the environment. Although they seem to be contained within the screens of the devices we use to access them, that sense of containment is only an aspect of their interface. Most digital places are actually porous and connected.

Behind the scenes is an ocean of unfettered digital information, potentially connecting that site, app, or platform with anything else on the Internet. Even in the late 1990s, multiplayer Quake servers were not only generating the immersive experience of the game, but were also spewing real-time scores, player names, and network information to server-browsing platforms, which were then connected to all manner of game-finding applications, websites, scoreboards, and more. That was a novel concept at the time, but not anymore. If I use my bank’s smartphone app to transfer money from my account, my spouse can receive an immediate text message informing her about the transaction. If I post an update to Twitter, all sorts of third-party platforms can add it to their data stores or syndicate it into mash-ups.

There are offline interconnections, as well. Whereas online environments were once seen as a virtual escape from reality, they’re now mostly a supplemental dimension we use to enhance and expand our physical, offline lives. So, digital placemaking is equally a way to close the distances of time and space between ourselves and our families, friends, and coworkers.

Outside of game platforms, we most often see structures that use built-environment tropes as metaphors rather than simulated buildings. Google Plus uses “circles,” Facebook uses “groups,” and Basecamp uses “projects”—all of these are labels standing for structures that instantiate places, defined by how they organize our online environment.

Figure 17-5 shows a feature in Microsoft’s Windows Phone 8 operating system called “Rooms.” The creators of the feature realized the phone could do a better job of helping users establish a sense of place for organizing their communication and tasks. In a post on the official Windows blog, Juliette Guilbert, a member of the design team, explains:

It definitely takes a village, but our village needs all the help it can get. Now that my husband has his new [Windows phone], we’ve got it—in the form of Rooms, a new feature in Windows Phone 8. Instead of flinging frantic texts around, we can check the room calendar to see who’s on deck. We can start a group chat so everyone’s on the “What the heck is happening?” thread together. And when I add something to the grocery list or cancel a dentist appointment, the updates sync to my husband’s phone. He’s got the room pinned to Start, so its Live Tile even alerts him to the changes.[323]

Although designers might think of Rooms as a metaphor, it’s important to remember that users will likely take the label at face value. Because this is a relatively new feature, as of this writing, it remains to be seen just how successful it will be.

Figure 17-5. Three panels of the Windows Phone 8 Rooms feature[324]

These building-like metaphors have a long history online. LambdaMOO had “rooms” in which people could congregate, create objects, and collaborate. Since the beginnings of the Internet there have been applications with which people can visit digital places, such as IRC, Listservs, and UseNet. But in the mobile smartphone space, this attention to persistently shared, private placemaking is a more recent development.

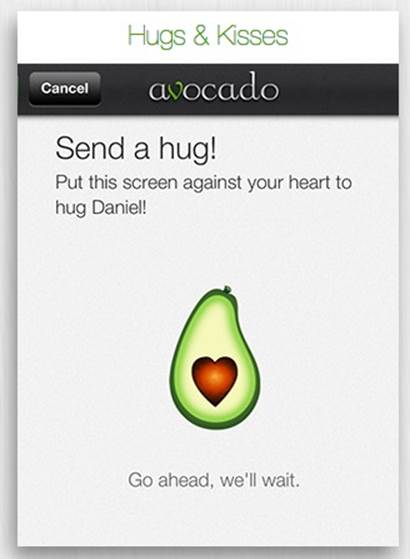

Figure 17-6 presents another example of private placemaking called Avocado, a smartphone app that creates a special place for a couple to share messages, photos, lists, and a calendar.

The couple shares the same password for the app: even though it’s just a string of characters, a password is a significant semantic object that represents intimacy, similar to sharing keys to one’s home. (In fact, research shows that passwords are generally becoming tokens for intimacy, especially among young people.)[325] There’s a strong sense of connection that comes with that one bit of structural design. Other embodied architectural features of Avocado include the following:

§ Interactions go beyond just pictures and words by directing users to perform bodily behaviors for some messages: kissing the screen for a kiss, or pressing the phone to one’s chest to send a “hug.”

§ The “settings” and “profile info” of the app are about the couple, not just the one user; this further establishes the place as a shared one, equally owned by both users, even though it has instances appearing on separate devices.

§ Even though you can log in to the service’s website and use all the functions there, it’s really optimized for the phone, which we treat more intimately, as an extension of ourselves, than we do desktop or laptop computers.

Figure 17-6. The architecture of the app has design choices that shape the nature of the place it instantiates[326]

According to its creators, “The move to more intimate applications is only natural, as maturing platforms like Facebook and Twitter lack functionality to provide real private sharing.”[327] It’s an interesting statement, given that both Facebook and Twitter provide mechanisms for making part or all of one’s profile private; but the simplicity of having one app that equals one place, without any confusing privacy settings to configure your own structures, is part of the merit of the Avocado experience. Yet, even as privately constructed as Avocado is, it’s still created to be a supplemental extension of a nondigital relationship. Shopping lists, calendars, and other shared tools are not about your “Avocado Life,” but your “Real Life.”

In addition to supplementing our friends-and-family life, digital places also augment our civic life. In the United States, controversy swirled over the launch of the website Healthcare.gov—the primary vehicle for connecting United States citizens with the services provided under the new healthcare legislation known as the Affordable Care Act. Upon launch, what many people discovered was a broken system that couldn’t accommodate their needs, as illustrated in Figure 17-7. It was not just because of system overload, though; it was because the backend infrastructure and business rules driving it hadn’t been sorted out yet.

Figure 17-7. What millions of users saw when the ACA national website launched

Eventually, the problems were fixed, but the faulty launch highlighted a watershed moment in American civic life: it was the first time a government program of that massive scale relied almost exclusively on a digitally rendered place—the website—as the infrastructure for implementing sweeping legislative change. Even if citizens called by phone, representatives relied on the same site infrastructure. It wasn’t an interstate system, made of concrete and steel; it wasn’t hundreds of ACA offices established in federal buildings across the country; it was a website that the government required people to “go to” in order to access the service.

Digital places are part of our entire environment, including core civil infrastructures, such as healthcare, which accounts for almost a fifth of gross domestic product (GDP)[328] in the United States. They are nested among and within one another in ways both overt and hidden. The launch of the ACA site has to do with context at a national scale—defining the kind of place in which a country’s citizens live.

When we look at these examples closely, we realize that they are all actually language in various forms—semantic information, enabled by digital technology, resulting in new objects and places in our environment. We need them to behave in ways that make sense—which means they need to “make place” in a sensible way.

VACANCY AT THE LUNA BLUE

The physical reality of a place is hard to separate from the language we use to talk about it; and when that language is turned into the machinery of software and networked systems, it can have a transformative effect on the “real” place. In a multiplayer game, a database error only interrupts the fun. However, when we depend on massively multiuser environments for real-life commerce, a bug can have more dire consequences. The Internet is, in essence, a massively multiuser environment, with many structures and rulesets establishing objects, places, and digital agents. Basically, we all live in a giant MUD together now.

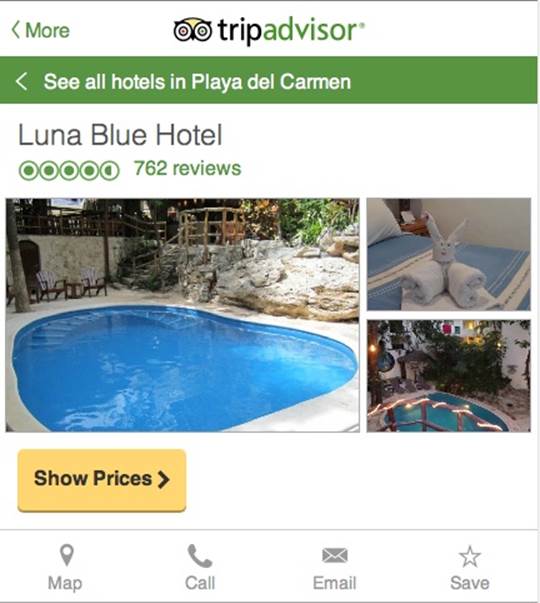

Consider the Luna Blue Hotel: an 18-room facility on the Mexican coast, near the island of Cozumel. The proprietors of this friendly, family-owned hotel with a great reputation were dismayed to discover that Expedia, a large gorilla in the travel-services jungle, was showing their hotel with “No Vacancy” even though they had plenty of rooms.[329]

Because Expedia is such a huge player in the travel space, the no-vacancy status spread all over the Web to other sites where people discuss or research where to stay when traveling to the area. These other sites included big travel services affiliated with Expedia, such as TripAdvisor (Figure 17-8) and Hotels.com, all of which eventually showed up as metadata in Google search results. A single error had a domino effect of erasing the hotel’s availability from the layer of reality that mattered to it most. The ongoing saga went viral on Reddit and other sites, but Expedia’s information about (and treatment of) the hotel only got worse over time, according to the hotel’s owners.[330]

Figure 17-8. The Luna Blue, as shown via the TripAdvisor mobile website

Places are what they are due to all sorts of contextual information. In this case, an actual, physical place (with buildings, and tiki torches, and everything) was contextually compromised because the semantic map of the Web became distorted. This is just one example of how physical context can be usurped almost entirely by semantic context when digital-information systems create structures so pervasive and global that they make the semantic information the “reality of record.”

The architectural issues here go far beyond the structure of a single website. They arise from the relationship between the backend systems and databases, the business rules instantiated in those systems, and how all that hidden, digital logic ends up being displayed in the language that’s wrapped around a specific, physical place, like the Luna Blue. It calls into question the ontology of “Vacancy” as defined in who knows how many black boxes strung across the Web.

Information architecture can’t concern itself with only the surface labeling within a particular context—it has to consider the cross-contextual meaning of such a label, and what rules behind the scenes might change what the label signifies. The word “vacancy” stands in for an immensely complex system of logistics and commercial logic. The business rules and technological underpinnings that drive the appearance of that label have to be part of an information architecture practitioner’s consideration.
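A small sketch can show how one label might mean different things in different contexts. Everything in it is hypothetical: the `ChannelFeed` class, the allotment rule, and the site names are assumptions made for illustration, not a description of how any real travel service computes or shares availability.

```python
from dataclasses import dataclass


def physical_label(total_rooms: int, occupied_rooms: int) -> str:
    """The label as the front desk would compute it, from real rooms."""
    return "Vacancy" if total_rooms - occupied_rooms > 0 else "No Vacancy"


@dataclass
class ChannelFeed:
    """What one hypothetical sales channel believes about a hotel."""
    hotel: str
    allotted_rooms: int  # rooms the channel thinks it may sell
    booked_rooms: int    # rooms it has already sold from that allotment

    def label(self) -> str:
        # Assumed business rule: the label reflects the channel's own
        # allotment, not the hotel's physical inventory.
        remaining = self.allotted_rooms - self.booked_rooms
        return "Vacancy" if remaining > 0 else "No Vacancy"


def syndicate(feed: ChannelFeed, downstream_sites: list[str]) -> dict[str, str]:
    """Downstream sites copy the upstream label without asking the hotel."""
    return {site: feed.label() for site in downstream_sites}


# An 18-room hotel with 6 rooms occupied, but a channel whose record
# (through a data error, say) holds an allotment of zero.
front_desk = physical_label(total_rooms=18, occupied_rooms=6)
feed = ChannelFeed("Hypothetical Hotel", allotted_rooms=0, booked_rooms=0)
published = syndicate(feed, ["review-site", "booking-site", "search-snippet"])
```

Here `front_desk` and the values in `published` disagree even though both are spelled with the same word. The label on the surface is identical; the rules that produce it are not, and only one set of rules is visible to the traveler.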

Augmented and Blended Places

Even though we experience them as immersive places, digital environments are still nested within our physical environment. Smart homes, RFID-tagged retail merchandise, mobile airport check-ins, GPS-enhanced cars, and even roadways bristling with digital signs and billboards mean that semantic information is now dynamically and actively engaged in our entire environment, not just static marks on surfaces.

Just as our smartphones are making us “cyborgs,” any physical place is potentially also a “cyberplace,” shaped by the maps of information we engage through the digital systems pervading our surroundings—the digital agency of the objects and surfaces that make up the Internet of Things.

Back in 2007, I took my daughter, Madeline, to the American Museum of Natural History in New York City (see Figure 17-9 and Figure 17-10). One of the most fascinating exhibits was the Hall of Biodiversity, where a huge wall has thousands of species arranged in a sort of taxonomy of taxidermy. Oddly, though, they don’t have labels. Instead, maps and digital interfaces supply the semantic scaffolding for understanding the exhibit.

Figure 17-9. The Hall of Biodiversity at the American Museum of Natural History in New York City (photo by Ryan Somma)[331]

The Hall has a row of kiosks with which visitors can navigate rich information about the creatures mounted above. Carefully designed displays of printed taxonomical hierarchies blend with digital displays of narrative content and the sensory flood of wildlife.

This carefully orchestrated information environment relies on the cognitive abilities of visitors: the perception-action looping and language interpretation that happen simultaneously among physical and semantic information modes. The “glue” that pulls it all together is enabled by the digital information that drives the interactive interfaces. Keep in mind that the museum is a controlled environment, where the entire structure is created for a singular purpose. Architecture, interior design, and exhibit design can work together to establish a bubble-world in which everything integrates coherently. That’s different from most of the world: the uncontrolled, uncurated one where we are expected to design and launch products and services.

Figure 17-10. My daughter, Madeline, learning about natural history through cross-media interaction, on our trip in 2007

In the wild of noncurated places, there can still be fascinating transformations. Supermarket company Tesco created a similar wall-of-objects, which you can see in Figure 17-11, but in this case, the objects are two-dimensional simulations: wall-sized posters that look like store shelves full of products. Each product has a QR code that customers can scan with their phones to purchase the item through an app and have it delivered to their doors after they’ve arrived home.

This environmental innovation takes advantage of a real, contextual insight: subway passengers have to stand and wait for trains, and they are often in a hurry to get to or from home. In addition, they often have a list of things they should get at the store rattling around in their heads. Cleverly, these simulated store shelves take the context of customer behavior into account, bringing the store to the customer. This novel idea reportedly increased sales for Tesco’s Home Plus brand by 130 percent.[332]

Figure 17-11. Shoppers scan simulated products for home delivery, while waiting for the subway[333]

Unlike the museum example, this is not a fully curated, choreographed environment. Yet it transforms one context into being another at the same time.

And the displays adroitly take advantage of the new objects now part of the environment: smartphones and persistently available mobile network access. It’s the nesting of the posters within those other environmental invariants that makes them what they are. Otherwise, they’d just be pictures of dish soap and soft drinks.

Both the Tesco and the museum examples offer places that immerse us in contextual layers, with physical and simulated-physical information. They use whatever manner of technology, language, and layout available to them, to more fully engage us in an experience, nudging us to act in ways that merely reading about sea life or grocery products would not.

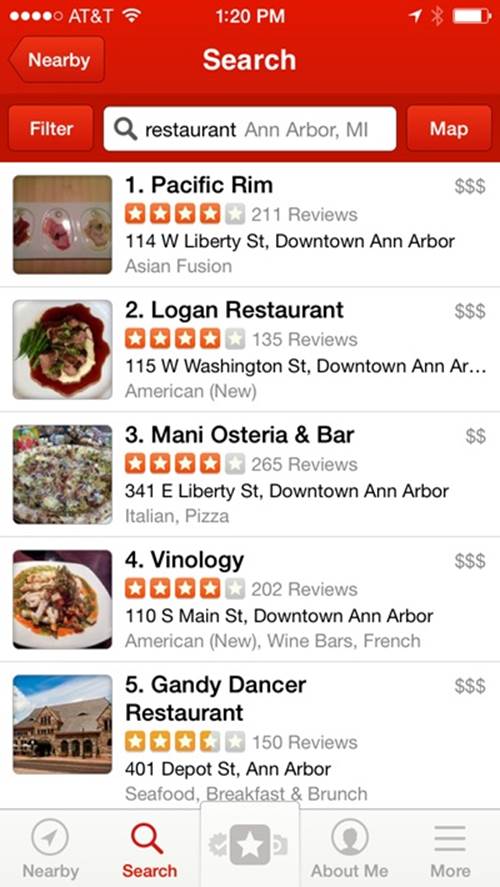

In a sense, the smartphone potentially turns any environment into a richly interactive, semantically layered place. For example, take the relationship between restaurants and Yelp (Figure 17-12), the social reviewing platform that augments our understanding of the places of business in our environment.

Figure 17-12. Yelp’s search-results view, showing summary contextual information about restaurants in Ann Arbor, MI

Because Yelp provides ready contextual information for independent restaurants as well as chains, the reputation-information playing field is being leveled. Wherever Yelp is in heavy use, national-chain restaurant popularity has decreased, yielding ground to local, independent restaurants. Additionally, a one-star increase in an independent’s rating can raise its revenue by up to 9 percent, a benefit that the chain restaurants don’t experience.[334] Adding a digitally powered semantic-information dimension to the environment shifts the entire marketplace of restaurant-going.

Retail businesses are also taking advantage of the mobile viewport to the information dimension. Wegmans, a regional chain of upscale supermarkets, has a mobile application that shoppers can use to read Yelp-like reviews of products as well as assist in finding a product’s aisle location in a specific store, as demonstrated in Figure 17-13.

This feature might not seem all that advanced, but it takes enormous coordination of a retailer’s infrastructure to accomplish this trick. The Wegmans app also includes other capabilities, including an extensive recipe database and a shopping list feature. It’s a great example of how a retailer is adapting to new expectations by providing tools that address the situational context of grocery shopping, not just the groceries alone.

There have been recent forays into literally merging the digital dimension with the physical. Yelp was also one of the first consumer mobile apps to add augmented reality (AR) to its platform in a feature it calls “Monocle.” As Figure 17-14 illustrates, with Monocle, Yelp uses the phone’s camera, GPS, and motion sensors to show a layer of review information over the screen’s live, digital picture of the physical environment.

The AR capability certainly provides a captivating level of blended information. But, as is the case with the virtual-reality version of cyberspace, we find that this layering effect is more of an edge case. Simply reading the reviews in their regular, non-AR format is satisficing enough for most of our needs.

Figure 17-13. The Wegmans app shows the Aisle/Location of a specific product within your current store as well as product reviews

Figure 17-14. Yelp’s Monocle feature, layering thumbnail review information over the surrounding street

Google Glass (see Figure 17-15), the search giant’s well-hyped “wearable” device, is essentially a way to provide AR all the time, without having to hold a smartphone up to our faces.

Glass has been controversial for a number of reasons, one of which has to do with privacy. Because it can record pictures, video, and audio, it raises questions about whether the device too easily breaches the walls that we assume exist in social life. It’s not the ability to record that sparks the concern, but the fact that Glass is meant to be worn continually like regular glasses and can be activated without the physical cues we’re used to seeing when someone is about to take a photo or make a video with a conventional device or smartphone. The invariant events we count on to signify the act of recording are dissolving into the hidden subtleties of digital agency.

Figure 17-15. One feature of Google Glass is an AR function, layering the view with supplemental information[335]

The capability of Glass to “read” everyday gestures as triggers highlights how context can be several things at once—a coy one-eyed blink at someone across a room can also be a shutter-button press. In linguistics terms, a signifier intended to signify X is interpreted by another interpreter to signify Y instead. A speck of dust in the wearer’s eye can trigger the digital agent without the unwitting wearer’s consent—physical affordances interpreted as semantic function by digitally influenced devices.

This is not unlike the hidden rules that caused controversy with Facebook Beacon, whereby a common action (a purchase on a separate website) could be recorded and turned into information of another sort, and unwittingly broadcast to one’s “friends.” Except, when it comes to Glass and other trigger-sensing objects, the boundary crossed isn’t just between websites, but between the dimensions of our physical surroundings.

What this means for information architecture: the semantic functions we use to design information environments are no longer just hyperlinks contained in screens. They can now involve any action that users might take in any place they inhabit. It’s an important overlap between interaction design and information architecture, where object-level interaction has potentially massive ripple effects across oceans of semantically stitched systems. As Peter Morville tells us in Ambient Findability (O’Reilly), “(T)he proving grounds have shifted from natural and built environments to the noosphere, a world defined by symbols and semantics.”[336] Language is the material for building what we need to make sense of our new environs.

The implications of how those interactions are nested in the environment (how they create cross-contextual systems of places, objects, and events) are an architectural concern. That is, the concern is akin to Wurman’s mission for information architecture: “the creating of systemic, structural, and orderly principles to make something work.”[337] Whether it’s a pair of science-fiction eyeglasses, a living room gaming system’s gesture-sensing interface, or a grocery store’s geolocation feature, all these technologies are changing what it means to use information to make places, and the systems that make up our shared environment. In aggregate, they fundamentally reshape how our environment works.

The Map That Makes Itself

Rules and digital agency impart the ability of the map/territory to make more of itself. One way this happens is through something called procedural generation, which refers to the algorithmic creation of structure and content. Procedural generation is especially popular in video games, in which it can make a unique map for each played instance. Unlike older video games, where each level was the same each time and part of mastering the game was about remembering the patterns of each level, procedurally generated games make players rely on perceptual-reactive skills more like those needed for new territories in the real world. SimCity and The Sims creator, Will Wright, made use of procedural generation in the game Spore, and it’s also behind the chunky-but-weirdly-natural environments found in the poignantly immersive Minecraft, shown in Figure 17-16.[338] Entire unique continents can grow with little or no user input. Minecraft can randomly generate terrain, or the user can specify parameters that the game uses as a “seed” to create maps with preferred features, such as a snowy mountain range or jungle biome.

Figure 17-16. Minecraft world generation is procedural, but can be “seeded” to create essentially the same structures from one world to the next, with slight variations in surface detail
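The idea of a seed is easier to see in a toy example. The sketch below grows a one-dimensional terrain profile using midpoint displacement, a simple, well-known procedural technique. It is not the algorithm used by Minecraft or Spore, and the function name `generate_terrain`, its parameters, and the rendering thresholds are assumptions made for illustration.

```python
import random


def generate_terrain(seed: int, size_exp: int = 4, roughness: float = 0.6) -> list[float]:
    """Return 2**size_exp + 1 height values grown from a single seed."""
    rng = random.Random(seed)  # all randomness flows from the seed
    n = 2 ** size_exp
    heights = [0.0] * (n + 1)
    heights[0] = rng.uniform(0, 1)
    heights[n] = rng.uniform(0, 1)

    step, scale = n, 1.0
    while step > 1:
        half = step // 2
        for left in range(0, n, step):
            # Each midpoint is the average of its neighbors plus a random
            # nudge; the nudges shrink as the detail gets finer.
            average = (heights[left] + heights[left + step]) / 2
            heights[left + half] = average + rng.uniform(-scale, scale)
        step = half
        scale *= roughness
    return heights


def render(heights: list[float]) -> str:
    """Draw the profile as one character per column (arbitrary thresholds)."""
    symbols = []
    for h in heights:
        if h < 0.2:
            symbols.append("~")  # water
        elif h < 0.9:
            symbols.append("_")  # plains
        else:
            symbols.append("^")  # mountain
    return "".join(symbols)
```

Calling `generate_terrain(42)` twice returns the same list both times, while `generate_terrain(43)` yields a different landscape. No map is stored anywhere; the rules and the seed are enough to regrow the territory on demand.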

Most “maps that make themselves” aren’t so literally self-generating, but more dependent on user activity. Algorithms can provide structures and functions that tap into the collective work of the user base to grow rich semantic topologies.

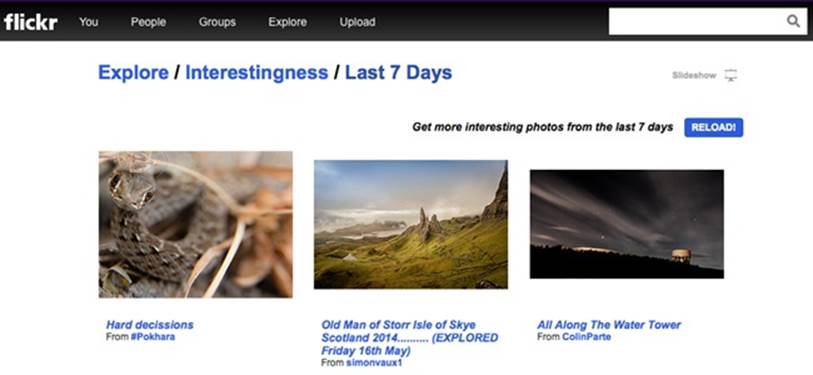

For example, some websites have contexts that are defined entirely by algorithm, such as Flickr’s photo-browsing context based on Interestingness (Figure 17-17). Here’s how Flickr describes Interestingness:

There are lots of elements that make something ‘interesting’ (or not) on Flickr. Where the click-throughs are coming from; who comments on it and when; who marks it as a favorite; its tags and many more things which are constantly changing. Interestingness changes over time, as more and more fantastic content and stories are added to Flickr.[339]

Figure 17-17. Flickr’s Interestingness facet, in the current version of the platform[340]
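Flickr does not publish how Interestingness is calculated, so the following is only a guess at the general shape of such a ranking: several engagement signals combined into one score that fades as a photo ages. The `Photo` fields, the weights, and the 30-day half-life are all invented for this sketch.

```python
import math
from dataclasses import dataclass


@dataclass
class Photo:
    title: str
    favorites: int
    comments: int
    click_throughs: int
    tags: int
    age_days: float


def interestingness(photo: Photo, half_life_days: float = 30.0) -> float:
    """Combine engagement signals into one score (weights are invented)."""
    engagement = (
        3.0 * photo.favorites
        + 2.0 * photo.comments
        + math.log1p(photo.click_throughs)  # dampen raw traffic counts
        + 0.5 * min(photo.tags, 10)         # cap the benefit of tagging
    )
    # Assumed time decay: the score halves every `half_life_days`.
    decay = 0.5 ** (photo.age_days / half_life_days)
    return engagement * decay


def rank(photos: list[Photo]) -> list[Photo]:
    """Order photos from most to least interesting under this toy model."""
    return sorted(photos, key=interestingness, reverse=True)
```

Under these made-up rules, two photos with identical engagement land in different positions if one is a month older. The browsing context a visitor sees is therefore a product of the rules and of everyone's ongoing activity, not a fixed arrangement.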

Even though they have some procedurally generated features, environments such as Flickr, Craigslist, and Wikipedia are more rule-frameworks than static web structures. Such frameworks can channel user content creation into rapidly generated cyber-landscapes. In 2006 alone, Wikipedia grew by over 6,000 articles per day—each one a new page adding to its vast corpus.[341]

This organic perspective reminds us of the question: where does the system end and the user begin? Ecologically, we are part of our environment; this principle doesn’t stop being true just because an environment is made largely of language and bits. In a sense, these environments are morphing and evolving through the efforts of several resources at once: digital algorithm-agents scouring and crunching raw information into new content; armies of product development teams updating their functionality and architecture; and millions of inhabitants creating new content, tagging it, linking and commenting, adding layer upon layer of semantic material that feeds the system’s appetite for new information.

Whether the software is making more of itself, or users are generating more territory through publishing, commenting, discussing, and uploading, the effect on user perception is pretty much the same: the environment grows and grows, expanding, reshaping, and shifting under our feet. As Resmini and Rosati put it, pervasive information architectures are “evolving, unfinished, unpredictable systems.”[342] The map is also territory, complete with its own ecosystem of species migration, stormy weather, continental drifts, and the occasional exploding volcano.

Metamaps and Compasses

These ever-expanding, unpredictably evolving maps become territories in their own right at such scale that we need “metamaps” for understanding them. We also need the equivalent of compasses for finding our way through their more uncharted wildernesses.

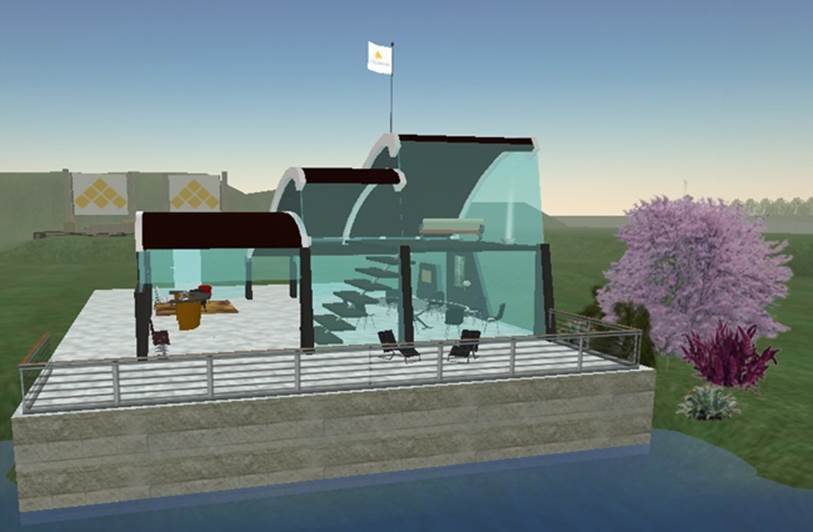

Although it is well past its day at the top of the hype curve, Second Life (see Figure 17-18) is an interesting object lesson in how navigation of our surroundings might become more about the semantic information landscape than the physical one.

Even though Second Life does its best to mimic the ecological nature of the physical structures we have in our “first life,” in actuality these structures can change at any moment. Giant buildings can be moved, deleted, or just “put away” into an owner’s personal inventory. Whole islands can shift or disappear from day to day.

Figure 17-18. A now-decommissioned virtual home of the IA Institute, in Second Life

The information we rely on in the physical world to be invariant can actually be quite variant in Second Life. So, the information its dwellers come to rely on most is the meta-information: the dynamic, semantic information that informs players where activities are happening or where friends are located, all on the Cartesian grid of Second Life’s highly variant, digital topology. A trivia group’s regular Tuesday gathering might meet in a cloud city, a jungle, or a skyscraper. The members rely not on the faux-physical landmarks but on the semantic ones, because they can be searched and found even more quickly than a user can “fly” or “teleport” to the location to check it out in person.

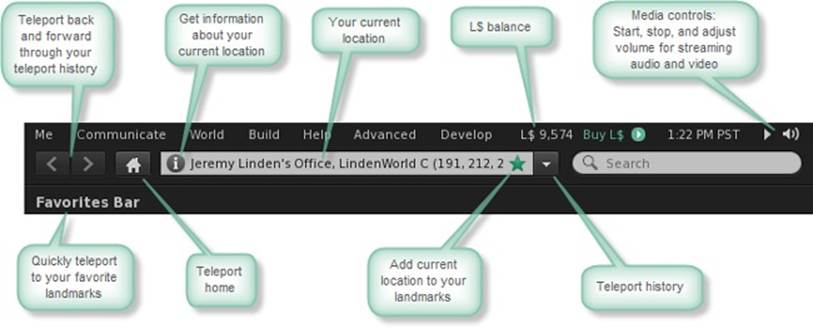

Perhaps the most prescient part of Second Life isn’t its virtual-reality landscape, but its navigation interface, the Viewer, whose menu bar is shown in Figure 17-19. Its residents use this tool to basically “fly by instruments” the way a real-life pilot must use only the cockpit’s instrument panel to fly in a fog.

Figure 17-19. From a tutorial on how to use Viewer version 2, on the official Second Life Wiki[343]

More and more, we are now navigating our world by the language we put into the stratosphere with digital technology rather than by physical landmarks, even though physical objects are much more stable in nonvirtual life. We want to know which store has something in stock, which theater has the movie we want to see at our preferred time, or which bar has more of our friends hanging out in it on a Friday night. It’s this dimension of ever-shifting semantic information that we want to track and navigate. In that sense, our smartphones are now acting as “Viewer” devices for navigating the “First Life” world around us.

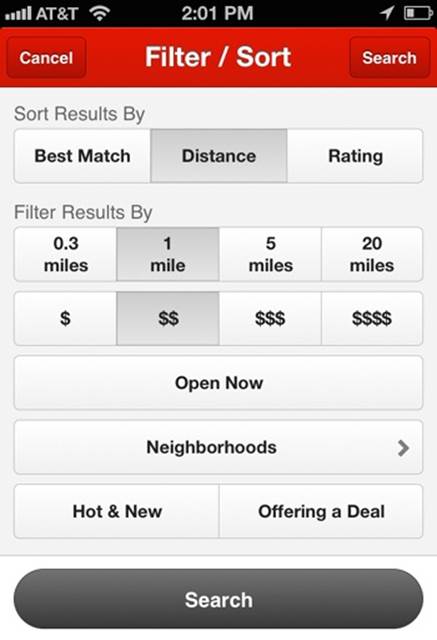

Yelp (Figure 17-20) acts as a sort of compass in the sense that you can use it to filter your environment by various factors, choosing your “true north” by facets such as average review, distance, or price.
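The compass idea can be sketched in a few lines: filter the surroundings, then sort by whichever facet is serving as “true north.” This is not Yelp’s API or implementation; the `Place` record, the `navigate` function, and its parameters are hypothetical names used only to show the pattern.

```python
from dataclasses import dataclass


@dataclass
class Place:
    name: str
    rating: float       # average review, 1 to 5
    distance_km: float
    price_level: int    # 1 (cheap) to 4 (expensive)


def navigate(places, sort_by="rating", max_distance_km=None, max_price=None):
    """Filter places by optional limits, then order them by the chosen facet."""
    results = [
        p for p in places
        if (max_distance_km is None or p.distance_km <= max_distance_km)
        and (max_price is None or p.price_level <= max_price)
    ]
    sort_keys = {
        "rating": lambda p: -p.rating,        # best reviewed first
        "distance": lambda p: p.distance_km,  # nearest first
        "price": lambda p: p.price_level,     # cheapest first
    }
    return sorted(results, key=sort_keys[sort_by])
```

The same set of places yields a different landscape depending on the facet chosen: `navigate(places, sort_by="distance")` and `navigate(places, sort_by="rating", max_price=2)` point the user in different directions through identical territory.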

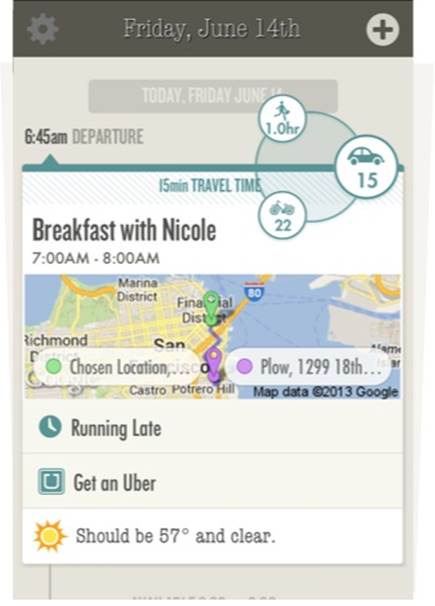

Increasingly, our tools for navigating our environment are becoming more personalized, as well, keeping track of our preferences so as to create new “true norths” that are unique to us. The Google Now service is one of a number of newer technologies that aim to tell us what we need to know before we have to ask. Before it was acquired, a similar service called Donna (see Figure 17-21) explained that it “pays attention for you so you can focus your time on the people you’re with.”

Figure 17-20. Yelp’s Filter and Sort interface is a sort of sextant for navigating local resources

Figure 17-21. Donna, now acquired and discontinued by Yahoo, was a pioneer in the personal-compass app category

By knowing what’s in our calendars and address books and tracking our email activity as well as (increasingly) our general activity patterns, these services aim to be digital agents that do some of the heavy lifting for us, narrowing and distilling the essentials of the information in our environments—creating bite-size maps-of-the-map and the equivalent of arrows pointing us in a direction, saying “wear warm clothes today” or “leave early due to traffic.” They do their best to understand our context, and in turn to improve our experience of that context.

These wonderful metamaps and compasses don’t work effectively all on their own, though. They need to use information from somewhere, and that information has to have the right qualities to be trusted and comprehended.

Let’s recall that these navigational aids and digital agents are only as smart as the structures provided to them in the environment to begin with. Google’s search ranks sites higher when they’re well-structured and effectively made places. Donna can tell us to leave early because someone created an API with which the service can know about traffic, weather, and geolocation—all of which required effective labeling and metadata. A pricing service can send a text message alerting us that there’s a sale in a store we’re visiting because someone took the time to define and structure the frameworks that make those connections possible. The rules these agents use for behavior have to be defined as part of their architectural infrastructure. Digital agents need structural cues, too. No matter how smart they get, there’s work to be done in the environment for bridging between physical, semantic, and digital information.
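As a small illustration of that dependence, consider a sketch of a “leave early” rule. The field names (`start_minutes`, `usual_travel_minutes`, `delay_minutes`) and the function itself are hypothetical, not drawn from Donna or any real service. What matters is that the rule can say something useful only when the environment supplies the structured data it expects.

```python
def leave_early_advice(event, traffic):
    """Return advice only when every field the rule depends on is present.

    Times are minutes after midnight; all field names are assumptions.
    """
    needed = ("start_minutes", "usual_travel_minutes")
    if any(event.get(key) is None for key in needed):
        return None  # unstructured calendar entry: nothing to reason about
    delay = traffic.get("delay_minutes")
    if delay is None or delay <= 0:
        return None  # no traffic data, or no delay worth mentioning

    depart = event["start_minutes"] - event["usual_travel_minutes"] - delay
    return (
        f"Leave {delay} minutes early "
        f"(by {depart // 60:02d}:{depart % 60:02d}) due to traffic."
    )
```

Given a 9:00 event with a usual 30-minute trip and a reported 15-minute delay, the rule advises leaving by 08:15. If the calendar entry lacks a structured start time, the agent stays silent, however clever the rest of it might be.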

And we shouldn’t forget: no matter how enabled by artificial intelligence, such metamaps and compasses tend to become less accurate as they try to be smarter and more richly relevant to context. The bigger the gap we’re trying to bridge, the more it’s subject to the fog of ambiguity; this is especially the case when the environment’s information involves tacit familiarity versus explicit definition.

We should also remember what we learned about maps: that they’re always interpretations of the environment, and they serve some set of interests. The same is true of mediating, augmenting tools. What they choose to show us is always driven by an agenda, intentionally or not. As users, we should always be wary of what that agenda might be. As designers of these entities, we should provide enough transparency to let users in on the priorities and interests being served by the way the elements and rules are assembled.