eCommerce in the Cloud (2014)

Part III. To the Cloud!

Chapter 9. Security for the Cloud

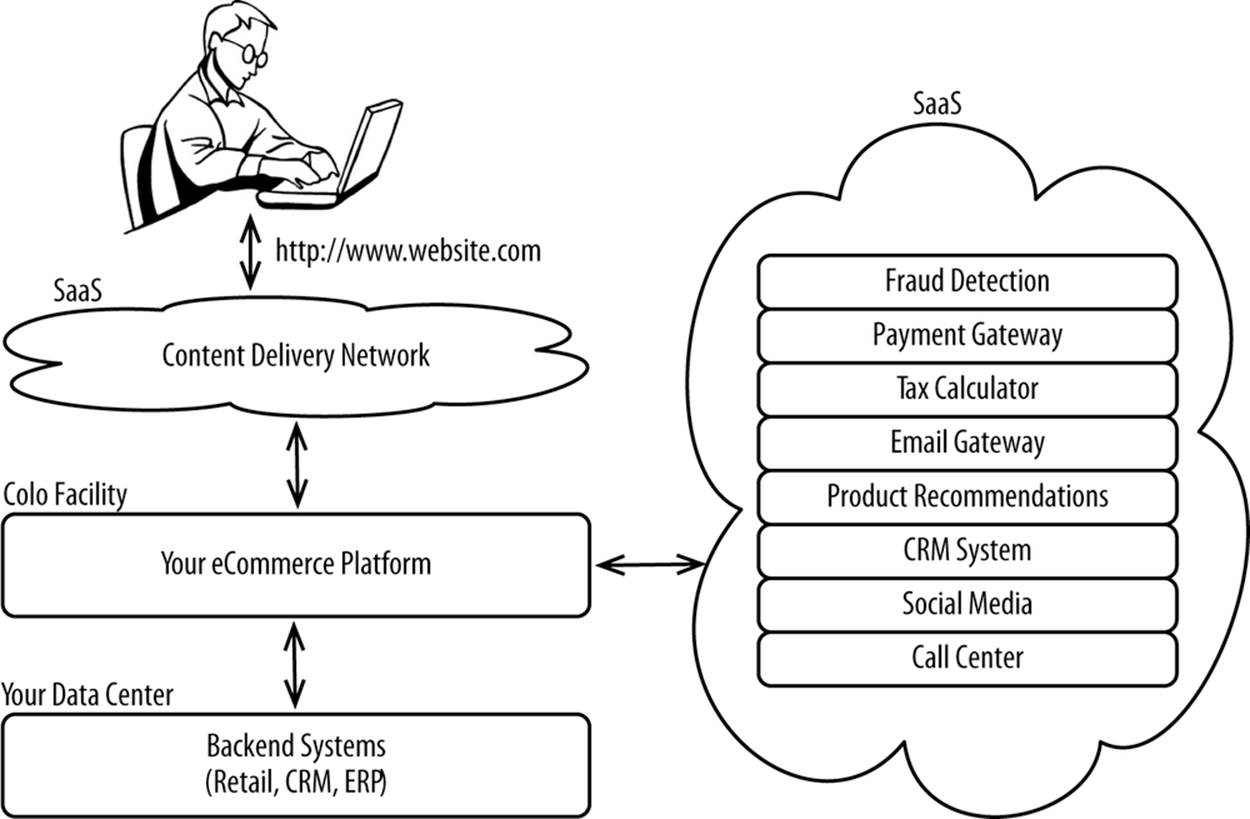

In today’s world, sensitive data used by ecommerce is strewn across dozens of systems, many of which are controlled by third parties. For example, the most sensitive of ecommerce data, including credit cards and other personally identifiable information (often abbreviated PII), routinely passes through Content Delivery Networks before being sent to third-party fraud-detection systems and then ultimately to third-party payment gateways. That data travels securely across thousands of miles over multiple networks to data centers owned and managed by third parties, as depicted in Figure 9-1.

TIP

What cloud computing lacks in direct physical ownership of assets, it offers in more control—which is more important.

Figure 9-1. Use of SaaS within ecommerce platforms

It’s highly unlikely that you even own the data center or the hardware you serve your platform from, as most use a managed hosting service or a colo. Your data is already out of your physical possession but is firmly under your control. Control is far more important than possession. The adoption of Infrastructure-as-a-Service or Platform-as-a-Service for the core ecommerce platform is an incremental evolution over the current approach. Next to legacy backend systems, your ecommerce platform is the last to be deployed out in a cloud. Eventually, those legacy systems will be replaced with cloud-based solutions. It’s only a matter of time.

The unfounded perception is that clouds are inherently insecure, when in fact they can be more secure than traditional hosting arrangements. Clouds are multitenant by nature, forcing security to be a forethought rather than an afterthought. Cloud vendors can go out of business overnight because of a security issue. Cloud vendors go out of their way to demonstrate compliance with rigorous certifications and accreditations, such as the Payment Card Industry Data Security Standard (PCI DSS), ISO 27001, Federal Risk and Authorization Management Program (FedRAMP), and a host of others.

Cloud vendors have the advantage of being able to specialize in making their offerings secure. They employ the best experts in the world and have the luxury of building security into their offerings from the beginning, in a uniform manner. Cloud vendors have also invested heavily in building out tools that you can use to make your deployments in their clouds more secure. For example, many vendors offer an identity and access management suite that’s fully integrated with their offerings as a free value add. The ability to limit access to resources in a cloud in a fine-grained manner is a defining feature of cloud offerings.

The use of a cloud doesn’t absolve you of responsibility. If your cloud vendor suffers an incident and it impacts your customers, you’re fully responsible. But cloud vendors spend enormous resources staying secure, and breaches are many times more likely to occur as a result of your code, your people, or your (lack of) process.

General Security Principles

Security issues are far more likely to stem from a lack of process than from anything else. Security encompasses hundreds or even thousands of individual technical and non-technical items. Think of security as an ongoing system as opposed to something you do.

TIP

You can’t ever be completely secure—you just have to be more secure than your peers so the attackers go after them instead of you.

According to a survey from Intel, respondents said 30% of threats come from within an organization and 70% of threats come from the outside.[60] Of the threats coming from the outside, Rackspace quantified them in another study as follows:[61]

§ 31% of all incidents involved SQL injection exploit attempts

§ 21% involved SSH brute-force attacks

§ 18% involved MySQL login brute-force attempts

§ 9% involved XML-RPC exploit attempts

§ 5% involved vulnerability scans

These issues are equally applicable to both traditional environments and clouds; there isn’t a single vulnerability in this list that is more applicable to a cloud. The tools to counter internal and external threats are well-known and apply equally (but sometimes differently) to the cloud. What matters is that you have a comprehensive system in place for identifying and mitigating risks. These systems are called information security management systems (ISMS). We’ll quickly cover a few so you can have an understanding of what the most popular frameworks call for.

Adopting an Information Security Management System

An information security management system (ISMS) is a framework that brings structure to security and can be used to demonstrate a baseline level of security. Such systems can be built, adapted, or adopted. Adherence to at least one well-defined framework is a firm requirement for any ecommerce deployment, whether or not it’s in the cloud. Full adoption of at least one of these frameworks will change the way you architect, implement, and maintain your platform for the better.

All frameworks call for controls, which are discrete actions that can be taken to prevent a breach from occurring, stop a breach that’s in progress, and take corrective actions after a breach. Controls can take a form that’s physical (security guards, locks), procedural (planning, training), or technical (implementing a firewall, configuring a web server setting).

ISO 27001 outlines a model framework, which most cloud vendors are already compliant with. Its central tenets are as follows:

Plan

Establish controls, classify data and determine which controls apply, assign responsibilities to individuals

Do

Implement controls

Check

Assess whether controls are correctly applied and report results to stakeholders

Act

Perform preventative and corrective actions as appropriate

Other frameworks include PCI DSS and FedRAMP, both of which call for roughly the same plan/do/check/act cycle but with varying controls. Rely on these frameworks as a solid baseline, but layer on your own controls as required. For example, PCI DSS doesn’t technically require that you encrypt data in motion between systems within your trusted network, but doing so would just be common sense.

The check part of the plan/do/check/act cycle should always be performed internally and externally by a qualified assessor. An external vendor is likely to find more issues than you could on your own. All of the major frameworks require third-party audits because of the value they offer over self-assessment.

For cloud vendors, compliance with each of the frameworks is done for different reasons. Compliance helps to ensure security, and most important, helps to demonstrate security to all constituents. Compliance with some frameworks, such as FedRAMP, is required in order to do business with the US government. If there ever is a security issue, cloud vendors can use compliance with frameworks to reduce legal culpability. Retailers who suffer breaches routinely hide behind compliance with PCI DSS. These frameworks are much like seat belts. Being compliant doesn’t guarantee security any more than wearing a seat belt will save your life in a car crash. But there’s a strong causal relationship between wearing a seat belt and surviving a car crash.

Your reasons for adopting a security framework are largely the same as the reasons for cloud vendors, except you have the added pressure of maintaining compliance with PCI DSS. PCI DSS, which we’ll discuss shortly, is a commercial requirement imposed by the credit card industry on those who touch credit cards.

Let’s review a few of the key frameworks.

PCI DSS

The Payment Card Industry Data Security Standard (PCI DSS) is a collaboration between Visa, MasterCard, Discover, American Express, and JCB for the purpose of holding merchants to a single standard. A merchant, for the purposes of PCI, is defined as any organization that handles credit card information or personally identifiable information related to credit cards. Prior to the introduction of PCI DSS in 2004, each credit card issuer had its own standard that each merchant had to comply with. By coming together as a single group, credit card brands were able to put together one comprehensive standard that all merchants could adhere to.

NOTE

While protecting credit card data is obviously a priority, personally identifiable information extends far beyond that. PII is often defined as “any information about an individual maintained by an agency, including (1) any information that can be used to distinguish or trace an individual’s identity, such as name, social security number, date and place of birth, mother’s maiden name, or biometric records; and (2) any other information that is linked or linkable to an individual, such as medical, educational, financial, and employment information.”[62] Even IP addresses are commonly seen as PII because they could be theoretically used to pinpoint a specific user. The consequences of disclosing PII, whether or not related to credit cards, are usually the same.

PCI DSS is not a law, but failure to comply with it brings consequences such as fines from credit card issuers and issuing banks, and increased legal culpability in the event of a breach.

PCI DSS calls for adherence to 6 control objectives and 12 requirements, as Table 9-1 demonstrates.[63]

Table 9-1. PCI DSS control objectives and requirements

| Control objectives | Requirements |
| --- | --- |
| Build and maintain a secure network and systems | 1) Install and maintain a firewall configuration to protect cardholder data |
| | 2) Do not use vendor-supplied defaults for system passwords and other security parameters |
| Protect cardholder data | 3) Protect stored cardholder data |
| | 4) Encrypt transmission of cardholder data across open public networks |
| Maintain a vulnerability management program | 5) Protect all systems against malware and regularly update antivirus software or programs |
| | 6) Develop and maintain secure systems and applications |
| Implement strong access control measures | 7) Restrict access to cardholder data by business need to know |
| | 8) Identify and authenticate access to system components |
| | 9) Restrict physical access to cardholder data |
| Regularly monitor and test networks | 10) Track and monitor all access to network resources and cardholder data |
| | 11) Regularly test security systems and processes |
| Maintain an Information Security Policy | 12) Maintain a policy that addresses information security for all personnel |

Validation of compliance is performed annually by a third-party qualified security assessor (QSA). PCI DSS is not intended to be extremely prescriptive. Instead, it’s meant to ensure that merchants are adhering to the preceding principles. So long as you can demonstrate adherence to these principles, QSAs will generally sign off. If your QSA won’t work with you to understand what you’re doing and how you’re adhering to the stated objectives of PCI DSS, you should find another QSA. All of the major cloud vendors have achieved the highest level of PCI DSS compliance and are regularly audited. Cloud computing in no way prohibits you from being PCI compliant.

Cardholder data is defined as the credit card number, but also data such as expiration date, name, address, and all other personally identifiable information that’s stored with the credit card. Any system (e.g., firewalls, routers, switches, servers, storage) that comes in contact with cardholder data is considered part of your cardholder data environment.

Your goal with PCI DSS should be to limit the scope of your cardholder data environment. One approach is to limit this scope by building or using a credit card processing service that is independent of the rest of your environment. If you build a service, you can have a separate firewall, load balancer, application server tier, database, and VLAN, with the application exposed through a subdomain such as https://pci.website.com, with an IFrame submitting cardholder data directly to that service. Only the systems behind that service would be considered in the scope of your cardholder data environment and thus subject to PCI DSS. All references to cardholder data would then be through a randomly generated token. We’ll discuss an edge-based approach to this shortly.
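To make the token idea concrete, here is a minimal sketch in Python. It assumes a hypothetical `TokenVault` class standing in for the isolated card service's datastore; the class, its method names, and the in-memory dictionary are illustrative only. A real service would persist encrypted card data behind its own firewall.

```python
import secrets
from typing import Dict


class TokenVault:
    """Hypothetical in-memory stand-in for the isolated card service's datastore."""

    def __init__(self) -> None:
        self._cards_by_token: Dict[str, str] = {}

    def tokenize(self, card_number: str) -> str:
        # The token is random, so it reveals nothing about the card number.
        token = secrets.token_urlsafe(16)
        self._cards_by_token[token] = card_number
        return token

    def detokenize(self, token: str) -> str:
        # Only code inside the cardholder data environment should call this.
        return self._cards_by_token[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")  # widely used test card number
# The rest of the platform stores only the token, never the card number.
order = {"order_id": 1001, "payment_token": token}
```

Because the order record holds only the token, the systems that store and process orders never come in contact with cardholder data.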

While PCI DSS is very specific to ecommerce, let’s explore a more generic framework.

ISO 27001

ISO 27001, first published in 2005 by the International Organization for Standardization (ISO), describes a model information security management system. Whereas PCI DSS is a pragmatic guide focused on safeguarding credit card data, you’re free to choose where ISO 27001 applies and what controls you want in place.

Compliance with ISO 27001 is sometimes required for commercial purposes. Your business partners may require your compliance with the standard to help ensure that their data is safe. While compliance does not imply security, it’s a tangible way to show that you’ve taken steps to mitigate risk. ISO 27001 is very similar to the famed ISO 9000 series for quality control, but applied to the topic of information security. As with ISO 9000, formal auditing is optional. You can adhere to the standard internally. But to publicly claim that you’re compliant, you need to be audited by an accredited third party.

In addition to the plan/do/check/act cycle we discussed earlier, ISO 27001 allows you to select and build controls that are uniquely applicable to your organization. It references a series of controls found in ISO 27002 as representative of those that should be selected as a baseline:

Information security policies

Directives from management that define what security means for your organization and their support for achieving those goals

Organization of information security

How you organize and incentivize your employees and vendors, who’s responsible for what, mobile device/teleworking policies

Human resource security

Hiring people who value security, getting people to adhere to your policies, what happens after someone leaves your organization

Asset management

Inventory of physical assets, defining the responsibilities individuals have for safeguarding those assets, disposing of physical assets

Access control

How employees and vendors get access to both physical and virtual assets

Cryptography

Use of cryptography, including methods and applicability, as well as key management

Physical and environmental security

Physical security of assets, including protection against manmade and natural disasters

Operations management

Operational procedures and responsibilities including those related to backup, antivirus, logging/monitoring, and patching

Communications security

Logical and physical network controls to restrict the flow of data within networks, nontechnical controls such as nondisclosure agreements

System acquisition, development, and maintenance

Security of packaged software, policies to increase the security of the software development lifecycle, policies around test data

Supplier relationships

Policies to improve information security within your IT supply chain

Information security incident management

How you collect data and respond to security issues

Information security aspects of business continuity management

Redundancy for technical and nontechnical systems

Compliance

Continuous self-auditing, meeting all legal requirements

While not complete, this list should provide you with an idea of the scope of ISO 27001 and 27002.

FedRAMP

Whereas PCI DSS is focused on safeguarding credit card data and ISO 27001 is about developing a process for information security, FedRAMP was specifically designed to ensure the security of clouds for use by the US government. (G-Cloud is the UK’s equivalent.) FedRAMP is a collaboration among government agencies to offer one security standard to cloud vendors. Cloud vendors simply couldn’t demonstrate compliance with each federal agency’s standards. Since 2011, the US government has had a formal cloud first policy. FedRAMP enables that policy by holding cloud vendors to a uniform baseline. Once a cloud vendor is certified, any US federal agency may use that vendor.

At its core, FedRAMP requires that vendors:

§ Identify and protect the boundaries of their cloud

§ Manage configuration across all systems

§ Offer firm isolation between software and hardware assets

§ Adhere to more than 290 security controls

§ Submit to vulnerability scans, including code scans

§ Document their approach to security

§ Submit to audits by a third party

Most of the security controls come right from the National Institute of Standards and Technology (NIST) Special Publication 800-53, with a few additional controls.[64] FedRAMP certification is a rigorous, time-consuming process, but one that yields substantial benefits to all constituents. Most of the major cloud vendors have achieved compliance.

FedRAMP differs substantially from PCI DSS in that it has a broader scope. PCI DSS is focused strictly on safeguarding credit card data, because that’s what the credit card issuers care about. FedRAMP was built for the purpose of securing government data, which may range from credit card numbers to tax returns to personnel files. FedRAMP is also built specifically for cloud computing, whereas PCI DSS is applicable to any system that handles credit card data.

FedRAMP differs from ISO 27001 in that it’s more of a pragmatic checklist, as opposed to an information security management system. To put it another way, ISO 27001 cares more about the how, whereas FedRAMP cares more about the what.

FedRAMP, like all frameworks, doesn’t guarantee security. But cloud vendors that achieve this certification are more likely to be secure.

Security Best Practices

While PCI DSS, ISO 27001, and FedRAMP differ in their purpose and scope, they all require the development of a plan and call for adherence to a range of technical and nontechnical controls. Again, cloud vendor compliance with these and similar certifications and accreditations maintains a good baseline of security, but compliance doesn’t equal security by itself.

In addition to ensuring that your cloud vendor is meeting a baseline of security and developing a plan, you must take proactive steps to secure your own information, whether or not in a cloud. Let’s review a few key security principles.

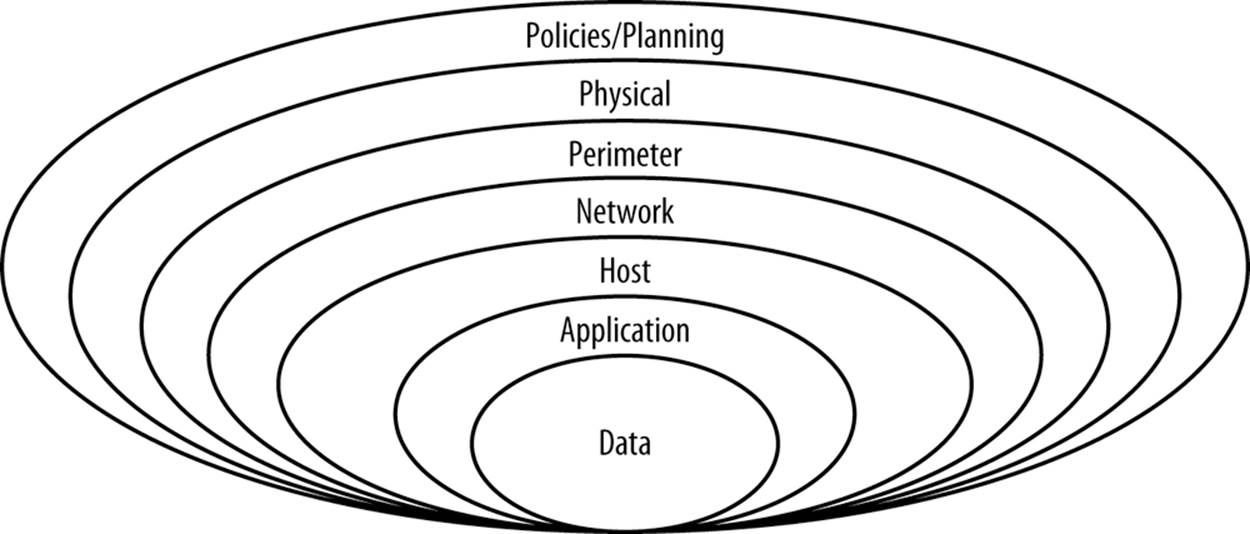

Defense in Depth

Your approach to security must be layered, such that compromising one layer won’t lead to your entire system being compromised. Layering on security is called defense in depth and plays a key role in safeguarding sensitive information, as Figure 9-2 shows.

Figure 9-2. The defense-in-depth onion

One or more safeguards are employed at each layer, as explained in Table 9-2, requiring an attacker to compromise multiple systems in order to cause harm.

Table 9-2. Protections in place for various layers

| Layer | Protections |
| --- | --- |
| Policies/planning | Information security management systems |
| Physical | Physical security offered by your cloud vendor |
| | Tamper-resistant hardware security modules |
| Perimeter | Distributed denial-of-service attack mitigation |
| | Content Delivery Networks |
| | Reverse proxies |
| | Firewalls |
| | Load balancers |
| Network | VLANs |
| | Firewalls |
| | Nonroutable subnets |
| | VPNs |
| Host | Hypervisors |
| | iptables or nftables |
| | Operating system hardening |
| Application | Application architecture |
| | Runtime environment |
| | Secure communication over SSL/TLS |
| Data | Encryption |

Defense in depth can be overused as a security technique. Each layer of security introduces complexity and latency, while requiring that someone manage it. The more people are involved, even in administration, the more attack vectors you create, and the more you distract your people from possibly more-important tasks. Employ multiple layers, but avoid duplication and use common sense.

Information Classification

Security is all about protecting sensitive information like credit cards and other personally identifiable information. Information security management systems like PCI DSS, ISO 27001, and FedRAMP exist solely to safeguard sensitive information and call for the development of an information classification system. An information classification system defines the levels of sensitivity, along with specific controls to ensure its safekeeping. Table 9-3 describes an example of a very basic system.

Table 9-3. Information classification system

| Level | Description | Examples | Encrypted in motion | Encrypted at rest | Tokenize | Hash |
| --- | --- | --- | --- | --- | --- | --- |
| Public | Information publicly available | Product images, product description, and ratings and reviews | No | No | No | No |
| Protected | Disclosure unlikely to cause issues | A list of all active promotions | No | No | No | No |
| Restricted | Disclosure could cause issues but not necessarily | Logfiles, source code | Yes | Yes | No | No |
| Confidential | Disclosure could lead to lawsuits and possible legal sanctions | Name, address, purchasing history | Yes (using hardware security module) | Yes (using hardware security module) | No | No |
| Extremely confidential | Disclosure could lead to lawsuits and possible legal sanctions | Password, credit card number | Yes (using hardware security module) | Yes (using hardware security module) | Yes (if possible) | Yes (if possible) |

On top of this, you’ll want to assign policies determining information access, retention, destruction, and the like. These controls may vary by country, because of variations in laws.

Once you’ve developed an information classification system and assigned policies to each level, you’ll want to do a complete inventory of your systems for the purpose of identifying all data. Data can take the form of normalized data in a relational database, denormalized data in a NoSQL database, files on a filesystem, source code, and anything else that can be stored and transmitted. Each type of data must be assigned a classification.
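One way to keep a classification system and a data inventory enforceable is to express both as data that code can consult. The following Python sketch transcribes the levels and controls from Table 9-3; the `INVENTORY` entries and the `controls_for` helper are hypothetical names chosen for illustration, and "if possible" is simplified to a plain yes.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    PUBLIC = 1
    PROTECTED = 2
    RESTRICTED = 3
    CONFIDENTIAL = 4
    EXTREMELY_CONFIDENTIAL = 5


@dataclass(frozen=True)
class Controls:
    encrypt_in_motion: bool
    encrypt_at_rest: bool
    tokenized: bool
    hashed: bool


# Controls per level, transcribed from Table 9-3 ("if possible" treated as True).
CONTROLS = {
    Level.PUBLIC: Controls(False, False, False, False),
    Level.PROTECTED: Controls(False, False, False, False),
    Level.RESTRICTED: Controls(True, True, False, False),
    Level.CONFIDENTIAL: Controls(True, True, False, False),
    Level.EXTREMELY_CONFIDENTIAL: Controls(True, True, True, True),
}

# Hypothetical inventory: every type of data found is assigned a level.
INVENTORY = {
    "product_image": Level.PUBLIC,
    "active_promotions": Level.PROTECTED,
    "logfile": Level.RESTRICTED,
    "purchase_history": Level.CONFIDENTIAL,
    "credit_card_number": Level.EXTREMELY_CONFIDENTIAL,
}


def controls_for(data_type: str) -> Controls:
    # Unclassified data is an inventory gap, so fail loudly rather than guess.
    if data_type not in INVENTORY:
        raise KeyError(f"{data_type!r} has not been classified")
    return CONTROLS[INVENTORY[data_type]]
```

Raising an error for unclassified data, rather than defaulting to the lowest level, mirrors the requirement that each type of data must be assigned a classification.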

A general best practice is to limit the amount of information that’s collected and retained. Information that you don’t collect or retain can’t be stolen from you.

Isolation

Isolation can refer to carving up a single horizontal resource (e.g., a physical server, a storage device, a network) or limiting communication between vertical tiers (e.g., web server to application server, application server to database). The goal is to limit the amount of communication between segments, whether horizontal or vertical, so that a vulnerability in one segment cannot lead to the whole system being compromised. It’s a containment approach, in much the same way that buildings are built with firewalls to ensure that fires don’t engulf the entire building.

The isolation mechanisms vary based on what’s being isolated. Virtual servers are isolated from each other through the use of a hypervisor. Storage devices are split into multiple volumes. Networks are segmented using technology like virtual LANs (VLANs) and isolated using firewalls. All of these technologies are well established and offer the ability to provide complete isolation. The key to employing these technologies is to implement isolation at the lowest layer possible.

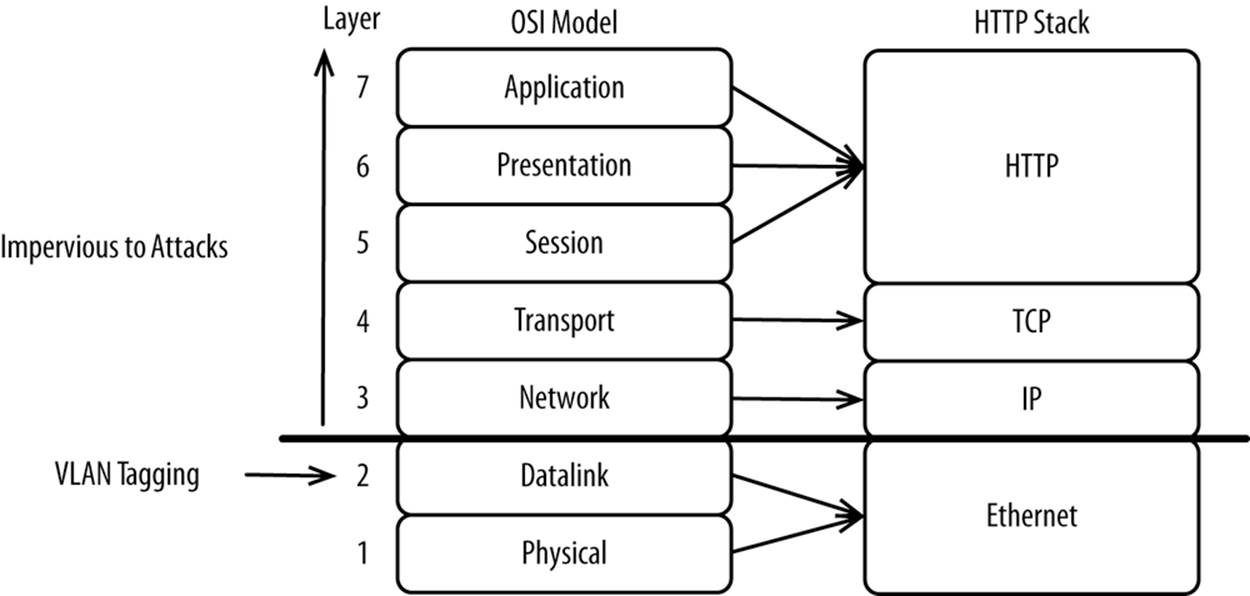

For example, the Open Systems Interconnection (OSI) model standardizes the various levels involved with network communication so that products from different vendors are interoperable. Switches, which operate at layer 2, are responsible for forwarding Ethernet frames. To logically segment Ethernet networks, you can tag each frame with a VLAN ID. If an Ethernet frame has a VLAN ID, the switch will forward it only within the boundaries of the defined VLAN, as shown in Figure 9-3.

Figure 9-3. OSI model mapped back to HTTP stack

The advantage of this level of segmentation is that you’re entirely protected against attacks in layer 3 and above. This technique can be applied to separate all traffic between your application servers and databases. This principle reduces attack vectors while providing strong isolation. The lower in any stack—whether network, storage, or a hypervisor—the stronger the protection.
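As a rough illustration of VLAN tagging, the Python sketch below packs the 4-byte IEEE 802.1Q tag (a 16-bit tag protocol identifier of 0x8100, followed by a 3-bit priority, a 1-bit drop-eligible flag, and a 12-bit VLAN ID) and models a toy switch that forwards a frame only to ports in the same VLAN. The function names and the port-to-VLAN table are assumptions made for this example; a real switch also learns MAC addresses and handles trunk ports.

```python
import struct
from typing import Dict, List

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def build_vlan_tag(vlan_id: int, priority: int = 0) -> bytes:
    """Pack the 802.1Q tag: TPID, then priority (3 bits), DEI (1 bit), VLAN ID (12 bits)."""
    if not 1 <= vlan_id <= 4094:  # 0 and 4095 are reserved values
        raise ValueError("VLAN ID must be between 1 and 4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority must be between 0 and 7")
    tci = (priority << 13) | vlan_id  # DEI bit left at 0
    return struct.pack("!HH", TPID_8021Q, tci)


def vlan_id_of(tag: bytes) -> int:
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF  # low 12 bits hold the VLAN ID


def egress_ports(tag: bytes, port_vlans: Dict[int, int], ingress_port: int) -> List[int]:
    """Toy switch: forward only to other ports assigned to the frame's VLAN."""
    vid = vlan_id_of(tag)
    return sorted(p for p, v in port_vlans.items() if v == vid and p != ingress_port)


# Hypothetical layout: ports 1 and 2 carry application traffic, port 3 the database.
port_vlans = {1: 10, 2: 10, 3: 20}
app_tag = build_vlan_tag(10)
```

A frame tagged for VLAN 10 arriving on port 1 is forwarded only to port 2; the database port on VLAN 20 never sees it.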

Isolation is a key component of a defense in depth strategy.

Identification, Authentication, and Authorization

Identification, authentication, and authorization are focused on controlling access to systems and data. Users can be individual humans or other systems, but the rules are mostly the same. Every user must properly identify itself (through a username, public key, or alternate means), properly authenticate itself (through a password, private key, or other means), and have designated access to the data. All three elements (identification, authentication, and authorization) must be validated before access may be granted to a system or data.

Human users should always be mapped 1:1 with accounts, as it increases accountability. With each human user having an account, you can hold individuals accountable for the actions performed by them. Accounts that multiple people hold access to are notorious enablers of malicious behavior, because actions performed by that account are hard to trace back to individuals. There’s no accountability.

Users of a system, whether internal or external, should always start out with access to nothing. This is the concept of least-privilege access. Only after a user’s role is established should that user be granted rights per your information classification system. When an employee leaves the company or changes roles, the system should automatically update the user’s role in the system and revoke any granted rights.
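The default-deny behavior described above can be sketched in a few lines of Python. The role names, permission strings, and the `AccessControl` class are hypothetical; in practice a cloud vendor's IAM system would play this part.

```python
from typing import Dict, Set

# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "customer_service": {"order:read"},
    "dba": {"order:read", "database:admin"},
}


class AccessControl:
    def __init__(self) -> None:
        self._roles_by_user: Dict[str, Set[str]] = {}

    def assign_role(self, user: str, role: str) -> None:
        if role not in ROLE_PERMISSIONS:
            raise ValueError(f"unknown role: {role}")
        self._roles_by_user.setdefault(user, set()).add(role)

    def revoke_all(self, user: str) -> None:
        # Called when an employee leaves the company or changes roles.
        self._roles_by_user.pop(user, None)

    def is_allowed(self, user: str, permission: str) -> bool:
        # Default deny: unknown users and users without roles get nothing.
        roles = self._roles_by_user.get(user, set())
        return any(permission in ROLE_PERMISSIONS[role] for role in roles)


acl = AccessControl()
acl.assign_role("alice", "customer_service")
```

Nothing is granted implicitly: a user with no assigned role is denied every permission, and revoking roles returns the user to that starting state.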

Some cloud vendors offer robust identity and access management (IAM) systems that are fully integrated across their suite of services. These systems are typically delivered as SaaS and offer a uniform approach to manage access to all systems and data. The advantage these systems have is that they’re fully and deeply integrated with all of the systems offered by a cloud. Without a central IAM system, you’d have to build one on your own, likely host it, and integrate it with all of the systems you use. Third-party systems invariably support different protocols, different versions of each protocol, and offer limited ability to support fine-grained access. By using a cloud vendor’s Software-as-a-Service solution, you get a robust solution that’s ready to be immediately used.

It’s important to use an IAM system that supports identity federation, which is delegating authentication to a third party such as a corporate directory using a protocol like OAuth, SAML, or OpenID. Your source system, like a human resources database, can serve as the master and be referenced in real time to ensure that the user still exists. You wouldn’t want to get into a situation where an employee was terminated, but their account in the cloud vendor’s IAM system was still active. Identity federation solves this problem.

Finally, multifactor authentication is an absolute must for any human user who is transacting with your servers or your cloud vendor’s administration console. An attacker could use a range of techniques to get an administrator’s password, including brute-force attacks and social engineering. Once logged in, an attacker could do anything, including deleting the entire production environment. Multifactor authentication makes it impossible to log in by providing only a password. On top of a password, the second factor can be an authentication code from a physical device, like a smartphone or a standalone key fob. Combining two authentication factors, what you know (password) and what you have (your smartphone), substantially strengthens the security of your account.
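Authentication codes from smartphones and key fobs are commonly generated with the time-based one-time password algorithm (TOTP, RFC 6238). The chapter doesn't name a specific algorithm, so treat the Python sketch below as one plausible second factor rather than what any particular vendor uses; `verify_login` and its parameters are hypothetical.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) using HMAC-SHA1."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time // step)  # number of time steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_login(password_ok: bool, secret: bytes, submitted_code: str,
                 at_time: Optional[float] = None) -> bool:
    # Both factors must pass; a correct password alone is not enough.
    expected = totp(secret, at_time)
    code_ok = hmac.compare_digest(expected.encode(), submitted_code.encode())
    return password_ok and code_ok
```

The shared secret lives on the device (what you have), while the password stays in the user's head (what you know). An attacker who obtains only one of the two still can't log in.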

Audit Logging

Complementary to identification, authentication, and authorization is audit logging. All of the common information security management systems require extensive logging of every administrative action, including calls into a cloud vendor’s API, all actions performed by administrators, errors, login attempts, access to logs, and the results of various health checks. Every logged event should be tagged with the following:

§ The user who performed the event

§ Data center where the event was performed

§ ID, hostname, and/or IP of the server that performed the event

§ A timestamp marking when the event was performed

§ Process ID
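The tags in the list above map naturally onto one structured record per event. The Python sketch below emits each event as a single JSON line; the `audit_event` function, its field names, and the `action` field are assumptions made for illustration, not a format required by any framework.

```python
import json
import os
import socket
from datetime import datetime, timezone


def audit_event(user: str, action: str, data_center: str) -> str:
    """Return one JSON line carrying the tags every logged event should have."""
    record = {
        "user": user,                      # who performed the event
        "data_center": data_center,        # where it was performed
        "hostname": socket.gethostname(),  # which server performed it
        "timestamp": datetime.now(timezone.utc).isoformat(),  # when, in UTC
        "pid": os.getpid(),                # process ID
        "action": action,                  # what was done (hypothetical field)
    }
    return json.dumps(record, sort_keys=True)


line = audit_event("alice", "server.terminate", "us-east")
```

Recording timestamps in UTC keeps events from different data centers comparable when you later reconstruct a sequence of actions.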

Logs should be stored encrypted in one or more locations that are accessible to only a select few administrators. Consider writing them directly to your cloud vendor’s shared storage. Servers are ephemeral. When a server is killed, you don’t want valuable logs to disappear with it.

Logging not only acts as a deterrent to bad behavior but also ensures that there is traceability in the event of a breach. Provided that the logs aren’t tampered with, you should be able to reconstruct exactly what happened in the event of a failure, in much the same way that airplanes’ black box recorders record what happened during a crash. Being able to reconstruct what happened is important for legal reasons but also as a way to learn from failures to help ensure they don’t happen again.

Cloud vendors offer extensive built-in logging capabilities, coupled with secure, inexpensive storage for logs.

Security Principles for eCommerce

Among all workloads, ecommerce is unique in that it’s both highly visible and handles highly sensitive data. It’s that combination that leads it to have unique requirements for security. Traditional cloud workloads tend to be neither. If a climate scientist is temporarily unable to run a weather simulation, it’s not going to make headlines or lead to a lawsuit over a breach.

The visibility of ecommerce is increasing as it’s moving from a peripheral channel to the core of entire organizations. As we’ve discussed, this shift is called omnichannel retailing. An outage, regardless of its cause, is likely to knock all channels offline and prevent an organization from generating any revenue. Outages like this are prominently featured in the news and are almost always discussed on earnings calls. Executives are routinely fired because of outages, but the odds of a firing increase if the outage can be traced to a preventable security-related issue. Security-related incidents reflect poorly on the organization suffering the incident because it shows a lack of competency. It makes both senior management and investors question whether an organization is competent in the rest of the business.

While outages are bad, lost data is often much worse because of consequences including lost revenue, restitution to victims, fines from credit card issuers, loss of shareholder equity, bad press, and legal action including civil lawsuits initiated by wronged consumers. An outage may get you fired, but a major security breach will definitely get you fired. You may also be personally subject to criminal and civil penalties. Leaked data that can get you in trouble includes all of the standard data like credit cards, names, addresses, and the like, but it also extends to seemingly innocuous data, like purchase histories, shipping tracking numbers, wish lists, and so on. Disclosure of any personally identifiable information will get you in trouble.

Disclosing personally identifiable information will lead to not only commercial penalties and civil action, but also possibly criminal actions by various legal jurisdictions. Many countries impose both civil and criminal penalties on not only those who steal personally identifiable information, but also those who allow it to be stolen, in the case of gross negligence. For example, Mexico’s law states that ecommerce vendors can be punished with up to six years’ imprisonment if data on Mexican citizens is stolen. Many countries are even more punitive and may even confiscate profit earned while their citizens’ data was at risk. Many countries, such as Germany, severely restrict the movement of data on their citizens outside their borders in order to limit the risk of disclosures.

Vulnerabilities that lead to the disclosure of personally identifiable information tend to be application level. SQL injection (getting a database to execute arbitrary SQL), cross-site scripting (getting a web browser to execute arbitrary script like JavaScript), and cross-site request forgery (executing URLs from within the context of a customer’s session) are preferred means for stealing sensitive data. Applications tend to be large, having many pages and many possible attack vectors, while the barriers to entry for an attacker are low. Attackers don’t have to install sophisticated malware or anything very complicated; they simply have to view the source code for your HTML page and play with HTTP GET and POST parameters. In keeping with the defense-in-depth principle, your best approach is to layer:

Education

Educate your developers about the dangers of these vulnerabilities.

Development of best practices

Set expectations for high security up front; have all code peer reviewed.

White-box testing

Scan your source code for vulnerabilities.

Black-box testing

Scan your running application, including both your frontends and your backends, for vulnerabilities.

Web application firewall

Use a web application firewall to automatically guard against any of these vulnerabilities should your other defenses fail. Web application firewalls are capable of inspecting the HTTP requests and responses.
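To make the SQL injection risk described above concrete, here is a minimal sketch using Python's standard sqlite3 module. The customers table, its columns, and the function names are assumptions made up for illustration. The sketch contrasts a query built by string concatenation with a parameterized query, which is one of the practices developer education and code review would typically enforce.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany(
        "INSERT INTO customers (email) VALUES (?)",
        [("alice@example.com",), ("bob@example.com",)],
    )
    return conn


def find_unsafe(conn: sqlite3.Connection, email: str) -> list:
    # VULNERABLE: user input is concatenated into the SQL text, so the
    # input can change the structure of the query itself.
    query = "SELECT id, email FROM customers WHERE email = '" + email + "'"
    return conn.execute(query).fetchall()


def find_safe(conn: sqlite3.Connection, email: str) -> list:
    # SAFER: the driver binds the value as data, never as SQL.
    return conn.execute(
        "SELECT id, email FROM customers WHERE email = ?", (email,)
    ).fetchall()


conn = build_demo_db()
attack = "nobody' OR '1'='1"          # input taken from an HTTP parameter
leaked = find_unsafe(conn, attack)    # the OR clause matches every row
blocked = find_safe(conn, attack)     # no row has this literal email
```

The same input that dumps the whole table through the concatenated query matches nothing when it is bound as a parameter.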

If you’re especially cautious, you can even run a database firewall. Like a web application firewall, a database firewall sits between your application servers and database and inspects the SQL. A query such as select * from credit_card originating from your application server should always be blocked. These firewalls are capable of customizable blacklist and whitelist rulesets.
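The blacklist and whitelist idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rule patterns, the last4 column, and the is_allowed function are hypothetical, and a real database firewall uses its own rule syntax and typically parses SQL rather than matching it with regular expressions.

```python
import re

# Hypothetical rulesets for illustration only.
BLACKLIST = [
    re.compile(r"\bselect\s+\*\s+from\s+credit_card\b", re.IGNORECASE),
    re.compile(r"\bdrop\s+table\b", re.IGNORECASE),
]
WHITELIST = [
    re.compile(
        r"^select\s+last4\s+from\s+credit_card\s+where\s+customer_id\s*=\s*\?$",
        re.IGNORECASE,
    ),
]


def is_allowed(sql: str) -> bool:
    """Return True only if the statement passes both rulesets."""
    # Collapse whitespace so line breaks cannot be used to dodge a pattern.
    normalized = " ".join(sql.split()).rstrip("; ")
    if any(pattern.search(normalized) for pattern in BLACKLIST):
        return False
    # Default deny: anything not explicitly whitelisted is rejected.
    return any(pattern.match(normalized) for pattern in WHITELIST)
```

With these rules, is_allowed("select * from credit_card") is rejected by the blacklist, while the narrow, parameterized lookup of a single customer's last four digits is the only statement that gets through.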

Security is a function of both your application and the platform on which it is deployed. It’s a shared responsibility.

Security Principles for the Cloud

The cloud is a new way of delivering computing power, but it’s not that fundamentally different. All of the traditional security principles still apply. Some are easier; some are harder. What matters is that you have a good partnership with your cloud vendor. They provide a solid foundation and tools that you can use, but you have to take responsibility for the upper stack. Table 9-4 shows a breakdown of each party’s responsibility.

Table 9-4. Cloud vendor’s responsibilities versus your responsibilities

| Cloud vendor responsibilities | Your responsibilities |
| --- | --- |
| Facilities | N/A |
| Power | N/A |
| Physical security of hardware | N/A |
| Network infrastructure | N/A |
| Internet connectivity | N/A |
| Patching hypervisor and below | Patching operating system and above |
| Monitoring | Monitoring |
| Firewalls | Configuring customized firewall rules |
| Virtualization | Building and installing your own image |
| API for provisioning/monitoring | Using the APIs to provision |
| Identity and access management framework | Using the provided identity and access management framework |
| Protection against cloud-wide distributed denial-of-service attacks | Protecting against tenant-specific distributed denial-of-service attacks |
| N/A | Building and deploying your application(s) |
| N/A | Securing data in transit |
| N/A | Securing data at rest |

Security is a shared responsibility that requires extensive cooperation. Penetration testing is one example. Unless you tell your vendor ahead of time, a penetration test is indistinguishable from a real attack. You have to tell your cloud vendor in advance that you’re going to be doing penetration testing and that the activity seen during specific time periods is OK. If you don’t work with your cloud vendor, they’ll just block everything and, best case, your penetration test won’t work because security is so tight by default.

Reducing Attack Vectors

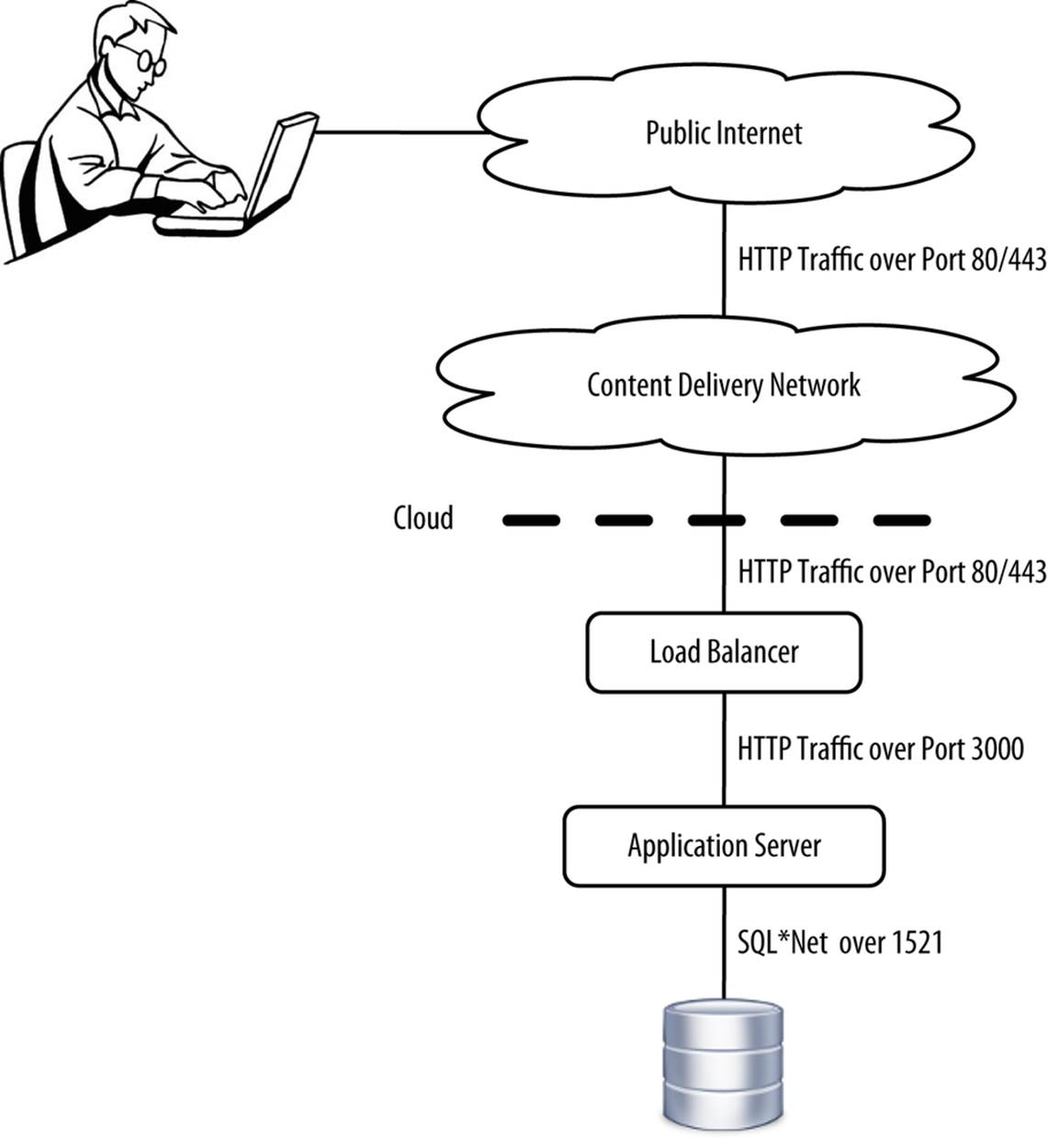

Key to reducing your risk is to minimize your attack surface. If every one of your servers were on the Internet, obviously that would create a large surface for attacks. Ideally, you’ll expose only a single IP address to the Internet, with all traffic forced through multiple firewalls and at least one layer of load balancing.

Many cloud vendors offer what amounts to a private subnet within a public cloud, whereby you can set up private networks that aren’t routable from the public Internet. Hosts are assigned private IP addresses, with you having full control over IP address ranges, routing tables, network gateways, and subnets. This is how large corporate networks are securely sliced up today. Only the cloud vendor’s hardened load balancer should be responding to requests from the Internet. To handle requests from your application to resources on the Internet, you can set up an intermediary server that’s routable from the Internet but doesn’t respond to requests originating from the Internet. Network address translation (NAT) lets application server instances in a secure subnet reach the Internet through that intermediary. The intermediary is often called a bastion host.
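As a rough sketch of the address planning described above, the following Python example uses the standard ipaddress module to carve a private range into one subnet per tier and to check that a host address is not publicly routable. The 10.0.0.0/16 range, the tier names, and the helper functions are assumptions chosen for illustration; your cloud vendor's console or API is where the subnets would actually be created.

```python
import ipaddress

# Hypothetical address plan: one private /16 split into a /24 per tier.
PRIVATE_NETWORK = ipaddress.ip_network("10.0.0.0/16")
TIERS = ["load-balancer", "application", "database"]
SUBNETS = dict(zip(TIERS, PRIVATE_NETWORK.subnets(new_prefix=24)))


def tier_of(address: str):
    """Return the tier whose subnet contains the address, or None."""
    ip = ipaddress.ip_address(address)
    for tier, subnet in SUBNETS.items():
        if ip in subnet:
            return tier
    return None


def is_private(address: str) -> bool:
    """True if the address falls in a range not routable on the Internet."""
    return ipaddress.ip_address(address).is_private


app_server = "10.0.1.15"
assigned_tier = tier_of(app_server)      # "application"
hidden = is_private(app_server)          # True: unreachable from outside
```

A check like is_private can be run against an inventory of hosts to confirm that nothing other than the load balancer has picked up a public address.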

TIP

Keep your backend off the Internet. This is applicable outside the technology domain as well.

In addition to private clouds within public clouds, firewalls are another great method of securing environments. Cloud vendors make it easy to configure firewall rules that prohibit traffic between tiers, except for specific protocols over specific ports. For example, you can have a firewall between your load balancer and application server tiers that permits only HTTP traffic over port 80 to pass between the two tiers. Everything else will be denied by default (see Figure 9-4).

Figure 9-4. Restricting traffic by port and type

By having a default deny policy and accepting only specific traffic over a specific port, you greatly limit the number of attack vectors by keeping a low profile online. You can even configure firewalls and load balancers to accept traffic from a specific IP range, perhaps limiting certain traffic to and from the tier above/below and from your corporate network. For example, you can require that any inbound SSH connections be established from an IP address belonging to your company. This is a key principle of defense in depth, whereby an attacker would have to penetrate multiple layers in order to access your sensitive data.
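The default deny policy can be illustrated with a small rule evaluator. This is a sketch, not any vendor's firewall API: the Rule class, the example CIDR ranges (a load balancer subnet and a documentation range standing in for a corporate network), and the is_permitted function are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str      # CIDR block allowed to connect
    protocol: str    # "tcp" or "udp"
    port: int        # destination port


# Hypothetical rules for the application tier.
APP_TIER_RULES = [
    Rule(source="10.0.0.0/24", protocol="tcp", port=80),      # HTTP from the load balancer tier
    Rule(source="198.51.100.0/24", protocol="tcp", port=22),  # SSH from the corporate network
]


def is_permitted(rules, source_ip: str, protocol: str, port: int) -> bool:
    """Allow traffic only if some rule matches; otherwise deny."""
    ip = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (
            ip in ipaddress.ip_network(rule.source)
            and protocol == rule.protocol
            and port == rule.port
        ):
            return True
    return False  # default deny
```

Because the function falls through to False, a new port or source range is reachable only after someone deliberately adds a rule for it.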

Once within your private subnet, you have to minimize the attack surface area of each individual server. To do this, start by disabling all unnecessary services, packages, applications, methods of user authentication, and anything else that you won’t be using. Then, using a host-based firewall like iptables or nftables, block all inbound and outbound access, opening ports only on an exception basis. On servers that host application servers, you should have only SSH (restricted to the IP range belonging to your corporate network) and HTTP (restricted to the tier above it) open. Use a configuration management approach per Chapter 5 in order to consistently apply your security policies. Your default deny policy is no good if it’s not actually implemented when a new server is built.
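One way to keep a host-level default deny policy consistent is to generate it from data rather than typing it by hand. The sketch below renders iptables commands as strings from a list of allowed sources and ports. The render_iptables function and the example ranges are assumptions for illustration, the output would need review before use on a real server, and in practice your configuration management tool would apply it.

```python
import ipaddress


def render_iptables(allow_inbound):
    """Render iptables commands for a default deny host firewall.

    allow_inbound is a list of (source_cidr, tcp_port) exceptions.
    """
    commands = [
        # Keep loopback and replies to established connections working.
        "iptables -A INPUT -i lo -j ACCEPT",
        "iptables -A OUTPUT -o lo -j ACCEPT",
        "iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
        "iptables -A OUTPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
    ]
    for source_cidr, port in allow_inbound:
        ipaddress.ip_network(source_cidr)  # raises ValueError if malformed
        if not 0 < port < 65536:
            raise ValueError(f"invalid port: {port}")
        commands.append(
            f"iptables -A INPUT -p tcp -s {source_cidr} --dport {port} -j ACCEPT"
        )
    # Set the default policies last so the exceptions are already in place.
    commands += [
        "iptables -P INPUT DROP",
        "iptables -P FORWARD DROP",
        "iptables -P OUTPUT DROP",
    ]
    return commands


# SSH from a hypothetical corporate range, HTTP from the load balancer tier.
commands = render_iptables([("198.51.100.0/24", 22), ("10.0.0.0/24", 80)])
```

Generating the rules this way means every new server built from the same definition starts with the same exceptions and the same DROP policies.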

Going a layer lower, hypervisors themselves can serve as an attack vector. When virtualization first started becoming mainstream, there were security issues with hypervisors. But today’s hypervisors are much more mature and secure than they used to be and are now deemed acceptable by all of the major information security management systems. Cloud vendors use highly customized hypervisors, offering additional security mechanisms above the base hypervisor implementation, like Xen. For example, firewalls are often built in between the physical server’s interface and the guest server’s virtual interface to provide an extra layer of security on top of what the hypervisor itself offers. Even if you configure your guest server’s network interface to be in promiscuous mode, which would normally let it receive all packets passing through the physical NIC, you won’t see any other guest’s traffic. Multiple layers of protection are in place to ensure that guests never see one another’s traffic. Separation of this sort occurs with all resources, including memory and storage.

Cloud vendors help to ensure that their hypervisors remain secure by frequently and transparently patching them and doing frequent penetration testing. If a cloud vendor’s hypervisor is found to have a security issue, that vendor’s entire business model is destroyed. They’re highly incentivized to get this right.

If you’re especially concerned about hypervisor security, you can always get dedicated servers instead of shared servers. This feature, offered by some cloud vendors, allows you to deploy one virtual server to an entire physical server.

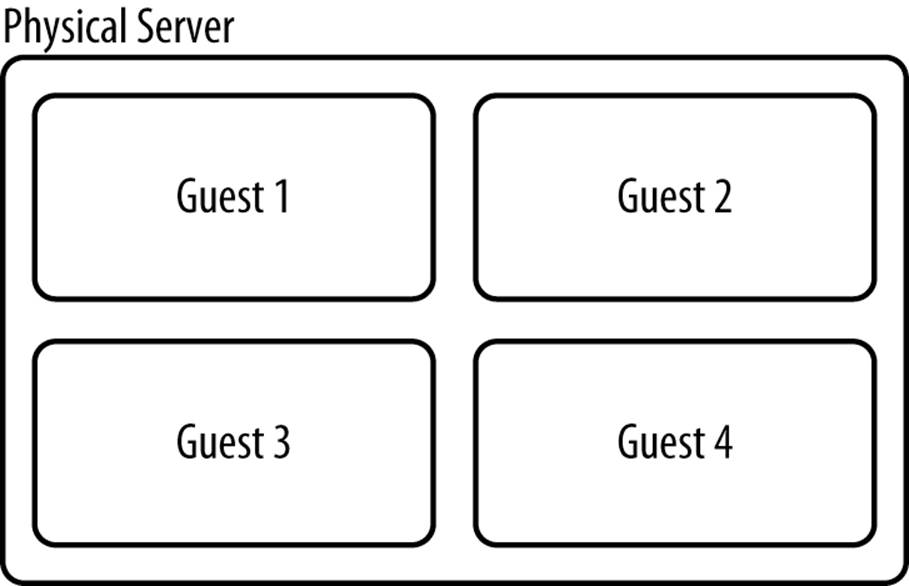

Instead of Figure 9-5, you can have one physical server and one vServer, as shown in Figure 9-6.

Figure 9-5. One physical server, four vServers

Figure 9-6. One physical server, one vServer

The price difference between the two models is often nominal, and you can use the same APIs you would normally use.

Protecting Data in Motion

Data can be in one of two states: in motion or at rest. Data that’s in motion is transient by nature. Think of HTTP requests, queries to a database, and the TCP packets that comprise those higher-order protocols. Data that’s at rest has been committed to a persistent storage medium, usually backed by a physical device of some sort. Any data in motion may be intercepted. To safeguard the data, you can encrypt it or use a connection that’s already secure.

Encryption is the most widely used method of protecting data in transit. Encryption typically takes the form of Secure Sockets Layer (SSL) or its successor, Transport Layer Security (TLS). SSL and TLS can be used to encrypt just about any data in motion, from HTTP [HTTP + (SSL or TLS) = HTTPS] to VPN traffic. Both SSL and TLS are well used and well supported by both clients and applications, making them the predominant approach to securing data in motion.
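As a minimal sketch of what requiring TLS looks like from application code, the following Python example builds a client-side context with the standard ssl module. The build_client_context name and the choice of TLS 1.2 as the floor are assumptions for illustration; pick the minimum version your own policy and your counterparties support.

```python
import ssl


def build_client_context() -> ssl.SSLContext:
    """Build a TLS context for outbound connections, e.g. to a payment gateway."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse older protocol versions (assumed policy: TLS 1.2 or newer).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_client_context()
# The context can then be passed to a client, for example:
#   http.client.HTTPSConnection("example.com", context=context)
```

Keeping certificate and hostname verification enabled matters as much as the encryption itself, since an unverified connection can be encrypted all the way to an attacker.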

TIP

Extensive use of SSL and TLS taxes CPU and reduces performance. By moving encryption and decryption to hardware, you can entirely eliminate the CPU overhead and almost eliminate the performance overhead. You can transparently offload encryption and decryption to modern x86 processors or specialized hardware accelerators.

SSL and TLS work great for the following:

§ Customers’ clients (web browsers, smartphones) <-> load-balancing tier

§ Load-balancing tier <-> application tier

§ Administrative console <-> cloud

§ Application tier <-> SaaS (like a payment gateway)

§ Application tier <-> your legacy applications

§ Any communication within a cloud

§ Any communication between clouds

SSL and TLS sit just below the application layer, on top of TCP, meaning that applications must be configured to use them. This generally isn’t a problem for clients that work with HTTP, as is common in today’s architectures. IPsec, on the other hand, will transparently work for all IP-based traffic, as it works a few layers lower than SSL and TLS, at the network layer, by protecting entire IP packets. You can use this for VPNs to connect to clients that don’t support SSL or TLS, such as legacy retail applications and the like. IPsec is commonly used for VPNs back to corporate data centers because of its flexibility.

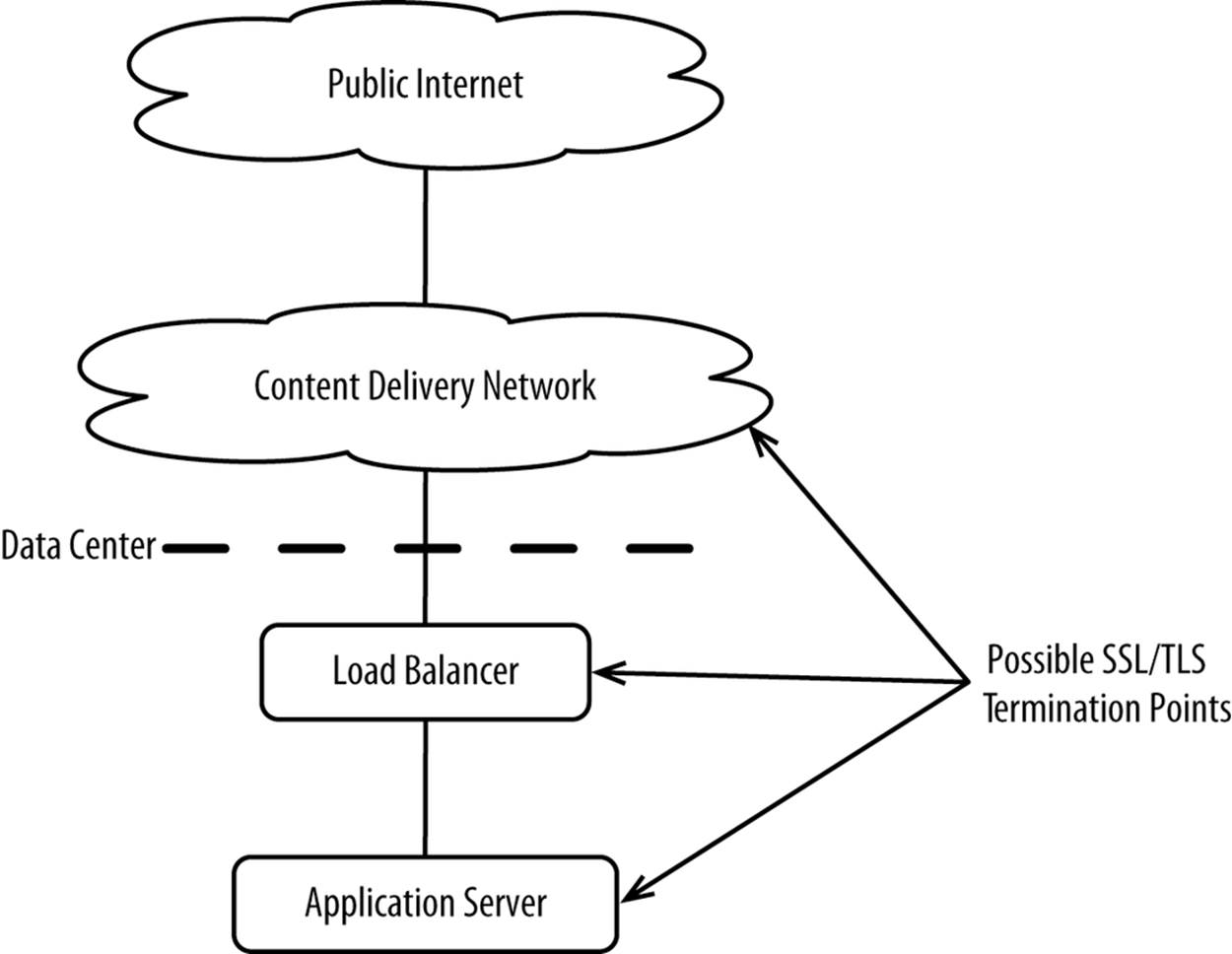

SSL, TLS, and IPsec all require that you terminate encryption. For example, you can terminate SSL or TLS within a Content Delivery Network, load balancer, or application server, as shown in Figure 9-7.

Figure 9-7. Possible SSL/TLS termination points

If you’re using a web server, you can terminate there, too. Each intermediary you pass encrypted traffic through may have to decrypt and then re-encrypt the traffic in order to view or modify the request. For example, if you terminate at your application tier and you have a web application firewall, you’ll need to decrypt and then re-encrypt all HTTP requests and responses so the web application firewall can actually see and potentially block application-level vulnerabilities.

Sometimes, it may be unnecessary or impossible to encrypt connections. We’ll talk about this in Chapter 11, but many cloud vendors offer colo vendors the ability to run dedicated fiber lines into their data centers. These private WANs are frequently used to connect databases hosted in a colo to application servers hosted in a cloud. These connections are dedicated and considered secure.

Once in a database, data transitions from being in motion to at rest.

Protecting Data at Rest

Data at rest is data that’s written to a persistent storage device, like a disk, whereas data in motion is typically traveling over a network between two hosts. Data at rest includes everything from the data managed by relational and NoSQL databases to logfiles to product images. Like data in motion, some is worth safeguarding, and some is not. Let’s explore.

To begin, you must first index and categorize all data that your system stores, applying different levels of security to each. This is a standard part of all information security management systems, as we discussed earlier in this chapter. If you can, reduce the amount of sensitive data that’s stored. You can:

§ Not store it

§ Reduce the length of time it needs to be stored

§ Tokenize it

§ Hash it

§ Anonymize it

§ Stripe it across multiple places

The less data you have, the less risk you have of it being stolen. Tokenization, for example, is substituting sensitive data in your system with a token. That way, even if your database were to be compromised, an attacker wouldn’t gain access to the sensitive data. Tokenization can be done at the edge by your CDN vendor or in your application. When you tokenize at the edge, only your CDN vendor and your payment gateway have access to the sensitive data.
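Tokenization can be sketched as follows. This is an illustration only: the TokenVault class, the tok_ prefix, and the in-memory dictionary are hypothetical stand-ins for a hardened tokenization service that would normally be run by your CDN vendor or payment gateway, outside your ecommerce database.

```python
import secrets


class TokenVault:
    """Hypothetical stand-in for a tokenization service."""

    def __init__(self):
        self._by_token = {}

    def tokenize(self, card_number: str) -> str:
        # The token is random, so it reveals nothing about the card number.
        token = "tok_" + secrets.token_urlsafe(16)
        self._by_token[token] = card_number
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back to the sensitive value.
        return self._by_token[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")  # well-known test card number

# Your own system stores only the token alongside the order.
order_record = {"order_id": 1001, "card_token": token}
```

If order_record were stolen, the attacker would hold a random string that is useless without access to the vault.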

Sensitive data that’s left over needs to be protected. Encryption is the typical means by which data at rest is safeguarded. You can apply a secure off-the-shelf algorithm to provide security, by encrypting files or entire filesystems. Encryption is a core feature of any storage system, including those offered by cloud vendors. To provide an additional level of security, you can encrypt your data twice—once before you store it and then again as you store it. The filesystem it’s being written to itself may be encrypted. Layering encryption is good, but just don’t overdo it. Encryption does add overhead, but it can be minimized by offloading encryption and decryption to modern x86 processors or specialized hardware accelerators.

Anytime you use encryption, you have to feed a key into your algorithm when you encrypt and decrypt. Your key is like a password, which must be kept safe, as anyone with access to an encryption key can decrypt data encrypted using that key. Hardware security modules (HSMs) are the preferred means for generating and safeguarding keys. HSMs contain hardware-based cryptoprocessors that excel at generating truly random numbers, which are important for secure key generation. These standalone physical devices can be directly attached to physical servers or attached over a network, and contain advanced hardware and software security features to prevent tampering. For example, HSMs can be configured to zero out all keys if the device detects tampering.
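The two HSM behaviors mentioned above, random key generation and zeroing keys out, can be mimicked in software to show the idea. This is a sketch under stated assumptions, not a substitute for an HSM: generate_key and zeroize are hypothetical names, the randomness comes from the operating system through Python's secrets module rather than a dedicated cryptoprocessor, and Python cannot guarantee that no other copy of the key remains in memory.

```python
import secrets


def generate_key(length_bytes: int = 32) -> bytearray:
    """Generate a random key (32 bytes = 256 bits) from the OS random source."""
    # A mutable bytearray is used so the key can be overwritten later.
    return bytearray(secrets.token_bytes(length_bytes))


def zeroize(key: bytearray) -> None:
    """Overwrite the key in place, loosely imitating an HSM's tamper response."""
    for i in range(len(key)):
        key[i] = 0


key = generate_key()
key_length = len(key)   # 32
zeroize(key)            # every byte of the key is now 0
```

An HSM performs both steps inside tamper-resistant hardware, so the key never has to exist in your application's memory at all.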

Some cloud vendors now offer dedicated HSMs that can be used within a cloud, though only the cloud vendor is able to physically install them. You can administer your HSMs remotely, with controls such as multifactor authentication providing additional security. If you want to use or manage your own HSMs, you can set them up in a data center you control, perhaps using a direct connection between your cloud vendor and a colo. This will be discussed further in Chapter 11.

Databases, whether relational or NoSQL, are typically where sensitive data is stored and retrieved. You have three options for hosting your databases:

§ Use your cloud vendor’s Database-as-a-Service offering

§ Set up a database in the cloud on infrastructure you provision

§ Host your database on premises in a data center that you control, using a direct connection between your cloud vendor and a colo (discussed further in Chapter 11)

All three approaches are perfectly capable of keeping your data secure, both in motion to the database and for data at rest once in a database. You can even encrypt data before you put it into the database, as an additional layer of security. Again, possession in no way implies security. What matters is having a strong information security management system, categorizing data appropriately, and employing appropriate controls.

Summary

The cloud isn’t inherently more or less secure than traditional on-premises solutions. Physical possession in no way implies security and can in fact make it more difficult to be secure by increasing the scope of your work. Control is what’s needed to be truly secure. Cloud vendors do a great job of taking care of lower-level security and giving you the tools you need to focus on making your own systems secure. Let your cloud vendors focus on securing the platform or infrastructure and below.

[60] Intel IT Center, “What’s Holding Back the Cloud,” (May 2012), http://intel.ly/1gAdtVB.

[61] Rackspace, “Reference Architecture: Enterprise Security for the Cloud,” (2013), http://bit.ly/1gAdwke.

[62] Erika McCallister, et al., “Guide to Protecting the Confidentiality of Personally Identifiable Information,” National Institute of Standards and Technology (April 2010), http://1.usa.gov/1gAdwAK.

[63] PCI Security Council, “Requirements and Security Assessment Procedures,” (November 2013), https://www.pcisecuritystandards.org/documents/PCI_DSS_v3.pdf.

[64] NIST, “Security and Privacy Controls for Federal Information Systems and Organizations,” (April 2013), http://1.usa.gov/1j2786S.