eCommerce in the Cloud (2014)

Part I. The Changing eCommerce Landscape

Chapter 2. How Is Enterprise eCommerce Deployed Today?

Prior to ecommerce, the Web was mostly static. Web pages consisted of HTML and images, with no CSS, no JavaScript, and no AJAX. SSL wasn’t even supported by web browsers until late 1994. Many leading ecommerce vendors, including Amazon, eBay, Tesco, and Dell.com, first came online in 1994 and 1995 with static websites. Naturally, people coming online wanted to be able to transact. Adding transaction capabilities to static HTML was a technical feat that required the following:

§ Using code to handle user input and generate HTML pages dynamically

§ Securing communication

§ Storing data in a persistent database

Today’s software and the architecture used to build these systems have largely remained the same since 1995. The innovations have been incremental at best.

TIP

With so much money generated by ecommerce, availability has trumped all else as a driving force behind architecture.

With the rise of omnichannel retailing and the increasing demands that come with it, the current approach to architecture is not sustainable. Let’s review the status quo.

Current Deployment Architecture

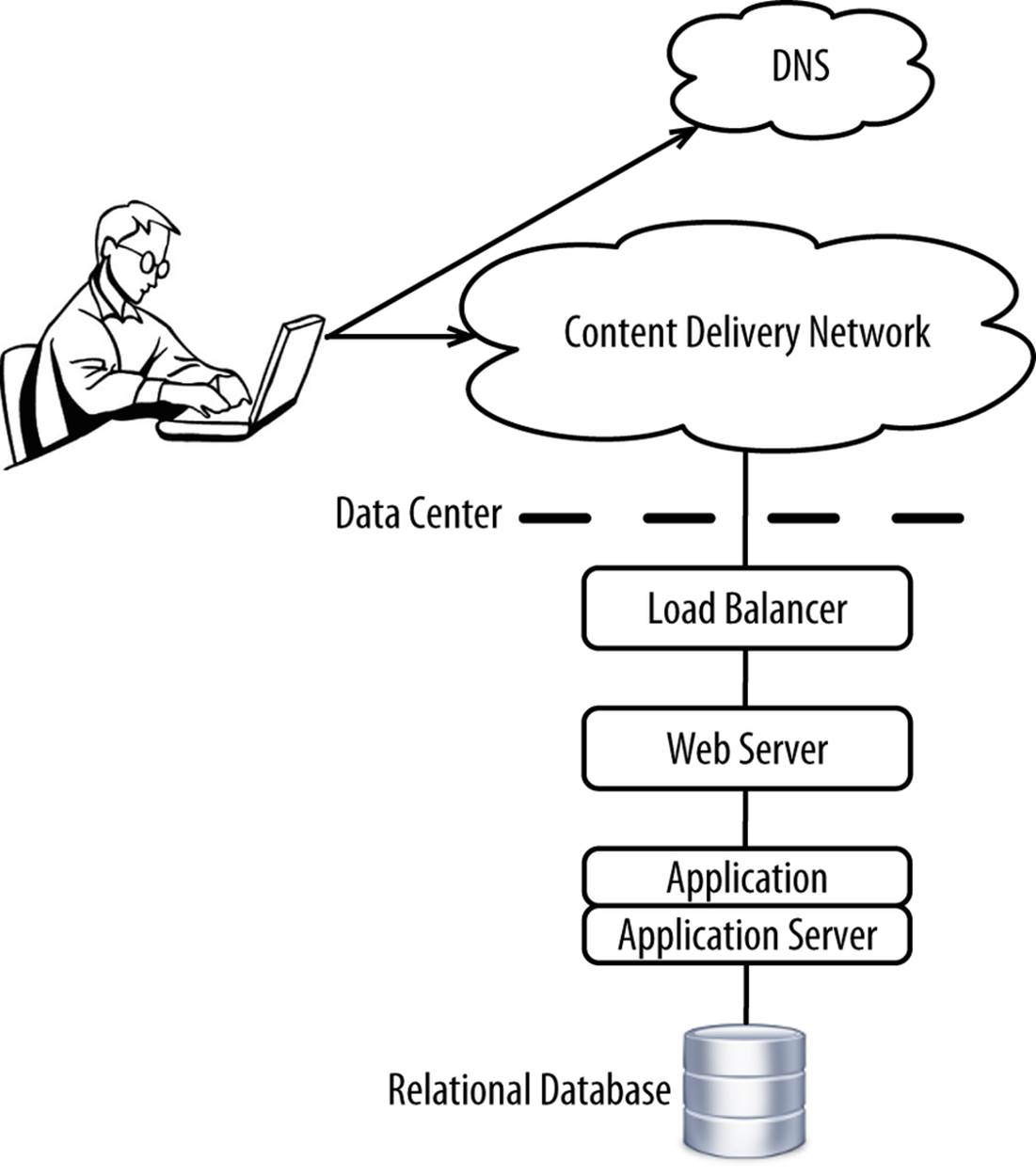

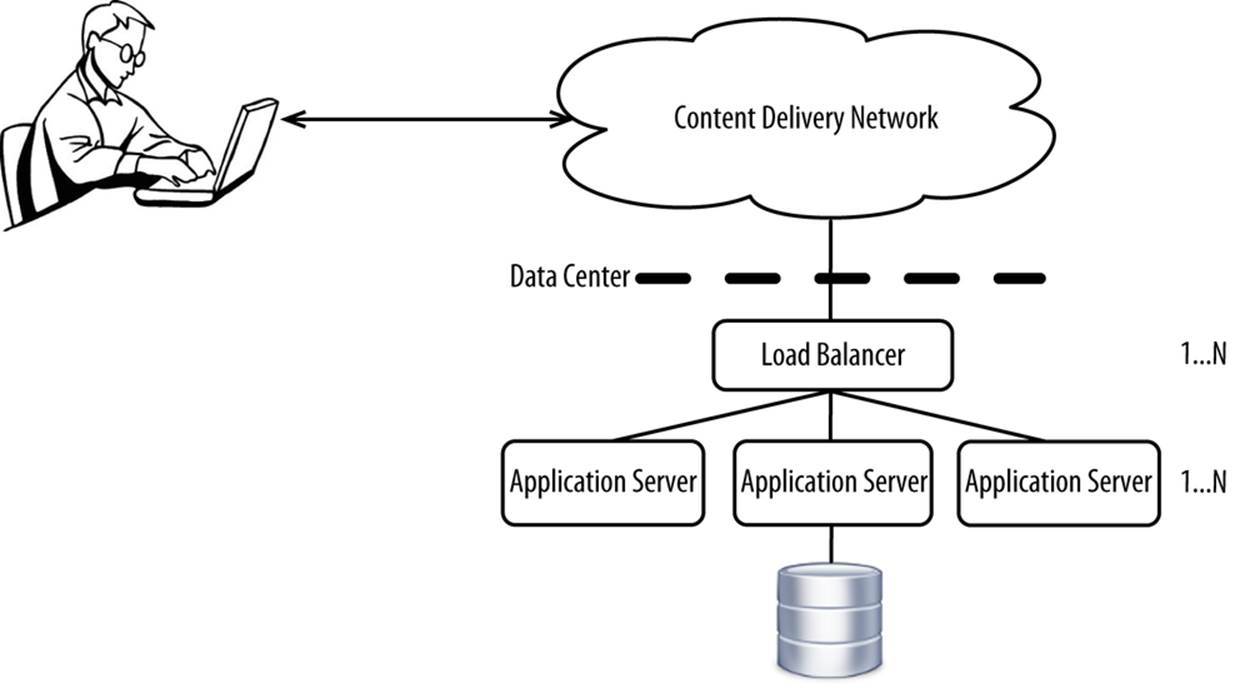

Most ecommerce deployment architectures follow the legacy three-tier deployment model consisting of web, application, and database tiers, as depicted in Figure 2-1.

Figure 2-1. Legacy three-tier eCommerce deployment architecture

The web servers make up the web tier and traditionally are responsible for serving static content up to Content Delivery Networks (CDNs). The middle tier comprises application servers and is able to actually generate responses that various clients can consume. The data tier is used for storing data that’s required for an application (e.g., product catalog, metadata of various types) and data belonging to customers (e.g., orders, profiles). We’ll discuss this throughout the chapter, but the technology that underlies this architecture has substantially changed.

Each channel typically has its own version of this stack, with either an integration layer or point-to-point integrations connecting everything together. All layers of the stack are typically deployed out of a single data center. This architecture continues to dominate because it’s a natural extension of the original deployment patterns from the early days of ecommerce and it generally works.

Let’s explore each layer in greater detail.

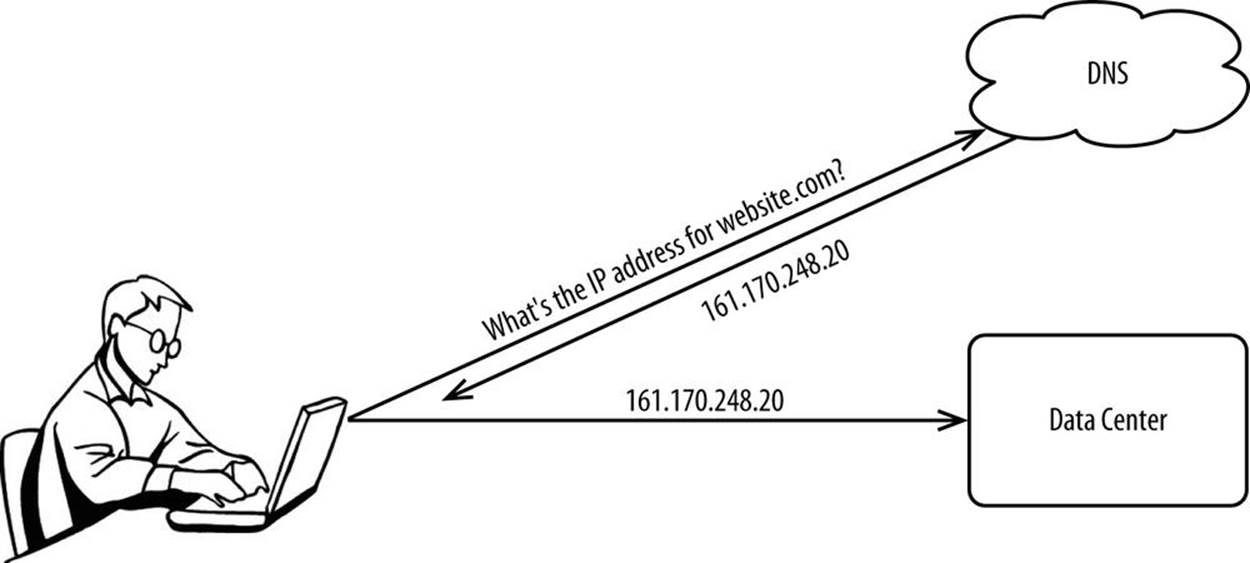

DNS

DNS is responsible for resolving friendly domain names (e.g., website.com) to an IP address (e.g., 161.170.248.20). See Figure 2-2.

Figure 2-2. Purpose of DNS

DNS exists so you can remember “website.com” instead of 161.170.248.20.

When an ecommerce platform is served out of a single data center, typically only one IP address is returned because the load balancers cluster to expose one IP address to the world. But with multiple data centers or multiple IPs exposed per data center, DNS becomes more complicated because one IP address has to be selected from a list of two or more (typically one per data center). The IP addresses are returned to the client in an ordered list, and the client connects to the first address in that list. If that IP isn’t responding, the client moves down the list sequentially until it finds one that works.
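The client-side behavior described above, walking the ordered list until an address responds, can be sketched roughly as follows. This is a minimal illustration, not how any particular browser or resolver is implemented. The function `connect_in_order`, the `try_connect` callback, and the second IP address (a documentation-only address) are assumptions made for the example.

```python
from typing import Callable, Optional, Sequence


def connect_in_order(
    addresses: Sequence[str],
    try_connect: Callable[[str], bool],
) -> Optional[str]:
    """Return the first address that accepts a connection, or None.

    `addresses` is the ordered list returned by DNS; `try_connect` is a
    hypothetical callback that returns True when the address responds.
    """
    for address in addresses:
        if try_connect(address):
            return address
    return None


# Illustrative data: the first data center is down, the second is healthy.
# 192.0.2.10 is a documentation-only address, not a real deployment.
dns_answer = ["161.170.248.20", "192.0.2.10"]
responding = {"192.0.2.10"}

chosen = connect_in_order(dns_answer, lambda ip: ip in responding)
print(chosen)
```

Note that the order of the list is the only "intelligence" in this scheme: the client has no idea why the first address failed or how loaded the second one is.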

Commonly used DNS servers, like BIND, work well with only one IP address per domain name. But as ecommerce has grown, deployments to multiple data centers in an active/active or active/passive configuration have become more common. Generally, operating from more than one data center requires the DNS server to choose between two or more IP addresses. Traditional DNS servers are capable of basic load-balancing algorithms such as round-robin, but they’re not capable of digging deeper than that to evaluate the real-time health of a data center, geographic location of the client, round-trip latency between the client and each data center, or the real-time capacity of each data center.

This more advanced form of load balancing is known as Global Server Load Balancing, or GSLB for short. GSLB solutions are almost required now because there is more than one IP address behind most domain names. GSLB solutions can take the following forms:

§ Hosted as a standalone service

§ Hosted as part of a Content Delivery Network (CDN)

§ Appliance based, residing in an on-premises data center

Many are beginning to move to hosted solutions. We’ll discuss DNS and GSLB in greater detail in Chapter 10, but needless to say, appliance-based GSLB will no longer work when moving to the cloud. Solutions must be software based and must be more intelligent.
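To illustrate the kind of decision a GSLB makes that plain round-robin DNS cannot, here is a small sketch that picks a data center based on health, latency, and remaining capacity. It is only one plausible policy under stated assumptions: the `DataCenter` fields, the 90% utilization cutoff, and all names and addresses are hypothetical, and real GSLB products weigh these factors in their own ways.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataCenter:
    name: str
    ip: str
    healthy: bool        # result of a real-time health check
    latency_ms: float    # measured round trip from the client's region
    utilization: float   # 0.0 (idle) to 1.0 (at capacity)


def pick_data_center(
    candidates: List[DataCenter], max_utilization: float = 0.9
) -> Optional[DataCenter]:
    """Pick the lowest-latency data center that is healthy and has headroom."""
    usable = [
        dc for dc in candidates
        if dc.healthy and dc.utilization < max_utilization
    ]
    if not usable:
        return None
    return min(usable, key=lambda dc: dc.latency_ms)


# Hypothetical fleet: "east" is closest but nearly full, "eu" is down.
data_centers = [
    DataCenter("east", "192.0.2.10", healthy=True, latency_ms=20.0, utilization=0.95),
    DataCenter("west", "192.0.2.20", healthy=True, latency_ms=70.0, utilization=0.40),
    DataCenter("eu", "192.0.2.30", healthy=False, latency_ms=10.0, utilization=0.10),
]

best = pick_data_center(data_centers)
print(best.name if best else "no data center available")
```

A round-robin DNS server would have handed out all three addresses in rotation, including the unhealthy and the overloaded one.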

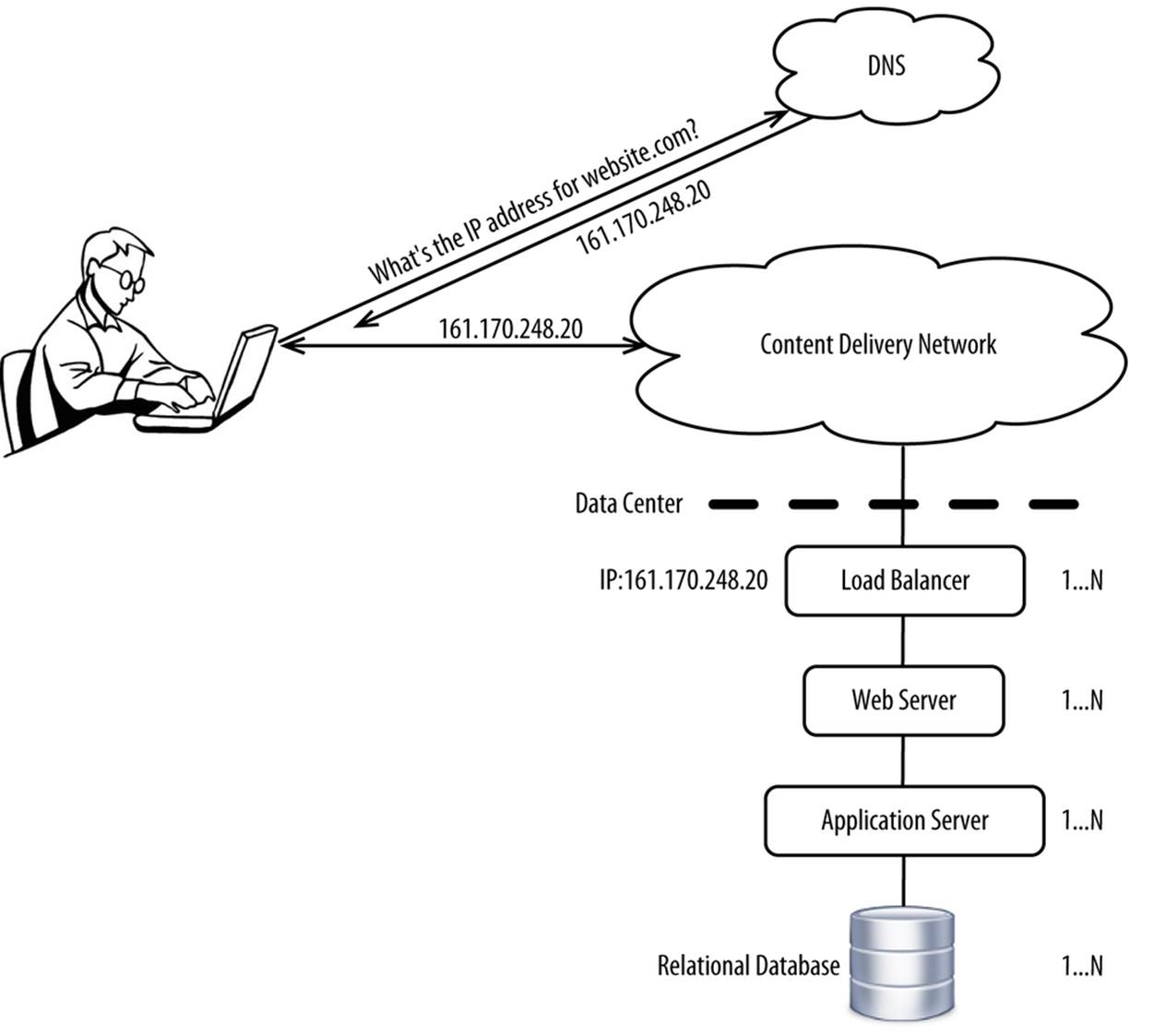

Intra Data Center Load Balancing

Today’s ecommerce platforms are served from hundreds or even thousands of physical servers, with only one IP address exposed to the world, as shown in Figure 2-3.

Load balancing takes place at every layer within a data center. Appliance-based hardware load balancers tend to be used today as the entry point into a data center and for load balancing within a data center. If a web server tier services traffic and sends it on to an application server tier, the web server often has load-balancing capabilities built in.

Load balancers have evolved over time from performing simple load-balancing duties to providing a full set of application control services. Even the term load balancer is now often replaced by most vendors with application delivery controller, which more accurately captures their expanding role. A few of those advanced features are as follows:

§ Static content serving

§ Dynamic page caching

§ SSL/TLS termination

§ URL rewriting

§ Redirection rules

§ Cache header manipulation

§ Dynamic page rewriting to improve performance

§ Web application firewall

§ Load balancing

§ Content compression

§ Rate limiting/throttling

§ Fast, secure connections to clouds

Figure 2-3. Load-balancing points within a data center

Load balancing in today’s ecommerce platforms will have to substantially change to meet future requirements.

Web Servers

In the early days of ecommerce, web servers were exposed directly to the public Internet, with each web server having its own IP address and own entry in the DNS record. To satisfy customers’ demands for truly transactional ecommerce, Common Gateway Interface (CGI) support was added to web servers. As needs outstripped the capabilities of CGI, application servers were added behind web servers, with web servers still responsible for serving static content and serving as the load balancer for a pool of application servers.

As ecommerce usage increased and the number of web servers grew, appliance-like hardware load balancers were added in front of the web servers. Web servers can’t cluster together and expose a single IP address to the world, as load balancers can. Load balancers also began to offer much of the same functionality as web servers. The line between web servers and load balancers blurred.

WARNING

Web servers are absolutely not required. In fact, their use is declining as the technology up and down stream of web servers has matured.

If web servers are used, they technically can perform the following functions:

§ Serving static content

§ Caching dynamic pages

§ Terminating SSL/TLS

§ Rewriting URLs

§ Applying redirect rules

§ Manipulating cache headers

§ Dynamic page rewriting to improve performance

§ Acting as a web application firewall

§ Load balancing

§ Compressing content

However, web servers usually just perform the following functions:

§ Serving static content to a Content Delivery Network

§ URL rewriting

§ Redirect rules

§ Load balancing

As CDNs, load balancers, and application servers have matured, functionality typically performed by web servers is increasingly being delegated to them. Table 2-1 shows what modern application servers, web servers, load balancers, and Content Delivery Networks are each capable of.

Table 2-1. Capabilities of modern application servers, web servers, load balancers, and Content Delivery Networks

|

Function |

Application servers |

Web servers |

Load balancers |

Content Delivery Networks (when used as reverse proxy) |

|

Serving static content |

X |

X |

X |

X |

|

Caching dynamic pages |

N/A |

X |

X |

X |

|

Terminating SSL |

X |

X |

X |

X |

|

URL rewriting |

X |

X |

X |

X |

|

Redirect rules |

X |

X |

X |

X |

|

Manipulating cache headers |

X |

X |

X |

X |

|

Dynamic page rewriting to improve performance |

X |

X |

X |

X |

|

Web application firewall |

X |

X |

X |

|

|

Load balancing |

N/A |

X |

X |

X |

|

Clustering to expose single Virtual IP Address (VIP) |

N/A |

X |

X |

N/A |

|

Content compression |

X |

X |

X |

X |

|

Rate limiting/throttling |

X |

X |

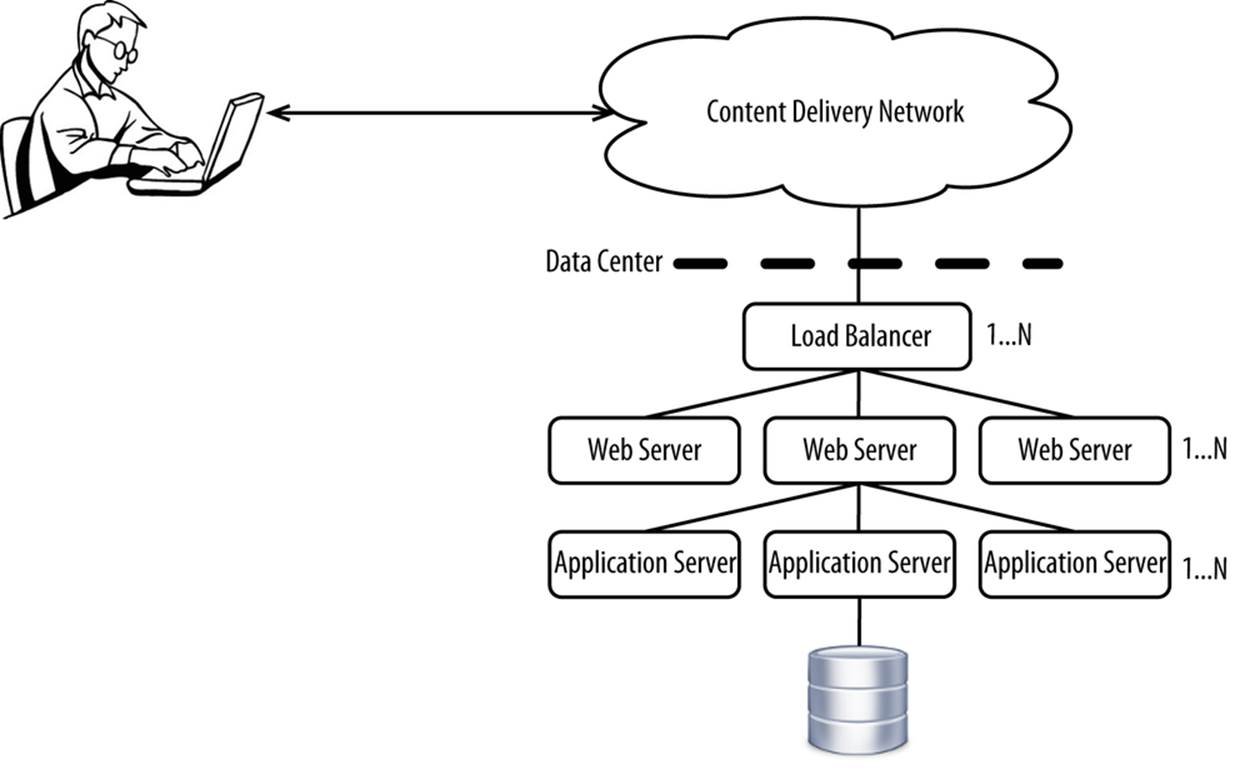

Given that application servers, load balancers, and Content Delivery Networks are required, web servers are becoming increasingly marginalized, with their use on the decline among major ecommerce vendors. The real problem is that web servers greatly complicate elasticity. Deployment architecture with web servers looks something like Figure 2-4.

Figure 2-4. Deployment architecture with web servers

Adding a new application server requires the following:

1. Provisioning hardware for the new application server

2. Installing/configuring the new application server

3. Deploying the application to the application server

4. Registering the application server with the web server or load balancer

If you need to add more web server capacity because of the increased application server capacity, you need to then do the following:

1. Provision hardware for the new web server.

2. Install/configure the new web server.

3. Register the new web server with the load balancer.

It can take a lot of work and coordination to add even a single application server. While some web server and application server pairs allow you to scale each tier independently, you still have to worry about dependencies. If you add many application servers without first adding web servers, you could run out of web server capacity. The order matters.
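The ordering dependency between the two tiers can be made concrete with a small planning sketch. It assumes, purely for illustration, that each web server can front a fixed number of application servers; the ratio, the function names, and the step wording are all hypothetical rather than taken from any product.

```python
import math
from typing import List


def web_servers_needed(app_servers: int, app_servers_per_web_server: int) -> int:
    """Web servers required to front a given number of application servers."""
    return math.ceil(app_servers / app_servers_per_web_server)


def scale_out_plan(
    current_web: int,
    current_app: int,
    app_to_add: int,
    app_servers_per_web_server: int,
) -> List[str]:
    """Return ordered steps; web capacity is added before application capacity."""
    target_app = current_app + app_to_add
    required_web = web_servers_needed(target_app, app_servers_per_web_server)
    web_to_add = max(0, required_web - current_web)

    steps = []
    if web_to_add:
        steps.append(
            f"add {web_to_add} web server(s) and register with the load balancer"
        )
    steps.append(
        f"add {app_to_add} application server(s) and register with the web tier"
    )
    return steps


# Hypothetical starting point: 2 web servers fronting 8 application servers.
plan = scale_out_plan(
    current_web=2, current_app=8, app_to_add=6, app_servers_per_web_server=4
)
for step in plan:
    print(step)
```

Even in this toy form, adding application capacity drags a second provisioning workflow along with it, which is the coordination cost the text describes.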

It’s often easier to eliminate the web server tier and push the responsibilities performed there to the CDN, load balancer, and application server. Many load balancers auto-detect new endpoints, making it even easier to add and remove capacity quickly. That architecture looks like Figure 2-5.

Figure 2-5. Deployment architecture without web servers

By flattening the hierarchy, you gain a lot of flexibility. Web servers can still add value if they’re doing something another layer cannot.

eCommerce Applications

The “e” is quickly being dropped from “ecommerce” to reflect the fact that there is no longer such a clear delineation between channels. Some retailers today are unable to report sales by channel because their channels are so intertwined. As discussed in Chapter 1, this one channel, called omnichannel, is serving as the foundation of all channels. Examples of channels include the following:

§ Web

§ Social

§ Mobile

§ Physical store

§ Kiosk

§ Chat

§ Call center

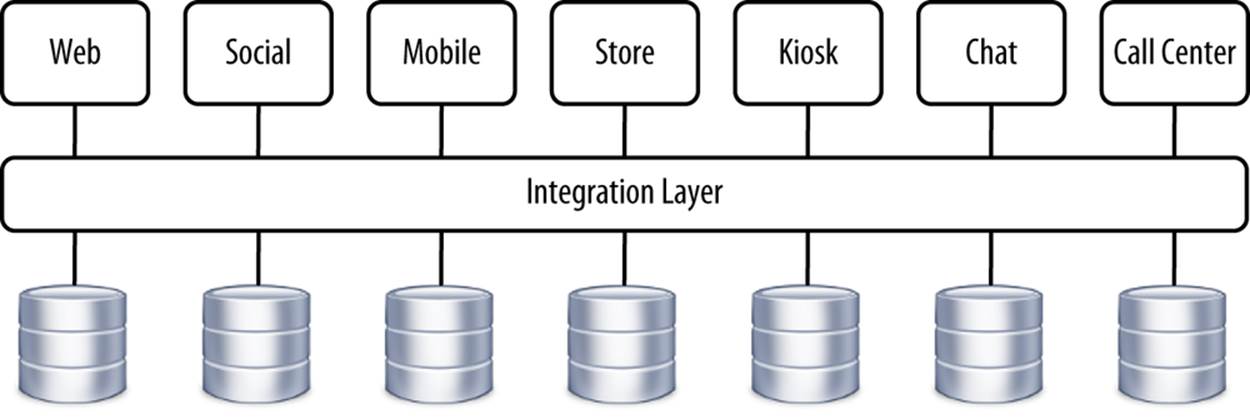

On one extreme, you’ll find that each channel has its own vertical stack, including a database, as shown in Figure 2-6.

Figure 2-6. Multichannel architecture featuring an integration layer

With this model, each channel is wired together with each other channel through liberal use of an integration layer. This is how ecommerce naturally evolved, but it suffers from many pitfalls:

§ A lot of code needs to be written to glue everything together.

§ Channels are always out of sync.

§ Differences in functionality leave customers upset (e.g., promotions online don’t show up in in-store point-of-sale systems).

§ Testing the entire stack is enormously complicated because of all of the resources that must be coordinated.

§ The same customer data could be updated concurrently from two or more channels, leading to data conflicts and a poor experience.

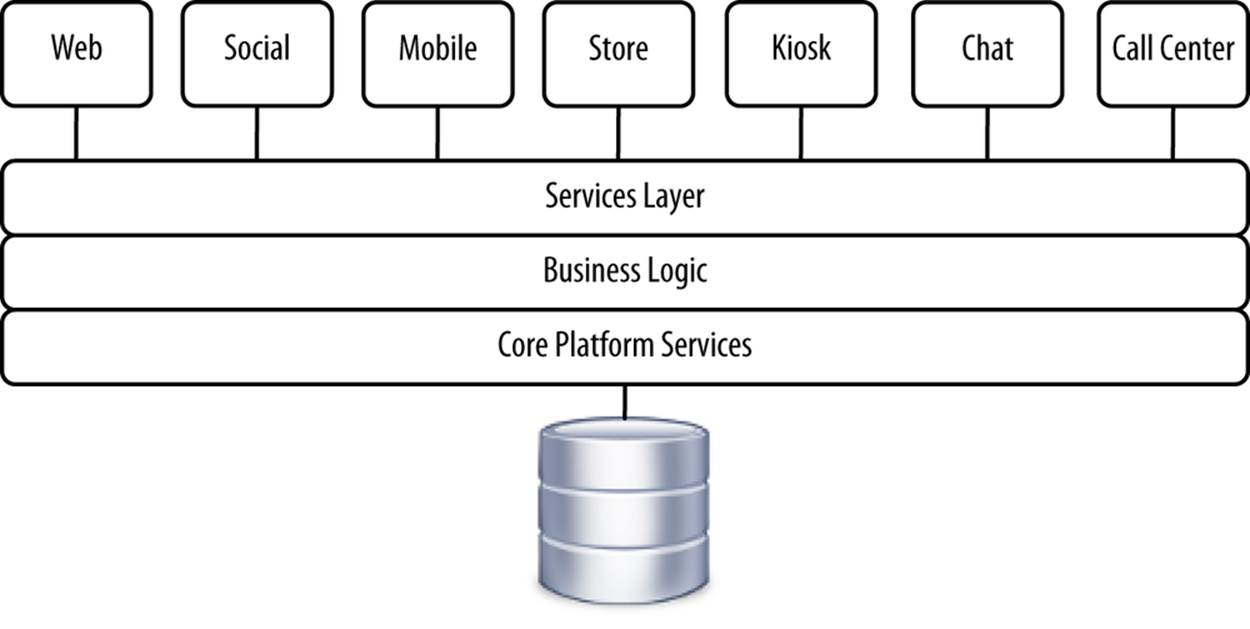

Over the past few years, there’s been a trend toward buying or building complete platforms, with one logical database and no integrations between channels, as shown in Figure 2-7.

Figure 2-7. Omnichannel architecture

Obviously, this takes time to fully achieve but is clearly the direction that the market is heading in. And it makes perfect sense, given the rise in omnichannel retailing and ever-increasing expectations.

TIP

Support of true omnichannel retailing is now the competitive differentiator for commercial ecommerce platforms.

Application Servers

Application servers, also known as containers, play a critical role in today’s ecommerce platforms. They provide the runtime environment for ecommerce applications and provide services such as HTTP request handling, HTTP session management, connections to the database, authentication, directory services, messaging, and other services that are consumed by ecommerce applications.

Today’s application servers are very mature, offering dozens of features that simplify the development, deployment, and runtime management of ecommerce applications. Over the years, they’ve continued to mature in the following ways:

§ More modular architecture, starting up only the services required by the application being deployed

§ Faster, lighter architectures

§ Tighter integration with databases, with some vendors offering true bidirectional communication with databases

§ Full integrations with cache grids

§ Improved diagnostics

§ Easier management

Application servers continue to play a central role in today’s ecommerce platforms.

Databases

Databases continue to play an important role in modern ecommerce architecture. Common examples of data in an ecommerce application that need to be stored include orders, profiles, products, SKUs, inventory, prices, ratings and reviews, and browsing history. Data can be stored using three high-level approaches.

Fully normalized

Normalized data has a defined, rigid structure, with constraints involving data types, whether each column is required, and so on. Here’s how you would define a very simple product table for a relational SQL database:

CREATE TABLE PRODUCT

(

PRODUCT_ID VARCHAR(255) NOT NULL,

NAME VARCHAR(255) NOT NULL,

DESCRIPTION CLOB NOT NULL,

PRIMARY KEY (PRODUCT_ID)

);

To retrieve the data, you would execute a query:

SELECT * FROM PRODUCT;

and get back these results:

| PRODUCT_ID | NAME | DESCRIPTION |
| --- | --- | --- |
| SK3000MBTRI4 | Thermos Stainless King 16-Ounce Food Jar | Constructed with double-wall stainless steel, this 16-ounce food jar is virtually unbreakable, yet its sleek design is both eye-catching and functional… |

This format very much mimics a spreadsheet. With data in a normalized format, you can execute complex queries, ensure the integrity of the data, and selectively update bits of data. Relational databases are built for the storage and retrieval of normalized data and have been used extensively for ecommerce.
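The table definition and query above can be exercised end to end with Python’s built-in `sqlite3` module. This is only a sketch: SQLite is an assumption chosen because it needs no server, it treats `VARCHAR(255)` and `CLOB` as text without enforcing lengths, and the product description is shortened here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute(
    """
    CREATE TABLE PRODUCT
    (
        PRODUCT_ID  VARCHAR(255) NOT NULL,
        NAME        VARCHAR(255) NOT NULL,
        DESCRIPTION CLOB NOT NULL,
        PRIMARY KEY (PRODUCT_ID)
    )
    """
)
conn.execute(
    "INSERT INTO PRODUCT (PRODUCT_ID, NAME, DESCRIPTION) VALUES (?, ?, ?)",
    (
        "SK3000MBTRI4",
        "Thermos Stainless King 16-Ounce Food Jar",
        "Constructed with double-wall stainless steel...",
    ),
)

# Selectively update one column without touching the rest of the row.
conn.execute(
    "UPDATE PRODUCT SET NAME = ? WHERE PRODUCT_ID = ?",
    ("Thermos Stainless King Food Jar, 16 oz", "SK3000MBTRI4"),
)

rows = conn.execute("SELECT * FROM PRODUCT").fetchall()
print(rows)

# The NOT NULL constraint rejects a row that is missing its description.
try:
    conn.execute(
        "INSERT INTO PRODUCT (PRODUCT_ID, NAME) VALUES ('X1', 'No description')"
    )
    constraint_enforced = False
except sqlite3.IntegrityError:
    constraint_enforced = True
print(constraint_enforced)
```

The update and the rejected insert correspond to two of the benefits named above: selective updates and enforced data integrity.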

Traditional relational databases tend to be ACID compliant. ACID stands for:[36]

Atomicity

The whole transaction either succeeds or fails.

Consistency

The transaction is committed without violating any integrity constraints (e.g., data type, whether column is nullable, foreign key constraints).

Isolation

Each transaction is executed in its own private sandbox and not visible to any other transaction until it is committed.

Durability

A committed transaction will not be lost.

Most ecommerce applications use an ACID-compliant relational database, scaled both vertically and horizontally, for the core order and profile data. Relational databases used to be a primary bottleneck for ecommerce applications, but as the technology has matured, they are rarely the bottleneck they once were, provided appropriate coding practices are followed. Application-level caching (both in-memory and to data grids) has helped to further scale relational databases.
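Atomicity and consistency are easiest to see with a transaction that fails halfway. The sketch below again assumes SQLite through Python’s `sqlite3` module; the `INVENTORY` and `ORDERS` tables, their columns, and the `place_order` helper are hypothetical and exist only for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE INVENTORY ("
    " SKU TEXT PRIMARY KEY,"
    " QUANTITY INTEGER NOT NULL CHECK (QUANTITY >= 0))"
)
conn.execute(
    "CREATE TABLE ORDERS ("
    " ORDER_ID INTEGER PRIMARY KEY,"
    " SKU TEXT NOT NULL,"
    " QUANTITY INTEGER NOT NULL)"
)
conn.execute("INSERT INTO INVENTORY VALUES ('SK3000MBTRI4', 1)")
conn.commit()


def place_order(connection: sqlite3.Connection, sku: str, quantity: int) -> bool:
    """Record an order and decrement stock as one transaction."""
    try:
        with connection:  # commits on success, rolls back on an exception
            connection.execute(
                "INSERT INTO ORDERS (SKU, QUANTITY) VALUES (?, ?)", (sku, quantity)
            )
            connection.execute(
                "UPDATE INVENTORY SET QUANTITY = QUANTITY - ? WHERE SKU = ?",
                (quantity, sku),
            )
        return True
    except sqlite3.IntegrityError:
        return False


first = place_order(conn, "SK3000MBTRI4", 1)   # succeeds, stock drops to 0
second = place_order(conn, "SK3000MBTRI4", 1)  # CHECK fails, order is rolled back

order_count = conn.execute("SELECT COUNT(*) FROM ORDERS").fetchone()[0]
stock = conn.execute("SELECT QUANTITY FROM INVENTORY").fetchone()[0]
print(first, second, order_count, stock)
```

The second order row is inserted before the inventory update fails, yet it never becomes visible: the whole transaction is undone, so the data never shows an order without the matching stock decrement.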

NoSQL

An increasingly popular alternative to a relational database is a key/value or document store. Rather than breaking up and storing the data in a fully normalized format, the data is represented as XML, JSON, or even a binary format, with the data available only if you know the record’s key. Implementations vary widely, but collectively these are known as NoSQL solutions. Here’s an example of what might be stored for the same product in the preceding section:

{

"name": "Thermos Stainless King 16-Ounce Food Jar",

"description": "Constructed with double-wall stainless steel, this 16-ounce food jar is virtually unbreakable, yet its sleek design is both eye-catching and functional...",

...

}

NoSQL is increasingly used for caching and for storing nonrelational media, such as documents and other data that doesn’t need to be stored in an ACID-compliant database. NoSQL solutions generally sacrifice strict consistency, and sometimes availability, in exchange for performance and the ability to scale in a distributed manner. Product images, ratings and reviews, browsing history, and other similar data are very well suited to NoSQL databases, where ACID compliance isn’t an issue.
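The access pattern of a key/value or document store, where a record is reachable only through its key, can be sketched with a toy in-memory class. `KeyValueStore` is hypothetical and stands in for no particular NoSQL product; real stores add distribution, replication, and persistence on top of this basic contract.

```python
import json
from typing import Dict, Optional


class KeyValueStore:
    """Toy in-memory store: opaque JSON documents addressed only by key."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, document: dict) -> None:
        # The store does not inspect or validate the document's structure.
        self._data[key] = json.dumps(document)

    def get(self, key: str) -> Optional[dict]:
        raw = self._data.get(key)
        return json.loads(raw) if raw is not None else None


store = KeyValueStore()
store.put(
    "SK3000MBTRI4",
    {
        "name": "Thermos Stainless King 16-Ounce Food Jar",
        "description": "Constructed with double-wall stainless steel...",
    },
)

product = store.get("SK3000MBTRI4")  # found: the key is known
missing = store.get("UNKNOWN-KEY")   # None: no way to search by name here
print(product["name"], missing)
```

There is no schema and no query language in this sketch; finding a product by name would require scanning every document or maintaining a separate index.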

NoSQL is increasingly beginning to find its place in large-scale ecommerce. The technology has real value, but it’s going to take some time for the technology and market to mature to the level of relational databases. Relational databases have been around in their modern form since the 1970s. NoSQL has been around for just a few years.

We’ll discuss ACID, BASE, and related principles in Chapter 10.

Fully denormalized

Historically, a lot of data was stored in plain HTML format. Merchandisers would use WYSIWYG editors and save the HTML itself, either in a file or in a database. These HTML fragments would then be inserted into the larger pages to form complete web pages. Data looked like this:

<h2>Thermos Stainless King 16-Ounce Food Jar</h2>

<div id="product_description">

Constructed with double-wall stainless steel, this

16-ounce food jar is virtually unbreakable, yet its

sleek design is both eye-catching and functional...

</div>

One of the many disadvantages of this approach is that you can’t reuse this data across channels. This will work for a web page, but how can you get an iPhone application to use this? This approach is on the decline and shouldn’t be used anymore.
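The alternative implied here is to store the product as structured data and render it per channel. The following sketch shows the idea; the function names and the choice of JSON for a mobile client are assumptions for illustration, not a prescribed design.

```python
import html
import json

# Structured product data, stored once and independent of any channel.
product = {
    "name": "Thermos Stainless King 16-Ounce Food Jar",
    "description": "Constructed with double-wall stainless steel...",
}


def render_for_web(item: dict) -> str:
    """Produce the HTML fragment a web page would embed."""
    return (
        f"<h2>{html.escape(item['name'])}</h2>\n"
        f'<div id="product_description">{html.escape(item["description"])}</div>'
    )


def render_for_mobile(item: dict) -> str:
    """Produce a JSON payload a native mobile application could consume."""
    return json.dumps(item)


web_fragment = render_for_web(product)
mobile_payload = render_for_mobile(product)
print(web_fragment)
print(mobile_payload)
```

Going in the other direction, from a stored HTML fragment back to clean fields, would require parsing markup that merchandisers were free to write however they liked.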

Hosting

A key consideration for ecommerce success is the hosting model. Hosting includes at a minimum the physical data center, racks for hardware, power, and Internet connectivity. This is also known as ping, power, and pipe. Additionally, vendors can offer computing hardware, supporting infrastructure such as networking and storage, and various management services with service-level agreements.

TIP

From a hosting standpoint, the cloud is much more evolutionary than revolutionary. It’s been common for years to not own the physical data centers that you operate from. The hardware used to serve your platform and the networks over which your data travels are frequently owned by the data center provider. The cloud is no different in this regard.

Let’s look at today’s common hosting models, shown in Table 2-2.

Table 2-2. Today’s common hosting models

| Attribute | Self-hosted on-premises | Self-hosted off premises | Colocation | Fully managed hosting | Public Infrastructure-as-a-Service |
| --- | --- | --- | --- | --- | --- |
| Who owns data center | You | You | Colo vendor | Hosting vendor | IaaS vendor |
| Physical location of data center | Your office | Remote | Remote | Remote | Remote |
| Who owns hardware | You | You | You or colo vendor | You or managed hosting vendor | IaaS vendor |
| Dedicated hardware | Yes | Yes | Yes | Probably | Maybe |
| Who builds infrastructure | You | You | You or colo vendor | Hosting vendor | IaaS vendor |
| Who provisions infrastructure | You | You | You or colo vendor | Hosting vendor | IaaS vendor |
| Who patches software | You | You | You or colo vendor | Hosting vendor | IaaS vendor |
| Accounting model | CAPEX | CAPEX | CAPEX | OPEX | OPEX |

It’s rare to find an enterprise-level ecommerce vendor that self-hosts on premises. That used to be the model, but over the past two decades there has been a sharp movement toward fully managed hosting and now public Infrastructure-as-a-Service. Large, dedicated vendors offer much better data centers and supporting infrastructure for ecommerce. It doesn’t take much to outgrow an on-premises data center. These dedicated vendors offer the following features:

§ Highly available power through multiple suppliers and the use of backup generators

§ Direct connections to multiple Internet backbones

§ High security, including firewalls, guards with guns, and physical biometric security

§ Placement of data centers away from flood plains, away from areas prone to earthquakes, and near cheap power

§ Advanced fire suppression

§ High-density cooling

Dedicated vendors offer far more than you can build on premises in your own data center, at substantially lower cost. Some of these data centers are millions of square feet.[37] The marginal cost these vendors incur for one more tenant is minuscule, allowing them to pass some of that savings along to you. Economies of scale is the guiding principle for these vendors.

Most of these vendors offer services to complement their hardware and infrastructure offerings. These services include the following (in ascending order of complexity):

§ Power cycling

§ Management from the operating system on down (including patching)

§ Shared services such as storage and load balancing

§ Management from the application server on down (including patching)

§ Management from the application(s) on down

§ Ongoing application-level development/maintenance

Platform-as-a-Service and Software-as-a-Service are what Infrastructure-as-a-Service vendors have built on top of their infrastructure in an attempt to move up the value chain and earn more revenue with higher margins.

TIP

Anything that you cannot differentiate on yourself should be outsourced. This is now especially true of computing power.

Limitations of Current Deployment Architecture

Present-day ecommerce deployment architecture is guided by decades-old architecture patterns, with availability being the driver behind all decisions. People are often incentivized for platform availability and punished, often with firings, for outages. An outage in today’s increasingly omnichannel world is akin to barring customers from entering all of your physical retail stores. Since physical retail stores are increasingly using a single omnichannel ecommerce platform for in-store point-of-sale systems, an outage will actually prevent in-store sales, too. Keeping the lights on is the imperative that comes at the cost of just about everything else.

Current deployment architecture suffers from numerous problems:

§ Everything is statically provisioned and configured, making it difficult to scale up or down.

§ The platform is scaled for peaks, meaning most hardware is grossly underutilized.

§ Outages occur with rapid spikes in traffic.

§ Too much time is spent building infrastructure as opposed to higher value-added activities.

Cloud computing can overcome these issues. Let’s explore each one of these a bit further.

Static Provisioning

Most ecommerce vendors statically build and configure environments. The problem with this is that ecommerce traffic is inherently elastic. Traffic can easily increase by 100 times over baseline. All it takes is for the latest pop star to tweet about your brand to his or her 50 million followers, and pretty quickly you’ll see exponential traffic as others re-tweet the original tweet. The world is so much more connected than it used to be. Either you have to scale for peak or you risk failing under heavy load. The cost of failing often outweighs the cost of buying a few more servers, so servers are over-provisioned, often by many times more than is necessary.

Static provisioning is bad because it’s inefficient. You can’t scale up or down based on real-time demand. This leads to ecommerce vendors scaling for peaks as opposed to scaling for actual load. Because nobody wants to get fired, everybody just wildly over-provisions in an attempt to maintain 100% uptime. Over-provisioning leads to serious issues:

§ Wasted data center space, which is increasingly expensive

§ Unnecessary cost, due to data center and human cost

§ Focus away from core competency—whether that’s selling the latest basketball shoe or selling forklifts

Most ecommerce vendors have to statically provision because their ecommerce platforms don’t lend themselves to scaling dynamically. For example, many ecommerce platforms require ports and IP addresses to be hardcoded in configuration files. The whole industry was built around static provisioning. The cloud and the concept of dynamic provisioning are recent developments.
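By contrast, sizing for real-time demand means recomputing the server count as load changes. The sketch below is a deliberately simple policy under stated assumptions: the per-server capacity, the 60% target utilization, the minimum of two servers, and the traffic figures are hypothetical numbers chosen only to show the calculation.

```python
def desired_servers(
    requests_per_second: int,
    capacity_per_server: int,
    target_utilization_pct: int = 60,
    minimum: int = 2,
) -> int:
    """Servers needed so each one runs at or below the target utilization.

    Integer arithmetic is used so the rounding up is exact.
    """
    usable_capacity_x100 = capacity_per_server * target_utilization_pct
    needed = -(-requests_per_second * 100 // usable_capacity_x100)  # ceiling
    return max(minimum, needed)


baseline_rps = 300                 # hypothetical steady-state traffic
spike_rps = baseline_rps * 100     # the 100x spike described in the text

baseline_servers = desired_servers(baseline_rps, capacity_per_server=100)
spike_servers = desired_servers(spike_rps, capacity_per_server=100)
print(baseline_servers, spike_servers)
```

A statically provisioned platform has to own the spike-sized fleet all year; a dynamically provisioned one only needs it for the duration of the spike.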

Scaling for Peaks

Many ecommerce vendors simply guess at what their peaks will be and then multiply that by five in order to size their production environments. Hardware is statically deployed, sitting idle except for the few hours of the year where it spikes up to 20% utilization. The guiding factor has been to have 100% uptime, as downtime leads to unemployment. Then hardware must be procured for development and test environments, which are hardly ever used.

When you put together all of the typical environments needed, Table 2-3 shows the result, where 100% represents the hardware needed to support actual peak production traffic.

Table 2-3. Cumulative amount of hardware required across ecommerce environments

| Environment | % of production (actual peak traffic) | Cumulative % |
| --- | --- | --- |
| Production: Typical utilization | 10% | n/a |
| Production: Actual peak | 100% | 100% |
| Production: Actual peak + Padding/Safety factor | 500% | 500% |
| Production: Clone of primary environment | 500% | 1000% |
| Staging: Environment 1 | 50% | 1050% |
| Staging: Environment 2 | 50% | 1100% |
| Staging: Environment 3 | 50% | 1150% |
| QA: Environment 1 | 25% | 1175% |
| QA: Environment 2 | 25% | 1200% |
| QA: Environment 3 | 25% | 1225% |
| Development: Environment 1 | 10% | 1235% |
| Development: Environment 2 | 10% | 1245% |
| Development: Environment 3 | 10% | 1255% |

This is truer for larger vendors than it is for smaller vendors, who tend to not have the money to build out so many environments.

Because traffic can be so prone to rapid spikes, many ecommerce vendors multiply their actual expected peak by five so that peak consumes only 20% of the CPU or whatever the limiting factor is. Then most set up a mirror of production in a different physical data center for disaster recovery purposes or as a fully active secondary data center. On top of production, there are multiple preproduction environments, all being some fraction of production. Each branch of code typically requires its own environment. This amounts to a lot of hardware!

Let’s apply this math to an example, shown in Table 2-4. An ecommerce vendor needs 50 physical servers to handle actual production load at peak.

Table 2-4. Example of how many servers there are

| Environment | % of production (actual peak traffic) | Servers |
| --- | --- | --- |
| Production: Actual peak | 100% | 50 |
| Production: Actual peak + Padding/Safety factor | 500% | 250 |
| Production: Clone of primary environment | 500% | 250 |
| Staging: Environment 1 | 50% | 25 |
| Staging: Environment 2 | 50% | 25 |
| Staging: Environment 3 | 50% | 25 |
| QA: Environment 1 | 25% | 13 |
| QA: Environment 2 | 25% | 13 |
| QA: Environment 3 | 25% | 13 |
| Development: Environment 1 | 10% | 5 |
| Development: Environment 2 | 10% | 5 |
| Development: Environment 3 | 10% | 5 |
| Total | | 629 |

A total of 629 physical servers are required across all environments.

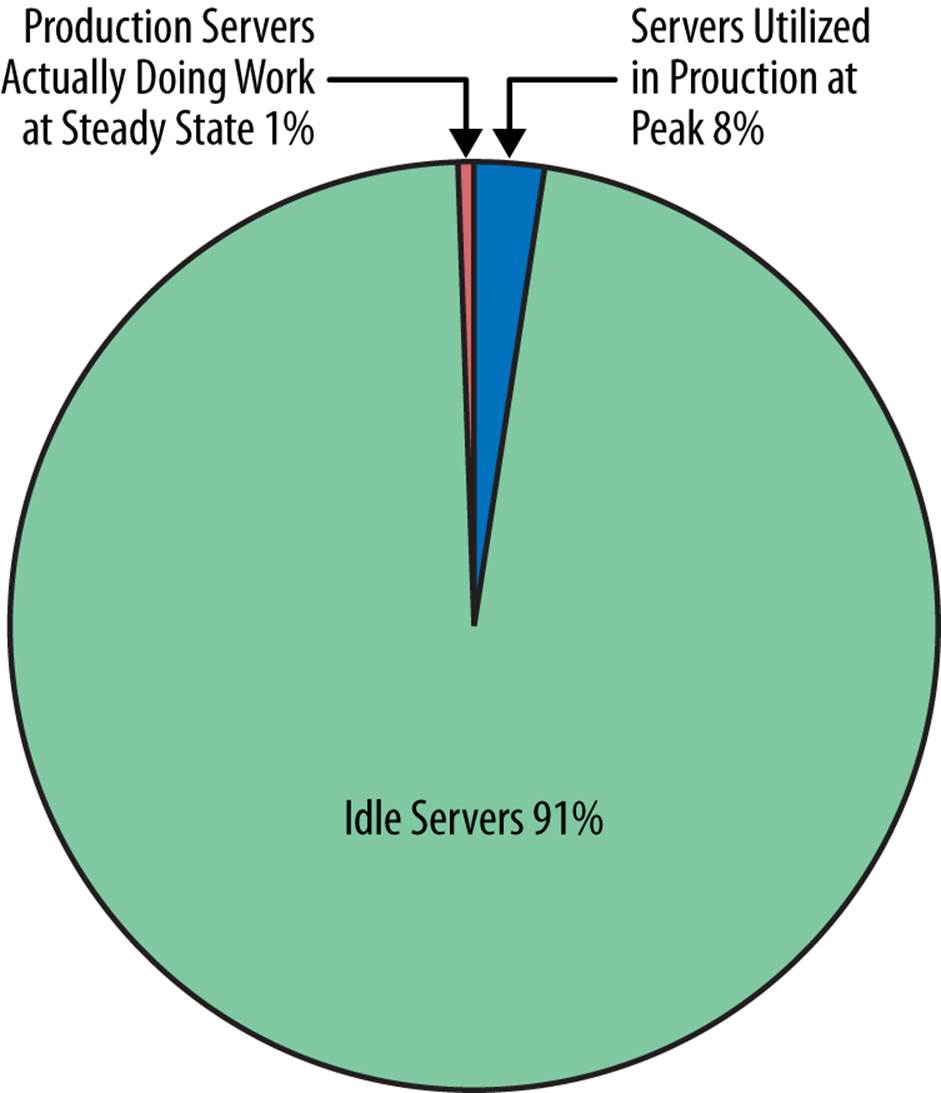

With normal production traffic, only five servers are required (10% of actual peak of 50 servers = 5). Development and QA environments are rarely used, with the only customers being internal QA testers. Staging environments are periodically used for load tests and for executives to preview functionality, but that’s about it. Figure 2-8 shows just how underutilized hardware often is.

Figure 2-8. Utilized versus unutilized hardware

Only about 1% of total servers (5 out of 629) are actually being utilized at steady state in this example. Just moving preproduction environments to the cloud would save an enormous amount of money.
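The arithmetic behind Table 2-4 and the steady-state utilization figure can be reproduced in a few lines. The percentages and the 50-server peak come from the tables above; rounding each environment up to a whole server is an assumption that matches the 13 servers shown for each QA environment. The "actual peak" row is not added separately because it is already contained in the padded production figure.

```python
PEAK_SERVERS = 50  # servers needed for actual production peak

# (environment, percent of actual peak), as listed in Table 2-4.
ENVIRONMENTS = [
    ("Production: actual peak + padding/safety factor", 500),
    ("Production: clone of primary environment", 500),
    ("Staging 1", 50),
    ("Staging 2", 50),
    ("Staging 3", 50),
    ("QA 1", 25),
    ("QA 2", 25),
    ("QA 3", 25),
    ("Development 1", 10),
    ("Development 2", 10),
    ("Development 3", 10),
]


def servers_for(percent: int, peak: int = PEAK_SERVERS) -> int:
    """Round up, since a fraction of a physical server cannot be bought."""
    return (peak * percent + 99) // 100


counts = {name: servers_for(pct) for name, pct in ENVIRONMENTS}
total_servers = sum(counts.values())

steady_state_servers = servers_for(10)  # typical utilization is 10% of peak
utilization_pct = 100 * steady_state_servers / total_servers

print(total_servers, steady_state_servers, round(utilization_pct, 1))
```

Changing the safety factor or the number of preproduction environments in `ENVIRONMENTS` shows quickly how sensitive the total is to those two choices.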

Outages Due to Rapid Scaling

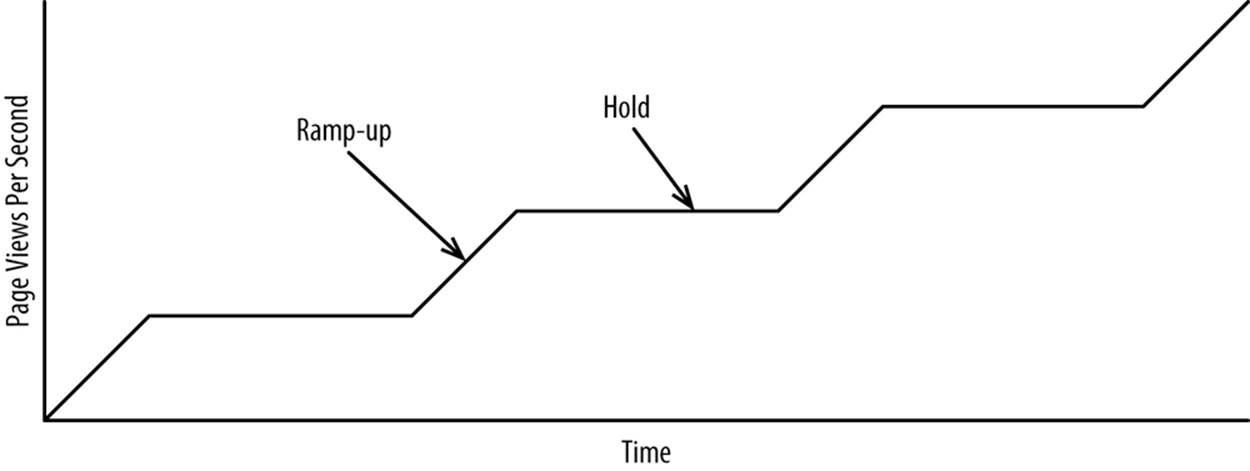

Because the stack is so underutilized, rapid spikes in traffic often bring down entire platforms. Load tests use carefully crafted ramp-up times, which guide how quickly virtual customers are added. After each ramp period, there’s always a period where the platform is allowed to stabilize. Stabilizing, also known as leveling, is done to allow the system time to recover from having a lot of load thrown at it. Load tests often look something like Figure 2-9.

Figure 2-9. Traffic from load test
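The stepped shape of a typical load test, with ramp periods followed by leveling periods, can be generated with a short function. This is a sketch of the general pattern only; the step size, durations, and function name are hypothetical and not tied to any load-testing tool.

```python
from typing import List


def ramp_profile(
    steps: int, users_per_step: int, ramp_minutes: int, level_minutes: int
) -> List[int]:
    """Return a per-minute list of virtual-user counts for a stepped load test."""
    profile: List[int] = []
    users = 0
    for _ in range(steps):
        # Ramp: add virtual users evenly over the ramp period.
        for minute in range(1, ramp_minutes + 1):
            profile.append(users + users_per_step * minute // ramp_minutes)
        users += users_per_step
        # Level: hold steady so the platform has time to stabilize.
        profile.extend([users] * level_minutes)
    return profile


profile = ramp_profile(steps=3, users_per_step=100, ramp_minutes=2, level_minutes=3)
print(profile)
```

Production traffic offers no equivalent of the leveling periods in this schedule, which is the gap the following paragraphs describe.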

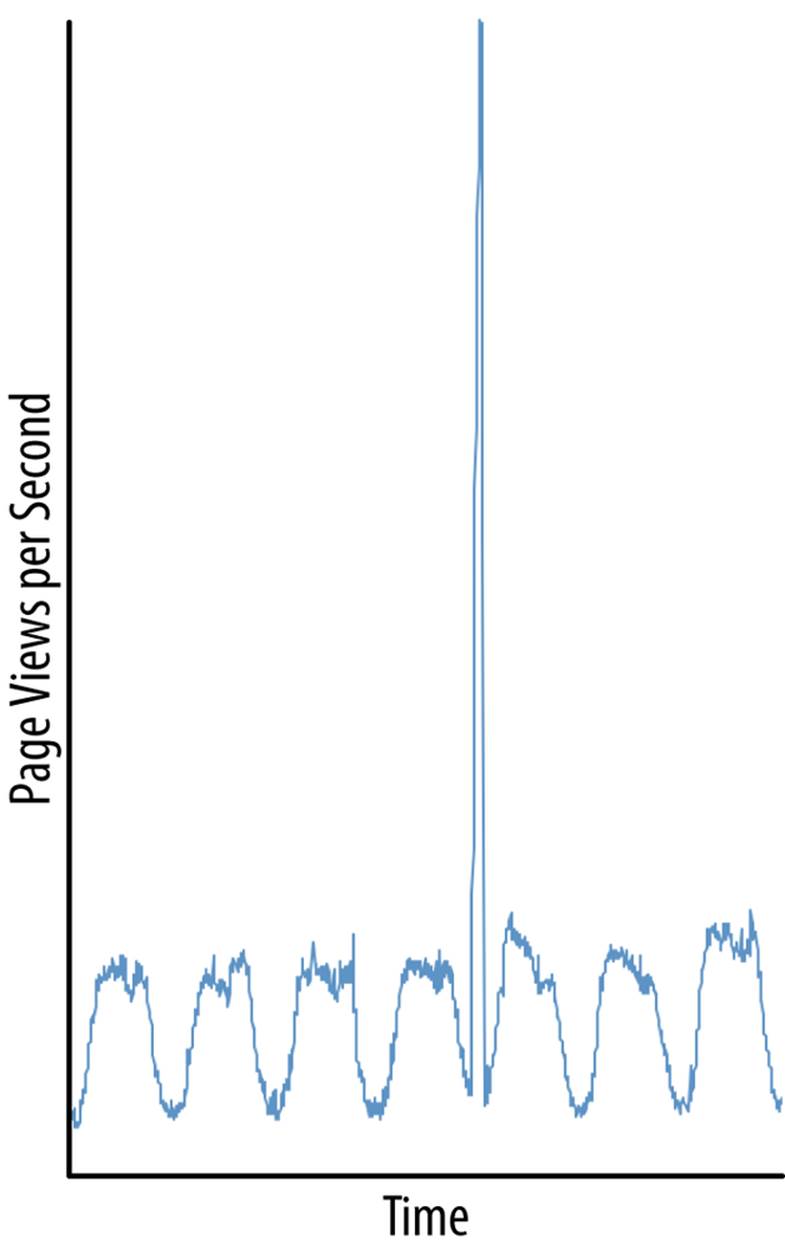

But in reality, traffic often looks like Figure 2-10.

Successful social media campaigns, mispriced products (e.g., $0.01 instead of $100), email blasts, coupons/promotions, mentions in the press, and important product launches can drive substantial traffic in a short period of time to the point where it can look like a distributed denial-of-service attack. Platforms don’t get “leveling” periods in real life.

CASE STUDY: DELL’S PRICE MISHAP

Dell’s Taiwanese subsidiary, www.dell.com.tw, accidentally set the price of one of its 19-inch monitors to approximately $15 USD instead of the intended price of approximately $148 USD. The incorrect price was posted at 11 PM locally. Within eight hours, 140,000 monitors were purchased at a rate of approximately five per second.[38]

Figure 2-10. Traffic in production

This rapid increase in traffic ends up creating connections throughout each layer, which leads to spikes in traffic and memory consumption. Creating connections is expensive—that’s why all layers use connection pooling of some sort. But it doesn’t make sense to have enormous connection pools that are only a few percent utilized. Connections and other heavier resources tend to be created on demand, which can lead to failures under heavy load. Software in general doesn’t work well when slammed with a lot of load.
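The trade-off between a small warm pool and on-demand creation can be illustrated with a toy connection pool. This sketch is not thread-safe and is not modeled on any specific library: `ConnectionPool`, its parameters, and the use of plain `object` instances as stand-ins for connections are all assumptions for the example.

```python
import queue
from typing import Callable


class ConnectionPool:
    """Toy pool: keeps `min_size` connections warm, creates more on demand."""

    def __init__(self, min_size: int, max_size: int, factory: Callable[[], object]):
        self._factory = factory
        self._max_size = max_size
        self._idle: "queue.LifoQueue[object]" = queue.LifoQueue()
        self.created = 0
        for _ in range(min_size):
            self._idle.put(self._create())

    def _create(self) -> object:
        self.created += 1
        return self._factory()

    def acquire(self) -> object:
        try:
            return self._idle.get_nowait()
        except queue.Empty:
            if self.created >= self._max_size:
                raise RuntimeError("pool exhausted")
            # Expensive creation happens exactly when load is highest.
            return self._create()

    def release(self, connection: object) -> None:
        self._idle.put(connection)


pool = ConnectionPool(min_size=2, max_size=5, factory=object)

# A sudden burst of five requests: three connections must be created on
# the spot, and a sixth request fails outright.
in_use = [pool.acquire() for _ in range(5)]
try:
    pool.acquire()
    sixth_succeeded = True
except RuntimeError:
    sixth_succeeded = False

print(pool.created, sixth_succeeded)
```

Raising `min_size` avoids the on-demand creation but leaves most of the pool idle at steady state, which mirrors the over-provisioning problem described earlier in the chapter.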

Summary

In this chapter, we discussed the shortcomings of today’s deployment architecture, along with how the various components will need to change to support omnichannel retailing. The shortcomings discussed make a strong case for cloud computing, which we’ll discuss in the next chapter.

[36] Wikipedia, “ACID,” (2014), http://en.wikipedia.org/wiki/ACID.

[37] Forbes, “The 5 Largest Data Centers in the World,” http://onforb.es/1k7ywo2.

[38] Kevin Parrish, “Dell Ordered to Sell 19-inch LCD for $15,” Tom’s Guide (2 July 2009), http://bit.ly/MrUFhW.