Improving the Test Process: Implementing Improvement and Change - A Study Guide for the ISTQB Expert Level Module (2014)

Chapter 3. Model-Based Improvement

Using a model to support test process improvement is a tried and trusted approach that has acquired considerable acceptance in the testing industry. In fact, the success of this approach has unfortunately led some people to believe that this is the only way to approach test process improvement. Not so. As you will see in other chapters of this book, using models is just one way to approach test process improvement, and models are certainly not a substitute for experience when it comes to recommending test process improvements. However, models do offer a structured and systematic way of showing where a test process currently stands, where it might be improved, and what steps can be taken to achieve a better, more mature test process. The success and acceptance of using models calls for a thorough consideration of the options available to test process improvers when they are choosing a model-based approach.

The objective of this chapter is to help test process improvers make the right choices when it comes to test process improvement models, give them a thorough overview of the issues concerning model use, and provide an overview of the most commonly used models: TMMi, TPI NEXT, CTP, and STEP. We will also be looking at what the software process improvement models CMMI and ISO/IEC 15504 can offer the test process improver. Of course, it would not be practical for this chapter to consider these models right down to the last detail; that would add around 500 pages to the book! The reader is kindly requested to consult the referenced works for more detailed coverage.

This chapter first looks at model-based approaches in general and asks two fundamental questions: “What would we ideally expect to see in a model used for test process improvement?” and “What assumptions are we making when using a model?”

Test process improvement models are not all the same; they adopt different approaches, they are structured differently, and they each have their own strengths and weaknesses. The remainder of the chapter focuses on these aspects with regard to the models previously mentioned.

3.1 Introduction to Test Process Improvement Models

Syllabus Learning Objectives

| LO | Objective |
|---|---|
| LO 3.1.1 | (K2) Understand the attributes of a test process improvement model with essential generic attributes. |
| LO 3.1.2 | (K2) Compare the continuous and staged approaches including their strengths and weaknesses. |
| LO 3.1.3 | (K2) Summarize the assumptions made in using models in general. |
| LO 3.1.4 | (K2) Compare the specific advantages of using a model-based approach with their disadvantages. |

3.1.1 Desirable Characteristics of Test Process Improvement Models

Fundamentally, a test process improvement model should provide three essential benefits:

- Clearly show the current status of the test process in a thorough, structured, and understandable manner
- Help to identify where improvements to that test process should take place
- Provide guidance on how to progress from the current to the desired state

Let’s be honest. A first look at the available models for test process improvement can be confusing; there are so many styles, approaches, and formal issues to consider. With all this, it can be difficult to appreciate the fundamental attributes we should be looking for in such a model. This section describes a number of specific areas that can help us understand the aspects of a test process improvement model that can generally be considered as “desirable.” The areas are covered under the following headings:

- Model Content
- Model Design
- Formal Considerations

Model Content

- Practicality and ease of use are high on the list of desirable model characteristics. A model that is overcomplicated, difficult to use, and impractical will struggle to achieve a general level of acceptability from both its users and the stakeholders who should be benefiting from its use. Convincing others to adopt an impractical model will be difficult and may even place a question mark over the use of a model-based approach.

- A well-researched, empirical basis is essential. Models must represent a justified “best practice” approach to testing. Without a solid basis for this justification, a model may be seen as simply a collection of notions and ideas put forward by a limited number of individuals. Because the validity of such a model is unproven, the test process improver must look for examples where its value has been demonstrated in actual practice.

- Details are important. A strong driver behind the development of test process improvement models was the low level of testing detail provided by software process improvement models (e.g., CMMI). This was often considered inadequate for thorough and practical test process improvement. A model for test process improvement must therefore provide sufficient detail to allow in-depth information about test processes to be obtained and permit a wide range of specific testing issues to be adequately covered. Models that deal only in generalities will be difficult to apply in practice and may need considerable subjective interpretation when used for test process assessment purposes.

- The user must be supported by the model in identifying, proposing, and quantifying improvements that are specific to identified test process weaknesses. A variety of improvement suggestions for specific testing problems is desirable. A model that proposes only a single solution to a problem or focuses only on the assessment part of test process improvement will be of limited value.

Model Design

- Models should help us achieve test process improvements in small, manageable steps rather than great leaps. By providing a mechanism for small, evolutionary improvements, models for test process improvement should support a wide range of improvement scopes (e.g., minor adjustments or major programs) and ensure that those improvements are clearly defined in the model.

- The prioritization of improvements is an essential aspect in determining an acceptable test improvement plan. Models should support the definition of priorities and enable businesses to understand the reasons for those priorities.

- Models must support the different activities found in a test process improvement program (the IDEAL approach discussed in chapter 6, for example, identifies five principal activities). The model should be considered a tool that supports the implementation of a test process improvement program. The model itself should not demand major changes to the chosen approach to test process improvement.

- Flexibility is a highly desirable characteristic of test process improvement models. Within a given organization, a variety of different project types may be found, such as large projects, projects using standard software, and projects using a particular software development life cycle. The model must be flexible enough to deal with all of these project types. In addition, the model must cater to the different business objectives being followed by an organization. This calls for a high level of model flexibility, including the option of tailoring.

- Suggestions for test process improvement may be given (prescribed) by the model or depend on the judgment of the user. These approaches have their advantages and disadvantages, so a decision on which is considered desirable has to be made by the model user within their own project and organizational context.

- As described in section 3.1.3, some models represent improvements to test process maturity in predefined steps, or stages, and others consider improvement of particular test process aspects in a nonstaged, or continuous, manner. Together with the “degree of prescription” aspect mentioned in the preceding point, this is perhaps the most significant characteristic that defines test process improvement models. The user must determine which is most desirable in their specific project and organizational context.

Formal Considerations

- To provide value for projects and organizations, a test improvement model must be publicly known, supported (e.g., by external consultants and/or websites), and available to all potential users (e.g., via the Internet and/or as a book). “Home-made,” unpublished models that have little or no support may be useful within a very limited scope, but generally speaking they are limited in value compared to publicly available models that are supported (e.g., by consultants or user groups).

- The merits of a model may be judged by the level of acceptance, recognition, and “take up” shown by both professional bodies and the software testing industry in general. Using a model that is not tried and tested presents an avoidable risk.

- Models that are simply promoted as a marketing vehicle for a commercial organization may not exhibit the degree of independence required when suggesting test process improvements. Models must show strong independence from undesired commercial influences and be clearly unbiased.

- The use of a model may require the formal accreditation of assessors. This level of formality may be considered beneficial to organizations seeking a formal certification of their test process maturity. The level of formal accreditation required for assessors and the ability to certify an organization distinguish some models from others.

3.1.2 Using Models: Benefits and Risks

Using a model for test process improvement can be highly beneficial, but the user must be aware of particular risks in order to achieve those benefits. As you will see, many of the risks are associated with the assumptions that are frequently made when using a model. Just as with any risk, lack of awareness and failure to take the necessary mitigation actions may result in failure of the overall test process improvement program.

In this section, the benefits associated with using models are covered, followed by a description of individual risk factors and how they might be mitigated.

Benefit: Structured Approach

As mentioned in the introduction to this chapter, models permit the adoption of a structured approach to test process improvement. This not only benefits the users of the model, it also helps to communicate the test process improvement approach to stakeholders. Managers benefit from the ease with which test process improvements can be planned and controlled. Resources (people, time, money) can be clearly allocated and prioritized, progress can be transparently communicated, and return on investment can be more easily demonstrated. Business owners benefit from a structured, model-based approach by having clearly established objectives that can be prioritized, monitored, and where necessary, adjusted. This is not to say that test improvements that are not model-based are unplanned, difficult to manage, and hard to communicate; models do, however, provide a valuable instrument with which these issues can be effectively addressed.

Benefit: Leveraging of Testing Best Practices

At the heart of all models for test process improvement is the concept of best practices in testing, as proposed by the model’s developers. Aligning a project’s test process to a particular model will leverage these best practices and, it is assumed, benefit the project. This is one of the fundamental benefits of adopting a model-based approach to test process improvement, but it needs to be balanced with the risks associated with ignoring issues of project or organizational context, which are discussed later.

Benefit: Thorough Coverage

Testing processes have many facets, and a wide range of individual aspects (e.g., test techniques, test organization, test life cycle, test environment, test tools) need to be considered if broad-based test process improvement is established as the overall objective. Such objectives can be achieved only if all relevant aspects of the test process are covered. Test process improvement models should provide this thorough coverage; they identify and describe individual testing aspects, and they give stakeholders confidence that important aspects have not been missed. Because some test improvement models (e.g., TOM) do not provide full coverage, thorough coverage is a desirable characteristic to look for when choosing a test improvement model.

Of course, if the objective of test process improvement is highly focused on a particular problem area (e.g., test team skills), this benefit will not fully apply, and a decision to use a model-based approach would need careful consideration.

Benefit: Objectivity

Models provide the test process improver with an objective body of knowledge from which to identify weaknesses in the test process and propose appropriate recommendations. This objectivity can be a decisive factor in deciding on an approach to test process improvement. An approach that is not model-based and relies on particular people will be perceived as less objective by stakeholders (especially management), even if this is not justified.

Objectivity can be an important benefit when conducting assessment interviews; it reduces the risk that particular questions are challenged by the interviewee, especially where the interviewee is more experienced than the interviewer in particular areas of testing. Similarly, the backing of an objective model can be of use when “selling” improvement proposals to stakeholders. Objectivity cannot, of course, be total. Models are developed by people and organizations. If all of those people come from the same organization, the model will be less objective than one developed by many people from different organizations and countries.

Benefit: Comparability

Consistently using a test process improvement model allows organizations to compare the maturity levels achieved by different projects in their organization. Within an organization, this enables particular projects to be defined as reference points for other projects and provides a convenient, company-specific baseline.

Industry-wide baselines are a potential benefit of using models, but only a few such baselines have been established [van Veenendaal and Cannegieter 2013], [van Veenendaal 2011].

Risk: Failure to Consider Project Context

Models are, by definition, representations of reality. They help us to understand complexities more easily by creating a generic view that can then be applied to a specific situation. The risk with any model is that the generic representation may not always fit the specific situations in your project or organization. All projects have their own particular context, and there is no way to define a “standard” project, except at a (very) high level. Because of this, the model you are using for test process improvement must match the specific project context as closely as possible. The less precise this match is, the higher the risk that the best practices are no longer appropriate for the specific project.

Mitigating these risks is a question of judgment by the model user. What is best for the specific project? Where should I make adjustments to the model? Where do I need to make interpretations when assessing test process maturity? These are the questions the test process improver needs to answer when applying a particular test improvement model. Some of the typical areas to be considered are listed here:

- Project criticality. Projects with a high level of criticality often need to show compliance with standards. Any aspects of the model that would prevent this compliance need to be filtered out.

- Technology and architecture used. Certain technologies and architectures may favor particular best practices over others. Applications that are implemented using, for example, multisystem architectures may place relatively more emphasis on non-functional testing than simple web-based applications. Similarly, the importance of a representative test environment may be different for the two architectures. The model used must reflect these different project contexts. If the model used suggests that a fully representative test environment should be available, this may be “over the top” in the context of a simple application needing just functional testing.

- Software development life cycle (SDLC). Development using a sequential SDLC places a different emphasis on testing compared to software developed according to agile practices. Test improvement models may be used in both contexts, but considerably more interpretation and tailoring will be required for the agile context (more on this in chapter 10).

TPI NEXT and TMMi permit aspects of the test process assessment to be filtered out as “not applicable” without affecting the achieved maturity level. This goes some way toward eliminating aspects that do not conform to the project or organizational context.
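The effect of marking an aspect “not applicable” can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the scoring procedure of TPI NEXT or TMMi: each checkpoint answer is assumed to be one of the hypothetical values `"yes"`, `"no"`, or `"n/a"`, and a maturity level is treated as met when every applicable checkpoint is answered `"yes"`.

```python
from typing import List

# Hypothetical answer values; real models define their own assessment scales.
VALID_ANSWERS = {"yes", "no", "n/a"}


def level_met(answers: List[str]) -> bool:
    """Return True if every applicable checkpoint for a level is answered "yes".

    Checkpoints marked "n/a" are filtered out before evaluation, so excluding
    an aspect that does not fit the project context neither raises nor lowers
    the result.
    """
    unknown = set(answers) - VALID_ANSWERS
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    applicable = [a for a in answers if a != "n/a"]
    return all(a == "yes" for a in applicable)


# A checkpoint that does not apply is ignored ...
print(level_met(["yes", "n/a", "yes"]))  # True
# ... but a genuine "no" still blocks the level.
print(level_met(["yes", "no", "n/a"]))   # False
```

In this sketch, a level whose checkpoints are all “not applicable” counts as met, because no applicable checkpoint fails; an actual model may handle that case differently.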

Risk: “Model Blindness”

This is a very common risk that we have observed on many occasions. An inexperienced user might believe that a test process improvement model does the thinking for them and relieves them of the need to apply their own judgment. This false assumption presents a substantial risk to gaining approvals and recognition for improvement recommendations. If test process improvers are questioned by stakeholders about improvement proposals or assessment results, weak responses such as “because the model says so” show a degree of “model blindness” that reveals their inexperience in improving test processes. Stakeholders generally want to hear what the test process improver thinks and not simply what the model prescribes as a result of a mechanical checklist-based assessment.

A model cannot replace common sense, experience, and judgment, and users of models should remember the famous quote by W.E. Deming and G. Box: “All models are wrong: some are useful.” The models described in this book are considered “useful,” but if the user cannot explain the reasoning behind results and recommendations, the level of confidence in the results and proposals they present may erode to an extent that they are not adopted.

Mitigating the risks of model blindness means validating the results and recommendations suggested by the model and making the appropriate adjustments if these do not make sense within the context of the project or organization. Does it make sense, for example, to propose the introduction of weekly test status reporting when the project has a web-based dashboard showing all the relevant information “on demand”? Users of models should consider them as valuable instruments to support their judgments and recommendations.

Risk: Wrong Approach

This book examines a number of approaches to test process improvement, one of which involves using a test process improvement model. Risks arise when a model-based approach becomes “automatic” and is applied without due consideration for the particular objectives to be achieved. This could result in an inappropriate approach being adopted and resources being allocated ineffectively. As discussed in chapter 5, a combination of model-based and analytical approaches is often preferred.

Mitigating this risk requires awareness. Test process improvers must be aware that models sometimes oversimplify complex issues of cause and effect and, indeed, that improvements may be required not in testing but in other processes, such as software development. The issues to be considered when choosing the most appropriate approach to test process improvement are described in chapter 5.

Risk: Lack of Skills

A general lack of skills in performing test process improvements and in applying a specific model may lead to one or more of the risks mentioned previously.

Mitigating this risk is not simply a matter of training (although that is surely one measure to be considered). Roles and responsibilities for those involved in test process improvement must include a description of the required experience and qualifications (see section 7.2). Any deviations from these requirements must be weighed against the potential impact of the risks mentioned previously on the project or organization (e.g., setting inappropriate priorities, failing to take project context into account).

3.1.3 Categories of Models

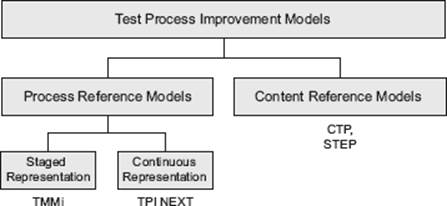

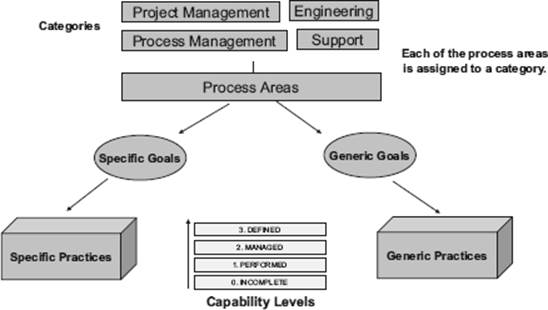

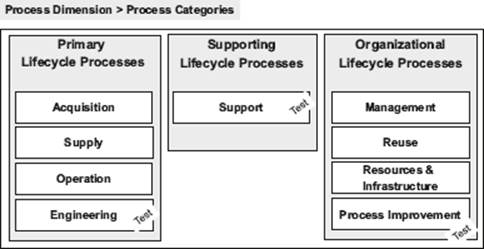

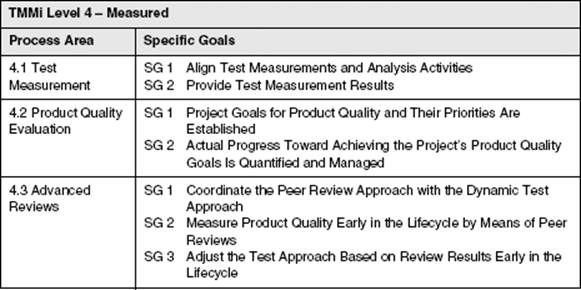

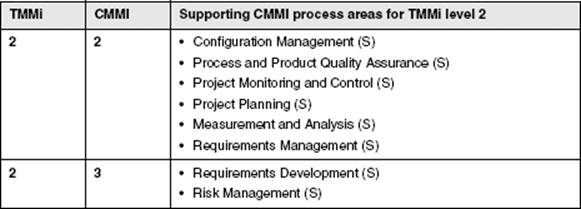

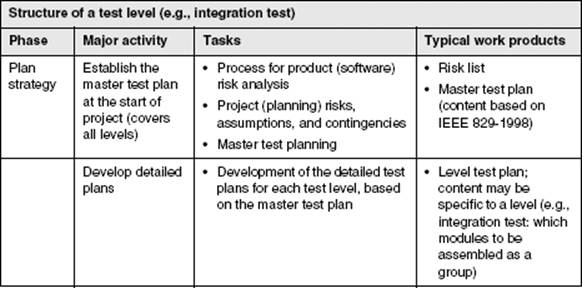

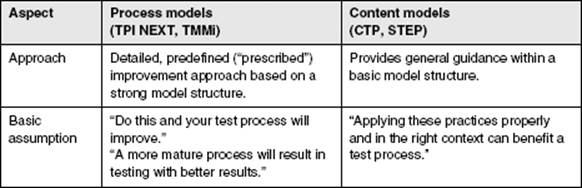

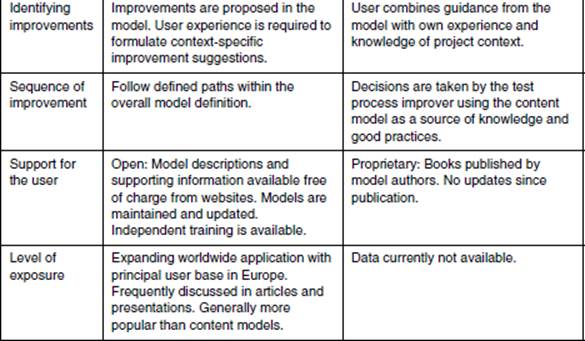

Models for test process improvement can be broadly categorized as shown in figure 3–1. The diagram also indicates the four models considered in this book.

Figure 3–1 Test process improvement models considered in this book

Process Reference Models and Content Reference Models

Reference models in general provide a body of information and testing best practices that form the core of the model. The primary difference between process reference models and content reference models lies in the way in which this core of test process information is leveraged by the model, although content reference models tend to provide more details on the various testing practices.

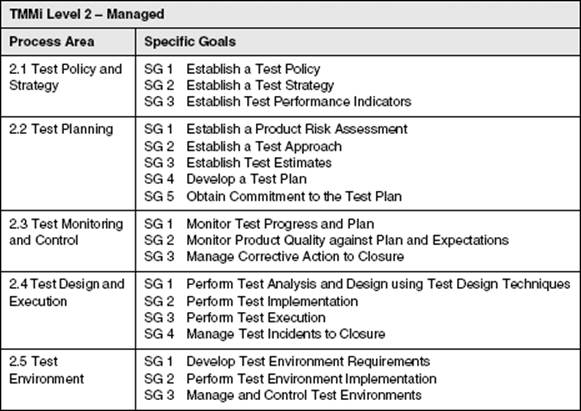

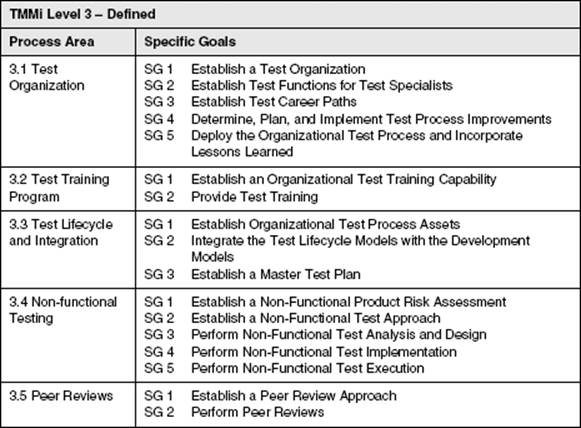

In the case of process reference models, a predefined scale of test process maturity is mapped onto the core body of test process information. Different maturity levels are defined, which range from an initial level up to an optimizing maturity level, depending both on the actual testing tasks performed and on how well they are performed. The progression from one maturity level to another is an integral feature of the model, which gives process reference models their predefined “prescribed” character. The process reference models discussed in this book are the Test Maturity Model integration (TMMi) model and the Test Process Improvement model TPI NEXT.

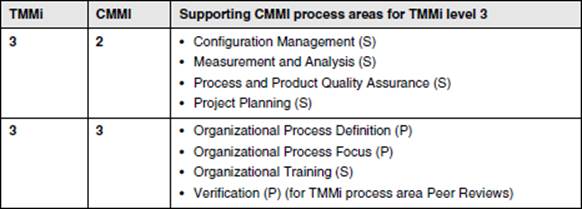

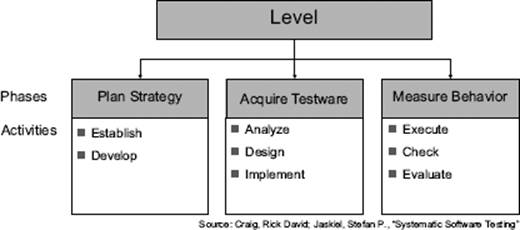

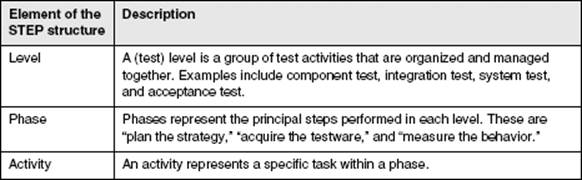

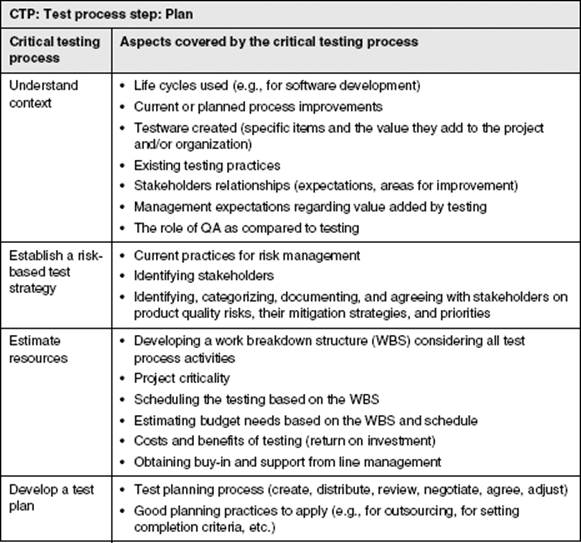

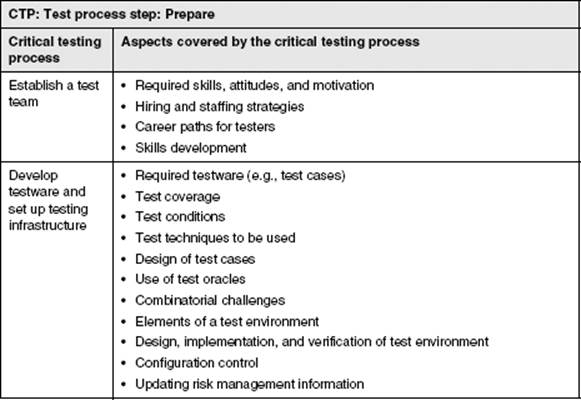

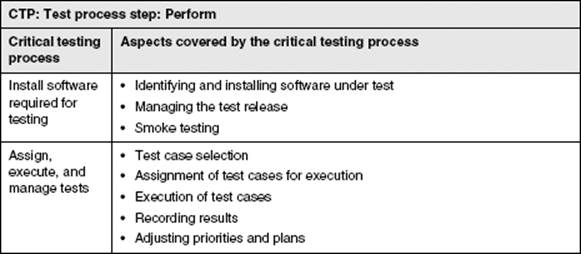

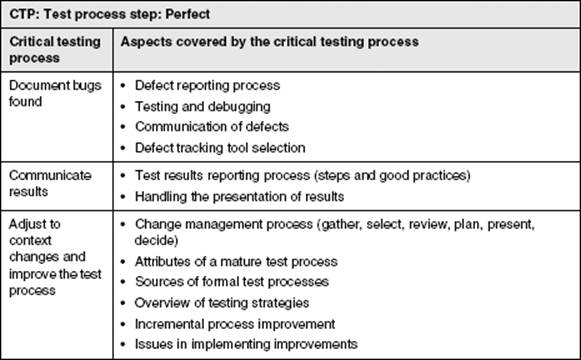

Content reference models also have a core body of best testing practices, but they do not implement the concept of different process maturity levels and do not prescribe the path to be taken for improving test processes. The principal emphasis is placed on the judgment of the user to decide on where the test process is and where it should be improved. The content reference models discussed in this book are the Critical Testing Process (CTP) model [Black 2003] and the Systematic Test and Evaluation Process (STEP) model [Craig and Jaskiel 2002].

Continuous and Staged Representations

As noted, process reference models define a scale of test process maturity. This is of practical use in showing the achieved test process maturity, in comparing the maturity levels of different projects or organizations, and in showing improvements required or achieved.

The method chosen to represent test process maturity can be classified as either staged or continuous. With a staged representation, the model describes successive maturity levels. Test Maturity Model integration (TMMi), which is described in section 3.3.2, defines five such maturity levels. Achieving a given maturity level (stage) requires that specific testing activities (TMMi refers to these as process areas) are performed as prescribed by the model. For example, test planning is one of the testing activities that must be performed to achieve TMMi maturity level 2. Test process improvement is represented by progressing from one maturity level to the next higher level (assuming there is one).

Staged representations are easy to understand and show a clear step-by-step path toward achieving a given level of test process maturity. This simplicity can also be beneficial when discussing test process maturity with senior management or where a simple demonstration of achieved test process maturity is required, such as when tendering for testing projects. It is probably this simplicity that makes staged models popular. A recent survey showed that 90 percent of CMMI implementations were stage based and only 10 percent used the continuous representation.

When a model that implements a staged representation of test process maturity is used, the user should be aware of certain aspects that may be seen as limiting. One of these is the relatively coarse-grained definition of the maturity levels. As noted, each maturity level requires that capability be demonstrated for several testing activities and that all of those activities be present for the overall maturity level to be achieved. If a test process can demonstrate, for example, that only one of the testing activities assigned to maturity level 2 is performed and compliant, the overall test process maturity is still rated as level 1. This is reasonable. However, even when all but one of the maturity level 2 activities are performed, the model still places the test process at maturity level 1. This “all or nothing” approach can create a negative impression of the test process maturity.
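The “all or nothing” rule of a staged representation can be illustrated with a short Python sketch. The process-area names are those of TMMi levels 2 and 3 and are included only for illustration (consult the TMMi framework for the authoritative definitions). The rating function is a simplified assumption that treats each process area as simply satisfied or not, ignoring the goals and practices a real assessment examines.

```python
from typing import Dict, List, Set

# Process areas required per maturity level (TMMi names, for illustration only).
# Level 1 has no required process areas, so every test process is at least level 1.
PROCESS_AREAS: Dict[int, List[str]] = {
    2: [
        "Test Policy and Strategy",
        "Test Planning",
        "Test Monitoring and Control",
        "Test Design and Execution",
        "Test Environment",
    ],
    3: [
        "Test Organization",
        "Test Training Program",
        "Test Lifecycle and Integration",
        "Non-functional Testing",
        "Peer Reviews",
    ],
}


def staged_maturity_level(satisfied: Set[str]) -> int:
    """Return the highest level for which all process areas of that level and of
    every lower level are satisfied; a single gap caps the rating below that level."""
    level = 1
    for candidate in sorted(PROCESS_AREAS):
        if all(pa in satisfied for pa in PROCESS_AREAS[candidate]):
            level = candidate
        else:
            break  # a missing process area blocks this level and all higher ones
    return level


level_2 = set(PROCESS_AREAS[2])
# All level 2 process areas but one: the test process is still rated level 1.
print(staged_maturity_level(level_2 - {"Test Environment"}))  # 1
print(staged_maturity_level(level_2))                         # 2
```

The `break` captures the point made above: satisfying four of the five level 2 process areas earns no more credit than satisfying one, and a complete set of level 3 process areas cannot compensate for a gap at level 2.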

Staged representation A model structure wherein attaining the goals of a set of process areas establishes a maturity level; each level builds a foundation for subsequent levels. [Chrissis, Konrad, and Shrum 2004]

Continuous representations of process maturity (such as used in the TPI NEXT model) are generally more flexible and finer grained than staged representations. Unlike with the staged approach, there are no prescribed maturity levels through which the entire test process is required to proceed, which makes it easier to target the particular areas for improvement needed to achieve particular business goals.

The word continuous is applied to this representation because the models that use this approach define not only the various key areas of a testing process (e.g., defect management) but also the (continuous) scale of maturity that can be applied to each key area (e.g., basic managed defect management, efficient defect management using metrics, and optimizing defect management featuring root-cause analysis for defect prevention).

Assessing the maturity of a particular key area is achieved by answering specific questions that are assigned to a given maturity level. There might be, for example, four questions assigned to the managed maturity level for defect management. If all questions can be answered positively, the defect management aspect of the test process is assessed as managed. Other key areas may be assessed at different levels of maturity; for example, reporting may be at an efficient level and test case design at an optimizing maturity level.

Clearly, the continuous representation provides a more detailed and differentiated view of test process maturity compared to the staged representation, but the simplicity offered by a staged approach cannot be easily matched. Using a continuous representation model can easily lead to a complex assessment of a test process, where stakeholders may find it hard to “see the forest, and not all the trees.” Models that use a continuous representation, such as TPI NEXT (and particularly its earlier version, TPI), suffer from the perception of being too technical and difficult to communicate to non-testers.

Continuous representation A capability maturity model structure wherein capability levels provide a recommended order for approaching process improvement within specified process areas. [Chrissis, Konrad, and Shrum 2004]

3.2 Software Process Improvement (SPI) Models

Syllabus Learning Objectives

| LO | Learning objective |
|---|---|
| LO 3.2.1 | (K2) Understand the aspects of the CMMI model with testing-specific relevance. |
| LO 3.2.2 | (K2) Compare the suitability of CMMI and ISO/IEC 15504-5 for test process improvement to models developed specifically for test process improvement. |

3.2.1 Capability Maturity Model Integration (CMMI)

Introduction

In this section, we’ll consider the CMMI model. The official book on CMMI provides a full description of the framework and its use (see CMMI, Guidelines for Process Integration and Product Improvement [Chrissis, Konrad, and Shrum 2004]). Users will gain additional insights into the framework by consulting this publication and the other sources of information available at [URL: SEI].

Capability Maturity Model Integration (CMMI) is a process improvement approach that provides organizations with the essential elements of effective processes. The model provides a clear definition of what an organization should do to promote behaviors that lead to improved performance.

With five maturity levels (for the staged representation) and four capability levels (for the continuous representation), CMMI defines the most important elements that are required to build better products or deliver better services, and wraps them all up in a comprehensive model. The goal of the CMMI project is to improve the usability of maturity models for software engineering and other disciplines by integrating many different models into one overall framework of best practices. It describes best practices in managing, measuring, and monitoring software development processes. The CMMI model does not describe the processes themselves; it describes the characteristics of good processes, thus providing guidelines for companies developing or honing their own sets of processes.

Capability Maturity Model Integration (CMMI) A framework that describes the key elements of an effective product development and maintenance process. The Capability Maturity Model Integration covers best practices for planning, engineering and managing product development and maintenance. [Chrissis, Konrad, and Shrum 2004]

The CMMI helps us understand the answer to the question, How do we know?

How do we know what we are good at?

How do we know if we’re improving?

How do we know if the process we use is working well?

How do we know if our requirements change process is useful?

How do we know if our products are as good as they can be?

CMMI helps you to focus on your processes as well as on the products and services you produce. This is important because many people who are working in a position such as program manager, project manager, or a similar product creation role are paid bonuses and given promotions based on whether they achieve deadlines, not whether they follow or improve processes. Essentially, using CMMI reminds us to focus on process improvement.

Commercial and government organizations use the CMMI models to assist in defining process improvements for systems engineering, software engineering, and integrated product and process development.

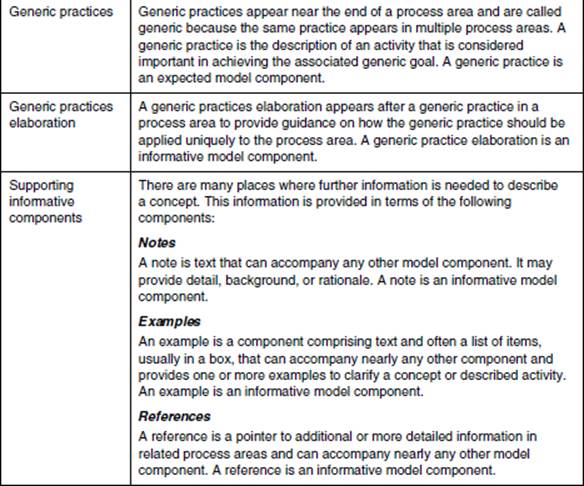

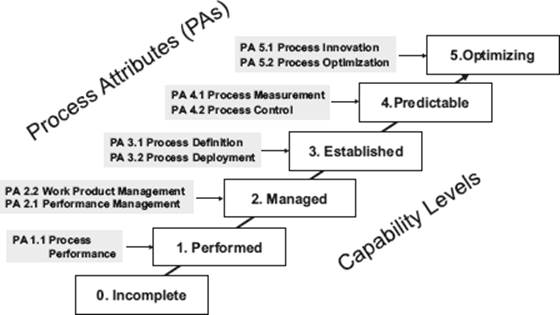

Structure

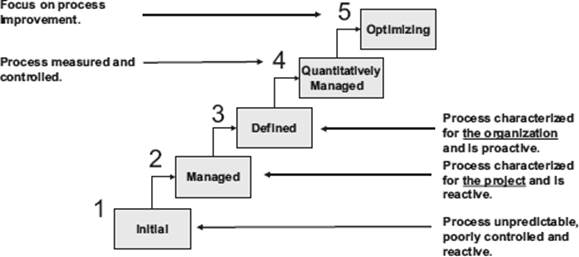

CMMI comes with two different representations: staged and continuous (see section 3.1.3). The staged version of the CMMI model identifies five levels of process maturity for an organization (figure 3–2):

1. Initial (chaotic, ad hoc, heroic): The starting point for use of a new process.

2. Managed (project management, process discipline): The process is used repeatedly.

3. Defined (institutionalized): The process is defined and confirmed as a standard business process.

4. Quantitatively Managed (quantified): Process management and measurement take place.

5. Optimizing (process improvement): Process management includes deliberate process optimization and improvement.

Figure 3–2 CMMI staged model: five maturity levels with their characteristics

There are process areas (PAs) within each of these maturity levels that characterize that level (more about process areas later). The CMMI supports organizations in improving the maturity of their software processes along an evolutionary path through the five maturity levels, from “ad-hoc and chaotic” to “mature and disciplined” management. As organizations become more mature, risks are expected to decrease and productivity and quality are expected to increase. The staged representation provides more focus for the organization and has by far the largest uptake in the industry.

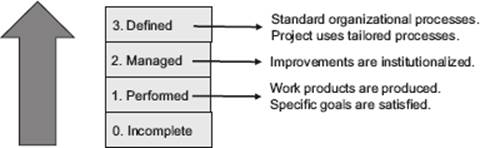

Using the continuous representation, an organization can also pick and choose the process areas that make the most sense for them to work on. The continuous representation defines capability levels within each process area (see figure 3–3). In the continuous representation, the organization is thus allowed to concentrate its improvement efforts on its own primary areas of need without regard to other areas and is therefore generally considered to be more flexible but more difficult to use.

Figure 3–3 CMMI continuous model: four capability levels per process area

The differences in the CMMI representations are solely organizational; the content is equivalent. When choosing the staged representation, an organization follows a predefined pattern of process areas that are organized by maturity level. When choosing the continuous representation, organizations pick process areas based on their interest in improving only specific areas.

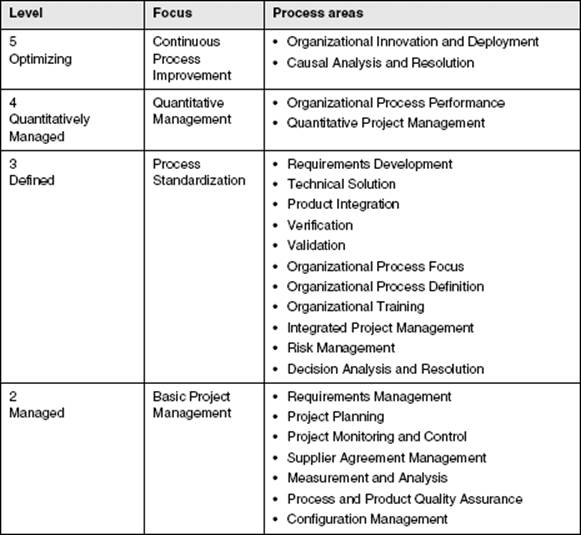

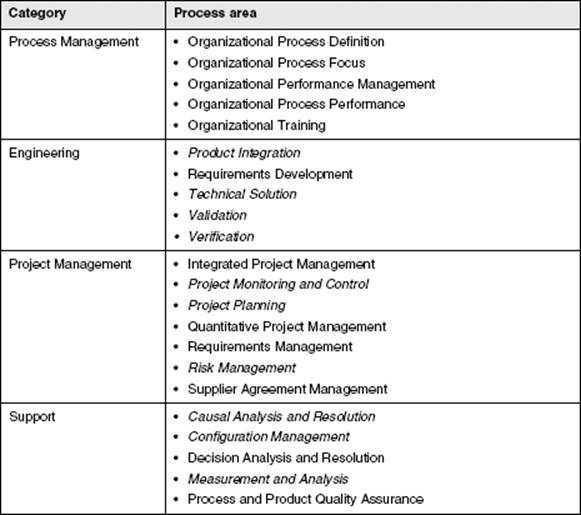

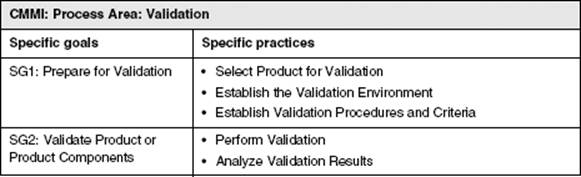

There are multiple “flavors” of the CMMI, called constellations, that include CMMI for Development (CMMI-DEV), CMMI for Services (CMMI-SVC), and CMMI for Acquisition (CMMI-ACQ). The three constellations share a core set of 16 process areas. CMMI-DEV commands the largest market share, followed by CMMI-SVC and then CMMI-ACQ. CMMI for Development has 22 process areas, or PAs (see table 3–1). Each process area is intended to be adapted to the culture and behaviors of your own company.

Table 3–1 Process areas in CMMI-DEV for each maturity level

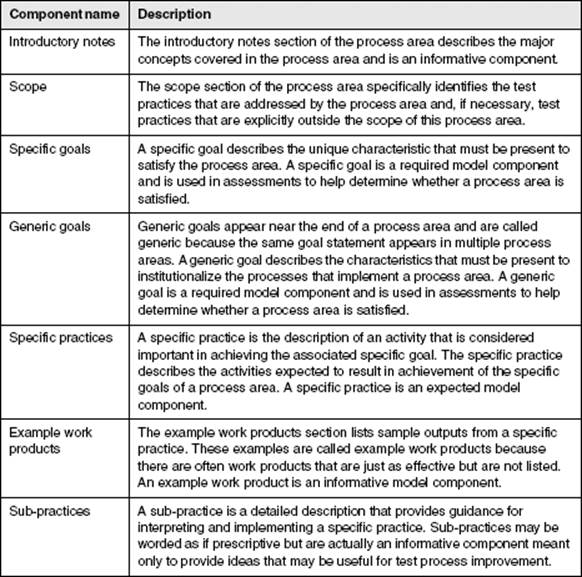

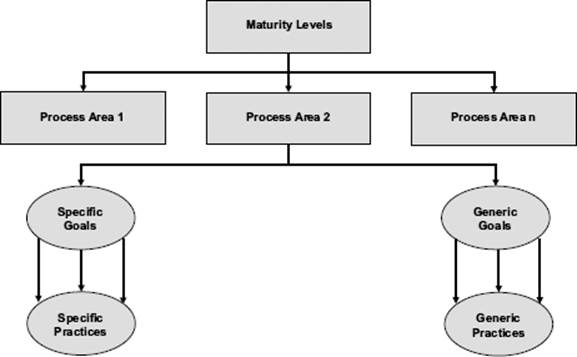

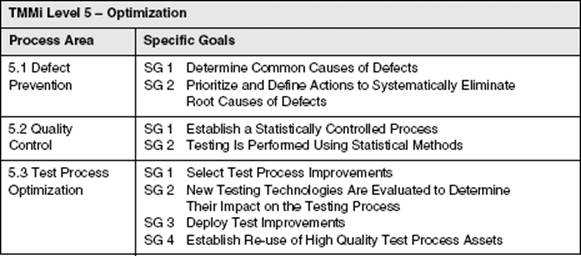

CMMI uses a common structure (set of components) to describe each of the process areas. The process area components are grouped into three types: required, expected, and informative.

Required components describe what an organization must achieve to satisfy a process area. This achievement must be visibly implemented in an organization’s processes. The required components in CMMI are the specific and generic goals. Goal satisfaction is used in assessments as the basis for deciding if a process area has been achieved and satisfied.

Expected components describe what an organization will typically implement to achieve a required component. Expected components guide those who implement improvements or perform assessments. Expected components include both specific and generic practices. Either the practices as described or acceptable alternatives to the practices must be present in the planned and implemented processes of the organization before goals can be considered satisfied.

Informative components provide details that help organizations get started in thinking about how to approach the required and expected components. Sub-practices, example work products, notes, examples, and references are all informative model components.

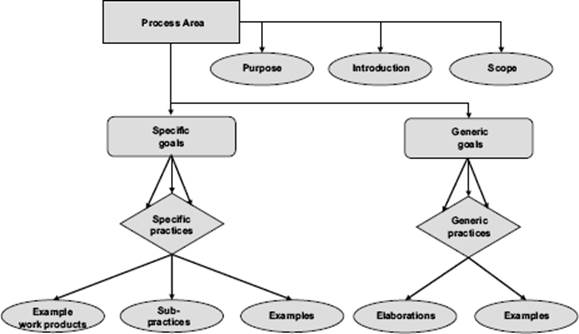

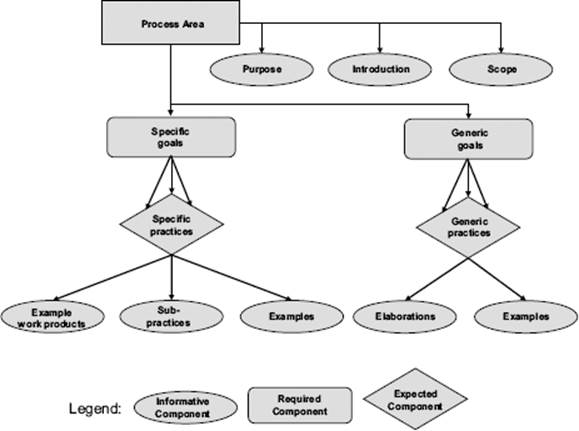

A process area has several different components (see figure 3–4 and table 3–2).

Figure 3–4 Structure of a CMMI process area

Each PA has one to four goals, and each goal is made up of practices. Within each of the PAs, these are called specific goals and practices because they describe activities that are specific to a single PA. There is one additional set of goals and practices that apply in common across all of the PAs; these are called generic goals and practices. There are 12 generic practices (GPs) that provide guidance for organizational excellence and institutionalization, including behaviors such as setting expectations, training, measuring quality, monitoring process performance, and evaluating compliance. Organizations can be rated at a capability level (continuous representation) or maturity level (staged representation) based on over 300 discrete specific and generic practices.
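The relationship between required goals and expected practices can be sketched as a small data structure. This Python model is a simplification under our own assumptions: a goal counts as satisfied when each of its practices is either implemented or replaced by an acceptable alternative, and a process area counts as satisfied when all of its specific and generic goals are. In a real appraisal, goal satisfaction is a judgement made by the appraisal team from objective evidence, not a mechanical check. The goal and practice names are an abbreviated extract from the CMMI-DEV Verification process area, not a complete list.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Practice:
    """An expected component: a specific or generic practice."""
    name: str
    implemented: bool = False
    acceptable_alternative: bool = False  # an equivalent practice also counts

    def present(self) -> bool:
        return self.implemented or self.acceptable_alternative


@dataclass
class Goal:
    """A required component: a specific or generic goal made up of practices."""
    name: str
    practices: List[Practice] = field(default_factory=list)

    def satisfied(self) -> bool:
        # Simplification: every practice (or an alternative) must be present.
        return all(p.present() for p in self.practices)


@dataclass
class ProcessArea:
    name: str
    specific_goals: List[Goal]
    generic_goals: List[Goal]

    def satisfied(self) -> bool:
        return all(g.satisfied() for g in self.specific_goals + self.generic_goals)


# Abbreviated, illustrative extract of the Verification process area.
verification = ProcessArea(
    name="Verification",
    specific_goals=[
        Goal("SG 1 Prepare for Verification",
             [Practice("SP 1.1 Select Work Products for Verification", implemented=True)]),
        Goal("SG 2 Perform Peer Reviews",
             [Practice("SP 2.2 Conduct Peer Reviews")]),  # not yet in place
    ],
    generic_goals=[
        Goal("GG 2 Institutionalize a Managed Process",
             [Practice("GP 2.2 Plan the Process", implemented=True)]),
    ],
)

print(verification.satisfied())  # False: SG 2 has no supporting practice
verification.specific_goals[1].practices[0].acceptable_alternative = True
print(verification.satisfied())  # True: an acceptable alternative is enough
```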

Table 3–2 Components of CMMI Process Areas

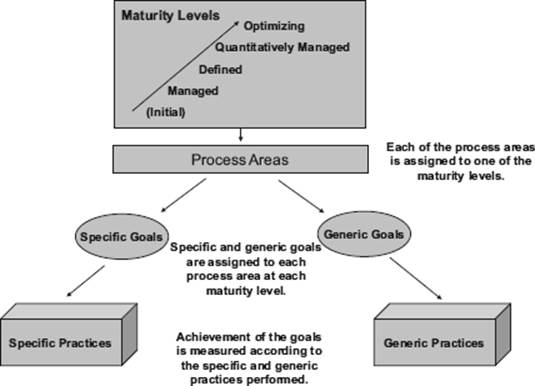

With CMMI-DEV, process areas are organized by so-called categories: Process Management, Project Management, Engineering, and Support. The grouping into categories is a way to discuss their interactions and is especially used with a continuous approach (see figure 3–5). For example, a common business objective is to reduce the time it takes to get a product to market. The process improvement objective derived from that could be to improve the project management processes to ensure on-time delivery.

The following two diagrams summarize the structural components of CMMI described so far. The first diagram (figure 3–5) shows how the structural elements are organized with the staged representation.

Figure 3–5 CMMI staged model: structural elements

The diagram in figure 3–6 shows how the structural elements of CMMI are organized in the continuous representation.

Figure 3–6 CMMI continuous model: structural elements

To conclude the description of the CMMI structure, the allocation of CMMI-DEV process areas to each category is shown in table 3–3. Note that the process areas shown in italic are particularly relevant for testing. Some of these will be discussed in more detail in the following pages.

Table 3–3 Process areas of CMMI-DEV grouped by category

Testing-Related Process Areas

The two principal process areas with testing relevance shown in table 3–3 are Validation and Verification. These process areas specifically reference both static and dynamic test processes.

Although still considered “not much” and “too high-level” by many testers, having these two dedicated process areas does make a difference. It means that process improvement initiatives using the CMMI must also address testing. However, only approximately 20 of the 400 pages of CMMI are dedicated to testing!

The Validation process area addresses testing to demonstrate that a product or product component fulfills its intended use when placed in its intended environments. Table 3–4 shows the specific goals and specific practices that make up this process area.

Table 3–4 CMMI Process Area: Validation

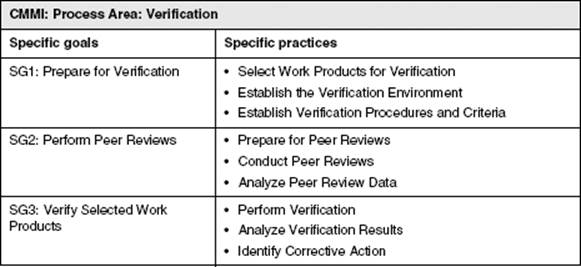

The purpose of the Verification process area is to ensure that selected work products meet their specified requirements. It also includes static testing, that is, peer reviews. Table 3–5 shows the specific goals and specific practices that make up this process area.

Table 3–5 CMMI Process Area: Verification

The process areas Technical Solution and Product Integration also deal with some testing issues, although again this is very lightweight.

The Technical Solution process area mentions peer reviews of code and some details on performing unit testing (in total, four sentences). The specific practices identified by the CMMI are as follows:

Conduct peer reviews on the selected components

Perform unit testing of the product components as appropriate

The Product Integration process area includes several practices in which peer reviews are mentioned (e.g., on interface documentation), and there are several implicit references to integration testing. However, none of this is specific and explicit.

In addition to the specific testing-related process areas, some process areas also provide support toward a more structured testing process, although the support provided is generic and does not address any of the specific testing issues.

Test planning can be addressed as part of the CMMI Project Planning process area. The goals and practices for project planning can often be reused for the implementation of the test planning process. Project management practices can be reused for test management.

Test monitoring and control can be addressed as part of the CMMI process area Project Monitoring and Control. The goals and practices for project monitoring and control can often be reused for the test monitoring and control process. Project management practices can be reused for test management.

Performing product risk assessments within testing to define a test approach and test strategy can partly be implemented based on goals and practices provided by the CMMI process area Risk Management.

The goals and practices of the CMMI process area Configuration Management can support the implementation of configuration management for test deliverables. Testing will also benefit if configuration management is well implemented during development (e.g., for the test object).

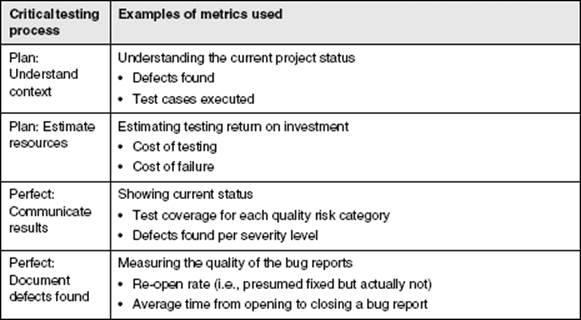

Having the CMMI process area Measurement and Analysis in place will support the task of getting accurate and reliable data for test reporting. It will also support testing if you are setting up a measurement process for testing-related data, such as defects and the testing process itself.

The goals and practices of the Causal Analysis and Resolution CMMI process area provide support for the implementation of defect prevention, a typical test improvement objective at higher maturity levels.

Assessments

An assessment can give an organization an idea of the maturity of its processes and help it create a road map toward improvement. After all, you can’t plan a route to a destination if you don’t know where you currently are. The SEI does not offer certification of any form. It simply licenses and authorizes lead appraisers to conduct appraisals (commonly called assessments).

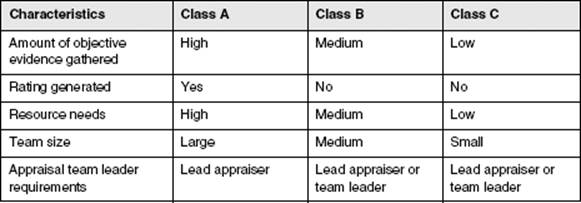

There are three different types of appraisals: Class A, B, and C (see table 3–6). The requirements for CMMI appraisal methods are described in Appraisal Requirements for CMMI (ARC). The Standard CMMI Appraisal Method for Process Improvement (SCAMPI) is the only appraisal method that meets all of the ARC requirements for a Class A appraisal method. Only the Class A appraisal can result in a formal CMMI rating. A SCAMPI Class C appraisal is typically used as a gap analysis and data collection tool, and the SCAMPI Class B appraisal is often employed as a user acceptance or “test” appraisal.

The SEI certifies so-called lead appraisers. Only a Certified SCAMPI Lead Appraiser can conduct a SCAMPI A appraisal. The staged representation is most commonly used to achieve a CMMI maturity level rating from a SCAMPI appraisal. The results of the appraisal can then be published on the SEI website [URL: SEI].

Table 3–6 Characteristics of CMMI appraisals

Benefits

To understand what the benefit of CMMI might be to your organization, you need to think about what improved processes might mean for you. What would be the impact to your organization if project predictability was improved by 10 percent? What would be the impact if the cost of finding and fixing defects was reduced by 10 percent? By benchmarking before beginning process improvement, you can compare any process improvements to the benchmark to verify that they have a positive impact on the bottom line.
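Comparing against a benchmark is simple arithmetic, but it helps to record for each measure whether a higher or a lower value counts as better. The following Python sketch assumes two hypothetical measures (the share of releases delivered on time and the hours spent finding and fixing defects per release); the metric names and all figures are invented for illustration.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change against the baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) * 100.0 / baseline


# Hypothetical benchmark taken before the improvement programme started,
# and the same measures taken afterwards.
baseline = {"on_time_releases_pct": 60.0, "defect_fix_cost_hours": 200.0}
current = {"on_time_releases_pct": 66.0, "defect_fix_cost_hours": 180.0}
higher_is_better = {"on_time_releases_pct": True, "defect_fix_cost_hours": False}

for metric, base in baseline.items():
    change = percent_change(base, current[metric])
    improved = change > 0 if higher_is_better[metric] else change < 0
    print(f"{metric}: {change:+.1f}% ({'improved' if improved else 'not improved'})")
```

With these figures, both measures change by 10 percent in the desired direction: predictability rises from 60 to 66 percent on-time releases, and defect-fixing cost falls from 200 to 180 hours.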

Turning to a real-world example of the benefits of CMMI, Lockheed Martin, between 1996 and 2002, was able to increase software productivity by 30 percent while decreasing the unit software cost by 20 percent [Weska 2004]. Another organization reported that achieving CMMI maturity level 3 allowed it to reduce its costs from rework by 42 percent over several years, and yet another described a 5:1 return on investment for quality activities in a CMMI maturity level 3 organization.

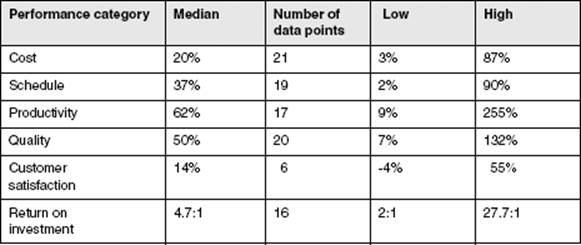

The SEI states that it measured performance increases in the categories cost, schedule, productivity, quality, and customer satisfaction for 25 organizations (see table 3–7). The median increase in performance varied between 14 percent (customer satisfaction) and 62 percent (productivity).

However, the CMMI model mostly deals with what processes should be implemented and not so much with how they can be implemented. The SEI therefore also notes that these results do not guarantee that applying CMMI will increase performance in every organization. A small company with few resources may be less likely to benefit from CMMI.

Table 3–7 Results reported by 25 organizations in terms of performance change over time

Summary

CMMI is not a process; it is a book of “whats,” not a book of “hows,” and it does not define how your company should behave. More accurately, it defines what behaviors need to be defined. In this way, CMMI is a behavioral model as well as a process model.

Like any framework, CMMI is not a quick fix for all that is wrong with a development organization. SEI cautions that improvement projects will likely be measured in months and years, not days and weeks. Because they usually have more knowledge and resources, larger organizations may find they get better results, but CMMI process changes can also help smaller companies.

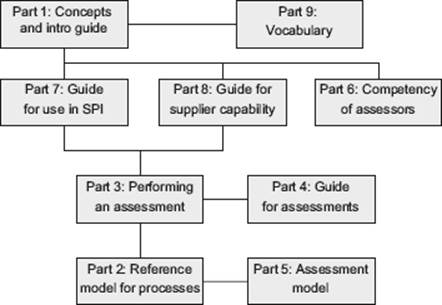

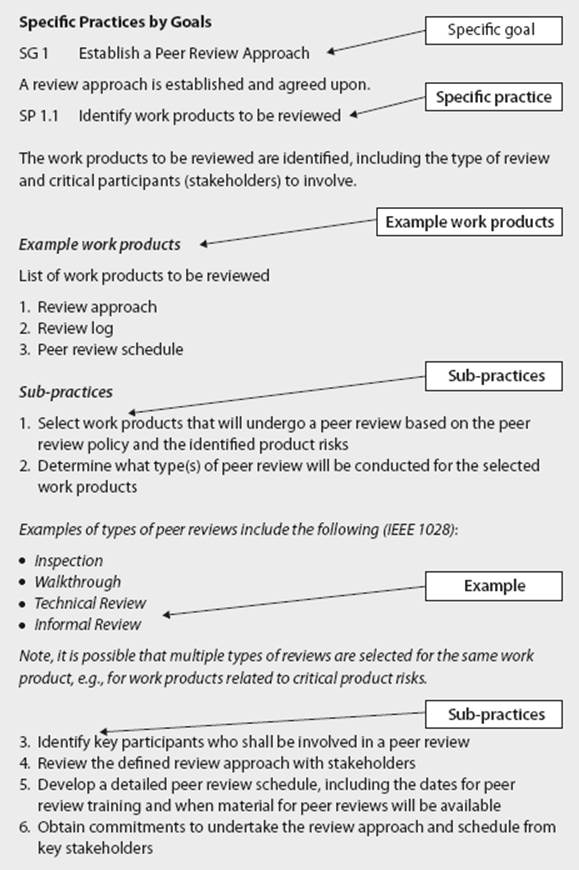

3.2.2 ISO/IEC 15504

Just like CMMI, the ISO/IEC 15504 standard [ISO/IEC 15504] (which was known in its pre-standard days as SPICE) is a model for software process improvement that includes specific testing processes. The standard comprises several parts, as shown in figure 3–7.

Figure 3–7 Parts of ISO/IEC 15504

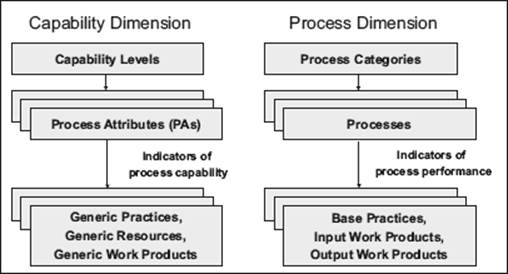

Several parts of the standard have relevance for test process improvers. Part 5 is of particular relevance because it describes a process assessment model that is made up of two specific dimensions:

The process dimension describes the various software processes to be assessed.

The capability dimension enables us to measure how well processes are being performed.

Figure 3–8 ISO/IEC 15504-5: Process and Capability dimensions

To understand how ISO/IEC 15504 can be used for test process improvement, the two dimensions are summarized in the following sections with particular focus on the testing-specific aspects. Further details can be obtained from Process Assessment and ISO/IEC 15504: A Reference Book [van Loon 2007] or, of course, from the standard itself.

Process Dimension

The process dimension identifies, describes, and organizes the individual processes within the overall software life cycle. The activities (base practices) and the work products used by and created by the processes are the indicators of process performance that an assessor evaluates.

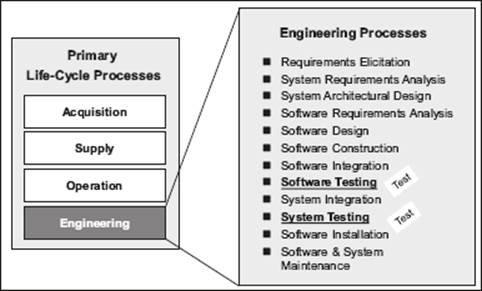

ISO/IEC 15504 allows any compliant process model to be used for the process dimension, which makes it easy for specific schemes to adopt it. For example, the assessment processes and schemes of CMMI (SCAMPI) and TMMi (TAMAR) are ISO 15504 Part 4 compliant. To provide the assessor with a usable “out of the box” standard, part 5 of ISO/IEC 15504 also describes an “exemplar” process model, which is principally based on the ISO/IEC 12207 standard “Software Life Cycle Processes” [ISO/IEC 12207]. The process categories and groups defined by the exemplar process model are shown in figure 3–9; this is the process model that assessors use most often.

Figure 3–9 ISO/IEC 15504-5: Process categories and groups

The ISO/IEC 15504-5 Process Assessment Model shown in figure 3–9 contains a process dimension with three process categories and nine process groups, three of which (marked “test” in the figure) are of particular relevance for the test process improver. Let’s now take a closer look at those testing-specific process groups, starting with Engineering (see figure 3–10).

Figure 3–10 ISO/IEC 15504-5: Engineering processes

The software testing and system testing processes relate to testing the integrated software and integrated system (hardware and software). Testing activities are focused on showing compliance with software and system requirements prior to installation and productive use.

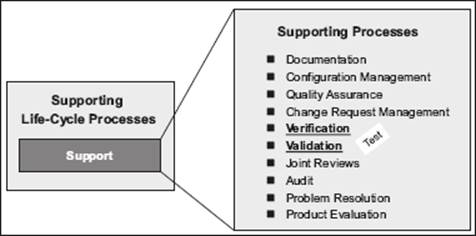

The process category Supporting Life-Cycle Processes contains only a single group of processes (see figure 3–11). These may be employed by any other processes from any other process category.

Figure 3–11 ISO/IEC 15504-5: Supporting processes

The processes Verification and Validation are very similar to the CMMI process areas with the same names covered in section 3.2.1. Verification, according to the ISTQB definition, is “confirmation by examination and through provision of objective evidence that specified requirements have been fulfilled.” This process checks that work products such as requirements, design, code, and documentation reflect the specified requirements.

A closer look at the verification of designs shows the level of detail provided in ISO/IEC 12207. A list of criteria is defined for the verification of designs:

The design is correct and consistent with and traceable to requirements.

The design implements proper sequence of events, inputs, outputs, interfaces, logic flow, allocation of timing and sizing budgets, and error definition, isolation, and recovery.

The selected design can be derived from requirements.

The design implements safety, security, and other critical requirements correctly as shown by suitably rigorous methods.

The standard also defines the following list of outcomes:

A verification strategy is developed and implemented.

Criteria for verification of all required software work products are identified.

Required verification activities are performed.

Defects are identified and recorded.

Results of the verification activities are made available to the customer and other involved parties.

Validation, according to the ISTQB definition, is “confirmation by examination and through provision of objective evidence that the requirements for a specific intended use or application have been fulfilled.” The validation process focuses on the tasks of test planning, creation of test cases, and execution of tests.

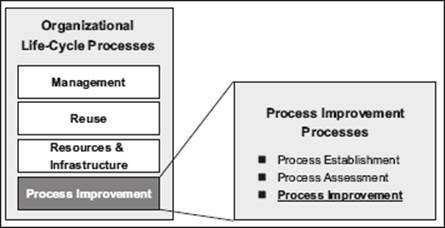

The process category Organizational Life-Cycle Processes is aimed at developing processes (including the test process) across the organization (see figure 3–12).

Figure 3–12 ISO/IEC 15504-5: Organizational processes

These processes emphasize the organizational policies and procedures used for establishing and improving life cycle models and processes. Test process improvers should take note of these processes in ISO/IEC 15504, Part 5, although the overall process of test process improvement is covered more thoroughly in the IDEAL model described in chapter 6.

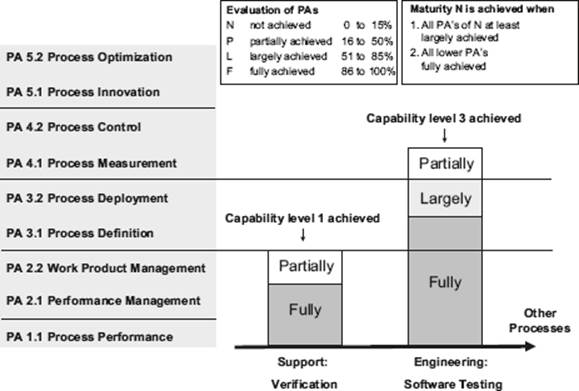

Capability Dimension

ISO/IEC 15504 defines a system for evaluating the maturity level (capability) achieved by the processes being assessed. This is the “how well” dimension of the model. It applies a continuous representation approach (see section 3.1.3).

The overall structure of the capability dimension is shown in figure 3–8 earlier in this chapter. The diagram in figure 3–13 shows the specific capability levels and the process attributes assigned to those levels.

Figure 3–13 ISO/IEC 15504-5: Capability levels and process attributes

The capability of any process defined in the process dimension can be evaluated according to the capability scheme shown in figure 3–13. This is similar but (regrettably) not identical to the scheme defined by CMMI. Process attributes guide the assessor by describing generic indicators of process capability; these include generic descriptions of practices, resources, and work products. For further details, refer to Process Assessment and ISO/IEC 15504: A Reference Book [van Loon 2007] or to the ISO/IEC 15504 standard.

Using ISO/IEC 15504-5 for Assessing Test Processes

Test process improvers will tend to focus on the processes just described. The assessment provides results regarding the achieved capability for each assessed process and uses a four-point rating scale, applied per capability level: fully achieved, largely achieved, partially achieved, and not achieved. Figure 3–14 shows an example of the assessment results for two testing-related processes; it also describes the rules to be applied when grading the capability of a process.
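The grading rules themselves are defined in ISO/IEC 15504-2 rather than in this chapter. As we understand them, each process attribute is rated N (0 to 15 percent achieved), P (more than 15 up to 50 percent), L (more than 50 up to 85 percent), or F (more than 85 up to 100 percent), and a process reaches capability level k when the attributes of level k are rated at least L and all attributes of the lower levels are rated F. The Python sketch below encodes that reading; the attribute identifiers follow the standard's numbering, while the percentages in the example are hypothetical. Check the standard itself before relying on the details.

```python
from typing import Dict

# Process attributes per capability level, using ISO/IEC 15504 numbering.
ATTRIBUTES_BY_LEVEL = {
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1", "PA 5.2"],
}


def nplf(achievement_pct: float) -> str:
    """Map the percentage achievement of one process attribute to N/P/L/F."""
    if not 0 <= achievement_pct <= 100:
        raise ValueError("achievement must be between 0 and 100")
    if achievement_pct <= 15:
        return "N"
    if achievement_pct <= 50:
        return "P"
    if achievement_pct <= 85:
        return "L"
    return "F"


def capability_level(ratings: Dict[str, str]) -> int:
    """Highest level k whose attributes are rated L or F while every attribute
    of the lower levels is rated F. Unrated attributes count as N."""
    level = 0
    for k in sorted(ATTRIBUTES_BY_LEVEL):
        current = [ratings.get(a, "N") for a in ATTRIBUTES_BY_LEVEL[k]]
        lower = [ratings.get(a, "N") for j in range(1, k) for a in ATTRIBUTES_BY_LEVEL[j]]
        if all(r in ("L", "F") for r in current) and all(r == "F" for r in lower):
            level = k
        else:
            break  # if level k fails, no higher level can be reached
    return level


# Hypothetical ratings for a software testing process.
software_testing = {"PA 1.1": nplf(90), "PA 2.1": nplf(70), "PA 2.2": nplf(88)}
print(software_testing)                    # {'PA 1.1': 'F', 'PA 2.1': 'L', 'PA 2.2': 'F'}
print(capability_level(software_testing))  # 2
```

Note the asymmetry: “largely achieved” is enough to reach a level, but level 3 then requires PA 2.1 to be raised to “fully achieved” first.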

Figure 3–14 ISO/IEC 15504-5: Assessment of capability (example)

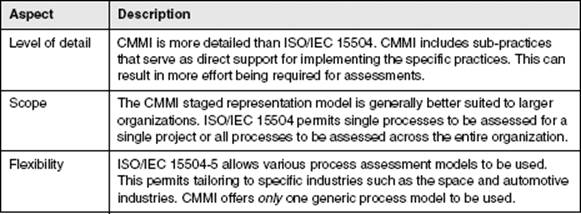

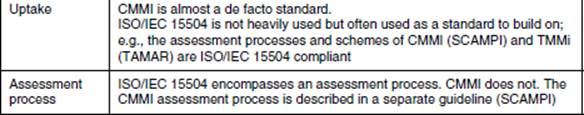

3.2.3 Comparing CMMI and ISO/IEC 15504

A full evaluation of the differences between these two software process improvement models is beyond the scope of our book on test process improvement. Some of the comparisons shown in table 3–8 may be useful background information, especially if you are involved in an overall decision on software process improvement models.

Table 3–8 Comparison between CMMI and ISO/IEC 15504-5

The suitability of using software process improvement models compared to those dedicated to test process improvement is discussed in section 3.5.

3.3 Test Process Improvement Models

3.3.1 The Test Process Improvement Model (TPI NEXT)

Syllabus Learning Objectives

| LO | Learning objective |
|---|---|
| LO 3.3.1 | (K2) Summarize the background and structure of the TPI NEXT test process improvement model. |
| LO 3.3.2 | (K2) Summarize the key areas of the TPI NEXT test process improvement model. |
| LO 3.3.8 | (K3) Carry out an informal assessment using the TPI NEXT test process improvement model. |
| LO 3.3.10 | (K5) Assess a test organization using either the TPI NEXT or TMMi model. |

Introduction

We will now consider two principal areas of the TPI NEXT model. First we’ll describe the structure of the model and its various components. Then we’ll consider some of the issues involved in using the model to complete typical test process improvement tasks.

The official book on TPI NEXT provides a full description of the model and its use (see TPI NEXT – Business Driven Test Process Improvement [van Ewijk, et al. 2009]). Users will gain additional insights into the model by consulting this publication and the other sources of information available at the website for the TPI NEXT model [URL: TPI NEXT].

TPI NEXT A continuous business-driven framework for test process improvement that describes the key elements of an effective and efficient test process.

Overview of Model Structure

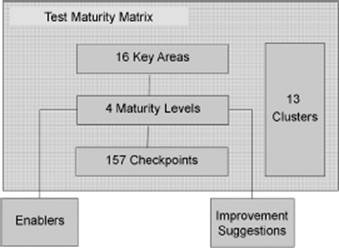

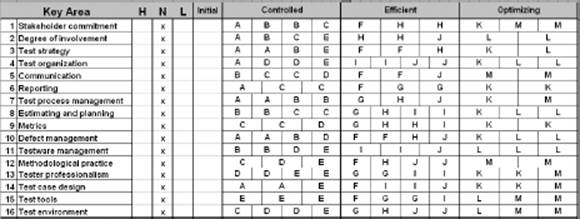

The TPI NEXT model is based upon the elements shown in figure 3–15. Users of the previous version of the model (called simply TPI) will recognize a basic structural similarity between the models, although “clusters” (described in the next paragraph) are a new addition to the structure. Different aspects of the test process are represented in TPI NEXT by 16 key areas (e.g., reporting), each of which can be evaluated at a given maturity level (e.g., controlled).

Achieving a particular maturity level for a given key area (e.g., controlled test reporting) requires that the specific checkpoints (questions) are all answered positively (including those of any previous maturity levels). The checkpoints are also grouped into clusters, which are made up of a number of checkpoints from different key areas. Clusters are like the “stepping stones” along the path of test process improvement. We will be looking at all these model elements in more detail in the sections that follow.
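The cumulative checkpoint rule can be expressed in a few lines. This Python sketch is a simplification: it uses the TPI NEXT level names Initial, Controlled, Efficient, and Optimizing, treats each checkpoint as a plain yes/no answer, and ignores clusters. The checkpoint answers for the Reporting key area are hypothetical.

```python
from typing import Dict, List

# Maturity levels above Initial, in the order they must be achieved.
LEVELS = ["Controlled", "Efficient", "Optimizing"]


def key_area_level(answers: Dict[str, List[bool]]) -> str:
    """Return the maturity level reached by one key area.

    `answers` maps each level to the yes/no answers for its checkpoints. A level
    counts only if all of its checkpoints, and those of every lower level, are yes.
    """
    achieved = "Initial"
    for level in LEVELS:
        checkpoints = answers.get(level, [])
        if checkpoints and all(checkpoints):
            achieved = level
        else:
            break  # a gap here means higher levels cannot be credited
    return achieved


# Hypothetical answers for the Reporting key area.
reporting = {
    "Controlled": [True, True, True, True],
    "Efficient": [True, False, True],
    "Optimizing": [True, True],
}
print(key_area_level(reporting))  # Controlled
```

Even though every Optimizing checkpoint is met in this example, the one negative Efficient checkpoint caps the key area at Controlled.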

TPI NEXT uses a continuous representation to show test process maturity. This can be shown on the test maturity matrix, which visualizes the achieved test process maturity for each key area. Again, some examples of the test maturity matrix are shown in the following sections.

Figure 3–15 Structure of the TPI NEXT model

Maturity Levels

The TPI NEXT model defines the following maturity levels per key area:

| Maturity level | Description |
|---|---|
| Initial | No process; ad hoc activities |
| Controlled | Performing the right test process activities |
| Efficient | Performing the test process efficiently |
| Optimizing | Continuously adapting to ever-changing circumstances |

The continuous representation used by the TPI NEXT model means that each key area (see the following section) can achieve a maturity level independently of the other key areas. Note that key areas are what other improvement models refer to as process areas.

If all key areas have achieved a specific maturity level, then the test process as a whole is said to have achieved that maturity level. Note that this permits staged objectives to be set for test process improvement if this is desired (e.g., the test process shall achieve the efficient maturity level). In practice, many organizations use TPI NEXT in this staged way as well.
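Because the test process as a whole reaches a level only when every key area has reached it, the overall level is simply the lowest key-area level. The following Python sketch illustrates this, assuming each key area's level has already been assessed; the function name and the sample levels are hypothetical and not part of the model itself.

```python
# The four TPI NEXT maturity levels, in ascending order.
LEVELS = ["initial", "controlled", "efficient", "optimizing"]


def overall_maturity(key_area_levels: dict[str, str]) -> str:
    """Return the overall test process maturity (hypothetical helper).

    The whole process reaches a level only if every key area has reached
    it, so the overall level is the lowest level among the key areas.
    """
    if not key_area_levels:
        raise ValueError("no key areas assessed")
    return min(key_area_levels.values(), key=LEVELS.index)


# Hypothetical assessment results for three of the 16 key areas.
levels = {
    "stakeholder commitment": "efficient",
    "reporting": "controlled",
    "test strategy": "optimizing",
}
print(overall_maturity(levels))  # controlled
```

A single lagging key area (here, reporting) therefore determines the staged objective that the organization can claim.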

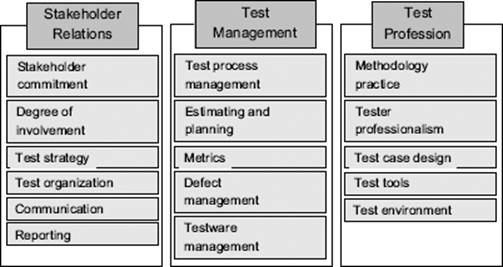

Key Areas

The body of testing best practices provided by the TPI NEXT model is based on the TMap NEXT methodology [Koomen, et al. 2006] and the previous version of the methodology simply called TMap [Pol, Teunissen, and van Veenendaal 2002]. These practices are organized into 16 key areas, each of which covers a particular aspect of the test process (e.g., test environment). The key areas are further organized into three groups: stakeholder relations, test management, and test profession. This organization is particularly useful when reporting at a high level about assessment results and improvement suggestions (e.g., “the testing profession is only weakly practiced within small projects” or “stakeholder relations must be improved by organizing the test team more efficiently”).

Figure 3–16 shows the key areas and their groups.

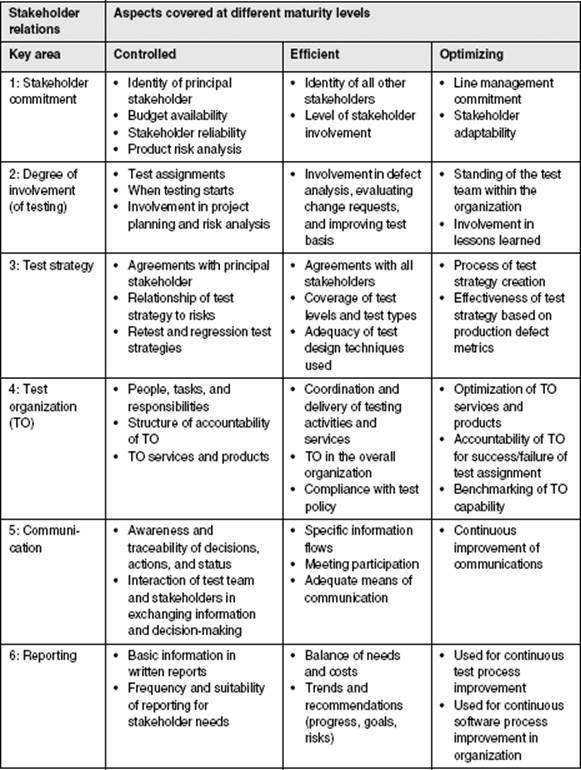

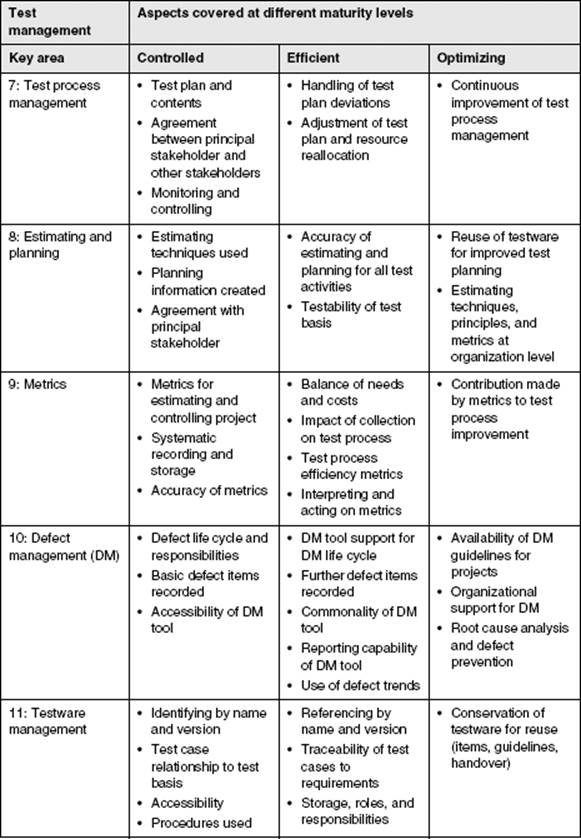

Figure 3–16 Key areas and groups in the TPI NEXT model

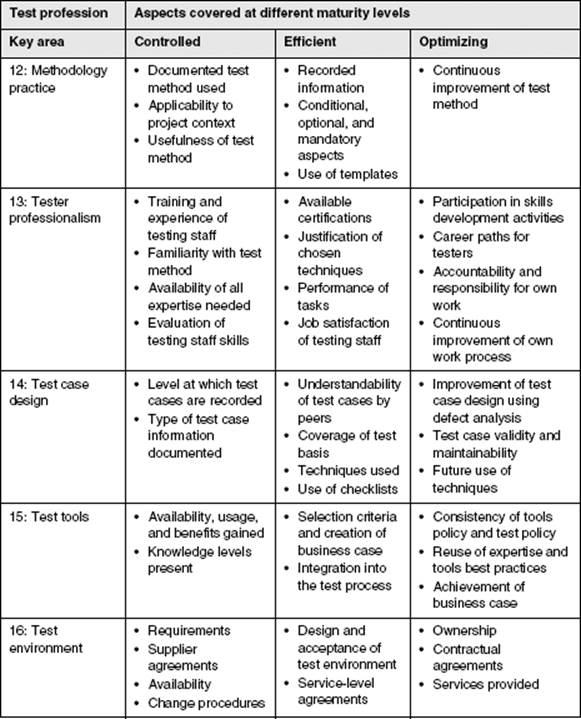

Table 3–9, table 3–10, and table 3–11 provide an overview of the principal areas considered by the key areas in the controlled, efficient, and optimizing maturity levels for the three groups shown in figure 3–16. More detailed information is available in the book TPI NEXT – Business-Driven Test Process Improvement [van Ewijk, et al. 2009].

Table 3–9 Summary of key areas in the stakeholder relations group

Table 3–10 Summary of key areas in the test management group

Table 3–11 Summary of key areas in the test profession group

Checkpoints

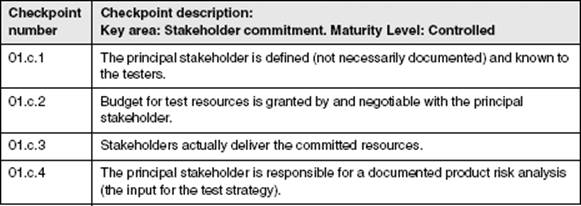

The maturity level of a particular key area is assessed by answering checkpoints. These are closed yes/no-type questions that should enable a clear judgment to be made by the interviewer.

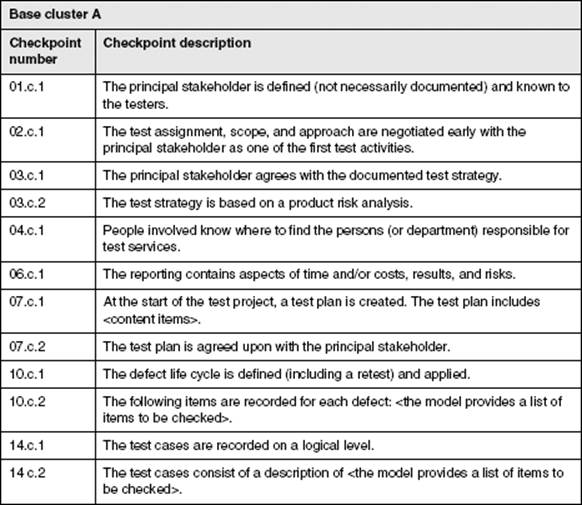

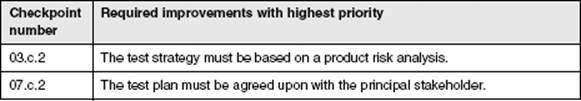

Table 3–12, from TPI NEXT – Business-Driven Test Process Improvement [van Ewijk, et al. 2009], shows the four checkpoints that must all be answered yes for the key area stakeholder commitment to achieve the controlled maturity level. Note that checkpoints are identified using a format that contains the number of the key area (01 to 16), a single letter representing the maturity level (c, e, or o), and the number of the checkpoint within that key area and maturity level (1 to 4).
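The identifier format can be decoded mechanically, which is handy when checkpoint results are recorded in a spreadsheet or tool. The Python sketch below assumes identifiers always take the form `NN.l.N`; the `CheckpointId` type and `parse_checkpoint_id` function are hypothetical names for illustration.

```python
from typing import NamedTuple

# Single-letter level codes used in checkpoint identifiers.
LEVEL_CODES = {"c": "controlled", "e": "efficient", "o": "optimizing"}


class CheckpointId(NamedTuple):
    key_area: int  # 1 to 16
    level: str     # "controlled", "efficient" or "optimizing"
    number: int    # position of the checkpoint within key area and level


def parse_checkpoint_id(text: str) -> CheckpointId:
    """Parse an identifier such as '01.c.4' into its three parts."""
    area, code, number = text.split(".")  # raises ValueError if malformed
    key_area = int(area)
    if not 1 <= key_area <= 16:
        raise ValueError(f"key area out of range: {area}")
    if code not in LEVEL_CODES:
        raise ValueError(f"unknown maturity level code: {code}")
    return CheckpointId(key_area, LEVEL_CODES[code], int(number))


print(parse_checkpoint_id("01.c.4"))
# CheckpointId(key_area=1, level='controlled', number=4)
```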

Table 3–12 Examples of checkpoints

When interviewing a test manager, for example, we may pose the question, “What kind of information do you receive from your principal stakeholder?” (Note that it is not recommended to use checkpoints directly for interviewing purposes; see section 7.3.1 regarding interviewing skills.) If the words product risk analysis do not occur in the answer, we might mention this explicitly in a follow-up question, and if the answer is negative, the result is a clear “no” to the fourth checkpoint (01.c.4) shown in table 3–12. The stakeholder commitment key area has therefore not achieved the controlled maturity level (nor, consequently, the higher maturity levels, efficient and optimizing), since all checkpoints must be answered positively.
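The cumulative rule (all checkpoints at a level, and at every lower level, must be answered yes) can be sketched as a short loop. This is a minimal illustration in Python, assuming the answers for one key area are grouped by maturity level; the function name and the efficient/optimizing answers in the example are hypothetical.

```python
LEVELS = ["controlled", "efficient", "optimizing"]


def key_area_maturity(answers: dict[str, list[bool]]) -> str:
    """Return the maturity level a single key area has achieved.

    `answers` maps each level to the yes/no answers of its checkpoints.
    A level counts only if all of its checkpoints, and those of every
    lower level, are answered yes.
    """
    achieved = "initial"
    for level in LEVELS:
        checks = answers.get(level, [])
        if not checks or not all(checks):
            break  # a single "no" stops progress at this level
        achieved = level
    return achieved


# Stakeholder commitment with a "no" for checkpoint 01.c.4:
print(key_area_maturity({"controlled": [True, True, True, False]}))  # initial

# Yes answers at a higher level do not count while a lower level is open.
print(key_area_maturity({
    "controlled": [True, True, True, True],
    "efficient": [True, False],
    "optimizing": [True, True],
}))  # controlled
```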

Evaluating checkpoints is not always as straightforward as this. Take, for example, the third checkpoint (01.c.3). To evaluate this checkpoint, we need the following information:

- A list of stakeholders
- A list of the resources they have committed to testing (e.g., staff, infrastructure, budget, documents)
- Some form of proof that these resources were delivered

Let’s assume we have eight stakeholders who have committed a total of 20 individual resources. It has been established that all resources were delivered, but one of them (e.g., a document) was delivered late. How might an assessor evaluate this checkpoint? A strict assessor would simply evaluate the checkpoint as no. A pragmatic assessor would check on the significance of the late item, whether this was a one-off or recurring incident, and what the impact was on the test process. A pragmatic assessment may well be yes if a minor item was slightly late and the stakeholder has otherwise been reliable. This calls for judgment and a particular attitude on the part of the assessor. As these typical examples show, some checkpoints are easy and clear to assess, but some need more work, experience, and judgment to enable an appropriate conclusion to be reached.
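To make the difference between the two attitudes concrete, the following Python sketch records each commitment together with the assessor's judgment about it. The decision rule used for the pragmatic reading (a late item is tolerated only if it was minor and a one-off) is a hypothetical rule of thumb, not part of TPI NEXT; in practice the significance and impact of the late item remain a matter for the assessor.

```python
from dataclasses import dataclass


@dataclass
class Commitment:
    """One resource a stakeholder has committed to testing."""
    stakeholder: str
    resource: str
    delivered: bool = True
    on_time: bool = True
    minor: bool = False      # assessor's judgment: item of minor significance?
    recurring: bool = False  # assessor's judgment: part of a pattern of lateness?


def strict_evaluation(commitments: list[Commitment]) -> bool:
    # Strict reading: every resource delivered, and delivered on time.
    return all(c.delivered and c.on_time for c in commitments)


def pragmatic_evaluation(commitments: list[Commitment]) -> bool:
    # Pragmatic reading (hypothetical rule): every resource must be delivered,
    # but a late item is tolerated if it was minor and a one-off.
    return all(
        c.delivered and (c.on_time or (c.minor and not c.recurring))
        for c in commitments
    )


# The scenario from the text: 8 stakeholders, 20 resources, one document late.
commitments = [
    Commitment(stakeholder=f"stakeholder {i % 8 + 1}", resource=f"resource {i + 1}")
    for i in range(20)
]
commitments[0].resource = "test basis document"
commitments[0].on_time = False
commitments[0].minor = True

print(strict_evaluation(commitments))     # False
print(pragmatic_evaluation(commitments))  # True
```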

Test Maturity Matrix

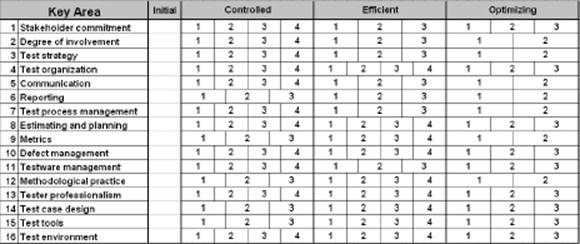

The test maturity matrix is a visual representation of the overall test process that combines key areas, test maturity, and checkpoints. Figure 3–17 shows the test maturity matrix.

Figure 3–17 Test maturity matrix: checkpoint view

The individual numbered cells in the matrix represent the checkpoints for specific key areas and maturity levels. As shown earlier in table 3–12, for example, the key area stakeholder commitment has four checkpoints at the controlled level of test process maturity.

The test maturity matrix is a useful instrument for showing the “big picture” of current and desired test process maturity across all key areas. This is discussed later in “Using TPI NEXT: Overview.”

There are two ways to show the test maturity matrix. The checkpoint view is shown in figure 3–17. The cluster view is shown in figure 3–18, after the concept of clusters is introduced.
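A rough plain-text approximation of the checkpoint view can help when discussing results without the graphical matrix. The Python sketch below prints one row per key area and one column per maturity level, marking each checkpoint as `x` (yes) or `.` (no). The `render_matrix` function is hypothetical, and the checkpoint counts and answers in the example are invented for illustration (only the four controlled checkpoints of stakeholder commitment follow table 3–12).

```python
# Maturity levels shown as columns in the checkpoint view.
LEVELS = ["controlled", "efficient", "optimizing"]
COL = max(len(level) for level in LEVELS)  # column width


def render_matrix(matrix: dict[str, dict[str, list[bool]]]) -> str:
    """Render a plain-text checkpoint view of a test maturity matrix.

    One row per key area and one column per maturity level; each checkpoint
    is shown as 'x' (answered yes) or '.' (answered no).
    """
    width = max(len(area) for area in matrix)
    rows = [" " * width + " | " + " | ".join(level.ljust(COL) for level in LEVELS)]
    for area, answers in matrix.items():
        cells = [
            " ".join("x" if ok else "." for ok in answers.get(level, [])).ljust(COL)
            for level in LEVELS
        ]
        rows.append(area.ljust(width) + " | " + " | ".join(cells))
    return "\n".join(row.rstrip() for row in rows)


# Hypothetical answers for two key areas.
matrix = {
    "stakeholder commitment": {"controlled": [True, True, True, False]},
    "reporting": {"controlled": [True, True], "efficient": [False]},
}
print(render_matrix(matrix))
```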

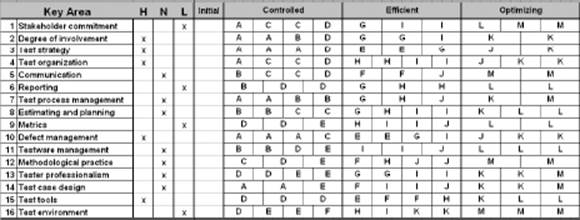

Clusters

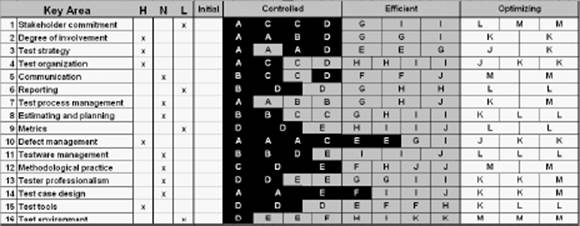

The purpose of clusters is to ensure that a sensible, step-by-step approach is adopted to test process improvement. It makes no sense, for example, to focus all our efforts on achieving an optimizing maturity level in the key area of metrics when defect management is way back at the initial stage.

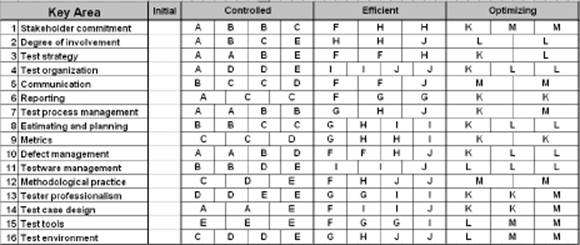

Clusters are collections of individual checkpoints taken from different key areas. The TPI NEXT model defines 13 clusters, which are identified with a single letter; A is the very first cluster and M the final cluster. Figure 3–18 shows clusters on the test maturity matrix (the cluster view).

Figure 3–18 Test maturity matrix: cluster view

In the cluster view, the checkpoints associated with a particular base cluster are represented by the letter of the cluster to which they belong (table 3–13 shows the checkpoints in base cluster A).

The path from cluster A to M represents the recommended progression of the test process and assists the test process improver in setting improvement priorities. The highest-priority recommendation would typically be assigned to fulfilling a checkpoint in the earliest cluster not yet fully completed.
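The prioritization rule (work first on the earliest cluster that is not yet complete) can be sketched in a few lines of Python. The function name is hypothetical, and the cluster contents in the example are invented; they do not reproduce table 3–13.

```python
from typing import Optional


def next_priorities(
    clusters: dict[str, list[str]], achieved: set[str]
) -> Optional[tuple[str, list[str]]]:
    """Return the earliest cluster (A..M) that still has unmet checkpoints,
    together with those unmet checkpoints, or None if all are met."""
    for letter in sorted(clusters):  # A, B, C, ... in alphabetical order
        unmet = [cp for cp in clusters[letter] if cp not in achieved]
        if unmet:
            return letter, unmet
    return None


# Hypothetical cluster contents; these do not reproduce table 3-13.
clusters = {
    "A": ["01.c.1", "01.c.2", "03.c.2"],
    "B": ["01.c.3", "03.c.3"],
    "C": ["01.c.4", "07.c.1"],
}
achieved = {"01.c.1", "01.c.2", "03.c.2", "07.c.1"}
print(next_priorities(clusters, achieved))  # ('B', ['01.c.3', '03.c.3'])
```

Note that checkpoint 07.c.1 in cluster C is already met but is not what drives the priority; cluster B must be completed first.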

Note that base clusters are allocated to a specific overall test process maturity level. Completing base clusters A through E achieves an overall “controlled” test process maturity; “efficient” additionally requires base clusters F through J, and “optimizing” requires base clusters K, L, and M as well.
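The mapping from completed base clusters to overall maturity can be expressed as cumulative set checks. The Python sketch below assumes only the allocation described above (A to E, F to J, K to M); the function name is hypothetical.

```python
# Base clusters required for each overall maturity level (cumulative).
REQUIRED = {
    "controlled": set("ABCDE"),
    "efficient": set("ABCDEFGHIJ"),
    "optimizing": set("ABCDEFGHIJKLM"),
}


def overall_maturity_from_clusters(completed: set[str]) -> str:
    """Return the overall maturity implied by the completed base clusters."""
    achieved = "initial"
    for level in ("controlled", "efficient", "optimizing"):
        if not REQUIRED[level] <= completed:  # subset check
            break
        achieved = level
    return achieved


print(overall_maturity_from_clusters(set("ABCDEFG")))    # controlled
print(overall_maturity_from_clusters(set("ABCDFGHIJ")))  # initial (E missing)
```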

The TPI NEXT model permits specific business objectives (e.g., cost reduction) to be followed in test process improvement. The mechanism for applying this is to adjust the checkpoints contained in a given cluster using a procedure described later (see “Using TPI NEXT: Overview”). The predefined, unchanged clusters in TPI NEXT are referred to as base clusters to distinguish them from clusters that may have been modified to meet specific business objectives.

Base cluster A is shown in table 3–13 as an example (from TPI NEXT – Business-Driven Test Process Improvement [van Ewijk, et al. 2009]).

Table 3–13 Example of a cluster

As can be seen, base cluster A consists of 12 checkpoints drawn from eight different key areas. The locations of the 12 checkpoints that belong to base cluster A can be clearly identified in the test maturity matrix shown below in figure 3–19. The checkpoints in this cluster will be the first items to be implemented if our test process needs to be built up from the initial level of maturity and if no adjustment of the cluster contents has been performed to address specific business objectives.

Enablers

In section 3.1.2, “Using Models: Benefits and Risks,” a particular risk was discussed that highlighted the possibility of choosing the “wrong approach” when adopting a model-based solution to test process improvement. The risk originates from a failure to recognize the strong interrelationships between the testing process and other processes involved in software development. By focusing only on the test process, there is a risk that problems that are not within the test process itself get scoped out of the analysis. We end up treating symptoms (i.e., effects) instead of the root causes.

The idea behind enablers is to provide a link between the test process and other relevant processes within the software development life cycle to clarify how they can both benefit from exchanging best practices and working closely together. In some instances, enablers also provide links to software process improvement models such as CMMI and ISO/IEC 15504.

The following example applies to the key area “test strategy” at the controlled maturity level. This includes the following two checkpoints (from TPI NEXT – Business-Driven Test Process Improvement [van Ewijk, et al. 2009]) with relevance to product risk analysis:

- Checkpoint 03.c.2: The test strategy is based on product risk analysis.
- Checkpoint 03.c.3: There is a differentiation in test levels, test types, test coverage and test depth, depending on the analyzed risks.

In TPI NEXT, performing product risk analysis is not considered part of the test process, so the model simply suggests “use risk management for product risks” as an enabler for achieving the two checkpoints. The test process improver can check up on risk management practices by consulting any source of information they choose. This may be the implementation of a software process improvement model, such as CMMI (risk management is a specific process area in CMMI), or it could be a knowledgeable person in their department.

Note that the TPI NEXT model does not consider enablers formally in the same way as checkpoints. Enablers are simply high-level hints and links that increase awareness and help to mitigate the risks mentioned previously.

Improvement Suggestions

There are two principal sources of test process improvements supported by the TPI NEXT model. The first source is to simply consider any checkpoints that have not yet been achieved in order to reach the desired test process maturity. This source of information covers the direct “what needs to be achieved” aspect of required improvements.
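This first source of improvements is essentially a gap list. The Python sketch below collects every checkpoint answered “no” at or below a target maturity level. It assumes answers are stored per checkpoint identifier and only covers checkpoints that were actually assessed; a complete gap analysis would also need the model's full checkpoint list. The function name and data are hypothetical.

```python
# Single-letter level codes used in checkpoint identifiers, in ascending order.
LEVEL_CODES = {"controlled": "c", "efficient": "e", "optimizing": "o"}


def checkpoint_gaps(answers: dict[str, bool], target: str) -> list[str]:
    """List checkpoints answered 'no' at or below the target maturity level.

    `answers` maps identifiers such as '01.c.4' to the assessment result.
    """
    levels = list(LEVEL_CODES)
    wanted = {LEVEL_CODES[lvl] for lvl in levels[: levels.index(target) + 1]}
    return sorted(
        cp for cp, ok in answers.items() if not ok and cp.split(".")[1] in wanted
    )


answers = {"01.c.3": True, "01.c.4": False, "01.e.1": False, "03.c.2": False}
print(checkpoint_gaps(answers, "controlled"))  # ['01.c.4', '03.c.2']
print(checkpoint_gaps(answers, "efficient"))   # ['01.c.4', '01.e.1', '03.c.2']
```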

Test improvement plans (see section 6.4) should not focus entirely on listing unachieved checkpoints; there must be an indication of how particular business objectives can be achieved through test process improvements. The TPI NEXT model supports this by providing a second source of test process improvements. These are improvement suggestions, which are described for the three maturity levels of each key area. They give advice on how particular maturity levels might be achieved by implementing the suggestions.

The following example taken from TPI NEXT shows the four improvement suggestions for achieving controlled test process maturity for the stakeholder commitment key area:

- Locate the person who orders test activities or is heavily dependent on test results.
- Research the effect of poor testing on production and make this visible to stakeholders. Show which defects could have been found earlier by testing. Indicate what the stakeholders could have done to avoid the problems.
- Keep to simple, practical examples.
- Focus on “quick wins.”

Using TPI NEXT: Overview

We will now describe some of the frequently performed activities in test process improvement with regard to using the TPI NEXT model. The following activities are described:

- Representing business objectives
- Evaluating assessment results
- Setting improvement priorities

Representing Business Objectives

If the objective of test process improvement is a general improvement in maturity across the entire range of key areas, then the base clusters defined in the TPI NEXT model can be used as a guide for planning step-by-step improvements. The same applies to an organization with obviously low test process maturity, where it is probably better to start improving right away using the base clusters. Generally speaking, however, an organization gains more benefit by defining business objectives and not relying entirely on the default base clusters.